A Novel Hybrid Framework for Stock Price Prediction Integrating Adaptive Signal Decomposition and Multi-Scale Feature Extraction

Abstract

1. Introduction

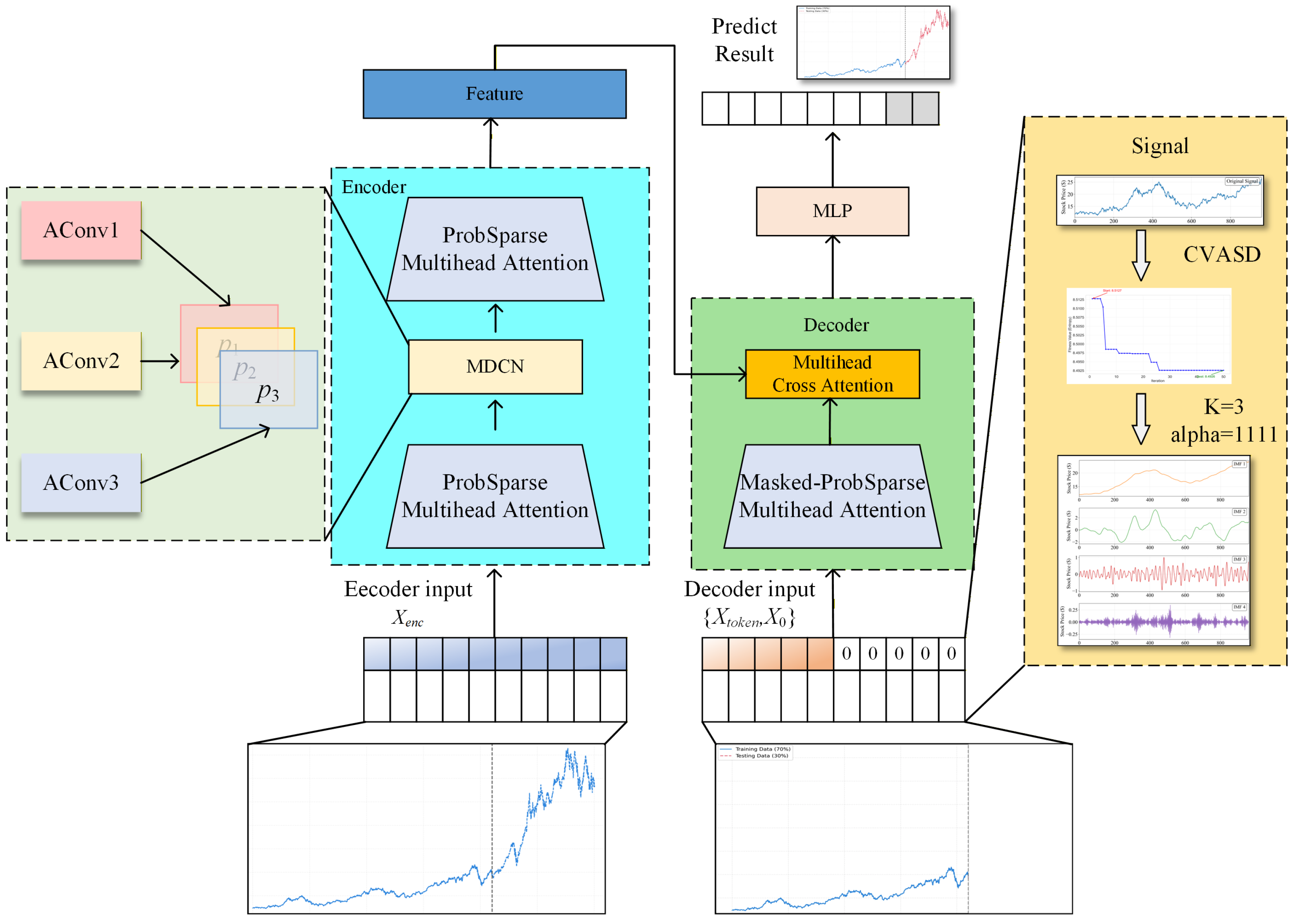

- We propose a novel stock price prediction framework that fuses adaptive signal decomposition with multi-scale feature extraction. By combining VMD, CPO-based parameter optimization, and multi-scale dilated convolutions, the framework significantly enhances long-sequence forecasting capabilities.

- To the best of our knowledge, we are the first to apply the Crested Porcupine Optimizer (CPO) to the optimization of VMD parameters. By automatically searching for the number of modes and the penalty factor, CPO removes the need to set these parameters empirically, as traditional VMD requires, and achieves superior decomposition performance on complex financial series.

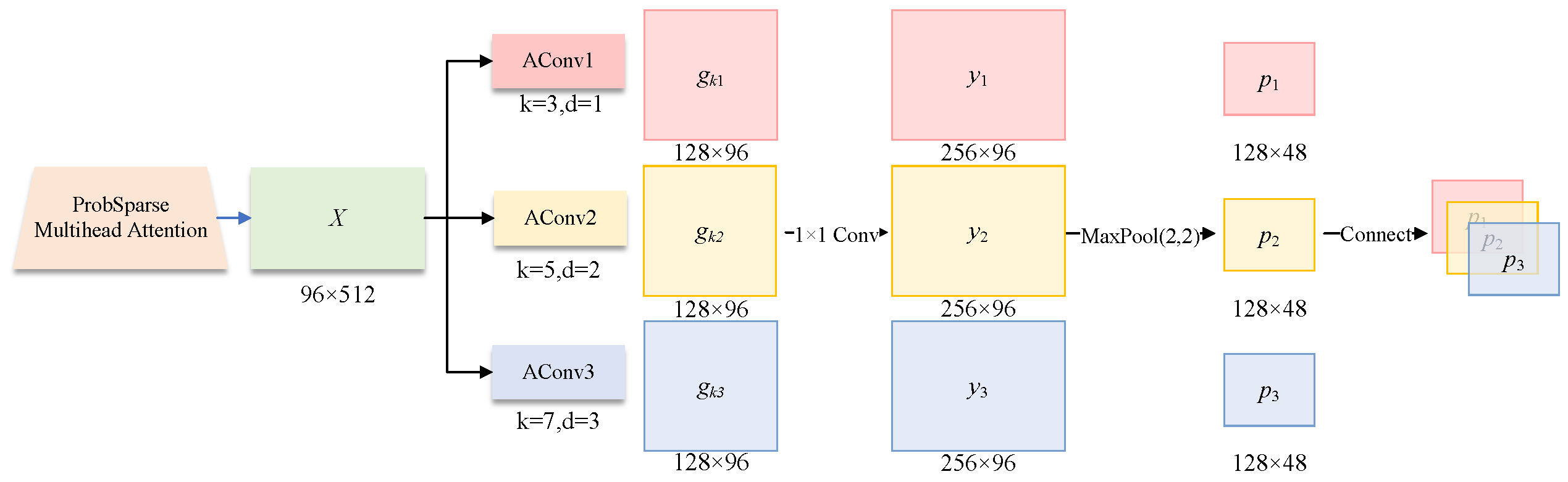

- We design a Multi-scale Dilated Convolution Module (MDCM). This module utilizes different dilation rates to construct multi-scale receptive fields, substantially strengthening Informer’s ability to capture features at various time scales.

- Extensive experiments on real-world stock market data validate the superiority of the proposed model. Compared to baseline models, CVASD-MDCM-Informer demonstrates significant improvements in prediction accuracy, stability, and computational efficiency.

2. Related Work

2.1. Deep Learning in Time Series Forecasting

2.1.1. RNN/LSTM-Based Models

2.1.2. Transformer-Based Models

- Autoformer: Decomposes the input series into trend and seasonal components and captures periodic information using a stacked auto-correlation mechanism for long-term time series forecasting [18].

- FEDformer: Combines seasonal-trend decomposition with frequency-domain analysis, introducing a frequency-enhanced module that enables the Transformer to capture global properties of the series in the frequency domain while reducing computational complexity to linear in the sequence length [19].

- Other Models: LogSparse Transformer, Longformer, and Reformer employ sparse or local attention strategies to reduce the quadratic time complexity of self-attention [10].

2.2. The Decomposition–Ensemble Paradigm

2.2.1. Signal Decomposition Techniques

2.2.2. Hybrid Prediction Models

2.3. Intelligent Optimization of VMD Parameters

3. Methodology

- (1)

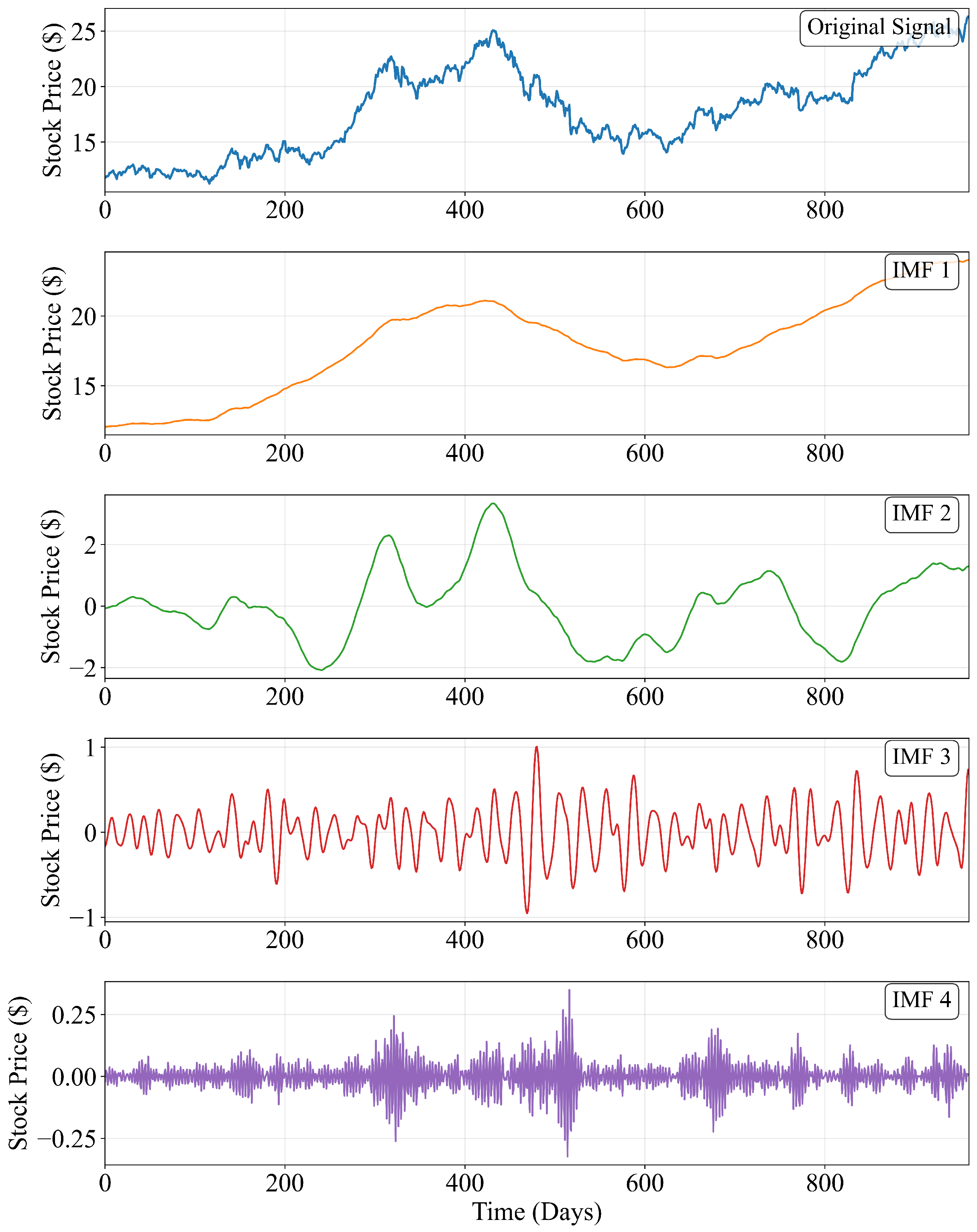

- CPO-VMD Adaptive Signal Decomposition (CVASD) Module: Prior to data input, we first apply a rolling decomposition to the original stock price series using a Variational Mode Decomposition (VMD) method optimized by the Crested Porcupine Optimizer (CPO). This module decomposes the complex original series into a set of Intrinsic Mode Functions (IMFs), each possessing distinct frequency characteristics. This process effectively separates trends and fluctuations occurring at different time scales. These components are then fed individually into the subsequent network for prediction, which effectively reduces noise interference and enhances the model’s ability to capture detailed features.

- (2) Multi-scale Dilated Convolution Module (MDCM): To extract temporal features from each decomposed component more comprehensively, we replace the standard one-dimensional convolutional layers in the Informer’s encoder with a Multi-scale Dilated Convolution Module (MDCM). This module employs three parallel convolutional branches with different dilation rates, allowing it to capture short- and long-term dependencies simultaneously across different receptive fields, and fuses their outputs to enrich the model’s feature representations.

- (3) Differentiation from Built-in Decomposition Transformers: It is important to distinguish our “decomposition–prediction–ensemble” paradigm from Transformer variants that embed a fixed trend/seasonal split (e.g., Autoformer [18]). Autoformer’s in-network decomposition is highly effective for series with pronounced periodicity (such as energy consumption or meteorological data). In contrast, financial time series are dominated by high noise, nonstationarity, regime shifts, and irregular volatility rather than stable seasonality. To address this, the proposed CVASD module uses CPO-optimized VMD to adaptively partition the input into frequency-based Intrinsic Mode Functions (IMFs), separating high-frequency noise from medium-term oscillations and low-frequency trends. The subsequent MDCM then performs multi-scale, dilation-based feature extraction on each IMF, producing component-aware representations that match the heterogeneous, nonstationary structure of financial data better than a general-purpose trend/seasonal decomposition does.
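The decomposition–prediction–ensemble flow described above can be illustrated with a minimal sketch. It is not the authors’ implementation: `toy_decompose` (a moving-average trend plus residual) is a hypothetical stand-in for CPO-VMD, `naive_forecast` is a hypothetical stand-in for the per-component Informer, and the final forecast is assumed to be the sum of the component forecasts.

```python
import numpy as np


def toy_decompose(x: np.ndarray, window: int = 5) -> list[np.ndarray]:
    """Hypothetical stand-in for CPO-VMD: split x into a smooth trend and a residual.

    The two components sum exactly to x, mimicking how IMFs reconstruct the input.
    """
    kernel = np.ones(window) / window
    trend = np.convolve(x, kernel, mode="same")  # same length as x (zero-padded edges)
    residual = x - trend
    return [trend, residual]


def naive_forecast(component: np.ndarray, pred_len: int) -> np.ndarray:
    """Hypothetical stand-in for a trained per-component model: repeat the last value."""
    return np.full(pred_len, component[-1])


def decompose_predict_ensemble(x: np.ndarray, pred_len: int) -> np.ndarray:
    components = toy_decompose(x)                                  # 1) decomposition
    forecasts = [naive_forecast(c, pred_len) for c in components]  # 2) per-component prediction
    return np.sum(forecasts, axis=0)                               # 3) ensemble by summation (assumed)


series = np.linspace(10.0, 12.0, 96) + 0.1 * np.sin(np.arange(96))
forecast = decompose_predict_ensemble(series, pred_len=48)
print(forecast.shape)  # (48,)
```

Summation is the usual way to recombine additive components; any learned ensemble layer would replace step 3.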

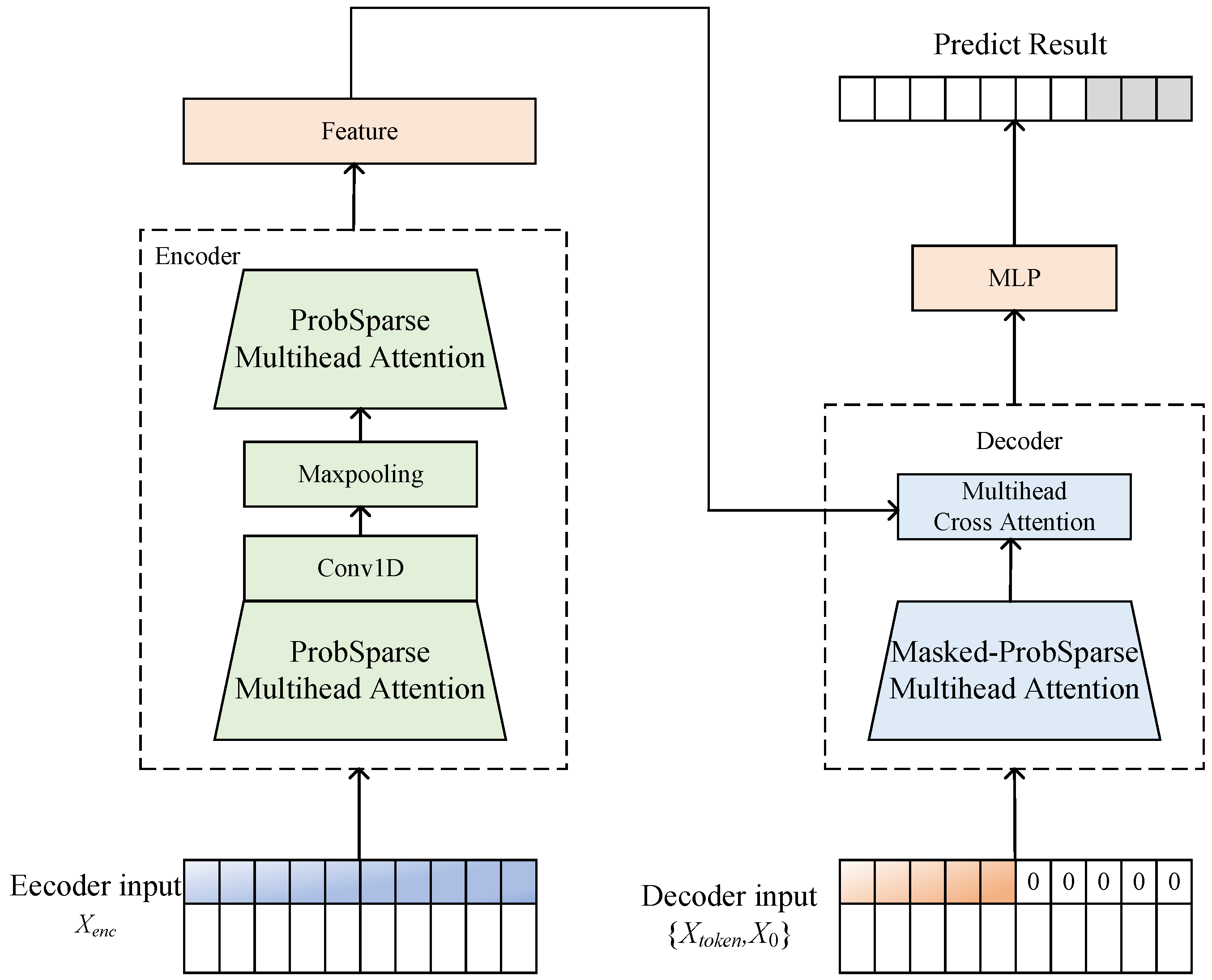

3.1. The Informer Model

3.1.1. ProbSparse Self-Attention Mechanism

3.1.2. Self-Attention Distilling

3.1.3. Generative-Style Decoder

3.2. CPO-Based VMD Parameter Optimization

3.2.1. Variational Mode Decomposition (VMD) Principle

3.2.2. CPO-VMD Parameter Optimization Process

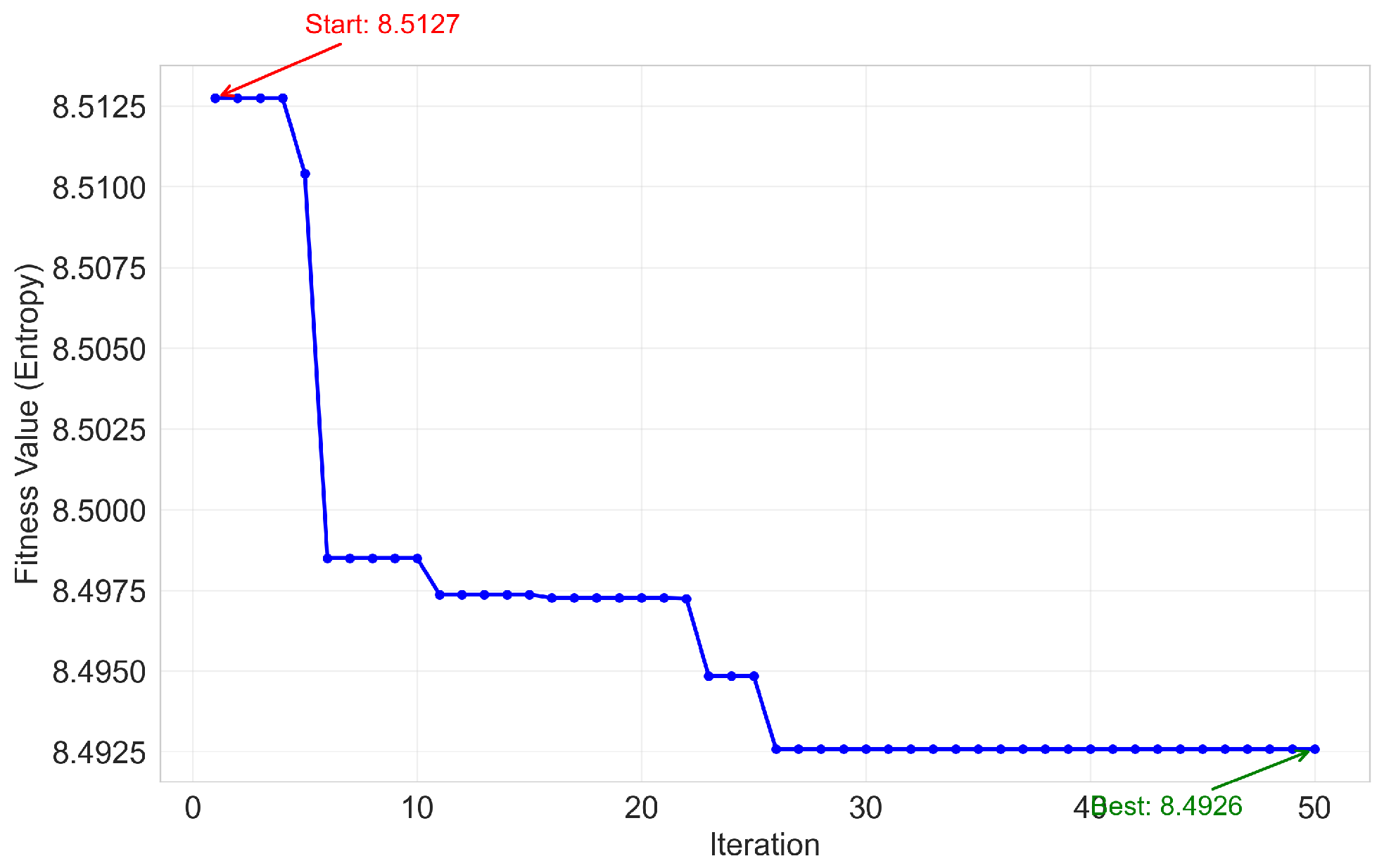

- Define the Fitness Function: We select Sample Entropy as the fitness function. Sample Entropy is a key metric for measuring the complexity of a time series; a smaller value indicates stronger regularity and lower noise levels. An ideal VMD decomposition should produce a series of stationary and physically meaningful modal components, which typically have low Sample Entropy values. Therefore, we set the average Sample Entropy of all modal components as the optimization objective. That is, the goal of the CPO algorithm is to find a set of parameters that minimizes this fitness function.

- Execute CPO Optimization: Within predefined search ranges for the number of modes K and the penalty factor α, the CPO algorithm searches for the optimal parameter combination by iteratively updating the positions of its population.

- Sliding Decomposition Strategy: A critical aspect of our methodology is strictly preventing data leakage. We perform CPO-VMD decomposition using a sliding window. Although the model’s input sequence length (seq_len) is 96, we found that VMD requires a longer sequence for a stable decomposition. Therefore, to generate the input for time t (which requires data from t − 95 to t), we decompose a longer preceding window of 960 time steps (i.e., the interval [t − 959, t]). Crucially, from the resulting K IMFs (each of length 960), we extract only the final 96 time steps (the interval [t − 95, t]) as the input to the Informer network. This rolling decomposition ensures that the model never uses future information. For example, to predict from time 961 onward, we decompose the series from time 1 to 960 and use the IMF segments from time 865 to 960 as input. This design assumes that a ‘warm-up’ period of 960 − 96 = 864 steps is available before the first prediction.
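The index bookkeeping of the rolling decomposition can be checked with a short sketch. It assumes 1-based, inclusive time indices as in the example above, and `decompose` is a hypothetical callable standing in for CPO-VMD (an identity “decomposition” is used so the code runs); only the windowing that keeps future data out of each input is the point.

```python
from typing import Callable

import numpy as np

DECOMP_LEN = 960  # window length used for a stable decomposition
SEQ_LEN = 96      # Informer input length (seq_len)


def decomposition_window(t: int) -> tuple[int, int]:
    """1-based inclusive interval [t - 959, t] that is decomposed for the input ending at t."""
    return t - DECOMP_LEN + 1, t


def input_window(t: int) -> tuple[int, int]:
    """1-based inclusive interval [t - 95, t] kept from each IMF as the model input."""
    return t - SEQ_LEN + 1, t


def rolling_inputs(series: np.ndarray, t: int,
                   decompose: Callable[[np.ndarray], np.ndarray]) -> np.ndarray:
    """Decompose only the data up to time t and keep the last SEQ_LEN steps of every mode.

    `decompose` is a hypothetical stand-in for CPO-VMD mapping a 1-D window to a
    (K, window_length) array of modes.
    """
    start, end = decomposition_window(t)
    if start < 1:
        raise ValueError("not enough warm-up data before time t")
    window = series[start - 1:end]  # 1-based inclusive [start, end] -> 0-based slice
    modes = decompose(window)       # sees nothing after time t
    return modes[:, -SEQ_LEN:]      # shape (K, SEQ_LEN)


print(decomposition_window(960), input_window(960))  # (1, 960) (865, 960)

# Identity "decomposition" (a single mode) so the indexing can be checked by eye
series = np.arange(1, 2001, dtype=float)  # each value equals its 1-based time index
x = rolling_inputs(series, t=960, decompose=lambda w: w[np.newaxis, :])
print(x.shape, x[0, 0], x[0, -1])  # (1, 96) 865.0 960.0
```

Any t below 960 raises an error, which is the 864-step warm-up requirement expressed in code.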

- Information Technology: ,

- Financials: ,

- Consumer Staples: ,
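The Sample Entropy fitness function defined in the optimization procedure can be sketched as follows. The embedding dimension m = 2 and tolerance r = 0.2 times the standard deviation are common defaults assumed here, not values reported in this section; `vmd_fitness` is a hypothetical helper that expects the K modes stacked as rows and returns the average that CPO would minimize.

```python
import numpy as np


def sample_entropy(x: np.ndarray, m: int = 2, r_factor: float = 0.2) -> float:
    """Sample Entropy SampEn(m, r) = -ln(A / B) with tolerance r = r_factor * std(x).

    B counts pairs of length-m templates within Chebyshev distance r (self-matches excluded);
    A does the same for length m + 1. Both use the same N - m template start points.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    r = r_factor * np.std(x)

    def count_matches(length: int) -> int:
        templates = np.array([x[i:i + length] for i in range(n - m)])
        # Pairwise Chebyshev (max-abs) distances between all templates
        dist = np.max(np.abs(templates[:, None, :] - templates[None, :, :]), axis=2)
        # Remove the diagonal (self-matches) and count each unordered pair once
        return int((np.sum(dist <= r) - len(templates)) // 2)

    b = count_matches(m)
    a = count_matches(m + 1)
    if a == 0 or b == 0:
        return float("inf")  # undefined when no matches are found
    return float(-np.log(a / b))


def vmd_fitness(modes: np.ndarray) -> float:
    """Average Sample Entropy over the K modes (rows): lower means more regular modes."""
    return float(np.mean([sample_entropy(mode) for mode in modes]))


t_axis = np.linspace(0, 20 * np.pi, 500)
regular = np.sin(t_axis)                                # smooth, periodic component
noisy = np.random.default_rng(0).standard_normal(500)   # white noise
print(sample_entropy(regular) < sample_entropy(noisy))  # True
print(vmd_fitness(np.vstack([regular, noisy])))
```

The pairwise-distance matrix is quadratic in the window length, which stays manageable for the 960-step windows used here.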

3.3. Multi-Scale Dilated Convolution

3.3.1. Fundamental Theory

3.3.2. Multi-Scale Dilated Convolution Module
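The MDCM described in the methodology overview can be sketched in NumPy as below. The kernel sizes (3, 5, 7) and dilation rates (1, 2, 4) are the selected values from the hyperparameter search table; the fusion step (concatenation followed by a 1×1 projection), the random weights, the (length, channels) layout, and the helper names `dilated_conv1d` and `mdcm` are illustrative assumptions, and normalization, activation, and pooling are omitted.

```python
import numpy as np


def dilated_conv1d(x: np.ndarray, w: np.ndarray, dilation: int) -> np.ndarray:
    """Length-preserving dilated 1-D convolution (cross-correlation, as in deep-learning libraries).

    x: (L, C_in) sequence; w: (k, C_in, C_out) kernel with odd k.
    Zero padding of dilation * (k - 1) / 2 on each side keeps the output length equal to L.
    """
    k = w.shape[0]
    pad = dilation * (k - 1) // 2
    xp = np.pad(x, ((pad, pad), (0, 0)))
    length = x.shape[0]
    out = np.zeros((length, w.shape[2]))
    for j in range(k):
        # Tap j reads the padded input shifted j * dilation steps to the right
        out += xp[j * dilation: j * dilation + length] @ w[j]
    return out


def mdcm(x: np.ndarray, branch_weights: list[np.ndarray],
         dilations: tuple[int, ...], w_fuse: np.ndarray) -> np.ndarray:
    """Hypothetical MDCM: parallel dilated branches, concatenated and mixed by a 1x1 projection."""
    branches = [dilated_conv1d(x, w, d) for w, d in zip(branch_weights, dilations)]
    fused = np.concatenate(branches, axis=1)  # (L, n_branches * C_out)
    return fused @ w_fuse                     # (L, C_model)


rng = np.random.default_rng(0)
seq_len, d_model = 96, 8  # d_model kept small for illustration; the paper uses 512
kernel_sizes, dilations = (3, 5, 7), (1, 2, 4)
branch_weights = [0.1 * rng.standard_normal((k, d_model, d_model)) for k in kernel_sizes]
w_fuse = 0.1 * rng.standard_normal((len(kernel_sizes) * d_model, d_model))
y = mdcm(rng.standard_normal((seq_len, d_model)), branch_weights, dilations, w_fuse)
print(y.shape)  # (96, 8)
print([d * (k - 1) + 1 for k, d in zip(kernel_sizes, dilations)])  # receptive fields: [3, 9, 25]
```

With these settings the three branches see 3, 9, and 25 consecutive time steps, which is how a single layer covers short- and longer-range patterns at once.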

4. Experiments

4.1. Dataset

- Information Technology: This sector is typically characterized by high growth and is sensitive to market risk appetite. Its stock price dynamics are well-described by features such as momentum, earnings forecast revisions, and R&D intensity.

- Financials: This sector is highly sensitive to interest rate changes. Its performance is closely correlated with exogenous factors like macroeconomic policies (e.g., policy rates), market interest rates (e.g., long- and short-term government bond yields), and credit spreads.

- Consumer Staples: This sector possesses defensive characteristics. Companies in this sector have relatively stable earnings and lower volatility, often exhibiting strong downside resistance during market-wide drawdowns.

4.2. Baseline Models

- Transformer [26]: This architecture employs a self-attention mechanism and an encoder-decoder structure, supporting parallel processing of sequential data to effectively capture long-range dependencies. However, its quadratic time and memory complexity, O(L²) in the sequence length L, limits its direct application to long-sequence time series forecasting.

- Autoformer [18]: A decomposition-based Transformer with an auto-correlation mechanism for long-term time series forecasting. The model incorporates a series decomposition block as a built-in operator to progressively separate trend-cyclical and seasonal components. The auto-correlation mechanism replaces self-attention, mining sub-series dependencies based on periodicity via Fast Fourier Transform and aggregating similar sub-series. It has achieved state-of-the-art performance on datasets for energy, traffic, and weather.

- Informer [10]: An efficient Transformer for long-sequence time series forecasting. The model addresses the limitations of the Transformer through three innovations: ProbSparse self-attention, self-attention distilling, and a generative-style decoder. It outperforms traditional models on large-scale datasets such as ETT and Traffic.

- Informer-CGRU [27]: A model based on Informer for non-stationary time series forecasting. It introduces multi-scale causal convolutions to capture causal relationships across time scales and uses a GRU layer to re-learn causal features, enhancing robustness to non-linearity and high noise.

- iTransformer [28]: An inverted Transformer architecture for time series forecasting. Instead of treating each time step as a token, it embeds the whole series of each variate as a token, applies self-attention across variates to capture multivariate correlations, and uses the feed-forward network to learn a temporal representation for each variate.

- FEDformer [19]: A frequency-enhanced decomposition Transformer for long-term forecasting. The model integrates seasonal-trend decomposition with frequency-domain transforms. Frequency-enhanced blocks and attention mechanisms replace self-attention and cross-attention, ensuring linear complexity by randomly selecting frequency components. This model improves the distributional consistency between predicted and true sequences.

- DAT-PN [29]: A Transformer-based dual-attention framework for financial time series forecasting. The framework consists of two parallel networks: a Price Attention Network (PAN) processes historical price data using multi-head masked self-attention (MMSA) and multi-head cross-attention (MCA) to capture temporal dependencies, while a Non-price Attention Network uses a convolutional LSTM, a bidirectional GRU, and self-attention to extract features from non-price financial data.

- VLTCNA [12]: A stock sequence prediction model that integrates Variational Mode Decomposition (VMD) with dual-channel attention. VMD decomposes the stock sequence into stable frequency sub-windows using a sliding window to reduce volatility. A dual-channel network (LSTMA + TCNA) comprises an LSTMA (2 LSTM layers + self-attention) and a TCNA (4 F-TCN modules + self-attention), where the former captures long-term dependencies and the latter captures local/short-term patterns.

4.3. Experimental Environment and Parameter Settings

4.3.1. Experimental Environment

4.3.2. Hyperparameter Settings

4.4. Evaluation Metrics
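The three metrics reported in the result tables (MAE, MSE, and RMSE) follow their standard definitions. A minimal NumPy sketch, assuming forecasts and targets of equal shape (how errors are aggregated across stocks is not specified here), is:

```python
import numpy as np


def mae(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Mean Absolute Error."""
    return float(np.mean(np.abs(y_true - y_pred)))


def mse(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Mean Squared Error."""
    return float(np.mean((y_true - y_pred) ** 2))


def rmse(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Root Mean Squared Error: the square root of MSE over the same errors."""
    return float(np.sqrt(mse(y_true, y_pred)))


y_true = np.array([1.0, 2.0, 3.0, 4.0])
y_pred = np.array([1.5, 2.0, 2.0, 4.0])
print(mae(y_true, y_pred), mse(y_true, y_pred), round(rmse(y_true, y_pred), 4))  # 0.375 0.3125 0.559
```

MSE penalizes large errors more heavily than MAE, while RMSE returns that penalty to the units of the target.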

4.5. Comparative Analysis of Experiments

4.6. Ablation Study

5. Conclusions and Future Work

5.1. Limitations

- Survivorship Bias: Our dataset construction, which selected 352 companies with complete data from 2011 to 2022, introduces survivorship bias. By excluding delisted or inactive firms, the sample is skewed towards more stable and successful companies, which may inflate the model’s measured predictability. Future work should explore ways to incorporate data from delisted stocks to build a more representative dataset.

- Computational Overhead and Data Requirement: The CPO-VMD rolling decomposition strategy, while effective, is computationally intensive. Furthermore, our use of a 960-step window for stable decomposition requires a significant ‘warm-up’ period (864 data points in our study), making the model less suitable for ‘cold-start’ scenarios or very short time series.

5.2. Future Work

- Broader Asset Classes: The framework’s effectiveness should be tested on other financial series, such as exchange-traded funds (ETFs), cryptocurrencies, and macroeconomic indicators, which have different volatility structures and noise characteristics.

- Cross-Domain Applications: The proposed methodology is not limited to finance. It can be extended to other domains characterized by complex, non-stationary time series, such as energy load forecasting, electricity price prediction, and meteorological forecasting.

- Model Efficiency: Future research could explore more lightweight adaptive decomposition techniques or investigate knowledge distillation methods to reduce the computational burden of the CVASD module without sacrificing performance.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Rezaei, H.; Faaljou, H.; Mansourfar, G. Stock price prediction using deep learning and frequency decomposition. Expert Syst. Appl. 2021, 169, 114332.

- [2] Hossein, A.; Charlotte, C.; Hou, A.J. The effect of uncertainty on stock market volatility and correlation. J. Bank. Financ. 2023, 154, 106929.

- [3] Su, J.; Lau, R.Y.K.; Yu, J.; NG, D.C.T.; Jiang, W. A multi-modal data fusion approach for evaluating the impact of extreme public sentiments on corporate credit ratings. Complex Intell. Syst. 2025, 11, 436.

- [4] Barberis, N.; Jin, L.J.; Wang, B. Prospect theory and stock market anomalies. J. Financ. 2021, 76, 2639–2687.

- [5] Peress, J.; Schmidt, D. Noise traders incarnate: Describing a realistic noise trading process. J. Financ. Mark. 2021, 54, 100618.

- [6] Farahani, M.S.; Hajiagha, S.H.R. Forecasting stock price using integrated artificial neural network and metaheuristic algorithms compared to time series models. Soft Comput. 2021, 25, 8483.

- [7] Suthaharan, S. Support vector machine. In Machine Learning Models and Algorithms for Big Data Classification: Thinking with Examples for Effective Learning; Springer: Berlin/Heidelberg, Germany, 2016; pp. 207–235.

- [8] Medsker, L.; Jain, L.C. Recurrent Neural Networks: Design and Applications; CRC Press: Boca Raton, FL, USA, 1999.

- [9] Nagar, P.; Shastry, K.; Chaudhari, J.; Arora, C. SEMA: Semantic Attention for Capturing Long-Range Dependencies in Egocentric Lifelogs. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 7025–7035.

- [10] Zhou, H.; Zhang, S.; Peng, J.; Zhang, S.; Li, J.; Xiong, H.; Zhang, W. Informer: Beyond efficient transformer for long sequence time-series forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 11106–11115.

- [11] Zhang, C.; Sjarif, N.N.A.; Ibrahim, R. Deep learning models for price forecasting of financial time series: A review of recent advancements: 2020–2022. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1519.

- [12] Liu, Y.; Huang, S.; Tian, X.; Zhang, F.; Zhao, F.; Zhang, C. A stock series prediction model based on variational mode decomposition and dual-channel attention network. Expert Syst. Appl. 2024, 238, 121708.

- [13] Ma, R.; Han, T.; Lei, W. Cross-domain meta learning fault diagnosis based on multi-scale dilated convolution and adaptive relation module. Knowl.-Based Syst. 2023, 261, 110175.

- [14] Dragomiretskiy, K.; Zosso, D. Variational mode decomposition. IEEE Trans. Signal Process. 2013, 62, 531–544.

- [15] Abdel-Basset, M.; Mohamed, R.; Abouhawwash, M. Crested Porcupine Optimizer: A new nature-inspired metaheuristic. Knowl.-Based Syst. 2024, 284, 111257.

- [16] Zhang, Z.; Liu, Q.; Hu, Y.; Liu, H. Multi-feature stock price prediction by LSTM networks based on VMD and TMFG. J. Big Data 2025, 12, 74.

- [17] Jain, A.; Zamir, A.R.; Savarese, S.; Saxena, A. Structural-RNN: Deep learning on spatio-temporal graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 5308–5317.

- [18] Wu, H.; Xu, J.; Wang, J.; Long, M. Autoformer: Decomposition transformers with auto-correlation for long-term series forecasting. Adv. Neural Inf. Process. Syst. 2021, 34, 22419–22430.

- [19] Zhou, T.; Ma, Z.; Wen, Q.; Wang, X.; Sun, L.; Jin, R. FEDformer: Frequency enhanced decomposed transformer for long-term series forecasting. In Proceedings of the International Conference on Machine Learning, PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 27268–27286.

- [20] Lv, P.; Shu, Y.; Xu, J.; Wu, Q. Modal decomposition-based hybrid model for stock index prediction. Expert Syst. Appl. 2022, 202, 117252.

- [21] Gao, Z.; Zhang, J. The fluctuation correlation between investor sentiment and stock index using VMD-LSTM: Evidence from China stock market. N. Am. J. Econ. Financ. 2023, 66, 101915.

- [22] Li, Q.; Wang, G.; Wu, X.; Gao, Z.; Dan, B. Arctic short-term wind speed forecasting based on CNN-LSTM model with CEEMDAN. Energy 2024, 299, 131448.

- [23] Rayi, V.K.; Mishra, S.; Naik, J.; Dash, P.K. Adaptive VMD based optimized deep learning mixed kernel ELM autoencoder for single and multistep wind power forecasting. Energy 2022, 244, 122585.

- [24] Ding, J.; Huang, L.; Xiao, D.; Li, X. GMPSO-VMD algorithm and its application to rolling bearing fault feature extraction. Sensors 2020, 20, 1946.

- [25] S&P Global Market Intelligence. The Global Industry Classification Standard (GICS®); Technical Report; Standard & Poor’s Financial Services LLC (S&P), MSCI: New York, NY, USA, 2018.

- [26] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- [27] Yang, Y.; Dong, Y. Informer_Casual_LSTM: Causal Characteristics and Non-Stationary Time Series Prediction. In Proceedings of the 2024 7th International Conference on Machine Learning and Natural Language Processing (MLNLP), Chengdu, China, 18–20 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- [28] Liu, Y.; Hu, T.; Zhang, H.; Wu, H.; Wang, S.; Ma, L.; Long, M. iTransformer: Inverted transformers are effective for time series forecasting. arXiv 2023, arXiv:2310.06625.

- [29] Hadizadeh, A.; Tarokh, M.J.; Ghazani, M.M. A novel transformer-based dual attention architecture for the prediction of financial time series. J. King Saud Univ. Comput. Inf. Sci. 2025, 37, 72.

| Factor Type | Factor Name |
|---|---|
| Basic Factors | OPEN, CLOSE, HIGH, LOW, VOLUME |
| Technical Factors | DIF, DEA, MACD, KDJ, BIAS1, BIAS2, BIAS3, UPPER, MID, LOWER, PSY, PSYMA, CCI, MTM, MTMMA, AR, BR |

| Environment Component | Specification |
|---|---|
| Runtime Environment | Python 3.7 |
| Deep Learning Framework | PyTorch 1.9.1 + CUDA 11.1 |
| Operating System | Windows 10 |
| CPU Model | Intel Core i7-12700K @ 4.9 GHz |
| Memory (RAM) | 64 GB (4 × 16 GB) DDR4 @ 3600 MHz |
| GPU Model | NVIDIA GeForce RTX 3090 (24 GB) |

| Parameter Name | Description | Value |
|---|---|---|
| batch_size | Batch Size | 64 |
| learn_rate | Learning Rate | 0.0001 |
| seq_len | Input Sequence Length | 96 |
| label_len | Label Sequence Length | 48 |
| pred_len | Output Sequence Length | 48 |
| factor | Sparsity Factor | 5 |
| n_heads | Number of Attention Heads | 8 |
| e_layers | Number of Encoder Layers | 2 |
| d_layers | Number of Decoder Layers | 1 |
| d_model | Model Dimension | 512 |

| Parameter | Search Space | Selected Value |
|---|---|---|
| Learning rate | {0.0001, 0.0005, 0.001} | 0.0001 |
| Batch size | {32, 64, 128} | 64 |
| Encoder layers (e_layers) | {2, 3, 4} | 2 |
| MDCM kernel sizes | {(1, 3, 5), (3, 5, 7), (1, 5, 9)} | (3, 5, 7) |
| MDCM dilation rates | {(1, 2, 4), (1, 3, 5), (1, 4, 8)} | (1, 2, 4) |
| Model dimension (d_model) | {256, 512} | 512 |
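The search space in the table above spans 3 × 3 × 3 × 3 × 3 × 2 = 486 combinations. How the space was explored is not stated here; the sketch below assumes an exhaustive grid, uses hypothetical key names, and takes a hypothetical `evaluate` callback (for example, validation MSE) to show how the selected row would be picked.

```python
import itertools
from typing import Callable

SEARCH_SPACE = {
    "learning_rate": [0.0001, 0.0005, 0.001],
    "batch_size": [32, 64, 128],
    "e_layers": [2, 3, 4],
    "mdcm_kernel_sizes": [(1, 3, 5), (3, 5, 7), (1, 5, 9)],
    "mdcm_dilations": [(1, 2, 4), (1, 3, 5), (1, 4, 8)],
    "d_model": [256, 512],
}


def grid(space: dict) -> list[dict]:
    """Every combination of the search space as a config dict."""
    keys = list(space)
    return [dict(zip(keys, values)) for values in itertools.product(*space.values())]


def best_config(space: dict, evaluate: Callable[[dict], float]) -> dict:
    """Config with the lowest score; `evaluate` is a hypothetical train-and-validate callback."""
    return min(grid(space), key=evaluate)


print(len(grid(SEARCH_SPACE)))  # 486
```

Each evaluation means a full training run, so in practice a cheaper strategy (random or sequential search) may be preferable to the full grid.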

| Model | Metric | Information Technology | Financials | Consumer Staples |
|---|---|---|---|---|
| Transformer | MAE | 0.3977 | 0.3978 | 0.3378 |
| | MSE | 0.2228 | 0.2380 | 0.2089 |
| | RMSE | 0.4728 | 0.4910 | 0.4690 |
| Autoformer | MAE | 0.3845 | 0.4240 | 0.3776 |
| | MSE | 0.2395 | 0.2398 | 0.2067 |
| | RMSE | 0.4890 | 0.4889 | 0.4677 |
| Informer | MAE | 0.3727 | 0.4011 | 0.3578 |
| | MSE | 0.2024 | 0.2386 | 0.1890 |
| | RMSE | 0.4503 | 0.4876 | 0.4335 |
| Informer-CGRU | MAE | 0.3378 | 0.3658 | 0.3349 |
| | MSE | 0.1701 | 0.2210 | 0.1779 |
| | RMSE | 0.4125 | 0.4613 | 0.4212 |
| iTransformer | MAE | 0.3089 | 0.3678 | 0.3581 |
| | MSE | 0.1489 | 0.2183 | 0.1852 |
| | RMSE | 0.3852 | 0.4673 | 0.4398 |
| DAT-PN | MAE | 0.3389 | 0.3508 | 0.3238 |
| | MSE | 0.1771 | 0.1910 | 0.1681 |
| | RMSE | 0.4209 | 0.4384 | 0.4230 |
| FEDformer | MAE | 0.3294 | 0.3585 | 0.3224 |
| | MSE | 0.1644 | 0.1994 | 0.1448 |
| | RMSE | 0.4087 | 0.4470 | 0.3843 |
| VLTCNA | MAE | 0.3268 | 0.3715 | 0.3231 |
| | MSE | 0.1617 | 0.2094 | 0.1767 |
| | RMSE | 0.4103 | 0.4564 | 0.4109 |
| Ours | MAE | 0.3110 | 0.3380 | 0.2942 |
| | MSE | 0.1411 | 0.1809 | 0.1356 |
| | RMSE | 0.3760 | 0.4279 | 0.3722 |
| Improvement | MAE | −0.68% | 3.65% | 8.74% |
| | MSE | 5.24% | 5.29% | 6.35% |
| | RMSE | 2.39% | 2.40% | 3.15% |
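The Improvement row is consistent with the relative error reduction of our model with respect to the best (lowest-error) baseline in each column, 100 × (best − ours) / best. A negative entry therefore means a baseline is better, as for Information Technology MAE, where iTransformer scores 0.3089 against 0.3110. The sketch below recomputes the MSE improvements from the table values; the helper name `improvement` is hypothetical.

```python
# MSE values copied from the comparison table (Information Technology, Financials, Consumer Staples)
BASELINE_MSE = {
    "Transformer": (0.2228, 0.2380, 0.2089),
    "Autoformer": (0.2395, 0.2398, 0.2067),
    "Informer": (0.2024, 0.2386, 0.1890),
    "Informer-CGRU": (0.1701, 0.2210, 0.1779),
    "iTransformer": (0.1489, 0.2183, 0.1852),
    "DAT-PN": (0.1771, 0.1910, 0.1681),
    "FEDformer": (0.1644, 0.1994, 0.1448),
    "VLTCNA": (0.1617, 0.2094, 0.1767),
}
OURS_MSE = (0.1411, 0.1809, 0.1356)


def improvement(ours: float, baselines: list[float]) -> float:
    """Relative reduction (%) of our error versus the best (lowest) baseline error."""
    best = min(baselines)
    return 100.0 * (best - ours) / best


row = [
    round(improvement(OURS_MSE[i], [v[i] for v in BASELINE_MSE.values()]), 2)
    for i in range(3)
]
print(row)  # [5.24, 5.29, 6.35]
```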

| Industry | Variant | MAE | MSE | RMSE |
|---|---|---|---|---|
| Information Technology | w/o CVASD & MDCM | 0.3874 | 0.2028 | 0.4672 |
| | w/o CVASD | 0.3432 | 0.1745 | 0.4262 |
| | w/o MDCM | 0.3234 | 0.1670 | 0.4003 |
| | Our Model | 0.3110 | 0.1411 | 0.3760 |
| Financials | w/o CVASD & MDCM | 0.3789 | 0.2257 | 0.4766 |
| | w/o CVASD | 0.3537 | 0.1909 | 0.4460 |
| | w/o MDCM | 0.3581 | 0.2136 | 0.4578 |
| | Our Model | 0.3380 | 0.1809 | 0.4279 |
| Consumer Staples | w/o CVASD & MDCM | 0.3673 | 0.1830 | 0.4335 |
| | w/o CVASD | 0.3104 | 0.1689 | 0.4031 |
| | w/o MDCM | 0.3207 | 0.1589 | 0.3899 |
| | Our Model | 0.2942 | 0.1356 | 0.3722 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Su, J.; Lau, R.Y.K.; Du, Y.; Yu, J.; Zhang, H. A Novel Hybrid Framework for Stock Price Prediction Integrating Adaptive Signal Decomposition and Multi-Scale Feature Extraction. Appl. Sci. 2025, 15, 12450. https://doi.org/10.3390/app152312450
