Interactive Platform for Hand Motor Rehabilitation Using Electromyography and Optical Tracking

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Participants

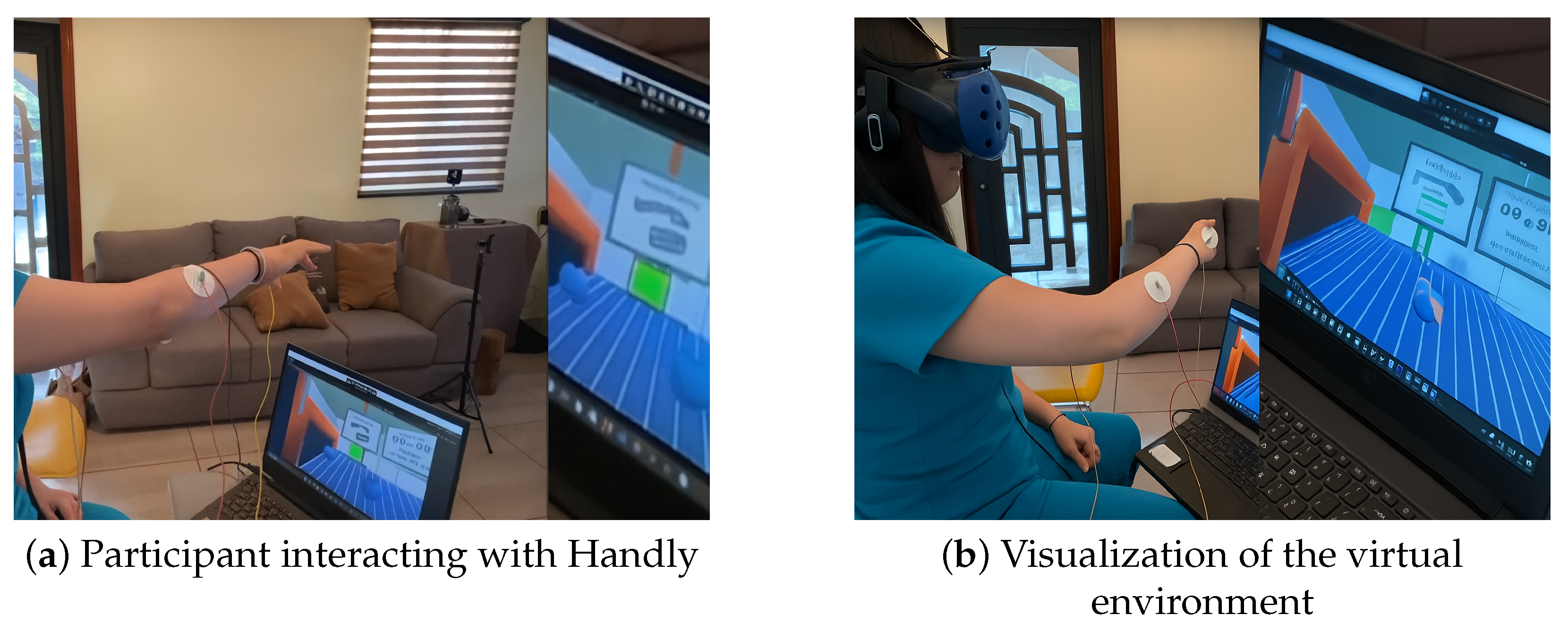

2.2. Experimental Procedure

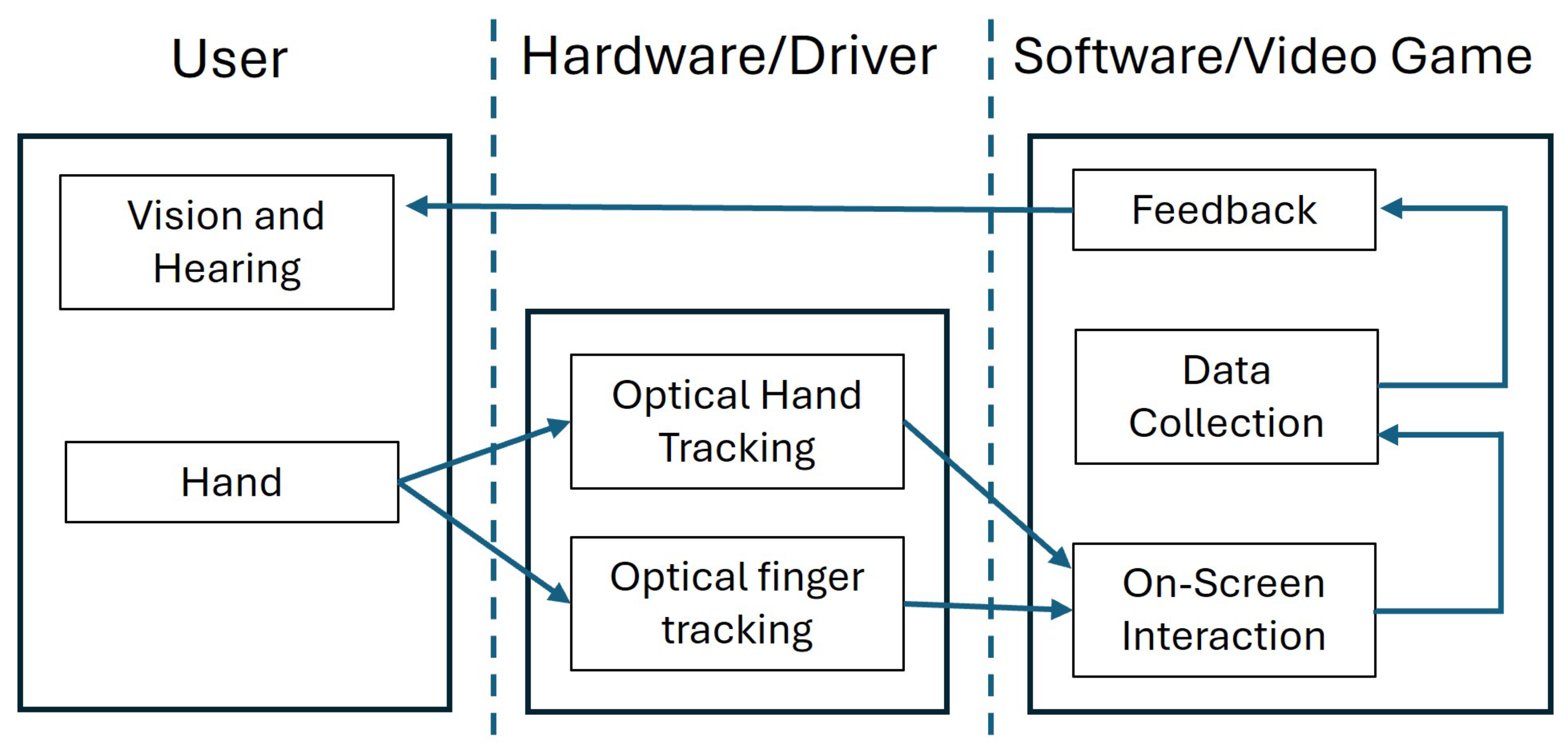

2.3. System Architecture

- Leap Motion Sensor: Tracks the real-time position of the hand and fingers;

- BioAmp EXG Pill, ESP32, and Surface EMG Sensors: The surface electrodes are placed on the forearm and connected to the board to record muscle activation;

- HTC Vive Virtual Reality Headset Kit System: Provides immersive virtual reality visualization;

- OMEN Laptop: Runs Windows 11 on an Intel i7 processor with 32 GB RAM, a 300 Hz display, and an NVIDIA RTX 3070 GPU;

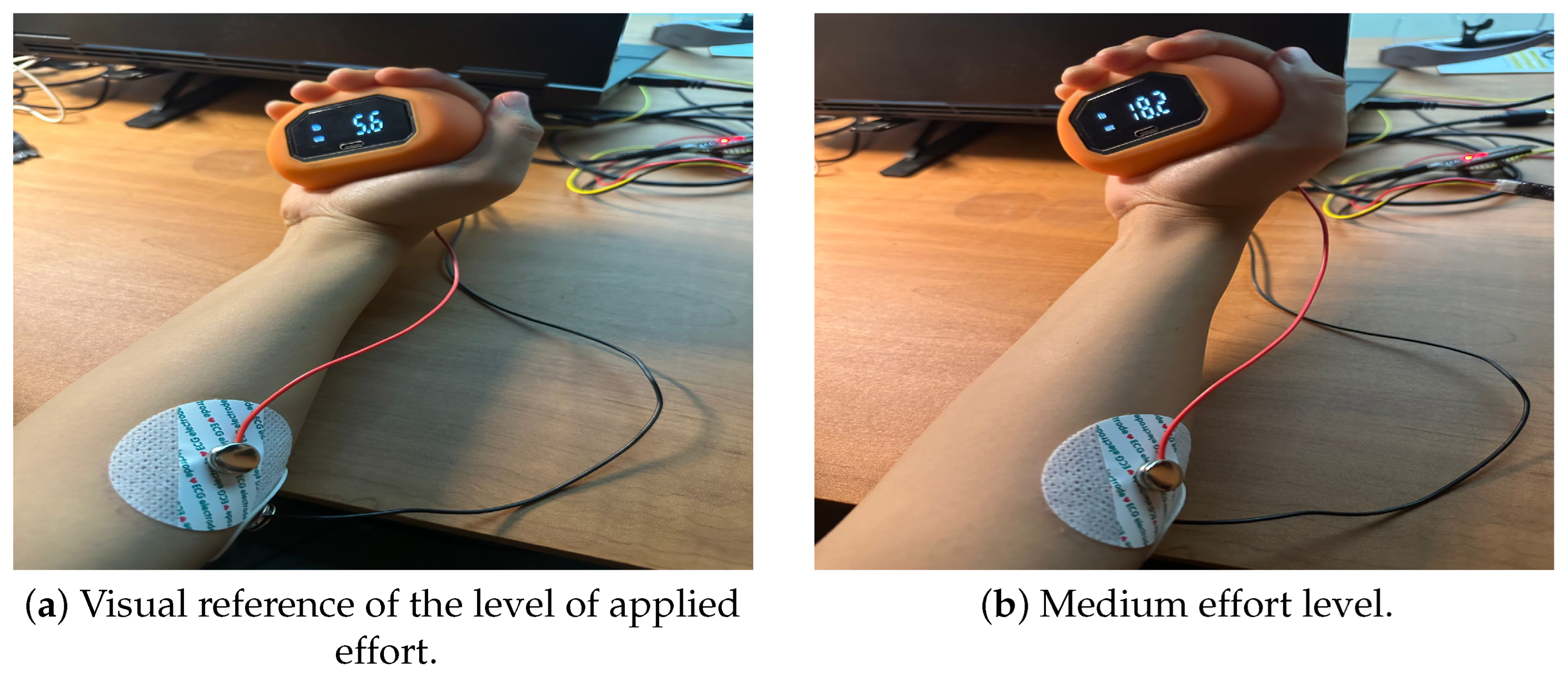

- Palm Grip Strength Tester: A Hichor handheld dynamometer (range 0–90 kgf; resolution 0.1 kgf; calibrated before each session) was used to measure grip force during the maximum voluntary contraction (MVC) calibration procedure.
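
As a rough illustration of how an MVC calibration could feed an RMS-based activation threshold (the approach named in Sections 4.3 and 4.5), the following Python sketch computes the root-mean-square of an EMG window and places a threshold between the resting and MVC levels. The function names, the window contents, and the `fraction` value are assumptions made for this example and are not taken from the study.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one window of EMG samples."""
    if not samples:
        raise ValueError("window must contain at least one sample")
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def calibrate_threshold(rest_window, mvc_window, fraction=0.3):
    """Place the activation threshold between the resting and MVC RMS levels.

    `fraction` is a hypothetical tuning value: 0 puts the threshold at the
    resting level, 1 puts it at the MVC level.
    """
    rest_level = rms(rest_window)
    mvc_level = rms(mvc_window)
    if mvc_level <= rest_level:
        raise ValueError("MVC recording must exceed the resting level")
    return rest_level + fraction * (mvc_level - rest_level)


def is_active(window, threshold):
    """Return True when the window's RMS reaches the calibrated threshold."""
    return rms(window) >= threshold


# Illustrative values only (arbitrary units), not recorded data.
threshold = calibrate_threshold([1, -1, 1, -1], [11, -11, 11, -11])
grasp_detected = is_active([5, -5, 5, -5], threshold)
```

Because the threshold is derived from each user's own resting and MVC recordings, it scales with that user's signal amplitude instead of relying on one fixed value for everyone.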

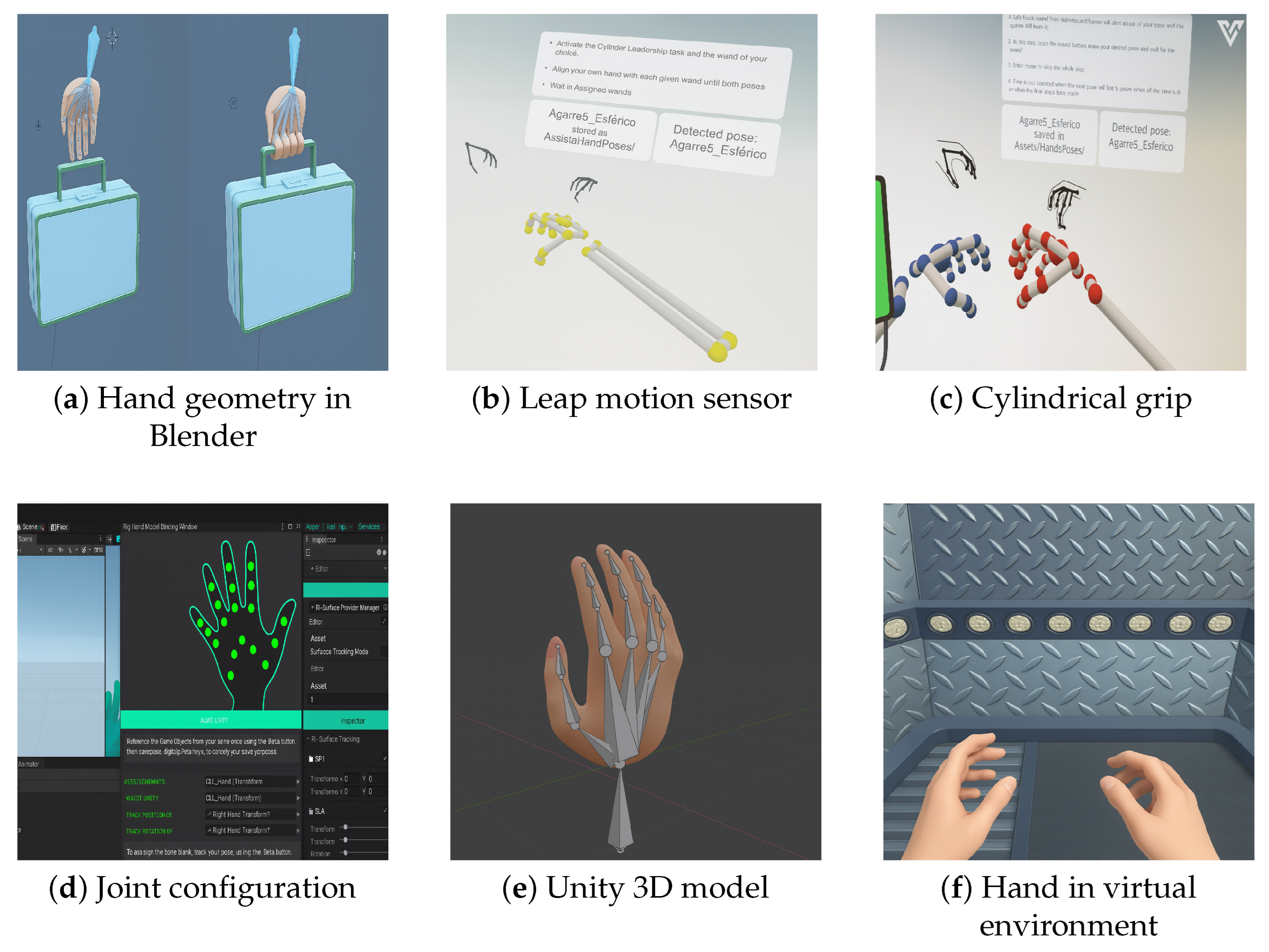

- Unity 3D, 2021.3.11f1 (LTS): Used as the main development environment for creating the virtual reality scenes and implementing interactivity logic;

- Blender 3.5.1: Employed for 3D modeling and animation of the virtual objects used in the tasks;

- Visual Studio 2019: Used for scripting in C# within Unity 3D, enabling the control logic of the system;

- HTC VIVE Software (SteamVR 1.26): Provided tracking and runtime support for the VR headset used in the study;

- Leap Motion SDK (Orion 4.0.0): Enabled precise hand tracking and integration into the Unity 3D environment;

- Spyder 5.4.3 (Anaconda 2021.11): Used for data analysis and visualization through Python libraries such as NumPy, Pandas, and Matplotlib. Available online: https://www.spyder-ide.org/ (accessed on 15 December 2024).

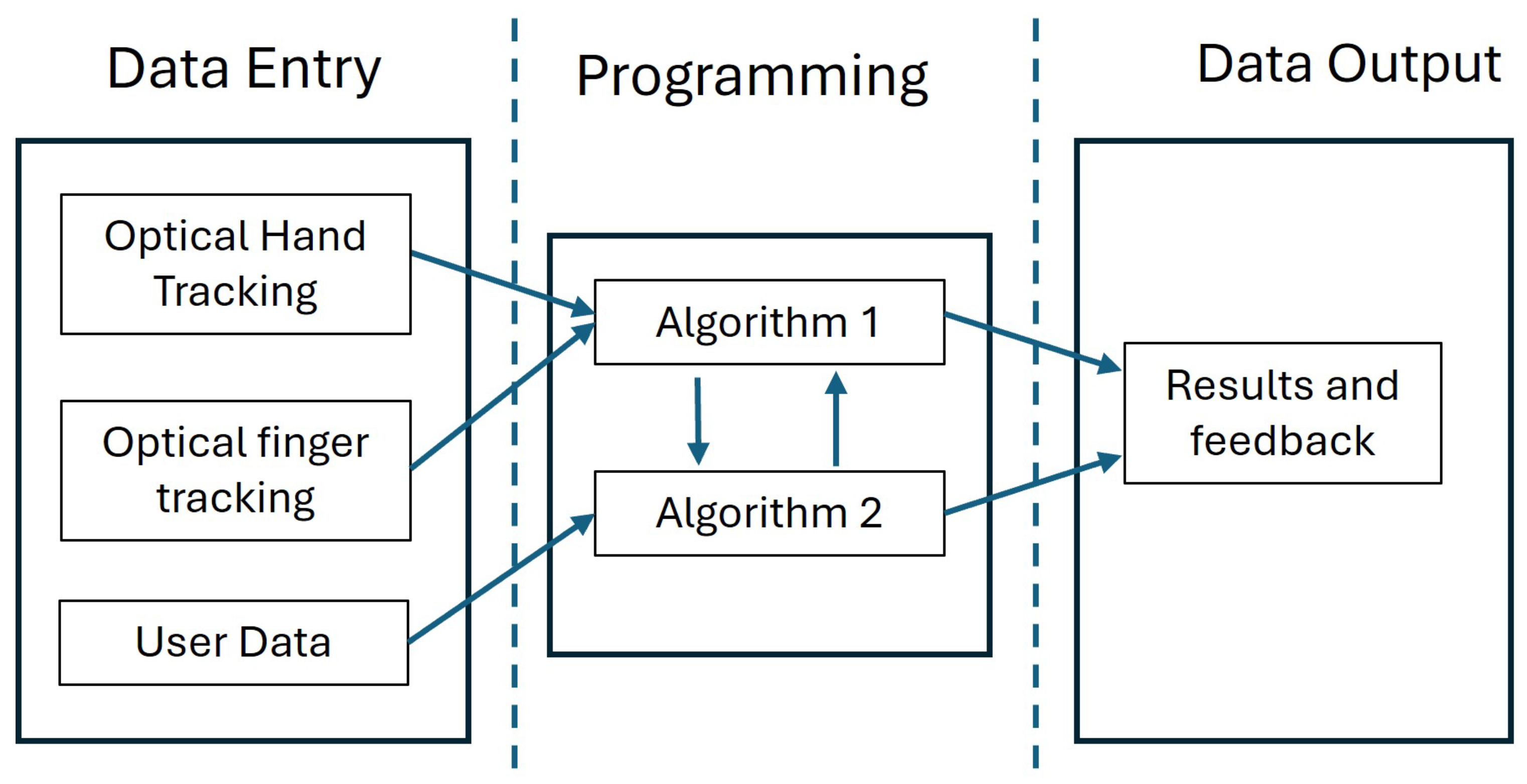

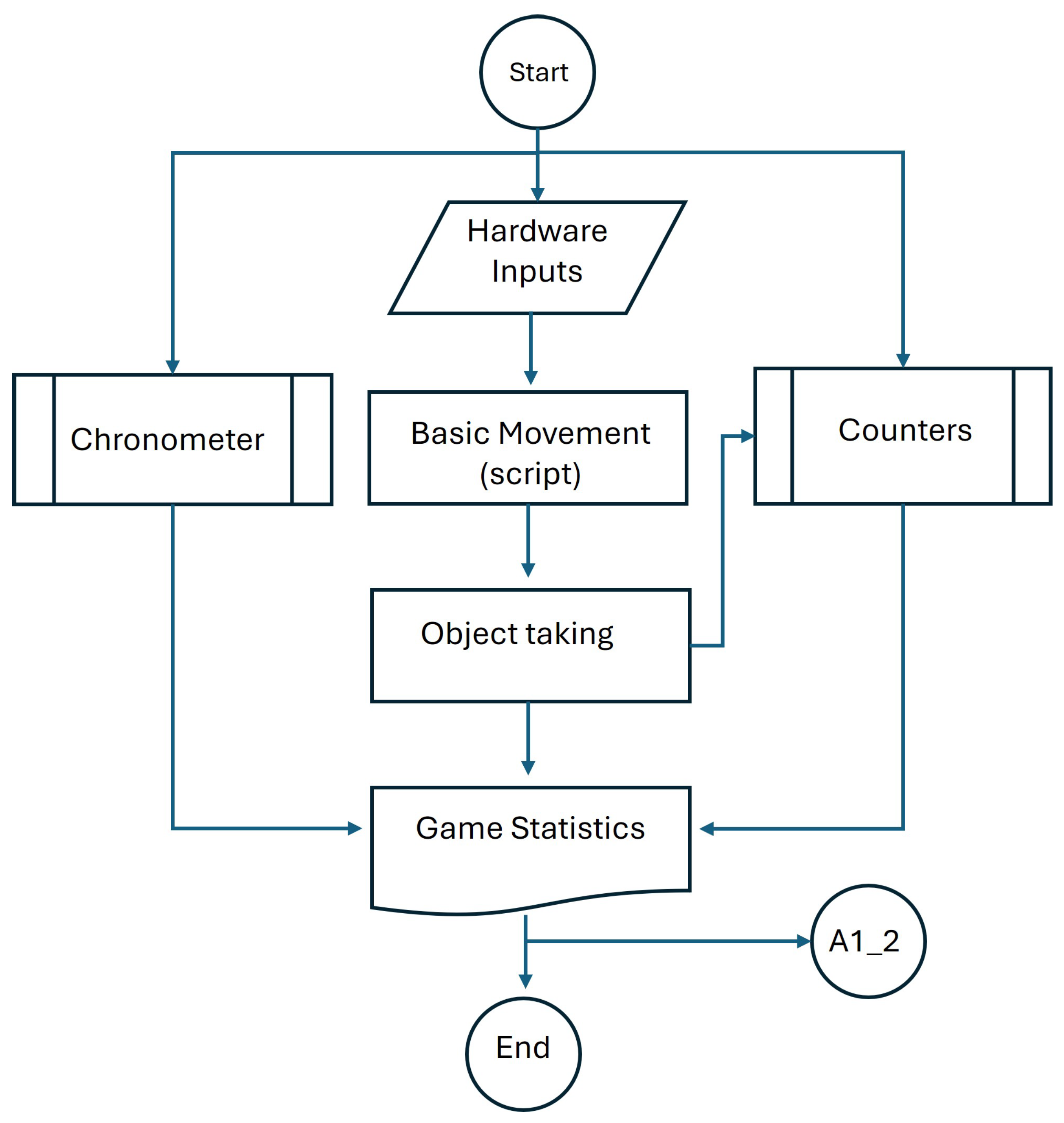

- Basic Movement (script): This script is directly linked to the virtual hand. It receives serial input from the hardware and translates it into positional data, determining both the movement direction and the type of grasp performed by the user.

- Chronometer (script): This script manages gameplay time by tracking the total duration of each session and implementing time-based challenges when required.

- Counters (script): This component assigns random targets within the virtual environment based on the selected difficulty level. It also maintains a real-time count of completed versus pending targets.
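
The Basic Movement description above can be pictured with a small parsing sketch. The platform's scripts are written in C# inside Unity 3D; Python is used here only for illustration. The comma-separated message layout (`dx,dy,dz,grasp`), the `HandUpdate` type, and both function names are hypothetical, since the actual serial protocol is not described. The grasp labels are taken from the grip types reported in the results table.

```python
from dataclasses import dataclass

# Grasp labels follow the grip types listed in the results table.
GRASP_TYPES = {"card", "cylindrical", "spherical", "hook", "grain", "pencil"}


@dataclass
class HandUpdate:
    dx: float
    dy: float
    dz: float
    grasp: str


def parse_serial_line(line):
    """Parse one hypothetical 'dx,dy,dz,grasp' message; return None if malformed."""
    parts = line.strip().split(",")
    if len(parts) != 4:
        return None
    try:
        dx, dy, dz = (float(p) for p in parts[:3])
    except ValueError:
        return None
    grasp = parts[3].strip().lower()
    if grasp not in GRASP_TYPES:
        return None
    return HandUpdate(dx, dy, dz, grasp)


def dominant_direction(update):
    """Name the axis with the largest displacement, prefixed by its sign."""
    axes = {"x": update.dx, "y": update.dy, "z": update.dz}
    axis = max(axes, key=lambda name: abs(axes[name]))
    if axes[axis] == 0:
        return "still"
    return ("+" if axes[axis] > 0 else "-") + axis


update = parse_serial_line("0.1,-0.4,0.05,Hook\n")
direction = dominant_direction(update)
```

Returning `None` for malformed lines lets the caller skip corrupted serial frames instead of moving the virtual hand on bad data.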

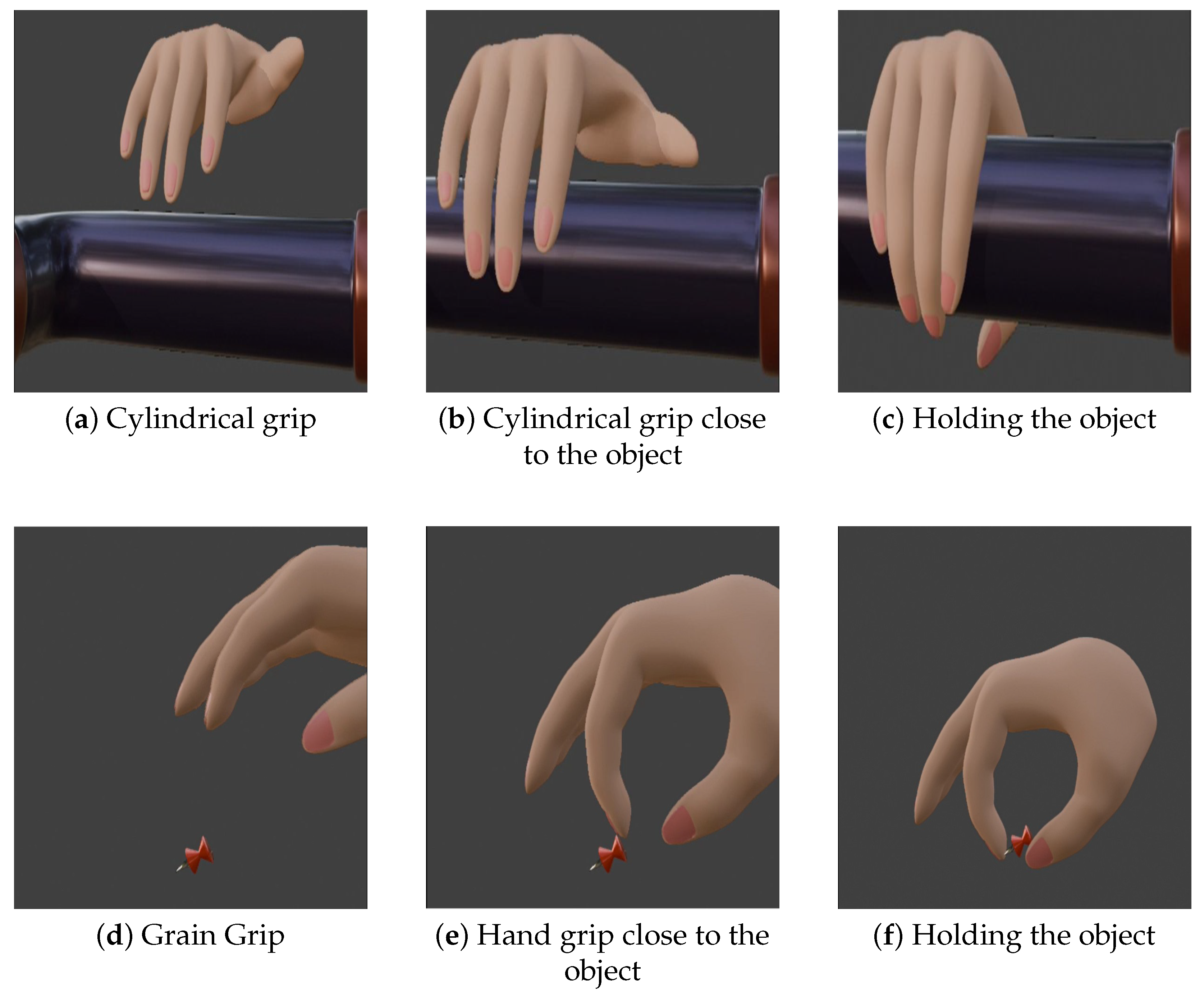

- Object Taking (script): This script detects the user’s interaction with virtual objects in real time. It triggers corresponding hand animations based on the detected grasp posture and contains a description of each object. It is continuously referenced by other scripts to validate effective object manipulation.

- Game Statistics (script): This script is responsible for collecting and temporarily storing key user performance metrics. It provides feedback to the Counters, Object Taking, and Chronometer components, allowing them to adapt their behavior and enabling the logging of relevant data for later analysis.
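
The interplay between the Counters and Game Statistics components described above might look roughly like the following. This is a Python illustration of the idea, not the Unity C# scripts themselves; the object names, the number of targets per level, and all class and method names are assumptions introduced for the example.

```python
import random

OBJECTS = ["card", "bottle", "ball", "bag", "bean", "pencil"]  # hypothetical names
TARGETS_PER_LEVEL = {1: 3, 2: 5, 3: 8}  # hypothetical difficulty scaling


class Counters:
    """Assigns random targets and tracks completed versus pending ones."""

    def __init__(self, level, rng=None):
        if rng is None:
            rng = random.Random()
        count = TARGETS_PER_LEVEL[level]
        self.pending = [rng.choice(OBJECTS) for _ in range(count)]
        self.completed = []

    def register_grasp(self, obj):
        """Move one matching target from pending to completed; return success."""
        if obj in self.pending:
            self.pending.remove(obj)
            self.completed.append(obj)
            return True
        return False


class GameStatistics:
    """Temporarily stores attempt counts for later logging."""

    def __init__(self):
        self.attempts = 0
        self.successes = 0

    def record(self, success):
        self.attempts += 1
        self.successes += int(success)

    def success_rate(self):
        return self.successes / self.attempts if self.attempts else 0.0


counters = Counters(level=2, rng=random.Random(0))
stats = GameStatistics()
stats.record(counters.register_grasp(counters.pending[0]))  # valid grasp
stats.record(counters.register_grasp("not-a-target"))       # missed grasp
```

Passing a seeded `random.Random` makes the target sequence reproducible, which can help when comparing sessions.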

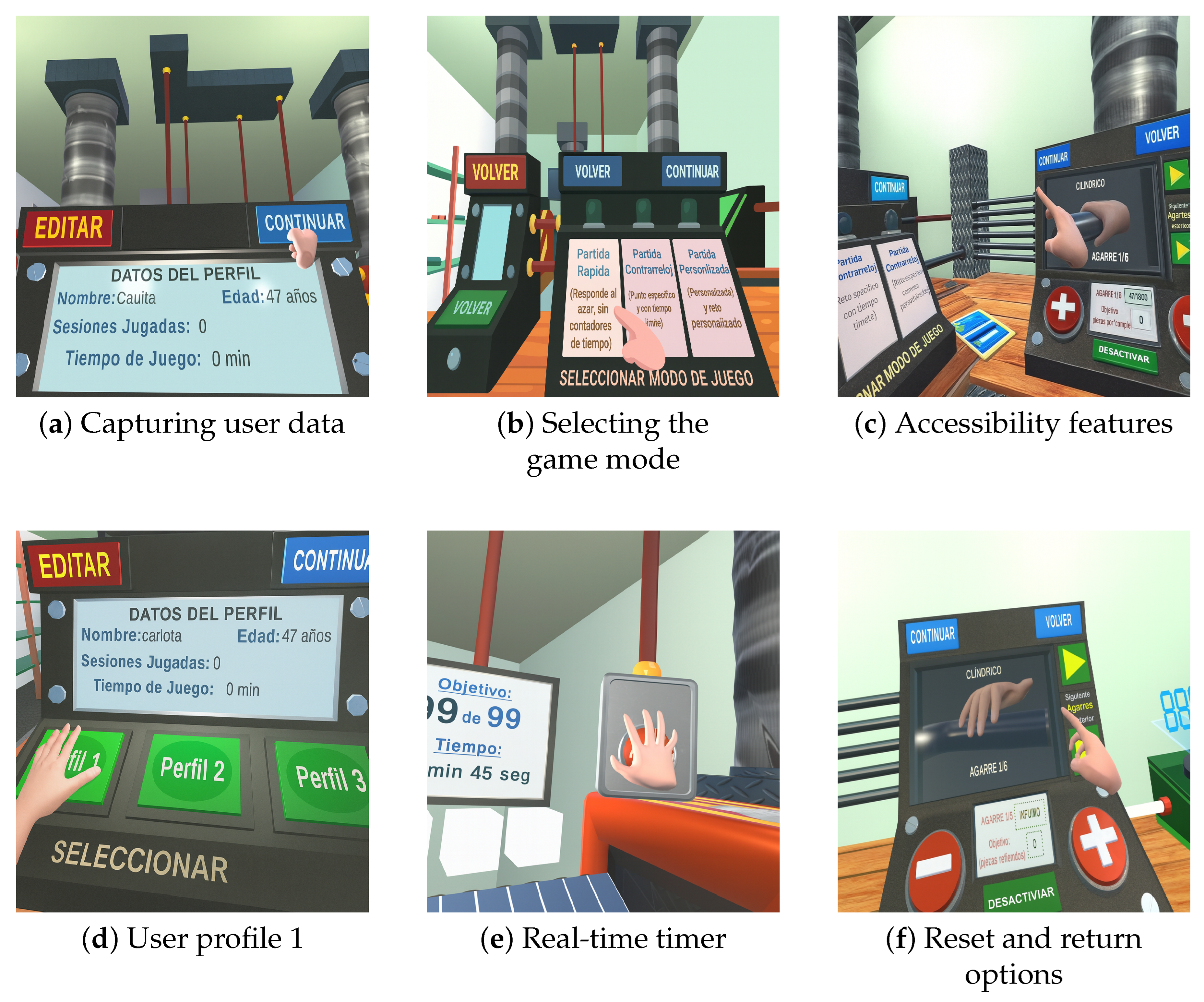

2.4. User Interface Elements

2.5. Handly Software Levels

- Level 1. Involves a random search for objects. There is no time limit; the purpose is to assess qualitatively whether the user can grasp the objects and to identify which ones pose greater difficulty.

- Level 2. Requires the user to grasp specific objects. This level does not end until the task is completed, and it also has no time limit, allowing the user to focus on movement accuracy and control.

- Level 3. Introduces time-limited challenges. The user must locate and grasp the objects within the allotted time, thus increasing the demands on speed, coordination, and decision-making, as shown in Figure 7b.
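
The three levels can be summarized as a small configuration table. The sketch below is one possible encoding in Python; the field names, the `level_finished` helper, the 60-second limit for Level 3, and the choice of specific (non-random) targets for Level 3 are assumptions, since those details are not stated above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LevelConfig:
    number: int
    random_targets: bool            # True: objects are searched at random
    time_limit_s: Optional[float]   # None means the level has no time limit


LEVELS = {
    1: LevelConfig(1, random_targets=True, time_limit_s=None),
    2: LevelConfig(2, random_targets=False, time_limit_s=None),
    # The 60 s limit and the non-random targets are placeholder assumptions.
    3: LevelConfig(3, random_targets=False, time_limit_s=60.0),
}


def level_finished(cfg, elapsed_s, pending_targets):
    """A level ends when its time limit expires or no targets remain."""
    if cfg.time_limit_s is not None and elapsed_s >= cfg.time_limit_s:
        return True
    return pending_targets == 0


timed_out = level_finished(LEVELS[3], elapsed_s=60.0, pending_targets=4)
still_running = not level_finished(LEVELS[2], elapsed_s=10_000.0, pending_targets=1)
```

Keeping the time limit optional means Levels 1 and 2 share the same completion check as Level 3 without special cases.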

2.6. User Interaction with the Handly System

3. Data Acquisition and Outcome Measures

Statistical Analysis

4. Results

4.1. Descriptive Performance Outcomes

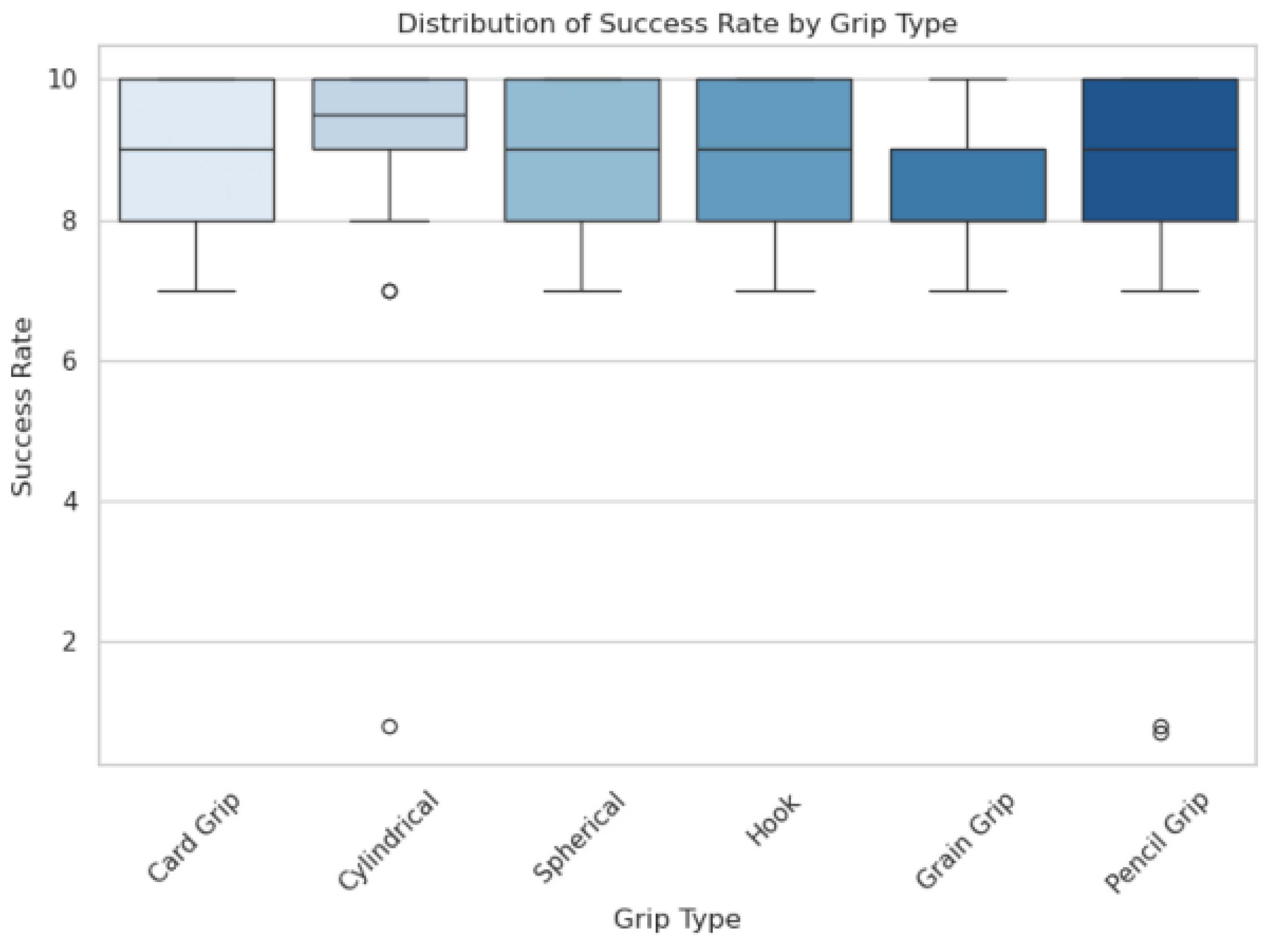

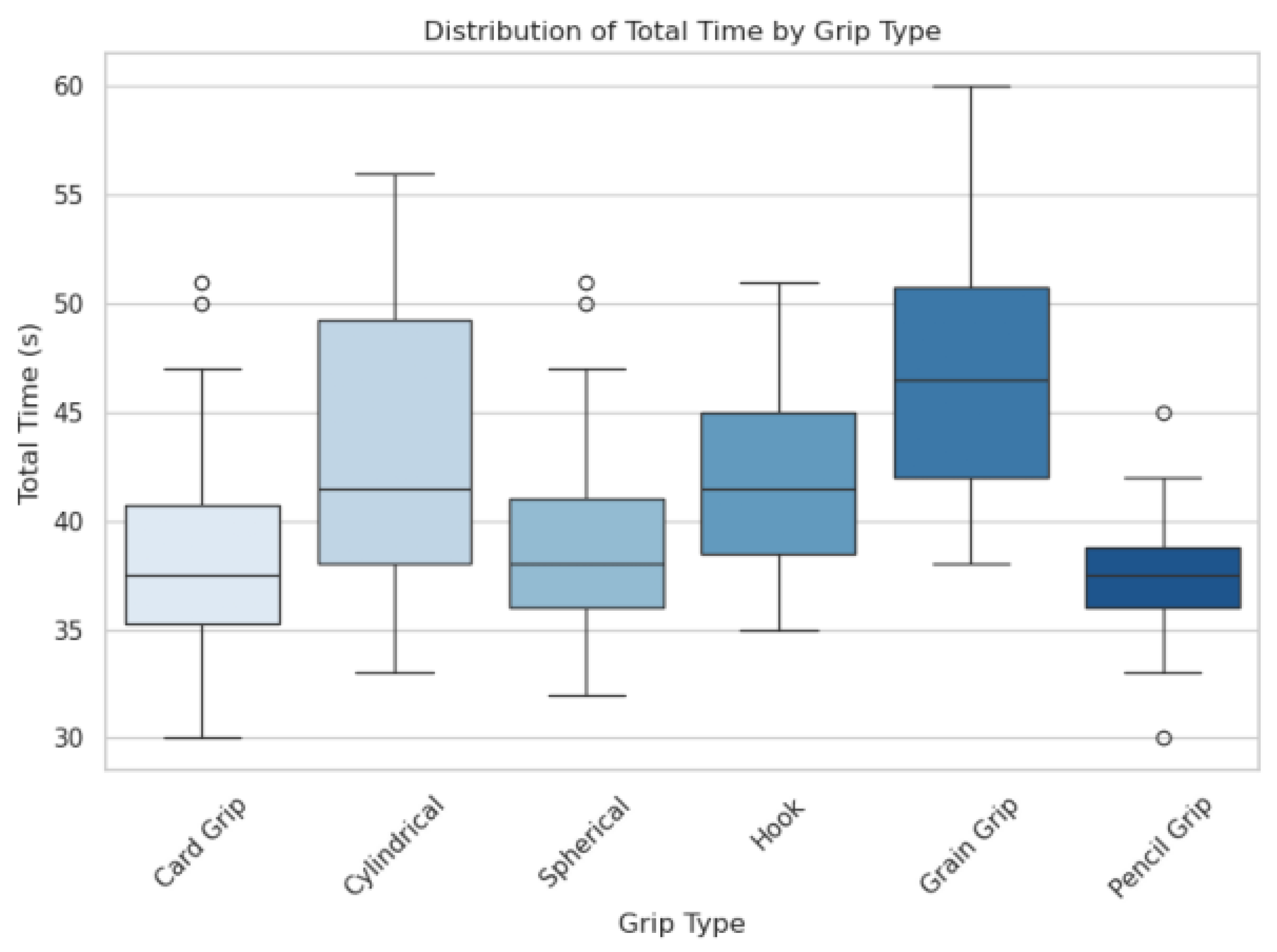

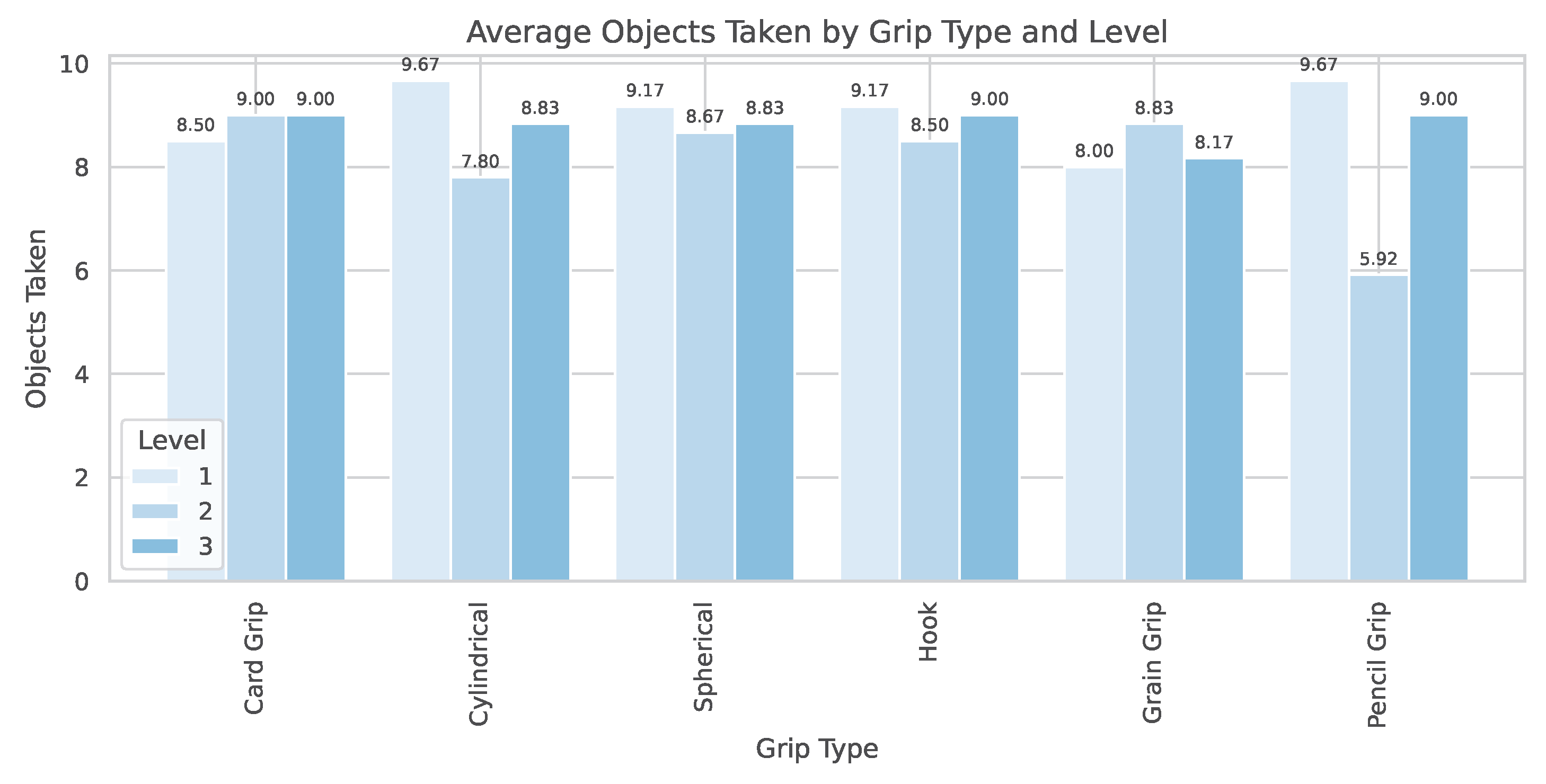

4.2. Performance Analysis by Grip Type

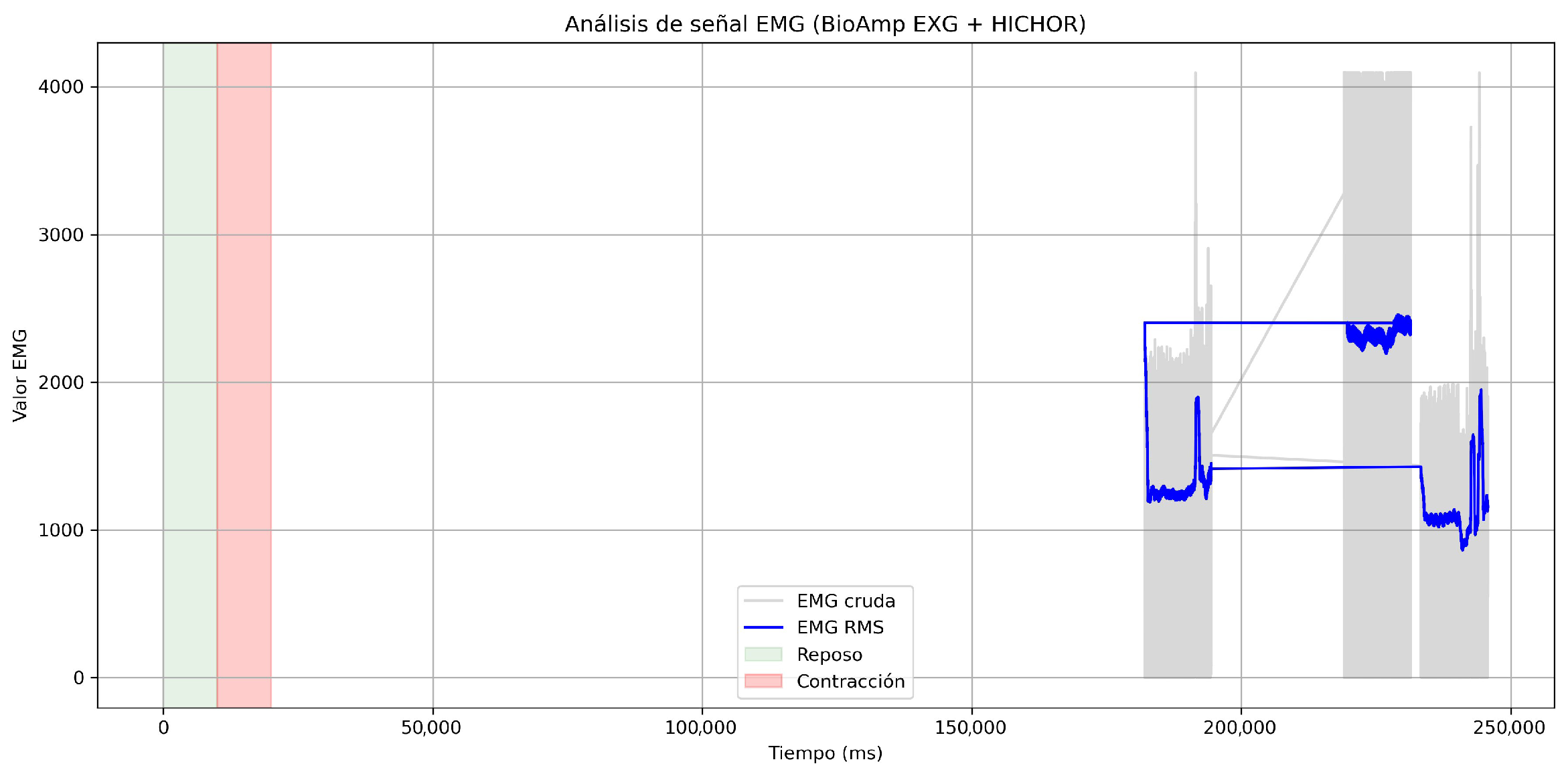

4.3. EMG Calibration and Dynamic Thresholding

4.4. Motor Performance and Grip Complexity in VR Tasks

4.5. Advantages of RMS-Based Dynamic Thresholding

4.6. Statistical Summary

4.7. Implications for Personalized Rehabilitation

4.8. Discussion

5. Conclusions

Limitations and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Participant | Sessions | Levels | Grip Types | Duration/Session (min) | Days |
|---|---|---|---|---|---|
| P1–P5, P10 | 4 | 3 | 4 | 20 | 12 |
| P6–P9 | 3 | 3 | 3 | 20 | 6 |

| Grip Type | Level | Mean Time (s) | Std Time (s) | Mean Success | Std Success | Mean Objects | Std Objects | Samples |
|---|---|---|---|---|---|---|---|---|
| Card Grip | 1 | 39.33 | 6.86 | 0.85 | 0.14 | 8.50 | 1.38 | 6 |
| Card Grip | 2 | 38.50 | 6.41 | 0.90 | 0.13 | 9.00 | 1.26 | 6 |
| Card Grip | 3 | 37.83 | 5.60 | 0.90 | 0.11 | 9.00 | 1.10 | 6 |
| Cylindrical | 1 | 45.50 | 6.92 | 0.97 | 0.08 | 9.67 | 0.82 | 6 |
| Cylindrical | 2 | 43.00 | 7.04 | 0.93 | 0.12 | 7.80 | 3.62 | 6 |
| Cylindrical | 3 | 40.50 | 6.60 | 0.88 | 0.10 | 8.83 | 0.98 | 6 |
| Spherical | 1 | 40.50 | 6.50 | 0.92 | 0.10 | 9.17 | 0.98 | 6 |
| Spherical | 2 | 39.83 | 5.49 | 0.87 | 0.10 | 8.67 | 1.03 | 6 |
| Spherical | 3 | 38.50 | 4.97 | 0.88 | 0.13 | 8.83 | 1.33 | 6 |
| Hook | 1 | 43.67 | 5.35 | 0.92 | 0.12 | 9.17 | 1.17 | 6 |
| Hook | 2 | 42.67 | 5.16 | 0.85 | 0.14 | 8.50 | 1.38 | 6 |
| Hook | 3 | 40.50 | 4.04 | 0.90 | 0.13 | 9.00 | 1.26 | 6 |
| Grain Grip | 1 | 47.83 | 8.91 | 0.80 | 0.09 | 8.00 | 0.89 | 6 |
| Grain Grip | 2 | 48.50 | 5.24 | 0.88 | 0.08 | 8.83 | 0.75 | 6 |
| Grain Grip | 3 | 44.83 | 4.83 | 0.82 | 0.12 | 8.17 | 1.17 | 6 |
| Pencil Grip | 1 | 38.33 | 3.72 | 0.97 | 0.05 | 9.67 | 0.52 | 6 |
| Pencil Grip | 2 | 37.17 | 4.26 | 0.90 | 0.13 | 5.92 | 4.13 | 6 |
| Pencil Grip | 3 | 37.00 | 2.19 | 0.90 | 0.09 | 9.00 | 0.89 | 6 |
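
Per-group summaries of this kind (mean and standard deviation by grip type and level) can be produced with a short grouping routine. The sketch below uses only the Python standard library; the record layout, the field names, and the use of the sample standard deviation (n − 1 denominator) are assumptions, and the two trial records are made-up placeholders, not study data.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarize(trials):
    """Group trials by (grip, level) and report mean/std of time and success."""
    groups = defaultdict(list)
    for trial in trials:
        groups[(trial["grip"], trial["level"])].append(trial)

    summary = {}
    for key, rows in sorted(groups.items()):
        times = [r["time_s"] for r in rows]
        rates = [r["success"] for r in rows]
        several = len(rows) > 1  # stdev needs at least two data points
        summary[key] = {
            "mean_time": round(mean(times), 2),
            "std_time": round(stdev(times), 2) if several else 0.0,
            "mean_success": round(mean(rates), 2),
            "std_success": round(stdev(rates), 2) if several else 0.0,
            "samples": len(rows),
        }
    return summary


# Placeholder records for illustration only.
example = summarize([
    {"grip": "Hook", "level": 1, "time_s": 40.0, "success": 0.9},
    {"grip": "Hook", "level": 1, "time_s": 44.0, "success": 1.0},
])
```

With six samples per cell, as in the table, the choice between sample and population standard deviation changes the reported spread noticeably, so it is worth stating which one is used.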

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Garcia-Villalba, L.A.; Rodríguez-Ramírez, A.G.; Rodríguez-Picón, L.A.; Méndez-González, L.C.; Ghasemlou, S.M. Interactive Platform for Hand Motor Rehabilitation Using Electromyography and Optical Tracking. Appl. Sci. 2025, 15, 12434. https://doi.org/10.3390/app152312434