Bridging Text and Knowledge: Explainable AI for Knowledge Graph Classification and Concept Map-Based Semantic Domain Discovery with OBOE Framework

Abstract

1. Introduction

- This study demonstrates that the OBOE framework principles can be extended to a problem fundamentally different from the one they were designed for. While the original OBOE framework explains text classification decisions in terms of the input text, our work establishes a novel XAI paradigm, beyond traditional supervised classification explanation, that addresses two interrelated challenges: (1) the explainable classification of knowledge graphs derived from text; and (2) the unsupervised discovery and explanation of semantic domains within these graphs, which makes this classification easier to understand.

- The integration of LLM-based reasoning and verification mechanisms inspired by QualIT to ensure coherence and prevent hallucination in generated explanations.

- A hybrid evaluation strategy combining quantitative clustering metrics with LLM-assisted qualitative assessment to achieve scalable explanation validation.

- An empirical demonstration across three corpora—Reuters Activities, BBC News, and Amazon Reviews—identifying 92 semantic domains across 17 topics with coherence and relevance scores close to 4.0/5.

- RQ1. To what extent can hierarchical clustering over concept-map-derived knowledge graphs reveal coherent and interpretable semantic domains within a broader conceptual domain?

- RQ2. How effectively can Large Language Models generate coherent and comprehensible natural-language explanations of these hierarchically identified semantic domains?

- RQ3. To what extent can structured prompting and verification mechanisms mitigate semantic inaccuracies or hallucinations in LLM-generated explanations?

- RQ4. How can LLM-assisted evaluation methods provide scalable and reliable assessments of explanation quality across multiple domains?

2. Related Work

2.1. Explainable AI in Text Classification

2.2. LLM-Enhanced Explainability

- Domain-specific adaptation: Zhao et al. [30] and related works provide taxonomies tailored to transformer-based classifiers, highlighting LLMs’ role in medical, legal, and social applications.

2.3. Knowledge Graphs and Concept Maps

2.4. Existing Frameworks and Limitations

- Lack of LLM integration: Most predate the rise of modern LLMs.

- Static explanations: Explanations are often one-shot and not verifiable.

- Limited semantic depth: Earlier frameworks overlook nuanced linguistic patterns.

- Minimal user interaction: Few allow interactive refinement of explanations (with the exception of explAIner).

2.5. Recent LLM-Based Frameworks

- Hybrid symbolic–LLM methods: Combining rule-based reasoning with LLMs to translate outputs into natural-language justifications [47].

2.6. Comparative Positioning

2.7. Research Gap

- H1. Explainable domain discovery over concept-map-derived knowledge graphs can reveal coherent and interpretable semantic domains.

- H2. Large Language Models can provide meaningful, verifiable natural-language explanations of these domains when guided by structured prompting and verification mechanisms.

- H3. Combining symbolic and statistical representations enhances both the transparency and the interpretability of knowledge organization systems.

3. Materials and Methods

3.1. Datasets and Other Resources

- Amazon Reviews [56]: comprises 3000 documents, evenly distributed between the Books and Pet Supplies categories (1500 documents per category). The class labels correspond to clearly distinct domains (literature vs. pet products).

- BBC News [57]: comprises 2500 news article summaries evenly distributed across five topical categories (business, entertainment, politics, sport, and tech; 500 documents per category). The documents are of moderate length (a few paragraphs). We included this dataset to examine performance in multi-topic scenarios and to assess how well the framework handles a broader range of topics with potentially overlapping domains (e.g., tech news may at times overlap with business).

- Reuters [58]: comprises 2400 articles, evenly distributed among four activities (600 articles each): Corporate_Earnings, International_Trade, Energy_Resources, and Agricultural_Markets. We included this dataset for two reasons: to examine performance in multi-topic scenarios with potentially more overlapping domains than the BBC News dataset, and to test the framework on the highly specialized linguistic characteristics of this corpus, which are not present in Amazon or BBC.

3.2. Framework Implementation

3.2.1. (A) Reordering

3.2.2. (B) Representation

| Algorithm 1: Semantic triple extraction algorithm |
|---|
| **Data:** document D, StanzaParser(), PatternRules(), stop words SW, threshold θ |
| **Result:** tripleSet T, linkedEntities E |
| begin |
| T ← ∅ // initialize empty triple set |
| candidateTriples ← ∅ |
| validTriples ← [] |
| sentences ← SegmentSentences(D) |
| // Stage 1: dependency- and pattern-based extraction |
| for sent ∈ sentences do |
| depGraph ← StanzaParser.parse(sent) // Stanza dependency parsing |
| T_dep ← ExtractFromDependencies(depGraph) |
| T_pat ← ExtractFromPatterns(sent, PatternRules) // pattern matching |
| if T_dep = ∅ and T_pat = ∅ then |
| fallback ← GenerateFallbackTriple(sent) |
| candidateTriples ← candidateTriples ∪ {fallback} |
| else |
| candidateTriples ← candidateTriples ∪ T_dep ∪ T_pat |
| end if |
| end for |
| // Stage 2: filtering and normalization |
| for (s, r, o) ∈ candidateTriples do |
| if s ∉ SW and o ∉ SW and len(s) > 1 and len(o) > 1 then |
| r ← RemoveAdverbs(r) // linguistic normalization |
| r ← RemoveModals(r) |
| r ← Lemmatize(r) |
| validTriples.Add((s, r, o)) |
| end if |
| end for |
| // Stage 3: consolidation |
| T ← ConsolidateOverlapping(validTriples) |
| // Stage 4: entity linking |
| E ← ∅ |
| for (s, r, o) ∈ T do |
| s_linked ← LinkToDBPedia(s, θ) // link if similarity ≥ θ |
| o_linked ← LinkToDBPedia(o, θ) |
| E ← E ∪ {s_linked, o_linked} |
| T.update((s_linked, r, o_linked)) // replace (s, r, o) with its linked form |
| end for |
| return T, E |
| end |

- (Apple, announced, results) ← subject–verb–object chain.

- (results, has_property, quarterly) ← adjectival modification.

- (results, exceeded, expectations) ← clausal relation.

- Pattern augmentation captures linguistic structures underrepresented in dependency parses: possessive constructions [(price, has_component, oil)], noun compounds [(technology, related_to, sector)], and prepositional phrases [(company, located_at, London)].

- Fallback generation ensures semantic coverage by extracting triples from primary noun phrases when parsing fails on fragments or headlines.

- Semantic filtering removes triples containing stop words, single-character artifacts, or duplicate subject-object pairs, retaining only semantically meaningful relations.

- Linguistic normalization standardizes predicates through lemmatization and removal of adverbial/modal modifiers (e.g., quickly announced → announce), reducing relation vocabulary while preserving core semantics.

- Triple consolidation merges semantically equivalent triples extracted from different grammatical structures (e.g., announced and has_announced both map to canonical announce).
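Stages 2 to 4 of Algorithm 1 can be illustrated with a minimal Python sketch that starts from already-extracted candidate triples (Stage 1 depends on Stanza and is omitted). Everything resource-related here is an assumption made for illustration: the stop-word, adverb, modal and auxiliary sets, the toy lemma table standing in for a real lemmatizer, the `PATTERN_RELATIONS` set, and the use of `difflib` string similarity against a small label list in place of actual DBpedia linking. Consolidation is simplified to order-preserving removal of identical normalized triples.

```python
import difflib

# Illustrative resources (assumptions); the paper's actual lists are not specified.
STOP_WORDS = {"it", "this", "that", "they", "which", "who"}
ADVERBS = {"quickly", "recently", "also", "already", "still"}
MODALS = {"can", "could", "may", "might", "must", "shall", "should", "will", "would"}
AUXILIARIES = {"has", "have", "had", "is", "are", "was", "were", "be", "been"}
# Relations produced by pattern rules are kept verbatim (hypothetical set).
PATTERN_RELATIONS = {"has_property", "has_component", "related_to", "located_at"}
# Toy lemma table standing in for a real lemmatizer.
LEMMAS = {"announced": "announce", "announces": "announce", "exceeded": "exceed"}


def normalize_relation(relation):
    """Stage 2 normalization: drop adverbs, modals and auxiliaries, then lemmatize."""
    if relation in PATTERN_RELATIONS:
        return relation
    tokens = relation.replace("_", " ").lower().split()
    kept = [t for t in tokens if t not in ADVERBS | MODALS | AUXILIARIES]
    if not kept:  # nothing left (e.g. a bare auxiliary): keep the original relation
        return relation
    return "_".join(LEMMAS.get(t, t) for t in kept)


def link_entity(term, labels, theta):
    """Stage 4: map a term to the most similar label if the similarity reaches theta."""
    best_label, best_score = term, 0.0
    for label in labels:
        score = difflib.SequenceMatcher(None, term.lower(), label.lower()).ratio()
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= theta else term


def process_triples(candidates, labels, theta=0.85):
    """Stages 2-4 of Algorithm 1 applied to already-extracted (s, r, o) candidates."""
    # Stage 2: semantic filtering plus relation normalization.
    valid = [
        (s, normalize_relation(r), o)
        for s, r, o in candidates
        if s.lower() not in STOP_WORDS and o.lower() not in STOP_WORDS
        and len(s) > 1 and len(o) > 1
    ]
    # Stage 3: consolidation as order-preserving removal of identical triples.
    consolidated = list(dict.fromkeys(valid))
    # Stage 4: entity linking against the (hypothetical) label list.
    linked = [
        (link_entity(s, labels, theta), r, link_entity(o, labels, theta))
        for s, r, o in consolidated
    ]
    entities = {e for s, _, o in linked for e in (s, o)}
    return linked, entities
```

With candidates such as `("Apple", "announced", "results")` and `("Apple", "has_announced", "results")`, both relations normalize to `announce` and collapse into a single triple, while a misspelled object like `"Londn"` is linked to the label `"London"` because its similarity (about 0.91) exceeds θ = 0.85.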

3.2.3. (C) Classification

- (C.1) Knowledge Graph Embedding Training. Triple representations are learned using four KGE models that cover three complementary paradigms: TransE [40] models relations as translations in vector space (minimizing ||s + r − o||); ConvKB [62] applies convolutional filters over concatenated embeddings; ComplEx [63] employs complex-valued embeddings with trilinear scoring; and DistMult [64] scores a bilinear interaction between entities and relations, which makes it symmetric and therefore unable to model antisymmetric relations. Together these models span distinct relational modeling approaches (translational, convolutional, and tensor factorization), providing architectural diversity for assessing embedding quality.


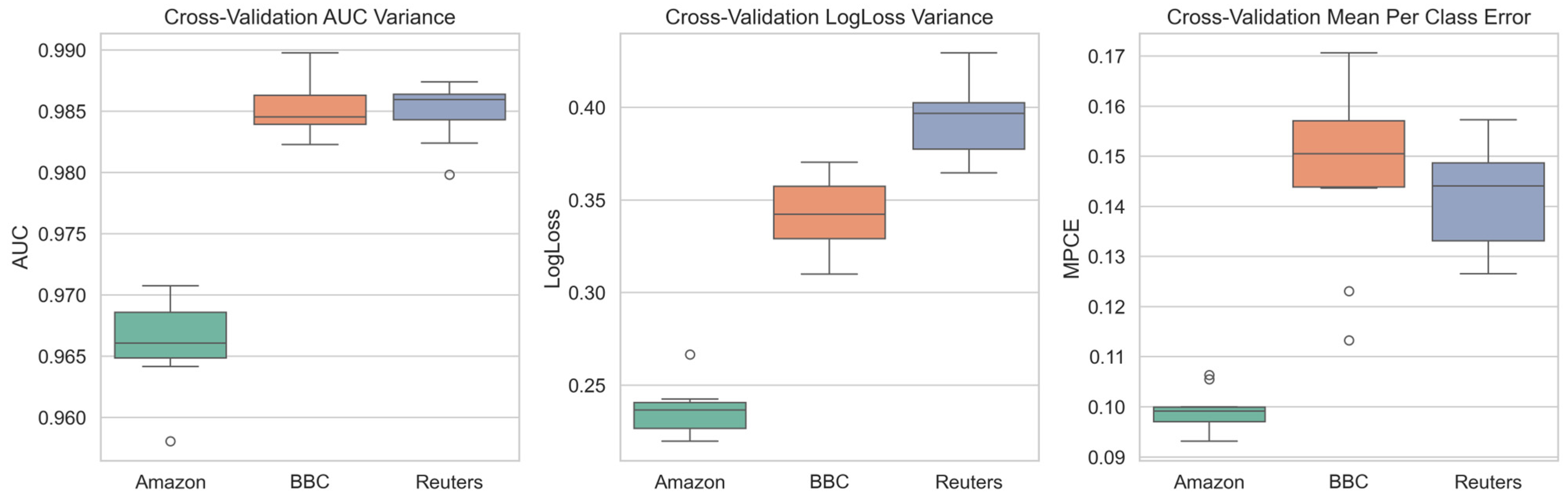

- (C.2) Topic Classification. The learned embeddings (specifically, subject and object vectors from validated triples) serve as features for XGBoost [65] classifiers that predict the topic assignments obtained in Section 3.2.1. XGBoost was selected for its computational efficiency and robust performance on structured features. During hyperparameter optimization, a randomized search with stratified cross-validation was used to tune the parameters with the greatest influence on predictive capacity and generalization: combinations of n_estimators, max_depth, learning_rate, subsample, colsample_bytree, min_child_weight, and gamma were explored, while the regularization parameters reg_alpha and reg_lambda were kept at their default values (0 and 1, respectively).
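The two classification steps can be sketched in dependency-light Python. The first part shows how three of the four KGE models score a triple (h, r, t), i.e. (s, r, o) in the notation above, with higher values meaning more plausible; it covers scoring only (no training loop or negative sampling), uses the L2 norm for TransE (L1 is an equally common choice), and omits ConvKB because its score depends on learned convolutional filters. The second part is a hypothetical sketch of the tuning setup: `triple_features` assumes one possible layout (concatenating subject and object vectors per triple), and the value lists in `SEARCH_SPACE` are illustrative, since the text names the tuned parameters but not their ranges.

```python
import random

import numpy as np


# --- (C.1) Scoring functions (higher = more plausible) ---
def transe_score(h, r, t):
    """TransE: negative L2 distance between the translated head h + r and the tail t."""
    return -float(np.linalg.norm(h + r - t))


def distmult_score(h, r, t):
    """DistMult: trilinear product sum(h * r * t); swapping h and t gives the same score."""
    return float(np.sum(h * r * t))


def complex_score(h, r, t):
    """ComplEx: Re(sum(h * r * conj(t))) with complex vectors, so direction matters."""
    return float(np.real(np.sum(h * r * np.conj(t))))


# --- (C.2) Features and randomized hyperparameter search ---
def triple_features(entity_vectors, triples):
    """Assumed layout: concatenate the subject and object vectors of each triple."""
    return np.stack(
        [np.concatenate([entity_vectors[s], entity_vectors[o]]) for s, _, o in triples]
    )


# Hypothetical value lists for the parameters named in the text.
SEARCH_SPACE = {
    "n_estimators": [100, 200, 400, 800],
    "max_depth": [3, 4, 6, 8],
    "learning_rate": [0.01, 0.05, 0.1, 0.2],
    "subsample": [0.6, 0.8, 1.0],
    "colsample_bytree": [0.6, 0.8, 1.0],
    "min_child_weight": [1, 3, 5],
    "gamma": [0.0, 0.1, 0.5],
}
FIXED = {"reg_alpha": 0.0, "reg_lambda": 1.0}  # XGBoost defaults, kept fixed


def sample_configs(n_iter, seed=0):
    """Draw n_iter random configurations; the regularization terms stay fixed."""
    rng = random.Random(seed)
    return [
        {**{name: rng.choice(values) for name, values in SEARCH_SPACE.items()}, **FIXED}
        for _ in range(n_iter)
    ]


def stratified_folds(labels, k=5, seed=0):
    """Assign a fold id to each sample so that every class is spread evenly over k folds."""
    rng = random.Random(seed)
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    folds = [0] * len(labels)
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, i in enumerate(indices):
            folds[i] = position % k
    return folds
```

Each sampled configuration would then be passed to `xgboost.XGBClassifier(**config)` and scored across the folds; scikit-learn's `RandomizedSearchCV` with a `StratifiedKFold` splitter packages the same loop when those libraries are available.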

3.2.4. (D) Explanations

| Algorithm 2: Domain identification algorithm |
|---|
| **Data:** triplets T, topic, similarityThreshold, number of unique triplets N, vocabulary V, DBpediaTerms dbpedia, NerTerms ner |
| **Result:** dictionaryOfTerms, similarityMatrix |
| begin |
| T ← T[topic] // triplets of the topic to explain |
| visitedTerms ← emptySet() |
| visited ← emptySet() // visited triplets |
| dictionaryOfTerms ← emptyDictionary() |
| numberOfTriplets ← 0 |
| while numberOfTriplets < N do |
| triplet ← GetNextTriplet(T, visited) // next non-visited triplet |
| visited ← visited ∪ {triplet} |
| numberOfTriplets ← numberOfTriplets + 1 |
| subject ← GetTermsFromSubject(triplet) |
| object ← GetTermsFromObject(triplet) |
| if subject ≠ Null and object ≠ Null and (NotDisjoint(subject, V) or NotDisjoint(object, V)) then |
| termsOfTriplet ← GetTerms(subject, object) |
| for term ∈ termsOfTriplet do |
| termsdb ← GetDBPediaResourceAndTypes(term, dbpedia) |
| termswordnet ← GetWNTermsAndTypes(term) |
| entityNer ← GetNerEntity(term, ner) |
| dictionaryOfTerms[term] ← NewDictionaryFrom(term, termsdb, termswordnet, entityNer) |
| end for |
| end if |
| end while |
| similarityMatrix ← BuildSimilarityMatrixWithEmbeddings(dictionaryOfTerms, similarityThreshold) |
| return dictionaryOfTerms, similarityMatrix |
| end |

- GetDBPediaResourceAndTypes: Retrieves ontological types and related concepts from DBpedia, providing structured semantic context.

- GetWNTermsAndTypes: Incorporates WordNet synonyms and hypernyms, expanding lexical coverage.

- GetNerEntity: Identifies named entities and their categories, capturing domain-specific terminology.
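The core of Algorithm 2 can be illustrated with a short Python sketch. It is not the authors' implementation: the DBpedia, WordNet and NER lookups are replaced by plain dictionaries (hypothetical stand-ins for `GetDBPediaResourceAndTypes`, `GetWNTermsAndTypes` and `GetNerEntity`), triplets are processed in list order instead of through `GetNextTriplet`, every word of a qualifying subject or object is taken as a term, and the similarity matrix is assumed to be cosine similarity between precomputed term embeddings, with values below the threshold set to zero.

```python
import numpy as np


def build_term_dictionary(triplets, vocabulary, dbpedia, wordnet, ner, n_max):
    """Enrich the terms of up to n_max triplets whose subject or object overlaps V."""
    dictionary = {}
    for subject, _, obj in triplets[:n_max]:
        subject_terms = set(subject.lower().split())
        object_terms = set(obj.lower().split())
        if not (subject_terms & vocabulary or object_terms & vocabulary):
            continue  # both sides are disjoint from the vocabulary V
        for term in sorted(subject_terms | object_terms):
            dictionary[term] = {
                "dbpedia": dbpedia.get(term, []),  # resources/types (stand-in lookup)
                "wordnet": wordnet.get(term, []),  # synonyms/hypernyms (stand-in lookup)
                "ner": ner.get(term),              # entity label, or None
            }
    return dictionary


def similarity_matrix(terms, embeddings, threshold):
    """Cosine similarity between term embeddings; values below threshold are set to 0."""
    vectors = np.stack([np.asarray(embeddings[t], dtype=float) for t in terms])
    unit = vectors / np.linalg.norm(vectors, axis=1, keepdims=True)
    sim = unit @ unit.T
    sim[sim < threshold] = 0.0
    return sim
```

For example, with V = {"oil", "energy"}, the triplet ("Saudi oil", related_to, "energy sector") contributes the terms saudi, oil, energy and sector, while a triplet such as ("the cat", eats, "fish") is skipped because neither side shares a term with V.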

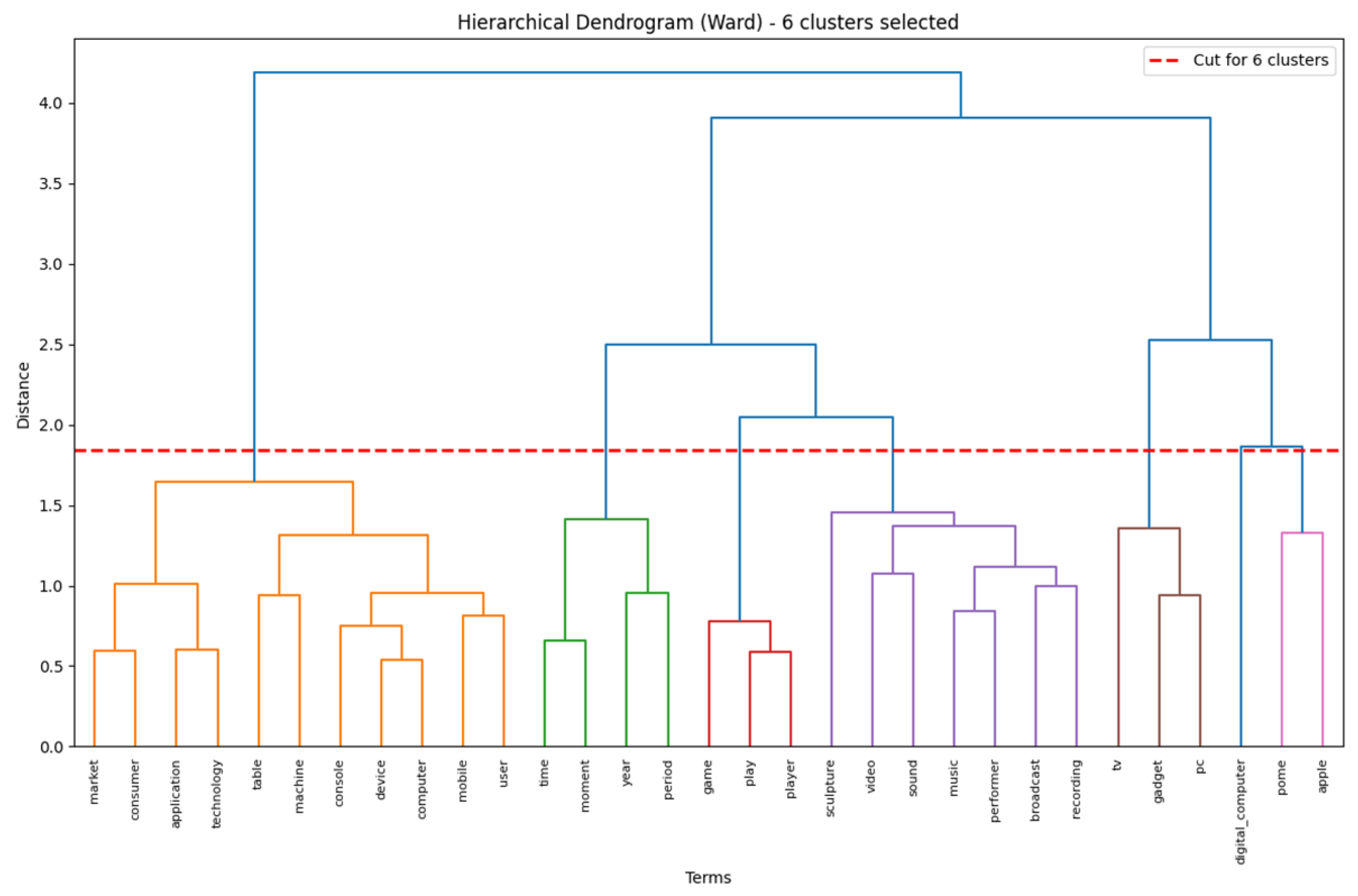

- Hierarchical clustering (Ward linkage) groups conceptually related terms into domains, enabling analysis of inter-domain relationships through dendrograms and facilitating identification of multiple granular domains within single topics.

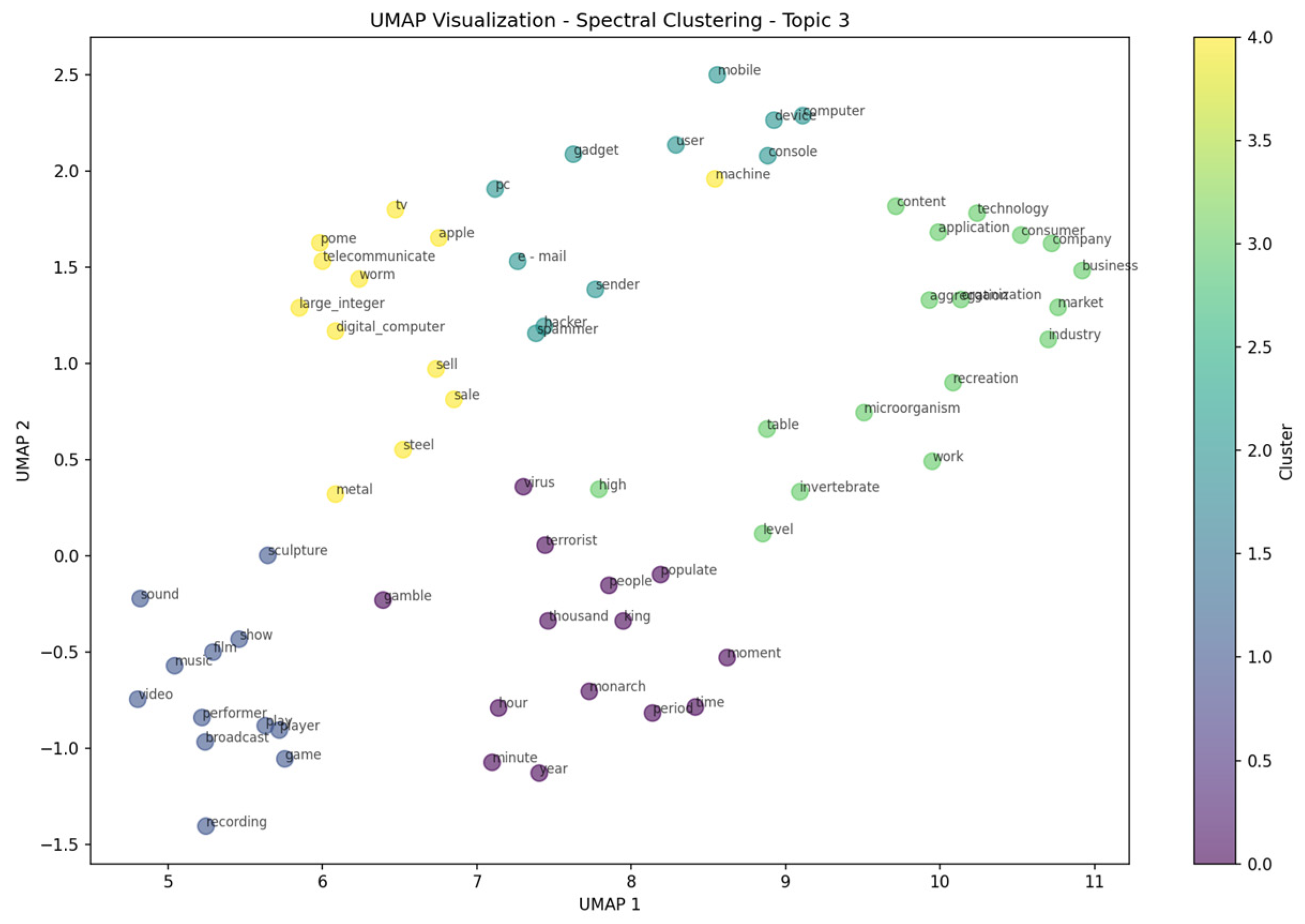

- Spectral clustering captures non-convex semantic structures. Similarity scores are normalized to the [0, 1] range to form an affinity matrix, and the eigendecomposition of the graph Laplacian projects terms into a lower-dimensional spectral space, where a standard clustering algorithm (e.g., k-means) can identify semantically coherent groups.
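Both clustering steps can be illustrated with from-scratch NumPy sketches, offered for intuition rather than as the framework's code. `ward_clusters` performs naive agglomeration over term vectors `X` (an assumed input), repeatedly merging the pair of clusters whose union causes the smallest increase in within-cluster sum of squares, which is Ward's criterion; in practice `scipy.cluster.hierarchy.linkage(X, method="ward")` would be used. `spectral_clusters` min-max normalizes a similarity matrix `S` to [0, 1], builds the symmetric normalized Laplacian, keeps the eigenvectors of the k smallest eigenvalues, row-normalizes them, and runs k-means. The deterministic farthest-point initialization of k-means is our assumption; scikit-learn's `SpectralClustering(affinity="precomputed")` is the library route.

```python
import numpy as np


def ward_clusters(X, k):
    """Naive O(n^3) agglomerative clustering with Ward's criterion down to k clusters."""
    clusters = [[i] for i in range(len(X))]
    while len(clusters) > k:
        best, best_cost = None, np.inf
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                na, nb = len(clusters[a]), len(clusters[b])
                ca, cb = X[clusters[a]].mean(axis=0), X[clusters[b]].mean(axis=0)
                # Increase in within-cluster sum of squares caused by merging a and b.
                cost = na * nb / (na + nb) * np.sum((ca - cb) ** 2)
                if cost < best_cost:
                    best, best_cost = (a, b), cost
        a, b = best
        clusters[a] = clusters[a] + clusters[b]
        del clusters[b]  # b > a, so index a is unaffected
    labels = np.empty(len(X), dtype=int)
    for c, members in enumerate(clusters):
        labels[members] = c
    return labels


def kmeans(Y, k, n_iter=100):
    """Plain k-means with deterministic farthest-point initialization."""
    centroids = [Y[0]]
    for _ in range(1, k):
        dist = np.min([np.sum((Y - c) ** 2, axis=1) for c in centroids], axis=0)
        centroids.append(Y[np.argmax(dist)])
    C = np.array(centroids)
    for _ in range(n_iter):
        labels = np.argmin(((Y[:, None, :] - C[None, :, :]) ** 2).sum(axis=2), axis=1)
        new_C = np.array([Y[labels == j].mean(axis=0) if np.any(labels == j) else C[j]
                          for j in range(k)])
        if np.allclose(new_C, C):
            break
        C = new_C
    return labels


def spectral_clusters(S, k, n_iter=100):
    """Normalized spectral clustering on a similarity matrix S."""
    A = (S - S.min()) / (S.max() - S.min())  # min-max normalization to [0, 1]
    np.fill_diagonal(A, 0.0)                  # no self-loops in the affinity graph
    d_inv_sqrt = 1.0 / np.sqrt(np.maximum(A.sum(axis=1), 1e-12))
    L_sym = np.eye(len(A)) - d_inv_sqrt[:, None] * A * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)        # eigenvalues in ascending order
    U = eigvecs[:, :k]                        # spectral embedding of the terms
    U = U / np.maximum(np.linalg.norm(U, axis=1, keepdims=True), 1e-12)
    return kmeans(U, k, n_iter)
```

On a block-structured similarity matrix (high similarity within two groups of terms, low between them), the spectral embedding maps each group to a single point, so k-means separates the groups exactly.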

- Key Phrase Extraction (Prompt A.1, Appendix A): The model receives cluster terms and topic context, extracting 2–3 key phrases (2–4 words each) that capture central semantic relationships. For example, given terms {Arabian, oil, Saudi, energy, sector} in a technology topic, the model generates phrases like “Arabian oil” and “Saudi energy sector”.

- Semantic Verification (Prompt A.2, Appendix A): Generated phrases undergo validation to ensure: (i) grounding in actual cluster terms (no entity fabrication); (ii) alignment with topic domain; (iii) absence of spurious connections. The model returns binary validation (VALID/INVALID) with justification. Failed validations trigger phrase revision.

- Explanation Synthesis (Prompt A.3, Appendix A): Validated phrases are synthesized into coherent explanatory text covering three aspects—semantic coherence of terms, domain relevance to topic context, and clustering justification. The model generates 2–3 sentence descriptions maintaining factual alignment with cluster content.

- Structured Output Generation: Explanations are formatted as JSON objects containing: explanation text, reasoning narrative justifying quality scores, key phrases list, and preliminary scores (1–5 scale) for coherence, relevance, and coverage. For instance:

- Semantic Coherence: Evaluates whether terms exhibit logical unity and the explanation captures their conceptual relationships. The prompt instructs: “Are the terms semantically related? Does the explanation capture their unity?”

- Domain Relevance: Assesses connection to broader topic context and identification of domain-specific relationships. The prompt asks: “How relevant are these terms to the [topic] domain? Are domain-specific relationships identified?”

- Coverage Completeness: Determines whether main cluster aspects and key semantic relationships are adequately addressed. The prompt queries: “Does the explanation cover the main aspects? Is the scope appropriate?”
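The structured output and the grounding requirement (i) of the verification step can be complemented with cheap deterministic checks. The sketch below assumes the JSON keys `explanation`, `reasoning`, `key_phrases` and `scores` (with `coherence`, `relevance`, `coverage` inside `scores`); the text lists these contents but not the exact key names. `ungrounded_phrases` is a swapped-in lexical heuristic, not the LLM-based verification prompt: it flags any key phrase containing a word that is absent from the cluster terms. It is deliberately strict (inflected forms or paraphrases are also flagged), so it suits a pre-filter before Prompt A.2 rather than a replacement for it.

```python
import json

REQUIRED_KEYS = {"explanation", "reasoning", "key_phrases", "scores"}
SCORE_KEYS = {"coherence", "relevance", "coverage"}


def parse_explanation(raw):
    """Parse the LLM's JSON answer and validate the assumed schema and 1-5 scores."""
    data = json.loads(raw)
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    missing_scores = SCORE_KEYS - set(data["scores"])
    if missing_scores:
        raise ValueError(f"missing scores: {sorted(missing_scores)}")
    for name in SCORE_KEYS:
        value = data["scores"][name]
        if not 1 <= value <= 5:
            raise ValueError(f"{name} score out of range: {value}")
    return data


def ungrounded_phrases(key_phrases, cluster_terms):
    """Key phrases that use any word absent from the cluster terms (revision candidates)."""
    vocabulary = {term.lower() for term in cluster_terms}
    return [p for p in key_phrases if any(w.lower() not in vocabulary for w in p.split())]
```

With the cluster terms {Arabian, oil, Saudi, energy, sector}, the phrases "Arabian oil" and "Saudi energy sector" pass, whereas a phrase such as "OPEC production" would be returned as ungrounded and sent back for revision.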

4. Results

- Topic Coherence (C_v) has been shown to correlate strongly with human judgments of topic interpretability [18], with correlation coefficients of 0.7–0.8 across multiple studies. It is computed from the N words with the highest probability for a given topic, using normalized pointwise mutual information (NPMI) to build context vectors for those words and cosine similarity between these vectors as the confirmation measure.

- Silhouette Coefficient [66], computed for each item from its mean intra-cluster distance (a) and its mean distance to the nearest other cluster (b) as s = (b − a)/max(a, b), which indicates cluster compactness and separation.
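Both metrics can be sketched in NumPy to make their definitions concrete. `cv_coherence` is a simplified C_v: it estimates probabilities from document-level co-occurrence instead of the boolean sliding window of the original measure, computes pairwise NPMI among the top words, and averages the cosine similarity between each word's NPMI vector and the summed vector of the whole word set (one-set segmentation). Scores from gensim's `CoherenceModel(coherence="c_v")` will therefore differ. `silhouette` implements s = (b − a)/max(a, b) with Euclidean distances, averaged over all points, assigning 0 to singleton clusters by convention.

```python
import math

import numpy as np


def npmi_matrix(top_words, documents):
    """Pairwise NPMI of the top words, using document-level co-occurrence."""
    docs = [set(d) for d in documents]
    n = len(docs)
    prob = {w: sum(w in d for d in docs) / n for w in top_words}
    m = len(top_words)
    npmi = np.zeros((m, m))
    for i, wi in enumerate(top_words):
        for j, wj in enumerate(top_words):
            p_ij = sum(wi in d and wj in d for d in docs) / n
            if p_ij == 0:
                npmi[i, j] = -1.0  # never co-occur: lower bound of NPMI
            elif p_ij == 1:
                npmi[i, j] = 1.0   # always co-occur: upper bound of NPMI
            else:
                npmi[i, j] = math.log(p_ij / (prob[wi] * prob[wj])) / -math.log(p_ij)
    return npmi


def cv_coherence(top_words, documents):
    """Simplified C_v: mean cosine between each word's NPMI vector and the summed vector."""
    vectors = npmi_matrix(top_words, documents)
    total = vectors.sum(axis=0)  # context vector of the whole word set
    cosines = [v @ total / (np.linalg.norm(v) * np.linalg.norm(total) + 1e-12)
               for v in vectors]
    return float(np.mean(cosines))


def silhouette(X, labels):
    """Mean silhouette s = (b - a) / max(a, b) over all points (Euclidean distances)."""
    X, labels = np.asarray(X, dtype=float), np.asarray(labels)
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    scores = []
    for i in range(len(X)):
        same = labels == labels[i]
        same[i] = False
        if not same.any():
            scores.append(0.0)  # convention for singleton clusters
            continue
        a = dist[i, same].mean()
        b = min(dist[i, labels == c].mean() for c in np.unique(labels) if c != labels[i])
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))
```

Words that always appear together obtain identical NPMI vectors and a coherence close to 1, whereas words that never co-occur cancel each other out in the summed vector and score close to 0.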

4.1. (A) Reordering

4.2. (C) Classification

4.3. (D) Explanation

4.3.1. (D.1) Domains Identification

Topic Coherence

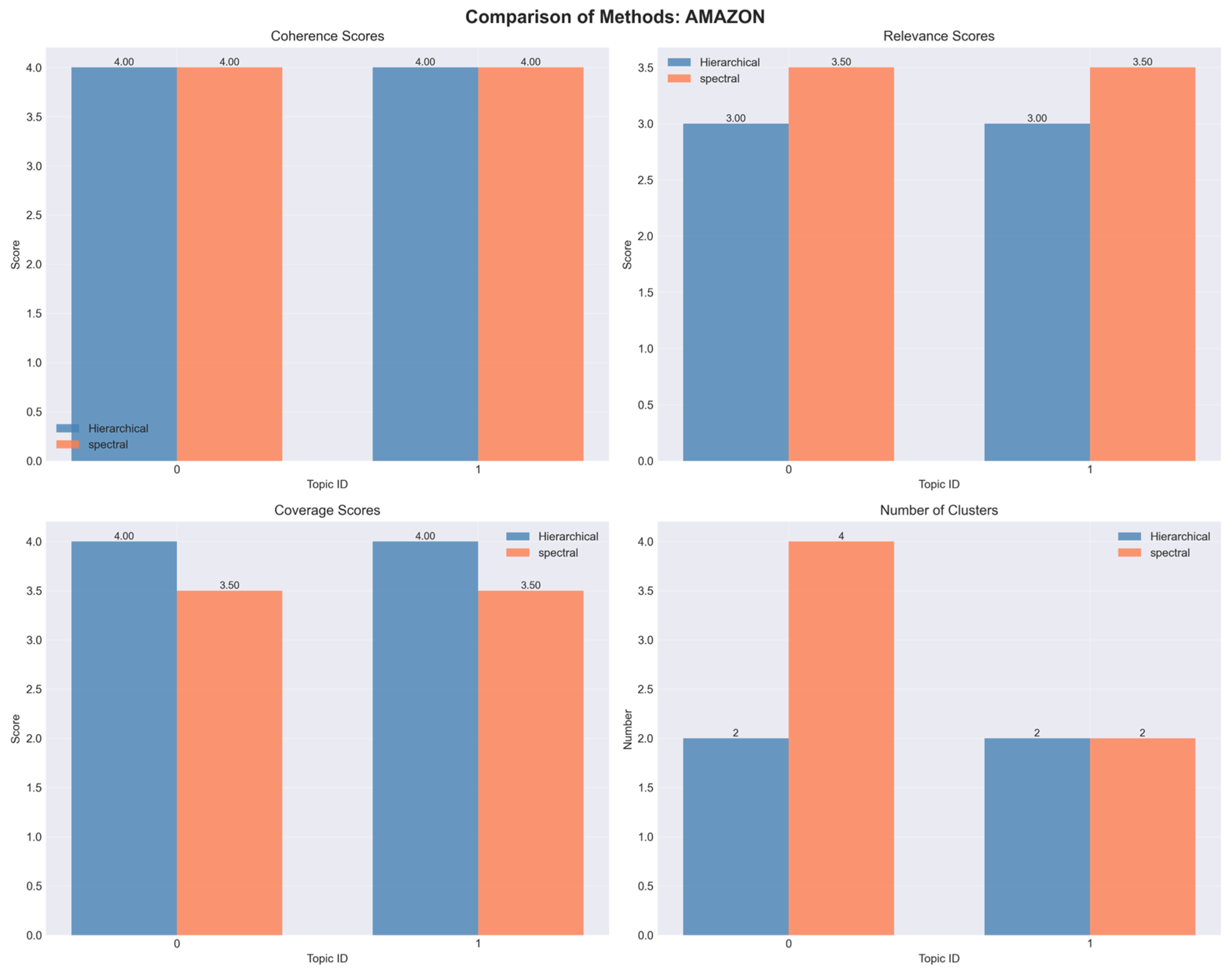

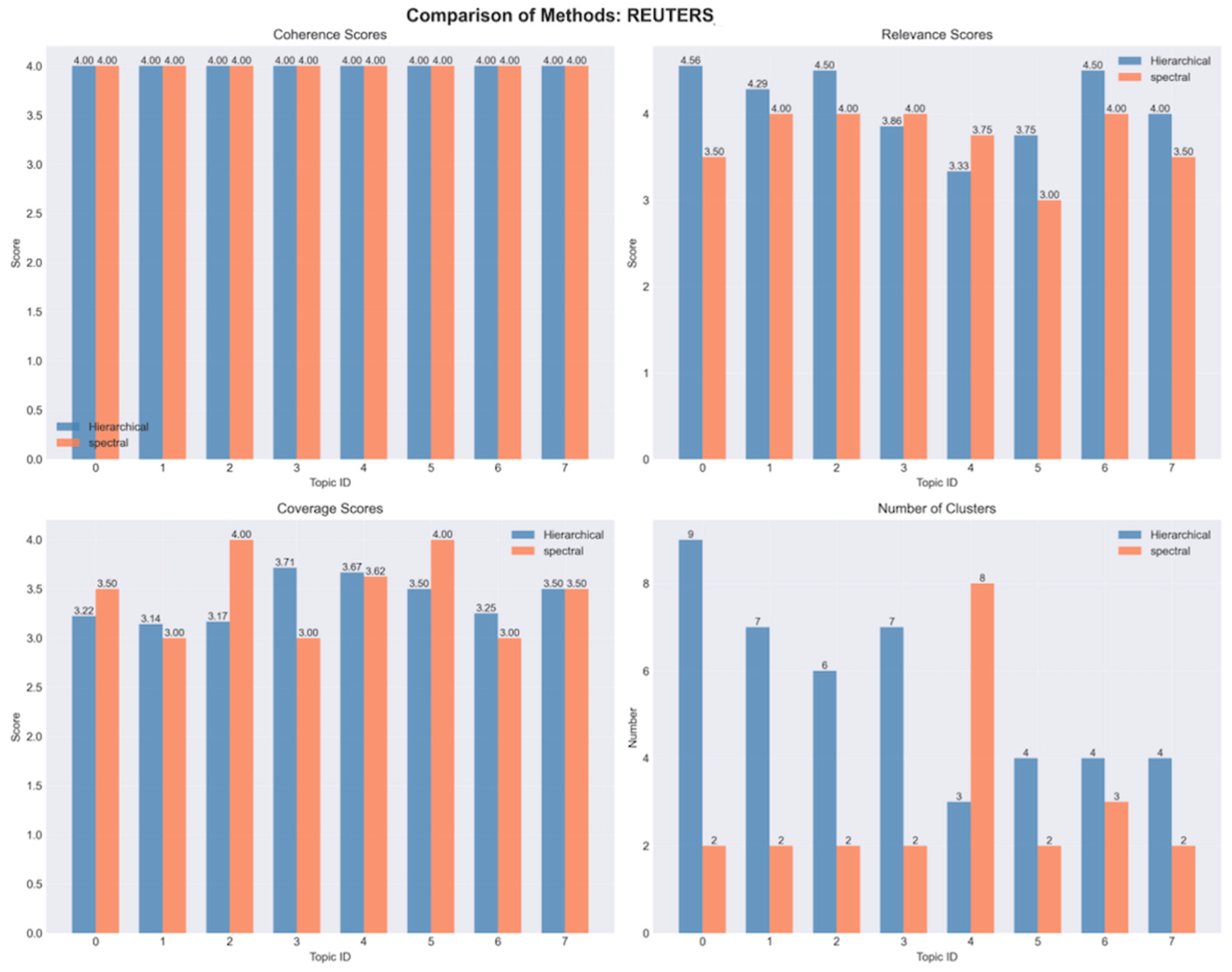

Domains Interpretation and Natural Language Evaluation

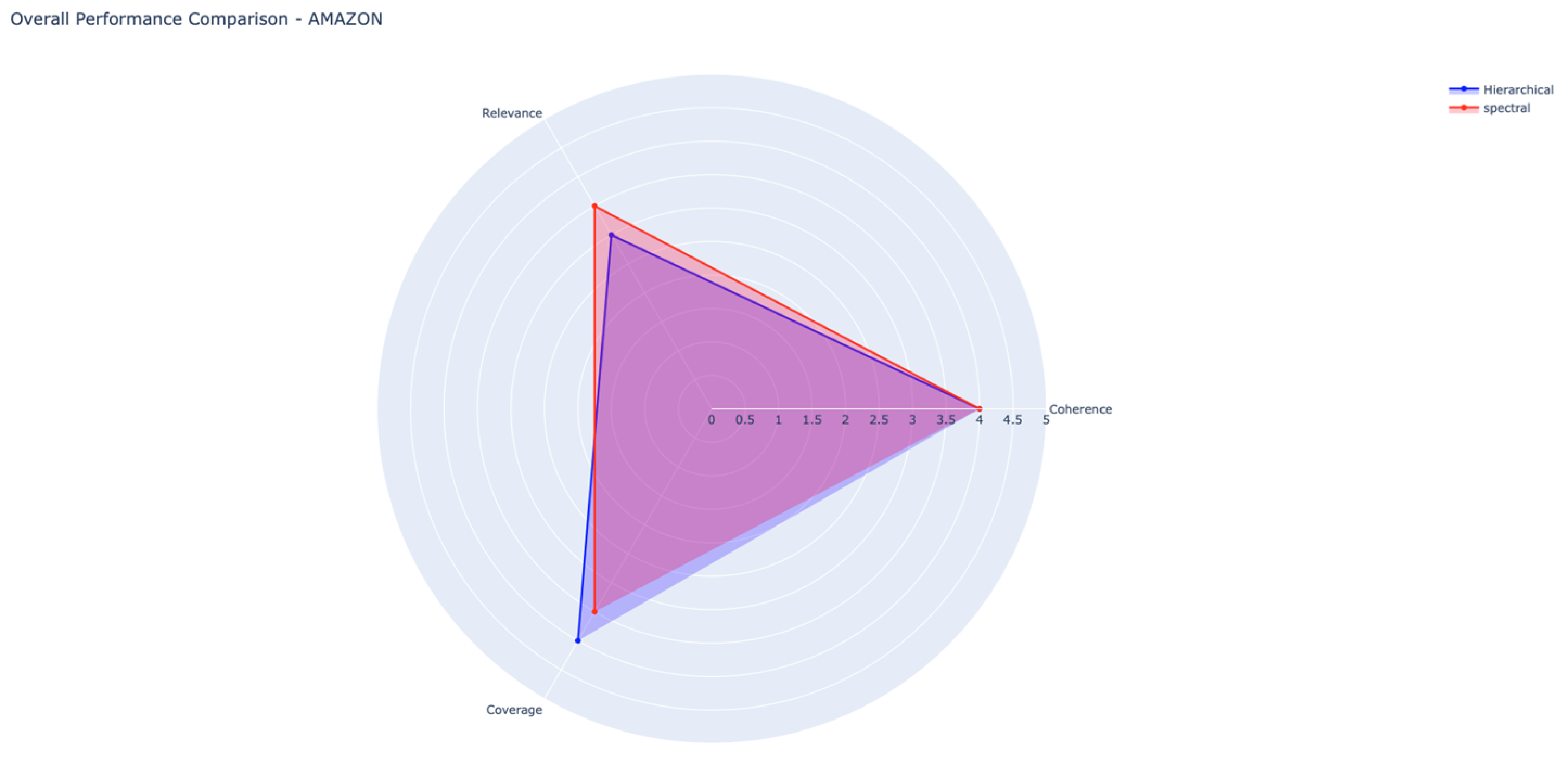

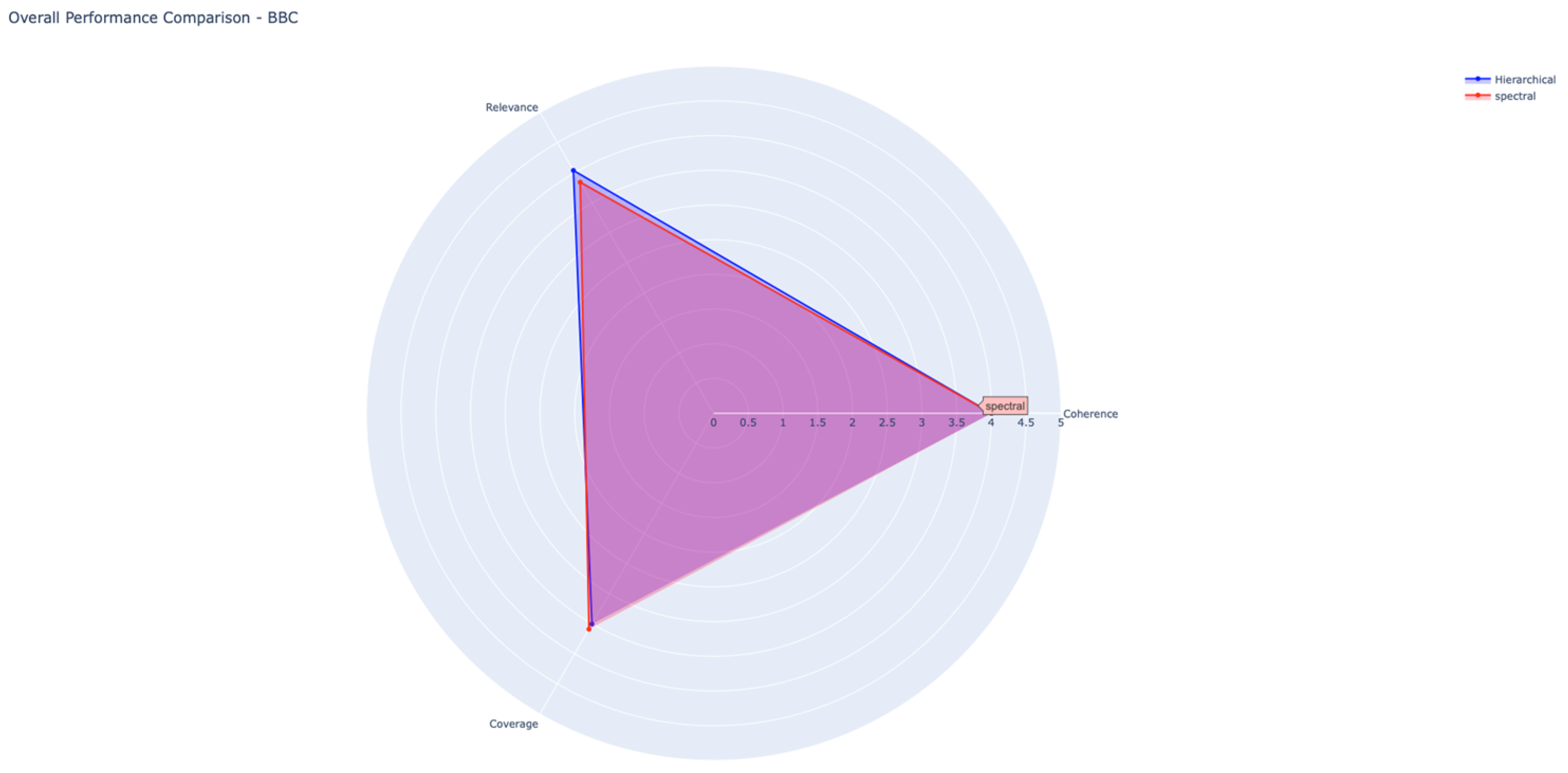

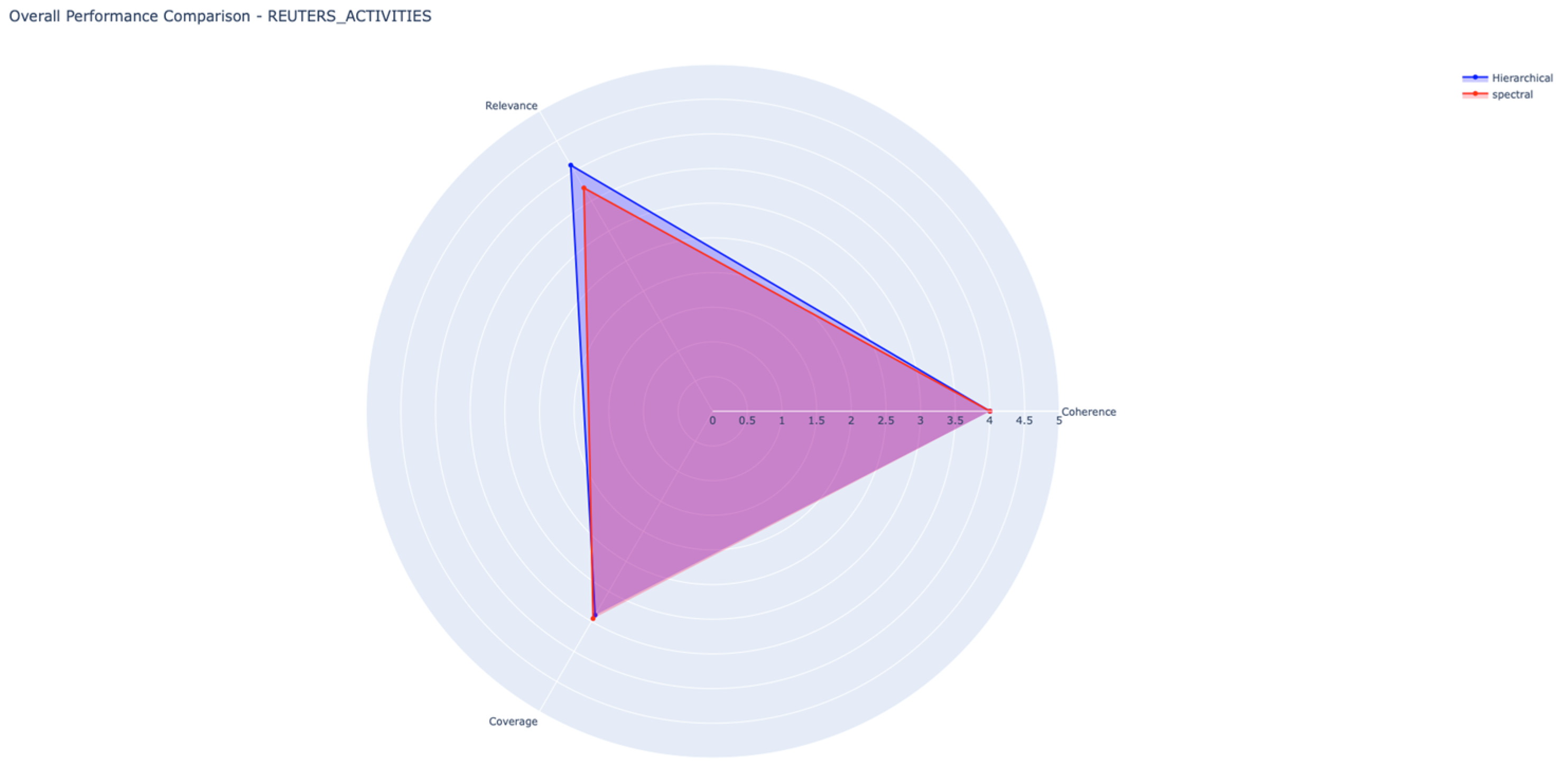

- Coherence, measuring the internal semantic consistency of terms within a cluster;

- Relevance, indicating alignment between the explanation and the contextual domain;

- Coverage, reflecting the degree to which the explanation represents the semantic diversity of the cluster.

Summary of LLM Evaluation Metrics

5. Discussion

5.1. RQ1/H1—Coherence and Interpretability of Semantic Domains

5.2. RQ2/H2—Natural-Language Explanation Generation via LLMs

- Coherence is consistently high across datasets (4.0 ± 0.0). This is what one would expect when explanations are grounded in an already clustered, semantically filtered vocabulary, and it suggests that the embeddings encode well-defined conceptual spaces.

- Relevance and coverage, by contrast, vary with the domain and the clustering algorithm.

5.3. RQ3/H3—Mitigating Semantic Inaccuracies Through Symbolic–Statistical Integration

5.4. RQ4—Scalable and Reliable Evaluation of Explanations

5.5. Integrated Discussion and Theoretical Implications

- Hierarchical and spectral clustering produce semantically meaningful domains (RQ1/H1).

- LLM-based NLG modules generate coherent and verifiable explanations (RQ2/H2).

- Symbolic–statistical integration mitigates hallucination and ensures factual grounding (RQ3/H3).

- LLM-based evaluation provides scalable and consistent assessments (RQ4).

5.6. Evaluation Metrics and Corpus Size Considerations

5.7. Design Implications for Multi-Stage XAI Systems

- Stage-appropriate metrics are essential. Intermediate components (topic modeling, clustering) should be evaluated using computational measures (coherence, silhouette), whereas final explanations require human-aligned metrics.

- Architectural decoupling enhances both discriminative performance and interpretability by allowing independent optimization of structure and language generation.

- Progressive refinement demonstrates that moderate intermediate metrics can still yield high interpretability at the output level.

- Scalability: OBOE supports effective explainable classification with moderate-sized corpora, a practical advantage for resource-limited applications.

5.8. Knowledge Discovery Potential

5.9. Robustness to Topic-Level Characteristics

- Topic granularity emerged as the most influential factor (Spearman ρ = 0.760, p < 0.001, 95% CI [0.42, 0.91]). Topics with finer-grained semantic domain differentiation (8–13 clusters) achieved higher explanation quality (M = 4.05) compared to coarse-grained ones (2–3 clusters, M = 3.67), representing an 11% improvement.


- Cluster overlap (mean Jaccard similarity = 0.012 ± 0.015) exhibited a relevance–coverage trade-off: overlap positively correlated with relevance (ρ = 0.665, p = 0.004) but negatively with coverage (ρ = −0.565, p = 0.018).


- Topic entropy and topic concentration had negligible effects (|ρ| < 0.1), suggesting that explanation quality is driven primarily by structural rather than distributional properties. Despite moderate noise in entity extraction (≈42% singleton entities), knowledge-graph embeddings and vocabulary-level aggregation effectively mitigated its impact.
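The statistics reported above can be reproduced in spirit with a small NumPy sketch; it is not guaranteed to match the reported values. `spearman_with_ci` computes Spearman's ρ as the Pearson correlation of average ranks (ties share their mean rank) and a 95% interval via the Fisher z-transform with standard error 1/√(n − 3). This is one common approximation; the text does not state how its confidence interval was obtained (a bootstrap, for example, would give slightly different bounds). `mean_pairwise_jaccard` averages the term-overlap ratio |A ∩ B| / |A ∪ B| over all pairs of clusters. `scipy.stats.spearmanr` is the usual library route for ρ and its p-value.

```python
import math

import numpy as np


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values))
    i = 0
    while i < len(values):
        j = i
        while j + 1 < len(values) and values[order[j + 1]] == values[order[i]]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_with_ci(x, y, z_crit=1.96):
    """Spearman rho (Pearson on ranks) with a Fisher z-transform confidence interval."""
    rho = float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])
    z = math.atanh(max(min(rho, 0.999999), -0.999999))  # clip to keep atanh finite
    half_width = z_crit / math.sqrt(len(x) - 3)
    return rho, (math.tanh(z - half_width), math.tanh(z + half_width))


def mean_pairwise_jaccard(clusters):
    """Average Jaccard similarity of term sets over all pairs of clusters."""
    sets = [set(c) for c in clusters]
    pairs = [(a, b) for i, a in enumerate(sets) for b in sets[i + 1:]]
    return float(np.mean([len(a & b) / len(a | b) for a, b in pairs]))
```

Note that with n = 17 topics the interval is wide (half-width of about 0.52 on the z scale), which is consistent with the broad 95% CI reported for topic granularity.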

5.10. Limitations and Future Directions

- Mixed-topic clusters: automatic clustering occasionally produced heterogeneous groups, requiring user refinement.

- Metric reliance: while coherence and silhouette are useful, they may not capture all dimensions of explanation quality.

- Computational constraints: Although OBOE incorporates language models in its workflow, limited computational capacity prevented us from exploring scenarios in which fine-tuning these models could further enhance the framework, for instance in triple generation, in the retrieval of context-adapted explanations, or in analyzing coherence variance across embedding libraries. This nonetheless represents a promising direction for future work, outlined below.

- Deeper integration of semantic resources (DBpedia, WordNet) to enhance hybrid explanations.

- Extending QualIT-based evaluation to systematically combine human feedback with metric-based results.

- Standardized protocols for evaluating multi-stage XAI systems, ensuring that metrics align with the architectural layer and objective being assessed.

- Integration with Retrieval-Augmented Generation (RAG) to incorporate external, dynamically updated knowledge sources into the explanation process. This would extend OBOE’s capabilities beyond static corpora, enabling richer semantic coverage, real-time adaptation, and enhanced support for knowledge discovery.

- The use of fine-tuned language models would enable the framework to integrate context-specific explanations and enriched triples, thereby enhancing both adaptability and interpretability across diverse domains.

6. Conclusions

- Exploratory potential: The clustering and explanation modules revealed latent semantic domains and conceptual relations within corpora, highlighting the framework’s capacity for both interpretability and knowledge exploration.

- Hybrid analytical workflow: The combination of graph embeddings (TransE), hierarchical and spectral clustering, and LLM-based explanation generation produced robust, interpretable, and human-readable results.

- Quantitative and qualitative validation: The integration of classification metrics (with TransE embeddings, Macro AUC > 0.96, Mean Per Class Error ≤ 0.2, and LogLoss ≤ 0.4 across the three corpora) with explanation metrics (Coherence = 4.0, Relevance ≈ 3.9, Coverage ≈ 3.5) provided a multi-level and consistent evaluation of interpretability.

- Cross-domain stability: Comparable results across the Reuters, BBC, and Amazon corpora indicate that the approach generalizes well, with minimal variance (≤0.3) in LLM-based evaluation scores.

- Complementary clustering behavior: Hierarchical clustering generated more relevant and interpretable topic structures, while spectral clustering offered broader coverage—together reinforcing the semantic coherence of the embedding space.

- Scalable explanation evaluation: The use of QualIT-aligned LLM evaluation (Prompt A.4) proved to be a reliable proxy for human judgment, enabling systematic benchmarking of explanation quality across domains.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| CM | Concept Map |

| KG | Knowledge Graph |

| KGE | Knowledge Graph Embedding |

| LDA | Latent Dirichlet Allocation |

| LLM | Large Language Model |

| NER | Named Entity Recognition |

| NLG | Natural Language Generation |

| NLP | Natural Language Processing |

| XAI | eXplainable Artificial Intelligence |

| XGB | eXtreme Gradient Boosting |

Appendix A

Appendix A.1. Key Phrase Extraction Prompt

Appendix A.2. Semantic Verification Prompt

Appendix A.3. Explanation Synthesis Prompt

Appendix A.4. Explanation Evaluator

Appendix B

Appendix B.1. Classification Metrics Comparison

| Dataset | KGE Model | Dim. | CV Accuracy | Test Accuracy | CV Macro AUC | CV LogLoss (±SD) | CV MPCE (±SD) | Statistical Validation (CV Folds) |
|---|---|---|---|---|---|---|---|---|
| Amazon | TransE | 32 | 0.901 ± 0.004 | 0.889 | 0.966 ± 0.003 | 0.236 ± 0.013 | 0.099 ± 0.004 | Wilcoxon p = 1.000 → ns |
| | ComplEx | 16 | 0.882 ± 0.007 | 0.866 | 0.954 ± 0.004 | 0.274 ± 0.013 | 0.119 ± 0.007 | Wilcoxon p = 0.438 → ns |
| | ConvKB | 8 | 0.843 ± 0.008 | 0.836 | 0.931 ± 0.006 | 0.331 ± 0.013 | 0.158 ± 0.007 | Wilcoxon p = 0.625 → ns |
| | DistMult | 20 | 0.855 ± 0.008 | 0.846 | 0.940 ± 0.006 | 0.310 ± 0.014 | 0.146 ± 0.008 | Wilcoxon p = 0.625 → ns |
| BBC | TransE | 32 | 0.897 ± 0.006 | 0.888 | 0.985 ± 0.002 | 0.342 ± 0.018 | 0.147 ± 0.017 | Wilcoxon p = 0.438 → ns |
| | ComplEx | 32 | 0.859 ± 0.007 | 0.854 | 0.977 ± 0.003 | 0.439 ± 0.019 | 0.191 ± 0.017 | Wilcoxon p = 0.188 → ns |
| | ConvKB | 12 | 0.849 ± 0.007 | 0.846 | 0.972 ± 0.004 | 0.493 ± 0.021 | 0.212 ± 0.020 | Wilcoxon p = 0.125 → ns |
| | DistMult | 20 | 0.865 ± 0.005 | 0.862 | 0.977 ± 0.003 | 0.443 ± 0.017 | 0.188 ± 0.018 | Wilcoxon p = 0.438 → ns |
| Reuters | TransE | 32 | 0.880 ± 0.008 | 0.870 | 0.985 ± 0.002 | 0.395 ± 0.020 | 0.142 ± 0.010 | Wilcoxon p = 1.000 → ns |
| | ComplEx | 24 | 0.860 ± 0.007 | 0.857 | 0.979 ± 0.003 | 0.469 ± 0.023 | 0.170 ± 0.010 | Wilcoxon p = 1.000 → ns |
| | ConvKB | 12 | 0.820 ± 0.011 | 0.812 | 0.970 ± 0.005 | 0.593 ± 0.034 | 0.216 ± 0.019 | Wilcoxon p = 0.063 → ns |
| | DistMult | 20 | 0.842 ± 0.009 | 0.832 | 0.974 ± 0.004 | 0.536 ± 0.029 | 0.197 ± 0.013 | Wilcoxon p = 0.313 → ns |

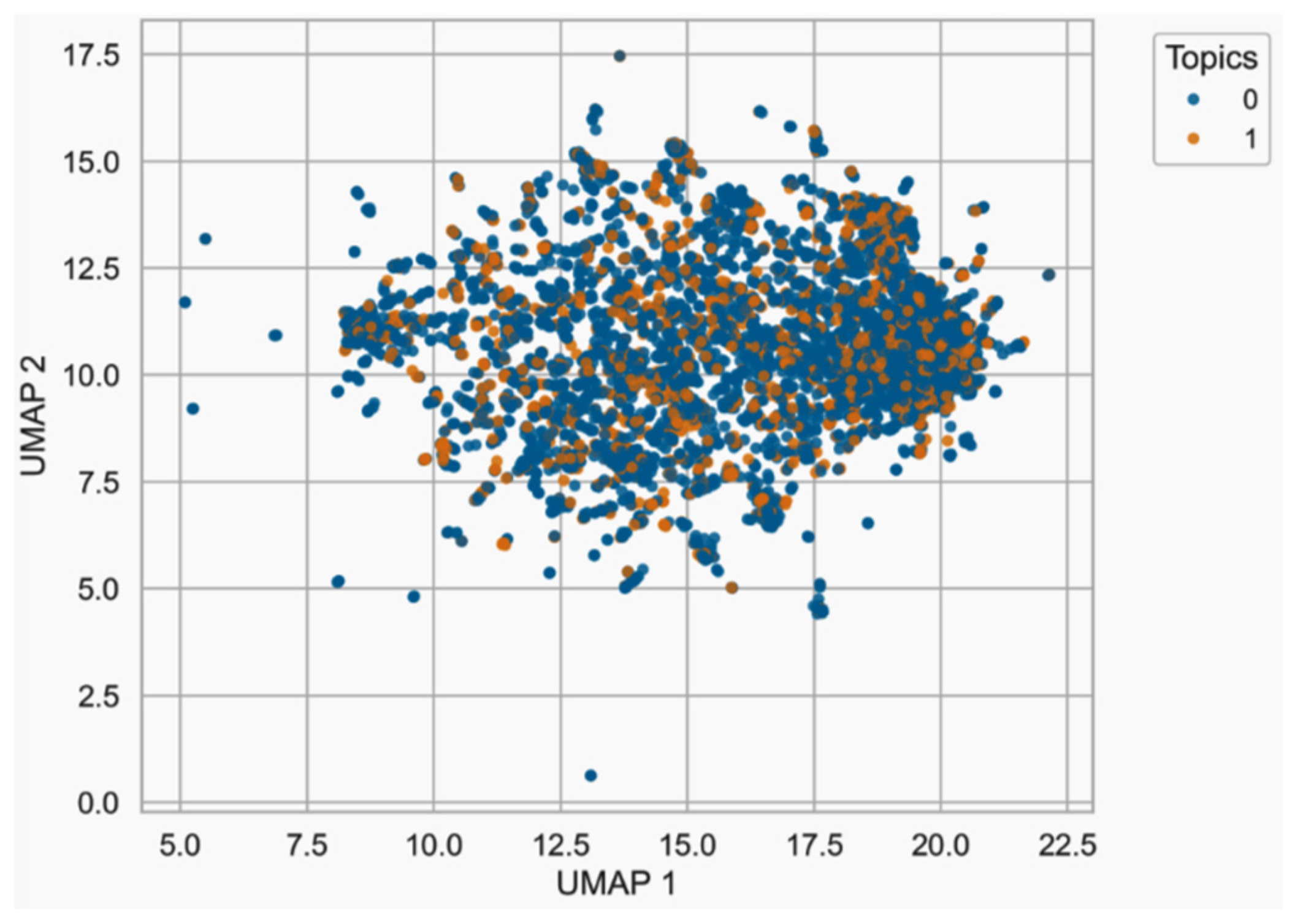

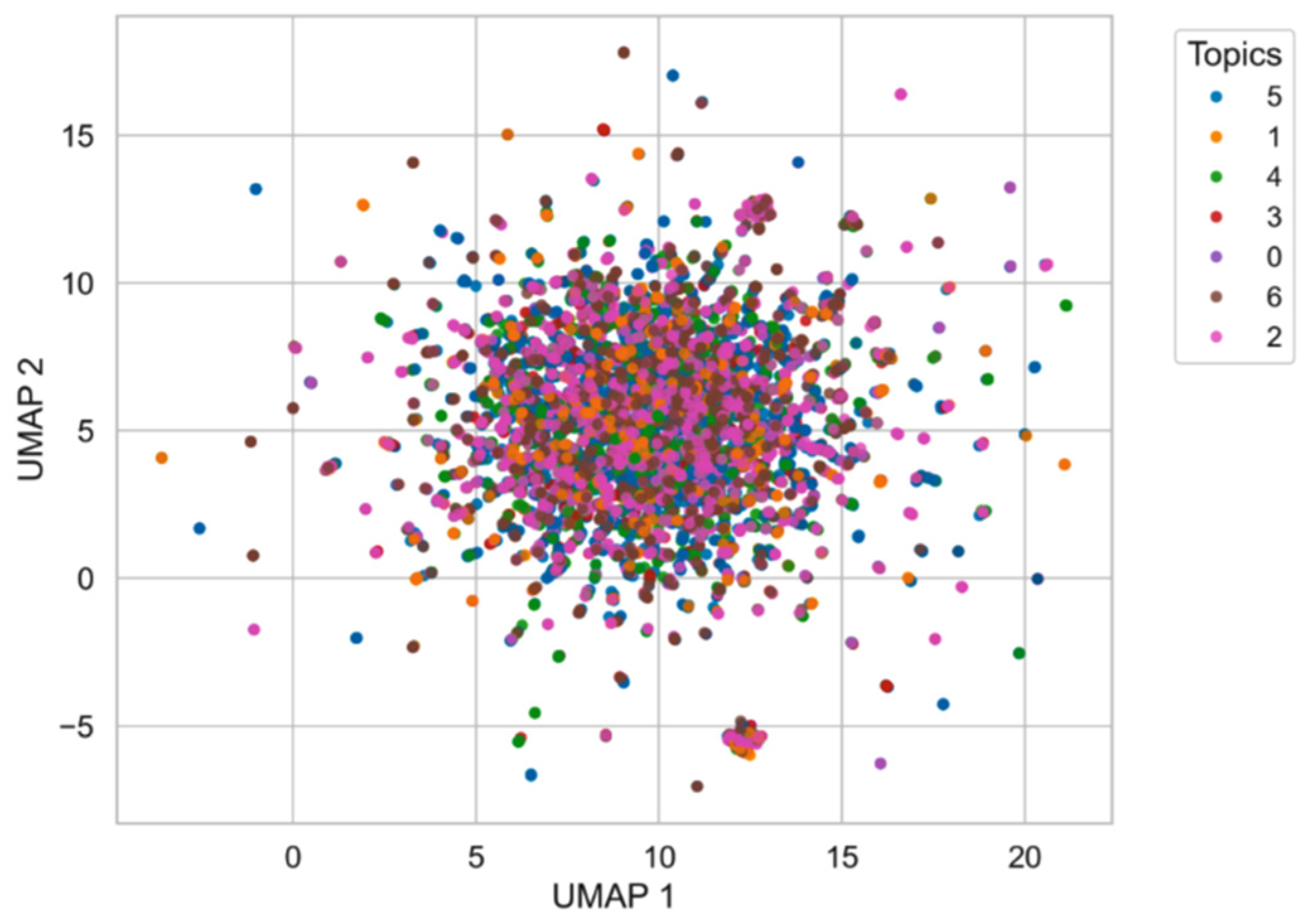

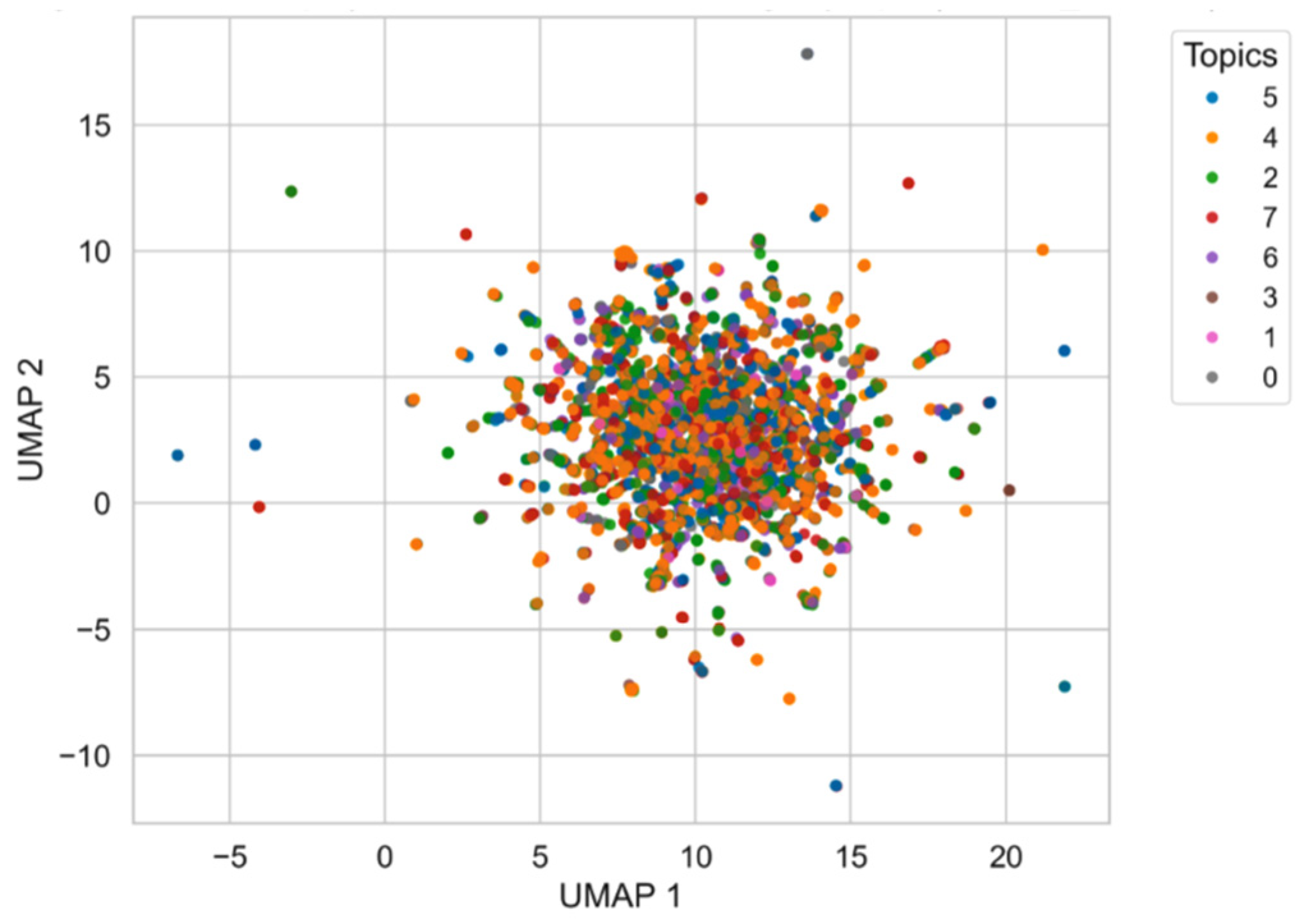

Appendix B.2. Embedding Visualization

Appendix C. BBC Topic 3: Hierarchical Clustering and Spectral Visualization Results

Appendix D. Executive Report Example

Appendix E. Topic by Topic Comparison of Hierarchical and Spectral Clustering Metrics Across Corpora (Amazon, BBC, and Reuters)

References

- Adadi, A.; Berrada, M. Peeking inside the Black-Box: A Survey on Explainable Artificial Intelligence (XAI). IEEE Access Pract. Innov. Open Solut. 2018, 6, 52138–52160.

- Arrieta, A.B.; Díaz-Rodríguez, N.; Del Ser, J.; Bennetot, A.; Tabik, S.; Barbado, A.; García, S.; Gil-López, S.; Molina, D.; Benjamins, R.; et al. Explainable Artificial Intelligence (XAI): Concepts, Taxonomies, Opportunities and Challenges toward Responsible AI. Inf. Fusion 2019, 58, 82–115.

- Meske, C.; Bunde, E.; Schneider, J.; Gersch, M. Explainable Artificial Intelligence: Objectives, Stakeholders, and Future Research Opportunities. Inf. Syst. Manag. 2022, 39, 53–63.

- Danilevsky, M.; Qian, K.; Aharonov, R.; Katsis, Y.; Kawas, B.; Sen, P. A Survey of the State of Explainable AI for Natural Language Processing. arXiv 2020, arXiv:2010.00711.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. “Why Should I Trust You?”: Explaining the Predictions of Any Classifier. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16), San Francisco, CA, USA, 13–17 August 2016; ACM Press: San Francisco, CA, USA, 2016; pp. 1135–1144.

- Lundberg, S.; Lee, S.-I. A Unified Approach to Interpreting Model Predictions. Adv. Neural Inf. Process. Syst. 2017, 30.

- Lu, Q.; Dou, D.; Nguyen, T. ClinicalT5: A Generative Language Model for Clinical Text. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; Goldberg, Y., Kozareva, Z., Zhang, Y., Eds.; Association for Computational Linguistics: Abu Dhabi, United Arab Emirates, 2022; pp. 5436–5443.

- Wu, T.; Ribeiro, M.; Heer, J.; Singh, S. Polyjuice: Generating Counterfactuals for Explaining Predictions in NLP. In Proceedings of the ACL, Austin, TX, USA, 2–11 October 2021.

- Novak, J.D.; Cañas, A.J. The Theory Underlying Concept Maps and How to Construct Them. Fla. Inst. Hum. Mach. Cogn. 2006, 1, 1–31.

- Navarro-Almanza, R.; Juárez-Ramírez, R.; Licea, G.; Castro, J.R. Automated Ontology Extraction from Unstructured Texts Using Deep Learning. In Intuitionistic and Type-2 Fuzzy Logic Enhancements in Neural and Optimization Algorithms: Theory and Applications; Castillo, O., Melin, P., Kacprzyk, J., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 727–755. ISBN 978-3-030-35445-9.

- Hogan, A.; Blomqvist, E.; Cochez, M. Knowledge Graphs. ACM Comput. Surv. 2021, 54, 71.

- Auer, S.; Bizer, C.; Kobilarov, G.; Lehmann, J.; Cyganiak, R.; Ives, Z. DBpedia: A Nucleus for a Web of Open Data. In Semantic Web; Aberer, K., Choi, K.-S., Noy, N., Allemang, D., Lee, K.-I., Nixon, L., Golbeck, J., Mika, P., Maynard, D., Mizoguchi, R., et al., Eds.; Springer: Berlin/Heidelberg, Germany, 2007; pp. 722–735.

- Suchanek, F.M.; Kasneci, G.; Weikum, G. Yago: A Core of Semantic Knowledge. In Proceedings of the 16th International Conference on World Wide Web, Banff, AB, Canada, 8–12 May 2007; ACM: New York, NY, USA, 2007; pp. 697–706.

- Medelyan, O.; Manion, S.; Broekstra, J.; Divoli, A.; Huang, A.-L.; Witten, I.H. Constructing a Focused Taxonomy from a Document Collection. In The Semantic Web: Semantics and Big Data; Cimiano, P., Corcho, O., Presutti, V., Hollink, L., Rudolph, S., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7882, pp. 367–381. ISBN 978-3-642-38287-1.

- Qasim, I.; Jeong, J.-W.; Heu, J.-U.; Lee, D.-H. Concept Map Construction from Text Documents Using Affinity Propagation. J. Inf. Sci. 2013, 39, 719–736.

- Bilal, A.; Ebert, D.; Lin, B. LLMs for Explainable AI: A Comprehensive Survey. arXiv 2025, arXiv:2504.00125.

- Cambria, E.; Malandri, L.; Mercorio, F.; Nobani, N.; Seveso, A. XAI Meets LLMs: A Survey of the Relation between Explainable AI and Large Language Models. arXiv 2024, arXiv:2407.15248.

- Rahimi, H.; Mimno, D.; Hoover, J.; Naacke, H.; Constantin, C.; Amann, B. Contextualized Topic Coherence Metrics. In Proceedings of the Findings of the Association for Computational Linguistics: EACL 2024, St. Julian’s, Malta, 17–22 March 2024; Graham, Y., Purver, M., Eds.; Association for Computational Linguistics: St. Julian’s, Malta, 2024; pp. 1760–1773.

- Bhaduri, S.; Kapoor, S.; Gil, A.; Mittal, A.; Mulkar, R. Qualitative Insights Tool (QualIT): LLM Enhanced Topic Modeling. arXiv 2024, arXiv:2409.15626.

- Nauta, M.; Trienes, J.; Pathak, S.; Nguyen, E.; Peters, M.; Schmitt, Y.; Schlötterer, J.; van Keulen, M.; Seifert, C. From Anecdotal Evidence to Quantitative Evaluation Methods: A Systematic Review on Evaluating Explainable AI. ACM Comput. Surv. 2023, 55, 295.

- Del Águila Escobar, R.; Suárez-Figueroa, M.d.C.; Fernández-López, M. OBOE: An Explainable Text Classification Framework. Int. J. Interact. Multimed. Artif. Intell. 2022; in press. 1–14.

- Ribeiro, M.T.; Singh, S.; Guestrin, C. Anchors: High-Precision Model-Agnostic Explanations. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Mehta, H.; Passi, K. Social Media Hate Speech Detection Using Explainable Artificial Intelligence (XAI). Algorithms 2022, 15, 291.

- Ilias, L.; Askounis, D. Explainable Identification of Dementia from Transcripts Using Transformer Networks. IEEE J. Biomed. Health Inform. 2022, 26, 4153–4164.

- Liu, H.; Yin, Q.; Wang, W.Y. Towards Explainable NLP: A Generative Explanation Framework for Text Classification. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019.

- Arous, I.; Dolamic, L.; Yang, J.; Bhardwaj, A.; Cuccu, G.; Cudré-Mauroux, P. MARTA: Leveraging Human Rationales for Explainable Text Classification. Proc. AAAI Conf. Artif. Intell. 2021, 35, 5868–5876.

- Karen Simonyan, A.Z. Andrea Vedaldi Deep Inside Convolutional Networks: Visualising Image Classification Models and Saliency Maps. In Proceedings of the Workshop at International Conference on Learning Representations, Banff, AB, Canada, 14–16 April 2014. [Google Scholar]

- Augasta, M.G.; Kathirvalavakumar, T. Reverse Engineering the Neural Networks for Rule Extraction in Classification Problems. Neural Process. Lett. 2012, 35, 131–150. [Google Scholar] [CrossRef]

- Bologna, G. A Simple Convolutional Neural Network with Rule Extraction. Appl. Sci. 2019, 9, 2411. [Google Scholar] [CrossRef]

- Zhao, H.; Chen, H.; Yang, F.; Liu, N.; Deng, H.; Cai, H.; Wang, S.; Yin, D.; Du, M. Explainability for Large Language Models: A Survey. ACM Trans. Intell. Syst. Technol. 2024, 15, 20. [Google Scholar] [CrossRef]

- Lecue, F. Semantic Web Journal; IOS Press: Amsterdam, The Netherlands, 2018; p. 9.

- Rožanec, J.M.; Fortuna, B.; Mladenić, D. Knowledge Graph-Based Rich and Confidentiality Preserving Explainable Artificial Intelligence (XAI). Inf. Fusion 2022, 81, 91–102.

- Flisar, J.; Podgorelec, V. Document Enrichment Using Dbpedia Ontology for Short Text Classification. In Proceedings of the 8th International Conference on Web Intelligence, Mining and Semantics, Novi Sad, Serbia, 25–27 June 2018.

- Frayling, E.; Macdonald, C.; McDonald, G.; Ounis, I. Using Entities in Knowledge Graph Hierarchies to Classify Sensitive Information. In Experimental IR Meets Multilinguality, Multimodality, and Interaction; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13390, pp. 125–132.

- Okebukola, P.A. Can Good Concept Mappers Be Good Problem Solvers in Science? Educ. Psychol. 1992, 12, 113–129.

- Aguiar, C.; Zouaq, A.; Cury, D. Automatic Construction of Concept Maps from Texts. In Proceedings of the 7th International Conference on Concept Mapping, Tallinn, Estonia, 5–9 September 2016.

- Atapattu, T.; Falkner, K.; Falkner, N. A Comprehensive Text Analysis of Lecture Slides to Generate Concept Maps. Comput. Educ. 2017, 115, 96–113.

- Falke, T.; Gurevych, I. Fast Concept Mention Grouping for Concept Map-Based Multi-Document Summarization. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 695–700.

- Shao, Z.; Li, Y.; Wang, X.; Zhao, X.; Guo, Y. Research on a New Automatic Generation Algorithm of Concept Map Based on Text Analysis and Association Rules Mining. J. Ambient Intell. Humaniz. Comput. 2020, 11, 539–551.

- Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-Relational Data. In Advances in Neural Information Processing Systems; Burges, C.J., Bottou, L., Welling, M., Ghahramani, Z., Weinberger, K.Q., Eds.; Curran Associates, Inc.: New York, NY, USA, 2013; Volume 26.

- Wang, C.; Nulty, P.; Lillis, D. A Comparative Study on Word Embeddings in Deep Learning for Text Classification. In Proceedings of the 4th International Conference on Natural Language Processing and Information Retrieval, Toronto, ON, Canada, 23–24 September 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 37–46, ISBN 978-1-4503-7760-7.

- Chen, Q.; Wang, W.; Huang, K.; Coenen, F. Zero-Shot Text Classification via Knowledge Graph Embedding for Social Media Data. IEEE Internet Things J. 2022, 9, 9205–9213.

- Ennajari, H.; Bouguila, N.; Bentahar, J. Knowledge-Enhanced Spherical Representation Learning for Text Classification. In Proceedings of the 2022 SIAM International Conference on Data Mining (SDM), Alexandria, VA, USA, 28–30 April 2022; pp. 639–647.

- Spinner, T.; Schlegel, U.; Schäfer, H.; El-Assady, M. explAIner: A Visual Analytics Framework for Interactive and Explainable Machine Learning. IEEE Trans. Vis. Comput. Graph. 2019, 26, 1064–1074.

- Mahoney, C.J.; Zhang, J.; Huber-Fliflet, N.; Gronvall, P.; Zhao, H. A Framework for Explainable Text Classification in Legal Document Review. In 2019 IEEE International Conference on Big Data (Big Data); IEEE Xplore: Los Angeles, CA, USA, 2019.

- Donadello, I.; Dragoni, M. SeXAI: A Semantic Explainable Artificial Intelligence Framework. In AIxIA 2020—Advances in Artificial Intelligence; Baldoni, M., Bandini, S., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 51–66.

- Billi, M. Hybrid Symbolic–LLM Explanations in Legal Text Classification. arXiv 2023.

- Bhattacharjee, A.; Moraffah, R.; Garland, J.; Liu, H. Zero-shot LLM-guided Counterfactual Generation: A Case Study on NLP Model Evaluation. In Proceedings of the IEEE International Conference on Big Data, Washington, DC, USA, 15–18 December 2024; pp. 1243–1248.

- Hong, D.; Wang, T.; Baek, S. ProtoryNet: Prototype Trajectories for Interpretable Text Classification. J. Mach. Learn. Res. 2023, 24.

- Wei, B.; Zhu, Z. ProtoLens: Advancing Prototype Learning for Fine-Grained Interpretability in Text Classification. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 4503–4523.

- Zhang, D.; Sen, C.; Thadajarassiri, J.; Hartvigsen, T.; Kong, X.; Rundensteiner, E. Human-like Explanation for Text Classification with Limited Attention Supervision. In 2021 IEEE International Conference on Big Data (Big Data); IEEE Xplore: Los Angeles, CA, USA, 2021; pp. 957–967.

- Chrysostomou, G.; Aletras, N. Improving Attention-Based Explanations with Task Scaling (TaSc). In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Punta Cana, Dominican Republic, 7–11 November 2021.

- Zhao, Z.; Vydiswaran, V.G.V. LIREx: Label-Specific Rationale Generation for Multi-Label Classification. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL), Bangkok, Thailand, 1–6 August 2021.

- Ribeiro, M. SLIME: Statistical and Linguistic Insights for Model Explanation. arXiv 2024.

- Rahulamathavan, Y. PLEX: Perturbation-Free Local Explanations for Transformer-Based Classifiers. arXiv 2023.

- McAuley, J.; Targett, C.; Shi, Q.; van den Hengel, A. Image-Based Recommendations on Styles and Substitutes. arXiv 2015, arXiv:1506.04757.

- Greene, D.; Cunningham, P. Practical Solutions to the Problem of Diagonal Dominance in Kernel Document Clustering. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 377–384.

- Lewis, D.D.; Yang, Y.; Rose, T.G.; Li, F. RCV1: A New Benchmark Collection for Text Categorization Research. J. Mach. Learn. Res. 2004, 5, 361–397.

- Fellbaum, C. (Ed.) WordNet: An Electronic Lexical Database; Language, Speech, and Communication; MIT Press: Cambridge, MA, USA, 1998; ISBN 978-0-262-06197-1.

- Mendes, P.N.; Jakob, M.; García-Silva, A.; Bizer, C. DBpedia Spotlight: Shedding Light on the Web of Documents. In Proceedings of the 7th International Conference on Semantic Systems—I-Semantics ’11; ACM Press: Graz, Austria, 2011; pp. 1–8.

- Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

- Nguyen, D.Q.; Nguyen, T.D.; Nguyen, D.Q.; Phung, D. A Novel Embedding Model for Knowledge Base Completion Based on Convolutional Neural Network. In Proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT), New Orleans, LA, USA, 1–6 June 2018; pp. 327–333.

- Trouillon, T.; Welbl, J.; Riedel, S.; Gaussier, E.; Bouchard, G. Complex Embeddings for Simple Link Prediction. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Balcan, M.F., Weinberger, K.Q., Eds.; PMLR: New York, NY, USA, 2016; Volume 48, pp. 2071–2080.

- Yang, B.; Yih, S.W.; He, X.; Gao, J.; Deng, L. Embedding Entities and Relations for Learning and Inference in Knowledge Bases. In Proceedings of the International Conference on Learning Representations 2015, San Diego, CA, USA, 7–9 May 2015.

- Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Rousseeuw, P.J. Silhouettes: A Graphical Aid to the Interpretation and Validation of Cluster Analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.-Y. LightGBM: A Highly Efficient Gradient Boosting Decision Tree. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 3146–3154.

- Prokhorenkova, L.; Gusev, G.; Vorobev, A.; Dorogush, A.V.; Gulin, A. CatBoost: Unbiased Boosting with Categorical Features. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS 2018), Montréal, QC, Canada, 3–8 December 2018; pp. 6638–6648.

- Newman, D.; Lau, J.H.; Grieser, K.; Baldwin, T. Automatic Evaluation of Topic Coherence. In Proceedings of the Human Language Technologies: The 2010 Annual Conference of the North American Chapter of the Association for Computational Linguistics, Los Angeles, CA, USA, 2–4 June 2010; Association for Computational Linguistics: Stroudsburg, PA, USA, 2010; pp. 100–108.

- Aletras, N.; Stevenson, M. Evaluating Topic Coherence Using Distributional Semantics. In Proceedings of the 10th International Conference on Computational Semantics (IWCS 2013), Potsdam, Germany, 19–22 March 2013; pp. 13–22.

- Röder, M.; Both, A.; Hinneburg, A. Exploring the Space of Topic Coherence Measures. In Proceedings of the Eighth ACM International Conference on Web Search and Data Mining, Shanghai, China, 2–6 February 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 399–408.

- Gourgoulias, K.; Ghalyan, N.; Labonne, M.; Satsangi, Y.; Moran, S.; Sabelja, J. Estimating Class Separability of Text Embeddings with Persistent Homology. arXiv 2023, arXiv:2305.15016.

- Schilling, A.; Maier, A.; Gerum, R.; Metzner, C.; Krauß, P. Quantifying the Separability of Data Classes in Neural Networks. Neural Netw. 2021, 139, 278–293.

| Framework | Task Scope | LLM Use | Data Representation | Customizable Components | Model Independent |
|---|---|---|---|---|---|
| Legal Document Review | Explanations as examples | None | Text | ✗ | ⭘ |
| explAIner | Interactive classification explanation | None | Text | ⭘ | ⭘ |
| MARTA | Prototype-based classification | None | Text | ✗ | ✗ |
| GEF | Feature-based global explanation | None | Text | ✗ | ✗ |
| SeXAI | Semantic enrichment of explanations | None | Text | ✗ | ✗ |
| TaSc | Attention-based explanation | None | Text | ⭘ | ✗ |
| HELAS | Attention-based hierarchical explanation | None | Text | ✗ | ✗ |
| LIREx | Rationale extraction for classification | None | Text tokens | ✓ | ⭘ |
| ClinicalT5 | Supervised explanation generation | Generation | Text | ✗ | ✗ |
| PLEX | Counterfactual explanation | Generation | Text features | ✗ | ✓ |
| SLIME | Local counterfactual explanations | Generation | Text features | ⭘ | ⭘ |
| ProtoryNet | Prototype-based rationale explanation | None | Text | ✗ | ✗ |
| ProtoLens | Visual prototype inspection | None | Text | ⭘ | ✗ |
| Polyjuice | Counterfactual generation | Generation | Text | ✓ | ✓ |
| FIZLE | Counterfactual generation | Generation | Text | ✓ | ✓ |
| Hybrid Symbolic-LLM | Symbolic + generative explanation | Generation | Text + rule templates | ✗ | ⭘ |
| Ours (OBOE extension) | Structural domain discovery and explanation | Generation, validation, evaluation | Knowledge graph embeddings | ✓ | ✓ |

| Corpus | Metric | Result |
|---|---|---|
| Amazon | AUC | 0.99 |
| Amazon | Mean per-class error | 0.01 |
| BBC | LogLoss | 0.23 |
| BBC | Mean per-class error | 0.06 |
| Reuters | LogLoss | 0.26 |
| Reuters | Mean per-class error | 0.09 |
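
The table reports log loss and mean per-class error without restating their definitions here. As a minimal sketch, assuming the standard definitions (mean per-class error as the average of per-class misclassification rates over the true classes, and multiclass log loss as the mean negative log-probability assigned to the true class), the two metrics can be computed as follows; the function names are hypothetical and not taken from the authors' pipeline.

```python
import math
from collections import defaultdict


def mean_per_class_error(y_true, y_pred):
    """Average over true classes of (misclassified / total) for that class."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        if t != p:
            errors[t] += 1
    # Every class counts equally, regardless of how many examples it has.
    return sum(errors[c] / totals[c] for c in totals) / len(totals)


def multiclass_log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-probability of the true label.

    `probs` is a list of dicts mapping each label to its predicted probability;
    probabilities are clipped to [eps, 1 - eps] to avoid log(0).
    """
    total = 0.0
    for t, dist in zip(y_true, probs):
        p = min(max(dist[t], eps), 1 - eps)
        total -= math.log(p)
    return total / len(y_true)


# Toy example (illustrative labels, not data from the paper).
y_true = ["sport", "sport", "tech", "tech"]
y_pred = ["sport", "tech", "tech", "tech"]
mpce = mean_per_class_error(y_true, y_pred)  # sport: 1/2 wrong, tech: 0 wrong
ll = multiclass_log_loss(["a", "b"], [{"a": 0.9, "b": 0.1}, {"a": 0.2, "b": 0.8}])
```

Because each class contributes one term, mean per-class error penalizes a classifier that ignores a minority class more than plain error rate does, which matters on corpora with uneven topic sizes.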

| Dataset | KGE Model | Dim. | CV Accuracy | CV Macro AUC | CV LogLoss (±SD) | CV MPCE (±SD) |
|---|---|---|---|---|---|---|
| Reuters | TransE | 32 | 0.901 ± 0.004 | 0.889 | 0.966 ± 0.003 | 0.236 ± 0.013 |
| BBC | TransE | 32 | 0.897 ± 0.006 | 0.888 | 0.985 ± 0.002 | 0.342 ± 0.018 |
| Amazon | TransE | 32 | 0.880 ± 0.008 | 0.870 | 0.985 ± 0.002 | 0.395 ± 0.020 |
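
All three corpora above use TransE embeddings of dimension 32. TransE (Bordes et al., in the reference list) models a relation as a translation, so a triple (h, r, t) is plausible when h + r lies close to t. The sketch below shows only that scoring function, under the assumption of an L1 or L2 distance; it does not reproduce the training loop or how the authors turn entity vectors into classifier features, and the helper names are hypothetical.

```python
import math
import random

DIM = 32  # embedding dimension reported for TransE in the table above


def random_vector(dim, rng):
    """Hypothetical stand-in for a learned embedding: uniform in [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def transe_score(h, r, t, norm=1):
    """TransE plausibility -||h + r - t||_p; higher (closer to 0) is better."""
    diffs = [abs(hi + ri - ti) for hi, ri, ti in zip(h, r, t)]
    if norm == 1:
        return -sum(diffs)
    return -math.sqrt(sum(d * d for d in diffs))


rng = random.Random(0)
h = random_vector(DIM, rng)   # head concept, e.g. a concept-map node
r = random_vector(DIM, rng)   # relation label of the concept-map edge
t_true = [hi + ri for hi, ri in zip(h, r)]  # tail that exactly satisfies h + r = t
t_rand = random_vector(DIM, rng)            # unrelated tail

best = transe_score(h, r, t_true)
worse = transe_score(h, r, t_rand)
```

During training, TransE pushes observed triples toward a score of 0 and corrupted triples away from it with a margin loss; a tail that exactly equals h + r, as in `t_true`, reaches the maximum score.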

| Corpus | Coherence |
|---|---|
| Amazon | 0.42 |
| BBC | 0.59 |
| Reuters | 0.55 |
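
This section does not state which coherence measure produced the per-corpus values above. For illustration only, one common choice from the topic-coherence literature in the reference list (Newman et al.; Röder et al.) is the average normalized pointwise mutual information (NPMI) over pairs of a cluster's top terms, estimated from document co-occurrence. The sketch below assumes that measure; it may differ from the one the authors used.

```python
import math
from itertools import combinations


def npmi_coherence(top_terms, documents):
    """Average NPMI over all pairs of top terms, using document co-occurrence.

    NPMI(a, b) = log(p(a, b) / (p(a) p(b))) / -log p(a, b), in [-1, 1].
    """
    docs = [set(d) for d in documents]
    n = len(docs)

    def prob(*terms):
        # Fraction of documents containing every given term.
        return sum(all(t in d for t in terms) for d in docs) / n

    scores = []
    for a, b in combinations(top_terms, 2):
        p_ab = prob(a, b)
        if p_ab == 0:
            scores.append(-1.0)  # never co-occur: minimum NPMI by convention
            continue
        denom = -math.log(p_ab)
        if denom == 0:
            scores.append(1.0)  # co-occur in every document: maximum NPMI
            continue
        pmi = math.log(p_ab / (prob(a) * prob(b)))
        scores.append(pmi / denom)
    return sum(scores) / len(scores)


# Toy corpus (illustrative, not from the paper).
docs = [["dog", "leash"], ["dog", "leash"], ["cat", "toy"], ["cat", "dog"]]
c_related = npmi_coherence(["dog", "leash"], docs)
c_unrelated = npmi_coherence(["leash", "toy"], docs)
```

Here "dog" and "leash" co-occur more often than independence predicts, giving a positive score (about 0.415), whereas "leash" and "toy" never co-occur and receive the minimum of -1.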

| Cluster | Key Concept | Coherence | Relevance | Coverage | Summary |
|---|---|---|---|---|---|
| 0 | Resource Quality & Usage | 4 | 4 | 3 | Focused on the evaluation and utilization of resources or products by quality and efficiency. |
| 1 | Pet accessories | 4 | 3 | 4 | Describes domesticated animals and related items; clear theme but limited technological specificity. |

| Dataset | Method | Coherence (±SD) | Relevance (±SD) | Coverage (±SD) | Avg. Clusters | Total Clusters | Overall Score | Relative Δ vs. Hierarchical |
|---|---|---|---|---|---|---|---|---|
| Amazon | Hierarchical | 4.000 ± 0.000 | 3.000 ± 0.000 | 4.000 ± 0.000 | 2 | 4 | 3.67 | — |
| Amazon | Spectral | 4.000 ± 0.000 | 3.500 ± 0.000 | 3.500 ± 0.000 | 3 | 6 | 3.67 | Relevance +16.7%; Coverage −12.5% |
| BBC | Hierarchical | 4.000 ± 0.000 | 4.039 ± 0.379 | 3.505 ± 0.159 | 6.3 | 44 | 3.85 | — |
| BBC | Spectral | 4.000 ± 0.000 | 3.843 ± 0.547 | 3.590 ± 0.313 | 3.1 | 22 | 3.81 | Relevance −4.9%; Coverage +2.4% |
| Reuters | Hierarchical | 4.000 ± 0.000 | 4.098 ± 0.438 | 3.395 ± 0.228 | 5.5 | 44 | 3.83 | — |
| Reuters | Spectral | 4.000 ± 0.000 | 3.719 ± 0.364 | 3.453 ± 0.422 | 2.9 | 23 | 3.72 | Relevance −9.2%; Coverage +1.7% |
| Global Mean | — | 4.000 ± 0.0 | 3.70 ± 0.3 | 3.57 ± 0.2 | — | — | 3.76 ± 0.1 | Hierarchical slightly superior |
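
The Overall Score column is consistent with an unweighted mean of the three LLM-judged criteria, and the Relative Δ column with a percentage change of the spectral score against the hierarchical row of the same dataset. This reading is inferred from the numbers rather than stated in the text; the sketch below reproduces the BBC row under that assumption, with hypothetical function names.

```python
def overall_score(coherence, relevance, coverage):
    """Unweighted mean of the three LLM-judged criteria (1-5 scale)."""
    return (coherence + relevance + coverage) / 3


def relative_delta(value, baseline):
    """Percentage change of a spectral score relative to the hierarchical baseline."""
    return 100.0 * (value - baseline) / baseline


# BBC rows from the table: (coherence, relevance, coverage)
hier = (4.000, 4.039, 3.505)
spec = (4.000, 3.843, 3.590)

print(round(overall_score(*hier), 2), round(overall_score(*spec), 2))  # 3.85 3.81
print(round(relative_delta(spec[1], hier[1]), 1))  # relevance: -4.9
print(round(relative_delta(spec[2], hier[2]), 1))  # coverage: 2.4
```

Because coherence is saturated at 4.0 for every configuration, differences in the overall score come entirely from the relevance/coverage trade-off captured by the last column.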

| Dataset | Method | Coherence (±SD) | Relevance (±SD) | Coverage (±SD) | LLM Mean Score | Observations |
|---|---|---|---|---|---|---|
| Amazon | Hierarchical | 4.00 ± 0.00 | 3.00 ± 0.00 | 4.00 ± 0.00 | 3.67 | Broader topical scope; coherent but less domain-focused. |
| Amazon | Spectral | 4.00 ± 0.00 | 3.50 ± 0.00 | 3.50 ± 0.00 | 3.67 | Compact, highly coherent explanations. |
| BBC | Hierarchical | 4.00 ± 0.00 | 4.04 ± 0.38 | 3.51 ± 0.16 | 3.85 | Balanced and semantically rich explanations. |
| BBC | Spectral | 4.00 ± 0.00 | 3.84 ± 0.55 | 3.59 ± 0.31 | 3.81 | Slightly better coverage but lower relevance. |
| Reuters | Hierarchical | 4.00 ± 0.00 | 4.10 ± 0.44 | 3.40 ± 0.23 | 3.83 | Domain fidelity and semantic stability. |
| Reuters | Spectral | 4.00 ± 0.00 | 3.72 ± 0.36 | 3.45 ± 0.42 | 3.72 | Good local coherence; narrower topical scope. |
| Global Mean | — | 4.00 ± 0.0 | 3.70 ± 0.3 | 3.57 ± 0.2 | 3.76 ± 0.1 | High semantic consistency across methods. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

del Águila Escobar, R.A.; del Carmen Suárez-Figueroa, M.; Fernández López, M.; Villazón Terrazas, B. Bridging Text and Knowledge: Explainable AI for Knowledge Graph Classification and Concept Map-Based Semantic Domain Discovery with OBOE Framework. Appl. Sci. 2025, 15, 12231. https://doi.org/10.3390/app152212231