In this section, we present the results of our experiments with various LLMs on the DACSA dataset. First, we describe the evaluation metrics used to assess summarization quality. Next, we provide a detailed analysis of the quantitative results across models and approaches. Finally, we compare the impact of our proposed bottleneck strategy to standard zero-shot and one-shot baselines.

4.1. Quantitative Results

Table 3 summarizes the results of the fine-tuned baselines (mBART and mT5) compared to a simple highlight-join heuristic. As expected, mT5 outperforms the other baselines. It achieves ROUGE-2 and ROUGE-L scores of 13.40 and 24.73, respectively, demonstrating its strong ability to generate abstractive summaries in Spanish. In contrast, the highlight-join heuristic underperforms, confirming that simply concatenating salient sentences cannot match the fluency and abstraction of pretrained seq2seq models.

Table 4 shows the results of the zero-shot setting. Instruction-tuned LLMs such as LLaMA-3.1-70B and Qwen2.5-7B achieve competitive results, with ROUGE-1 scores above 30. These results demonstrate the ability of instruction-tuned LLMs to generalize to summarization tasks without fine-tuning. Notably, smaller models such as Gemma-2-2B and Mistral-7B achieve comparable performance, highlighting the efficiency of recent small and medium-sized LLMs.

Table 5 shows the results of the one-shot setting, in which each prompt includes a single in-context example. In this setting, performance improves for all models except Gemma-2-2B and Phi-3.5. For example, Gemma-2-9B and LLaMA-3.1-70B achieve ROUGE-1 scores of 33.68 and 33.41, respectively, with BERTScore F1 values approaching 69. These results demonstrate the effectiveness of in-context learning in aligning summaries more closely with human-written references, even with minimal supervision.
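The zero-shot and one-shot settings differ only in whether a demonstration pair precedes the target article. The following is a minimal sketch of that difference, assuming a chat-style message format; the instruction wording and the `build_prompt` helper are hypothetical illustrations, not the exact prompts used in our experiments.

```python
def build_prompt(article, example=None):
    """Return chat messages for zero-shot (example=None) or one-shot prompting.

    `example` is a hypothetical (article, summary) pair, e.g. drawn from the
    training split; the instruction text below is illustrative only.
    """
    instruction = "Summarize the following Spanish news article in Spanish."
    messages = [{"role": "system", "content": instruction}]
    if example is not None:
        ex_article, ex_summary = example
        # One-shot: a single article/summary demonstration precedes the target.
        messages.append({"role": "user", "content": ex_article})
        messages.append({"role": "assistant", "content": ex_summary})
    # The target article always comes last, so the model's next turn is its summary.
    messages.append({"role": "user", "content": article})
    return messages
```

Because the demonstration is presented as a completed assistant turn, the model sees the expected length and style of a reference summary before producing its own, which is one plausible reason a single example helps alignment with the references.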

Table 6 reports the results of our proposed bottleneck prompting method. Gemma-2-9B achieves the highest ROUGE-1 (35.29) and ROUGE-L (24.02) scores among the evaluated systems, showing that generating summaries from entity-rich highlights yields measurable improvements. LLaMA-3.1-70B also benefits from bottleneck prompting, raising its ROUGE-2 score to 14.28, the highest across all experiments. These results suggest that the bottleneck strategy improves factual consistency without sacrificing fluency.
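The bottleneck idea, first condensing the article into entity-rich highlights and then generating the summary from them, can be sketched as a two-stage pipeline. This is an illustration under stated assumptions rather than our exact implementation: the `generate` callable is a hypothetical stand-in for any LLM call, and the prompt texts and helper names are invented for the example.

```python
from typing import Callable

# Hypothetical stand-in for any LLM call: takes a prompt, returns generated text.
Generate = Callable[[str], str]


def extract_highlights(article: str, generate: Generate) -> str:
    """Stage 1 (the bottleneck): condense the article into entity-rich highlights."""
    prompt = (
        "List the key facts of the following news article as short bullet points, "
        "keeping every named entity (people, organizations, places, dates).\n\n"
        f"Article:\n{article}"
    )
    return generate(prompt)


def bottleneck_summarize(article: str, generate: Generate) -> str:
    """Stage 2: write the final summary conditioned only on the stage-1 highlights."""
    highlights = extract_highlights(article, generate)
    prompt = (
        "Write a concise abstractive summary in Spanish based on these highlights.\n\n"
        f"Highlights:\n{highlights}"
    )
    return generate(prompt)
```

In this sketch the second stage sees only the highlights, which is what forces information through the bottleneck; passing the article to stage 2 as well is a plausible variant that trades some of that control for additional context.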

Finally,

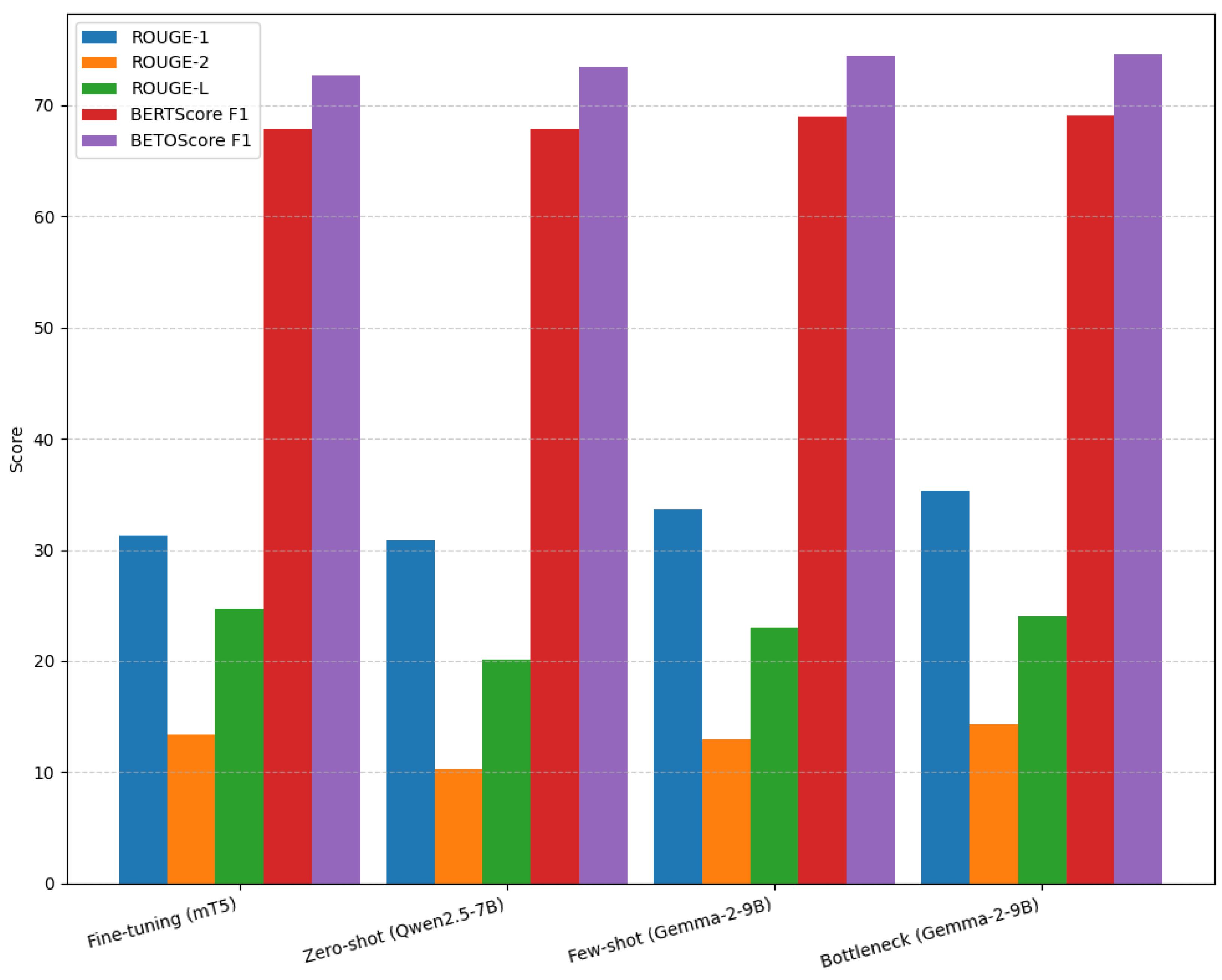

Table 7 compares the best-performing model from each approach. The results demonstrate clear progression. Fine-tuned mT5 establishes a robust supervised baseline, zero-shot Qwen2.5-7B exhibits strong out-of-the-box performance, and bottleneck-prompted Gemma-2-9B achieves the best overall ROUGE-1, ROUGE-2, highest BERTScore and BEToScore scores. These results suggest that bottleneck prompting can complement standard prompting methods and provide an effective, low-cost alternative to full fine-tuning.

Table 8 presents the results of the paired t-test comparing the mT5 baseline and the proposed bottleneck prompting approach across lexical and semantic evaluation metrics (ROUGE-1, ROUGE-2, ROUGE-L, BERTScore F1, and BETOScore F1). The analysis was conducted to determine whether the observed performance differences between the two models were statistically significant. The results show that bottleneck prompting achieves statistically significant improvements in ROUGE-1, BERTScore, and BETOScore (p < 0.05), whereas the differences in ROUGE-2 and ROUGE-L are not statistically significant (p > 0.1).
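A paired t-test operates on per-document score differences between the two systems. The sketch below makes that computation explicit in pure Python, assuming each metric is available as one score per test article for both mT5 and the bottleneck approach; the helper names are illustrative, and the two-sided p-value is obtained by numerically integrating the Student's t density.

```python
import math
import statistics


def _t_pdf(x: float, df: int) -> float:
    # Density of Student's t distribution with `df` degrees of freedom.
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def _t_mass_0_to(x: float, df: int, steps: int) -> float:
    # Composite Simpson's rule for the t density on [0, x]; `steps` must be even.
    h = x / steps
    total = _t_pdf(0.0, df) + _t_pdf(x, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * _t_pdf(i * h, df)
    return total * h / 3


def paired_t_test(a, b, steps=10_000):
    """Two-sided paired t-test on per-document scores of two systems."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length score lists with at least 2 items")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    n = len(diffs)
    t = statistics.fmean(diffs) / (sd / math.sqrt(n))
    df = n - 1
    # Two-sided p-value: probability mass outside [-|t|, |t|].
    p = 1.0 - 2.0 * _t_mass_0_to(abs(t), df, steps)
    return t, df, min(max(p, 0.0), 1.0)
```

Usage would be, for example, `paired_t_test(bottleneck_rouge1, mt5_rouge1)` with one list entry per article. In practice, `scipy.stats.ttest_rel` computes the same statistic and two-sided p-value; the version above only spells out the arithmetic.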

Figure 4 summarizes the results in bar plots, allowing a more intuitive comparison of the methods. The plots show that bottleneck prompting improves ROUGE-1 and ROUGE-L scores, with the clearest gains for LLaMA-3.1-70B and Gemma-2-9B.

Overall, the results highlight three key findings. First, one-shot prompting improves upon zero-shot performance for nearly all models. Second, bottleneck prompting improves content selection and entity preservation, yielding additional gains. Third, fine-tuned baselines remain competitive, but modern open LLMs achieve comparable or better performance without requiring supervised training.

4.2. Analysis of Results

In this section, we analyze the performance of different models and approaches using the DACSA dataset. First, we review the supervised, fine-tuned baselines. Next, we discuss the performance of zero-shot and one-shot prompting. Lastly, we evaluate the impact of our proposed bottleneck prompting strategy. The section concludes with a comparative analysis of the best model from each approach.

As expected, among the supervised baselines, mT5 outperforms mBART by a small margin, achieving ROUGE-2 and ROUGE-L scores of 13.40 and 24.73, respectively. In contrast, the heuristic highlight-join approach performs considerably worse, showing that simply concatenating salient sentences cannot match the abstraction and fluency of neural models.

In the zero-shot setting, instruction-tuned LLMs demonstrate robust summarization abilities. For instance, LLaMA-3.1-70B and Qwen2.5-7B achieve ROUGE-1 scores above 30 and BERTScore F1 values around 68. This shows that modern open-weight models can generalize to summarization tasks without task-specific training. Although smaller models such as Gemma-2-2B and Mistral-7B perform slightly worse, they still deliver competitive results, showcasing the effectiveness of recent small and medium-sized models.

In the one-shot setting, adding a single in-context example improves performance for most model families. On average, ROUGE-1 increases by nearly one point and ROUGE-L by more than one point compared to zero-shot. This confirms that minimal supervision helps models better align with the target summarization style. Gemma-2-9B and LLaMA-3.1-70B are the strongest one-shot models, achieving ROUGE-1 scores above 33 and BERTScore F1 values close to 69. These results demonstrate the effectiveness of in-context learning for Spanish summarization.
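The average gains quoted above can be read as per-model differences between one-shot and zero-shot scores, averaged over the models evaluated in both settings. A minimal sketch of that arithmetic, assuming the average is taken over models and that results are stored as nested dictionaries (a hypothetical layout; the actual values would come from Tables 4 and 5):

```python
from statistics import fmean


def mean_gain(baseline: dict, treatment: dict, metric: str) -> float:
    """Average per-model change in `metric` from `baseline` to `treatment`.

    Both arguments map model name -> {metric name -> score}. Only models present
    in both settings are compared, so the average is over matched pairs.
    """
    shared = sorted(baseline.keys() & treatment.keys())
    if not shared:
        raise ValueError("no models in common between the two settings")
    return fmean(treatment[m][metric] - baseline[m][metric] for m in shared)
```

Averaging matched per-model differences, rather than subtracting two independently averaged columns, keeps the comparison valid even if one setting includes a model the other lacks.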

Compared to one-shot prompting, the bottleneck prompting approach further improves ROUGE-1 and ROUGE-L scores, particularly for larger models such as LLaMA-3.1-70B and Gemma-2-9B. However, BERTScore F1 decreases slightly, suggesting that, although bottleneck prompting enhances factual grounding and lexical alignment, it reduces paraphrastic flexibility.
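The contrast between ROUGE and BERTScore follows from how BERTScore matches tokens: through cosine similarity of contextual embeddings rather than exact string overlap, so paraphrases can still score well. Below is a simplified sketch of its greedy-matching core, assuming token embeddings have already been computed by some encoder; it omits the optional IDF weighting and baseline rescaling of the reference implementation, and the helper names are illustrative.

```python
import math


def _cosine(u: list[float], v: list[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def greedy_bertscore(cand: list[list[float]], ref: list[list[float]]) -> tuple[float, float, float]:
    """Precision, recall, and F1 from greedy max-cosine matching of token embeddings."""
    if not cand or not ref:
        raise ValueError("both token sequences must be non-empty")
    sims = [[_cosine(c, r) for r in ref] for c in cand]
    # Precision: each candidate token is matched to its most similar reference token.
    precision = sum(max(row) for row in sims) / len(cand)
    # Recall: each reference token is matched to its most similar candidate token.
    recall = sum(max(sims[i][j] for i in range(len(cand))) for j in range(len(ref))) / len(ref)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Because every token is matched softly to its nearest counterpart, a summary that rephrases the reference can score highly here while sharing few exact n-grams, which is consistent with the observed trade-off between ROUGE gains and a slight BERTScore decrease.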

The improvement in lexical overlap indicates that generating summaries from entity-rich highlights strengthens factual alignment and sequence-level coherence. However, this approach may limit the paraphrastic flexibility captured by embedding-based metrics. At the model level, the gains are most evident for larger LLMs. For instance, LLaMA-3.1-70B raises its ROUGE-2 and ROUGE-L scores to 14.28 and 24.00, respectively, surpassing the fine-tuned mT5 model in bigram and subsequence overlap. Gemma-2-9B achieves the highest ROUGE-1 score across all settings (35.29). Qwen2.5-7B also benefits, improving all of its ROUGE scores with only a negligible drop in BERTScore F1. Conversely, smaller models such as Gemma-2-2B perform worse under bottleneck prompting, suggesting that their limited capacity hinders their ability to integrate structured guidance effectively.
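The bigram and subsequence overlap mentioned above correspond to ROUGE-2 and ROUGE-L. The sketch below shows how both F1 scores are computed, assuming whitespace-tokenized input with no stemming or Spanish-specific preprocessing, so absolute values will differ from those of standard ROUGE packages; it also computes sentence-level ROUGE-L rather than the summary-level ROUGE-Lsum variant that some packages report.

```python
from collections import Counter


def _f1(overlap: float, cand_total: int, ref_total: int) -> float:
    if overlap == 0 or cand_total == 0 or ref_total == 0:
        return 0.0
    p, r = overlap / cand_total, overlap / ref_total
    return 2 * p * r / (p + r)


def rouge_n(cand: list[str], ref: list[str], n: int) -> float:
    """ROUGE-N F1: clipped n-gram overlap (n=1 unigrams, n=2 bigrams)."""
    c = Counter(tuple(cand[i:i + n]) for i in range(len(cand) - n + 1))
    r = Counter(tuple(ref[i:i + n]) for i in range(len(ref) - n + 1))
    overlap = sum((c & r).values())  # min count of each shared n-gram
    return _f1(overlap, sum(c.values()), sum(r.values()))


def rouge_l(cand: list[str], ref: list[str]) -> float:
    """ROUGE-L F1 based on the longest common subsequence (LCS)."""
    prev = [0] * (len(ref) + 1)  # LCS lengths for the previous candidate prefix
    for tok in cand:
        cur = [0]
        for j, ref_tok in enumerate(ref, start=1):
            cur.append(prev[j - 1] + 1 if tok == ref_tok else max(prev[j], cur[j - 1]))
        prev = cur
    return _f1(prev[-1], len(cand), len(ref))
```

For example, with `"el gato come pescado"` against the reference `"el gato negro come pescado"`, the inserted word breaks one bigram, so ROUGE-2 drops to 4/7 while ROUGE-L, which tolerates gaps in the subsequence, stays at 8/9.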

When comparing the best-performing model of each approach, a clear progression emerges. While the fine-tuned mT5 baseline remains a strong supervised reference, the zero-shot Qwen2.5-7B model performs competitively without additional training. Ultimately, bottleneck prompting delivers the best ROUGE-1, ROUGE-2, BERTScore, and BETOScore scores, with Gemma-2-9B and LLaMA-3.1-70B leading the way. These results confirm the effectiveness of the proposed bottleneck prompting approach.

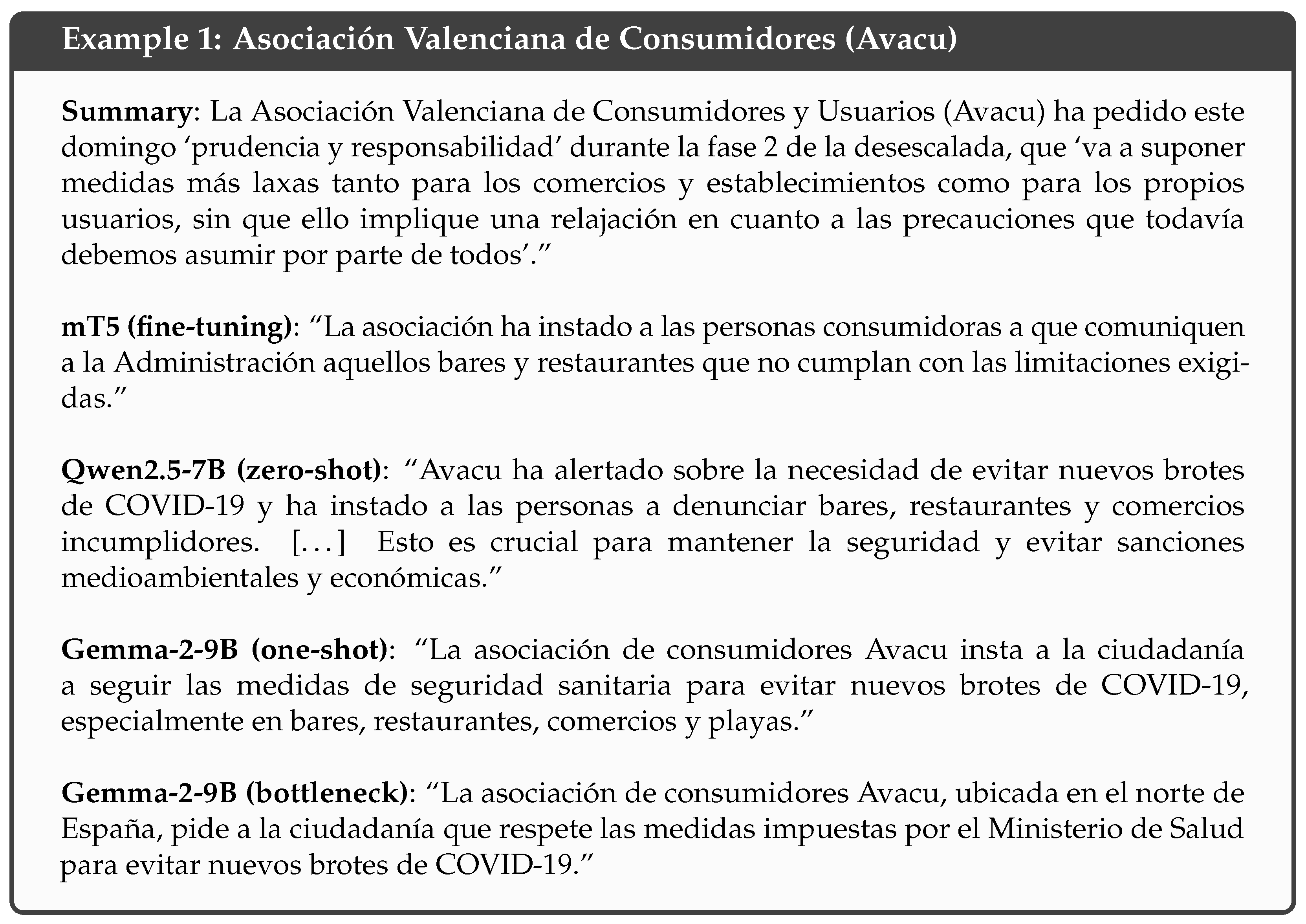

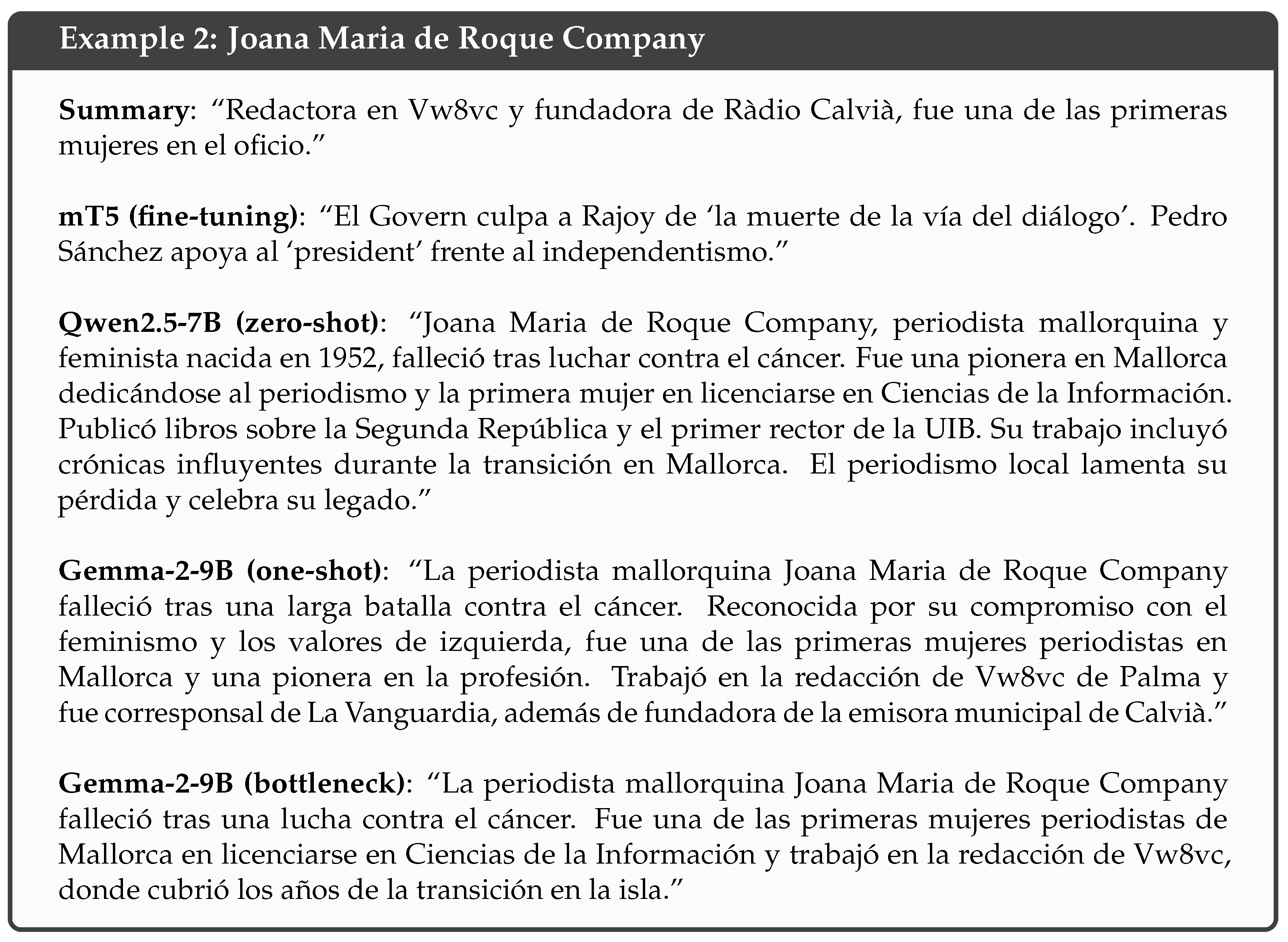

To better understand the numerical results, we qualitatively examined model outputs for representative DACSA articles. Two illustrative cases, one institutional and one biographical, highlight the main behavioral patterns and the qualitative advantages of our approach.

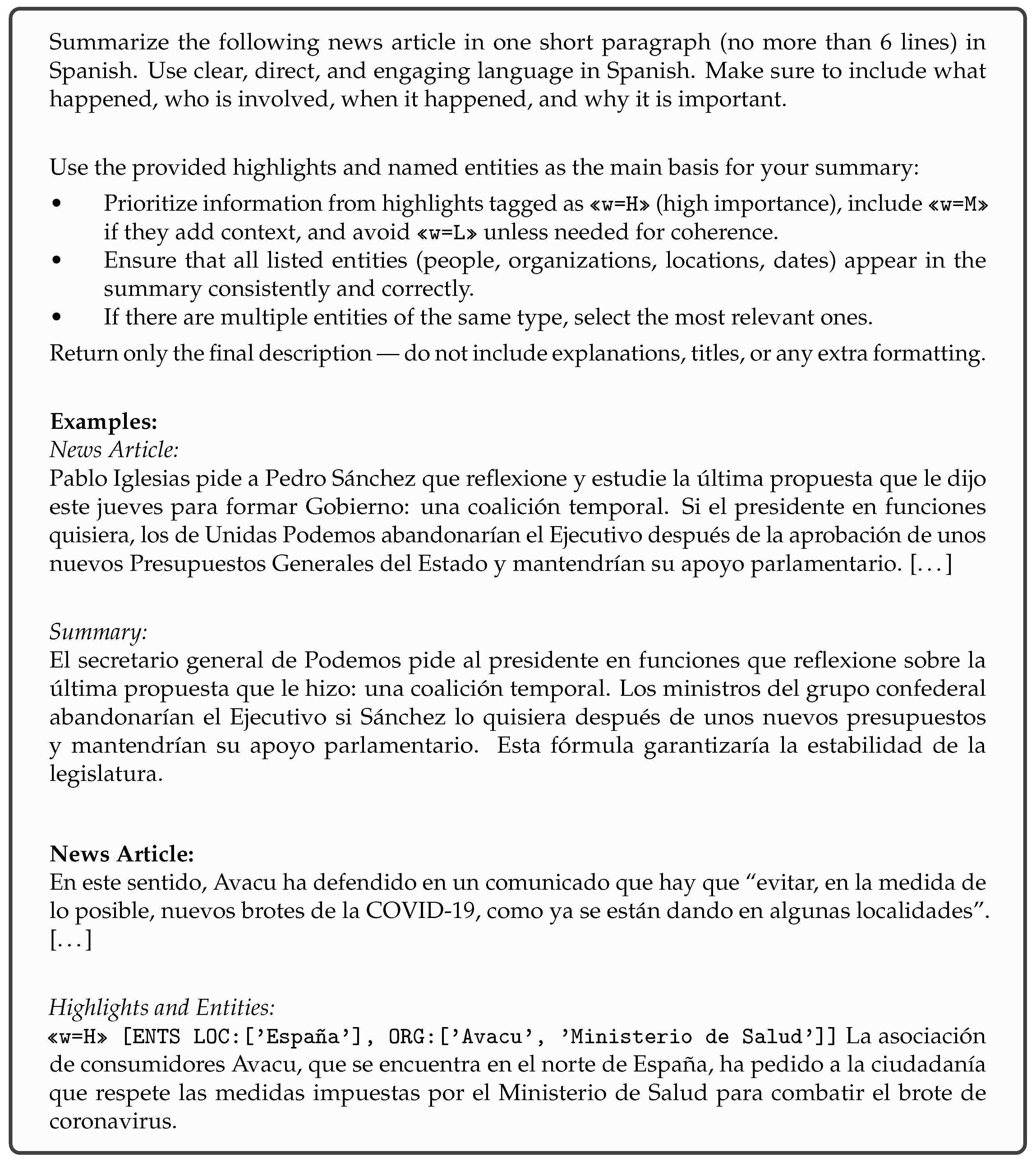

Figure 5 illustrates clear differences in information coverage and factual control. The fine-tuned mT5 misinterprets the source, introducing an action that is not present in the gold summary (“reporting non-compliant establishments”). Qwen2.5-7B produces a fluent but overly detailed summary, adding fabricated elements such as “sanctions” and “mask use”. The one-shot Gemma-2-9B aligns better with the reference, preserving the intended meaning (“prudence and responsibility”) and avoiding hallucinated content. The bottleneck version yields the most structured and contextually rich summary and includes entities such as “Ministerio de Salud”. This example thus shows that bottleneck prompting effectively enhances coherence and factual control.

Figure 6 shows a complete failure of mT5, which produces unrelated political content, indicating poor domain generalization. Qwen2.5-7B generates a rich but largely fabricated biography, adding plausible yet nonexistent facts. The one-shot Gemma-2-9B model produces a balanced and contextually coherent summary. The bottleneck version provides the most factually faithful and semantically grounded text: it correctly retains entities (“Vw8vc”, “Roque Company”, “Ciencia de la Información”) and structures the narrative around facts found in the gold summary.

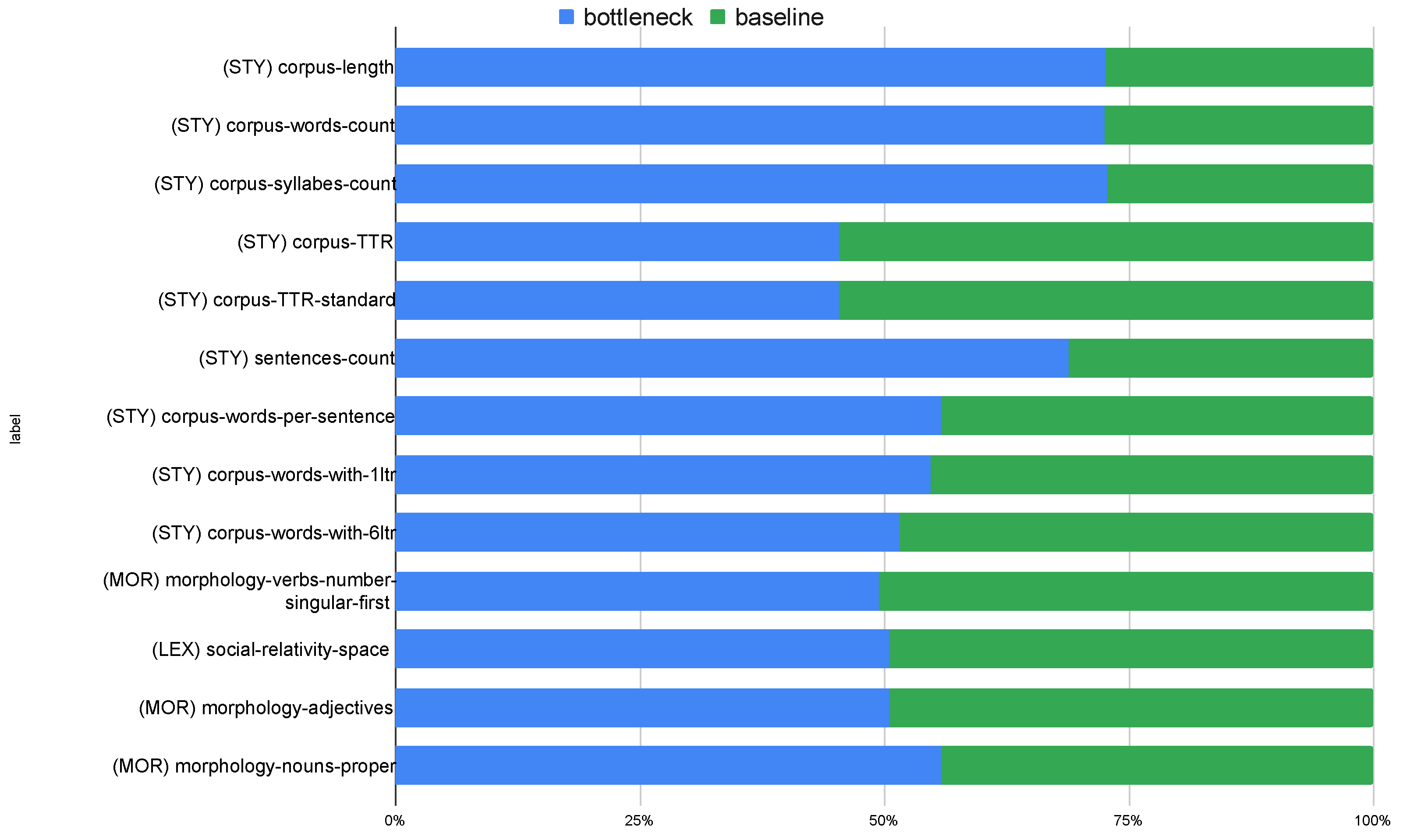

Figure 7 presents a comparative analysis of the summaries generated by the baseline (mT5) model and the proposed bottleneck prompting approach, focusing on their linguistic, morphological, and lexical characteristics. For this deeper linguistic analysis, we employed the UMUTextStats [29] tool. After extracting the linguistic features, we calculated the information gain of each feature with respect to the two sets of summaries. We then ranked the features by their information gain coefficients, calculated the mean value of each feature for each model, and normalized these values to 100% to highlight the differences between the two models. As shown, the bottleneck model generates longer summaries than the baseline, whereas the baseline has a higher type-token ratio (TTR), meaning it uses a greater variety of words. Other morphological features, such as the use of first-person verbs, proper nouns, and adjectives, did not vary significantly between the two models' summaries.
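The feature-ranking step can be sketched as follows. This is a minimal illustration under several assumptions: each summary is represented as a dictionary of numeric UMUTextStats features labeled with the system that produced it; information gain is computed after splitting each feature at its median (a hypothetical discretization choice); and "normalized to 100%" is read as rescaling each feature's two per-system means so that they sum to 100. The helper names and the system labels are illustrative.

```python
import math
from statistics import fmean, median


def _entropy(labels: list[str]) -> float:
    n = len(labels)
    return -sum((k / n) * math.log2(k / n)
                for k in (labels.count(c) for c in set(labels)))


def information_gain(values: list[float], labels: list[str]) -> float:
    """IG of a numeric feature w.r.t. the system label, using a median split."""
    cut = median(values)
    left = [y for x, y in zip(values, labels) if x <= cut]
    right = [y for x, y in zip(values, labels) if x > cut]
    n = len(labels)
    remainder = sum(len(part) / n * _entropy(part) for part in (left, right) if part)
    return _entropy(labels) - remainder


def rank_and_normalize(rows: list[dict], labels: list[str],
                       systems=("mT5", "bottleneck")):
    """Rank features by IG and express each feature's per-system means as percentages."""
    report = []
    for feat in rows[0]:
        values = [row[feat] for row in rows]
        # Assumes every system in `systems` has at least one summary.
        means = {s: fmean(v for v, y in zip(values, labels) if y == s) for s in systems}
        total = sum(means.values())
        shares = {s: (100 * m / total if total else 0.0) for s, m in means.items()}
        report.append((feat, information_gain(values, labels), shares))
    return sorted(report, key=lambda item: item[1], reverse=True)
```

A feature that perfectly separates the two systems (such as summary length, if one system is always longer) reaches the maximum gain of 1 bit for balanced classes, while a feature whose values interleave across systems scores near 0 and sinks to the bottom of the ranking.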

Overall, three findings stand out from the results. First, one-shot prompting outperforms zero-shot summarization for nearly all models, highlighting the benefits of minimal supervision. Second, bottleneck prompting enhances factual grounding and content selection, particularly for higher-capacity LLMs, yielding higher ROUGE-1, ROUGE-2, and ROUGE-L scores. Third, fine-tuned baselines such as mT5 remain competitive; however, modern open LLMs with structured prompting can achieve comparable or superior results without requiring domain-specific training. These results suggest a promising path for multilingual summarization in settings with limited resources, such as Spanish news.