Parkinson’s Disease Classification Using Gray Matter MRI and Deep Learning: A Comparative Framework

Abstract

1. Introduction

2. Data and Methods

2.1. Early Diagnosis and Disease Stage Classification of Parkinson’s Disease

2.2. Dataset Used (PPMI)

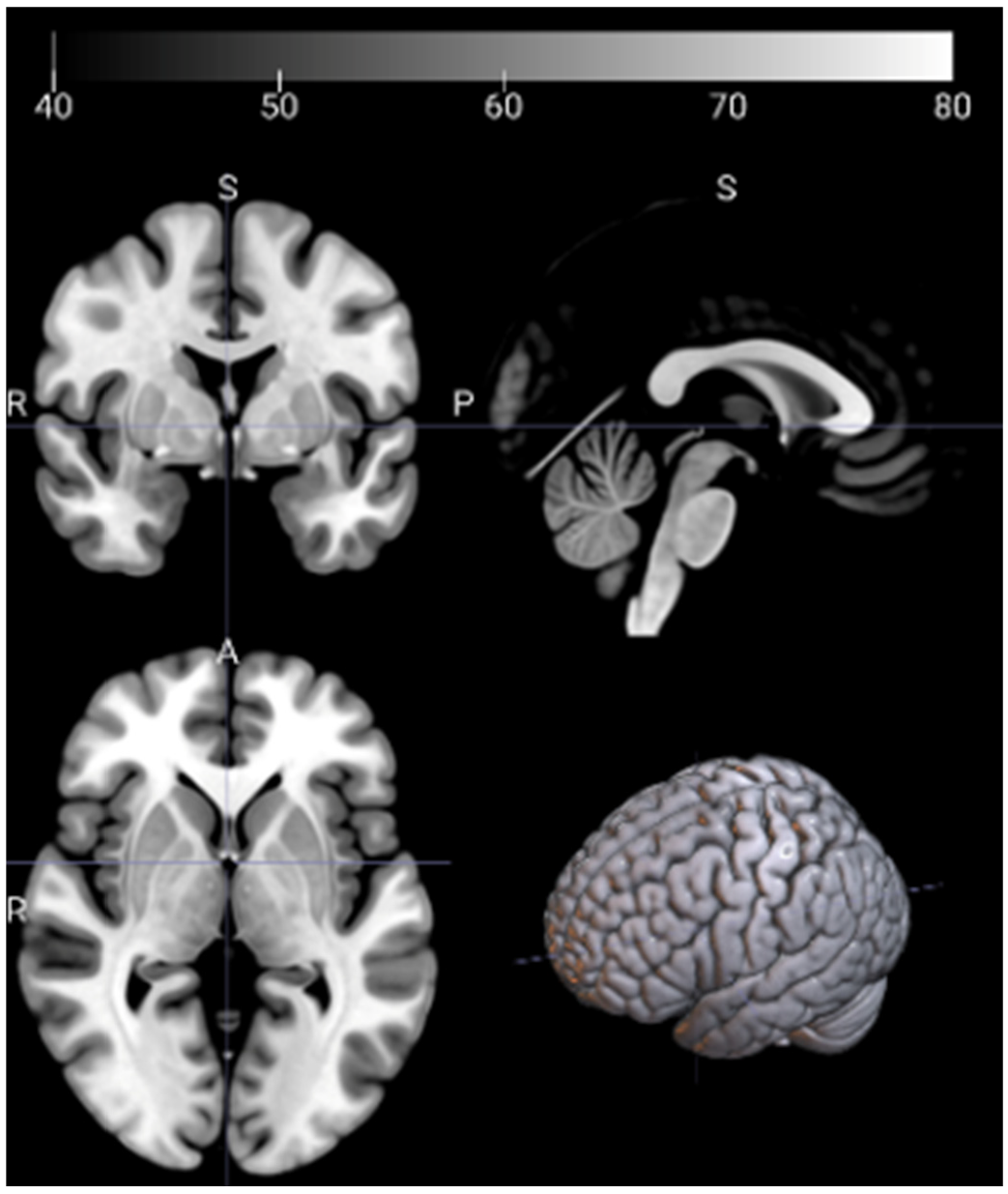

2.3. Image Preprocessing and Feature Extraction

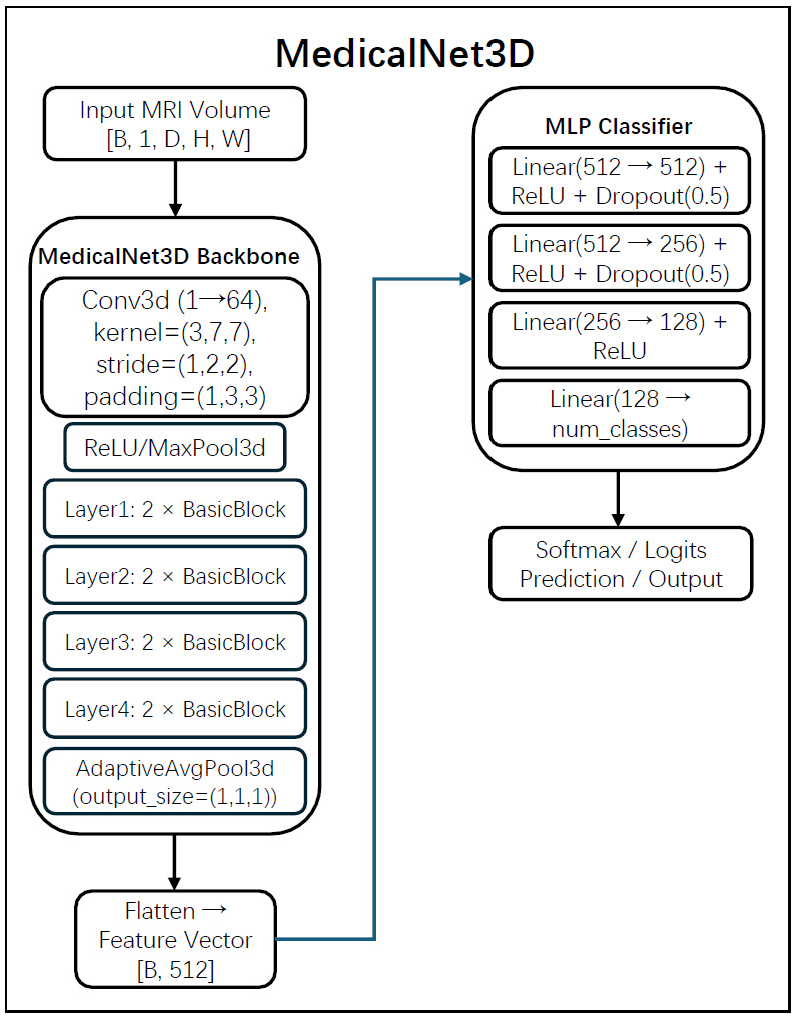

2.4. Model Architectures for Feature Extraction

2.5. Feature Learning Strategies Based on Subject-Wise Data Splits

- The first two layers (Input → 512, 512 → 256) incorporate ReLU activation and Dropout (p = 0.5).

- The third layer (256 → 128) uses ReLU only.

- The final layer (128 → num_classes) produces outputs corresponding to three-class classification.
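The layer layout listed above can be sketched without any framework, as a rough illustration rather than the authors' implementation. The names `MLPClassifier`, `make_layer`, and `in_features`, the weight initialization, the seed, and the use of inverted dropout are all assumptions introduced for this example. The sketch also assumes the final layer returns raw class scores with no softmax applied.

```python
import random


def make_layer(n_in, n_out, rng):
    """Return (weights, bias) for one dense layer (illustrative random init)."""
    scale = (2.0 / n_in) ** 0.5
    weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(x, layer):
    """Affine map: one output per weight row."""
    weights, bias = layer
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def dropout(x, p, rng, training):
    """Inverted dropout (assumed variant): drop with probability p, rescale survivors."""
    if not training or p == 0.0:
        return list(x)
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]


class MLPClassifier:
    """Hypothetical classifier head: Input -> 512 -> 256 -> 128 -> num_classes."""

    def __init__(self, in_features, num_classes=3, p_drop=0.5, seed=0):
        self.rng = random.Random(seed)
        self.p_drop = p_drop
        sizes = [in_features, 512, 256, 128, num_classes]
        self.layers = [make_layer(a, b, self.rng) for a, b in zip(sizes, sizes[1:])]

    def forward(self, x, training=False):
        h = x
        # Layers 1 and 2: Linear -> ReLU -> Dropout(p = 0.5)
        for layer in self.layers[:2]:
            h = dropout(relu(linear(h, layer)), self.p_drop, self.rng, training)
        # Layer 3: Linear -> ReLU only
        h = relu(linear(h, self.layers[2]))
        # Layer 4: Linear only, one raw score per class
        return linear(h, self.layers[3])

    def num_parameters(self):
        return sum(len(w) * len(w[0]) + len(b) for w, b in self.layers)


# The input width of 16 is arbitrary; the real feature dimension depends on the extractor.
model = MLPClassifier(in_features=16)
logits = model.forward([0.1] * 16)
print(len(logits), model.num_parameters())
```

Dropout is active only when `training=True`, so evaluation passes are deterministic for a fixed set of weights.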

2.6. Evaluation Methodology

- Accuracy, representing overall classification correctness.

- F1 score, the harmonic mean of precision and recall, measuring discriminative power across classes.

- AUC (Area Under the Curve), indicating the model’s ability to distinguish between categories.
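The three metrics above can be computed for a three-class problem roughly as follows. This is a minimal sketch under stated assumptions: the source does not say how F1 and AUC are averaged across classes, so macro averaging and a one-vs-rest ROC AUC are assumed here. All function names and the toy labels and probabilities are hypothetical and are not results from the study.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted class equals the true class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred, classes):
    """Unweighted mean of per-class F1 = 2TP / (2TP + FP + FN)."""
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def binary_auc(is_positive, scores):
    """ROC AUC as the share of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [s for flag, s in zip(is_positive, scores) if flag]
    neg = [s for flag, s in zip(is_positive, scores) if not flag]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(y_true, probs, classes):
    """One-vs-rest AUC per class, then an unweighted mean (assumed averaging)."""
    aucs = [
        binary_auc([t == c for t in y_true], [row[i] for row in probs])
        for i, c in enumerate(classes)
    ]
    return sum(aucs) / len(aucs)


# Toy data for illustration only.
classes = ["HC", "Prodromal", "PD"]
y_true = ["HC", "HC", "Prodromal", "PD", "PD", "PD"]
probs = [
    [0.7, 0.2, 0.1],
    [0.3, 0.4, 0.3],
    [0.2, 0.6, 0.2],
    [0.1, 0.2, 0.7],
    [0.2, 0.3, 0.5],
    [0.4, 0.3, 0.3],
]
# Predicted class = index of the largest probability in each row.
y_pred = [classes[max(range(len(row)), key=row.__getitem__)] for row in probs]

print(accuracy(y_true, y_pred))
print(macro_f1(y_true, y_pred, classes))
print(macro_ovr_auc(y_true, probs, classes))
```

Macro averaging gives each class equal weight regardless of its size. That matters when one class dominates the dataset, because a majority-class predictor could otherwise score well on accuracy alone.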

3. Experiments and Results

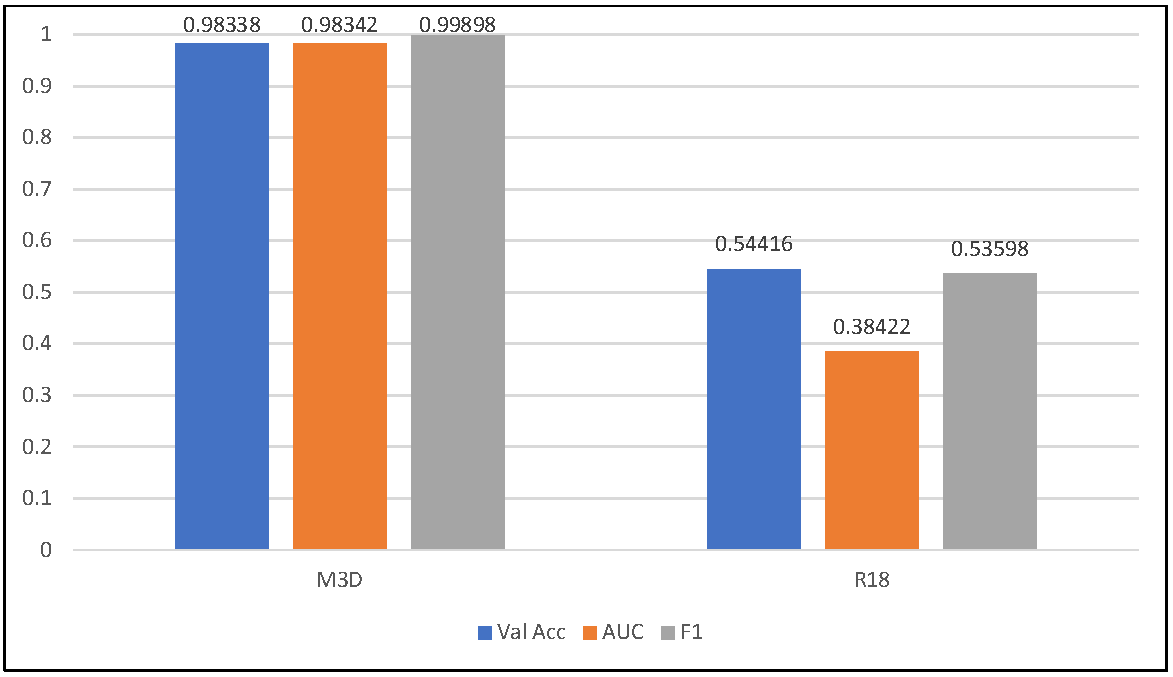

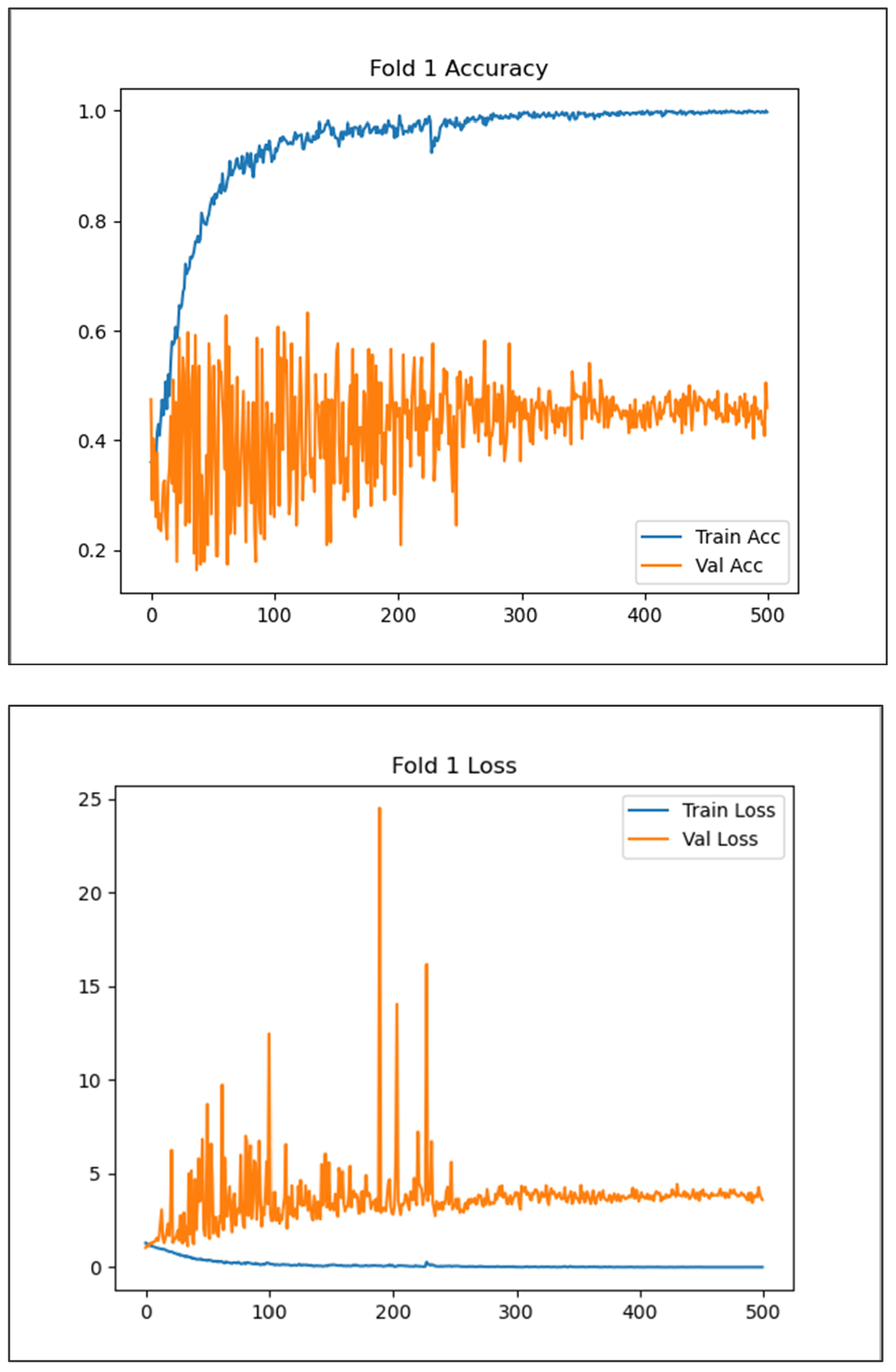

3.1. Global Feature Learning

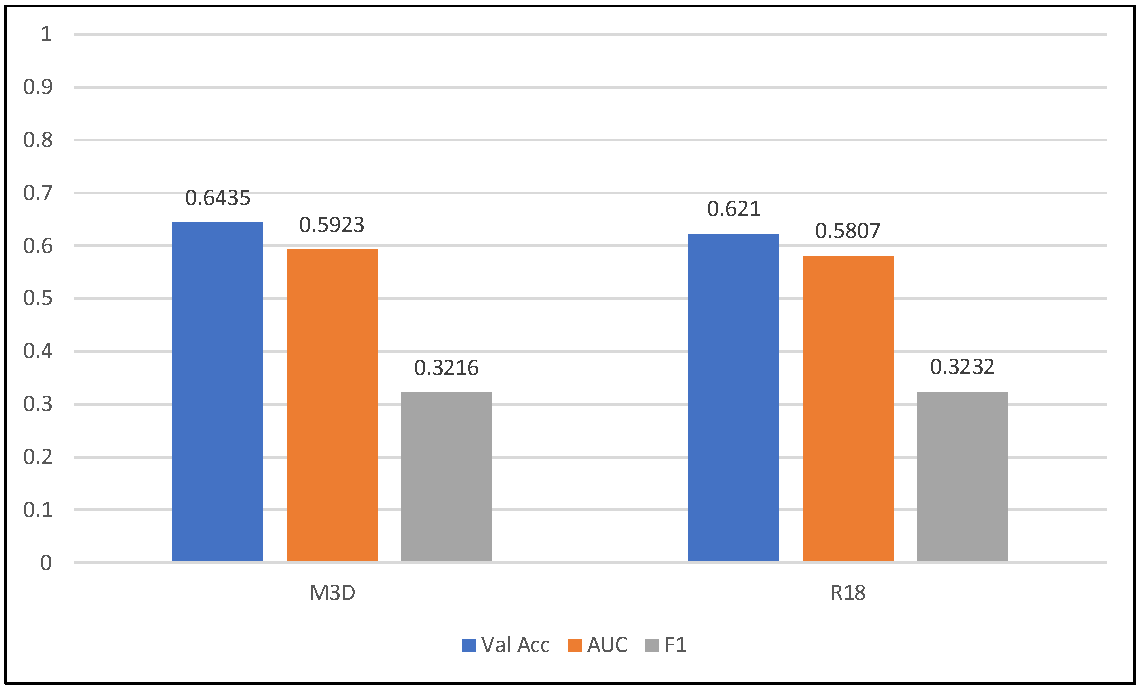

3.2. Group-Wise Feature Fusion Strategy

3.3. ID-Separated Group-Wise Feature Fusion Strategy

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Class | Number |
|---|---|
| Healthy Control (HC) | 139 |
| Prodromal Parkinson’s Disease (Prodromal) | 146 |
| Parkinson’s Disease (PD) | 498 |

| Class | Number |
|---|---|
| Healthy Control (HC) | 173 |
| Prodromal Parkinson’s Disease (Prodromal) | 184 |
| Parkinson’s Disease (PD) | 622 |
| Total | 979 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, H.; Liang, T.; Yao, R.; Kuremoto, T. Parkinson’s Disease Classification Using Gray Matter MRI and Deep Learning: A Comparative Framework. Appl. Sci. 2025, 15, 11812. https://doi.org/10.3390/app152111812
