Abstract

Anxiety and emotional stress are pervasive psychological challenges that profoundly affect human health in today’s fast-paced society. Traditional assessment methods, such as self-reports and clinical interviews, often suffer from subjective bias and cannot evaluate mental states objectively or in real time. However, integrating physiological signals, including electroencephalography (EEG), heart rate (HR), electrodermal activity (EDA), and eye movements, with advanced machine learning (ML) techniques offers a promising approach to automating and objectifying mental health assessment. A systematic review was conducted to explore recent advances in the early detection of anxiety and stress that combine physiological signals with ML methods. To assess methodological quality, a dedicated assessment framework was designed and applied to the 113 included studies; it identified significant deficiencies in the literature, highlighting the urgent need to adopt standardized reporting guidelines in this field. The role of these technologies in feature extraction, classification, and predictive modeling was analyzed, together with critical challenges related to data quality, model interpretability, and the influence of intersectional factors such as gender and age. Ethical and privacy considerations in current research were also discussed. Finally, avenues for future research were summarized, highlighting the promise of ML technologies for early detection of, and proactive intervention in, mental disorders.

1. Introduction

Mental disorders are clinically significant disturbances in an individual’s cognition, emotional regulation, or behavior that often lead to distress or impairment in critical areas of functioning. They have become a major global concern, with profound impacts on society and public health systems. By 2023, the prevalence of probable mental disorders in England had risen to 20.3% among children aged 8 to 16 and to 23.3% among young people aged 17 to 19, with anxiety and stress disorders being the most prevalent [1]. The COVID-19 pandemic, which began in 2020, further exacerbated these problems, with the prevalence of anxiety and emotional stress increasing by 26% [2]. Women are affected twice as often as men [3]. Together, these trends pose a major challenge to both individuals and health care systems.

Anxiety and emotional stress are two key factors in mental health problems; they are the body’s natural response to a perceived threat or demand. Appropriate levels of brief, situation-specific anxiety and emotional stress are beneficial: they can provide extra strength for self-protection or enable a quick response to avoid danger [4,5]. Chronic anxiety or emotional stress, however, poses significant challenges [6]. It can persist over extended periods, impair cognitive function and emotional stability [7,8], and seriously affect overall well-being [9], increasing the risk of various health issues, including cardiovascular disease [10], diabetes [11], and immune system dysfunction [12]. Beyond these direct physiological consequences, chronic anxiety and stress frequently serve as primary etiological factors in the development of specific mental disorders. These conditions impose a substantial burden on both the quality of life of affected individuals and the socioeconomic resources of healthcare systems worldwide [13,14]. Moreover, they may be pivotal precursors to substance abuse and illicit drug use [15].

Therefore, effective monitoring and prediction of anxiety and emotional stress levels could help prevent these harmful effects and improve quality of life. Physiological signals, including electroencephalography (EEG), heart rate (HR), electrodermal activity (EDA), and eye movements, offer a streamlined and effective approach that provides key insights into the body’s response to stressors [16]. Traditional techniques have limitations: self-report questionnaires rely primarily on subjective perception, and cortisol tests provide only discrete, point-in-time measurements. By contrast, biomarkers such as EEG, HR, EDA, and eye movements reflect the body’s stress state more directly and may provide an objective, non-invasive method for assessing anxiety and emotional stress levels while reducing the biases of traditional methods.

Advances in machine learning (ML) applied to physiological data have considerably improved the classification and prediction of anxiety and emotional stress levels [17]. ML techniques excel at analyzing complex physiological data and identifying patterns that traditional methods might miss [18]. This capability supports earlier detection and better management of mental disorders and offers a more nuanced understanding of an individual’s anxiety, emotional stress, and overall mental health. Despite this potential, applying ML to assess anxiety and emotional stress from physiological signals faces several challenges. First, physiological signals have a low signal-to-noise ratio (SNR), which makes data quality difficult to ensure; the resulting inaccurate or incomplete data undermine the reliability of ML models. Second, ML models often function as “black boxes”, making it difficult to understand how they reach their conclusions. Third, ML models often fail to account for factors other than physiological signals, such as gender and age [19,20], that also influence anxiety and emotional stress. Finally, intersectional factors such as race, socioeconomic status, and sexual orientation are rarely considered alongside physiological signals, and ethical and privacy issues are often overlooked, which directly affects the credibility of ML-based anxiety and stress assessments. The primary objective of this review is to examine how ML methods are applied to classify and predict anxiety- or stress-related mental disorders using physiological signals. Following the PRISMA 2020 guidelines [21], we assess the effectiveness of these methods, highlight their limitations, and address key challenges such as data integrity, model interpretability, demographic influences, and ethical considerations. A secondary objective is to advance early detection and intervention strategies for mental health disorders.

The contributions of this review are summarized in four aspects: (1) We quantify the recent landscape across the included studies by modality and compare machine learning and deep learning performance. (2) We identify recurrent failure modes, especially the challenge of distinguishing stress from neutral states. (3) We connect technical choices to data quality along the end-to-end pipeline from acquisition and preprocessing to feature learning and evaluation. (4) We integrate demographic, intersectional, and privacy considerations into actionable guidance for reproducible and equitable study design.

2. Materials and Methods

To improve the clarity and precision of the results, a rigorous methodological approach adhering to PRISMA guidelines was employed. This involved the implementation of a well-defined search strategy, systematic data collection, clearly delineated inclusion and exclusion criteria, and a comprehensive review and synthesis of the findings.

2.1. Search Strategy

A focused and comprehensive search strategy was employed to investigate the application of ML to the analysis of physiological signals associated with anxiety and emotional stress. The search was conducted between 1 January 2024 and 19 July 2024 to identify relevant English-language literature published within the previous 5 years and indexed in the PubMed and Web of Science databases. The search terms were selected to capture studies integrating physiological monitoring with ML techniques; they are listed below (see Appendix A for details).

- (“anxiety” [Title/Abstract]

- OR “blood pressure” [Title/Abstract]

- OR “EL” [Title/Abstract]

- OR “eye tracking” [Title/Abstract]

- OR “heart rate” [Title/Abstract]

- OR “skin conductance” [Title/Abstract]

- OR “stress” [Title/Abstract])

- AND (“classification” [Title/Abstract]

- OR “deep learning” [Title/Abstract]

- OR “machine learning” [Title/Abstract])

This search strategy was designed to cover a wide range of studies applying ML to diverse physiological signals associated with anxiety and emotional stress. By combining medical and computational terminology, it facilitated the identification of studies at the intersection of physiological research and computational modeling, including work on the variability of physiological responses, the influence of demographic factors, and the need for transparent and interpretable ML models. This approach to literature selection was crucial for assembling a body of research aligned with the objectives of this review and provided a solid foundation for understanding the current state and future potential of ML in the study of anxiety and emotional stress.

2.2. Inclusion and Exclusion Criteria

The keyword search yielded 1781 records. Studies were selected in two stages. In the first stage, titles and abstracts were screened to identify studies that assessed anxiety and/or stress using physiological signals such as EEG, electrocardiogram (ECG), EDA, heart rate variability (HRV), or eye tracking, used ML methods for data analysis, and reported evaluation metrics demonstrating model performance. In the second stage, the full texts of the selected articles were examined to confirm their relevance to the review goals. To ensure accuracy and consistency, screening and extraction were performed independently by two reviewers and cross-checked; the results were compared and discussed, and no inconsistencies were found. This process ensured that the included studies were those most relevant to the review objectives. The review included only articles that assessed anxiety or stress using physiological signals analyzed with ML methods, reported specific evaluation metrics, and provided clear findings. Studies were excluded if they were unrelated to anxiety or stress assessment, were written in languages other than English, were unpublished, involved only participants without anxiety or stress, or focused specifically on conditions such as post-traumatic stress disorder (PTSD).

2.3. Screening Synthesis and Results

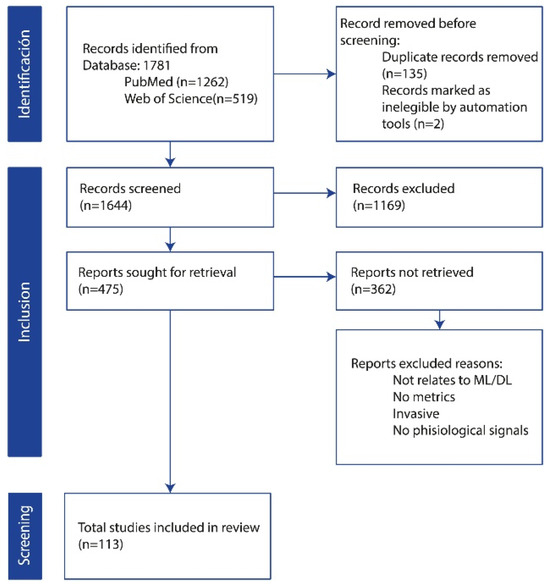

Following the search strategy and the inclusion and exclusion criteria described above, the literature search yielded a total of 1781 records from two databases: PubMed (n = 1262) and Web of Science (n = 519). The PRISMA flow diagram in Figure 1 outlines the study selection process, including the number of records identified, the screening performed after duplicate removal, and the final set of studies included for analysis. In the identification phase, 135 duplicate records were removed, and automation tools eliminated a further 2 records. The remaining 1644 records underwent title and abstract screening, after which 1169 were excluded based on the predefined criteria. Of the 475 potentially eligible records, 362 were not retained for reasons such as not using ML or DL methods, lacking reported metrics, relying on invasive procedures, or not including the specified physiological signals. The remaining 113 studies were assessed for eligibility and included in the analysis.

Figure 1.

PRISMA flow diagram for study selection.
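As a quick consistency check, the record counts reported for the flow diagram can be reconciled with plain arithmetic. The sketch below uses only the numbers stated in this section; the variable names are our own.

```python
# Counts reported in Section 2.3; variable names are illustrative.
identified = {"PubMed": 1262, "Web of Science": 519}
duplicates_removed = 135
removed_by_automation = 2
excluded_title_abstract = 1169
removed_before_inclusion = 362  # records not retained at the eligibility stage

total_identified = sum(identified.values())
screened = total_identified - duplicates_removed - removed_by_automation
full_text = screened - excluded_title_abstract
included = full_text - removed_before_inclusion

for label, n in [("identified", total_identified), ("screened", screened),
                 ("eligibility stage", full_text), ("included", included)]:
    print(f"{label:>18}: {n}")
```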

Data were extracted from these studies on research objectives, data types, data collection equipment, sample characteristics, preprocessing methods, ML or deep learning (DL) models, feature extraction techniques, evaluation metrics, and main results. This structured extraction enabled a consistent comparison across the included literature.
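To make the extraction fields concrete, the following is a minimal sketch of one extraction record as a Python dataclass. The class name, field names, and the example study are hypothetical illustrations, not the actual extraction form used in this review; the helper method simply lists fields that a manuscript left unreported.

```python
from dataclasses import asdict, dataclass, field
from typing import Dict, List, Optional


@dataclass
class ExtractionRecord:
    """One row of a hypothetical data-extraction sheet."""
    study_id: str
    objective: str
    signals: List[str]                                       # e.g. ["EEG", "ECG"]
    device: Optional[str] = None                             # data collection equipment
    n_participants: Optional[int] = None                     # sample characteristics
    preprocessing: List[str] = field(default_factory=list)
    features: List[str] = field(default_factory=list)
    models: List[str] = field(default_factory=list)          # ML or DL models
    metrics: Dict[str, float] = field(default_factory=dict)  # e.g. {"accuracy": 0.9}
    main_result: str = ""

    def unreported(self) -> List[str]:
        """Names of fields left empty, i.e. not reported in the manuscript."""
        return [name for name, value in asdict(self).items()
                if value is None or value in ("", [], {})]


# Hypothetical study that reports only its signals and model.
record = ExtractionRecord(study_id="S001", objective="binary stress detection",
                          signals=["ECG", "EDA"], models=["KNN"])
print(record.unreported())
```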

2.4. Study Quality Assessment

To address the absence of standardized quality assessment instruments specifically designed for machine learning studies utilizing physiological signals, we developed and implemented a domain-specific evaluation framework to systematically assess the methodological rigor of all 113 included studies. This retrospective assessment was conducted based exclusively on information explicitly reported in the published manuscripts.

2.4.1. Assessment Framework and Evaluation Criteria

Our quality assessment framework encompassed five critical domains fundamental to the validity and reproducibility of machine learning research in physiological signal analysis. These domains included study population and sample characteristics, signal acquisition methodology, data preprocessing transparency, model validation strategies, and confounding factor consideration.

The first domain evaluated the adequacy of sample size reporting and the completeness of participant demographic information, including age distribution and gender composition, which are essential for assessing the generalizability of findings across different populations.

The second domain focused on the transparency of reporting regarding data collection procedures, examining whether studies provided sufficient detail about hardware specifications, sampling rates, electrode placement protocols, and experimental conditions to enable replication.

Data preprocessing and signal processing transparency constituted the third evaluation criterion. This assessment examined the comprehensiveness of preprocessing methodology reporting, particularly focusing on artifact removal procedures, noise reduction techniques, and signal filtering approaches that directly impact data quality and subsequent model performance.

The fourth domain addressed model validation and evaluation strategies. We evaluated the robustness of validation approaches employed, distinguishing between studies that relied solely on training set performance and those implementing more rigorous cross-validation procedures or independent test set evaluation methodologies.

Finally, we assessed each study’s consideration of potential confounding factors. This evaluation examined whether studies acknowledged and addressed confounding variables such as medication effects, baseline anxiety levels, and other demographic or clinical factors that might influence physiological responses and thereby affect model validity.
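The framework above is described qualitatively. One way to operationalize it, shown here purely as a hypothetical sketch and not as the procedure applied in this review, is a yes/no checklist per domain in which an item counts only if the manuscript explicitly reports it. The item names are our own paraphrases of the criteria listed above.

```python
from typing import Dict, List

# Hypothetical checklist items paraphrasing the five assessment domains.
DOMAINS: Dict[str, List[str]] = {
    "population": ["sample_size", "age", "gender"],
    "acquisition": ["hardware", "sampling_rate", "electrode_placement", "conditions"],
    "preprocessing": ["artifact_removal", "noise_reduction", "filtering"],
    "validation": ["cross_validation", "independent_test_set"],
    "confounders": ["medication", "baseline_anxiety"],
}


def domain_coverage(reported: Dict[str, bool]) -> Dict[str, float]:
    """Fraction of checklist items explicitly reported, per domain.

    Items missing from `reported` count as not reported, mirroring an
    assessment based only on what the manuscript states.
    """
    return {
        domain: sum(bool(reported.get(item, False)) for item in items) / len(items)
        for domain, items in DOMAINS.items()
    }


# Hypothetical study: full demographics, partial acquisition and preprocessing
# details, cross-validation only, no confounders reported.
study = {"sample_size": True, "age": True, "gender": True, "sampling_rate": True,
         "filtering": True, "cross_validation": True}
scores = domain_coverage(study)
for domain, score in scores.items():
    print(f"{domain:<14}{score:.2f}")
```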

2.4.2. Quality Assessment Results

Our systematic evaluation revealed substantial heterogeneity in methodological reporting quality across the included literature. Through comprehensive analysis of the 113 studies, we identified several recurring patterns that highlight areas requiring improvement in current research practices.

The quality and completeness of methodological reporting varied considerably across studies, with many investigations providing insufficient detail to enable comprehensive quality assessment. Among studies that provided adequate methodological information for detailed analysis, we observed substantial variation in research design and implementation approaches. Sample sizes across the literature demonstrated considerable range, with some investigations involving as few as 10 participants while others included over 100 individuals, reflecting the diversity of research contexts and objectives within this field.

Examination of validation methodologies revealed inconsistent implementation of rigorous evaluation strategies across the literature. While most studies reported some form of model validation, there was considerable variation in the sophistication of these approaches. Many investigations relied primarily on cross-validation techniques, with limited implementation of independent test set evaluation or external validation procedures. This pattern raises concerns about the generalizability of reported model performance beyond the specific study populations and experimental conditions.
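When several windows are taken from each participant, splitting windows at random can place the same person’s data in both training and test folds, which tends to inflate accuracy. The sketch below uses scikit-learn on synthetic data (20 hypothetical subjects with 30 windows each) to show a participant-level alternative: a held-out group of subjects serves as an independent test set, and subject-wise cross-validation (`GroupKFold`) runs only on the remaining subjects. The model and split sizes are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GroupKFold, GroupShuffleSplit, cross_val_score

# Synthetic stand-in for windowed physiological features: 20 subjects x 30 windows.
X, y = make_classification(n_samples=600, n_features=12, random_state=0)
groups = np.repeat(np.arange(20), 30)  # subject ID for every window

# 1) Hold out 20% of subjects as an independent test set.
outer = GroupShuffleSplit(n_splits=1, test_size=0.2, random_state=0)
train_idx, test_idx = next(outer.split(X, y, groups))
assert not set(groups[train_idx]) & set(groups[test_idx])  # no subject overlap

# 2) Subject-wise cross-validation on the development subjects only.
model = RandomForestClassifier(n_estimators=200, random_state=0)
cv_scores = cross_val_score(model, X[train_idx], y[train_idx],
                            groups=groups[train_idx], cv=GroupKFold(n_splits=4))

# 3) Refit on all development subjects and score once on the held-out subjects.
model.fit(X[train_idx], y[train_idx])
test_acc = model.score(X[test_idx], y[test_idx])
print(f"CV accuracy: {cv_scores.mean():.3f}  held-out-subject accuracy: {test_acc:.3f}")
```

Because the data are synthetic, the printed accuracies carry no meaning; the point is that subject IDs never cross the train/test boundary.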

The reporting of data preprocessing methodologies also demonstrated significant inconsistencies. Although the majority of studies acknowledged implementing preprocessing procedures, the level of methodological detail varied markedly across publications. Some studies provided comprehensive descriptions of artifact removal, filtering, and signal conditioning procedures, while others offered only cursory mentions of these critical steps. This variability substantially limits the reproducibility of reported findings and complicates meaningful comparison of results across different investigations.

Furthermore, our analysis revealed limited systematic consideration of potential confounding variables that could influence physiological responses and, consequently, model performance. Factors such as participant medication use, pre-existing medical conditions, and baseline anxiety or stress levels were infrequently reported or systematically controlled for in the analytical approaches. This oversight represents a significant methodological limitation that may compromise the validity of reported associations between physiological signals and anxiety or stress states.

The heterogeneity in reporting quality prevented systematic quantification of methodological adequacy across all included studies. However, the patterns identified through our assessment highlight fundamental challenges in evidence synthesis within this rapidly evolving field, particularly the need for standardized reporting frameworks that would facilitate more rigorous systematic evaluation of research quality.

2.4.3. Assessment Limitations and Methodological Considerations

Several important limitations constrain the interpretation of our quality assessment findings. First, this evaluation was necessarily limited to information explicitly reported in the published manuscripts, and the absence of reporting does not definitively indicate that appropriate methodological procedures were not implemented. Second, the heterogeneity in journal requirements and article length restrictions may have contributed to variations in methodological detail across studies. Finally, the retrospective nature of this assessment precluded the application of more comprehensive quality evaluation instruments that would require access to original study protocols or datasets.

These findings collectively highlight the critical need for the development and adoption of standardized reporting guidelines specific to machine learning applications in physiological signal research, similar to established frameworks in other research domains.

3. Results

3.1. Physiological Signals

This comprehensive literature review emphasizes that physiological signals, such as EEG [22], HR [23], HRV [24], EDA [25], and eye movements [26], provide valuable data for machine learning-based early detection of mental health disorders. EEG offers high temporal resolution but is susceptible to noise, while MRI, despite high detail, is costly and less accessible [27]. HR and HRV, easily collected via wearable devices, are linked to stress but provide limited information compared to EEG. Respiratory rate is easily measured but provides less information than EEG or HRV [26]. EDA is sensitive to emotional responses but is susceptible to environmental factors [25]. Eye movement data provide insights into attention and emotion but are prone to head movement interference [26].

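Among the 113 reviewed papers, approximately 51 used EEG, 21 used HR or HRV, 17 used EDA, 26 used ECG, 14 used photoplethysmography (PPG), and 4 used electromyography (EMG). Because many studies combined several modalities, these counts sum to more than 113.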

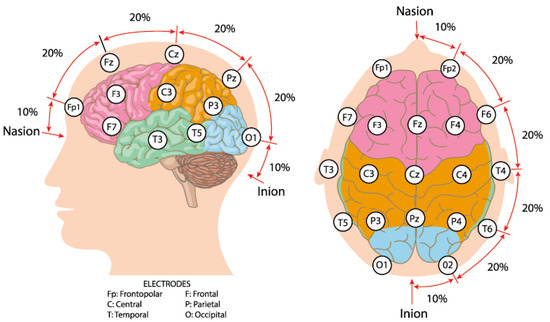

3.1.1. EEG

EEG is a non-invasive technique that records the brain’s electrical activity through scalp electrodes, reflecting neuronal synchronization and cognitive-emotional states [27,28]. Electrode positions on EEG caps, including high-density caps (e.g., 64 or 128 channels), typically follow the international 10–20 system or its higher-density extensions (Figure 2) [29,30]. Raw EEG data usually contain artifacts from eye blinks and muscle activity, so preprocessing is essential to mitigate their adverse effects. A typical preprocessing pipeline involves band-pass filtering followed by independent component analysis (ICA) to identify and remove artifact-related components. Feature extraction is a critical step bridging the signals and ML models. Handcrafted features are commonly used to represent the time- and frequency-domain characteristics of EEG signals [18,19,31]. Time-domain features, such as the mean, variance, peak-to-peak amplitude, and signal-to-noise ratio of multichannel EEG, capture the overall fluctuations of the signal. Frequency-domain features typically focus on the Delta (0.5–4 Hz), Theta (4–8 Hz), Alpha (8–13 Hz), Beta (13–30 Hz), and Gamma (30–100 Hz) bands [27,28], which are often associated with specific emotional states. These handcrafted features are highly interpretable and well suited to classifiers such as Support Vector Machines (SVMs), Linear Discriminant Analysis (LDA), and Random Forests.

Figure 2.

International 10–20 system brain electrode distribution map. Source: author’s own work.
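As an illustration of the frequency-domain features described above, the following sketch computes absolute band power per channel with Welch’s method (`scipy.signal.welch`). It assumes the EEG has already been band-pass filtered and cleaned (e.g., with ICA); the 2-s segment length, the 256 Hz sampling rate, and the synthetic test signal are assumptions, not values taken from the reviewed studies.

```python
import numpy as np
from scipy.signal import welch

# Canonical bands from the text (Hz); gamma must stay below the Nyquist frequency.
BANDS = {"delta": (0.5, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 100)}


def band_powers(eeg: np.ndarray, fs: float) -> dict:
    """Absolute band power per channel from a (channels, samples) array.

    Uses Welch's PSD with 2-s segments and integrates it over each band.
    Assumes the signal has already been filtered and cleaned of artifacts.
    """
    freqs, psd = welch(eeg, fs=fs, nperseg=int(2 * fs), axis=-1)
    df = freqs[1] - freqs[0]
    out = {}
    for name, (lo, hi) in BANDS.items():
        mask = (freqs >= lo) & (freqs < hi)
        out[name] = psd[..., mask].sum(axis=-1) * df  # rectangle-rule integral
    return out


# Demo: 2 channels, 10 s at 256 Hz; channel 0 carries a 10 Hz (alpha) rhythm.
fs = 256
t = np.arange(0, 10, 1 / fs)
rng = np.random.default_rng(0)
eeg = 0.5 * rng.standard_normal((2, t.size))
eeg[0] += 2 * np.sin(2 * np.pi * 10 * t)
powers = band_powers(eeg, fs)
print({k: np.round(v, 3) for k, v in powers.items()})
```

Relative band power (each band divided by the total) is a common variant that reduces sensitivity to overall amplitude differences between recordings.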

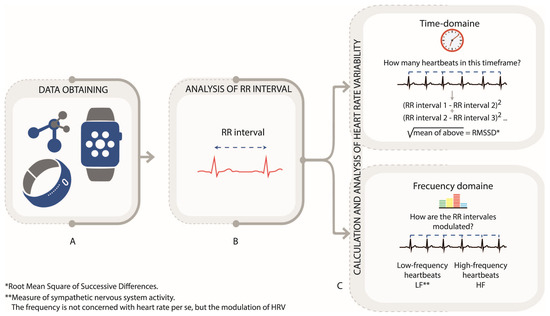

3.1.2. Heart Rate (HR) and Heart Rate Variability (HRV)

Heart rate (HR) and heart rate variability (HRV) are key indicators of cardiovascular function and autonomic nervous system activity and can be recorded with photoplethysmography (PPG) or electrocardiogram (ECG) devices [32,33,34,35,36]. HRV measures the fluctuation in RR intervals (Figure 3A), reflecting the balance between sympathetic and parasympathetic activity; decreased HRV is associated with stress and anxiety [37,38,39,40,41,42]. In HR and HRV data, ectopic beats, motion artifacts, and missing intervals can distort variability measures. Preprocessing therefore includes detecting and removing ectopic beats, interpolating missing intervals, and, when spectral analysis is applied, resampling to an evenly spaced time series. Extracted features include time-domain measures (e.g., SDNN, RMSSD) and frequency-domain measures (e.g., low-frequency and high-frequency power; Figure 3B,C) [18,19,31]. Higher HRV generally indicates good cardiovascular health, whereas low HRV is associated with increased stress and health risks [34]. Table 1 summarizes key HRV metrics.

Figure 3.

Heart rate variability (HRV) analysis via PPG and RR intervals. (A) Data acquisition. (B) Analysis of RR intervals. (C) Calculation and analysis of heart rate variability. Source: author’s own work.

Table 1.

HRV indicators in the time domain.

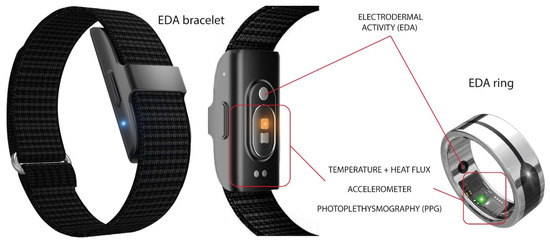
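The HRV preprocessing and time-domain metrics described above can be sketched in a few lines of NumPy. The artifact rule used here (flag any RR interval that differs by more than 20% from the last accepted interval, then linearly interpolate it) is a simple heuristic chosen for illustration, not the method of any particular reviewed study; frequency-domain measures, which additionally require resampling, are omitted.

```python
import numpy as np


def correct_rr(rr_ms, max_change=0.2):
    """Flag RR intervals that differ from the last accepted interval by more than
    `max_change` (20%, an illustrative heuristic) and replace them by linear
    interpolation between accepted neighbours. Assumes the first interval is valid."""
    rr = np.asarray(rr_ms, dtype=float)
    ok = np.ones(rr.size, dtype=bool)
    last = rr[0]
    for i in range(1, rr.size):
        if abs(rr[i] - last) > max_change * last:
            ok[i] = False  # likely ectopic beat or detection artifact
        else:
            last = rr[i]
    idx = np.arange(rr.size)
    return np.interp(idx, idx[ok], rr[ok])


def time_domain_hrv(rr_ms):
    """Common time-domain HRV metrics from RR (NN) intervals in milliseconds."""
    rr = np.asarray(rr_ms, dtype=float)
    diffs = np.diff(rr)
    return {
        "mean_hr_bpm": 60000.0 / rr.mean(),
        "sdnn_ms": rr.std(ddof=1),                 # SD of NN intervals
        "rmssd_ms": np.sqrt(np.mean(diffs ** 2)),  # RMS of successive differences
        "pnn50_pct": 100.0 * np.mean(np.abs(diffs) > 50),
    }


# Hypothetical RR series in ms; the 1400 ms value mimics a missed beat.
rr = [800, 810, 790, 805, 1400, 795, 820, 780, 800]
metrics = time_domain_hrv(correct_rr(rr))
print({k: round(float(v), 2) for k, v in metrics.items()})
```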

3.1.3. Electrodermal Activity (EDA)

Electrodermal Activity (EDA), which reflects autonomic nervous system activity, measures changes in skin conductance caused by sweat gland activity [43,44,45,46]. EDA is typically measured with non-invasive sensors such as fingertip sensors or wristbands (Figure 4) [30]. As with other signals, artifacts arising from motion, temperature fluctuations, and sensor noise can degrade EDA signal quality. Common preprocessing approaches include down-sampling, smoothing, baseline correction, and artifact removal using methods such as median filtering or adaptive thresholding. Signal decomposition is often applied to separate the tonic (skin conductance level, SCL) and phasic (skin conductance response, SCR) components, which correspond to slow baseline changes and rapid event-related responses, respectively [47]. Features can be extracted from EDA in multiple domains. Time-domain features include the mean and variance of the SCL, as well as the amplitude, latency, and frequency of SCR peaks. Frequency-domain features, obtained through Fourier or wavelet analysis, characterize oscillatory patterns associated with autonomic activity. The resulting features can then be used for effective stress and anxiety monitoring [48,49,50].

Figure 4.

Examples of wearable Electrodermal Activity (EDA) devices include commercial products such as the Iamjoy® Smart Health Wristband and Paetae® Smart Ring. Source: author’s own work.
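A deliberately simple version of the tonic/phasic decomposition and time-domain EDA features described above is sketched below with SciPy. It estimates the tonic level with a zero-phase low-pass filter and treats the residual as the phasic component; this is a crude stand-in for the model-based decomposition methods used in many studies. The 0.05 Hz cutoff, the 0.05 µS peak threshold, and the synthetic signal (Gaussian bumps standing in for SCRs, sampled at 4 Hz) are all assumptions.

```python
import numpy as np
from scipy.signal import butter, filtfilt, find_peaks


def decompose_eda(eda_us, fs, cutoff_hz=0.05):
    """Crude tonic/phasic split of skin conductance (µS).

    Tonic (SCL) = zero-phase 2nd-order Butterworth low-pass; phasic (SCR) = residual.
    The cutoff is an illustrative assumption, not a standard value.
    """
    b, a = butter(2, cutoff_hz, btype="low", fs=fs)
    tonic = filtfilt(b, a, eda_us)
    return tonic, eda_us - tonic


def eda_features(eda_us, fs, min_amp_us=0.05):
    """Time-domain SCL/SCR features; SCR peaks must exceed `min_amp_us` and be >= 1 s apart."""
    tonic, phasic = decompose_eda(eda_us, fs)
    peaks, props = find_peaks(phasic, height=min_amp_us, distance=int(fs))
    minutes = eda_us.size / fs / 60
    return {
        "scl_mean": float(tonic.mean()),
        "scl_std": float(tonic.std()),
        "scr_count": int(peaks.size),
        "scr_per_min": peaks.size / minutes,
        "scr_mean_amp": float(props["peak_heights"].mean()) if peaks.size else 0.0,
    }


# Synthetic 2-min recording at 4 Hz: slow drift plus three SCR-like bumps.
fs = 4
t = np.arange(0, 120, 1 / fs)
eda = 2.0 + 0.002 * t
for onset in (30, 60, 90):
    eda += 0.3 * np.exp(-((t - onset) ** 2) / (2 * 1.5 ** 2))
feats = eda_features(eda, fs)
print(feats)
```

Real SCRs rise quickly and decay slowly, so amplitude here is measured on the residual rather than from response onset to peak; dedicated EDA toolboxes handle this more carefully.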

3.1.4. Eye Movement

Eye movement refers to the motion or activity of the eyes. Analyzing fixations, saccades, and variations in pupil diameter makes it possible to distinguish visual attention and cognitive processes [50]. Eye-tracking devices non-invasively record high-resolution gaze position, pupil diameter, and blink events [51,52,53,54,55,56,57]. Preprocessing is necessary to reduce noise from head movements and blinks. The pipeline includes filtering, blink detection and interpolation, coordinate normalization, and calibration correction, which help ensure consistency across trials and sessions. For gaze position, smoothing or velocity thresholding is often applied to identify saccades and fixations accurately [58]. Eye movement features can likewise be grouped into spatial and temporal features. Spatial features such as fixation duration, dispersion, and saccade velocity reflect visual attention and search strategies, whereas temporal features such as blink rate, pupil dilation dynamics, and inter-saccadic intervals can relate to cognitive load or emotional arousal [59,60]. With appropriate preprocessing and feature extraction, eye movement data can be used to predict subjects’ anxiety and stress states.
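Velocity thresholding, mentioned above for separating saccades from fixations, can be sketched as the classic I-VT (velocity-threshold identification) rule. The sketch assumes gaze coordinates have already been converted to degrees of visual angle and cleaned of blinks; the 30 deg/s threshold is a commonly used default adopted here as an assumption, and the synthetic trace (two fixations joined by one saccade, sampled at 100 Hz) is for illustration only.

```python
import numpy as np


def ivt_classify(x_deg, y_deg, fs, velocity_threshold=30.0):
    """Velocity-threshold (I-VT) labelling of gaze samples.

    x_deg, y_deg: gaze position in degrees of visual angle; fs: sampling rate (Hz).
    Samples whose speed exceeds the threshold (deg/s, an assumed default) are saccades.
    """
    vx = np.gradient(np.asarray(x_deg, dtype=float)) * fs
    vy = np.gradient(np.asarray(y_deg, dtype=float)) * fs
    speed = np.hypot(vx, vy)
    labels = np.where(speed > velocity_threshold, "saccade", "fixation")
    return labels, speed


def fixation_durations(labels, fs):
    """Durations (s) of consecutive runs of fixation samples."""
    durations, run = [], 0
    for lab in labels:
        if lab == "fixation":
            run += 1
        elif run:
            durations.append(run / fs)
            run = 0
    if run:
        durations.append(run / fs)
    return durations


# Synthetic trace: fixate at 0 deg, a 10-deg horizontal saccade, fixate at 10 deg.
fs = 100
x = np.concatenate([np.zeros(50), np.array([2.0, 4.0, 6.0, 8.0]), np.full(50, 10.0)])
y = np.zeros_like(x)
labels, speed = ivt_classify(x, y, fs)
print("saccade samples:", int((labels == "saccade").sum()))
print("fixation durations (s):", fixation_durations(labels, fs))
```

Features such as mean fixation duration, saccade count, and peak saccade velocity follow directly from these labels and speeds.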

3.1.5. Other Physiological Signals

Beyond EEG and EDA, respiratory rate [24], blood pressure [59], and blood oxygen levels [60] are crucial physiological indicators of stress and anxiety. Rapid and shallow breathing patterns often accompany heightened anxiety [30]. Blood pressure fluctuations reflect stress responses, with elevated levels indicating increased anxiety and potential health risks [61]. Decreased blood oxygen saturation may signal respiratory distress or heightened anxiety. These physiological signals, in conjunction with EEG and EDA, provide a comprehensive understanding of the body’s stress response.

3.2. Machine Learning Methods in Stress and Anxiety

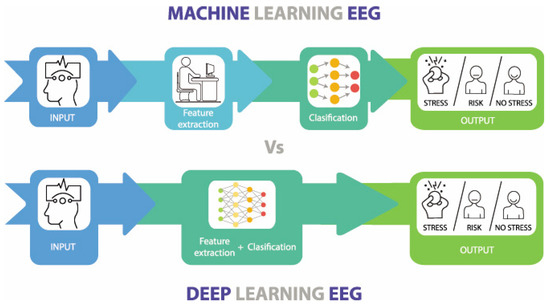

At the heart of the modern AI revolution lie two main approaches to machine learning: traditional ML and DL models (Figure 5). Because of their strong performance in feature extraction and classification, conventional ML and DL methods have been widely applied to assessing and predicting stress and anxiety. A clear understanding of these methodologies is essential for enhancing predictive modeling and improving mental health interventions. Although both aim to enable computers to learn from data, they differ significantly in execution and applications. ML techniques excel at handling complex, high-dimensional data and are particularly suited to feature extraction and classification tasks in physiological signal analysis [62]. This section details commonly used ML and DL methods for anxiety and stress evaluation and explains the differences and relationship between them.

Figure 5.

The main differences between Machine Learning and Deep Learning. Source: author’s own work.

3.2.1. ML Approaches for Emotional Stress and Anxiety Analysis Using Physiological Signals

Traditional ML treats feature extraction and classification as separate steps, with features typically engineered manually. Traditional ML methods are extensively applied in the analysis of physiological signals [63]; they include, but are not limited to, Support Vector Machines (SVMs), Naive Bayes, and Multilayer Perceptrons (MLPs). SVM is a supervised learning technique that classifies data by finding the optimal separating hyperplane. It performs well with high-dimensional data and is suitable for classifying physiological signals such as HRV and skin conductance responses (SCRs) [64]. Naive Bayes is based on Bayes’ theorem and assumes independence among features. Although this assumption may not hold in practice, Naive Bayes still performs well in physiological signal classification, especially with smaller datasets [65]. An MLP is a feedforward neural network with input, hidden, and output layers that is trained with the backpropagation algorithm to capture non-linear relationships in physiological signals [66].

Beyond SVM, Naive Bayes, and MLP, we also consider classic tabular-learning baselines and tree ensembles that are well suited to handcrafted physiological features (e.g., HRV and EDA statistics). K-Nearest Neighbor (KNN) is a nonparametric reference that classifies samples by their local neighborhood; it is simple and effective when features are well scaled. Logistic Regression provides a linear, interpretable baseline with probabilistic decision scores and is often competitive when signal-derived features are informative. Decision Trees capture non-linear interactions in a transparent, rule-based form but are prone to overfitting without pruning. Random Forests reduce this variance by ensembling many trees, yielding robust performance across heterogeneous feature sets. Gradient boosting implementations (LightGBM, XGBoost, CatBoost) further improve accuracy on tabular data by sequentially correcting residual errors; they handle non-linearities and feature interactions with built-in regularization, at the cost of careful hyperparameter tuning and higher computational demand. Together with SVM and MLP, these models span linear, instance-based, and ensemble methods, enabling fair comparisons of decision-boundary complexity, interpretability, and robustness for stress and anxiety detection from physiological signals.

Predicting anxiety and stress from physiological signals with ML involves several key steps. Data preprocessing ensures data quality by removing noise and normalizing values. Data augmentation adds diversity to the dataset and helps prevent overfitting. Feature selection and dimensionality reduction identify or compress relevant attributes using techniques such as mutual information (MI) ranking and principal component analysis (PCA). Traditional algorithms, such as SVM and Naive Bayes, are then trained to classify and predict [67]. Hyperparameter tuning refines model performance through methods such as grid search. Finally, model evaluation uses metrics such as accuracy and confusion matrices to assess prediction reliability.
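The ML workflow described above maps naturally onto a scikit-learn `Pipeline`. The sketch below, on synthetic data standing in for handcrafted HRV/EDA features, chains standardization, mutual-information feature selection, and an RBF-kernel SVM, tunes them with grid search, and reports accuracy and a confusion matrix on a held-out split. The dataset, parameter grid, and split are assumptions; data augmentation is omitted for brevity.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, mutual_info_classif
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for handcrafted HRV/EDA features (label 1 = "stress").
X, y = make_classification(n_samples=300, n_features=20, n_informative=5, random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42)

# Scaling, MI-based feature selection, and the classifier share one pipeline,
# so every step is re-fitted inside each cross-validation fold.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectKBest(score_func=mutual_info_classif)),
    ("clf", SVC(kernel="rbf")),
])
param_grid = {"select__k": [5, 10], "clf__C": [0.1, 1, 10], "clf__gamma": ["scale", 0.01]}
search = GridSearchCV(pipe, param_grid, cv=5, scoring="accuracy")
search.fit(X_train, y_train)

y_pred = search.predict(X_test)
print("best params:", search.best_params_)
print("test accuracy:", round(accuracy_score(y_test, y_pred), 3))
print(confusion_matrix(y_test, y_pred))
```

Keeping scaling and feature selection inside the pipeline means they are fitted only on training folds during the grid search, avoiding the optimistic bias that arises when they are fitted on the full dataset first.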

3.2.2. Fusion Strategies of Multimodal Physiological Signals

In some studies, multimodal physiological signals are used. The fusion strategies can be broadly categorized into early fusion, intermediate fusion, and late fusion.

Early fusion, also known as feature-level fusion, concatenates features from different modalities after feature extraction and selection; it is the most widely used approach in machine learning [57,68,69,70,71,72,73]. Its main advantages are simplicity and the ability to directly exploit complementary information across modalities, which makes it particularly suitable for traditional shallow machine learning models with limited representational capacity. However, early fusion is sensitive to differences in feature scale and dimensionality across modalities and can introduce noise when modalities are heterogeneous. Moreover, the model cannot dynamically learn complex cross-modal relationships, so performance may degrade if some modalities are missing or corrupted. Intermediate and late fusion are less common in traditional machine learning. Intermediate fusion, which integrates modality-specific representations at hidden layers, and late fusion, which combines decisions from individual modalities, offer greater flexibility and robustness but are more complex to implement and typically require deeper models or larger datasets. As a result, both strategies are of limited use in shallow, resource-constrained machine learning settings. Each fusion strategy has its own advantages and limitations, and performance may vary across datasets; the choice of strategy should therefore be guided by the specific characteristics and requirements of the task.
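The contrast between early and late fusion can be illustrated with a small scikit-learn sketch on synthetic data whose 16 features are arbitrarily split into two hypothetical modalities (labelled ECG and EDA only for readability). Early fusion concatenates both feature sets for one classifier; late fusion trains one classifier per modality and averages their predicted probabilities, which is only one of several possible decision rules. Standardizing features inside each pipeline addresses the scale differences noted above. The data, split, and classifier are assumptions.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic features split into two hypothetical "modalities".
X, y = make_classification(n_samples=400, n_features=16, n_informative=6, random_state=1)
X_ecg, X_eda = X[:, :8], X[:, 8:]
idx_train, idx_test = train_test_split(
    np.arange(len(y)), test_size=0.3, stratify=y, random_state=1)


def fit(X_mod):
    """Standardize, then fit logistic regression on the training rows only."""
    model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
    return model.fit(X_mod[idx_train], y[idx_train])


# Early (feature-level) fusion: concatenate modality features, train one model.
X_early = np.hstack([X_ecg, X_eda])
early_acc = fit(X_early).score(X_early[idx_test], y[idx_test])

# Late (decision-level) fusion: one model per modality, average their probabilities.
modalities = (X_ecg, X_eda)
models = [fit(Xm) for Xm in modalities]
proba = np.mean([m.predict_proba(Xm[idx_test])[:, 1]
                 for m, Xm in zip(models, modalities)], axis=0)
late_acc = np.mean((proba >= 0.5).astype(int) == y[idx_test])
print(f"early fusion: {early_acc:.3f}  late fusion: {late_acc:.3f}")
```

Late fusion degrades more gracefully when one modality is unavailable at test time, since the remaining per-modality model can still produce a prediction.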

3.2.3. ML Performance in Analyzing Physiological Signals

Machine learning models have shown promising performance in predicting stress and anxiety from physiological signals and are widely used by researchers. Table 2 summarizes recent studies that applied traditional machine learning methods to stress detection, reporting the accuracy of the best-performing model in each study. Because different studies used different signals and datasets, these accuracy values are not directly comparable, but they still offer useful reference points. As shown in Table 2, traditional machine learning methods have performed well in predicting stress and anxiety from physiological signals: despite variations in signals, models, and datasets, most models achieved an accuracy of over 75%. Notably, KNN models exceeded 94% accuracy when classifying stress from ECG and EDA signals [67,74], highlighting the potential of machine learning in this area of research. Ensemble learning methods, which combine multiple models for classification, also performed strongly in predicting stress and anxiety from physiological signals [64,75]. However, although machine learning models clearly distinguished between ‘stress’ and ‘relaxation’ states using HRV signals, they struggled to differentiate between ‘stress’ and ‘neutral’ states [42]. This underscores that, despite their significant potential, these models require further refinement.

Table 2.

Recent studies on stress detection using ML.

A comprehensive analysis of Table 2 indicates that model efficacy is closely coupled with the types of physiological signals used and their specific research contexts, and cannot be captured by isolated accuracy figures alone. EEG signals, which reflect central nervous system activity [66,75], perform outstandingly in well-controlled experimental settings owing to their high temporal resolution and capacity to characterize neural mechanisms. For instance, Ref. [75] achieved over 97% accuracy using a large clinical sample, highlighting EEG’s strong potential for fine-grained emotion discrimination. However, such approaches generally rely on specialized equipment and constrained environments, which limit their ecological validity and practical scalability. In contrast, signals derived from the peripheral nervous system, such as ECG, EDA, and PPG, offer stronger real-world applicability because they are compatible with wearable devices, as exemplified by the Empatica E4 used in Ref. [45]. Studies such as Refs. [67,68] have shown that even with small samples (N = 18 and N = 10, respectively), fusing ECG and EDA can achieve around 94% accuracy. Such high performance, however, may be limited to distinguishing between extreme states. Ref. [42] highlighted a fundamental challenge common to all signal types: although HRV-based models (derived from ECG/PPG) effectively differentiated between “stress” and “relaxation” states (Random Forest, 89.2%), their accuracy dropped to approximately 60% for more nuanced distinctions such as “stress” versus “neutral” states. This suggests that current models may be overfitting to prominent physiological responses in specific experimental scenarios rather than capturing the more complex and subtle patterns of stress encountered in real-world environments.
In terms of model selection, ensemble learning methods (such as LightGBM and XGBoost [69] and Random Forest in Ref. [42]) performed strongly and consistently across different signals and datasets, making them a robust default choice. Moreover, the comparable results of Logistic Regression (76.4%) and Random Forest (76.5%) in Ref. [45] indicate that, in some applications, feature engineering and signal quality may be as critical as model selection. Future research must move beyond a singular focus on accuracy, adopt more rigorous cross-subject validation protocols, and improve the decoding of subtle and continuous mental states in naturalistic settings.
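The difference between window-level and cross-subject validation can be illustrated with scikit-learn's group-aware splitters. The data below are synthetic and assumed: each hypothetical subject has a random physiological offset, mimicking inter-individual variability. Leave-one-subject-out (LOSO) validation guarantees that every test fold contains only a subject the model has never seen, which is the setting relevant to deployment on new users.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import LeaveOneGroupOut, StratifiedKFold, cross_val_score

rng = np.random.default_rng(2)
n_subjects, windows_per_subject = 10, 40
subject = np.repeat(np.arange(n_subjects), windows_per_subject)      # subject ID per window
y = np.tile(np.repeat([0, 1], windows_per_subject // 2), n_subjects)  # half rest, half stress
# Subject-specific offsets mimic inter-individual physiological variability (assumption).
offsets = rng.normal(scale=2.0, size=(n_subjects, 5))
X = offsets[subject] + rng.normal(size=(len(y), 5)) + 0.5 * y[:, None]

clf = RandomForestClassifier(n_estimators=100, random_state=0)
# Window-level CV: windows from the same subject can land in both training and test folds.
within = cross_val_score(clf, X, y, cv=StratifiedKFold(5, shuffle=True, random_state=0))
# Leave-one-subject-out CV: each test fold contains exactly one unseen subject.
loso = cross_val_score(clf, X, y, groups=subject, cv=LeaveOneGroupOut())
print(f"window-level CV: {within.mean():.2f}  LOSO: {loso.mean():.2f}")
```

Reporting both figures, together with the per-subject spread of the LOSO scores, makes it visible how much of a reported accuracy depends on having seen the test subject during training.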

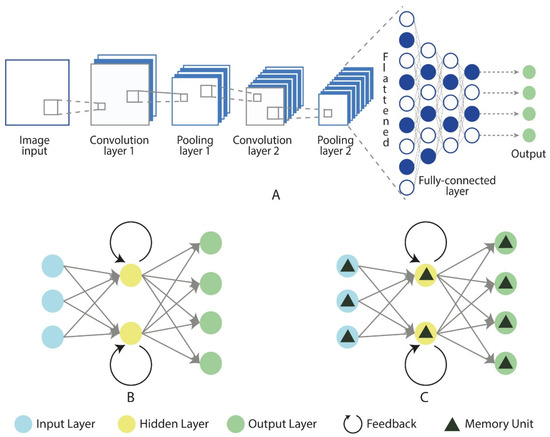

3.3. DL Approaches for Emotional Stress and Anxiety Analysis Using Physiological Signals

Deep learning, a branch of machine learning, simplifies feature extraction and classification by learning both within multilayer neural networks, often improving efficiency and accuracy (Figure 6) [62]. In recent years, deep learning methods have achieved considerable success in analyzing physiological signals such as EEG and ECG. Common architectures include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Long Short-Term Memory (LSTM) networks [77]. CNNs extract spatial features through convolutional and pooling layers, reducing the need for manual feature engineering (Figure 6A). Through their stacked layers and local receptive fields, CNNs can also capture temporal features to some extent, which makes them suitable for time-series data. However, CNNs are limited in capturing complex long-term dependencies. To address this, RNNs (Figure 6B) use recurrent connections to retain temporal information, making them well suited to time-series analysis [78].
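To make the convolution and pooling operations concrete, the following NumPy sketch applies one 1D convolutional filter, a ReLU, and max pooling to a toy signal. The kernel is hand-set here for illustration; in a CNN these weights are learned, and real layers use many filters and channels.

```python
import numpy as np

def conv1d(x, kernel, bias=0.0):
    """'Valid' 1D cross-correlation, which is what deep-learning convolution layers compute."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)]) + bias

def max_pool1d(x, size):
    """Non-overlapping max pooling; trailing samples that do not fill a window are dropped."""
    n = len(x) // size
    return x[: n * size].reshape(n, size).max(axis=1)

signal = np.array([0., 0., 1., 3., 1., 0., 0., 2., 0., 0.])
edge_kernel = np.array([-1., 0., 1.])                        # hypothetical rising-edge detector
feature_map = np.maximum(conv1d(signal, edge_kernel), 0.0)   # ReLU activation
pooled = max_pool1d(feature_map, 2)
print(pooled)  # → [3. 0. 2. 0.]
```

The local receptive field (three samples here) is why a single convolutional layer sees only short-range temporal context; stacking layers widens it, but long-range dependencies remain harder to capture, which motivates recurrent architectures.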

Figure 6.

Fundamental architectures of neural networks: CNN (A), RNN (B), and LSTM (C). Source: author’s own work.

However, RNNs suffer from the vanishing gradient problem when processing long sequences. LSTM networks (Figure 6C), an improved RNN architecture, were specifically designed to overcome this issue. LSTMs introduce memory cells and gating mechanisms that allow them to capture and maintain long-term dependencies, making them better suited to long time series [79]. When deep learning is applied to stress and anxiety prediction, physiological signal data are first preprocessed to ensure high-quality input. In some cases, data augmentation is used to expand the dataset, improving the model’s generalization and reducing the risk of overfitting. CNNs capture spatial and, to some extent, temporal features, while RNNs and LSTMs focus on complex temporal patterns. Together, these three architectures can automatically learn meaningful features from the data for prediction, and they form the basis of most existing deep learning models.
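The gating mechanism can be written out as a single LSTM time step in NumPy. This is a minimal sketch of the standard LSTM cell equations; the gate ordering (input, forget, candidate, output) and the random small weights are assumptions for illustration, and deep-learning libraries may order or parameterize the gates differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. W: (4H, D), U: (4H, H), b: (4H,); gate order i, f, g, o (assumed)."""
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:H])            # input gate: how much new information to write
    f = sigmoid(z[H:2 * H])        # forget gate: how much of the old cell state to keep
    g = np.tanh(z[2 * H:3 * H])    # candidate cell update
    o = sigmoid(z[3 * H:4 * H])    # output gate: how much of the cell state to expose
    c_t = f * c_prev + i * g       # additive cell update eases gradient flow over long sequences
    h_t = o * np.tanh(c_t)
    return h_t, c_t

rng = np.random.default_rng(0)
D, H, T = 3, 4, 50   # features per time step, hidden units, sequence length (illustrative)
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):   # run the cell over a random multichannel sequence
    h, c = lstm_step(x_t, h, c, W, U, b)
```

The key design choice is the additive update of `c_t`: when the forget gate stays close to 1, information and gradients can pass through many time steps without being repeatedly squashed, unlike the purely multiplicative recurrence of a plain RNN.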

Deep learning methods have shown promising results in predicting stress and anxiety using physiological signals, and they are increasingly adopted by researchers. Table 3 summarizes recent studies applying deep learning techniques for stress detection, listing the final classification accuracy. In the “Method” column, the model names are either the ones given by the original authors or, if not provided, the primary architecture of the model is used as the name. The “Structure” column indicates the key architectures used in the models.

As Table 3 shows, deep learning methods perform well in detecting anxiety and stress from physiological signals: despite variations in datasets, models, and signals, most models exceed 80% accuracy. Notably, a DNN achieved 95.51% accuracy in EEG-based detection of generalized anxiety disorder (GAD) [80], underscoring the ability of DNNs to capture complex patterns in large datasets, which is crucial for precise stress and anxiety evaluation. CNNs have also played a significant role, particularly in spatial analysis such as EEG signal processing. The LConvNet model, a hybrid CNN-LSTM, reached 97% accuracy, highlighting the effectiveness of handling spatial and temporal features jointly [81]. Similarly, the MuLHiTA and BLSTM models achieved 91.8% and 84.13%, respectively, further supporting the strength of LSTM-based models on sequential data [61]. Beyond EEG, CNN models for ECG-based stress detection also showed promising results, with 93.74% for a CNN [82] and 83.55% for a 1D-CNN [64]. The CNN-TLSTM hybrid, which combines EEG and pulse rate data, achieved 97.86% accuracy, suggesting that incorporating multiple physiological signals can enhance performance [69]. Although the deep learning models in Table 3 generally report high accuracy, a tiered evaluation based on dataset scale, quality, and model generalizability reveals substantial differences in their practical value. Studies using large, diverse samples with detailed acquisition information (e.g., Ref. [80], with 81 subjects and over 18,000 samples) offer higher reliability and potential generalizability and should be regarded as high-quality evidence.
In contrast, studies relying on small samples (e.g., Ref. [69], with only 19 subjects) or insufficiently described datasets may suffer from overfitting and limited generalizability despite reporting high performance, and their results should be interpreted cautiously. In terms of model selection, the suitability of an architecture depends strongly on data scale and task requirements. For low-data environments or scenarios requiring practical deployment, lightweight architectures such as EEGNet (84.66% in [61]) and 1D-CNN (83.55% in [64]) are more practical and reliable choices owing to their parameter efficiency and training stability. Conversely, although large hybrid models (e.g., CNN-LSTM and CNN-TLSTM) can achieve peak performance with sufficient data (97.86% in [69]), their large parameter counts and complex structures make them highly prone to overfitting in limited-data settings, constituting “over-engineering” that should be avoided without justification. Furthermore, although LSTM-based architectures outperform CNNs in modeling long-range dependencies, they still suffer from low training efficiency and difficulty capturing complex relationships in ultra-long sequences. Moreover, the field as a whole has not yet fully incorporated more advanced global modeling mechanisms, such as Transformers. Additionally, a fundamental methodological challenge in current research paradigms lies in the conflation of experimental design and model learning objectives. Many studies (including some in Table 2) use “task-induced states vs. resting states” as a proxy for “stress/anxiety vs. relaxation.” This approach carries an inherent risk: models may learn not the physiological fingerprints specific to stress or anxiety, but rather general physiological differences between task performance and rest (e.g., changes due to cognitive load, muscular activity, or sensory input).
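The gap in parameter counts between lightweight and hybrid architectures can be checked with standard layer formulas. The sketch below compares two hypothetical configurations (they are not EEGNet or any model from Table 3) for 14 input channels, matching the 14-electrode STEW recordings listed in Table 3. The LSTM formula assumes one bias vector per gate, as in Keras; PyTorch's `nn.LSTM` keeps two bias vectors and would add another 4 × hidden parameters.

```python
def conv1d_params(in_ch, out_ch, kernel):
    """Weights of every filter across all input channels, plus one bias per filter."""
    return in_ch * out_ch * kernel + out_ch

def lstm_params(input_dim, hidden):
    """Four gates, each with input weights, recurrent weights, and one bias vector."""
    return 4 * (hidden * (input_dim + hidden) + hidden)

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

# Hypothetical lightweight model: one conv layer (16 filters) -> global pooling -> 2-class output.
lightweight = conv1d_params(14, 16, 64) + dense_params(16, 2)
# Hypothetical hybrid model: conv layer (64 filters) -> LSTM (128 units) -> 2-class output.
hybrid = conv1d_params(14, 64, 64) + lstm_params(64, 128) + dense_params(128, 2)
print(lightweight, hybrid, round(hybrid / lightweight, 1))
```

Here the hybrid network has about ten times as many trainable parameters (156,482 vs. 14,386), while a dataset such as the 19-subject one in Ref. [69] offers comparatively few independent examples, which illustrates why small-sample results for large hybrids deserve caution.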
Therefore, the high accuracy reported by many models may reflect the model’s ability to distinguish behavioral states rather than specific affective states. Future studies should adopt more nuanced experimental designs—such as employing multiple distinct stress-induction tasks, including neutral task controls, and utilizing ecological momentary assessment—coupled with explainability techniques to ensure that models capture core, generalizable features of stress across contexts rather than artifacts of specific experimental paradigms.

Table 3.

Recent studies on stress detection using DL.

| Research | Signal | Method | Accuracy (%) | Structure | Dataset Details |
|---|---|---|---|---|---|
| [69] (2023) | EEG, pulse rate | CNN-TLSTM | 97.86 | CNN, LSTM | 19 subjects (15 M/4 F, 31 ± 14 years), 8 electrodes, 250 Hz sampling rate. Each participant yields 20 × 90 s EEG measurements, generating a 30 min EEG record per user. |
| [80] (2022) | EEG | DNN | 95.51 | - | 45 GAD patients (41.8 ± 9.4 years, 13 M/32 F); 36 HC (36.9 ± 11.3 years, 11 M/25 F). 16-channel EEG, 250 Hz. 10,273 GAD and 7773 HC samples. |
| [70] (2024) | EEG | GRU | 94.4 | LSTM | 24 healthy subjects; EEG acquired via ECL electrodes, filtered with a 4–400 Hz band-pass filter at a 256 Hz sampling rate. |
| [82] (2020) | ECG | CNN | 93.74 | CNN | - |
| [61] (2022) | EEG | MuLHiTA | 91.8 | LSTM | |
| [61] (2022) | EEG | CNN-LSTM | 87.57 | CNN, LSTM | |
| [83] (2019) | EEG | CNN | 87.5 | CNN | - |
| [61] (2022) | EEG | EEGNet | 84.66 | CNN | MIST: 20 males, 22.54 ± 1.53 years, 500 Hz, 24 electrodes. STEW: 45 males, 18–24 years, 128 Hz, 14 electrodes. DMAT: 25 subjects (20 F/5 M), 18.60 ± 0.87 years, 500 Hz, 23 electrodes. |
| [61] (2022) | EEG | BLSTM | 84.13 | LSTM | |
| [64] (2021) | ECG | 1D-CNN | 83.55 | CNN | |
| [64] (2021) | ECG | VGG | 83.09 | CNN | DriveDB: multiparameter recordings during driving; ECG, QRS, GSR. Arachnophobia: 80 spider-fearful individuals (18–40 years); 100 Hz, 10-bit resolution; ECG, GSR, respiration. |
| [81] (2023) | EEG | LConvNet | 97 | CNN, LSTM | TUEP v2.0.0: 49 epilepsy, 49 healthy sessions; ≥25 channels. |
| [81] (2023) | EEG | DeepConvNet | 96 | CNN | |
| [83] (2019) | HRV, EDA, EEG | CNN | 90 | CNN | - |
| [83] (2019) | HRV, EDA | CNN | 84 | CNN | - |
| [81] (2023) | EEG | ShallowConvNet | 78 | CNN | |

3.4. Software and Hardware Platforms

We systematically extracted and summarized information on the software and hardware platforms used to implement the machine learning models wherever such information was available. The most commonly reported software environments were MATLAB (version 2024b) and Python 3.11.3 (with libraries such as scikit-learn, TensorFlow, Keras, and PyTorch). Hardware descriptions were infrequently provided; when specified, implementations typically ran on standard desktop workstations or laptops, and a minority explicitly reported GPU acceleration (e.g., NVIDIA GPUs) for deep learning workloads.

4. Discussion

In this review, we synthesize modality-spanning evidence via a PRISMA workflow and map pitfalls onto the end-to-end ML pipeline, yielding concrete guidance for model selection and deployment. We explicitly link demographic, intersectional, and privacy issues to technical decisions (preprocessing, feature learning, evaluation), and we distill comparative observations (including the advantages of multimodal fusion, the persistent difficulty of separating stress from neutral states, and the limits of small-sample generalization) into testable recommendations for future work.

4.1. Comparison of the Advantages and Disadvantages of Different Models and Usage Scenarios

Recent advances in deep learning have substantially improved data collection for stress detection and prediction. These models enable remote quantification of stress through mobile device cameras and non-contact imaging techniques, reducing the need for additional hardware and lowering system complexity, which provides a scalable and efficient solution for cognitive stress assessment. Particularly noteworthy is the feasibility of real-time monitoring with low-cost wearable devices, which enhances the practicality of these methods. The integration of non-invasive techniques, such as virtual reality simulations and single-channel EEG for stress classification, further highlights the convenience and ecological validity of these approaches [84]. Despite these advances, applying deep learning models to stress detection is not without challenges. A critical issue is the limited dataset size in many studies, which often involve only 12 to 45 participants [85]. Such small datasets can impair the generalizability and robustness of the models, as they may not adequately represent diverse populations or real-world conditions. Moreover, experimental design is pivotal in determining the effectiveness of stress detection models. Many studies are conducted in controlled environments, which ensure precision but do not fully replicate the complexity of real-life situations. This discrepancy may diminish the ecological validity of the models [86,87], as they are less likely to capture the variability and unpredictability of stress responses encountered in everyday life. Data preprocessing is another area where deep learning models exhibit both strengths and weaknesses. Advanced processing pipelines, such as frequency-domain feature extraction combined with multilayer perceptron classifiers, can significantly improve classification accuracy [88].
However, reproducibility is often hampered by insufficient descriptions of preprocessing methods, signal acquisition processes, and the equipment used. The quality of physiological data, such as PPG and EEG signals, is frequently compromised by artifacts, motion noise, and limited signal clarity, which can adversely affect model accuracy and interpretability [87,89]. The complexity of high-performance models, such as CNN-TLSTM with attention mechanisms and XGBoost, is also a double-edged sword. While these models have achieved exceptional classification accuracies (CNN-TLSTM averaging 98.83% and XGBoost reaching up to 99.2% in stress detection [87,90]), their computational demands may limit practical deployment, especially in resource-constrained environments. Another significant challenge lies in the generalizability of these models. Reliance on specific datasets or on a single type of physiological signal often yields models that do not fully capture the diversity of stress responses across scenarios [84,85]. This limitation underscores the need for more comprehensive, large-scale studies. Non-invasive methods, such as remote photoplethysmography and wearable devices, offer practical solutions for continuous stress assessment and reduce reliance on expensive equipment [88,89]; nevertheless, the initial costs of deploying advanced deep learning models and acquiring high-quality physiological data can still be prohibitive in some settings. Finally, because signals such as PPG and EEG are often degraded by artifacts, motion noise, and limited clarity [84,89], data quality remains a decisive factor in model performance and a potential source of inaccurate stress assessments.
Additionally, subtle physiological changes, such as those in HRV, are difficult to interpret, which further complicates the analysis and can reduce model accuracy.
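Because subtle HRV changes are typically summarized through a handful of standard time-domain indices, a short sketch shows how they are computed. It assumes that clean RR intervals (in milliseconds) have already been extracted from ECG or PPG and that ectopic beats and artifacts have been removed; the RR values are invented for illustration.

```python
import numpy as np

def hrv_time_domain(rr_ms):
    """Standard time-domain HRV indices from artifact-free RR intervals in milliseconds."""
    rr = np.asarray(rr_ms, dtype=float)
    diff = np.diff(rr)                                        # successive RR differences
    return {
        "mean_hr_bpm": 60000.0 / rr.mean(),                   # mean heart rate
        "sdnn_ms": rr.std(ddof=1),                            # overall variability
        "rmssd_ms": np.sqrt(np.mean(diff ** 2)),              # short-term (vagally mediated) variability
        "pnn50_pct": 100.0 * np.mean(np.abs(diff) > 50.0),    # % of successive differences > 50 ms
    }

rr = [800, 810, 790, 870, 800, 805]   # hypothetical RR series
features = hrv_time_domain(rr)
print(features)
```

In this example, two of the five successive differences (80 ms and 70 ms) exceed 50 ms, so pNN50 is 40%; a single misdetected beat would shift such indices substantially, which is one reason artifact handling matters for HRV-based stress models.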

4.2. Pitfalls and Challenges

In the analysis of physiological signals associated with stress and anxiety, several challenges and limitations can compromise the effectiveness of predictive models. Ensuring high signal quality is critical, as environmental noise and artifacts can introduce distortions that affect interpretation. Proper feature preprocessing is required to extract meaningful indicators from raw data, while controlling model complexity is essential to avoid issues such as overfitting or underfitting. Addressing these challenges is vital to enhance the reliability and accuracy of the results, ultimately contributing to more effective interventions and support for individuals experiencing stress and anxiety.

4.2.1. Pitfalls

Signal quality and environmental interference are critical for accurate physiological data analysis, yet several pitfalls can compromise it. Environmental interference, such as background noise and electromagnetic disturbances, can distort signals, making it challenging to extract meaningful information [91]. Improper sensor placement further exacerbates this issue, as even slight misalignments can lead to weak or distorted signals [92].

Movement artifacts and sensor placement introduce noise, particularly in wearable devices, skewing readings during data collection [93]. Physiological variability among individuals, such as differences in EDA or HR, also complicates data interpretation and can affect overall signal quality [94]. Ensuring correct sensor placement and addressing movement-related noise are essential to maintaining signal accuracy.

Signal saturation occurs when the signal exceeds the sensor’s maximum range, resulting in the loss of critical information [95]. Regular sensor calibration is essential to mitigate such issues, as inadequate calibration can introduce systematic biases [96]; proper calibration ensures reliable data collection.
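A simple quality check for saturation is to measure how many samples sit at the sensor's range limits. The helper below is hypothetical, and the 12-bit ADC range (0 to 4095) is an assumed device specification used only for illustration.

```python
import numpy as np

def saturation_fraction(x, lower, upper, tol=0.0):
    """Fraction of samples at or beyond the sensor's range limits (hypothetical helper)."""
    x = np.asarray(x, dtype=float)
    return float(np.mean((x <= lower + tol) | (x >= upper - tol)))

# Assumed 12-bit ADC with raw range 0..4095; values pinned at a limit are likely clipped.
raw = np.array([100, 2000, 4095, 4095, 4095, 3000, 0, 1500])
frac = saturation_fraction(raw, 0, 4095)
print(frac)  # → 0.5
```

In practice such a fraction would be computed per analysis window and windows above a chosen threshold excluded or flagged; the threshold itself is a study-specific decision that should be reported.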

Data loss and connectivity issues from intermittent connections or hardware failures can create gaps in the analysis, leading to incomplete or skewed results [97]. Addressing hardware reliability and ensuring consistent data transmission can help minimize this pitfall and enhance the overall validity of the analysis.
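Gaps caused by dropped connections can be located directly from sample timestamps. This sketch assumes a stream with a nominal sampling rate and flags any interval longer than a hypothetical tolerance (1.5 sample periods) so that affected segments can be excluded or imputed explicitly rather than silently skewing the analysis.

```python
import numpy as np

def find_gaps(timestamps_s, fs_hz, tolerance=1.5):
    """Return (start, end) time pairs where consecutive samples are further apart than expected."""
    t = np.asarray(timestamps_s, dtype=float)
    dt = np.diff(t)
    idx = np.where(dt > tolerance / fs_hz)[0]   # intervals exceeding tolerance x sample period
    return [(float(t[i]), float(t[i + 1])) for i in idx]

# A 4 Hz stream with a dropout between 1.75 s and 3.0 s (simulated).
t = np.concatenate([np.arange(0, 2, 0.25), np.arange(3, 4, 0.25)])
gaps = find_gaps(t, fs_hz=4)
print(gaps)  # → [(1.75, 3.0)]
```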

Noise reduction and preprocessing are crucial steps in preparing physiological data for analysis, yet they have their own pitfalls. Insufficient noise reduction leaves artifacts and irrelevant variations in the signal, which can mislead results [98]. Appropriate filtering methods must be applied to improve signal clarity.
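As one example of appropriate filtering, a zero-phase Butterworth band-pass filter removes slow baseline drift and high-frequency interference while preserving the band of interest. The sketch below uses SciPy on a simulated EEG-like signal; the 1 to 40 Hz passband, filter order, 256 Hz sampling rate, and 50 Hz mains component are illustrative assumptions, not recommendations for any particular study.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(x, fs, low, high, order=4):
    """Zero-phase Butterworth band-pass filter (second-order sections for numerical stability)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)   # forward-backward filtering avoids phase distortion

fs = 256.0
t = np.arange(0, 4, 1 / fs)
alpha = np.sin(2 * np.pi * 10 * t)           # 10 Hz component of interest
mains = 0.8 * np.sin(2 * np.pi * 50 * t)     # 50 Hz power-line interference
drift = 2.0 * t / t[-1]                      # slow baseline drift
raw = alpha + mains + drift
filtered = bandpass(raw, fs, 1.0, 40.0)

# Compare against the clean component away from the edges, where filter transients occur.
core = slice(256, -256)
rms_error = np.sqrt(np.mean((filtered[core] - alpha[core]) ** 2))
print(f"RMS error vs. clean signal: {rms_error:.3f}")
```

A plain band-pass only attenuates the 50 Hz component rather than removing it; when interference lies close to the band of interest, a dedicated notch filter or artifact-removal method may be needed.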

Feature selection, model complexity, and overfitting/underfitting are common concerns. Overfitting can occur when too many or irrelevant features are selected, causing the model to learn noise rather than meaningful patterns and reducing generalizability to new data. Underfitting, on the other hand, can result when important features are discarded or the model is too simple to capture the relevant structure, limiting achievable performance [71]. A balanced feature selection process is therefore key to good generalization. Likewise, model complexity must be balanced against the available data for optimal performance [99,100].
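A closely related pitfall is selecting features on the full dataset before cross-validation, which lets label information leak into the test folds. The sketch below uses pure-noise features and random labels (so no real signal exists) to contrast selection done outside cross-validation with selection refit inside each training fold via a scikit-learn pipeline; the sample and feature counts are illustrative.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline

rng = np.random.default_rng(3)
X = rng.normal(size=(60, 2000))      # pure-noise "features"
y = np.repeat([0, 1], 30)            # labels unrelated to X
cv = StratifiedKFold(5, shuffle=True, random_state=0)

# Flawed: the 20 "best" features are chosen using all labels, then cross-validated.
X_sel = SelectKBest(f_classif, k=20).fit_transform(X, y)
leaky = cross_val_score(LogisticRegression(max_iter=1000), X_sel, y, cv=cv).mean()

# Correct: selection is refit inside each training fold, so test folds stay unseen.
pipe = make_pipeline(SelectKBest(f_classif, k=20), LogisticRegression(max_iter=1000))
honest = cross_val_score(pipe, X, y, cv=cv).mean()
print(f"leaky: {leaky:.2f}  honest: {honest:.2f}")
```

The leaky estimate is typically far above chance even though the data contain no information, whereas the honest estimate stays near 50%, which illustrates how many irrelevant features combined with an improper protocol can masquerade as strong performance.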

Inconsistent preprocessing techniques across datasets can create discrepancies that hinder effective comparison and analysis. Improper handling of missing data is another common pitfall; failing to address gaps appropriately can skew results and lead to inaccurate interpretations [98]. Standardizing preprocessing methods is crucial for reliable comparisons.
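One explicit missing-data policy is to interpolate only short interior gaps and leave longer gaps marked as missing. The helper and its `max_gap` threshold below are hypothetical; the appropriate threshold depends on the signal and sampling rate and should be reported so that preprocessing is reproducible across datasets.

```python
import numpy as np

def interpolate_short_gaps(x, max_gap):
    """Linearly fill interior NaN runs of at most max_gap samples; longer or edge runs stay NaN."""
    x = np.asarray(x, dtype=float).copy()
    missing = np.isnan(x)
    if missing.all() or not missing.any():
        return x
    idx = np.arange(len(x))
    filled = np.interp(idx, idx[~missing], x[~missing])   # candidate linear interpolation
    i = 0
    while i < len(x):
        if not missing[i]:
            i += 1
            continue
        j = i
        while j < len(x) and missing[j]:                  # find the end of this NaN run
            j += 1
        # Fill only short interior runs (np.interp would otherwise extrapolate at the edges).
        if j - i <= max_gap and i > 0 and j < len(x):
            x[i:j] = filled[i:j]
        i = j
    return x

sig = [1.0, np.nan, 3.0, np.nan, np.nan, np.nan, 7.0, 8.0]
out = interpolate_short_gaps(sig, max_gap=2)
print(out)  # → [ 1.  2.  3. nan nan nan  7.  8.]
```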

Scaling, normalization, and bias can significantly affect the analysis. Inconsistent application of scaling and normalization can introduce bias, allowing features with vastly different scales to dominate the analysis [100]. Ensuring consistent scaling and normalization reduces the risk of bias.
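The effect of inconsistent scaling is easy to see with distance-based models such as KNN. In this sketch, two hypothetical features on different scales (heart rate in bpm and skin conductance in µS) determine which training window is nearest to a test window. Without scaling, heart rate dominates the distance; after standardization, fitted on the training data only to avoid leakage, both features contribute.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical training windows: [heart rate (bpm), skin conductance (µS)].
X_train = np.array([[78.0, 2.0], [65.0, 8.0], [60.0, 2.2], [90.0, 7.8]])
X_test = np.array([[75.0, 7.9]])

raw_dist = np.linalg.norm(X_train - X_test, axis=1)
scaler = StandardScaler().fit(X_train)     # statistics come from training data only
scaled_dist = np.linalg.norm(scaler.transform(X_train) - scaler.transform(X_test), axis=1)

print(np.argmin(raw_dist), np.argmin(scaled_dist))  # → 0 1
```

Unscaled, the nearest neighbour is the window with a similar heart rate but a very different skin conductance; after scaling, it is the window that matches on skin conductance, showing how the choice of scaling alone can change a model's decisions.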

Hyperparameter tuning and resource constraints also pose difficulties. Complex models require more resources, including processing power and time, which can be barriers in real-time applications or when handling large datasets [99]. Moreover, complex models may be harder to interpret, complicating the understanding of predictions, which can hinder trust and usability. Hyperparameter tuning becomes more complicated with increased model complexity, as larger models have more parameters to optimize, leading to longer training times and a higher risk of suboptimal configurations [101].
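The growth of tuning cost with model complexity can be quantified by counting grid configurations. The grid below is illustrative (the keys resemble gradient-boosting hyperparameters, but `ParameterGrid` only enumerates combinations and does not validate them). Four hyperparameters with five values each already yield 625 configurations, or 3125 model fits under 5-fold cross-validation; `RandomizedSearchCV` with a fixed `n_iter` is one standard way to cap this budget.

```python
from sklearn.model_selection import ParameterGrid

# Illustrative search space: five candidate values for each of four hyperparameters.
grid = {
    "n_estimators": [100, 200, 400, 800, 1600],
    "max_depth": [3, 5, 7, 9, None],
    "learning_rate": [0.01, 0.03, 0.1, 0.3, 1.0],
    "subsample": [0.5, 0.6, 0.7, 0.8, 1.0],
}
n_configs = len(ParameterGrid(grid))   # exhaustive grid size grows multiplicatively
n_folds = 5
n_fits = n_configs * n_folds           # each configuration is trained once per CV fold
print(n_configs, n_fits)  # → 625 3125
```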

Signal quality, environmental interference, artifacts, and signal saturation are well-recognized pitfalls in physiological signal processing that remain difficult to eliminate entirely with current technology. Nonetheless, researchers continue to refine models and methods within these constraints, striving to develop predictive approaches that best accommodate these difficulties.

4.2.2. Challenges

The heterogeneity in study quality identified through our systematic assessment presents a fundamental challenge for evidence synthesis in this field. Our evaluation revealed significant variability in methodological rigor across the included studies, ranging from investigations with comprehensive validation procedures and transparent reporting to those with substantial methodological limitations. This variability directly impacts the interpretability of findings and complicates the drawing of robust conclusions from the literature.

Studies demonstrating more rigorous methodological approaches, including comprehensive validation strategies and detailed reporting of preprocessing procedures, often present more conservative performance estimates compared to investigations with methodological weaknesses. This pattern suggests that apparent differences in model performance across studies may partially reflect variations in methodological quality rather than genuine differences in analytical approaches or signal processing techniques. The observed heterogeneity in research quality underscores the urgent need for standardized methodological frameworks and reporting guidelines that would facilitate more meaningful comparison of results across investigations and enable more robust evidence synthesis.

Age and physiological responses present a significant challenge in mental health assessments. Age interacts with biological sex and gender identity in shaping physiological responses to stress and anxiety, owing to developmental changes, hormonal fluctuations, and varying life experiences [102]. For example, younger individuals may exhibit distinct coping mechanisms compared to older adults: adolescents might experience heightened emotional responses driven by hormonal changes, while older adults often rely on more adaptive coping strategies shaped by their life experiences [103]. This variability complicates data interpretation and the development of age-appropriate interventions, requiring a tailored approach that accounts for these differences [104].

Gender identity adds another layer of complexity to physiological research and mental health assessments. Individuals who identify outside the traditional male–female binary may face unique stressors related to societal acceptance and discrimination, which can significantly impact their physiological responses to stress and anxiety [105]. Traditional assessments often fail to capture the experiences of non-binary or transgender individuals, leading to gaps in understanding and support [106]. Researchers must develop inclusive methodologies that validate diverse gender identities. By creating tailored assessment tools, we can better understand the physiological signals associated with anxiety and stress in these populations, ultimately leading to more effective interventions [107].

Medication use among study participants was assessed. The use of medications for clinical anxiety (e.g., benzodiazepines or antidepressants) or for other chronic medical conditions (antihypertensives, antiarrhythmics, lipid-lowering agents, hypoglycaemic agents, anticoagulants, etc.) can significantly alter physiological signals related to emotional stress and anxiety, thereby compromising the validity of the analytical results [108]. It is crucial to include these parameters in data collection and to consider the possibility of recall bias or intentional omission of this information by study participants [109].

The distinction between baseline and acute anxiety, the latter characterized by autonomic symptoms (e.g., cold sweats, dizziness, tachycardia, and fluctuations in blood pressure), presents a methodological challenge in data acquisition and analysis. Because anxiety is dynamic, an individual’s anxiety level can fluctuate rapidly, transitioning between states with different physiological manifestations and complicating the interpretation of assessment results. Both baseline and acute anxiety contribute substantially to emotional stress but have distinct clinical implications. Consequently, establishing a standardized anxiety baseline for all participants is desirable but may be difficult to achieve in practice [110].
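One common way to handle differing baselines is to express each participant's task-phase measurements relative to their own resting recording. The sketch below z-scores task values against the participant's baseline mean and standard deviation; the heart-rate values are invented, and this normalization is one option among several (e.g., simple differences or percentage change).

```python
import numpy as np

def baseline_correct(task_values, baseline_values):
    """Express task-phase values relative to the participant's own resting baseline (z-score)."""
    baseline = np.asarray(baseline_values, dtype=float)
    return (np.asarray(task_values, dtype=float) - baseline.mean()) / baseline.std(ddof=1)

# Two hypothetical participants with different resting heart rates (bpm).
a = baseline_correct([70.0], baseline_values=[60.0, 62.0, 64.0])
b = baseline_correct([90.0], baseline_values=[80.0, 82.0, 84.0])
print(a, b)  # → [4.] [4.]
```

Both participants show the same relative increase despite a 20 bpm difference in absolute heart rate. The approach, however, assumes that the resting recording reflects a true baseline, which is precisely what is uncertain when baseline anxiety itself varies.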

Intersectionality and socio-economic factors also play a critical role in shaping individuals’ experiences related to biological sex and gender identity. Factors such as race, socio-economic status, and cultural background can influence access to healthcare and support systems for managing stress and anxiety [26]. Individuals from marginalized communities often face unique stressors, including systemic discrimination and economic instability, which exacerbate mental health challenges [111]. Recognizing these intersectional influences is essential for developing comprehensive strategies that support individuals across different demographics [112].

In practical applications, several challenges warrant greater attention. First, the convenience of wearing and using wearable devices remains limited, which restricts their broader adoption. For example, EEG caps typically rely on wet electrodes that require conductive gel, and prolonged electrode pressure on the scalp may cause discomfort or even dizziness. Second, achieving real-time assessment of stress or anxiety demands sufficient battery life and computational capacity. At the same time, integrating multimodal data in real time is challenging, as signals from different sensors often vary in temporal resolution and sampling rates. Moreover, users may develop resistance to long-term monitoring, particularly those already experiencing stress or anxiety [113]. They may be reluctant to remain under continuous surveillance and concerned about the risk of data leakage leading to stigma or discrimination. Collectively, these practical issues constitute key bottlenecks that hinder the translation of research outcomes into real-world applications [114,115,116].
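Integrating sensors with different sampling rates usually starts by mapping all streams onto a common time base. The sketch assumes a BVP-like stream at 64 Hz and an EDA-like stream at 4 Hz (simulated signals) and uses linear interpolation onto an 8 Hz grid. When downsampling, a low-pass or decimation step (e.g., `scipy.signal.decimate`) should normally precede interpolation to avoid aliasing; it is omitted here for brevity.

```python
import numpy as np

def to_common_grid(t, x, grid):
    """Linearly interpolate a signal sampled at times t onto a shared time grid."""
    return np.interp(grid, t, x)

# Simulated streams at assumed rates: BVP-like at 64 Hz, EDA-like at 4 Hz.
t_bvp = np.arange(0, 10, 1 / 64)
t_eda = np.arange(0, 10, 1 / 4)
bvp = np.sin(2 * np.pi * 1.2 * t_bvp)   # ~72 beats per minute pulse wave
eda = 2.0 + 0.05 * t_eda                # slowly rising skin conductance

grid = np.arange(0, 9.5, 1 / 8)         # 8 Hz grid within both streams' time span
aligned = np.column_stack([to_common_grid(t_bvp, bvp, grid),
                           to_common_grid(t_eda, eda, grid)])
print(aligned.shape)  # → (76, 2)
```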

Cross-dataset generalization remains largely under-examined. Many studies report high within-dataset accuracy on benchmarks such as WESAD or SWELL, but few evaluate models across datasets or populations. Addressing this requires standardized protocols, cross-dataset validation as a default reporting practice, and techniques such as domain adaptation or self-supervised pretraining to improve robustness. In parallel, privacy-preserving learning paradigms (e.g., federated learning, secure aggregation, differential privacy) should be explored to enable training on distributed sensitive physiological data without centralizing identifiable information. Scaling models by training on substantially larger, more diverse physiological corpora, an approach that underpins the generalization of modern foundation models, may improve out-of-distribution performance, but is currently limited by data availability and privacy constraints.
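Federated averaging (FedAvg) is one well-known federated learning scheme: each site trains locally and shares only model weights, which the server averages in proportion to each site's sample count. The NumPy sketch below runs one FedAvg round for logistic regression on three simulated clients. It is an illustration only; secure aggregation and differential privacy, also mentioned above, are not implemented here.

```python
import numpy as np

def local_update(w, X, y, lr=0.1, epochs=5):
    """A few epochs of full-batch gradient descent for logistic regression on one client."""
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w)))       # predicted probabilities
        w = w - lr * X.T @ (p - y) / len(y)       # gradient of mean log-loss
    return w

def fedavg_round(w_global, clients):
    """Clients train locally; the server averages weights, weighted by client sample count."""
    updates, sizes = [], []
    for X, y in clients:                          # raw data never leaves the client
        updates.append(local_update(w_global.copy(), X, y))
        sizes.append(len(y))
    return np.average(np.stack(updates), axis=0, weights=np.asarray(sizes, dtype=float))

rng = np.random.default_rng(0)
clients = [(rng.normal(size=(n, 3)), rng.integers(0, 2, n).astype(float)) for n in (20, 50, 30)]
w_round = fedavg_round(np.zeros(3), clients)
print(w_round)
```

Weighting by sample count keeps the aggregate equivalent in spirit to training on the pooled data, but with heterogeneous sites (different devices or populations), client drift remains a known challenge that this minimal version does not address.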

Neural rights have emerged as a critical issue with the rise of neurotechnology and brain–computer interfaces. Protecting rights related to mental privacy, personal identity, and free will is becoming increasingly important [117]. Future research must explore these rights and contribute to developing policies and frameworks that ensure individuals maintain control over their neural data [118]. Addressing these privacy and data protection issues will help establish a secure and ethical foundation for the use of machine learning and physiological signal analysis in mental health [118].

4.3. Future Opportunities

Signal quality and data processing are critical aspects to address for improving early detection of anxiety and stress. Enhancing sensor calibration and developing more robust noise reduction techniques are necessary to mitigate issues such as signal distortion. This can help ensure that the data collected are more accurate and reliable, which is essential for effective analysis. Improving preprocessing methods, such as artifact removal and signal filtering, will further refine the data, leading to better model performance and more reliable physiological data analysis.

Personalization of models plays a crucial role in improving detection accuracy. Accounting for individual physiological variability, including differences related to age, gender, and their intersections, is essential for models that assess stress and anxiety accurately across diverse populations. Personalized models can capture each individual’s characteristic physiological responses, leading to more accurate detection and better-tailored interventions [103,105].
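One lightweight form of personalization is to express each measurement relative to the same person's resting baseline before classification. The sketch below assumes that each sample is labeled with a subject ID and a flag marking resting/baseline segments. The function name is hypothetical, and this is only one of several personalization strategies; it is not necessarily the approach of [103,105].

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple


def normalize_to_baseline(
    values: Sequence[float], subjects: Sequence[str], is_baseline: Sequence[bool]
) -> List[float]:
    """Express each value as a z-score relative to its own subject's baseline.

    Baseline statistics come only from samples flagged as resting/baseline, so
    every subject must contribute at least one baseline sample.
    """
    baseline: Dict[str, List[float]] = defaultdict(list)
    for v, s, b in zip(values, subjects, is_baseline):
        if b:
            baseline[s].append(v)
    stats: Dict[str, Tuple[float, float]] = {
        s: (mean(vs), pstdev(vs)) for s, vs in baseline.items()
    }
    out = []
    for v, s in zip(values, subjects):
        mu, sd = stats[s]  # a KeyError signals a subject without baseline data
        # With zero baseline spread, fall back to mean-centring only.
        out.append((v - mu) / sd if sd > 0 else v - mu)
    return out


# Example: the same task heart rate (100 bpm) means different things for a
# subject resting near 65 bpm and one resting near 95 bpm.
hr = [60.0, 70.0, 100.0, 90.0, 100.0, 100.0]
subj = ["A", "A", "A", "B", "B", "B"]
rest = [True, True, False, True, True, False]
print(normalize_to_baseline(hr, subj, rest))  # [-1.0, 1.0, 7.0, -1.0, 1.0, 1.0]
```

The same raw reading becomes a strong deviation for subject A (7 baseline standard deviations) but a mild one for subject B (1 standard deviation). Population-level models cannot make this distinction.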

Multimodal data integration offers significant potential for enhancing the accuracy of stress and anxiety detection. By combining different physiological signals, such as EEG, HRV, and EDA, through deep learning models, researchers can build a more comprehensive picture of an individual’s mental state. Because these signals reflect different physiological systems (cortical activity, cardiac autonomic regulation, and sympathetic sweat-gland activity, respectively), a multimodal approach can capture stress and anxiety responses that a single signal might miss, leading to more precise and reliable detection [108].
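Deep multimodal models usually fuse signals at the feature or intermediate-representation level. The simplest variant to illustrate, however, is decision-level (late) fusion, where each modality has its own classifier and only their output probabilities are combined. The sketch below assumes per-modality stress probabilities are already available. The modality names and weights are hypothetical, and the sketch is not the fusion method of [108].

```python
from typing import Dict, Optional


def late_fusion(probs: Dict[str, float], weights: Optional[Dict[str, float]] = None) -> float:
    """Combine per-modality stress probabilities by a weighted average.

    Modalities absent from `probs` (e.g. an EEG headset that was not worn) are
    skipped, and the weights of the available modalities are renormalized.
    """
    if not probs:
        raise ValueError("at least one modality is required")
    weights = weights or {m: 1.0 for m in probs}  # default: equal weights
    total = sum(weights.get(m, 0.0) for m in probs)
    if total <= 0:
        raise ValueError("weights of the available modalities must sum to > 0")
    return sum(weights.get(m, 0.0) * p for m, p in probs.items()) / total


# Equal weights across three modalities.
print(late_fusion({"eeg": 0.8, "hrv": 0.6, "eda": 0.4}))  # approximately 0.6
# EEG weighted double; EDA missing for this recording.
print(late_fusion({"eeg": 0.9, "hrv": 0.3}, {"eeg": 2.0, "hrv": 1.0, "eda": 1.0}))  # approximately 0.7
```

Late fusion degrades gracefully when a sensor drops out, which matters for wearables. Feature-level fusion can instead model interactions between signals.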

Inclusivity in assessment tools is another critical opportunity. Developing inclusive tools that account for diverse gender identities, races, medical histories, and socio-economic backgrounds will help ensure that mental health assessments are equitable and effective for all individuals. Addressing the unique stressors faced by marginalized communities, such as discrimination and economic instability, is key to creating comprehensive mental health strategies that support individuals across different demographics [112,113].

Innovative sensor technologies will enhance the precision and convenience of physiological signal collection. By embracing advanced wearable devices and sensor technologies, real-time monitoring of stress and anxiety can become more practical and accessible. This will drive the future of personalized healthcare, enabling continuous and unobtrusive data collection that can inform timely interventions and improve patient outcomes.

To address these intersectional challenges, future ML model development should incorporate demographic-aware architectures that explicitly account for population-specific variations in physiological responses. This could include implementing stratified validation frameworks where model performance is evaluated separately across different demographic subgroups, ensuring equitable accuracy across age, gender, race, socio-economic status, and cultural background. Additionally, fairness-constrained optimization techniques and adversarial debiasing methods could be employed to minimize discriminatory outcomes while maintaining overall model performance.
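The stratified validation described above amounts to computing performance separately for each demographic subgroup and reporting the disparity alongside the overall figure. A minimal sketch, assuming binary labels and a single grouping variable (the function name and the toy data are hypothetical):

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def subgroup_accuracy(
    y_true: List[int], y_pred: List[int], groups: List[str]
) -> Tuple[Dict[str, float], float]:
    """Accuracy per demographic subgroup and the largest gap between subgroups."""
    correct: Dict[str, int] = defaultdict(int)
    count: Dict[str, int] = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        count[g] += 1
        correct[g] += int(t == p)
    acc = {g: correct[g] / count[g] for g in count}
    gap = max(acc.values()) - min(acc.values())  # simple disparity measure
    return acc, gap


y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
print(subgroup_accuracy(y_true, y_pred, groups))  # ({'F': 1.0, 'M': 0.5}, 0.5)
```

Here the overall accuracy of 0.75 hides a 0.5 gap between subgroups. Accuracy gap is only one disparity measure; error-rate-based criteria such as equalized odds are also common. With small subgroups, the per-group estimates carry wide uncertainty.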

Demographic factors should also be considered in signal modeling. Richer, comparable metadata should be collected at the time of data acquisition (including race/ethnicity, socioeconomic indicators, and recruitment channels), and stratified and sensitivity analyses should be conducted to quantify the effects of these factors on performance and bias. In addition, standardized statements of data usage boundaries (permitted reuse, redistribution limits, retention policies, and cross-institution transfer conditions), together with transparency practices that improve auditability and reproducibility, are essential. We encourage future primary studies and dataset initiatives to publish clear demographic and ethical metadata.

Leveraging these opportunities will facilitate the development of more accurate tools for early identification, tracking, and management of anxiety and stress, thereby enhancing patient outcomes and promoting general health and well-being.

4.4. Methodological Recommendations

Based on the patterns identified through our systematic quality assessment, several key areas emerge as priorities for methodological improvement in future investigations. The development of more rigorous research standards in this field would substantially enhance the reliability and comparability of findings across studies.

Future studies would benefit significantly from implementing adequate sample size determination procedures, ideally supported by formal power calculations that account for the complexity of machine learning model development and validation. Such approaches would help ensure sufficient statistical power for detecting meaningful associations while avoiding the limitations associated with underpowered investigations that have characterized much of the current literature.
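As a starting point for such a calculation, the standard normal-approximation formula for comparing two group means (for example, a physiological feature in high- versus low-anxiety participants) gives the required number of participants per group from the standardized effect size $d$ (Cohen's $d$), the significance level $\alpha$, and the desired power $1-\beta$:

$$n = \frac{2\,(z_{1-\alpha/2} + z_{1-\beta})^2}{d^2}.$$

The sketch below implements this formula. The effect sizes are illustrative assumptions. The formula ignores the ML-specific factors mentioned above (repeated windows per participant, nested cross-validation), so it should be read as a lower bound rather than a complete power analysis. An exact t-test calculation gives slightly larger values.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate participants per group for a two-sided, two-sample comparison
    of means: n = 2 * (z_{1-alpha/2} + z_{1-beta})^2 / d^2 (normal approximation)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / effect_size_d ** 2)


print(n_per_group(0.5), n_per_group(0.8))  # 63 25
```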

Transparent and comprehensive reporting of data preprocessing methodologies represents another critical area for improvement. Studies should provide detailed descriptions of artifact removal procedures, filtering techniques, and signal conditioning approaches to enable replication and facilitate meaningful comparison of results across investigations. The current variability in preprocessing methodology reporting substantially limits the reproducibility of findings and complicates evidence synthesis efforts.
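One practical way to make preprocessing reportable is to record every choice in a structured, machine-readable form that can be published as supplementary material. The sketch below is a hypothetical schema: the class name, field names, and example values are assumptions chosen for illustration and are not drawn from a reporting guideline or from the reviewed studies.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class PreprocessingReport:
    """Hypothetical record of the preprocessing choices a study could publish."""
    modality: str                        # e.g. "EEG", "EDA", "ECG"
    sampling_rate_hz: float
    bandpass_hz: Optional[List[float]]   # [low, high] cut-offs, or None if unfiltered
    notch_hz: Optional[float]            # mains-noise notch frequency, if any
    artifact_method: str
    artifact_threshold: Optional[float]  # in the signal's units (e.g. microvolts for EEG)
    window_s: float                      # analysis window length in seconds
    window_overlap: float                # fraction of overlap between windows
    excluded_segments: int = 0
    notes: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize to JSON with stable key order for supplementary files."""
        return json.dumps(asdict(self), indent=2, sort_keys=True)


report = PreprocessingReport(
    modality="EEG", sampling_rate_hz=256.0, bandpass_hz=[1.0, 40.0], notch_hz=50.0,
    artifact_method="peak-to-peak amplitude threshold", artifact_threshold=100.0,
    window_s=4.0, window_overlap=0.5,
)
print(report.to_json())
```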

The implementation of robust validation strategies, particularly those incorporating independent or external validation procedures, would address fundamental concerns regarding the generalizability of reported model performance. Cross-validation approaches, while valuable, should be complemented by evaluation on truly independent datasets to provide more realistic assessments of model performance in real-world applications.
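A common source of inflated performance is letting windows from the same participant appear in both training and test folds. Group-wise splitting prevents this. The sketch below yields leave-one-group-out folds. With subject IDs as groups it performs leave-one-subject-out validation, and with dataset or site names as groups it becomes a leave-one-dataset-out approximation of external validation. The function name is hypothetical. scikit-learn offers equivalent functionality in `sklearn.model_selection.LeaveOneGroupOut`.

```python
from typing import Iterator, List, Sequence, Tuple


def leave_one_group_out(groups: Sequence[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_group, train_indices, test_indices) for each group.

    No sample from the held-out group ever appears in the training indices.
    """
    for held_out in sorted(set(groups)):
        train = [i for i, g in enumerate(groups) if g != held_out]
        test = [i for i, g in enumerate(groups) if g == held_out]
        yield held_out, train, test


# Example: five windows recorded from three subjects.
for subject, train_idx, test_idx in leave_one_group_out(["s1", "s1", "s2", "s3", "s2"]):
    print(subject, train_idx, test_idx)
```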

Additionally, systematic consideration and reporting of demographic and clinical confounding factors, including medication use, comorbid conditions, and baseline physiological states, would enhance the validity of reported associations between physiological signals and anxiety or stress states. The current limited attention to such factors represents a significant methodological gap that may compromise the reliability of findings.
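Beyond reporting confounders, one standard way to account for them is confound regression. This method removes the part of each physiological feature that is linearly explained by variables such as age, a medication indicator, or resting heart rate. The sketch below assumes linear confound effects. The function names are hypothetical. To avoid leakage, the coefficients are fitted on the training split only and then reused unchanged on the test split.

```python
import numpy as np


def _design(confounds: np.ndarray) -> np.ndarray:
    """Intercept column plus the confound columns (1-D input counts as one confound)."""
    return np.column_stack([np.ones(len(confounds)), confounds])


def fit_confound_regression(features: np.ndarray, confounds: np.ndarray) -> np.ndarray:
    """Least-squares coefficients predicting each feature from the confounds.

    features: (n_samples, n_features); confounds: (n_samples, n_confounds).
    Fit on training data only, so held-out participants do not influence the fit.
    """
    coef, *_ = np.linalg.lstsq(_design(confounds), features, rcond=None)
    return coef


def apply_confound_regression(features: np.ndarray, confounds: np.ndarray,
                              coef: np.ndarray) -> np.ndarray:
    """Return features with their linearly confound-explained part removed."""
    return features - _design(confounds) @ coef


# Example: a synthetic feature that tracks age strongly.
rng = np.random.default_rng(0)
age = rng.uniform(20, 60, size=200).reshape(-1, 1)
feature = 3.0 * age + rng.normal(size=(200, 1))
coef = fit_confound_regression(feature, age)
residual = apply_confound_regression(feature, age, coef)
```

After adjustment, the residual feature is uncorrelated with age on the data used for fitting. Nonlinear confound effects would require a more flexible model.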

Finally, the adoption of standardized performance reporting metrics that extend beyond simple accuracy measures would facilitate more nuanced evaluation of model performance and enable more meaningful comparison of results across different analytical approaches and study populations.
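Accuracy alone can be misleading when stress episodes are rare. The sketch below derives several complementary metrics from a binary confusion matrix. The function name is hypothetical, and reporting undefined ratios as 0.0 is a convention chosen here for illustration.

```python
import math
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Common binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    if total == 0:
        raise ValueError("empty confusion matrix")
    sensitivity = tp / (tp + fn) if tp + fn else 0.0  # recall for the stress class
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0  # Matthews correlation
    return {
        "accuracy": (tp + tn) / total,
        "balanced_accuracy": (sensitivity + specificity) / 2,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "mcc": mcc,
    }


# A classifier that never predicts "stress" on 90 baseline and 10 stress windows.
print(binary_metrics(tp=0, fp=0, tn=90, fn=10))
```

In this example accuracy is 0.9, but balanced accuracy is 0.5 and sensitivity, F1, and MCC are all 0, which exposes a model that detects no stress at all.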

5. Conclusions

A systematic review of 113 studies employing machine learning techniques to evaluate anxiety and stress based on physiological signals highlights the potential of this approach for early detection and intervention. These studies have investigated the efficacy of capturing objective physiological indicators, elucidating their role in mental health, exploring individual differences in susceptibility, and underscoring the significance of early predictions in preventing symptom exacerbation. Collectively, these findings suggest that current machine learning methodologies offer promising avenues for continuous monitoring and early prediction, which are essential for timely therapeutic interventions. Despite encouraging performance, progress is constrained by the lack of standardized, open benchmarks for stress and anxiety detection. Existing datasets differ in sensing modalities, sampling rates, labeling schemes, and elicitation paradigms, and many studies rely on proprietary data collected under heterogeneous protocols. Advancing the field requires harmonized datasets with standardized elicitation paradigms, controlled acquisition procedures that minimize environmental interference, and comprehensive metadata (e.g., demographics, medications, task context, ethics), alongside predefined cross-subject splits and external-validation procedures. Such benchmarks are essential for fair comparison, robust generalization, and reproducible improvement of current methods. Therefore, future research should not only explore the relationships between physiological signals and mental health, but also focus on developing models that perform well across diverse individuals and on improving metrics for cross-subject detection. Innovations should include advanced predictive models and preventive strategies, alongside the integration of cutting-edge sensor technologies and real-time data analysis into clinical practice. This proactive approach will improve the practicality and effectiveness of machine learning methods, ultimately enhancing individual well-being and mitigating the impact of mental health issues.

Author Contributions

Conceptualization, M.-T.H. and H.Z.; methodology, Y.L., T.B. and Y.O.; validation, H.Z. and M.-T.H.; investigation, Y.L., M.-I.P., C.T., Y.O., Z.L., Z.W. and F.T.; writing—original draft preparation, Y.L., M.-I.P., T.B., C.T., H.Z. and M.-T.H.; writing—review and editing, M.-I.P., C.T. and M.-T.H.; visualization, M.-I.P. and M.-T.H.; supervision, H.Z. and M.-T.H.; project administration, M.-T.H.; funding acquisition, H.Z. and M.-T.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the NSFC under Grant No. 62076083 and the Zhejiang Provincial Natural Science Foundation of China under Grant No. ZCLZ24F0301; by grants from the Spanish Agencia Estatal de Investigación, Ministerio de Ciencia, Innovación y Universidades, co-funded by the European Union, Next Generation EU/PRTR Ref. Number: TED2021-130942B-C21//MICIU/AEI/10.13039/501100011033; by the Fundación Primafrio with the code number 39747; by COST Participatory Approaches with Older Adults (PAAR-net), with the code number CA22167; and by the China-Spain AI Technology Joint Laboratory with Hangzhou Dianzi University.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A

Search Terms Used for Identifying Relevant Studies

This table lists the search terms used to identify studies related to physiological signals and machine learning. Terms are categorized by physiological signal types including EEG (Electroencephalogram), eye-tracking technologies, heart rate measurements, skin conductance, and blood pressure indicators. Variants and synonyms of each term were included to ensure a comprehensive search of the literature.

- (((“Anxiety/classification” [Mesh] OR “Anxiety/diagnosis” [Mesh] OR “Stress, Psychological/classification” [Mesh] OR “Stress, Psychological/diagnosis” [Mesh])

- AND (“Deep Learning” [Mesh] OR “Machine Learning” [Mesh] OR “Neural Networks, Computer” [Mesh]))

- AND (“Electroencephalography” [Mesh] OR “Eye-Tracking Technology” [Mesh]

- OR “Heart Rate” [Mesh] OR “Galvanic Skin Response” [Mesh] OR “Blood Pressure” [Mesh] OR “Oxygen Saturation” [Mesh]

- OR “Biomarkers” [Mesh]))

- OR

- (((((Angst [Title/Abstract]) OR (Nervousness [Title/Abstract]) OR (Hypervigilance [Title/Abstract]) OR (Social Anxiety [Title/Abstract]) OR (Anxieties [Title/Abstract])

- OR (Anxiety [Title/Abstract]) OR (Social Anxieties [Title/Abstract]) OR (Anxiousness [Title/Abstract]) OR (Psychological Stresses [Title/Abstract])

- OR (Stressor [Title/Abstract]) OR (Psychological Stressor [Title/Abstract]) OR (Psychological Stressors [Title/Abstract])

- OR (Psychological Stress [Title/Abstract]) OR (Stress [Title/Abstract]) OR (Psychologic Stress [Title/Abstract]) OR (Life Stress [Title/Abstract])

- OR (Life Stresses [Title/Abstract]) OR (Stress [Title/Abstract]) OR (Emotional Stress [Title/Abstract])

- OR (Stressful Conditions [Title/Abstract]) OR (Psychological Stressful Condition [Title/Abstract])

- OR (Psychological Stressful Conditions [Title/Abstract]) OR (Stressful Condition [Title/Abstract]) OR (Psychologically Stressful Conditions [Title/Abstract])

- OR (Psychologically Stressful Condition [Title/Abstract])

- OR (Stressful Condition [Title/Abstract]) OR (Stress Experience [Title/Abstract]) OR (Psychological Stress Experience [Title/Abstract])

- OR (Psychological Stress Experiences [Title/Abstract]) OR (Stress Experiences [Title/Abstract])

- OR (Individual Stressors [Title/Abstract]) OR (Individual Stressor [Title/Abstract]) OR (Cumulative Stress [Title/Abstract])

- OR (Cumulative Stresses [Title/Abstract]) OR (Psychological Cumulative Stresses [Title/Abstract])

- OR (Psychological Cumulative Stress [Title/Abstract]) OR (Stress Overload [Title/Abstract]) OR (Psychological Stress Overload [Title/Abstract])

- OR (Psychological Stress Overloads [Title/Abstract]) OR (Stress Measurement [Title/Abstract])

- OR (Psychological Stress Measurement [Title/Abstract]) OR (Psychological Stress Measurements [Title/Abstract])

- OR (Stress Processes [Title/Abstract]) OR (Psychological Stress Processe [Title/Abstract]) OR (Psychological Stress Processes [Title/Abstract])

- OR (Stress Processe [Title/Abstract]))

- AND ((classif* [Title/Abstract]) OR (diagnos* [Title/Abstract]) OR (assess* [Title/Abstract]) OR (predict* [Title/Abstract])))

- AND ((Deep Learning [Title/Abstract]) OR (Hierarchical Learning [Title/Abstract]) OR (Machine Learning [Title/Abstract]) OR (Computer Neural Network [Title/Abstract])

- OR (Computer Neural Networks [Title/Abstract])

- OR (Neural Network [Title/Abstract]) OR (Deep learning Model [Title/Abstract]) OR (Model [Title/Abstract]) OR (Network Model [Title/Abstract])

- OR (Network Models [Title/Abstract]) OR (Neural Network Model [Title/Abstract]) OR (Neural Network Models [Title/Abstract])

- OR (Connectionist Models [Title/Abstract])

- OR (Connectionist Model [Title/Abstract]) OR (Perceptrons [Title/Abstract])

- OR (Perceptron [Title/Abstract]) OR (Computational Neural Networks [Title/Abstract]) OR (Computational Neural Network [Title/Abstract])

- OR (Neural Network [Title/Abstract])

- AND ((EEG [Title/Abstract]) OR (Electroencephalogram [Title/Abstract])

- OR (Electroencephalograms [Title/Abstract]) OR (Eye-Tracking Technologies [Title/Abstract]) OR (Eye Tracking Technology [Title/Abstract])

- OR (Eyetracking Technology [Title/Abstract]) OR (Eyetracking Technologies [Title/Abstract]) OR (Gaze-Tracking Technology [Title/Abstract])

- OR (Gaze-Tracking Technologies [Title/Abstract])

- OR (Gaze Tracking Technology [Title/Abstract]) OR (Gaze-Tracking System [Title/Abstract]) OR (Gaze Tracking System [Title/Abstract])

- OR (Gaze-Tracking Systems [Title/Abstract])

- OR (Gazetracking System [Title/Abstract]) OR (Gazetracking Systems [Title/Abstract]) OR (Eye-Tracking System [Title/Abstract])

- OR (Eye Tracking System [Title/Abstract])

- OR (Eye-Tracking Systems [Title/Abstract]) OR (Eyetracking System [Title/Abstract]) OR (Eyetracking Systems [Title/Abstract]) OR (Eye-Tracking [Title/Abstract])

- OR (Heart Rates [Title/Abstract]) OR (Cardiac Rate [Title/Abstract]) OR (Cardiac Rates [Title/Abstract])

- OR (Pulse Rate [Title/Abstract]) OR (Pulse Rates [Title/Abstract]) OR (Heartbeat [Title/Abstract]) OR (Heartbeats [Title/Abstract])

- OR (Cardiac Chronotropism [Title/Abstract]) OR (Heart Rate Control [Title/Abstract])

- OR (Cardiac Chronotropy [Title/Abstract]) OR (Galvanic Skin Responses [Title/Abstract])