1. Introduction

LoRa (Long Range), an important LPWAN (Low-Power Wide-Area Network) technology, has become a popular research topic in IoT (Internet of Things) projects because of its low-power, long-range data transmission. Because of its low data rates and limited bandwidth, LoRa is typically used in IoT applications to transmit small amounts of data, such as sensor readings. Besides small sensor data, image data is also important for many critical applications, such as remote security monitoring, access control, and human identification. LoRa's long-range communication and low power consumption could contribute significantly to such security- and identification-oriented applications in areas without reliable cellular coverage. However, LoRa's limited data capacity makes image transmission a significant challenge, so solving the image transmission problem over LoRa is an important research topic. Guerra and colleagues proposed a low-speed encoder for image transmission using LoRa modules; the aim is to compress images before transmission and reduce the impact of data loss that may occur during transmission [1]. Kirichek and colleagues used JPEG and JPEG2000 compression for image transmission in their study of image and sound transmission via a LoRa module on an unmanned aerial vehicle (UAV), and found that JPEG2000 resulted in less distortion after transmission [2]. Wei and colleagues combined WebP compression and base64 encoding to transmit a 200 × 150-pixel image over LoRa in 25.7 s [3]. Ji and colleagues proposed a technique that divides the image into grid segments and sends only the segments that contain differences [4]. Chen and colleagues applied JPEG compression to images for agricultural monitoring and transmitted them via LoRa. To reduce transmission time, they proposed a transmission protocol called the Multi-Packet LoRa Protocol (MPLR), which requires packet acknowledgments. While this approach is suitable for small images, the system becomes inefficient as the amount of data increases [5]. Haron and colleagues investigated compression methods suitable for transmitting large images of telemetry data over wireless networks, using the Fast Fourier Transform (FFT) to compress a 512 × 512-pixel grayscale image with a file size of 176 KB [6].

This study aims to address the image transmission problem with LoRa by converting image data to low resolution before transmission. Then, at the receiver node, we will enhance these images using the Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) model, producing high-resolution images that closely resemble the original.

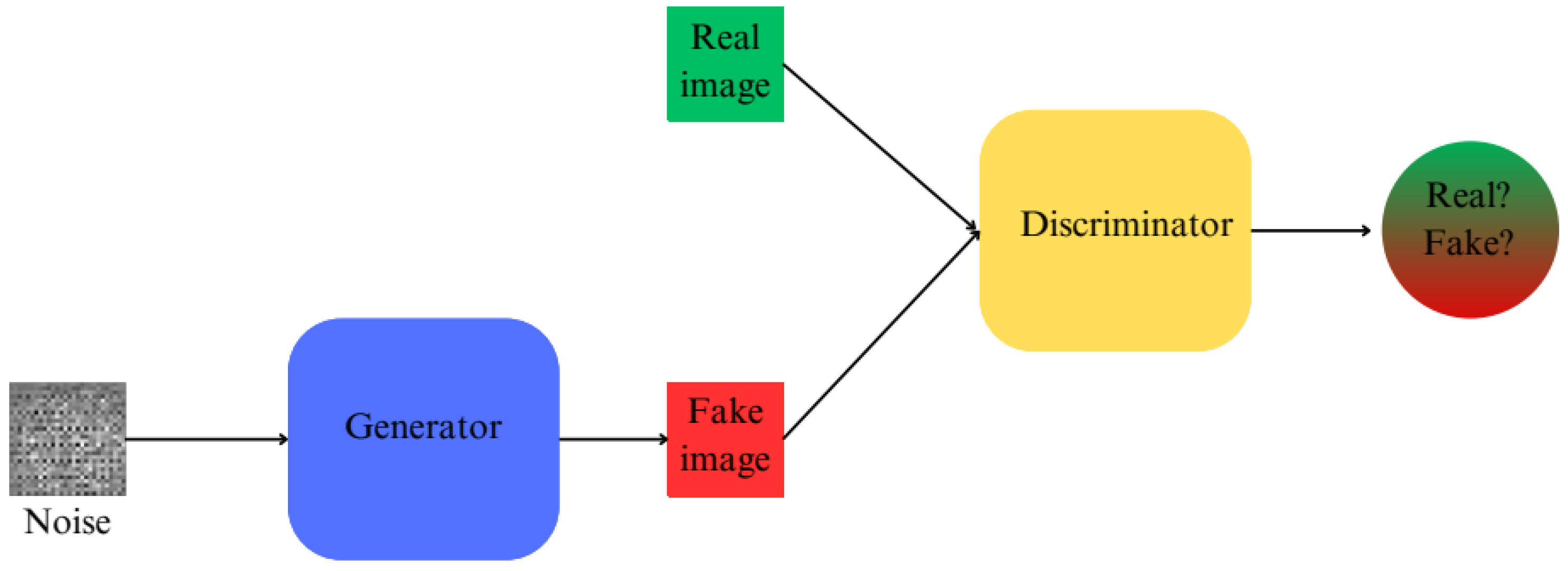

Generative adversarial networks (GANs), introduced in 2014 by Ian Goodfellow et al. [7] and one of the key components of modern generative models [8], consist of two competing networks: a generator and a discriminator. For image data, the generator produces new (fake) images, while the discriminator tries to determine whether each image it receives is real or generated. The discriminator's decisions are fed back to the generator, which, over the course of training, learns to produce images that are increasingly indistinguishable from real ones.

Figure 1 shows the basic working logic of the GAN architecture.

Following the introduction of the basic GAN architecture, Radford et al. [9] developed the deep convolutional generative adversarial network (DCGAN) in 2015 to produce synthetic images. SRGAN [10], proposed in 2017, and its successor ESRGAN [11] brought significant improvements in the visual quality of upscaled low-resolution images. These studies have demonstrated the effectiveness of GAN-based approaches for improving images that are degraded or of low quality in bandwidth-limited transmission environments.

Super-resolution (SR) imaging is the process of obtaining high-resolution (HR) images from low-resolution (LR) images. The theoretical basis of SR dates back to Harris's 1964 work on information extraction from images formed by diffraction-limited optical systems [12]. Tsai and Huang first applied the idea of SR to improve the spatial resolution of Landsat TM images [13]. Building on this idea, various SR methods have since been proposed [14,15,16,17]. For face images, face hallucination approaches have been applied as an SR method to obtain high-resolution faces from low-resolution inputs [18,19].

With the development of deep learning, researchers have achieved significant success using deep learning-based methods for SR. In particular, GANs [7] have emerged as an effective method for generating high-resolution images from low-resolution images. The SRGAN (Super-Resolution Generative Adversarial Network) model, pioneered by Ledig and colleagues in 2017, has become a key reference in the literature [10]. In 2018, Wang and colleagues developed ESRGAN (Enhanced Super-Resolution Generative Adversarial Network), an improved version of SRGAN that produces more realistic and natural textures [11]. In 2021, Wang and colleagues extended ESRGAN into Real-ESRGAN, which better simulates complex real-world degradations [20]. Real-ESRGAN has been used for resolution enhancement of various medical images [21,22,23], for creating higher-resolution images from low-quality data in remote monitoring applications such as disaster and landslide detection [24], and in general image enhancement applications [25,26].

Several studies in the literature propose GAN-based restoration methods to address low bandwidth and low channel SNR in wireless image transmission [27,28]. However, these studies do not target a specific physical transmission network; they assume a generic low-bandwidth wireless channel and focus primarily on developing the GAN architecture.

In contrast, our study focuses specifically on LoRa, a wireless technology that provides long-range transmission under low-bandwidth conditions with low energy consumption, and proposes a GAN-supported solution to the problem of image transmission over LoRa. To our knowledge, this is the first study to apply a GAN-based image restoration approach to LoRa-based image transmission.

In this study, face images are first downsampled to reduce their resolution and then compressed with JPEG or WebP before being sent via LoRa. At the receiver node, a super-resolution image is then produced from the transmitted low-resolution image using the ESRGAN model trained in this study.

The remainder of this paper is organized as follows.

Section 2 describes in detail the materials and methods used in the study, as well as the implemented LoRa transmission and ESRGAN experiments.

Section 3 presents the results obtained from the experiments and the discussions.

Section 4 presents the study conclusions and recommendations for future work.

2. Materials and Methods

Our study aims to reduce transmission times in order to overcome the difficulties of image transmission over LoRa. To this end, the images to be transmitted are first reduced in resolution and then compressed. JPEG and WebP compression are compared for this step, and LoRa is used to transmit the compressed images. To enhance the resolution of the low-resolution image received via LoRa, the Real-ESRGAN model and an ESRGAN model trained on face images are run on the Raspberry Pi 4 B (Raspberry Pi OS, Debian 11 “Bullseye”) connected to the LoRa receiver.

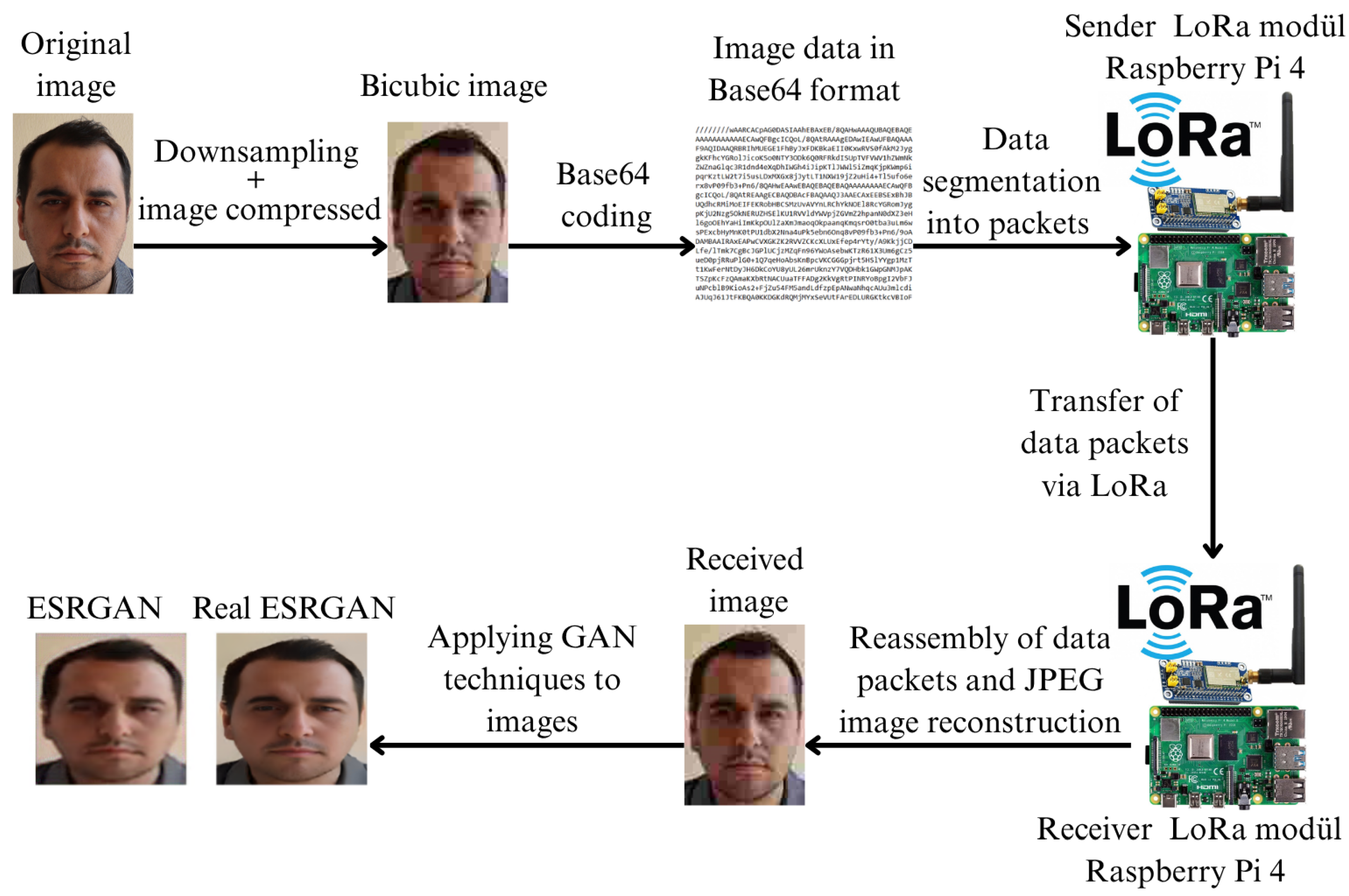

Figure 2 shows the workflow of the study.

In this study, two SX1262 LoRa HAT (E22 900T22S) modules, one for the sender and one for the receiver, each mounted on a Raspberry Pi 4, form a point-to-point link. To transmit the image as quickly as possible, the sender Raspberry Pi 4 downsamples and compresses the image and then encodes it as a base64 string. Base64 does not reduce the data size (it increases it by about one-third), but it turns the binary image into plain text for transmission. The base64 string is then divided into packets that fit the LoRa module's limited frame size and transmitted. For the SX1262 LoRa HAT module, a frame is 240 bytes in total, consisting of a 6-byte header and a 234-byte message; therefore, each data packet carries 234 bytes of data.
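The packetization step can be sketched as follows. This is a minimal illustration, assuming only the 240-byte frame / 6-byte header / 234-byte payload split stated above; how the module adds its header is not modeled, and the function names are hypothetical rather than the study's code.

```python
import math

FRAME_SIZE = 240                          # total LoRa frame size for the SX1262 HAT
HEADER_SIZE = 6                           # header bytes per frame
PAYLOAD_SIZE = FRAME_SIZE - HEADER_SIZE   # 234 data bytes per packet


def split_into_packets(data: bytes, payload_size: int = PAYLOAD_SIZE) -> list[bytes]:
    """Split the encoded image into consecutive payload-sized chunks.

    Every chunk is payload_size bytes except possibly the last one.
    """
    return [data[i:i + payload_size] for i in range(0, len(data), payload_size)]


def packet_count(n_bytes: int, payload_size: int = PAYLOAD_SIZE) -> int:
    """Number of packets needed to carry n_bytes of payload."""
    return math.ceil(n_bytes / payload_size)


if __name__ == "__main__":
    # Size of the base64 string of the unprocessed image reported in Section 3
    print(packet_count(489_033))
```

For the 489,033-byte unprocessed image this gives ceil(489,033 / 234) = 2090 packets, matching the packet count reported in Section 3; the receiver simply concatenates the chunks in arrival order.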

The image data received in packets via LoRa is appended, in the order received, to a .txt file at the relevant location on the receiver Raspberry Pi 4. This file, which contains the base64 string, is then decoded back into an image file (JPEG or WebP, depending on the compression used). Because of the downsampling and compression applied before transmission, the decoded image is of lower quality than the original. The received low-resolution image is then reconstructed using super-resolution GAN models, namely ESRGAN and Real-ESRGAN, and the results are evaluated using the peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM).

Informed consent was obtained from the relevant individuals for the use of their face images in most of the illustrations in this study. The other face images were selected from a publicly accessible face database [29]. In addition, a separate open-access face image dataset was used to train the ESRGAN network [30].

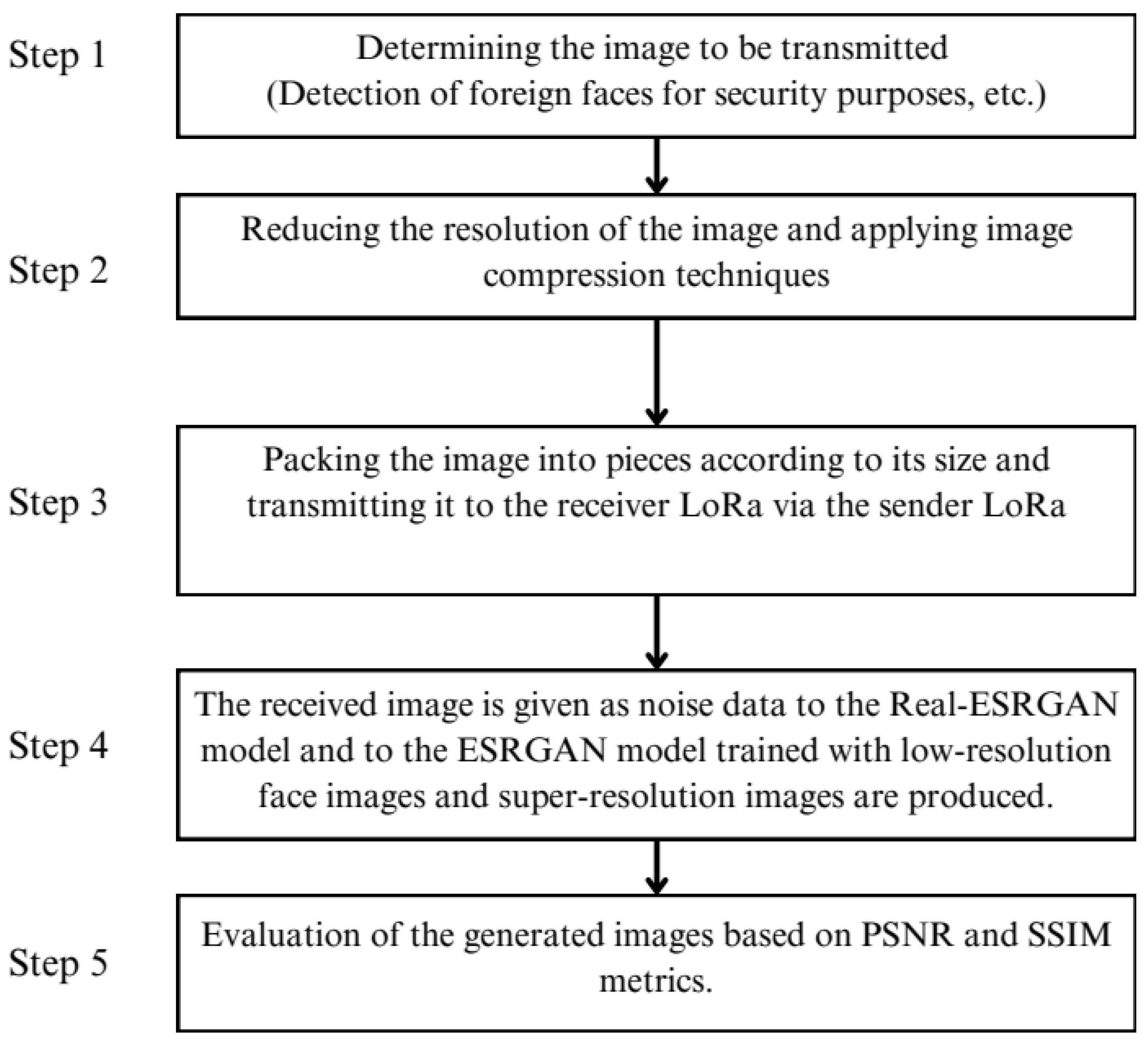

The study’s methodology comprises five basic steps, as illustrated in Figure 3.

2.1. Compression of the Image to Be Transmitted with LoRa

Image compression methods fall into two categories: lossy and lossless. Because LoRa is suited to transmitting small payloads, lossy compression is generally used to make images sent over a LoRa network as small as possible. However, lossless compression can be applied in scenarios where data must be transmitted without loss, such as medical imaging.

Since the study is based on GAN-based image generation, lossy compression methods are expected to be more effective for the study's purposes. Because our research focuses on reconstructing low-resolution, degraded images, the quality degradation and data loss caused by lossy compression are treated as part of the problem under study rather than as an obstacle. For this reason, the lossy JPEG and WebP techniques are used in the compression stage to maximize compression efficiency. Compression, implemented in Python with the Pillow library (the compressed bytes are then base64-encoded), significantly reduces the size of the image to be transmitted. As a result, images with considerably reduced resolution can be transmitted via LoRa in a short time.

After image transmission, super-resolution images will be generated from the low-resolution image received at the receiver node using Real-ESRGAN and ESRGAN. The generated images will be compared according to PSNR and SSIM metrics.

2.1.1. JPEG Compression

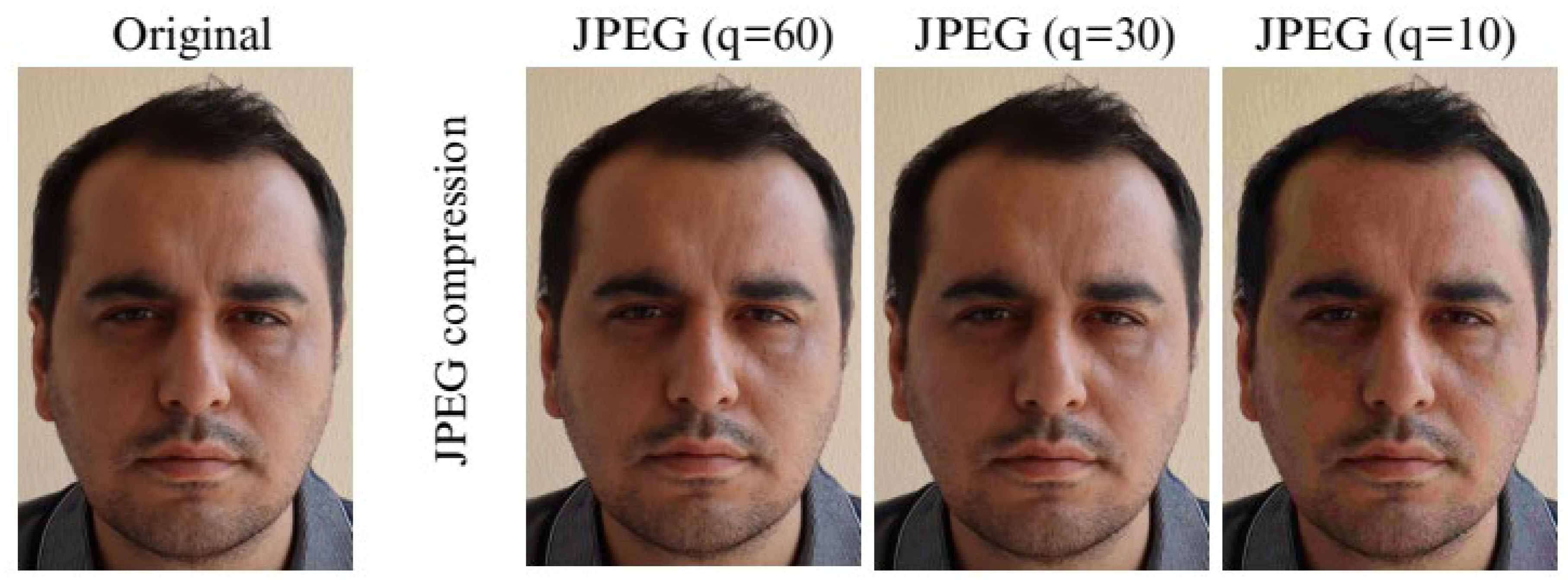

JPEG compression offers high compression ratios but causes significant quality loss at low bit rates. Figure 4 compares the original image (1124 × 1679 pixels) with the results of JPEG compression at different quality factors. As the quality factor decreases, the image quality also decreases. However, JPEG compression alone does not change the image dimensions; only the way the data is stored changes.

Figure 5 shows the results of JPEG compression after a 10× downsampling process.

Downsampling reduces image resolution and therefore the number of pixels, which significantly reduces the amount of data to be processed or compressed. Since super-resolution reconstruction with Real-ESRGAN is performed at the receiver node, the images shown in Figure 5, which are downsampled and then JPEG-compressed at different quality factors (q = 10, 30, 60), are the ones transmitted via LoRa. These images are small enough to meet the data-size requirement for fast image transmission via LoRa.

2.1.2. WebP Compression

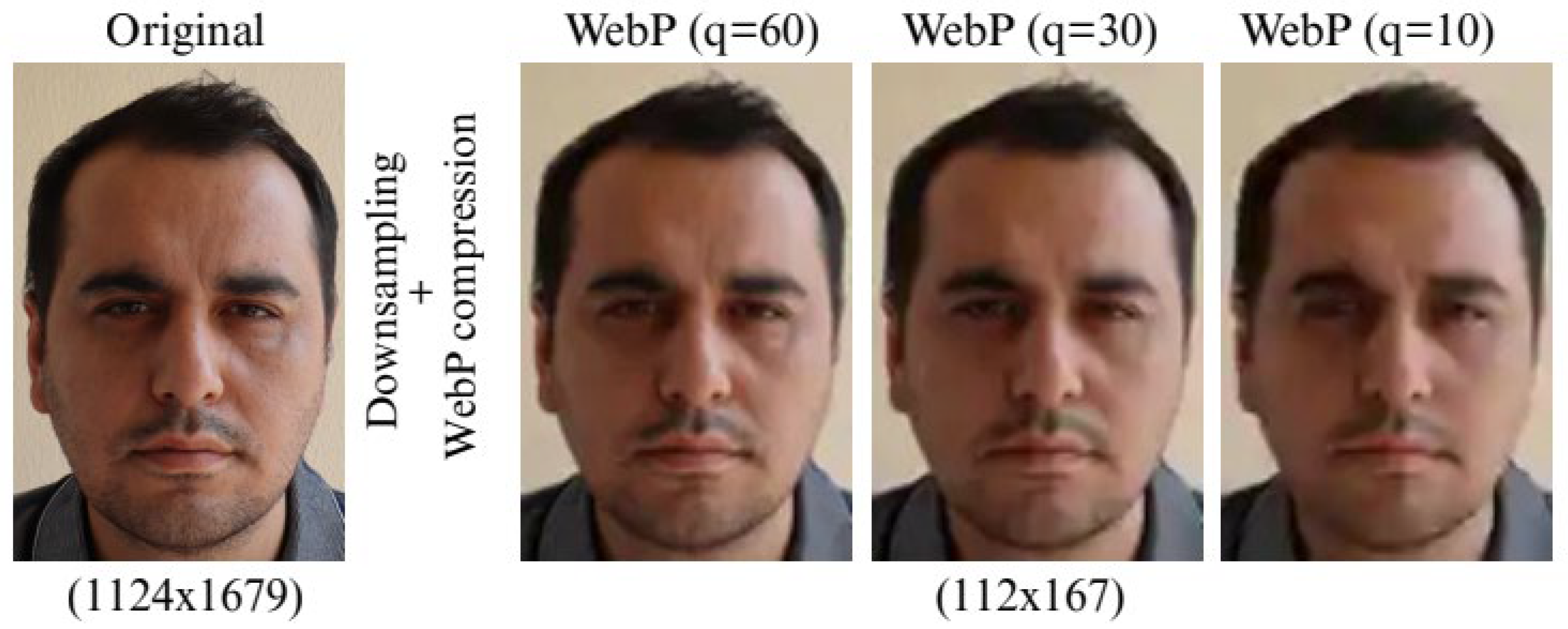

WebP is a more modern compression method that offers less distortion and a better compression ratio than JPEG. For comparison with JPEG, WebP compression with different quality factors (q = 10, 30, 60) was applied to the same original image (1124 × 1679 pixels); the results are shown in Figure 6. With a quality factor of q = 10, WebP reduces the original 358 KB image to 33.7 KB.

Figure 7 shows the results of WebP compression at different quality factors when downsampling is applied first. Although the perceived quality is significantly reduced, compression at q = 10 reduces the original 358 KB image to 0.805 KB. In the following sections, this degraded image, sent via LoRa to the receiver node, is reconstructed with Real-ESRGAN and ESRGAN to enhance its resolution, and the results are compared with those obtained for the JPEG-compressed image.

Table 1 compares JPEG and WebP compression. According to this table, WebP yields better results than JPEG. Both images compressed at q = 10 appear visibly degraded to the human eye; nevertheless, the PSNR is 22.87 dB for JPEG (q = 10) and 23.67 dB for WebP (q = 10). WebP therefore achieves both a higher PSNR and stronger compression than JPEG, reaching the targeted smaller data size.

The image compression was performed on a Raspberry Pi 4, to which the sender’s LoRa module is also connected, using the Pillow and base64 libraries in the Python programming language.

Since compressed JPEG and WebP data are binary and cannot be reliably stored in plain-text files, the binary buffer is base64-encoded. Base64 encoding converts binary data into ASCII text reversibly, so the original bytes can be recovered exactly. The resulting base64 string is saved in a .txt file, which is read, divided into packets, and transmitted from the sender LoRa module to the receiver. The receiver saves the incoming data packets to a .txt file. For decoding, this file is read and the base64 string is decoded back into its original binary form, which is saved as a viewable .jpg or .webp file depending on the original format. This method enables end-to-end compression and transmission of image data as plain text while maintaining reasonable visual quality through the transform-based (DCT-based) lossy compression of both formats.
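The encode/store/decode round trip described above can be sketched with Python's standard `base64` module. The file names and helper names are hypothetical; the point is that decoding restores the exact bytes, and that the encoded text is 4/3 the size of the binary input (rounded up to a multiple of 4), which is the roughly 33% overhead discussed in Section 3.

```python
import base64
import math
import tempfile
from pathlib import Path


def encode_to_text_file(image_bytes: bytes, txt_path: Path) -> str:
    """Base64-encode compressed image bytes and save them as ASCII text."""
    text = base64.b64encode(image_bytes).decode("ascii")
    txt_path.write_text(text, encoding="ascii")
    return text


def decode_text_file(txt_path: Path, image_path: Path) -> bytes:
    """Decode the (reassembled) base64 text file back into the original image file."""
    raw = base64.b64decode(txt_path.read_text(encoding="ascii").strip())
    image_path.write_bytes(raw)
    return raw


def base64_length(n_bytes: int) -> int:
    """Padded base64 length: every 3 input bytes become 4 ASCII characters."""
    return 4 * math.ceil(n_bytes / 3)


if __name__ == "__main__":
    workdir = Path(tempfile.mkdtemp())
    payload = bytes(range(256)) * 3          # stand-in for a compressed .webp file
    text = encode_to_text_file(payload, workdir / "image.txt")
    restored = decode_text_file(workdir / "image.txt", workdir / "image.webp")
    print(len(payload), len(text), restored == payload)   # 768 1024 True
```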

2.2. Image Transmission with LoRa

LoRa technology is preferred over other network technologies in IoT applications because it can transmit data over long distances (kilometers) without multi-hop relaying and has low energy requirements. It can provide a range of up to 20 km in rural areas and 5 km in urban areas [31]. Furthermore, thanks to its low power consumption, battery-powered devices can operate for years [32,33]. LoRa offers a significant advantage over other LPWAN technologies through its Chirp Spread Spectrum (CSS) modulation, which provides a high level of interference resistance [34]. In addition, there are no licensing costs, because LoRa operates in unlicensed frequency bands [35,36]. However, LoRa cannot use these public bands without restriction and must comply with a 1% duty cycle limit per hour [37,38,39]. This limit means that a LoRa module can transmit for only 36 s per hour on the same band (1% of 3600 s). Combined with LoRa's low data rates, this makes visual environment monitoring via LoRa challenging.

In LoRa communication, lower data rates increase both the time on air and the transmission range of the data, while higher data rates reduce both. Therefore, long-range transmission requires lower data rates.
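The speed/range trade-off can be made concrete with the time-on-air formula that Semtech publishes in its LoRa transceiver datasheets (valid for spreading factors SF7 to SF12). The sketch assumes explicit header, CRC enabled, an 8-symbol preamble and coding rate 4/5. The E22 module exposes an "air data rate" setting rather than SF and bandwidth directly, and the text does not give the underlying spreading factor, so the SF values below are illustrative only.

```python
import math
from typing import Optional


def lora_time_on_air(payload_len: int, sf: int, bw_hz: float = 125_000.0,
                     cr: int = 1, preamble_len: int = 8,
                     explicit_header: bool = True, crc_on: bool = True,
                     low_dr_optimize: Optional[bool] = None) -> float:
    """Time on air in seconds of one LoRa packet (Semtech formula, SF7 to SF12).

    cr is 1..4 for coding rates 4/5..4/8.
    """
    t_sym = (2 ** sf) / bw_hz                 # duration of one chirp symbol
    if low_dr_optimize is None:               # conventionally enabled above 16 ms symbols
        low_dr_optimize = t_sym > 0.016
    ih = 0 if explicit_header else 1
    de = 1 if low_dr_optimize else 0
    crc = 1 if crc_on else 0

    t_preamble = (preamble_len + 4.25) * t_sym
    num = 8 * payload_len - 4 * sf + 28 + 16 * crc - 20 * ih
    den = 4 * (sf - 2 * de)
    n_payload_symbols = 8 + max(math.ceil(num / den) * (cr + 4), 0)
    return t_preamble + n_payload_symbols * t_sym


if __name__ == "__main__":
    # One full 234-byte payload at 125 kHz: a higher SF means longer time on air
    for sf in (7, 9, 12):
        print(f"SF{sf}: {lora_time_on_air(234, sf) * 1000:.1f} ms")
```

Each step up in spreading factor roughly doubles the symbol time, which is why the slower, longer-range settings make large payloads such as images expensive in air time.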

LoRa Transmission Conditions

In this study, LoRa transmissions were conducted with real hardware. E22 900T22S LoRa modules were installed on two Raspberry Pi 4 boards, one acting as the transmitter and the other as the receiver. A 5 dBi antenna was used for each LoRa module, and 10,000 mAh power banks powered the devices during long-distance transmissions. The characteristics of the LoRa module used in the study are listed in Table 2.

Some of the experiments were conducted at close range with the Raspberry Pi 4 devices connected to displays, so that sent and received data packets could be monitored and packet loss tested continuously. The LoRa module's data packet size is 240 bytes: 6 bytes of header information and 234 bytes of data. Because image data is large, it must be transmitted in many 234-byte packets, and collisions (and therefore packet loss) occur if a new packet arrives while the receiver is still processing the previous one. Therefore, to prevent packet loss, the LoRa sender and receiver code includes a delay after each packet transmission.
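The sender-side pacing can be sketched as a simple loop that pauses after each packet. Here `send` is a hypothetical stand-in for whatever writes a payload to the LoRa module (for example a serial write), and `delay_s` is a placeholder, since the text does not report the delay value actually used.

```python
import time
from typing import Callable, Iterable


def send_packets(packets: Iterable[bytes], send: Callable[[bytes], None],
                 delay_s: float = 1.0) -> int:
    """Send packets one at a time, pausing after each one so the receiver
    can finish handling a packet before the next one arrives.

    Returns the number of packets sent.
    """
    sent = 0
    for packet in packets:
        send(packet)
        sent += 1
        time.sleep(delay_s)   # placeholder inter-packet delay, to be tuned
    return sent


if __name__ == "__main__":
    received = []
    # Stand-in transport: append to a list instead of writing to the radio
    n = send_packets([b"a" * 234, b"b" * 100], received.append, delay_s=0.01)
    print(n, b"".join(received) == b"a" * 234 + b"b" * 100)
```

The delay trades throughput for reliability: a longer pause lengthens the total transmission time, so it directly affects the times reported in Section 3.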

Once the experimental parameters were stable, image transmission was tested in an open area over a distance of 1500 m, using the 868 MHz frequency band with a bandwidth of 125 kHz and an air data rate of 2.4 kbps. The LoRa transmission times reported in this study were obtained from these experiments. No packet loss was observed under these conditions.

2.3. Face-Focused Enhanced Super-Resolution Generative Adversarial Network (ESRGAN)

To train the super-resolution generative adversarial network developed in this study, 1000 images from the CelebA face image dataset were used as the training set [30].

The dataset contains images of mixed but broadly similar resolutions. Our ESRGAN model is trained on two image sets: high-resolution (HR) and low-resolution (LR). The original front-facing images served as the HR set, and the LR set was created by downscaling them by a factor of 4 with bicubic interpolation.

During training, the generator is fed LR images and attempts to produce super-resolution (SR) images. The discriminator is trained on the generated SR images and the original images from the HR set. The generator tries to make the discriminator classify its generated images as real, while the discriminator estimates how fake each generated image is. This estimate is quantified by a loss function (adversarial loss), which is fed back to the generator. As a result, the generator learns to produce more realistic images.

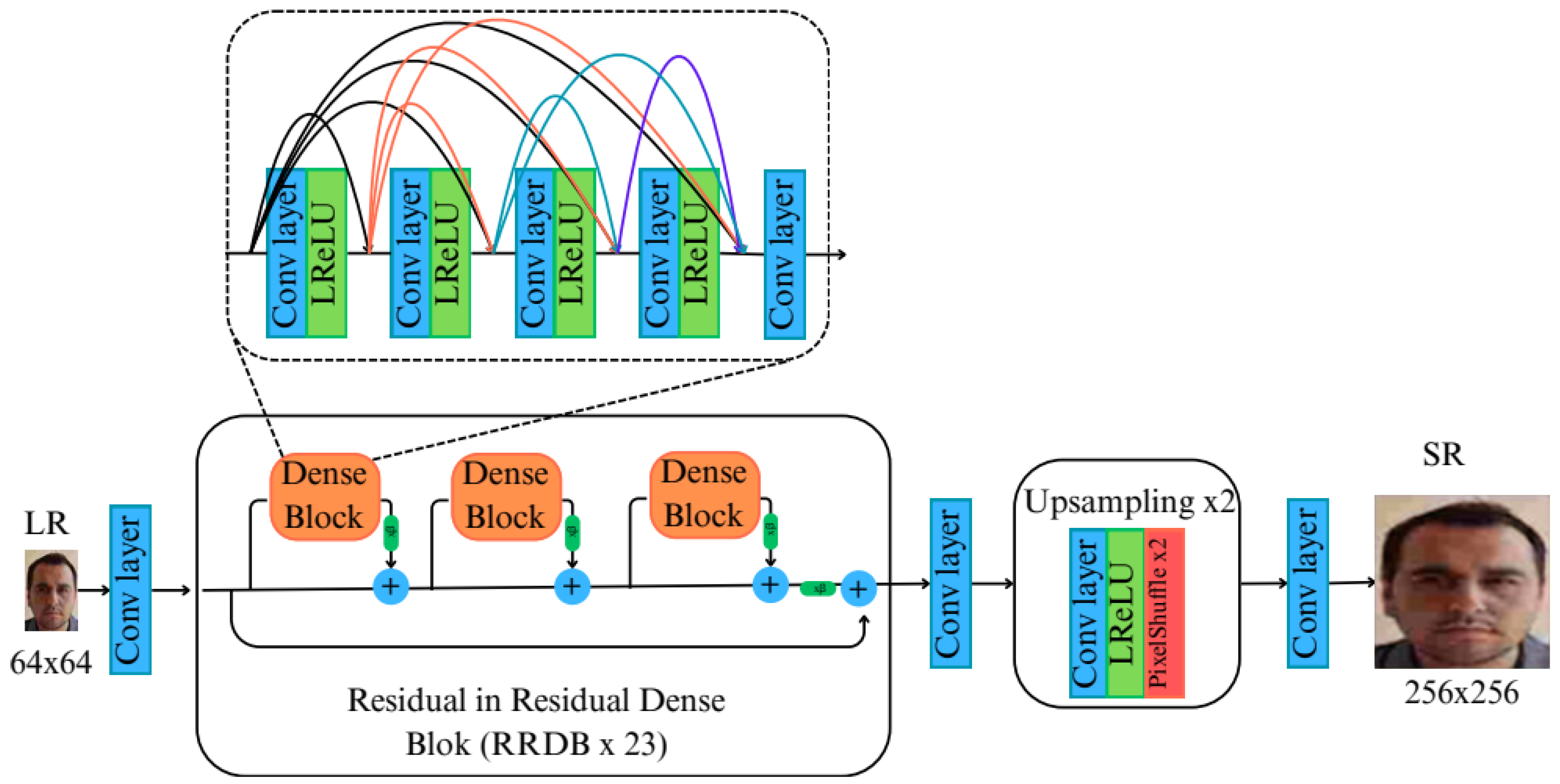

Figure 8 illustrates the generator architecture of the trained network. Unlike SRGAN, this model uses Residual-in-Residual Dense Blocks without batch normalization, and it also improves the perceptual loss by computing it on VGG features before activation rather than after [11].

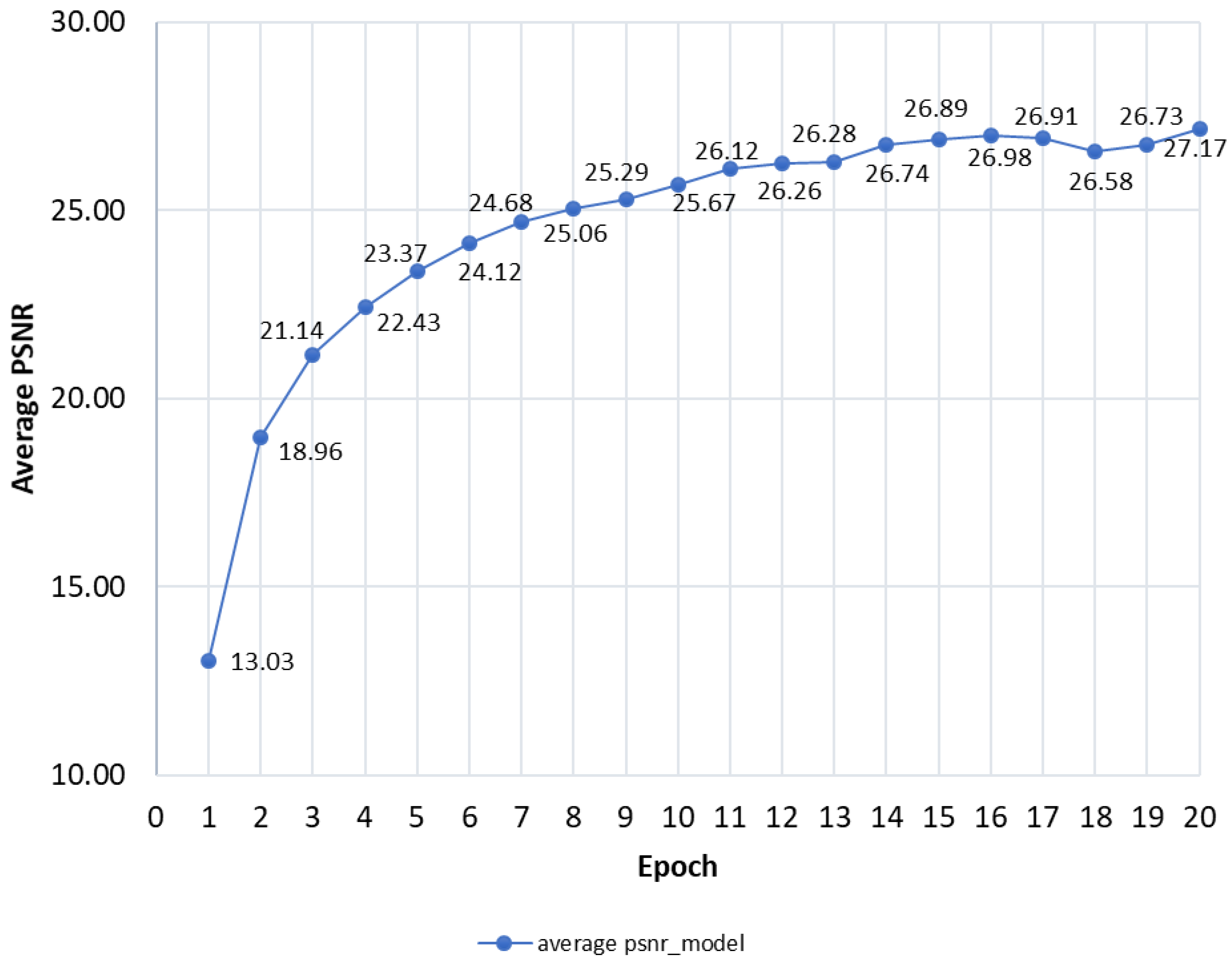

In our study, the ESRGAN network was trained on a Windows Server 2016 virtual machine with a 32-core CPU and 64 GB of RAM. The image dataset consists of 1000 face images, and training was performed for 20 epochs. The average PSNR in the final epoch was 27.17 dB, and the average PSNR over all 20 epochs was 24.52 dB.

The methodology applied in this study for image enhancement at the receiver consists of four steps:

(Step 1) Creating an Image Training Set: 1000 frontal face images were selected from the open-access CelebA face database [30] and prepared for training. The resolution of these HR images was reduced by a factor of 4 to obtain LR images, and the HR and LR images together formed the training dataset.

(Step 2) Training the ESRGAN Network: LR images were fed to the generator, and the generated SR images together with the HR images were fed to the discriminator. The discriminator's loss was fed back to the generator, driving it to produce realistic images. Training was performed for 20 epochs, resulting in a face-focused ESRGAN model.

(Step 3) Testing the Face-Focused ESRGAN Model: LR images transmitted from the sender's LoRa module to the receiver's LoRa node were fed into the trained model, which produced 256 × 256-pixel SR images as output.

(Step 4) PSNR and SSIM Measurements and Results: The images produced in the testing phase were compared with the original images by computing PSNR and SSIM, and the measurement results were evaluated.
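PSNR follows directly from the mean squared error between the reference and reconstructed images, PSNR = 10 · log10(MAX² / MSE), with MAX = 255 for 8-bit images. A minimal NumPy sketch is below; both images must have the same size, so the original is assumed to be resized to the 256 × 256 SR output first. SSIM is more involved and is usually taken from a library such as scikit-image's `skimage.metrics.structural_similarity`; which implementation the study used is not stated.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = reference.astype(np.float64)   # avoid uint8 overflow in the subtraction
    out = test.astype(np.float64)
    mse = np.mean((ref - out) ** 2)
    if mse == 0:
        return float("inf")              # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


if __name__ == "__main__":
    a = np.zeros((256, 256, 3), dtype=np.uint8)
    b = np.full((256, 256, 3), 10, dtype=np.uint8)   # uniform error of 10 -> MSE = 100
    print(f"{psnr(a, b):.2f} dB")
```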

Training Detail

The model trained in our study adopts the ESRGAN architecture, consisting of a generator with 23 Residual-in-Residual Dense Blocks (RRDBs) and a multi-scale discriminator with skip connections. The model was trained with a combination of relativistic adversarial loss, content loss (MSE), and perceptual loss (VGG19-based L1). The training set consisted of 1000 face images from the CelebA dataset, with 64 × 64-pixel patches for the LR set and 256 × 256-pixel patches for the HR set; the LR patches were obtained by downscaling the HR patches by 4× with bicubic interpolation. In the experiments, the 64 × 64 → 256 × 256 scaling was chosen to maintain training stability and optimize PSNR. Training ran for 20 epochs with a batch size of 4 using the Adam optimizer (lr = 2 × 10⁻⁵) and StepLR scheduling (γ = 0.5 every 10 epochs).
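The relativistic adversarial loss can be illustrated in NumPy, following the relativistic average formulation of ESRGAN [11]: the discriminator judges whether a real image is more realistic than the average fake one, and vice versa. This is a sketch of the loss values only; in actual training they would be computed in the deep-learning framework so gradients can flow, and the content (MSE) and perceptual (VGG19 L1) terms would be added to the generator loss with weights the text does not report.

```python
import numpy as np


def _log_sigmoid(x: np.ndarray) -> np.ndarray:
    """Numerically stable log(sigmoid(x))."""
    return -np.logaddexp(0.0, -x)


def relativistic_losses(c_real: np.ndarray, c_fake: np.ndarray) -> tuple[float, float]:
    """Relativistic average GAN losses.

    c_real, c_fake: raw (pre-sigmoid) discriminator outputs C(x) for a batch
    of HR images and a batch of generated SR images.
    Returns (discriminator_loss, generator_loss).
    """
    real_vs_fake = c_real - c_fake.mean()   # D_Ra(x_r, x_f) = sigmoid(real_vs_fake)
    fake_vs_real = c_fake - c_real.mean()   # D_Ra(x_f, x_r) = sigmoid(fake_vs_real)
    # log(1 - sigmoid(z)) == log_sigmoid(-z)
    d_loss = -(_log_sigmoid(real_vs_fake).mean() + _log_sigmoid(-fake_vs_real).mean())
    g_loss = -(_log_sigmoid(-real_vs_fake).mean() + _log_sigmoid(fake_vs_real).mean())
    return float(d_loss), float(g_loss)


if __name__ == "__main__":
    # Discriminator confidently separates real (high scores) from fake (low scores)
    print(relativistic_losses(np.full(4, 3.0), np.full(4, -3.0)))
```

Unlike the standard loss, the generator term also depends on the real images' scores, so the generator is rewarded both for raising its own scores and for making real images look relatively less real.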

3. Results and Discussion

In our study, the images were first downsampled by a factor of 10 to reduce the size of the high-resolution image data for transmission. The images were then compressed with JPEG and WebP at different quality factors (q = 10, 30, 60). The downsampled and compressed sample images were then converted to strings using base64 encoding for transmission via LoRa; this converts the compressed binary JPEG and WebP data into ASCII text, ensuring compatibility with text-only systems while preserving the content reversibly. Table 3 lists the original image used in the study, together with its size and LoRa transmission time.

As a baseline, the original image was transmitted as a base64 string without any downsampling or compression. This original image has a data size of 489,033 bytes and was transmitted over LoRa in 2090 packets, taking 42 min. The 1% duty cycle limit and the long transmission time required for a single face image demonstrate the difficulty of direct image transmission over LoRa. Reducing image transmission times will therefore facilitate the use of LoRa for environmental monitoring where long-distance transmission is needed without internet access.

Table 4 shows the LoRa transmission times of sample images compressed with JPEG and WebP after 10× downsampling. According to these results, WebP is the more suitable of the two compression techniques: it exhibits less visible distortion than JPEG while compressing more. Each sample image, compressed at the different quality factors, was transmitted from the sender LoRa node to the receiver LoRa node. The transmission times show that WebP provides faster transmission, in line with its better compression. While direct transmission of the original image took 42 min (2520 s), the image compressed with WebP at q = 10 was transmitted in 4.51 s, a reduction of approximately 99.82% in transmission time.
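The percentage reduction quoted above is simply (baseline − reduced) / baseline. A small helper (hypothetical name) reproduces it from the two reported times:

```python
def time_reduction_percent(baseline_s: float, reduced_s: float) -> float:
    """Percentage reduction of a transmission time relative to a baseline."""
    return 100.0 * (baseline_s - reduced_s) / baseline_s


if __name__ == "__main__":
    baseline = 42 * 60   # original image sent as base64 without processing (2520 s)
    webp_q10 = 4.51      # WebP q = 10 after 10x downsampling
    print(f"{time_reduction_percent(baseline, webp_q10):.2f}%")   # 99.82%
```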

All transmission times and packet counts reported in this study (Tables 4 and 5) are based not on the original binary data size but on the size of the base64-encoded string, which adds a data size overhead of approximately 33%. For example, a 0.805 KB WebP binary file (q = 10, with downsampling; Table 1) yields a 1101-byte base64 string, and our transmission time (4.51 s) is based on this 1101-byte size (Table 4). Although direct binary packaging would be more efficient in terms of data size, base64 was preferred for text-based communication because of its simplicity, compatibility, and robustness against transmission errors.

In addition, satisfactory results were achieved in compliance with the 1% duty cycle limit. Under this constraint, at most 36 s of transmission per hour is allowed. With a sample image compressed with WebP (q = 10), a single image can be transmitted in approximately 4.51 s, so approximately seven images of similar size can be transmitted per hour.
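The images-per-hour estimate follows from dividing the hourly on-air budget by the per-image time and rounding down to whole images. The sketch treats the measured 4.51 s as on-air time, as the text does; since that measurement includes inter-packet delays, the estimate is conservative.

```python
import math

DUTY_CYCLE = 0.01   # 1% duty cycle limit cited in the text
WINDOW_S = 3600     # one-hour window


def images_per_hour(time_per_image_s: float, duty_cycle: float = DUTY_CYCLE,
                    window_s: float = WINDOW_S) -> int:
    """Number of complete images that fit in the hourly on-air budget."""
    budget_s = duty_cycle * window_s   # 36 s for 1% of an hour
    return math.floor(budget_s / time_per_image_s)


if __name__ == "__main__":
    print(images_per_hour(4.51))   # 7  (7 x 4.51 s = 31.57 s of the 36 s budget)
```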

In the next phase of the study, the image received by the receiver node is reconstructed with the trained ESRGAN model. In addition, the low-resolution face images are reconstructed with the Real-ESRGAN model to compare the performance of the two super-resolution GAN models.

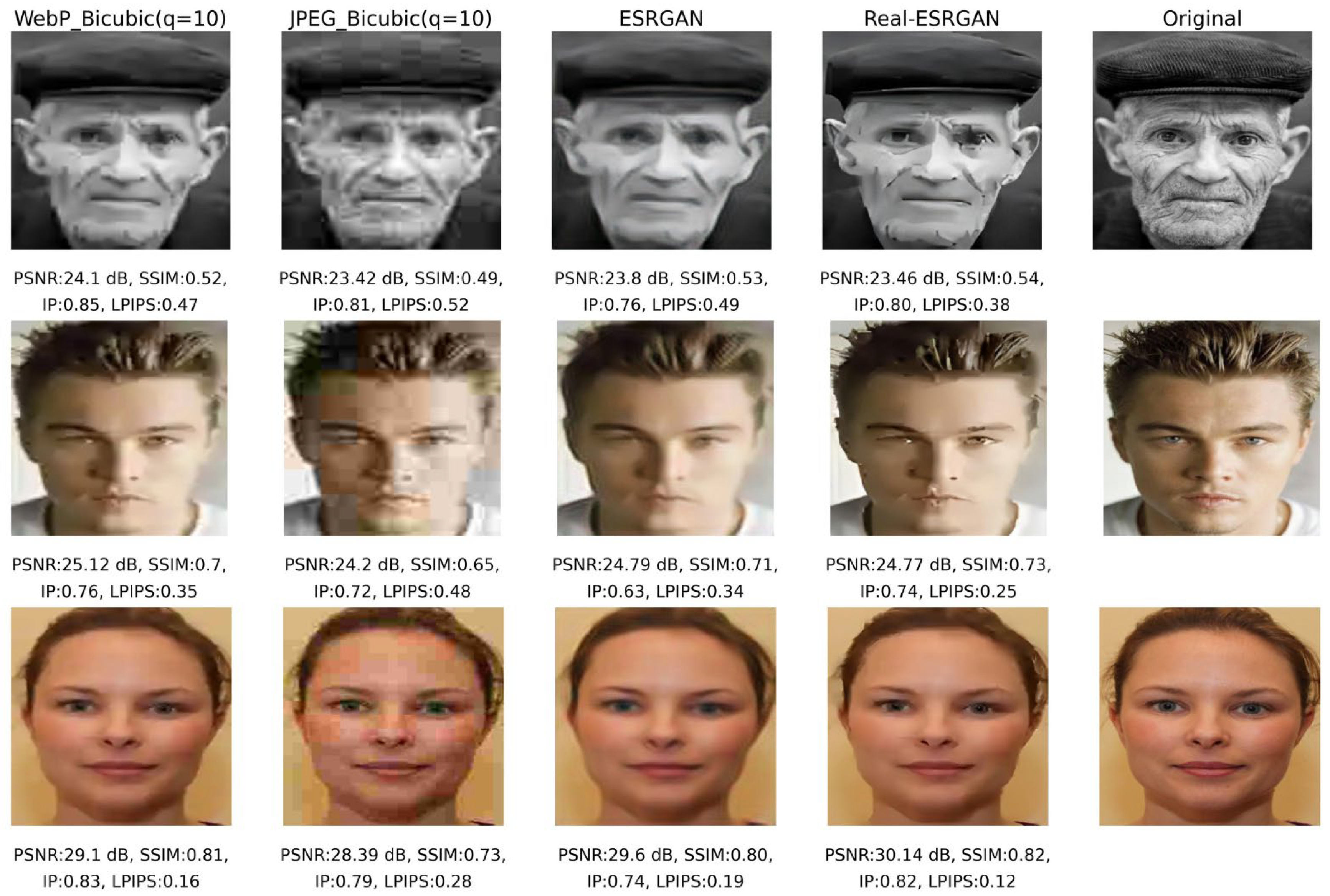

Figure 9 shows the results of the ESRGAN and Real-ESRGAN models applied to low-resolution images compressed with JPEG.

Figure 10 shows the results of the ESRGAN and Real-ESRGAN models applied to sample images compressed with WebP. The generated images are evaluated against the original images using PSNR and SSIM.

To benchmark our trained ESRGAN model, SR images were also produced using the pretrained Real-ESRGAN model [40].

Figure 9 shows the SR outputs generated by the ESRGAN model and the pretrained Real-ESRGAN model. The first image in each row was downsampled (10×), compressed with JPEG at q = 10, q = 30, and q = 60, respectively, and transmitted to the receiver. ESRGAN and Real-ESRGAN were applied to these three images, and the outputs were compared with the original images in terms of PSNR and SSIM. Since LoRa transmission favors the smallest data size, consider the JPEG-compressed LR image at q = 10: the image generated by ESRGAN has a PSNR of 23.03 dB and an SSIM of 0.6543, while Real-ESRGAN achieves a PSNR of 23.12 dB and an SSIM of 0.6579.

The SR images generated by ESRGAN and Real-ESRGAN from the WebP-compressed LR images, shown in Figure 10, were also compared in terms of PSNR and SSIM. The values for the two models are very close. For the image compressed with WebP at q = 10, the image generated by ESRGAN has a PSNR of 23.47 dB and an SSIM of 0.6650, while Real-ESRGAN achieves a PSNR of 23.35 dB and an SSIM of 0.6676. These results show that the ESRGAN network trained under limited hardware conditions can achieve results similar to, and in some cases better than, Real-ESRGAN.

Although Real-ESRGAN's results are generally slightly higher than ESRGAN's, the PSNR and SSIM values of the two models are close. In terms of human perception, the images produced by Real-ESRGAN appear more artificial, whereas ESRGAN produces more realistic images. However, limitations of the computing infrastructure used to train ESRGAN (graphics card, CPU, and RAM) led to insufficient training time and disconnections during long epochs, so the number of epochs was kept small. Likewise, because of crashes during the lengthy training process, the dataset was kept small (1000 images). Even so, the face-focused ESRGAN model trained in our study yielded results competitive with Real-ESRGAN. In particular, as seen in Figure 10, for the WebP-compressed image at quality factor 10, the PSNR of the image generated by ESRGAN is higher than that of the image generated by Real-ESRGAN.

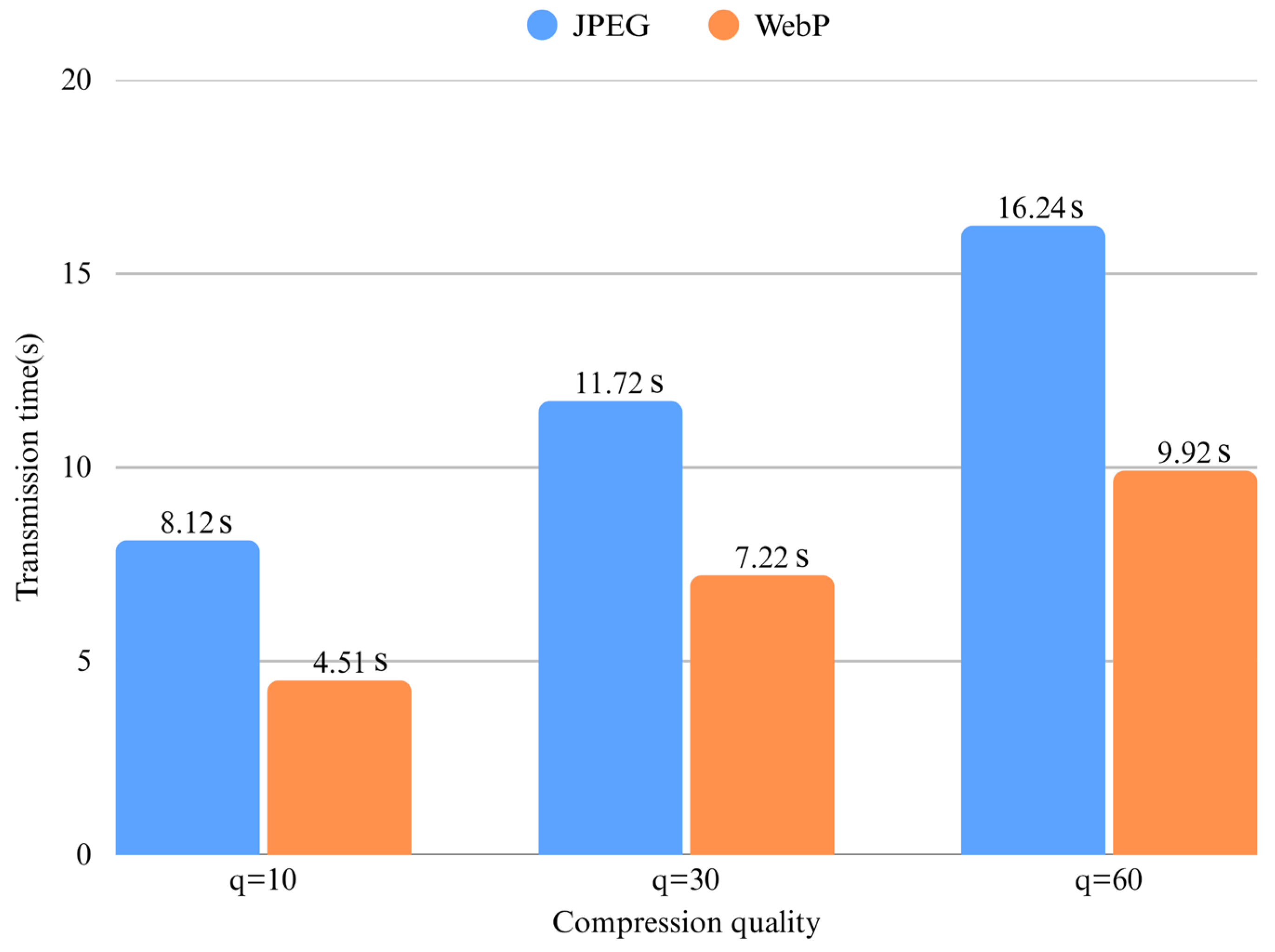

Figure 11 plots the LoRa transmission times measured for images compressed in JPEG and WebP formats. The graph shows that transmission times for WebP-compressed images are shorter than for JPEG-compressed images.

Figure 12 shows how the PSNR changed over the 20 epochs of ESRGAN training. The PSNR increases linearly with each epoch, indicating that the ESRGAN model improved steadily throughout training.

According to the experimental results, WebP provides a better compression ratio and lower distortion than JPEG. Accordingly, LoRa transmission times for WebP-compressed images are shorter than for JPEG-compressed images, and the SR results obtained from WebP-compressed images are judged superior to those obtained from JPEG-compressed images. Because it is advantageous on all of these counts, WebP is considered the most suitable compression method for image transmission over LoRa in this research.

In Figure 13, image quality is evaluated using the Identity Preservation (IP) and Learned Perceptual Image Patch Similarity (LPIPS) metrics, in addition to PSNR and SSIM, which measure pixel-level and structural similarity. LPIPS measures the perceptual closeness of the generated images to the originals and provides a quality assessment closer to human perception; in this study, an AlexNet-based feature extractor is used to compute it. Unlike the other metrics, lower LPIPS values indicate better quality. IP measures how well a reconstructed face image preserves the identity of the original person: face embeddings are extracted with the ArcFace-based InsightFace model and compared using cosine similarity.
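The IP score reduces to a cosine similarity between two embedding vectors. The sketch below assumes the embeddings have already been extracted (the InsightFace extraction step is not shown), and random vectors stand in for real face embeddings; the function name is hypothetical.

```python
import numpy as np


def identity_preservation(emb_original: np.ndarray, emb_reconstructed: np.ndarray) -> float:
    """Cosine similarity between two face embeddings; 1.0 means the same direction."""
    a = np.asarray(emb_original, dtype=np.float64).ravel()
    b = np.asarray(emb_reconstructed, dtype=np.float64).ravel()
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    original = rng.normal(size=512)                         # stand-in embedding
    reconstructed = original + 0.3 * rng.normal(size=512)  # perturbed copy
    print(round(identity_preservation(original, reconstructed), 3))
```

Because cosine similarity ignores vector length, the score depends only on the direction of the embeddings, which is how ArcFace-style embeddings are normally compared.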

Figure 13 shows the evaluation performed on three face images selected from the Human Dataset [29], which is different from the dataset used for GAN training. PSNR, SSIM, IP, and LPIPS were calculated for WebP compression, JPEG compression, the face-oriented ESRGAN, and Real-ESRGAN. The three face images were compressed with WebP at quality level 10, since WebP provided the more efficient compression in the earlier experiments, and each image was then reconstructed using both the face-focused ESRGAN and Real-ESRGAN models.

Assessing the metric results in Figure 13, the WebP results are consistent with WebP's high compression and low distortion: it performs well in terms of PSNR and identity preservation.

JPEG compression introduces high image distortion. Compared with the SR-based methods, it achieves moderate PSNR, SSIM, and identity preservation, and it performs worst on LPIPS, the metric closest to human perception.

Real-ESRGAN achieves the highest value in SSIM and the lowest in LPIPS, demonstrating its superiority in both structural and perceptual quality.

The ESRGAN model, despite being trained under limited hardware (CPU) conditions, performed better than classical compression methods in terms of both structural similarity (SSIM) and perceptual quality (LPIPS), achieving results comparable to Real-ESRGAN.

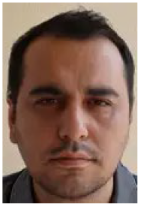

Table 5 shows the data sizes, packet counts, and LoRa transmission times for the images in Figure 13, both in their original form and after 4× downsampling followed by WebP compression. Without any processing, the 18,343 bytes of the first image take approximately one and a half minutes (94.99 s) to transmit. This airtime exceeds the permitted duty cycle and is a long time for a single data transfer. When the image is reduced to 853 bytes with WebP compression, the transmission time drops to 4.81 s, an improvement of approximately 95.19%. The second and third images saw reductions in transmission time of 98.77% and 99.08%, respectively.
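Why fewer bytes translate almost proportionally into less airtime can be seen from the standard Semtech SX127x time-on-air formula, applied per packet. The sketch below assumes SF7, 125 kHz bandwidth, coding rate 4/5, an 8-symbol preamble, CRC on, explicit header, and 200-byte chunks. These are not the study's settings, and the sketch ignores inter-packet gaps, acknowledgments, and duty-cycle waits, so its absolute times will not match Table 5. The function names are hypothetical.

```python
def lora_time_on_air(payload_len: int, sf: int = 7, bw_hz: float = 125_000.0,
                     cr: int = 1, preamble_len: int = 8, crc: bool = True,
                     implicit_header: bool = False, low_dr_opt: bool = False) -> float:
    """Airtime in seconds of one LoRa packet (Semtech SX127x time-on-air formula).

    cr is 1..4 for coding rates 4/5..4/8.
    """
    t_sym = (2 ** sf) / bw_hz
    t_preamble = (preamble_len + 4.25) * t_sym
    num = 8 * payload_len - 4 * sf + 28 + 16 * int(crc) - 20 * int(implicit_header)
    den = 4 * (sf - 2 * int(low_dr_opt))
    ceil_div = -(-num // den)  # integer ceiling of num / den
    n_payload_sym = 8 + max(ceil_div * (cr + 4), 0)
    return t_preamble + n_payload_sym * t_sym


def image_airtime(image_bytes: int, max_payload: int = 200, **radio) -> tuple[int, float]:
    """Split an image into packets of at most max_payload bytes.

    Returns (packet count, summed airtime in s); gaps, ACKs and duty-cycle waits are ignored.
    """
    full, rest = divmod(image_bytes, max_payload)
    total = full * lora_time_on_air(max_payload, **radio)
    if rest:
        total += lora_time_on_air(rest, **radio)
    return full + (1 if rest else 0), total


def reduction_pct(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return (1.0 - after / before) * 100.0


for size in (18_343, 853):  # byte counts quoted for the first image in Table 5
    packets, seconds = image_airtime(size)
    print(f"{size} B -> {packets} packets, {seconds:.2f} s on air (assumed radio settings)")
```

Under these assumed settings, each 200-byte packet takes about 0.318 s on air. The packet count, rather than any per-packet overhead, therefore dominates the total.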

For an overall evaluation of the study, a small-scale performance evaluation was conducted using 15 different face images from the Human Dataset [29], including the three images in Figure 13. The images obtained using ESRGAN, Real-ESRGAN, JPEG compression, and WebP compression were evaluated using the PSNR, SSIM, LPIPS, and IP metrics.

In addition, a Mean Opinion Score (MOS) evaluation, which measures human observers' perception of quality, was conducted using Google Forms. For each of the 15 images, the outputs of ESRGAN, Real-ESRGAN, JPEG compression, and WebP compression were presented alongside the original image, and participants were asked to rate each output against the original. The MOS for each technique was computed from the ratings of 63 participants. The same 15 images were used for the other metrics. The averages of all evaluation results are presented in Table 6.
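The MOS aggregation described above amounts to averaging every rating a method received across participants and images. The sketch assumes a 1 to 5 rating scale, which the text does not state. The function name `mos_per_method` is hypothetical, and the toy ratings are placeholders rather than the study's data.

```python
from statistics import mean


def mos_per_method(ratings: dict[str, list[list[int]]],
                   low: int = 1, high: int = 5) -> dict[str, float]:
    """Mean Opinion Score per method.

    ratings[method][participant][image] holds one score on an assumed low..high scale;
    the MOS is the mean over all participants and images.
    """
    result = {}
    for method, per_participant in ratings.items():
        scores = [s for row in per_participant for s in row]
        if not scores:
            raise ValueError(f"no ratings for {method}")
        if any(not low <= s <= high for s in scores):
            raise ValueError(f"score outside {low}..{high} for {method}")
        result[method] = float(mean(scores))
    return result


# Toy data: 2 participants x 3 images (placeholder values, not the study's ratings).
toy = {
    "WebP": [[3, 4, 3], [3, 3, 4]],
    "JPEG": [[2, 3, 3], [3, 2, 3]],
}
print(mos_per_method(toy))
```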

Higher PSNR, SSIM, IP, and MOS values indicate better preservation of the structural integrity, identity information, and perceptual quality of the images. Conversely, lower LPIPS values indicate that the generated images are perceptually closer to the original images. Therefore, an ideal model should have high PSNR, SSIM, IP, and MOS values and a low LPIPS score.

According to the results, Real-ESRGAN achieved the highest average PSNR (25.29 dB), followed by WebP (25.1 dB); since WebP introduces little distortion, this result is expected. ESRGAN (24.84 dB) produced a competitive result despite being trained under CPU constraints. JPEG shows the most noise and information loss, with the lowest average PSNR (24.37 dB).
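These PSNR figures can be read using the standard definition, PSNR = 10·log10(MAX²/MSE). The sketch assumes 8-bit images (MAX = 255) with the MSE averaged over all pixels and channels. Whether the study computed PSNR on RGB or luminance is not stated here, so that detail is an assumption.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, max_val: float = 255.0) -> float:
    """PSNR in dB: 10 * log10(MAX^2 / MSE), with MSE over all pixels and channels."""
    if reference.shape != test.shape:
        raise ValueError("images must have the same shape")
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


# For a single image pair, a PSNR gap of d dB means the MSE differs by a factor of 10**(d/10).
gap_db = 25.29 - 24.37  # Real-ESRGAN vs. JPEG averages quoted above
print(f"MSE ratio for a {gap_db:.2f} dB gap: {10 ** (gap_db / 10):.3f}")
```

Because PSNR is logarithmic in MSE, the roughly 0.9 dB spread between the best and worst methods corresponds to about 1.24× the mean squared error for an individual image pair. Averaged PSNR values over 15 images only approximate this relationship.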

Real-ESRGAN achieved the highest average SSIM (0.66), with the ESRGAN model close behind (0.63). This suggests that the SR-based methods preserve structural similarity better than traditional compression methods.

The LPIPS value measures perceptual quality, with lower values indicating higher visual similarity. Real-ESRGAN (0.24) provided the highest perceptual quality with the lowest average LPIPS value. WebP (0.32) came in second, while ESRGAN (0.36) performed competitively despite being trained under CPU constraints.

When the IP metric is evaluated, all methods provide moderate identity preservation. The WebP method achieved the highest identity preservation value and largely preserved identity integrity after compression, whereas the SR-based methods need further improvement in identity preservation compared with traditional methods.

In the subjective evaluation, Real-ESRGAN has the highest average MOS (3.85). ESRGAN ranked second with a score of 3.46 and produced satisfactory visual quality according to human perception despite CPU limitations. Among the traditional methods, WebP (3.26), which causes less distortion, scored higher than JPEG (2.78).

Latency and Embedded System Feasibility

A practical challenge for this system is the total latency on the receiver node, which is a constrained embedded device (Raspberry Pi 4). We decompose the total latency into two components: (1) Transmission Latency and (2) Inference Latency.

The transmission latency for the WebP (q = 10) image was 4.51 s. We then measured the Inference Latency required for our trained ESRGAN model to perform SR on the received image. On the Raspberry Pi 4, this process took an average of 10 s.

Therefore, the total end-to-end latency (Transmission + Inference) is approximately 14.51 s. While this is unsuitable for real-time video, it is feasible for the target non-real-time applications, such as remote security alerts and identity verification checks.
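The latency budget above can be measured with a small timing harness. It runs a few untimed warm-up calls, averages wall-clock time over several inference runs, and adds the radio transmission time. The harness and its names (`measure_latency`, `end_to_end`, `run_sr_placeholder`) are hypothetical. The placeholder only sleeps, standing in for the actual ESRGAN forward pass on the Raspberry Pi 4.

```python
import time
from statistics import mean
from typing import Callable


def measure_latency(infer: Callable[[], object], runs: int = 5, warmup: int = 1) -> float:
    """Average wall-clock seconds per call of `infer`, after `warmup` untimed calls."""
    for _ in range(warmup):
        infer()
    samples = []
    for _ in range(runs):
        t0 = time.perf_counter()
        infer()
        samples.append(time.perf_counter() - t0)
    return mean(samples)


def end_to_end(transmission_s: float, inference_s: float) -> float:
    """Total latency = transmission latency + inference latency."""
    return transmission_s + inference_s


def run_sr_placeholder() -> None:
    # Hypothetical stand-in for running the trained SR model on the received image.
    time.sleep(0.05)


inference_s = measure_latency(run_sr_placeholder, runs=3)
print(f"inference ~{inference_s:.2f} s, total ~{end_to_end(4.51, inference_s):.2f} s")
```

The warm-up call keeps one-off costs such as model loading or memory allocation out of the average, which would otherwise inflate the measured inference latency.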