Mulch-YOLO: Improved YOLOv11 for Real-Time Detection of Mulch in Seed Cotton

Abstract

1. Introduction

2. Materials and Methods

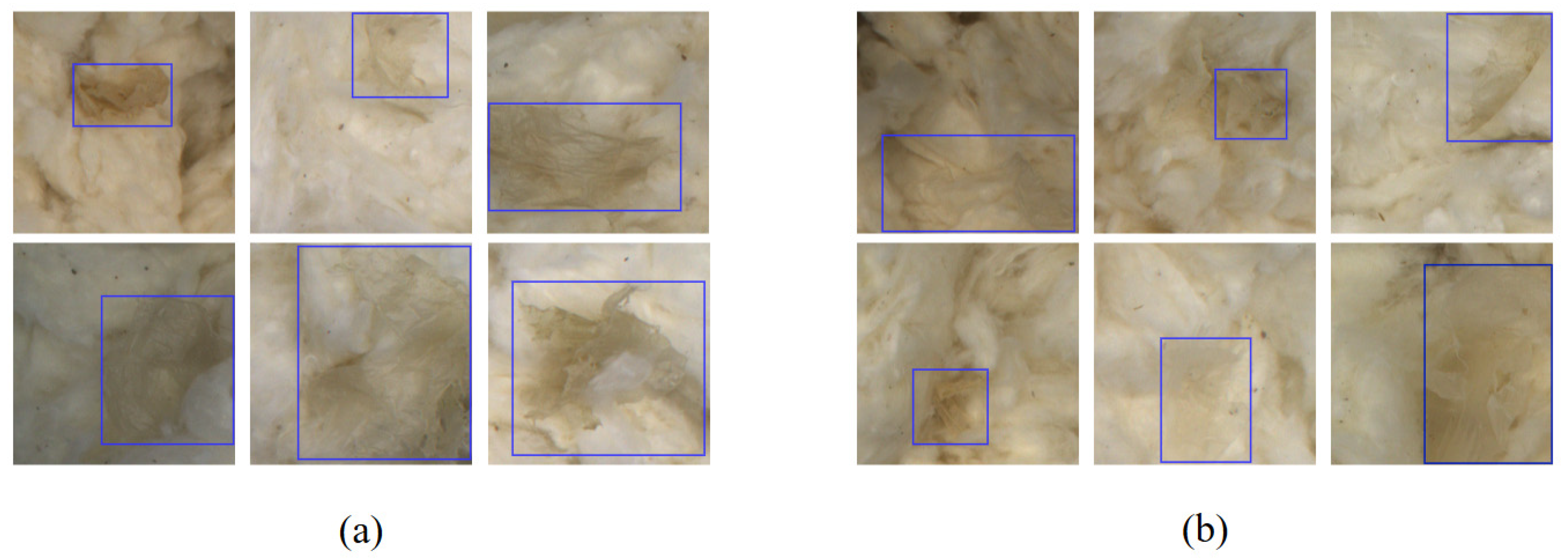

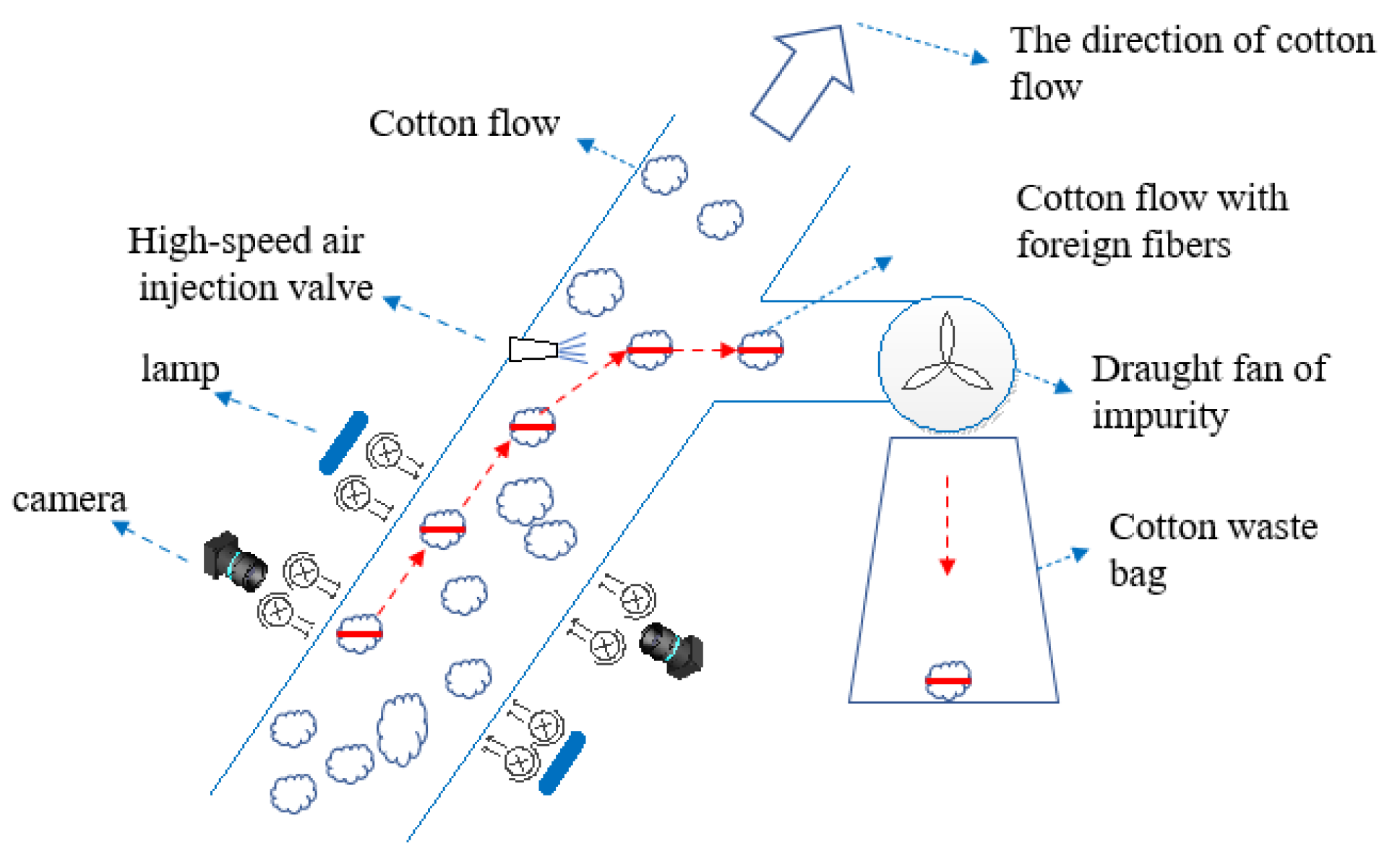

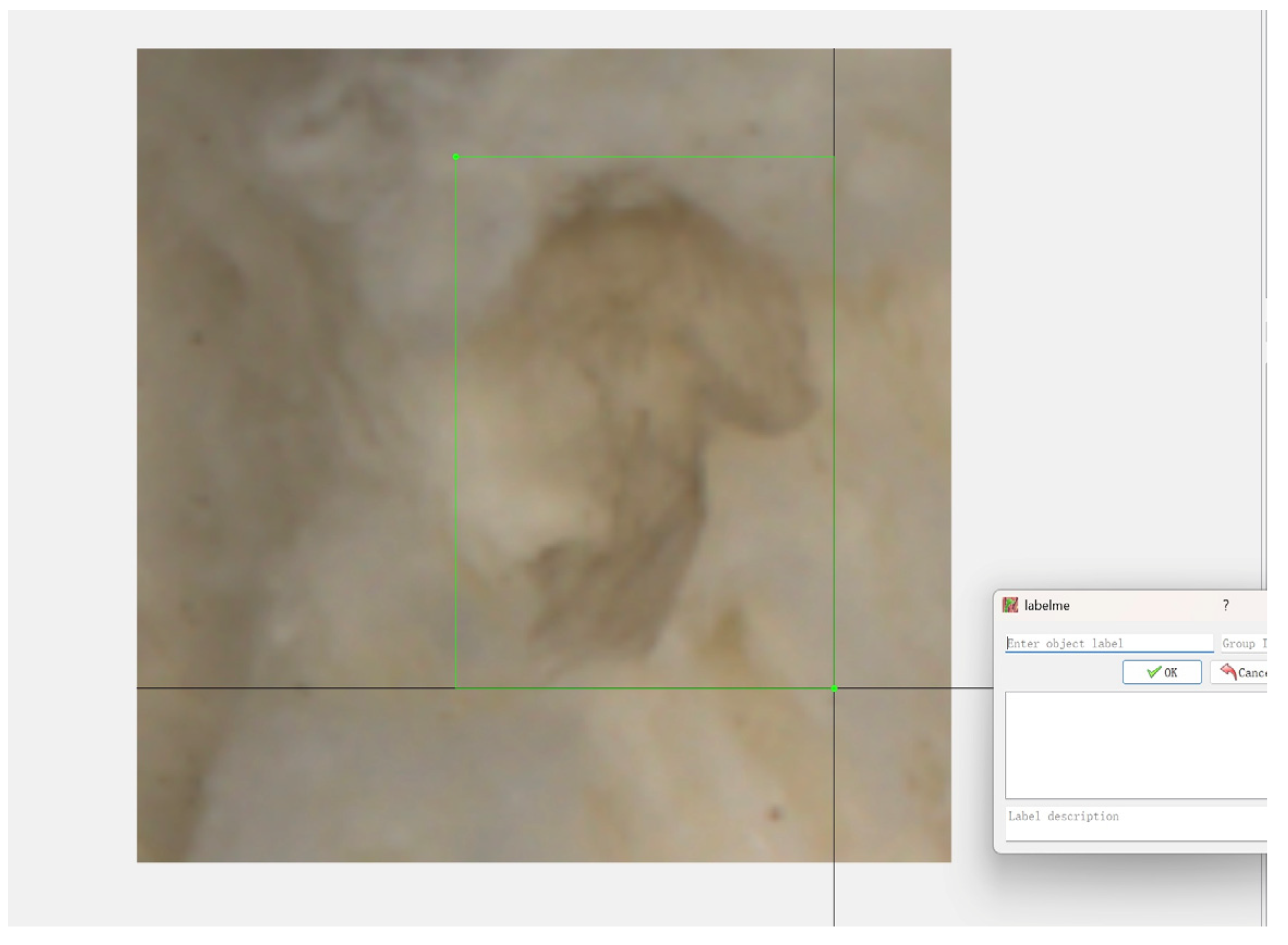

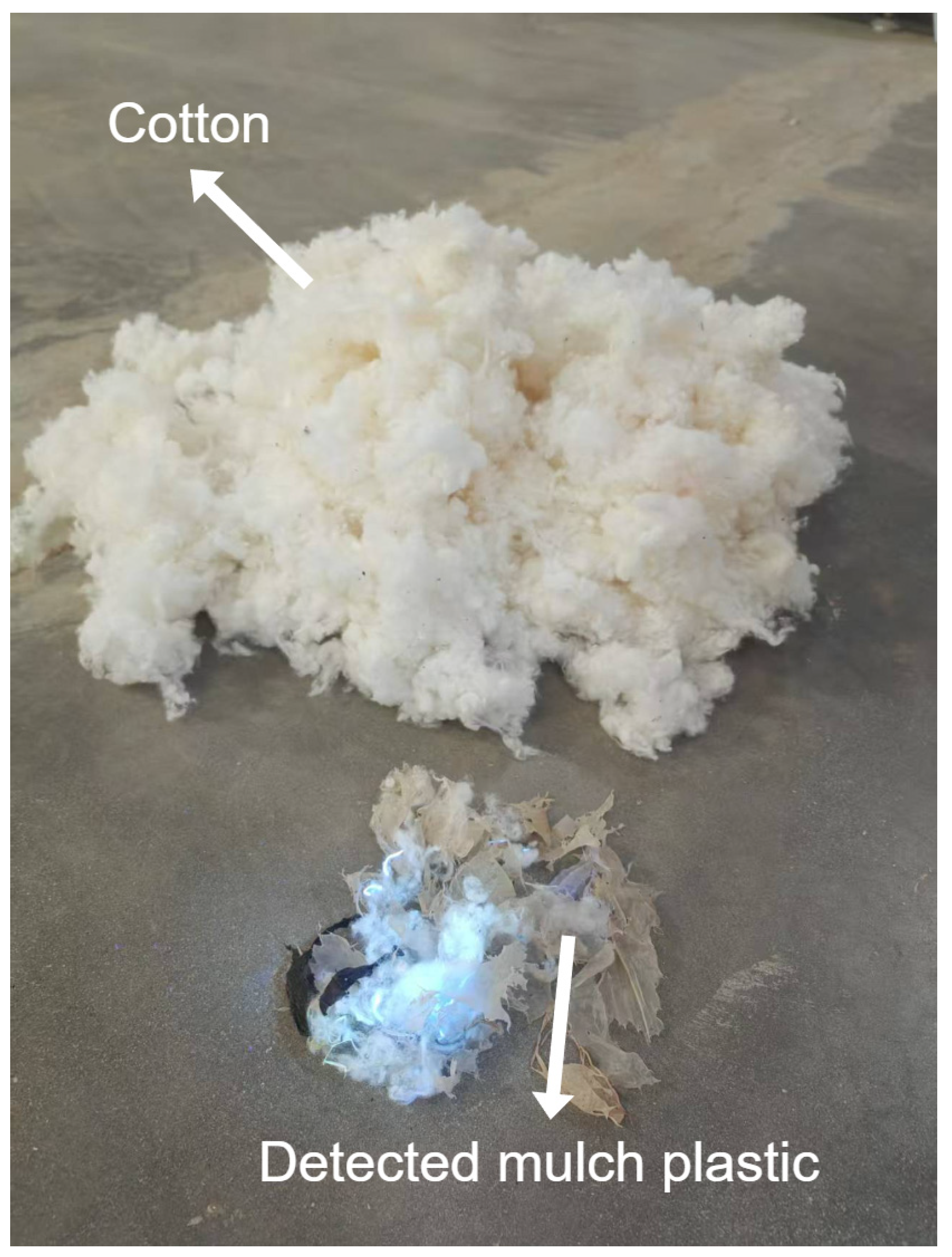

2.1. Materials

2.2. Methods

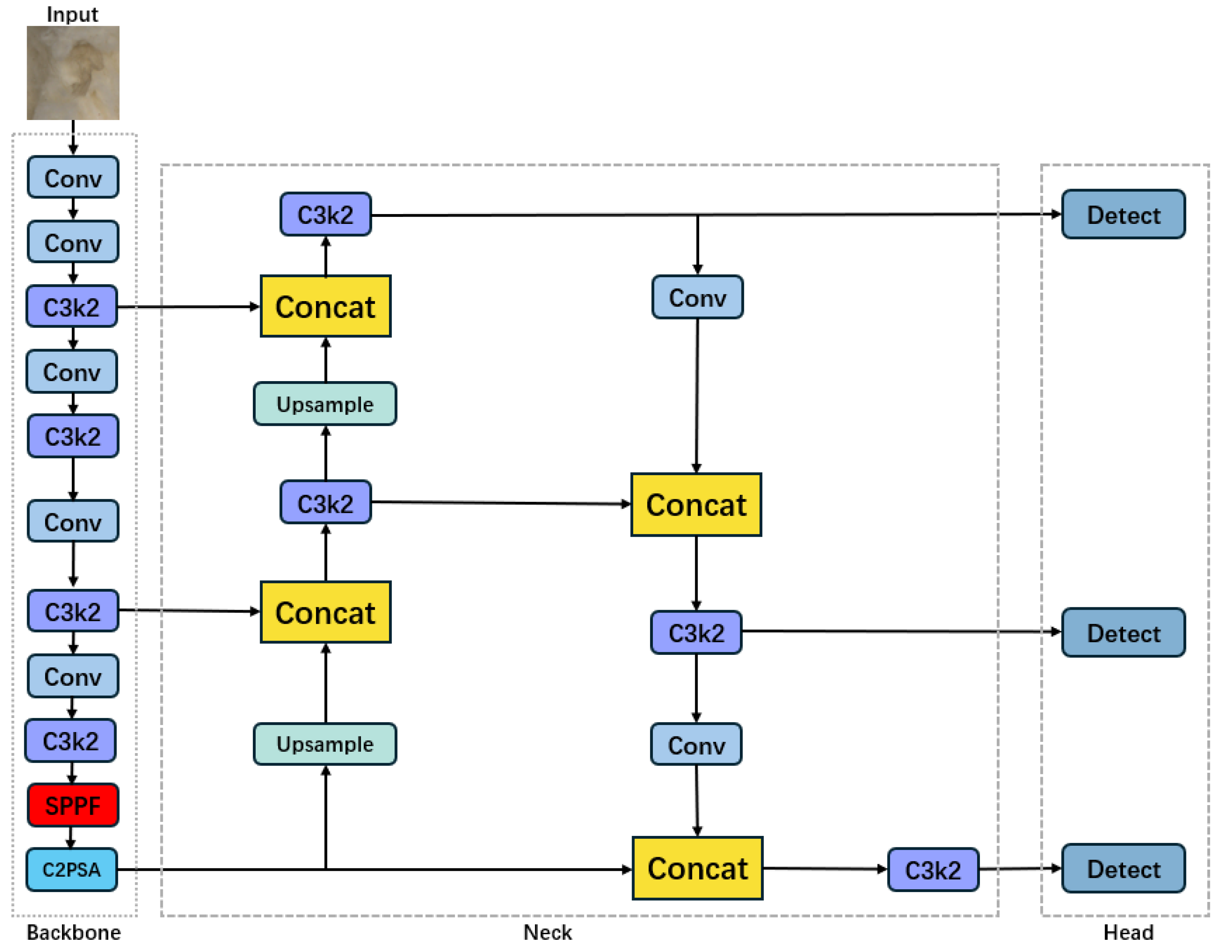

2.2.1. YOLOv11

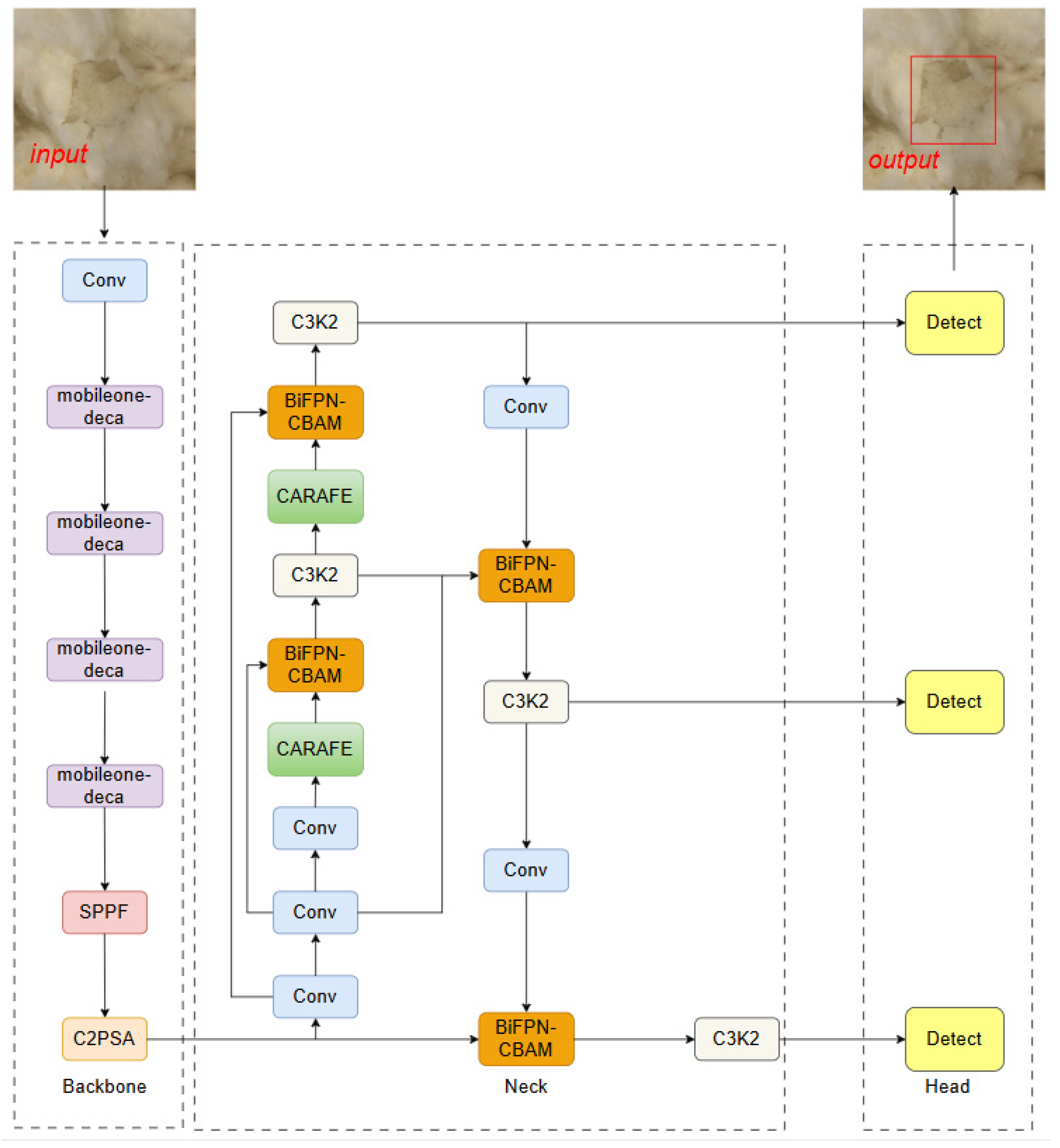

2.2.2. Improved YOLOv11

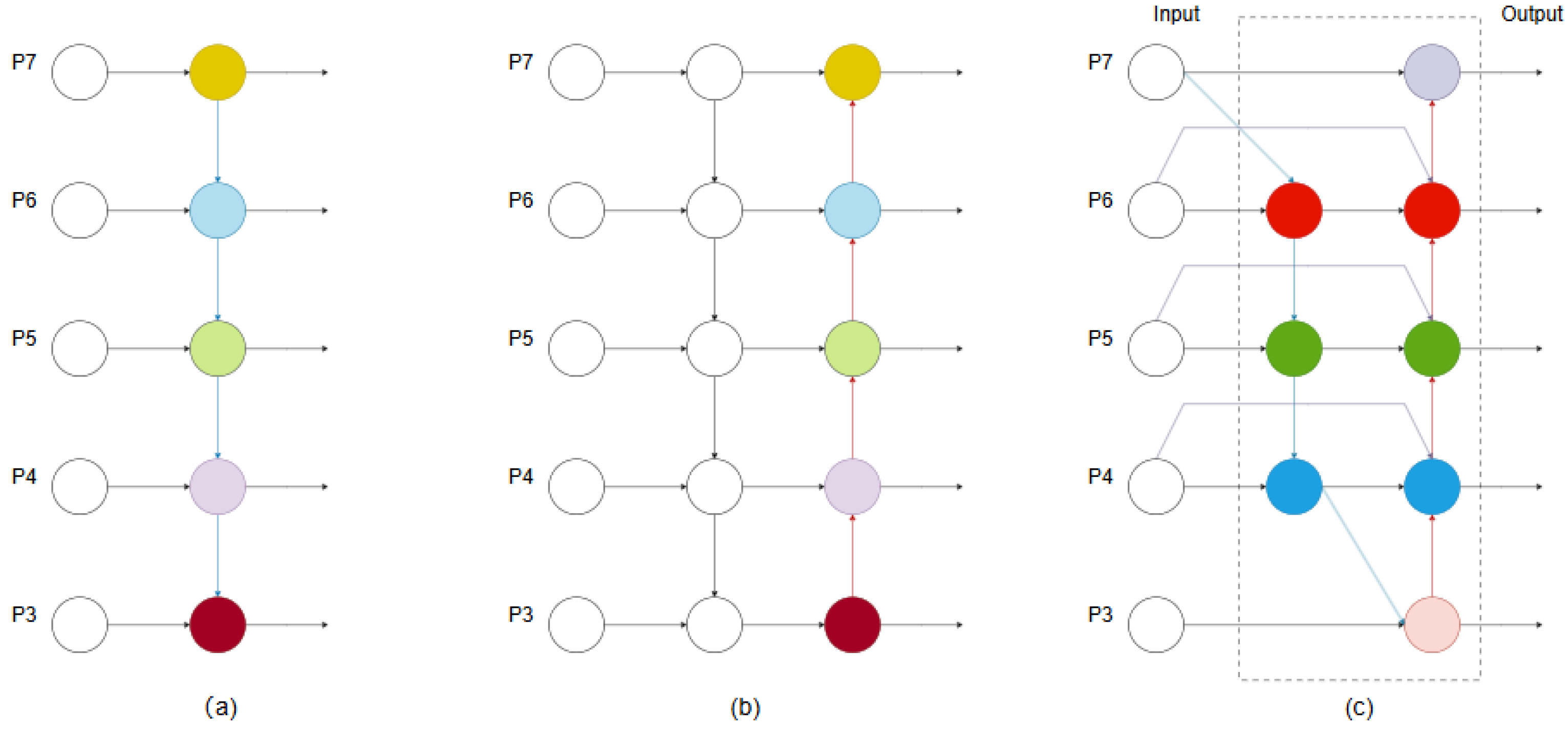

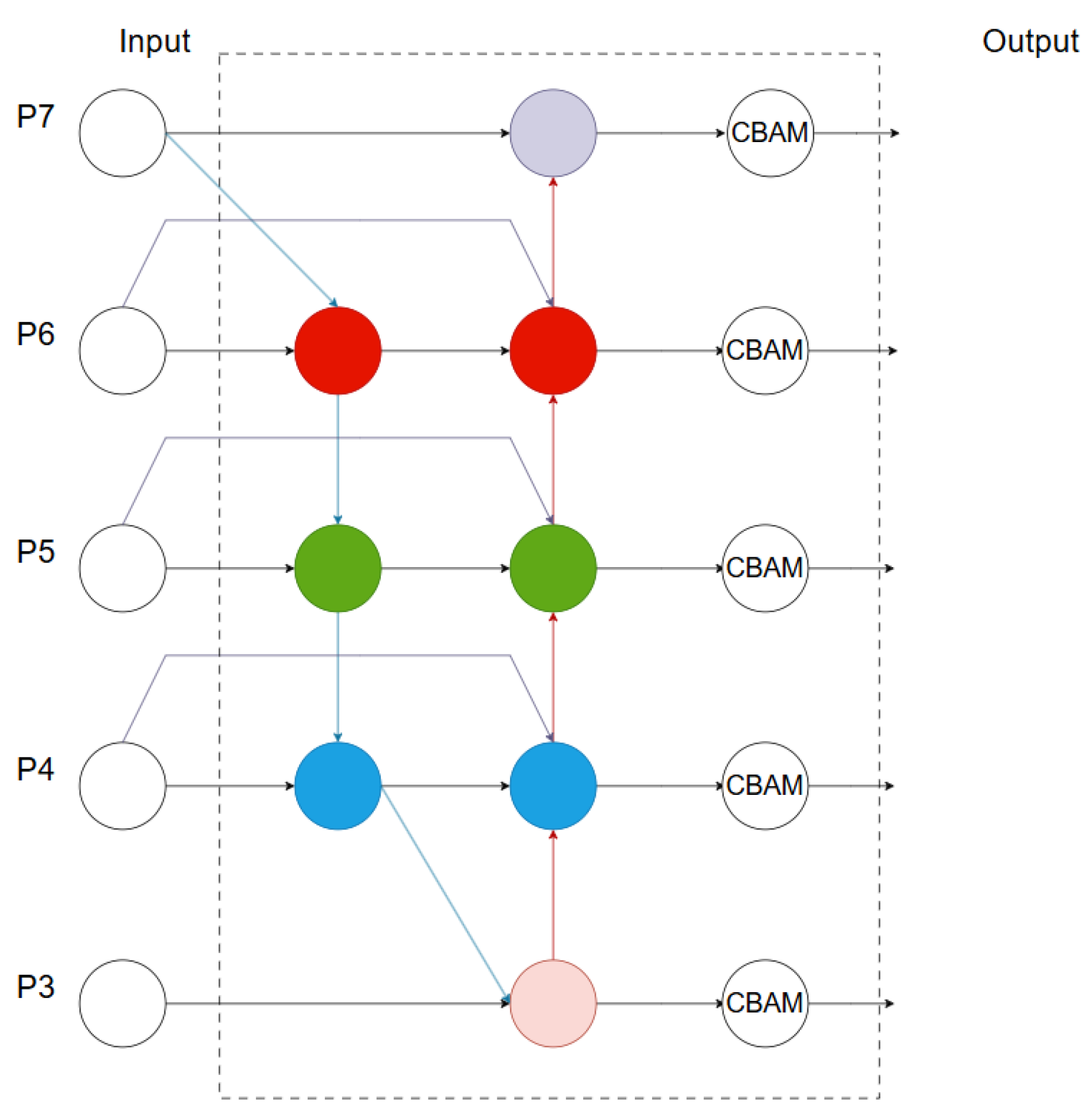

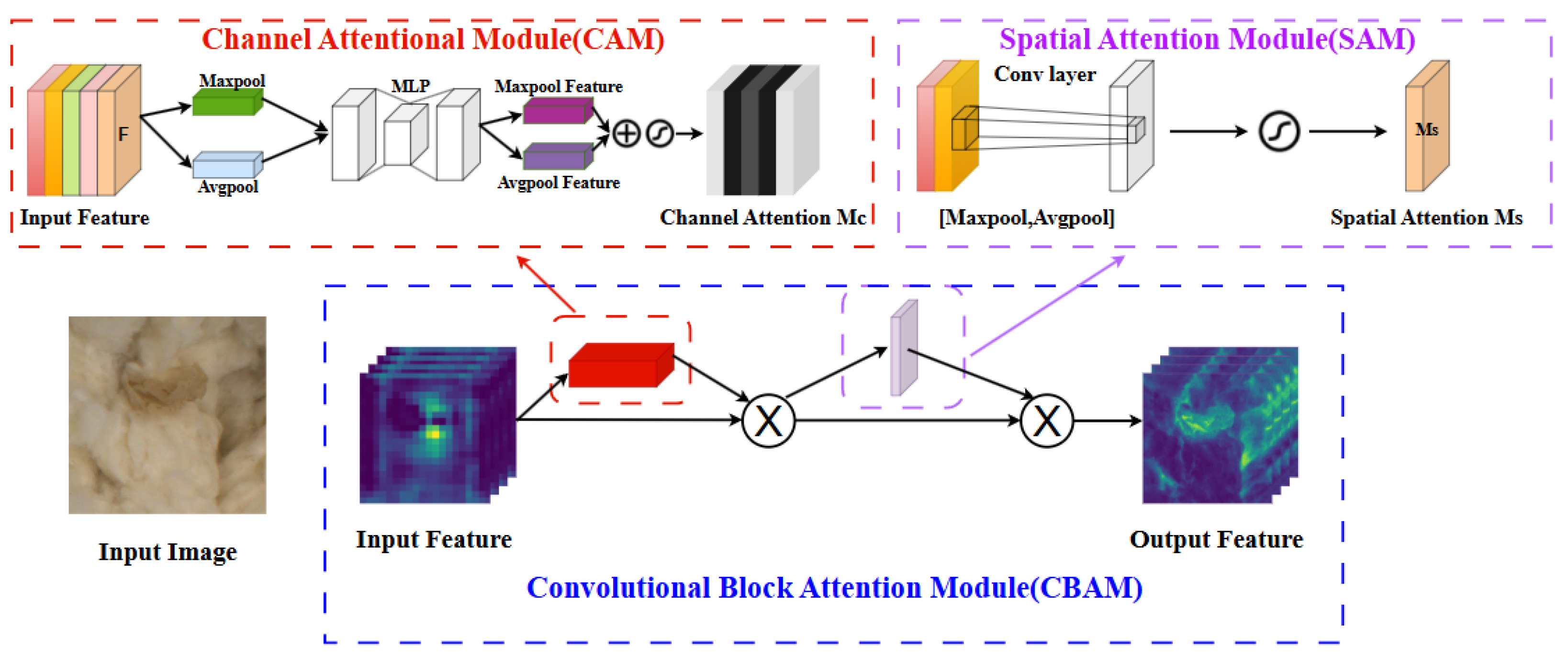

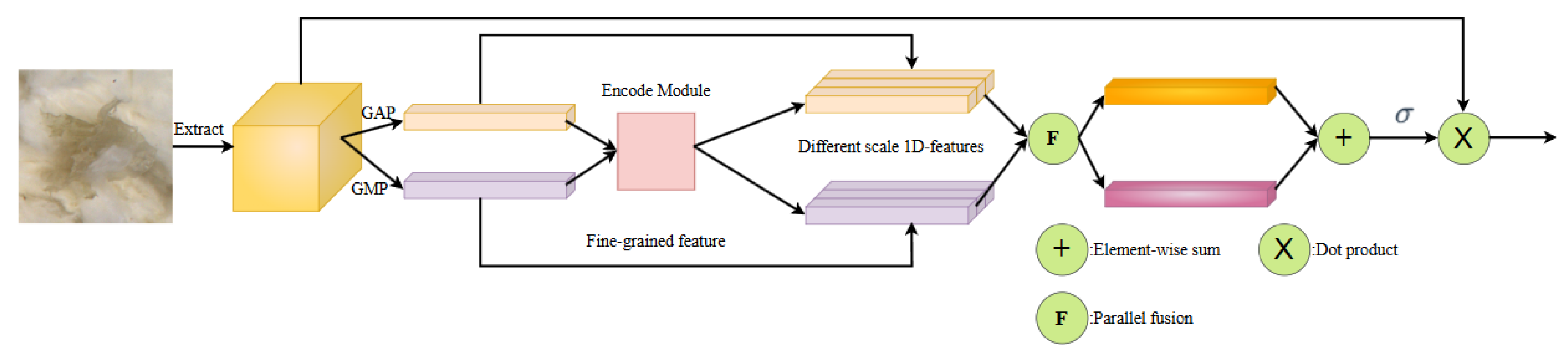

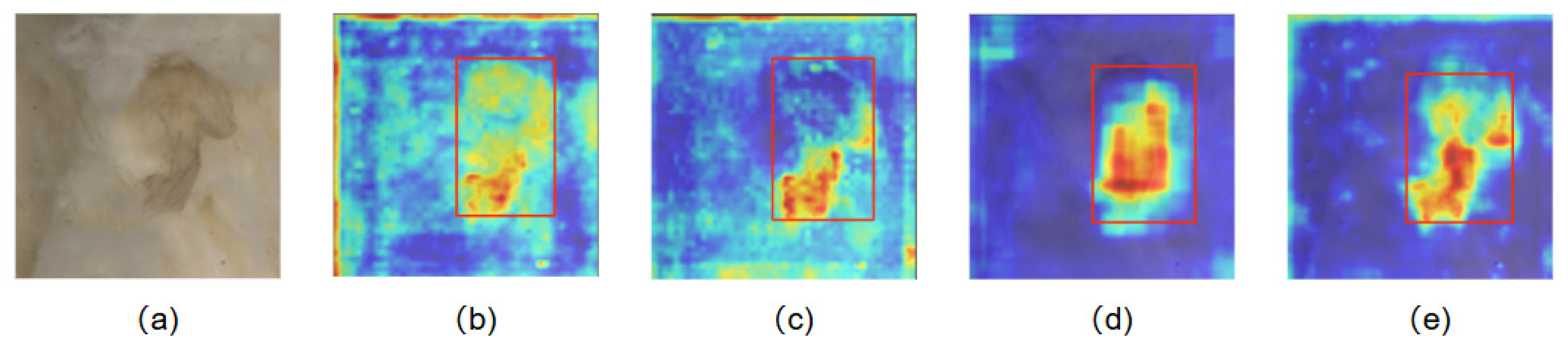

2.2.3. BiFPN-CBAM
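BiFPN-CBAM names two published building blocks. The first is BiFPN's fast normalized fusion from EfficientDet, which blends same-resolution feature maps with learnable non-negative weights. The second is CBAM, which refines a feature map with channel attention followed by spatial attention. This text does not describe how Mulch-YOLO wires them together, so the NumPy sketch below assumes, for illustration only, that CBAM is applied to the output of a single fusion node. The feature names (`p3_in`, `p3_td`), sizes, reduction ratio, and random weights are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def fast_normalized_fusion(features, weights, eps=1e-4):
    """BiFPN fast normalized fusion: sum_i w_i * I_i / (eps + sum_j w_j)."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU keeps each weight >= 0
    fused = sum(wi * f for wi, f in zip(w, features))
    return fused / (eps + w.sum())

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(x, w1, w2):
    """CBAM channel attention for x of shape (C, H, W); w1: (C//r, C), w2: (C, C//r)."""
    def shared_mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # C -> C//r -> C with a ReLU in between
    avg_desc = x.mean(axis=(1, 2))  # global average pooling, shape (C,)
    max_desc = x.max(axis=(1, 2))   # global max pooling, shape (C,)
    return sigmoid(shared_mlp(avg_desc) + shared_mlp(max_desc))[:, None, None]

def spatial_attention(x, kernel):
    """CBAM spatial attention: a k x k conv over stacked channel-mean and channel-max maps."""
    maps = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    p = k // 2
    padded = np.pad(maps, ((0, 0), (p, p), (p, p)))  # zero padding keeps H x W
    H, W = maps.shape[1:]
    logits = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            logits[i, j] = np.sum(kernel * padded[:, i:i + k, j:j + k])
    return sigmoid(logits)[None, :, :]  # (1, H, W)

def cbam(x, w1, w2, kernel):
    x = x * channel_attention(x, w1, w2)     # reweight channels first
    return x * spatial_attention(x, kernel)  # then reweight spatial positions

rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4  # hypothetical channel count, feature size, and reduction ratio
p3_in, p3_td = rng.standard_normal((2, C, H, W))  # two same-shape inputs to one fusion node
fused = fast_normalized_fusion([p3_in, p3_td], weights=[1.0, 0.5])
out = cbam(fused,
           w1=0.1 * rng.standard_normal((C // r, C)),
           w2=0.1 * rng.standard_normal((C, C // r)),
           kernel=0.1 * rng.standard_normal((2, 7, 7)))
print(out.shape)  # (16, 8, 8)
```

Because both attention maps lie in (0, 1), CBAM can only rescale the fused features downward, position by position and channel by channel; the learned fusion weights decide how much each input scale contributes before that.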

2.2.4. CARAFE-Mulch

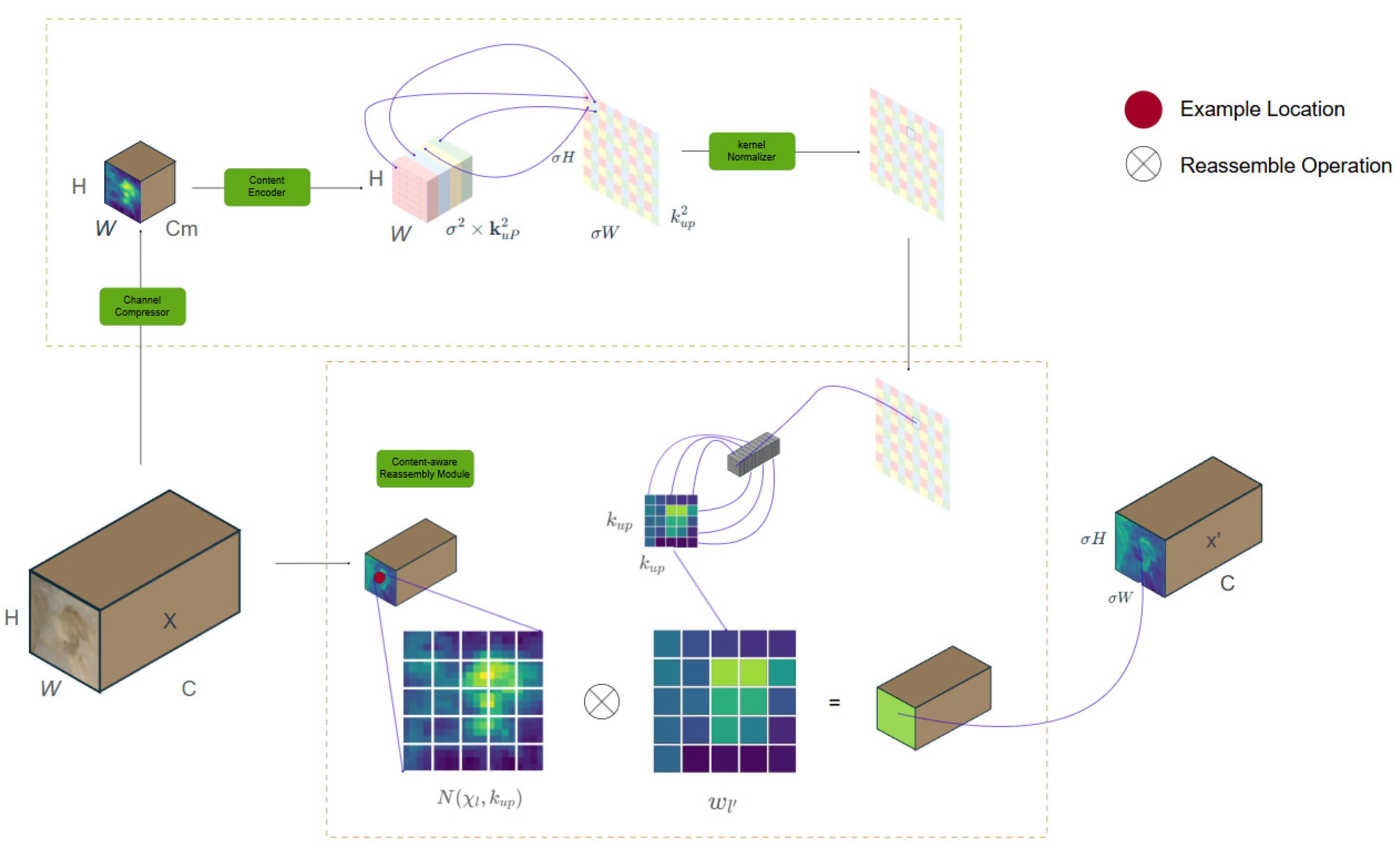

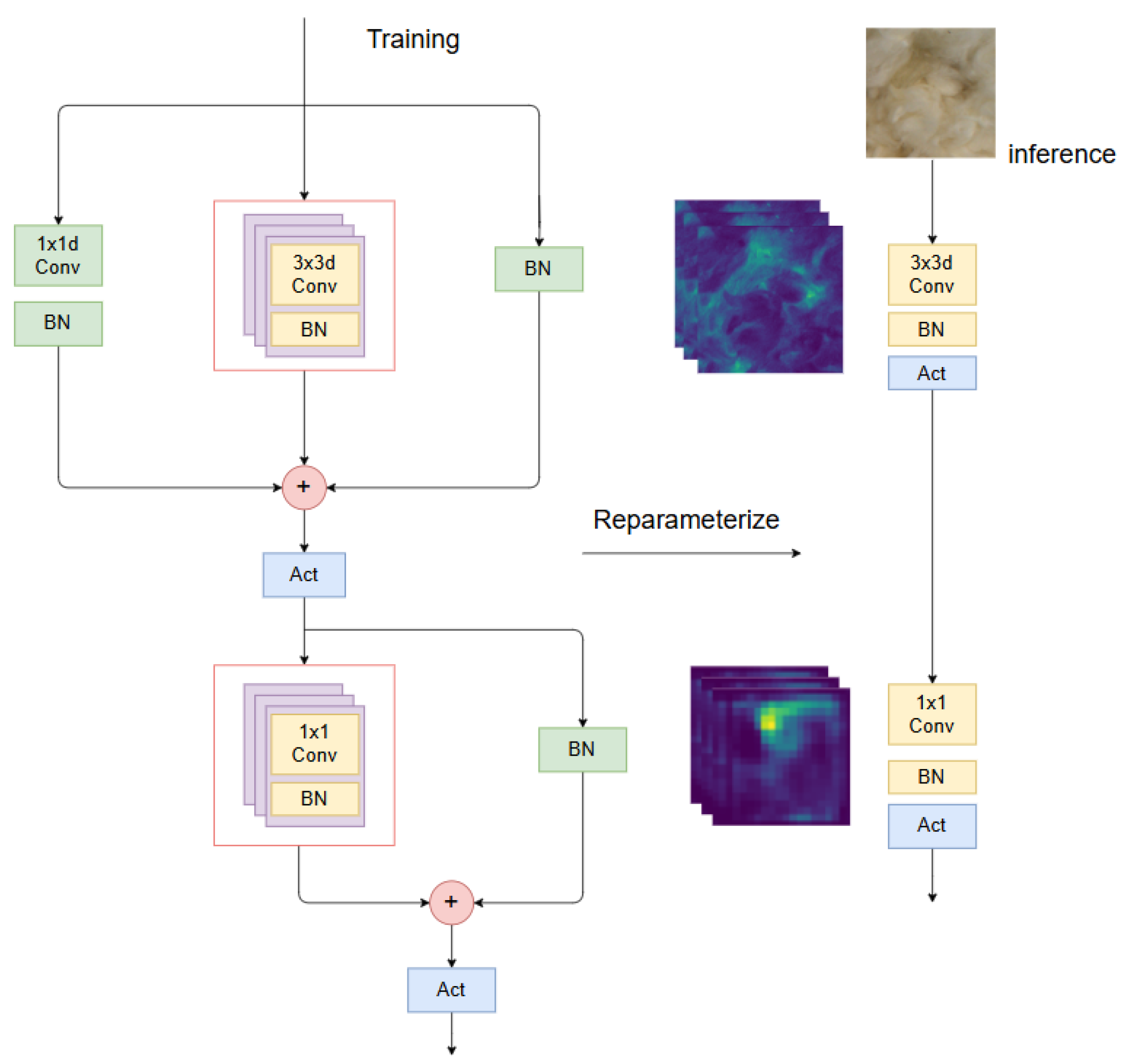
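CARAFE (Content-Aware ReAssembly of FEatures) replaces fixed nearest-neighbour or bilinear upsampling with kernels predicted from the feature map itself. For every output position it predicts a k_up × k_up kernel, normalizes it with a softmax, and uses it to reassemble the neighbourhood around the corresponding source pixel. The "-Mulch" modifications are not described in this text, so the NumPy sketch below covers only the standard CARAFE steps, and it simplifies the k_enc × k_enc content encoder to a 1 × 1 projection. All sizes and weights are hypothetical.

```python
import numpy as np

def softmax(x, axis):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def carafe(x, w_compress, w_encode, scale=2, k_up=5):
    """Upsample x of shape (C, H, W) to (C, scale*H, scale*W) with content-aware kernels."""
    C, H, W = x.shape
    # 1) Channel compressor (a 1x1 conv): C -> C_m channels
    compressed = np.tensordot(w_compress, x, axes=([1], [0]))  # (C_m, H, W)
    # 2) Content encoder, simplified to a 1x1 projection: scale*scale*k_up*k_up values
    #    per input location (the channel-to-position layout is a choice made for this sketch)
    kernels = np.tensordot(w_encode, compressed, axes=([1], [0]))
    kernels = kernels.reshape(scale, scale, k_up * k_up, H, W)
    # 3) Kernel normalizer: softmax over the k_up*k_up taps so each kernel sums to 1
    kernels = softmax(kernels, axis=2)
    # 4) Reassembly: each output pixel is a weighted sum of the k_up x k_up
    #    neighbourhood around its source location in the input
    r = k_up // 2
    padded = np.pad(x, ((0, 0), (r, r), (r, r)))
    out = np.zeros((C, scale * H, scale * W))
    for i in range(H):
        for j in range(W):
            patch = padded[:, i:i + k_up, j:j + k_up].reshape(C, -1)  # (C, k_up*k_up)
            for di in range(scale):
                for dj in range(scale):
                    out[:, i * scale + di, j * scale + dj] = patch @ kernels[di, dj, :, i, j]
    return out

rng = np.random.default_rng(0)
C, C_m, H, W, s, k = 8, 4, 6, 6, 2, 5  # hypothetical sizes
x = rng.standard_normal((C, H, W))
w_compress = rng.standard_normal((C_m, C))
w_encode = rng.standard_normal((s * s * k * k, C_m))
y = carafe(x, w_compress, w_encode, scale=s, k_up=k)
print(y.shape)  # (8, 12, 12)
```

Because every predicted kernel sums to 1, a constant input stays constant away from the zero-padded border, which is a quick sanity check for the reassembly step.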

2.2.5. MobileOne-DECA
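MobileOne's speed comes from structural re-parameterization: during training each block has several parallel branches (k × k conv + BN, 1 × 1 conv + BN, and a BN-only skip), and after training they are folded into a single convolution, which works because convolution and inference-mode BN are both linear. The NumPy sketch below checks that fold numerically for one 3 × 3 stage with one branch of each kind. Real MobileOne blocks split into depthwise and pointwise stages with several over-parameterized branches, and the DECA attention is not sketched because its internals are not described in this text; all names and sizes are illustrative.

```python
import numpy as np

def conv2d(x, w, b=None):
    """Stride-1 'same' convolution; x: (C_in, H, W), w: (C_out, C_in, k, k)."""
    c_out, _, k, _ = w.shape
    p = k // 2
    padded = np.pad(x, ((0, 0), (p, p), (p, p)))
    H, W = x.shape[1:]
    out = np.empty((c_out, H, W))
    for i in range(H):
        for j in range(W):
            out[:, i, j] = np.tensordot(w, padded[:, i:i + k, j:j + k], axes=3)
    return out if b is None else out + b[:, None, None]

def batch_norm(x, bn, eps=1e-5):
    """Inference-mode BN with per-channel (gamma, beta, running_mean, running_var)."""
    gamma, beta, mean, var = bn
    scale = gamma / np.sqrt(var + eps)
    return x * scale[:, None, None] + (beta - mean * scale)[:, None, None]

def fuse_conv_bn(w, bn, eps=1e-5):
    """Fold BN into a bias-free conv: W' = W * gamma/std, b' = beta - mean * gamma/std."""
    gamma, beta, mean, var = bn
    scale = gamma / np.sqrt(var + eps)
    return w * scale[:, None, None, None], beta - mean * scale

def random_bn(rng, c):
    return (rng.uniform(0.5, 1.5, c), rng.standard_normal(c),
            rng.standard_normal(c), rng.uniform(0.5, 1.5, c))

rng = np.random.default_rng(0)
C, H, W = 4, 6, 6  # hypothetical sizes; C_in == C_out so the identity branch exists
x = rng.standard_normal((C, H, W))
w3, w1 = rng.standard_normal((C, C, 3, 3)), rng.standard_normal((C, C, 1, 1))
bn3, bn1, bn_id = random_bn(rng, C), random_bn(rng, C), random_bn(rng, C)

# Train-time form: three parallel branches whose outputs are summed
y_train = (batch_norm(conv2d(x, w3), bn3)
           + batch_norm(conv2d(x, w1), bn1)
           + batch_norm(x, bn_id))

# Inference-time form: write every branch as a 3x3 kernel plus bias, then add them
k3, b3 = fuse_conv_bn(w3, bn3)
k1, b1 = fuse_conv_bn(np.pad(w1, ((0, 0), (0, 0), (1, 1), (1, 1))), bn1)  # 1x1 -> centred 3x3
identity = np.zeros((C, C, 3, 3))
identity[np.arange(C), np.arange(C), 1, 1] = 1.0  # the skip branch as a 3x3 identity conv
kid, bid = fuse_conv_bn(identity, bn_id)
y_infer = conv2d(x, k3 + k1 + kid, b3 + b1 + bid)

print(np.allclose(y_train, y_infer))  # True
```

The deployed model therefore pays for one convolution per stage, while training still benefits from the extra branches.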

3. Results

3.1. Environment and Configuration

3.2. Performance Evaluation
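The tables report mAP@0.5 and mAP@0.5:0.95, but the metric definitions from this section are not reproduced in this text. The sketch below assumes the usual conventions. A prediction counts as a true positive when its IoU with a still-unmatched ground-truth box reaches the threshold. AP is the area under the precision-recall curve with all-point interpolation (PASCAL VOC 2010+ style). mAP@0.5:0.95 averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95. If mulch is the only class, mAP equals that class's AP. The function names and toy boxes are hypothetical, and other evaluators (COCO's, for instance) sample 101 recall points instead, which can shift results slightly.

```python
import numpy as np

def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    def area(box):
        return (box[2] - box[0]) * (box[3] - box[1])
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    return inter / (area(a) + area(b) - inter)

def average_precision(preds, gts, iou_thr):
    """AP for one class; preds: list of (score, box), gts: list of boxes."""
    preds = sorted(preds, key=lambda p: p[0], reverse=True)  # highest confidence first
    matched, tp = set(), []
    for _, box in preds:
        best_gt, best_iou = None, iou_thr
        for g, gt in enumerate(gts):
            overlap = iou(box, gt)
            if g not in matched and overlap >= best_iou:
                best_gt, best_iou = g, overlap
        tp.append(best_gt is not None)
        if best_gt is not None:
            matched.add(best_gt)  # each ground truth can be matched only once
    cum_tp = np.cumsum(np.array(tp, dtype=float))
    recall = cum_tp / len(gts)
    precision = cum_tp / np.arange(1, len(tp) + 1)
    # All-point interpolation: pad, take the precision envelope, sum rectangle areas
    mrec = np.concatenate([[0.0], recall, [1.0]])
    mpre = np.concatenate([[0.0], precision, [0.0]])
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))

def map_50_95(preds, gts):
    thresholds = np.linspace(0.5, 0.95, 10)  # 0.50, 0.55, ..., 0.95
    return float(np.mean([average_precision(preds, gts, t) for t in thresholds]))

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0.9, (0, 0, 10, 10)), (0.8, (21, 21, 31, 31)), (0.3, (50, 50, 60, 60))]
print(average_precision(preds, gts, 0.5))  # 1.0
print(map_50_95(preds, gts))  # 0.7
```

The second toy box has IoU 81/119 ≈ 0.68 with its ground truth, so it counts at thresholds 0.50 to 0.65 but not from 0.70 up; this is why mAP@0.5:0.95 rewards tight localization, while mAP@0.5 only rewards finding the object.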

3.3. Ablation Experiments

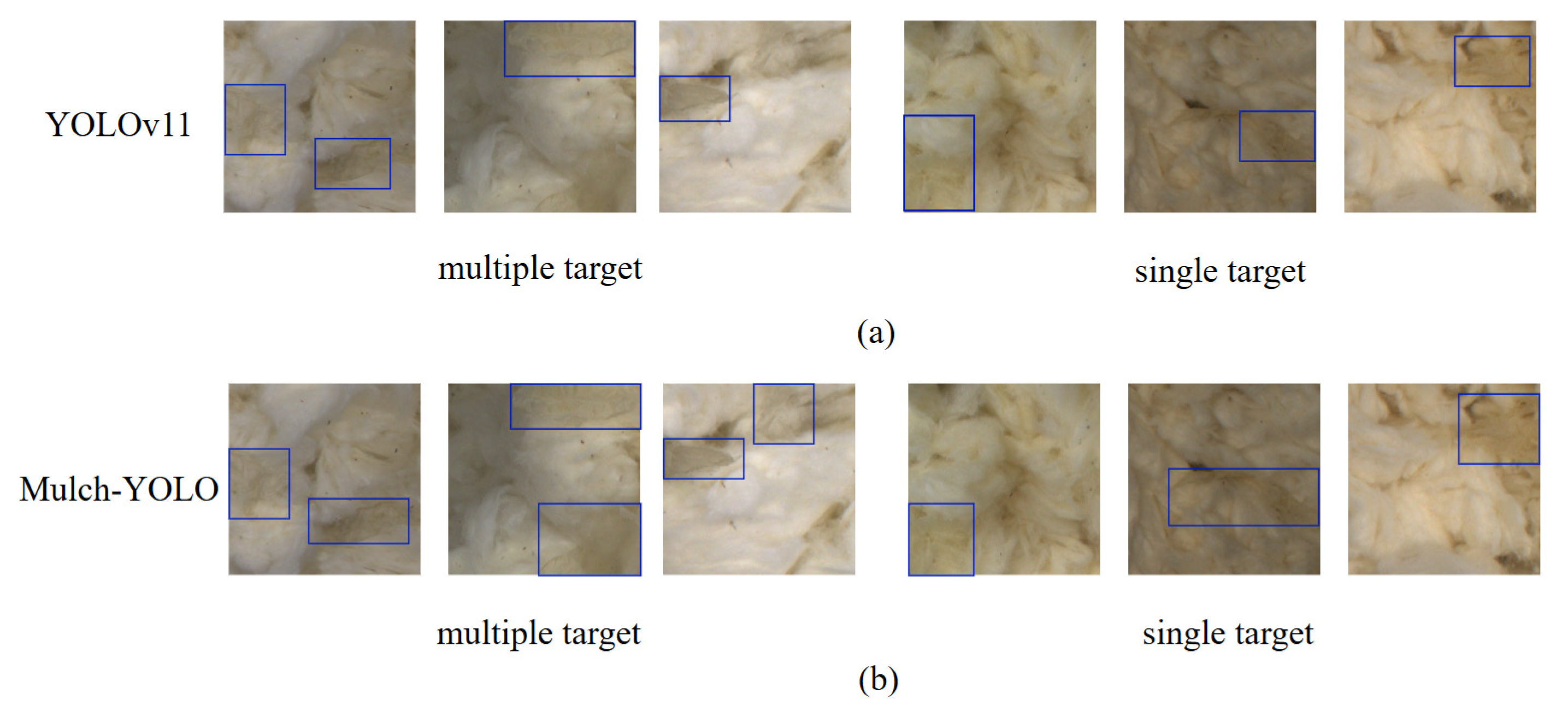

3.4. Comparison Experiment

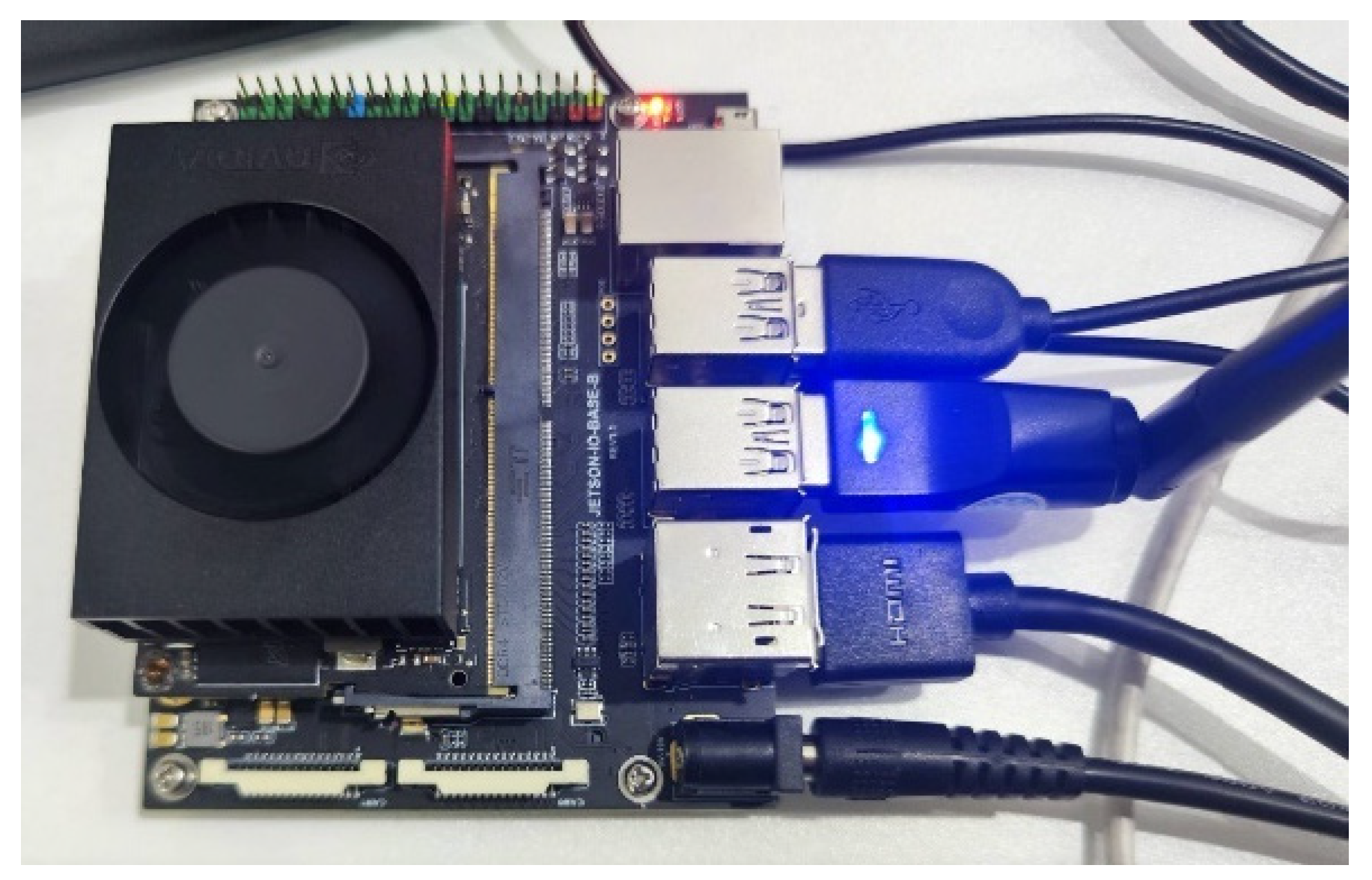

3.5. Deployment

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References


| Parameter Name | Parameter Value |
|---|---|
| Sensor Type | CMOS (global shutter) |
| Sensor Model | IMX273 |
| Resolution | 1.6 megapixels |
| Image Size | 1440 × 1080 pixels |
| Signal-to-Noise Ratio | 40 dB (excellent image noise control) |
| Dynamic Range | 71.1 dB (suitable for complex lighting environments) |
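A quick arithmetic check on the camera table, assuming the common 20·log10 amplitude convention for sensor decibel figures: 1440 × 1080 gives 1,555,200 pixels (the 1.6 megapixels in the table), 40 dB corresponds to a 100:1 signal-to-noise ratio, and 71.1 dB to a dynamic range of roughly 3,589:1. The last lines additionally assume an aspect-preserving letterbox resize to the 640-pixel training size; the resize method is not stated in this text.

```python
width, height = 1440, 1080  # image size from the camera table
pixels = width * height
print(pixels, round(pixels / 1e6, 1))  # 1555200 1.6

def db_to_ratio(db):
    """Convert a decibel figure (20*log10 amplitude convention) to a linear ratio."""
    return 10 ** (db / 20)

snr_ratio = db_to_ratio(40.0)      # signal-to-noise ratio from the table
dynamic_range = db_to_ratio(71.1)  # dynamic range from the table
print(round(snr_ratio), round(dynamic_range))  # 100 3589

# Assumption: aspect-preserving letterbox resize to a 640-pixel network input
input_size = 640
scale = input_size / max(width, height)
new_w, new_h = round(width * scale), round(height * scale)
print(f"scale={scale:.3f}, resized={new_w}x{new_h}")  # scale=0.444, resized=640x480
```

Under that assumption every object shrinks to about 44% of its captured width and height before it reaches the detector, which matters for small film fragments.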

| Category | Train | Test | Val |
|---|---|---|---|
| Number | 16,107 | 2,013 | 2,014 |
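The three splits sum to 20,134 samples, which corresponds to an 8:1:1 train/test/validation ratio. The sketch reproduces that arithmetic; how the split was drawn (randomly, per capture session, or otherwise) is not stated in this text.

```python
split = {"train": 16_107, "test": 2_013, "val": 2_014}  # counts from the dataset table
total = sum(split.values())
ratios = {name: n / total for name, n in split.items()}
print(total)  # 20134
print({name: f"{r:.1%}" for name, r in ratios.items()})
# {'train': '80.0%', 'test': '10.0%', 'val': '10.0%'}
```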

| Parameter | Value |
|---|---|
| Image size | 640 |
| Epochs | 150 |
| Batch size | 16 |
| Initial learning rate | 0.01 |
| cos_lr | False |
| fliplr | 0.5 |
| mosaic | 1.0 |
| scale | 0.5 |
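Four of the parameter names (`cos_lr`, `fliplr`, `mosaic`, `scale`) are identical to Ultralytics training arguments, which suggests, though this text does not confirm it, that the Ultralytics trainer was used. The sketch below restates the table as keyword arguments under that assumption, mapping "size", "batchsize", and "Initial learning rate" to `imgsz`, `batch`, and `lr0`, and works out the number of optimizer steps, assuming the last partial batch of each epoch is kept. The actual training call is left as a comment because it needs the `ultralytics` package and a dataset file; the file name `mulch.yaml` is hypothetical.

```python
import math

# Table values keyed by the matching Ultralytics argument names (mapping assumed)
train_args = {
    "imgsz": 640,     # "size": square network input, in pixels
    "epochs": 150,
    "batch": 16,      # "batchsize"
    "lr0": 0.01,      # "Initial learning rate"
    "cos_lr": False,  # no cosine schedule; Ultralytics then decays the LR linearly
    "fliplr": 0.5,    # probability of a horizontal flip
    "mosaic": 1.0,    # probability of mosaic augmentation
    "scale": 0.5,     # random image scale gain
}

train_images = 16_107  # training split size from the dataset table
steps_per_epoch = math.ceil(train_images / train_args["batch"])
total_steps = steps_per_epoch * train_args["epochs"]
print(steps_per_epoch, total_steps)  # 1007 151050

# With the ultralytics package installed, the arguments could be passed as:
#   from ultralytics import YOLO
#   YOLO("yolo11n.yaml").train(data="mulch.yaml", **train_args)  # "mulch.yaml" is hypothetical
```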

| YOLOv11n | +BiFPN-CBAM | +CARAFE | +MobileOne-DECA | mAP@0.5 | mAP@0.5:0.95 | Params (×10⁶) | FLOPs (G) |
|---|---|---|---|---|---|---|---|
| √ | | | | 90.8% | 65.3% | 2.58 | 6.3 |
| √ | √ | | | 93.9% | 65.7% | 2.7 | 7.2 |
| √ | √ | √ | | 94.4% | 67.1% | 2.8 | 7.4 |
| √ | √ | √ | √ | 95.5% | 68.6% | 1.96 | 5.2 |
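Reading the check marks cumulatively (each row adds one module to the row above), the arithmetic below shows where the gains and costs come from: BiFPN-CBAM and CARAFE each add parameters and FLOPs, and MobileOne-DECA brings both below the baseline. Net of all three modules, mAP@0.5 rises by 4.7 points and mAP@0.5:0.95 by 3.3 points, while parameters fall by about 24.0% and FLOPs by about 17.5%. The values are copied from the table; the variable names are illustrative.

```python
# Values from the ablation table: (mAP@0.5 %, mAP@0.5:0.95 %, params in millions, GFLOPs)
rows = {
    "YOLOv11n": (90.8, 65.3, 2.58, 6.3),
    "+BiFPN-CBAM": (93.9, 65.7, 2.7, 7.2),
    "+CARAFE": (94.4, 67.1, 2.8, 7.4),
    "+MobileOne-DECA": (95.5, 68.6, 1.96, 5.2),
}
names = list(rows)
for prev, cur in zip(names, names[1:]):
    d_map50, d_map, d_params, d_flops = (b - a for a, b in zip(rows[prev], rows[cur]))
    print(f"{cur:<16} mAP@0.5 {d_map50:+.1f}  mAP@0.5:0.95 {d_map:+.1f}  "
          f"params {d_params:+.2f}M  FLOPs {d_flops:+.1f}G")

base, final = rows["YOLOv11n"], rows["+MobileOne-DECA"]
gain_map50 = final[0] - base[0]
gain_map = final[1] - base[1]
param_cut = (base[2] - final[2]) / base[2]
flops_cut = (base[3] - final[3]) / base[3]
print(f"mAP@0.5 +{gain_map50:.1f}, mAP@0.5:0.95 +{gain_map:.1f}, "
      f"params -{param_cut:.1%}, FLOPs -{flops_cut:.1%}")
# mAP@0.5 +4.7, mAP@0.5:0.95 +3.3, params -24.0%, FLOPs -17.5%
```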

| Model | mAP@0.5 | mAP@0.5:0.95 | Params (×10⁶) | FLOPs (G) |
|---|---|---|---|---|
| YOLOv10n | 92.2% | 63.9% | 2.6 | 8.2 |
| YOLOv9t | 91.2% | 64.1% | 2.1 | 8.5 |
| YOLOv8n | 89.5% | 62.8% | 3.1 | 8.9 |
| YOLOv11n | 90.8% | 65.3% | 2.58 | 6.3 |
| YOLOv12n | 89.8% | 60.1% | 2.5 | 5.8 |
| Faster R-CNN | 85.1% | 65.2% | 125.1 | 47.9 |
| DETR | 89.6% | 66.7% | 473.95 | 15.1 |
| Mulch-YOLO | 95.5% | 68.6% | 1.96 | 5.2 |
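The comparison table can be summarized by finding the strongest baseline on each column. The sketch below does this with the table's values, treating the accuracy columns as higher-is-better and the size and cost columns as lower-is-better; the YOLOv12n mAP@0.5:0.95 value, 60.1, is read as a percentage like the others.

```python
# model: (mAP@0.5 %, mAP@0.5:0.95 %, params in millions, GFLOPs), from the comparison table
baselines = {
    "YOLOv10n": (92.2, 63.9, 2.6, 8.2),
    "YOLOv9t": (91.2, 64.1, 2.1, 8.5),
    "YOLOv8n": (89.5, 62.8, 3.1, 8.9),
    "YOLOv11n": (90.8, 65.3, 2.58, 6.3),
    "YOLOv12n": (89.8, 60.1, 2.5, 5.8),
    "Faster R-CNN": (85.1, 65.2, 125.1, 47.9),
    "DETR": (89.6, 66.7, 473.95, 15.1),
}
mulch_yolo = (95.5, 68.6, 1.96, 5.2)

metrics = ["mAP@0.5", "mAP@0.5:0.95", "Params (M)", "FLOPs (G)"]
higher_is_better = [True, True, False, False]
best = {}
for k, (metric, high) in enumerate(zip(metrics, higher_is_better)):
    pick = max if high else min
    best[metric] = pick(baselines, key=lambda model, k=k: baselines[model][k])
    print(f"{metric}: best baseline {best[metric]} ({baselines[best[metric]][k]}) "
          f"vs. Mulch-YOLO ({mulch_yolo[k]})")
```

A different baseline is strongest on each column (YOLOv10n, DETR, YOLOv9t, YOLOv12n), and Mulch-YOLO beats all four; the margins are widest on mAP@0.5 (3.3 points) and narrowest on parameter count (0.14 million).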

| Detection Method | Advantages | Disadvantages | Recommended Use Cases |
|---|---|---|---|
| YOLOv10n | NMS-free, stable inference | Moderate small-object detection capability | Real-time applications on edge devices |
| YOLOv9t | Lightweight design | Moderate robustness to low-quality images | Embedded devices with extreme resource constraints |
| YOLOv8n | Stable training, easy to use | Relatively high computational cost | Rapid prototyping |
| YOLOv11n | Rapid prototyping | Relatively new, limited community support and tutorials | Industrial applications requiring a balance between accuracy and speed |
| YOLOv12n | Small parameter count, suitable for model compression | Relatively low mAP@0.5:0.95, may underfit in complex scenes | Ultra-low-power devices |
| Faster R-CNN | High localization accuracy, suitable for large objects | Very large parameter count, difficult to deploy | Tasks requiring extremely accurate localization |
| DETR | End-to-end design, no need for NMS or anchor boxes | Slow training convergence, requires large datasets | Large-scale, sparse-object scenarios |
| Mulch-YOLO | Highest accuracy, excellent lightweight design | Designed for specific scenarios | High-accuracy applications with moderate hardware resources |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Su, Z.; Wei, W.; Huang, Z.; Yan, R. Mulch-YOLO: Improved YOLOv11 for Real-Time Detection of Mulch in Seed Cotton. Appl. Sci. 2025, 15, 11604. https://doi.org/10.3390/app152111604
