Abstract

Cloud infrastructure supports modern services across sectors such as business, education, lifestyle, and government. With the high demand for cloud computing, the security of network communication is also an important consideration. Distributed denial-of-service (DDoS) attacks pose a significant threat, so detection and mitigation are critically important for the reliable operation of cloud-based systems. Intrusion detection systems (IDS) play a vital role in detecting and preventing attacks before they damage reliability. This article presents a DDoS detection approach for cloud infrastructure protection that combines a convolutional neural network (CNN) and a recurrent neural network (RNN) with a multi-head attention mechanism, which enhances the contextual relevance and accuracy of detection. Preprocessing techniques were applied to optimize model performance: information gain to identify important features, normalization, and the synthetic minority oversampling technique (SMOTE) to address class imbalance. The results were evaluated using metrics derived from the confusion matrix. Our proposed method achieves an accuracy of 97.78%, precision of 98.66%, recall of 94.53%, and F1-score of 96.49%. The hybrid model with multi-head attention achieved the best results among the deep learning models compared. With 413,057 parameters, the model is moderately lightweight, and its inference time in a cloud environment is less than 6 milliseconds, making it suitable for application to cloud infrastructure.

1. Introduction

In the world of digitalization, where communication via the internet has significantly influenced our daily lives, many aspects of life have become considerably more convenient through cloud computing. This infrastructure efficiently supports a variety of user operations and provides several advantages such as elasticity, scalability, flexibility, and on-demand availability. However, it is also a double-edged sword: cyber threats must be seriously considered, especially in cloud systems where attackers exploit system vulnerabilities using advanced techniques. These attacks often target layers of the open systems interconnection (OSI) model, for example by sending malicious transmission control protocol (TCP) requests to cloud-based computers. Attacks on the application layer can be particularly impactful, as they directly affect end users and make it extremely difficult to distinguish malicious traffic from normal traffic.

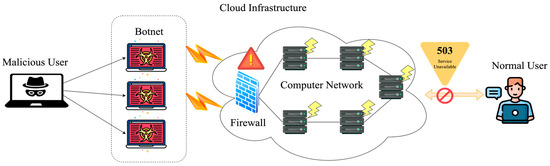

Among these threats, distributed denial-of-service (DDoS) attacks are a particularly disruptive form of cyberattack, as shown in Figure 1. Such an attack aims to completely disable servers, networks, or websites by simultaneously flooding them with a massive volume of requests from numerous compromised devices, known as botnets. These attacks can severely affect businesses, governments, and online platforms by taking cloud systems offline for long periods, causing revenue loss, and damaging an organization’s credibility.

Figure 1.

Example DDoS attacks on cloud infrastructure.

Therefore, organizations must proactively ensure that their digital infrastructure, especially cloud-based systems, is secure and trustworthy. According to a 2024 report by StormWall [1], a provider of cybersecurity as a service, the global number of DDoS attacks has increased by 108% compared to 2023. This increase, which almost doubled in a single year, reflects a continual growth trend, with a prior increase of 63% from 2022 to 2023. Organizations that rely on digital technology must actively implement detection or prevention measures to identify anomalous traffic and effectively mitigate the risks of future attacks.

An anomaly-based intrusion detection system (AIDS) is a specialized form of intrusion detection system (IDS) designed to detect malicious traffic in computer systems, networks, and information systems. AIDS plays a crucial role in continuously monitoring system activities to identify malicious traffic. It is designed to detect unknown threats using machine learning techniques, which extract knowledge from massive datasets using complex rules, methods, or transfer functions to identify significant patterns. Both machine learning and deep learning techniques are increasingly being applied within AIDS frameworks, employing various algorithms and approaches to enhance detection accuracy and adapt to evolving attack traffic.

This article introduces an anomaly-based traffic detection approach for cloud DDoS attacks using a hybrid deep learning architecture that integrates a convolutional neural network, a recurrent neural network, and an attention mechanism. The framework integrates the synthetic minority over-sampling technique (SMOTE) with an information-gain-based feature selection strategy to develop a deep learning model optimized for time-series network traffic. It addresses key limitations of traditional detection approaches, including challenges in high-dimensional feature extraction and class imbalance. Validation was performed using the BCCC-cPacket-Cloud-DDoS-2024 dataset [2], which consists of real-time network traffic DDoS attack data collected over a four-day period on a cloud infrastructure.

We explored the problem of DDoS attacks in cloud environments, particularly focusing on TCP-based DDoS attack types. Our research aimed to investigate and enhance the effectiveness of anomaly detection systems through the application of deep learning and statistical techniques. The major contributions of this study are as follows:

- This study investigates multiple TCP-based DDoS attack types and systematically analyzes each feature using statistical methods. By calculating the information gain, we can identify the factors that significantly impact network traffic behavior under DDoS conditions in cloud systems.

- We propose a novel anomaly detection method that is specifically designed to identify cloud-based DDoS attacks. This method employs a hybrid learning architecture that effectively combines a convolutional neural network (CNN) and bidirectional long short-term memory (BiLSTM), further enhanced with multi-head attention to capture temporal and spatial features.

- We utilize preprocessing techniques, such as normalization and SMOTE, which are intelligently integrated with dynamic feature selection. These processes help to mitigate the class imbalance problem in the BCCC-cPacket-Cloud-DDoS-2024 dataset. In addition, we applied information gain to statistically evaluate the influence of each feature. This process significantly improved the recall of minority classes and strengthened the overall robustness of the model.

- We demonstrate that our proposed hybrid deep learning approach consistently achieves a high detection accuracy and recall on the BCCC-cPacket-Cloud-DDoS-2024 dataset. The model was trained on 50 selected features across 519,614 records to effectively distinguish between benign, suspicious, and multiple DDoS traffic types.

- Our model was designed with a lightweight architecture consisting of 413,057 parameters, which is suitable for application in a cloud infrastructure environment.

The remainder of this paper is structured as follows: Section 2 reviews the topics underlying our study, namely DDoS, IDS, hybrid deep learning models, and DDoS evaluation datasets. Section 3 presents our workflow and the preprocessing techniques applied to the dataset, and Section 4 proposes a hybrid model architecture. Section 5 presents the results and a discussion of the experiments. Section 6 presents the conclusions and future work.

2. Related Work

DDoS attacks are among the most critical threats to cloud computing technology, significantly impacting both user experience and organizational reliability. Extensive research has been conducted to develop techniques for detecting and preventing these attacks.

To effectively evaluate the performance of DDoS detection models in cloud environments, it is essential to use datasets that accurately reflect traffic behavior under both normal and abnormal conditions. Sharafaldin et al. [2] introduced CICDDoS2019, a dataset comprising 11 DDoS attack types, for evaluating IDS. It provides the most important feature set for detecting various types of DDoS attacks, along with their corresponding weights. Moreover, Shafi et al. [3] established a comprehensive review of state-of-the-art DDoS datasets, evaluating 16 publicly available datasets and identifying 15 key limitations from different perspectives. They also proposed a new roadmap for creating comprehensive network datasets and introduced a new cloud-based dataset called BCCC-cPacket-Cloud-DDoS-2024.

Research on DDoS detection and prevention has extensively utilized machine learning algorithms. For example, Fathima et al. [4] presented a supervised machine-learning-based intrusion detection system for DDoS attack classification, employing random forest, k-nearest neighbors, and logistic regression on the CSE-CICIDS2017, CSE-CICIDS2018, and CICDoS datasets, with random forest achieving the highest accuracy of 97.6%. Liu et al. [5] applied machine learning approaches, including tree-based models, SVM, and neural networks, to DDoS detection on datasets such as KDD CUP99 and NSL-KDD. Naiem et al. [6] proposed an enhanced framework for detecting DDoS attacks in cloud computing using a Gaussian naïve Bayes classifier. Ismail et al. [7] examined the current state of DDoS attacks and used random forest and extreme gradient boosting (XGBoost) classifiers for DDoS attack type classification and prediction.

In addition, deep learning techniques have been applied for detection. Zhao et al. [8] proposed a DDoS attack detection method that combines the self-attention mechanism with CNN-BiLSTM to address the issues of high dimensionality and multiple feature dimensions, evaluated on the CIC-IDS2017 and CICDDoS2019 datasets. Naja et al. [9] proposed a hybrid model combining a CNN and BiLSTM with an attention mechanism to capture both spatial and temporal features. Cil et al. [10] used deep neural networks (DNN) to classify network traffic for DDoS detection using CICDDoS2019. Hsu et al. [11] proposed a CNN-LSTM architecture to classify network traffic, validated using the NSL-KDD dataset. Abusitta et al. [12] introduced a cooperative IDS using a denoising autoencoder (DA) as the foundation for deep neural networks. Abdelaty et al. [13] developed a generative adversarial network based adversarial training framework (GADoT) for generating adversarial DDoS samples. Najar et al. [14] presented a robust DDoS detection model using an efficient feature-selection algorithm. Hnatme et al. [15] proposed a CNN-BiLSTM model with additional hidden layers. Yaser et al. [16] proposed a hybrid technique to recognize DDoS attacks that combines deep learning and feedforward neural networks as autoencoders. Aydin et al. [17] proposed a long short-term memory (LSTM) cloud, an LSTM-based system for detecting and preventing DDoS in public cloud networks. Cheng et al. [18] proposed a DDoS attack detection method based on network flow grayscale matrix features via a multiscale convolutional neural network, exploiting the different characteristics of attack and normal flows in the IP protocol. Jihado et al. [19] presented a network intrusion detection system (NIDS) using deep learning, specifically a CNN for spatial feature extraction and BiLSTM for temporal feature extraction. Evaluated on the CICIDS2017 and UNSW-NB15 datasets, it achieved up to 99.83% and 94.22% accuracy for binary classification, respectively. Kirubavathi et al. [20] introduced the self-attention and inter-sample attention transformer (SAINT), a deep learning system that incorporates sparse logistic regression. SAINT uses dual attention techniques to capture both intra-flow and inter-flow interactions, resulting in a fuller representation of traffic patterns. Akgun et al. [21] proposed an intrusion detection system incorporating preprocessing procedures and deep learning models to detect DDoS attacks; the models, including deep neural networks, CNN, and LSTM, were evaluated in terms of detection accuracy and real-time performance. Le et al. [22] demonstrated the DDoS bidirectional encoder representations from transformers (DDoSBERT) model to enhance system resilience against text-based transformation DDoS attacks. The model was validated across diverse benchmark datasets. Ahmim et al. [23] proposed a model that aims to detect all types of DDoS attacks with their specific subcategory by combining different types of deep learning models, including CNN, LSTM, deep autoencoder, and deep neural networks. Said et al. [24] proposed a hybrid approach that integrates a CNN and BiLSTM model. To enhance generalization and reduce overfitting, the training process incorporated L2 regularization and a dropout rate of 0.5. Elubeyd et al. [25] presented a hybrid deep learning approach that combined three types of deep learning algorithms and achieved high accuracy rates of 99.81% and 99.88% on two different datasets.

Furthermore, data preprocessing techniques are important for enhancing the performance of deep learning models. Batchu et al. [26] introduced a hybrid sampling approach combining SMOTE and Tomek links (pairs of data points of different classes that are nearest to each other), along with a hybrid feature selection method, to enhance performance and reduce training time. Alhayan et al. [27] presented an intrusion detection approach that applied feature selection using a dung beetle optimizer (DBO) and optimized hyperparameters with the spotted hyena optimization algorithm. Xiao et al. [28] introduced an IDS that combined feature reduction, to simplify and optimize input data, with a CNN for accurate intrusion detection.

Moreover, DDoS detection has been applied in other domains. Sogüt et al. [29] built a testbed that reproduces a scaled-down version of a real water plant, including a supervisory control and data acquisition (SCADA) system, and used a hybrid deep learning model to prevent cyberattacks enabled by the openness of the SCADA architecture. Varalakshmi et al. [30] and Assis et al. [31] applied DDoS detection models to the internet of things (IoT) and wireless software-defined networks (SDN). Elaziz et al. [32] proposed an intrusion detection approach for cloud and IoT environments using deep learning, with feature selection based on the capuchin search algorithm, a recently developed swarm intelligence optimizer.

Table 1 summarizes existing approaches for detecting anomalous network traffic, focusing on the techniques, advantages, and limitations of the reviewed literature.

Table 1.

Summary of existing DDoS detection approaches.

After studying related work on DDoS detection and DDoS attack datasets, we implemented a deep learning model, applying model selection and optimization, hybrid modeling techniques, and hyperparameter fine-tuning during training to enhance performance as measured by the evaluation metrics. Additionally, feature selection is used to retain significant features, and data preprocessing techniques are used to produce high-quality data. Methods from the related work are applied to design the model and adapt it to DDoS attack datasets for testing and comparison. The developed model is then deployed on cloud infrastructure in a real-world environment.

3. Methods and Tools Used in Experiment

3.1. Experiment Workflow

In this study, to detect DDoS attack traffic, we employed a hybrid deep learning approach that integrates a CNN and a recurrent neural network (RNN) to enhance classification performance. Deep learning was selected because of its capability to learn high-level features from large-scale data and to capture complex temporal patterns effectively. Moreover, it offers scalability and the ability to learn from new data, enabling the continuous improvement of model accuracy over time. A comparative analysis was conducted with other deep learning techniques and alternative hybrid learning approaches to identify the model architecture that yields the best performance. The experiments utilized the BCCC-cPacket-Cloud-DDoS-2024 dataset, which is a modern dataset specifically designed to detect DDoS attack traffic in cloud environments.

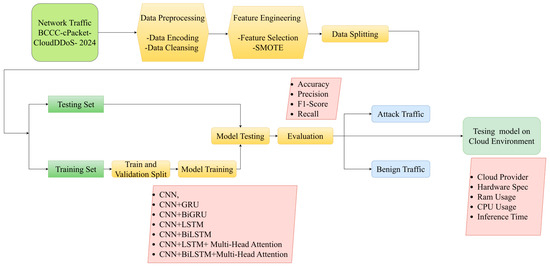

Figure 2 illustrates the workflow of the experiment, starting with data collection and the cleaning of missing values. Second, data preprocessing was used to enhance the data quality: feature selection, normalization to scale the data within the same range, and SMOTE to augment samples of the minority classes within the imbalanced dataset. These steps directly affected the performance of the model. Next, the dataset was divided into training, validation, and testing sets, and the model was evaluated using key metrics, namely accuracy, precision, recall, and F1-score, to determine its effectiveness in classifying benign and attack traffic. In the final step, to confirm its practical applicability, the developed model was deployed and tested in a real cloud environment with different hardware specifications.

Figure 2.

Workflow of the experiment.

3.2. Dataset

In the experiments, we utilized a modern, publicly available dataset called BCCC-cPacket-Cloud-DDoS-2024, which contains network traffic collected in a cloud computing environment over a period of five days. The dataset emphasizes DDoS attack detection over TCP and was specifically developed to address issues identified in previous datasets, such as the lack of realistic network traffic, incompatibility with modern protocols, and limited diversity of attacks. It does so by simulating a cloud infrastructure that includes 17 different DDoS attack scenarios [2].

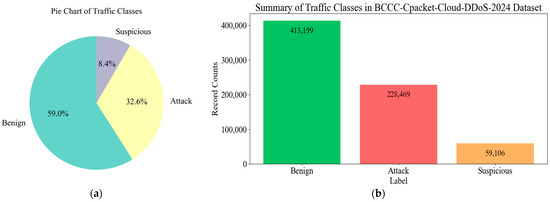

The dataset contains 700,774 traffic records with 326 features. This makes it highly suitable for studying and developing DDoS detection techniques for modern cloud environments. The traffic was categorized as shown in Figure 3, consisting of 413,199 benign traffic records, 228,469 attack traffic records and 59,106 suspicious traffic records.

Figure 3.

Proportion of traffic classes in the BCCC-cPacket-Cloud-DDoS-2024 dataset: (a) pie chart and (b) bar chart.

To prepare the data for model training and evaluation, the records were split into training, validation, and test sets in a 70:10:20 ratio. The training set, including 363,729 records, was used to train the model, while the validation set of 51,962 records helped tune hyperparameters and monitor performance during training. The test set contained 103,923 records. This split helps the model learn effectively from the training data, validate performance on validation data, and evaluate its practical performance on the test data, ensuring it works well on different traffic patterns.

3.3. Data Preprocessing

3.3.1. Data Cleansing and Labeling

In preparing data for deep learning model development, data cleansing is an essential step, particularly with structured data. It is especially important when the data contain null values, which can lead to inaccurate analysis or prevent the model from learning effectively. The categorical output label classes were encoded into numerical classes, with 0 representing benign and 1 representing attack.

3.3.2. Feature Selection

To improve the model’s performance, we removed features that contained duplicate entries or that exhibited low information gain. This step was based on a statistical analysis to ensure that only the most relevant and significant features were retained, which enhances the model’s capability to detect DDoS attacks. The selection method measures the statistical dependence between each feature and the target outcome. Specifically, mutual information was calculated to assess the information gain between each feature and the target variable. This approach is grounded in the principles of information theory, as defined in (1):

$$I(X;Y) = \sum_{x \in X} \sum_{y \in Y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)} \qquad (1)$$

where $X$ is the feature input of the model; $Y$ is the target output that the model aims to predict; $p(x,y)$ is the joint probability of $x$ and $y$; $p(x)$ is the individual probability of the feature; and $p(y)$ is the individual probability of the outcome.
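As an illustration of the mutual information score described above, the following is a minimal sketch for discrete (or already binned) feature values. The function name `mutual_information` and the toy feature columns are hypothetical; the source does not state how continuous features were handled, so binning is an assumption here.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Estimate I(X; Y) in nats from two equal-length sequences of discrete values."""
    n = len(xs)
    joint = Counter(zip(xs, ys))   # counts of each (x, y) pair
    px = Counter(xs)               # counts of each feature value
    py = Counter(ys)               # counts of each label value
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * math.log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Toy data (hypothetical): 0 = benign, 1 = attack.
labels = [0, 0, 1, 1, 0, 0, 1, 1]
informative = [0, 0, 1, 1, 0, 0, 1, 1]     # mirrors the label exactly
uninformative = [0, 1, 0, 1, 0, 1, 0, 1]   # statistically independent of the label

scores = {
    "informative": mutual_information(informative, labels),
    "uninformative": mutual_information(uninformative, labels),
}
# Rank features from highest to lowest score, as a top-k selection would.
ranking = sorted(scores, key=scores.get, reverse=True)
print(ranking, scores)
```

A feature that determines the label reaches the maximum score for a balanced binary target (log 2 nats), while an independent feature scores zero, which is why ranking by this quantity surfaces the most relevant features.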

The top 50 features based on the feature selection specifications are listed in Table 2. The first group of features that plays a significant role in determining whether network traffic is an attack consists primarily of the statistical values of the packet inter-arrival time. The second group comprises features closely related to the number of packets in the forward direction.

Table 2.

Top 50 features ranked by information gain.

Next are features that involve the overall size of packet header bytes, which are also meaningful in the detection process. Conversely, features with relatively lower importance in this context, such as the standard deviation of backward packet inter-arrival time (IAT) and the standard deviation of backward packet length are generally associated with the behavioral patterns of backward packets.

3.3.3. Normalization

Normalization is a crucial step in data preparation before applying a deep learning model. Raw data across different features may have varying units or ranges of values. For example, some features may have values in single digits, whereas others may have values in the thousands. Allowing such discrepancies can cause the model to overemphasize certain features simply because of their larger scale [33]. Normalization is applied to prevent the model from assigning disproportionate importance to any feature owing to its scale, as defined by (2):

$$Z = \frac{x - \mu}{\sigma} \qquad (2)$$

where $x$ represents the raw data, $\mu$ the mean of the feature, $\sigma$ the standard deviation of the feature, and $Z$ the value scaled to have a mean of 0 and a standard deviation of 1.
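The standardization described above can be sketched for a single feature column as follows. The helper name `zscore` and the sample values are hypothetical, and the handling of a constant column (returning zeros) is an assumption not covered by the source.

```python
import statistics


def zscore(values):
    """Scale one feature column to mean 0 and standard deviation 1."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)   # population standard deviation
    if sigma == 0:
        # A constant column carries no information; map it to zeros (assumption).
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical raw values for one feature (mean 5, standard deviation 2).
packet_counts = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
scaled = zscore(packet_counts)
print(scaled)
```

In a train/validation/test setting, the mean and standard deviation would usually be estimated on the training set only and then reused for the other sets, so that no information leaks from the evaluation data.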

3.3.4. SMOTE

After analyzing and visualizing the data, we found that the distribution of samples between the attack and benign classes was significantly imbalanced. Specifically, the benign class contains far more samples than the attack class. This class imbalance directly affects the performance of the deep learning model and biases the evaluation metrics derived from the confusion matrix. The model may tend to predict the majority class, which is the benign traffic class, to achieve a high overall accuracy while failing to correctly detect the minority attack class. This issue is reflected in much lower precision, recall, and F1-score values for the attack class, even though the overall accuracy may appear acceptable.
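To make the effect of imbalance on the metrics concrete, the sketch below computes accuracy, precision, recall, and F1-score from confusion-matrix counts for a degenerate classifier that labels every record as benign. It uses the benign and attack record counts reported in Section 3.2 and ignores the suspicious records, which is a simplifying assumption; the function name `binary_metrics` is hypothetical.

```python
def binary_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 for the positive (attack) class."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Ratios with an empty denominator are reported as 0.0 by convention.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


BENIGN, ATTACK = 413_199, 228_469   # record counts from Section 3.2

# A classifier that always predicts "benign": every attack becomes a false negative.
always_benign = binary_metrics(tp=0, fp=0, fn=ATTACK, tn=BENIGN)
print(always_benign)
```

Such a classifier is correct on roughly 64% of the records without detecting a single attack, which is why recall and F1-score for the attack class are reported alongside accuracy.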

To enable the model to classify both classes accurately, it is necessary to address the class imbalance problem. One of the most effective techniques is SMOTE, which is specifically designed to handle imbalanced datasets by generating synthetic samples for minority classes. Unlike traditional oversampling methods that duplicate existing instances, SMOTE creates new synthetic examples from existing data, thereby increasing the diversity without introducing redundancy [34].

The main concept of SMOTE is to randomly select one of the k nearest neighbors of a minority class instance and generate a new sample along the line segment between the original instance and that neighbor. The new synthetic sample is given by (3):

$$x_{new} = x_i + \lambda\,(x_{zi} - x_i) \qquad (3)$$

where $x_i$ represents a selected minority class sample, $x_{zi}$ represents the neighboring minority class sample of $x_i$, $\lambda$ represents a random value drawn from a uniform distribution on $[0, 1]$, and $x_{new}$ represents a newly generated synthetic sample.
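The interpolation step described above can be sketched in a few lines. This is an illustration of the idea only, not the implementation used in the experiments: the helper names, the Euclidean distance, the fixed seed, and the toy minority samples are all assumptions, and a library implementation would normally be used in practice.

```python
import math
import random


def nearest_neighbors(sample, pool, k):
    """Return the k samples in pool closest to sample (Euclidean), excluding itself."""
    others = [p for p in pool if p is not sample]
    return sorted(others, key=lambda p: math.dist(sample, p))[:k]


def smote_sample(sample, pool, k, rng):
    """Create one synthetic point between sample and a random one of its k neighbors."""
    neighbor = rng.choice(nearest_neighbors(sample, pool, k))
    lam = rng.random()   # uniform in [0, 1)
    return [a + lam * (b - a) for a, b in zip(sample, neighbor)]


# Hypothetical minority-class samples with two features each.
minority = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
rng = random.Random(42)   # fixed seed for reproducibility
synthetic = smote_sample(minority[0], minority, k=1, rng=rng)
print(synthetic)
```

With k = 1 the only candidate neighbor of the first sample is [1.0, 1.0], so the synthetic point falls on the segment between the two; larger k spreads new samples across more of the minority region.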

After the data preprocessing steps, including feature selection, normalization, and SMOTE, the dataset was reduced to 50 features. The total number of records was 519,614, consisting of 349,178 normal records and 170,436 attack records, as summarized in Table 3.

Table 3.

Details of the original and preprocessed data.

4. The Proposed Network Intrusion Detection Model

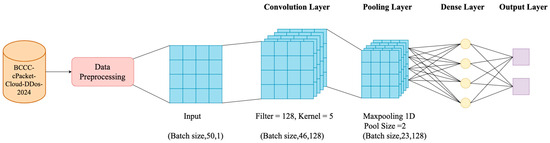

4.1. Convolutional Neural Network

A CNN is a type of neural network based on convolution operations and designed for data with spatial structures or sequential relationships. A CNN typically comprises three main types of layers, as shown in Figure 4. First, the convolution layer extracts important features from images or sequential data. Second, the pooling layer reduces data dimensionality to minimize complexity while preserving essential features. Finally, the fully connected layers integrate the features extracted from the previous layers and feed them into the decision-making process by connecting each node in each layer to every node in the next layer [35].

Figure 4.

The architecture of CNN.

In convolutional neural networks, the size of the output feature map is determined by the relationship between the input size, kernel size, stride, and padding. The convolution operation progressively extracts local patterns while adjusting the spatial or temporal dimensions of the data. A smaller stride or larger padding helps preserve the resolution of the feature map, whereas larger strides or the use of valid padding reduce it. This control over feature map size enables the model to balance computational efficiency and representational capacity. The resulting feature map size after the convolution can be calculated as in (4):

$$n_{out} = \left\lfloor \frac{n_{in} + 2p - k}{s} \right\rfloor + 1 \qquad (4)$$

where $n_{out}$ represents the number of output features, $n_{in}$ represents the number of input features, $p$ represents the convolution padding size, $k$ represents the convolution kernel size, and $s$ represents the convolution stride size.
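The output-size relationship described above can be checked with a small helper. The function name `conv_output_length` is hypothetical; the example values (50 input features, kernel size 5, stride 1, valid padding, then pooling by a factor of 2) are the ones this paper reports for its first layers.

```python
def conv_output_length(n_in, kernel, stride=1, padding=0):
    """Length of a 1D feature map after a convolution or pooling window."""
    return (n_in + 2 * padding - kernel) // stride + 1


# Valid padding (p = 0) with kernel 5 and stride 1 on 50 input features.
after_conv = conv_output_length(50, kernel=5, stride=1, padding=0)

# Max pooling with window 2 and stride 2 follows the same formula.
after_pool = conv_output_length(after_conv, kernel=2, stride=2, padding=0)

# Padding of 2 on each side would instead preserve the input length.
same_length = conv_output_length(50, kernel=5, stride=1, padding=2)

print(after_conv, after_pool, same_length)
```

The first two values match the temporal dimensions of 46 and 23 given for the hybrid model in Section 4.4.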

After the convolution operation, the data are processed through the rectified linear unit (ReLU) activation function, a nonlinear function that sets negative values to zero while allowing positive values to pass unchanged. To develop our model, we employed a one-dimensional convolutional layer, because the dataset used in this study consists of sequential data that records DDoS attack patterns. ReLU is expressed in (5):

$$f(x) = \max(0, x) \qquad (5)$$

Here, $x$ represents the numerical value obtained from either raw input features or intermediate transformations within the layers of a neural network.

4.2. Recurrent Neural Network

Long short-term memory (LSTM) is a special type of RNN designed to help computers retain important information for longer periods. This is unlike conventional RNN, which tends to forget earlier details as the sequence length increases. LSTM achieves this through memory cells that can decide which information to retain and which to discard [36].

Gated recurrent unit (GRU) is another RNN variant that mitigates the vanishing gradient problem. GRU aims to enable each network unit to adaptively capture dependencies across varying time intervals. Similarly to LSTM, the GRU employs gating mechanisms to control the flow of information within a unit. However, unlike LSTM, the GRU does not maintain a separate memory cell [37].

For anomaly detection in network traffic, bidirectional recurrent neural networks (BRNNs) are utilized to learn from both past and future contexts. Traditional unidirectional RNNs are limited because they leverage only the past context. The bidirectional approach overcomes this problem by allowing the model to access both the preceding and succeeding elements of the sequence simultaneously. When this concept is applied to LSTM or GRU, the resulting models are BiLSTM and bi-directional GRU (BiGRU).

BiLSTM is a type of recurrent neural network designed to overcome the limitations of traditional RNN. This architecture effectively addresses the vanishing gradient problem and enhances the ability to learn sequential dependencies by processing information from both the past and future contexts.

4.3. Multi-Head Attention Mechanism

Because DDoS attack data exhibit sequential characteristics with attack patterns occurring continuously over time, such as sending numerous packets in a short period or repeating specific behaviors, the attention mechanism is effective for processing this type of data. The attention mechanism enables the model to focus on particular time steps or specific attributes that are critical for detecting attacks, which are essential for determining whether an attack is occurring.

The core idea of the attention mechanism is to allow the model to process each position in a sequence by considering its importance to the overall output. This helps to capture dependencies between elements that may be far apart in the sequence, which is particularly beneficial for tasks involving sequential data [38]. The mechanism computes attention weights to emphasize only the relevant parts of the input before passing them forward, thereby allowing the model to focus on essential information.

An extension of this concept is multi-head attention, which divides the learning process into multiple attention heads. Each head analyzes the relationships between data from different perspectives, and when combined, the model enables the comprehensive capture of complex contextual information.
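As a rough illustration of how attention weights are computed, the sketch below implements single-head scaled dot-product self-attention in plain Python. The choice of scaled dot-product scoring, the function names, and the toy sequence are assumptions for illustration; the source does not give the formula. A multi-head layer would run several such heads on learned linear projections of the input and concatenate their outputs, which is omitted here.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for one attention head without learned projections."""
    d_k = len(keys[0])
    weights = []
    for q in queries:
        # Similarity of this position to every position, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights.append(softmax(scores))
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(len(values[0]))]
        for row in weights
    ]
    return outputs, weights


# Hypothetical sequence of three time steps with two features each.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(sequence, sequence, sequence)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of weights sums to one and indicates how much a time step draws on every other time step, regardless of how far apart they are in the sequence.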

4.4. Hybrid Deep Learning Model

Applying hybrid techniques to deep learning models is significantly more complex than applying them to traditional classifiers. Deep neural network models consist of millions to billions of parameters and require substantial time and computational resources for training. When training multiple base deep learning models for a hybrid model, selecting and tuning hyperparameters is one of the main challenges [39,40].

The combination of CNN and BiLSTM has gained popularity for anomaly detection due to its ability to learn deep feature representations from data. CNN excels in extracting local features within segments of the data, whereas BiLSTM analyzes sequential relationships bidirectionally for complex anomaly detection.

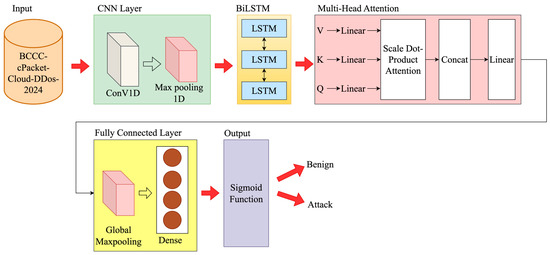

Additionally, a multi-head attention mechanism further improves the capability of the model to detect patterns between positions that are not adjacent in the sequence, allowing the model to better capture long-range dependencies. The model architecture is illustrated in Figure 5, and the detailed dimensions of each layer are shown in Table 4.

Figure 5.

Hybrid model architecture.

Table 4.

Model layer dimension.

The process begins as the input layer receives sequences of shape (batch, 50, 1). These are fed into a one-dimensional CNN with 128 filters, a kernel size of 5, a stride of 1, and a valid padding configuration, which slightly reduces the temporal dimension of the feature maps. The output is passed through a ReLU nonlinear activation function, resulting in a shape of (batch, 46, 128). Subsequently, a MaxPooling1D layer downsamples the sequence by a factor of 2, compressing the representation to a shape of (batch, 23, 128).

Next, the data flows into a BiLSTM layer with 128 units in each direction. This bidirectional setup allows the layer to capture dependencies both forward and backward along the temporal sequence, improving the representation of temporal features. As a result, the layer outputs a tensor with shape (batch, 23, 256).

To better capture sequence dependencies, a multi-head attention mechanism with 4 attention heads is integrated, each with a key vector dimensionality of 32. This enables the model to attend to multiple aspects of the sequence simultaneously, with each head analyzing relationships from a different perspective. The concatenated output from all heads has a dimension of 128. Since this differs from the layer input dimension of 256, a linear projection through a dense layer maps the 128-dimensional output back to 256. This projection is performed internally by the Keras library, ensuring that the output shape (batch, 23, 256) matches the input and thereby allowing for a residual connection.

Finally, a GlobalAveragePooling1D layer summarizes information across the entire time dimension, converting the output to (batch, 256). This compressed representation is processed by a fully connected dense layer with 64 neurons, which extracts deep features and reduces the dimensionality to (batch, 64). The result is then passed to the output layer, which uses a sigmoid activation function to produce the final binary classification score in the range of 0 to 1 with a shape of (batch, 1).
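The shape arithmetic in the walk-through above can be checked with a few lines of plain Python. This is a minimal sketch rather than the authors' implementation: the function names are hypothetical, and only the layers described in the text are traced.

```python
def conv1d_valid_len(length, kernel_size, stride=1):
    """Output length of a 1-D convolution with 'valid' (no) padding."""
    return (length - kernel_size) // stride + 1


def pool1d_len(length, pool_size):
    """Output length of non-overlapping 1-D pooling (stride == pool_size)."""
    return length // pool_size


def trace_shapes(timesteps=50, channels=1):
    """Return (layer name, per-sample shape) pairs for the described pipeline."""
    shapes = [("input", (timesteps, channels))]

    # Conv1D: 128 filters, kernel size 5, stride 1, valid padding, ReLU.
    t = conv1d_valid_len(timesteps, kernel_size=5, stride=1)
    shapes.append(("conv1d_relu", (t, 128)))

    # MaxPooling1D with a pool size of 2 halves the temporal dimension.
    t = pool1d_len(t, 2)
    shapes.append(("max_pool", (t, 128)))

    # BiLSTM: 128 units per direction, outputs concatenated.
    shapes.append(("bilstm", (t, 2 * 128)))

    # Multi-head attention: 4 heads x key dimension 32, projected back to 256.
    heads, key_dim = 4, 32
    shapes.append(("attention_heads_concat", (t, heads * key_dim)))
    shapes.append(("attention_output", (t, 256)))

    # Global average pooling removes the temporal axis.
    shapes.append(("global_avg_pool", (256,)))
    shapes.append(("dense", (64,)))
    shapes.append(("sigmoid_output", (1,)))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:24s} (batch, {', '.join(map(str, shape))})")
```

Changing `timesteps` or the kernel size shows how the temporal dimension propagates through the convolution and pooling stages before the recurrent and attention layers.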

5. Experiment and Result

5.1. Experiment Setup

In this experiment, a hybrid model combining CNN and BiLSTM with multi-head attention was built to classify abnormal network traffic on cloud infrastructure. Model training and testing were conducted using Python 3.12.12 on Google Colab, with NumPy and pandas for data manipulation and numerical computation; TensorFlow 2.19.0 and Keras 3.10.0 for deep learning model development; scikit-learn 1.6.1 for class imbalance handling and model evaluation; Matplotlib 3.10.0 and Seaborn 0.13.2 for data visualization; and psutil 5.9.5 for system performance monitoring. The library versions are listed in Table 5.

Table 5. Library versions.

The training process was executed on a Google Colab Pro virtual machine with an Intel Xeon CPU at 2.20 GHz, 22.5 GB of virtual random-access memory (RAM), and an NVIDIA L4 GPU, which provides high-performance computing capabilities suitable for deep learning model training. Hyperparameters were fine-tuned to enhance model performance. In addition, early stopping was used to prevent overfitting, and the learning rate was adaptively reduced during training based on the loss, enabling more effective and efficient learning.

The model was trained for a maximum of 100 epochs with a batch size of 64, balancing computational efficiency with robust gradient updates. Binary cross entropy was employed as the loss function, which is particularly suitable for binary classification tasks, ensuring effective penalization of incorrect predictions. These hyperparameter configurations and optimization strategies established a balanced training framework that emphasized generalization, convergence stability, and computational efficiency. The fine-tuned parameter values are summarized in Table 6.

Table 6. Hyperparameter values.

5.2. Model Evaluation

In this experiment, model performance was evaluated using several metrics: accuracy, precision, recall, and F1-score. The evaluation variables are defined as follows:

- True Positive (TP): The number of instances that the system predicted as attack traffic and that were indeed attack traffic.

- False Positive (FP): The number of instances that the system predicted as attack traffic but that were actually benign traffic.

- True Negative (TN): The number of instances that the system predicted as benign traffic and that were indeed benign traffic.

- False Negative (FN): The number of instances that the system predicted as benign traffic but that were actually attack traffic.

The evaluation metrics were calculated using the following equations:

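Accuracy is the proportion of correctly classified instances, calculated as in (6):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (6)$$

Precision is the proportion of true positives among all predicted positives, calculated as in (7):

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (7)$$

Recall is the proportion of true positives among all actual positives, calculated as in (8):

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (8)$$

F1-score is the harmonic mean of precision and recall, calculated as in (9):

$$\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \qquad (9)$$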
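The four metrics defined above follow directly from the confusion-matrix counts. The snippet below is a small illustrative sketch; the function name is hypothetical, and the example input uses the counts reported for the proposed model in Section 5.3.1 (Table 9).

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall, and F1-score from raw counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # Harmonic mean of precision and recall.
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Example input: confusion-matrix counts reported for the proposed model.
metrics = classification_metrics(tp=32221, fp=437, tn=69399, fn=1863)
for name, value in metrics.items():
    print(f"{name:10s} {value:.4f}")
```

Because recall depends only on TP and FN, it is the metric most directly affected by attack traffic that goes undetected.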

5.3. Results and Discussion

5.3.1. Compared with Other Deep Learning Architectures and Hybrid Models

This section reports the results for the binary classification of network traffic as benign or DDoS attack. A hybrid learning approach combining CNN and BiLSTM with a multi-head attention mechanism was used. The model was applied to the BCCC-cPacket-Cloud-DDoS-2024 dataset, where data preprocessing consisted of information gain for feature selection, normalization, and SMOTE.

Our results indicate that the proposed model is capable of accurately detecting and classifying various types of DDoS traffic. Furthermore, we conducted a comparative study between the proposed model and several deep learning architectures to identify the most effective model. All models were subjected to the same preprocessing pipeline prior to training.

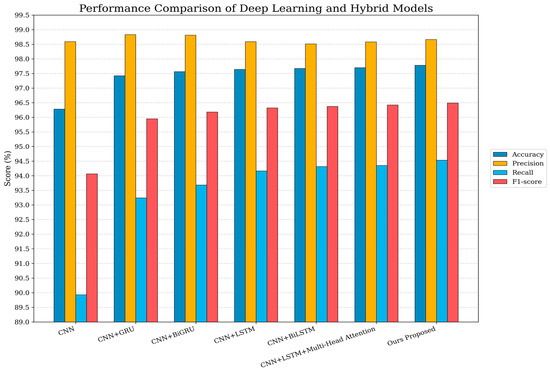

The results in Table 7 and Figure 6 show that multi-head attention combined with CNN and BiLSTM performs best among the evaluated deep learning architectures, all of which used the same data preprocessing. The standalone CNN exhibited the lowest accuracy of 96.28%. For the combined CNN and RNN models, the accuracies of GRU and BiGRU were 97.42% and 97.56%, respectively, and those of BiLSTM and LSTM were 97.64% and 96.67%, respectively. When multi-head attention was added to LSTM and BiLSTM, the accuracy increased together with the precision, recall, and F1-score. LSTM with multi-head attention reached an accuracy of 97.70%, and BiLSTM with multi-head attention produced the highest accuracy and F1-score, at 97.78% and 96.49%, respectively. Overall, integrating the CNN and BiLSTM with multi-head attention outperformed the CNN alone and the CNN combined with LSTM, achieving the highest key metrics among the compared methods.

Table 7. Results compared with other deep learning architectures and hybrid models.

Figure 6. Performance comparison results of deep learning hybrid models.

The comparative results presented in Table 7 further emphasize the impact of architectural design on model performance. With the fewest parameters (20,933), the standalone CNN model achieved 96.28% accuracy and the lowest recall of 89.93%, indicating its limited capability in capturing sequential dependencies. In contrast, CNN and RNN combinations such as GRU, BiGRU, LSTM, and BiLSTM enhanced performance. The benefit of bidirectional processing in learning contextual information was supported by the improvement of the BiLSTM models over their LSTM counterparts.

The training and inference performance results are summarized in Table 8, showing the computational resource utilization and efficiency of each deep learning architecture. During model training on Google Colab Pro with an NVIDIA L4 GPU, both CPU and GPU resources were utilized efficiently, with moderate memory consumption across configurations. Models with simpler architectures, such as the standalone CNN, achieved the fastest training time per epoch at 13.6 s and the lowest average inference time at 0.04 ms. As the model architectures became more complex, particularly those with recurrent components such as GRU, LSTM, and especially their bidirectional variants, the training time and inference latency increased accordingly.

Table 8. Model training and inference performance.

The bidirectional models, such as CNN + BiGRU and CNN + BiLSTM, exhibited higher computational costs, with average inference times of 0.2 ms and 0.181 ms, respectively, due to their doubled sequence processing paths. However, even with this added complexity, the inference times did not exceed 0.2 ms, indicating that the models can still perform real-time detection when accelerated by GPU processing. Our proposed model had the longest training duration per epoch, at 119 seconds, because of its multilayer, attention-enhanced architecture. However, it maintained efficient GPU memory usage of 2.22 GB, equivalent to approximately 9.87% of the total 22.5 GB available on the L4 GPU, demonstrating a balance between computational cost and detection capability.

The results for the proposed model were as follows: accuracy, 97.78%; precision, 98.66%; recall, 94.53%; and F1-score, 96.49%. The loss at the end of training, at 93 epochs, was 0.0646, with a false-negative rate of 5.56%, false-positive rate of 0.42%, true-positive rate of 31.01%, and true-negative rate of 66.78%.

A key observation is that the integration of multi-head attention enhances both the LSTM and BiLSTM models. CNN + LSTM with multi-head attention achieved an accuracy of 97.70%. Similarly, CNN + BiLSTM with multi-head attention, which is our proposed model, achieved the best overall performance across all evaluation metrics, with an accuracy of 97.78% and an F1-score of 96.49%. This performance gain demonstrates the effectiveness of the attention mechanism in improving generalization by enabling the model to focus on more informative features.

The number of model parameters is related to model complexity and performance. The CNN model contains 20,933 parameters. Combining the CNN with a GRU layer gives 117,249 parameters, with a BiGRU layer 232,961 parameters, with an LSTM layer 141,313 parameters, and with a BiLSTM layer 281,345 parameters. Integrating multi-head attention increases the count further: the CNN combined with an LSTM layer and multi-head attention contains 273,025 parameters, and the proposed CNN combined with a BiLSTM layer and multi-head attention contains 413,057 parameters. The additional parameters help the model focus on important features and perform well on different types of DDoS traffic.
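As a rough cross-check of these counts, the standard parameter formulas for the layer types described in Section 4 can be evaluated by hand. The sketch below is an illustration under stated assumptions: every projection has a bias term, the attention value dimension equals the key dimension (the Keras default), and only the layers described in the prose are modeled, so the total need not reproduce the reported 413,057 exactly. All function names are hypothetical.

```python
def conv1d_params(in_channels, filters, kernel_size):
    """Kernel weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def lstm_params(input_dim, units, bidirectional=False):
    """Four gates, each with input weights, recurrent weights, and a bias."""
    one_direction = 4 * ((input_dim + units) * units + units)
    return 2 * one_direction if bidirectional else one_direction


def mha_params(model_dim, heads, key_dim):
    """Query, key, and value projections plus the output projection.

    Assumes value_dim == key_dim and that every projection has a bias.
    """
    inner = heads * key_dim
    qkv = 3 * (model_dim * inner + inner)
    out = inner * model_dim + model_dim
    return qkv + out


def dense_params(input_dim, units):
    """Weight matrix plus one bias per unit."""
    return input_dim * units + units


layers = {
    "conv1d": conv1d_params(in_channels=1, filters=128, kernel_size=5),
    "bilstm": lstm_params(input_dim=128, units=128, bidirectional=True),
    "multi_head_attention": mha_params(model_dim=256, heads=4, key_dim=32),
    "dense_64": dense_params(256, 64),
    "output": dense_params(64, 1),
}

for name, count in layers.items():
    print(f"{name:22s} {count:>9,d}")
print(f"{'described layers':22s} {sum(layers.values()):>9,d}")
```

Under these assumptions the attention block contributes 131,712 parameters, which equals the difference between the reported totals with and without attention (413,057 − 281,345).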

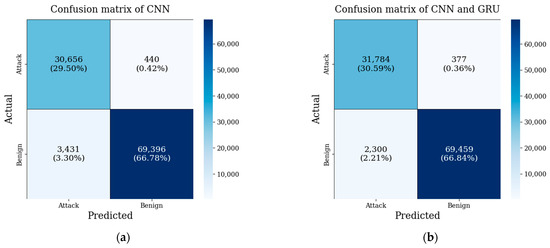

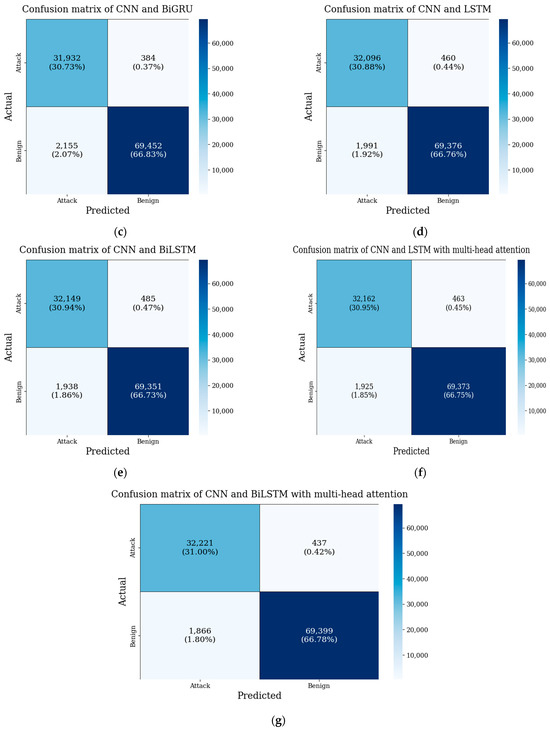

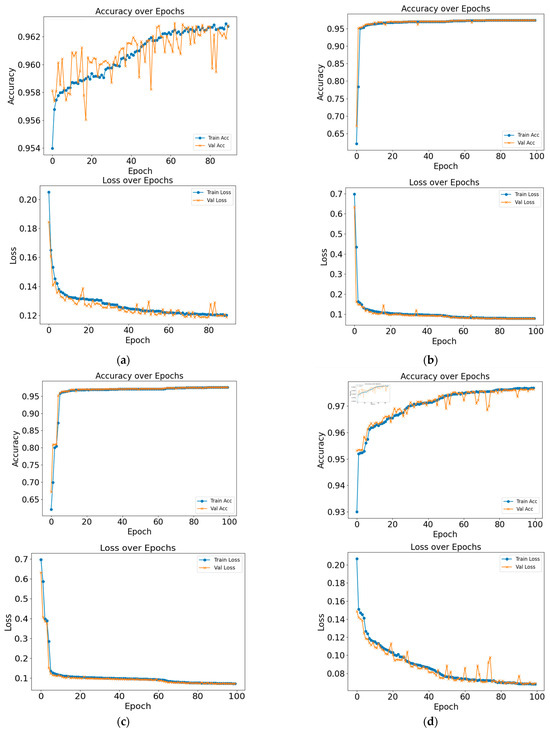

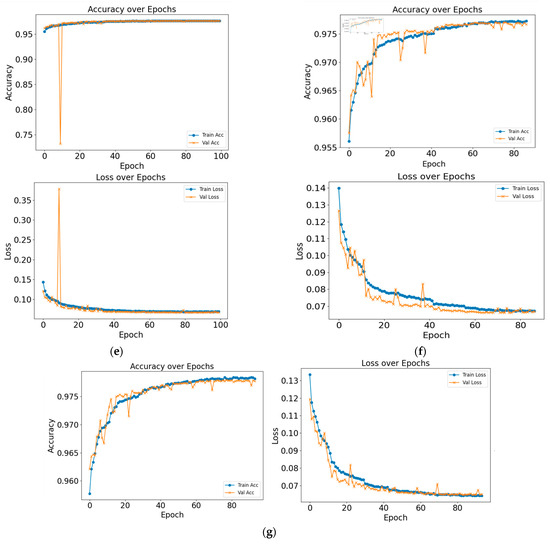

Figure 7 shows the confusion matrix of each model, illustrating the number of test samples predicted for each class versus the actual class. The results were used to calculate the true positives, true negatives, false positives, and false negatives, which are summarized in Table 9. Among all the evaluated models, the proposed hybrid architecture of CNN, BiLSTM, and multi-head attention outperformed the other configurations. It achieved the highest true positives of 32,221 and true negatives of 69,399, along with the lowest false negatives of 1,863 and false positives of 437. This shows that the model detects attack samples more effectively while correctly identifying benign traffic and keeping false alarms low, highlighting the effectiveness of integrating BiLSTM with multi-head attention for DDoS detection.

Figure 7. Confusion matrix of models. (a) CNN (b) CNN + GRU (c) CNN + BiGRU (d) CNN + LSTM (e) CNN + BiLSTM (f) CNN + LSTM with multi-head attention (g) CNN + BiLSTM with multi-head attention.

Table 9. Results of confusion matrix.

We found key advantages in combining the CNN and BiLSTM techniques. The two components complement each other, with the CNN extracting local patterns and the BiLSTM modeling sequence data. In addition, integrating a multi-head attention mechanism enhances the ability to capture complex relationships within the data. Our hybrid model consists of 413,057 parameters, which places it in a lightweight category suitable for implementation in a cloud environment for detecting network traffic in real time. In addition, training stopped early at 93 of the maximum 100 epochs. This suggests that attention not only improves predictive accuracy but also helps limit overfitting and reduce computational cost in the long run.

Figure 8 shows the training and validation loss curves for the various hybrid models. The validation loss was monitored in every training epoch. To prevent overfitting, we reduced the learning rate when the validation loss did not decrease within five epochs and terminated the training session when the validation loss did not decrease within 10 epochs. After training, we restored the model weights from the epoch with the minimum validation loss to obtain the best model for implementation. The training curves showed that the hybrid models using GRU fluctuated less than those using LSTM.

Figure 8. Training and validation accuracy and loss curves of the models. (a) CNN (b) CNN + GRU (c) CNN + BiGRU (d) CNN + LSTM (e) CNN + BiLSTM (f) CNN + LSTM with multi-head attention (g) CNN + BiLSTM with multi-head attention.
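The training schedule described for Figure 8 (reduce the learning rate after five epochs without improvement, stop after 10, then keep the best epoch) can be illustrated with a small framework-free simulation. This is a sketch under assumptions: the initial learning rate of 1e-3 and the reduction factor of 0.5 are placeholders that are not taken from the paper, and the function name is hypothetical. In Keras, the same behavior is usually obtained with the `ReduceLROnPlateau` and `EarlyStopping` callbacks.

```python
def simulate_training(val_losses, lr=1e-3, lr_patience=5,
                      stop_patience=10, lr_factor=0.5):
    """Apply plateau-based learning-rate reduction and early stopping.

    val_losses: validation loss observed at each epoch (epoch 1 first).
    lr and lr_factor are assumed values used for illustration only.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_since_best = 0      # drives early stopping
    epochs_since_lr_step = 0   # drives learning-rate reduction
    last_epoch = 0

    for epoch, loss in enumerate(val_losses, start=1):
        last_epoch = epoch
        if loss < best_loss:
            # Improvement: remember this epoch and reset both counters.
            best_loss, best_epoch = loss, epoch
            epochs_since_best = 0
            epochs_since_lr_step = 0
            continue

        epochs_since_best += 1
        epochs_since_lr_step += 1

        if epochs_since_lr_step >= lr_patience:
            lr *= lr_factor
            epochs_since_lr_step = 0

        if epochs_since_best >= stop_patience:
            break  # the weights from best_epoch would be restored here

    return {
        "best_epoch": best_epoch,
        "best_loss": best_loss,
        "stopped_epoch": last_epoch,
        "final_lr": lr,
    }


# Toy loss curve: improves for three epochs, then plateaus.
history = [1.0, 0.8, 0.6] + [0.7] * 20
print(simulate_training(history))
```

Keeping separate counters for the two rules lets the learning rate drop once or twice during a plateau before training is finally stopped.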

Although the high accuracy indicates strong detection capability, recall is another important metric in DDoS detection. Relying only on accuracy may not reflect the overall performance of the model. A low recall indicates that a model tends to miss actual attack traffic, which poses a serious risk of undetected threats passing through the cloud system.

The validation curves show sudden increases in loss and decreases in accuracy. This behavior does not appear in the training curves, which indicates that training itself proceeds normally. The sudden fluctuations in validation loss and accuracy are influenced by validation batches that contain more complex sequences, such as abnormal traffic patterns.

These fluctuations appeared mainly from the middle epochs to the last epoch, indicating an overfitting tendency. To limit this, early stopping was applied and the learning rate was reduced whenever the validation loss stopped decreasing, with a patience of five epochs for learning-rate reduction and 10 epochs for early stopping.

5.3.2. Inference Performance on Different Cloud Hardware Instances

To validate the model’s practical application in a cloud environment, we conducted a series of experiments within the Amazon Web Services (AWS) cloud environment. A simulated network was established, featuring an EC2 instance as the target server. The trained DDoS detection model was deployed on a separate EC2 instance.

Additionally, we evaluated the model’s inference performance on different hardware configurations, focusing on Intel Xeon Family CPUs with varying RAM sizes. This allowed us to observe the effect of hardware specifications on inference time, CPU usage, and memory utilization. The results demonstrated that our model could successfully detect DDoS attacks with high accuracy and low latency across different hardware setups, while maintaining efficient CPU and memory utilization.

The inference performance of the proposed DDoS detection model was evaluated across multiple AWS EC2 instance types equipped with Intel Xeon Family CPUs and varying RAM capacities of 2 GB, 4 GB, 8 GB, and 16 GB, corresponding to the t2.micro, t2.medium, t2.large, and t2.xlarge configurations, respectively.

The experimental results, which are summarized in Table 10, revealed a correlation between available memory and inference speed. In particular, when the RAM was increased from 2 GB to 16 GB, the average inference time decreased from 5.19 ms to 3.25 ms per sample, representing an approximate 37% improvement in processing speed. This reduction indicates that additional memory capacity enables smoother data handling and faster model execution during inference.

Table 10. Evaluation of model inference on AWS cloud environments.
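A per-sample latency measurement of the kind summarized in Table 10 can be sketched with the Python standard library. The harness below is illustrative only: `dummy_predict` is a hypothetical stand-in for the deployed model, and the warm-up count is an assumption. The second helper reproduces the relative-improvement arithmetic for the reported 5.19 ms and 3.25 ms averages.

```python
import statistics
import time


def mean_latency_ms(predict, samples, warmup=5):
    """Average wall-clock time of predict(sample) in milliseconds."""
    # Warm-up calls are excluded so one-time setup cost does not skew the mean.
    for sample in samples[:warmup]:
        predict(sample)

    timings = []
    for sample in samples:
        start = time.perf_counter()
        predict(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings)


def relative_improvement(before_ms, after_ms):
    """Fractional reduction in latency when going from before_ms to after_ms."""
    return (before_ms - after_ms) / before_ms


def dummy_predict(sample):
    """Hypothetical placeholder for the trained model's predict call."""
    return sum(sample) > 0


samples = [[0.1] * 50 for _ in range(100)]
print(f"mean latency: {mean_latency_ms(dummy_predict, samples):.4f} ms")
print(f"5.19 ms -> 3.25 ms: {relative_improvement(5.19, 3.25):.1%} faster")
```

Replacing `dummy_predict` with the real inference call on each instance type would yield comparable per-sample figures, provided the same input batch and warm-up are used on every instance.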

The model maintained efficient CPU performance across all configurations, with utilization decreasing from 69.15% on t2.micro to 46.45% on t2.xlarge instances. Increasing RAM improved inference efficiency without adding CPU load, resulting in a more balanced system. This suggests that the larger-memory configurations primarily improve model throughput while CPU usage does not increase, ensuring consistent processing performance.

Overall, the results confirm that the proposed model demonstrates good scalability and efficiency across different hardware configurations. Even under constrained computational resources, the model provides fast prediction results, while on higher-memory configurations it achieves faster response times with reduced CPU consumption. This indicates that the model is both resource-efficient and suitable for practical real-time deployment in cloud-based DDoS detection systems.

5.3.3. Compared with State-of-the-Art DDoS Detection

The results of our proposed hybrid model for the binary classification of DDoS attacks were compared with those of other studies from the past two years using the same key metrics, namely accuracy, precision, recall, and F1-score, as shown in Table 11.

Table 11. Comparison with other state-of-the-art DDoS detection.

Table 11 compares our hybrid model architecture with state-of-the-art DDoS detection approaches evaluated on either real or simulated datasets. The comparison covers the models, datasets, approaches, and performance indicators.

Compared with the results in [3], which reported 94% precision, recall, and F1-score using a machine learning approach with feature selection, our approach demonstrates superior performance across all four metrics: accuracy, precision, recall, and F1-score. This improvement underscores the advantage of deep learning methods over traditional machine learning in DDoS detection, because they can automatically learn complex patterns from traffic data for more robust and scalable detection.

Additionally, [20] proposed the self-attention and inter-sample attention transformer (SAINT) model, which leverages dual attention mechanisms to capture both intra-flow and inter-flow dependencies, and evaluated it on the BCCC-cPacket-Cloud-DDoS-2024 dataset, achieving 97% accuracy, 95% precision, 95% recall, and a 96% F1-score. Our approach showed superior performance in terms of accuracy, precision, and F1-score, with slightly lower recall. The advantage of our hybrid model is that it combines CNN for local spatial patterns, BiLSTM for temporal dependencies, and multi-head attention to highlight important features, providing more robust detection than transformer models. Moreover, our model is relatively lightweight, with 413,057 parameters, making it more efficient and suitable for deployment in resource-constrained environments than large-scale transformers.

Relative to [22], which reported 98.98% accuracy, 98.95% precision, 99.67% recall, and 99.31% F1-score using the DDoS-BERT model, our approach achieved comparable accuracy and precision, although with lower recall, while requiring a substantially smaller architecture of 413,057 parameters compared to 67 million in DDoS-BERT. DDoS-BERT, in contrast, suffers from heavy computational requirements, higher inference latency, and limited scalability for resource-constrained systems. This trade-off highlights the practical advantage of our lightweight design, which is more efficient and suitable for real-time deployment in cloud environments.

Compared with the results in [8], which reported 99.78% accuracy, 98.7% precision, 99.77% recall, and 99.44% F1-score using a CNN-BiLSTM with an attention mechanism on the CICDDoS2019 dataset, and the results in [19], which achieved 99.83% accuracy, 99.91% precision, 99.87% recall, and 99.89% F1-score using a CNN-BiLSTM model on the CICIDS2017 dataset, our approach achieved competitive performance while being evaluated on the more representative BCCC-cPacket-Cloud-DDoS-2024 dataset. While CICDDoS2019 and CICIDS2017 are widely used and valuable for benchmarking, BCCC-cPacket-Cloud-DDoS-2024 offers traffic patterns that are closer to real cloud environments and incorporates a broader range of modern DDoS scenarios. This makes our evaluation not only strong in terms of performance, but also practical and relevant for current cloud-based applications.

6. Conclusions

We proposed a DDoS attack detection method for network systems in cloud environments using hybrid deep learning techniques, focusing on binary classification. We introduced a hybrid architecture that integrates CNN, BiLSTM, and multi-head attention layers to effectively extract and model the sequential dependencies and attack timing patterns in network traffic. Moreover, we employed a series of well-structured data preprocessing methods. These included feature selection based on information gain to identify the most impactful features, normalization to minimize the disparity between feature scales, and SMOTE to address class imbalance in the dataset. The proposed model was evaluated on the BCCC-cPacket-Cloud-DDoS-2024 dataset. The results demonstrated that the proposed model achieved an accuracy of 97.78%, recall of 94.53%, precision of 98.66%, and F1-score of 96.49%. The model achieved the best results among the deep learning architectures evaluated in this study and competitive performance relative to related research. Importantly, the model maintains a lightweight size of 413,057 parameters, a short inference time, and high accuracy, which supports execution with limited resources. Our model can be deployed in cloud infrastructure environments, and testing in a real cloud environment on AWS demonstrated that the model executes with an inference time of less than 6 milliseconds.

This study has several limitations that should be acknowledged. The proposed model relies on GPU acceleration to enable efficient training and inference. Its complex architecture demands substantial computational resources, making GPU utilization essential for achieving practical training durations and stable convergence. This dependency may pose challenges for deployment in environments where GPU resources are limited. Furthermore, our research focuses only on binary classification between benign and attack traffic, whereas multiclass classification could distinguish among various DDoS attack types. In addition, the dataset used in this study presented a challenge due to class imbalance. Although SMOTE was employed to mitigate this issue, a more balanced dataset might still lead to improved model generalizability.

In future work, we plan to evaluate the current DDoS detection approach not only for binary classification but also for multiclass classification. We also intend to evaluate the model’s performance in software-defined networking (SDN) deployed in a real cloud environment. This will be accomplished through a prototype setup using Mininet with an OpenFlow controller to evaluate the effectiveness and stability of the system under realistic network traffic and diverse threat scenarios.

Author Contributions

Conceptualization, P.S.; methodology, P.S.; software, P.S.; validation, B.P.; formal analysis, C.B.; investigation, P.S., W.K., V.C. and C.B., writing—original draft and editing, P.S.; writing—review and editing, W.K.; visualization, V.C.; supervision, C.B. and B.P.; project administration, V.C.; funding acquisition, B.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The dataset presented in this study is BCCC-cPacket Cloud DDoS 2024, part of the cybersecurity datasets released by the Behaviour-Centric Cybersecurity Center (BCCC) at York University. Detailed information and access to the dataset can be found at the BCCC website: https://www.yorku.ca/research/bccc/ucs-technical/cybersecurity-datasets-cds/ (accessed on 14 March 2025).

Acknowledgments

We sincerely thank King Mongkut’s Institute of Technology Ladkrabang (KMITL) for their invaluable support in providing tuition fee funding and research resources.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. StormWall. DDoS Attack Report 2024. StormWall Blog. 2024. Available online: https://stormwall.network/resources/blog/ddos-attack-statistics-2024 (accessed on 20 August 2025).

2. Sharafaldin, I.; Lashkari, A.H.; Hakak, S.; Ghorbani, A.A. Developing Realistic Distributed Denial of Service (DDoS) Attack Dataset and Taxonomy. In Proceedings of the International Carnahan Conference on Security Technology (ICCST), Chennai, India, 1–3 October 2019.

3. Shafi, M.; Lashkari, A.H.; Rodriguez, V.; Nevo, R. Toward Generating a New Cloud-Based Distributed Denial of Service (DDoS) Dataset and Cloud Intrusion Traffic Characterization. Information 2024, 15, 195.

4. Fathima, A.; Devi, G.S.; Faizaanuddin, M. Improving Distributed Denial of Service Attack Detection Using Supervised Machine Learning. Meas. Sens. 2023, 30, 100911.

5. Liu, C.; Zhong, S. DDoS Attack Detection Method Based on Machine Learning. In Proceedings of the 15th International Conference on Software Engineering and Service Science, Changsha, China, 13–14 September 2024.

6. Naiem, S.; Khedr, A.E.; Idrees, A.M.; Marie, M.I. Enhancing the Efficiency of Gaussian Naïve Bayes Machine Learning Classifier in the Detection of DDoS in Cloud Computing. IEEE Access 2023, 11, 124597–124608.

7. Ismail; Mohand, I.M.; Hussain, H.; Khan, A.A.; Ullah, U.; Zakarya, M. A Machine Learning-Based Classification and Prediction Technique for DDoS Attacks. IEEE Access 2022, 10, 21443–21454.

8. Zhao, J.; Liu, Y.; Zhang, Q.; Zheng, X. CNN-AttBiLSTM mechanism: A DDoS attack detection method based on attention mechanism and CNN-BiLSTM. IEEE Access 2022, 11, 136308–136317.

9. Najar, A.A.; Naik, S.M. DDoS attack detection using CNN-BiLSTM with attention mechanism. Telemat. Inform. Rep. 2025, 18, 100211.

10. Cil, A.E.; Yildiz, K.; Buldu, A. Detection of DDoS attacks with feed forward based deep neural network model. Expert Syst. Appl. 2021, 169, 114520.

11. Hsu, C.M.; Azhari, M.Z.; Hsieh, H.Y.; Prakosa, S.W.; Leu, J.S. Robust Network Intrusion Detection Scheme Using Long-Short Term Memory Based Convolutional Neural Networks. Mob. Netw. Appl. 2021, 26, 1137–1144.

12. Abusitta, A.; Bellaiche, M.; Dagenais, M.; Halabi, T. A deep learning approach for proactive multi-cloud cooperative intrusion detection system. Future Gener. Comput. Syst. 2019, 98, 308–318.

13. Abdelaty, M.; Hayward, S.S.; Doriguzzi-Corin, R.; Siracusa, D. GADoT: GAN-based adversarial training for robust DDoS attack detection. In Proceedings of the IEEE Conference on Communications and Network Security (CNS), Virtual, 4–6 October 2021; pp. 119–127.

14. Najar, A.A.; Naik, M. A Robust DDoS Intrusion Detection System Using Convolutional Neural Network. Comput. Electr. Eng. 2024, 117, 109277.

15. Hnamte, V.; Hussain, J. DCNNBiLSTM: An Efficient Hybrid Deep Learning-Based Intrusion Detection System. Telemat. Inform. Rep. 2023, 10, 100053.

16. Yaser, A.L.; Mousa, H.M.; Hussein, M. Improved DDoS Detection Utilizing Deep Neural Networks and Feedforward Neural Networks as Autoencoder. Future Internet 2022, 14, 240.

17. Aydın, H.; Orman, Z.; Aydın, M.A. A long short-term memory (LSTM)-based distributed denial of service (DDoS) detection and defense system design in public cloud network environment. Comput. Secur. 2022, 118, 102725.

18. Cheng, J.; Liu, Y.; Tang, X.; Sheng, V.S.; Li, M.; Li, J. DDoS Attack Detection via Multi-scale Convolutional Neural Network. Comput. Mater. Contin. 2020, 62, 1317–1333.

19. Jihado, A.A.; Girsang, A.S. Hybrid Deep Learning Network Intrusion Detection System Based on Convolutional Neural Network and Bidirectional Long Short-Term Memory. J. Adv. Inf. Technol. 2024, 15, 219–232.

20. Kirubavathi, G.; Sumathi, I.R.; Mahalakshmi, J.; Srivastava, D. Detection and mitigation of TCP based DDoS attacks in cloud environments using a self-attention and intersample attention transformer model. J. Supercomput. 2025, 81, 474.

21. Akgun, D.; Hizal, S.; Cavusoglu, U. A new DDoS attacks intrusion detection model based on deep learning for cybersecurity. Comput. Secur. 2022, 118, 102748.

22. Le, T.T.H.; Heo, S.; Cho, J.; Kim, H. DDoSBERT: Fine-tuning variant text classification bidirectional encoder representations from transformers for DDoS detection. Comput. Netw. 2025, 262, 111150.

23. Ahmim, A.; Maazouzi, F.; Ahmim, M.; Namane, S.; Dhaou, I.B. Distributed Denial of Service Attack Detection for the Internet of Things Using Hybrid Deep Learning Model. IEEE Access 2023, 11, 119862–119875.

24. Said, R.B.; Sabir, Z.; Askerzade, I. CNN-BiLSTM: A Hybrid Deep Learning Approach for Network Intrusion Detection System in Software-Defined Networking with Hybrid Feature Selection. IEEE Access 2023, 11, 138732–138747.

25. Elubeyd, H.; Kaplan, D.Y. Hybrid Deep Learning Approach for Automatic DoS/DDoS Attacks Detection in Software-Defined Networks. Appl. Sci. 2023, 13, 3828.

26. Batchu, R.K.; Seetha, H. A generalized machine learning model for DDoS attacks detection using hybrid feature selection and hyperparameter tuning. Comput. Netw. 2021, 200, 108498.

27. Alhayan, F.; Kashif Saeed, M.; Allafi, R.; Abdullah, M.; Subahi, A.; Alghanmi, N.A.; Alkhudhayr, H. Hybrid deep learning models with spotted hyena optimization for cloud computing enabled intrusion detection system. J. Radiat. Res. 2025, 18, 101523.

28. Xiao, T.; Xing, C.; Zhang, T.; Zhao, Z. An Intrusion Detection Model Based on Feature Reduction and Convolutional Neural Networks. IEEE Access 2019, 7, 42210–42219.

29. Sogüt, E.; Erdem, O.A. Multi-Model Proposal for Classification and Detection of DDoS Attacks on SCADA Systems. Appl. Sci. 2023, 13, 5993.

30. Varalakshmi, I.; Thenmozhi, M. Entropy based earlier detection and mitigation of DDoS attack using stochastic method in SDN_IOT. Meas. Sens. 2025, 39, 101873.

31. Assis, M.V.O.D.; Carvalho, L.F.; Rodrigues, J.J.P.C.; Lloret, J.; Proença, M.L., Jr. Near real-time security system applied to SDN environments in IoT networks using convolutional neural network. Comput. Electr. Eng. 2020, 86, 106783.

32. Elaziz, M.A.; Al-qaness, M.A.A.; Dahou, A.; Ibrahim, R.A.; El-Latif, A.A.A. Intrusion detection approach for cloud and IoT environments using deep learning and Capuchin Search Algorithm. Adv. Eng. Softw. 2023, 176, 103402.

33. Patro, S.G.K.; Sahu, K.K. Normalization: A Preprocessing Stage. Int. Adv. Res. J. Sci. Eng. Technol. 2015, 2, 20–22.

34. Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic Minority Over-sampling Technique. J. Artif. Intell. Res. 2002, 16, 321–357.

35. O’Shea, K.; Nash, R. An Introduction to Convolutional Neural Networks. arXiv 2015, arXiv:1511.08458. Available online: https://arxiv.org/abs/1511.08458 (accessed on 20 August 2025).

36. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

37. Cho, K.; van Merrienboer, B.; Bahdanau, D.; Bengio, Y. On the Properties of Neural Machine Translation: Encoder-Decoder Approaches. arXiv 2014, arXiv:1409.1259. Available online: https://arxiv.org/abs/1409.1259 (accessed on 20 August 2025).

38. Cordonnier, J.B.; Martin, A.L. Multi-Head Attention: Collaborate Instead of Concatenate. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 4 May 2021; Available online: https://arxiv.org/abs/2006.16362 (accessed on 20 August 2025).

39. Mienye, I.D.; Swart, T.G. A Comprehensive Review of Deep Learning: Architectures, Recent Advances, and Applications. Information 2024, 15, 755.

40. Xie, Y.; Wang, J.; Li, H.; Dong, A.; Kang, Y.; Zhu, J.; Wang, Y.; Yang, Y. Deep Learning CNN-GRU Method for GNSS Deformation Monitoring Prediction. Appl. Sci. 2024, 14, 4004.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).