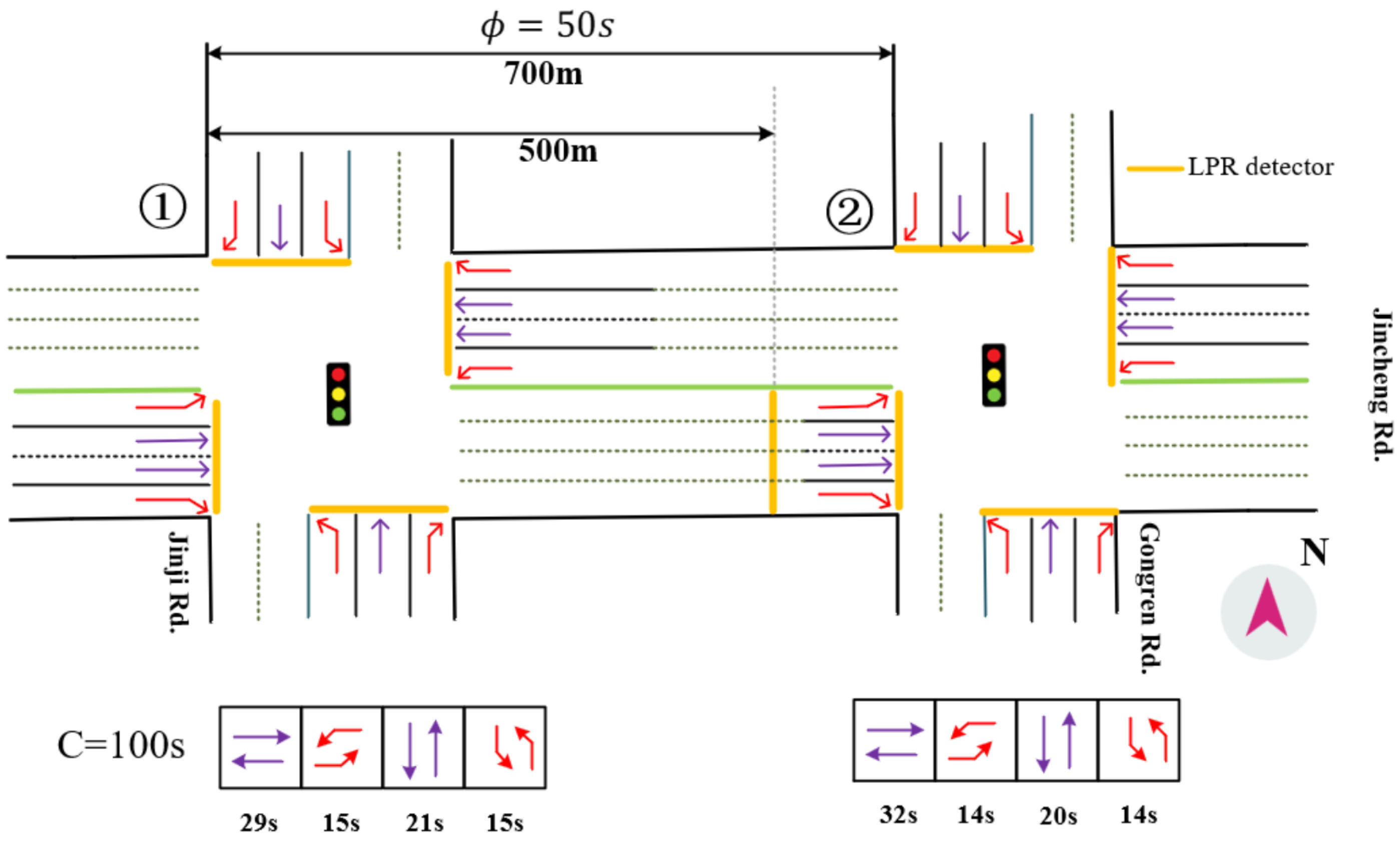

3.2.1. Rationale for Model Selection

The travel time data collected from ALPR matching is inherently a time series. The state of a vehicle in a platoon is not independent of the vehicles preceding it; rather, there exists a strong sequential dependency. For instance, a vehicle in a free-flow state is likely to be followed by another in free-flow. Conversely, the state of a vehicle following one delayed at the head of a queue depends on whether that queue has begun to dissipate. Conventional static clustering methods, such as Gaussian Mixture Models or DBSCAN, treat each travel time as an independent data point, thereby ignoring this critical temporal information. This makes it difficult to distinguish between similar travel time values that arise from different traffic dynamics (e.g., queue formation vs. queue dissipation).

The HMM is a probabilistic graphical model specifically designed to analyze sequential data with unobservable latent states. By establishing a statistical relationship between the observable outputs (travel times) and the hidden states (traffic congestion states), the HMM can capture the dynamic characteristics and temporal evolution of sequential data. The HMM is therefore well suited to this problem.

3.2.2. HMM Formulation

In this study, we define the traffic condition experienced by a vehicle as one of three hidden states. These states are not directly observed but govern the vehicle’s travel time (the observation):

State m (head-of-queue delay): Vehicles at the head of a platoon are affected by a downstream red signal. Their travel times are long but tend to decrease as the queue dissipates after the light turns green.

State u (free-flow): Vehicles are unimpeded by signals or queues and travel at or near the free-flow speed. Their travel times are stable and typically short.

State s (tail-of-queue delay): Vehicles at the tail of a platoon are delayed by a queue that has already formed ahead. Their travel times are significantly longer and may even increase as they join the back of the queue.

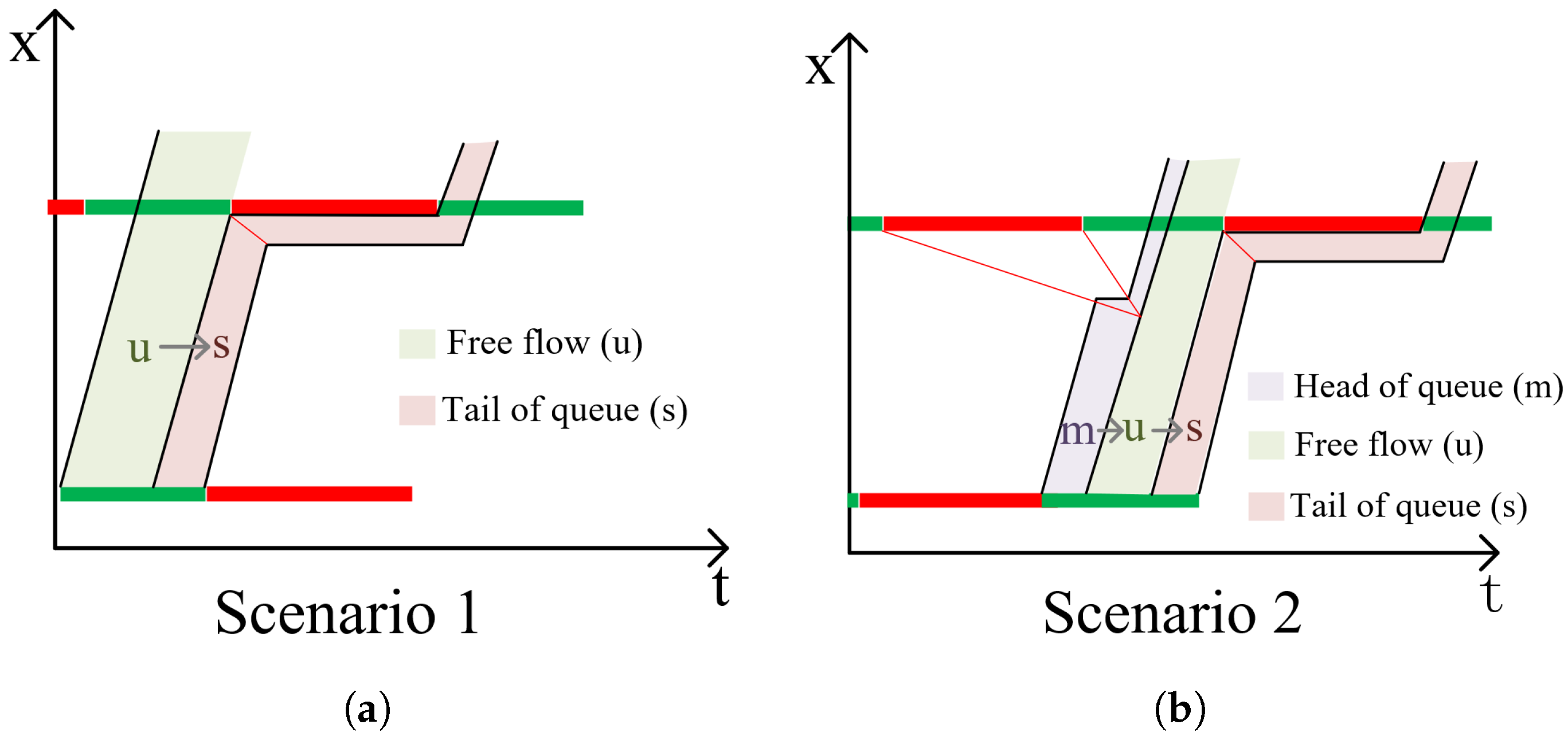

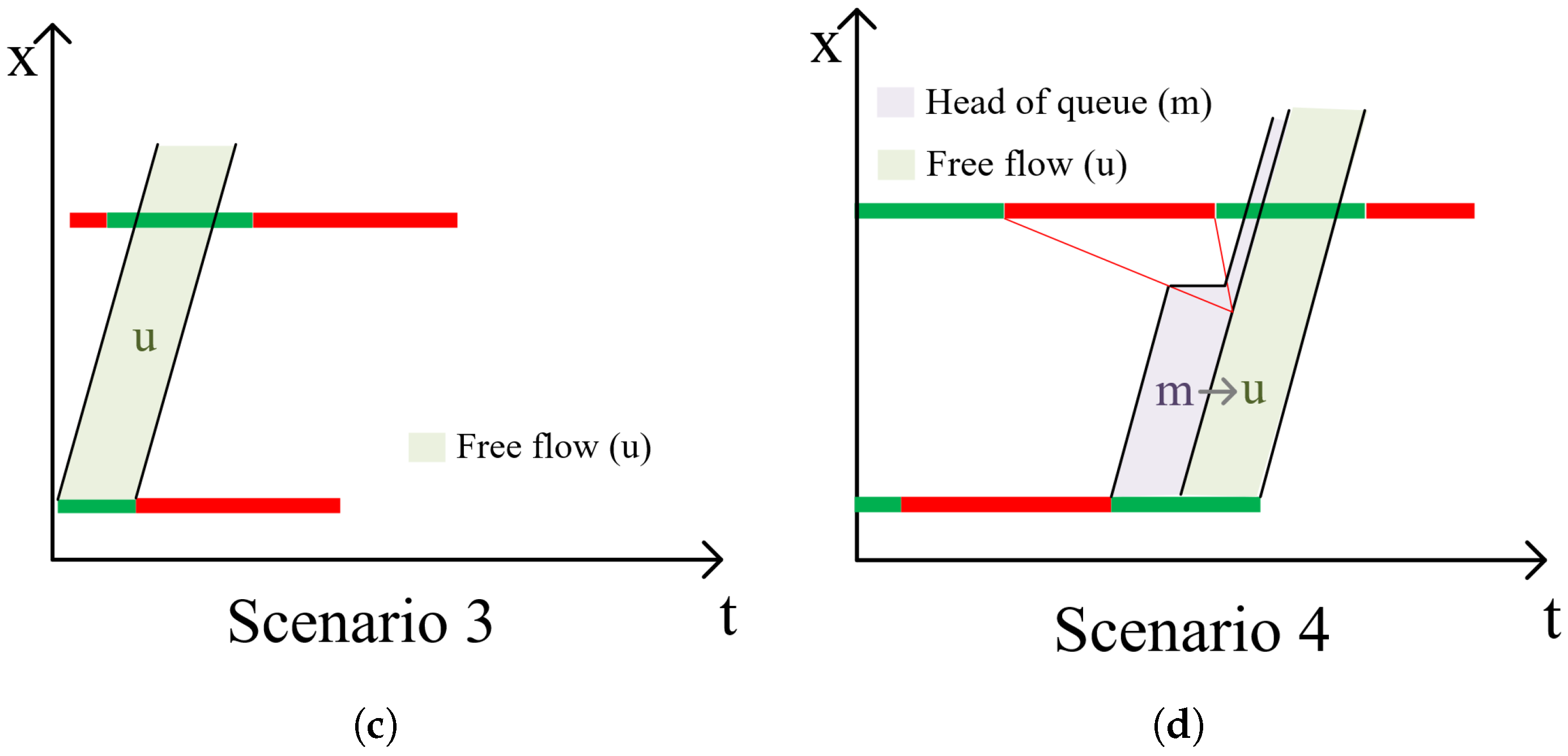

The state transitions among these three states reflect the physical process of a platoon moving through the intersection. The evolution of a platoon can be modeled as a sequence of these states. Based on the platoon’s arrival time relative to the signal phase, four typical state-transition scenarios can occur:

Scenario 1 ($u \rightarrow s$): A group of free-flow vehicles (State u) arrives, but subsequent vehicles begin to queue due to a signal change, causing a transition to tail-of-queue delay (State s).

Scenario 2 ($m \rightarrow u \rightarrow s$): A queue dissipates (State m), allowing a set of vehicles to pass in free-flow (State u), before a new queue begins to form near the end of the green phase (State s).

Scenario 3 ($u$): An entire platoon passes through the intersection during a “green wave” or otherwise unimpeded, with all vehicles remaining in the free-flow state (State u).

Scenario 4 ($m \rightarrow u$): A queue fully dissipates (State m), and all subsequent vehicles observed proceed in free-flow (State u) until the end of the observation window.
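As a minimal sketch of how the four scenarios relate to a per-vehicle state sequence, the Python snippet below collapses consecutive repeated states and looks up the resulting pattern. The function names `collapse` and `scenario_of` and the lookup table are hypothetical helpers introduced for illustration; they are not part of the method described in this study.

```python
from itertools import groupby

# Typical state-transition patterns, keyed by the collapsed state sequence.
SCENARIOS = {
    ("u", "s"): 1,       # free flow, then queue formation
    ("m", "u", "s"): 2,  # queue dissipation, free flow, new queue
    ("u",): 3,           # unimpeded platoon
    ("m", "u"): 4,       # queue dissipation, then free flow
}

def collapse(states):
    """Merge runs of identical states, e.g. m,m,u,u,s -> (m, u, s)."""
    return tuple(key for key, _ in groupby(states))

def scenario_of(states):
    """Return the scenario number, or None if the pattern is not one of the four."""
    return SCENARIOS.get(collapse(states))
```

For example, a platoon whose vehicles are labeled `m, m, u, u, s` collapses to `(m, u, s)` and maps to Scenario 2; a sequence that matches none of the four patterns returns `None`.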

It is important to note that while the physical descriptions of states m and s involve dynamic trends in travel time (e.g., decreasing for a dissipating queue), the standard HMM employed in this study simplifies this by assigning an independent observation distribution to each state. The model primarily distinguishes between these different congestion states by learning the state transition probabilities and analyzing the sequence of states, rather than by directly modeling trends within the emission probabilities. For instance, the Viterbi algorithm, by finding the most likely state sequence, can effectively differentiate typical patterns such as $m \rightarrow u$ (queue dissipation) from $u \rightarrow s$ (queue formation).

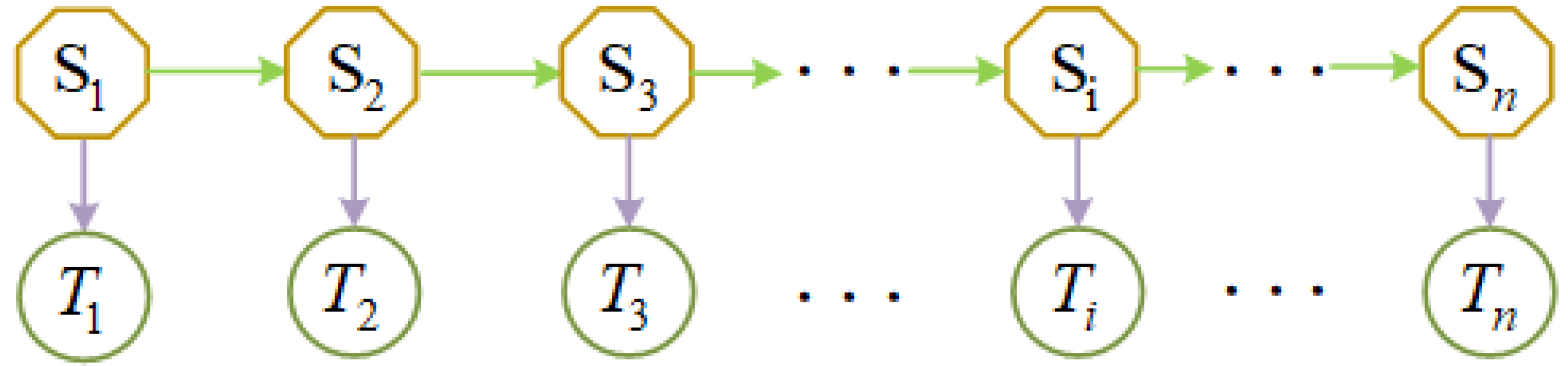

The HMM’s state transition matrix $A = [a_{ij}]$ (where $a_{ij} = P(q_{g+1} = j \mid q_g = i)$ is the probability of moving from state $i$ to state $j$ between consecutive vehicles) can characterize all of the above scenarios probabilistically by learning from the mixed data. These states and their corresponding transition scenarios are illustrated in Figure 3.

The HMM framework is illustrated in Figure 4; this layered structure explicitly models how latent congestion states (upper layer) probabilistically generate observable travel times (lower layer) while accounting for the chronological sequence of vehicles passing through downstream intersections.

The input data consist of a sequence of (departure time, travel time) tuples sorted chronologically. The travel time component is selected as the observation sequence $O = \{o_1, o_2, \ldots, o_G\}$, where $o_g$ represents the travel time of the $g$-th vehicle in the set. The departure time through the downstream intersection is used for temporal alignment but is not directly incorporated into the HMM framework.

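We model the matched travel-time sequence $O = \{o_1, \ldots, o_G\}$ and its latent congestion states $Q = \{q_1, \ldots, q_G\}$ with a first-order HMM. The joint probability factors into an initial term, state transitions, and emissions as follows:

$$P(O, Q \mid \lambda) = \pi_{q_1}\, b_{q_1}(o_1) \prod_{g=2}^{G} a_{q_{g-1} q_g}\, b_{q_g}(o_g) \tag{1}$$

Notation used in Equation (1):

$q_g \in \{m, u, s\}$: hidden state of the $g$-th observation (queue head $m$, free flow $u$, queue tail $s$).

$o_g$: link travel time (s) of the $g$-th observation; $o_g \in \mathbb{R}^{d}$ with $d = 1$.

$\pi = [\pi_i]$: initial state probabilities.

$A = [a_{ij}]$: transition matrix with $a_{ij} = P(q_{g+1} = j \mid q_g = i)$ and $i$ (origin), $j$ (destination) $\in \{m, u, s\}$.

$B = \{b_j(\cdot)\}$: emission family; $b_j(o_g) = p(o_g \mid q_g = j)$ (univariate case $d = 1$).

$\lambda = (\pi, A, B)$: parameter set of the HMM.

A compact list of all symbols is provided in Table 1.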
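To make the factorization in Equation (1) concrete, the following Python sketch evaluates the joint log-probability of one candidate state sequence by summing the initial, transition, and emission terms. The Gaussian emission density, the function names, and every numeric parameter value are illustrative assumptions, not estimates from this study.

```python
import math

def log_gauss(x, mean, std):
    """Log-density of a univariate Gaussian (an ASSUMED emission family)."""
    return -0.5 * math.log(2.0 * math.pi * std * std) - (x - mean) ** 2 / (2.0 * std * std)

def joint_log_prob(obs, path, pi, A, mean, std):
    """log P(O, Q | lambda) = initial term + transition terms + emission terms."""
    first = path[0]
    total = math.log(pi[first]) + log_gauss(obs[0], mean[first], std[first])
    for g in range(1, len(obs)):
        prev, cur = path[g - 1], path[g]
        total += math.log(A[prev][cur]) + log_gauss(obs[g], mean[cur], std[cur])
    return total

# Hypothetical parameters (travel times in seconds), chosen only for illustration.
pi = {"m": 0.3, "u": 0.5, "s": 0.2}
A = {
    "m": {"m": 0.70, "u": 0.25, "s": 0.05},
    "u": {"m": 0.05, "u": 0.80, "s": 0.15},
    "s": {"m": 0.10, "u": 0.10, "s": 0.80},
}
mean = {"m": 60.0, "u": 30.0, "s": 75.0}
std = {"m": 8.0, "u": 3.0, "s": 10.0}
```

With these assumed values, `joint_log_prob([58.0, 31.0], ["m", "u"], pi, A, mean, std)` is far larger than the same call with the path `["u", "m"]`, because a 58 s travel time is very unlikely under the assumed free-flow emission.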

Since these parameters are not known beforehand, they must be learned directly from the observed data sequence $O$. For this task, we employ the Baum–Welch algorithm [29,30]. This algorithm, a specific application of the expectation-maximization (EM) procedure, iteratively adjusts the parameters $\lambda$ to maximize the likelihood of the observed travel times, as expressed in Equations (2) and (3). The detailed steps are provided in Appendix A as Algorithm A1.
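For orientation, one Baum–Welch iteration can be sketched in Python as below. This is not Algorithm A1 from the appendix: it is a generic textbook EM update using scaled forward-backward recursions, and it assumes univariate Gaussian emissions. The function names and the `min_std` floor (a safeguard against a collapsing variance) are hypothetical.

```python
import math

def gauss_pdf(x, mean, std):
    """Univariate Gaussian density (an ASSUMED emission family)."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def baum_welch_step(obs, pi, A, mean, std, min_std=1.0):
    """One EM update. Returns (pi, A, mean, std, log-likelihood of the INPUT parameters)."""
    n, G = len(pi), len(obs)
    b = [[gauss_pdf(o, mean[j], std[j]) for j in range(n)] for o in obs]

    # Forward pass, rescaled at every step to avoid numerical underflow.
    alpha, scale = [], []
    for g in range(G):
        if g == 0:
            row = [pi[j] * b[0][j] for j in range(n)]
        else:
            row = [sum(alpha[g - 1][i] * A[i][j] for i in range(n)) * b[g][j] for j in range(n)]
        scale.append(sum(row))
        alpha.append([a / scale[g] for a in row])

    # Backward pass using the same scaling factors.
    beta = [[1.0] * n for _ in range(G)]
    for g in range(G - 2, -1, -1):
        for i in range(n):
            beta[g][i] = sum(A[i][j] * b[g + 1][j] * beta[g + 1][j] for j in range(n)) / scale[g + 1]

    # E-step: state posteriors (gamma) and expected transition counts (xi summed over g).
    gamma = [[alpha[g][i] * beta[g][i] for i in range(n)] for g in range(G)]
    xi_sum = [[0.0] * n for _ in range(n)]
    for g in range(G - 1):
        for i in range(n):
            for j in range(n):
                xi_sum[i][j] += alpha[g][i] * A[i][j] * b[g + 1][j] * beta[g + 1][j] / scale[g + 1]

    # M-step: re-estimate every parameter from the posteriors.
    new_pi = gamma[0][:]
    new_A = [[xi_sum[i][j] / sum(xi_sum[i]) for j in range(n)] for i in range(n)]
    new_mean, new_std = [], []
    for j in range(n):
        weight = sum(gamma[g][j] for g in range(G))
        m = sum(gamma[g][j] * obs[g] for g in range(G)) / weight
        var = sum(gamma[g][j] * (obs[g] - m) ** 2 for g in range(G)) / weight
        new_mean.append(m)
        new_std.append(max(math.sqrt(var), min_std))

    log_lik = sum(math.log(c) for c in scale)
    return new_pi, new_A, new_mean, new_std, log_lik
```

Calling `baum_welch_step` repeatedly, feeding each output back in as the next input, yields a non-decreasing sequence of log-likelihoods; iteration would typically stop once the improvement falls below a chosen tolerance. The sketch omits guards for degenerate cases, such as a state that receives no posterior weight.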

The HMM in this study uses continuous observations: each $o_g$ is a scalar link travel time and $o_g \in \mathbb{R}$. Only the hidden state $q_g$ is discrete.

Once the model parameters have been estimated using the Baum–Welch algorithm, the next step is to use these parameters to infer the most probable sequence of hidden states that corresponds to the observed travel times. This process, known as decoding, is accomplished using the Viterbi algorithm.

The Viterbi algorithm [31,32] finds the single most likely state sequence $Q^*$ given the observations $O$ and the now-trained model parameters $\lambda$, as shown in Equation (4). This dynamic programming approach recursively computes the maximum-likelihood path through the trellis of states, with the procedure detailed in Appendix A as Algorithm A2.

$$Q^* = \arg\max_{Q} P(Q \mid O, \lambda) \tag{4}$$
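The decoding step can be sketched as a log-domain Viterbi recursion in Python. As with the other examples, this is a generic illustration rather than Algorithm A2: Gaussian emissions are an assumption, states are indexed 0, 1, 2, and the function names are hypothetical.

```python
import math

def log_gauss(x, mean, std):
    """Log-density of a univariate Gaussian (an ASSUMED emission family)."""
    return -0.5 * math.log(2.0 * math.pi * std * std) - (x - mean) ** 2 / (2.0 * std * std)

def safe_log(p):
    """log(p), with log(0) mapped to -inf so forbidden transitions are allowed."""
    return math.log(p) if p > 0.0 else float("-inf")

def viterbi(obs, pi, A, mean, std):
    """Return the most likely sequence of state indices for the observations."""
    n = len(pi)
    # delta[j]: best log-score of any path that ends in state j at the current step.
    delta = [safe_log(pi[j]) + log_gauss(obs[0], mean[j], std[j]) for j in range(n)]
    backpointers = []
    for o in obs[1:]:
        prev, delta, pointers = delta, [], []
        for j in range(n):
            best_i = max(range(n), key=lambda i: prev[i] + safe_log(A[i][j]))
            pointers.append(best_i)
            delta.append(prev[best_i] + safe_log(A[best_i][j]) + log_gauss(o, mean[j], std[j]))
        backpointers.append(pointers)

    # Backtrack from the best final state.
    path = [max(range(n), key=lambda j: delta[j])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path
```

Working in the log domain replaces products of small probabilities with sums, which avoids underflow on long vehicle sequences.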

Finally, the inferred state sequence is used to partition the data into three groups corresponding to states $m$, $u$, and $s$. The group with the smallest mean travel time is identified as the free-flow group (state $u$).
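The grouping rule above can be sketched in a few lines of Python. States learned by EM carry no inherent physical label, so the example uses arbitrary integer labels and selects the free-flow group purely by its mean travel time. The function name `free_flow_group` is a hypothetical helper.

```python
from statistics import mean

def free_flow_group(travel_times, decoded_states):
    """Partition travel times by decoded state; return (label, times) of the
    group with the smallest mean travel time, taken to be the free-flow group."""
    groups = {}
    for t, state in zip(travel_times, decoded_states):
        groups.setdefault(state, []).append(t)
    label = min(groups, key=lambda state: mean(groups[state]))
    return label, groups[label]
```

For instance, travel times `[61, 59, 31, 29, 30, 88, 92]` with decoded labels `[0, 0, 1, 1, 1, 2, 2]` yield label `1` and the group `[31, 29, 30]`.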

3.2.3. Free-Flow Speed Distribution Estimation

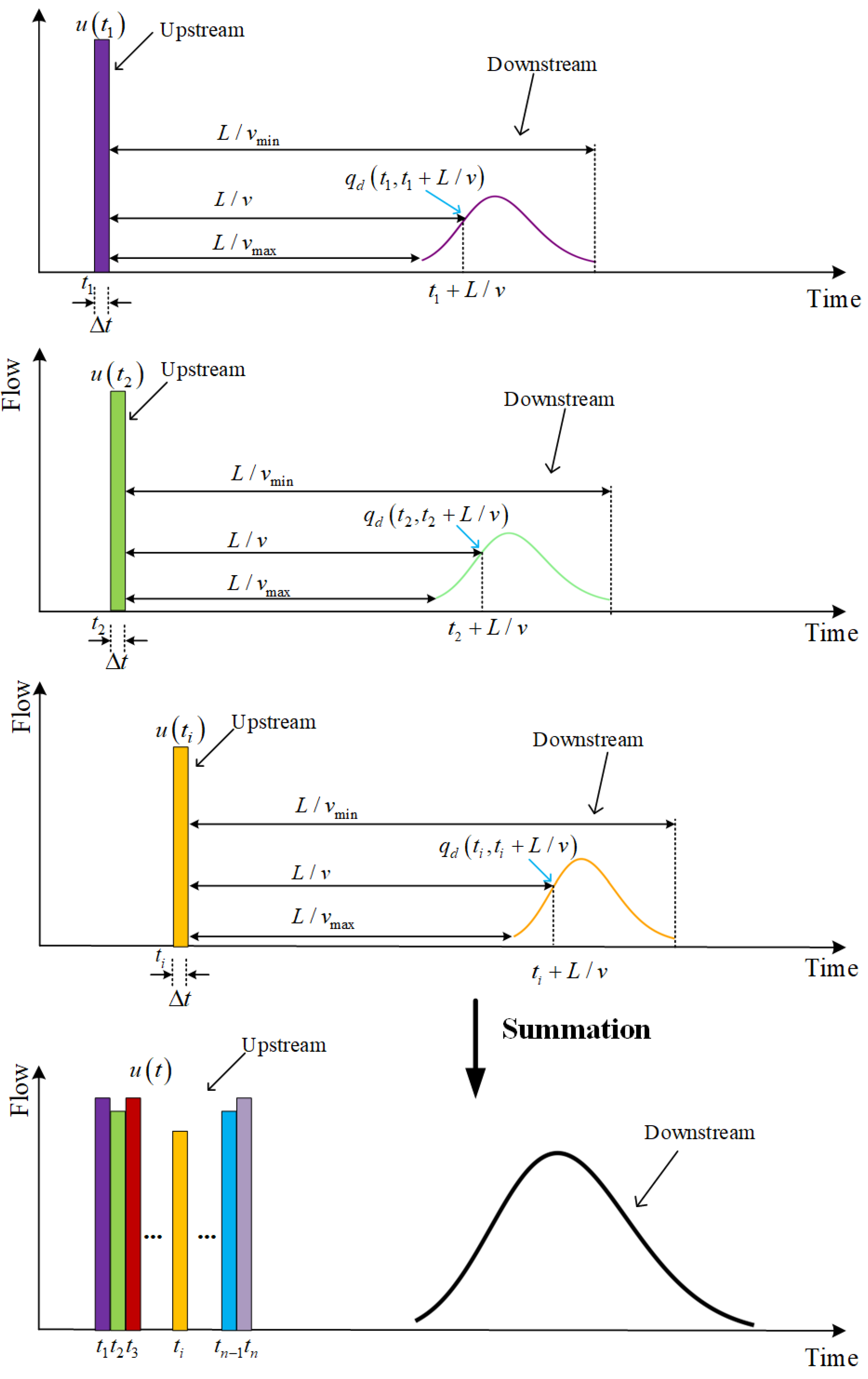

After obtaining the free-flow group, the parameters of the platoon dispersion model can be dynamically estimated. To establish the dynamic platoon dispersion model, we propose the following microscopic driving assumptions:

Speed heterogeneity: The speeds of different vehicles within the platoon are not uniform; instead, they are independent and identically distributed (i.i.d.) random variables following a common free-flow speed distribution $f(v)$.

Individual speed constancy: The speed of any individual vehicle i is assumed to be constant throughout its journey along the link from the upstream to the downstream intersection.

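In this study, the free-flow speed distribution function is assumed to follow a truncated normal distribution, as given by the following formula:

$$f(v) = \begin{cases} \dfrac{c}{\sqrt{2\pi}\,\sigma(t)} \exp\!\left(-\dfrac{\left(v - \mu(t)\right)^2}{2\sigma^2(t)}\right), & v_{\min}(t) \le v \le v_{\max}(t) \\ 0, & \text{otherwise} \end{cases} \tag{5}$$

where $c$ is the coefficient of the truncated distribution, $\mu(t)$ is the average speed at time $t$ over a 10 min time window, $\sigma(t)$ is the standard deviation of speed at time $t$ over a 10 min time window, $v_{\min}(t)$ is the minimum speed at time $t$ over a 10 min time window, and $v_{\max}(t)$ is the maximum speed at time $t$ over a 10 min time window.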
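A truncated normal density of this kind can be evaluated with the Python standard library alone, as sketched below. The sketch assumes that the coefficient $c$ is the usual normalizing constant, that is, the reciprocal of the normal probability mass between the minimum and maximum speeds, so that the density integrates to one. The function names and argument names are hypothetical.

```python
import math

def normal_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def truncated_normal_pdf(v, mu, sigma, v_min, v_max):
    """Density of a normal(mu, sigma) distribution truncated to [v_min, v_max]."""
    if v < v_min or v > v_max:
        return 0.0
    # ASSUMPTION: c renormalizes the density over the truncation interval.
    c = 1.0 / (normal_cdf((v_max - mu) / sigma) - normal_cdf((v_min - mu) / sigma))
    z = (v - mu) / sigma
    return c * math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))
```

Because $c > 1$ whenever the truncation removes probability mass, the truncated density is everywhere at least as high as the untruncated normal density inside the interval.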

Within the free-flow group, the free-flow speed set $V = \{v_1, v_2, \ldots, v_M\}$ can be obtained. Here, $M$ is the number of samples in the free-flow group within the current window. The parameters in Equation (5) can be estimated using the following formula: