Abstract

First responders operate in high-stakes environments, demanding rapid, accurate decision-making. Recent research has provided recommendations on how Augmented Reality (AR) could be utilized to support their efforts. Building off of these studies, this paper presents EasyVizAR, an AR system designed to enhance situational awareness and operational efficiency in challenging indoor scenarios. Leveraging edge computing and advanced computer vision techniques, EasyVizAR addresses critical challenges, such as object detection, localization, and information sharing. This research details the system’s architecture, including its use of ParaDrop-based edge computing, and explores its application in rescue and active-shooter scenarios. We present our work in developing key features, including real-time object saliency cues, improved object detection, person identification, multi-user 3D map generation and visualization, and multimodal AR navigation cues.

1. Introduction

First responders face immense pressure in emergency situations, requiring split-second decisions that can determine life or death. The complexity of these scenarios, particularly in indoor environments characterized by limited visibility, restricted localization, and lack of accessible maps, underscores the need for innovative technological solutions. A common problem in these situations is the difficulty of effectively communicating pertinent information to several colleagues within the hazardous environment. This can be due to both physical challenges, such as fire hazards or obstructions like large crowds, and technological challenges, such as radio communication interference or lack of internet connectivity [1]. These challenges can, in turn, have negative mental health effects on first responders [2].

Over the past ten years, new technologies in the fields of virtual reality, augmented reality, digital twins, computer vision, wearable technologies, and edge computing have emerged through lower-cost, consumer-available hardware and devices. These advancements have created new opportunities for developing applications that use these technologies to benefit first responders [3,4,5,6]. Among these emerging technologies, augmented reality (AR) and edge computing could bring some of the greatest benefits to first responders. AR makes it possible to add supplemental information to a first responder’s field of view, thus helping them with decision making in challenging environments. Edge computing serves as the central technology for sharing information between peers in the hazardous environment.

Another recent technological development comes from Vision Transformer (ViT) models, which have recently demonstrated significant potential in tackling complex computer vision tasks, particularly open-set object detection. Innovative methodologies, such as Grounding DINO [7], integrate language features derived from textual prompts to guide visual feature inference, enabling zero-shot detection of arbitrarily specified objects. This capability holds significant promise for emergency response scenarios.

Insights gained from interviews with public safety professionals [8] demonstrate the potential to deploy language-driven object detectors in a variety of challenging emergency situations. This approach offers the flexibility to adapt to diverse scenarios without the need for extensive model retraining. For instance, during a fire incident, the object detector could be prompted to identify doors and windows, crucial escape routes within a smoke-filled environment. Similarly, in a rescue mission, the same model could be tasked with identifying a specific individual based on a descriptive prompt of their appearance.

This language-driven approach significantly simplifies tasks that are traditionally cumbersome with closed-set object detectors, such as those trained on the COCO 80 object classes. These conventional models require extensive retraining to recognize new object classes, whereas ViT models like Grounding DINO offer the adaptability to respond to dynamic and unpredictable emergency situations through simple textual prompts. This capability enhances situational awareness and operational efficiency, providing critical support to first responders in time-sensitive and high-pressure environments.

Augmented Reality (AR) offers a promising avenue to enhance situational awareness by overlaying critical information onto the user’s real-world view. Recent work has shown the possibilities of using AR to support the needs of first responders [8,9,10]. These papers help to define the needs of these groups, providing guidelines on how AR systems should be designed to meet these users’ needs. In this paper, we aim to turn these recommendations into a reality.

This paper introduces EasyVizAR, an end-to-end AR system designed to address the challenges faced by first responders, focusing on indoor fire rescue and active-shooter scenarios as case studies. In particular, the developed technologies aim to support novel methods for object detection, localization and navigation, and information sharing and collaboration. In the sections below, we discuss the implementation of these technologies, as well as technical challenges and opportunities.

2. Related Work

Prior research has addressed key challenges faced by first responders, focusing on situational awareness and communication. Studies show that maintaining situational awareness is a significant challenge for all first responders due to dynamic and unpredictable environments. For example, paramedics struggle to track the latest information on victims in mass-casualty events, while firefighters often operate in low-visibility conditions caused by heavy smoke and steam, forcing them to navigate blindly by touching and counting walls [11,12,13]. Communication is another persistent problem, as traditional land mobile radios have limited bandwidth and are prone to signal interference. Furthermore, verbal communication often fails to effectively convey visual information, like maps, hindering a shared understanding of a scene [14,15].

While some research has explored the use of technology by first responders, the application of augmented reality (AR) has been limited. Early studies often asked participants to imagine the experience of using AR glasses, rather than providing hands-on experience [16,17]. The few works that did involve a physical device, such as the study with the Recon Jet eyewear [18], used technology that functioned more like an external phone screen than a true AR display. These devices required users to repeatedly shift their attention between the screen and the physical world, highlighting a major limitation and leaving a gap in understanding how modern AR head-mounted displays could be used.

Extensive efforts have been made to create AR systems for first responders, but most have focused on handheld AR on mobile devices [19,20,21,22,23,24,25]. While systems like THEMIS-AR [22] and others have shown promise by overlaying contextual information onto a smartphone camera’s video stream, they force users to occupy one of their hands and constantly switch their focus between the screen and their surroundings.

In contrast, head-mounted AR frees a user’s hands for critical primary tasks and avoids these attention shifts. However, only a small number of studies have explored head-mounted AR for first responders [26,27,28,29]. These works typically focus on the technical framework and development of a system. Another example comes from Nelson et al., who utilized a user-centered approach to design head-worn AR tools for triaging [30].

More recently, teams have worked with first responders directly to better understand how to build effective solutions for their particular needs [8,9,10]. Additionally, recent work has demonstrated the utilization of AI and audio to support first responders in an Extended Reality (XR) scenario [31]. Finally, another recent example of collaborative XR for first responders is a study by Wilchek et al., which equipped canines with AI-powered cameras to enable precise and rapid object detection to improve survivor localization [32].

3. System Architecture

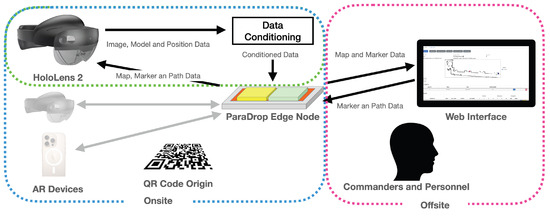

EasyVizAR leverages edge computing to address the computational demands of AR applications. By offloading computationally intensive tasks from the head-mounted AR device to nearby edge nodes, we reduce battery drain and thermal issues. We employ ParaDrop [33], a lightweight, containerized edge-computing platform, to provide flexible and efficient processing capabilities. This distributed architecture is crucial for supporting real-time object detection, image processing, and 3D map generation. A conceptual diagram of the EasyVizAR system can be found in Figure 1.

Figure 1.

A diagram of the EasyVizAR system architecture.

3.1. Edge Node

The goal of the edge node is to act as a central hub for data and computing. We utilize ParaDrop, a rugged, battery-powered unit that can be left in the emergency response vehicle or carried on site to provide cross-agency edge-computing support for collaboration in AR. ParaDrop uses containerization technologies to dynamically instantiate computation in a highly programmable manner. It uses a lightweight hardware footprint, such as common WiFi Access Point nodes, which can be augmented with additional hardware modules, such as NVIDIA Jetson to include GPU functionality and provide rich computational support. Finally, it provides specific access to multiple wireless access technologies (WiFi, Bluetooth, etc.) to allow for flexible interactions.

The edge node is tasked with providing security and collaboration capabilities that simplify access and sharing among multiple personnel. It is first responsible for maintaining a list of active devices from which data will be acquired and to which data will be pushed. The edge node also provides web hosting to enable offsite personnel to view and interact with the system in real time. Data collected from the devices is used to build a floor plan/map of the space. Onsite or offsite users can add labels to this map in real time, and the edge node is responsible for ensuring that this information is distributed to all devices in real time.

Additionally, the edge node can augment the computing capabilities of the AR devices. This enables operations that could not be performed on the device itself due to its hardware limitations. However, even in cases in which the AR device can perform these operations, edge computing can still provide significant value. Computationally intense operations can cause application-interrupting lag in the visual display, reduce battery life, and cause devices to overheat. Offloading these operations often offsets these computational costs and provides benefits that outweigh the added complexity of data transmission.

3.2. AR Devices

The developed system was primarily focused on building tools for the Microsoft HoloLens 2 headset, an optical see-through AR device. This device was chosen based on interviews with first responders, who felt optical see-through AR provided the most value and safety because views were not mediated through a video camera. In contrast to video pass-through, optical devices show the stereoscopic AR holograms via transparent displays, so if they lose power or tracking, the user can still see the surrounding environment. The failure case is therefore a loss of the AR visuals rather than a loss of the user’s entire view of the physical world. While the HoloLens 2 was the primary device, we note that the system works for any device that can self-locate. Other tested devices include mobile phones, quadruped robots, and quadcopter drones. A QR code was used to specify the origin, placing any compatible device in the same unified coordinate frame.

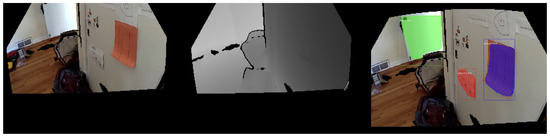

When enabled, the HoloLens 2 Research Mode allows a developer to access images from the six different cameras on the hardware platform. Using this access, the team developed a Unity plugin that reads these camera streams from a C++ software framework and shares the acquired data with the higher-level Unity framework. One of the camera streams comes from a Long-Range Depth Camera; an example of its depth output can be seen in Figure 2 (Middle). This time-of-flight camera provides depth images (1–5 images per second) in an unsigned 16-bit integer format, in which each pixel value represents a distance in millimeters away from the user. By combining these images with a series of matrix transformations representing the location of the HoloLens 2 in the 3D environment, it is possible to recreate a 3D point cloud of what the user sees by projecting the 2D depth image pixel values back out into 3D space. Performing this with only the depth data stream results in a point cloud without color, which is hard for users to interpret. To alleviate this, the team determined a way to combine the HoloLens 2’s long-range depth image with the corresponding color camera image to find pixels that overlap in 3D space between the two images; the results of this alignment are shown in Figure 2 (Left). This combination allowed each depth pixel to be associated with a color pixel, producing a fully colored point cloud for the users to view. Note that, by default, captured color images on the HoloLens 2 differ in both field of view and resolution from captured long-range depth images; thus, a rectification step is needed to find the pixels that correctly coincide with the same 3D locations. To achieve this, the color and depth pairs are calculated by unprojecting the depth pixels into 3D space, and then projecting those 3D locations back onto the color image associated with the same timestamp, using the color camera’s intrinsic and extrinsic parameters. The result of these steps is that the pixels in camera space can be positioned in world space.
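The unproject-then-reproject step can be illustrated with a minimal sketch. It assumes an ideal pinhole model for both cameras, treats the depth value as distance along the optical axis, and uses hypothetical function names and intrinsics (`fx`, `fy`, `cx`, `cy`); the actual device may require a per-pixel ray lookup and calibrated parameters, so this is not the system's implementation.

```python
def unproject(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project depth pixel (u, v) to a 3D point in meters (depth camera frame)."""
    z = depth_mm / 1000.0  # depth images store millimeters
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def transform(point, rotation, translation):
    """Apply the rigid transform p' = R p + t (rotation is a 3x3 nested list)."""
    return tuple(
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point, fx, fy, cx, cy):
    """Project a 3D point in the color camera frame to pixel coordinates."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, no valid pixel
    return (fx * x / z + cx, fy * y / z + cy)


def depth_pixel_to_color_pixel(u, v, depth_mm, depth_k, color_k, rotation, translation):
    """Find the color pixel that observes the same 3D location as a depth pixel.

    depth_k and color_k are (fx, fy, cx, cy) tuples; rotation and translation
    map points from the depth camera frame into the color camera frame.
    """
    if depth_mm == 0:
        return None  # assumption: zero marks an invalid depth reading
    p_depth = unproject(u, v, depth_mm, *depth_k)
    p_color = transform(p_depth, rotation, translation)
    return project(p_color, *color_k)
```

Applying the same `transform` with the headset's pose instead of the depth-to-color extrinsics would place the unprojected point in world space.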

Figure 2.

Aligned captures from our HoloLens 2 application. Left: A color image indoors showing a hallway and door. Middle: A depth image captured and aligned at the same time as the color image, such that pixel correspondences are readily available. Right: A color image showing the three segmentation and identification results overlaid with contour highlighting.

3.3. Data

Three types of data were transferred from the device to the server: text, images, and binary data. Serializable data was packed into JSON strings and sent to the server as strings of text. These strings included items such as the name of the device, the device’s pose, and other high-level actions.
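As an illustration of the text channel, the sketch below packs a device update into a JSON string. The field names (`name`, `position`, `rotation`, `action`) and the quaternion representation are assumptions made for this example, not the system's actual message schema.

```python
import json


def pack_device_update(name, position, rotation, action=None):
    """Serialize a device name and pose into a JSON string (hypothetical schema)."""
    message = {
        "name": name,
        "position": dict(zip("xyz", position)),   # meters in the unified frame
        "rotation": dict(zip("xyzw", rotation)),  # orientation as a quaternion
    }
    if action is not None:
        message["action"] = action  # optional high-level action
    return json.dumps(message)


payload = pack_device_update("headset-1", (1.0, 1.5, -2.0), (0.0, 0.0, 0.0, 1.0))
```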

Each device sends its own local 3D map of the space, exported from the HoloLens’ Mixed Reality Toolkit Software Development Kit (MRTK SDK) spatial awareness subsystem. This data is transferred as PLY files to the edge server. The server collects the PLY files from one or more headsets and integrates them into the map of the environment by cleaning the scans and removing areas of overlap. The integrated map can be visualized as a 3D model or as a series of 2D floor plans. Each AR device is capable of sending camera image data to the server in the JPEG format. These images are spatially located in the unified coordinate system. This allows for map annotations to indicate where the images were captured, for computer vision algorithms to be run in the context of a location, and for providing the ability to create color 3D models of the space.

The means of sharing data with registered clients utilized a variety of file formats. For web viewers, an SVG image was utilized. This provided an easy and accessible way to map from world coordinates to floor plan coordinates and back. This methodology enabled offsite individuals to create markers in 2D floor plan space that could be visualized by immersed individuals in the space itself. This includes sets of known communication icons, navigation paths for users, and hand-drawn map annotations.
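The mapping between world coordinates and floor plan coordinates can be sketched as a simple linear transform. This example assumes the floor plan's view box is expressed in world units (meters) on the horizontal plane and that the image axes are aligned with the world axes; the function names and parameters are hypothetical.

```python
def world_to_plan(x, z, view_box, image_size):
    """Map a horizontal world position (meters) to floor plan pixel coordinates.

    view_box is (left, top, width, height) in world units; image_size is
    (width, height) in pixels.
    """
    left, top, width, height = view_box
    img_w, img_h = image_size
    return ((x - left) / width * img_w, (z - top) / height * img_h)


def plan_to_world(px, py, view_box, image_size):
    """Inverse mapping, e.g. for a marker placed by clicking on the floor plan."""
    left, top, width, height = view_box
    img_w, img_h = image_size
    return (px / img_w * width + left, py / img_h * height + top)
```

With such a pair of functions, a marker placed by an offsite user in 2D floor plan space can be converted back to a world position for display in the headset.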

For systems not capable of natively rendering SVG images, floor plans were rasterized and sent to devices as PNG images. This proved particularly useful by allowing individuals in augmented-reality setups to explore synchronized and annotated floor plan maps. This base system provides a variety of opportunities further described in Section 4.

3.4. Data Conditioning

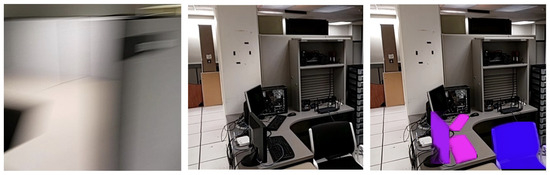

While the XR devices were constantly acquiring new data, not all of this information provided the same value for the system. As an example, when the headset is relatively stationary, most images from the camera are expected to be geometrically redundant. However, when the headset is moving quickly, images may be distorted by motion blur. Therefore, it was critical to design the system to only utilize high-quality image data that contains new information about the environment.

To accomplish this, the headset’s positional and rotational velocity are checked against defined thresholds. Next, a lightweight, truncated object detection model is run on the headset that reports maximum scores for object categories but not the precise location or count. The maximum score represents the likelihood that one or more instances of a given object class are present in the image. Images that produce a high score for a configurable object of interest should be sent to the edge server for further processing, which may include image segmentation. Finally, additional considerations include the time or distance traveled since the last transmitted image and how the set of probable positive object classes compares with the set from previous images. Such measures help assess the information value of further processing a given image. A visualization of this process can be seen in Figure 3.
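The decision logic described above can be sketched as a single gating function. The threshold values, the `Frame` fields, and the order of the checks are illustrative assumptions rather than the system's actual configuration.

```python
import math
from dataclasses import dataclass


@dataclass
class Frame:
    timestamp: float      # seconds
    position: tuple       # headset position in meters
    linear_speed: float   # m/s
    angular_speed: float  # rad/s
    max_scores: dict      # object class -> maximum detector score


def probable_classes(frame, classes_of_interest, score_threshold):
    """Classes of interest whose maximum score suggests at least one instance."""
    return {c for c in classes_of_interest
            if frame.max_scores.get(c, 0.0) >= score_threshold}


def should_transmit(frame, last_sent, classes_of_interest,
                    max_linear_speed=0.5, max_angular_speed=0.5,
                    score_threshold=0.5, min_interval_s=2.0, min_distance_m=1.0):
    """Decide whether an image is worth sending to the edge server."""
    # 1. Reject frames likely to be distorted by motion blur.
    if frame.linear_speed > max_linear_speed or frame.angular_speed > max_angular_speed:
        return False
    # 2. Require a high score for at least one object of interest.
    present = probable_classes(frame, classes_of_interest, score_threshold)
    if not present:
        return False
    if last_sent is None:
        return True
    # 3. Send if a class appears that was absent from the last transmitted image.
    if present - probable_classes(last_sent, classes_of_interest, score_threshold):
        return True
    # 4. Otherwise send only after enough time or distance has passed.
    elapsed = frame.timestamp - last_sent.timestamp
    moved = math.dist(frame.position, last_sent.position)
    return elapsed >= min_interval_s or moved >= min_distance_m
```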

Figure 3.

Data-conditioning evaluation. Left: An image distorted by motion blur captured by HoloLens 2 and discarded after a low response in the truncated object processing on the headset. Middle: An image that produces a higher response in the truncated object detector is transmitted to the edge server for image segmentation analysis. Right: The edge contour segmentation annotations returned from the server; purple shows a monitor, blue shows a chair, and pink shows keyboards.

3.5. Edge-Based SLAM

While the AR devices can perform simultaneous localization and mapping (SLAM) on device, there are reasons for wanting to offload these types of computations. For example, Edge-SLAM [34] and AdaptSLAM [35] have shown a significant reduction in computation required on the mobile device by offloading some SLAM algorithm components through edge computing.

We see opportunities to expand on these approaches in two different ways. First, the leading approach to visual SLAM uses a bag-of-words method for coarse localization and camera pose estimation: it detects features in a camera image, searches previously collected images, sometimes referred to as key frames, for the largest intersection set of features, and then performs point matching and camera pose estimation. Because this method lacks semantic information about the feature points, it can be unreliable in dynamic environments or when revisiting an area after objects have changed their relative positions. As a practical demonstration of this problem, we have been able to create situations that confound the HoloLens 2 internal implementation of visual SLAM by scanning the same room with an alternating open and closed door. We hypothesize that robustness may be improved by increasing the importance of feature points on static surfaces, such as walls and floors, during the camera pose estimation step. It may also be beneficial to decrease the weight of features on movable objects, such as furniture, or expire them over time, and to completely filter out features detected on humans and animals.

Secondly, the speed and completeness of mapping can be improved through multi-user SLAM. Potential benefits of multi-user SLAM include a faster and more accurate map construction due to sampling from more viewpoints while reducing the computation on each individual headset, which would be required to independently build and maintain a map of the environment. As first responders typically work in teams, this is potentially of substantial benefit. EasyVizAR leverages edge computing to aggregate mapping data from multiple sources to gain the benefits of multi-user mapping.

3.6. Explicit Corridor Mapping

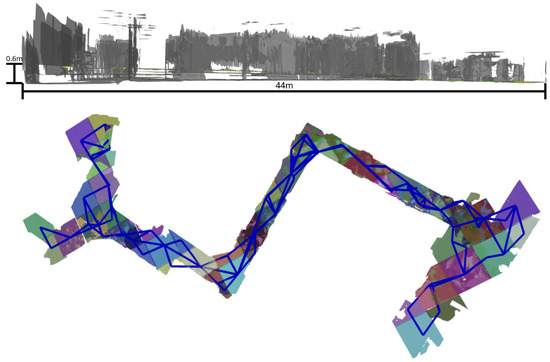

An explicit corridor map (ECM) [36] is a representation of a space that begins from a mesh structure for an indoor space and derives a graph that summarizes the important navigation points. Graph nodes are selected based on the property of being equidistant from two or more obstacles. The technique can be applied to multi-floor maps by separately processing distinct layers and merging the graphs at transition points such as stairs and ramps.

ECM is an attractive data structure for efficient navigation and compares favorably in experiments [37] to other methods, such as voxel-based methods, owing to its relative sparsity. However, the process of constructing an ECM from sensor data presented a few challenges. The first challenge was that the data collected from multiple headset scans was shown to produce vertical errors, as shown in Figure 4. We observed, in some experiments, that the headset estimate of vertical position would drift up or down over time, which leads to a poorly aligned composite mesh. We were able to identify and compensate for vertical drift to some extent by projecting user traces down to the nearest horizontal mesh element. Selecting the topmost layer produces a consistent and feasible walking surface for path planning, which is primarily concerned with movements in the horizontal plane. However, our compensation does not correct the underlying inaccuracy of the map, which requires further work similar to the optimization in bundle adjustment. In Figure 4, vertical drift is seen more strongly on the left side of the image than the right, appearing as multiple stacked floor meshes. The floor selected for the navigation mesh is the yellow- and green-tinted topmost layer.

Figure 4.

Top: Building-scan example with significant vertical drift toward the left side of the image. Bottom: Extraction of walkable subcomponents of the spatial mesh. Each colored cell is a distinct subcomponent itself, comprising a triangle mesh. Blue lines indicate the inferred navigation graph. The scale of the map is approximately 44 m in length between the furthest points, and the vertical drift measures 0.6 m between the highest and lowest points.

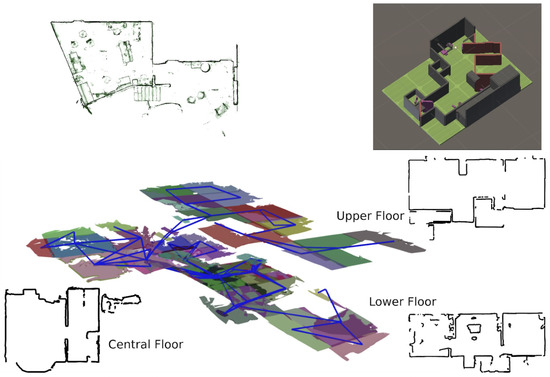

This approach, using simultaneous collection of spatial meshes and user traces, helps solve two of the major challenges we previously identified. The first challenge is that of movable vertical boundaries, such as doors, as well as gaps in the scanned walls due to sensing limitations. The user traces help us identify feasible paths that traverse doors and constrain pathfinding to known feasible movements. Second, our approach implicitly handles multiple floors by discovering the transition points that link them, which may include stair faces if they were detected or jump directly between floor landings in the worst case. Figure 5 shows the result of scanning a split-level residential building with three distinct levels. Given the resulting navigation mesh, our system is able to navigate a user between two points in any of the colored regions. Because of the coarse (2.5 m) granularity of the map structure, the resulting path may not be smooth, but it is nonetheless likely to be comprehensible in the AR display. However, we note that it is possible to refine the path through a two-stage operation that searches the finer cells that make up each visited region. Overall, we found that completing the construction of the ECM generally resulted in paths that were more intuitive and visually appealing.

Figure 5.

Top Left: Shader-based top–down map highlights local environment geometry with walls shown as heavier lines. Top Right: A simplified 3D model of a room, in which ordinary walls are colored gray, inferred walls are colored red, and furniture and other obstacles are colored purple. Bottom: Complete mapping of a split-level residential building with approximate floor plans and navigation graph connections as blue lines.

3.7. Navigation Mesh Path Planning

While the ECM approach described above provides results that are generally human-understandable, in practice, we found that noise in the input often limited its usefulness for path planning. We instead chose to aggregate data from the headsets’ local models of the space and to perform path planning with an existing path-planning facility in the Unity game engine, Navigation Meshes [38].

For this method, the edge server continually collects surface data, stored as PLY files, from one or more headsets. Each file contains a portion of the reconstructed triangle mesh, defined within a unique grid for each headset. These grids, which are not necessarily aligned with one another, are determined by the headset’s orientation at startup.

Each headset assigns a unique identifier (UUID) to these surface chunks. The UUID system attempts to maintain consistency for repeated scans of the same area, though it may change over time. To handle data redundancy, a two-fold filtering approach was implemented. First, older versions of files that share the same surface UUID are discarded, ensuring only the most recent scan data is used. Second, for surfaces with different UUIDs, the intersection-over-union (IoU) of their bounding boxes is calculated. If the IoU exceeds a threshold of 60%, only the newer surface is kept, effectively eliminating overlapping and redundant information. The remaining surfaces are then aggregated into a single, comprehensive mesh through a simple concatenation and re-indexing process. No attempt was made to stitch the seams between surfaces at this stage.
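The two-fold filtering can be sketched as follows. The surface record layout (`uuid`, `timestamp`, `bbox`) and the function names are assumptions for illustration; only the 60% IoU threshold comes from the description above.

```python
import math


def box_volume(box):
    """Volume of an axis-aligned box given as (min_corner, max_corner)."""
    return math.prod(hi - lo for lo, hi in zip(box[0], box[1]))


def box_iou(a, b):
    """Intersection-over-union of two axis-aligned 3D bounding boxes."""
    inter = 1.0
    for lo_a, hi_a, lo_b, hi_b in zip(a[0], a[1], b[0], b[1]):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0:
            return 0.0  # boxes are disjoint along this axis
        inter *= overlap
    union = box_volume(a) + box_volume(b) - inter
    return inter / union if union > 0 else 0.0


def filter_surfaces(surfaces, iou_threshold=0.6):
    """Drop redundant surface chunks before aggregation.

    Each surface is a dict with 'uuid', 'timestamp', and 'bbox' keys.
    """
    # Step 1: keep only the most recent version of each surface UUID.
    latest = {}
    for s in surfaces:
        current = latest.get(s["uuid"])
        if current is None or s["timestamp"] > current["timestamp"]:
            latest[s["uuid"]] = s
    # Step 2: visit newest first; drop a surface that overlaps a newer kept one.
    kept = []
    for s in sorted(latest.values(), key=lambda s: s["timestamp"], reverse=True):
        if all(box_iou(s["bbox"], k["bbox"]) <= iou_threshold for k in kept):
            kept.append(s)
    return kept
```

Processing surfaces from newest to oldest means that, whenever two chunks overlap beyond the threshold, the newer one is the one retained.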

From this aggregated dataset, the mesh was run through Unity’s voxel-based algorithm to construct a NavMesh. This method, often used to control the way non-player characters can move around in a game, proved to be an efficient way of providing paths to individuals in the real world. These NavMeshes can be used locally by individuals in the field or by remote commanders through Unity.

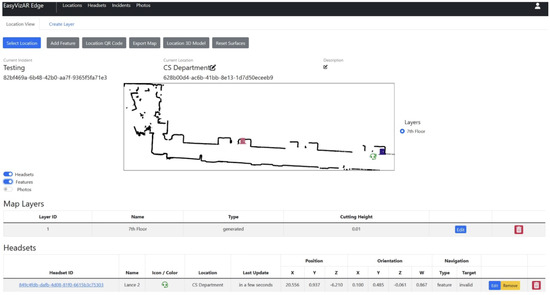

3.8. Floor Plan Generation

From the aggregated mesh generated using the method described above, a 2D floor plan is generated by computing the intersection of a horizontal plane with the 3D model (Figure 6). This process involves iterating through the mesh’s triangles to determine which ones intersect the plane. For each intersection, the resulting line segment is recorded. The library used for this step leverages triangle adjacency, which allows it to output continuous paths (a series of connected points) instead of individual, disconnected line segments. This method provides a more compact and coherent representation of the floor plan. The paths or line segments are then written to an SVG file, using SVG polyline or line elements. The SVG’s viewBox and transformation matrix are configured to ensure the generated map is correctly scaled and oriented. When needed, the SVG can be rasterized into a PNG file. The height of this cutting plane can be adjusted to capture different floors or levels within the environment. While it is possible to generate a detailed topographical map with numerous cutting planes, this was too computationally expensive for a real-time application. The default cutting height was set to zero, corresponding to the height of a reference QR code, which was typically placed at waist or table height for optimal results.
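The plane-mesh intersection can be sketched in a few lines. This example assumes a y-up coordinate convention (as in Unity), emits individual line segments rather than the adjacency-based continuous paths described above, and uses hypothetical function names; it is a simplified illustration, not the library the system relies on.

```python
def slice_triangle(tri, height, up=1):
    """Intersect one triangle with the horizontal plane at the given height.

    tri is three (x, y, z) vertices; 'up' is the index of the vertical axis.
    Returns a 2D segment ((a0, a1), (b0, b1)) in the horizontal plane, or None.
    """
    # Signed distances to the plane; nudge on-plane vertices to avoid degeneracy.
    d = [v[up] - height for v in tri]
    d = [x if x != 0.0 else 1e-12 for x in d]
    points = []
    for i in range(3):
        a, b = tri[i], tri[(i + 1) % 3]
        da, db = d[i], d[(i + 1) % 3]
        if (da > 0) != (db > 0):  # this edge crosses the plane
            t = da / (da - db)
            p = [a[k] + t * (b[k] - a[k]) for k in range(3)]
            points.append(tuple(p[k] for k in range(3) if k != up))
    return tuple(points) if len(points) == 2 else None


def slice_mesh(triangles, height, up=1):
    """Collect the cross-section segments of all triangles that cross the plane."""
    segments = (slice_triangle(t, height, up) for t in triangles)
    return [s for s in segments if s is not None]


def segments_to_svg(segments, margin=0.5):
    """Write segments as SVG line elements with a viewBox in world units."""
    if not segments:
        return '<svg xmlns="http://www.w3.org/2000/svg"></svg>'
    xs = [p[0] for seg in segments for p in seg]
    ys = [p[1] for seg in segments for p in seg]
    left, top = min(xs) - margin, min(ys) - margin
    width = max(xs) - min(xs) + 2 * margin
    height = max(ys) - min(ys) + 2 * margin
    lines = "".join(
        f'<line x1="{a[0]:.3f}" y1="{a[1]:.3f}" x2="{b[0]:.3f}" y2="{b[1]:.3f}" '
        f'stroke="black" stroke-width="0.05"/>'
        for a, b in segments
    )
    return (f'<svg xmlns="http://www.w3.org/2000/svg" '
            f'viewBox="{left:.3f} {top:.3f} {width:.3f} {height:.3f}">{lines}</svg>')
```

Calling `slice_mesh` with different `height` values corresponds to adjusting the cutting plane to capture different floors.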

Figure 6.

The web dashboard shows the latest version of the floor plan including annotations. In this case, the map shows two door markers in pink and purple and the current location of a headset as a green marker. The dashboard enables command staff to perform various functions, including adding markers by clicking on the map and exporting the map into various 2D and 3D formats.

4. Applications

Building off the infrastructure described above, a series of applications were developed to better support first responders. Details are provided in the section below.

4.1. Object Detection and Person Identification

The first application uses color images from the HoloLens’ main camera to run edge-assisted object detection. The detector can return object bounding boxes or segmentation masks to the headset so that it may display additional information about the objects in augmented reality. The headset uses its integrated depth-sensing capability to measure the distance of the object and associate a point in space corresponding with that object to display an AR saliency cue. By matching corresponding pixels from the color image, depth image, and object mask, a location for each detected object can be inferred and marked on the building map. The matching and segmentation are visually demonstrated in Figure 2. To gain deeper insight into this domain, we developed a baseline prototype that runs either a pretrained closed-set detector, such as YOLO, or an open-set detector, such as Grounding DINO, on our edge server using unmodified camera images from the headsets, along with a simple web interface that enables the commander to specify objects of interest using plain language. For instance, during a hostage-rescue scenario, the commander might input a description of the suspect individual, whereas during a fire scenario, the commander might instead select doors, windows, and ladders.
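One way to infer an object's location from an aligned mask and depth image is sketched below. Using the mask centroid and the median of the valid depth samples is an assumption made for this example; the text does not specify how the matched pixels are aggregated, and the function name is hypothetical.

```python
from statistics import median


def locate_object(mask, depth_mm):
    """Estimate an object's image position and distance from aligned images.

    mask and depth_mm are row-major 2D lists of equal size; mask entries are
    truthy inside the object, and a depth of 0 is assumed to mean "no reading".
    Returns (u, v, depth_mm) or None when no valid sample exists.
    """
    samples = [
        (u, v, depth_mm[v][u])
        for v, row in enumerate(mask)
        for u, inside in enumerate(row)
        if inside and depth_mm[v][u] > 0
    ]
    if not samples:
        return None
    u_center = sum(s[0] for s in samples) / len(samples)
    v_center = sum(s[1] for s in samples) / len(samples)
    # The median is less sensitive than the mean to background pixels
    # that leak into the mask near the object boundary.
    return (u_center, v_center, median(s[2] for s in samples))
```

The returned pixel and depth could then be unprojected into 3D and transformed by the headset pose to place a marker on the building map.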

The second application uses face recognition on the edge server to return the names of individuals present in the scene to the headset to be displayed in AR in a similar way. In a deployed solution, personnel could populate the server database with images of personnel and persons of interest, or the system could be trained in an online manner as people present at the scene are identified.

To perform object detection and person identification, the YOLOv10 [39], Grounding DINO [7], and DeepFace [40] models were used. YOLOv10’s novel architecture eliminates the need for non-maximum suppression, improving performance on resource-constrained devices. Grounding DINO’s language-driven approach enables zero-shot detection, allowing users to identify objects based on textual descriptions.

Recently, vision transformer (ViT) models have shown promise in challenging computer vision tasks, such as open-set object detection. Novel approaches such as Grounding DINO fuse language features from a text prompt to guide inference on visual features and enable zero-shot detection for arbitrarily specified objects. Based on interviews with public safety professionals, we saw the potential to leverage language-driven object detectors in a variety of complicated emergency scenarios without the need for expensive model retraining. For example, the object detector could be prompted to find doors and windows during a fire incident, as those are potential escape routes. The same model could be prompted to identify a specific person by a description of their appearance during a rescue mission.

To assess the suitability of this approach, we developed prototypes around the VILA [41] and Grounding DINO [42] models. VILA is a lightweight model suited to responding to text queries about one or multiple images, especially for summarizing or question-answering tasks, whereas Grounding DINO produces bounding boxes for the objects described in the prompt. For our prototype, the color images are captured on the headset, compressed, and sent to the edge compute server for processing.

4.2. Visualizations

To visualize the server-generated floor plan of the space, several techniques were explored. Beyond the simple SVG floor plan described earlier, another approach to rendering top–down maps was developed, which better highlights objects and potential obstacles in the environment and readily incorporates data from multiple headsets (Figure 5 Top Left). This technique uses surface shaders, which can be run on either the edge host CPU or GPU, together with normal vectors from the environment mesh captured by the HoloLens headsets to calculate a continuous color gradient based on changes in local surface geometry. The example shown in Figure 5, which depicts an office workspace, visually reveals different types of furniture in the environment, including a couch, table, and chairs, with a small stairway readily apparent. This type of map visual is potentially too information-dense to use in a heads-up display but may be more suitably displayed in a top–down command dashboard to give outside users a better understanding of the interior environment. It may also enable users to refer to room features during verbal communications, e.g., by asking the team to explore what lies beyond the stairs in the map shown in Figure 5.
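As a rough illustration of normal-based shading, the sketch below maps a surface normal to a color by remapping each component from [-1, 1] to [0, 255]. The function name `normal_to_rgb` and this particular mapping are assumptions made for illustration only; they are not the shaders used in the system.

```python
import math


def normal_to_rgb(nx, ny, nz):
    """Map a surface normal to an RGB color (hypothetical mapping).

    The vector is normalized, then each component is remapped from
    [-1, 1] to [0, 255], so smoothly varying geometry produces a
    smoothly varying color gradient.
    """
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        return (128, 128, 128)  # degenerate normal: neutral gray
    return tuple(
        int(round((c / length * 0.5 + 0.5) * 255)) for c in (nx, ny, nz)
    )


floor_color = normal_to_rgb(0.0, 1.0, 0.0)  # upward-facing surface
wall_color = normal_to_rgb(1.0, 0.0, 0.0)   # surface facing +x
```

Under this mapping, horizontal surfaces such as floors and tabletops share one color while walls take another, which is one simple way that furniture and stairs could stand out in a top–down rendering.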

Another visualization technique developed was a simplified 3D view of the map that highlights important semantic features, such as walls and floors, without extraneous detail (Figure 5 Top Right). This 3D view mode can be incorporated in the existing systems by making a scalable and rotatable 3D hologram in AR and VR or as a Unity WebGL component in the command dashboard. Because surfaces are labeled by type, we can show, hide, or color them differently; change transparency effects; and so on. We worked on ways to add iconography, navigation, and other useful information to the simplified 3D view. For example, we show icons for each individual’s location in a rotatable 3D view for the commander or show a simplified floor layout with hazardous areas colored red for first responders.

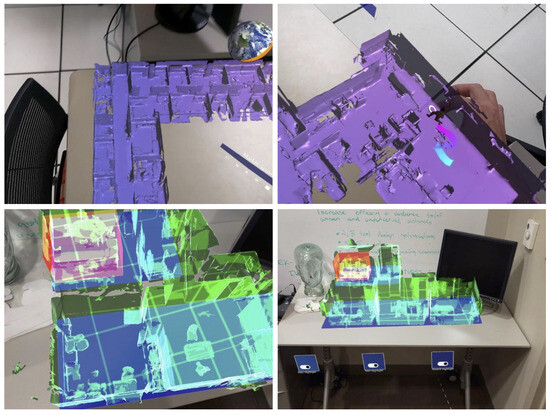

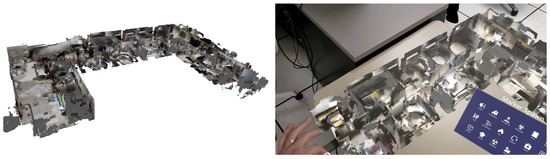

We also developed a prototype volumetric map that allows command personnel to view and interact with live data from an incident using a doll-house view laid out on a horizontal surface, such as a conference room table, as shown in Figure 7. This system is able to render the collected data from one or more HoloLens 2 headsets and display positionally consistent markers and first responder avatars. These are driven by live data from the incident, including headset user locations and spatial markers placed by individuals, such as hazards, exits, or environmental features. By leveraging the known camera pose, we can reverse-project the camera image planes to the scene geometry in order to transfer color to the existing mesh. Colorizing the mesh can be helpful when interpreting and visualizing the 3D data, as can be seen in Figure 8. This approach, while similar to capturing a colored point cloud, offers some advantages, including reduced storage size and improved rendering efficiency.
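The color transfer step can be sketched with a simple pinhole camera model: each mesh vertex is projected into a camera image using the known pose, and the pixel at that location supplies the vertex color. The function names, the world-to-camera pose convention, and the intrinsics parameters (`fx`, `fy`, `cx`, `cy`) below are assumptions for illustration. The sketch also ignores occlusion, which a real pipeline would need to handle, for example with the ray intersection tests mentioned later in this section.

```python
def project_vertex(vertex, rotation, translation, fx, fy, cx, cy):
    """Project a world-space vertex to pixel coordinates (pinhole model).

    rotation is a 3x3 world-to-camera matrix (list of rows) and
    translation is a 3-vector. Returns None for points behind the camera.
    """
    xc, yc, zc = (
        sum(r * v for r, v in zip(row, vertex)) + t
        for row, t in zip(rotation, translation)
    )
    if zc <= 0.0:
        return None
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def sample_vertex_color(vertex, image, rotation, translation, fx, fy, cx, cy):
    """Return the image value seen at a vertex, or None if out of view.

    image is a 2D list indexed as image[row][col].
    """
    pixel = project_vertex(vertex, rotation, translation, fx, fy, cx, cy)
    if pixel is None:
        return None
    col, row = int(round(pixel[0])), int(round(pixel[1]))
    if 0 <= row < len(image) and 0 <= col < len(image[0]):
        return image[row][col]
    return None
```

Storing one color per vertex, rather than one per captured point, is consistent with the storage and rendering advantages noted above.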

Figure 7.

Top Left: The building map is shown scaled appropriately to the table on which it rests. Top Right: The headset wearer moves an avatar representing another person in order to assign navigation directions to that user in their headset. Bottom Left: The building map shown as a see-through virtual hologram with color annotations. Bottom Right: A view of the model demonstrating objects in the space.

Figure 8.

Vertex-shaded color 3D mesh constructed from HoloLens data. Left: The map is rendered in isolation. Right: The same map visualized in Augmented Reality. It is placed on a table, where the user can adjust the location of the AR visualization using a thumb and index finger pinch gesture.

As we built this system, we encountered a number of design challenges, each inviting further exploration. One pressing question is how to integrate camera images and spatial meshes in real time on our edge server while users are exploring the environment, and how to minimize the overhead associated with the ray intersection calculations. To address this concern, we developed several techniques aimed at reducing redundancy and improving system responsiveness. For instance, we created a dependency graph of mesh components and photo files, allowing us to efficiently recalculate only the necessary updates when changes to the mesh occur. We also implemented strategies for reducing redundant overlapping meshes using simple metrics, such as bounding box intersection-over-union and temporal ordering, which have proven effective in practice. Finally, we employed a work-batching approach that returns the latest colored model after a timeout has been exceeded, ensuring real-time responsiveness even while using multiple headsets for lengthy scanning sessions, as shown in Figure 8.
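The dependency-graph idea can be illustrated with a minimal sketch that records which photos contributed color to which mesh components, so that a mesh update triggers re-projection of only the affected photos. The class and method names here are hypothetical and do not reflect the actual data structures on the edge server.

```python
from collections import defaultdict


class ColorDependencyGraph:
    """Tracks which photos contributed color to which mesh components."""

    def __init__(self):
        # mesh component id -> set of photo ids that colored it
        self._photos_by_mesh = defaultdict(set)

    def add_dependency(self, mesh_id, photo_id):
        """Record that a photo was used to color a mesh component."""
        self._photos_by_mesh[mesh_id].add(photo_id)

    def photos_to_reproject(self, changed_mesh_ids):
        """Return only the photos affected by the changed mesh components."""
        affected = set()
        for mesh_id in changed_mesh_ids:
            affected |= self._photos_by_mesh.get(mesh_id, set())
        return affected
```

With such a structure, an update to a single mesh component leaves photos that only cover other components untouched, avoiding a full recomputation.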

Finally, discussions with first responders led to another possible visualization system, in which commanders or offsite personnel can interact with a 3D representation of the environment in AR. Holograms denoting humans and other important information can be projected into the 3D model of the space to give the user an intuitive visual depiction of the situation inside a building. Additionally, transparency and surface-culling options can give the user various ways to see through the walls.

Multi-User and Dataset Localization

Live updating of the volumetric map gives an offsite user contextual information about the environment while teams advance in the field. Implementing live map updating requires exchanging headset data through the edge server, optimizations in the 3D model file loader, and processing and filtering chains on the edge server. Filtering and reducing redundant meshes becomes especially important when multiple headsets are simultaneously exploring an environment. Our approach to this problem uses metrics such as intersection over union (IoU) to discard meshes with a high degree of overlap and simplify the 3D model.
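A minimal sketch of IoU-based filtering is given below, assuming each mesh is summarized by an axis-aligned 3D bounding box and a capture timestamp. The 0.8 threshold, the rule that the newest mesh wins, and the function names are illustrative assumptions rather than the parameters used in the system.

```python
import math


def aabb_iou(box_a, box_b):
    """IoU of two axis-aligned 3D boxes, each given as (min_xyz, max_xyz)."""
    (a_min, a_max), (b_min, b_max) = box_a, box_b
    intersection = 1.0
    for lo_a, hi_a, lo_b, hi_b in zip(a_min, a_max, b_min, b_max):
        overlap = min(hi_a, hi_b) - max(lo_a, lo_b)
        if overlap <= 0.0:
            return 0.0  # boxes are disjoint along this axis
        intersection *= overlap
    volume_a = math.prod(hi - lo for lo, hi in zip(a_min, a_max))
    volume_b = math.prod(hi - lo for lo, hi in zip(b_min, b_max))
    union = volume_a + volume_b - intersection
    return intersection / union if union > 0.0 else 0.0


def filter_redundant(meshes, threshold=0.8):
    """Drop meshes that heavily overlap a newer mesh.

    meshes is a list of (timestamp, box) pairs; newer meshes are
    considered first, so the most recent scan of a region is kept.
    """
    kept = []
    for timestamp, box in sorted(meshes, key=lambda m: m[0], reverse=True):
        if all(aabb_iou(box, kept_box) < threshold for _, kept_box in kept):
            kept.append((timestamp, box))
    return kept
```

For example, two headsets scanning the same room would produce boxes with IoU near 1, so only the more recent mesh would be retained.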

Another feature of the volumetric map is the visualization of important situational awareness information, especially unit locations and map markers. We have chosen to represent personnel in the field with humanoid avatars that move within the map. We developed a novel interaction that enables the commander to convey walking directions to AR-equipped personnel by dragging their avatar to a different location on the map, as can be seen in Figure 7 Top Right. If a path can be calculated between the start and end points, it will be displayed for visual confirmation and automatically sent to the target headset. We hypothesize that this technique offers an intuitive way to convey walking directions to others and will be less likely to cause misunderstanding than verbal directions.

4.3. Multimodal AR Cues to Assist Navigation

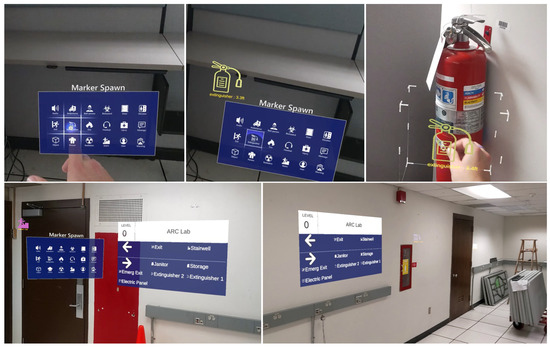

One of the goals of the developed system is to provide multimodal AR cues to enhance navigation and coordination. We have developed features such as virtual wall signs, interactive maps, and visual navigation paths. We have implemented the necessary mechanisms for team members to digitally annotate information in the environment and retrieve and display that information in AR. For data creation, we have implemented menus in both the AR interface and command dashboard for users to place different types of virtual markers that may signify environmental hazards or other points of interest, with several different icon shapes and colors, as shown in Figure 9. We have also designed primitives for specifying how the information should be displayed, whether as a hologram at a specific point in space or as an image projected on a surface such as a wall or floor, any text that should be displayed, and whether the information should be displayed within a certain radius or for a limited duration.
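One way to picture these display primitives is as a small record attached to each marker, as in the sketch below. The field names, the `display_mode` values, and the `should_display` rule are hypothetical and are meant only to illustrate the kinds of options described above, not the system's actual schema.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class VirtualMarker:
    """Hypothetical marker record with display options."""

    position: Tuple[float, float, float]
    marker_type: str                        # e.g., "hazard" or "exit"
    display_mode: str = "hologram"          # or "surface" for a wall/floor projection
    text: str = ""
    visible_radius: Optional[float] = None  # meters; None means no distance limit
    expires_at: Optional[float] = None      # timestamp in seconds; None means never

    def should_display(self, user_position, now):
        """Apply the duration limit first, then the radius limit."""
        if self.expires_at is not None and now >= self.expires_at:
            return False
        if self.visible_radius is not None:
            distance = math.dist(self.position, user_position)
            return distance <= self.visible_radius
        return True
```

A hazard marker with a 5 m radius, for instance, would appear only to users who are close enough for it to be relevant.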

Figure 9.

Top: Three images showing the user experience of placing a virtual marker using the EasyVizAR headset. From left to right: (1) the user can select from a variety of marker types, which can be configured beforehand according to user responsibilities, (2) the marker appears as an icon in the AR space synchronized with other EasyVizAR interfaces, and (3) the user can move, rotate, and scale the marker with hand gestures as needed. Bottom: Two examples of virtual navigation signs, which are automatically populated from the navigation mesh and list of location landmarks. The signs are consistent with the surrounding environment. For example, in the left image, a door leading to the stairwell is visible, and in the right image, a fire extinguisher and janitor closet are visible.

We introduce one novel visual cue for navigation, the virtual sign. Like physical signs used in public spaces, such as airports and hospitals, virtual signs can assist users in navigating at key decision points. Unlike physical signs, virtual signs can be updated dynamically based on changing environment conditions, e.g., a blocked hallway, or based on user needs or job role. User-tailored virtual signs can reduce irrelevant visual clutter while presenting navigation cues in a familiar format. We use the user-placed markers and path-planning functions described previously to populate the signs while addressing some additional challenges.

The creation of virtual signs presents several technical challenges, such as identifying suitable locations on vertical surfaces that are large enough to accommodate a sign and ensuring clear, consistent directional guidance. The chosen sign placement must avoid ambiguous interpretations of directions. Additionally, the sign should remain legible and meaningful from various perspectives, whether viewed up close, across the room, or from the opposite side. Our primary objective is to replicate the intuitive and familiar experience of physical signs. Ideally, the sign placement should also avoid obstructing key visual elements of the environment, which could benefit from future improvements in scene understanding. Moreover, pathfinding from the sign must account for obstacles in close proximity, such as a table positioned below a sign. In such cases, the pathfinding should start at a suitable distance in front of the sign to avoid the obstruction. Some of these challenges relate to the intricacies of navigating within a 3D mesh without semantic information about the surfaces. Others can be mitigated by allowing users to place signs in appropriate locations, as our current prototype does.
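The idea of starting pathfinding in front of the sign can be sketched as a horizontal offset along the wall normal. The y-up coordinate convention, the floor at y = 0, the 1 m default standoff, and the function name are all assumptions made for this illustration.

```python
import math


def path_start_in_front_of_sign(sign_position, wall_normal, standoff=1.0):
    """Return a floor-level start point offset from a sign along the wall normal.

    Positions are (x, y, z) with y up (an assumption). The vertical
    component of the normal is ignored so the offset stays horizontal,
    and the returned point is placed on an assumed floor plane at y = 0.
    """
    nx, _, nz = wall_normal
    length = math.hypot(nx, nz)
    if length == 0.0:
        raise ValueError("wall normal has no horizontal component")
    x, _, z = sign_position
    return (x + standoff * nx / length, 0.0, z + standoff * nz / length)
```

A larger standoff could be chosen when an obstacle such as a table sits directly beneath the sign.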

We are also exploring additional enhancements that are unique to digital signs, such as interactivity. For instance, a user could click on a landmark displayed on the sign to transform it into a map with directions from their current location or activate a visual navigation path along the floor. Additionally, headsets can be configured to display customized information based on the user’s role, helping to reduce visual clutter. While all users may need directions to key locations like building entrances and exits, other specific destinations can be tailored to their immediate tasks.

Edge-Assisted Path Planning

Since first responders may not always be able to interact with AR systems through gestures or voice but are accustomed to maintaining radio contact with command staff, we have explored system designs which enable command and dispatch staff to influence the information displayed to responders in the field. One such idea in our current prototype allows the commander to configure a target point on the map for each individual. A target point could be specified as a coordinate in space, a named landmark on the map, or another user’s location. When a target is selected, the affected user headset will automatically be updated to show navigation directions to the target.

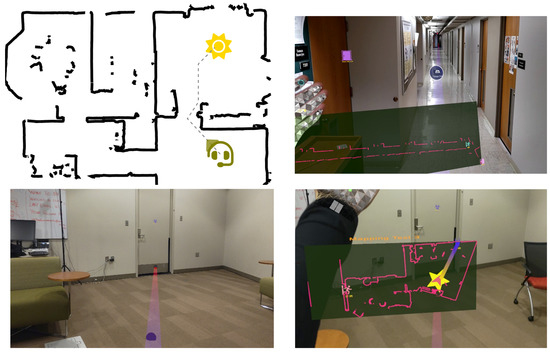

Our mapping system uses environment geometry data collected by multiple headsets and may potentially use other sensing platforms in the future. The resulting map can be used to respond to queries for navigation. Given a user’s current position and a target location, our navigation module generates a path and displays this to the user in AR as shown in Figure 10. Our current solution presents the path as a gradient-colored series of line segments projected onto the floor plane. We also display the path on the HUD map in addition to a prominent marker for the user’s current location and heading direction. We have also integrated our weighted A-star algorithm, which uses user location traces to bias the navigation to known explored locations.

Figure 10.

Top Left: Example pathfinding result which successfully routes around walls inferred from a previous exploration of the space from the headset icon to the sun icon destination. Top Right: The AR interface screenshot showing several navigation aids, including landmark icons, a hand-attached map, and a navigation route (transparent line with purple to pink gradient), which leads the user toward the end of the hallway. Bottom Left: A context-aware navigation aid leads the user to a doorway with a path projected on the floor. Small orbs mark waypoints along the path. Bottom Right: The navigation path is visible in the hand-attached map, along with an indicator of the user’s current location and orientation.

Our search algorithm uses two pieces of information that are captured by the EasyVizAR system: line segments corresponding to vertical obstacles and position and orientation time series captured while users move around the environment. In the absence of position history, such as when initially entering a building with only a pre-incident plan map, it may still be possible to find a path between two points using A* search. However, maps may contain errors both in the form of false walls and missing walls. False walls typically arise from closed doors but may also be a result of scanning or alignment error. Gaps or missing walls often occur due to incomplete scans but sometimes also result from highly absorptive surfaces such as large black screens. In the case of map errors, position history can be collected as users explore the space and used to bias the pathfinding search in favor of previously explored spaces in our weighted A* search implementation. Both components are implemented in the system.
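The following is a minimal sketch of how position history could bias A* search on a grid map. It assumes a 4-connected occupancy grid, a set of cells that users have previously walked through, and a fixed penalty for stepping into unexplored cells. These choices, along with the function name and default penalty, are illustrative and are not taken from the EasyVizAR implementation.

```python
import heapq


def weighted_a_star(grid, start, goal, visited, unexplored_penalty=5.0):
    """A* over a 4-connected grid, biased toward previously visited cells.

    grid[r][c] is True when the cell is passable. Stepping into a cell in
    `visited` costs 1.0; any other cell costs `unexplored_penalty`, which
    should be >= 1.0 so the Manhattan heuristic remains admissible.
    Returns the path as a list of (row, col) cells, or None.
    """
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0.0, start)]
    best_cost = {start: 0.0}
    parent = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if not grid[nr][nc]:
                continue
            step = 1.0 if nxt in visited else unexplored_penalty
            new_cost = cost + step
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None
```

With an empty `visited` set, every step has the same cost and the search reduces to ordinary shortest-path A*. As traces accumulate, routes through explored cells become cheaper than shortcuts through apparent gaps in the map.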

The path is colored in a gradient that indicates the direction and placed below the head for comfort and ease of following. From information gained through our interviews with first responders, we recognize that hand interactions may not be feasible or preferred by users in all types of situations, so we have implemented an alternative workflow through our edge server, which enables the commander to set the navigation target for a user. Upon setting the navigation target, the directions will be sent to the affected headset and displayed for the user automatically.

4.4. Evaluation

To better understand how the system was performing, we ran a series of evaluations. First, we tested the runtime performance of the object detection algorithm on the HoloLens 2 headset compared with our prototype edge compute node. We use a YOLOv8n model exported in ONNX format. This is useful because optimized ONNX runtime libraries exist both for the HoloLens 2, provided by Unity as the Barracuda and Sentis libraries, and for Python 3, running ONNX Runtime on the Linux-based edge compute node. Although differences may exist between the implementations, this enables a fair comparison of the runtime performance on each platform. Our experiment processed a total of 146 images sequentially on the headset, followed by tests on the edge node. Images were stored as files on the headset to simulate captured camera frames, and all images were 640 × 480 color images. For tests on the edge node, the headset uploaded the image file and waited for a response containing detection results in JSON encoding. Therefore, the total completion times in the table below include file I/O and network transfer delay, which is an important consideration for edge compute offloading. We leave out the first test result in each test run, which was unusually high due to lazy initialization of the model. The results are shown in Table 1 below.
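The summary statistics can be computed as in the sketch below, which discards the first (warm-up) measurement before taking the mean and standard deviation. The function name is hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which estimator was used.

```python
import statistics


def summarize_latencies(samples_ms, warmup=1):
    """Return (mean, sample standard deviation) after dropping warm-up runs."""
    measured = samples_ms[warmup:]
    return statistics.mean(measured), statistics.stdev(measured)
```

Applied to a run of 146 measurements with `warmup=1`, this yields statistics over 145 images, matching the count reported for Table 1.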

Table 1.

Object detection latency: mean completion time and standard deviation (ms) on the HoloLens and the edge compute node for 145 images. The Barracuda and ONNX tests were performed in June 2023; the Sentis tests were performed in October 2025.

We decompose the timing results into preprocessing, which consists of reshaping and converting the image to a tensor; execution, which includes the operations specified by the DNN model; and postprocessing, which includes interpreting the output of the model and drawing bounding boxes. A critical step that can either be included in the model or run separately during postprocessing is non-maxima suppression (NMS). YOLO and similar object detection models output a large number of candidate bounding boxes; in the case of YOLOv8n, the output is a fixed 6300 candidate boxes. NMS filters out the large number of redundant and low-quality detections. The typical implementation of NMS, whose cost grows quadratically with the number of candidate boxes in the worst case, runs on the CPU and can be a concern for performance. To assess how NMS impacts object detection performance on the HoloLens 2 headset, we run two different test cases on the headset: one in which NMS is implemented in C# as part of the postprocessing step, and another in which an NMS layer is appended to the model and run by the execution engine. The introduction of Unity Sentis after June 2023 brought new optimizations and performance improvements. Furthermore, Sentis supports executing a model one layer at a time, which enables us to profile the NMS layer directly.
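To make the NMS step concrete, the sketch below first reproduces the candidate box count for a 640 × 480 input, assuming detection heads at strides 8, 16, and 32 with one candidate per grid cell, and then gives a plain greedy NMS. The function names and the 0.5 IoU threshold are illustrative. This is a generic textbook formulation, not the C# or ONNX implementation that was benchmarked.

```python
def candidate_box_count(width, height, strides=(8, 16, 32)):
    """Number of candidate boxes, one per grid cell at each stride."""
    return sum((width // s) * (height // s) for s in strides)


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0])
    inter_h = min(a[3], b[3]) - max(a[1], b[1])
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def greedy_nms(boxes, scores, iou_threshold=0.5):
    """Return indices of kept boxes, highest score first.

    Each candidate is compared against every box kept so far, which is
    why the worst case is quadratic in the number of candidates.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(box_iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


print(candidate_box_count(640, 480))  # 4800 + 1200 + 300 = 6300
```

In practice, implementations usually discard low-confidence candidates before the pairwise comparison, which greatly reduces the number of boxes that reach the quadratic loop.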

The postprocess and embedded implementations of NMS revealed striking differences. Excluding NMS, model execution on the headset was surprisingly fast ( ms, ), and would be sufficiently fast for real-time performance at realistic camera frame rates. However, the NMS step introduces substantial delay, whether it is implemented separately or embedded in the ONNX model. Even in Unity Sentis, where run times were approximately twice as fast, our profiling suggests that the NMS layer is still the dominant source of delay (611 ms out of 621 ms total execution time). With the growing interest in running machine learning tasks on mobile devices, these results help highlight the areas with the greatest potential for performance gain.

The performance challenges faced on the HoloLens 2 headset also point to potential benefits for offloading to an edge compute server. Even taking network delay into consideration, our results support edge computing ( ms, ) as a viable alternative to running object detection on the headset with either Barracuda (, ) or Sentis ( ms, ). Network delay was predictably the dominant source of variability in the total task completion times when offloading and could result in substantial delays under adverse network conditions. In our experiment, the minimum and maximum transfer delays observed were 135 ms and 788 ms, respectively. It is important to note that the compute delay does not fully capture the user experience. In all of our implementations, running object detection on the headset caused perceptible stuttering in the user interface, which is not present when purely offloading to edge compute. When offloading to edge compute, the primary task that runs on the headset is JPEG compression in preparation for transmission, which we found to be relatively fast ( ms, ).

Although the delay is higher than would permit real-time operation at 30 FPS, we propose using a lightweight DNN on the headset to select candidate camera frames to send to the edge server for more in-depth processing. Candidate image selection could use binary present or not-present outputs or motion blur severity detection, either of which would not require NMS. There is further room for improvement in the network delay and through the use of a more powerful GPU server.
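As a stand-in for the proposed lightweight DNN, the sketch below uses a classical heuristic, the variance of the Laplacian, to score image sharpness and decide whether a frame is worth sending. This is a swapped-in technique chosen for illustration, not the method proposed above, and the threshold value and function names are assumptions.

```python
def laplacian_variance(gray):
    """Variance of the 4-neighbor Laplacian over interior pixels.

    gray is a 2D list of intensities. Blurry frames tend to produce low
    values; images smaller than 3 x 3 have no interior and return 0.0.
    """
    values = []
    for r in range(1, len(gray) - 1):
        for c in range(1, len(gray[0]) - 1):
            values.append(
                gray[r - 1][c] + gray[r + 1][c]
                + gray[r][c - 1] + gray[r][c + 1]
                - 4 * gray[r][c]
            )
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def is_sharp_enough(gray, threshold=100.0):
    """Hypothetical gate: only frames above the threshold are offloaded."""
    return laplacian_variance(gray) >= threshold
```

Like the binary classifier outputs mentioned above, this kind of per-frame score requires no NMS, so it avoids the dominant cost observed on the headset.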

These results point to a promising path to deploying edge offloading for object detection, but they also demonstrate that identifying and selecting which camera frames to process is a critical need for overall system efficiency because none of the implementations tested could run at full camera frame rates. For maximum efficiency, redundant or low-quality images should be discarded as early as possible in the processing chain. Even the simple operation of applying JPEG compression and preparing an image for transmission to the edge server imposes a cost on the resource-constrained headset. This inherently limits the maximum frame rate that could be offloaded to edge computing. Furthermore, we observed impacts on AR interface responsiveness, which we attribute to thread synchronization while the image is copied between GPU and system memory; these impacts may be difficult for application developers to eliminate entirely.

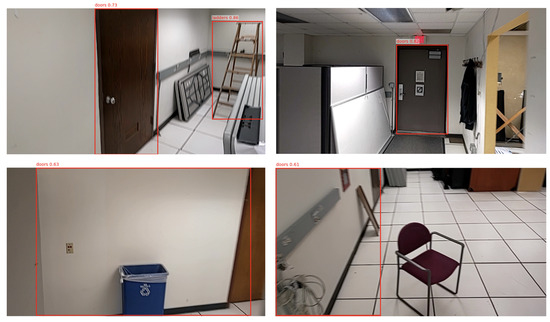

We also tested our object detection with indoor imagery. Our initial experiments suggest that a model like Grounding DINO can be used as a drop-in replacement for our edge-computing object detection pipeline and can be tuned with human-provided input to detect different objects of interest, such as doors or ladders. However, we do observe a tendency for false positives when the objects in the prompt are not present in the scene, as shown in Figure 11. It is unclear at this time whether this is an inherent problem with the feature enhancer, in which language features are used to guide visual feature attention, or whether this can be solved with better training that penalizes false positives. We are interested in exploring this question in more detail in future research.

Figure 11.

Top: Two examples of open-set detection using Grounding DINO with the prompt “doors, chairs, tables, ladders, and desks”. In these two images, two doors and one ladder are successfully identified with high confidence (>= 0.73). ViT models are especially noteworthy for their ability to detect objects without extensive training data such as ladders. Bottom: Two examples of false positives from using Grounding DINO when prompted with “doors”. The detection confidence is moderately high (0.63 and 0.61) for the falsely detected doors which suggests that setting a high threshold may not be sufficient to prevent all false positives.

We also tested our path-planning algorithms to identify failure cases. The initial prototype constructs a rectangular grid and marks cells as passable or not passable using walls and other structures detected by the HoloLens 2. Then, path planning can be performed by running known algorithms, such as A-star search, on the navigation mesh. Currently, the naive algorithm has a tendency to find gaps in the walls and route through unexplored spaces when doing so would result in a shorter path. We observe gaps frequently in practice as a result of incomplete depth information and also with surfaces that absorb infrared light, such as large black TV screens or monitors. One potential solution to path-planning issues is weighting the navigation mesh with observed transitions, i.e., based on paths physically traversed by individuals who initially explored the space. With appropriate tuning, the path-planning algorithm will favor routes that have a high likelihood of being feasible.

5. Limitations and Discussion

While there are many ways that AR can support first responders, this work mainly focused on enabling collaboration between offsite and onsite personnel. While this work was developed in collaboration with first responders, the system has not yet been systematically evaluated. Field testing with first responders is difficult given the demands on these individuals. Furthermore, testing emerging technologies either in the field or even in simulated situations is often not feasible. We note the work of [43], which tested Augmented Reality’s ability to support indoor navigation for individuals with low vision. This work evaluated AR’s capabilities using metrics such as Correctness of Turns, Correctness of the Route Segment Lengths, Total Landmark Recall, and Recall of Augmented Landmarks, alongside qualitative interviews. While the results demonstrated the benefits of AR for indoor navigation, it is unclear whether these results would transfer to first responders. Future work will aim to quantify the benefits of the developed system under real-world conditions.

We also note that the methods used in DINO and YOLO create quite different tradeoffs. Object classification systems, such as YOLO, tend to provide more accurate and faster predictions when finding a known object; however, these types of classifiers are limited to the objects on which they have been trained. Conversely, DINO-style techniques provide greater flexibility in object recognition at the cost of accuracy. Comparisons between these techniques can be found in [44,45]. As of the time of writing, a specific dataset curated for content related to first-responder needs would provide the best level of support.

The presented work utilizes the capabilities of the AR device’s sensors alongside edge compute nodes. One of the major issues with this approach is that the environment needs to be visible in order for SLAM algorithms to work effectively. Low-visibility situations are especially prevalent for firefighters, for whom smoke can limit the ability of optical tracking systems. Thermal cameras may be a solution to this, although custom SLAM algorithms would need to be utilized.

Another issue encountered with the SLAM systems came from doors and elevators. As the systems expected the environment to be static, both of these situations violate the core assumptions that these algorithms make. In the case of a door, the camera images show motion that is not generated from the user locomoting through the environment. In the case of an elevator, the opposite occurs and the camera images show the user being still, despite them physically changing location. Solutions to these situations likely require adding in other data sources to better help the algorithms understand these types of scenarios, in which optical motion cues may be in conflict with physical motion.

As the system utilized edge computing, the devices needed to maintain network connectivity for optimal use. For the case of the HoloLens 2, this meant that WiFi was the major method of communication to the edge compute node. However, we note that other networks, such as FirstNet [46], offer a more robust solution for communication. Another solution would be to have the device connect to the first responder’s phone directly. This would allow the AR device to have the same capabilities (and security) as the devices that are normally used in the field.

Finally, we note that the current generation of AR systems has a number of limitations. Between the limited computing power, the limited field of view of the display, and the use of voice and hand tracking as the major source of input, it is clear that these systems cannot be plugged into the first-responder pipeline directly. However, as these systems continue to improve, there are clear opportunities for these types of systems to enhance the communication between first responders and offsite personnel.

6. Conclusions

EasyVizAR represents a significant advancement in the application of AR technology for first-responder operations. By leveraging edge computing and advanced computer vision techniques, we have developed a system that enhances situational awareness, improves coordination, and facilitates rapid decision-making in challenging indoor environments. Ongoing research and user studies aim to continue to refine and improve the system, ensuring its effectiveness in real-world emergency scenarios. The developed technologies have been demonstrated at the NIST Public Safety Innovation Lab and Public Safety Immersive Test Center. This facility hosts regular tours to a variety of emergency first responders, providing them the opportunity to test emerging technologies for public safety. The source code for the presented work and a video demonstrating the HoloLens 2 AR application can be found at https://github.com/EasyVizAR/ (accessed on 20 October 2025) and https://github.com/widVE/NIST/ (accessed on 20 October 2025).

One of the challenges with inter-agency collaboration is that responders arrive at the site at different times. The need to update and share information is both a logistical challenge and a time cost when urgent action is required. The methods developed in this work would help to provide a unified communication system that could aid responders in coordinating more effectively. Another possible method for collaboration could come from the sharing of maps for spaces. Through the use of the indoor mapping tool, pre-planning could occur that would provide valuable information for first responders in the field.

Future work will focus on improving the core technology’s accuracy and robustness, particularly for object detection and person identification, which are critical for providing reliable information in high-stakes environments. We also plan to develop more sophisticated navigation and coordination tools that can help first responders move and work together more effectively in complex, unfamiliar spaces.

A significant part of this research will involve enhancing data integration to create a more comprehensive and intelligent system. This means expanding the system’s ability to connect with other crucial data sources, such as sensor networks and building management systems. By doing this, the system can provide real-time, external information—like structural data or hazardous material alerts—directly within the augmented-reality view. We also aim to expand the system’s capabilities to support a much wider range of emergency scenarios, from search-and-rescue to disaster relief, ensuring the technology is versatile enough to meet various needs.

Finally, future work will prioritize usability and volumetric mapping. The goal of this work is to continue refining the user experience, making our volumetric maps more intuitive and easy to use, even under immense stress. This focus is key, as a poorly designed tool can become a source of distraction or confusion in an emergency. These efforts will ensure that the system remains a practical and valuable tool that genuinely helps first responders by enhancing situational awareness and aiding critical decision-making.

Author Contributions

Conceptualization, K.P., L.H., Y.Z., R.T. and S.B.; Methodology, K.P., L.H., B.S. and Y.Z.; Software, L.H., Y.Z., B.S., R.T. and S.B.; Validation, L.H.; Formal analysis, L.H. and Y.Z.; Investigation, L.H., Y.Z.; Writing—original draft, K.P. and L.H.; Writing—review & editing, K.P., L.H., B.S.; Visualization, K.P. and B.S.; Supervision, K.P., L.H., Y.Z. and S.B.; Project administration, L.H. and S.B.; Funding acquisition, K.P., Y.Z., R.T. and S.B. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Institute of Standards and Technology award number 70NANB21H043.

Data Availability Statement

The original software presented in this paper is openly available at https://github.com/EasyVizAR/ (accessed on 20 October 2025) and https://github.com/widVE/NIST/ (accessed on 20 October 2025).

Acknowledgments

We would like to thank Rudy Banerjee, Ruijia Chen, Brianna R Cochran, Casey Ford, Haiyi He, Aris Huang, Kaustubh Khare, Shivangi Mishra, Kaiwen Shi, Sadana Ulaganathan, Yesui Ulziibayar, Lukas Wehrli, Jesse Yang, Huizhi Zhang, Hunter Zhang, Kexin Zhang, Anjun Zhu, and Boyuan Zou for their work on this project. We would like to thank our collaborators of West Allis Fire Department, Neenah SWAT Team, Fox Valley Technical College, and the Public Safety Communications Research Division of the National Institute of Standards and Technology.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Morrison, K.; Dawkins, S.; Choong, Y.Y.; Theofanos, M.F.; Greene, K.; Furman, S. Current problems, future needs: Voices of first responders about communication technology. In Proceedings of the Human-Computer Interaction. Design and User Experience Case Studies: Thematic Area, HCI 2021, Held as Part of the 23rd HCI International Conference, HCII 2021, Virtual Event, 24–29 July 2021; Proceedings, Part III 23. Springer: Berlin/Heidelberg, Germany, 2021; pp. 381–399. [Google Scholar]

- Smith, E.C.; Holmes, L.; Burkle, F.M. The physical and mental health challenges experienced by 9/11 first responders and recovery workers: A review of the literature. Prehospital Disaster Med. 2019, 34, 625–631. [Google Scholar] [CrossRef] [PubMed]

- Dimou, A.; Kogias, D.G.; Trakadas, P.; Perossini, F.; Weller, M.; Balet, O.; Patrikakis, C.Z.; Zahariadis, T.; Daras, P. FASTER: First Responder Advanced Technologies for Safe and Efficient Emergency Response. In Technology Development for Security Practitioners; Springer: Berlin/Heidelberg, Germany, 2021; pp. 447–460. [Google Scholar]

- Gutiérrez, Á.; Blanco, P.; Ruiz, V.; Chatzigeorgiou, C.; Oregui, X.; Álvarez, M.; Navarro, S.; Feidakis, M.; Azpiroz, I.; Izquierdo, G.; et al. Biosignals monitoring of first responders for cognitive load estimation in real-time operation. Appl. Sci. 2023, 13, 7368. [Google Scholar] [CrossRef]

- Zechner, O.; García Guirao, D.; Schrom-Feiertag, H.; Regal, G.; Uhl, J.C.; Gyllencreutz, L.; Sjöberg, D.; Tscheligi, M. NextGen Training for Medical First Responders: Advancing Mass-Casualty Incident Preparedness through Mixed Reality Technology. Multimodal Technol. Interact. 2023, 7, 113. [Google Scholar] [CrossRef]

- Tucker, S.; Jonnalagadda, S.; Beseler, C.; Yoder, A.; Fruhling, A. Exploring wearable technology use and importance of health monitoring in the hazardous occupations of first responders and professional drivers. J. Occup. Health 2024, 66, uiad002. [Google Scholar] [CrossRef] [PubMed]

- Liu, S.; Zeng, Z.; Ren, T.; Li, F.; Zhang, H.; Yang, J.; Jiang, Q.; Li, C.; Yang, J.; Su, H.; et al. Grounding DINO: Marrying DINO with Grounded Pre-Training for Open-Set Object Detection. In Proceedings of the Computer Vision—ECCV 2024, Milan, Italy, 29 September–4 October 2024; Leonardis, A., Ricci, E., Roth, S., Russakovsky, O., Sattler, T., Varol, G., Eds.; Springer: Cham, Switzerland, 2025; pp. 38–55. [Google Scholar]

- Zhang, K.; Cochran, B.R.; Chen, R.; Hartung, L.; Sprecher, B.; Tredinnick, R.; Ponto, K.; Banerjee, S.; Zhao, Y. Exploring the design space of optical see-through AR head-mounted displays to support first responders in the field. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–19. [Google Scholar]

- Argo, E.; Ahmed, T.; Gable, S.; Hampton, C.; Grandi, J.; Kopper, R. Augmented Reality User Interfaces for First Responders: A Scoping Literature Review. arXiv 2025, arXiv:2506.09236. [Google Scholar] [CrossRef]

- Oztank, F.; Balcisoy, S. Towards Extended Reality in Emergency Response: Guidelines and Challenges for First Responder Friendly Augmented Interfaces. Comput. Animat. Virtual Worlds 2025, 36, e70056. [Google Scholar] [CrossRef]

- Grandi, J.G.; Cao, Z.; Ogren, M.; Kopper, R. Design and Simulation of Next-Generation Augmented Reality User Interfaces in Virtual Reality. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Virtual Event, 27 March–3 April 2021; pp. 23–29. [Google Scholar] [CrossRef]

- Jiang, X.; Chen, N.Y.; Hong, J.I.; Wang, K.; Takayama, L.; Landay, J.A. Siren: Context-aware computing for firefighting. In Proceedings of the International Conference on Pervasive Computing, Orlando, FL, USA, 14–17 March 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 87–105. [Google Scholar]

- Killeen, J.P.; Chan, T.C.; Buono, C.; Griswold, W.G.; Lenert, L.A. A Wireless First Responder Handheld Device for Rapid Triage, Patient Assessment and Documentation During Mass Casualty Incidents. In Proceedings of the AMIA Annual Symposium Proceedings, Washington, DC, USA, 11–15 November 2006; pp. 429–433. [Google Scholar]

- Kyng, M.; Nielsen, E.T.; Kristensen, M. Challenges in Designing Interactive Systems for Emergency Response. In Proceedings of the 6th Conference on Designing Interactive Systems (DIS ’06), University Park, PA, USA, 26–28 June 2006; Association for Computing Machinery: New York, NY, USA, 2006; pp. 301–310. [Google Scholar] [CrossRef]

- Monares, A.; Ochoa, S.F.; Pino, J.A.; Herskovic, V.; Rodriguez-Covili, J.; Neyem, A. Mobile computing in urban emergency situations: Improving the support to firefighters in the field. Expert Syst. Appl. 2011, 38, 1255–1267. [Google Scholar] [CrossRef]

- Kapalo, K.A.; Bockelman, P.; LaViola, J.J., Jr. “Sizing Up” Emerging Technology for Firefighting: Augmented Reality for Incident Assessment. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Philadelphia, PA, USA, 1–5 October 2018; SAGE Publications: Los Angeles, CA, USA, 2018; Volume 62, pp. 1464–1468. [Google Scholar]

- Wilson, J.; Steingart, D.; Romero, R.; Reynolds, J.; Mellers, E.; Redfern, A.; Lim, L.; Watts, W.; Patton, C.; Baker, J.; et al. Design of monocular head-mounted displays for increased indoor firefighting safety and efficiency. In Helmet- and Head-Mounted Displays X: Technologies and Applications; SPIE: Bellingham, WA, USA, 2005; Volume 5800, pp. 103–114. [Google Scholar]

- Cheng, D.; Wang, Q.; Liu, Y.; Chen, H.; Ni, D.; Wang, X.; Yao, C.; Hou, Q.; Hou, W.; Luo, G.; et al. Design and manufacture AR head-mounted displays: A review and outlook. Light Adv. Manuf. 2021, 2, 350–369. [Google Scholar] [CrossRef]

- Augview. 2013. Available online: http://www.augview.net (accessed on 20 October 2025).

- Chen, Y.J.; Lai, Y.S.; Lin, Y.H. BIM-based augmented reality inspection and maintenance of fire safety equipment. Autom. Constr. 2020, 110, 103041. [Google Scholar] [CrossRef]

- Datcu, D.; Lukosch, S.G.; Lukosch, H.K. Handheld augmented reality for distributed collaborative crime scene investigation. In Proceedings of the 19th International Conference on Supporting Group Work, Sanibel Island, FL, USA, 13–16 November 2016; pp. 267–276. [Google Scholar]

- Nunes, I.L.; Lucas, R.; Simões-Marques, M.; Correia, N. An Augmented Reality Application to Support Deployed Emergency Teams. In Proceedings of the 20th Congress of the International Ergonomics Association (IEA 2018), Florence, Italy, 26–30 August 2018; Springer International Publishing: Cham, Switzerland, 2019; pp. 195–204. [Google Scholar]

- Sebillo, M.; Tortora, G.; Vitiello, G.; Paolino, L.; Ginige, A. The use of augmented reality interfaces for on-site crisis preparedness. In Proceedings of the International Conference on Learning and Collaboration Technologies, Washington, DC, USA, 29 June 2024; Springer: Berlin/Heidelberg, Germany, 2015; pp. 136–147. [Google Scholar]

- Sebillo, M.; Vitiello, G.; Paolino, L.; Ginige, A. Training Emergency Responders through Augmented Reality Mobile Interfaces. Multimed. Tools Appl. 2016, 75, 9609–9622. [Google Scholar] [CrossRef]

- Siu, T.; Herskovic, V. SidebARs: Improving awareness of off-screen elements in mobile augmented reality. In Proceedings of the 2013 Chilean Conference on Human-Computer Interaction, Temuco, Chile, 11–15 November 2013; pp. 36–41. [Google Scholar]

- Brunetti, P.; Croatti, A.; Ricci, A.; Viroli, M. Smart augmented fields for emergency operations. Procedia Comput. Sci. 2015, 63, 392–399. [Google Scholar] [CrossRef]

- Kamat, V.R.; El-Tawil, S. Evaluation of augmented reality for rapid assessment of earthquake-induced building damage. J. Comput. Civ. Eng. 2007, 21, 303–310. [Google Scholar] [CrossRef]

- Sainidis, D.; Tsiakmakis, D.; Konstantoudakis, K.; Albanis, G.; Dimou, A.; Daras, P. Single-handed gesture UAV control and video feed AR visualization for first responders. In Proceedings of the International Conference on Information Systems for Crisis Response and Management (ISCRAM), Blacksburg, VA, USA, 23–26 May 2021; pp. 23–26. [Google Scholar]

- Wani, A.R.; Shabir, S.; Naaz, R. Augmented reality for fire and emergency services. In Proceedings of the Int. Conf. on Recent Trends in Communication and Computer Networks, Hyderabad, India, 8–9 November 2013. [Google Scholar]

- Nelson, C.R.; Conwell, J.; Lally, S.; Cohn, M.; Tanous, K.; Moats, J.; Gabbard, J.L. Exploring Augmented Reality Triage Tools to Support Mass Casualty Incidents. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Atlanta, GA, USA, 10–14 October 2022; Volume 66, pp. 1664–1666. [Google Scholar] [CrossRef]

- Cagiltay, B.; Oztank, F.; Balcisoy, S. The Case for Audio-First Mixed Reality: An AI-Enhanced Framework. In Proceedings of the 2025 IEEE International Conference on Artificial Intelligence and eXtended and Virtual Reality (AIxVR), Lisbon, Portugal, 27–29 January 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 165–170. [Google Scholar]

- Wilchek, M.; Wang, L.; Dickinson, S.; Feuerbacher, E.; Luther, K.; Batarseh, F.A. KHAIT: K-9 Handler Artificial Intelligence Teaming for Collaborative Sensemaking. In Proceedings of the 30th International Conference on Intelligent User Interfaces, Cagliari, Italy, 24–27 March 2025; pp. 925–937. [Google Scholar]

- Liu, P.; Willis, D.; Banerjee, S. ParaDrop: Enabling Lightweight Multi-tenancy at the Network’s Extreme Edge. In Proceedings of the 2016 IEEE/ACM Symposium on Edge Computing (SEC), Washington, DC, USA, 27–28 October 2016; pp. 1–13. [Google Scholar] [CrossRef]

- Ben Ali, A.J.; Kouroshli, M.; Semenova, S.; Hashemifar, Z.S.; Ko, S.Y.; Dantu, K. Edge-SLAM: Edge-assisted visual simultaneous localization and mapping. ACM Trans. Embed. Comput. Syst. 2022, 22, 1–31. [Google Scholar] [CrossRef]

- Chen, Y.; Inaltekin, H.; Gorlatova, M. AdaptSLAM: Edge-assisted adaptive SLAM with resource constraints via uncertainty minimization. In Proceedings of the IEEE INFOCOM 2023-IEEE Conference on Computer Communications, New York City, NY, USA, 17–20 May 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–10. [Google Scholar]

- van Toll, W.; Cook, A.F.; Geraerts, R. Navigation Meshes for Realistic Multi-Layered Environments. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 3526–3532. [Google Scholar] [CrossRef]

- van Toll, W.; Triesscheijn, R.; Kallmann, M.; Oliva, R.; Pelechano, N.; Pettré, J.; Geraerts, R. A Comparative Study of Navigation Meshes. In Proceedings of the 9th International Conference on Motion in Games, New York, NY, USA, 10–12 October 2016; MIG ’16. pp. 91–100. [Google Scholar] [CrossRef]

- Fachri, M.; Khumaidi, A.; Hikmah, N.; Chusna, N.L. Performance analysis of navigation AI on commercial game engine: Autodesk Stingray and Unity3D. J. Mantik 2020, 4, 61–68. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. In Proceedings of the 38th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Volume 37, pp. 107984–108011. [Google Scholar]

- Serengil, S.; Ozpinar, A. A Benchmark of Facial Recognition Pipelines and Co-Usability Performances of Modules. J. Inf. Technol. 2024, 17, 95–107. [Google Scholar] [CrossRef]

- Wu, Y.; Zhang, Z.; Chen, J.; Tang, H.; Li, D.; Fang, Y.; Zhu, L.; Xie, E.; Yin, H.; Yi, L.; et al. Vila-u: A unified foundation model integrating visual understanding and generation. arXiv 2024, arXiv:2409.04429. [Google Scholar] [CrossRef]

- Ren, T.; Jiang, Q.; Liu, S.; Zeng, Z.; Liu, W.; Gao, H.; Huang, H.; Ma, Z.; Jiang, X.; Chen, Y.; et al. Grounding DINO 1.5: Advance the “edge” of open-set object detection. arXiv 2024, arXiv:2405.10300. [Google Scholar]

- Chen, R.; Jiang, J.; Maheshwary, P.; Cochran, B.R.; Zhao, Y. VisiMark: Characterizing and Augmenting Landmarks for People with Low Vision in Augmented Reality to Support Indoor Navigation. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025. CHI ’25. [Google Scholar] [CrossRef]

- Ansari, M.M. Evaluating Stenosis Detection with Grounding DINO, YOLO, and DINO-DETR. arXiv 2025, arXiv:2503.01601. [Google Scholar] [CrossRef]

- Teixeira, E.H.; Melo, M.C.; Junior, W.B.; Silva, E.C.; Cruz, M.R.; Pimenta, T.C.; Aquino, G.P.; Boas, E.C.V. Brain Tumor Images Class-Based and Prompt-Based Detectors and Segmenter: Performance Evaluation of YOLO, SAM and Grounding DINO. In Proceedings of the 2024 International Conference on Artificial Intelligence, Blockchain, Cloud Computing, and Data Analytics (ICoABCD), Bali, Indonesia, 20–21 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 202–207. [Google Scholar]

- Moore, L.K. The First Responder Network (FirstNet) and Next-Generation Communications for Public Safety: Issues for Congress. Congressional Research Service. Available online: https://allthingsfirstnet.com/wp-content/uploads/2017/05/CRS-FirstNet-Linda-Moore-Report-2016.pdf (accessed on 17 June 2016).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).