DFed-LT: A Decentralized Federated Learning with Lightweight Transformer Network for Intelligent Fault Diagnosis

Abstract

1. Introduction

- (1) Data Privacy Constraints: Monitoring data often contains sensitive commercial information (e.g., production volume and efficiency), so device owners restrict data access to their local domains. This privacy requirement prevents multi-user data from being aggregated into the large-scale training datasets that traditional methods rely on.

- (2) Edge Computing Limitations: Intelligent FD devices are increasingly deployed as edge or handheld detection devices with constrained storage capacity and computational resources. These hardware limitations restrict the use of complex model architectures.

- (1) The proposed DFed-LT, a new decentralized federated learning method, achieves accurate FD with stronger data privacy protection, overcoming the “data island” problem.

- (2) A newly designed lightweight transformer architecture for FD significantly reduces the number of learnable parameters while maintaining diagnostic performance.

2. Related Work

2.1. Federated Learning for Fault Diagnosis

2.2. Transformer for Fault Diagnosis

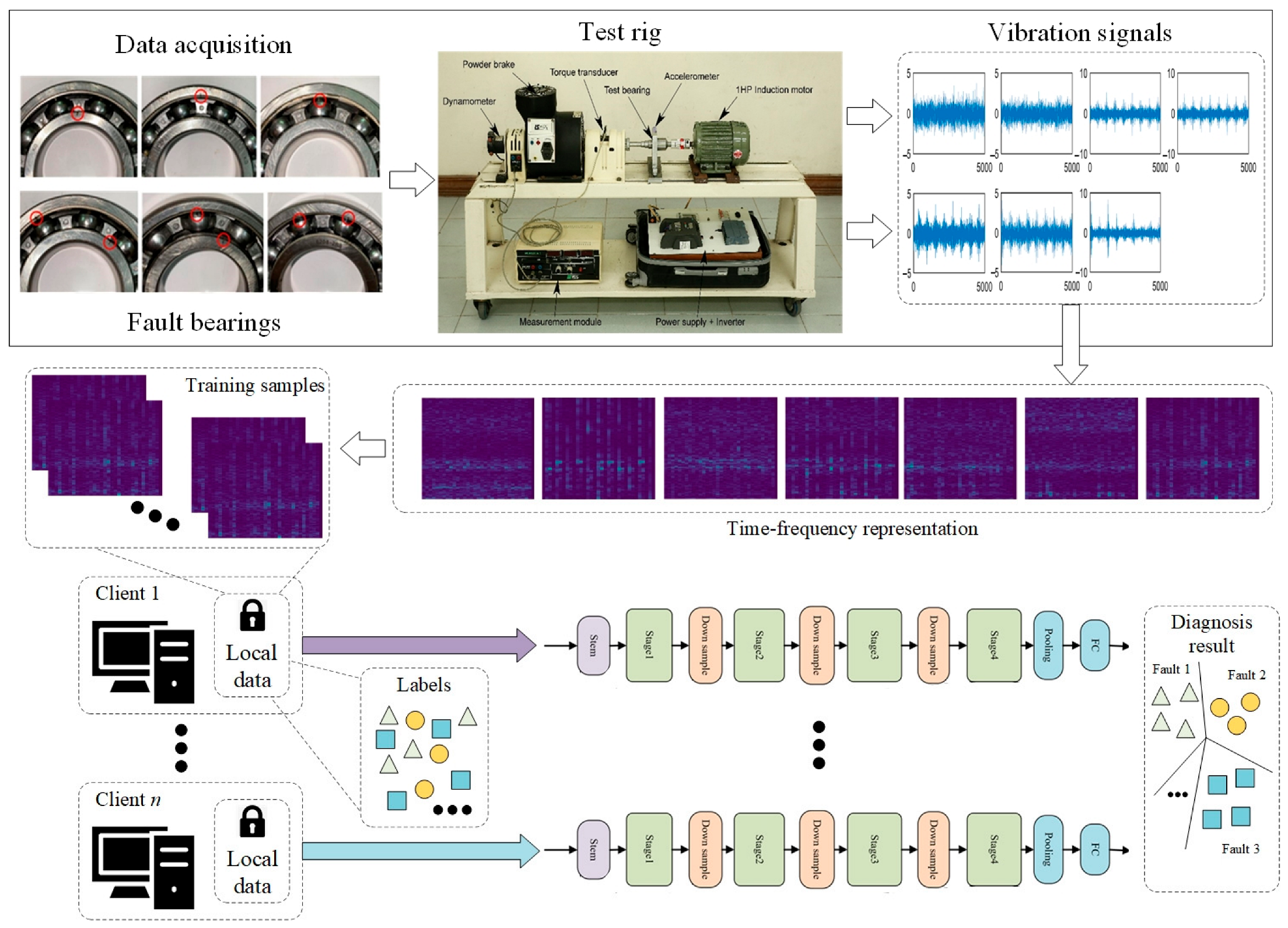

3. The Proposed DFed-LT for Fault Diagnosis

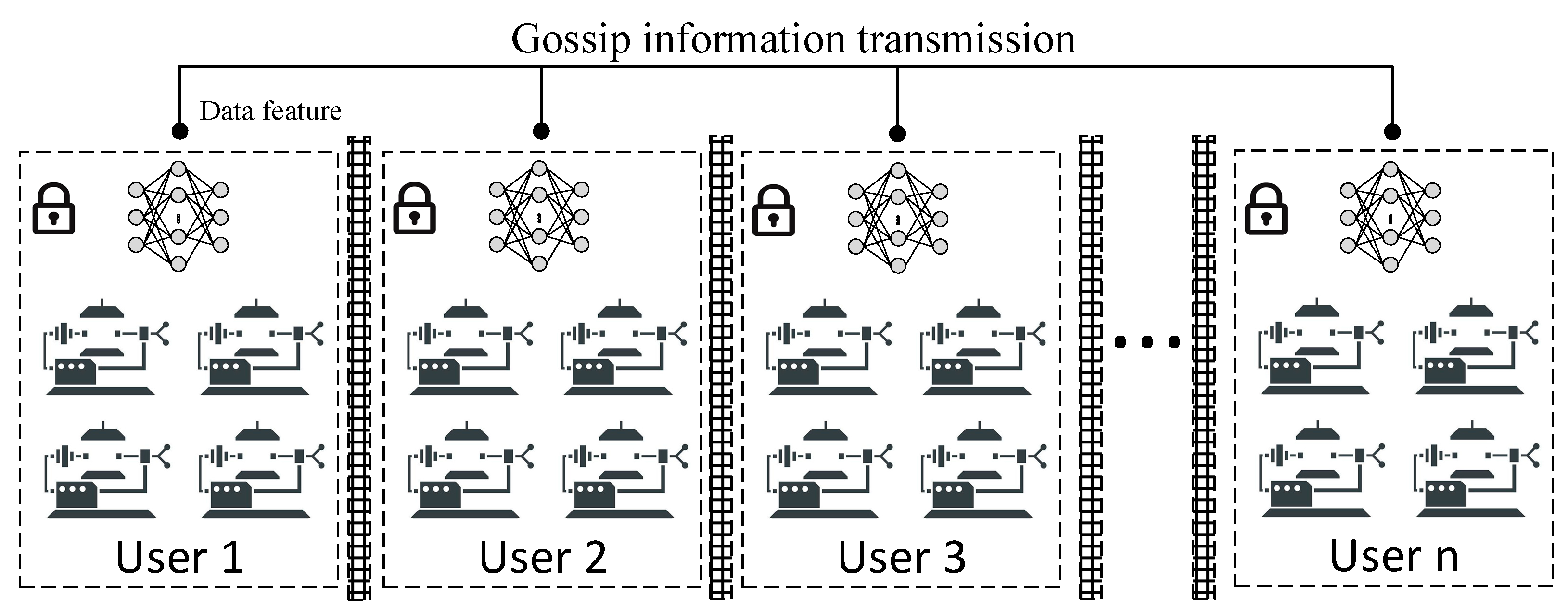

3.1. Decentralized Federated Learning

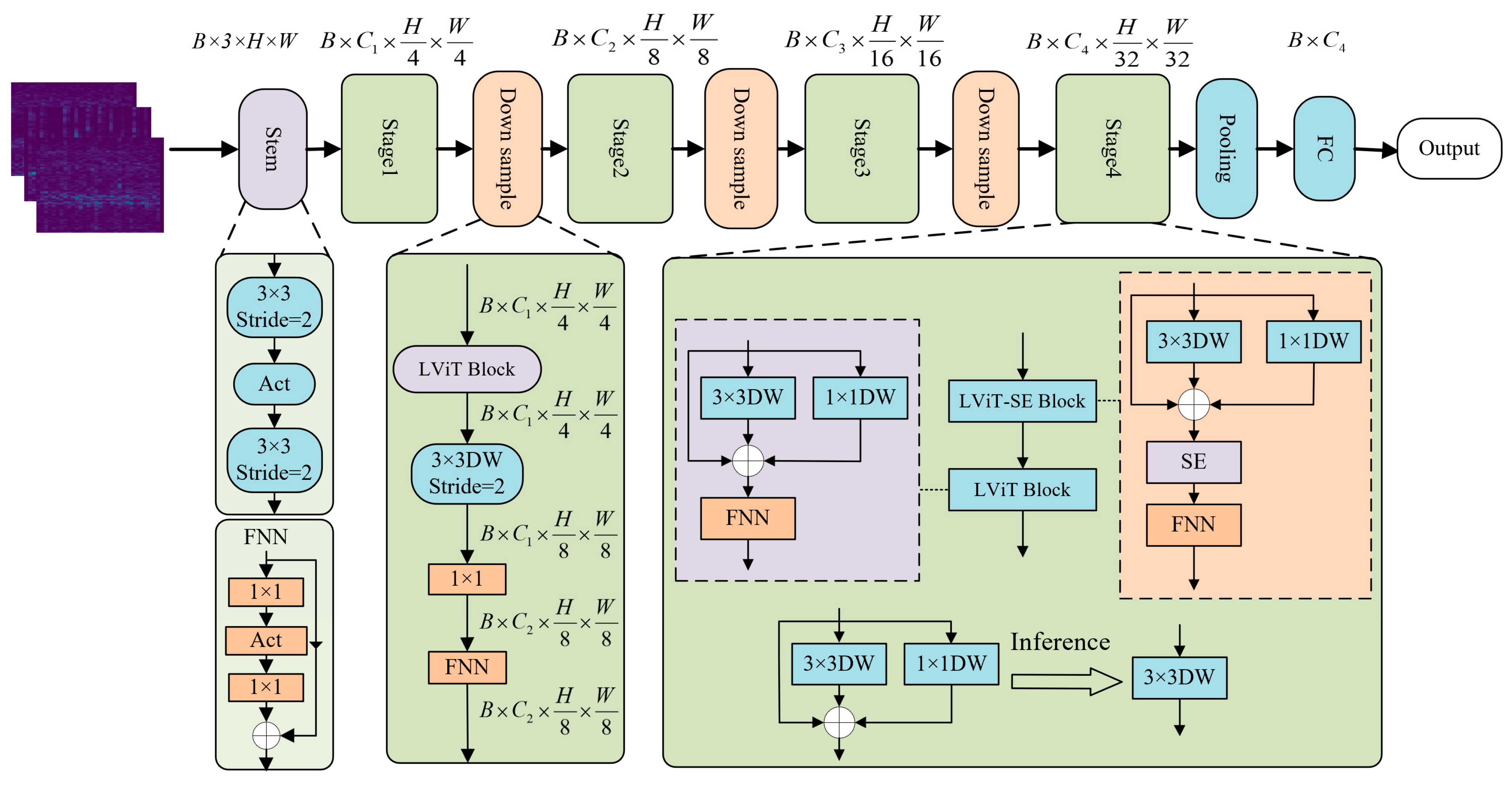

3.2. Proposed Lightweight Transformer Architecture

3.2.1. Stem Module

3.2.2. Stage Module

3.2.3. Downsample Module

3.2.4. Classifier Module

4. Experiments and Result Analysis

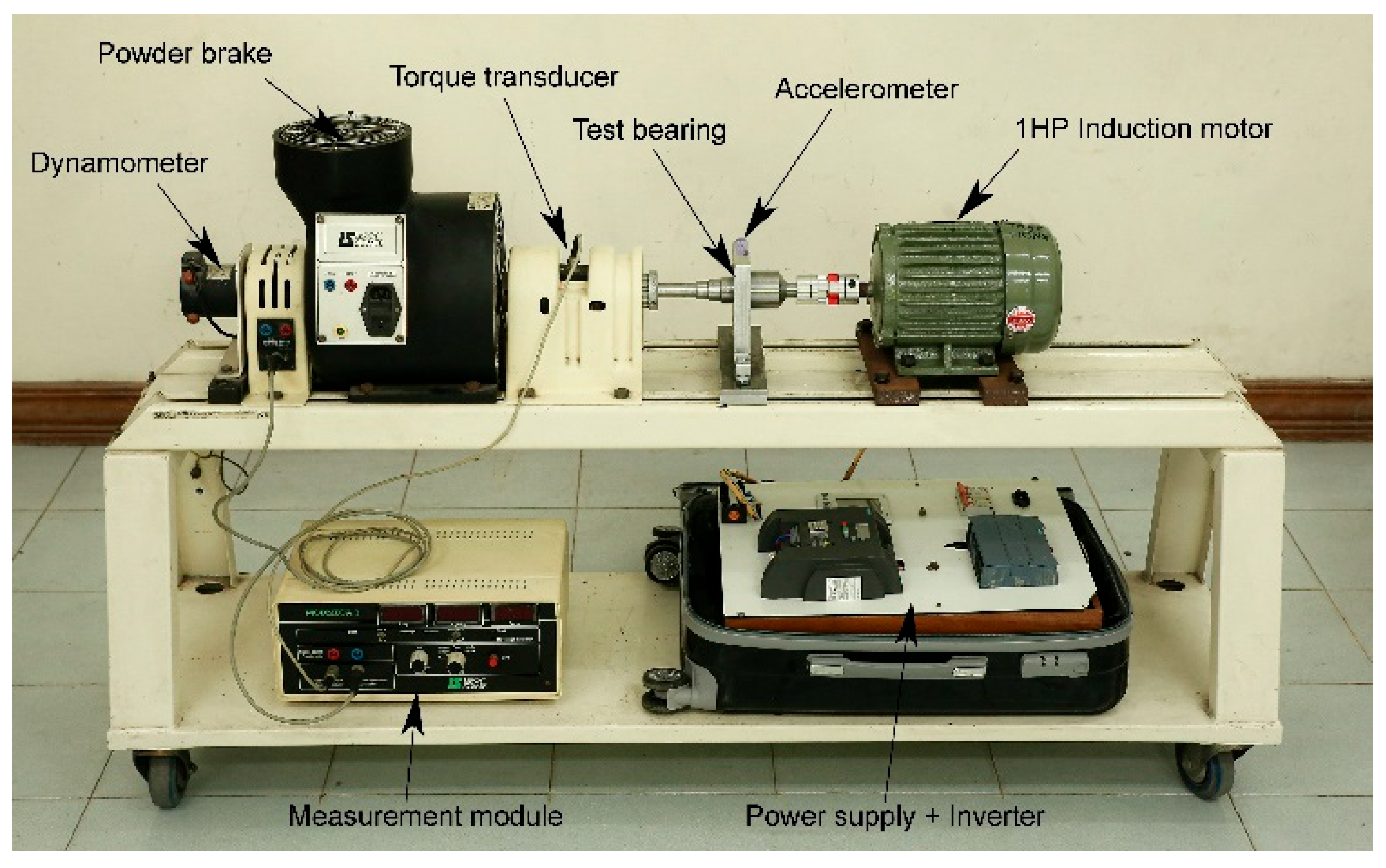

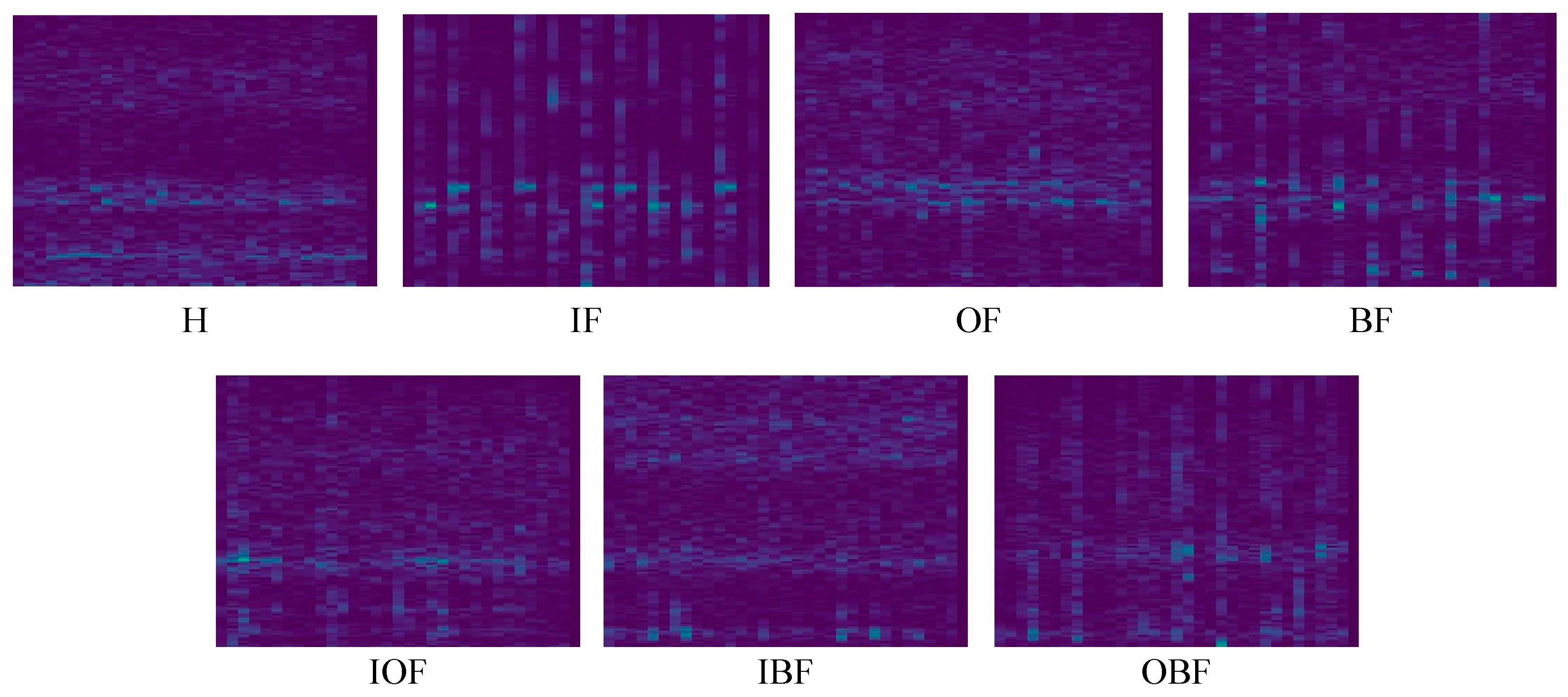

4.1. Experimental Setup and Data Description

4.2. Experiment 1: Fault Diagnosis Experiment Result Based on LT

4.2.1. Data Processing and Evaluation Indicators

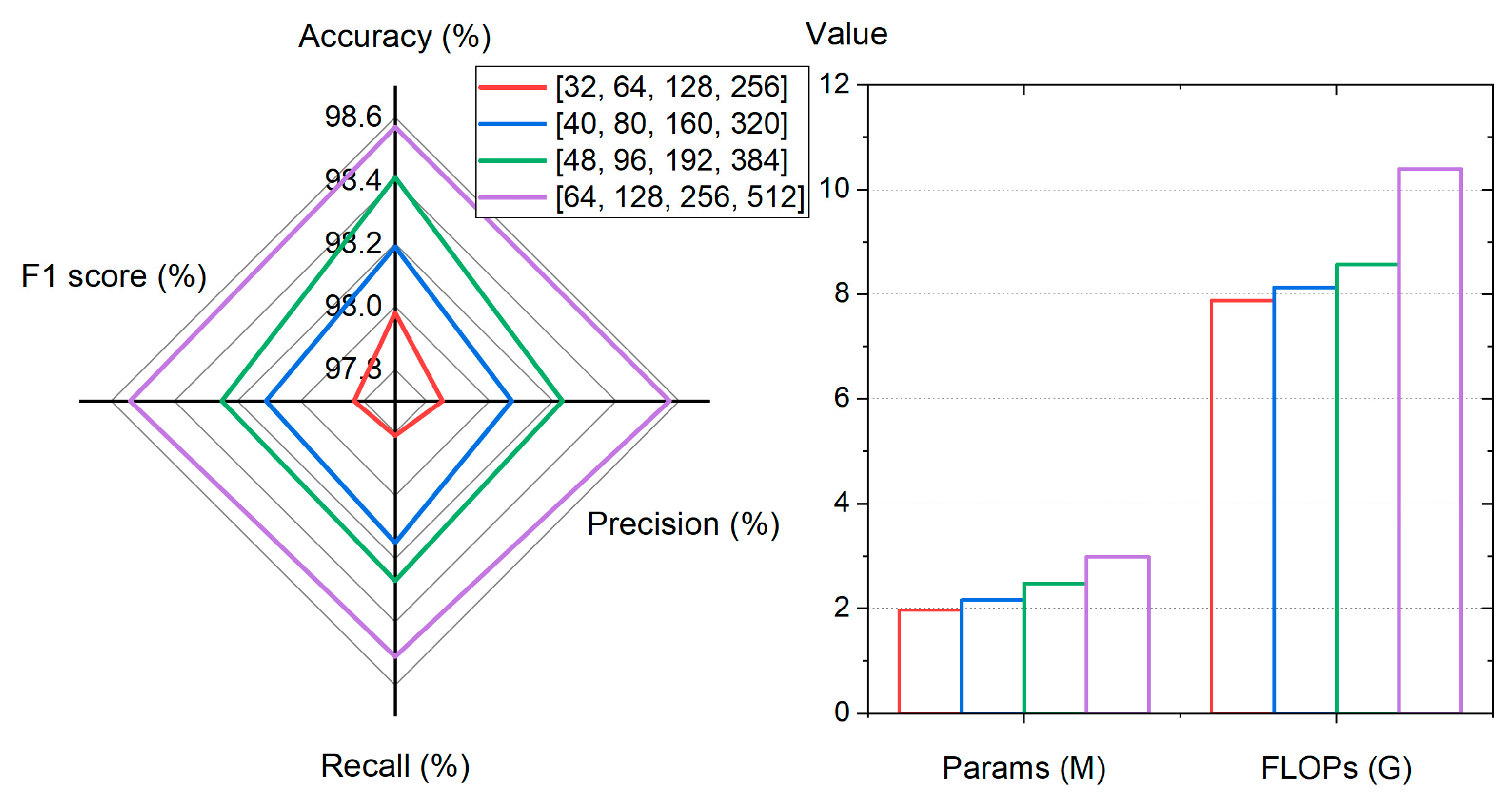

4.2.2. The Effect of Hyperparameters

- (1) The Effect of Expansion Ratio of CM

- (2) The Effect of Network Width

- (3) The Effect of SE Layer Configurations

4.2.3. Diagnostic Results and Performance Comparison

- (1) In terms of classification performance, the traditional CNN performs worst, with all four indicators at about 85%. MobileNetV3 is stable, with all indicators above 97%, and Uniformer is better still, with all four indicators exceeding 98%. The LT model performs best, with the highest accuracy (99.06%), precision (99.17%), recall (98.87%), and F1 score (99.02%) of all models.

- (2) In terms of computational efficiency, the CNN has the fewest parameters (0.06 M) and the lowest FLOPs (1.52 G), but its classification performance lags far behind. The LT model offers a better balance: it achieves higher classification performance than Uniformer with far fewer parameters (2.48 M vs. 20.89 M) and FLOPs (8.58 G vs. 127.69 G). The computational cost of MobileNetV3 (2.92 M/10.87 G) is similar to that of the LT model, but its performance is slightly inferior.

- (3) The LT model matches the diagnostic performance of larger models such as Uniformer at much lower model complexity. This makes it well suited to engineering applications with limited computing resources.
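The four indicators quoted above can be cross-checked against one another. The sketch below assumes that the reported F1 score is the harmonic mean of the reported precision and recall; the source does not state the averaging scheme, so this is an assumption. The helper name `f1_from_pr` is hypothetical, and the input values are the ones reported for the four compared models.

```python
def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %, reported F1 %) for each compared model
reported = {
    "CNN": (85.22, 85.60, 85.41),
    "Uniformer": (98.06, 98.44, 98.25),
    "MobileNetV3": (97.30, 97.28, 97.29),
    "LT model": (99.17, 98.87, 99.02),
}

# Recompute F1 from precision and recall, rounded like the reported values
recomputed = {
    name: round(f1_from_pr(p, r), 2) for name, (p, r, _) in reported.items()
}

for name, (_, _, f1) in reported.items():
    print(f"{name}: reported F1 = {f1:.2f}, recomputed F1 = {recomputed[name]:.2f}")
```

Under that assumption, the recomputed values agree with the reported F1 scores to two decimal places, which suggests the indicators are internally consistent.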

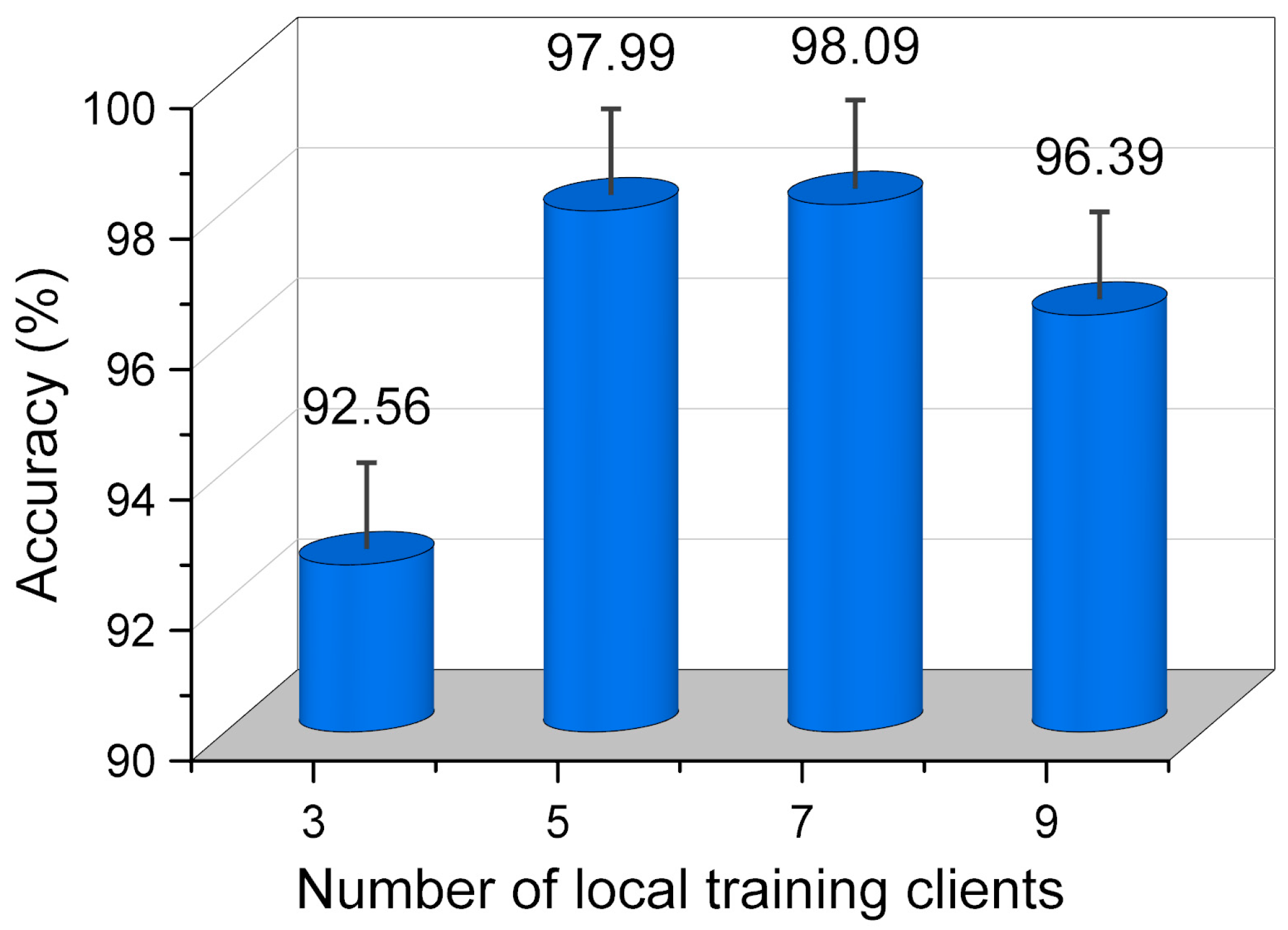

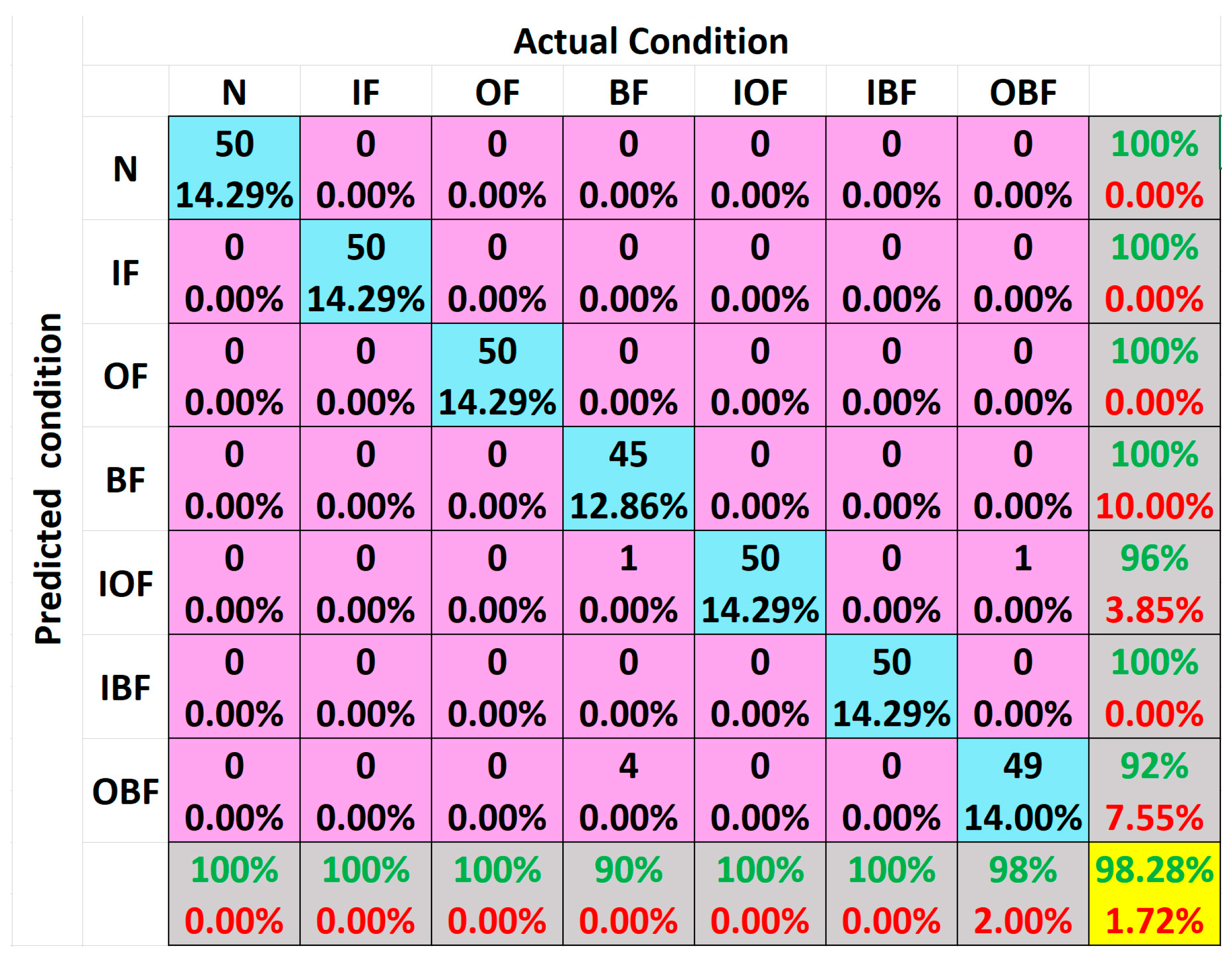

4.3. Experiment 2: Fault Diagnosis Experiment Result Based on DFed-LT

- Non-Fed: A non-federated baseline in which each local client trains its own model for local diagnosis; the reported result is the average over all local diagnostic models.

- FedAvg-CNN: A federated learning approach using the Federated Average (FedAvg) framework with CNN as the local model.

- DFed-CNN: A decentralized federated learning approach with CNN as the local model.

- FedAvg-LT: This model is based on the FedAvg framework with LT as the local model.

- (1) The Non-Fed (non-federated learning) baseline demonstrated the poorest performance (average accuracy: 51.36%, F1 score: 52.03%), indicating that individual local models struggle to achieve accurate diagnosis when trained on limited and isolated data samples.

- (2) Comparing FedAvg-CNN, DFed-CNN, FedAvg-LT, and DFed-LT shows that using the LT model as the local model yields markedly better diagnostic performance than using CNN, further validating the enhanced feature extraction capability of the LT architecture. From an engineering perspective, the LT model’s efficiency in handling complex feature interactions makes it well suited to resource-constrained edge devices, and its balance between computational cost and performance supports practical deployment in real-world federated systems.

- (3) Methods using the DFed framework consistently outperformed their FedAvg counterparts in diagnostic accuracy, supporting the advantages and practical applicability of the proposed decentralized federated learning approach. The decentralized design eliminates single-point bottlenecks and enhances system robustness, which suits large-scale or privacy-sensitive engineering scenarios.

- (4) The proposed DFed-LT method achieved the best diagnostic performance (accuracy: 98.27%, F1 score: 99.25%) while maintaining data privacy, demonstrating its superiority over the alternative methods in privacy-preserving fault diagnosis scenarios.
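To make the aggregation step behind the FedAvg baselines concrete, the sketch below shows the standard FedAvg rule: a central server averages client parameters, weighted by each client's sample count. The second function, `relay_average`, is a hypothetical illustration of how the same average could be obtained without a server by handing a running sum from node to node. It is an assumption made for illustration only and is not taken from the DFed protocol; it also omits any masking or encryption that a privacy-preserving relay would need. All names and the toy values are hypothetical.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flattened values


def fedavg(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Server-side FedAvg: average client parameters, weighted by sample count."""
    total = sum(client_sizes)
    return {
        name: [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(len(values))
        ]
        for name, values in client_weights[0].items()
    }


def relay_average(client_weights: List[Weights], client_sizes: List[int]) -> Weights:
    """Hypothetical serverless variant (an assumption, not the DFed protocol):
    a running weighted sum is handed from node to node, and the last node
    normalizes it by the total sample count."""
    running: Weights = {k: [0.0] * len(v) for k, v in client_weights[0].items()}
    seen = 0
    for weights, n in zip(client_weights, client_sizes):  # one hop per node
        for name, values in weights.items():
            running[name] = [acc + n * x for acc, x in zip(running[name], values)]
        seen += n
    return {name: [acc / seen for acc in vals] for name, vals in running.items()}


# Toy example: two clients holding 1 and 3 samples, one parameter tensor "w"
clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 4.0]}]
sizes = [1, 3]
print(fedavg(clients, sizes))         # {'w': [2.5, 3.5]}
print(relay_average(clients, sizes))  # {'w': [2.5, 3.5]}
```

Both functions compute the same weighted mean; they differ only in where the accumulation happens, which is the architectural distinction between a centralized and a decentralized scheme.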

4.4. Experiment 3: Fault Diagnosis Experiment Result Based on DFed-LT in Noisy Environments

- (1) Across all methods, diagnostic accuracy decreases monotonically as the noise level increases, that is, as the SNR falls. This dependence of performance on SNR underscores the challenge that environmental noise poses to model stability and confirms that noise robustness is a critical metric for evaluating practical applicability. The degradation is most pronounced at the most severe noise level, −4 dB, where every method records its lowest accuracy.

- (2) Among the methods compared, Non-Fed consistently demonstrates the poorest performance and robustness across the entire noise range. Its accuracy declines from 48.21% at 8 dB to 34.62% at −4 dB. This drop highlights the inherent limitation of models trained in isolation on limited local data: they fail to learn generalized, noise-invariant features, which makes them vulnerable to data corruption and unsuitable for real-world deployments where signal quality can vary significantly.

- (3) Among the federated learning approaches, a clear performance hierarchy emerges based on the underlying model architecture and federation strategy. Methods using the LT model as the local model consistently outperform those based on the CNN architecture at every SNR level. For instance, at 0 dB, FedAvg-LT (86.33%) surpasses DFed-CNN (68.59%) by a considerable margin. This gap, maintained even under heavy noise, provides strong evidence for the superior feature extraction and representation learning capacity of the LT architecture, which is more resilient to signal degradation.

- (4) Within each architectural class, the DFed framework holds a consistent advantage over its FedAvg counterpart. This is illustrated by DFed-CNN (79.65% at 8 dB) outperforming FedAvg-CNN (76.88% at 8 dB) and, most importantly, by the proposed DFed-LT method achieving the top accuracy at every noise level, from 95.85% at 8 dB to 81.28% at −4 dB. This result supports the benefit of combining the robust LT model with the decentralized federated learning (DFed) strategy, yielding a system that performs well in both accuracy and noise robustness.
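For readers who want to reproduce test conditions at the SNR levels discussed above, the sketch below corrupts a signal with noise at a target SNR. It assumes additive white Gaussian noise scaled so that the ratio of signal power to noise power matches the target; the source does not specify the noise model, so this is an assumption. The function names and the synthetic 100 Hz tone are hypothetical stand-ins for real vibration data.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, rng=None):
    """Return signal plus white Gaussian noise at the requested SNR (dB)."""
    rng = rng or random.Random()
    signal_power = sum(x * x for x in signal) / len(signal)
    # SNR(dB) = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR/10)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


def measured_snr_db(clean, noisy):
    """Empirical SNR (dB) of a noisy signal relative to its clean version."""
    signal_power = sum(x * x for x in clean) / len(clean)
    noise_power = sum((y - x) ** 2 for x, y in zip(clean, noisy)) / len(clean)
    return 10 * math.log10(signal_power / noise_power)


# Synthetic stand-in for a vibration segment: 0.1 s of a 100 Hz tone at 51.2 kHz
rng = random.Random(0)
clean = [math.sin(2 * math.pi * 100 * t / 51200) for t in range(5120)]

for snr in (8, 4, 0, -4):
    noisy = add_noise_at_snr(clean, snr, rng)
    print(f"target {snr:>2} dB, measured {measured_snr_db(clean, noisy):.2f} dB")
```

At −4 dB the noise power is about 2.5 times the signal power, which gives a sense of how severe the hardest test condition is.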

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lv, Y.; Yang, X.; Li, Y.; Liu, J.; Li, S. Fault detection and diagnosis of marine diesel engines: A systematic review. Ocean Eng. 2024, 294, 116798.

- Liang, P.; Tian, J.; Wang, S.; Yuan, X. Multi-source information joint transfer diagnosis for rolling bearing with unknown faults via wavelet transform and an improved domain adaptation network. Reliab. Eng. Syst. Saf. 2024, 242, 109788.

- Li, G.; Wu, J.; Deng, C.; Xu, X.; Shao, X. Deep reinforcement learning-based online domain adaptation method for fault diagnosis of rotating machinery. IEEE/ASME Trans. Mechatron. 2022, 27, 2796–2805.

- Cheng, Y.; Lin, X.; Liu, W.; Zeng, M.; Liang, P. A local and global multi-head relation self-attention network for fault diagnosis of rotating machinery under noisy environments. Appl. Soft Comput. 2025, 176, 113138.

- Yuan, M.; Zeng, M.; Rao, F.; He, Z.; Cheng, Y. An interpretable algorithm unrolling network inspired by general convolutional sparse coding for intelligent fault diagnosis of machinery. Measurement 2025, 244, 116332.

- Yang, Z.; Wang, X.; Zhong, J. Representational learning for fault diagnosis of wind turbine equipment: A multi-layered extreme learning machines approach. Energies 2016, 9, 379.

- Bhole, N.; Ghodke, S. Motor Current Signature Analysis for Fault Detection of Induction Machine–A Review. In Proceedings of the 2021 4th Biennial International Conference on Nascent Technologies in Engineering (ICNTE), Navi Mumbai, India, 15–16 January 2021; pp. 1–6.

- Bayma, R.S.; Lang, Z.Q. Fault diagnosis methodology based on nonlinear system modelling and frequency analysis. IFAC Proc. 2014, 47, 8278–8285.

- Alwodai, A.; Wang, T.; Chen, Z.; Gu, F.; Cattley, R.; Ball, A. A Study of Motor Bearing Fault Diagnosis using Modulation Signal Bispectrum Analysis of Motor Current Signals. J. Sig. Inform. Proc. 2013, 4, 72–79.

- Ma, C.; Zhang, W.; Shi, M.; Zou, X.; Xu, Y.; Zhang, K. Feature identification based on cepstrum-assisted frequency slice function for bearing fault diagnosis. Measurement 2025, 246, 116753.

- Kankar, P.K.; Sharma, S.C.; Harsha, S.P. Fault diagnosis of ball bearings using continuous wavelet transform. Appl. Soft Comput. 2011, 11, 2300–2312.

- Ma, K.; Wang, Y.; Yang, Y. Fault Diagnosis of Wind Turbine Blades Based on One-Dimensional Convolutional Neural Network-Bidirectional Long Short-Term Memory-Adaptive Boosting and Multi-Source Data Fusion. Appl. Sci. 2025, 15, 3440.

- Dladla, V.M.N.; Thango, B.A. Fault Classification in Power Transformers via Dissolved Gas Analysis and Machine Learning Algorithms: A Systematic Literature Review. Appl. Sci. 2025, 15, 2395.

- Huang, X.; Teng, Z.; Tang, Q.; Yu, Z.; Hua, J.; Wang, X. Fault diagnosis of automobile power seat with acoustic analysis and retrained SVM based on smartphone. Measurement 2022, 202, 111699.

- Liu, G.; Ma, Y.; Wang, N. Rolling Bearing Fault Diagnosis Based on SABO–VMD and WMH–KNN. Sensors 2024, 24, 5003.

- Chen, J.-H.; Zou, S.-L. An Intelligent Condition Monitoring Approach for Spent Nuclear Fuel Shearing Machines Based on Noise Signals. Appl. Sci. 2018, 8, 838.

- Liang, X.; Yao, J.; Zhang, W.; Wang, Y. A Novel Fault Diagnosis of a Rolling Bearing Method Based on Variational Mode Decomposition and an Artificial Neural Network. Appl. Sci. 2023, 13, 3413.

- Yan, X.; Liu, Y.; Jia, M. Multiscale cascading deep belief network for fault identification of rotating machinery under various working conditions. Knowl.-Based Syst. 2020, 193, 105484.

- Jiang, G.; Xie, P.; He, H.; Yan, J. Wind turbine fault detection using a denoising autoencoder with temporal information. IEEE/ASME Trans. Mechatron. 2018, 23, 89–100.

- Li, G.; Wu, J.; Deng, C.; Chen, Z. Parallel multi-fusion convolutional neural networks based fault diagnosis of rotating machinery under noisy environments. ISA Trans. 2022, 128, 545–555.

- Chen, Z.; Huang, H.; Deng, Z.; Wu, J. Shrinkage mamba relation network with out-of-distribution data augmentation for rotating machinery fault detection and localization under zero-faulty data. Mech. Syst. Signal Process. 2025, 224, 112145.

- Wang, S.; Shuai, H.; Hu, J.; Zhang, J.; Liu, S.; Yuan, X.; Liang, P. Few-shot fault diagnosis of axial piston pump based on prior knowledge-embedded meta learning transformer under variable operating conditions. Expert Syst. Appl. 2025, 269, 126452.

- Zhang, W.; Li, X.; Ma, H.; Luo, Z.; Li, X. Federated learning for machinery fault diagnosis with dynamic validation and self-supervision. Knowl.-Based Syst. 2021, 213, 106679.

- Du, J.; Qin, N.; Jia, X.; Zhang, Y.; Huang, D. Fault diagnosis of multiple railway high speed train bogies based on federated learning. J. Southwest Jiaotong Univ. 2024, 59, 185–192.

- Zhang, Z.; Xu, X.; Gong, W.; Chen, Y.; Gao, H. Efficient federated convolutional neural network with information fusion for rolling bearing fault diagnosis. Control Eng. Pract. 2021, 116, 104913.

- Geng, D.Q.; He, H.W.; Lan, X.C.; Liu, C. Bearing fault diagnosis based on improved federated learning algorithm. Computing 2022, 104, 1–19.

- Wang, Q.; Li, Q.; Wang, K.; Wang, H.; Zeng, P. Efficient federated learning for fault diagnosis in industrial cloud-edge computing. Computing 2021, 103, 2319–2337.

- Li, Y.; Chen, Y.; Zhu, K.; Bai, C.; Zhang, J. An Effective Federated Learning Verification Strategy and Its Applications for Fault Diagnosis in Industrial IoT Systems. IEEE Internet Things J. 2022, 9, 16835–16849.

- Liang, Y.; Zhao, P.; Wang, Y. Federated Few-Shot Learning-Based Machinery Fault Diagnosis in the Industrial Internet of Things. Appl. Sci. 2023, 13, 10458.

- Berghout, T.; Benbouzid, M.; Bentrcia, T.; Lim, W.H.; Amirat, Y. Federated Learning for Condition Monitoring of Industrial Processes: A Review on Fault Diagnosis Methods, Challenges, and Prospects. Electronics 2023, 12, 158.

- Pei, X.; Zheng, X.; Wu, J. Rotating machinery fault diagnosis through a transformer convolution network subjected to transfer learning. IEEE Trans. Instrum. Meas. 2021, 70, 2515611.

- Ding, Y.; Jia, M.; Miao, Q.; Cao, Y. A novel time-frequency Transformer based on self-attention mechanism and its application in fault diagnosis of rolling bearings. Mech. Syst. Signal Process. 2022, 168, 108616.

- Jin, C.; Chen, X. An end-to-end framework combining time-frequency expert knowledge and modified transformer networks for vibration signal classification. Expert Syst. Appl. 2021, 171, 114570.

- Zhou, H.; Huang, X.; Wen, G.; Dong, S.; Lei, Z.; Zhang, P.; Chen, X. Convolution enabled transformer via random contrastive regularization for rotating machinery diagnosis under time-varying working conditions. Mech. Syst. Signal Process. 2022, 173, 109050.

- Liu, W.; Zhang, Z.; Zhang, J.; Huang, H.; Zhang, G.; Peng, M. A novel fault diagnosis method of rolling bearings combining convolutional neural network and transformer. Electronics 2023, 12, 1838.

- Xie, F.; Wang, G.; Zhu, H.; Sun, E.; Fan, Q.; Wang, Y. Rolling bearing fault diagnosis based on SVD-GST combined with vision transformer. Electronics 2023, 12, 3515.

- Xiao, Y.; Shao, H.; Wang, J.; Yan, S.; Liu, B. Bayesian variational transformer: A generalizable model for rotating machinery fault diagnosis. Mech. Syst. Signal Process. 2024, 207, 110936.

- Chen, C.; Liu, C.; Wang, T.; Zhang, A.; Wu, W.; Cheng, L. Compound fault diagnosis for industrial robots based on dual-transformer networks. J. Manuf. Syst. 2023, 66, 163–178.

- Huang, X.; Wu, T.; Yang, L.; Hu, Y.; Chai, Y. Domain adaptive fault diagnosis based on Transformer feature extraction for rotating machinery. Chin. J. Sci. Instrum. 2022, 43, 210–218.

- Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1800–1807.

- Thuan, N.D.; Hong, H.S. HUST bearing: A practical dataset for ball bearing fault diagnosis. BMC Res. Notes 2023, 16, 138.

- Xie, K.; Wang, C.; Wang, Y.; Cheng, Y.; Chen, L. A denoising diffusion probabilistic model-based fault sample generation approach for imbalanced intelligent fault diagnosis. Meas. Sci. Technol. 2025, 36, 066134.

- Wen, L.; Li, X.; Gao, L. A transfer convolutional neural network for fault diagnosis based on ResNet-50. Neural Comput. Appl. 2019, 32, 6111–6124.

- Chen, Z.; Wu, J.; Deng, C.; Wang, C.; Wang, Y. Residual deep subdomain adaptation network: A new method for intelligent fault diagnosis of bearings across multiple domains. Mech. Mach. Theory 2022, 169, 104635.

- Liang, P.; Wang, W.; Yuan, X.; Liu, S.; Zhang, L.; Cheng, Y. Intelligent fault diagnosis of rolling bearing based on wavelet transform and improved ResNet under noisy labels and environment. Eng. Appl. Artif. Intell. 2022, 115, 105269.

| Methods | Main Features | Advantages | Limitations |
|---|---|---|---|
| [23] | Dynamic validation; Self-supervision | Suitable for time series monitoring data | Client uploads risk leaks; server faults hurt fault diagnosis training; central setup hikes fault diagnosis costs |
| [24] | Multi-scale convolution | Diverse data features | |
| [25] | Adaptive communication | Fewer communication rounds | |
| [26] | Optimized the global model using F1 score; increased the accuracy difference | Fewer communication rounds | |
| [27] | Edge picks cloud models async via local data | Improved efficiency; reduced computational and communication costs | |
| [28] | Trained using a stacked network | Combined with the Internet of Things | |
| DFed-LT | Decentralized architecture | Calculates global model via node-to-node relay | - |

| Methods | Main Features | Advantages | Limitations |
|---|---|---|---|
| [31] | Combines transformer encoder and CNN | First application of transformer to rotating machinery FD; leverages benefits of both architectures | Complex structures; numerous learnable parameters; require substantial computational resources; difficult to deploy on edge devices |
| [32] | Based on time–frequency transformer | Addresses shortcomings of classical structures in computational efficiency and feature representation; demonstrated superiority | |
| [33] | Four-step process: data preprocessing, time–frequency feature extraction, improved transformer, integral optimization | Structured end-to-end workflow for vibration signal classification | |
| [34] | Improved self-attention via depthwise separable convolution and random comparison regularization | Enables deep transformer encoder; improves generalization under different operating conditions | |
| [35] | Balances CNN-like and transformer-like features | Addresses limitations of CNN and strict data quality requirements of the transformer | |
| [36] | Uses visual transformer | Deals with noise interference and makes better use of data features beyond 1D information | |
| [37] | Uses transformer to focus on important features | Improves feature selection ability | |
| [38] | Combines wavelet time–frequency analysis and swin transformer | Improves FD performance by using image classification ability | |
| [39] | Uses VOLO transformer as feature extractor | Obtains finer-grained fault feature representations | |
| DFed-LT | Lightweight transformer | Simple model structure; smaller computational load | - |

| Index | Fault Type | Bearing Type | Operating Condition | Sampling Rate | Sample Number (Training/Validation/Testing) |
|---|---|---|---|---|---|
| N | Normal | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| IF | Inner race fault | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| OF | Outer race fault | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| BF | Ball fault | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| IOF | Inner and outer race faults | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| IBF | Inner race and ball faults | 6206 | 0 W | 51.2 kHz | 400/50/50 |
| OBF | Outer race and ball faults | 6206 | 0 W | 51.2 kHz | 400/50/50 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| CNN | 85.64 | 85.22 | 85.60 | 85.41 | 0.06 | 1.52 |
| Uniformer | 98.22 | 98.06 | 98.44 | 98.25 | 20.89 | 127.69 |
| MobileNetV3 | 97.14 | 97.30 | 97.28 | 97.29 | 2.92 | 10.87 |
| LT model | 99.06 | 99.17 | 98.87 | 99.02 | 2.48 | 8.58 |
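The cost side of this comparison is easier to read as ratios. The short sketch below takes the parameter counts and FLOPs from the table above and expresses the LT model's cost relative to each of the other models. The dictionary layout and the helper name `relative_cost` are hypothetical.

```python
# model -> (accuracy %, parameters in millions, FLOPs in G), from the table above
models = {
    "CNN": (85.64, 0.06, 1.52),
    "Uniformer": (98.22, 20.89, 127.69),
    "MobileNetV3": (97.14, 2.92, 10.87),
    "LT model": (99.06, 2.48, 8.58),
}


def relative_cost(model: str, baseline: str) -> tuple:
    """Return (parameter ratio, FLOPs ratio) of `model` relative to `baseline`."""
    _, params, flops = models[model]
    _, base_params, base_flops = models[baseline]
    return params / base_params, flops / base_flops


for baseline in ("Uniformer", "MobileNetV3", "CNN"):
    p_ratio, f_ratio = relative_cost("LT model", baseline)
    acc_gain = models["LT model"][0] - models[baseline][0]
    print(
        f"LT vs {baseline}: {p_ratio:.1%} of params, {f_ratio:.1%} of FLOPs, "
        f"{acc_gain:+.2f} points accuracy"
    )
```

Read this way, the LT model uses roughly 12% of Uniformer's parameters and 7% of its FLOPs while reporting slightly higher accuracy, and it is also somewhat cheaper than MobileNetV3.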

| Methods | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Non-Fed | 51.36 | 52.40 | 51.67 | 52.03 |
| FedAvg-CNN | 81.14 | 82.53 | 82.39 | 82.46 |
| DFed-CNN | 83.49 | 83.78 | 82.61 | 83.19 |
| FedAvg-LT | 97.94 | 97.38 | 97.10 | 97.24 |
| DFed-LT | 98.27 | 99.23 | 99.27 | 99.25 |

| Methods | Accuracy (%), SNR = 8 dB | Accuracy (%), SNR = 4 dB | Accuracy (%), SNR = 0 dB | Accuracy (%), SNR = −4 dB |
|---|---|---|---|---|
| Non-Fed | 48.21 | 44.37 | 39.85 | 34.62 |
| FedAvg-CNN | 76.88 | 71.54 | 65.12 | 57.91 |
| DFed-CNN | 79.65 | 74.72 | 68.59 | 61.44 |
| FedAvg-LT | 95.28 | 91.45 | 86.33 | 79.82 |
| DFed-LT | 95.85 | 92.35 | 87.55 | 81.28 |
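One way to quantify noise robustness from these accuracies is the relative accuracy loss between the mildest (8 dB) and the most severe (−4 dB) condition. The sketch below computes that figure for each method. The metric itself is an illustrative choice rather than one defined in the source, and the name `relative_drop` is hypothetical.

```python
# method -> {SNR in dB: accuracy in %}, from the table above
accuracy = {
    "Non-Fed": {8: 48.21, 4: 44.37, 0: 39.85, -4: 34.62},
    "FedAvg-CNN": {8: 76.88, 4: 71.54, 0: 65.12, -4: 57.91},
    "DFed-CNN": {8: 79.65, 4: 74.72, 0: 68.59, -4: 61.44},
    "FedAvg-LT": {8: 95.28, 4: 91.45, 0: 86.33, -4: 79.82},
    "DFed-LT": {8: 95.85, 4: 92.35, 0: 87.55, -4: 81.28},
}


def relative_drop(acc: dict, high: int = 8, low: int = -4) -> float:
    """Accuracy lost between two SNR levels, as a percentage of the high-SNR value."""
    return (acc[high] - acc[low]) / acc[high] * 100


drops = {method: relative_drop(acc) for method, acc in accuracy.items()}

# List methods from most to least robust under this metric
for method, drop in sorted(drops.items(), key=lambda kv: kv[1]):
    print(f"{method}: loses {drop:.1f}% of its 8 dB accuracy at -4 dB")
```

Under this metric the ordering matches the discussion in Section 4.4: DFed-LT loses the smallest share of its accuracy and Non-Fed the largest, with the LT-based methods ahead of the CNN-based ones.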

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xie, K.; Cheng, C.; Cheng, Y.; Wang, Y.; Chen, L.; Wen, W.; Shang, W. DFed-LT: A Decentralized Federated Learning with Lightweight Transformer Network for Intelligent Fault Diagnosis. Appl. Sci. 2025, 15, 11484. https://doi.org/10.3390/app152111484