Machine Learning Approaches for Detecting Hate-Driven Violence on Social Media

Abstract

1. Introduction

- Integration of Behavioral and Temporal Dynamics: We introduce a structured set of temporal (e.g., nighttime activity, session duration) and behavioral (e.g., toxicity rate, user affinity) indicators that, to our knowledge, have not been jointly evaluated with contextualized embeddings in the cyberbullying domain. These features provide richer insight into interaction dynamics than lexical analysis alone.

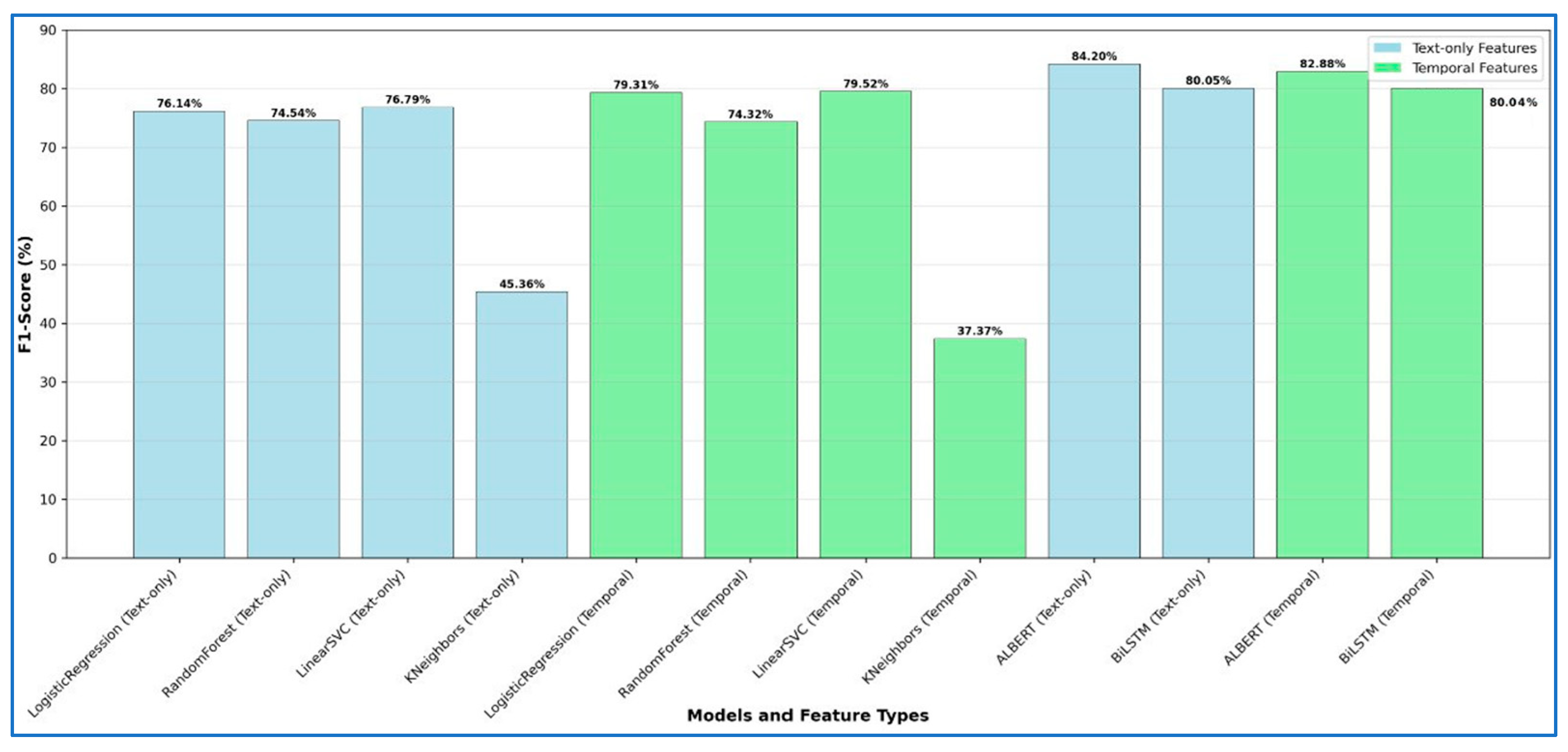

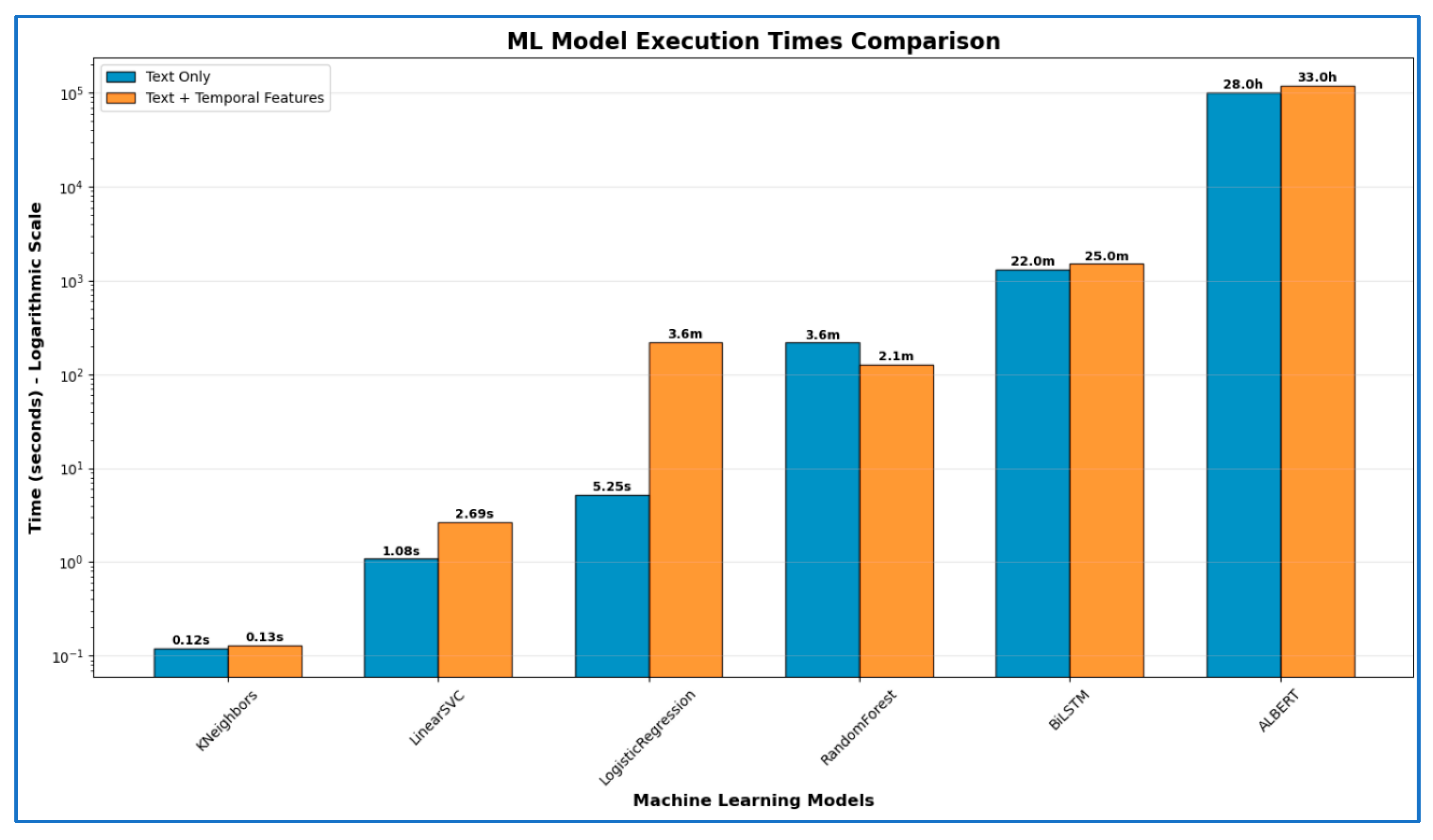

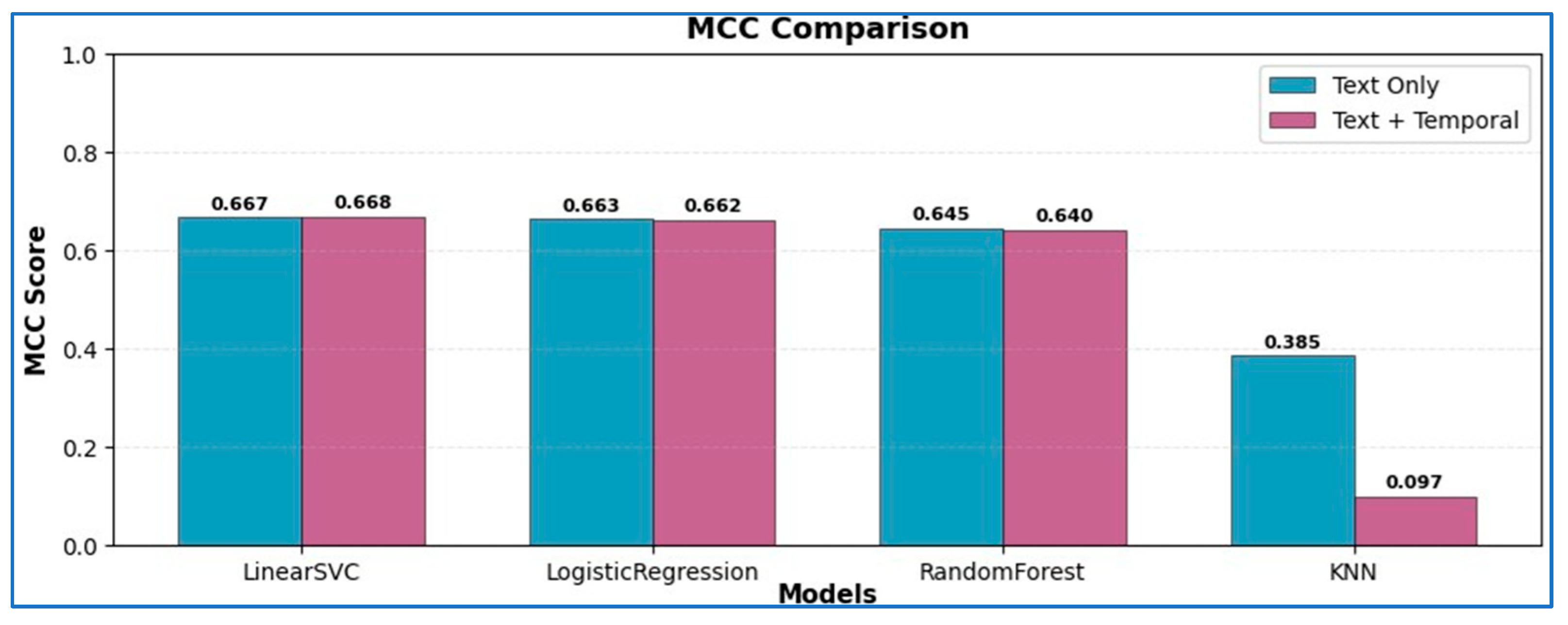

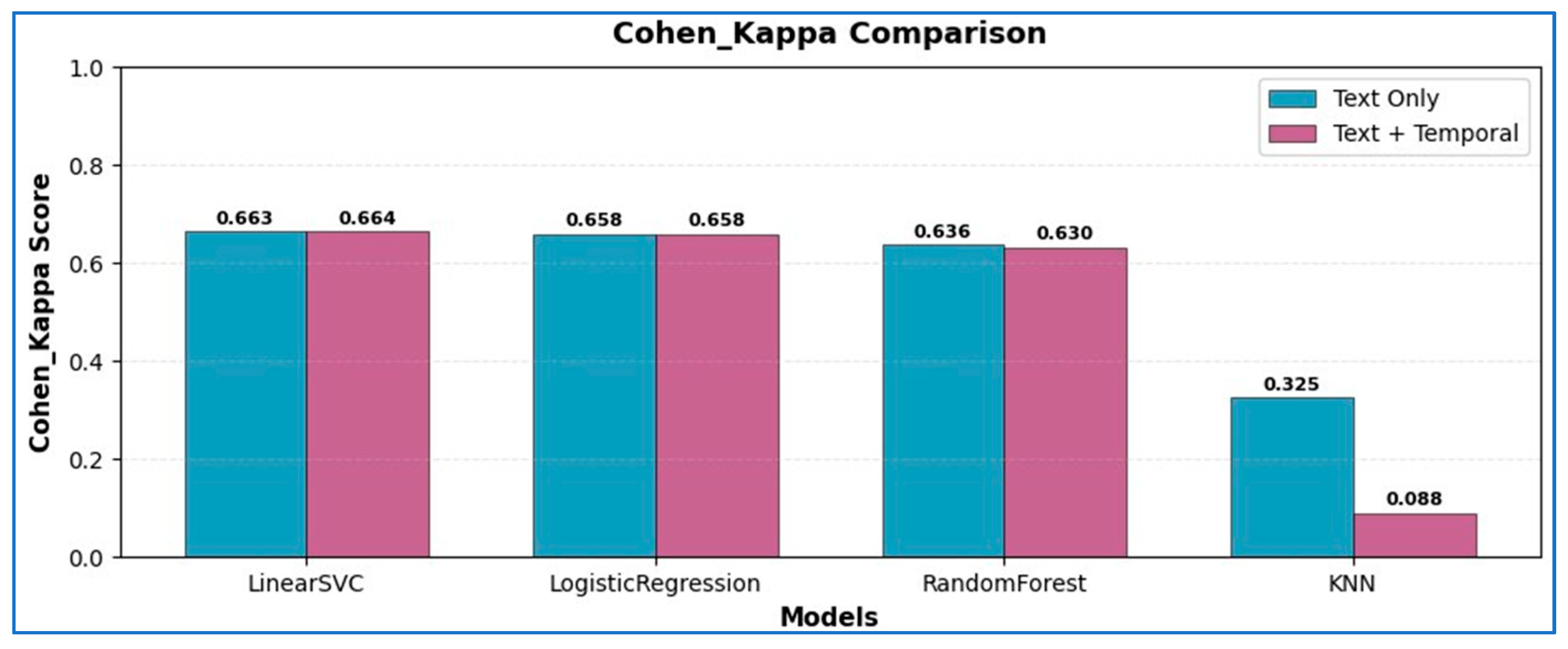

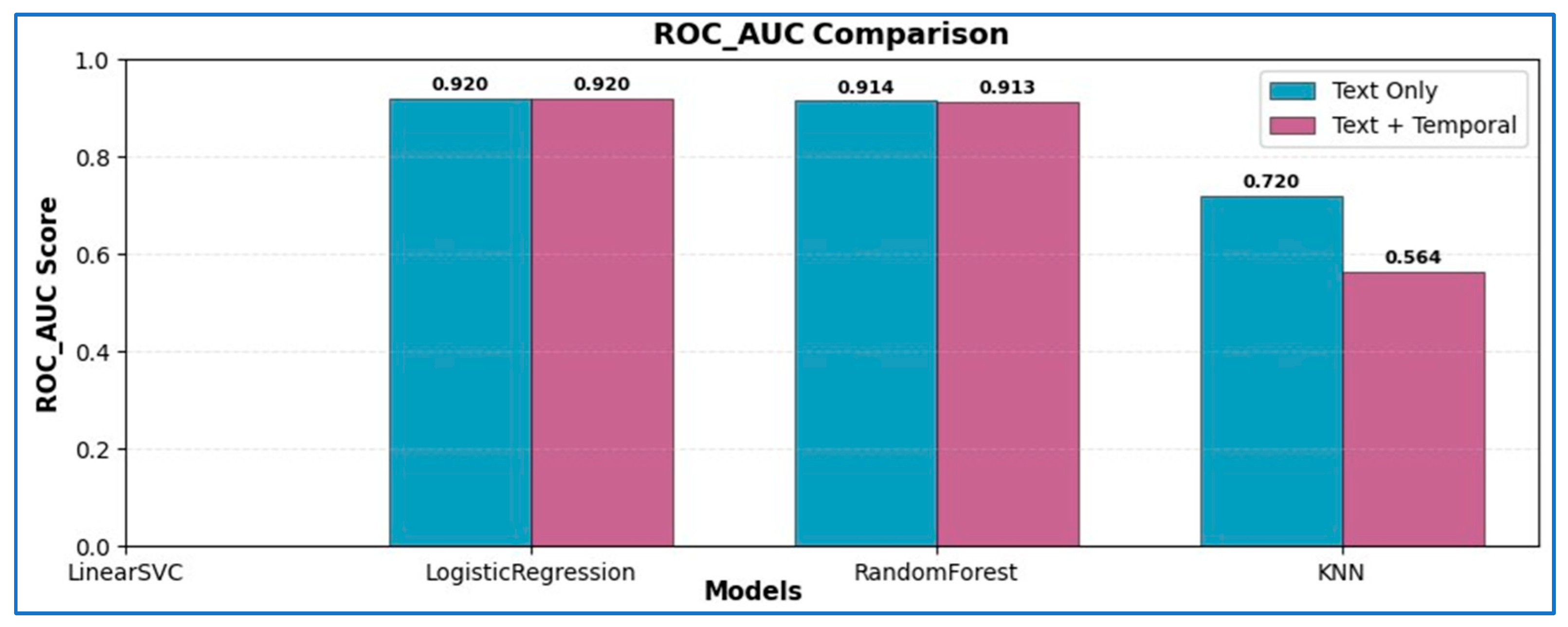

- Systematic Multi-Family Evaluation: We design a unified evaluation framework that contrasts classical models (LinearSVC, Logistic Regression, Random Forest, KNN) with state-of-the-art neural networks (ALBERT and BiLSTM) across two feature settings: text-only and text + temporal/behavioral. This systematic approach highlights how different model families benefit from contextual signals.

- Enhanced Preprocessing and Validation Pipeline: Beyond our earlier study, we implement refined preprocessing steps, including time normalization, balanced dataset construction, and model-specific text cleaning, ensuring comparability across heterogeneous models.

- Neural Network Extension with Contextual Features: We provide the first comparative evidence of how transformer-based architectures (ALBERT) and sequence-oriented models (BiLSTM) perform when enriched with temporal and behavioral features, offering new empirical insights into context-aware detection.

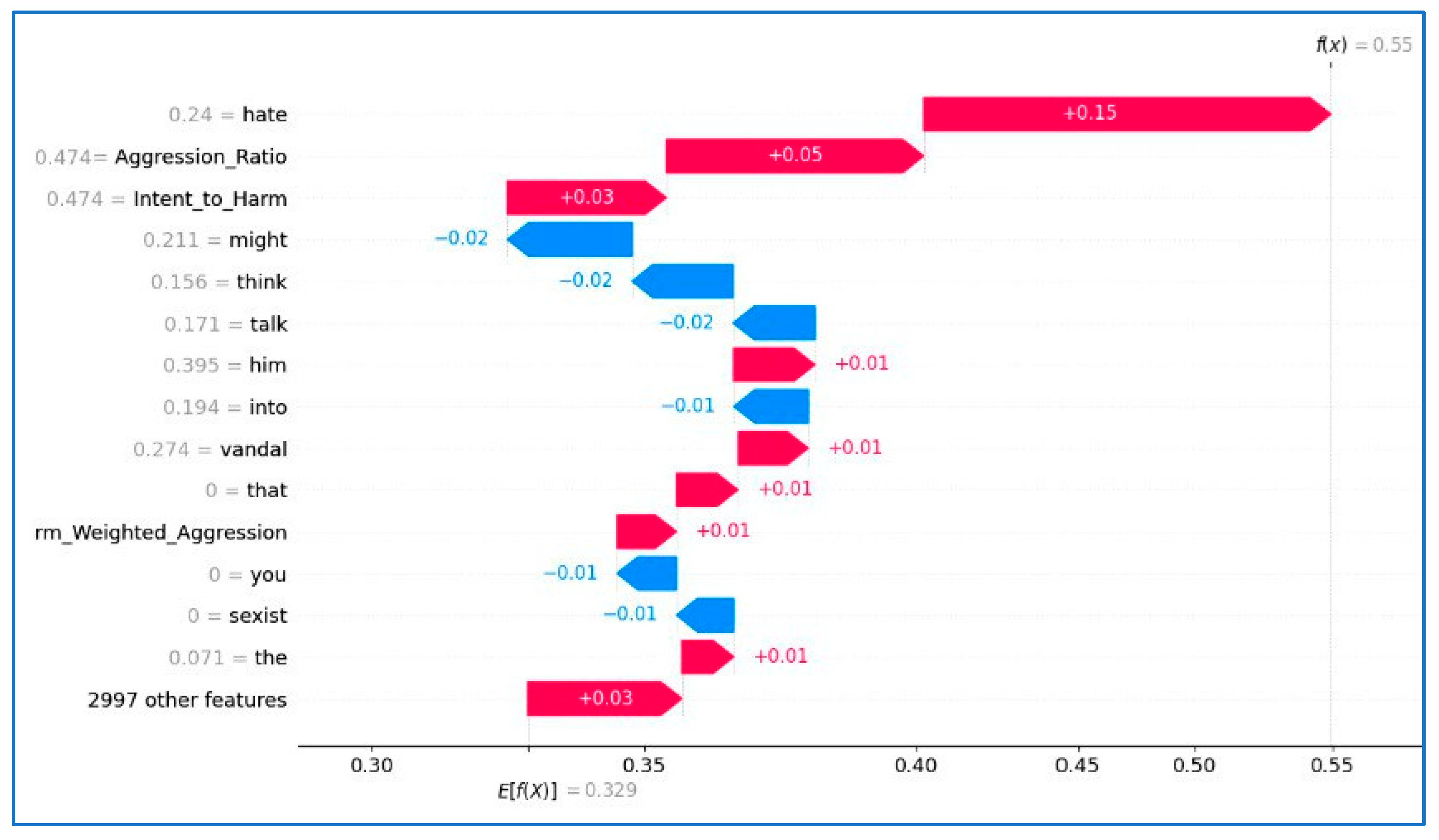

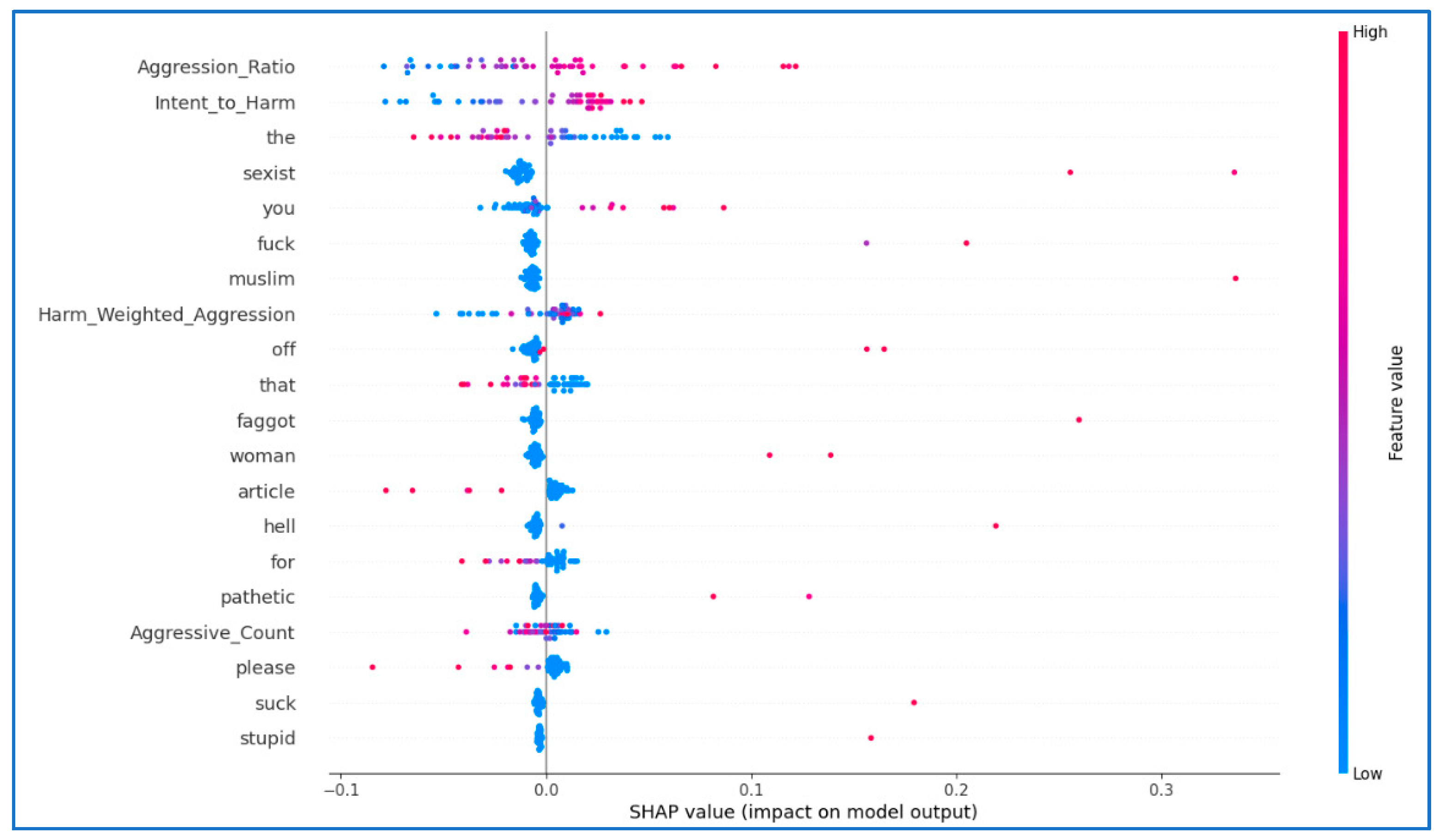

- Practical Relevance and Interpretability Potential: While not a full explainability study, we discuss how feature importance and contextual cues can support interpretability and outline how such models may be deployed in monitoring pipelines.

- Scalability and Future Directions: Finally, we chart a roadmap toward broader generalization, including multimodal datasets (text, image, audio), demographic-aware modeling, and API-based real-time collection from platforms such as TikTok and X.

2. Background

3. Feature Engineering for Social Media Harassment Detection

3.1. Social Network Datasets

- Cross-platform datasets from sources such as Reddit, TikTok, and multilingual Twitter corpora to test generalization across different ecosystems.

- Multimodal signals (e.g., image-text, audio-text) to capture visual and auditory aggression cues.

- Graph- and thread-based modeling to reflect reply structures, interaction networks, and harassment propagation.

- Multilingual and code-switched corpora to assess robustness in diverse linguistic environments.

3.2. Data Preprocessing

- Class Weighting for Classical Models: Algorithms such as LinearSVC, Logistic Regression, and Random Forest were trained with class_weight = 'balanced' in scikit-learn. This setting weights each class inversely proportional to its frequency in the training data, so that misclassifying bullying instances incurs a higher penalty.

- Weighted Loss for Neural Models: For BiLSTM and ALBERT, we incorporated class weights directly into the training loss function to counteract imbalance and improve minority-class sensitivity.

- Stratified Train–Test Split: The 80/20 data split was stratified to preserve the original class distribution in both training and test sets, ensuring that performance metrics reflect realistic operating conditions.

- Evaluation Beyond Accuracy: Since accuracy alone can be misleading on imbalanced datasets, we emphasize precision, recall, and F1-score, which better capture the trade-offs between false positives and false negatives in this domain.

- Removing Patterns: In this phase, recurring patterns or substrings that carry no useful information are removed from the text to improve its quality and relevance for later analysis. The targets are elements that have no bearing on the users' messages, along with other static artifacts that could introduce noise or confusion into the dataset. Identifying these patterns systematically, for example with regular expressions or similar techniques, gives the data a more consistent structure and prepares it for the subsequent preprocessing steps without compromising its integrity or altering its content [22,23].

- Clean Text: Data cleaning comprises a series of tasks that standardize and streamline the textual content to facilitate meaningful analysis. This phase typically removes extra line breaks, punctuation marks, and other extraneous markers that may hinder comprehension or introduce bias in subsequent analysis. Converting text to a consistent, uniform format reduces potential sources of noise and makes the dataset better suited to natural language processing techniques [22,23].

- Tokenization: Tokenization breaks raw text into individual tokens, usually words or subwords, to enable further analysis and processing. The text is split at whitespace or at the boundaries of alphanumeric character sequences to extract meaningful units. It is an important preprocessing step in natural language processing and yields the token sequences used for tasks such as feature extraction, sentiment analysis, and machine learning-based classification [22,23].

- Lemmatization: Lemmatization reduces words to their base or dictionary forms, known as lemmas, which yields a more compact vocabulary and makes subsequent analyses easier to interpret. Applying language-specific rules consolidates inflectional variants of the same word, reduces redundancy, and supports downstream natural language processing tasks [23].

- Vectorization (TF-IDF): Term Frequency–Inverse Document Frequency (TF–IDF) transforms cleaned and tokenized text into numerical feature vectors suitable for machine learning algorithms. TF–IDF weights reflect the relative importance of terms in distinguishing documents [22,24], and remain widely adopted in practical pipelines through Python 3.13.7 libraries such as scikit-learn [25].

- Removing Patterns: For the neural models (BiLSTM and ALBERT), non-informative patterns were likewise removed to enhance the quality of the input text.

- Minimal Text Cleaning: Basic cleaning, such as removing extra spaces or control characters, was performed. Punctuation, capitalization, and other contextual cues were retained to preserve semantic information.

- Tokenization and Vectorization: These steps were performed internally by the models’ tokenizers, which convert text into subword embeddings suitable for deep learning. Lemmatization and TF-IDF were not applied.
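The contrast between the two preprocessing regimes described in this section can be illustrated with a minimal sketch. It uses only the Python standard library and makes several assumptions that are not stated in the paper: URLs and @mentions stand in for the "non-informative patterns", the regular expressions and function names are hypothetical, and lemmatization is omitted because it would require an external lexicon.

```python
import re
import string

# Hypothetical patterns: the paper does not list which patterns were removed.
URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
CONTROL_RE = re.compile(r"[\x00-\x1f\x7f]")
SPACE_RE = re.compile(r"\s+")


def remove_patterns(text: str) -> str:
    """Drop URLs and @mentions (assumed examples of non-informative patterns)."""
    text = URL_RE.sub(" ", text)
    return MENTION_RE.sub(" ", text)


def clean_for_classical(text: str) -> list[str]:
    """Heavier cleaning for TF-IDF models: lowercase, strip punctuation, tokenize."""
    text = remove_patterns(text).lower()
    text = text.translate(str.maketrans("", "", string.punctuation))
    return text.split()  # whitespace tokenization


def clean_for_neural(text: str) -> str:
    """Minimal cleaning: keep case and punctuation, drop control characters and extra spaces."""
    text = remove_patterns(text)
    text = CONTROL_RE.sub(" ", text)
    return SPACE_RE.sub(" ", text).strip()


sample = "@user1 You are SO pathetic!!!\n\nSee https://example.com   now."
classical_tokens = clean_for_classical(sample)
neural_text = clean_for_neural(sample)
print(classical_tokens)
print(neural_text)
```

In this sketch the classical path discards capitalization and exclamation marks, whereas the neural path keeps them so that a subword tokenizer can still see those cues.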

3.3. System Architecture

- Classical ML Pipeline: Preprocessing includes pattern removal, text cleaning, tokenization, and lemmatization, followed by TF-IDF vectorization. Temporal and behavioral features are then concatenated with textual embeddings. Four traditional classifiers were trained: Logistic Regression, Random Forest, LinearSVC, and KNN.

- BiLSTM Pipeline: Input text is preprocessed with light cleaning before being tokenized and padded. The BiLSTM model processes sequences bidirectionally, capturing contextual dependencies from both past and future tokens.

- ALBERT Pipeline: Similar to BiLSTM, preprocessing remains minimal to preserve semantic cues. Text is encoded using the ALBERT tokenizer, which generates subword embeddings. The model leverages parameter sharing and factorized embeddings to efficiently capture contextual patterns.
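As a rough illustration of the classical pipeline, the sketch below builds TF-IDF vectors and appends temporal and behavioral features to each row. It is an assumption-laden toy rather than the implementation used in the study: it applies the textbook weighting tf × ln(N/df), whereas scikit-learn's TfidfVectorizer uses a smoothed, normalized variant by default, and the feature values (a ToxicityRate and an Avg_IsNightMessage per message) are invented for the example.

```python
import math
from collections import Counter


def tfidf_matrix(docs: list[list[str]]) -> tuple[list[str], list[list[float]]]:
    """Textbook TF-IDF: tf = count / document length, idf = ln(N / df)."""
    vocab = sorted({tok for doc in docs for tok in doc})
    n_docs = len(docs)
    # Document frequency: number of documents containing each term.
    df = Counter(tok for doc in docs for tok in set(doc))
    idf = {tok: math.log(n_docs / df[tok]) for tok in vocab}
    rows = []
    for doc in docs:
        counts = Counter(doc)
        length = len(doc) or 1
        rows.append([counts[tok] / length * idf[tok] for tok in vocab])
    return vocab, rows


def concat_features(text_rows: list[list[float]],
                    extra_rows: list[list[float]]) -> list[list[float]]:
    """Append the extra (temporal/behavioral) features to each text vector."""
    if len(text_rows) != len(extra_rows):
        raise ValueError("text and extra feature matrices must have equal row counts")
    return [t + e for t, e in zip(text_rows, extra_rows)]


docs = [
    ["you", "are", "pathetic"],
    ["see", "you", "tomorrow"],
    ["nobody", "likes", "you"],
]
# Hypothetical [ToxicityRate, Avg_IsNightMessage] values for each message.
extra = [[0.8, 1.0], [0.0, 0.0], [0.6, 1.0]]

vocab, text_rows = tfidf_matrix(docs)
combined = concat_features(text_rows, extra)
print(len(vocab), len(combined[0]))
```

Because "you" occurs in every toy document, its weight is zero, which shows how TF-IDF discounts terms that do not help to distinguish documents.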

3.4. Data Modelling

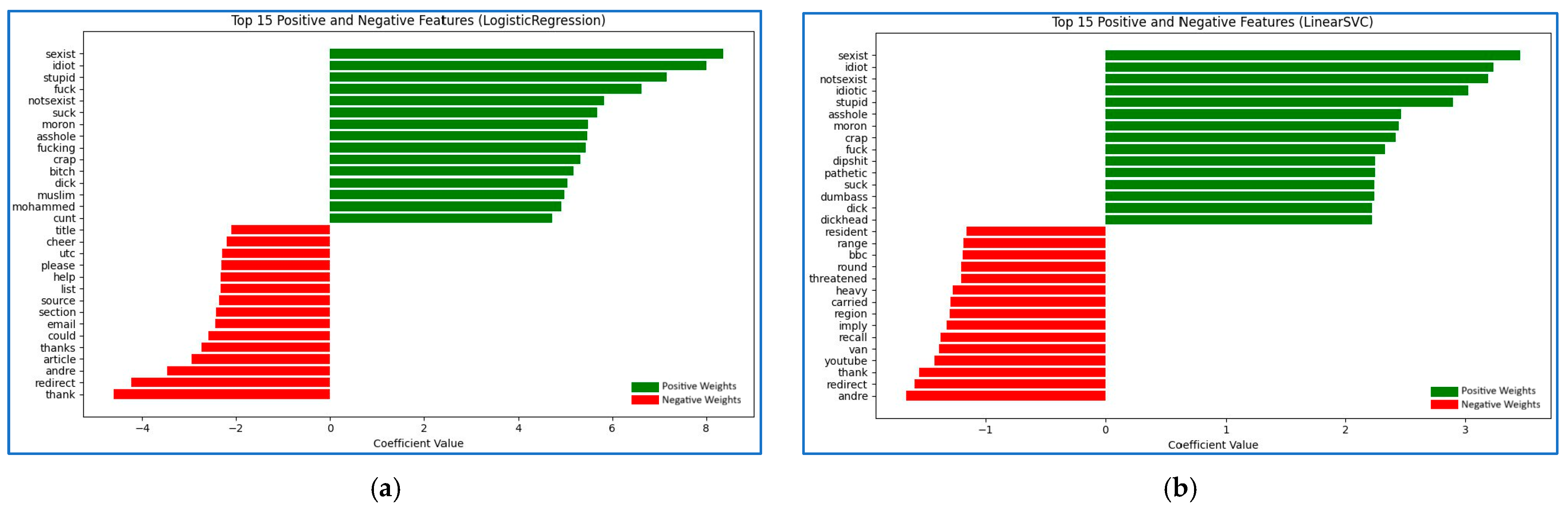

- LinearSVC Model: The Linear Support Vector Classification (LinearSVC) model is based on the Support Vector Machine (SVM) framework [26], widely available through scikit-learn [25]. The model fits a hyperplane to maximize the margin between classes, optimizing linear decision boundaries to minimize classification errors. LinearSVC has demonstrated efficiency in high-dimensional feature spaces, making it suitable for text classification tasks such as cyberbullying detection [21]. By training the LinearSVC model on annotated data, the classifier gains the ability to distinguish toxic from non-toxic samples with effective generalization in real-world contexts.

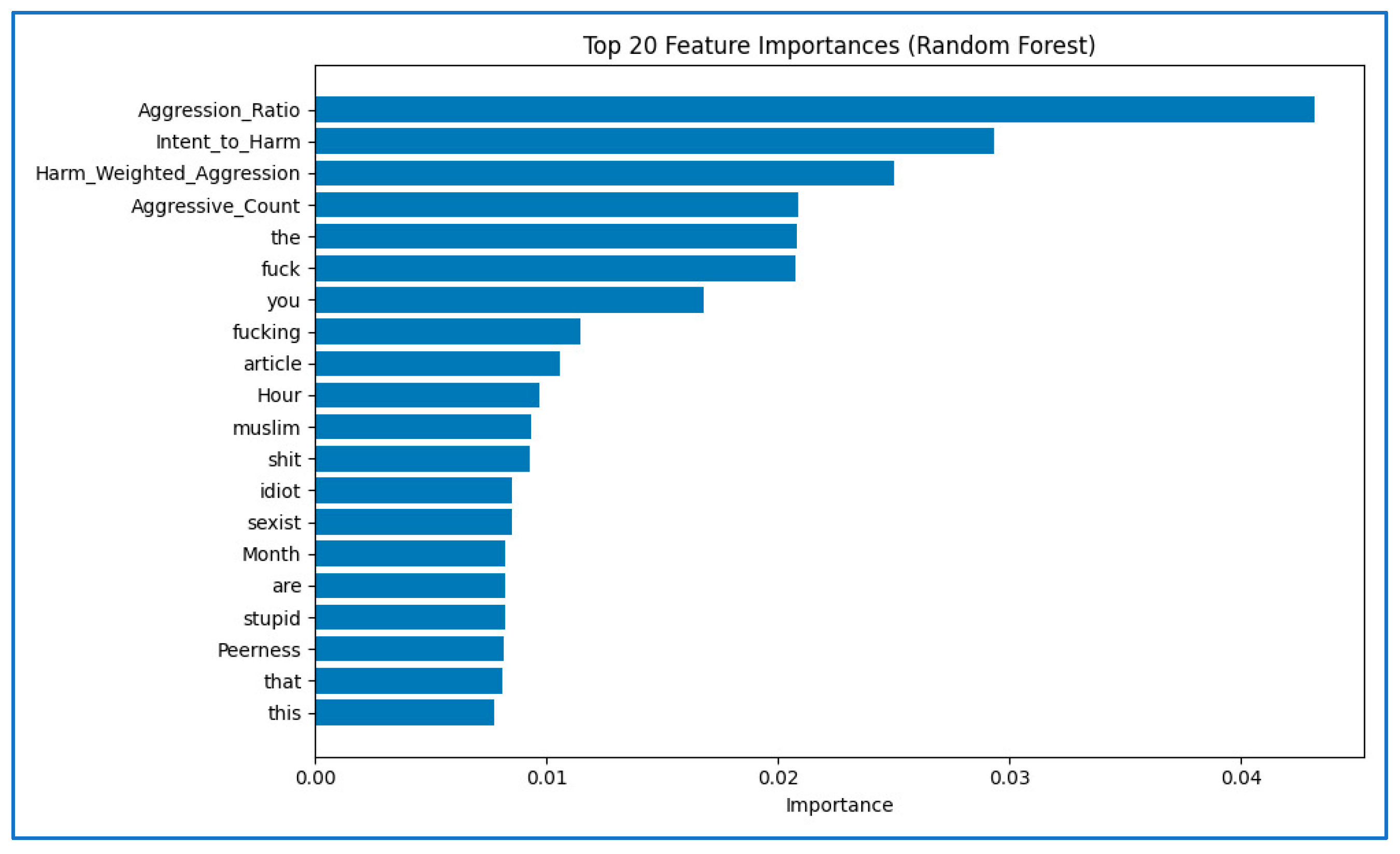

- Random Forest Model: Random Forests are ensemble classifiers that construct multiple decision trees on bootstrapped samples and combine their outputs for robust predictions [27]. Each tree is trained on a random subset of features, reducing overfitting and improving generalizability. This diversity allows Random Forests to perform well in noisy social media environments where individual features may be weak predictors. When applied to cyberbullying detection, Random Forests provide a balance of accuracy and interpretability, supported by their implementation in Python 3.13.7 libraries [25].

- LogisticRegression Model: Logistic Regression is a statistical model originally introduced by Cox [28] and commonly implemented in modern ML libraries [25]. It estimates the probability of a binary outcome, such as a toxic versus a non-toxic post, from a weighted linear combination of features. Logistic Regression remains popular for its computational efficiency, well-calibrated probability outputs, and ease of interpretation. Recent studies [21] confirm its utility in social media classification, though its performance often lags behind more complex models in handling nuanced or context-dependent abuse.

- KNN Model: KNN is a non-parametric method introduced by Cover and Hart [29]. It classifies new samples based on the majority class among their k nearest neighbors in the feature space. This makes KNN highly adaptive to diverse data distributions and particularly effective when decision boundaries are locally smooth. In cyberbullying detection scenarios [21], KNN provides a simple yet flexible approach; however, its computational cost grows with dataset size since all training samples must be stored and compared.

- ALBERT Model: The ALBERT (A Lite BERT) model is a transformer-based architecture designed to reduce the memory and computation requirements of BERT while maintaining high performance [30]. By factorizing embedding parameters and employing cross-layer parameter sharing, ALBERT efficiently models long-range dependencies and semantic nuances within text. This makes it particularly useful for capturing context in online conversations where abusive language may be subtle or indirect.

- BiLSTM Model: Bidirectional Long Short-Term Memory (BiLSTM) networks extend the traditional LSTM architecture by processing input sequences in both forward and backward directions [31]. This allows the model to exploit both past and future context simultaneously, improving performance on sequential data such as conversational exchanges. BiLSTM has been applied successfully in Arabic sentiment analysis [32] and is equally applicable to cyberbullying detection, where understanding context from both directions is critical for identifying subtle harassment patterns.
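Of the models above, KNN has the simplest decision rule, and a compact sketch makes it concrete. The function below is a hypothetical illustration, not the scikit-learn implementation: it takes a majority vote among the k nearest training points under Euclidean distance, and the two-dimensional toy data are invented for the example.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, query, k=5):
    """Majority vote among the k training points closest to `query` (Euclidean distance)."""
    distances = sorted(
        (math.dist(x, query), label) for x, label in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in distances[:k])
    return votes.most_common(1)[0][0]


# Toy 2-D feature vectors; label 1 = bullying, 0 = non-bullying (invented data).
train_X = [(0.1, 0.2), (0.2, 0.1), (0.15, 0.15),
           (0.9, 0.8), (0.8, 0.9), (0.85, 0.95), (0.7, 0.75)]
train_y = [0, 0, 0, 1, 1, 1, 1]

print(knn_predict(train_X, train_y, (0.8, 0.8)))
print(knn_predict(train_X, train_y, (0.1, 0.1)))
```

Every prediction scans the full training set, which reflects the computational cost noted above for large datasets.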

3.5. Feature Importance and Justification

- Avg_IsWeekend: This metric captures the share of a user's activity that falls on weekends, which may signal an emotional break from the daily routine. Numerous studies suggest that the behavior of internet users on weekends is associated with increased emotional expressiveness and impulsive behavior.

- Avg_IsNightMessage: Activity at night is usually associated with aggressive behavior in the late hours or impulsive reactions. This scale measures possible emotional changes or feelings of social isolation.

- Avg_TimeSinceLastMsg: The time it takes to reply can be an indication of the intensity of the dialogue. Short periods may indicate enthusiastic conversations, while longer periods may reflect a decrease in interaction or a lack of interest.

- Avg_SessionDuration: Long user sessions may indicate obsessive or targeted behavior. This measure helps to distinguish casual use from deliberate actions.

- ToxicityRate: This scale measures the amount of hostile or abusive language in users' messages. A high level of toxicity is strong evidence of cyberbullying, especially when it occurs frequently.

- ThreatLevel: This scale reveals the use of threatening language or a hostile tone, which indicates an increase in harassment.

- UserAffinity: This indicator measures how often users interact with each other. A high level of closeness combined with frequent aggression may indicate targeted bullying.

- MsgVolume: This metric keeps track of the number of messages exchanged in one conversation. An increase in the volume of messages with a high level of toxicity can indicate that the abuse continues.
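To make the temporal indicators concrete, the following sketch derives three of them from a list of message timestamps. Several choices are assumptions that the paper does not specify: the night window (22:00 to 05:59), seconds as the unit for Avg_TimeSinceLastMsg, and the function name. Avg_SessionDuration is left out because it depends on a session definition that is not given here.

```python
from datetime import datetime

# Assumed night window: 22:00-05:59 (not specified in the paper).
NIGHT_START, NIGHT_END = 22, 6


def temporal_features(timestamps: list[datetime]) -> dict[str, float]:
    """Aggregate per-user temporal indicators from message timestamps."""
    if not timestamps:
        raise ValueError("at least one timestamp is required")
    ts = sorted(timestamps)
    n = len(ts)
    # weekday() returns 5 for Saturday and 6 for Sunday.
    weekend = sum(t.weekday() >= 5 for t in ts) / n
    night = sum(t.hour >= NIGHT_START or t.hour < NIGHT_END for t in ts) / n
    # Gaps between consecutive messages, in seconds (assumed unit).
    gaps = [(b - a).total_seconds() for a, b in zip(ts, ts[1:])]
    avg_gap = sum(gaps) / len(gaps) if gaps else 0.0
    return {
        "Avg_IsWeekend": weekend,
        "Avg_IsNightMessage": night,
        "Avg_TimeSinceLastMsg": avg_gap,
    }


messages = [
    datetime(2024, 5, 3, 21, 30),  # Friday evening
    datetime(2024, 5, 4, 23, 0),   # Saturday night
    datetime(2024, 5, 4, 23, 10),  # Saturday night
    datetime(2024, 5, 5, 1, 10),   # Sunday, after midnight
]
features = temporal_features(messages)
print(features)
```

For this toy user, three of the four messages fall on a weekend and within the assumed night window, so both proportions equal 0.75.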

3.6. Hyperparameter Settings and Justification

- LinearSVC: We used the default hinge loss and an L2 regularization penalty with the regularization strength C = 1.0. This setting is known to provide robust generalization on sparse, high-dimensional feature spaces like TF-IDF. The default tolerance (tol) and the maximum number of iterations (max_iter = 1000) ensured convergence without overfitting.

- Logistic Regression: Configured with penalty = 'l2', solver = 'lbfgs', and max_iter = 1000. This configuration stabilizes optimization in large-scale text classification and provides well-calibrated probabilities.

- Random Forest: We employed n_estimators = 100 decision trees and left max_depth unconstrained, allowing the ensemble to capture complex, nonlinear patterns. A fixed random_state = 42 was used to ensure reproducibility.

- K-Nearest Neighbors (KNN): We set the number of neighbors to k = 5, a commonly used choice that balances bias and variance in text classification tasks. Euclidean distance was used as the distance metric.

- BiLSTM: The network architecture consisted of an Embedding layer (vocab_size = 10,000, embedding_dim = 128), followed by a Bidirectional LSTM layer with 64 hidden units. Two Dropout layers with a rate of 0.5 were used to reduce overfitting, and a fully connected dense layer (64 neurons, ReLU activation) preceded the final sigmoid classification layer. The model was optimized using the Adam optimizer with a learning rate of 0.001, a batch size of 64, and trained for a maximum of 10 epochs with early stopping (patience = 3).

- ALBERT: We fine-tuned the pre-trained albert-base-v2 transformer model with a learning rate of using the AdamW optimizer. The maximum sequence length was set to 128 tokens, with a batch size of 16 for both training and evaluation. The model was trained for 3 epochs, and a linear learning rate scheduler with warmup was applied.
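The linear learning rate schedule with warmup mentioned for ALBERT can be sketched as a multiplier on the base learning rate. This is an illustrative sketch under stated assumptions, not the training code used in the study: the function name, the number of warmup steps, and the total step count are hypothetical, and the base learning rate is deliberately left out.

```python
def linear_warmup_decay(step: int, warmup_steps: int, total_steps: int) -> float:
    """Multiplier applied to the base learning rate at a given optimizer step.

    Rises linearly from 0 to 1 over `warmup_steps`, then decays linearly to 0
    at `total_steps`.
    """
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Hypothetical schedule: 1000 optimizer steps, the first 100 used for warmup.
for step in (0, 50, 100, 550, 1000):
    print(step, linear_warmup_decay(step, warmup_steps=100, total_steps=1000))
```

The effective learning rate at each step is the base rate multiplied by this factor, so the earliest updates are small while the newly initialized classification head is still unstable.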

4. Experimental Results

- Precision: Precision, also known as the positive predictive value, is the proportion of predicted positives that are actually positive. See the formula in Equation (1):

- Recall: Recall is the proportion of actual positives that are predicted positive. See the formula in Equation (2):

- F-Measure: The F-measure is the harmonic mean of precision and recall. The standard F-measure (F1) gives equal importance to precision and recall. See the formula in Equation (3):

- Accuracy: Accuracy is the proportion of correctly classified instances (true positives and true negatives) among all instances. See the formula in Equation (4):
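Assuming the conventional confusion-matrix definitions, with TP, FP, TN, and FN denoting true positives, false positives, true negatives, and false negatives, the four metrics can be written as follows. The numbering follows the equation references in the list above.

```latex
\begin{align}
\text{Precision} &= \frac{TP}{TP + FP} \tag{1}\\
\text{Recall} &= \frac{TP}{TP + FN} \tag{2}\\
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}\\
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN} \tag{4}
\end{align}
```

As a consistency check, the text-only Logistic Regression result reports a precision of 82.85% and a recall of 70.44%. Equation (3) then gives F1 = 2(0.8285)(0.7044)/(0.8285 + 0.7044) ≈ 0.7614, which agrees with the reported F1-score of 76.14%.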

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abuhamda, Y.; García Teodoro, P. Detection and analysis of hate-driven violence on social networks. In Proceedings of the IX Jornadas Nacionales de Investigación en Ciberseguridad (JNIC), Seville, Spain, 27–29 May 2024; pp. 125–131. Available online: https://idus.us.es/items/508e612b-3df5-458a-98be-2d5f7c3fb7d2 (accessed on 29 September 2025).

- Prama, T.T.; Amrin, J.F.; Anwar, M.M.; Sarker, I.H. AI enabled user-specific cyberbullying severity detection with explainability. arXiv 2025, arXiv:2503.10650.

- García-Méndez, S.; De Arriba-Pérez, F. Promoting security and trust on social networks: Explainable cyberbullying detection using large language models in a stream-based machine learning framework. arXiv 2025, arXiv:2505.03746.

- Yi, P.; Zubiaga, A.; Long, Y. Detecting harassment and defamation in cyberbullying with emotion-adaptive training. arXiv 2025, arXiv:2501.16925.

- Balakrishnan, V.; Kaity, M. Cyberbullying detection and machine learning: A systematic literature review. Artif. Intell. Rev. 2023, 56, 1375–1416.

- Akter, M.S.; Shahriar, H.; Cuzzocrea, A. A trustable LSTM-autoencoder network for cyberbullying detection on social media using synthetic data. arXiv 2023, arXiv:2308.09722.

- Cheng, L.; Guo, R.; Silva, Y.N.; Hall, D.; Liu, H. Modeling temporal patterns of cyberbullying detection with hierarchical attention networks. ACM/IMS Trans. Data Sci. 2021, 2, 8.

- Li, J.; Wu, Y.; Hesketh, T. Internet use and cyberbullying: Impacts on psychosocial and psychosomatic wellbeing among Chinese adolescents. Comput. Hum. Behav. 2023, 138, 107461.

- Alsubait, T.; Alfageh, D. Comparison of machine learning techniques for cyberbullying detection on YouTube Arabic comments. Int. J. Comput. Sci. Netw. Secur. 2021, 21, 1–5. Available online: http://paper.ijcsns.org/07_book/202101/20210101.pdf (accessed on 29 September 2025).

- Mahat, M. Detecting cyberbullying across multiple social media platforms using deep learning. In Proceedings of the International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE), Greater Noida, India, 4–5 March 2021.

- Dadvar, M.; Kai, E. Cyberbullying detection in social networks using deep learning based models. In Proceedings of the International Conference on Big Data Analytics and Knowledge Discovery, Bratislava, Slovakia, 14–17 September 2020; pp. 245–255.

- Yin, D.; Xue, Z.; Hong, L.; Davison, B.D.; Kontostathis, A.; Edwards, L. Detection of harassment on Web 2.0. In Proceedings of the Content Analysis in the Web 2.0 Workshop, Madrid, Spain, 20–21 April 2009; pp. 1–7.

- Zhang, X.; Tong, J.; Vishwamitra, N.; Whittaker, E.; Mazer, J.P.; Kowalski, R.; Hu, H.; Luo, F.; Macbeth, J.; Dillon, E. Cyberbullying detection with a pronunciation-based convolutional neural network. In Proceedings of the 15th IEEE International Conference on Machine Learning and Applications (ICMLA), Anaheim, CA, USA, 18–20 December 2016; pp. 740–745.

- Idrizi, E.; Hamiti, M. Classification of text, image, and audio messages used for cyberbullying on social media. In Proceedings of the 2023 46th MIPRO ICT and Electronics Convention (MIPRO), Opatija, Croatia, 22–26 May 2023; pp. 1–6.

- Haidar, B.; Maroun, C.; Serhrouchni, A. A multilingual system for cyberbullying detection: Arabic content detection using machine learning. Adv. Sci. Technol. Eng. Syst. J. 2017, 2, 275–284.

- Mehta, H.; Passi, K. Social media hate speech detection using explainable artificial intelligence (XAI). Algorithms 2022, 15, 291. Available online: https://www.mdpi.com/1999-4893/15/8/291 (accessed on 29 September 2025).

- Azumah, S.W.; Elsayed, N.; ElSayed, Z.; Ozer, M.; La Guardia, A. Deep learning approaches for detecting adversarial cyberbullying and hate speech in social networks. arXiv 2024, arXiv:2406.17793.

- Nitya Harshitha, T.; Prabu, M.; Suganya, E.; Sountharrajan, S.; Bavirisetti, D.P.; Gadde, N.; Uppu, L.S. ProTect: A hybrid deep learning model for proactive detection of cyberbullying on social media. Front. Artif. Intell. 2024, 7, 1269366. Available online: https://www.frontiersin.org/articles/10.3389/frai.2024.1269366/full (accessed on 29 September 2025).

- Aboujaoude, E.; Savage, M.W. Cyberbullying: Next-generation research. World Psychiatry 2023, 22, 45–46.

- Shahi, G.K.; Majchrzak, T.A. Hate speech detection using cross-platform social media data in English and German language. arXiv 2024, arXiv:2410.05287.

- Hadiya, M. Cyberbullying detection in Twitter using machine learning algorithms. Int. J. Adv. Eng. Manag. 2022, 4, 1172–1184.

- Manning, C.D.; Raghavan, P.; Schütze, H. Introduction to Information Retrieval; Cambridge University Press: Cambridge, UK, 2008.

- Bird, S.; Klein, E.; Loper, E. Natural Language Processing with Python; O’Reilly Media: Sebastopol, CA, USA, 2009.

- Salton, G.; Buckley, C. Term-weighting approaches in automatic text retrieval. Inf. Process. Manag. 1988, 24, 513–523.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. Available online: https://jmlr.csail.mit.edu/papers/v12/pedregosa11a.html (accessed on 29 September 2025).

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Cox, D.R. The regression analysis of binary sequences. J. R. Stat. Soc. Ser. B Methodol. 1958, 20, 215–242.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A lite BERT for self-supervised learning of language representations. arXiv 2019, arXiv:1909.11942.

- Schuster, M.; Paliwal, K.K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- Elfaik, S.; Nfaoui, E.H. Deep bidirectional LSTM network learning-based sentiment analysis for Arabic text. Procedia Comput. Sci. 2020, 170, 702–707.

| Study | Method/Model | Dataset(s) | Results/Contributions | Limitations |

|---|---|---|---|---|

| [2] Prama et al. | Explainable AI model | Custom dataset | Improved interpretability and severity assessment | Limited adaptability to evolving abuse; context-specific |

| [3] García-Méndez & De Arriba-Pérez | LLM-based monitoring | Social media streams | Real-time recognition; enhances trust | High computational cost; ignores behavioral/temporal cues |

| [4] Yi, Zubiaga & Long | Emotion-adaptive framework | User-generated content | Detects subtle/hidden abuse | Text-driven; lacks interaction/behavioral modeling |

| [5] Balakrishnan & Kaity | Systematic review | Multiple studies | Taxonomy of ML approaches | Reveals fragmented research focus |

| [6] Akter et al. | LSTM-Autoencoder | Synthetic dataset | Strong generalization in low-resource contexts | Synthetic data limits ecological validity |

| [7] Cheng et al. | Hierarchical attention | Cyberbullying corpora | Captures escalation of harmful behavior | Computationally expensive |

| [8] Li et al. | Statistical analysis | Survey (3378 adolescents, China) | Links cyberbullying to mental/physical health | Descriptive, not predictive |

| [9] Alsubait & Alfageh | MNB, CNB, LR | Benchmark corpora | Computational efficiency | Weak performance with informal text |

| [10] Mahat | SVM, CNN, LSTM, NB | Social media datasets | LSTM achieved best results | Text-only; lacks context integration |

| [11] Dadvar & Kai | CNN | Twitter & others | Effective deep learning detection | Needs large datasets; low interpretability |

| [12] Yin et al. | CNN + word vectors | Twitter (cyberbullying tweets) | Stronger semantic representation | Sensitive to class imbalance |

| [13] Zhang et al. | PCNN w/labor-cost adjustment | Two datasets | Better handling of class imbalance | Dataset-specific; elitist behavior |

| [14] Idrizi & Hamiti | GCN + MFCCs | Mixed-media social posts | Accurate multimodal classification | High complexity; heavy feature engineering |

| [15] Haidar et al. | ML + NLP | Multilingual dataset | Covers linguistic diversity | Lacks sequential/temporal modeling |

| [16] Mehta & Passi | Explainable AI (DT + SHAP) | Social media posts | Transparency for moderators/users | Lower predictive accuracy |

| [17] Azumah et al. | CNN + RNN hybrid | Social media conversations | Robust to spelling/syntax variation | Complex; scalability issues |

| [18] N.H.T. et al. (ProTect) | Deep learning + attention | Social media posts | Anticipates incidents before escalation | Limited to repetitive text patterns; lacks behavioral context |

| [19] Aboujaoude & Savage | Literature review | Multiple | Identifies overlooked dimensions (victim–perpetrator roles, bystanders) | Non-technical; conceptual gaps remain |

| [20] Shahi & Majchrzak | Bilingual text analysis | Social media platforms | Exposes cross-lingual challenges | Poor generalization across languages/platforms |

| Attribute | Description |

|---|---|

| Date | Calendar date when the message was sent. |

| Time | Exact time when the message was sent. |

| User ID | Unique anonymized identifier for each user in the dataset. |

| Message Text | Raw textual content of the message exchanged between users. |

| Cyberbullying Label | Binary classification indicating cyberbullying presence: 0; No cyberbullying detected, and 1; Cyberbullying detected. |

| Category | Feature | Description |
|---|---|---|
| Temporal | Avg_IsWeekend | Proportion of messages sent during weekends. |
| Temporal | Avg_IsNightMessage | Proportion of messages sent during night hours. |
| Temporal | Avg_TimeSinceLastMsg | Average time between consecutive messages. |
| Temporal | Avg_SessionDuration | Average duration of user conversation sessions. |
| Behavioral/Relational | ToxicityRate | Proportion of toxic messages in a session. |
| Behavioral/Relational | ThreatLevel | Severity level of threatening language. |
| Behavioral/Relational | UserAffinity | Degree of inferred closeness between users (e.g., interaction frequency). |
| Behavioral/Relational | MsgVolume | Total message count or aggression density per dialogue. |

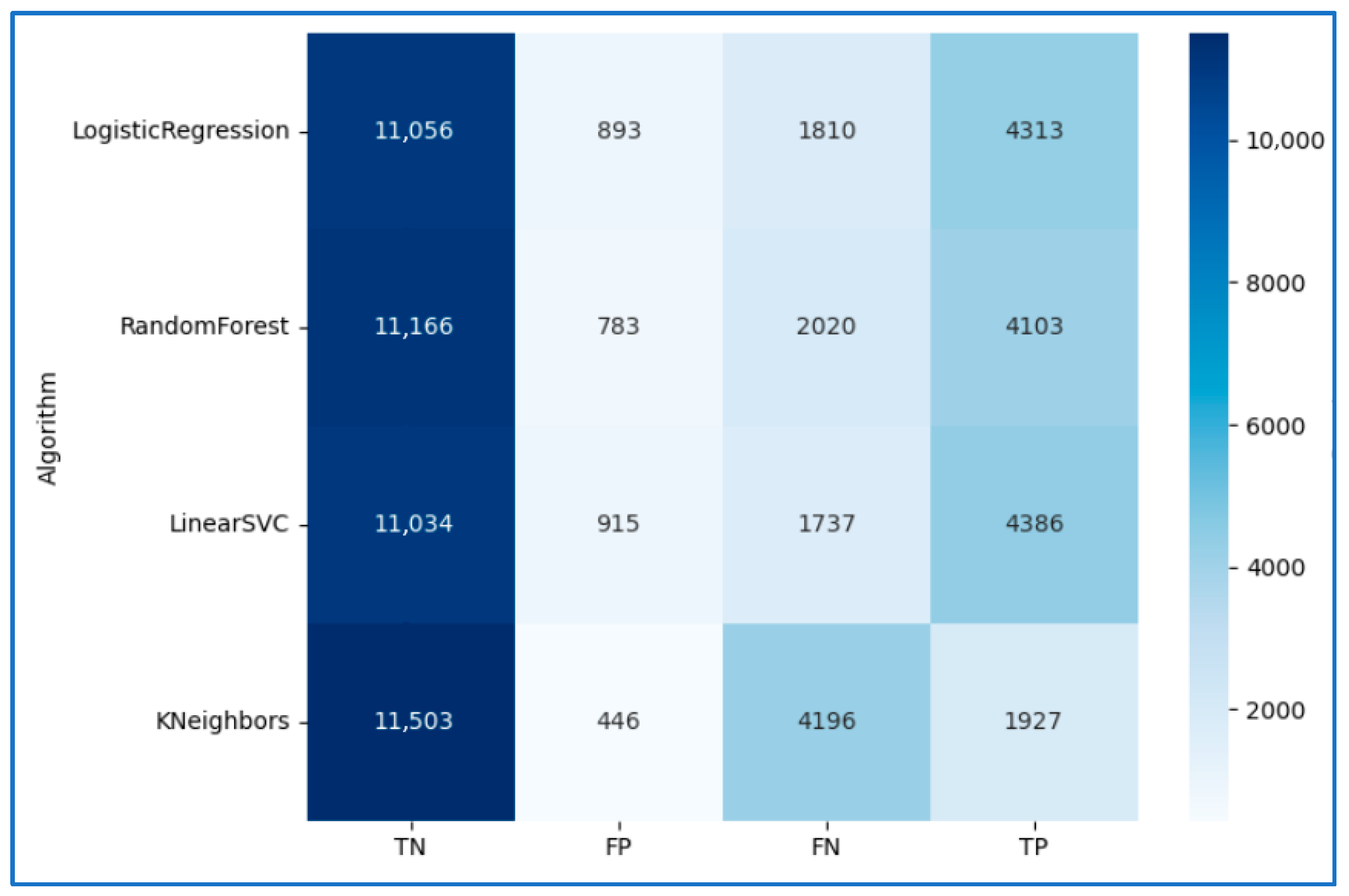

| Algorithm | Accuracy | Precision | Recall | F1Score |

|---|---|---|---|---|

| LogisticRegression | 85.04% | 82.85% | 70.44% | 76.14% |

| RandomForest | 84.49% | 83.97% | 67.01% | 74.54% |

| LinearSVC | 85.33% | 82.74% | 71.63% | 76.79% |

| KNeighbors | 74.31% | 81.21% | 31.47% | 45.36% |

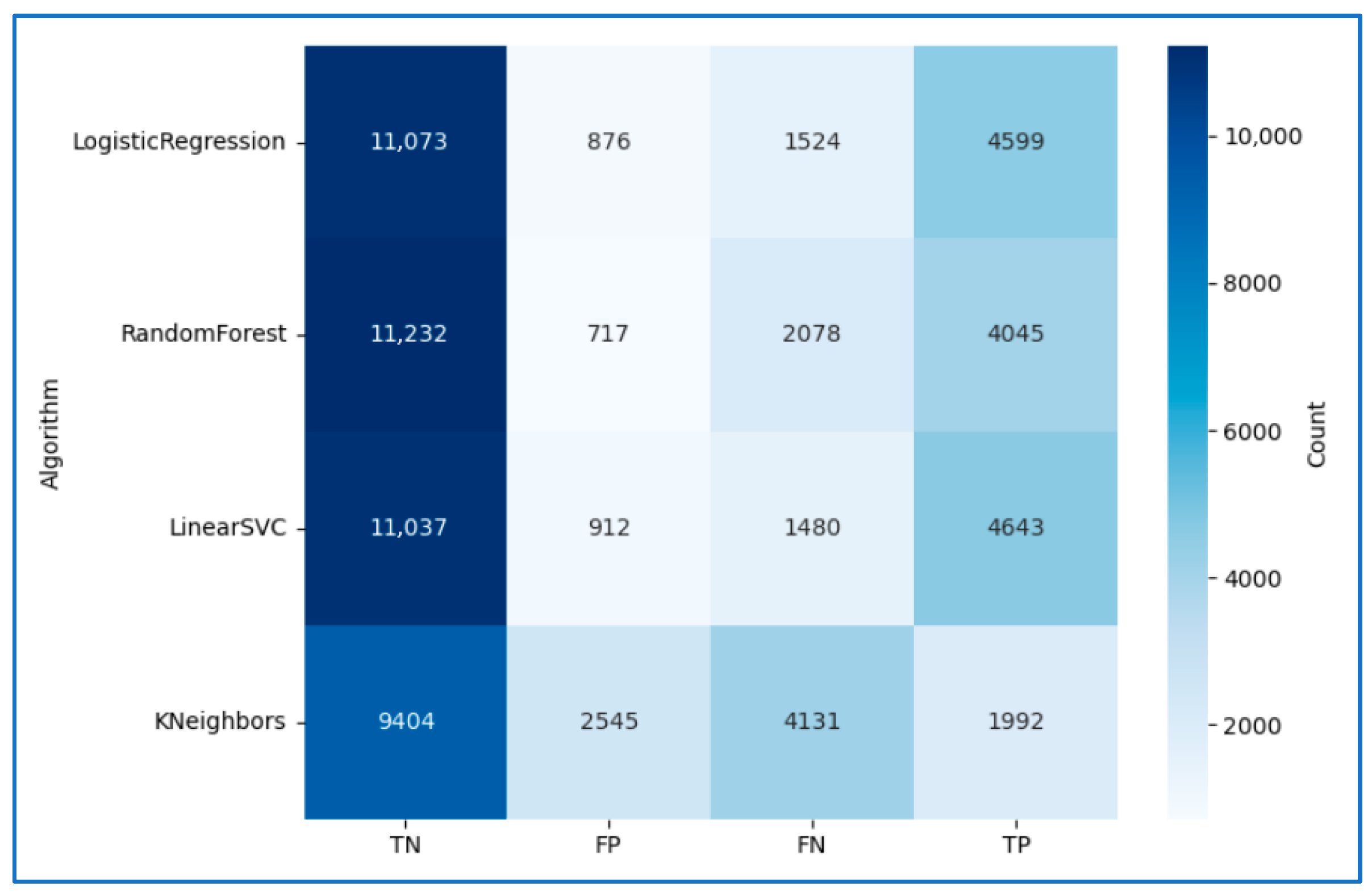

| Algorithm | Accuracy | Precision | Recall | F1Score |

|---|---|---|---|---|

| LogisticRegression | 86.72% | 84.00% | 75.11% | 79.31% |

| RandomForest | 84.53% | 84.94% | 66.06% | 74.32% |

| LinearSVC | 86.76% | 83.58% | 75.83% | 79.52% |

| KNeighbors | 63.06% | 43.91% | 32.53% | 37.37% |

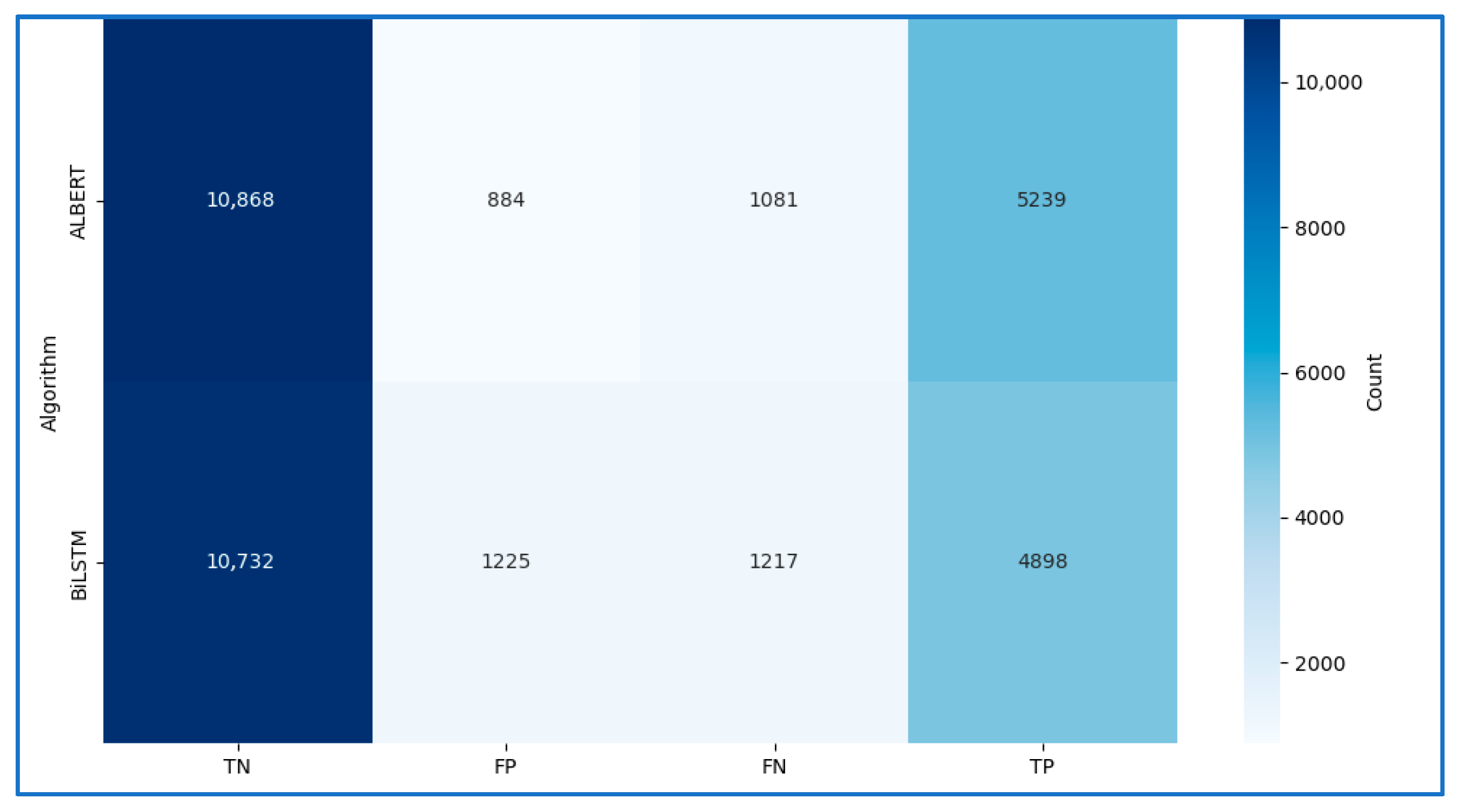

| Algorithm | Accuracy | Precision | Recall | F1Score |

|---|---|---|---|---|

| ALBERT | 89.12% | 82.90% | 85.56% | 84.20% |

| BiLSTM | 86.49% | 80.01% | 79.99% | 80.05% |

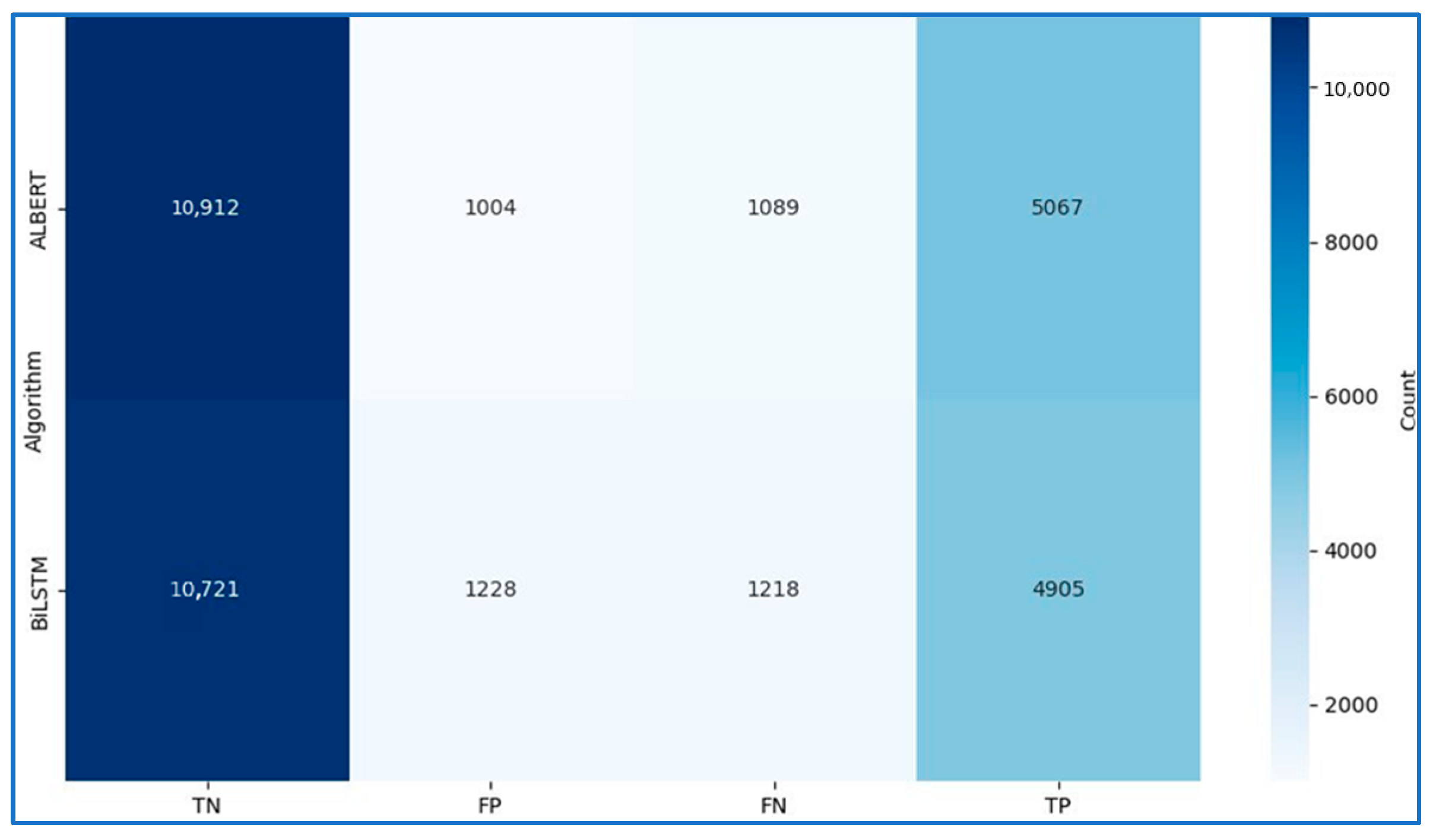

| Algorithm | Accuracy | Precision | Recall | F1Score |

|---|---|---|---|---|

| ALBERT | 88.42% | 83.46% | 82.31% | 82.88% |

| BiLSTM | 86.47% | 79.98% | 80.11% | 80.04% |

| Algorithm | Accuracy Text Only | Accuracy Temporal | Δ Accuracy | Recall Text Only | Recall Temporal | Δ Recall |
|---|---|---|---|---|---|---|
| LogisticRegression | 85.04% | 86.72% | +1.68% | 70.44% | 75.11% | +4.67% |
| RandomForest | 84.49% | 84.53% | +0.04% | 67.01% | 66.06% | −0.95% |
| LinearSVC | 85.33% | 86.76% | +1.43% | 71.63% | 75.83% | +4.20% |
| KNeighbors | 74.31% | 63.06% | −11.25% | 31.47% | 32.53% | +1.06% |
| ALBERT | 89.12% | 88.42% | −0.70% | 85.56% | 82.31% | −3.25% |
| BiLSTM | 86.49% | 86.47% | −0.02% | 79.99% | 80.11% | +0.12% |

| Algorithm | Feature | Accuracy | Precision | Recall | F1-Score | Train Time |

|---|---|---|---|---|---|---|

| LinearSVC | Text Only | 0.8548 ± 0.0023 | 0.8334 ± 0.0058 | 0.7143 ± 0.0062 | 0.7692 ± 0.0036 | 16.99 s |

| RandomForest | Text Only | 0.8487 ± 0.0023 | 0.8473 ± 0.0066 | 0.6764 ± 0.0090 | 0.7528 ± 0.0061 | 2551.75 s |

| LogisticRegression | Text Only | 0.8538 ± 0.0023 | 0.8359 ± 0.0064 | 0.7104 ± 0.0052 | 0.7680 ± 0.0034 | 93.17 s |

| KNeighbors | Text Only | 0.4573 ± 0.0069 | 0.3770 ± 0.0028 | 0.9092 ± 0.0076 | 0.5330 ± 0.0025 | 481.53 s |

| LinearSVC | Text + Temporal | 0.8678 ± 0.0031 | 0.8416 ± 0.0045 | 0.7537 ± 0.0081 | 0.7952 ± 0.0054 | 45.73 s |

| RandomForest | Text + Temporal | 0.8453 ± 0.0030 | 0.8494 ± 0.0045 | 0.6635 ± 0.0105 | 0.7450 ± 0.0072 | 1528.42 s |

| LogisticRegression | Text + Temporal | 0.8679 ± 0.0033 | 0.8435 ± 0.0060 | 0.7518 ± 0.0092 | 0.7950 ± 0.0064 | 1767.32 s |

| KNeighbors | Text + Temporal | 0.6293 ± 0.0037 | 0.4388 ± 0.0071 | 0.3160 ± 0.0060 | 0.3674 ± 0.0055 | 501.17 s |

| Model | Mean ΔF1-Score | Paired t-Test (p-Value) | Wilcoxon Signed-Rank (p-Value) | Significance |

|---|---|---|---|---|

| LinearSVC | +0.0260 | 0.0047 | 0.0061 | Significant (p < 0.01) |

| LogisticRegression | +0.0270 | 0.0039 | 0.0054 | Significant (p < 0.01) |

| RandomForest | −0.0078 | 0.1842 | 0.2115 | Not significant |

| KNeighbors | −0.1656 | 0.0925 | 0.1178 | Not significant |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abuhamda, Y.; García-Teodoro, P. Machine Learning Approaches for Detecting Hate-Driven Violence on Social Media. Appl. Sci. 2025, 15, 11323. https://doi.org/10.3390/app152111323
