1. Introduction

Cognitive development in early childhood is organically promoted through three main factors: physical activity, social interaction, and environmental exploration [1,2]. These developmental processes can be maximized through interaction with the surrounding environment and voluntary participation, rather than one-way delivery of standardized educational content [3,4]. Recently, social robots and digital interfaces have been introduced as supplementary educational tools in early childhood education settings, showing a certain level of effectiveness in stimulating children’s interest and participation [5]. Specifically, social interaction between humans and robots leads to robots being perceived not just as machines but as social beings, and it has a positive impact on promoting emotional engagement and learning participation in young children [6]. Social interaction-based kindergarten robots can be used as effective educational tools because they help children participate in class and can also induce emotional stability and a positive learning attitude during the process of forming relationships with peers and teachers [7]. Furthermore, interaction between young children and robots functions not merely as a source of interest but also as a medium that simultaneously fosters cognitive and emotional development [8]. Through robots, children experience a combination of play and learning, which contributes to strengthening their voluntary exploration and problem-solving abilities [9]. In this context, social robots can be positioned as effective tools in early childhood education by supporting the creation of participation-centered learning environments and fostering positive learning attitudes [10].

However, most social robots currently used in early childhood education are highly dependent on predefined conversation scenarios and limited interaction rules [11]. Conti et al. conducted an experiment with 81 kindergarten children in which a robot told two types of fairy tales and compared the memory effects under static and expressive speaker conditions [12]. Keren et al. played classical music to develop children’s spatial cognitive abilities and guided their learning by having them press buttons attached to a robot according to given questions [13]. De et al. increased students’ language learning rates by having the robot respond with different words and gestures depending on whether the children’s answers to questions were correct or incorrect [14]. While these methods can be effective in encouraging short-term learning engagement, they have limitations in dynamic and unpredictable environments such as real classrooms, because they do not fully account for children’s spontaneous reactions or diverse social situations.

The cognitive development process of young children cannot be explained solely by simple robot–child interactions; environmental contexts such as the arrangement of classroom space, play tools, and collaborative activities with peers have a crucial influence [15,16]. Considering these points, educational robots should go beyond simply providing conversational responses and be able to comprehensively understand and reflect the environment and the child’s activities. To achieve this, robots must have the ability to move autonomously within a specific space. Additionally, they should be able to recognize the semantic information of the objects that make up the environment and, based on this, reconfigure and perform tasks in accordance with the changing classroom situation. Furthermore, this environmental understanding and planning ability needs to be connected to social interaction through conversations with children. This allows robots to play a richer and more adaptive educational role within the learning context.

In recent robotics, spatial perception technology is moving beyond simply mapping geometric structures. Semantic simultaneous localization and mapping (SLAM), which semantically recognizes objects within the environment while simultaneously estimating their position and constructing maps for use in real-world tasks, is becoming central [17,18,19]. These technologies allow robots to go beyond simply obtaining spatial coordinates for obstacle avoidance; they can now distinguish objects such as desks, teaching aids, and toys in a classroom and understand their functional meaning. However, in the research on educational robots, the application of semantic SLAM is still in its initial stages, with most systems being limited to simple localization or basic object detection. In this study, we propose a method for robots to autonomously navigate by perceiving the classroom environment, focusing on objects related to interaction with children by leveraging existing semantic SLAM technology tailored to an educational context.

Robots must not only comprehend the meaning of their environment but also develop and execute contextually appropriate task plans based on their perception. Task planning methods are broadly divided into learning-based and logic-based approaches. The learning-based approach primarily leverages reinforcement learning or deep neural networks to learn optimal action policies through experience [20,21,22]. This method can exhibit robust performance in environments with high uncertainty and variability, but it requires large amounts of training data and long training times. By contrast, the logic-based approach leverages formal languages such as the Planning Domain Definition Language (PDDL) or the Stanford Research Institute Problem Solver (STRIPS) to define target states and derive reasonable plans under given constraints [23,24]. This method is highly interpretable because it relies on explicit rules and reasoning processes, and it can ensure the reliability and consistency of plans in environments that are structured but have many variables, such as classrooms. However, the conventional logic-based approach has limitations in adapting immediately to environmental changes. Therefore, in this study we propose an automated task planning system using PDDL that aims to overcome these limitations while leveraging the advantages of the logic-based approach. This allows a robot to autonomously move within a changing classroom environment and flexibly plan and execute interactive behaviors with children.

Visual question answering (VQA), which has advanced through the fusion of computer vision and natural language processing technologies, is gaining attention as a technology that combines visual input and linguistic queries to generate meaningful answers [25,26,27]. Early VQA primarily relied on learning-based approaches based on large datasets, which involved extracting image features, combining them with the question, and then statistically deriving the most suitable answer [28,29]. However, this approach focused on simple pattern recognition and showed limitations in complex logical reasoning or contextual understanding. To address this problem, neuro-symbolic approaches have been actively researched recently. Neuro-symbolic VQA combines the representation learning capabilities of deep learning with the interpretability of symbolic reasoning, offering the advantage of logically interpreting queries about complex visual scenes and generating more explainable answers [30,31,32]. In this study, we propose an educational robot interaction model that incorporates this development trend into the educational field, using VQA based on the neuro-symbolic approach. The proposed approach structurally represents the semantic relationships between objects and attributes within the classroom and reflects them in the question-answering process, enabling the generation of contextual and explainable answers to children’s questions, going beyond simple visual factual responses.

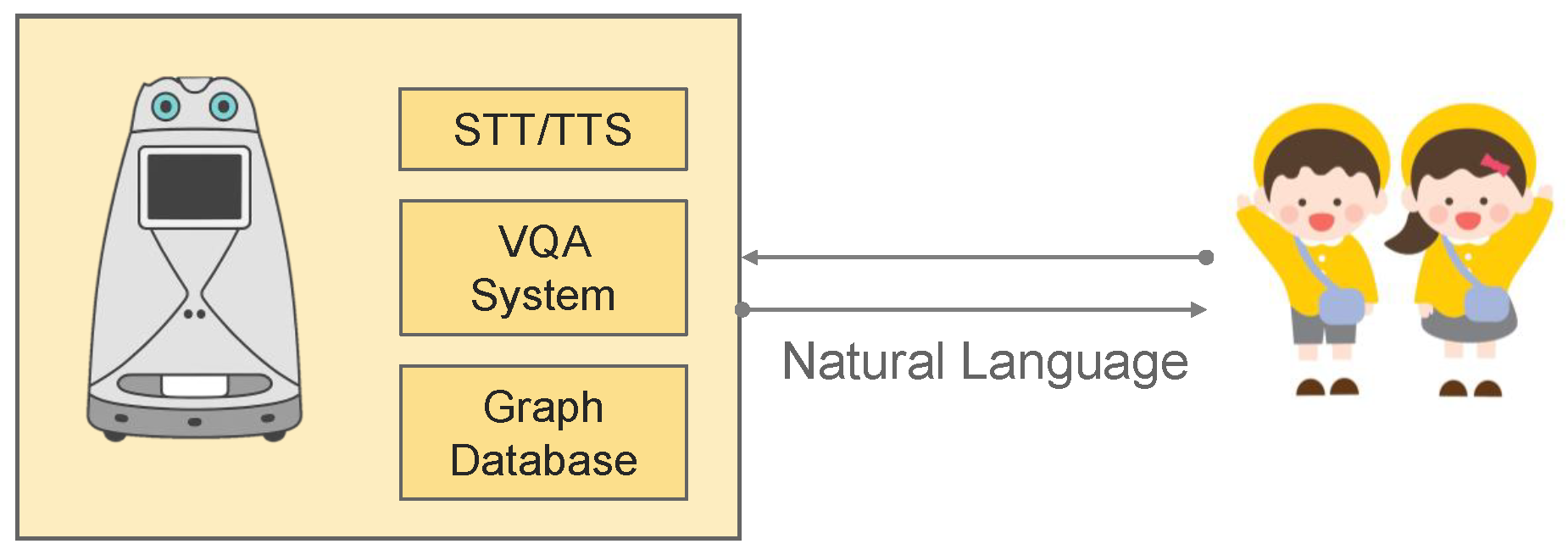

Hence, we propose a new framework that integrates an environment-aware dialog and planning system to enable social robots to effectively perform an educational role in early childhood education environments. The proposed robot recognizes objects and positional information within the classroom through semantic SLAM and autonomously plans and executes tasks in response to changing situations using PDDL-based automated task planning. Additionally, this robot integrates a neuro-symbolic-based VQA module to provide explainable answers to children’s questions, going beyond simple factual responses.

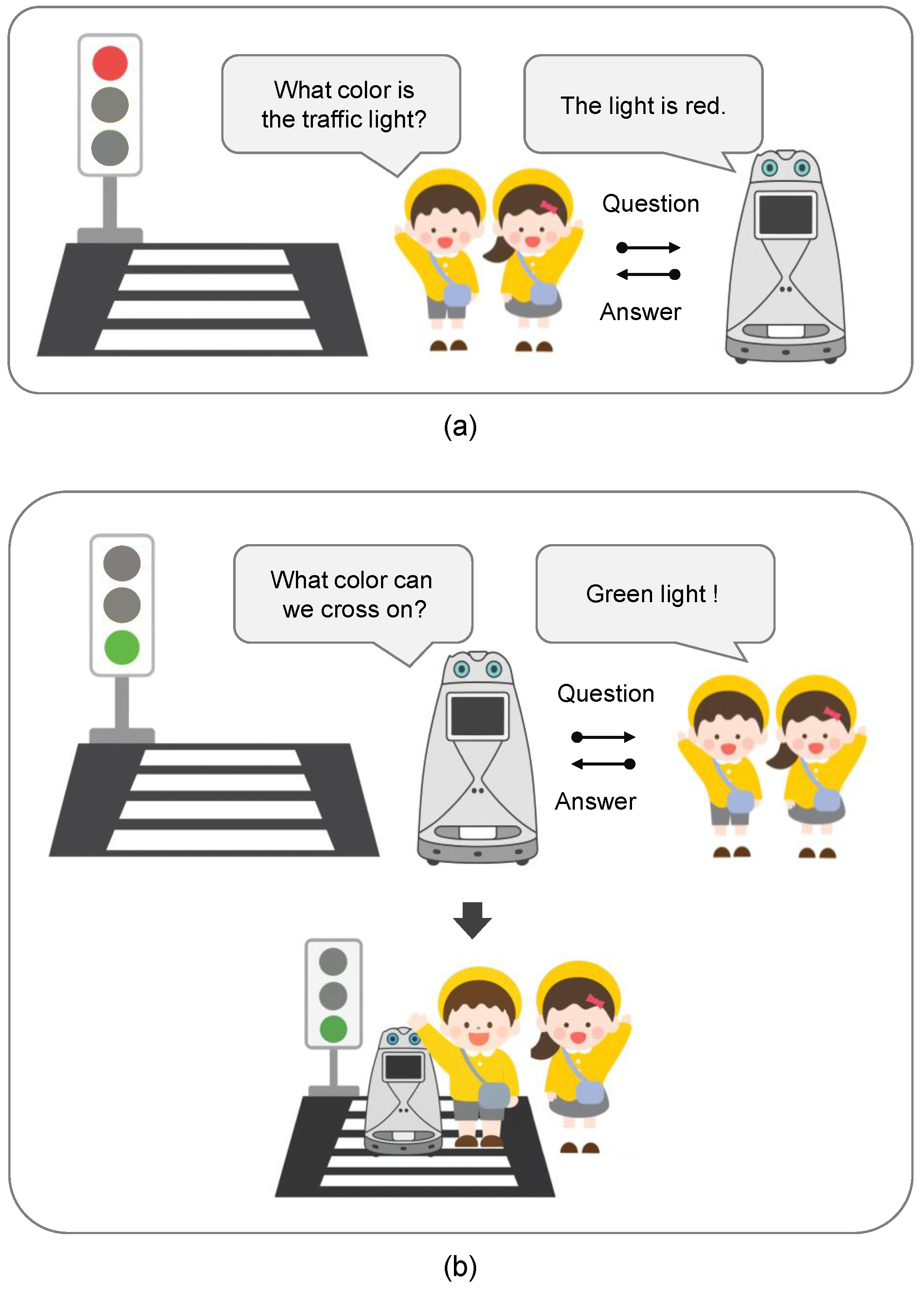

Figure 1 illustrates an example of a practical application scenario for the framework proposed in this study. The robot recognizes the color of the traffic lights installed at the intersection and answers children’s questions with linguistic responses appropriate to the situation, such as “The light is red” or “Green light,” thereby modeling safe behavior. The robot also asks the child questions such as “What color is the traffic light?” or “What color can we cross on?”, guiding children to recognize and answer questions about traffic light colors and rules of behavior on their own. This study validated the proposed framework through a student-centered scenario focused on dialogue with children. Through this interaction process, the robot functions as both a respondent answering children’s questions and a questioner posing new ones, expanding the conversation with children from a one-way transmission to a two-way question-and-answer system. Furthermore, this process demonstrates an example in which semantic SLAM, task planning, and VQA modules work together to achieve educational interaction. This bidirectional structure allows children to participate not as passive recipients of information but as active thinkers and explorers, thereby enabling child-centered learning.

First, we validated the performance of each component of the proposed framework individually. The semantic SLAM module was confirmed to reliably detect and classify various objects in a classroom environment and to estimate their positions. The PDDL-based task planning module demonstrated autonomous execution capability by deriving reasonable and consistent task plans in response to dynamic changes in a real navigation environment. Additionally, the accuracy and explainability of the VQA module’s responses were evaluated using a question dataset constructed to match the developmental level of kindergarten children. Furthermore, the entire proposed framework, including autonomous navigation based on semantic SLAM and object recognition capabilities, was shown to operate as an integrated whole through experiments conducted with children and university students in a real kindergarten classroom environment. These results indicate that robots can reflect the physical environment within a classroom to promote children’s exploration and learning engagement. This study is significant in that it lays a foundation for the development of early childhood education robots.

4. Experiment Results

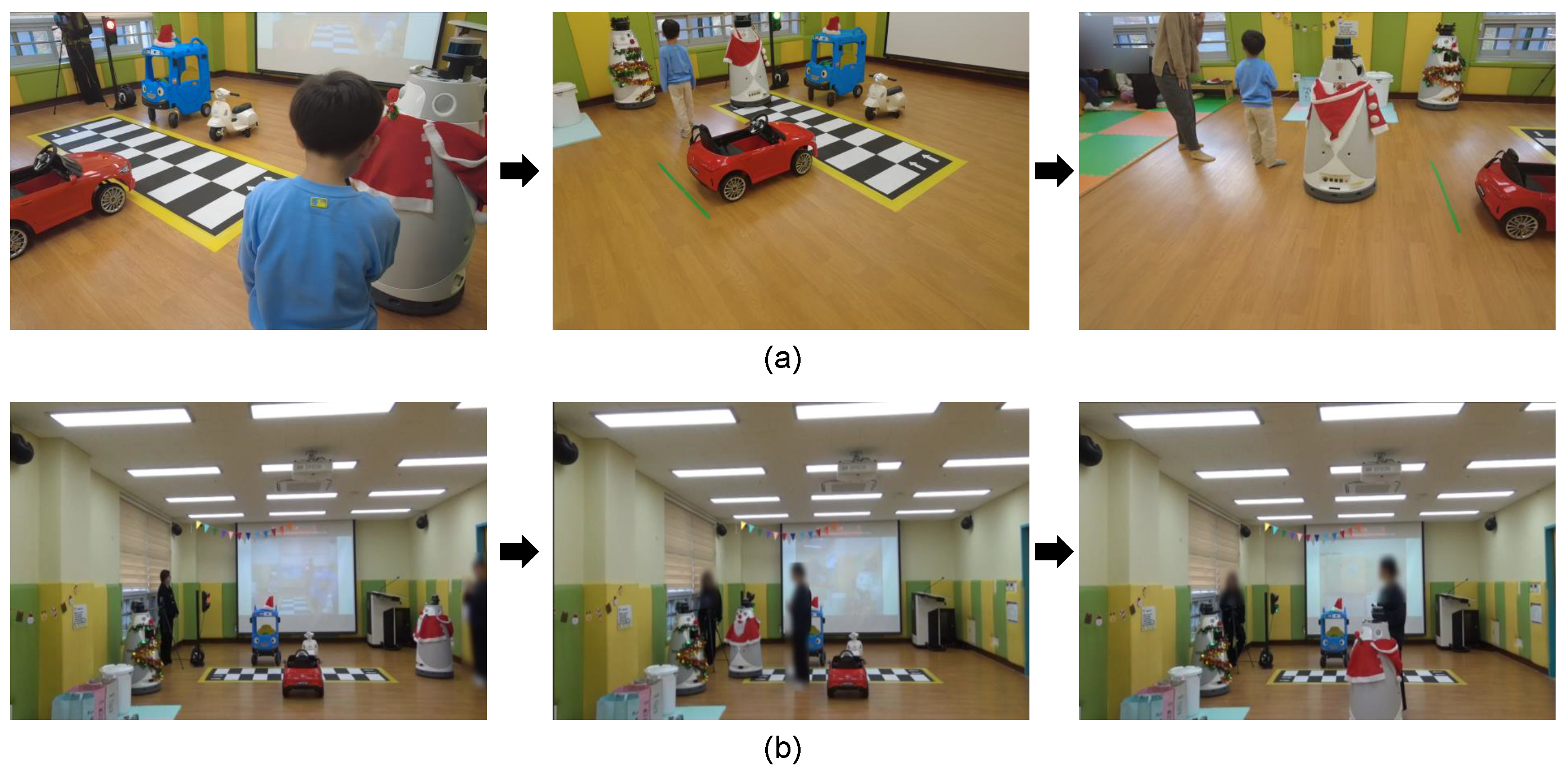

To verify the performance of the framework proposed in this study, experiments were conducted in a real kindergarten environment. The target participants were children aged 6 to 7 years old based on international age. As shown in Figure 6, the classroom was designed as a space with various learning tools and interactive elements, such as crosswalks, traffic lights, and vehicle models. The robot hardware was based on the kindergarten robot developed by REDONE Technologies, and experiments were conducted by integrating the architecture designed in this study into the robot. The experiments were conducted with two scenarios: one involving traffic safety education and the other involving a recycling activity. A total of 12 children and 10 university students participated in the experiments. The child participants were children enrolled at the Suncheon National University kindergarten, who had engaged in English play-based learning sessions twice a week for 20 min per session prior to the experiment. The university participants consisted of students from various majors, aged between 18 and 24 years. As this study represents an initial stage of technical validation, no control group was included. Instead, the focus was placed on verifying whether the proposed framework could operate stably in a real kindergarten environment and enable real-time interaction with children.

The experiment was conducted by verifying the performance of each major module of the proposed framework. First, the semantic SLAM module was evaluated to determine whether it could accurately acquire semantic information such as position, type, size, and color of objects. Next, in the automated planning system, we verified whether the robot could adaptively replan its path and successfully complete the given task in a changing environment. Finally, in the VQA module, we confirmed whether the system could appropriately respond to questions through natural language-based interaction with children. Through this experimental design, we aimed to demonstrate that the proposed framework can reliably support child-centered learning and social interaction in a real kindergarten environment.

4.1. Semantic SLAM

To verify the performance of the semantic SLAM module, semantic information about various objects was extracted in a real kindergarten environment. The experiment was conducted in the classroom environment shown in Figure 6, where the robot used camera and LiDAR sensors to perceive the surrounding scene and perform object detection and attribute extraction. As a result, the robot was able to detect the presence of objects and also acquire semantic information about them, such as their names, colors, sizes, materials, uses, and positional coordinates. For example, the semantic information extracted for the traffic light and car objects that make up the environment in Figure 6 is summarized in Table 1. For traffic light objects, attributes such as color, light, and purpose were recorded together, while for car objects, detailed recognition results were shown, including information on sub-components such as wheels. In addition, the ROS2 Humble nav2 1.0.0 package was employed to perform localization and collision avoidance, enabling the robot to achieve stable navigation and perception even in dynamic classroom environments [50]. These results demonstrate that the proposed semantic SLAM module can generate semantically rich scene representations in real classroom environments, providing high-level environmental information that can be leveraged by the subsequent automated planning system and VQA module.
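As a rough illustration of how a detection might be combined with a range measurement to obtain the positional coordinates mentioned above, the following Python sketch places a detected object in the map frame from the robot pose, a bearing, and a range. The function names, the planar geometry, and the attribute values are assumptions made for this example; the text does not describe the actual fusion procedure.

```python
import math


def object_map_position(robot_pose, bearing_rad, range_m):
    """Place a detected object in the map frame (planar geometry assumed).

    robot_pose: (x, y, yaw) of the robot in the map frame (metres, radians).
    bearing_rad: direction of the detection relative to the robot's heading.
    range_m: distance to the object, e.g. a LiDAR range along that bearing.
    """
    x, y, yaw = robot_pose
    angle = yaw + bearing_rad
    return (x + range_m * math.cos(angle), y + range_m * math.sin(angle))


def annotate(label, attributes, robot_pose, bearing_rad, range_m):
    """Bundle a detection's label and attributes with its estimated map position."""
    px, py = object_map_position(robot_pose, bearing_rad, range_m)
    return {"name": label, **attributes, "position": (round(px, 2), round(py, 2))}


# Hypothetical detection: the robot at (1.0, 2.0) faces along +y and sees an object 1.5 m ahead.
entry = annotate("traffic light", {"color": "black", "material": "metal"},
                 robot_pose=(1.0, 2.0, math.pi / 2), bearing_rad=0.0, range_m=1.5)
print(entry)
```

A record of this shape (name, attributes, position) is one plausible form for the per-object semantic information summarized in Table 1.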

4.2. Automated Planning System

To verify the performance of the proposed automated planning system, we conducted experiments where plans were automatically generated based on environmental information obtained from semantic SLAM and then executed by a real robot. The purpose of the experiment was to evaluate whether the generated plan presents a reasonable sequence of actions and whether the robot can reliably perform the task based on that plan. First, the plans defined in this system are expressed in PDDL format, and various actions are described in the domain. Table 2 shows two example actions (robot_traffic_question, recycle_location_check), each composed of parameters, preconditions, and effects. Based on these action definitions, given a problem, the planner generates a sequence of actions to reach the target state from the initial state. Table 3 shows an example of a generated plan, listing the sequence of actions the robot must perform, such as moving, asking a question, responding, and entering a crosswalk.
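To make the relationship between action definitions and a generated plan concrete, the following Python sketch implements a minimal STRIPS-style forward search over ground actions described by preconditions and effects. It is not the planner used in this study. Apart from robot_traffic_question, the action names, the predicates, and the breadth-first search strategy are assumptions introduced for illustration.

```python
from collections import deque
from typing import NamedTuple


class Action(NamedTuple):
    name: str
    preconditions: frozenset
    add_effects: frozenset
    del_effects: frozenset


def plan(initial, goal, actions):
    """Breadth-first forward search; returns a shortest list of action names or None."""
    queue = deque([(initial, [])])
    visited = {initial}
    while queue:
        state, steps = queue.popleft()
        if goal <= state:  # every goal fact holds in this state
            return steps
        for act in actions:
            if act.preconditions <= state:
                nxt = (state - act.del_effects) | act.add_effects
                if nxt not in visited:
                    visited.add(nxt)
                    queue.append((nxt, steps + [act.name]))
    return None


# Hypothetical ground actions loosely modelled on the traffic-safety scenario.
ACTIONS = [
    Action("move_to_crosswalk", frozenset({"at_start"}),
           frozenset({"at_crosswalk"}), frozenset({"at_start"})),
    Action("robot_traffic_question", frozenset({"at_crosswalk"}),
           frozenset({"question_asked"}), frozenset()),
    Action("listen_to_answer", frozenset({"question_asked"}),
           frozenset({"child_answered"}), frozenset()),
    Action("enter_crosswalk",
           frozenset({"at_crosswalk", "child_answered", "light_green"}),
           frozenset({"crossed"}), frozenset({"at_crosswalk"})),
]

steps = plan(frozenset({"at_start", "light_green"}), frozenset({"crossed"}), ACTIONS)
print(steps)
```

In this toy domain the search returns the move, question, answer, and crossing actions in that order, and it returns None when the light is never green, since the preconditions of the crossing action can then not be satisfied.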

Figure 7 illustrates the process in which the robot interacts with children and moves according to a predefined sequence of actions in a real kindergarten classroom environment. The classroom was equipped with various learning tools such as crosswalks, vehicle models, and traffic lights, and the children participated in traffic rule and safety education activities together with the robot. The robot sequentially executed planned actions such as moving, asking questions, and responding, thereby interacting naturally with the children. Through this process, it was confirmed that the proposed framework can operate stably even in an actual educational environment. The specific sequence of actions performed during this interaction is presented in Table 3. As shown in the table, the robot executed a series of actions, including moving to specific locations, asking questions, checking traffic conditions, conducting question–answer exchanges with children, and crossing the crosswalk. This action sequence was generated based on a predefined PDDL domain, demonstrating that the robot can reliably execute the planned procedures in a real-world environment.

The results of applying the generated plans to a real robot platform and performing the tasks are summarized in Table 4. As can be seen from the results, the success rate was 100% in simple environments, and it decreased somewhat as the number of objects in the environment increased and the situation became more complex. However, in most cases, the success rate remained above 80%, demonstrating that the proposed automated planning module operates effectively even in dynamic situations such as those taking place in a real kindergarten environment. In summary, we experimentally validated that the proposed automated planning system can establish reasonable plans based on semantic scene information and support robots in successfully executing those plans.

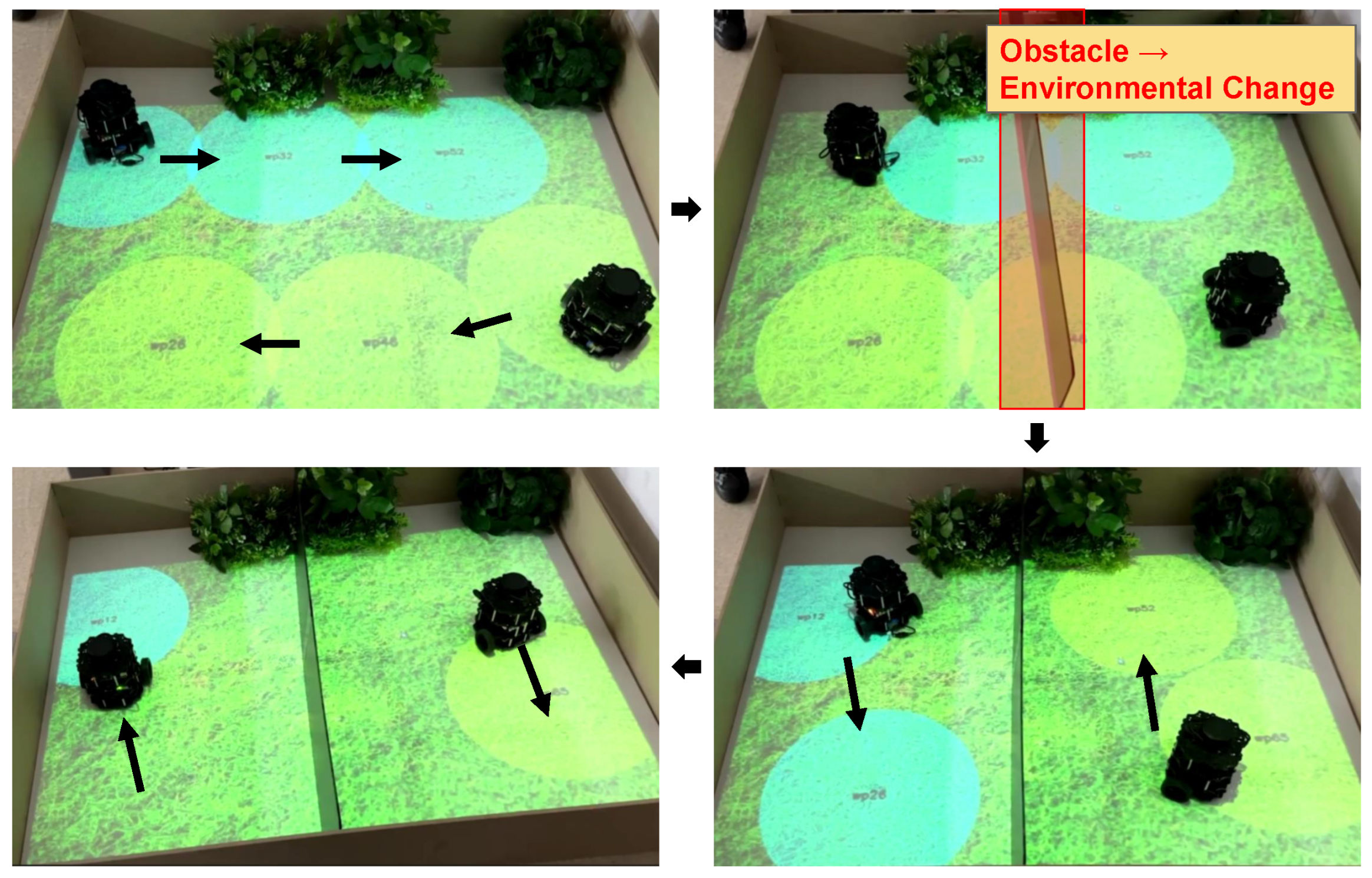

In addition, Figure 8 illustrates the process of dynamic replanning in a changing environment. The objective of each robot was to sequentially visit all designated waypoints (wp), but when unexpected obstacles appeared, the robot was unable to follow its original path. In such cases, the system automatically triggered a replanning process, allowing the robot to continue its mission and complete the waypoint visitation task through an alternative path. Importantly, this experiment was not limited to verifying plan execution in a static setting; rather, it focused on evaluating how quickly and reliably the robot could respond when the environment changed in real time. Through this, it was confirmed that the proposed planning system operates flexibly even in dynamic and unpredictable environments such as a real kindergarten, enabling the robot to effectively adapt to environmental changes.
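The replanning behavior described above can be sketched on a small occupancy grid. In the following Python example, the robot follows a shortest path to each waypoint and recomputes the path when the next cell becomes blocked. The grid representation, the breadth-first path search, and the obstacle_events schedule are assumptions for illustration only; they stand in for, and do not reproduce, the PDDL-based replanning mechanism and the navigation stack of the actual system.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """BFS on a 4-connected grid; cells equal to 1 are blocked. Returns a cell list or None."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


def visit_waypoints(grid, start, waypoints, obstacle_events):
    """Visit waypoints in order, replanning when the next cell is blocked.

    obstacle_events maps a step count to a cell that becomes blocked at that step
    (a hypothetical stand-in for obstacles detected at run time). The grid is modified in place.
    """
    pos, step, replans, trace = start, 0, 0, [start]
    for wp in waypoints:
        path = shortest_path(grid, pos, wp)
        while pos != wp:
            if path is None:  # no route left to this waypoint
                return trace, replans, False
            nxt = path[path.index(pos) + 1]
            if step in obstacle_events:
                r, c = obstacle_events.pop(step)
                grid[r][c] = 1
            if grid[nxt[0]][nxt[1]] == 1:  # original path is blocked: replan from here
                path = shortest_path(grid, pos, wp)
                replans += 1
                continue
            pos = nxt
            step += 1
            trace.append(pos)
    return trace, replans, True


grid = [[0, 0, 0],
        [0, 1, 0],
        [0, 0, 0]]
trace, replans, ok = visit_waypoints(grid, (0, 0), [(2, 2), (0, 2)], {1: (2, 0)})
print(trace, replans, ok)
```

In this run an obstacle appears at cell (2, 0) after the first move, so the robot replans once, backtracks, and reaches both waypoints along the other side of the grid.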

4.3. Visual Question Answering

To verify the performance of the VQA module proposed in this study, a question-and-answer experiment was conducted with kindergarten children. The module is intended to be usable in real English education. The sentences used in the experiment are presented in Table 5; they range from simple word-level questions to sentence-level communication questions. These questions were designed to help children learn English vocabulary and understand basic sentences. We designed stepwise questions considering young children’s stages of English language development and enabled a robot to interact with children based on visual information. Specifically, in Stage 1, the robot guided basic cognitive and linguistic responses by recognizing the location of objects and naming them. In Stage 2, it supported vocabulary expansion through questions about basic attributes such as color. In Stage 3, questions about children’s preferences and experiences were included to encourage emotional expression and the development of sentence structure. In Stages 4 and 5, questions involving grammatical elements such as quantity, comparison, and past tense were presented to promote deeper linguistic thinking.

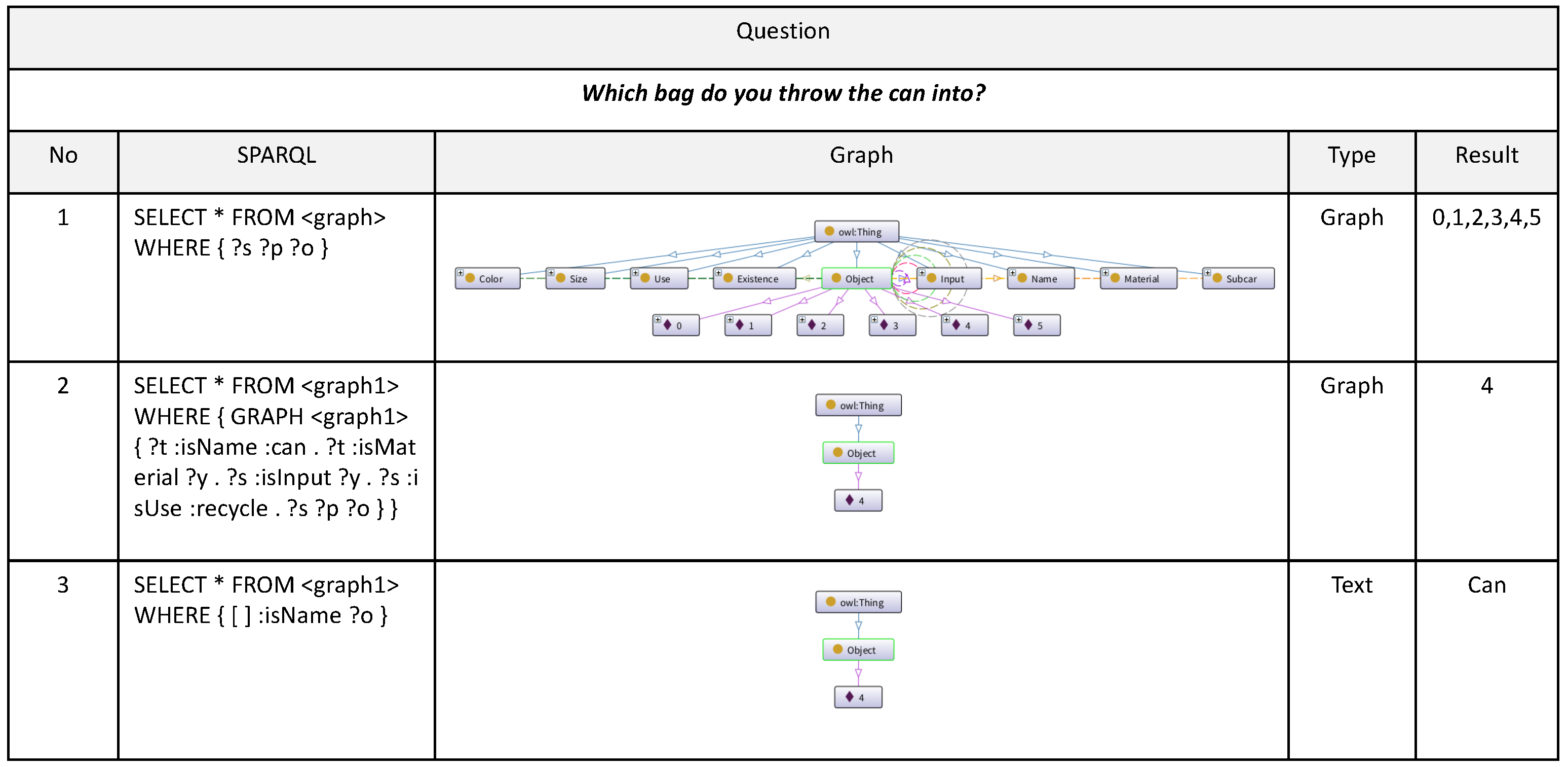

The main questions used in this experiment focused on object colors, such as “What color is the car?”; object locations, such as “What is in front of the bus?”; and object attributes or functions, such as “When can we cross the traffic light?” and “Which bag do you throw the can into?” These questions were designed to help children not only learn English vocabulary but also recognize and reason about the properties and relationships of objects, thereby promoting conceptual learning. In addition, accurately answering these questions requires the robot to precisely perceive and utilize visual environmental information, including the color, shape, and spatial relationships of objects in the classroom. Through this process, the VQA module serves as a key component that goes beyond simple language processing by integrating visual and linguistic information to enable meaningful educational interactions with children.

The VQA module converts the input natural language sentence into a SPARQL query and then infers the answer on the scene graph. Table 6 shows the results of example sentences converted into SPARQL syntax, allowing us to see how questions such as “What color is the motorcycle?” or “How many cars are there?” are transformed into SPARQL queries and processed on a graph database. For processing children’s speech, the Google Speech-to-Text and Text-to-Speech APIs were employed, enabling reliable conversion of spoken input into text and subsequent synthesis back into speech [51].
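The following Python sketch suggests one way in which a question could be mapped to a SPARQL query string. The text does not specify how the conversion is performed, so the rule-based templates used here are a simple stand-in, and the ex: prefix, the predicate names (ex:name, ex:color, ex:inFrontOf), and the regular expressions are all hypothetical.

```python
import re

# Hypothetical vocabulary: the ex: prefix and predicate names are illustrative only.
PREFIX = "PREFIX ex: <http://example.org/classroom#>\n"

TEMPLATES = [
    (re.compile(r"what color is the (?P<obj>[\w ]+)\?", re.I),
     'SELECT ?color WHERE {{ ?o ex:name "{obj}" . ?o ex:color ?color . }}'),
    # Naive plural handling: strips a single trailing "s" from the object noun.
    (re.compile(r"how many (?P<obj>[\w ]+?)s are there\?", re.I),
     'SELECT (COUNT(?o) AS ?n) WHERE {{ ?o ex:name "{obj}" . }}'),
    (re.compile(r"what (?:is|do you see) in front of the (?P<obj>[\w ]+)\?", re.I),
     'SELECT ?name WHERE {{ ?ref ex:name "{obj}" . '
     '?o ex:inFrontOf ?ref . ?o ex:name ?name . }}'),
]


def question_to_sparql(question):
    """Return a SPARQL string for the first matching template, or None if nothing matches."""
    for pattern, template in TEMPLATES:
        match = pattern.fullmatch(question.strip())
        if match:
            return PREFIX + template.format(obj=match.group("obj").lower())
    return None


print(question_to_sparql("What color is the motorcycle?"))
print(question_to_sparql("How many cars are there?"))
```

Questions that match no template return None, which a dialogue layer could treat as a cue to ask the child to rephrase.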

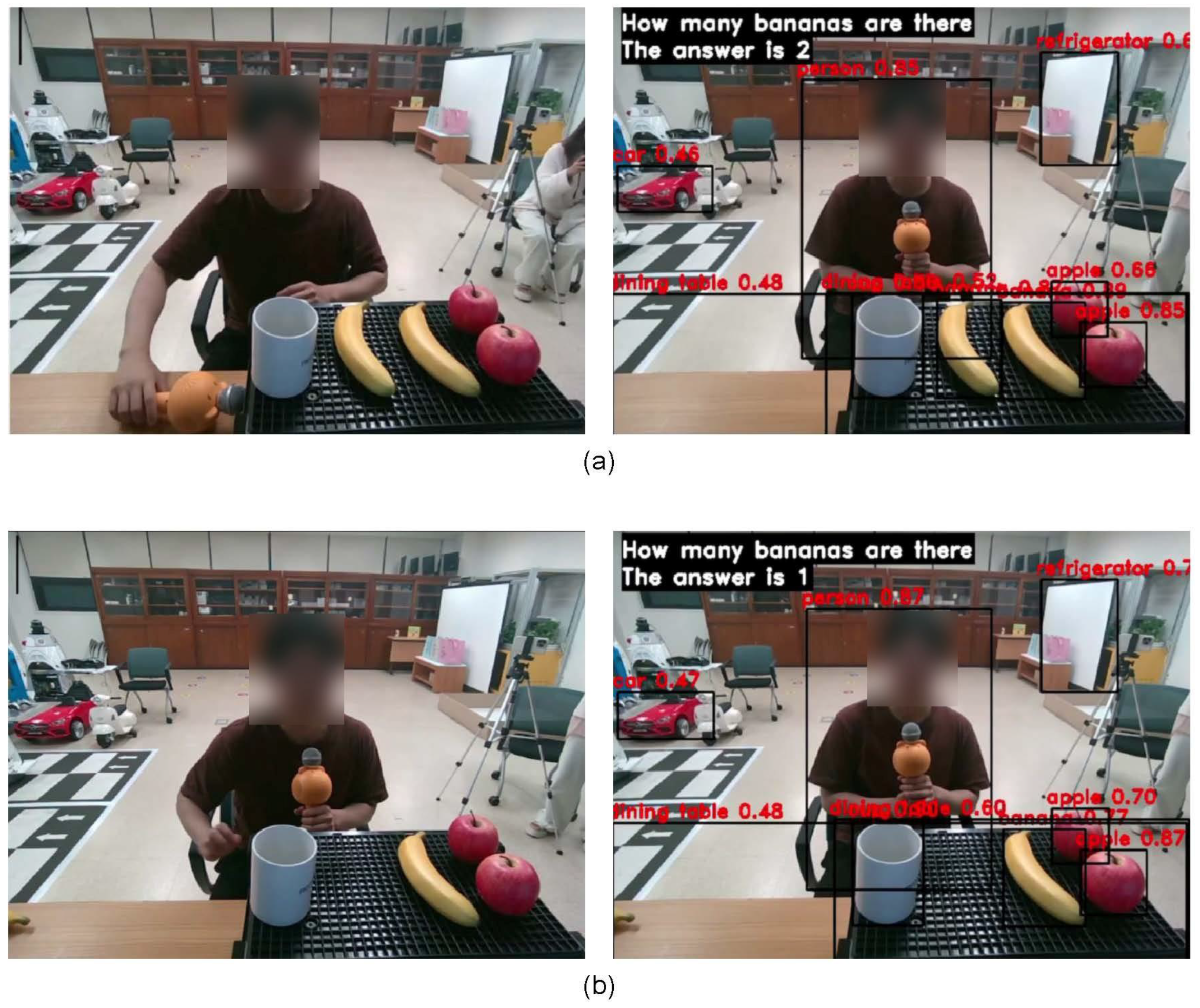

Figure 9 illustrates how the VQA module performs question answering in dynamically changing environments. Even when the same question is asked, the system reinterprets the scene according to the changed number of objects and provides a new answer. This demonstrates that the proposed framework can operate flexibly not only in fixed settings but also in continuously changing real classroom environments.

Additionally, the object information obtained from semantic SLAM is organized as shown in Figure 10, including attributes such as name, color, size, purpose, and material of each object, as well as their positional relationships. This information is converted into a graph structure and used in the scene perception stage of the VQA module.
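A minimal sketch of such a conversion, assuming that each object is stored as a dictionary of attributes, is given below in Python. The records are flattened into subject-predicate-object triples and queried by pattern matching, which mimics what a SPARQL engine would do on a graph database. The object identifiers, the attribute values, and the in_front_of relation name are hypothetical.

```python
def build_triples(objects):
    """Flatten attribute records into (subject, predicate, object) triples."""
    triples = []
    for obj_id, attrs in objects.items():
        for key, value in attrs.items():
            if key == "in_front_of":  # relation to other objects: one triple per target
                triples += [(obj_id, key, target) for target in value]
            else:
                triples.append((obj_id, key, value))
    return triples


def match(triples, s=None, p=None, o=None):
    """Return the triples that fit a pattern; None acts as a wildcard."""
    return [t for t in triples
            if (s is None or t[0] == s)
            and (p is None or t[1] == p)
            and (o is None or t[2] == o)]


# Illustrative records; identifiers and values are hypothetical.
OBJECTS = {
    "obj1": {"name": "traffic light", "color": "black", "material": "metal"},
    "obj2": {"name": "bus", "color": "yellow", "material": "plastic"},
    "obj3": {"name": "car", "color": "red", "material": "plastic",
             "in_front_of": ["obj2"]},
    "obj4": {"name": "motorcycle", "color": "blue", "material": "plastic",
             "in_front_of": ["obj2"]},
}
triples = build_triples(OBJECTS)

# "What is the name of the black metal object?": intersect two filters, then read the name.
black = {s for s, _, _ in match(triples, p="color", o="black")}
metal = {s for s, _, _ in match(triples, p="material", o="metal")}
names = [o for subj in sorted(black & metal)
         for _, _, o in match(triples, s=subj, p="name")]

# "What do you see in front of the bus?": find the bus, then objects related to it.
bus = match(triples, p="name", o="bus")[0][0]
in_front = sorted(o for s, _, _ in match(triples, p="in_front_of", o=bus)
                  for _, _, o in match(triples, s=s, p="name"))
print(names, in_front)
```

Because each answer is obtained by a chain of explicit pattern matches, the intermediate results can be inspected step by step, which is the property that makes a symbolic query stage explainable.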

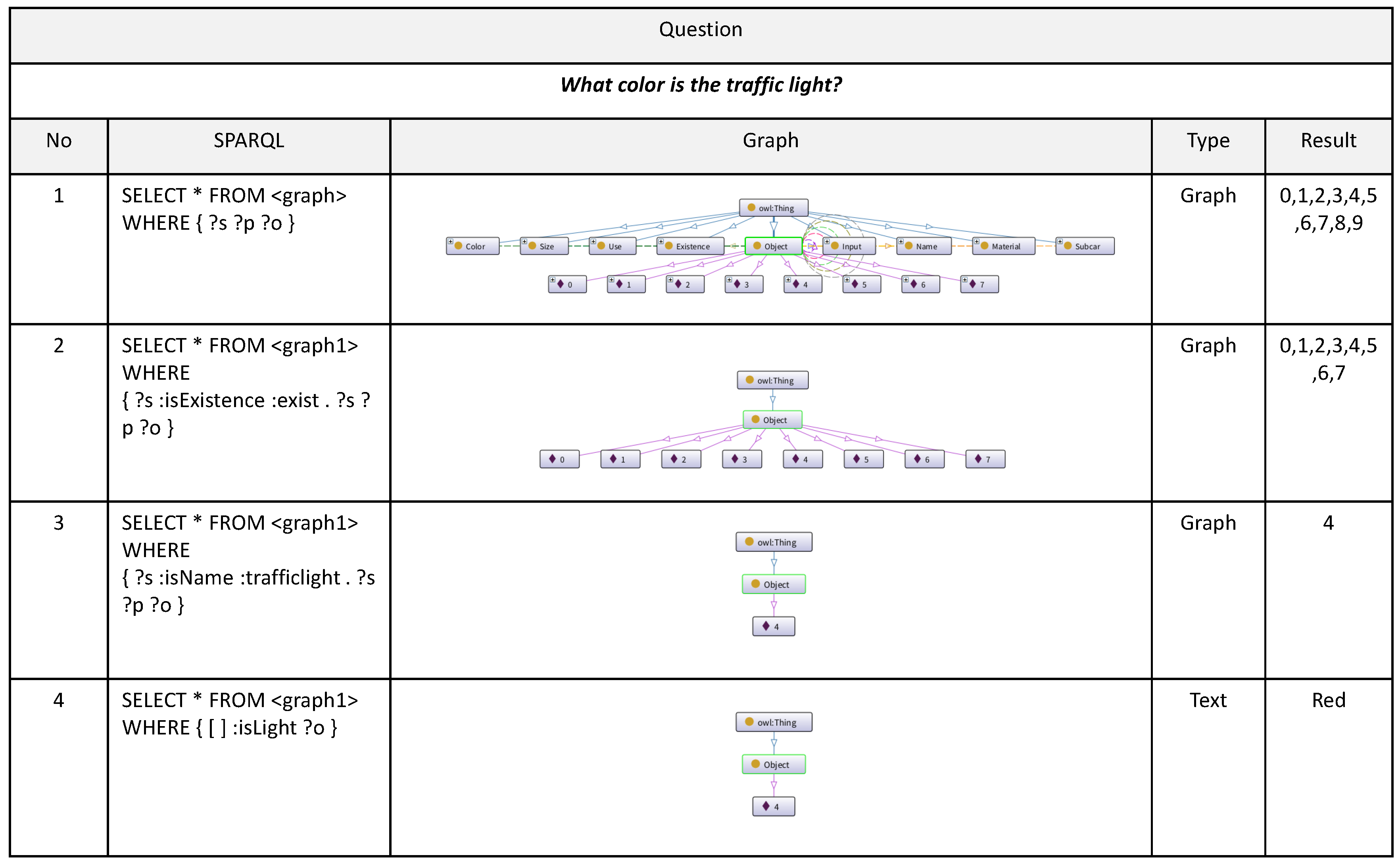

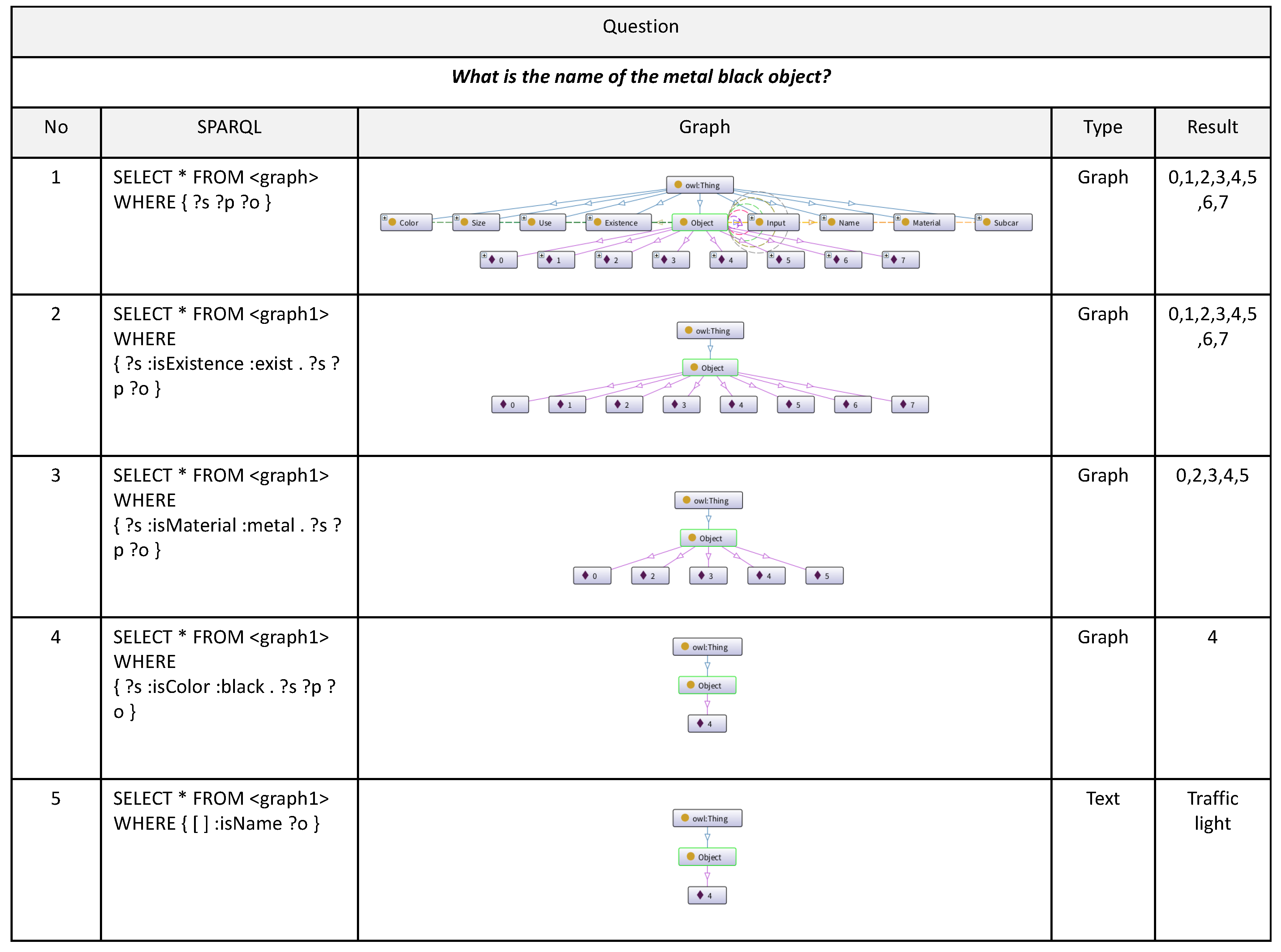

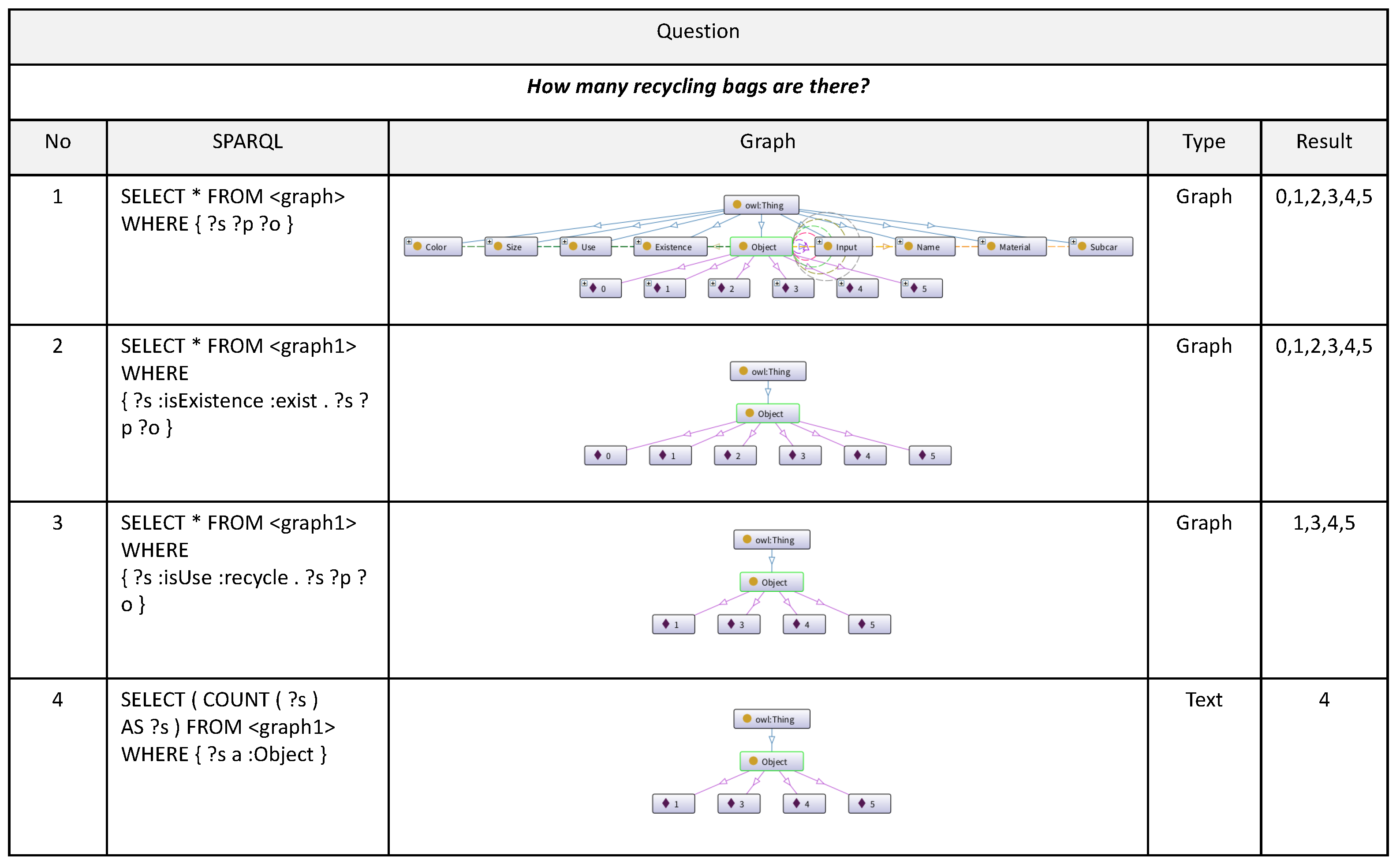

Figure 11, Figure 12 and Figure 13 visually represent the process of finding answers to different questions in the same environment. For example, in response to the question “What color is the traffic light?”, the system performed a series of SPARQL queries step by step and ultimately produced the answer “Red.” In response to the question “What do you see in front of the bus?”, it produced the results “Car, Motorcycle, Crosswalk.” Finally, for the question “What is the name of the black metal object?”, the correct answer, “Traffic light,” was inferred. Additional question-answering examples are presented in Figure 14, Figure 15, Figure 16 and Figure 17, which demonstrate the generality and scalability of the proposed VQA module. These results demonstrate that the proposed VQA module can semantically interpret children’s questions and reliably generate answers by leveraging a semantic SLAM-based scene graph. Furthermore, the results indicate that the module can be effectively used not only for simple object recognition but also for English education and social interaction through children’s question-and-answer sessions.

In summary, the semantic SLAM, automated planning system, and VQA modules proposed in this study were found to operate stably through interaction with children in a real kindergarten environment. Semantic SLAM effectively constructed a semantic scene representation including various object attributes and positional relationships, while the automated planning system demonstrated that the robot could successfully plan and execute tasks even in a changing environment. Furthermore, the VQA module has shown the potential to be used in educational interactions by accurately interpreting and responding to children’s natural language questions. These results demonstrate that the architecture proposed in this study has practical potential to effectively implement child-centered learning support and social interaction in real educational settings.

5. Discussion

The proposed interactive environment-aware dialogue and planning system is designed to be developmentally appropriate for kindergarten children. First, early childhood is a period in which linguistic expression and cognitive inquiry abilities expand rapidly, and bidirectional dialogue experiences maximize learning effectiveness. The VQA module developed in this study allows the robot to function not merely as a passive respondent to a child’s questions but as an active interactive partner that can both generate its own questions and receive new ones from the child. This approach overcomes the limitations of conventional educational robot systems, which have typically relied on pre-defined scenarios or one-way feedback structures, enabling the robot to respond to a child’s linguistic reactions and curiosity while expanding the dialogue. This structure encourages children to explore concepts through language use and to develop higher-order thinking skills.

Second, the automated planning system adjusts the robot’s behavior according to the child’s responses and environmental changes, thereby supporting experiences in which children actively construct their own knowledge. Unlike conventional systems that operate based on fixed action sequences, the proposed planning module incorporates a PDDL-based dynamic replanning mechanism, allowing the robot to reflect real-time changes in the learning environment. Through this capability, the robot can adapt effectively to classroom situations and maintain continuous interaction with children.

Third, the semantic SLAM-based environmental perception enables the robot to semantically understand the objects, spaces, and relationships within a real classroom, allowing it to generate contextually appropriate questions, answers, and feedback in real time. Whereas previous educational robots were limited to physical localization or basic object detection, the proposed system achieves structural innovation by integrating semantic, scene-level perception with linguistic interaction and behavioral planning. Through this integration, children experience a comprehensive form of linguistic, cognitive, and social interaction within an authentic educational environment.

Consequently, the proposed framework demonstrates that the robot can serve not merely as an information provider but as a learning companion that engages in reciprocal question–answer exchanges with children, overcoming the technical and interactional limitations of existing educational robots and promoting children’s cognitive, linguistic, and social development in a developmentally appropriate manner.

The approach proposed in this study represents a novel structural integration that combines semantic SLAM, automated planning, and VQA modules into a single coherent framework, distinguishing it from previous studies that have explored these technologies independently. Unlike prior research that primarily focused on improving the performance of individual functions, the proposed system aims to expand the educational applicability of social robots through the integrated connection of environmental perception, planning, and dialogue. Therefore, the key contribution of this study lies not merely in the implementation of each component but in the presentation of an integrated educational robot architecture designed to support child-centered learning.

7. Conclusions

In this study, we propose an architecture that combines environmental perception and interaction to support preschool children’s learning using social robots. The proposed system includes three modules: semantic SLAM, automated planning system, and VQA. Each module works in a complementary manner to enable the robot to engage in meaningful interactions with children in a real classroom environment. The experimental results indicate that semantic SLAM provides precise semantic scene information, including various object attributes and positional relationships, and the automated planning system successfully completes tasks with a high success rate even in changing environments. Furthermore, the VQA module demonstrated its potential for social interaction and educational use by accurately interpreting children’s natural language questions and generating appropriate responses.

The proposed system supports child-centered learning by enabling the robot to semantically understand objects and spatial relationships within the classroom, adjust its behavior according to children’s responses and environmental changes, and engage in active interaction by generating questions and answers. Through this process, the robot is demonstrated to function not merely as an information provider but as a learning companion that facilitates children’s linguistic exploration and concept formation. However, this study did not quantitatively verify the educational effectiveness of the system, and the speech recognition module did not fully reflect the characteristics of children’s speech. Future research will focus on improving these technical aspects and conducting long-term experiments to strengthen the educational applicability and child-adaptive interaction capabilities, thereby advancing the proposed system into a more complete child-centered educational robotics platform.