1. Introduction

As we enter a new era of technological advancement, automated vehicles (AVs) are expected to fundamentally transform mobility by enhancing efficiency [1], safety, convenience, and user experience [2]. With the growing diversity and complexity of human–machine interfaces (HMIs) [3] and infotainment systems, it has become increasingly critical to ensure that drivers understand the functionality and use of these systems to enable safe and enjoyable operation. Consequently, substantial efforts have been directed toward shifting from a technology-centered to a human-centered approach in the design and development of automotive user interfaces [4,5]. Such efforts include, for example, investigations of reaction times in take-over scenarios [6] and studies on the influence of driver personality on preferred automation styles [7,8].

However, user-centered interface design often relies on the assumption that individuals consistently behave rationally and make logical decisions, an expectation that diverges considerably from actual human behavior. Research in cognitive psychology has shown that humans are prone to systematic deviations from rational judgment and decision-making [9]. These so-called Cognitive Biases are systematic errors in thinking that shape how individuals perceive, interpret, and evaluate information [10]. They reflect ingrained tendencies in human cognition that can compromise decision quality. Prominent examples include Confirmation Bias (favoring information that aligns with existing beliefs) [11], Anchoring Bias (relying disproportionately on the first piece of information encountered) [12], and the Availability Heuristic (basing judgments on easily accessible information) [9].

Cognitive Biases play a central role in behavioral economics, social psychology, and decision theory, shaping outcomes in domains such as business, politics, education, healthcare, and technology [13]. Given their wide-ranging impact, it is essential to discuss their influence in the context of automated mobility as well. Indeed, recent evidence suggests that biases such as Illusory Control can emerge in interactions with automated driving systems [14]. We therefore argue that recognizing these biases and understanding their mechanisms might be crucial for developing interventions and strategies that mitigate their adverse effects and foster more rational decision-making.

Using an exploratory approach, this work examined four prevalent Cognitive Biases in the context of automated driving: the Truthiness Effect, Automation Bias, Action Bias, and Illusory Control. A three-step experiment with N = 34 participants was conducted to assess the manifestation of these biases and to analyze the influence of automation explainability and users’ mental models.

2. Related Work

The following sections explain the general psychological concepts of Mental Models and Cognitive Biases in human–computer interaction. Subsequently, existing literature on Cognitive Biases in the context of automated driving is reviewed, and the four biases selected for investigation in this study are introduced. These biases were identified through a focus group process, during which 151 known biases [15,16] were evaluated by the authors for their relevance to automated driving. Illusory Control [14,17] and Automation Bias [18,19] were chosen due to preliminary evidence suggesting their occurrence in automated driving contexts. The Truthiness Effect and Action Bias were selected based on a discussion that anticipated high relevance within the field. Lastly, the impact of providing explanations about the automated system’s behavior on the manifestation of these Cognitive Biases is outlined.

2.1. Mental Models in Automated Driving

Mental Models can be seen as a core concept of cognitive psychology and refer to the cognitive framework that users form to understand and interact with AVs [20]. Accurate Mental Models allow users to anticipate how the vehicle will respond in situations such as lane changes, obstacle avoidance, or emergency braking. Discrepancies between the user’s mental model and the actual system behavior can lead to reduced trust, misuse, or overreliance on the technology [21]. Understanding how users form, adjust, and rely on these mental models is critical for designing AVs that align with human expectations and support a safe and enjoyable driving experience [22]. A key challenge for interface design is that mental models are dynamic, functioning as flexible constructs that evolve with users’ growing experience of a system over time [23].

2.2. Cognitive Biases

Cognitive Biases are systematic errors in thinking that affect the decisions and judgments that individuals make. These biases can stem from different mental shortcuts or Heuristics that human brains use to speed up the decision-making process, especially under uncertainty or information overload [24]. Cognitive Biases are part of the cognitive framework, and while often useful, they can lead to perceptual distortion, inaccurate judgment, or illogical interpretation. More than 150 Cognitive Biases have been identified and studied so far [15,16]. Still, research in this area is ongoing, and new biases are discovered and classified as the understanding of human cognition and behavior continues to evolve.

2.3. Cognitive Biases in Automated Driving

Only a few studies on Cognitive Biases in the context of automated driving have been conducted so far. Măirean et al. [25] investigated the relationship between drivers’ Cognitive Biases (i.e., Optimism Bias, Illusory Control) and risky driving behavior in manual driving. The results showed that risky driving behavior was negatively predicted by Optimism Bias and positively predicted by Illusory Control. Frank et al. [26] examined the influence of Cognitive Biases on moral decisions in fully autonomous driving in a large-scale series of seven studies. The research focused on the participants’ decisions when confronted with ethical dilemmas. Using an adapted trolley problem scenario, the effect of situational factors was considered, with Cognitive Biases such as Action Bias being identified as a cause of the decision outcomes. In this work, we investigated the impact of four specific Cognitive Biases, which are introduced in the following.

2.3.1. Truthiness Effect

The Truthiness Effect refers to the tendency to trust information or arguments based on intuitive feelings or “gut instincts” rather than on logical reasoning or factual evidence [27]. In essence, the Truthiness Effect leads individuals to believe that something is true because it “feels” true, regardless of the presence or absence of empirical evidence supporting such beliefs. Because it is easily manipulated by emotions, visual images, anecdotes, and rhetoric, it can strongly influence perceptions, decision-making, and behavior. This phenomenon is particularly relevant in contexts such as mass communication, social media, advertising, politics, and consumer behavior [28], and is often studied in connection with visualizations. For instance, a user study by Derksen et al. [27] used ambiguous trivia claims (that people are unlikely to know) presented as true-or-false statements. The study involved participants from different age groups and cultures. Participants were asked to judge simple statements as either true or false. Half of the statements were accompanied by photos. For example, the claim “Hippopotamus milk is bright pink” was presented together with a photograph of the animal itself. The findings revealed that people were significantly more likely to believe a statement when it was presented with an illustration, even if the image was irrelevant to the content, supporting results from similar research [29].

2.3.2. Automation Bias

Automation Bias is a Cognitive Bias that occurs when individuals over-rely on automated systems and ignore or undervalue non-automated or manual input, even when it may be correct [30]. This can manifest in two ways: by over-trusting the automated system [18] and ignoring manual overrides, or by over-relying on the automated system and failing to notice when it causes errors, malfunctions, or requires human intervention. An example of Automation Bias in automated driving can be seen when a driver over-relies on their vehicle’s autopilot system and neglects key aspects of the driving environment, believing that the system can handle all situations flawlessly. A prominent example is overconfidence in Tesla’s Autopilot, as it has already led to fatal accidents [19].

2.3.3. Action Bias

Action Bias describes the behavioral pattern where individuals prefer to take action, even when doing nothing or maintaining the status quo may yield a better outcome [31]. The bias tends to arise in stressful situations, where decision-makers feel compelled to do something rather than nothing, even if the action could lead to a negative result. Bar-Eli et al. [31] use a goalkeeper’s urge to move to the left or right, which is stronger than the urge to stay in the middle, as an example of the human tendency to favor action over inaction in stressful situations.

2.3.4. Illusory Control

Illusory Control is a universal psychological phenomenon where individuals overestimate their ability to control events or outcomes that are essentially, if not entirely, determined by chance. It leads to a subjective belief of having personal control over an outcome, often resulting in an inflated self-assessment of one’s actual influence on the situation [32]. Illusory Control can significantly affect decision-making processes, risk perception, and behavior, often leading to overconfidence and increased risk-taking. In the context of automated driving, initial evidence for a situational occurrence of Illusory Control was found. In a work by Koo et al. [17], providing the driver with more information during an automated ride led to a heightened sense of control, even though the ride itself was fully automated, leaving the driver with neither more nor less control over the situation. Manger et al. [14] found similar evidence in a user study that revealed a higher sense of Illusory Control when information about the (fully automated) vehicle behavior was provided.

2.4. Cognitive Biases and Explainability

As automated driving technology advances and interfaces become increasingly complex, providing explanations about automated driving functions has been identified as a crucial prerequisite for ensuring drivers’ understanding and has been repeatedly shown to increase trust, user experience, and overall acceptance [33,34,35]. Cognitive Biases have been investigated regarding their influence on the effectiveness of explanations in several application fields [36]. In healthcare, for example, AI researchers identified the main heuristic biases that lead to diagnostic errors and defined strategies for mitigating them, significantly advancing the healthcare ecosystem regarding safety and trust [37].

3. Research Questions

The field of automotive user research has emphasized the importance of a human-centered approach to automated mobility, which includes a comprehensive understanding of the user. However, there remains a significant gap in understanding human flaws in decision-making and how Cognitive Biases impact the automated driving experience and the effectiveness of interfaces designed to help users develop accurate mental models of the automation’s capacity.

This work aimed to spark a deeper discussion by investigating four prevalent Cognitive Biases in an exploratory experiment, and developed the following research questions:

RQ1 (Bias Manifestation): Are users in the context of automated driving susceptible to Cognitive Biases such as the Truthiness Effect, Automation Bias, Action Bias, and Illusory Control?

RQ2 (Influence of the Mental Model): Does the accuracy of the Mental Model formed about automated driving influence the users’ susceptibility to these Cognitive Biases?

RQ3 (Influence of Explanations): Does providing explanations about the AV’s behavior influence the users’ susceptibility to these Cognitive Biases?

4. Method

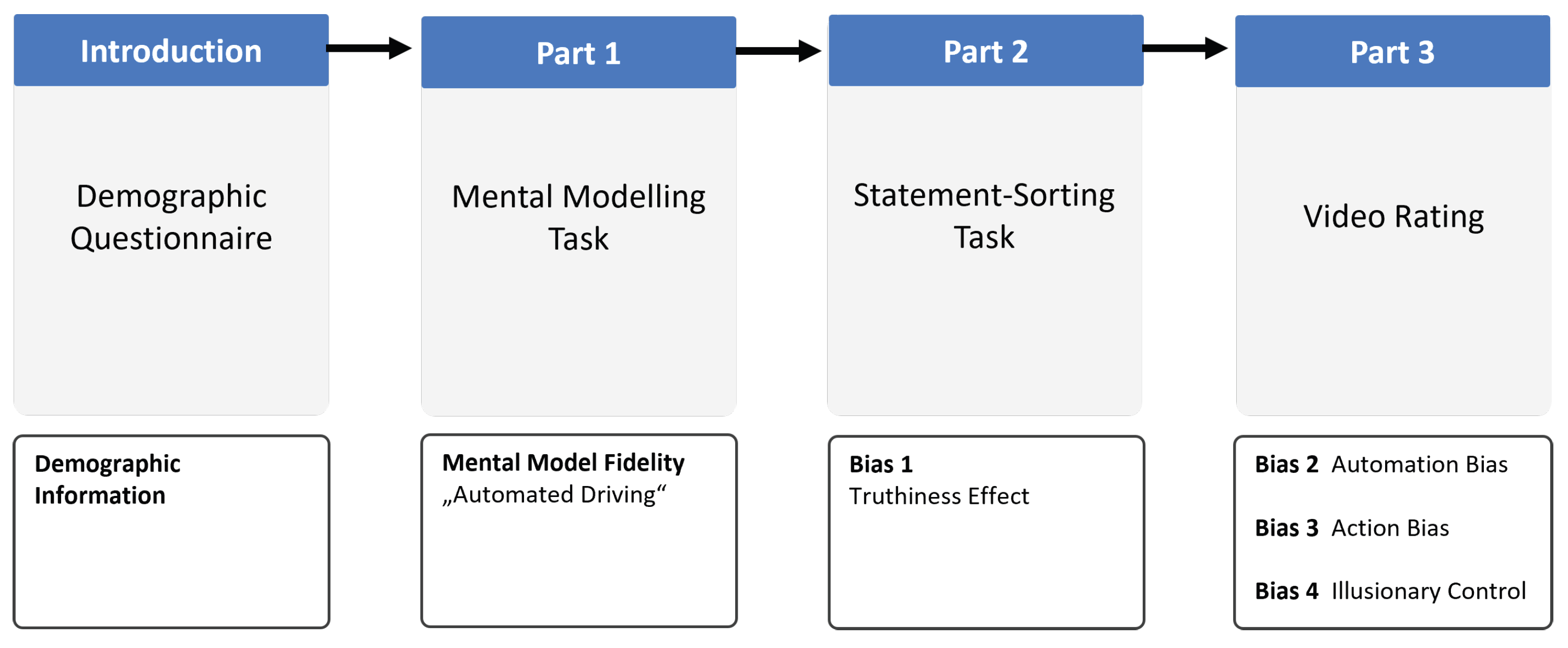

To explore the research questions, a three-step experiment was designed in which N = 34 participants took part. In the first part of the study, the participants’ Mental Models of automated driving were assessed with M-Tool (version 2023) [38], an online mental modeling tool developed by HCI researchers, described in detail below. In the second part, the Truthiness Effect was investigated using a statement sorting task, in which the participants gave their assumptions about whether statements about automated driving were true or false, whereby the statements were presented with different levels of explanation or additional statistical information. In the third part, videos of real rides with AVs were shown, and subjective questionnaires were used to elicit Action Bias, Automation Bias, and Illusory Control, as well as the impact of the fidelity of the Mental Model and explanations about the AV’s behavior on these biases. A visual overview of the study design is presented in Figure 1.

4.1. Procedure

Given the involvement of human participants, the study followed strict ethical guidelines. The study design and data protocols were approved by the Joint Ethics Committee of Bavarian Universities (GEHBa). The N = 34 participants were invited to the university’s HCI lab and provided with a regular office table (statement sorting task) and a computer with a 27-inch monitor (mental modeling task and video rating) to perform the three parts of the experiment. The study started by welcoming the participants and introducing them to the study procedure. Participation was voluntary, without compensation, and lasted about 60 min. All participants gave informed consent before starting the study, which began with a short demographic questionnaire. As basic demographic information, participants were asked about their age, gender, and employment status. In relation to the automotive research context, they were also asked how frequently they travel by car. To make sure that expertise in automated driving did not influence the occurrence of biases, experts were not invited. Additionally, participants rated both their prior knowledge of automated driving and their trust in AVs on a 5-point Likert scale (−2 to +2). The subsequent procedure and instructions are described in the following sections.

4.2. Part 1: Mental Modeling

In the first part of the experiment, the participants’ Mental Model about automated driving was assessed with M-Tool, a free Mental Model Mapping Tool designed to capture perceptions (or mental models) of complex systems [38]. It enables categorizing the participants’ Mental Models into different levels of accuracy and thus allows using them as a filter for the subsequent aspects of the study. In the following, M-Tool, its application in the study, and the chosen classification criteria for the distinction between high- and low-fidelity mental models are explained in detail.

4.2.1. Introducing M-Tool

The tool was developed and validated at Heidelberg University and Utrecht University, and introduced in 2021 [39]. Participants can visualize their mental models by arranging icons about the topic and visualizing relations between them by placing arrows. For this, possible components of the Mental Model are fed into the tool in the form of pictograms and stored with audio tracks for labeling [38]. According to the authors, not only the concept’s core elements and actors but also the consequences, measures, and resources of a system or concept can be portrayed. To weight relations and causalities, thin arrows represent a weak influence, medium-thick arrows represent a moderate influence, and thick arrows represent a strong influence.

4.2.2. Application of M-Tool in the Study

For this experiment, the tool was set up as follows. In a focus group session, the authors brainstormed terms and concepts related to automated driving. After excessively complex technical terms had been removed, 41 relevant terms remained. License-free pictograms for these 41 terms, selected on FREEP!K [40], were then embedded in M-Tool in six categories: (1) assistance systems (e.g., emergency brake assist), (2) technical components (e.g., lidar), (3) traffic components (e.g., highway), (4) information and entertainment (e.g., display), (5) descriptive adjectives and verbs (e.g., to control), and (6) general keywords (e.g., mobility or environment). The complete set of pictograms can be found in Appendix A. A screencast and audio explanations were created using the free text-to-speech generator ttsmp3 [41]. A pictogram of an AV was placed as the main component in the middle of the drawing area, which was intended as the starting point. The mental modeling task began with the study instructor explaining the general concept of Mental Models, after which participants reconstructed a small example model consisting of five elements. After this introduction, they were asked to visualize their individual Mental Models of AVs. Additional guiding questions were shown in the screencast to prompt the participants in mapping their mental model of an AV: (1) What is an automated vehicle? (2) Which technologies are used in automated vehicles? (3) What potential do automated vehicles offer? (4) Which tasks does the automation perform? (5) Which tasks does the human driver have to perform? After the task was completed, the data were exported as CSV files, and a screenshot of each participant’s final Mental Model was taken. The modeling process was not recorded through video or think-aloud protocols, as an exploratory and efficient application of M-Tool was prioritized in this study.

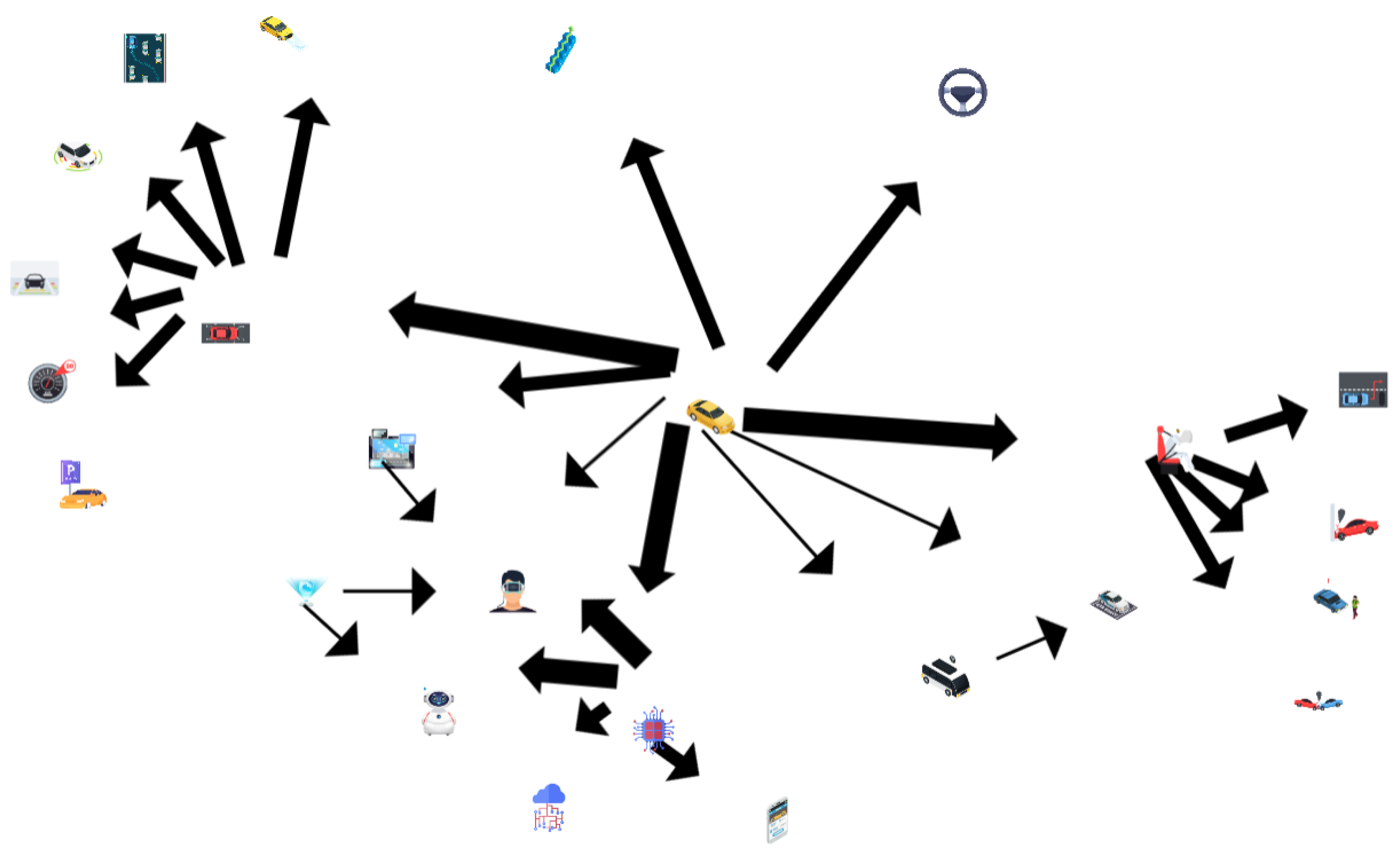

In accordance with prior applications of the tool, two evaluation criteria were defined to classify the created Mental Models as high- or low-fidelity: the number of elements used and the number of connections made per element. First, a median split based on the number of elements was performed to divide the participants into two groups (high- and low-fidelity Mental Models). The median split is a commonly used technique in statistical analysis, particularly in psychology and the social sciences, to dichotomize continuous variables by dividing them into two groups based on the median [42]. To avoid counting randomly or wrongly placed elements, each element additionally had to be connected to at least one other element. Accordingly, for a Mental Model to be classified as high-fidelity, it had to contain ≥15 elements and, at the same time, an average of ≥1 connections per element. Only if both conditions were fulfilled was the Mental Model classified as high-fidelity, whereas models that fulfilled none or only one of the conditions were rated as low-fidelity. An example of a high-fidelity Mental Model that a study participant visualized is shown in Figure 2.
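The classification rule described above can be sketched in a few lines of Python. This is a minimal illustration and not the authors' analysis script: the `MentalModel` record, its field names, and the sample values are hypothetical, nothing is assumed about the layout of the M-Tool CSV export, and "connections per element" is interpreted here as the number of arrows divided by the number of connected elements.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class MentalModel:
    """Hypothetical summary of one participant's mapped model."""
    participant: str
    connected_elements: int  # elements linked to at least one other element
    connections: int         # arrows drawn between elements


def is_high_fidelity(model: MentalModel,
                     min_elements: int = 15,
                     min_avg_connections: float = 1.0) -> bool:
    """Both criteria must hold; otherwise the model counts as low-fidelity."""
    if model.connected_elements == 0:
        return False
    avg_connections = model.connections / model.connected_elements
    return (model.connected_elements >= min_elements
            and avg_connections >= min_avg_connections)


# Illustrative values only, not study data.
models = [
    MentalModel("P01", connected_elements=18, connections=21),
    MentalModel("P02", connected_elements=16, connections=12),
    MentalModel("P03", connected_elements=9, connections=14),
]

# The element cutoff stems from a median split; on real data it could be
# derived like this instead of being hard-coded.
element_median = median(m.connected_elements for m in models)

labels = {m.participant: "high" if is_high_fidelity(m) else "low"
          for m in models}
print(labels)
```

In this toy sample, only the first model satisfies both conditions; the second has enough elements but too few connections per element, and the third has too few elements.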

4.3. Part 2: Statement Sorting (Truthiness Effect)

The second part of the experiment aimed to test the occurrence of the Truthiness Effect, which describes the tendency to accept a statement or claim as true based on intuition rather than evidence or logic, and which has been shown to be affected by the inclusion of different levels of explanation or statistical information. To investigate its occurrence in the context of automated driving, a statement sorting task was designed in which twenty-four printed statements about automated driving, each either true or false, were presented to the participants. Participants were asked to categorize each statement according to whether they believed it to be true or a lie. For this task, twelve true and twelve false statements about automated driving were collected from popular magazines for science and automotive technology. Specialists’ claims from professional automotive journals and websites were used to formulate true statements, and lies or widespread misconceptions were chosen to formulate false statements. Since the aim of this task was not to test participants’ factual knowledge but to identify the occurrence of the Truthiness Effect and the factors that drive it, it was essential that responses could be based on intuition. To ensure this, the statements needed to be either ambiguous, as applied by Derksen et al. [27], or unfamiliar to the participants. Consequently, experts, such as those working in the automotive field, were excluded from participation.

To test for the Truthiness Effect and its influencing factors, different kinds of additional information were added to the statements. In total, four categories of statements were created:

- Short statements without justification, e.g., “Self-driving cars require fewer parking spaces.” (Truth) [43]
- Statements backed up with statistics, e.g., “The Ministry of Economic Affairs expects additional revenue of around 2.3 million euros per year in the future due to the unauthorized driving of autonomous vehicles under the influence of alcohol.” (Lie)
- Why explanations (according to Guesmi et al. [44]), e.g., “Vehicle fleets stationed in the municipalities will make it possible to request self-driving cars via an app and have them take you to your destination.” (Truth) [45]
- How explanations (according to Guesmi et al. [44]), e.g., “Animal rights activists are campaigning against the use of ultrasonic sensors in autonomous vehicles, as these can severely disrupt bats’ sense of direction.” (Lie)

The complete set of statements and categories can be found in Appendix B.

To execute the sorting task, all 24 statements were printed on cards, stacked together in randomized order, and given to the participants, together with a green and a red envelope. The participants were then instructed to read the cards one after another and, according to their gut feeling, put the statements they believed to be true in the green envelope and the statements they did not believe to be true in the red envelope.

4.4. Part 3: Video Rating (Automation Bias, Action Bias, and Illusory Control)

The third part of the study aimed to uncover the participants’ tendencies to form

Automation Bias,

Action Bias, and

Illusory Control by having them experience videos of automated driving scenes and subsequently rate their perceptions using subjective questionnaires. To present a traffic scenario and a vehicle behavior as realistic as possible, the use of real-world material rather than a simulated environment was preferred as study material. Therefore, eight videos of real-world automated driving scenarios published on the YouTube channel AI Addict [

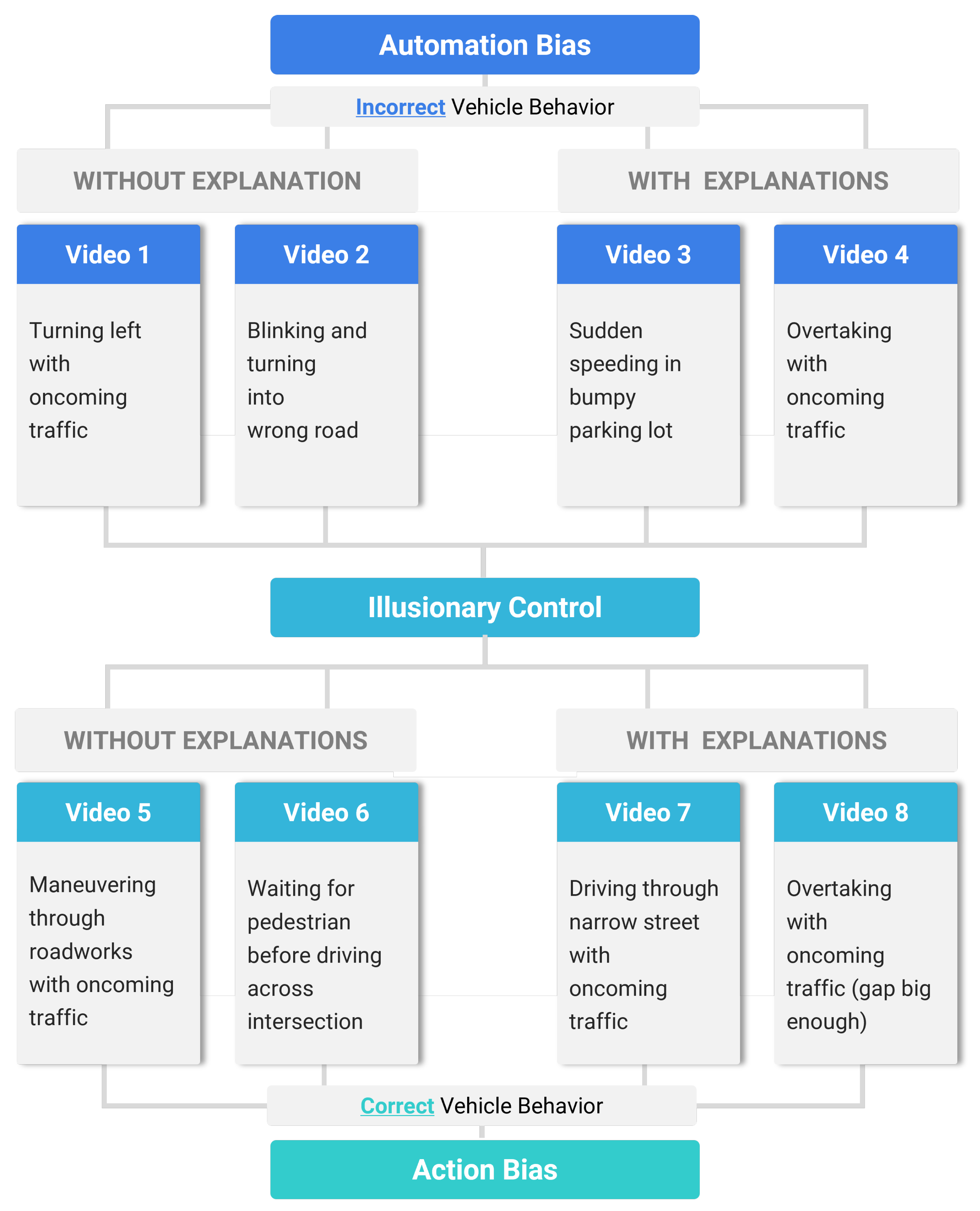

46] were selected, which demonstrated the driving behavior of Tesla’s Full Self-Driving (FSD) mode.

In a within-subject design, half of the videos were presented with a baseline HMI concept displaying only an environmental map with the surrounding traffic scene, other road users, and the ego vehicle’s current maneuver. The remaining videos incorporated an explainable HMI concept that supplemented the baseline information with text and speech explanations of the current maneuver.

Adobe After Effects was used for the editing of the videos, together with Adobe Illustrator for the text overlays [47]. The auditory overlays were created with the free text-to-speech generator ttsmp3 [41]. A schematic overview of the chosen situations for inducing the different Cognitive Biases is illustrated in Figure 3. Screenshots of the driving situations and HMIs can be seen in Figure 4.

The videos were presented to the participants on the computer screen in randomized order. To avoid confounding evaluations of previous or subsequent traffic situations, the videos were kept short (about 20 s). The tendencies toward the different Cognitive Biases were assessed using subjective questionnaires integrated into an online survey. The participants rated the following statements on 5-point Likert scales ranging from strongly disagree to strongly agree:

1. “The automated vehicle behaved appropriately to the traffic situation.” (Automation Bias)
2. “I feel that I am in control of the situation.” (Illusory Control)
3. “I feel the need to intervene in the driving task.” (Action Bias)

Automation Bias was tested with four of the videos. Each of them showed an incorrect action of the AV: (1) turning left with oncoming traffic, (2) blinking and turning into the wrong road, (3) sudden speeding in a bumpy parking lot, and (4) overtaking with oncoming traffic. Participants were asked to rate how appropriately the vehicle behaved in the traffic situation. Approval of the automation’s decision, although it was not appropriate in the given traffic situation, was taken as a possible indication of Automation Bias.

Action Bias was tested with the four remaining videos. These videos showed situations in which the vehicle’s decision was not immediately obvious without explanation and could be perceived as risky, but could technically be executed without any problem: (1) maneuvering through roadworks with oncoming traffic, (2) waiting for pedestrians before driving across an intersection, (3) driving through a narrow street with oncoming traffic, and (4) overtaking with oncoming traffic (gap is big enough). Action Bias, as defined earlier, describes a behavioral decision where individuals prefer to take action, especially in stressful or risky situations, even when doing nothing or maintaining the status quo may yield a better outcome. It was therefore tested by assessing the participants’ need to intervene (take action) in the driving situation, although the vehicle’s automation was handling the driving situation safely.

Illusory Control, the overestimation of an individual’s influence over a situation, was tested with all eight of the described videos by assessing the participants’ perceived Sense of Control. As all videos showed a fully automated driving scene with no actual opportunity to intervene, a high rating of sense of control could indicate Illusory Control.
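The assignment of videos to biases described in this section can be summarized as a small lookup structure. The sketch below is illustrative only: the numbering simply follows the order in which the scenarios are listed above, and all identifiers (`AUTOMATION_BIAS_VIDEOS`, `ACTION_BIAS_VIDEOS`, `biases_for_video`) are hypothetical names introduced here.

```python
from typing import List

# Videos showing an incorrect action of the AV (Automation Bias).
AUTOMATION_BIAS_VIDEOS = {
    1: "turning left with oncoming traffic",
    2: "blinking and turning into the wrong road",
    3: "sudden speeding in a bumpy parking lot",
    4: "overtaking with oncoming traffic",
}

# Videos showing safe but seemingly risky maneuvers (Action Bias).
ACTION_BIAS_VIDEOS = {
    5: "maneuvering through roadworks with oncoming traffic",
    6: "waiting for pedestrians before crossing an intersection",
    7: "driving through a narrow street with oncoming traffic",
    8: "overtaking with oncoming traffic (sufficient gap)",
}


def biases_for_video(video: int) -> List[str]:
    """Return the biases a video is used to test, per the description above."""
    if video in AUTOMATION_BIAS_VIDEOS:
        return ["Automation Bias", "Illusory Control"]
    if video in ACTION_BIAS_VIDEOS:
        return ["Action Bias", "Illusory Control"]
    raise ValueError(f"unknown video number: {video}")


for number in range(1, 9):
    print(number, biases_for_video(number))
```

Illusory Control appears for every video because the perceived sense of control was assessed across all eight scenes.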

5. Results

In the following, the characteristics of the participant sample, the mental model classification, and the results of measures are presented.

5.1. Participant Sample

A total of N = 34 participants (14 females and 20 males) took part in the study. The age ranged from 24 to 66 years (M = 38.03, SD = 14.84). The majority of the participants (69%) were employed, 16% were students, 9% were retired, and 6% were unemployed. Moreover, the group tended to be experienced drivers, as 84% of the participants drive daily or multiple times a week. Overall, the participants rated their prior knowledge of automated driving as neutral with a mean value of 0.0 (SD = 0.94) on a 5-point Likert scale (−2 to +2), and their trust in this technology was also rated as neutral to relatively low with a mean value of 0.82 (SD = 1.01).

5.2. Participants’ Mental Model

In the first part of the user study, participants were asked to visualize their individual Mental Model of automated driving using M-Tool [38]. To derive classification criteria, the Mental Models were descriptively analyzed based on the screenshots taken, and further analysis was performed based on the CSV output. The classification criteria for the analysis and the distinction between high- and low-fidelity mental models are explained in the Method section. Based on these criteria, the Mental Models of 18 participants were classified as low-fidelity, while the remaining 16 were classified as high-fidelity.

5.3. Results Truthiness Effect

The second part of the experiment, the statement sorting task, aimed to determine whether the degree and type of explanation accompanying statements about automated driving influence their perceived correctness by inducing a Truthiness Effect. Four statement categories were examined: short statements without explanation (as baseline statements), statements backed up with numbers, why explanations, and how explanations. Half of the statements were false, while the remaining half were true.

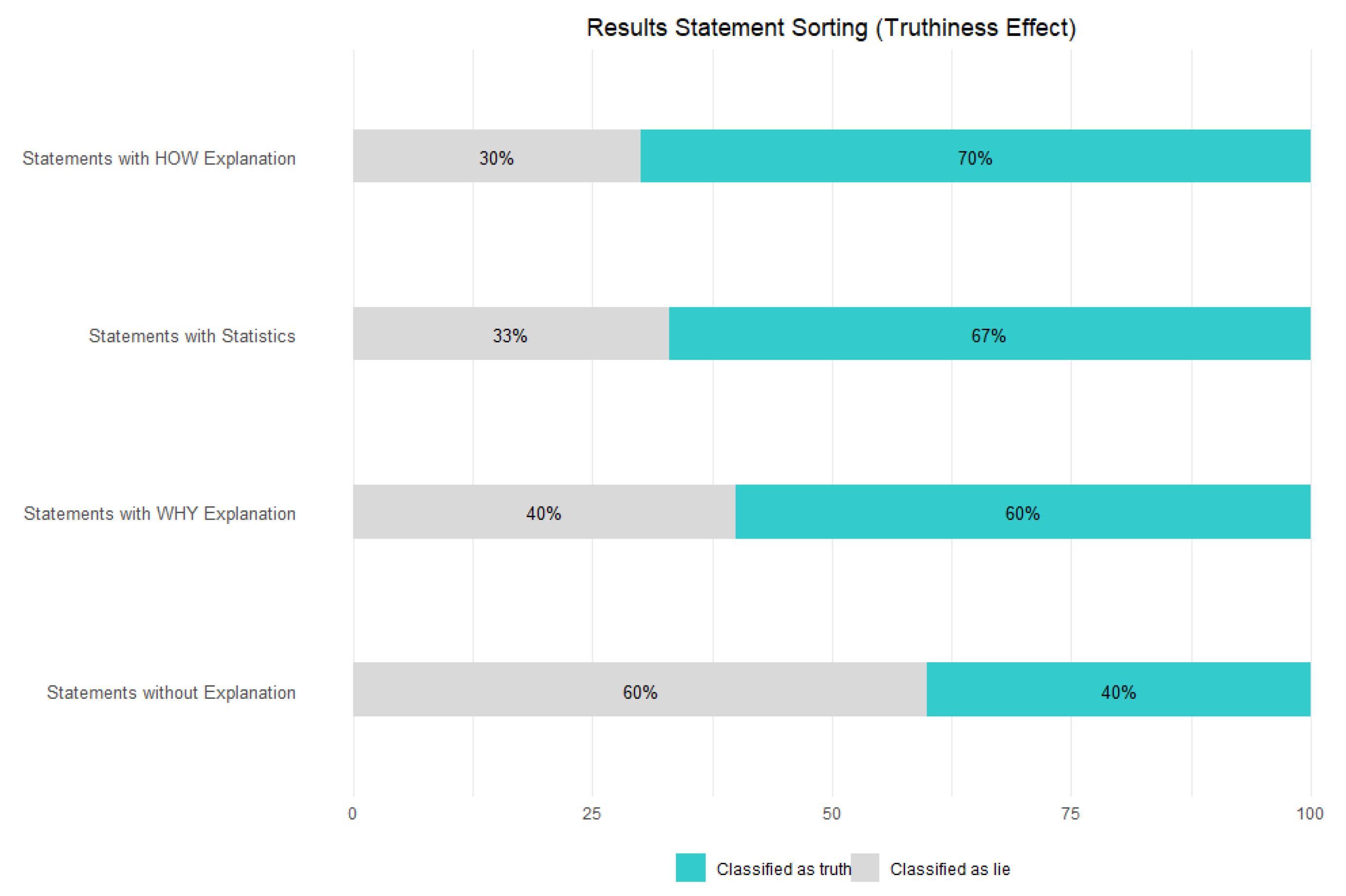

Looking at the data as summarized in Figure 5, it can first be seen that a total of 59.31% of the statements were classified as true, while 40.69% were classified as lies. The short statements without explanation were judged to be true in only 39.71% of the cases. Statements including why explanations were perceived as true at a much higher rate (60.29%). Statements that were backed up with statistics were perceived as true in 66.67% of the cases, and finally, statements that included how explanations were rated the most credible, as participants assumed them to be true in 70.59% of the cases. Consequently, credibility appears to increase with increasing transparency of explanations and with the citation of numerical data. A chi-square test of independence revealed a significant association between explanation type and belief in the truth of the statements, with a small-to-medium effect size (Cramér’s V = 0.24). This indicates that the type of explanation provided influenced whether participants judged the statements to be true or false. Pairwise chi-square tests with Bonferroni correction showed that participants were significantly more likely to believe statements when provided with how explanations compared to no explanation (adjusted p < 0.001), and when provided with statistics compared to no explanation (adjusted p < 0.001). No significant differences were found between the three explanation types (all adjusted ps > 0.05).
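For readers who want to retrace the reported effect size, the omnibus test can be approximated from the percentages given in this section. The sketch below is a reconstruction under stated assumptions and not the authors' analysis: it assumes that the 24 statements were split evenly across the four categories (six per category, hence 34 × 6 = 204 judgments each), treats all judgments as independent, and converts the reported percentages back into counts by rounding. Function and variable names are illustrative.

```python
from math import sqrt

JUDGMENTS_PER_CATEGORY = 34 * 6  # assumption: six statements per category

# Share of "true" judgments per category, as reported in the text.
reported_true_share = {
    "no explanation": 0.3971,
    "why": 0.6029,
    "statistics": 0.6667,
    "how": 0.7059,
}


def chi_square_independence(table):
    """Pearson chi-square statistic for a table given as rows of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    return chi2, total


# Rebuild a 4 x 2 table of (judged true, judged lie) counts.
table = []
for share in reported_true_share.values():
    n_true = round(share * JUDGMENTS_PER_CATEGORY)
    table.append([n_true, JUDGMENTS_PER_CATEGORY - n_true])

chi2, n = chi_square_independence(table)
rows, cols = len(table), len(table[0])
dof = (rows - 1) * (cols - 1)
cramers_v = sqrt(chi2 / (n * min(rows - 1, cols - 1)))
print(f"chi2({dof}) = {chi2:.2f}, Cramer's V = {cramers_v:.2f}")
```

Under these assumptions, the sketch yields a Cramér’s V of about 0.24, in line with the reported value. Because each participant contributed several judgments, the independence assumption is only approximate.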

Influence of the Mental Model Fidelity on the Truthiness Effect

The results suggest that the fidelity of the Mental Model is an influencing factor on the participants’ susceptibility to the Truthiness Effect. Participants with a low-fidelity model seemed to be more susceptible to the Truthiness Effect and were more likely to believe the statements than participants with a high-fidelity model. Across all categories, participants with a low-fidelity model rated 53.24% of the statements as true, whereas participants with a high-fidelity mental model were more skeptical and rated only 46.35% of the statements as true. Interestingly, the high-fidelity group rated the statements with why explanations (33.33%) as even slightly less credible than the short statements without any explanation (35.42%), and was most convinced by statements backed up by numbers (66.67%). The low-fidelity group evaluated the short statements without explanations as least likely to be true (42.59%) and seemed to be more influenced by the Truthiness Effect.

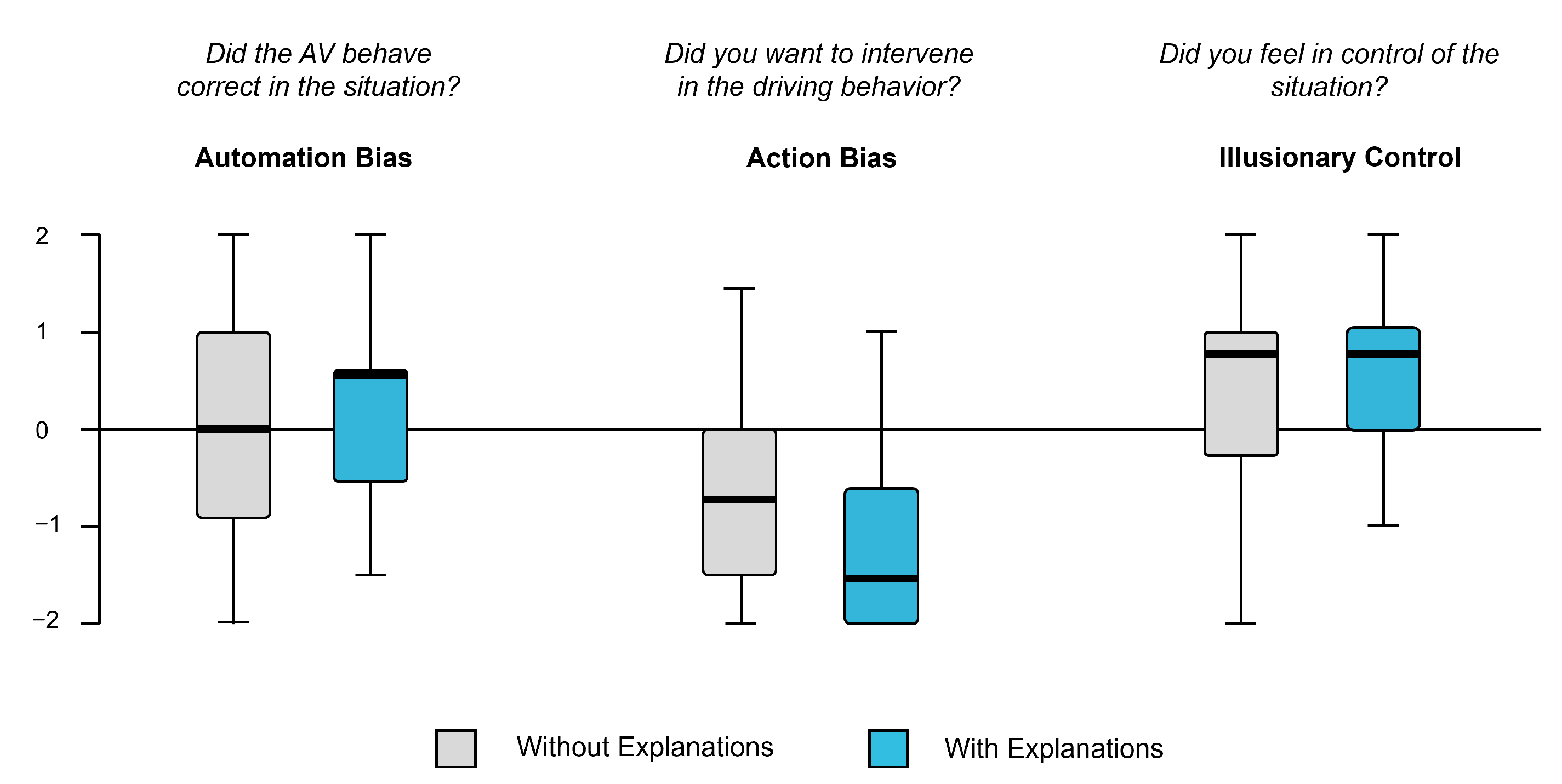

5.4. Agreement with the AV’s Action (Automation Bias)

The ratings of the videos were analyzed with the statistical software JASP (Version 0.16.40) [48]. An overview of the results is shown in Figure 6. To test the tendency to form Automation Bias, the participants experienced four videos that showed an incorrect action or inappropriate decision by the vehicle and were asked to rate the statement “the vehicle behaved correctly in the traffic situation” on a Likert scale ranging from strongly disagree to strongly agree. Agreement with the statement could indicate an overconfidence in the automation and, therefore, a susceptibility to Automation Bias. The results show that, considering all four scenarios, in the baseline concept (no explanations), participants’ approval was rather neutral (M = −0.14, SD = 1.20), with no indication of an Automation Bias. However, the explainable concept increased the impression that the AV behaved correctly (M = 0.56, SD = 0.89). Additionally, a repeated measures ANOVA on the perceived appropriateness of the vehicle’s behavior across Videos 1–4 revealed significant differences between the situations (F(3, 87) = 5.17, p = 0.0025, partial η² = 0.15). Post-hoc comparisons (Bonferroni corrected) showed that vehicle behavior in Video 2, in which the vehicle blinked and turned into the wrong road (M = 1.57, SD = 0.86), was rated as significantly more appropriate than Video 4, in which the vehicle overtook despite oncoming traffic (M = 0.63, SD = 1.25; p = 0.0025). No other pairwise comparisons reached significance.
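The effect size reported with the ANOVA above (0.15) is consistent with partial eta squared recovered from the F statistic and its degrees of freedom, using the standard identity partial η² = (F · df_effect) / (F · df_effect + df_error). The following is a minimal sketch of that check; the function name `partial_eta_squared` is our own and is not part of the original analysis, which was run in JASP.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom.

    Uses the identity SS_effect / (SS_effect + SS_error)
    = (F * df_effect) / (F * df_effect + df_error).
    """
    scaled_effect = f_value * df_effect
    return scaled_effect / (scaled_effect + df_error)


# Values as reported in Sections 5.4 and 5.5 of the text.
agreement_effect = partial_eta_squared(5.17, 3, 87)
intervention_effect = partial_eta_squared(6.82, 4, 116)

print(f"Agreement with AV action: {agreement_effect:.2f}")   # 0.15
print(f"Need to intervene:        {intervention_effect:.2f}")  # 0.19
```

Both values round to the effect sizes given in the text, which suggests the reported statistics are internally consistent.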

The fidelity of participants’ Mental Models did not have a significant influence.

5.5. Need to Intervene (Action Bias)

No evidence for the occurrence of Action Bias was revealed in the results, as the participants did not feel a distinct need to intervene in the presented driving situations. However, providing explanations decreased the need to intervene even more (M = −2.06, SD = 1.10) compared to the baseline concept (M = −1.26, SD = 0.97).

A repeated measures ANOVA on the perceived need to intervene across Videos 4–8 revealed significant differences between the situations (F(4, 116) = 6.82, p < 0.001, partial η² = 0.19). Post-hoc comparisons (Bonferroni corrected) showed significant differences between Video 5 and Video 7 (p = 0.020), Video 6 and Video 8 (p = 0.010), and Video 7 and Video 8 (p < 0.001). Among these, Video 7, in which the vehicle drove through a narrow street with oncoming traffic, resulted in the highest need to intervene (M = 0.63, SD = 1.45), whereas Video 8, in which the vehicle overtook with oncoming traffic but with a sufficient gap, showed the lowest ratings (M = −1.07, SD = 1.36).
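To illustrate what the Bonferroni correction does in a post-hoc analysis like the one above, the sketch below enumerates all pairwise comparisons among five conditions and multiplies each uncorrected p-value by the number of comparisons, capped at 1. The raw p-values are hypothetical placeholders, not values from the study, and the helper `bonferroni_adjust` is our own name; JASP computes the corrected values internally.

```python
from itertools import combinations


def bonferroni_adjust(raw_p_values: dict, n_comparisons: int) -> dict:
    """Multiply each raw p-value by the number of comparisons, capped at 1.0."""
    return {pair: min(1.0, p * n_comparisons) for pair, p in raw_p_values.items()}


# Five conditions (df_effect = 4 in the ANOVA implies five levels).
videos = [4, 5, 6, 7, 8]
all_pairs = list(combinations(videos, 2))
print(len(all_pairs))  # 10 pairwise comparisons

# Hypothetical uncorrected p-values for three of the ten pairs (illustration only).
raw = {(5, 7): 0.002, (6, 8): 0.001, (4, 5): 0.300}
adjusted = bonferroni_adjust(raw, n_comparisons=len(all_pairs))

for pair, p in adjusted.items():
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"Video {pair[0]} vs. Video {pair[1]}: corrected p = {p:.3f} ({verdict})")
```

With ten comparisons, an uncorrected p-value must fall below 0.005 to remain significant at the 0.05 level after correction, which is why only a few of the pairwise differences survive.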

Again, the Mental Model fidelity did not influence these results.

5.6. Perceived Sense of Control (Illusory Control)

To test for Illusory Control, the ratings of the Sense of Control for all eight driving situations were analyzed. Overall, participants perceived a rather neutral Sense of Control with no indication of Illusory Control. However, participants did feel slightly more in control when the explainable concept was presented (M = 1.06, SD = 0.90) compared to the baseline concept (M = 0.70, SD = 0.89). Again, the perceived sense of control differed significantly between the specific situations (F(7) = 11.15, p < 0.001). Video 7, where the vehicle was driving through a narrow street with oncoming traffic (explainable concept), resulted in the lowest sense of control (M = −1.50, SD = 1.48). The highest sense of control was perceived in Video 1, where the vehicle was turning left at an intersection with oncoming traffic without explanations (M = 1.46, SD = 0.94).

5.7. The Overall Influence of Explanations

When comparing the baseline concept to the explainable concept across all eight videos, agreement with the AV’s behavior was significantly higher with the explainable concept (M = 1.64, SD = 0.78) than with the baseline concept without explanations (M = 1.06, SD = 0.78; t(29) = −2.13, p = 0.04). Furthermore, although not significant, explanations slightly increased participants’ sense of control (M = 1.06, SD = 0.90) compared to the baseline concept (M = 0.70, SD = 0.89). In turn, the need to intervene slightly decreased with explanations (M = −1.14, SD = 0.72) compared to the baseline concept (M = −0.78, SD = 0.84).
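The comparison above can be sketched as a paired-samples t-test. This is an assumption on our part: the text does not name the test, but the within-subject comparison and the reported degrees of freedom suggest it. The sketch shows how the t statistic and its degrees of freedom arise from paired ratings, and how a two-sided p-value follows from t and df by numerically integrating the Student t density. The function names and the toy ratings are hypothetical; only t = −2.13 and df = 29 are taken from the text.

```python
import math
from statistics import mean, stdev


def paired_t(first: list, second: list) -> tuple:
    """Return (t, df) for a paired-samples t-test on first - second."""
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def two_sided_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value via Simpson integration of the Student t density."""
    norm = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def density(x: float) -> float:
        return norm * (1 + x * x / df) ** (-(df + 1) / 2)

    upper = abs(t)
    h = upper / steps  # steps must be even for Simpson's rule
    total = density(0.0) + density(upper)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * density(i * h)
    central_half = total * h / 3  # P(0 < T < |t|)
    return 1 - 2 * central_half


# Toy ratings (hypothetical): baseline minus explainable gives a negative t
# when the explainable concept is rated higher.
t_toy, df_toy = paired_t([1, 2, 3, 4], [2, 3, 5, 5])
print(t_toy, df_toy)

# p-value for the statistic reported in the text, t(29) = -2.13.
print(f"{two_sided_p(-2.13, 29):.2f}")  # 0.04
```

The negative sign of the reported t value simply reflects the order of subtraction (baseline minus explainable); the p-value depends only on its magnitude.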

6. Discussion

In the following, the results are discussed with a focus on bias manifestation, the influence of mental model fidelity, and the role of explanations. Finally, theoretical as well as practical implications are derived.

6.1. Bias Occurrence (RQ1)

Research Question 1 asked whether the Cognitive Biases Truthiness Effect, Action Bias, Automation Bias, and Illusory Control can occur in the context of automated driving.

6.1.1. Indications of Truthiness Effect

First, it must be pointed out that the method used to investigate the Truthiness Effect differed from the methods used to test the other biases. As we wanted to explore the Truthiness Effect through the credibility of statements, as done in [27], the results of the Truthiness Effect refer to the participants’ general perception of the topic of automated driving rather than specific interactions or vehicle behavior. Of course, the Truthiness Effect might also be triggered situationally through the information shown in the HMI, and future work could compare different categories of information and their presentation during an automated ride.

The findings of this study provide clear indications of a Truthiness Effect in participants’ evaluation of statements related to automated driving. Across the four statement categories, credibility ratings increased notably when statements were accompanied by statistical or explanatory content. This is consistent with previous research, which showed that people are more likely to judge statements as true when they are presented with seemingly relevant but potentially superficial context [27]. In the results, how explanations describing technical mechanisms or system behavior led to the highest proportion of statements being rated as true, followed by statements supported by statistical information. This suggests that explanations offering technical detail or numeric credibility are especially persuasive, even when the actual content may be misleading. The findings point to a potential risk: in contexts where users lack deep technical understanding, such explanations might unintentionally reinforce misinformation or overconfidence in system capabilities. This has important implications for the design of human–machine interfaces in AVs. While transparency and explainability are often cited as key factors in building trust and understanding, the results suggest that even minimal explanatory content can strongly shape users’ beliefs, regardless of accuracy. Designers, therefore, carry a responsibility not only to provide accessible information but to ensure it does not give a false sense of certainty or correctness.

Additionally, the data revealed that participants with a low-fidelity mental model of automated driving were more susceptible to the Truthiness Effect. They more often judged statements as true compared to those with more complex mental models, suggesting that a more elaborated understanding may serve as a protective factor against intuitive but incorrect assumptions. Nonetheless, even participants with high-fidelity models were not immune to the Truthiness Effect, particularly when statements were backed by statistics.

6.1.2. Indications of Automation Bias, Action Bias, and Illusory Control

In the presented study, participants did not seem to develop Automation Bias, as they did not agree with the vehicle in situations where it did not behave appropriately in the traffic situation. Nevertheless, it cannot be concluded that drivers of AVs are generally not susceptible to Automation Bias, as repeated exposure to an automated driving system leads to learned trust over time [18] and Complacency, which can further reduce monitoring behavior and reinforce Automation Bias over time [49]. Furthermore, beyond automated driving, Automation Bias is studied as a central Cognitive Bias influencing user interaction with automated systems in various related fields, such as flight automation and medical recommender systems [50,51,52,53]. Its manifestation includes both omission errors (failing to act when automation omitted information) and commission errors (following incorrect automated advice without sufficient verification) [50]. In the present study, no specific vehicle brand or fully automated system was communicated to the participants, which may have reduced the tendency to develop overtrust in the automation. In addition, the cognitive load during the experiment was relatively low. Prior research has shown, however, that high cognitive load (e.g., through multitasking) and limited attentional resources can increase the likelihood of users accepting system outputs without verification, thereby heightening the risk of Automation Bias [49].

Therefore, Automation Bias should be regarded as a critical human factor, particularly in levels of automation involving shared task allocation between the system and the human driver. To support safe automated driving, interaction design should incorporate strategies to mitigate this bias.

Regarding Action Bias, no evidence was found that drivers favor action over inaction in ambiguous driving situations, as they did not express a need to intervene in the driving task. As Action Bias occurs especially in stressful situations, it might be the case that the simulated driving scenario did not induce a sufficient amount of stress in the participants to trigger this bias. Again, it cannot be concluded that Action Bias does not occur in the context of automated driving, as real-world automated driving, especially in time- or safety-critical situations such as overtaking on a highway, is expected to make Action Bias more likely. Interestingly, the participants reported a rather high sense of Feeling in Control across all eight presented driving situations, although they did not have any possibility to control or intervene in the situation. These results indicate a general tendency for Illusory Control during automated rides, which is in line with previous research [14,17]. Experiencing a high Sense of Control and, at the same time, a low need to intervene in the situation highlights the dependency and correlation of different Cognitive Biases. The specific driving situation was also found to have a strong and significant influence on all of the considered measures. This means that the emergence of a Cognitive Bias is dependent on external situational influencing factors that cannot be easily predicted.

6.2. Influence of Mental Model Fidelity (RQ2)

Research Question 2 asked if the fidelity of the driver’s Mental Model formed about automated driving can influence the occurrence of Cognitive Biases. Overall, this question can be answered with a tentative, partial yes. In this work, the Mental Model fidelity of automated driving did indeed appear to be an influencing factor in the susceptibility to the Truthiness Effect. People with a more complex Mental Model are more skeptical about the credibility of statements and, therefore, have a lower susceptibility to the Truthiness Effect than people with a more simplified concept of automated driving. Nevertheless, drivers with a high-fidelity Mental Model still tend to fall victim to the Truthiness Effect, but to a lesser extent than those with a low-fidelity model. The other biases did not seem to be influenced by the drivers’ Mental Model in this work. However, we still recommend including this factor in future research and suggest using multiple methods to elicit drivers’ Mental Models, as it is challenging to assess them completely. Lastly, personal and demographic factors such as driving experience or prior knowledge of automated driving technology were additionally explored in this work, but interestingly did not appear to play a significant role in the fidelity of the formation of the Mental Model. Other, more general parameters such as profession, gender, and trust in the technology did not seem to influence variables in the participant sample either. As these results contradict prior work [22], we still recommend including demographic factors and system experience in future investigations on Cognitive Biases in automated driving.

6.3. Influence of Explanations (RQ3)

The results confirmed the importance of providing sufficient explanations about the AV’s behavior. Explanations increased the credibility of statements about automated driving. Provided as information in the HMI, they also led to a significantly higher agreement with the AV’s behavior, with an additional tendency to increase the driver’s Sense of Control and decrease the need to intervene.

The results of this study emphasize the critical role that explanations play in shaping user perceptions in the context of automated driving. Across multiple measures, the inclusion of explanations, both in the form of enriched statements in the sorting task and explainable HMI elements in the video-based evaluation, significantly influenced participants’ judgments. Explanations increased the perceived credibility of statements (Truthiness Effect), led to higher agreement with the vehicle’s behavior even in cases of incorrect actions (potential indication of Automation Bias), slightly enhanced the sense of control (related to Illusory Control), and decreased the participants’ self-reported need to intervene (Action Bias). While not all effects were statistically significant, the consistent direction of these results points to a general trend: explanatory content tends to increase user confidence and acceptance of automated behavior. This confirms prior work that highlights the benefits of transparency and explainability for trust and user experience [34,54,55].

6.4. Theoretical Implications

The findings extend human–automation interaction theory in several ways. The study provides empirical evidence that classic cognitive biases also manifest in the context of automated driving. This supports the theoretical generalizability of existing bias frameworks beyond traditional domains such as aviation or medical decision-making. Furthermore, the results underline the importance of mental model fidelity in bias susceptibility. A more elaborated understanding of automation appears to reduce, but not fully prevent, susceptibility to biases. This adds nuance to theories of calibrated trust, showing that accurate mental models contribute to resilience but cannot eliminate intuitive misjudgments. Regarding the influence of explanations, theoretical work on explainable AI often assumes that explanations primarily improve understanding and trust. This study shows that explanations can also reinforce biases (e.g., Truthiness Effect, Automation Bias). This complicates existing models of explainability and suggests that theoretical frameworks need to account for unintended persuasive effects of explanatory content. The situational dependence of Action Bias and Illusory Control highlights the need to also integrate environmental and contextual moderators into bias theories and research to align with the dynamic aspects of cognitive decision-making.

6.5. Practical Implications

Combining the discussed aspects and theoretical implications, it can be concluded that explanations should be carefully crafted to inform without overstating certainty. Designers should avoid superficial statistical references or overly technical details that may artificially boost credibility. Instead, explanations should include cues about system limitations and uncertainty to counteract the Truthiness Effect. Moreover, given the risks of Automation Bias and Illusory Control, HMI design should include features that promote active monitoring and encourage user verification rather than passive acceptance of automated decisions. Examples include highlighting system uncertainty, surfacing alternative interpretations of the environment, or requiring minimal confirmation in ambiguous situations. Enhancing users’ mental model fidelity through onboarding, training, or gradual exposure may reduce susceptibility to certain biases. Even so, since high-fidelity models do not provide full protection, system design must still anticipate biased judgments.

6.6. Limitations

It is essential to outline the most relevant limitations of the exploratory study design to ensure a cautious and accurate interpretation of the findings.

First, eliciting a person’s mental model of an automated system is inherently challenging. A variety of approaches have been used in prior research, such as think-aloud protocols during system interaction, scenario-based questioning about system behavior in specific traffic situations, or diagramming tasks to externalize users’ understanding of system components and functions. Each method has its own advantages and limitations. In this study, we opted to use M-Tool, a promising tool that enables participants to create interactive visualizations of their mental models. While this method proved to be useful in the context of this study, the resulting categorization into high- and low-fidelity mental models is inherently dependent on the chosen assessment and classification method. Therefore, the classification should not be regarded as universally valid. Nonetheless, the tool was applied and analyzed in line with previous research and served as a practical instrument for the investigation in this context.

Second, the use of video-based stimuli to present automated driving scenarios represents an explicit limitation. We deliberately chose a laboratory-based study design using short, real-world video clips to ensure a controlled and efficient study setup while minimizing confounding variables. However, this low-fidelity exposure lacks ecological validity and the immersive and interactive qualities of high-fidelity driving simulations or real-world driving experiences, which are likely to evoke different user perceptions and responses. Consequently, participant judgments, particularly regarding Cognitive Biases, should be interpreted with caution. In addition, relying on subjective self-report measures (e.g., Likert scales) introduces potential biases, such as social desirability or response tendencies, that may affect the validity of the results.

Finally, the study was conducted with a relatively small sample size (N = 34), which may restrict the generalizability of the results. Although a balanced sample in terms of gender (14 females, 20 males) and age distribution (24–66 years) was aimed for, further investigations should include more participants to ensure the statistical power of the results and allow for more fine-grained analyses across demographic subgroups as well as stronger validation of the observed effects.

7. Future Research Directions

Despite the discussed limitations, the combination of methods employed provided a suitable basis for the intended exploratory investigation of Cognitive Biases in the context of automated driving. The study revealed interesting and noteworthy patterns and tendencies that can inform future research and contribute to a deeper discourse on user cognition and overall user experience in automated driving. Building on the findings, several directions for future research emerge:

Testing and Exploration of additional Cognitive Biases: Beyond the biases examined in this study, future research could investigate other potentially relevant Cognitive Biases in automated driving. For example, confirmation bias, the tendency to favor information that aligns with preexisting beliefs, could influence how users interpret system feedback or incident explanations.

Relevance of Cognitive Biases Across Automation Levels: Further conceptual and empirical work should discuss whether cognitive biases are relevant across all levels of automation. For instance, they may be highly influential during safety-critical interactions in Level 3 systems (e.g., take-over requests), but largely irrelevant in Level 5 full automation, where user engagement with the automation is minimal or absent.

Mitigation vs. Exploitation of Biases: Future work could also explore whether Cognitive Biases in automated driving should be mitigated (e.g., through awareness education or uncertainty visualizations) or whether some biases could be strategically leveraged to enhance user experience, trust, or perceived safety, especially in early adoption phases.

Dynamic and Contextual Manifestation of Biases: The situational dependency observed in this study highlights the need to examine how specific traffic scenarios, time pressure, and perceived risk affect the manifestation of Cognitive Biases such as Automation Bias, Action Bias, or Illusory Control.

High-Fidelity Studies: Future research should employ high-fidelity driving simulators or on-road studies that more closely replicate the perceptual and cognitive demands of automated driving. This is particularly important for biases such as Action Bias, which are more likely to emerge in rapid or stressful decision-making contexts and can critically affect safety in real-world scenarios.

8. Conclusions

This work aimed to generate new insights for the development of human-centered interfaces for intelligent systems by understanding the user in terms of misconceptions and flawed thinking. It investigated the topic of Cognitive Biases in the context of automated driving and the influence of explanations about the automation’s behavior and the users’ Mental Models. Four selected cognitive biases (Truthiness Effect, Automation Bias, Action Bias, and Illusory Control) were tested in a three-part study with N = 34 participants. The study results revealed evidence for the users’ susceptibility to Truthiness Effect and Illusory Control. Although it cannot be concluded that Cognitive Biases occur in every driving scenario, they nevertheless can manifest situationally, and their emergence is influenced by external factors that cannot be easily predicted. Furthermore, the importance and strong influence of explanations about the automation’s behavior was confirmed once more. Providing explanations increased the agreement with the automation and led to a higher Sense of Control and a lower need to intervene in the driving task. The study underscores both theoretical and practical implications: bias frameworks need to integrate contextual moderators and the persuasive effects of explanations, while interface design must balance informativeness with mechanisms that counteract overtrust and illusory control. Training and onboarding can help strengthen user mental models, but cannot fully eliminate susceptibility to Cognitive Biases, reinforcing the need for robust system design. However, the findings must be interpreted with caution, given the small sample size, the limited ecological validity of the video-based scenarios, and the simplified method for mental model elicitation. Intended as a hypothesis-generating exploration, this study seeks to initiate a dialog on how Cognitive Biases shape human reasoning and decision-making in interactions with AVs.
Looking ahead, future research should examine additional biases, explore whether certain biases might be mitigated or strategically leveraged, and employ high-fidelity simulation or on-road studies to validate how biases manifest in real-world driving contexts. Therefore, we encourage the HCI research community to deepen the discussion about users’ cognitive shortcomings, misconceptions, and flawed thinking to develop truly human-centered interfaces and facilitate a safe and user-friendly deployment of automated systems.

9. Materials and Methods

AI-based grammar support tools were used to improve grammar and enhance the overall writing style. In a second iteration of the final manuscript, generative AI was used to support the deeper analysis of connections between the combined results, contributing to a more nuanced discussion.