A Review on AI Miniaturization: Trends and Challenges

Abstract

1. Introduction

1.1. Background

1.2. Comparison with Existing Reviews

- (i) Systematic comparison of representative computing architectures. We summarize and compare cloud computing, edge computing, fog computing, cloudlets, and micro data centers (MDCs), analyzing their respective advantages and limitations in terms of latency, energy efficiency, cost, and structural adaptability. This provides insights into deployment constraints under different computing paradigms;

- (ii) Distillation of three core miniaturization strategies. We categorize existing scattered research into three strategic approaches (redundancy compression, knowledge transfer, and hardware–software co-design) and organize representative methods into a unified classification framework;

- (iii) Proposal of three structural design principles. Inspired by the development of embedded systems, we propose three principles for AI miniaturization: reducing the execution burden on end devices, enhancing native computational capability through hardware–software co-design, and balancing local intelligence with centralized AI. This emphasizes that miniaturization is not merely model compression but structural reconfiguration;

- (iv) Construction of a practice-oriented design framework. Building on the architectural comparison, strategic pathways, and structural principles, we propose a practice-oriented framework for AI miniaturization, offering methodological references for deployment under both energy-first and performance-first scenarios.

1.3. Methodology

- (i) publications written in English;

- (ii) works closely related to AI miniaturization, deployment architectures, or energy efficiency optimization;

- (iii) review papers or high-quality (frequently cited) research articles.

- (i) non-English publications;

- (ii) works without full-text availability;

- (iii) papers weakly related to the topic.

1.4. Organization of This Review

2. Existing Work

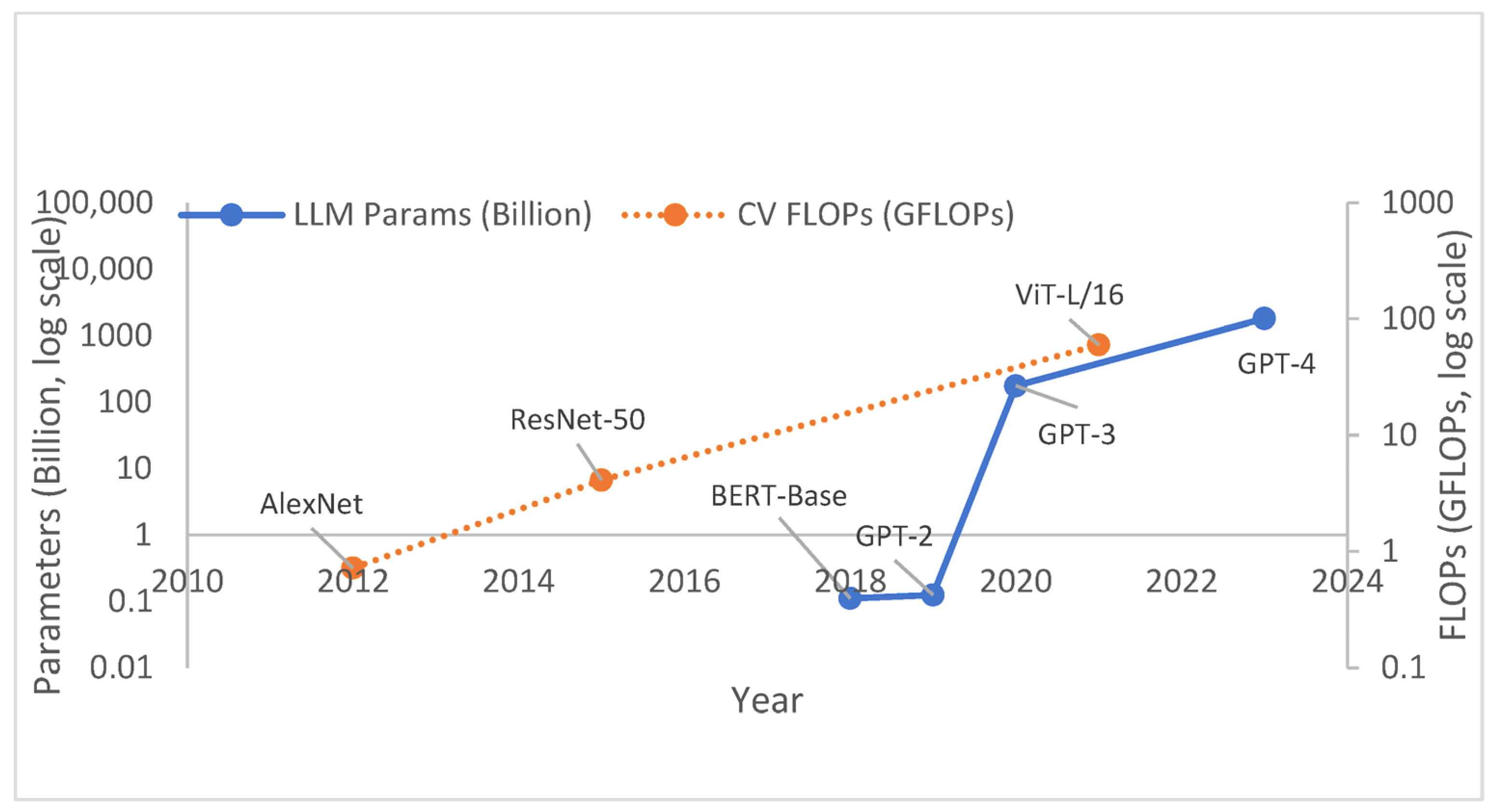

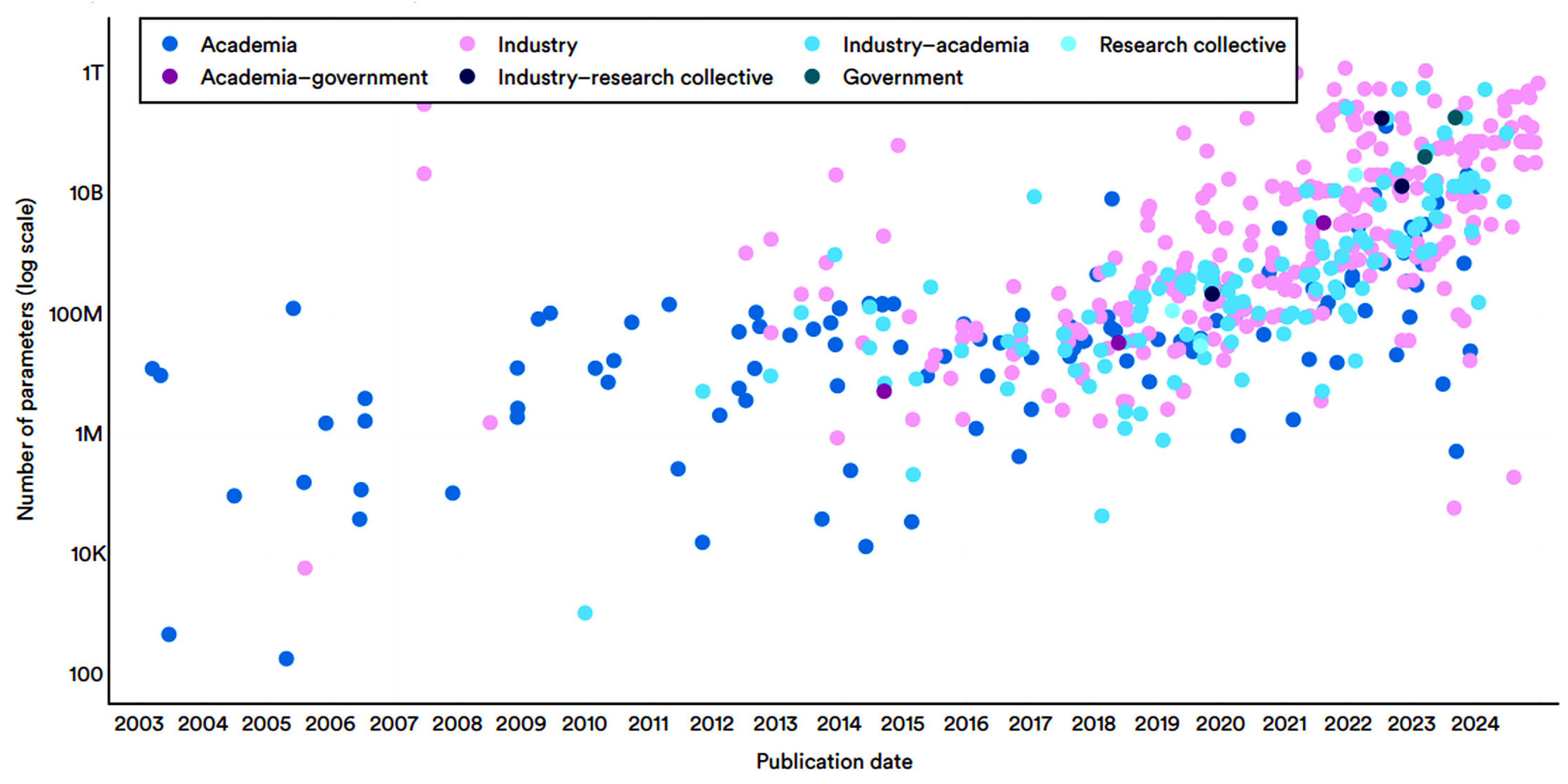

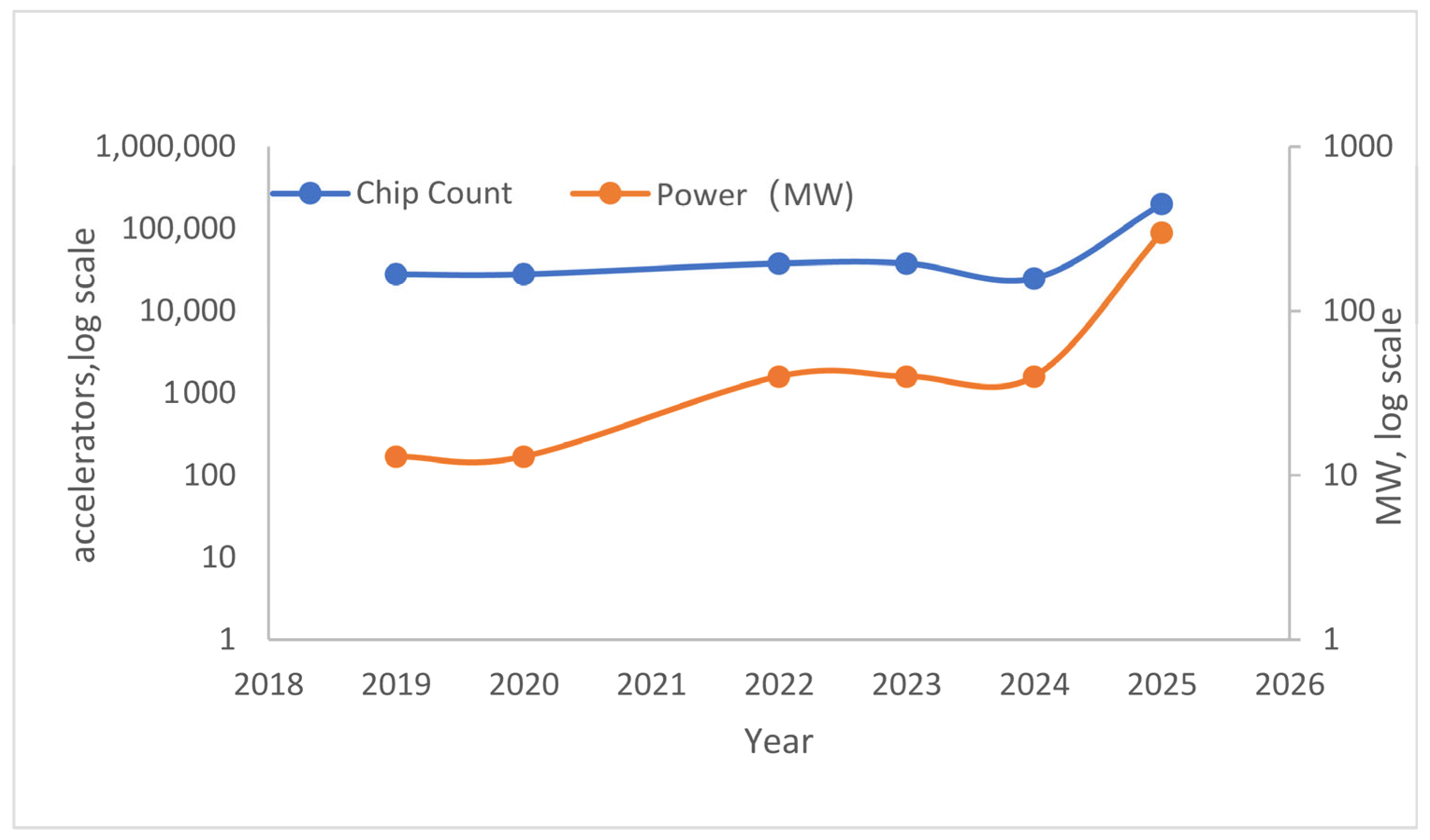

2.1. Exponentially Increasing Demands on Software and Hardware Resources for AI

| Model Name | Parameters (B) | Training Hardware Used | Estimated Equivalent A100 GPUs |
|---|---|---|---|
| AlexNet (2012) | 0.06 | GTX 580 × 2 | ≈0.01 |
| BERT-Large (2018) | 0.34 | Tensor Processing Unit v3 × 64 | ≈4 |
| GPT-2 (2019) | 1.5 | V100 × hundreds | ≈50 |
| GPT-3 (2020) | 175 | V100 × 3640 | ≈2000 |
| GPT-4 (2023) | Not disclosed | A100 × 25,000+ | ≈25,000 |
| GPT-4 Turbo (2024) | Not disclosed; energy-first deployment (OpenAI, 2024) | H100/A100 hybrid deployment | Not disclosed (energy-first deployment) |

2.2. Cloud and Edge Computing Form a Trade-Off Solution for Existing AI Systems

2.2.1. Cloud Computing

- (i) Public Clouds. Platforms such as Amazon Web Services (AWS), Azure, and Google Cloud require no local infrastructure, provide elastic scalability, and adopt a pay-as-you-go model. These platforms form the foundation of large-scale AI deployment, supporting services such as GPT-4 and Google Bard. However, they suffer from limited data privacy [36], latency issues, and reduced customization due to shared infrastructure. This classification and definition were formally provided by the National Institute of Standards and Technology (NIST) [41];

- (ii) Private Clouds. Represented by IBM Cloud Private and OpenStack, private clouds offer enhanced security and customization, making them suitable for sensitive sectors such as finance, healthcare, and government. Typical applications include medical image analysis and banking risk models. However, they involve high construction and maintenance costs [37];

- (iii) Hybrid Clouds. Solutions such as Azure Stack and Amazon Outposts enable flexible switching between local and cloud environments. These architectures are often used in “cloud-based training and on-premises deployment” models, particularly for industrial AI and autonomous driving. Yet, their management complexity remains a major challenge;

- (iv) Community Clouds. Typically used in consortia involving healthcare, research, or public institutions, community clouds support resource sharing and cost distribution across multiple organizations. Representative applications include federated diagnostic model training within healthcare alliances or GPU sharing among universities. However, their user scope is limited, and governance issues remain significant [38];

- (v) Edge Clouds. Platforms such as NVIDIA Edge Computing Platform (EGX), Huawei Cloud Edge, and edge clouds operated by telecom carriers emphasize local processing to reduce backhaul bandwidth consumption, thereby achieving low latency [12]. This concept has already been incorporated into discussions of edge intelligence [19,42].

2.2.2. Edge Computing

- (i) Fog Computing. Proposed and formalized by Cisco, fog computing emphasizes pushing computation and storage downward to network-layer nodes closer to the data source, thereby achieving lower latency and higher reliability [45];

- (ii) Cloudlet Computing. Introduced by Satyanarayanan, this concept involves deploying small-scale virtualized data centers near wireless access points, enabling mobile devices to access low-latency computational support over wireless connections [46];

- (iii) Mobile Edge Computing (MEC). Standardized by the European Telecommunications Standards Institute (ETSI), MEC features deep integration with telecommunication access networks. Typical applications include vehicular networks, emergency response systems, and the industrial IoT [47] (ETSI MEC White Paper, 2014/2019/2022);

- (iv) Micro Data Centers (MDCs). Designed to replicate full data center functionality at the edge, MDCs are suitable for high-investment scenarios such as industrial automation and remote environmental monitoring [42].

2.3. AI Miniaturization Strategies and Technical Pathways

2.3.1. Major Strategies for AI Miniaturization

- (1) Redundancy Reduction

- (i) Model Pruning [52]. By removing unimportant neurons or connections, pruning reduces model size. Han et al. introduced a three-step approach consisting of pruning, quantization, and Huffman coding, achieving up to a 49× compression ratio on CNNs. However, pruning remains challenging in large-scale transformer models. More recently, movement pruning was proposed to select sparsity patterns adaptively during fine-tuning, based on how weights move during training rather than on their magnitude alone, achieving significantly greater sparsity while maintaining a comparable level of accuracy. This method has become representative of adaptive pruning [55];

- (ii) Quantization. Reducing parameter precision from 32-bit to 8-bit or lower can significantly decrease storage and computational overhead [56]. Representative approaches include INT8 quantization combined with quantization-aware training (QAT), which maintains accuracy while achieving acceleration and compression [56]. More recently, FP8 formats have been adopted for training and inference, reducing bandwidth and memory usage while improving throughput [57]. Post-training methods for large language models (LLMs) push further: SmoothQuant (2023) enables 8-bit quantization of both weights and activations, while 4-bit weight-only methods such as Activation-aware Weight Quantization (AWQ) [58] and GPTQ [59] compress parameters further while preserving robustness, making the deployment of LLMs on consumer-grade GPUs feasible;

- (iii) Low-Rank Decomposition [60]. This technique approximates weight tensors through matrix factorization, thereby reducing computational complexity. It is commonly applied to compress fully connected layers or attention weights;

- (iv) Lightweight Architecture Design [61]. Models such as MobileNet and ShuffleNet, which are based on depthwise separable convolutions or channel shuffling, are specifically designed for mobile deployment.

- (2) Knowledge Transfer

- (i) Knowledge Distillation [53]. Small models are trained to mimic the outputs of larger models. Hinton et al. first proposed the use of “soft labels” to transfer knowledge effectively;

- (ii) Parameter Sharing [62]. Sharing weights across related tasks reduces the total number of parameters, thereby improving efficiency;

- (iii) Transfer Learning. Cross-domain applications are enabled by fine-tuning pretrained models or extracting transferable features;

- (iv) Parameter-Efficient Fine-Tuning. Recent methods such as Low-Rank Adaptation (LoRA) [63] freeze the base model weights while introducing only low-rank adaptation matrices, significantly reducing the number of trainable parameters. Building on this, Quantized Low-Rank Adaptation (QLoRA) [64] combines 4-bit weight quantization with the LoRA paradigm, enabling the efficient fine-tuning of large models even in single-GPU or low-memory environments. These approaches have become critical techniques for adapting large models to resource-constrained platforms.
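The "soft label" idea behind knowledge distillation can be made concrete with a short numerical sketch. The code below is an illustration under stated assumptions, not the training recipe of any cited work: the function names, the example logits, and the temperature values are hypothetical, and only the temperature-softened softmax and the T² scaling of the loss follow the formulation commonly attributed to Hinton et al. [53].

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature flattens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft labels from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

# Hypothetical teacher logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
hard_like = softmax(teacher, temperature=1.0)  # close to a one-hot label
soft = softmax(teacher, temperature=4.0)       # exposes inter-class similarity

untrained_student = [0.0, 0.0, 0.0]
loss_before = distillation_loss(untrained_student, teacher)
loss_matched = distillation_loss(teacher, teacher)
```

Raising the temperature spreads probability mass over the non-target classes, which is the extra signal the student learns from; in practice this term is usually combined with an ordinary cross-entropy loss on the ground-truth labels.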

- (3) Hardware–Software Co-Design

- (i) Neural Architecture Search [65]. NAS automates the design of model architectures under hardware constraints. For example, EfficientNet employs a compound scaling strategy to balance depth, width, and resolution, achieving both efficiency and accuracy;

- (ii) Computation Graph Compilation Optimization [48]. At the framework level, Tensor Virtual Machine (TVM) [48] enables end-to-end operator generation and tuning; Open Neural Network Exchange (ONNX) Runtime [55] and Accelerated Linear Algebra (XLA) [66] accelerate inference through graph fusion and cross-hardware optimization; and NVIDIA TensorRT further leverages operator-level optimizations on GPUs to deliver low latency and high throughput;

- (iii) Deployment-Specific Optimizations [54]. These include caching strategies, batch-size tuning, and operator fusion. More recently, research has proposed KV cache compression and quantization methods. For instance, ZipCache [67] employs saliency-based selection to reduce redundant cache entries, significantly lowering memory footprint while preserving accuracy in long-context inference.

- (4) Integration of Strategies
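As a minimal sketch of how two redundancy-reduction strategies can be chained, the example below applies magnitude pruning followed by symmetric per-tensor INT8 quantization to a small weight vector. It is a simplified illustration, not the Deep Compression pipeline of Han et al. [52], which uses trained quantization and Huffman coding; the function names, the weight values, and the 50% sparsity target are assumptions chosen for readability.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    dropped = set(order[:k])
    return [0.0 if i in dropped else w for i, w in enumerate(weights)]

def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [scale * v for v in q]

# Hypothetical weights of a single small layer.
weights = [0.82, -0.03, 0.41, 0.007, -1.27, 0.15, -0.56, 0.02]

pruned = magnitude_prune(weights, sparsity=0.5)  # step 1: remove small weights
q, scale = quantize_int8(pruned)                 # step 2: store survivors as INT8
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
```

Here half of the entries become exact zeros, which a sparse format can skip, and the remaining values need one byte each plus a single shared scale. Real deployments typically fine-tune after pruning and use per-channel scales, both of which are omitted here.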

2.3.2. Major Directions/Scenarios for AI Miniaturization

- (1) Energy-First Systems

- (i) Early Exit. Terminates inference once a target confidence level is reached to avoid redundant computation [70];

- (ii) Cascade Detectors. Use lightweight models for initial screening, followed by more complex models only when necessary [25];

- (iii) Adaptive Sampling and Hierarchical Pipelines. Reduce transmission frequency and bandwidth consumption by structuring layered processing [19];

- (iv) Dynamic Voltage and Frequency Scaling (DVFS)-aware Scheduling. Dynamically adjusts voltage and frequency to reduce power consumption [42];

- (v) Small Context Windows. Restrict context length in language tasks to reduce memory and computational overhead [64].
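The early-exit item above can be sketched as a loop that stops as soon as an intermediate classifier is confident enough. This is a toy illustration under stated assumptions: `make_stage`, the gain of 2.0, the inputs, and the 0.9 threshold are hypothetical, and each "stage" simply scales its input and reuses the result as logits so that the control flow stays visible.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def run_with_early_exit(x, stages, threshold=0.9):
    """Run stages in order and stop once an exit head is confident enough.

    Each stage maps hidden features to (new_hidden, exit_logits).
    Returns (predicted_class, confidence, number_of_stages_executed).
    """
    hidden = x
    for depth, stage in enumerate(stages, start=1):
        hidden, logits = stage(hidden)
        probs = softmax(logits)
        confidence = max(probs)
        if confidence >= threshold:
            break  # later stages are never executed for this input
    return probs.index(confidence), confidence, depth

def make_stage(gain):
    """Toy stage: scales the features and uses them directly as exit logits."""
    def stage(hidden):
        scaled = [gain * h for h in hidden]
        return scaled, scaled
    return stage

stages = [make_stage(2.0), make_stage(2.0), make_stage(2.0)]

easy_result = run_with_early_exit([3.0, 0.0, 0.0], stages)  # clear-cut input
hard_result = run_with_early_exit([0.4, 0.3, 0.0], stages)  # ambiguous input
```

The clear-cut input leaves after the first stage, while the ambiguous one runs all three, so the average cost depends on how many inputs are easy. The threshold trades accuracy against energy and would normally be calibrated on held-out data.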

- (2) Performance-First Systems

- (i) Split Computing. Partitions the model so that the early layers are deployed on the device side, while subsequent layers are executed in the cloud [23];

- (ii) Near-End Refinement. Edge devices perform low-precision inference, while cloud servers provide high-precision correction [75];

- (iii) Speculative Decoding. Uses a lightweight draft model to propose several candidate tokens, which the full model then verifies in parallel, reducing response latency [76];

- (iv) Key–Value (KV) Cache Reuse. Reduces redundant computation in LLMs during long-context tasks [67];

- (v) Micro-Batching. Improves throughput and hardware utilization by running small-batch parallel workloads. While widely adopted in cloud-based GPU scenarios, its application to resource-constrained edge devices requires further exploration.

Performance-first systems can be found in the following cases.
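To illustrate one of the performance-first techniques listed above, the following sketch implements a greedy form of speculative decoding with toy next-token functions. It is a hedged illustration, not the method of EdgeLLM [76]: `target_next`, `draft_next`, the integer "tokens", and the draft length `k` are all hypothetical, and the verification loop stands in for what a real system would do in a single batched forward pass of the large model.

```python
def speculative_decode(target_next, draft_next, prompt, num_new_tokens, k=4):
    """Greedy speculative decoding with a cheap draft and an expensive target.

    Both model arguments map a token list to the next token.
    Returns (tokens, number_of_target_verification_passes).
    """
    tokens = list(prompt)
    goal = len(prompt) + num_new_tokens
    target_passes = 0
    while len(tokens) < goal:
        # 1) The draft model proposes k tokens autoregressively.
        proposal = []
        for _ in range(k):
            proposal.append(draft_next(tokens + proposal))
        # 2) The target checks every proposed position (one batched pass in practice).
        target_passes += 1
        accepted = []
        for i in range(k):
            expected = target_next(tokens + proposal[:i])
            if proposal[i] == expected:
                accepted.append(proposal[i])
            else:
                accepted.append(expected)  # target's token replaces the first mismatch
                break
        else:
            # All k drafts accepted: the same pass also yields one extra token.
            accepted.append(target_next(tokens + proposal))
        tokens.extend(accepted)
    return tokens[:goal], target_passes

def target_next(tokens):
    """Toy 'large model': a deterministic rule on the last token."""
    return (tokens[-1] * 3 + 1) % 10

def draft_next(tokens):
    """Toy 'small model': agrees with the target except after token 3."""
    return 9 if tokens[-1] == 3 else (tokens[-1] * 3 + 1) % 10

output, passes = speculative_decode(target_next, draft_next, [1], num_new_tokens=8)

# Reference: plain greedy decoding with the target model only.
reference = [1]
for _ in range(8):
    reference.append(target_next(reference))
```

With greedy acceptance the output matches target-only decoding, but the eight new tokens need fewer target passes than one-token-at-a-time generation. The gain depends on how often the draft agrees with the target.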

3. Discussion and Recommendations

3.1. AI Miniaturization as a Key Step in AI Development

3.2. AI Miniaturization: A Natural Evolution Rather than a Mere Compromise

3.3. Trends in AI Miniaturization: Insights from the Evolution of Embedded Systems

3.4. Reflections on AI Miniaturization, Productization, and Implementation Pathways

3.4.1. The Expanding Role of AI Through Miniaturization

3.4.2. Not Just Making AI Smaller, but Making AI-Driven Devices Lighter

3.4.3. Co-Evolution of AI Chips and Models: Computation as an Endogenous Structural Resource

3.4.4. Ecological Symbiosis of AI Models: Integrating Local Intelligence with Global Optimization

3.5. Methodological Framework and Implementation Pathways

- (i) Profiling (Performance and Energy Characterization). Establishes quantitative profiles of performance, latency, energy consumption, and memory footprint;

- (ii) Partitioning (Computation and Communication Allocation). Divides computational tasks and communication loads across cloud, edge, and device layers according to system constraints;

- (iii) Optimization (Constraint-Driven Combinatorial Tuning). Applies joint optimization under multi-dimensional constraints, balancing accuracy, latency, energy efficiency, and memory usage;

- (iv) Validation (Standardized Verification). Conducts standardized evaluation and benchmarking to ensure replicability, reliability, and fairness across deployment environments.

3.5.1. Profiling (Performance and Energy Characterization)

- (i) Computational Overhead. The computational load and inference latency of individual layers or operators;

- (ii) Memory Footprint. Peak memory usage of model weights and intermediate activations;

- (iii) Communication Performance. Uplink and downlink bandwidth, as well as round-trip latency;

- (iv) Energy Distribution. Power consumption and runtime of different modules.
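A minimal profiling harness for the quantities listed above might look as follows. It is a sketch under stated assumptions: the two stage functions are toy stand-ins for model operators, `assumed_power_watts` is a hypothetical constant (real energy profiling needs a power meter or on-board counters), and `tracemalloc` only sees Python-level allocations, not accelerator memory.

```python
import time
import tracemalloc

def profile_stage(name, fn, x, repeats=5, assumed_power_watts=2.0):
    """Measure mean latency and peak Python memory of one stage; estimate energy."""
    # Timing pass, kept separate so memory tracing does not distort latency.
    start = time.perf_counter()
    for _ in range(repeats):
        out = fn(x)
    latency_s = (time.perf_counter() - start) / repeats

    # Memory pass: peak bytes allocated while the stage runs once.
    tracemalloc.start()
    fn(x)
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    report = {
        "stage": name,
        "latency_s": latency_s,
        "peak_bytes": peak_bytes,
        # Energy = power x time, with power an ASSUMED constant, not a measurement.
        "energy_j": assumed_power_watts * latency_s,
    }
    return report, out

def dense_like(x):
    """Toy stand-in for a compute-heavy operator (sliding-window sums)."""
    return [sum(x[i:i + 8]) for i in range(len(x))]

def relu_like(x):
    """Toy stand-in for a cheap element-wise operator."""
    return [v if v > 0 else 0.0 for v in x]

x = [float(i % 7 - 3) for i in range(20000)]
reports = []
for name, fn in [("dense_like", dense_like), ("relu_like", relu_like)]:
    report, x = profile_stage(name, fn, x)
    reports.append(report)
```

Per-stage reports of this kind are what the later partitioning and optimization steps consume; communication performance (bandwidth and round-trip latency) would be measured separately on the target network.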

3.5.2. Partitioning (Computation and Communication Allocation)

- (i) Device–Cloud Split Computing. Partitioning model layers between local devices and the cloud;

- (ii) Cascade/Two-Stage Filtering. Lightweight local models perform preliminary screening before invoking more complex remote models;

- (iii) Edge-Side Refinement. Performing low-precision inference at the edge, with cloud servers providing high-precision correction;

- (iv) Streaming or Batch Transmission. Selecting transmission modes depending on bandwidth and latency constraints.
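For device–cloud split computing, the choice of split point can be framed as a small search over profiled costs. The sketch below assumes a simple additive latency model (device compute + transfer + cloud compute) and uses hypothetical per-layer numbers; `best_split` and all parameter names are illustrative, not taken from the reviewed works.

```python
def best_split(device_ms, cloud_ms, boundary_kb, uplink_kb_per_ms, rtt_ms):
    """Pick the number of leading layers to run on the device.

    device_ms[i] / cloud_ms[i]: latency of layer i on the device / in the cloud.
    boundary_kb[s]: data sent if the first s layers run locally (s = 0 is the raw input).
    Returns (split_index, total_latency_ms); split_index == len(device_ms) means fully local.
    """
    n = len(device_ms)
    best = None
    for s in range(n + 1):
        local = sum(device_ms[:s])
        if s < n:
            transfer = boundary_kb[s] / uplink_kb_per_ms + rtt_ms
            remote = sum(cloud_ms[s:])
        else:
            transfer = remote = 0.0  # nothing leaves the device
        total = local + transfer + remote
        if best is None or total < best[1]:
            best = (s, total)
    return best

# Hypothetical profile of a four-layer model.
device_ms = [4, 6, 30, 60]        # later layers are expensive on the device
cloud_ms = [1, 1, 2, 4]
boundary_kb = [600, 300, 50, 40]  # activations shrink after the early layers

fast_link = best_split(device_ms, cloud_ms, boundary_kb, uplink_kb_per_ms=5.0, rtt_ms=20.0)
slow_link = best_split(device_ms, cloud_ms, boundary_kb, uplink_kb_per_ms=0.5, rtt_ms=20.0)
```

With the faster link the best choice is to run two layers locally and offload the rest once the activations are small; with the slower link the transfer cost dominates and fully local execution wins. An energy-first variant would minimize device energy instead of latency using the same structure.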

3.5.3. Optimization (Method Mapping and Combination)

- (i) Latency Reduction. Compiler and runtime optimizations [81], operator fusion, speculative decoding, and parallel or pipelined execution;

- (ii) Energy Reduction. Early exiting, adaptive sampling and hierarchical pipelines, DVFS scheduling, and compressed or pruned data transmission;

- (iii) Memory Footprint Reduction. Low-bit quantization (INT8, FP8, 4-bit), cache compression or quantization, and activation checkpointing;

- (iv) Model Size Reduction. Structured or movement pruning, knowledge distillation, low-rank decomposition, and parameter-efficient fine-tuning methods such as LoRA/QLoRA;

- (v) Collaborative Optimization. Device–cloud partitioning, near-end refinement, and cache reuse.
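Combining these methods under constraints can be sketched as a small search over candidate configurations. Every number below is hypothetical and serves only to show the selection logic; the assumption that latency and memory factors multiply while accuracy changes add is a deliberate simplification, and real combinations should be re-profiled rather than predicted.

```python
from itertools import product

# Hypothetical baseline and per-technique effects (illustrative, NOT measurements).
BASELINE = {"accuracy": 90.0, "latency_ms": 100.0, "memory_mb": 800.0}

PRECISION = {
    "fp16": {"acc_delta": 0.0, "lat_factor": 1.0, "mem_factor": 1.0},
    "int8": {"acc_delta": -0.5, "lat_factor": 0.6, "mem_factor": 0.5},
    "int4": {"acc_delta": -2.0, "lat_factor": 0.5, "mem_factor": 0.25},
}
PRUNING = {
    "none": {"acc_delta": 0.0, "lat_factor": 1.0, "mem_factor": 1.0},
    "50%": {"acc_delta": -1.0, "lat_factor": 0.7, "mem_factor": 0.6},
}

def evaluate(precision, pruning):
    """Predict metrics for one combination under the simplified composition model."""
    p, s = PRECISION[precision], PRUNING[pruning]
    return {
        "precision": precision,
        "pruning": pruning,
        "accuracy": BASELINE["accuracy"] + p["acc_delta"] + s["acc_delta"],
        "latency_ms": BASELINE["latency_ms"] * p["lat_factor"] * s["lat_factor"],
        "memory_mb": BASELINE["memory_mb"] * p["mem_factor"] * s["mem_factor"],
    }

def select(max_latency_ms, max_memory_mb):
    """Return the most accurate configuration meeting both constraints, or None."""
    candidates = (evaluate(p, s) for p, s in product(PRECISION, PRUNING))
    feasible = [
        c for c in candidates
        if c["latency_ms"] <= max_latency_ms and c["memory_mb"] <= max_memory_mb
    ]
    return max(feasible, key=lambda c: c["accuracy"]) if feasible else None

best = select(max_latency_ms=50.0, max_memory_mb=300.0)
infeasible = select(max_latency_ms=10.0, max_memory_mb=10.0)
```

Under these assumed numbers, INT8 with 50% pruning is the most accurate option that fits both budgets. Exhaustive enumeration is adequate for a handful of techniques; larger design spaces would call for heuristic or learned search.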

3.5.4. Validation (Verification and Regression)

- (i) Model Accuracy. Metrics such as classification accuracy and detection precision;

- (ii) Latency. Not only mean latency but also tail latency measures such as P95;

- (iii) Energy Consumption per Inference. The energy required for an inference pass;

- (iv) Peak Memory Usage. Maximum memory footprint during execution;

- (v) Stability. Indicators such as thermal behavior, throttling events, and error rates;

- (vi) Cost. Both hardware acquisition and operational expenses.
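Several of these validation metrics reduce to simple arithmetic over logged measurements. The sketch below computes mean and P95 latency (nearest-rank definition) and energy per inference, then flags regressions against a baseline. The sample values, the 5% tolerance, and the function names are assumptions for illustration; accuracy is left out because it is a higher-is-better metric and would need the opposite comparison.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(latencies_ms, total_energy_j, peak_mem_mb):
    """Aggregate raw measurements into lower-is-better validation metrics."""
    n = len(latencies_ms)
    return {
        "mean_ms": sum(latencies_ms) / n,
        "p95_ms": percentile(latencies_ms, 95),
        "energy_per_inference_j": total_energy_j / n,
        "peak_mem_mb": peak_mem_mb,
    }

def regressions(candidate, baseline, tolerance=0.05):
    """List metrics where the candidate is worse than baseline by more than `tolerance`."""
    return [k for k in baseline if candidate[k] > baseline[k] * (1 + tolerance)]

# Hypothetical run: 18 fast inferences and 2 slow ones (e.g., thermal throttling).
latencies_ms = [10] * 18 + [40, 45]
summary = summarize(latencies_ms, total_energy_j=10.0, peak_mem_mb=190)

baseline = {
    "mean_ms": 13.0,
    "p95_ms": 30,
    "energy_per_inference_j": 0.5,
    "peak_mem_mb": 200,
}
flagged = regressions(summary, baseline)
```

In this example the mean latency stays within tolerance while the P95 value exposes the slow tail, which is why tail measures are reported alongside the mean.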

3.6. Design Checklist

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| AWQ | Activation-aware Weight Quantization |
| AWS | Amazon Web Services |
| CV | Computer Vision |
| DVFS | Dynamic Voltage and Frequency Scaling |
| EGX | NVIDIA Edge Computing Platform |
| ETSI | European Telecommunications Standards Institute |
| FLOPs | Floating-Point Operations |
| FP16 | 16-bit Floating Point |
| FP8 | 8-bit Floating Point |
| GFLOPs | Giga Floating-Point Operations |
| GPT | Generative Pre-trained Transformer |
| GPU | Graphics Processing Unit |
| HAR | Human Activity Recognition |
| INT8 | 8-bit Integer |
| IoT | Internet of Things |
| KV Cache | Key–Value Cache |
| LLM | Large Language Model |
| LoRA | Low-Rank Adaptation |
| MDC | Micro Data Center |
| MEC | Mobile Edge Computing |
| NAS | Neural Architecture Search |
| NIST | National Institute of Standards and Technology |
| NLP | Natural Language Processing |
| OFA | Once-for-All |
| ONNX | Open Neural Network Exchange |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| QAT | Quantization-Aware Training |
| QLoRA | Quantized Low-Rank Adaptation |
| TensorRT | NVIDIA TensorRT |
| TVM | Tensor Virtual Machine |
| XGBoost | eXtreme Gradient Boosting |
| XLA | Accelerated Linear Algebra |
| YOLO | You Only Look Once |

Appendix A

Appendix A.1. PRISMA Flow Diagram

Appendix A.2. Data and Normalization Disclosure

- (1) Data Sources

- (2) Definitions and Normalization

- (3) Estimation vs. Reported Values

- (4) Uncertainty and Caveats

References

- McCarthy, J.; Minsky, M.L.; Rochester, N.; Shannon, C.E. A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence. AI Mag. 2006, 27, 12–14. [Google Scholar] [CrossRef]

- Brynjolfsson, E.; Rock, D.; Syverson, C. Artificial Intelligence and the Modern Productivity Paradox: A Clash of Expectations and Statistics; National Bureau of Economic Research: Cambridge, MA, USA, 2017; w24001. [Google Scholar]

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774. [Google Scholar] [CrossRef]

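- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 770–778. [Google Scholar]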

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788. [Google Scholar]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929. [Google Scholar]

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. [Google Scholar]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training; OpenAI Technical Report; 2018; Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 11 October 2025).

- Jumper, J.; Evans, R.; Pritzel, A.; Green, T.; Figurnov, M.; Ronneberger, O.; Tunyasuvunakool, K.; Bates, R.; Žídek, A.; Potapenko, A.; et al. Highly Accurate Protein Structure Prediction with AlphaFold. Nature 2021, 596, 583–589. [Google Scholar] [CrossRef]

- Strubell, E.; Ganesh, A.; McCallum, A. Energy and Policy Considerations for Deep Learning in NLP. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019; pp. 3645–3650. [Google Scholar]

- Gonzales, J.T. Implications of AI Innovation on Economic Growth: A Panel Data Study. J. Econ. Struct. 2023, 12, 13. [Google Scholar] [CrossRef]

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, Toronto, ON, Canada, 3–10 March 2021; ACM: New York, NY, USA, 2021; pp. 610–623. [Google Scholar]

- Vinuesa, R.; Azizpour, H.; Leite, I.; Balaam, M.; Dignum, V.; Domisch, S.; Felländer, A.; Langhans, S.D.; Tegmark, M.; Fuso Nerini, F. The Role of Artificial Intelligence in Achieving the Sustainable Development Goals. Nat. Commun. 2020, 11, 233. [Google Scholar] [CrossRef]

- OECD. Measuring the Environmental Impacts of Artificial Intelligence Compute and Applications: The AI Footprint; OECD Digital Economy Papers. No. 341; OECD Publishing: Paris, France, 2022. [Google Scholar] [CrossRef]

- Sinha, S. State of IoT 2024: Number of Connected IoT Devices Growing 13% to 18.8 Billion Globally. IoT Anal. 2024. Available online: https://iot-analytics.com/number-connected-iot-devices/ (accessed on 9 October 2025).

- Hammi, B.; Khatoun, R.; Zeadally, S.; Fayad, A.; Khoukhi, L. IoT Technologies for Smart Cities. IET Netw. 2018, 7, 1–13. [Google Scholar] [CrossRef]

- Gubbi, J.; Buyya, R.; Marusic, S.; Palaniswami, M. Internet of Things (IoT): A Vision, Architectural Elements, and Future Directions. Future Gener. Comput. Syst. 2013, 29, 1645–1660. [Google Scholar] [CrossRef]

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge Computing: Vision and Challenges. IEEE Internet Things J. 2016, 3, 637–646. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, X.; Li, E.; Zeng, L.; Luo, K.; Zhang, J. Edge Intelligence: Paving the Last Mile of Artificial Intelligence with Edge Computing. Proc. IEEE 2019, 107, 1738–1762. [Google Scholar] [CrossRef]

- Mosenia, A.; Jha, N.K. A Comprehensive Study of Security of Internet-of-Things. IEEE Trans. Emerg. Top. Comput. 2017, 5, 586–602. [Google Scholar] [CrossRef]

- Li, H.; Ota, K.; Dong, M. Learning IoT in Edge: Deep Learning for the Internet of Things with Edge Computing. IEEE Netw. 2018, 32, 96–101. [Google Scholar] [CrossRef]

- Duan, Q.; Huang, J.; Hu, S.; Deng, R.; Lu, Z.; Yu, S. Combining Federated Learning and Edge Computing Toward Ubiquitous Intelligence in 6G Network: Challenges, Recent Advances, and Future Directions. IEEE Commun. Surv. Tutor. 2023, 25, 2892–2950. [Google Scholar] [CrossRef]

- Wang, X.; Tang, Z.; Guo, J.; Meng, T.; Wang, C.; Wang, T.; Jia, W. Empowering Edge Intelligence: A Comprehensive Survey on On-Device AI Models. ACM Comput. Surv. 2025, 57, 1–39. [Google Scholar] [CrossRef]

- Dantas, P.V.; Sabino Da Silva, W.; Cordeiro, L.C.; Carvalho, C.B. A Comprehensive Review of Model Compression Techniques in Machine Learning. Appl. Intell. 2024, 54, 11804–11844. [Google Scholar] [CrossRef]

- Liu, D.; Zhu, Y.; Liu, Z.; Liu, Y.; Han, C.; Tian, J.; Li, R.; Yi, W. A Survey of Model Compression Techniques: Past, Present, and Future. Front. Robot. AI 2025, 12, 1518965. [Google Scholar] [CrossRef]

- Deng, S.; Zhao, H.; Fang, W.; Yin, J.; Dustdar, S.; Zomaya, A.Y. Edge Intelligence: The Confluence of Edge Computing and Artificial Intelligence. IEEE Internet Things J. 2020, 7, 7457–7469. [Google Scholar] [CrossRef]

- Chéour, R.; Jmal, M.W.; Khriji, S.; El Houssaini, D.; Trigona, C.; Abid, M.; Kanoun, O. Towards Hybrid Energy-Efficient Power Management in Wireless Sensor Networks. Sensors 2021, 22, 301. [Google Scholar] [CrossRef]

- Maslej, N. Artificial Intelligence Index Report 2025. arXiv 2025, arXiv:2504.07139. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Curran Associates, Inc.: New York, NY, USA, 2012; Volume 25. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners; OpenAI Technical Report; OpenAI: San Francisco, CA, USA, 2019; Available online: https://cdn.openai.com/better-language-models/language_models_are_unsupervised_multitask_learners.pdf (accessed on 11 October 2025).

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901. [Google Scholar]

- The 2025 AI Index Report|Stanford HAI. Available online: https://hai.stanford.edu/ai-index/2025-ai-index-report (accessed on 24 September 2025).

- Kaplan, J.; McCandlish, S.; Henighan, T.; Brown, T.B.; Chess, B.; Child, R.; Gray, S.; Radford, A.; Wu, J.; Amodei, D. Scaling Laws for Neural Language Models. arXiv 2020, arXiv:2001.08361. [Google Scholar] [CrossRef]

- Van Der Vlist, F.; Helmond, A.; Ferrari, F. Big AI: Cloud Infrastructure Dependence and the Industrialisation of Artificial Intelligence. Big Data Soc. 2024, 11, 20539517241232630. [Google Scholar] [CrossRef]

- Zhang, Q.; Cheng, L.; Boutaba, R. Cloud Computing: State-of-the-Art and Research Challenges. J. Internet Serv. Appl. 2010, 1, 7–18. [Google Scholar] [CrossRef]

- Sikeridis, D.; Papapanagiotou, I.; Rimal, B.P.; Devetsikiotis, M. A Comparative Taxonomy and Survey of Public Cloud Infrastructure Vendors. Comput. Netw. 2020, 168, 107019. [Google Scholar]

- Srivastava, P.; Khan, R. A Review Paper on Cloud Computing. Int. J. Adv. Res. Comput. Sci. Softw. Eng. 2017, 7, 362–365. [Google Scholar] [CrossRef]

- Goyal, S. Public vs Private vs. Hybrid vs. Community—Cloud Computing: A Critical Review. Int. J. Comput. Netw. Inf. Secur. 2014, 6, 20–29. [Google Scholar] [CrossRef]

- Moghaddam, S.M.S.H.; Dashtdar, M.; Jafari, H. AI Applications in Smart Cities’ Energy Systems Automation. Repa Proceeding Ser. 2022, 3, 1–5. [Google Scholar] [CrossRef]

- Sun, L.; Jiang, X.; Ren, H.; Guo, Y. Edge-Cloud Computing and Artificial Intelligence in Internet of Medical Things: Architecture, Technology and Application. IEEE Access 2020, 8, 101079–101092. [Google Scholar] [CrossRef]

- Mell, P.; Grance, T. The NIST Definition of Cloud Computing; National Institute of Standards and Technology (NIST): Gaithersburg, MD, USA, 2011; p. 7. [Google Scholar]

- Iftikhar, S.; Gill, S.S.; Song, C.; Xu, M.; Aslanpour, M.S.; Toosi, A.N.; Du, J.; Wu, H.; Ghosh, S.; Chowdhury, D.; et al. AI-Based Fog and Edge Computing: A Systematic Review, Taxonomy and Future Directions. Internet Things 2023, 21, 100674. [Google Scholar] [CrossRef]

- Admin, L.; Elmirghani, J. A Survey of Big Data Machine Learning Applications Optimization in Cloud Data Centers and Networks. Appl. Sci. 2021, 11, 7291. [Google Scholar] [CrossRef]

- Khan, W.Z.; Ahmed, E.; Hakak, S.; Yaqoob, I.; Ahmed, A. Edge Computing: A Survey. Future Gener. Comput. Syst. 2019, 97, 219–235. [Google Scholar] [CrossRef]

- Bonomi, F.; Milito, R.; Zhu, J.; Addepalli, S. Fog Computing and Its Role in the Internet of Things. In Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012; ACM: New York, NY, USA, 2012; pp. 13–16. [Google Scholar]

- Satyanarayanan, M.; Bahl, P.; Caceres, R.; Davies, N. The Case for VM-Based Cloudlets in Mobile Computing. IEEE Pervasive Comput. 2009, 8, 14–23. [Google Scholar] [CrossRef]

- Welcome to the World of Standards! Available online: https://www.etsi.org/ (accessed on 26 September 2025).

- Chen, T.; Moreau, T.; Jiang, Z.; Zheng, L.; Yan, E.; Cowan, M.; Shen, H.; Wang, L.; Hu, Y.; Ceze, L.; et al. TVM: An Automated End-to-End Optimizing Compiler for Deep Learning. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–10 October 2018; USENIX Association: Berkeley, CA, USA, 2018; pp. 578–594. [Google Scholar]

- Pilz, K.F.; Sanders, J.; Rahman, R.; Heim, L. Trends in AI Supercomputers. arXiv 2025, arXiv:2504.16026. [Google Scholar] [CrossRef]

- Mishra, A.; Nurvitadhi, E.; Cook, J.J.; Marr, D. Wrpn: Wide Reduced-Precision Networks. arXiv 2018, arXiv:1709.01134. [Google Scholar]

- Stiefel, K.S.; Coggan, J. The Energy Challenges of Artificial Superintelligence. Front. Artif. Intell. 2022, 5, 1–5. [Google Scholar] [CrossRef]

- Han, S.; Mao, H.; Dally, W.J. Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv 2016, arXiv:1510.00149. [Google Scholar] [CrossRef]

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531. [Google Scholar] [CrossRef]

- Ren, A.; Zhang, T.; Ye, S.; Li, J.; Xu, W.; Qian, X.; Lin, X.; Wang, Y. ADMM-NN: An Algorithm-Hardware Co-Design Framework of DNNs Using Alternating Direction Methods of Multipliers. In Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, Providence, RI, USA, 13–17 April 2019; ACM: New York, NY, USA, 2019; pp. 925–938. [Google Scholar]

- Sanh, V.; Wolf, T.; Rush, A.M. Movement Pruning: Adaptive Sparsity by Fine-Tuning. Adv. Neural Inf. Process. Syst. 2020, 33, 20378–20389. [Google Scholar]

- Jacob, B.; Kligys, S.; Chen, B.; Zhu, M.; Tang, M.; Howard, A.; Adam, H.; Kalenichenko, D. Quantization and Training of Neural Networks for Efficient Integer-Arithmetic-Only Inference. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2704–2713. [Google Scholar]

- Micikevicius, P.; Stosic, D.; Burgess, N.; Cornea, M.; Dubey, P.; Grisenthwaite, R.; Ha, S.; Heinecke, A.; Judd, P.; Kamalu, J.; et al. FP8 Formats for Deep Learning. arXiv 2022, arXiv:2209.05433. [Google Scholar] [CrossRef]

- Lin, J.; Tang, J.; Tang, H.; Yang, S.; Xiao, G.; Han, S. AWQ: Activation-Aware Weight Quantization for On-Device LLM Compression and Acceleration. GetMobile Mob. Comput. Commun. 2025, 28, 12–17.

- Frantar, E.; Ashkboos, S.; Hoefler, T.; Alistarh, D. GPTQ: Accurate Post-Training Quantization for Generative Pre-Trained Transformers. arXiv 2023, arXiv:2210.17323.

- Denton, R.; Zaremba, W.; Bruna, J.; LeCun, Y.; Fergus, R. Exploiting Linear Structure Within Convolutional Networks for Efficient Evaluation. Adv. Neural Inf. Process. Syst. 2014, 27, 1269–1277.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861.

- Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-Supervised Learning of Language Representations. arXiv 2020, arXiv:1909.11942.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. ICLR 2022, 1, 3.

- Dettmers, T.; Pagnoni, A.; Holtzman, A.; Zettlemoyer, L. QLoRA: Efficient Finetuning of Quantized LLMs. Adv. Neural Inf. Process. Syst. 2023, 36, 10088–10115.

- Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2019, arXiv:1905.11946.

- Snider, D.; Liang, R. Operator Fusion in XLA: Analysis and Evaluation. arXiv 2023, arXiv:2301.13062.

- He, Y.; Zhang, L.; Wu, W.; Liu, J.; Zhou, H.; Zhuang, B. ZipCache: Accurate and Efficient KV Cache Quantization with Salient Token Identification. In Proceedings of the 38th Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 10–15 December 2024; Curran Associates, Inc.: New Orleans, LA, USA, 2024; Volume 37. forthcoming.

- Li, M.; Liu, Y.; Liu, X.; Sun, Q.; You, X.; Yang, H.; Luan, Z.; Gan, L.; Yang, G.; Qian, D. The Deep Learning Compiler: A Comprehensive Survey. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 708–727.

- Zhu, X.; Li, J.; Liu, Y.; Ma, C.; Wang, W. A Survey on Model Compression for Large Language Models. Trans. Assoc. Comput. Linguist. 2023, 12, 1556–1577.

- Jazbec, M.; Timans, A.; Veljkovic, T.H.; Sakmann, K.; Zhang, D.; Naesseth, C.A.; Nalisnick, E. Fast yet Safe: Early-Exiting with Risk Control. Adv. Neural Inf. Process. Syst. 2024, 37, 129825–129854.

- Lattanzi, E.; Contoli, C.; Freschi, V. Do We Need Early Exit Networks in Human Activity Recognition? Eng. Appl. Artif. Intell. 2023, 121, 106035.

- Yu, J.; Zhang, L.; Cheng, D.; Huang, W.; Wu, H.; Song, A. Improving Human Activity Recognition With Wearable Sensors Through BEE: Leveraging Early Exit and Gradient Boosting. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 3452–3464.

- Chen, R.; Wang, P.; Lin, B.; Wang, L.; Zeng, X.; Hu, X.; Yuan, J.; Li, J.; Ren, J.; Zhao, H. An Optimized Lightweight Real-Time Detection Network Model for IoT Embedded Devices. Sci. Rep. 2025, 15, 3839.

- Wan, S.; Li, S.; Chen, Z.; Tang, Y. An Ultrasonic-AI Hybrid Approach for Predicting Void Defects in Concrete-Filled Steel Tubes via Enhanced XGBoost with Bayesian Optimization. Case Stud. Constr. Mater. 2025, 22, e04359.

- Singh, R.; Gill, S.S. Edge AI: A Survey. Internet Things Cyber-Phys. Syst. 2023, 3, 71–92.

- Xu, D.; Yin, W.; Zhang, H.; Jin, X.; Zhang, Y.; Wei, S.; Xu, M.; Liu, X. EdgeLLM: Fast On-Device LLM Inference With Speculative Decoding. IEEE Trans. Mob. Comput. 2025, 24, 3256–3273.

- Chen, L.; Feng, D.; Feng, E.; Zhao, R.; Wang, Y.; Xia, Y.; Chen, H.; Xu, P. HeteroLLM: Accelerating Large Language Model Inference on Mobile SoCs Platform with Heterogeneous AI Accelerators. arXiv 2025, arXiv:2501.14794.

- Ulicny, C.; Rauch, R.; Gazda, J.; Becvar, Z. Split Computing in Autonomous Mobility for Efficient Semantic Segmentation Using Transformers. In Proceedings of the 2025 IEEE Symposia on Computational Intelligence for Energy, Transport and Environmental Sustainability (CIETES), Trondheim, Norway, 17–20 March 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–8.

- Li, B.; Wang, Y.; Ma, H.; Chen, L.; Xiao, J.; Wang, S. MobiLoRA: Accelerating LoRA-Based LLM Inference on Mobile Devices via Context-Aware KV Cache Optimization. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vienna, Austria, 27 July–1 August 2025; Association for Computational Linguistics: Stroudsburg, PA, USA, 2025; pp. 23400–23410.

- Hu, K.; Chen, Z.; Kang, H.; Tang, Y. 3D Vision Technologies for a Self-Developed Structural External Crack Damage Recognition Robot. Autom. Constr. 2024, 159, 105262.

- Xia, C.; Zhao, J.; Sun, Q.; Wang, Z.; Wen, Y.; Yu, T.; Feng, X.; Cui, H. Optimizing Deep Learning Inference via Global Analysis and Tensor Expressions. In Proceedings of the 29th ACM International Conference on Architectural Support for Programming Languages and Operating Systems, La Jolla, CA, USA, 27 April–1 May 2024; ACM: New York, NY, USA, 2024; Volume 1, pp. 286–301.

| Reference | Source | Coverage Theme | Main Contribution | Limitation |
|---|---|---|---|---|
| Zhou et al., 2019 [19] | Proc. IEEE | Edge intelligence architectures and applications | Systematic overview of edge intelligence and deployment architectures | Limited focus on model miniaturization strategies |
| Duan et al., 2023 [22] | IEEE COMST | Federated learning + edge computing | Analysis of collaborative intelligence in 6G and edge networks | Communication-oriented, little emphasis on AI miniaturization |
| Wang et al., 2025 [23] | ACM CSUR | On-device AI models | Comprehensive review of device-side model optimization and hardware acceleration | Lacks cross-layer/system-level perspective |
| Dantas et al., 2024 [24] | Applied Intelligence | Model compression review | Summarized pruning, quantization, distillation, etc. | Algorithm-level focused, limited discussion on system evolution |
| Liu et al., 2025 [25] | Frontiers in Robotics & AI | Evolution of model compression | Reviewed compression methods from early CNNs to large models | Insufficient analysis of adaptability and energy efficiency |
| Deng et al., 2020 [26] | IEEE IoT J. | Edge intelligence + AI confluence | Proposed taxonomy of edge intelligence applications, challenges, and future directions | More conceptual, limited discussion on energy efficiency and deployment trade-offs |

| Year | Model | Training Cost (USD) | log10(Cost) | Metric (Elo Ranking) | Value (%) |
|---|---|---|---|---|---|
| 2017 | Transformer | ≈670 | 2.83 | – | – |
| 2019 | RoBERTa Large | ≈160,000 | 5.2 | – | – |
| 2023 | GPT-4 | ≈79,000,000 | 7.9 | Top-1 vs. Top-10 gap | 11.9 |
| 2023 | GPT-4 | ≈79,000,000 | 7.9 | Top-1 vs. Top-2 gap | 4.9 |
| 2024 | Llama 3.1-405B | ≈170,000,000 | 8.23 | Top-1 vs. Top-2 gap | 0.7 |
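
The log10(Cost) column can be reproduced directly from the approximate training costs listed in the table. The following is a minimal sketch for checking that arithmetic; the dictionary keys and the helper name `log10_cost` are illustrative choices, not part of the original study.

```python
import math

# Approximate training costs (USD) as listed in the table above.
costs_usd = {
    "Transformer (2017)": 670,
    "RoBERTa Large (2019)": 160_000,
    "GPT-4 (2023)": 79_000_000,
    "Llama 3.1-405B (2024)": 170_000_000,
}


def log10_cost(cost_usd: float) -> float:
    """Return the base-10 logarithm of a cost, rounded to two decimals."""
    return round(math.log10(cost_usd), 2)


# Hypothetical helper output: one log10 value per model.
log_costs = {name: log10_cost(cost) for name, cost in costs_usd.items()}

for name, value in log_costs.items():
    print(f"{name}: log10(cost) = {value}")
```

On this scale, the gap between the first and last rows is roughly 5.4, i.e., the listed cost estimates grow by more than five orders of magnitude between 2017 and 2024.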

| Application | Scenario Type | Core Principle | Applied Techniques |
|---|---|---|---|
| Huawei Watch GT Series | Energy-First | Redundancy Compression; HW–SW Co-Design | Pruning, Quantization MindSpore Lite Optimization Platform Adaptation |
| NVIDIA Jetson Nano | Energy-First | Redundancy Compression; HW–SW Co-Design | Pruning, Quantization Lightweight Architecture (e.g., MobileNet, ShuffleNet) TensorRT Fusion Deployment Optimization |
| YOLOv5-Nano/v8-Nano | Performance-First | Redundancy Compression; HW–SW Co-Design | NAS Lightweight Architecture Quantization (e.g., INT8, FP16) Custom Mobile Structure Inference Engine Integration |
| MobileNetV3 | Performance-First | Knowledge Transfer; Redundancy Transfer | Knowledge Distillation (e.g., DistilBERT, TinyBERT) Parameter Sharing Pruning |
| DistilBERT/TinyBERT | Performance-First | Knowledge Transfer; HW–SW Co-Design | Knowledge Distillation Weight Reduction Structure Simplification |
| Once-for-All (OFA) | Performance-First | Knowledge Transfer; Redundancy Compression | Supernetwork Distillation Submodel Transfer NAS + Multi-Platform Generation |

| Dimension | Energy-First Systems | Performance-First Systems |
|---|---|---|
| Objective | Maximize battery lifetime; reduce overall energy consumption | Minimize end-to-end latency; increase throughput |
| Constraints | Limited memory capacity and strict energy budget | Accuracy floor must be satisfied; higher hardware or network cost acceptable |
| Methods | Early exit (e.g., multi-branch networks), cascaded detectors, adaptive sampling, DVFS-based scheduling | Split computing, near-end refinement, speculative decoding, cache reuse |
| Trade-offs | Energy saving and longer device lifetime, but possible minor accuracy or latency degradation | Lower latency and higher throughput, but increased power consumption and deployment cost |
| Validation | Focus on power profiling, runtime stability, and reproducibility across hardware | Focus on latency distribution, throughput, and system scalability across workloads |
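
To make the early-exit entry in the Methods row concrete, the following is a minimal sketch of confidence-thresholded early exit, assuming each stage returns a (label, confidence) pair. The names `early_exit_predict`, `cheap_stage`, and `full_stage`, the toy scoring rules, and the 0.9 threshold are all hypothetical and chosen only for illustration; they do not come from any of the cited systems.

```python
from typing import Callable, Sequence, Tuple

# A stage maps an input to (predicted label, confidence in [0, 1]).
Stage = Callable[[Sequence[float]], Tuple[int, float]]


def early_exit_predict(
    x: Sequence[float], stages: Sequence[Stage], threshold: float = 0.9
) -> Tuple[int, float, int]:
    """Run stages in order and stop at the first confident one.

    Returns (label, confidence, number_of_stages_executed).
    """
    if not stages:
        raise ValueError("at least one stage is required")
    label, confidence, used = -1, 0.0, 0
    for used, stage in enumerate(stages, start=1):
        label, confidence = stage(x)
        if confidence >= threshold:
            break  # confident enough: skip the remaining, costlier stages
    return label, confidence, used


def cheap_stage(x: Sequence[float]) -> Tuple[int, float]:
    """Toy low-cost branch: thresholds the mean, confident far from 0.5."""
    score = sum(x) / len(x)
    return (1 if score >= 0.5 else 0), abs(score - 0.5) * 2


def full_stage(x: Sequence[float]) -> Tuple[int, float]:
    """Toy final branch: always answers with full confidence."""
    return (1 if max(x) >= 0.5 else 0), 1.0


easy = early_exit_predict([0.99, 0.97], [cheap_stage, full_stage])
hard = early_exit_predict([0.4, 0.7], [cheap_stage, full_stage])
print("easy input used", easy[2], "stage(s); hard input used", hard[2])
```

In this sketch the threshold is the knob behind the trade-off listed in the table: lowering it lets more inputs exit at the cheap stage, which saves computation at the risk of accepting less reliable predictions.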

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, B.; Du, S.; Smith, A.J. A Review on AI Miniaturization: Trends and Challenges. Appl. Sci. 2025, 15, 10958. https://doi.org/10.3390/app152010958
