Abstract

This study compares immersive VR-based control with conventional keyboard-based control to examine the efficacy of VR interfaces for controlling robotic arms in Internet of Things (IoT) education. In hands-on experiments, 31 third-year engineering students operated a 5-DOF robotic arm, driven by MG996R servomotors and controlled by an Arduino microcontroller with a Raspberry Pi providing wireless communication, using both control modalities. To determine student preferences across in-person, online, and hybrid learning contexts, the study applied a mixed-methods approach that combined quantitative analysis through Likert-scale surveys with qualitative evaluation through open-ended questionnaires. First, most of the reported papers use either a robotic arm or a VR system in education, whereas we are among the first to report a combination of the two. Second, the literature mostly contains either technical papers or educational quantitative/qualitative research papers on existing technologies. We combine an innovative educational context (robotic arm and VR) with a quantitative and qualitative study, making it a complete experiment. Lastly, combining qualitative and quantitative research that complement each other is itself an innovative aspect in this field.

1. Introduction

According to Saenz-Zamarron, D. [1], robotic arms can serve as useful teaching aids that enable students to put their theoretical understanding into practice by giving them hands-on experience with complex engineering concepts such as design, electronics, and kinematics. These learning resources help increase understanding in a number of engineering fields. As shown in Rukangu, A. [2], virtual reality (VR) technology can be used to create a remote robotic laboratory that allows students taking online courses to participate in hardware-intensive robotics exercises. This helps to overcome the technical challenges of providing hands-on laboratory experiences in remote learning environments and the high cost of equipment.

Recent research has further explored the potential of immersive technologies for robotics education. It has been shown that XR simulations improve learning outcomes by providing interactive virtual environments for robot programming and control [3]. While immersive learning and the development of practical skills have been identified as major themes in more general AR educational applications [4], robotics uses of AR can increase student engagement and strengthen understanding of robot operations [5]. Furthermore, the need for safely and successfully integrating hardware and software has been emphasized by IoT security studies [6].

This study examines the effectiveness of various learning modalities and virtual reality (VR) interfaces for controlling robotic arms within an Internet of Things (IoT) course. Thirty-one third-year students from the Faculty of Engineering in Foreign Languages (FILS), including both computer science and electronics engineering students, engaged in practical experiments. They used both direct keyboard input and VR-based control of a robotic arm. In addition, this study also investigates student preferences for in-person, online, and hybrid learning methods; evaluates the usability and efficacy of virtual reality interfaces for robotic control; and evaluates the practical applications of these systems. Similar approaches have been effectively used in other engineering fields. Vergara et al. [7], for instance, showed how to integrate virtual reality (VR) with practical laboratory exercises for concrete compression tests, complete with student feedback. Unlike prior research that exclusively focused on virtual simulations or real robots, our approach enables students to interact with both real and virtual robotic systems simultaneously. This work’s unique addition to robotics education is seen in its dual strategy, combined with the validation received from the participants, which differentiates it from other digital twin or hybrid teaching research.

The paper presents an innovative way to approach educational robotics. Compared to the current literature presented in Section 2, we present the benefits of combining a virtual reality system with a robotic arm. The benefits are validated by a quantitative and a qualitative study, which was conducted over a full semester with a complete lesson plan at the Faculty of Engineering in Foreign Languages of the National University of Science and Technology Politehnica Bucharest. The full lesson plan and the way the experiment was completed are presented in Section 4 and Section 5. To apply our lesson plan, we used a real-time digital approach that enabled students to engage with one another in both virtual and real-life settings simultaneously. This topic is sparsely documented in the literature.

2. State of the Art

This chapter presents the current technologies, software applications, and types of robots used in the educational field for teaching a robotics-focused curriculum, and a comparison with our own approach that combines virtual reality with a robotic arm. It is also necessary to differentiate between two similar terms in the literature, “educational robots” and “educational robotics” [8]. The first term refers to the use of robots as a medium of communication and interaction with a user for the purpose of explaining or aiding them in their task. The second term, “educational robotics”, is the main focus of this work, as it refers to the actual programming and building of robots with the purpose of improving learning performances, especially the learning attitudes of the students [9].

According to the meta-analysis by Ouyang, F. et al. [9] and Nilüfer Atman Uslu et al. [8], the use of educational robotics improves students’ average learning attitude and interest in the subject, but it is not conclusive whether it improves their actual performance in the STEM (science, technology, engineering, and mathematics) domain. According to another systematic review, augmented reality and robotics can improve engineering education by offering immersive simulations, multimodal feedback, and visualizations that facilitate robot assembly, control, and programming [10].

A common type of robot used for educational purposes is a robotic arm with multiple degrees of freedom (DOF), varying between 4 and 6 DOF depending on the complexity of the tasks that it needs to accomplish. For this purpose, Zeng C. et al. [11] designed a 6-DOF arm that can be equipped with multiple tools (grippers, conveyor belt, vacuum pump), can carry a variety of small objects, and has its own customized operating system, iArm, based on the Robot Operating System (ROS). The purpose of the operating system and the robotic arm is to let students program and enhance the base behavior of the robot with computer vision or modify its utility based on the task required in the curriculum. The five main traits that were analyzed and improved were: problem abstraction, algorithm design, generalization and application, test and correction, and iteration optimization. This study was done face-to-face over the course of a semester with 13 students, and the results showed an average improvement across the five dimensions of 0.6 points on a scale of 1 to 5, from an average of 2.9 to 3.5, for freshmen, and an improvement of 0.4, from an average of 3.4 to 3.8, for sophomores.

Another study that focuses on face-to-face teaching was done by Kwantongon et al. [12], who designed a Programmable Logic Controller (PLC) for a 5-DOF robotic arm. This study has a sample size of 30 students and compares the effectiveness of the teaching method that uses a PLC controller and one that does not. The tests and performance criteria respected the ISO 9283 standards (ISO, 1998) [13]. The robot used is similar to a Dorna-type robot, and the PLC controller is a Mitsubishi FX5U CPU Model (Mitsubishi Electric Corporation, Tokyo, Japan). The authors analyzed the data before and after the use of the PLC teaching aid to learn the robot. The study was done over a semester, and the first test was given after the first class to explain the functionalities and how the robot works. The test consisted of programming the movements of the robot for a simple task. The second test was taken at the end of the semester after the use of PLC teaching methods during the semester. They have found a significant difference between the two: first test (M = 14.97, SD = 0.81), second test (M = 26.00, SD = 1.93).

Another example of a physical, low-cost, modular robotic arm used in education is presented in Lopez-Neri et al. [14]. The project’s objective was to give students mechatronics and Industry 4.0 skills. The paper’s primary focus is on the robotic arm tool’s design, implementation, and instructional design approach; student experiments were not documented. In Rokbani et al. [15], m-PSO and its multi-objective form, MO-m-PSO, are presented for solving the inverse kinematics of a real 5-DOF robotic arm that is 3D printed and has educational value. Instead of conducting user research with students, the authors used the 3D-printed arm to test the technology and simulate scenarios. Shintemirov et al. [16] used a potentiometer and two inertial measurement units (IMUs) to create an open-source 7-DOF wireless human arm motion-tracking system for robotics research and mechatronics education. This study likewise did not involve student experiments; instead, it focused on the system’s functionality, design, and possible uses in research and education.

An alternative method of employing robots for educational purposes is the use of newer technologies such as Augmented Reality (AR) or Virtual Reality (VR). With these methods, the dynamics of robot education can change significantly because of their appeal to the younger generation. The main difference between AR and VR is that AR overlays virtual interfaces on the physical space, whereas VR immerses the user in a fully virtual environment. An example that integrates Augmented Reality with robot programming is the study of Konstantinos L. et al. [17]. Their approach uses AR technology to control an industrial robot arm through hand gestures and User Interface (UI) inputs in the AR interface, thereby facilitating the control of the robot. The hardware used for AR is the HoloLens glasses, and the AR and physical-world positions are calibrated by scanning a QR code placed on the base of the robot. A potential use of this approach is to facilitate the training of new workers for basic tasks. One limitation of this study is the precision of movement: because of the interaction between AR and the physical world, a precision of under 1 cm could not be achieved.

A second example of an AR implementation is the RoSTAR system designed by Xue C. et al. [18]. This system was implemented by integrating ROS, Unity 3D, and HoloLens. In the AR application, a 3D representation of a physical robotic arm (the robot used is the open-source Niryo One 6-DOF model, Lille, France) was created, which the user controls through the AR UI. All the movements made in the application are replicated and sent to the real arm. The system’s Unity 3D AR application acts as a server and sends the information to the ROS client as JSON messages. The system can display the trajectory of the robot before the action is taken. The goal of the study is to create a less intensive training method for operating robotic arms. One consideration that needs to be taken into account is the calibration of the application; both studies [17,18] reported problems with device calibration and the precision of robot movement.

One of the requirements of designing an AR application, as seen in previous work [17,18], is the need to properly calibrate the AR overlay and interaction with the real robot in the physical space. This issue, however, would not appear if the application were switched to VR technology. The main way to use VR for educational purposes and training with robots is to create a replica of a laboratory or the robot itself in the virtual space. This process can be called a “Digital Twin” of a laboratory [19], as in Erdei T. et al.’s study. They digitally recreated an industrial robot laboratory to analyze the impact it can have on training and teaching new students. One of the main reasons for this work is the availability of this technology; with the use of VR, the physical limitations of room capacity and the schedule for the laboratory are removed, and multiple students can be taught in parallel. The laboratory was replicated with the use of Unreal Engine 4, and the test was done on a group of 10 students, of which 5 used the digital laboratory for the training and the other 5 only the standard documentation. The results show that the group that used the “Digital Twin” was significantly faster in solving the given problems compared to the other group. It should be noted that the digital laboratory was not integrated in full VR, and the robot actions in the digital laboratory were controlled from a display screen UI. Another “Digital Twin” approach is mentioned in Tarng et al. [20], which allowed high school students to observe synchronized real-world motion while controlling a virtual model of a real robot.

Unlike the Erdei T. [19] approach, G. Bolano et al. [21] created a robot laboratory in full VR. In the software implementation, they used Unity 3D for the VR environment and FZI Motion Pipeline, a ROS 1 software package that transmits the data from Unity to the robot. One downside of the research is the lack of subjects; the robot was only tested for its functionalities and was not tested for its educational or training potential. A similar study was conducted by Christopoulos A. et al. [22], who tried to create a new remote learning style for robotics using VR. They developed a similar Digital Twin model for their robotics laboratory on a scale. The technologies used were Unity 3D for the VR environment with the robot and MQTT, a standard messaging protocol for Internet of Things (IoT) devices, for sending the position coordinates over the web to the remote robot. Maddipatla Y. et al. [23] showed the educational effectiveness of virtual reality (VR) in robotics education by using an extensive VR-based approach to simplify and illustrate complex robot kinematics, lowering cognitive load while improving learning motivation. This study [23] has the same downside as the previous ones [21,22], as no evaluation was done on students using this technology. Another Digital Twin approach, using solely VR, is described in Wu W. et al. [24]. Twenty students took part in the study, performing six tasks each using three different approaches: real-time adjustment, key-point signaling, and direct trajectory planning. Rather than gathering general opinions about the experience, the evaluation focused on task performance and workload, collecting data through the NASA-TLX questionnaire, which measured mental strain, effort, and dissatisfaction.

A study by Gonzalez [25] combined an existing virtual robotic arm simulator for teaching basic robotics concepts such as DH parameters, forward and inverse kinematics, and trajectory planning with a small physical robotic arm (DOBOT Magician). By enabling the physical arm to follow the movements of the virtual arm, the hybrid system preserved the freedom of simulation while giving the appearance of programming a genuine industrial arm. According to a comparative study conducted in an undergraduate robotics course, assignment completion rates on cooperative programming tasks rose by 21% (from 57% to 78%) following the integration of the DOBOT arm, while other assignments stayed the same. The physical arm improved motivation and enhanced educational experience, according to a student survey based on the opinions of nine students.

In a related method, Rukangu et al. [26] examined reinforcement exercises with a group of eight students using either a real robotic arm or an AR-based robotic arm. Although both strategies were found to be motivating, the physical robot activities improved perceptions of utility and real-world applicability, whereas the AR activities only increased situational interest through novelty.

Mixed reality is another approach to the digital twin technology in industry. Luo et al. [27] developed a mixed-reality telecollaboration system that combined VR with a physical robotic arm. Two user studies were conducted outside of an academic classroom setting, using adult volunteers. Five experienced VR users examined both local and remote roles, while 24 participants tested three view-sharing strategies for search, assembly, and disassembly tasks. Surveys and interviews regarding task performance, usability, and workload were used to collect data. Another approach is described in Wu et al. [28], where they did a study with 30 graduate students regarding the system’s performance, not academic potential.

The purpose of this chapter was to examine the technologies and approaches that were taken in evaluating different types of training methods using robotic arms specifically. The first 2 studies [11,12] used a classic UI interface that interacted with a robotic arm, and they were evaluated on a small group of students but for a significant period of time (1 semester). Then, another three studies [14,15,16] showed only the implementation of a physical robotic arm used in education but did not conduct any experiments. The next nine studies [17,18,19,20,21,22,23,24,25,26] focused on implementing newer technologies and proving a functional working concept that combines AR and VR with robotic arms. They focused on the VR side exclusively without connecting the VR application to a real robot as well, and only four of them [19,20,24,25] were briefly evaluated in one sitting with a group of students. Another study [26] managed to create a 3DOF robotic arm with VR integration, but it conducted a survey of only nine students.

As seen in this section, most of the reported works [11,12,14,15,16,17,18,19,20,21,22,23,24,25] either use a robotic arm or a VR system separately for education purposes, with only a few doing a survey on a small group of students. However, we are among the first to report a combination of the two. Secondly, in most cases, there are either technical papers or educational quantitative/qualitative research papers with a small sample size that use a part of the approach that we designed. This study combines an innovative education context (a real robotic arm and a VR “Digital Twin”) with a quantitative and qualitative study, making it a complete experiment. The software application integration with the robotic arm and lesson plan as well as the evaluation process will be explained in the next sections.

3. Robotic Arm Implementation

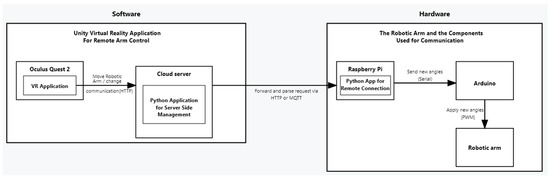

The virtual reality application was developed in the Unity game engine and targets the Meta Quest 2 VR headset. In Figure 1, we present the system architecture, which consists of two main parts:

Figure 1.

System Architecture Diagram.

1. The Virtual Reality System, which has two subcomponents. The first is the Unity Virtual Reality Application, which runs on the Meta Quest 2, serves as the communication interface for the robotic arm, and includes the “Digital Twin” representation of the real arm. The second is the Backend Application, which uses Python 3.11 and C# 9 scripts for server-side management in order to send data over the Cloud Server to the robotic arm. The implementation is further explained in Section 3.1.

2. The Robotic Arm itself, which has three main subcomponents. The first is the Raspberry Pi board, which handles server-side communication and receives data over the Cloud. The second is the Arduino board, which takes the data from the Raspberry Pi over serial communication and applies it directly to the third subcomponent, the robotic arm motors, using Pulse Width Modulation signals. This part is further explained in Section 3.2.

The VR application uses Meta XR libraries, which provide APIs that track the controllers’ positions and record the button presses for the robotic arm’s controls. In addition to those libraries, a C# script manages the robotic arm’s angles, updates its virtual reality representation, and transmits those angles using one of two user-selected protocols, Message Queue Telemetry Transport Protocol (MQTT) or WebSockets. The angles go to the backend Python 3.11 server-management application, which uses Cloud Server services to route them to the Raspberry Pi controller and on to the real-world robot’s servos. The application build profile was configured for Android with an SDK level of 34 for Meta Quest compatibility. Functionality and data transmission tests were run against a mock-up back-end in Spring Boot to verify the flow of data and the delay between the VR input and the real robotic arm’s response. The Raspberry Pi serves as the robotic arm’s backend receiver, sending parsed angle data to an Arduino via a serial connection. The Arduino decodes the commands it receives and applies the corresponding angles to the robotic arm using Pulse Width Modulation (PWM) signals. This architecture (Figure 1) ensures communication between virtual and physical environments while allowing real-time control of the robotic arm through virtual reality.

3.1. Unity Virtual Reality Application Design

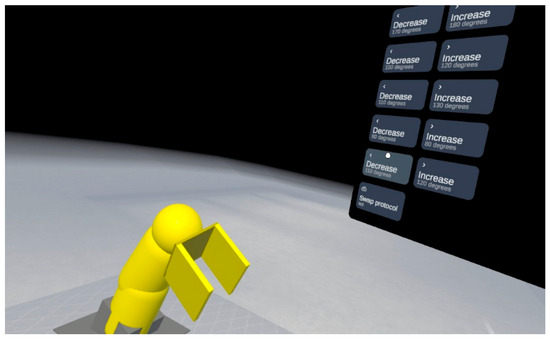

The Unity application design (Figure 2) went through multiple development cycles to ensure a user-friendly UI. In the last iteration, the user is shown a virtual chamber containing a panel with five rows of buttons, each row controlling one arm servomotor. There are two buttons in each row: one for raising the chosen motor’s angle and another for lowering it. Each button also indicates the angle to which the arm will move if the user presses it. An additional button was added to the panel to switch the communication protocol. The current protocol is obtained with a GET request when the VR application loads, and when the user clicks the button, a POST request is issued to change it. To show the user how the arm moves when the buttons are pressed, a model of the robot is positioned in front of the button panel.

Figure 2.

Unity Application Design.

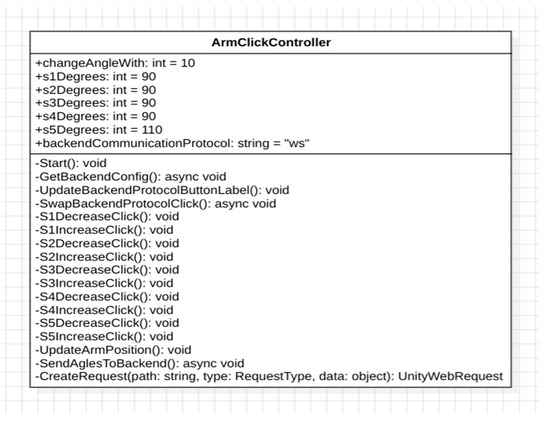

The variables that regulate the angles and the delta applied on each button click are located at the start of the script to make the script easy to modify. This delta is named changeAngleWith (Figure 3); when set, it changes each servo by the specified offset. It is applied to each sXDegrees variable, where X is the servo’s number. The SXDecreaseClick method modifies a servomotor’s angle by decreasing its current value while keeping it within a specified range. To show the current angle values, the method first finds two user interface (UI) components in the scene, designated DLS1 and ILS1, and obtains their TextMeshProUGUI components so that their text can be changed dynamically. The variable s1Degrees represents the angle, which is lowered by the predetermined step size (changeAngleWith). The Mathf.Max method confines the lower bound to zero degrees. The method also computes the possible increased angle, limited to 180 degrees using Mathf.Min, and updates the text of the corresponding UI elements with the modified values. To keep the logical model and the actual robotic arm synchronized, the function UpdateArmPosition() is called to transfer the changed angle value to the servomotor.
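The clamping behavior described above can be sketched in a few lines. The following Python snippet is only an illustration of the logic, not the Unity C# script itself; the class name, method names, and the step size of 10 degrees are assumptions, since the source does not state the value of changeAngleWith.

```python
from dataclasses import dataclass

MIN_ANGLE = 0    # lower bound stated in the text
MAX_ANGLE = 180  # upper bound stated in the text


@dataclass
class ServoButtonState:
    """Angle of one servo plus the values shown on its two buttons (hypothetical helper)."""

    degrees: int
    change_angle_with: int = 10  # assumed step size; not given in the source

    def decrease_click(self) -> None:
        # Counterpart of Mathf.Max: never drop below the lower bound.
        self.degrees = max(MIN_ANGLE, self.degrees - self.change_angle_with)

    def increase_click(self) -> None:
        # Counterpart of Mathf.Min: never exceed the upper bound.
        self.degrees = min(MAX_ANGLE, self.degrees + self.change_angle_with)

    def labels(self) -> tuple[int, int]:
        """Angles the decrease and increase buttons would lead to on the next click."""
        lower = max(MIN_ANGLE, self.degrees - self.change_angle_with)
        upper = min(MAX_ANGLE, self.degrees + self.change_angle_with)
        return lower, upper
```

For example, a servo at 5 degrees that receives a decrease click stops at 0 rather than going negative, and its button labels then read 0 and 10.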

Figure 3.

Virtual reality system class diagram.

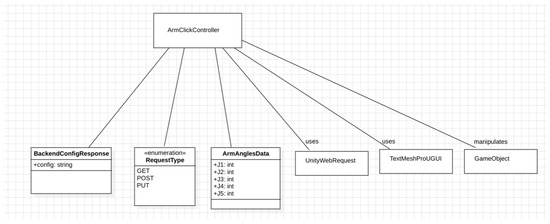

The data are sent to the server with the function SendAnglesToBackend, which serializes the angle data before sending it. All of the servomotor angles are included in ArmAnglesData (Figure 4), where J1 through J5 hold the angles of the first through fifth servomotors. Once the object has been created, the POST request is built using the backendUrl specified at the top of the script. The createRequest method serializes the ArmAnglesData object into JSON, converts it into a byte array, and delivers the raw byte array to the backend. The UnityEngine.Networking package is used for this procedure.
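To make the payload shape concrete, the serialization step can be sketched in Python. This is not the Unity code; the function name is hypothetical, and the only details taken from the text are the J1 to J5 field names and the JSON-to-bytes conversion.

```python
import json

JOINT_KEYS = ("J1", "J2", "J3", "J4", "J5")  # field names from ArmAnglesData


def build_angles_payload(angles: list[int]) -> bytes:
    """Serialize five joint angles to the UTF-8 JSON body of a POST request."""
    if len(angles) != len(JOINT_KEYS):
        raise ValueError(f"expected {len(JOINT_KEYS)} angles, got {len(angles)}")
    body = dict(zip(JOINT_KEYS, angles))
    return json.dumps(body).encode("utf-8")
```

Calling `build_angles_payload([90, 45, 120, 0, 180])` yields a byte string whose decoded JSON maps J1 to 90, J2 to 45, and so on.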

Figure 4.

Virtual reality arm controller class diagram.

3.2. Robotic Arm Hardware and Software Design

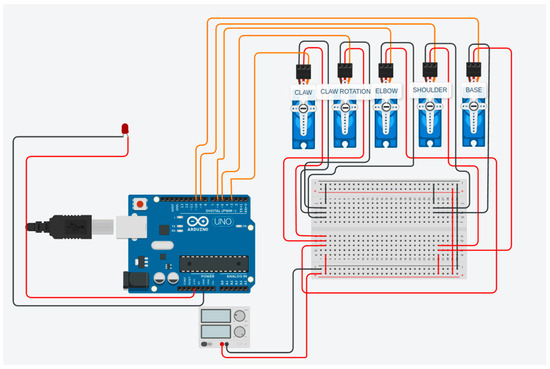

The robotic arm has four MG996R servomotors to manage elbow motion, hand rotation, shoulder movement, and claw control (Figure 5). A Tiankongrc 35 kg servomotor is included to provide support for shoulder rotation and improve stability during operation. The structure is made of 3D-printed parts, which provide cost-effectiveness, design flexibility, ease of modification, and quicker prototyping.

Figure 5.

Robotic arm Arduino setup with the servomotors.

An Arduino microcontroller serves as the main control unit for the system. The microcontroller was configured to use pulse width modulation (PWM) to control servomotor movement. A Raspberry Pi is incorporated to provide wireless capabilities. The power supply delivers a constant 12 V at 10 A, and a regulator reduces the voltage to 6 V for the servomotors.
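As a rough illustration of how an angle maps to a PWM signal for a hobby servo, the sketch below converts an angle into a pulse width and a duty cycle. The 500 to 2500 µs pulse range and the 50 Hz frame rate are assumed typical values, not figures from this work; the actual limits should be taken from the servomotor datasheet or from calibration.

```python
def angle_to_pulse_us(angle: float, min_us: float = 500.0,
                      max_us: float = 2500.0, max_angle: float = 180.0) -> float:
    """Linearly map an angle in [0, max_angle] to a pulse width in microseconds.

    min_us and max_us are assumed defaults, not values from the paper.
    """
    clamped = min(max(angle, 0.0), max_angle)  # keep the command in range
    return min_us + (max_us - min_us) * clamped / max_angle


def duty_cycle_percent(pulse_us: float, frequency_hz: float = 50.0) -> float:
    """Duty cycle of a pulse within one PWM period (assumed 50 Hz frame rate)."""
    period_us = 1_000_000.0 / frequency_hz
    return 100.0 * pulse_us / period_us
```

Under these assumptions, a 90 degree command corresponds to a 1500 µs pulse, which is a 7.5% duty cycle at 50 Hz.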

There are two main scripts handling the behavior of the robot. The first one, “test.py”, is used to test the functionalities of the robot with manual control, perform a pre-set movement range, and calibrate the arm.

The second Python script “online.py” is the main script that processes the online functionalities and data transfer via Wi-Fi. It can use both the MQTT protocol and the WebSockets protocol. The MQTT protocol ensures efficient communication for fast message delivery. Alternatively, the WebSockets protocol provides a persistent connection that allows real-time control with low latency, enhancing responsiveness during VR control. The control_servo() function is designed to manage the robotic arm’s movement in real-time via keyboard inputs.

A process_message(message) function loads the request given in JSON format and checks whether the request is valid. The request contains five parameters (J1, J2, J3, J4, J5), each mapped to a servomotor and holding the angle for that servomotor. The mqtt_client() function creates an MQTT client with TLS encryption and username-password authentication, and connects to a specified broker. It assigns handlers for connection and message events. The loop_start() method runs the client asynchronously for continuous data exchange. Lastly, a websocket_client() function establishes an asynchronous WebSocket connection to a server. It continuously listens for incoming messages, prints them, and processes them using the process_message() function. If the connection closes, it prints a closure message and exits the loop cleanly.
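A minimal sketch of the kind of validation described for process_message() is given below. It is not the authors' implementation: the function name parse_angles is hypothetical, and the 0 to 180 degree range check is an assumption carried over from the limits used on the VR side.

```python
import json
from typing import Optional

JOINT_KEYS = ("J1", "J2", "J3", "J4", "J5")  # parameters named in the text


def parse_angles(message: str) -> Optional[dict[str, int]]:
    """Return the five joint angles if the message is valid, otherwise None."""
    try:
        data = json.loads(message)
    except (json.JSONDecodeError, TypeError):
        return None
    # Require exactly the five expected keys, no more and no fewer.
    if not isinstance(data, dict) or set(data) != set(JOINT_KEYS):
        return None
    angles: dict[str, int] = {}
    for key in JOINT_KEYS:
        value = data[key]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return None
        if not 0 <= value <= 180:  # assumed valid range
            return None
        angles[key] = int(round(value))
    return angles
```

Returning None for any malformed request lets the caller skip the serial write to the Arduino instead of forwarding an unsafe command.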

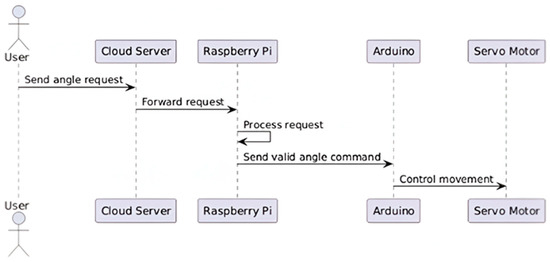

The diagram in Figure 6 follows the user use case. A user (typically a student) puts on a VR headset and is shown a mock-up of the robotic arm and a menu in which they can choose different angles for the servomotors. The user can choose an angle for one or multiple servos and send the request to the cloud server. The ‘client.py’ script on the Raspberry Pi listens for incoming requests on the cloud, checks whether each one is a valid request, and then forwards the angles to the Arduino, which sends a signal to the servos.

Figure 6.

Use case diagram for the user.

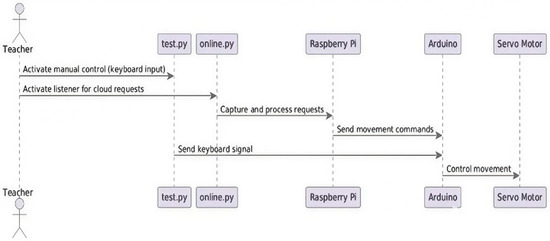

The second diagram shown in Figure 7 follows the Teacher use case. A teacher, depending on the current lesson, will decide on the type of control they want for the robotic arm: manual control from keyboard (made possible with the ‘test.py’ script) or online control from the VR headset (made possible with the ‘client.py’ script). After choosing the type of control desired, the angles are sent to the Arduino to control the servos.

Figure 7.

Use case diagram for the teacher.

4. Experimental Setup

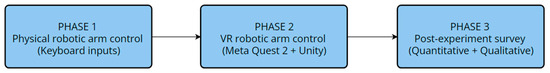

The experiment involved 31 third-year students from the Faculty of Engineering in Foreign Languages (FILS). The group included 19 information engineering students (61.3% of the sample) and 12 applied electronics students (38.7%). The experiment was organized into two separate phases to facilitate a thorough comparison of control approaches (Figure 8). During the first phase, the traditional control phase, students manipulated the robotic arm through standard keyboard inputs, which gave them core experience in direct system control. In the second phase, the VR control phase (Figure 9), the same students operated the robotic arm through a VR headset interface, allowing a direct comparison of user experiences and system efficacy across the two control paradigms. After completing both phases, students filled in a questionnaire that included both quantitative and open-ended qualitative questions. The survey collected their satisfaction, perceived difficulty, usability evaluations, and preferences for various learning approaches.

Figure 8.

Sequence of the experimental setup with the students.

Figure 9.

Student with the headset controlling the robotic arm.

As part of this investigation, lesson plans were created to help students gain knowledge in using virtual interface systems and Internet of Things communication protocols to control a robotic arm. Starting with basic notions, the material moves on to communication protocols, system improvement, assessment, and virtual reality environment integration. In the first lesson, the robotic arm and its WebSocket and MQTT operating protocols are introduced. Students study the client-server communication model and Python 3 scripts to understand the fundamentals of remote actuation. In the next class, students are asked to improve communication reliability by adding logging and error-handling mechanisms to the MQTT client. Similar enhancements are made for the WebSocket protocol in the following session, where students set up the client to reconnect automatically after disconnecting. The last lesson that focuses solely on the robotic arm is devoted to performance evaluation: the latency of both the WebSocket and MQTT protocols is measured and then compared.
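The latency comparison in the fourth lesson could be approached along the following lines. This is a hedged sketch rather than the course material: the function names are hypothetical, and `send_and_wait` stands for any caller-supplied function that sends one command over the chosen protocol and blocks until the reply or acknowledgement arrives.

```python
import statistics
import time
from typing import Callable


def measure_latency_ms(send_and_wait: Callable[[], None], samples: int = 20) -> list[float]:
    """Time repeated round trips and return each duration in milliseconds."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        send_and_wait()  # hypothetical: one request/response over MQTT or WebSocket
        timings.append((time.perf_counter() - start) * 1000.0)
    return timings


def summarize(timings_ms: list[float]) -> dict[str, float]:
    """Reduce a list of timings to the figures typically compared."""
    return {
        "mean": statistics.mean(timings_ms),
        "median": statistics.median(timings_ms),
        "max": max(timings_ms),
    }
```

Running `measure_latency_ms` once with an MQTT round-trip function and once with a WebSocket one, then passing each result to `summarize`, gives two small dictionaries that can be compared side by side.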

The remaining sessions use Unity and the Meta Quest 2 to integrate the robotic arm with a virtual reality environment. The fifth lesson involves using a virtual reality interface to implement basic control of the robotic arm. In the following class, a reset function is introduced, which returns the robotic arm to its original configuration. During the final lesson, students learn about incremental control mechanisms, which are developed for more precise joint position adjustments.

Upon concluding the practical sessions, students participated in a survey that collected their experiences and preferences through both quantitative and qualitative evaluation methods. The survey instrument integrated structured quantitative questions employing Likert scales from 1 to 5, as well as categorical rating scales from “very poor” to “very good” and “very easy” to “very difficult,” complemented by 10 open-ended questions that invite qualitative responses. The quantitative questions supported the evaluation of participant satisfaction, difficulty perceptions, and usability ratings, whereas the qualitative questions explored various dimensions, including preferences for learning methods across face-to-face, online, and hybrid formats, overall user experience, and perceived challenges and advantages of different approaches.

5. Results

This section examines how students perceive in-person and online learning environments. The effectiveness of these formats was investigated using a mixed-methods approach. Descriptive statistics and inferential tests were used in the quantitative analysis to evaluate the perceived advantages, disadvantages, and usability of the two control systems. Qualitative data gathered from open-ended responses provided further insight into students’ preferences. These investigations aimed to assess how new technologies can facilitate or improve learning in technical education settings. The questionnaire’s items were numbered sequentially from Q1 to Q10 (Appendix A). The composite measures displayed in Table 1 and Table 2 were developed by integrating the responses to the first four questions (Q1–Q4), which addressed the perceived advantages and disadvantages of in-person and virtual reality (VR) learning environments. The following questions (Q5–Q10) focused on specific elements of the user experience: the overall keyboard and VR system learning experience (Q5–Q6), each control method’s perceived ease of use (Q7–Q8), the knowledge gained about IoT and VR technologies (Q9), and the ease of changing between control modes (Q10). Each of these components is presented separately in Tables 3–7.

5.1. Quantitative Analysis

Descriptive statistics were computed for four composite variables assessing students’ perceptions of learning methods in an IoT course involving a robotic arm: perceived benefits and downsides of face-to-face learning, and perceived benefits and downsides of online learning via a VR-controlled robotic interface (Table 1).

Table 1. Descriptive Statistics.

| Measure | Benefits of Face-to-Face Learning | Downsides of Face-to-Face Learning | Benefits of Online Learning (VR-Controlled Robotic Arm) | Downsides of Online Learning (VR-Controlled Robotic Arm) |
|---|---|---|---|---|
| Valid | 31 | 31 | 31 | 31 |
| Missing | 0 | 0 | 0 | 0 |
| Mean | 4.505 | 2.258 | 4.129 | 2.290 |
| Std. Deviation | 0.602 | 0.794 | 0.676 | 0.811 |
| Minimum | 3.000 | 1.000 | 2.333 | 1.000 |
| Maximum | 5.000 | 4.000 | 5.000 | 4.000 |

Each composite variable was created by computing the mean score of several Likert-type items (1 = Strongly Disagree, 5 = Strongly Agree) that assessed each respective dimension. Specifically, three items measured the benefits of face-to-face learning, two items measured its downsides, three items assessed the benefits of online learning (VR), and three items assessed the downsides of the VR-based format.
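As an illustration of how such composites are formed, the following minimal sketch averages each respondent's item ratings and then summarizes the resulting scores. The ratings are invented for the example and are not the study's data.

```python
from statistics import mean, stdev


def composite_scores(responses):
    """Average each respondent's item ratings into one composite score."""
    return [mean(items) for items in responses]


# Hypothetical ratings from three respondents on a three-item scale (1-5).
benefits_items = [(5, 4, 5), (4, 4, 3), (5, 5, 5)]

scores = composite_scores(benefits_items)
summary = {
    "mean": mean(scores),
    "sd": stdev(scores),   # sample standard deviation (n - 1 in the denominator)
    "min": min(scores),
    "max": max(scores),
}
```

Because a three-item average moves in steps of one third, values such as 7/3 ≈ 2.333 are possible, which matches the minimum reported for the VR benefits composite in Table 1.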

The results indicate that the benefits of face-to-face learning were evaluated very positively, with a mean score of 4.51 (SD = 0.60), suggesting strong agreement that hands-on activities and direct interaction with instructors supported understanding of the robotic system. The low standard deviation indicates that this perception was consistent across participants. In contrast, the downsides of face-to-face learning were rated considerably lower, with a mean of 2.26 (SD = 0.79), indicating that participants generally did not perceive physical lab constraints or access issues as substantial limitations.

Regarding the online learning condition using VR, students also reported positive perceptions, with a mean of 4.13 (SD = 0.68) for benefits. While this is slightly lower than the score for face-to-face learning, it still reflects a favorable evaluation of the flexibility and extended testing opportunities offered by the VR environment.

The downsides of the online learning experience were rated low as well, with a mean of 2.29 (SD = 0.81), suggesting that issues such as response time delays or the lack of physical manipulation were not major obstacles for most participants.

Overall, the findings indicate that students perceived both learning environments as beneficial, with a slight preference for face-to-face learning. Notably, the perceived drawbacks of each modality were relatively minor, supporting the potential value of integrating both approaches in hybrid learning designs for technical education.

To examine whether students perceived significant differences between the two learning modalities, paired-samples t-tests were conducted comparing the perceived benefits and downsides of face-to-face learning and online learning via a VR-controlled robotic arm (Table 2). The analysis revealed a statistically significant difference in perceived benefits, with face-to-face learning rated more positively than online learning (M = 4.51, SD = 0.60 vs. M = 4.13, SD = 0.68), t(30) = 3.10, p = 0.004. The effect size was moderate, Cohen’s d = 0.56, indicating a meaningful difference in favor of face-to-face learning in terms of perceived effectiveness and value.

In contrast, no statistically significant difference was found between the perceived downsides of the two learning methods (M = 2.26, SD = 0.79 vs. M = 2.29, SD = 0.81), t(30) = −0.21, p = 0.836, with a negligible effect size (Cohen’s d = −0.04). This suggests that participants perceived both formats as similarly low in terms of limitations and obstacles.

These results support the descriptive findings, indicating that while students appreciated both learning environments, they showed a clear preference for face-to-face learning when it comes to its benefits, without perceiving significantly more downsides for either modality.

Table 2. Paired samples t-test.

| Measure 1 | Measure 2 | t | df | p | Cohen’s d |
|---|---|---|---|---|---|
| Benefits of face-to-face learning | Benefits of online learning (VR-controlled robotic arm) | 3.098 | 30 | 0.004 | 0.556 |
| Downsides of face-to-face learning | Downsides of online learning (VR-controlled robotic arm) | −0.208 | 30 | 0.836 | −0.037 |
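The effect sizes in Table 2 are consistent with the paired-samples form of Cohen's d (mean difference divided by the standard deviation of the differences), which equals t divided by the square root of the sample size. The sketch below assumes that definition, which the text does not state explicitly. It computes t, the degrees of freedom, and d from paired scores, and includes a helper that recovers d from a reported t. The p-value is omitted because it needs the t distribution, which the Python standard library does not provide.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Return (t, df, d) for two paired samples of equal length."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two paired samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    sd = stdev(diffs)                  # sample SD of the paired differences
    if sd == 0:
        raise ValueError("all paired differences are identical")
    d = mean(diffs) / sd               # Cohen's d for paired data
    t = d * math.sqrt(n)               # same as mean / (sd / sqrt(n))
    return t, n - 1, d


def d_from_t(t, n):
    """Recover the paired-samples effect size from a reported t and sample size."""
    return t / math.sqrt(n)
```

With the reported values, `d_from_t(3.098, 31)` is about 0.556 and `d_from_t(-0.208, 31)` is about −0.037, in line with the table.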

A paired-samples t-test was conducted to compare the overall learning experience between the keyboard-based control system and the VR-based control system. There was no statistically significant difference between the two conditions, t(30) = −0.494, p = 0.625 (Table 3).

Table 3. Paired samples t-test for Q5 and Q6.

| Measure 1 | Measure 2 | t | df | p |
|---|---|---|---|---|
| Q5 | Q6 | −0.494 | 30 | 0.625 |

The mean score for the keyboard-based experience was 4.290 (SD = 0.739), while the mean score for the VR-based experience was 4.355 (SD = 0.608) (Table 4).

Table 4. Descriptive statistics for Q5 and Q6.

| Measure | N | Mean | SD | SE |
|---|---|---|---|---|
| Q5 | 31 | 4.290 | 0.739 | 0.133 |
| Q6 | 31 | 4.355 | 0.608 | 0.109 |
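As a quick consistency check, assuming SE denotes the usual standard error of the mean, the SE column follows directly from the reported SD and N:

$$\mathrm{SE} = \frac{\mathrm{SD}}{\sqrt{N}}, \qquad \frac{0.739}{\sqrt{31}} \approx 0.133, \qquad \frac{0.608}{\sqrt{31}} \approx 0.109$$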

Another paired-samples t-test was conducted to compare the perceived intuitiveness of controlling the robotic arm via keyboard versus using the VR interface. There was no statistically significant difference between the two conditions, t(30) = 0.215, p = 0.831 (Table 5).

Table 5. Paired samples t-test for Q7 and Q8.

| Measure 1 | Measure 2 | t | df | p |
|---|---|---|---|---|
| Q7 | Q8 | 0.215 | 30 | 0.831 |

The mean score for the keyboard-based control was 4.097 (SD = 0.790), while the mean score for the VR-based control was 4.065 (SD = 0.892) (Table 6).

Table 6. Descriptive statistics for Q7 and Q8.

| Measure | N | Mean | SD | SE |
|---|---|---|---|---|
| Q7 | 31 | 4.097 | 0.790 | 0.142 |
| Q8 | 31 | 4.065 | 0.892 | 0.160 |

Pearson correlation analyses (Table 7) were conducted to examine relationships between overall learning experience with the keyboard control system (Q5), VR-based control system (Q6), perceived intuitiveness of keyboard (Q7) and VR control (Q8), insight gained about IoT or VR technologies (Q9), and ease of transition from keyboard to VR control (Q10).

Table 7. Pearson’s correlations.

| Variable | Metric | Q5 | Q6 | Q7 | Q8 | Q9 | Q10 |
|---|---|---|---|---|---|---|---|
| Q5 | Pearson’s r | - | | | | | |
| | p-value | - | | | | | |
| Q6 | Pearson’s r | 0.431 * | - | | | | |
| | p-value | 0.016 | - | | | | |
| Q7 | Pearson’s r | 0.236 | 0.065 | - | | | |
| | p-value | 0.202 | 0.729 | - | | | |
| Q8 | Pearson’s r | 0.375 * | 0.264 | 0.511 ** | - | | |
| | p-value | 0.038 | 0.152 | 0.003 | - | | |
| Q9 | Pearson’s r | 0.609 *** | 0.583 *** | 0.173 | 0.521 ** | - | |
| | p-value | <0.001 | <0.001 | 0.352 | 0.003 | - | |
| Q10 | Pearson’s r | 0.333 | 0.458 ** | 0.392 * | 0.801 *** | 0.646 *** | - |
| | p-value | 0.067 | 0.010 | 0.029 | <0.001 | <0.001 | - |

* p < 0.05, ** p < 0.01, *** p < 0.001.

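Significant positive correlations were found between the overall learning experiences with the keyboard and VR systems (Q5 and Q6), r(29) = 0.431, p = 0.016. The overall keyboard learning experience (Q5) was also significantly correlated with the perceived intuitiveness of VR control (Q8), r(29) = 0.375, p = 0.038, and the perceived intuitiveness of keyboard control (Q7) was significantly correlated with that of VR control (Q8), r(29) = 0.511, p = 0.003. The correlation between the overall VR learning experience (Q6) and the intuitiveness of VR control (Q8) did not reach significance, r(29) = 0.264, p = 0.152.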

Insight gained (Q9) showed strong positive correlations with both keyboard (Q5), r(29) = 0.609, p < 0.001, and VR learning experience (Q6), r(29) = 0.583, p < 0.001. Additionally, insight correlated positively with VR intuitiveness (Q8), r(29) = 0.521, p < 0.01.

Ease of transition from keyboard to VR control (Q10) was significantly positively correlated with VR learning experience (Q6), r(29) = 0.458, p = 0.010, perceived intuitiveness of keyboard (Q7), r(29) = 0.392, p = 0.029, intuitiveness of VR control (Q8), r(29) = 0.801, p < 0.001, and insight gained (Q9), r(29) = 0.646, p < 0.001.

Other correlations, such as those between the intuitiveness of the keyboard (Q7) and the overall experiences (Q5 and Q6), were positive but did not reach statistical significance. These results suggest that participants who reported greater insight about IoT and VR technologies also rated their overall learning experiences more highly and found the VR interface more intuitive. Moreover, those who found the transition from keyboard to VR easier tended to rate the VR learning experience and the intuitiveness of both interfaces higher, indicating a close relationship between ease of adaptation and positive perceptions of the control systems.
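For readers who want to see how the significance of a correlation follows from r and the sample size, a minimal sketch is given below. It computes Pearson's r from paired ratings, converts r to a t statistic with N − 2 degrees of freedom, and derives the smallest |r| that is significant for a given critical t. The constant 2.045 is the two-tailed 5% critical value of Student's t for 29 degrees of freedom, taken from standard tables. The function names are illustrative.

```python
import math

T_CRIT_05_DF29 = 2.045  # two-tailed 5% critical t for df = 29 (standard tables)


def pearson_r(x, y):
    """Pearson's correlation coefficient for two equally long samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two samples of equal length with at least 3 pairs")
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("a constant sample has no defined correlation")
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether a correlation differs from zero."""
    df = n - 2
    return r * math.sqrt(df / (1.0 - r * r))


def critical_r(t_crit, n):
    """Smallest |r| that reaches significance for a given critical t."""
    df = n - 2
    return t_crit / math.sqrt(t_crit ** 2 + df)
```

For N = 31, `critical_r(T_CRIT_05_DF29, 31)` is about 0.355, which is consistent with Table 7: r = 0.375 is flagged as significant at the 0.05 level, while r = 0.333 is not.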

5.2. Qualitative Analysis

The vast majority of students emphasized the importance of physical engagement with tangible devices in IoT education. Students consistently stated that direct physical engagement with hardware components, including the capacity to touch, control, and examine actual systems, greatly enhanced their understanding of difficult topics. The prompt feedback provided by in-person teaching, including real-time guidance from educators and collaborative problem-solving with colleagues, was much appreciated in all responses. For example, one of the responses was: “Face-to-face learning helps a lot because I get to actually use the tech, like the robotic arm, and understand how it works. It’s easier to ask questions and learn by doing”.

Despite the pronounced inclination towards practical learning, students recognized several organizational constraints of in-person teaching. Many respondents indicated that limited flexibility was a principal concern, while some specifically mentioned equipment restrictions as obstacles to beneficial learning experiences. Several students acknowledged the disadvantages of face-to-face education; nonetheless, they predominantly highlighted their enjoyment and the knowledge gained from experiential learning techniques while recognizing certain logistical challenges. For example, one student noted: “Less flexibility and time constraints can make experimentation and problem-solving more difficult compared to online”.

Students appreciated the specific advantages provided by online learning formats, especially in accommodating individual learning preferences and schedules. The self-paced learning aspect was regularly emphasized, with students valuing the opportunity to revisit difficult elements and advance through the curriculum at a pace suited to their own level of understanding. Temporal flexibility emerged as a notable benefit, allowing students to interact with educational materials at individually convenient times instead of conforming to rigid institutional schedules. Another student responded: “Online learning gives me more time to go over the materials at my own pace, which helps me understand the concepts better. I can revisit recordings and reflect more before trying things out”.

The limitations of online learning in hardware-intensive educational settings were expressed clearly and consistently in student feedback. The lack of interaction with tangible hardware components was identified as the primary restriction, with students noting that being unable to engage with real systems resulted in significant gaps in understanding. Reduced levels of participation were often reported, with students indicating that purely theoretical methods did not sustain the interest and motivation gained through practical contact. One of the responses was: “Lack of physical access to devices limits practical skills and real-time troubleshooting experience”.

Student preferences indicated a clear agreement supporting hybrid learning models, with almost all respondents selecting a combination of online and in-person procedures. One student noted: “I prefer hybrid. I like learning online, but I also want to try the devices in class sometimes.” A portion of students favored exclusively face-to-face learning, whilst just a few preferred online-only methods. This distribution shows a deeper awareness of the complementary advantages of different educational methods. For example, one of the responses was “the face-to-face experience for sure, it would also make me excited to come to class since I can see the object that I’m studying”.

Student reactions to VR control interfaces were largely positive, with the majority of participants indicating that the technology appeared intuitive after short adaptation periods. The level of enthusiasm remained elevated, with students expressing considerable involvement and curiosity in the immersive control experience. Although initial problems were recognized, they were generally overcome quickly through practice. For example, one student said: “It was fun! A little hard at first, but I got used to it. It felt cool to move the robot with VR”.

The testing experience offered students significant insights into the nuances and functionalities of combined VR-IoT systems. Students were repeatedly surprised by the precision of VR-to-physical system translation, with several expressing their admiration for how accurately the robotic arm mirrored their virtual actions. This immediate feedback generated significant learning opportunities that led to improved understanding of system integration principles and the role of calibration in complex technological systems. One response was: “I loved the VR, I wasn’t expecting the arm to have so many movements, but overall it was very simple to understand”.

Some technical and usability difficulties were identified through student testing experiences, providing important recommendations for system improvement. The accuracy requirements for obtaining fine motor control via VR interfaces caused difficulties for several students, especially those executing elaborate or delicate movements. One student noticed: “There was a bit of lag, but almost undetectable; it did not make the interaction less enjoyable”. The adaptation period necessary for students unfamiliar with VR technology occasionally caused early obstacles to effective system use. Students offered constructive recommendations for development, including an improved interface design with more explicit visual feedback. One of the suggestions was: “instead of buttons that we have to press, it would be easier if the arm moved using motion recognition with the VR controller(s)”.

The experimental procedure provided students with new insights into technology integration possibilities and practical applications. Many students were interested to discover VR’s capability for managing physical IoT devices, having previously perceived VR primarily as an instrument for entertainment or visualization. This experience improved understanding of real-time communication protocols, including MQTT and WebSocket implementations in practical applications, offering applied examples of theoretical principles gained in courses. For example, one student noted: “I learned how powerful and efficient the integration between IoT and VR technologies can be. It was fascinating to see how a VR system could seamlessly control a physical robotic arm using real-time communication”.

5.3. Comparative Discussion and Limitations

Our quantitative and qualitative evaluations indicate that students appreciate both in-person and virtual reality learning environments. Nonetheless, students show a marginal preference for in-person instruction due to its interactive nature and the immediate assistance available from educators. The quantitative analysis indicated that face-to-face learning was rated statistically significantly higher on perceived benefits, while both formats exhibited minimal perceived downsides. The qualitative comments were consistent with this, emphasizing the significance of physical engagement while also recognizing the flexibility and self-directed learning afforded by VR.

Our findings align with existing research indicating that educational robotics enhances student engagement in academic tasks, and they add a novel dimension by demonstrating these effects within a hybrid environment over a semester. Students’ favorable reactions to our VR-controlled robotic arm system are consistent with the findings of Zeng et al. [11], who used their 6-DOF iArm system to show notable gains in problem abstraction and algorithm design skills. Unlike their study, which only looked at conventional programming interfaces, our hybrid approach showed that students valued hands-on experience while also appreciating the immediate nature of VR engagement. The face-to-face learning preference found in our quantitative analysis is supported by the work of Kwantongon et al. [12] on PLC-controlled robotic arms, in which skill development required direct manipulation and real-time feedback. Our study adds to this area by showing that VR interfaces provide clear benefits, such as individualized scheduling and opportunities for repeated practice, while achieving similar levels of enjoyment. This suggests that combining the two approaches could improve learning outcomes more than using either one separately.

The technical difficulties with VR accuracy and calibration resemble the limitations reported in research on AR-based robotic control. Xue et al. [18] discovered issues with their RoSTAR system’s calibration, while Konstantinos et al. [17] observed that the HoloLens-based system they used had precision limitations of less than 1 cm. Students still reported early adaptation and precision problems when performing tasks requiring precise motor control, even though our VR technique avoids some of the spatial registration issues that come with AR applications. Unlike the AR studies, which mainly concentrated on technical feasibility, our evaluation showed that, after a short period of adaptation, these initial challenges were largely overcome through practice and students reported high intuitiveness scores.

The theoretical potential shown in earlier VR robotics research is supported by our results. Erdei et al. [19] discovered that students using their digital twin lab solved problems much more quickly than those using traditional documentation-based learning, even though their study was restricted to a single session with ten participants. Their results are supported and extended by our study, which involved 31 participants and was carried out over the course of a semester. It shows both short-term usability benefits and long-term engagement and learning insights.

Bolano et al. [21], Christopoulos et al. [22], and Maddipatla et al. [23] used purely virtual approaches, so their educational outcomes differ significantly from ours. These studies showed that combining VR and robotics was technically feasible, but they lacked a thorough evaluation with students. Our hybrid approach can fill this gap by using virtual reality’s versatility and visualization capabilities while preserving the tactile and instantaneous feedback of a physical system. The qualitative comments we received repeatedly underlined the importance of recognizing that virtual actions have real-world repercussions, a component not found in purely simulated environments.

The qualitative data, which show increased student interest in IoT technology, strongly support the meta-analytical findings of Ouyang et al. [9] that educational robotics positively affects learning attitudes. However, our study goes beyond attitude assessment to show understanding of technology integration and the development of practical skills. The significant relationships between learning experience ratings and the ease of switching between control systems suggest that exposure to a variety of interaction modalities may improve technological adaptability and increase students’ interest in this field.

This study has limitations that must be taken into consideration when evaluating the results and designing future research. The relatively small sample of 31 participants constrains the generalizability of the findings to larger and more diverse groups of students. For comparison, the majority of earlier VR- or AR-based robotics studies included only short one-session evaluations or smaller groups (between 8 and 30 students) [11,12,19,24,25,26], and only one of them had 75 participants [20]. The exposure time, although longer than in most comparable studies, may still be too short to capture important aspects of long-term knowledge retention and skill development that could result from extended interaction with VR-controlled systems.

6. Conclusions

This study presented an innovative approach to the educational robotics domain by combining the use of a real robotic arm with its “Digital Twin”, providing students with both hands-on and virtual learning experiences. In contrast to earlier digital twin or hybrid research, this work provides clear evidence of improved engagement, understanding, and technical skill development through a systematic quantitative and qualitative evaluation. These findings demonstrate the methodology’s novelty and importance for developing immersive and hybrid robotics education.

The experiment involved 31 third-year engineering students (19 information engineering and 12 applied electronics), who participated in two stages. In the first part, students operated an actual robotic arm with typical keyboard inputs. In the second phase, they used a Meta Quest 2 headset to control the robotic arm via a virtual reality interface. Lesson plans guided students through basic robotic arm operation and IoT communication protocols (WebSocket and MQTT) to advanced VR integration, which included reset functionality and incremental joint control. After completing both phases, students answered a survey with open-ended, qualitative questions and quantitative Likert-scale questions about their satisfaction, usability, and perceived difficulty of controlling the robotic arm as well as their preferences for in-person, online, and hybrid learning.

Both the quantitative and the qualitative results indicate that students thought in-person and virtual reality (VR) learning were beneficial; however, they somewhat preferred in-person training since it was more hands-on and allowed them to interact directly with teachers. Quantitative analysis revealed a statistically significant advantage for in-person instruction in terms of reported learning benefits, while the perceived disadvantages of both modalities were minimal. These patterns were supported by qualitative data, which emphasized the benefits of online formats’ flexibility and self-paced learning as well as the importance of in-person interactions and real-time feedback.

Students responded well to the use of VR-controlled robotic arms, appreciating the system’s usability and educational potential. The majority of participants indicated a greater understanding of system integration and real-time communication protocols, despite the fact that early usability issues were identified. These findings were supported by correlational analyses, which demonstrated that more positive learning experiences and more insight were associated with perceived intuitiveness and ease of transition across control systems.

Overall, the findings highlight how online and in-person learning settings complement each other. The study found that hybrid models that use immersive technologies, such as virtual reality, may improve technical education students’ engagement, flexibility, and conceptual understanding. VR-controlled robotic arms, in particular, provide a stimulating and scalable way to teach complex IoT and robotics concepts, allowing hands-on learning for larger class sizes or remote learners.

Future work aims to improve both the technology implementation and the evaluation methodology. We will enhance the system’s performance by reducing latency, increasing responsiveness, and exploring more intuitive interaction methods, such as gesture-based or voice-activated controls in virtual reality environments. From an educational perspective, larger and more diverse student groups will be included to better understand the scalability of VR-integrated IoT education and its impact on short-term engagement and on long-term information retention and skill development.

Author Contributions

Conceptualization, N.G.; formal analysis, A.-F.P., R.P., M.G. and D.-A.C.; investigation: D.-A.C.; methodology, D.-A.C.; software, E.-O.M., D.-Ș.R., L.-N.P., C.-A.B., B.P., A.-V.S. and R.-F.N.; writing—original draft preparation, I.-A.B., D.-A.C. and N.G.; writing—review and editing, I.-A.B., D.-A.C. and N.G.; visualization, E.-O.M., D.-Ș.R., I.-A.B., D.-A.C., L.-N.P., C.-A.B., A.-F.P. and R.P.; supervision, N.G.; project administration, N.G. All authors have read and agreed to the published version of the manuscript.

Funding

The paper publication was funded from the PubArt program of National University of Science and Technology Politehnica Bucharest, 060042 Bucharest, Romania.

Institutional Review Board Statement

This study was exempted from ethical review and approval because it was designed as an anonymous survey with no risks to participants. Additionally, it was not intended as official human-subjects research; rather, it was conducted as a regular teaching activity. Participation was entirely voluntary, and no personally identifiable information was collected.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study, when they agreed to complete the given questionnaire. We used the following script: “Your involvement in this study is entirely voluntary, and you are free to withdraw at any moment without providing a justification. We will not gather any personal information that could be used to identify you because the study is anonymous. We promise to keep your responses private and use them exclusively for research. You consent to participate in the study under these terms by completing the provided questionnaire”.

Data Availability Statement

The data presented in this study are available on request from the corresponding author; they are not publicly available due to privacy restrictions.

Conflicts of Interest

Although authors Nicolae Goga and Maria Goga, and Alexandru-Filip Popovici and Ramona Popovici are married, their marital status had no impact on the study’s design, analysis, or reporting.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| Pearson’s r | Pearson’s correlation coefficient |
| p-value | Probability value |
| N | Sample size |
| Mean | Arithmetic average |
| SD | Standard deviation |
| SE | Standard error |
| t | t-statistic (from a t-test) |
| df | Degrees of freedom |
| p | Probability |
| Cohen’s d | Cohen’s d (effect size measure) |

Appendix A

In this section, the questions used for the quantitative analysis are presented.

On a scale from 1 to 5, where 1—Strongly Disagree, 2—Disagree, 3—Neutral, 4—Agree, 5—Strongly Agree.

1. Benefits of Face-to-Face Learning

   To what extent do you agree with the following statements regarding face-to-face learning in this IoT course?

   - Hands-on interaction with the robotic arm improved my understanding of its functionality.
   - Direct guidance from instructors during face-to-face sessions was beneficial.
   - Physical interaction with the robotic arm helped me grasp its mechanical constraints better.

2. Downsides of Face-to-Face Learning

   To what extent do you agree with the following statements regarding the challenges of face-to-face learning?

   - The lack of flexibility in face-to-face sessions made it harder to explore the system independently.
   - Waiting for physical access to the robotic arm limited my practice time.
   - I found the physical interface less intuitive compared to the VR interface.

3. Benefits of Online Learning (VR-Controlled Robotic Arm)

   To what extent do you agree with the following statements regarding online learning with the VR system?

   - The VR interface allowed for more experimentation and testing of commands.
   - The online environment gave me more time to reflect on the system’s behavior.
   - The VR interface was intuitive to use after an initial adaptation period.

4. Downsides of Online Learning (VR-Controlled Robotic Arm)

   To what extent do you agree with the following statements regarding the challenges of VR-based learning?

   - The lack of physical manipulation limited my understanding of the robotic arm’s mechanical constraints.
   - I encountered issues with system response time during VR interactions.
   - The VR interface required a significant adaptation period.

5. Challenges with VR Interface

   To what extent do you agree with the following statements regarding the VR interface?

   - The interface was user-friendly.
   - The system’s response time was adequate for precise movements.

6. Preferred Learning Mode

   Which learning method would you prefer for future IoT classes involving robotics and VR?

   - Face-to-Face
   - Online (VR-based)
   - Hybrid (Combination of both)

7. Learning Experience with Robotic Arm System

   Rate your overall learning experience with the keyboard control system.

   - Very Poor
   - Poor
   - Neutral
   - Good
   - Very Good

8. Initial Impressions of VR Interface

   How intuitive did you find the VR interface for controlling the robotic arm?

   - Very Difficult
   - Difficult
   - Neutral
   - Easy
   - Very Easy

9. Learning Experience with VR System

   Rate your overall learning experience with the VR-based control system.

   - Very Poor
   - Poor
   - Neutral
   - Good
   - Very Good

10. Insights on IoT and VR Technologies

    What new insights did you gain about IoT or VR technologies through this experiment?

    - No new insights
    - Somewhat informative
    - Informative
    - Very informative

References

- Saenz Zamarrón, D.; Arana de las Casas, N.I.; García Grajeda, E.; Alatorre Ávila, J.F.; Jorge Uday, N.A. Educational Robot Arm Platform. Comput. Sist. 2020, 24.

- Rukangu, A.; Tuttle, A.; Johnsen, K. Virtual Reality for Remote Controlled Robotics in Engineering Education. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021.

- Mulero-Pérez, D.; Zambrano-Serrano, B.; Enrique, R.Z.; Fernandez-Vega, M.; Garcia-Rodriguez, J. Enhancing Robotics Education Through XR Simulation: Insights from the X-RAPT Training Framework. Appl. Sci. 2025, 15, 10020.

- Ghobadi, M.; Shirowzhan, S.; Ghiai, M.M.; Mohammad Ebrahimzadeh, F.; Tahmasebinia, F. Augmented Reality Applications in Education and Examining Key Factors Affecting the Users’ Behaviors. Educ. Sci. 2023, 13, 10.

- Makhataeva, Z.; Varol, H. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.

- Răstoceanu, F.; Ciubotaru, B.-I.; Rădoi, I.; Marian, C. Extended Analysis Using NIST Methodology of Sensor Data Entropy. U.P.B. Sci. Bull. Ser. C 2021, 83, 121–132.

- Vergara, D.; Rubio, M.P.; Lorenzo, M. New Approach for the Teaching of Concrete Compression Tests in Large Groups of Engineering Students. J. Prof. Issues Eng. Educ. Pract. 2017, 143, 05016009.

- Uslu, N.A.; Yavuz, G.O.; Usluel, Y.K. A systematic review study on educational robotics and robots. Interact. Learn. Environ. 2022, 31, 5874–5898.

- Ouyang, F.; Xu, W. The effects of educational robotics in STEM education: A multilevel meta-analysis. Int. J. STEM Educ. 2024, 11, 7.

- Pasalidou, C.; Lytridis, C.; Tsinakos, A.; Fachantidis, N. Augmented reality and robotics in education: A systematic literature review. Comput. Hum. Behav. Artif. Hum. 2025, 4, 100157.

- Zeng, C.; Zhou, H.; Ye, W.; Gu, X. iArm: Design an Educational Robotic Arm Kit for Inspiring Students’ Computational Thinking. Sensors 2022, 22, 2957.

- Kwantongon, J.; Suamuang, W.; Kamata, K. A Teaching Demonstration Set of a 5-DOF Robotic Arm Controlled by PLC. Int. J. Inf. Educ. Technol. 2022, 12, 1458–1462.

- ISO 9283; Manipulating Industrial Robots—Performance Criteria and Related Test Methods. International Organization for Standardization: Geneva, Switzerland, 1998.

- Lopez-Neri, E.; Luque-Vega, L.F.; González-Jiménez, L.E.; Guerrero-Osuna, H.A. Design and Implementation of a Robotic Arm for a MoCap System within Extended Educational Mechatronics Framework. Machines 2023, 11, 893. [Google Scholar] [CrossRef]

- Rokbani, N.; Neji, B.; Slim, M.; Mirjalili, S.; Ghandour, R. A Multi-Objective Modified PSO for Inverse Kinematics of a 5-DOF Robotic Arm. Appl. Sci. 2022, 12, 7091. [Google Scholar] [CrossRef]

- Shintemirov, A.; Taunyazov, T.; Omarali, B.; Nurbayeva, A.; Kim, A.; Bukeyev, A.; Rubagotti, M. An Open-Source 7-DOF Wireless Human Arm Motion-Tracking System for Use in Robotics Research. Sensors 2020, 20, 3082. [Google Scholar] [CrossRef]

- Konstantinos, L.; Christos, G.; Nikos, F.; Niki, K.; Sotiris, M. AR based robot programming using teaching by demonstration techniques. Procedia CIRP 2021, 97, 459–463. [Google Scholar] [CrossRef]

- Xue Er Shamaine, C.; Qiao, Y.; Henry, J.; McNevin, K.; Murray, N. RoSTAR: ROS-based Telerobotic Control via Augmented Reality. In Proceedings of the 2020 IEEE 22nd International Workshop on Multimedia Signal Processing (MMSP), Tampere, Finland, 21–24 September 2020; pp. 1–6. [Google Scholar] [CrossRef]

- Erdei, T.I.; Krakó, R.; Husi, G. Design of a Digital Twin Training Centre for an Industrial Robot Arm. Appl. Sci. 2022, 12, 8862. [Google Scholar] [CrossRef]

- Tarng, W.; Wu, Y.-J.; Ye, L.-Y.; Tang, C.-W.; Lu, Y.-C.; Wang, T.-L.; Li, C.-L. Application of Virtual Reality in Developing the Digital Twin for an Integrated Robot Learning System. Electronics 2024, 13, 2848. [Google Scholar] [CrossRef]

- Bolano, G.; Roennau, A.; Dillmann, R.; Groz, A. Virtual Reality for Offline Programming of Robotic Applications with Online Teaching Methods. In Proceedings of the 2020 17th International Conference on Ubiquitous Robots (UR), Kyoto, Japan, 22–26 June 2020; pp. 625–630. [Google Scholar] [CrossRef]

- Christopoulos, A.; Coppo, G.; Andolina, S.; Priore, S.L.; Antonelli, D.; Salmas, D.; Stylios, C.; Laakso, M.-J. Transformation of Robotics Education in the Era of COVID-19: Challenges and Opportunities. IFAC-PapersOnLine 2022, 55, 2908–2913. [Google Scholar] [CrossRef]

- Maddipatla, Y.; Tian, S.; Liang, X.; Zheng, M.; Li, B. VR Co-Lab: A Virtual Reality Platform for Human–Robot Disassembly Training and Synthetic Data Generation. Machines 2025, 13, 239. [Google Scholar] [CrossRef]

- Wu, W.; Li, M.; Hu, J.; Zhu, S.; Xue, C. Research on Guidance Methods of Digital Twin Robotic Arms Based on User Interaction Experience Quantification. Sensors 2023, 23, 7602. [Google Scholar] [CrossRef]

- Gonzalez, F.G. A hybrid physical-virtual educational robotic arm. ASEE Comput. Educ. 2025, 14, 1–19. [Google Scholar] [CrossRef]

- Rukangu, A.; Morelock, J.R.; Johnsen, K.; Moyaki, D.O. Virtual and physical robots in engineering education: A study on motivation and learning with augmented reality. CoED 2025, 14, 1–27. [Google Scholar] [CrossRef]

- Luo, L.; Weng, D.; Hao, J.; Tu, Z.; Jiang, H. Viewpoint-Controllable Telepresence: A Robotic-Arm-Based Mixed-Reality Telecollaboration System. Sensors 2023, 23, 4113. [Google Scholar] [CrossRef]

- Wu, Y.; Zhao, B.; Li, Q. The Teleoperation of Robot Arms by Interacting with an Object’s Digital Twin in a Mixed Reality Environment. Appl. Sci. 2025, 15, 3549. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).