Abstract

Serious games are increasingly recognized as valuable tools in educational settings due to their capacity to enhance learning through engaging and interactive experiences. However, existing usability evaluation instruments often fail to account for the specific features and pedagogical goals inherent to serious games, thereby limiting their utility in iterative design improvement. This study presents SGU (Serious Games Usability), a usability evaluation questionnaire specifically designed for serious games and developed through a systematic literature review (SLR) following the methodology of Kitchenham and Charters. The resulting tool is a standardized questionnaire intended to comprehensively assess key usability dimensions, including gameplay mechanics, interface design, narrative engagement, and gamification elements. The questionnaire was applied in multiple serious game case studies developed within educational contexts, allowing for the identification of recurring usability issues and context-specific design challenges. The findings highlight the instrument’s relevance, clarity, and diagnostic potential, demonstrating its practical value for evaluating and refining serious games. This research contributes to the field by addressing a critical gap in usability assessment and offering a structured, context-sensitive approach for improving user experience in educational game design.

1. Introduction

The integration of interactive technologies into education has significantly transformed how knowledge is accessed, experienced, and retained [1,2,3]. Among these technologies, serious games have emerged as powerful tools that combine entertainment and pedagogy, utilizing gameplay mechanics to support instructional objectives [3,4,5]. Their ability to increase learner motivation, foster active participation, and promote knowledge retention has led to their increasing adoption across diverse educational settings, from primary education to professional training contexts [6,7,8,9].

Despite their pedagogical potential, the success of serious games depends heavily on their usability, that is, how effectively, efficiently, and satisfactorily users can interact with the system to achieve specific learning goals [10,11,12]. Traditional usability evaluation methods, originally designed for general-purpose software, often fall short when applied to serious games because they overlook game-specific dimensions such as narrative coherence, gamification elements, player immersion, and learning flow [13,14]. Consequently, evaluations based on these generic instruments may fail to identify critical user experience issues that directly impact learning effectiveness.

Recent research has underscored the lack of consensus regarding appropriate instruments to assess usability in serious games [15]. While some researchers adapt standardized questionnaires like the System Usability Scale (SUS) or Questionnaire for User Interaction Satisfaction (QUIS), others develop custom instruments tailored to specific projects or game genres. However, these approaches are rarely generalizable and often lack validation in a variety of educational contexts. Moreover, most existing tools do not comprehensively account for the pedagogical and interactive features that distinguish serious games from traditional software applications.

To address this gap, this study proposes a usability evaluation instrument, SGU (Serious Games Usability), specifically designed to assess serious games. The questionnaire was developed based on a systematic literature review (SLR), following the guidelines of Kitchenham and Charters [16], with the goal of identifying and synthesizing existing methods, tools, and usability dimensions pertinent to serious games.

Mainstream usability questionnaires such as SUS, CSUQ, or SUMI, while effective in assessing general-purpose software, show important limitations when applied to serious games. These tools primarily capture aspects such as interface efficiency, task completion, and user satisfaction but do not account for pedagogical alignment, narrative immersion, gamification mechanics, or immersive interfaces, all of which are central to the effectiveness of serious games in educational contexts. These shortcomings directly motivated the development of the present study, which introduces a domain-specific instrument capable of evaluating both functional and pedagogical aspects of serious games.

The proposed questionnaire was applied in multiple serious game case studies to assess its effectiveness in identifying usability issues and informing design improvements. Through this application, the study demonstrates the clarity, adaptability, and practical relevance of the instrument for both researchers and developers seeking to evaluate serious games within educational frameworks.

The main objective of this research is to provide a validated, structured, and context-sensitive usability questionnaire that fills a critical gap in the evaluation of serious games. The expected contributions include a theoretically grounded instrument, empirical evidence of its application, and a framework for future usability studies in game-based learning environments.

This paper is structured as follows: Section 2 reviews the background on serious games, their main characteristics, and usability concepts; Section 3 presents the methodology and protocol of the systematic literature review; Section 4 describes the design of the usability questionnaire; Section 5 reports its application in real-world case studies; Section 6 discusses the results, insights, and limitations; and Section 7 concludes with final reflections and suggestions for future research.

2. Background

The evaluation of the usability of serious games presents unique challenges due to the complexity and multifunctional nature of these applications. Unlike conventional software systems, serious games incorporate diverse components, such as narrative structures, gamification mechanics, and immersive interfaces, that serve both entertainment and instructional purposes [17,18]. However, current usability evaluation instruments often fail to fully capture these multidimensional aspects [19,20]. As a result, when tools that are not specifically designed for serious games are applied, important elements of the user experience may be overlooked, leading to incomplete or misleading usability assessments. This section provides an overview of the theoretical and empirical foundations related to usability, laying the groundwork for the development of a tailored questionnaire-based evaluation instrument.

2.1. Video Games and Serious Games

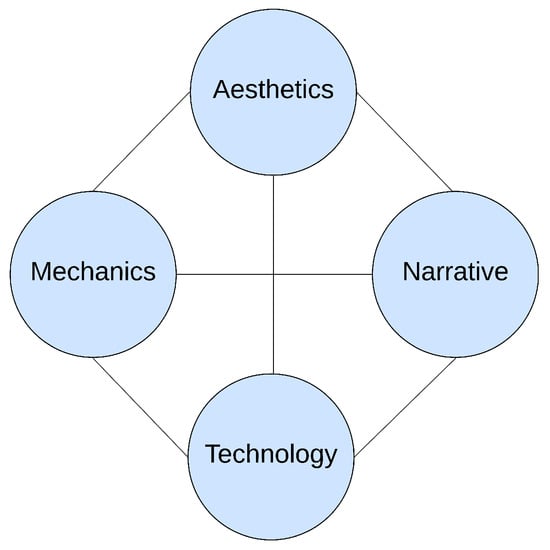

A video game is a form of interactive software primarily designed for entertainment purposes, utilizing computing technology to enable real-time human–machine interaction. Gameplay can occur on a wide range of electronic platforms, including televisions, personal computers, consoles, and other similar devices [21]. According to Lewis, a video game can be defined as “a computer-based environment that displays a game on a screen whose rules have been pre-programmed” [22]. Video games typically consist of four fundamental components: aesthetics, mechanics, narrative, and technology [23].

- Aesthetics: This is one of the most crucial layers of a video game as it directly interfaces with the player. It shapes the sensory experience of the game, encompassing what the player sees and hears, and significantly influences immersion and engagement.

- Mechanics: These represent the formal rules of the game. They define what actions the player can perform and establish the objectives to be achieved within the game environment.

- Narrative: The storyline or sequence of events that unfolds throughout the game. It provides context and depth, often giving players a sense of agency through interactive decision-making.

- Technology: Refers to the tools, platforms, and technical infrastructure used to develop and execute the game. Virtually any computing technology may serve as a foundation for video game development.

These fundamental elements (Figure 1) are adapted and expanded in serious games, which apply similar interactive structures to serve educational or training purposes beyond pure entertainment. Serious games are broadly defined as digital games developed for purposes that go beyond entertainment [24]. One of the earliest formal definitions was proposed by the American researcher Clark C. Abt in 1970, who described serious games as those designed with explicit educational objectives rather than solely for user enjoyment [25]. Later, in 2008, Julián Álvarez expanded this definition by emphasizing that serious games are digital applications that integrate instructional elements, such as tutoring, teaching, training, communication, and information, with playful and technological components drawn from traditional video games [26]. The purpose of this hybridization is to transform practical or useful content into a fun and engaging experience for the user. When serious games are developed for educational communication, they tend to exhibit a set of distinct features that differentiate them from purely recreational games.

Figure 1. Elements of a video game.

2.2. Characteristics of Serious Games

To develop a robust and context-aware usability evaluation instrument tailored to serious games, it was first essential to identify and define the core criteria that characterize them. This process followed an integrative approach that combined theoretical analysis, empirical evidence, and expert validation [6,27,28,29].

The identification of criteria began with a comprehensive review of the academic literature on serious game design, usability in educational software, and gamification strategies. Key references included conceptual frameworks, methodological guidelines, and applied case studies from the domains of educational technology and game-based learning [29]. In parallel, these findings were contrasted with principles from established software development methodologies to ensure alignment with usability evaluation practices. Furthermore, the research process included reflective analysis and expert input, supported by the direct examination of various educational serious games.

Through this triangulated approach, the researchers identified a set of recurring and meaningful characteristics considered fundamental for the design and evaluation of serious games, some of which are necessary, while others are sufficient to classify a video game as a serious game [29]. These characteristics were subsequently organized based on their functional relevance and logical necessity within the serious game framework. The following attributes emerged as consistently essential or supportive components in the effective design and assessment of serious games.

- (1) Serious Objectives: This is the defining feature of a serious game. Content must be intentionally designed to support educational or training goals, ensuring that the user engages in meaningful interactions that lead to learning outcomes. Without this component, the game cannot be classified as “serious”.

- (2) Storytelling: A well-integrated storyline enhances comprehension, contextualizes tasks, and provides motivation by immersing the player in a progressive virtual world. Required in all serious games, narrative elements often strengthen cognitive and emotional engagement.

- (3) Gamification techniques: Gamification involves applying game-like mechanics to reinforce engagement and motivation [30]. In serious games, these techniques serve to structure progression and reward learning behavior. Common techniques include

  - Points: Numerical values assigned to quantify performance and progress.

  - Levels: Unlockable stages that become accessible as users meet specific objectives.

  - Leaderboards: Ranking systems to introduce competition and benchmarking.

  - Badges: Visual rewards given for completing specific tasks or achieving milestones, serving as indicators of progress and competence.

  - Challenges/Missions: Clearly defined tasks or objectives within the game that guide user behavior and provide structured progression.

  - Onboarding: The process through which a game intuitively teaches players its rules and goals without requiring textual instruction.

  - Customization: Allows players to personalize elements of the game world, such as avatars, names, or environments, enhancing their emotional connection.

- (4) Gameplay: Rules and interactions that define how the player engages with the game. Well-designed mechanics are fundamental to promoting active learning and maintaining user motivation.

- (5) Multimedia: The use of combined media (images, audio, text, video, and animation) enriches the delivery of information and increases the multisensory appeal of the game. While not mandatory, multimedia elements enhance clarity, engagement, and cognitive retention.

- (6) Interfaces: This includes both hardware and software components that facilitate interaction with the game. Intuitive, accessible, and responsive interfaces significantly impact usability, though some minimalistic games may function without advanced UI structures.

- (7) World visualization: This refers to the spatial and interactive environments that support user exploration. Although not always necessary, offering freedom of movement and environmental feedback can increase immersion and facilitate situated learning.

- (8) Character design: The inclusion of identifiable or controllable characters supports personalization, role-play, and user agency. This feature enhances engagement but may not be essential in all serious game formats, such as abstract simulations.

These core characteristics, when appropriately balanced, allow serious games to fulfill both their pedagogical and experiential objectives. While these traits may vary in form depending on the context and design goals, they collectively define the architecture of an effective serious game.

Among the diverse applications of serious games, educational video games represent one of the most prominent and impactful subtypes [31,32]. These interactive digital environments are intentionally designed to support the acquisition of academic or procedural knowledge through structured activities embedded in game-based contexts [33]. Although serious games are also used in fields such as health, defense, and social awareness, educational games focus specifically on cognitive development, skill acquisition, and knowledge reinforcement in both formal and informal learning settings [34,35,36,37,38]. This perspective is supported by Gros, who defines educational games as software designed to facilitate learning by combining didactic objectives with interactive, game-based mechanics [39].

Given their pedagogical focus and widespread adoption, educational video games offer a practical and representative domain for applying usability evaluation instruments. For this reason, they were selected as case studies in the present research to validate the proposed usability questionnaire.

2.3. Usability in Software and Educational Contexts

Usability is defined by the ISO 9241-11 standard [40] as:

The extent to which a system, product or service can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use [40].

This definition emphasizes the importance of designing products that help users achieve their goals while providing a reliable basis for measuring and comparing the usability of different software systems. In practical terms, usability refers to the ease with which a user can perform a given task using a system or product.

According to ISO 9241-11, the three core quality components of usability are

- Effectiveness: The degree to which users are able to successfully complete tasks and achieve intended outcomes. A product that enables users to consistently meet their objectives is considered effective.

- Efficiency: The amount of effort required by users to achieve their objectives. An efficient product allows users to complete tasks with minimal effort and resource expenditure.

- Satisfaction: The user’s subjective response to the product. Satisfaction encompasses the emotional and psychological aspects of the user experience.
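As an illustration of the three components above, the following minimal sketch computes one possible operationalization of each: effectiveness as the task completion rate, efficiency as the mean time spent on completed tasks, and satisfaction as the mean post-task rating. These measures, the `TaskAttempt` structure, the 1 to 5 rating scale, and the sample data are assumptions made for this example; they are not taken from the standard or from this study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskAttempt:
    completed: bool    # whether the participant finished the task
    seconds: float     # time spent on the task
    satisfaction: int  # post-task rating, assumed to be on a 1-5 scale


def summarize_usability(attempts):
    """Return (effectiveness, efficiency, satisfaction) for a list of attempts."""
    if not attempts:
        raise ValueError("at least one attempt is required")
    # Effectiveness: share of attempts that ended in task completion.
    effectiveness = sum(a.completed for a in attempts) / len(attempts)
    # Efficiency: mean time on task, counting completed attempts only.
    completed_times = [a.seconds for a in attempts if a.completed]
    efficiency = mean(completed_times) if completed_times else None
    # Satisfaction: mean of the subjective ratings.
    satisfaction = mean(a.satisfaction for a in attempts)
    return effectiveness, efficiency, satisfaction


# Hypothetical session data for four task attempts.
attempts = [
    TaskAttempt(True, 40.0, 4),
    TaskAttempt(True, 60.0, 5),
    TaskAttempt(False, 90.0, 2),
    TaskAttempt(True, 50.0, 3),
]
effectiveness, efficiency, satisfaction = summarize_usability(attempts)
print(effectiveness, efficiency, satisfaction)
```

Other operationalizations are equally plausible (for example, counting errors for effectiveness); the point is only that each component maps to a separately measurable quantity.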

Evaluating the usability of software products offers a wide range of benefits [41], including

- Reduced learning costs and a corresponding decrease in the need for user support and assistance.

- Optimization of design, redesign, and maintenance processes.

- Increased conversion rates from casual users to active users or clients.

- Enhanced reputation and credibility of the product or brand.

- Improvement in overall quality and performance.

- Better user well-being by reducing stress, increasing satisfaction, and increasing productivity.

In educational contexts, usability not only facilitates ease of use but also directly influences learners’ motivation, comprehension, and retention. Unlike traditional productivity software, educational systems must balance functional usability with pedagogical effectiveness. Therefore, accurate and context-aware usability evaluation becomes essential when designing or adapting serious games for effective learning.

3. Systematic Literature Review

This research adopts a systematic literature review (SLR) methodology to comprehensively explore the current landscape of usability evaluation in serious games. The goal is to identify, analyze, and synthesize existing methods, techniques, and instruments that assess usability in this context, ultimately supporting the development of a dedicated evaluation tool tailored to the unique characteristics of serious games.

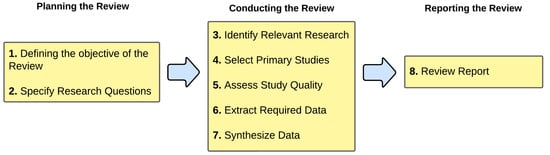

The review was conducted following the well-established guidelines proposed by Kitchenham and Charters [16]. This structured approach ensures the reliability and completeness of the evidence collected and strengthens the methodological foundation of the proposed instrument; see Figure 2.

Figure 2. The systematic literature review steps.

The protocol for the systematic literature review (SLR) was structured into three main phases: (1) planning the review, (2) conducting the review, and (3) reporting the review. Each phase was carefully designed to ensure that only high-quality and relevant studies were included, thereby contributing valuable insights into usability evaluation practices in serious game environments.

3.1. Planning the Review

Usability is a critical factor in the effectiveness of serious games, especially in educational contexts where user engagement, cognitive retention, and task performance must converge. Despite its importance, the instruments currently employed to evaluate usability in serious games are often inadequate. Many of these tools originate from general software or web usability contexts and are not designed to address the distinctive features of serious games, such as interactive gameplay, narrative immersion, and pedagogical alignment.

To guide the scope and focus of the review, the following research questions (RQs) were formulated; see Table 1. These questions are intended to support the identification, classification, and analysis of existing user-centered evaluation approaches employed in the usability assessment of both general and serious games:

Table 1. Research questions.

3.2. Conducting the Review

The search was conducted across five major digital databases to ensure broad coverage of high-quality academic literature:

- IEEE Xplore [42];

- ACM Digital Library [43];

- Elsevier (ScienceDirect) [44];

- Google Scholar [45];

- Springer Link [46].

The primary keywords selected for the search process were “methods”, “techniques”, “instruments”, “evaluation”, “usability”, “serious games”, and “games”. These terms were derived directly from the research questions and refined through synonym analysis and semantic expansion to capture all relevant variations across sources.

The search strings were constructed following the steps recommended in the SLR guidelines, which include

- Identifying the key concepts of the research questions.

- Determining alternative spellings and synonyms for each concept.

- Verifying and refining keywords based on relevant preliminary studies.

- Using the Boolean operator “OR” to incorporate synonymous or related terms.

- Using the Boolean operator “AND” to combine the main concepts into structured queries.

To organize and facilitate the construction of search strings, a table was developed to classify the keywords into four thematic groups; see Table 2. Column “A” included terms related to evaluation along with their Spanish equivalents; Column “B” focused on terms associated with serious games and their translations; Column “C” contained keywords pertaining to usability; and Column “D” comprised terms related to methods, techniques, and instruments relevant to usability evaluation.

Table 2. Search string keywords.

This structured classification helped ensure consistency and completeness in the formulation of search queries.

Using this structure, the following search strings (SS) were defined:

- SS1: (A1 OR A2) AND (C1) AND (B1 OR B2 OR B3 OR B4 OR B5)

- SS2: (A3 OR A4 OR A5) AND (C2) AND (B6 OR B7 OR B8 OR B9 OR B10)

- SS3: (D1 OR D2 OR D3) AND (A1 OR A2) AND (C1) AND (B1 OR B2 OR B3 OR B4 OR B5)

- SS4: (D4 OR D5 OR D6) AND (A3 OR A4 OR A5) AND (C2) AND (B6 OR B7 OR B8 OR B9 OR B10)
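The composition rule behind these strings (synonyms joined with OR inside a group, groups joined with AND) can be sketched as follows. The keyword lists are placeholders assembled from the primary keywords named earlier, plus the hypothetical synonym "assessment"; the actual terms A1 to D6 are those listed in Table 2, and the helper names `any_of` and `all_of` are illustrative.

```python
def any_of(terms):
    """Join synonymous terms with OR and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def all_of(groups):
    """Combine concept groups with AND, one parenthesized group per concept."""
    return " AND ".join(any_of(g) for g in groups)


# Placeholder keyword groups standing in for the columns of Table 2.
evaluation = ["evaluation", "assessment"]              # column A (assumed terms)
games = ["serious games", "games"]                     # column B (shortened)
usability = ["usability"]                              # column C
approaches = ["methods", "techniques", "instruments"]  # column D

# Same shape as SS1: (A...) AND (C...) AND (B...)
ss1 = all_of([evaluation, usability, games])
# Same shape as SS3: (D...) AND (A...) AND (C...) AND (B...)
ss3 = all_of([approaches, evaluation, usability, games])

print(ss1)
print(ss3)
```

Each database has its own query syntax, so a string built this way would normally still need adapting to the target search interface.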

The search strings were iteratively refined through pilot tests conducted in Scopus and Web of Science, aiming to balance sensitivity (maximizing the retrieval of relevant publications) and specificity (minimizing irrelevant results). During this process, terms that produced excessive noise, such as “game design” or “playability,” were excluded after preliminary testing due to their low precision in retrieving relevant studies.

All retrieved articles were cataloged and organized using SR-Accelerator, an online tool that facilitated the management of metadata, inclusion/exclusion tracking, and classification [47]. Two independent reviewers conducted the screening process. Discrepancies were resolved through discussion, and in cases of persistent disagreement, a third reviewer acted as arbiter. In addition, Mendeley was employed as a reference management tool for proper citation and bibliography control throughout the research process.

The selection process for relevant studies was carried out to ensure methodological rigor and alignment with the research objectives. First, a preliminary screening was performed on all documents retrieved during the search process. All records were then evaluated according to predefined inclusion and exclusion criteria (see Table 3).

Table 3. Inclusion and exclusion criteria.

In particular, one of the main exclusion criteria was applied to studies describing serious games without explicit usability evaluation data. This led to the omission of high-impact projects such as “Galaxy Zoo” [48], “Sea Hero Quest” [49], and “Borderlands Science” [50]. Despite their wide reach and scientific relevance, the lack of usability reporting made them unsuitable for this analysis, underscoring a broader gap in the literature and reinforcing the need for standardized instruments like the one proposed in this study.

Then, an in-depth analysis was conducted on the studies that had been previously shortlisted. This phase involved a thorough reading of the full text of each document to accurately assess its content and relevance. Following this, each study was assessed using a set of quality evaluation criteria, enabling us to verify its methodological rigor and its contribution to the objectives of this research.

To evaluate the methodological quality and relevance of the documents identified during the selection process, a quality assessment questionnaire was developed and applied to each study that was successfully retrieved. This questionnaire was designed to assess how well each document aligned with the primary objectives and thematic scope of the systematic literature review.

The instrument consisted of seven quality evaluation questions (QEs). These questions helped determine the extent to which each study contributed to understanding usability evaluation in games and serious games. Each question addressed a specific aspect of methodological or conceptual relevance. The set of quality evaluation questions is presented in Table 4.

Table 4. Quality Evaluation Questions.

To assess the quality of the selected studies, a binary scoring system was applied to the quality assessment questions. For each question, a study received a score of 1 if it met the corresponding criterion and a score of 0 if it did not. This approach ensures consistency and objectivity in evaluating the methodological contribution of each document.

At the end of the assessment, only the studies that achieved a minimum score of 5 out of 7 possible points were selected for inclusion in the final analysis, ensuring that the reviewed literature contributed meaningfully to the construction of the proposed usability evaluation instrument for serious games.
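The scoring rule described above can be expressed as a short sketch. The study identifiers and their answers are hypothetical; only the seven questions, the binary scoring, and the threshold of 5 come from the text.

```python
QE_COUNT = 7   # number of quality evaluation questions (Table 4)
MIN_SCORE = 5  # minimum score required for inclusion


def quality_score(answers):
    """Sum binary scores: 1 for each criterion met, 0 otherwise."""
    if len(answers) != QE_COUNT:
        raise ValueError(f"expected {QE_COUNT} answers, got {len(answers)}")
    return sum(1 for met in answers if met)


def is_included(answers):
    """A study is retained when it reaches the minimum score."""
    return quality_score(answers) >= MIN_SCORE


# Hypothetical assessments: one boolean per quality evaluation question.
studies = {
    "study_a": [True, True, True, False, True, True, False],    # score 5
    "study_b": [True, False, True, False, True, False, False],  # score 3
}
selected = [sid for sid, answers in studies.items() if is_included(answers)]
print(selected)
```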

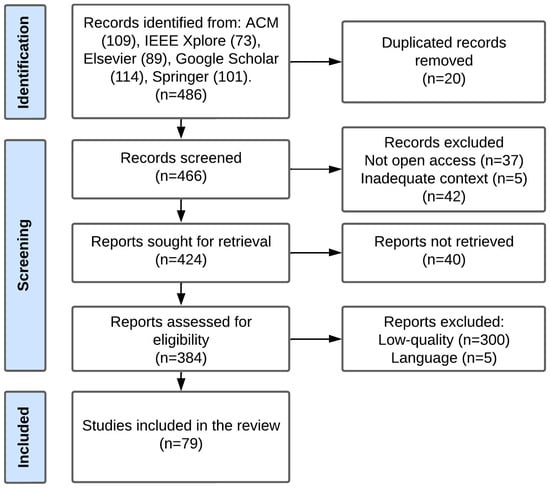

To ensure methodological rigor and minimize selection bias, a structured screening and selection process was conducted in alignment with best practices for systematic literature reviews. The complete process is summarized in the flow diagram shown in Figure 3, which illustrates the identification, screening, and inclusion phases.

Figure 3. PRISMA flow diagram for the literature review.

Phase 1: Identification. A comprehensive search strategy was executed across five major scientific databases: ACM Digital Library (109), IEEE Xplore (73), Elsevier ScienceDirect (89), Google Scholar (114), and SpringerLink (101), yielding a total of 486 records. After applying an automated and manual duplicate removal process, 20 redundant entries were excluded, resulting in 466 unique records retained for the initial screening phase. This stage ensured a wide and representative coverage of the literature related to usability evaluation in games and serious games.

Phase 2: Screening. The 466 records were systematically reviewed based on titles, abstracts, and keywords to determine their relevance to the research scope. During this step, 42 articles were excluded: 37 due to restricted access (non-open access) and 5 for lacking contextual alignment with the focus of usability evaluation. Consequently, 424 studies were considered eligible for full-text retrieval. However, 40 of these could not be accessed in full, leaving 384 full-text articles. These were then assessed in detail using the predefined inclusion and exclusion criteria (see Table 3).

Phase 3: Inclusion. Each of the 384 full-text articles underwent a rigorous eligibility and quality assessment process. Based on the quality evaluation criteria (see Table 4), 300 studies were excluded due to insufficient methodological quality, and 5 were excluded due to language incompatibility (i.e., not available in English or Spanish). As a result, a total of 79 high-quality studies were selected for inclusion in the final review synthesis. These papers constitute the core evidence base from which the findings of this systematic review were derived.
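The counts reported across the three phases can be checked with simple arithmetic. The sketch below uses only the figures stated in the text; the variable names are illustrative.

```python
# Records retrieved per database (Phase 1).
identified = {
    "ACM Digital Library": 109,
    "IEEE Xplore": 73,
    "Elsevier ScienceDirect": 89,
    "Google Scholar": 114,
    "SpringerLink": 101,
}

total_records = sum(identified.values())       # records identified
unique_records = total_records - 20            # after duplicate removal
eligible = unique_records - (37 + 5)           # after title/abstract screening
full_text = eligible - 40                      # full texts actually retrieved
included = full_text - 300 - 5                 # after quality and language checks

print(total_records, unique_records, eligible, full_text, included)
```

Each intermediate value matches the number reported in the corresponding phase, which confirms that the flow is internally consistent.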

The complete list of the 79 included publications is presented in Table 5. The list includes the assigned paper ID (generated using the first two letters of the author’s last name, the first letter of their first name, and year of publication), the article’s title, the first author, and the bibliographic reference.

Table 5. List of scientific publications included in this review.
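The paper ID scheme described above can be sketched as follows. The text specifies only the three components; their capitalization, the four-digit year, and the absence of separators are assumptions made for this example, as are the function name `paper_id` and the sample author.

```python
def paper_id(last_name, first_name, year):
    """Build an ID from two letters of the last name, one of the first name, and the year."""
    last_name = last_name.strip()
    first_name = first_name.strip()
    if len(last_name) < 2 or not first_name:
        raise ValueError("need at least two letters of last name and one of first name")
    # Assumed formatting: 'Sm' + 'J' + '2020' with no separators.
    return f"{last_name[:2].capitalize()}{first_name[0].upper()}{year}"


# Hypothetical author, used only to show the shape of the result.
example_id = paper_id("Smith", "Jane", 2020)
print(example_id)
```

A scheme this short can produce collisions (two authors with similar names publishing in the same year), so a real catalog would need a tie-breaking rule, which the text does not describe.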

3.3. Reporting of the Review

The analysis presented here emphasizes not only what methods, techniques, and instruments have been used in prior research but also how they have been applied and what limitations they present in the context of game-based learning environments.

RQ1: What user-centered methods, techniques, and instruments are used to evaluate the usability of games and serious games?

Based on the studies reviewed in the SLR, it was observed that most of the methods used in the context of serious games are adaptations or direct applications of approaches originally developed for general software or entertainment games. However, while the overall structure of evaluation strategies remains similar, their application in serious games often demands additional pedagogical considerations.

Two primary user-centered methods were consistently identified in the reviewed literature:

- Empirical Methods: These involve observing users as they interact with the game to collect behavioral data and detect potential usability issues.

- Inquiry Methods: These rely on obtaining user feedback through instruments such as questionnaires, interviews, or focus groups, capturing users’ subjective experiences and perceptions.

These methods provide complementary perspectives: empirical approaches capture real-time interaction patterns and behaviors, while inquiry approaches gather subjective insights on usability, satisfaction, and experience.

Each method is associated with specific techniques that facilitate the usability assessment process, as summarized in Table 6. Most of these techniques are applicable in both entertainment and serious game contexts, although their focus may differ depending on the educational objectives of the game.

Table 6. Primary studies on user-centered methods and techniques for usability evaluation.

Among the identified techniques, questionnaires are the only ones that consistently use standardized instruments for usability assessment. Other techniques, such as observation or interviews, typically rely on qualitative protocols rather than validated measurement tools.

As shown in Table 7, the SLR identified several widely used questionnaire-based instruments. These are frequently adapted to suit the context of serious games but often lack comprehensive coverage of all the features specific to these environments (narrative, gameplay dynamics, gamification, etc.).

Table 7. Primary studies employing user-centered questionnaire instruments for usability evaluation.

RQ2: What are the key characteristics of the user-centered methods, techniques, and instruments identified in RQ1?

This research question aims to describe the core attributes of the user-centered methods, techniques, and instruments identified in the previous stage. Specifically, it seeks to clarify how these approaches are applied and what kind of insights they are capable of generating in the context of usability evaluation for games and serious games.

The literature review confirms that two primary user-centered methods are widely used in this domain:

- Empirical methods are based on observing the behavior and performance of users as they interact with the game. These methods rely heavily on user participation to generate real-time usability data. Evaluators typically include a moderator and observers who guide and monitor test sessions. Participants are asked to complete specific tasks while their actions and reactions are documented.

- Inquiry methods focus on gathering data from users through verbal or written responses. These methods involve structured interaction between the evaluator and the users, either individually or in groups, to understand their experiences, perceptions, and satisfaction with the system.

Table 8 summarizes the main techniques associated with each method:

Table 8. User-centered evaluation techniques for serious games.

Among all techniques, only questionnaires are supported by standardized usability instruments; see Table 9. In contrast, techniques like interviews or focus groups are often semi-structured or ad hoc and lack formal scoring schemes. Below is a summary of the most commonly used questionnaires identified through the SLR:

Table 9.

Characteristics of usability questionnaires for games and serious games.

RQ3. How are the identified user-centered methods, techniques, and instruments applied in the usability evaluation of games and serious games?

This research question aims to describe the practical application of usability evaluation strategies in user-centered studies of games and serious games. It synthesizes how empirical and inquiry-based methods are operationalized, how techniques are implemented during usability assessments, and how standardized instruments are integrated into the evaluation process.

- Empirical methods involve direct observation of users performing predefined tasks within the game environment. These methods focus on capturing behavioral data, interaction patterns, and real-time problem-solving processes. The presence of an evaluator (moderator) who guides the session and observes participants is common.

- Inquiry methods focus on gathering data from users through verbal or written responses. These methods involve structured interaction between the evaluator and the users, either individually or in groups, to understand their experiences, perceptions, and satisfaction with the system. The quality of the data collected depends on clear definitions of the evaluation objectives and usability indicators.

The following table (Table 10) summarizes how each empirical technique is applied in practice:

Table 10.

Application of usability techniques for games and serious games.

In serious game evaluations, standardized questionnaires are widely employed to assess usability dimensions such as effectiveness, satisfaction, workload, and player experience. These instruments are typically administered immediately after users complete a predefined set of gameplay tasks, ensuring reliable and context-relevant responses.

The process involves assigning users a task list aligned with core game interactions, followed by the application of one or more questionnaires. When combined with other methods (observation or think-aloud), a minimum of 15 participants is recommended; when applied independently, at least 30 users are advised to ensure robustness [125].

Commonly used instruments include the SUS, NASA-TLX, GEQ, SUMI, PSSUQ, QUIS, CSUQ, and ad hoc questionnaires. These tools provide structured, often multidimensional data that support consistent usability assessment and facilitate comparisons across studies and contexts. Their use contributes to greater methodological rigor in the evaluation of serious games [14].

In summary, the systematic literature review provided a comprehensive and structured overview of the current state of user-centered usability evaluation in serious games. It identified the main methods, techniques, and standardized instruments employed in practice, as well as their specific characteristics and application protocols. The findings highlight a predominant reliance on empirical and inquiry-based approaches, with varied levels of methodological rigor and contextual adaptation. These insights not only confirm the need for evaluation frameworks tailored to the unique features of serious games but also serve as a foundational reference for the development of a context-sensitive usability evaluation instrument aligned with educational goals and user engagement dynamics.

4. Development of the Usability Evaluation Instrument

This section describes the design process of the proposed usability evaluation instrument, SGU (Serious Games Usability), which is tailored for serious games. Based on the findings of the SLR, existing questionnaires used to assess usability in digital systems were analyzed to identify limitations when applied to serious games. A structured GAP analysis was then conducted to systematically compare these instruments against the multidimensional requirements specific to serious game environments. The final result is a questionnaire-based tool that integrates both adapted and newly created items, ensuring a comprehensive assessment of usability.

4.1. GAP Analysis

To systematically support the development of the proposed instrument, a GAP analysis was carried out. This analysis aimed to critically compare the scope of widely used usability evaluation instruments with the specific multidimensional requirements of serious games. The process began by identifying a set of core usability dimensions that emerged from the systematic literature review, which are essential for capturing the full spectrum of user experience in serious games. These dimensions include serious objectives, storytelling, gamification techniques, gameplay, multimedia integration, interface design, world visualization, and character visualization.

Each of these dimensions reflects a critical component of how users engage with and perceive serious games, encompassing both functional usability and experiential qualities tied to learning, immersion, and emotional response. The selected instruments were then mapped against these dimensions to evaluate their coverage and to identify areas where existing tools fall short. The results of this GAP analysis provide the empirical foundation for designing a new, context-aware usability questionnaire that addresses the limitations of existing approaches and better aligns with the unique characteristics of serious game environments.

The findings of this analysis are summarized in Table 11, which illustrates the coverage of each reviewed instrument across the main dimensions of serious games.

Table 11.

GAP analysis of existing instruments.

The symbols have the following meaning:

- “-” means that the instruments consider the dimensions minimally.

- “+” means that the instruments consider the dimensions substantially.

- “NA” means that the instruments do not take the dimensions into consideration.

Based on the comparative results presented in Table 11, it was possible to identify significant gaps in the coverage provided by existing usability tests when evaluated against the specific requirements of serious games. Notably, most instruments demonstrated limited or no integration of critical dimensions such as serious objectives, narrative and story visualization, world and character visualization, gamification techniques, and multimedia integration.

These omissions suggest that current tools, although effective in general software or traditional game contexts, are insufficiently equipped to evaluate the experiential and pedagogical elements that are central to serious games.
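The mapping step of the GAP analysis can be illustrated with a short Python sketch. It is not part of the original study: the function name `uncovered_dimensions` is hypothetical, and the coverage marks for "Instrument A" and "Instrument B" are placeholders chosen only to show the mechanics, not the values reported in Table 11. The dimension names and the "+", "-", and "NA" symbols follow the text above.

```python
# Dimensions named in the GAP analysis (Section 4.1).
DIMENSIONS = [
    "serious objectives",
    "storytelling",
    "gamification techniques",
    "gameplay",
    "multimedia integration",
    "interface design",
    "world visualization",
    "character visualization",
]

# Hypothetical placeholder marks, NOT the values reported in Table 11.
# Any dimension missing from an instrument's entry is treated as "NA".
coverage: dict[str, dict[str, str]] = {
    "Instrument A": {"interface design": "+", "gameplay": "-"},
    "Instrument B": {"gameplay": "+", "storytelling": "-", "interface design": "+"},
}


def uncovered_dimensions(
    coverage: dict[str, dict[str, str]],
    dimensions: list[str],
    level: str = "+",
) -> list[str]:
    """Return the dimensions that no instrument covers at the given level."""
    return [
        d
        for d in dimensions
        if not any(marks.get(d, "NA") == level for marks in coverage.values())
    ]


gaps = uncovered_dimensions(coverage, DIMENSIONS)
print(gaps)
```

With these placeholder marks, every dimension except gameplay and interface design is reported as a gap, which is the kind of result that would motivate adapting or creating items for the uncovered dimensions.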

4.2. Final Structure of the Instrument

The absence of specific evaluation items related to core components of serious games—such as learning outcomes, storyline integration, world and character design, and gamification strategies—can result in assessments that overlook fundamental usability aspects. Consequently, evaluations that rely solely on general-purpose instruments may yield incomplete or misleading insights about the game’s effectiveness and user experience in educational or training scenarios.

To address this shortcoming, we developed a tailored usability evaluation instrument in the form of a structured questionnaire. The design of this tool was informed by the evidence and gaps identified in the SLR and grounded in user-centered inquiry methodology, which prioritizes the collection of direct user feedback regarding interaction, engagement, and perceived usability [131].

The construction of SGU was guided by three complementary principles. First, existing items from the SUS and PSSUQ were adapted to the educational context to ensure relevance while retaining comparability with widely used benchmarks [131]. Second, validated constructs, such as items from MEEGA+, were selectively incorporated to address serious objectives and gamification strategies [132], and questions from the Game Engagement Questionnaire (GEQ) were included to capture narrative immersion and visualization aspects [133]. Third, new items were created when no existing tool adequately covered critical dimensions identified in the GAP analysis, particularly those related to the explicit linkage between gameplay and pedagogy or multimedia integration. At the same time, exclusions were applied to avoid redundancy or conceptual misalignment, for example, by omitting workload measures from NASA-TLX and overlapping satisfaction constructs from QUIS. This structured process ensured that the resulting questionnaire integrates functional usability and pedagogical effectiveness in a coherent and context-sensitive manner.

The resulting instrument is designed to assess a comprehensive range of usability factors relevant to serious games, including gameplay mechanics, user engagement, educational integration, narrative immersion, and interface design. By systematically addressing these dimensions, the questionnaire offers a holistic and reliable evaluation framework that captures both the functional and pedagogical aspects of usability.

Structurally, the proposed tool comprises 21 items distributed across eight dimensions, each aligned with the theoretical framework previously established in this study. All items are formulated using a Likert-type scale, enabling consistent data collection, user perception quantification, and comparative analysis across different serious game implementations. The complete structure of the SGU is detailed in Table 12.

Table 12.

Proposed usability evaluation questionnaire for serious games.

To complete the questionnaire, each participant carefully read each item and indicated their opinion by selecting a response that best reflected their level of satisfaction. A 5-point Likert scale was used for this purpose (Table 13), with the following equivalence:

Table 13.

Likert scale.

To facilitate comparative analysis and enhance interpretability, the responses collected through the 5-point Likert scale were transformed to a standardized 0–100 scale, consistent with the approach used in the System Usability Scale (SUS). This conversion allows for direct comparison with existing usability benchmarks while preserving the ordinal nature of the original responses.

This study adopts a linear scaling approach more suitable for unidirectional questionnaires. Specifically, each response score is scaled proportionally to a 100-point range using a direct rule-of-three formula. For instruments based on a 5-point Likert scale, the transformation is expressed as

$$S_i = \frac{x_i}{5} \times 100$$

where

- $x_i$ represents the response to item $i$ on the 5-point Likert scale, and $S_i$ is the corresponding score on the 100-point range;

- The value 5 denotes the maximum point on the Likert scale.

This method provides an intuitive and interpretable representation of usability perceptions, particularly when all questionnaire items are phrased in a consistent direction (i.e., higher scores indicate better usability). It also simplifies the aggregation and comparison of results across different case studies and game-based evaluations.
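The rule-of-three scaling described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and averaging the scaled item scores to obtain a participant-level score is an assumption, since the text does not state how items are aggregated.

```python
from statistics import mean

LIKERT_MAX = 5  # maximum point on the 5-point Likert scale


def likert_to_percent(response: int, likert_max: int = LIKERT_MAX) -> float:
    """Scale one Likert response proportionally to a 100-point range."""
    if not 1 <= response <= likert_max:
        raise ValueError(f"response must be between 1 and {likert_max}")
    return response * 100 / likert_max


def questionnaire_score(responses: list[int]) -> float:
    """Average the scaled item scores of one participant (assumed aggregation)."""
    return mean(likert_to_percent(r) for r in responses)


print(likert_to_percent(4))            # 80.0
print(questionnaire_score([5, 4, 3]))  # 80.0
```

Under this rule, the lowest possible response (1) maps to 20 rather than 0, so the scaled scores of a 5-point item effectively span 20 to 100.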

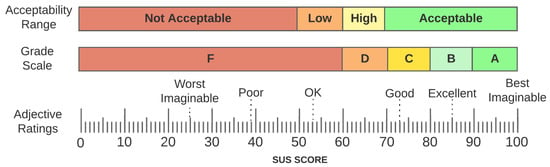

To interpret usability results, we adopted the scoring system of the System Usability Scale (SUS) developed by John Brooke [134]. According to this model, a score below 68 suggests the presence of significant usability issues, while a score above 68 indicates acceptable usability (Figure 4). In addition, the SUS provides three complementary classification models that offer deeper insight into system usability:

Figure 4.

Bangor scale.

- Acceptance Range: Scores are categorized as acceptable (above 70), marginal-high (60–69), marginal-low (50–59), or not acceptable (below 50).

- Classification Scale: A score above 90 earns an A (excellent), between 80 and 89 corresponds to a B (outstanding), and between 70 and 79 is rated C (good). Scores between 60 and 69 are assigned a D (fair), while any score below 60 receives an F (poor), signaling a critical need for usability improvements.

- Adjectives classification: Provides a qualitative interpretation of the score by assigning a descriptive label based on user perception. For example, scores below 25 are categorized as worst imaginable, those below 39 as poor, and scores around 52 as OK. A score near 73 is described as good, while scores close to 85 are considered excellent. The highest range, between 86 and 100, corresponds to the label best imaginable, indicating an exceptional user experience.

These classification models provide a comprehensive framework for evaluating and interpreting the usability of serious games, enabling developers and researchers to identify both strengths and areas for improvement based on user feedback.
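As an illustration, the acceptance ranges and letter grades listed above can be expressed as simple threshold checks. The following Python sketch is not part of the original study; the function names are hypothetical, and the treatment of boundary values (for example, a score of exactly 70 or exactly 90) is an assumption, since the text does not specify it.

```python
def acceptance_range(score: float) -> str:
    """Map a 0-100 score to the acceptance ranges described above."""
    # Assumption: each lower bound is inclusive.
    if score >= 70:
        return "acceptable"
    if score >= 60:
        return "marginal-high"
    if score >= 50:
        return "marginal-low"
    return "not acceptable"


def letter_grade(score: float) -> str:
    """Map a 0-100 score to the A-F classification scale described above."""
    # Assumption: each lower bound is inclusive.
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    if score >= 70:
        return "C"
    if score >= 60:
        return "D"
    return "F"


print(acceptance_range(88.5), letter_grade(88.5))  # acceptable B
print(acceptance_range(55.0), letter_grade(55.0))  # marginal-low F
```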

5. Application of the Usability Instrument in Case Studies

SGU was applied to three serious game projects: El Tesoro del Ser Politécnico [1], Flagschool [135], and Funcarac [136]. Each game targets distinct educational and cultural objectives.

“El Tesoro del Ser Politécnico” aims to foster ethical values within the polytechnic community through an exploratory game set on campus. Players navigate virtual scenarios, face interactive challenges, and uncover historical facts and ethical principles. The game is accessible through web browsers on both mobile and desktop platforms through the following link: https://tesoropolitecnico.epn.edu.ec/ (accessed on 29 August 2025).

“Flagschool” is designed to enhance critical thinking, problem-solving, creativity, and cognitive speed through the study of vexillology. The project uses interactive elements to create an engaging and educational experience. The game is available for download through the following repository: https://github.com/David-Morales-M/Vexillology-Serious-Game.git (accessed on 29 August 2025).

Finally, “Funcarac” focuses on preserving and promoting the intangible cultural heritage of indigenous agricultural communities in Ecuador. Through gameplay, users explore ancestral practices and worldviews rooted in agro-ecological knowledge. The game is accessible through web browsers on both mobile and desktop platforms through the following link: https://saminay.epn.edu.ec/ (accessed on 29 August 2025).

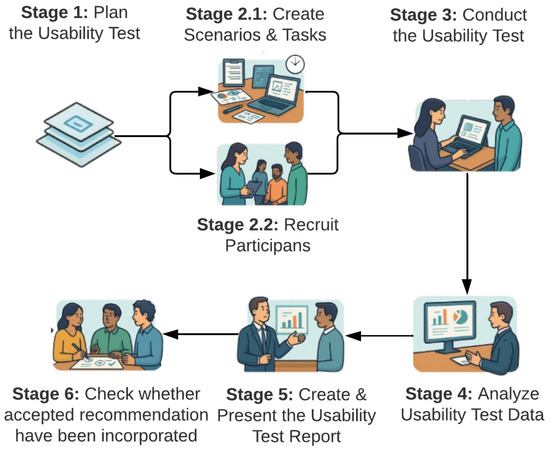

To evaluate usability, the protocol proposed by Abhay Rautela [137] was followed (see Figure 5). Questionnaires were selected as the primary evaluation technique due to their efficiency in capturing users’ perceptions and experiential feedback.

Figure 5.

Usability protocol.

A minimum of 15 participants were recruited for each evaluation. As supported by Laura Faulkner’s findings [138], testing with five users can identify up to 85% of usability issues, while larger samples increase the likelihood of uncovering a broader range of problems.

Prior to testing, participants received the necessary documentation, including informed consent and a detailed task list. They then interacted with each game by completing predefined tasks and freely exploring the interface. Upon completion, participants responded to the usability evaluation questionnaire, providing feedback based on their experience.

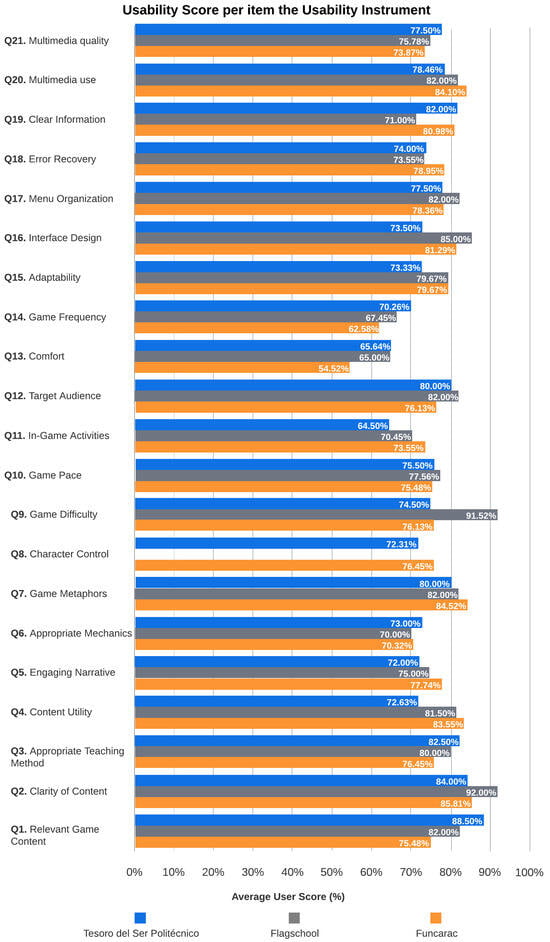

The proposed usability evaluation instrument was applied across three serious games (El Tesoro del Ser Politécnico, Flagschool, and Funcarac) to assess its ability to capture the multidimensional nature of user experience in diverse educational game contexts; see Figure 6.

Figure 6.

Average user score per item in the usability instrument.

Overall, the questionnaire revealed high levels of user satisfaction across the three case studies, with most items exceeding a 70% positive response threshold. This indicates that the instrument successfully identified areas where each game delivered meaningful experiences, as well as those requiring refinement.
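A per-item analysis of this kind can be sketched as follows. The Python code below is only an illustration under stated assumptions: the function names and the sample responses are hypothetical (they are not data from the case studies), and the 70-point cut-off is taken from the threshold mentioned above.

```python
from statistics import mean


def item_averages(
    responses: list[dict[str, int]], likert_max: int = 5
) -> dict[str, float]:
    """Average each item's scaled (0-100) score across participants."""
    items = sorted({item for r in responses for item in r})
    return {
        item: mean(r[item] * 100 / likert_max for r in responses if item in r)
        for item in items
    }


def items_below(averages: dict[str, float], threshold: float = 70.0) -> list[str]:
    """List the items whose average falls below the threshold."""
    return [item for item, avg in averages.items() if avg < threshold]


# Hypothetical responses from three participants (not study data).
sample = [
    {"Q1": 5, "Q8": 3},
    {"Q1": 4, "Q8": 4},
    {"Q1": 5, "Q8": 3},
]

averages = item_averages(sample)
flagged = items_below(averages)
print(flagged)  # ['Q8']
```

Items flagged in this way point to the areas that may require refinement, while the remaining items indicate where the game already delivers a satisfactory experience.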

For example, items related to serious objectives (Q1–Q4) consistently scored highly, with “Funcarac” achieving 88.5% in Q1 and “Flagschool” reaching 92.0% in Q2. These results confirm that the questionnaire effectively captures users’ perceptions of content relevance and learning effectiveness for evaluating the “serious” component of serious games.

Items addressing interface usability (Q16–Q19) also showed strong performance, particularly in “El Tesoro del Ser Politécnico” and “Flagschool”, where scores remained above 78%, suggesting that the questionnaire reliably measures key aspects of system usability such as navigability, information clarity, and error recovery.

In terms of engagement and gamification (Q9–Q11), “Flagschool” notably outperformed the others in Q9 (91.52%), evidencing the instrument’s sensitivity in capturing motivational elements central to user engagement. Similarly, Q8, focused on the player’s perceived control over the game character, revealed moderate ratings in “El Tesoro del Ser Politécnico” (76.4%) and lower results in “Funcarac” (72.3%), which underscores the diagnostic capacity of this item in identifying limitations in character interaction and decision-making.

However, it is important to note that dimensions such as world visualization and character design are not mandatory components in all serious games. Their relevance depends largely on the pedagogical goals, game mechanics, and intended user experience of the game in question. For instance, some serious games may prioritize abstract cognitive tasks, textual information delivery, or simulation-based logic without employing rich visual environments or controllable avatars. In such cases, questions targeting world or character representation (e.g., Q7 and Q8) may be considered optional or omitted entirely, depending on the nature of the application. This flexibility reinforces the adaptability of the proposed questionnaire to a wide range of serious game designs, maintaining its validity while ensuring contextual relevance.

In summary, the application of the SGU across three distinct case studies validates its utility and robustness. It demonstrates not only a high level of user comprehensibility and applicability but also the capacity to generate actionable insights that reflect both functional usability and the educational effectiveness of serious games. The results reaffirm the instrument’s relevance as a holistic and scalable evaluation tool for projects aiming to integrate learning objectives with engaging user experiences.

6. Discussion

The development and application of SGU, the proposed usability evaluation instrument, represent a substantive advancement in addressing the methodological gaps identified in the systematic literature review (SLR). By integrating domains such as serious objectives, narrative immersion, character and world visualization, gamification strategies, multimedia design, and educational alignment, the tool offers a holistic framework specifically tailored to the complexities of serious games, going far beyond traditional usability tools like the SUS or CSUQ, which primarily focus on general system performance and interface satisfaction.

A critical analysis of the instrument’s structure reveals that its multidimensional approach provides a more comprehensive and relevant evaluation framework for serious games. Unlike conventional tools such as the SUS or CSUQ, which are limited in capturing pedagogical or narrative aspects, the proposed questionnaire incorporates constructs from MEEGA+ and GEQ to better reflect the unique characteristics of serious games. This customization allows evaluators to measure not only ease of use and engagement but also the alignment of the game with learning objectives and its ability to foster immersive experiences.

Importantly, the instrument’s design accommodates flexibility by including an N/A (not applicable) response option, which acknowledges that not all serious games require certain features, such as character or world visualization, to achieve their educational objectives. This adaptability enhances the questionnaire’s applicability across diverse genres of serious games, where such elements may be absent or intentionally minimized without compromising the seriousness of the game or its pedagogical intent.
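One possible way to handle N/A responses during scoring is sketched below in Python. The study does not describe how N/A answers enter the computation, so excluding them from both the numerator and the denominator is an assumption made here for illustration; the function name `score_with_na` and the sample responses are hypothetical.

```python
from typing import Optional


def score_with_na(
    responses: dict[str, Optional[int]], likert_max: int = 5
) -> Optional[float]:
    """Scale a participant's answers to 0-100, ignoring N/A (None) items."""
    answered = [value for value in responses.values() if value is not None]
    if not answered:
        return None  # every item was marked N/A, so no score can be computed
    return sum(answered) * 100 / (likert_max * len(answered))


# Hypothetical answers for a game without a visible world or avatar:
# Q7 and Q8 are marked N/A and do not lower the score.
answers = {"Q6": 4, "Q7": None, "Q8": None, "Q9": 5}
print(score_with_na(answers))  # 90.0
```

Excluding N/A items in this way keeps scores comparable between games that implement all dimensions and games that intentionally omit some of them.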

Compared to instruments identified in the SLR, the proposed tool demonstrates significantly greater coverage and contextual specificity. While existing instruments like MEEGA+, GEQ, or SUMI offer partial solutions, none effectively integrate both pedagogical relevance and usability performance in a unified structure. The proposed tool fills this gap by merging validated items from various sources and organizing them into coherent dimensions that align with the core attributes of serious games. This structure enables evaluators to assess not only functionality and satisfaction but also narrative consistency, learning alignment, and engagement depth.

Furthermore, SGU facilitates comparative analysis through its standardized Likert-scale format and the subsequent transformation into a SUS-equivalent percentage scale. This allows for intuitive interpretation and benchmarking, which is particularly valuable for iterative design cycles and cross-game usability assessments. For example, games like Flagschool achieved over 90% on items related to educational clarity and challenge balance, suggesting high usability in cognitively demanding contexts. In contrast, Funcarac exhibited slightly lower results in emotional comfort and novelty, highlighting areas for design refinement.

These results are consistent with previous research showing that serious games with well-defined pedagogical goals often achieve higher usability ratings, whereas games emphasizing cultural narratives or novel experiences face greater challenges in maintaining emotional comfort and engagement. The observed differences across the case studies highlight that usability is not solely determined by interface quality but also by the integration of narrative depth, cultural context, and affective design. This underscores the importance of considering contextual and cultural variables in usability evaluations, as also noted in the recent literature. From a practical standpoint, these findings suggest that designers should prioritize both clarity of educational objectives and strategies to foster emotional immersion, ensuring that serious games are both instructive and engaging across diverse user populations.

Although SGU demonstrated its utility in the case studies, certain limitations must be acknowledged. The instrument has not yet undergone large-scale psychometric validation, and its reliability and sensitivity across different user demographics and game types require further exploration. Additionally, while the structured questionnaire offers practical advantages in terms of cost and scalability, it may benefit from being complemented with qualitative methods to capture more nuanced user feedback.

In conclusion, the proposed questionnaire presents a significant contribution to the domain of serious game evaluation. It provides a targeted, user-centered, and context-aware tool capable of measuring dimensions that were previously neglected in conventional usability frameworks. Its flexibility, clarity, and comprehensive scope position it as a robust instrument for both formative and summative assessments in educational, health, and training-oriented game environments. Future work will focus on empirical validation, refinement based on user feedback, and cross-cultural adaptation to support broader adoption.

7. Conclusions, Limitations, and Future Directions

This study introduced SGU (Serious Games Usability), a novel usability evaluation instrument that addresses methodological gaps in evaluating serious games. By integrating pedagogical alignment, narrative immersion, gamification, multimedia quality, and usability principles, SGU offers a structured and comprehensive framework that goes beyond the scope of traditional tools such as the SUS or CSUQ.

The main contribution of this research is the development of SGU, a domain-specific, multidimensional, and user-centered instrument that combines traditional usability criteria with game-specific aspects such as pedagogical value, engagement, and experiential design. Its application in three case studies demonstrated strong diagnostic capacity, effectively identifying strengths and usability challenges across diverse educational, cultural, and training-oriented game designs.

However, several limitations must be acknowledged. First, the sample sizes in the case studies were limited and relatively homogeneous, which may constrain the generalizability of the findings. Second, cultural and contextual differences in user perception were not systematically addressed, leaving open questions about the instrument’s applicability across different regions and populations. Third, although the inclusion of an N/A option provides flexibility, further validation is required to ensure consistency when applied across games of varying genres and levels of complexity. Finally, while the questionnaire items were derived from validated instruments and contextualized for serious games, future applications may reveal the need for further refinement or domain-specific adaptation depending on genre, user profile, or learning objectives.

Despite these limitations, the proposed tool offers strong potential for broader applicability. Its low implementation cost, clear dimensional structure, and ease of integration into iterative development cycles make it a practical tool for usability professionals, educators, and developers. Moreover, its compatibility with Likert-based scales and subsequent percentage normalization facilitates cross-study comparisons and benchmarking.

Future research should therefore prioritize large-scale psychometric validation of SGU, incorporating diverse participant profiles across different educational stages, cultural contexts, and subject domains. Cross-cultural adaptations will be essential for international adoption, while comparative studies with other usability frameworks can help establish its discriminant validity. Moreover, applying SGU to high-impact serious games, such as citizen science initiatives with millions of participants, could strengthen its robustness and relevance. To complement quantitative insights, qualitative approaches, including interviews and focus groups, should also be incorporated to capture nuanced cultural and contextual factors that may not be reflected in numerical data. Ultimately, SGU provides a practical, flexible, and user-centered approach to usability evaluation, supporting the development of more effective and inclusive serious games.

Author Contributions

Conceptualization, M.C.-T., L.B. and M.S.; methodology, M.C.-T., D.M.-M. and M.S.; validation, M.S., L.B. and P.A.-V.; formal analysis, M.C.-T., L.B. and D.M.-M.; investigation, M.C.-T., L.B. and D.M.-M.; writing—original draft preparation, M.C.-T., M.S., L.B., J.G., J.A. and D.M.-M.; writing—review and editing, M.S., D.M.-M., J.G., J.A. and P.A.-V.; supervision, M.C.-T.; project administration, M.S., M.C.-T. and P.A.-V. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Universidad de Las Américas—Ecuador as part of the internal research project 489. A.XIV.24.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

The authors wish to acknowledge the contribution of the “Laboratorio de Investigación de Sistemas de Información e Inclusión Digital”-LudoLab https://ludolab.epn.edu.ec/ (accessed on 22 July 2025) for its support in the research and publication of this article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Santórum, M.; Morales-Martínez, D.; Caiza, J.; Carrión-Toro, M.; Galindo, J.; Acosta-Vargas, P. Serious Games, Serious Impact: Enhancing Ethical Education Through Interactive Learning. In Proceedings of the 2024 International Conference on Computer, Information and Telecommunication Systems (CITS), Girona, Spain, 17–19 July 2024; pp. 1–8.

- Krajčovič, M.; Gabajová, G.; Matys, M.; Grznár, P.; Dulina, L.; Kohár, R. 3D Interactive Learning Environment as a Tool for Knowledge Transfer and Retention. Sustainability 2021, 13, 7916.

- Cook-Chennault, K.; Villanueva Alarcón, I.; Jacob, G. Usefulness of Digital Serious Games in Engineering for Diverse Undergraduate Students. Educ. Sci. 2022, 12, 27.

- Alrehaili, E.A.; Osman, H.A. A virtual reality role-playing serious game for experiential learning. Interact. Learn. Environ. 2022, 30, 922–935.

- Gurbuz, S.C.; Celik, M. Serious games in future skills development: A systematic review of the design approaches. Comput. Appl. Eng. Educ. 2022, 30, 1591–1612.

- Djaouti, D.; Alvarez, J.; Jessel, J.P. Classifying serious games: The G/P/S model. In Handbook of Research on Improving Learning and Motivation Through Educational Games; Advances in Game-Based Learning; IGI Global: Hershey, PA, USA, 2011; pp. 118–136.

- Vásquez, L.M.L. Juegos Con Propósitos Educativos Como Herramientas Para la Enseñanza de la Ética. Ph.D. Thesis, Universidad Nacional de Colombia, Bogotá, Colombia, 2017.

- Tavares, N. The use and impact of game-based learning on the learning experience and knowledge retention of nursing undergraduate students: A systematic literature review. Nurse Educ. Today 2022, 117, 105484.

- Lampropoulos, G.; Kinshuk. Virtual reality and gamification in education: A systematic review. Educ. Technol. Res. Dev. 2024, 72, 1691–1785.

- Aster, A.; Hütt, C.; Morton, C.; Flitton, M.; Laupichler, M.C.; Raupach, T. Development and evaluation of an emergency department serious game for undergraduate medical students. BMC Med. Educ. 2024, 24, 1061.

- Checa, D.; Miguel-Alonso, I.; Bustillo, A. Immersive virtual-reality computer-assembly serious game to enhance autonomous learning. Virtual Real. 2023, 27, 3301–3318.

- Tito Cruz, J.; Coluci, V.R.; Moraes, R. ORUN-VR2: A VR serious game on the projectile kinematics: Design, evaluation, and learning outcomes. Virtual Real. 2023, 27, 2583–2604.

- Marin, D.; Castrillo, J.; Bermúdez, G.S. Pruebas de la usabilidad en usuarios discapacitados. Rev. Repert. Científico 2012, 1, 55–65.

- Moreno-Ger, P.; Torrente, J.; Hsieh, Y.G.; Lester, W. Usability testing for serious games: Making informed design decisions with user data. Adv. Hum.-Comput. Interact. 2012, 2012, 369637.

- Da Silveira, A.C.; Martins, R.X.; Vieira, E.A.O. E-Guess: Evaluación de usabilidad para juegos educativos. RIED. Rev. Iberoam. Educ. Distancia 2021, 24, 245–263.

- Kitchenham, B.; Charters, S. Guidelines for performing Systematic Literature Reviews in Software Engineering; Technical Report EBSE-2007-01; Keele University: Keele, UK, 2007. [Google Scholar]

- Damaševičius, R.; Maskeliūnas, R.; Blažauskas, T. Serious Games and Gamification in Healthcare: A Meta-Review. Information 2023, 14, 105.

- Natucci, G.C.; Borges, M.A.F. The Experience, Dynamics and Artifacts Framework: Towards a Holistic Model for Designing Serious and Entertainment Games. In Proceedings of the 2021 IEEE Conference on Games (CoG), Copenhagen, Denmark, 17–20 August 2021; pp. 1–8.

- Alshar’e, M.; Albadi, A.; Jawarneh, M.; Tahir, N.; Al Amri, M. Usability Evaluation of Educational Games: An Analysis of Culture as a Factor Affecting Children’s Educational Attainment. Adv. Hum.-Comput. Interact. 2022, 2022, 9427405.

- Carlier, S.; Naessens, V.; De Backere, F.; De Turck, F. A Software Engineering Framework for Reusable Design of Personalized Serious Games for Health: Development Study. JMIR Serious Games 2023, 11, e40054.

- Frasca, G. Videogames of the Oppressed: Videogames as a Means for Critical Thinking and Debate. Master’s Thesis, Georgia Institute of Technology, Atlanta, GA, USA, 2001.

- Czernik, D.S.L. Videojuegos y alfabetización digital. Aula Innovación Educ. 2005, 1, 48–50.

- Schell, J. The Art of Game Design: A Book of Lenses; CRC Press: Boca Raton, FL, USA, 2008.

- Kars, L. What Are Serious Games? 2025. Available online: https://grendelgames.com/what-are-serious-games/#:~:text=Serious%20games%20are%20games%20with,and%20other%20businesses%20and%20industries (accessed on 20 May 2025).

- Abt, C.C. Serious Games; University Press of America: Lanham, MD, USA, 1987.

- Alvarez, J.; Michaud, L. Serious Games: Advergaming, Edugaming, Training and More; IDATE: Montpellier, France, 2008.

- Ferdig, R.; Winn, B. The Design, Play, and Experience Framework. In Handbook of Research on Effective Electronic Gaming in Education; IGI Global: Hershey, PA, USA, 2009; pp. 1010–1024.

- Carrion, M.; Santórum, M.; Flores, H.; Aguilar, J.; Perez, M. Serious Game, gamified applications, educational software: A comparative study. In Proceedings of the 2019 International Conference on Information Systems and Software Technologies (ICI2ST), Quito, Ecuador, 13–15 November 2019; pp. 55–62.

- Carrión Toro, M.d.C. iPlus Una Metodología Centrada en el Usuario Para el Diseño de Juegos Serios. Ph.D. Thesis, Escuela Politécnica Nacional, Quito, Ecuador, 2022.

- Deterding, S.; Sicart, M.; Nacke, L.; O’Hara, K.; Dixon, D. Gamification. using game-design elements in non-gaming contexts. In Proceedings of the CHI ’11 Extended Abstracts on Human Factors in Computing Systems, New York, NY, USA, 7–12 May 2011; CHI EA ′11. pp. 2425–2428.

- Ullah, M.; Amin, S.U.; Munsif, M.; Yamin, M.M.; Safaev, U.; Khan, H.; Khan, S.; Ullah, H. Serious games in science education: A systematic literature. Virtual Real. Intell. Hardw. 2022, 4, 189–209.

- Yu, Z.; Gao, M.; Wang, L. The Effect of Educational Games on Learning Outcomes, Student Motivation, Engagement and Satisfaction. J. Educ. Comput. Res. 2021, 59, 522–546.

- Didácticos, N. ¿Qué son Los Juegos Educativos? 2018. Available online: https://www.noedidacticos.com/blog/que-son-los-juegos-educativos-0de1c230067b (accessed on 20 May 2025).

- Hodhod, R.; Hardage, H.; Abbas, S.; Aldakheel, E.A. CyberHero: An Adaptive Serious Game to Promote Cybersecurity Awareness. Electronics 2023, 12, 3544.

- Marengo, A.; Pagano, A.; Soomro, K.A. Serious games to assess university students’ soft skills: Investigating the effectiveness of a gamified assessment prototype. Interact. Learn. Environ. 2024, 32, 6142–6158.

- Tan, C.K.W.; Nurul-Asna, H. Serious games for environmental education. Integr. Conserv. 2023, 2, 19–42.

- Sun, L.; Guo, Z.; Hu, L. Educational games promote the development of students’ computational thinking: A meta-analytic review. Interact. Learn. Environ. 2023, 31, 3476–3490.

- Guan, Y.; Qiu, Y.; Xu, F.; Fang, J. Effect of Game Teaching Assisted by Deep Reinforcement Learning on Children’s Physical Health and Cognitive Ability. Mob. Inf. Syst. 2022, 2022, 6150261.

- Gros Salvat, B. Certezas e interrogantes acerca del uso de los videojuegos para el aprendizaje. Comunicación. Revista Internacional de Comunicación Audiovisual; Editorial Universidad de Sevilla: Sevilla, Spain, 2009; Volume 1, Number 7; pp. 251–264.

- ISO 9241-11:2018; Ergonomics of Human-System Interaction—Part 11: Usability: Definitions and Concepts. ISO: Geneva, Switzerland, 2018. Available online: https://www.iso.org/standard/63500.html (accessed on 18 July 2025).

- Sánchez, W.O. La usabilidad en Ingeniería de Software: Definición y características. Revista Ing-Novación No. 02 2015, 1, 7–21.

- IEEE Xplore. Available online: https://ieeexplore.ieee.org/Xplore/home.jsp (accessed on 15 July 2025).

- ACM Digital Library. Available online: https://www.acm.org/ (accessed on 15 July 2025).

- Elsevier. Available online: https://www.elsevier.com/ (accessed on 15 July 2025).

- Google Scholar. Available online: https://scholar.google.com/ (accessed on 15 July 2025).

- Springer Nature Link. Available online: https://link.springer.com/ (accessed on 15 July 2025).

- Clark, J.; Glasziou, P.; Mar, C.D.; Bannach-Brown, A.; Stehlik, P.; Scott, A.M. A full systematic review was completed in 2 weeks using automation tools: A case study. J. Clin. Epidemiol. 2020, 121, 81–90.

- Zooniverse. Galaxy Zoo. 2025. Available online: https://www.zooniverse.org/projects/zookeeper/galaxy-zoo/ (accessed on 29 August 2025).

- Glitchers Ltd. Sea Hero Quest. 2025. Available online: https://seaheroquest.com/ (accessed on 29 August 2025).

- Waldispuhl, J.; Szantner, A. Video game unleashes millions of citizen scientists on microbiome research. Nat. Biotechnol. 2025, 43, 36–37.

- Tolentino, G.P.; Battaglini, C.; Pereira, A.C.V.; Oliveria, R.J.d.; Paula, M.G.M.d. Usability of Serious Games for Health. In Proceedings of the 2011 Third International Conference on Games and Virtual Worlds for Serious Applications, Athens, Greece, 4–6 May 2011; pp. 172–175.

- Diah, N.M.; Ismail, M.; Ahmad, S.; Dahari, M.K.M. Usability testing for educational computer game using observation method. In Proceedings of the 2010 International Conference on Information Retrieval & Knowledge Management (CAMP), Shah Alam, Malaysia, 17–18 March 2010; pp. 157–161.

- Falcão, I.; da S. Souza, D.; Araujo, F.P.; dos S. Fernandes, J.G.; Pires, Y.P.; Cardoso, D.L.; Seruffo, M.C. Usability and Cognitive Benefits of a Serious Game to Combat Aedes Aegypti Mosquito. In Proceedings of the 2019 IEEE 32nd International Symposium on Computer-Based Medical Systems (CBMS), Cordoba, Spain, 5–7 June 2019; pp. 720–725.

- Omar, H.; Jaafar, A. Playability Heuristics Evaluation (PHE) approach for Malaysian educational games. In Proceedings of the 2008 International Symposium on Information Technology, Kuala Lumpur, Malaysia, 26–28 August 2008; Volume 3, pp. 1–7.

- Hussain, A.; Mutalib, N.A.; Zaino, A. A usability testing on JFakih Learning Games for hearing impairment children. In Proceedings of the 5th International Conference on Information and Communication Technology for The Muslim World (ICT4M), Kuching, Malaysia, 17–18 November 2014; pp. 1–4.

- Ismail, M.; Diah, N.M.; Ahmad, S.; Kamal, N.A.M.; Dahari, M.K.M. Measuring usability of educational computer games based on the user success rate. In Proceedings of the 2011 International Symposium on Humanities, Science and Engineering Research, Kuala Lumpur, Malaysia, 6–7 June 2011; pp. 56–60.

- Dagdag, R.J.E.; Mariano, R.V.; Atienza, R.O. Teacher tool for visualization and management of a technology-enhanced learning environment. In Proceedings of the TENCON 2011 - 2011 IEEE Region 10 Conference, Bali, Indonesia, 21–24 November 2011; pp. 1426–1430.

- Desurvire, H.; El-Nasr, M.S. Methods for Game User Research: Studying Player Behavior to Enhance Game Design. IEEE Comput. Graph. Appl. 2013, 33, 82–87.

- Papaloukas, S.; Patriarcheas, K.; Xenos, M. Usability Assessment Heuristics in New Genre Videogames. In Proceedings of the 2009 13th Panhellenic Conference on Informatics, Corfu, Greece, 10–12 September 2009; pp. 202–206.

- Höysniemi, J.; Hämäläinen, P.; Turkki, L. Using peer tutoring in evaluating the usability of a physically interactive computer game with children. Interact. Comput. 2003, 15, 203–225.

- Rosyidah, U.; Haryanto, H.; Kardianawati, A. Usability Evaluation Using GOMS Model for Education Game “Play and Learn English”. In Proceedings of the 2019 International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 21–22 September 2019; pp. 1–5.

- Soomro, S.; Ahmad, W.F.W.; Sulaiman, S. Evaluation of mobile games with playability heuristic evaluation system. In Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014; pp. 1–6.

- May, J. YouTube Gamers and Think-Aloud Protocols: Introducing Usability Testing. IEEE Trans. Prof. Commun. 2019, 62, 94–103.

- Liao, Y.H.; Shen, C.Y. Heuristic Evaluation of Digital Game Based Learning: A Case Study. In Proceedings of the 2012 IEEE Fourth International Conference on Digital Game and Intelligent Toy Enhanced Learning, Takamatsu, Japan, 27–30 March 2012; pp. 192–196.

- Jegers, K. Investigating the Applicability of Usability and Playability Heuristics for Evaluation of Pervasive Games. In Proceedings of the 2008 Third International Conference on Internet and Web Applications and Services, Athens, Greece, 8–13 June 2008; pp. 656–661.

- Hookham, G.; Nesbitt, K.; Kay-Lambkin, F. Comparing usability and engagement between a serious game and a traditional online program. In Proceedings of the Australasian Computer Science Week Multiconference, Canberra, Australia, 1–5 February 2016. ACSW ′16.

- Baauw, E.; Bekker, M.M.; Markopoulos, P. Assessing the applicability of the structured expert evaluation method (SEEM) for a wider age group. In Proceedings of the 2006 Conference on Interaction Design and Children, Tampere, Finland, 7–9 June 2006; IDC ′06. pp. 73–80.

- Yue, W.S.; Zin, N.A.M. Usability evaluation for history educational games. In Proceedings of the 2nd International Conference on Interaction Sciences: Information Technology, Culture and Human, Seoul, Republic of Korea, 24–26 November 2009; ICIS ′09. pp. 1019–1025.

- Papaloukas, S.; Xenos, M. Usability and education of games through combined assessment methods. In Proceedings of the 1st International Conference on PErvasive Technologies Related to Assistive Environments, Athens, Greece, 16–18 July 2008. PETRA ′08.

- Pinelle, D.; Wong, N.; Stach, T. Heuristic evaluation for games: Usability principles for video game design. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; CHI ′08. pp. 1453–1462.

- Rodio, F.; Bastien, J.M.C. Heuristics for Video Games Evaluation: How Players Rate Their Relevance for Different Game Genres According to Their Experience. In Proceedings of the 25th Conference on l’Interaction Homme-Machine, Talence, France, 12–15 November 2013; IHM ′13. pp. 89–93.

- Pinelle, D.; Wong, N.; Stach, T.; Gutwin, C. Usability heuristics for networked multiplayer games. In Proceedings of the 2009 ACM International Conference on Supporting Group Work, Sanibel Island, FL, USA, 10–13 May 2009; GROUP ′09. pp. 169–178.

- Desurvire, H.; Caplan, M.; Toth, J.A. Using heuristics to evaluate the playability of games. In Proceedings of the CHI ’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; CHI EA ′04. pp. 1509–1512.

- Korhonen, H.; Koivisto, E.M.I. Playability heuristics for mobile games. In Proceedings of the 8th Conference on Human-Computer Interaction with Mobile Devices and Services, Helsinki, Finland, 12–15 September 2006; MobileHCI ′06. pp. 9–16.

- Fabiano, F.; Sanchez-Francisco, M.; Díaz, P.; Aedo, I. Evaluating a pervasive game for urban awareness. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, Barcelona, Spain, 3–6 September 2018; MobileHCI ′18. pp. 197–204.

- Karoulis, A. Evaluating the LEGO–RoboLab interface with experts. Comput. Entertain. 2006, 4, 6–29.

- Mohd, F.; Noor, M.; Daud, E.H.C.; Hasbullah, S.S. Mobile Games Usability Using the Idea of Mark Overmars. In Proceedings of the 10th International Conference on Ubiquitous Information Management and Communication, Danang, Vietnam, 4–6 January 2016. IMCOM ′16.

- Verkuyl, M.; Romaniuk, D.; Mastrilli, P. Virtual gaming simulation of a mental health assessment: A usability study. Nurse Educ. Pract. 2018, 31, 83–87.

- Johnsen, H.M.; Fossum, M.; Vivekananda-Schmidt, P.; Fruhling, A.; Slettebø, Å. Teaching clinical reasoning and decision-making skills to nursing students: Design, development, and usability evaluation of a serious game. Int. J. Med. Inform. 2016, 94, 39–48.

- Savazzi, F.; Isernia, S.; Jonsdottir, J.; Di Tella, S.; Pazzi, S.; Baglio, F. Design and implementation of a serious game on neurorehabilitation: Data on modifications of functionalities along implementation releases. Data Brief 2018, 20, 864–869.

- Savazzi, F.; Isernia, S.; Jonsdottir, J.; Di Tella, S.; Pazzi, S.; Baglio, F. Engaged in learning neurorehabilitation: Development and validation of a serious game with user-centered design. Comput. Educ. 2018, 125, 53–61.

- Hauge, J.B.; Riedel, J.C. Evaluation of simulation games for teaching engineering and manufacturing. Procedia Comput. Sci. 2012, 15, 210–220.

- Senap, N.M.V.; Ibrahim, R. A review of heuristics evaluation component for mobile educational games. Procedia Comput. Sci. 2019, 161, 1028–1035.