LCW-YOLO: An Explainable Computer Vision Model for Small Object Detection in Drone Images

Abstract

1. Introduction

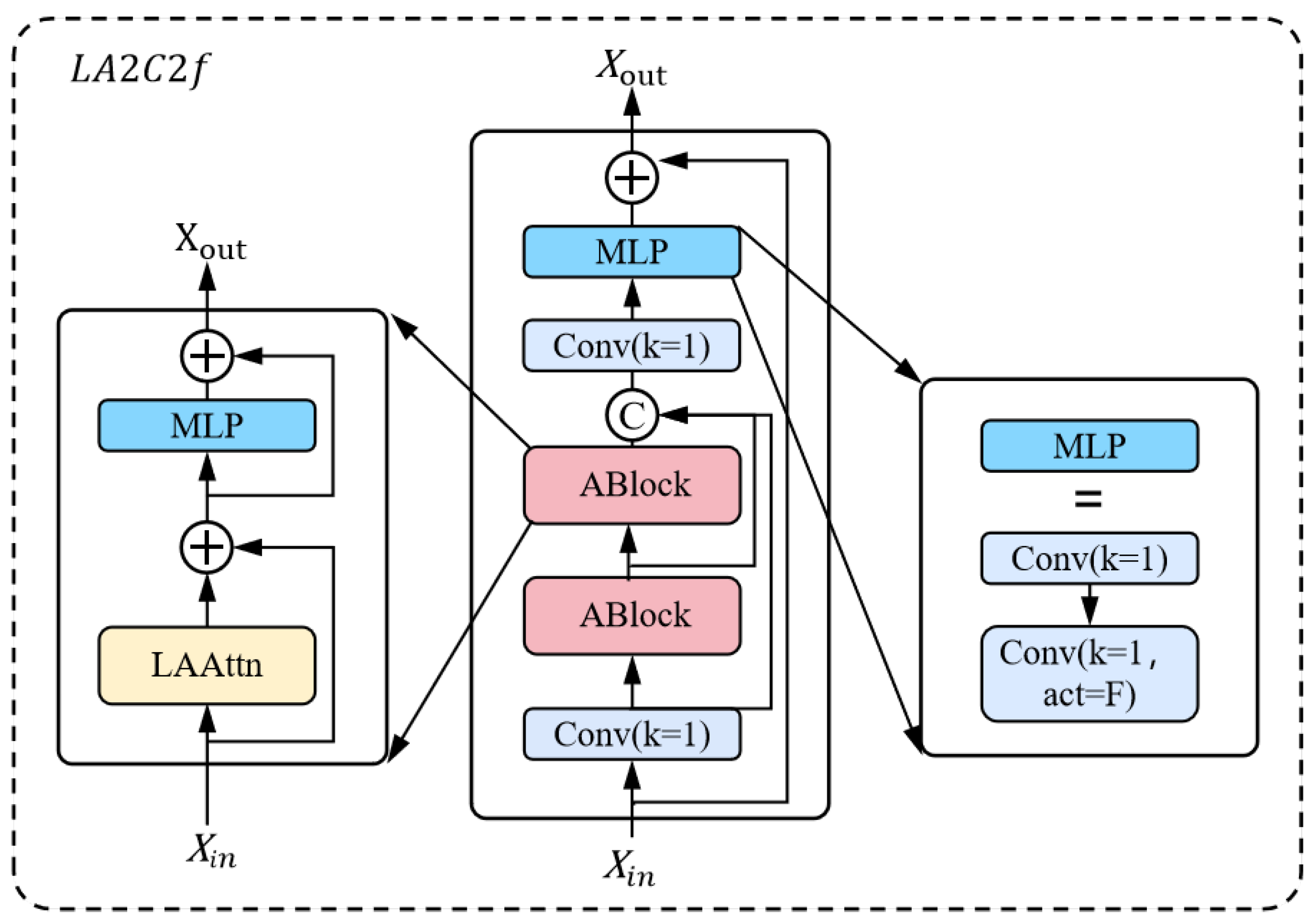

- Heterogeneous multiscale convolution is applied to improve the original Area Attention (AAttn) module of YOLOv12, and the enhanced module is incorporated into the neck’s A2C2f. This integration yields a lightweight and more effective structure, termed Lightweight Channel-wise and Spatial Attention with Context (LA2C2f), which significantly enhances spatial perception for small targets while reducing model computational complexity.

- The Convolutional Attentive Integration Module (CAIM) is proposed, which tightly integrates a convolutional structure with an improved Residual Path-Guided Multi-dimensional Collaborative Attention Mechanism (RMCAM). This architecture captures contextual dependencies through convolution while fusing information across four dimensions (channel, height, width, and the original features), thereby coupling local and global features.

- Wise-IoU (WIoU) v3 [19], which uses a dynamic non-monotonic focusing mechanism, is adopted as the bounding box regression loss. By dynamically allocating gradient gains according to each sample’s outlier degree, it suppresses interference from low-quality samples and improves the model’s generalization performance in complex scenarios.
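The focusing mechanism named in the last contribution can be illustrated with a minimal sketch for a single box pair, following the formulation of Wise-IoU [19] as commonly stated. The function names, the `(x1, y1, x2, y2)` box layout, and the hyperparameter values `alpha = 1.9` and `delta = 3.0` are assumptions for illustration; this document does not state the settings actually used. In training code the mean IoU loss would be a running average and the enclosing-box term would be detached from the gradient graph, neither of which is modeled here.

```python
import math


def iou_xyxy(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def wiou_v1(pred, gt):
    """Return (WIoU v1 loss, plain IoU loss) for one predicted/ground-truth pair."""
    l_iou = 1.0 - iou_xyxy(pred, gt)
    # Squared distance between the two box centers.
    dx = (pred[0] + pred[2]) / 2 - (gt[0] + gt[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (gt[1] + gt[3]) / 2
    # Width and height of the smallest box enclosing both boxes.
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    denom = wg * wg + hg * hg
    r_wiou = math.exp((dx * dx + dy * dy) / denom) if denom > 0 else 1.0
    return r_wiou * l_iou, l_iou


def focusing_gain(beta, alpha=1.9, delta=3.0):
    """Non-monotonic gradient gain r = beta / (delta * alpha**(beta - delta))."""
    return beta / (delta * alpha ** (beta - delta))


def wiou_v3(pred, gt, mean_l_iou, alpha=1.9, delta=3.0):
    """WIoU v3 loss; mean_l_iou stands in for the running mean of the IoU loss."""
    l_v1, l_iou = wiou_v1(pred, gt)
    beta = l_iou / mean_l_iou  # outlier degree of this sample
    return focusing_gain(beta, alpha, delta) * l_v1


if __name__ == "__main__":
    loss = wiou_v3((0, 0, 2, 2), (1, 1, 3, 3), mean_l_iou=0.5)
    print(f"WIoU v3 loss: {loss:.4f}")
    for beta in (0.1, 1.5, 3.0, 10.0):
        print(f"beta={beta:>4}: gain={focusing_gain(beta):.4f}")
```

Under these assumed hyperparameters the gain is small for both very low and very high outlier degrees, which is the sense in which low-quality (high outlier degree) samples receive a reduced gradient.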

2. Principles and Innovations

2.1. YOLOv12 Model

2.2. Proposed Method

2.2.1. Lightweight Channel-Wise and Spatial Attention with Context

2.2.2. Convolution and Attention Integration Module

2.2.3. Wise-IoU

3. Experiments

3.1. Performance Evaluation

3.2. Experimental Setup

3.3. Experimental Results

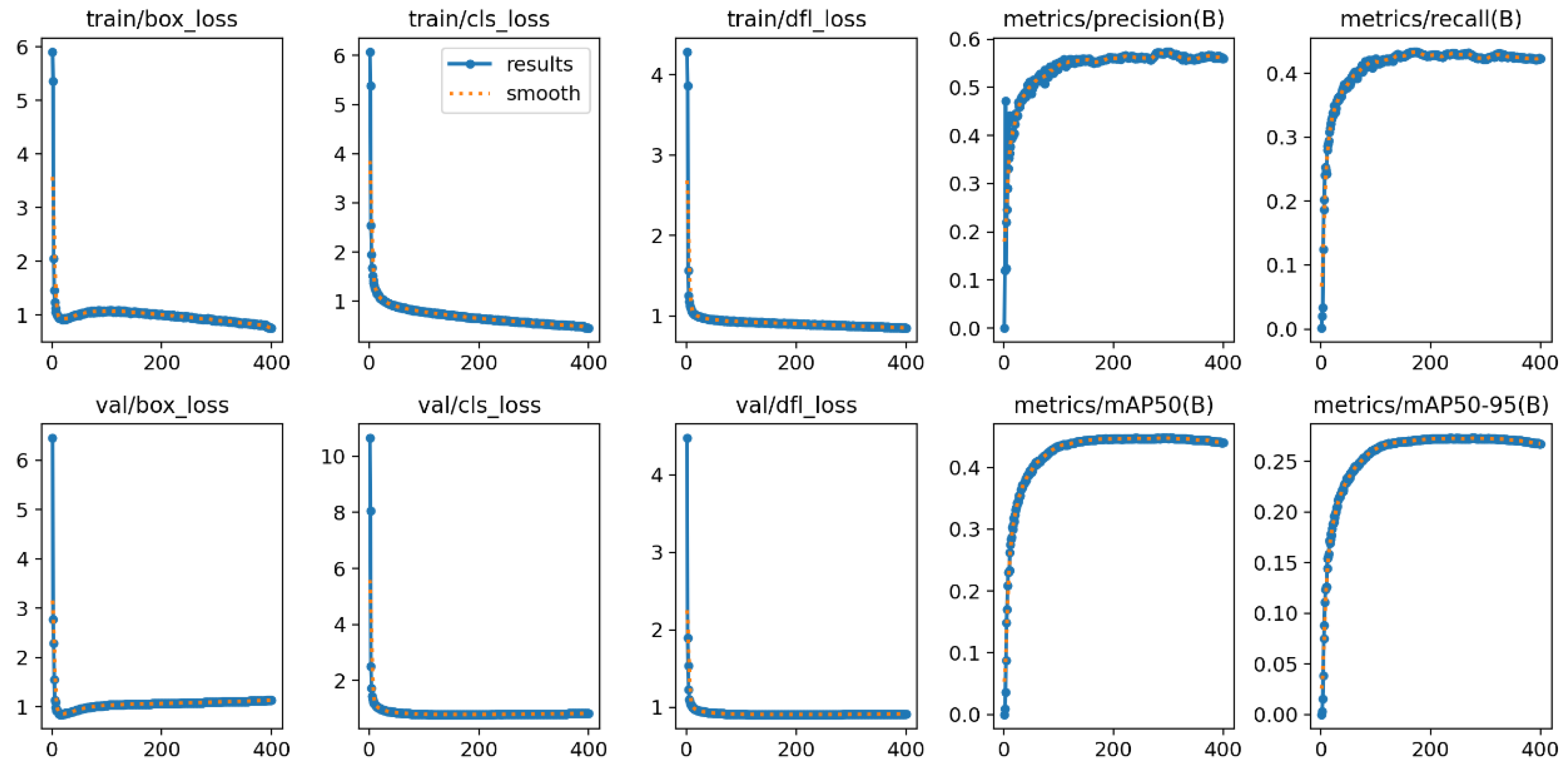

3.3.1. Training Results and Analysis

3.3.2. Comparative Experiment

3.3.3. Ablation Experiment

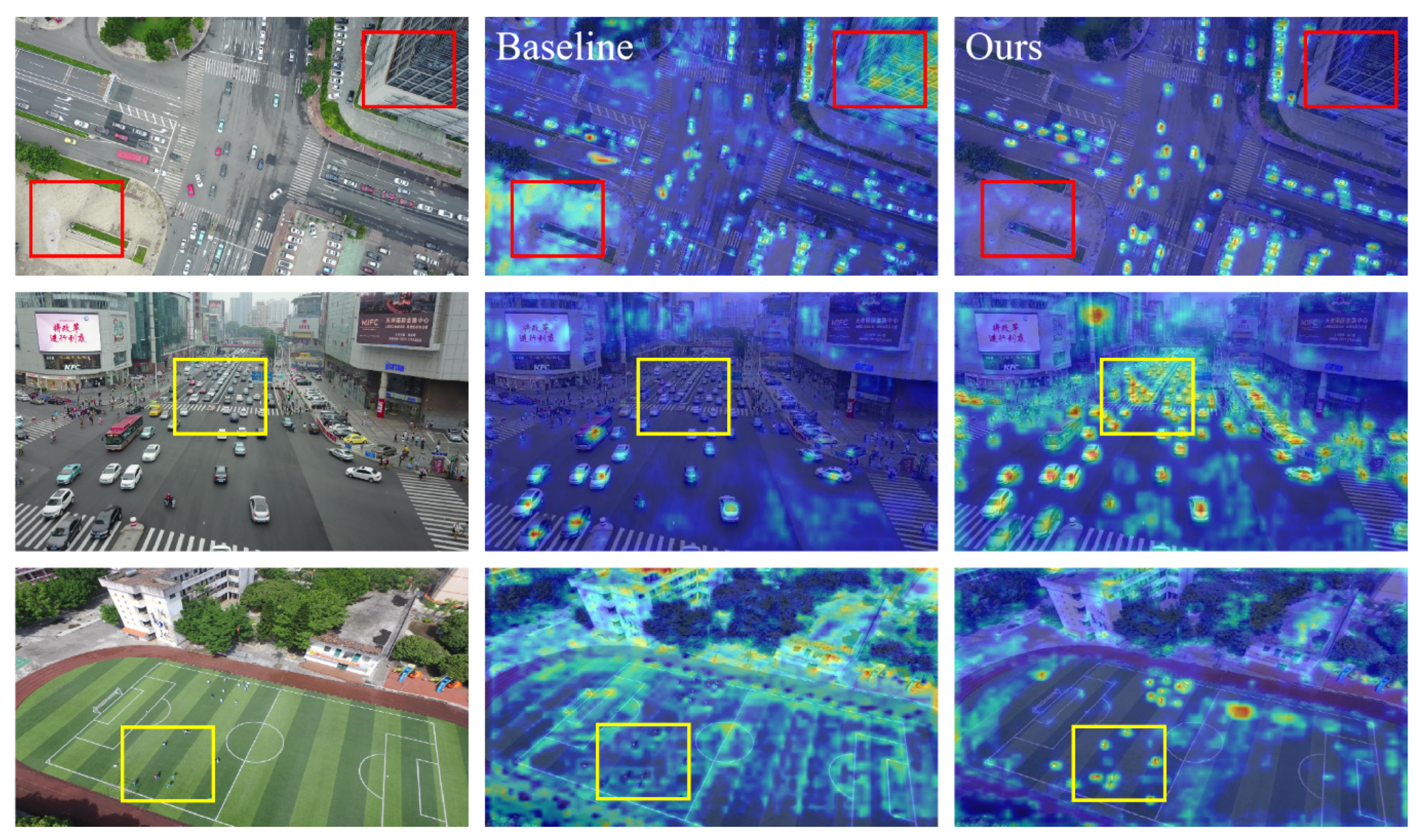

3.3.4. Visualization

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jin, T.; Wang, W.; Sun, C.; Yu, Z.; Wu, Y.; Chen, X. TGC-YOLO: Detection Model for Small Objects in UAV Image Scene. In Proceedings of the 2024 IEEE International Conference on Cognitive Computing and Complex Data (ICCD), Qinzhou, China, 28–30 September 2024; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2024; pp. 119–124. [Google Scholar] [CrossRef]

- Guenther, N.; Schonlau, M. Support vector machines. Stata J. 2016, 16, 917–937. [Google Scholar] [CrossRef]

- Liu, M.; Jiang, Q.; Li, H.; Cao, X.; Lv, X. Finite-time-convergent support vector neural dynamics for classification. Neurocomputing 2025, 617, 128810. [Google Scholar] [CrossRef]

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2001), Kauai, HI, USA, 8–14 December 2001; Volume 1, p. I. [Google Scholar] [CrossRef]

- Zhang, Z.; He, Y.; Mai, W.; Luo, Y.; Li, X.; Cheng, Y.; Huang, X.; Lin, R. Convolutional Dynamically Convergent Differential Neural Network for Brain Signal Classification. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 8166–8177. [Google Scholar] [CrossRef]

- Qu, C.; Zhang, L.; Li, J.; Deng, F.; Tang, Y.; Zeng, X.; Peng, X. Improving feature selection performance for classification of gene expression data using Harris Hawks optimizer with variable neighborhood learning. Briefings Bioinform. 2021, 22, bbab097. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Zhou, W.; Geng, M. Automatic Seizure Detection Based on S-Transform and Deep Convolutional Neural Network. Int. J. Neural Syst. 2020, 30, 1950024. [Google Scholar] [CrossRef] [PubMed]

- Liu, G.; Ren, S.; Wang, J.; Zhou, W. Efficient Group Cosine Convolutional Neural Network for EEG-Based Seizure Identification. IEEE Trans. Instrum. Meas. 2025, 74, 1–14. [Google Scholar] [CrossRef]

- Liu, G.; Zhang, R.; Tian, L.; Zhou, W. Fine-Grained Spatial-Frequency-Time Framework for Motor Imagery Brain–Computer Interface. IEEE J. Biomed. Health Inform. 2025, 29, 4121–4133. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Cham, Switzerland, 2018; pp. 3–19. [Google Scholar]

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. arXiv 2019, arXiv:1905.02244. [Google Scholar] [CrossRef]

- Zhu, X.; Lyu, S.; Wang, X.; Zhao, Q. TPH-YOLOv5: Improved YOLOv5 Based on Transformer Prediction Head for Object Detection on Drone-captured Scenarios. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, BC, Canada, 11–17 October 2021; pp. 2778–2788. [Google Scholar] [CrossRef]

- Zhang, Y.F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and Efficient IOU Loss for Accurate Bounding Box Regression. arXiv 2022, arXiv:2101.08158. [Google Scholar] [CrossRef]

- Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740. [Google Scholar] [CrossRef]

- Qin, Z.; Weian, G. Survey on deep learning-based small object detection algorithms. Appl. Res. Comput. 2025, 1–14. [Google Scholar] [CrossRef]

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524. [Google Scholar]

- Liu, G.; Zhang, J.; Chan, A.B.; Hsiao, J.H. Human attention guided explainable artificial intelligence for computer vision models. Neural Netw. 2024, 177, 106392. [Google Scholar] [CrossRef]

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051. [Google Scholar]

- Zhang, M.; Ye, S.; Zhao, S.; Wang, W.; Xie, C. Pear Object Detection in Complex Orchard Environment Based on Improved YOLO11. Symmetry 2025, 17, 255. [Google Scholar] [CrossRef]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision (ECCV 2014), Zurich, Switzerland, 6–12 September 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science. Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 779–788. [Google Scholar] [CrossRef]

- Yu, Y.; Zhang, Y.; Cheng, Z.; Song, Z.; Tang, C. MCA: Multidimensional collaborative attention in deep convolutional neural networks for image recognition. Eng. Appl. Artif. Intell. 2023, 126, 107079. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 770–778. [Google Scholar] [CrossRef]

- Li, Y.; Yuan, G.; Wen, Y.; Hu, J.; Evangelidis, G.; Tulyakov, S.; Wang, Y.; Ren, J. EfficientFormer: Vision Transformers at MobileNet Speed. arXiv 2022, arXiv:2206.01191. [Google Scholar]

- Han, D.; Ye, T.; Han, Y.; Xia, Z.; Pan, S.; Wan, P.; Song, S.; Huang, G. Agent Attention: On the Integration of Softmax and Linear Attention. arXiv 2024, arXiv:2312.08874. [Google Scholar]

- Badgujar, C.M.; Poulose, A.; Gan, H. Agricultural object detection with You Only Look Once (YOLO) Algorithm: A bibliometric and systematic literature review. Comput. Electron. Agric. 2024, 223, 109090. [Google Scholar] [CrossRef]

- Huang, J.; Wang, K.; Hou, Y.; Wang, J. LW-YOLO11: A Lightweight Arbitrary-Oriented Ship Detection Method Based on Improved YOLO11. Sensors 2025, 25, 65. [Google Scholar] [CrossRef]

- Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and Tracking Meet Drones Challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 7380–7399. [Google Scholar] [CrossRef]

- Kraft, M.; Piechocki, M.; Ptak, B.; Walas, K. Autonomous, Onboard Vision-Based Trash and Litter Detection in Low Altitude Aerial Images Collected by an Unmanned Aerial Vehicle. Remote Sens. 2021, 13, 965. [Google Scholar] [CrossRef]

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101. [Google Scholar] [CrossRef]

- Zhang, H.; Cisse, M.; Dauphin, Y.N.; Lopez-Paz, D. mixup: Beyond Empirical Risk Minimization. arXiv 2018, arXiv:1710.09412. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. Computer Software. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 9 August 2025).

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616. [Google Scholar] [CrossRef]

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Khanam, R.; Hussain, M. YOLOv11: An Overview of the Key Architectural Enhancements. arXiv 2024, arXiv:2410.17725. [Google Scholar] [CrossRef]

- Authors, P. Paddledetection: Object Detection and Instance Segmentation Toolkit Based on PaddlePaddle. 2019. Available online: https://github.com/PaddlePaddle/PaddleDetection (accessed on 29 August 2025).

- Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded Sparse Query for Accelerating High-Resolution Small Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 13658–13667. [Google Scholar] [CrossRef]

- Yang, F.; Fan, H.; Chu, P.; Blasch, E.; Ling, H. Clustered Object Detection in Aerial Images. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8310–8319. [Google Scholar] [CrossRef]

- Xu, C.; Ding, J.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.S. Dynamic Coarse-to-Fine Learning for Oriented Tiny Object Detection. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7318–7328. [Google Scholar] [CrossRef]

- Tang, S.; Zhang, S.; Fang, Y. HIC-YOLOv5: Improved YOLOv5 For Small Object Detection. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 6614–6619. [Google Scholar] [CrossRef]

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Cham, Switzerland, 2020; pp. 213–229. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2021, arXiv:2010.04159. [Google Scholar]

- Roh, B.; Shin, J.; Shin, W.; Kim, S. Sparse DETR: Efficient End-to-End Object Detection with Learnable Sparsity. arXiv 2022, arXiv:2111.14330. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, K.; Gan, Z.; Zhu, G.N. UAV-DETR: Efficient End-to-End Object Detection for Unmanned Aerial Vehicle Imagery. arXiv 2025, arXiv:2501.01855. [Google Scholar]

- Minh, H.T.; Mai, L.; Minh, T.V. Performance Evaluation of Deep Learning Models on Embedded Platform for Edge AI-Based Real time Traffic Tracking and Detecting Applications. In Proceedings of the 2021 15th International Conference on Advanced Computing and Applications (ACOMP), Virtual, 24–26 November 2021; Le, L., Nguyen, H., Phan, T., Clavel, M., Dang, T., Eds.; IEEE: New York, NY, USA, 2021; pp. 128–135. [Google Scholar] [CrossRef]

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190. [Google Scholar] [CrossRef]

| Kernel | APval (50:95) | Latency |
|---|---|---|
| 3 × 3 | 40.4 | 1.60 |
| 5 × 5 | 40.4 | 1.61 |
| 7 × 7 | 40.6 | 1.64 |
| 9 × 9 | 40.7 | 1.79 |

| Model | Input Size | Params (M) | GFLOPs | mAP@0.5:0.95 | mAP@0.5 |
|---|---|---|---|---|---|
| Real-time Object Detectors | | | | | |
| YOLOv8-M [34] | 640 × 640 | 25.9 | 78.9 | 24.6 | 40.7 |
| YOLOv8-L [34] | 640 × 640 | 43.7 | 165.2 | 26.1 | 42.7 |
| YOLOv9-S [35] | 640 × 640 | 7.2 | 26.7 | 22.7 | 38.3 |
| YOLOv9-M [35] | 640 × 640 | 20.1 | 76.8 | 25.2 | 42.0 |
| YOLOv10-M [36] | 640 × 640 | 15.4 | 59.1 | 24.5 | 40.5 |
| YOLOv10-L [36] | 640 × 640 | 24.4 | 120.3 | 26.3 | 43.1 |
| YOLOv11-S [37] | 640 × 640 | 9.4 | 21.3 | 23.0 | 28.7 |
| YOLOv11-M [37] | 640 × 640 | 20.0 | 67.7 | 25.9 | 43.1 |
| YOLOv12-M [17] | 640 × 640 | 20.2 | 67.2 | 26.9 | 46.0 |
| UAV-Specific Detectors | | | | | |
| PP-YOLOE-P2-Alpha-1 [38] | 640 × 640 | 54.1 | 111.4 | 30.1 | 48.9 |
| QueryDet [39] | 2400 × 2400 | 33.9 | 212 | 28.3 | 48.1 |
| ClusDet [40] | 1000 × 600 | 30.2 | 207 | 26.7 | 50.6 |
| DCFL [41] | 1024 × 1024 | 36.1 | 157.8 | - | 32.1 |
| HIC-YOLOv5 [42] | 640 × 640 | 9.4 | 31.2 | 26.0 | 44.3 |
| End-to-end Object Detectors | | | | | |
| DETR [43] | 1333 × 750 | 60 | 187 | 24.1 | 40.1 |
| Deformable DETR [44] | 1333 × 800 | 40 | 173 | 27.1 | 42.2 |
| Sparse DETR [45] | 1333 × 800 | 40.9 | 121 | 27.3 | 42.5 |
| RT-DETR-R18 [46] | 640 × 640 | 20 | 60.0 | 26.7 | 44.6 |
| RT-DETR-R50 [46] | 640 × 640 | 42 | 136 | 28.4 | 47.0 |
| Real-time E2E Detectors for UAV | | | | | |
| UAV-DETR-EV2 [47] | 640 × 640 | 13 | 43 | 28.7 | 47.5 |
| UAV-DETR-R18 [47] | 640 × 640 | 20 | 77 | 29.8 | 48.8 |
| UAV-DETR-R50 [47] | 640 × 640 | 42 | 170 | 31.5 | 51.5 |
| Proposed UAV Detector | | | | | |
| LCW-YOLO (ours) | 640 × 640 | 19.8 | 65.5 | 30.6 | 49.3 |

| Model | Params (M) | GFLOPs | mAP@0.5:0.95 | mAP@0.5 |
|---|---|---|---|---|
| YOLOv11-S [37] | 9.4 | 21.3 | 27.8 | 63.0 |
| HIC-YOLOv5 [42] | 9.4 | 31.2 | 30.5 | 65.1 |
| RT-DETR-R18 [46] | 20.0 | 57.3 | 36.3 | 72.6 |
| RT-DETR-R50 [46] | 42.0 | 129.9 | 37.4 | 73.5 |
| UAV-DETR-EV2 [47] | 13 | 43 | 37.1 | 70.6 |
| UAV-DETR-R18 [47] | 20 | 77 | 37.0 | 74.0 |
| UAV-DETR-R50 [47] | 42 | 170 | 37.5 | 75.9 |
| YOLOv12-M [17] | 20.2 | 67.2 | 35.7 | 73.2 |
| LCW-YOLO (ours) | 19.8 | 65.5 | 37.3 | 75.1 |

| Baseline | CAIM | LA2C2f | WIoU v3 | Params (M) | GFLOPs | mAP@0.5:0.95 | mAP@0.5 |
|---|---|---|---|---|---|---|---|
| ✓ | - | - | - | 20.2 | 67.2 | 26.9 | 46.0 |
| ✓ | ✓ | - | - | 19.8 | 65.8 | 28.4 | 47.5 |
| ✓ | ✓ | ✓ | - | 19.8 | 65.5 | 29.8 | 48.5 |
| ✓ | ✓ | ✓ | ✓ | 19.8 | 65.5 | 30.6 | 49.3 |
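To make the contribution of each component in the ablation table easier to read, the short sketch below computes the step-by-step and cumulative accuracy gains from the table's values. The row labels and the helper name `incremental_gains` are illustrative; the numbers are copied from the table, and the baseline row is taken to be YOLOv12-M because its figures match that model's entry in the comparison table.

```python
# Rows copied from the ablation table: (configuration, mAP@0.5:0.95, mAP@0.5).
ablation = [
    ("Baseline (YOLOv12-M)", 26.9, 46.0),
    ("+ CAIM", 28.4, 47.5),
    ("+ LA2C2f", 29.8, 48.5),
    ("+ WIoU v3", 30.6, 49.3),
]


def incremental_gains(rows):
    """Gain of each row over the previous one, rounded to one decimal place."""
    gains = []
    for (_, prev_strict, prev_loose), (name, strict, loose) in zip(rows, rows[1:]):
        gains.append((name, round(strict - prev_strict, 1), round(loose - prev_loose, 1)))
    return gains


gains = incremental_gains(ablation)
total = (
    round(ablation[-1][1] - ablation[0][1], 1),
    round(ablation[-1][2] - ablation[0][2], 1),
)

if __name__ == "__main__":
    for name, d_strict, d_loose in gains:
        print(f"{name:<10} mAP@0.5:0.95 +{d_strict}  mAP@0.5 +{d_loose}")
    print(f"Total      mAP@0.5:0.95 +{total[0]}  mAP@0.5 +{total[1]}")
```

Because the components are added cumulatively, each gain is relative to the preceding configuration, not to the baseline alone.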

| Model | CAIM Location | Params (M) | GFLOPs | mAP@0.5:0.95 | mAP@0.5 | Recall |
|---|---|---|---|---|---|---|
| YOLOv12-M [17] (Baseline) | - | 20.2 | 67.2 | 26.9 | 46.0 | 59.9 |
| YOLOv12-M + MCAM [19] | Backbone | 21.0 | 67.8 | 27.2 | 46.4 | 60.7 |
| YOLOv12-M + RMCAM | Neck | 19.7 | 65.5 | 27.8 | 47.0 | 59.9 |
| YOLOv12-M + RMCAM | Backbone | 19.8 | 65.8 | 28.4 | 47.5 | 61.1 |

| Model | Convolution Type | Feature Fusion | Params (M) | GFLOPs | mAP@0.5:0.95 | mAP@0.5 |
|---|---|---|---|---|---|---|
| YOLOv12-M+RMCAM (Backbone) | 7 × 7 convolution | - | 19.8 | 65.8 | 28.4 | 47.5 |
| + 3 × 5 Parallel | 3 × 5 Parallel convolution | Direct splicing | 19.8 | 65.8 | 29.6 | 48.3 |
| + 3 × 5 Sequential | 3 × 5 Sequential convolution | Direct splicing | 19.8 | 65.8 | 29.1 | 48.0 |
| + 3 × 5 Parallel + Concat 1 × 1 | 3 × 5 Parallel convolution | 1 × 1 Convolution compression | 19.8 | 65.5 | 29.8 | 48.5 |

| Model | Params (M) | GFLOPs | FPS | Avg. Power (W) | FPS/W |
|---|---|---|---|---|---|
| YOLOv12-m [17] | 20.2 | 67.2 | 76 | 12.5 | 6.08 |
| LCW-YOLO (ours) | 19.8 | 65.5 | 80 | 10.8 | 7.41 |
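The FPS/W column in the efficiency table is the ratio of throughput to average power. The sketch below reproduces that column from the FPS and power values in the table and derives the relative efficiency improvement. The dictionary layout and the helper name `fps_per_watt` are illustrative, not part of the original work.

```python
# FPS and average power (W) copied from the efficiency table.
measurements = {
    "YOLOv12-m": {"fps": 76, "power_w": 12.5},
    "LCW-YOLO": {"fps": 80, "power_w": 10.8},
}


def fps_per_watt(fps, power_w):
    """Energy efficiency as frames per second per watt of average power."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return fps / power_w


efficiency = {name: fps_per_watt(**m) for name, m in measurements.items()}

# Relative improvement of LCW-YOLO over the YOLOv12-m baseline.
relative_gain = efficiency["LCW-YOLO"] / efficiency["YOLOv12-m"] - 1.0

if __name__ == "__main__":
    for name, value in efficiency.items():
        print(f"{name:<10} {value:.2f} FPS/W")
    print(f"Relative efficiency gain: {relative_gain:.1%}")
```

The computed ratios agree with the tabulated 6.08 and 7.41 FPS/W, and the relative gain comes to roughly 22%.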

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liao, D.; Bi, R.; Zheng, Y.; Hua, C.; Huang, L.; Tian, X.; Liao, B. LCW-YOLO: An Explainable Computer Vision Model for Small Object Detection in Drone Images. Appl. Sci. 2025, 15, 9730. https://doi.org/10.3390/app15179730