Artificial Intelligence and Aviation: A Deep Learning Strategy for Improved Data Classification and Management

Abstract

1. Introduction

1.1. Main Contributions

1.2. Paper Structure

2. Literature Review

2.1. Some Applications of DL and ML in Aviation

- (a) Safety and Incident Analysis

- (b) Flight Operations and Training

- (c) Maintenance and Monitoring

2.2. Forecasting and Predictive Modeling

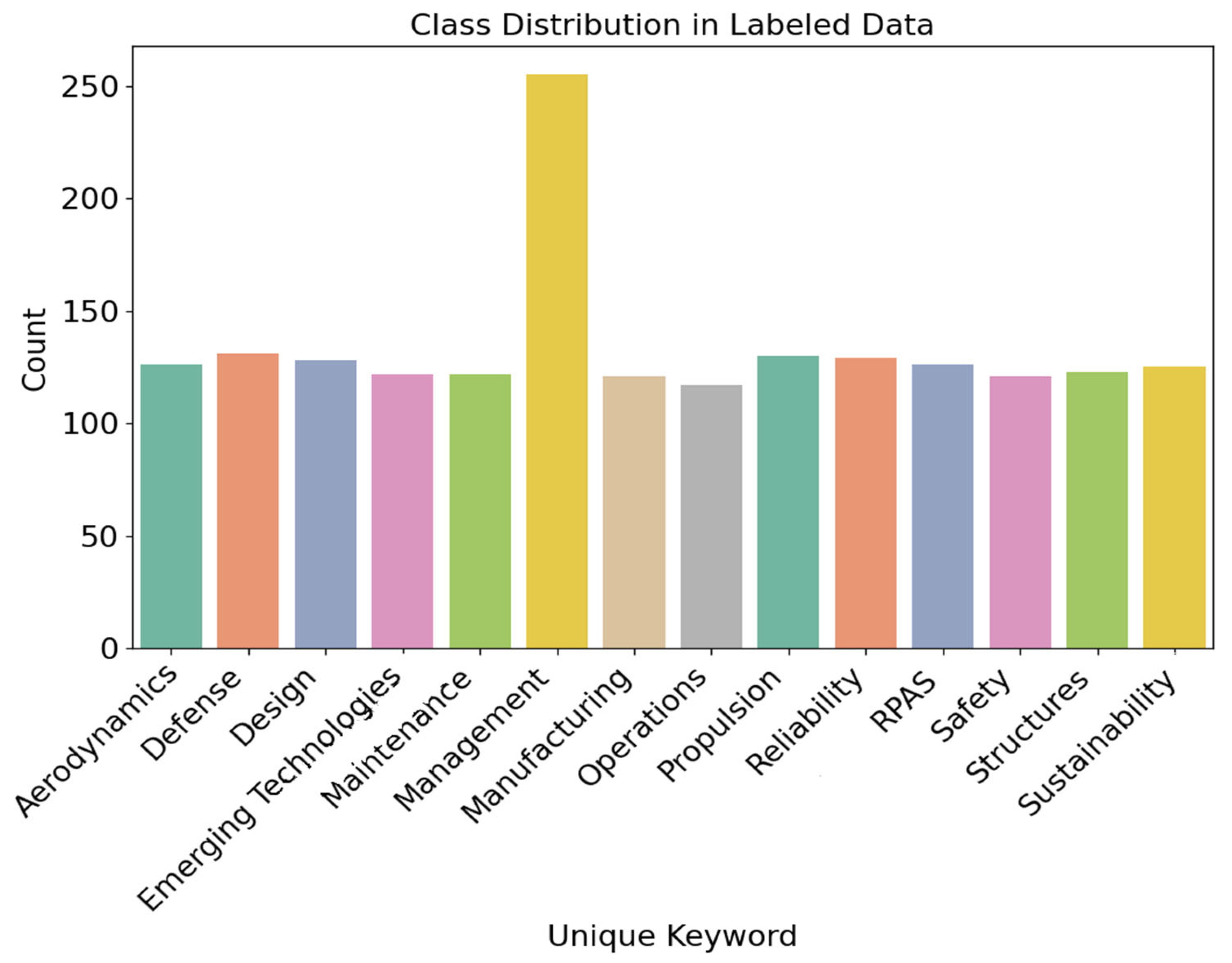

3. Methodology

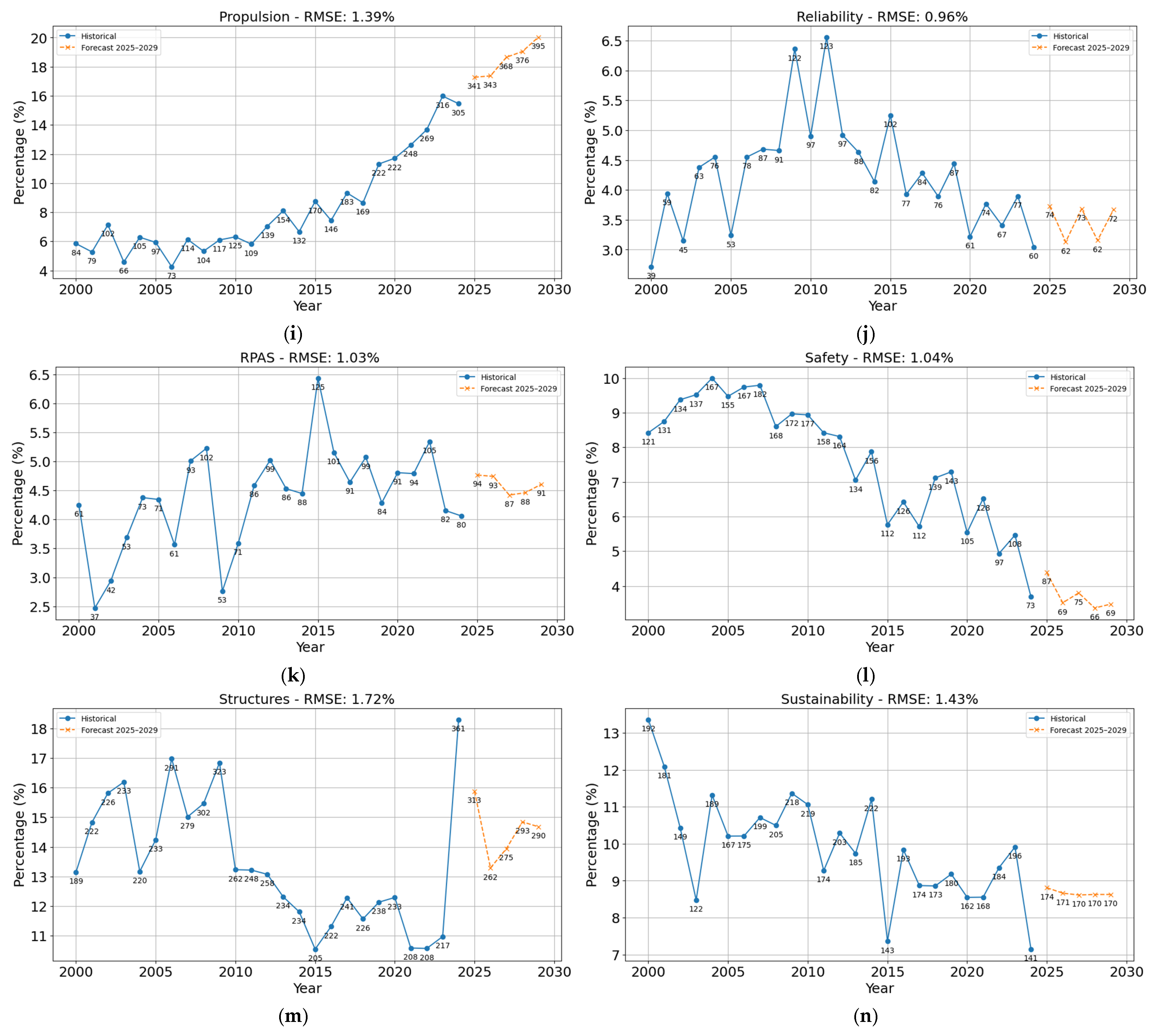

3.1. Data Collection and Labeling

3.2. Data Preprocessing Pipeline and Validation

- (i) Text tokenization using the Hugging Face bert-base-uncased tokenizer with padding=True, truncation=True, max_length=512, and return_tensors="tf".

- (ii) Vector representation obtained from the [CLS] token of the final hidden state of the BERT encoder.

- (iii) Data balancing performed with SMOTE (Synthetic Minority Oversampling Technique, random_state=42).

- (iv) Dataset splitting into 80% training and 20% testing sets (random_state=42).

- (v) Model training with two strategies:

  - a. One-shot method using LogisticRegression (max_iter=1000) optimized via GridSearchCV (param_grid={"C": [0.001, 0.01, 0.1, 1, 10, 100]}, cv=5).

  - b. Epochs method using SGDClassifier (loss="log_loss", learning_rate="constant", eta0=0.01, max_iter=1, tol=None, random_state=42) trained for 500 epochs via incremental partial_fit.

- (vi) Evaluation with macro-averaged precision, recall, F1 score, and ROC–AUC. Learning curves were computed with cv=5 and scoring="accuracy".
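The balancing step (iii) can be illustrated with a small sketch of the interpolation idea behind SMOTE. This is not the library implementation the pipeline calls and it does not balance class counts by itself: the function name `smote_like`, the toy 2-D points, and the choice of `k` are assumptions made for illustration, and only the `random_state=42` seed is taken from the text.

```python
import math
import random


def smote_like(minority, n_new, k=5, seed=42):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority-class neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by Euclidean distance to `base`.
        others = [p for p in minority if p is not base]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        mate = rng.choice(neighbours)
        gap = rng.random()  # interpolation factor in [0, 1)
        # New point lies on the segment between `base` and `mate`.
        synthetic.append([b + gap * (m - b) for b, m in zip(base, mate)])
    return synthetic


# Hypothetical 2-D minority class; real inputs would be [CLS] embedding vectors.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote_like(minority, n_new=6, k=2)
print(new_points)
```

Because every synthetic point lies on a segment between two existing minority samples, the new points stay inside the region already occupied by that class.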

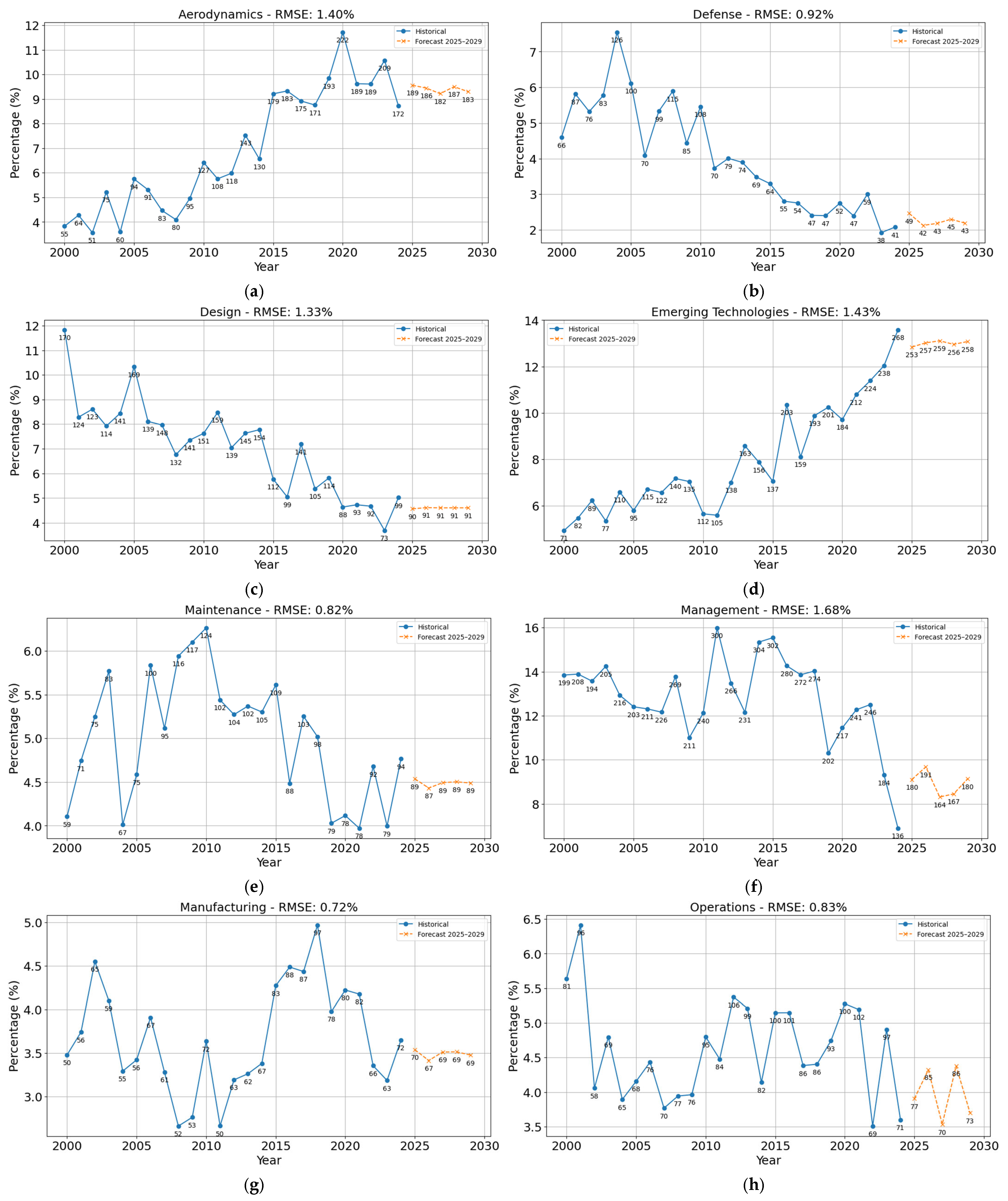

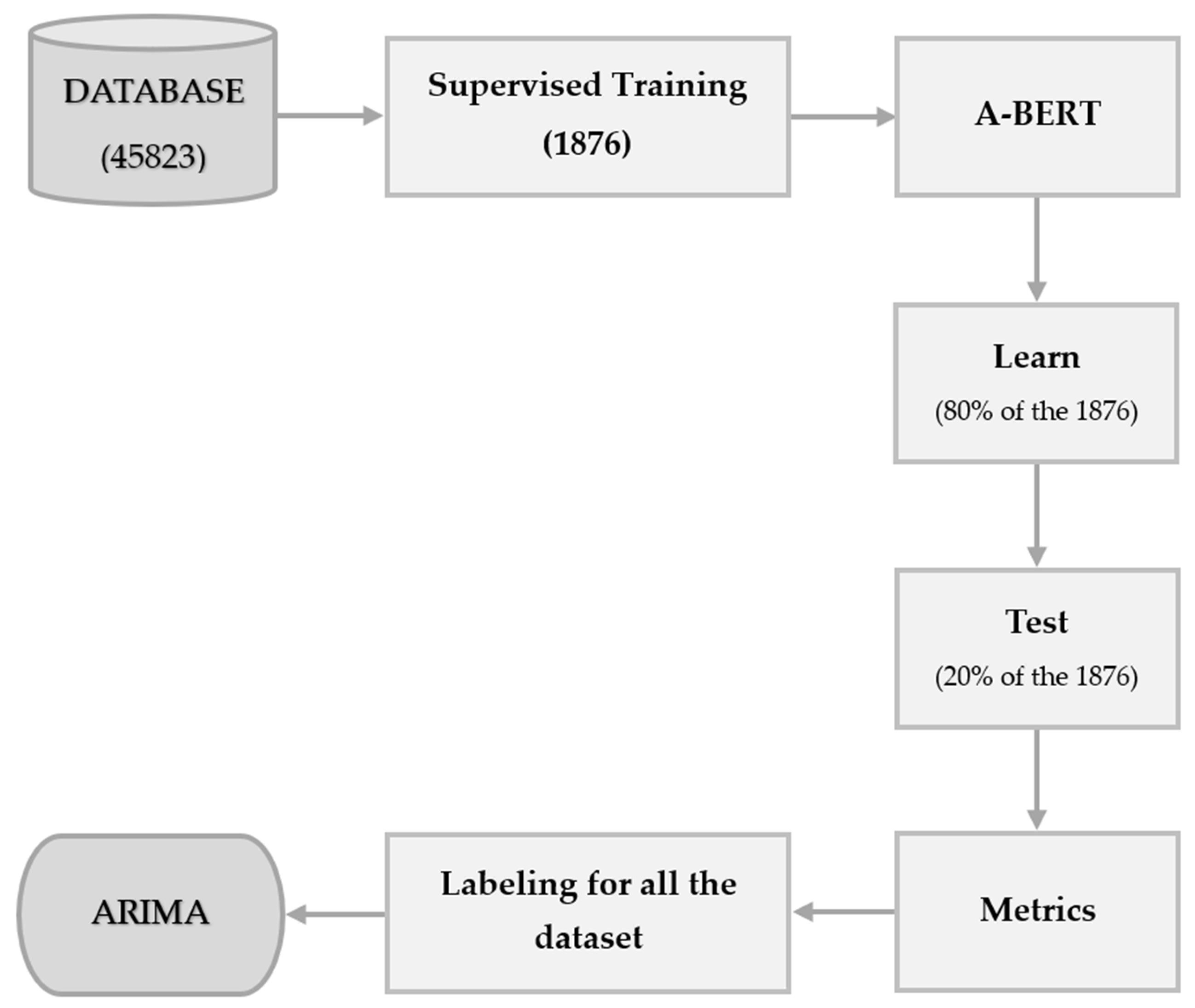

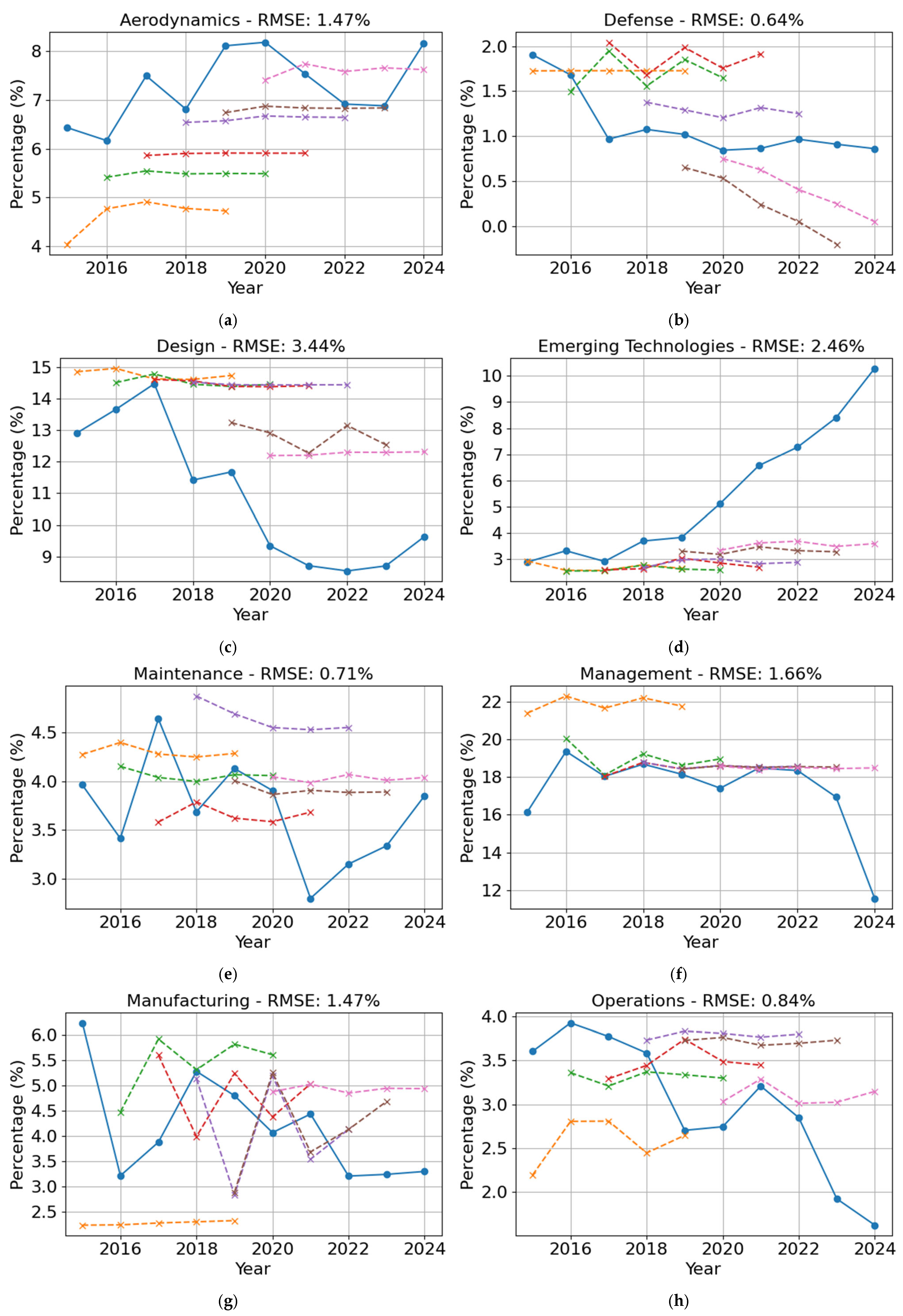

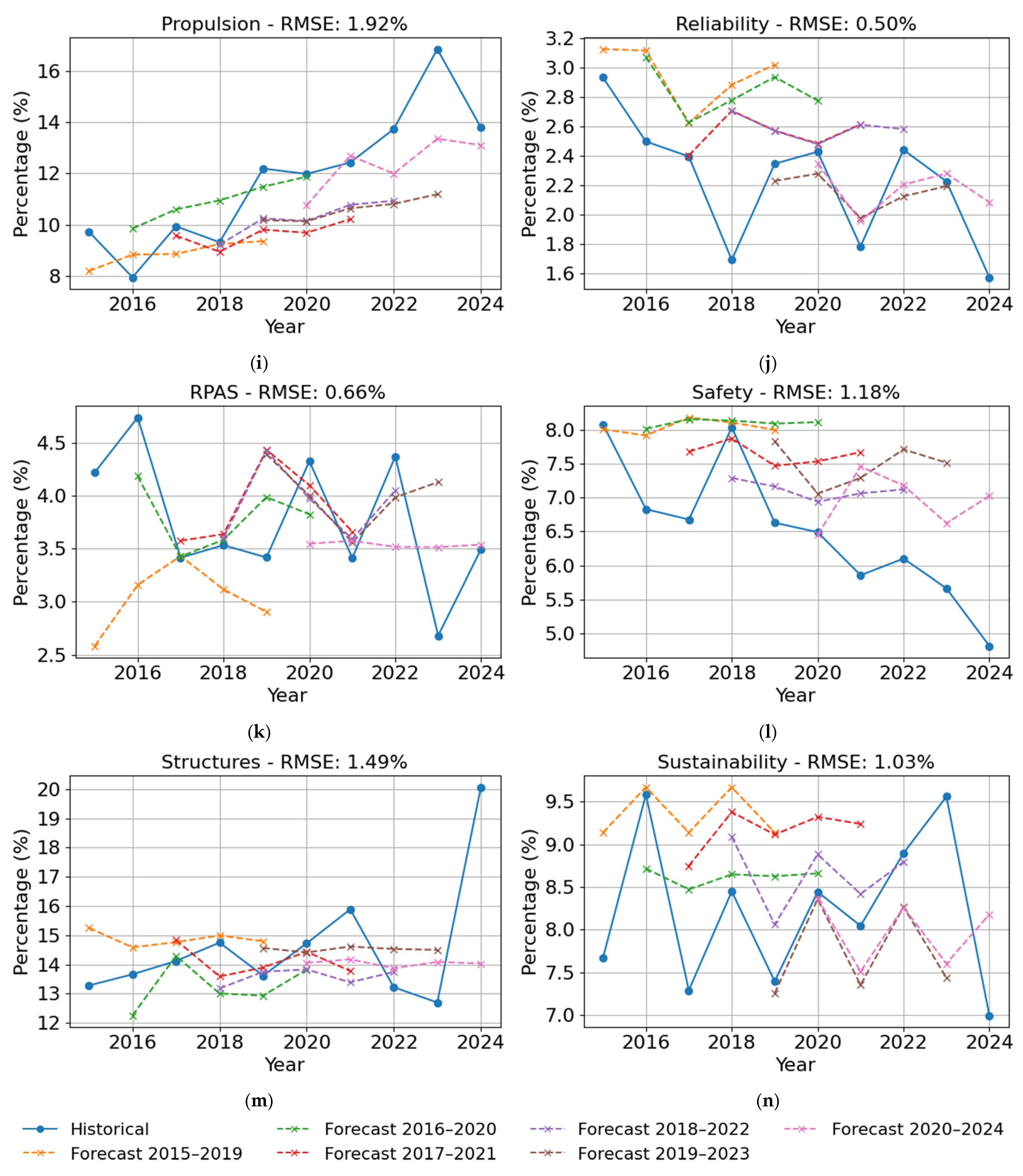

3.3. Forecasting with ARIMA

4. Results and Discussion

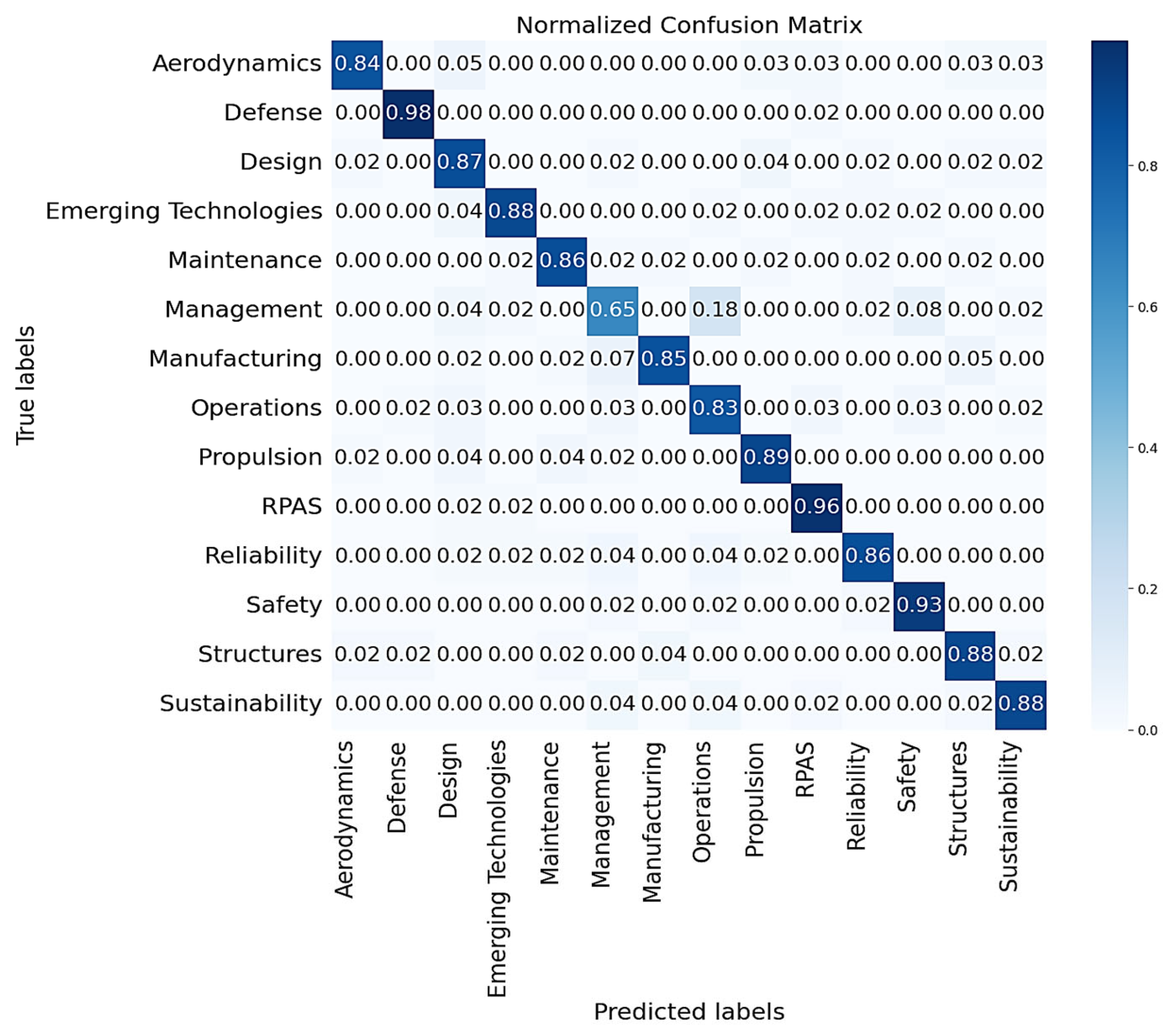

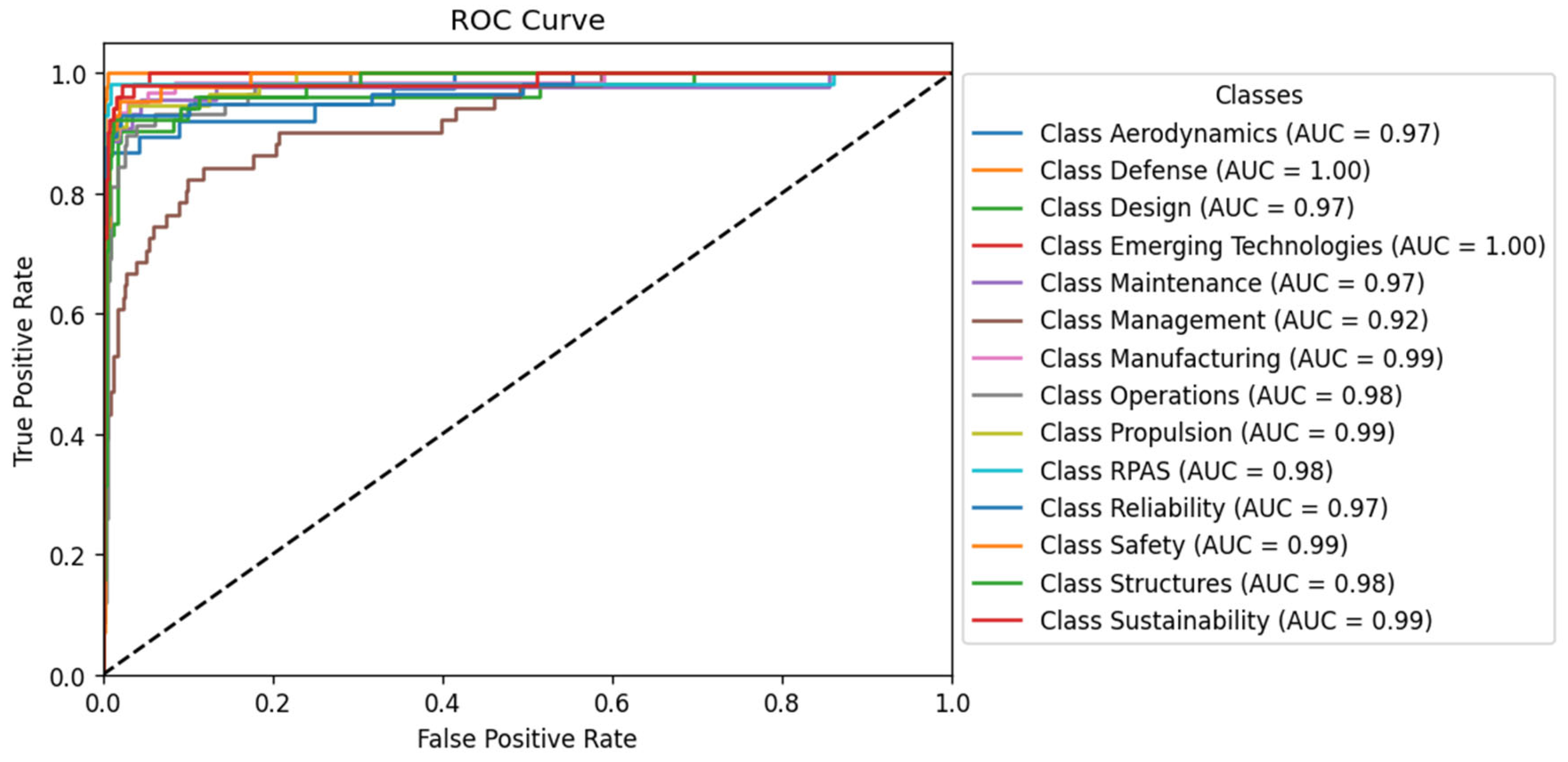

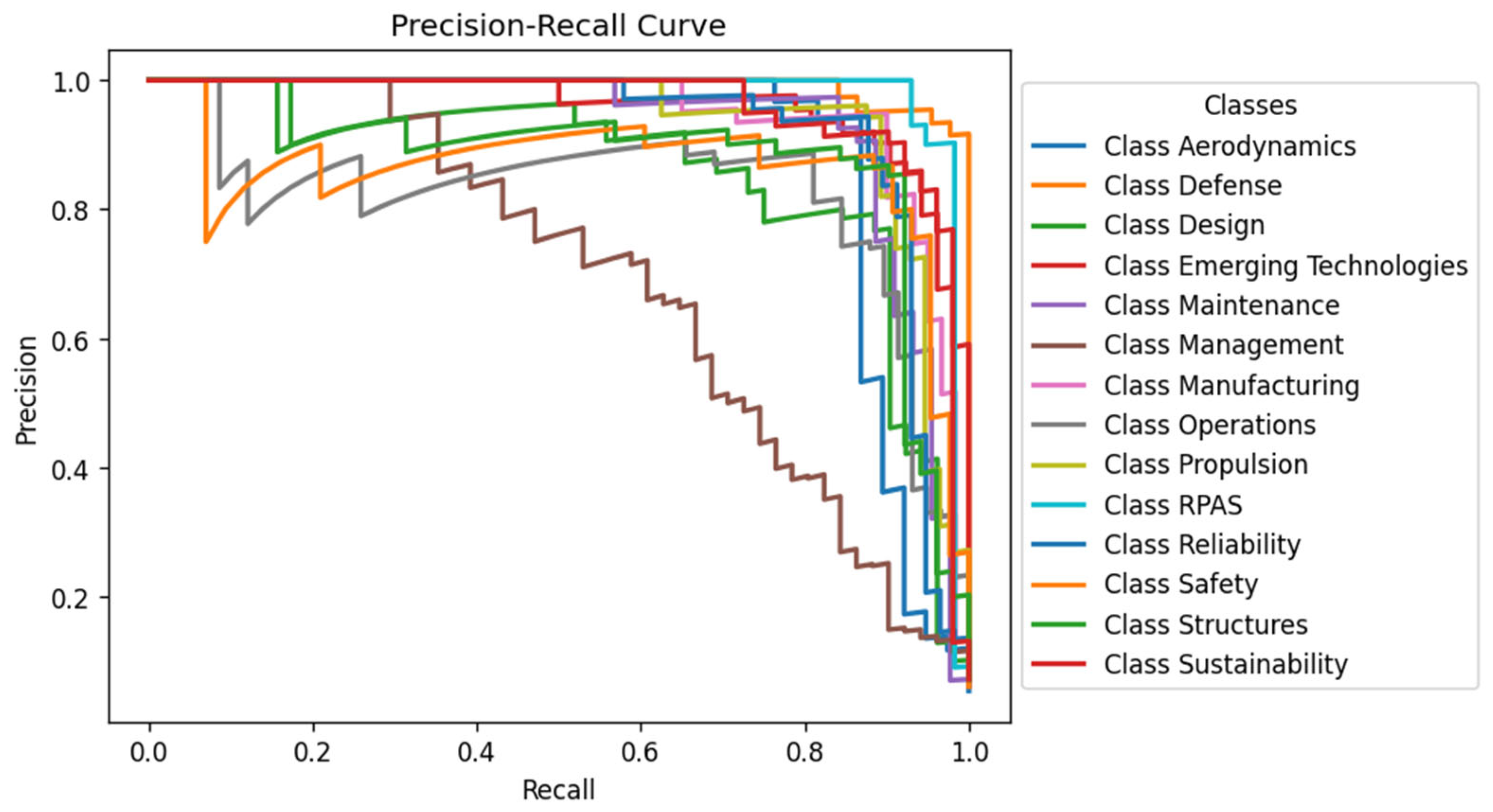

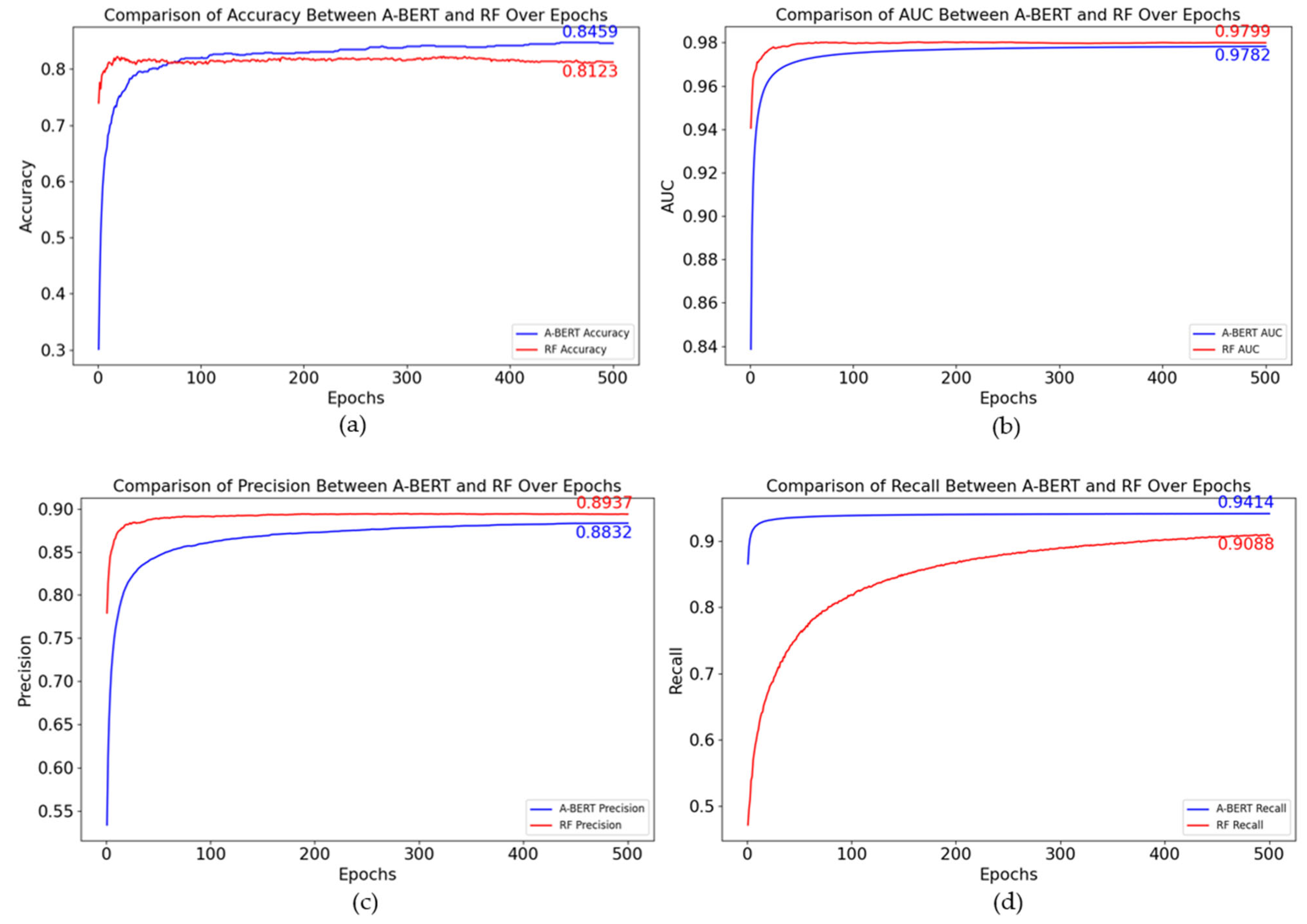

4.1. Discussion of the Results

4.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| A-BERT | Aviation Bidirectional Encoder Representations from Transformers |
| AI | Artificial Intelligence |
| ARIMA | Autoregressive Integrated Moving Average |
| AUC | Area Under the Curve |
| BERT | Bidirectional Encoder Representations from Transformers |
| CNN | Convolutional Neural Networks |
| DL | Deep Learning |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| NLP | Natural Language Processing |
| NTSB | National Transportation Safety Board |
| RF | Random Forest |
| RMSE | Root Mean Square Error |
| RNN | Recurrent Neural Networks |
| RoBERTa | Robustly Optimized Bidirectional Encoder Representations from Transformers |
| ROC | Receiver Operating Characteristic |
| RPAS | Remotely Piloted Aircraft System |
| SciBERT | Scientific Bidirectional Encoder Representations from Transformers |

Appendix A

| Component | Parameter | Value |
|---|---|---|
| Tokenizer (Hugging Face) | model_id | bert-base-uncased |
| Tokenizer call | padding | True |
| | truncation | True |
| | max_length | 512 |
| | return_tensors | "tf" |
| BERT encoder | model_id | bert-base-uncased |
| Encoding function | batch_size | 16 |
| Encoding/pooling | pooling | [CLS] token (last_hidden_state[:,0,:]) |
| SMOTE | random_state | 42 |
| Train/test split | test_size | 0.2 |
| | random_state | 42 |
| LogisticRegression | max_iter | 1000 |
| GridSearchCV | param_grid | {'C': [0.001, 0.01, 0.1, 1, 10, 100]} |
| | cv | 5 |
| Prediction (probabilities) | batch_size | 16 |
| Precision metric | average | "macro" |
| Learning curve | cv | 5 |
| | scoring | "accuracy" |
| | n_jobs | -1 |
| | train_sizes | np.linspace(0.1, 1.0, 5) |
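As a rough illustration of how the one-shot settings listed above fit together, the following sketch wires the train/test split, the grid search over `C`, and the macro-averaged metrics with scikit-learn. It is a minimal sketch rather than the authors' code: the feature matrix is a synthetic stand-in produced by `make_classification` (sample count, feature count, and the three classes are assumptions), the tokenizer, BERT encoder, SMOTE, and learning-curve stages are left out so that the example depends only on scikit-learn, and the one-vs-rest setting for multiclass ROC-AUC is also an assumption.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score, precision_score, recall_score, roc_auc_score
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the [CLS] embeddings (assumption: 600 samples,
# 32 features, 3 classes; real BERT vectors would be 768-dimensional).
X, y = make_classification(
    n_samples=600, n_features=32, n_informative=10, n_classes=3, random_state=42
)

# 80/20 split with the seed given in the table.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# Grid search over the regularisation strength C with 5-fold cross-validation.
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid={"C": [0.001, 0.01, 0.1, 1, 10, 100]},
    cv=5,
)
search.fit(X_train, y_train)

y_pred = search.predict(X_test)
y_prob = search.predict_proba(X_test)

metrics = {
    "best_C": search.best_params_["C"],
    "precision": precision_score(y_test, y_pred, average="macro"),
    "recall": recall_score(y_test, y_pred, average="macro"),
    "f1": f1_score(y_test, y_pred, average="macro"),
    # Assumption: one-vs-rest averaging for the multiclass ROC-AUC.
    "roc_auc": roc_auc_score(y_test, y_prob, multi_class="ovr", average="macro"),
}
print(metrics)
```

`GridSearchCV` refits the best estimator on the full training split by default, so `search.predict` already uses the selected value of `C`.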

| Component | Parameter | Value |
|---|---|---|
| Tokenizer (Hugging Face) | model_id | bert-base-uncased |
| Tokenizer call | padding | True |
| | truncation | True |
| | max_length | 512 |
| | return_tensors | "tf" |
| BERT encoder | model_id | bert-base-uncased |
| Encoding function | batch_size | 16 |
| Encoding/pooling | pooling | [CLS] token (last_hidden_state[:,0,:]) |
| SMOTE | random_state | 42 |
| Train/test split | test_size | 0.2 |
| | random_state | 42 |
| SGDClassifier | loss | 'log_loss' |
| | max_iter | 1 |
| | tol | None |
| | learning_rate | 'constant' |
| | eta0 | 0.01 |
| | random_state | 42 |
| SGD training loop | epochs | 500 |
| SGD partial_fit | classes | np.unique(labels) |

Appendix B


| Year | No. Papers | Year | No. Papers | Year | No. Papers | Year | No. Papers | Year | No. Papers |
|---|---|---|---|---|---|---|---|---|---|
| 2000 | 1437 | 2005 | 1636 | 2010 | 1980 | 2015 | 1943 | 2020 | 1895 |
| 2001 | 1498 | 2006 | 1716 | 2011 | 1876 | 2016 | 1962 | 2021 | 1964 |
| 2002 | 1429 | 2007 | 1858 | 2012 | 1973 | 2017 | 1962 | 2022 | 1967 |
| 2003 | 1439 | 2008 | 1954 | 2013 | 1900 | 2018 | 1953 | 2023 | 1977 |
| 2004 | 1671 | 2009 | 1918 | 2014 | 1981 | 2019 | 1961 | 2024 | 1973 |

| Class | A-BERT F1 Score | A-BERT AUC | Random Forest F1 Score | Random Forest AUC |
|---|---|---|---|---|
| Aerodynamics | 0.89 | 0.97 | 0.90 | 1.00 |
| Defense | 0.95 | 1.00 | 0.96 | 1.00 |
| Design | 0.83 | 0.97 | 0.79 | 0.98 |
| Emerging Technologies | 0.89 | 1.00 | 0.89 | 1.00 |
| Maintenance | 0.89 | 0.97 | 0.85 | 0.98 |
| Management | 0.65 | 0.92 | 0.65 | 0.97 |
| Manufacturing | 0.90 | 0.99 | 0.91 | 0.99 |
| Operations | 0.81 | 0.98 | 0.80 | 0.98 |
| Propulsion | 0.90 | 0.99 | 0.91 | 1.00 |
| RPAS | 0.93 | 0.98 | 0.97 | 1.00 |
| Reliability | 0.89 | 0.97 | 0.92 | 0.99 |
| Safety | 0.89 | 0.99 | 0.87 | 0.99 |
| Structures | 0.89 | 0.98 | 0.85 | 0.99 |
| Sustainability | 0.91 | 0.99 | 0.84 | 0.99 |

| Model | Precision | Accuracy |
|---|---|---|
| A-BERT | 87.6% | 87.3% |
| Random Forest | 87.2% | 86.5% |
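Step (vi) of the pipeline reports macro-averaged scores, meaning that each class contributes equally regardless of how many samples it has. The sketch below shows that computation from scratch on a toy example. The labels are hypothetical and the helper name `macro_scores` is an assumption; classes with no predictions are scored as 0, which is one common convention.

```python
from collections import Counter


def macro_scores(y_true, y_pred):
    """Return macro-averaged (precision, recall, F1) over all observed classes."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # the predicted class gains a false positive
            fn[truth] += 1  # the true class gains a false negative

    precisions, recalls, f1s = [], [], []
    for label in labels:
        p_den = tp[label] + fp[label]
        r_den = tp[label] + fn[label]
        p = tp[label] / p_den if p_den else 0.0
        r = tp[label] / r_den if r_den else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)

    n = len(labels)
    # Unweighted mean: every class counts the same.
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


# Toy labels (hypothetical, not drawn from the paper's dataset).
y_true = ["Safety", "Safety", "Design", "Design", "RPAS", "RPAS"]
y_pred = ["Safety", "Design", "Design", "Design", "RPAS", "Safety"]
precision, recall, f1 = macro_scores(y_true, y_pred)
print(round(precision, 4), round(recall, 4), round(f1, 4))  # 0.7222 0.6667 0.6556
```

Under macro averaging, a weak class such as Management (F1 of 0.65 for both models in the table) pulls the aggregate down as much as any larger class would.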

| Class/Years | 2000 | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 | 2007 | 2008 | 2009 | 2010 | 2011 | 2012 | 2013 | 2014 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Aerodynamics | 55 | 64 | 51 | 75 | 60 | 94 | 91 | 83 | 80 | 95 | 127 | 108 | 118 | 143 | 130 |
| Defense | 66 | 87 | 76 | 83 | 126 | 100 | 70 | 99 | 115 | 85 | 108 | 70 | 79 | 74 | 69 |
| Design | 170 | 124 | 123 | 114 | 141 | 169 | 139 | 148 | 132 | 141 | 151 | 159 | 139 | 145 | 154 |
| Emerging Technologies | 71 | 82 | 89 | 77 | 110 | 95 | 115 | 122 | 140 | 135 | 112 | 105 | 138 | 163 | 156 |
| Maintenance | 59 | 71 | 75 | 88 | 67 | 75 | 100 | 95 | 116 | 117 | 124 | 102 | 104 | 102 | 105 |
| Management | 199 | 208 | 194 | 205 | 216 | 203 | 211 | 226 | 269 | 211 | 240 | 300 | 266 | 231 | 304 |
| Manufacturing | 50 | 56 | 65 | 59 | 55 | 56 | 67 | 61 | 52 | 53 | 72 | 50 | 63 | 62 | 67 |
| Operations | 81 | 96 | 58 | 69 | 65 | 68 | 76 | 70 | 77 | 76 | 95 | 84 | 106 | 99 | 82 |
| Propulsion | 84 | 79 | 102 | 66 | 105 | 97 | 73 | 114 | 104 | 117 | 125 | 109 | 139 | 154 | 132 |
| RPAS | 61 | 37 | 42 | 53 | 73 | 71 | 61 | 93 | 102 | 53 | 71 | 86 | 99 | 86 | 88 |
| Reliability | 39 | 59 | 45 | 63 | 76 | 53 | 78 | 87 | 91 | 122 | 97 | 123 | 97 | 88 | 82 |
| Safety | 121 | 131 | 134 | 137 | 167 | 155 | 167 | 182 | 168 | 172 | 177 | 158 | 164 | 134 | 156 |
| Structures | 189 | 222 | 226 | 233 | 220 | 233 | 291 | 279 | 302 | 323 | 262 | 248 | 258 | 234 | 234 |
| Sustainability | 192 | 181 | 149 | 122 | 189 | 167 | 175 | 199 | 205 | 218 | 219 | 174 | 203 | 185 | 222 |

| Class/Years | 2015 | 2016 | 2017 | 2018 | 2019 | 2020 | 2021 | 2022 | 2023 | 2024 | 2025 | 2026 | 2027 | 2028 | 2029 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Aerodynamics | 179 | 183 | 175 | 171 | 193 | 222 | 189 | 189 | 209 | 172 | 189 | 186 | 182 | 187 | 183 |
| Defense | 64 | 55 | 54 | 47 | 47 | 52 | 47 | 59 | 38 | 41 | 49 | 42 | 43 | 45 | 43 |
| Design | 112 | 99 | 141 | 105 | 114 | 88 | 93 | 92 | 73 | 99 | 90 | 91 | 91 | 91 | 91 |
| Emerging Technologies | 137 | 203 | 159 | 193 | 201 | 184 | 212 | 224 | 238 | 268 | 253 | 257 | 259 | 256 | 258 |
| Maintenance | 109 | 88 | 103 | 98 | 79 | 78 | 78 | 92 | 79 | 94 | 89 | 87 | 89 | 89 | 89 |
| Management | 302 | 280 | 272 | 274 | 202 | 217 | 241 | 246 | 184 | 136 | 180 | 191 | 164 | 167 | 180 |
| Manufacturing | 83 | 88 | 87 | 97 | 78 | 80 | 82 | 66 | 63 | 72 | 70 | 67 | 69 | 69 | 69 |
| Operations | 100 | 101 | 86 | 86 | 93 | 100 | 102 | 69 | 97 | 71 | 77 | 85 | 70 | 85 | 73 |
| Propulsion | 170 | 146 | 183 | 169 | 222 | 222 | 248 | 269 | 316 | 305 | 341 | 343 | 368 | 376 | 395 |
| RPAS | 125 | 101 | 91 | 99 | 84 | 91 | 94 | 105 | 82 | 80 | 94 | 93 | 87 | 88 | 91 |
| Reliability | 102 | 77 | 84 | 76 | 87 | 61 | 74 | 67 | 77 | 60 | 74 | 62 | 73 | 62 | 72 |
| Safety | 112 | 126 | 112 | 139 | 143 | 105 | 128 | 97 | 108 | 73 | 87 | 69 | 75 | 66 | 69 |
| Structures | 205 | 222 | 241 | 226 | 238 | 233 | 208 | 208 | 217 | 361 | 313 | 262 | 275 | 293 | 290 |
| Sustainability | 143 | 193 | 174 | 173 | 180 | 162 | 168 | 184 | 196 | 141 | 174 | 171 | 170 | 170 | 170 |
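To give a feel for how an ARIMA-type model extends a yearly count series such as those in the table above, the sketch below hand-codes the simplest member of the family, ARIMA(0,1,0) with a constant (a random walk with drift). The model order is an assumption chosen for illustration; the order actually used in the paper is not stated in this section, so the numbers printed here are not expected to match the 2025 to 2029 columns. The function name `drift_forecast` is hypothetical.

```python
def drift_forecast(series, steps):
    """Forecast with ARIMA(0,1,0) plus a constant: y[t] = y[t-1] + c + e[t]."""
    # First difference (the "I" part with d = 1).
    diffs = [later - earlier for earlier, later in zip(series, series[1:])]
    # The mean of the differences is the least-squares estimate of the drift c.
    drift = sum(diffs) / len(diffs)
    # Each step ahead adds one more unit of drift to the last observation.
    return [series[-1] + drift * h for h in range(1, steps + 1)]


# Propulsion counts for 2015 to 2024, copied from the table above.
propulsion = [170, 146, 183, 169, 222, 222, 248, 269, 316, 305]
forecast = drift_forecast(propulsion, steps=5)
print(forecast)  # [320.0, 335.0, 350.0, 365.0, 380.0]
```

A full ARIMA(p, d, q) fit would add autoregressive and moving-average terms to the differenced series, which is why fitted forecasts can bend or flatten instead of following a straight line.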

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lázaro, F.L.; Santos, L.F.F.M.; Valério, D.; Melicio, R. Artificial Intelligence and Aviation: A Deep Learning Strategy for Improved Data Classification and Management. Appl. Sci. 2025, 15, 9403. https://doi.org/10.3390/app15179403
