AI-Enabled Customised Workflows for Smarter Supply Chain Optimisation: A Feasibility Study

Abstract

1. Introduction

- Is it feasible to employ LLMs in generating SCM-related processes, dynamically adapting to the ever-changing demands of the supply chain landscape?

- Is the integration financially feasible, and what are the technical, operational and socio-technical implications for the overall supply chain operations to be considered?

- To evaluate the technical feasibility of leveraging LLMs for automating and optimising key SCM processes.

- To analyse the operational implications of integrating LLMs and chatbot technologies into existing SCM systems, assessing their impact on workflow efficiency, decision-making processes, and overall supply chain agility, through a case study.

- To conduct a comprehensive financial feasibility analysis of implementing LLM-based SCM solutions.

- To explore the socio-technical dimensions of adopting LLM-based workflow automation in SCM.

- To develop a practical and actionable roadmap for organisations seeking to implement LLM-powered workflow editors.

2. Literature Review

2.1. Core Supply Chain Processes

- Planning: This involves creating a strategy for sourcing raw materials, production schedules, inventory levels, and distribution of finished goods. Its key processes include demand forecasting, setting inventory levels, and supplier selection. The Chain-AI Bot can significantly enhance this stage by providing more accurate demand forecasts using real-time data and market trends, allowing for better resource allocation and inventory management.

- Production: This covers manufacturing, that is, transforming raw materials into finished products efficiently, and quality control to ensure that products meet quality standards. LLMs can optimise production schedules based on demand fluctuations, reducing bottlenecks and improving overall efficiency.

- Warehousing: This process mainly covers storing goods safely and efficiently and tracking inventory to monitor stock levels and movements. The Chain-AI Bot can provide real-time inventory tracking and optimise warehouse space utilisation, reducing storage costs and improving retrieval times.

- Delivery/Logistics: Managing the transportation and distribution of finished products to customers. This includes warehouse management, order fulfilment, transportation planning, and ensuring timely delivery. The Chain-AI Bot can refine delivery routes, optimise transportation modes, and provide real-time tracking, enhancing delivery speed and efficiency.

- Supplier Relationship Management: Building and maintaining relationships with suppliers to ensure a smooth flow of materials, timely deliveries, quality assurance, and collaboration on improvements. LLM-based tools such as the Chain-AI Bot can automate communication, streamline contract management, and enhance collaboration with suppliers, reducing delays and costs.

- Customer Service: This includes several business processes, most importantly marketing, communicating with customers, order processing, and returns management (handling product returns and exchanges). The Chain-AI Bot can handle queries, process orders, and manage returns, providing quicker and more personalised responses, thereby improving customer satisfaction.

2.2. Challenges in Supply Chain Management

2.2.1. Process-Related Supply Chain Challenges

2.2.2. SCM and AI Technologies

2.3. Theoretical Underpinnings

2.3.1. Dynamic Capabilities Theory

2.3.2. Resource-Based View

2.3.3. Socio-Technical Systems Theory and the Use of AI for SCM Optimisation

3. Research Methodology

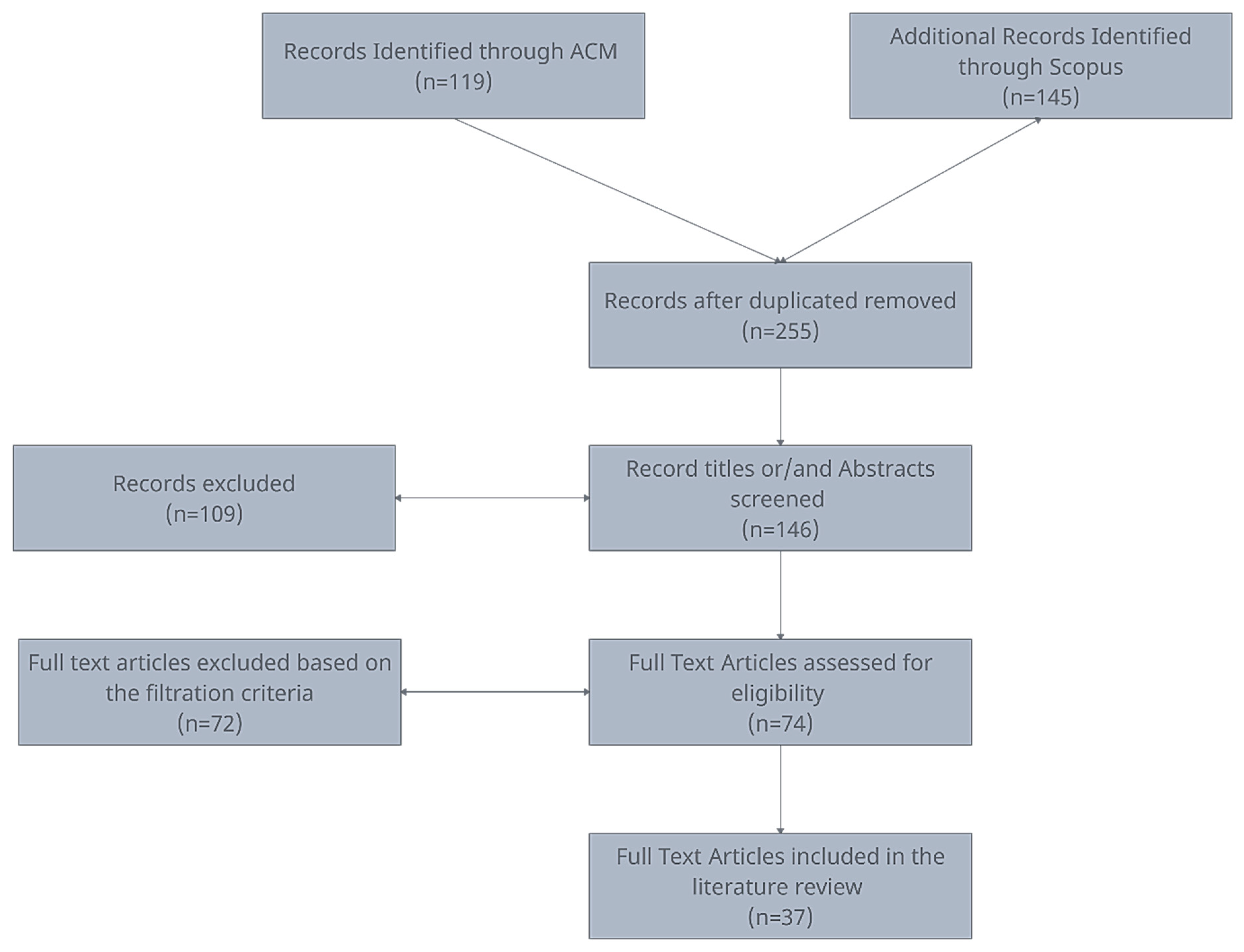

3.1. Literature Review Methodology

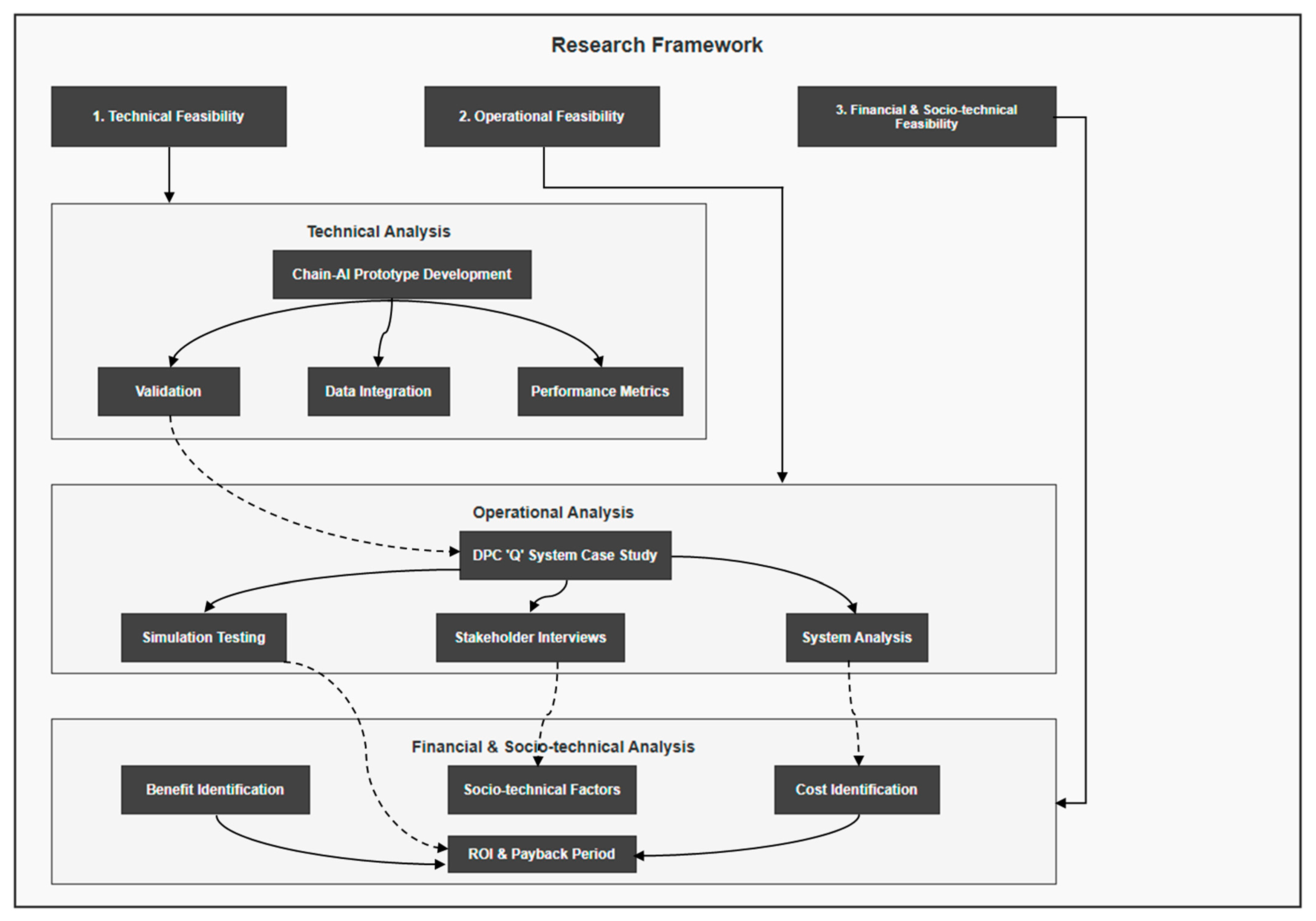

3.2. Research Framework and Core Objectives

- Technical Feasibility: To assess the potential capabilities of LLMs for automating workflows in SCM through prototype development.

- Operational Feasibility: To examine operational implications through a detailed case study of DPC’s “Q” system.

- Financial and Socio-technical Feasibility and Implications: To evaluate the financial and socio-technical aspects of implementing LLM-based solutions, providing insights for decision-making.

3.2.1. Technical Feasibility Analysis

- Performance metrics: To evaluate the Chain-AI prototypes, metrics such as response accuracy (the LLM’s ability to generate contextually accurate responses), latency (the time taken to process data), and scalability (the model’s ability to handle varying data volumes) were measured.

- Data Integration: The LLM prototypes were tested using structured data from Enterprise Resource Planning (ERP) systems (inventory levels, order statuses, procurement schedules), and unstructured data (supplier communications, product descriptions) to assess the LLM’s ability to handle diverse inputs.

- Validation: To validate the technical feasibility, the performance of the Chain-AI prototypes was benchmarked against traditional SCM tools. This involved usability testing with SCM stakeholders, as well as gathering qualitative feedback through structured interviews to identify the system’s strengths and weaknesses.
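As an illustration of the performance metrics listed above, the following minimal sketch measures response accuracy and latency for any question-answering callable. The scoring rule (a case is correct when an expected phrase appears in the answer), the `stub_assistant` stand-in, and the sample cases are assumptions made for this example; they are not the evaluation procedure used for the Chain-AI prototypes.

```python
import time
from statistics import mean
from typing import Callable


def evaluate(assistant: Callable[[str], str], cases: list[tuple[str, str]]) -> dict:
    """Return accuracy and latency statistics for a question-answering callable."""
    latencies = []
    correct = 0
    for question, expected in cases:
        start = time.perf_counter()
        answer = assistant(question)
        latencies.append(time.perf_counter() - start)
        # Assumption: a case is correct if the expected phrase appears in the answer.
        if expected.lower() in answer.lower():
            correct += 1
    return {
        "accuracy": correct / len(cases),
        "mean_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
    }


def stub_assistant(question: str) -> str:
    """Hypothetical stand-in for a prototype; a real run would query the LLM."""
    canned = {"stock level of sku-1?": "SKU-1 has 40 units in stock."}
    return canned.get(question.lower(), "I do not know.")


# Hypothetical test cases: (question, phrase expected in a correct answer).
cases = [
    ("Stock level of SKU-1?", "40 units"),
    ("Status of order 7?", "dispatched"),
]
report = evaluate(stub_assistant, cases)
print(report)
```

Scalability could be probed with the same harness by repeating the run over progressively larger case sets and comparing the latency figures.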

3.2.2. Operational Feasibility Analysis

3.2.3. Financial and Socio-Technical Feasibility Analysis

- Cost Identification: Analysis of development costs (AI model training, software integration, hardware infrastructure), implementation costs (system integration, user training, downtime during transition), and maintenance costs (system updates, bug fixes, and data storage).

- Benefit Analysis: Evaluation of potential benefits such as improved operational efficiency, reduced labour costs, decreased inventory costs, and increased sales due to better customer satisfaction.
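The cost and benefit categories above can be combined into simple indicators such as net benefit, return on investment, and payback period. The sketch below is one possible formulation; the class name and all monetary figures are placeholders chosen for illustration and are not results from this study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostBenefit:
    development: float         # one-off: model training, integration, hardware
    implementation: float      # one-off: system integration, training, downtime
    annual_maintenance: float  # recurring: updates, bug fixes, storage
    annual_benefit: float      # recurring: efficiency gains, savings, extra sales

    def total_cost(self, years: int) -> float:
        return self.development + self.implementation + self.annual_maintenance * years

    def net_benefit(self, years: int) -> float:
        return self.annual_benefit * years - self.total_cost(years)

    def roi(self, years: int) -> float:
        """Net benefit divided by total cost over the horizon."""
        return self.net_benefit(years) / self.total_cost(years)

    def payback_years(self) -> Optional[float]:
        """Years needed to recover the one-off costs, or None if never."""
        net_annual = self.annual_benefit - self.annual_maintenance
        if net_annual <= 0:
            return None
        return (self.development + self.implementation) / net_annual


# Placeholder figures for illustration only.
plan = CostBenefit(
    development=100_000,
    implementation=50_000,
    annual_maintenance=20_000,
    annual_benefit=80_000,
)
print(plan.net_benefit(3), round(plan.roi(3), 3), plan.payback_years())
```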

4. Research Findings

4.1. Technical Feasibility

4.1.1. Supply Chain Optimisation Using LLMs

4.1.2. Addressing the Process-Related Challenges of Inventory Management Using LLMs

4.1.3. The Feasibility of Generating Customised and Dynamic Workflows Using LLMs Based on the Specific Customers’ Needs in Real-Time

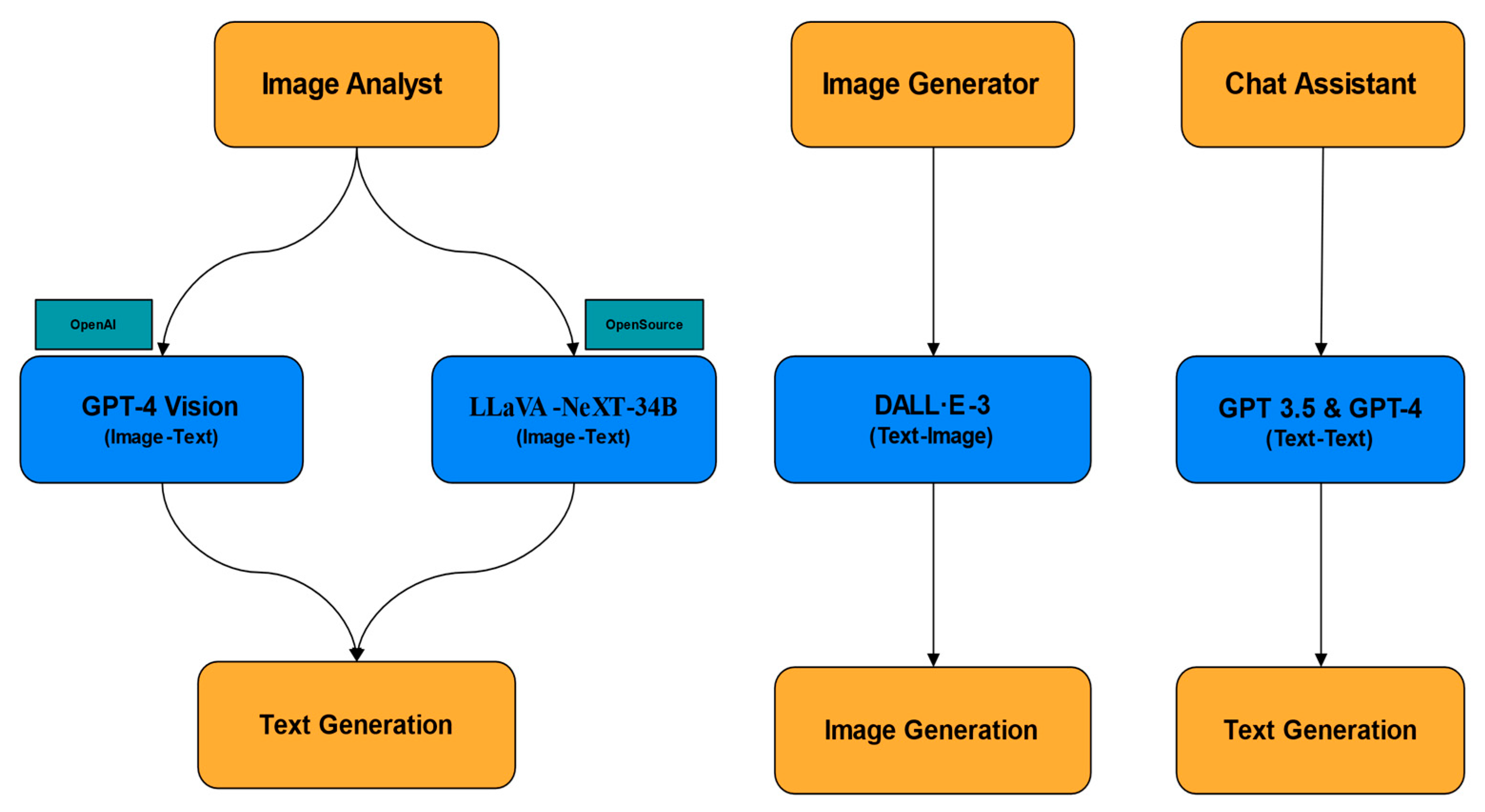

- SCM AI Image Analyst: Image Analyst is an application developed for analysing supply chain-related images and facilitating conversations based on the insights generated. It utilises the following two powerful language and vision models:

  - OpenAI GPT-4 Vision (https://platform.openai.com/docs/guides/vision) (accessed on 21 March 2025).

  - LLaVA-NeXT-34B (https://github.com/haotian-liu/LLaVA) (accessed on 21 March 2025).

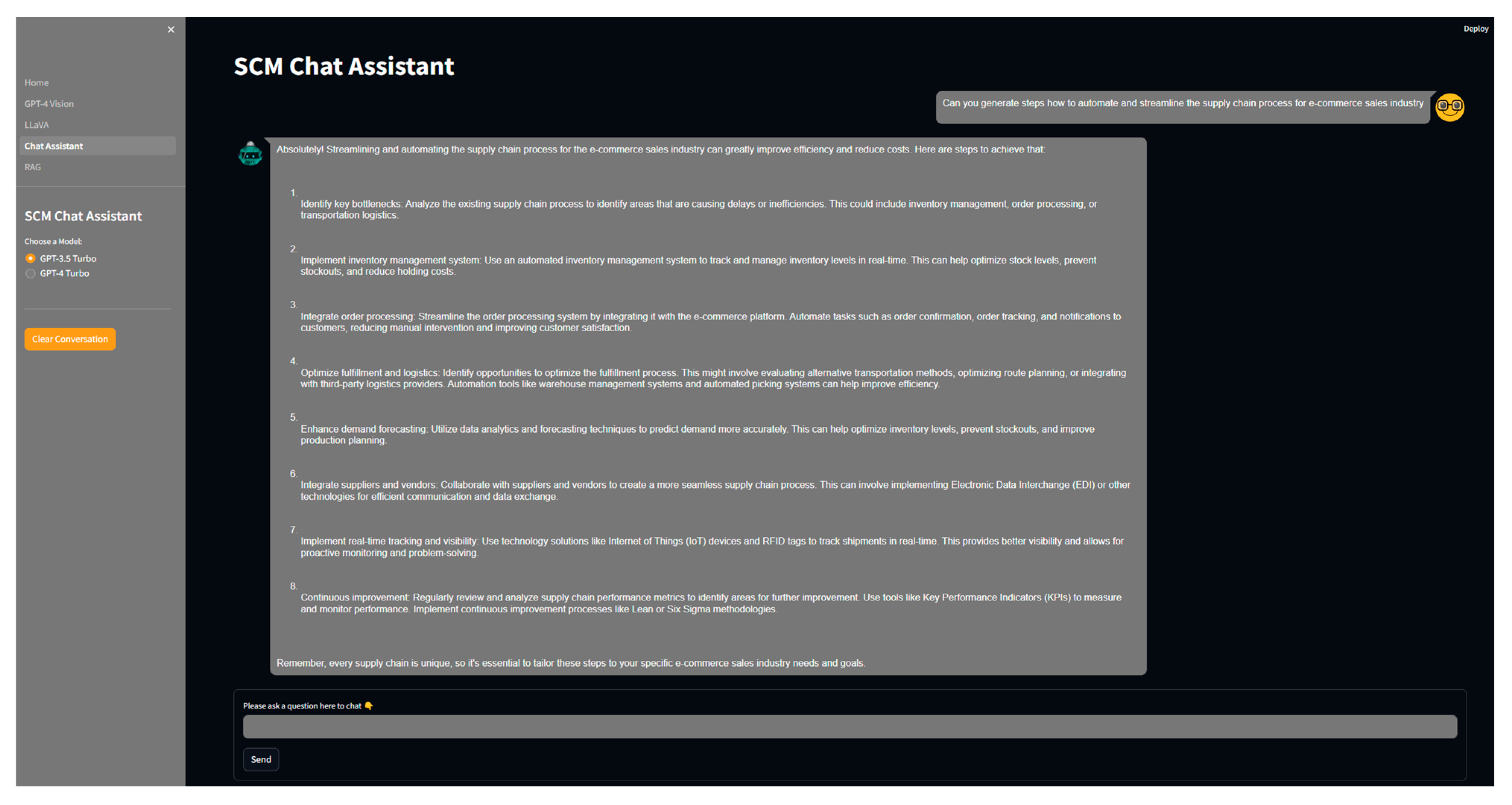

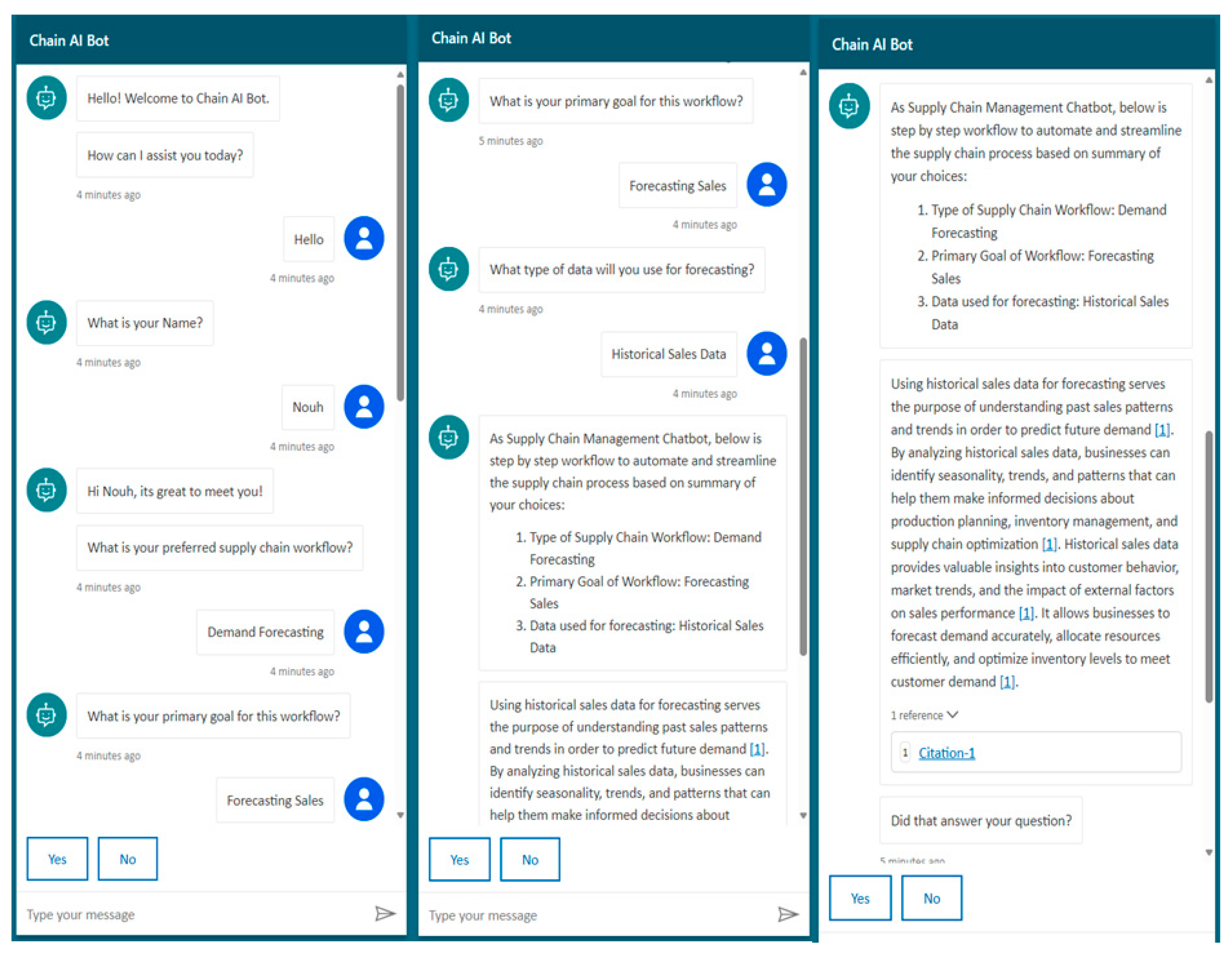

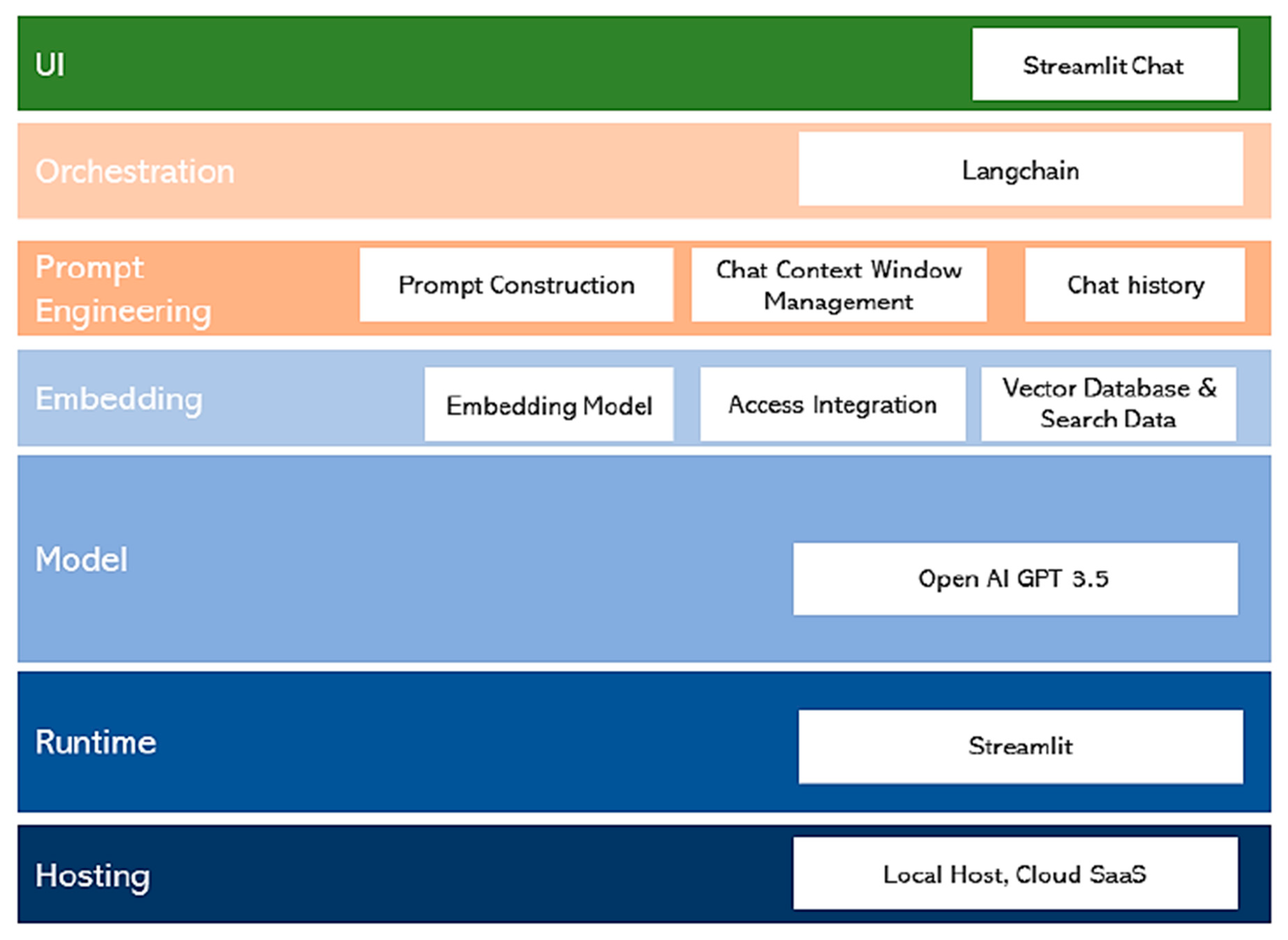

- SCM AI Chat Assistant: The SCM Chat Assistant is an intelligent conversational tool designed for efficient interaction and information retrieval within the realm of supply chain management. Powered by cutting-edge language models, including OpenAI GPT-3.5 Turbo (https://platform.openai.com/docs/models/gpt-3-5-turbo) (accessed on 21 March 2025) and GPT-4 Turbo (https://platform.openai.com/docs/models/gpt-4-and-gpt-4-turbo) (accessed on 21 March 2025), this chat assistant enables users to ask detailed questions about various aspects of supply chain workflows. By leveraging the advanced capabilities of these models, the SCM Chat Assistant delivers accurate and contextually relevant responses, facilitating streamlined communication and decision-making in the complex landscape of supply chain operations (Figure 5).
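To make the chat assistant's interaction pattern concrete, the sketch below shows how a user question and a few ERP records might be packaged as role-tagged messages, the general input convention of chat-style LLM APIs. The system prompt wording, the `build_messages` helper, and the sample record are hypothetical; no API call is made, and this is not the prototype's actual prompt.

```python
import json

# Hypothetical system prompt; the prototype's real prompt is not given in the source.
SYSTEM_PROMPT = (
    "You are a supply chain assistant. Answer only from the provided context. "
    "If the context is insufficient, say so."
)


def build_messages(question: str, context_rows: list[dict]) -> list[dict]:
    """Package a question and structured context as chat messages."""
    context = json.dumps(context_rows, indent=2)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


messages = build_messages(
    "Which items are below their reorder level?",
    [{"sku": "SKU-1", "on_hand": 4, "reorder_level": 10}],
)
for message in messages:
    print(message["role"], "->", message["content"][:60])
```

Keeping prompt construction separate from the model call makes it straightforward to send the same messages to different models and compare their answers.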

4.2. Operational Feasibility (Case Study: DPC Company: The “Q” System)

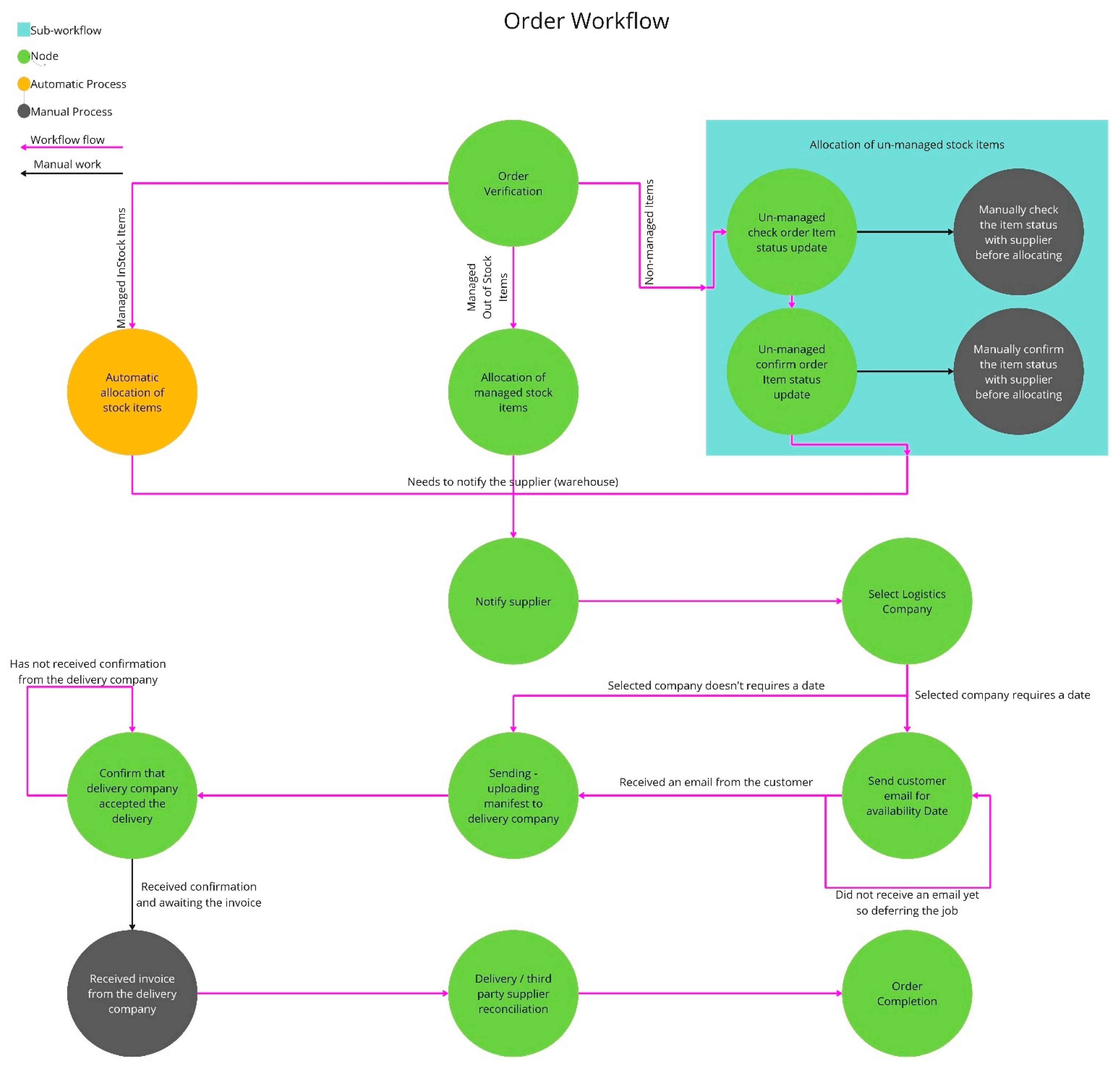

4.2.1. Key Processes of the “Q” System

- Order Management: This is the entry point for fulfilling an order. The system ingests orders from the retailer’s website, creates stock groups, allocates items, selects a logistics company, notifies that company, and sends confirmation emails. The system also creates a series of workflows that must be completed to fulfil the order. These include the following:

  - Order Verification: This workflow verifies the order and customer details before moving the order to dispatch.

  - Stock Allocation: This workflow allocates stock items, either from first-party or third-party suppliers, which involves checking availability and confirming delivery dates.

  - Selecting a Delivery Company: This involves selecting a delivery company and calculating the estimated cost.

  - Customer Availability Date: This involves contacting the customer to confirm a delivery date.

  - Contacting Delivery Company: This workflow sends a manifest to the chosen delivery company.

  - Awaiting Delivery Company Response: Staff await confirmation from the delivery company.

  - Delivery Reconciliation: This confirms the order has been delivered and reconciles the estimated delivery cost with actual costs.

- Stock Management: The “Q” system includes workflows for managing stock items, including adding, editing, increasing, and decreasing stock levels.

- Product Management: The system provides workflows for managing products listed on the retailer’s website, including adding, editing, and publishing product details.

- Range Management: Similarly to product management, the system manages product ranges using workflows for adding, editing and publishing range details.

- Customer Service Cases: The “Q” system manages customer service cases using multiple workflows to simplify and expedite the resolution process. These include creating/editing follow-ups and escalating or de-escalating cases.

- Container Workflows: This part of the system manages shipments of stock items owned by the retailer (first-party supplier items). This includes workflows for adding and editing container details and their arrival dates.
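The order-fulfilment workflows listed under Order Management can be pictured as an ordered sequence of steps that an order must pass through. The sketch below models that sequence; it assumes the steps are strictly linear, which the description suggests but does not state, and the class and method names are illustrative rather than taken from the “Q” system.

```python
from enum import Enum


class Step(Enum):
    ORDER_VERIFICATION = 1
    STOCK_ALLOCATION = 2
    SELECT_DELIVERY_COMPANY = 3
    CUSTOMER_AVAILABILITY_DATE = 4
    CONTACT_DELIVERY_COMPANY = 5
    AWAIT_DELIVERY_COMPANY_RESPONSE = 6
    DELIVERY_RECONCILIATION = 7


class Order:
    """Tracks which fulfilment workflows are still pending for one order."""

    def __init__(self, order_id: str) -> None:
        self.order_id = order_id
        self.pending = list(Step)  # Enum members iterate in definition order
        self.completed: list[Step] = []

    @property
    def current(self):
        return self.pending[0] if self.pending else None

    @property
    def fulfilled(self) -> bool:
        return not self.pending

    def complete(self, step: Step) -> None:
        # Assumption: steps must be completed in the listed order.
        if step is not self.current:
            raise ValueError(f"{step.name} is not the current step")
        self.completed.append(self.pending.pop(0))


order = Order("Q-1001")  # hypothetical order reference
order.complete(Step.ORDER_VERIFICATION)
print(order.current.name, order.fulfilled)
```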

4.2.2. The Key Process-Related Challenge in DPC’s “Q” System

- Supply Chain Connection: A primary challenge is the lack of seamless integration between the retailer, suppliers, and delivery companies. The need to connect these entities to optimise processes and ensure secure data sharing is a major pain point. This challenge aligns with the literature on supply chain visibility and collaboration, highlighting the need for better integration across different stakeholders.

- Delivery Manifest: The delivery manifest workflows are currently complex because each delivery company requires a custom workflow. This results in many workflows to maintain and increases the manual effort involved. Moreover, the manual work involved in the response-related workflow can be reduced. This finding highlights the inflexibility of the current system and the need for a more adaptable solution, aligning with the research on the importance of agility in SCM.

- Containers Shipment Addition: The process of adding new shipments for first-party supplier items is primarily manual. The “Q” system acts more as a database for this data rather than as a tool that facilitates and streamlines this task. This manual work leads to inefficiencies and increases the risk of errors. This demonstrates a specific pain point where LLM-based automation can significantly reduce manual effort and improve data management.

- Generalised Workflow Framework: Adding a new workflow into the system requires software development, which is time-consuming. The current framework lacks adaptability, highlighting the need for a more flexible, data-driven system. This inflexibility is a critical barrier to rapid process improvement and innovation, showcasing the need for a dynamic workflow management approach that LLMs can enable.
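One way to read the delivery manifest and generalised workflow challenges is that per-company differences currently live in code, whereas a data-driven design would keep them in configuration. The sketch below illustrates that idea under stated assumptions: the carrier names, field mappings, and output formats are invented for the example and do not describe the “Q” system or any real delivery company.

```python
import csv
import io
import json

# Hypothetical per-carrier specifications: target field -> source field, plus format.
MANIFEST_SPECS = {
    "carrier_a": {
        "fields": {"ref": "order_id", "postcode": "postcode", "parcels": "parcel_count"},
        "format": "csv",
    },
    "carrier_b": {
        "fields": {"OrderNumber": "order_id", "DeliveryPostcode": "postcode"},
        "format": "json",
    },
}


def build_manifest(carrier: str, order: dict) -> str:
    """Render one order as a manifest in the format the carrier's spec requests."""
    spec = MANIFEST_SPECS[carrier]
    row = {target: order[source] for target, source in spec["fields"].items()}
    if spec["format"] == "json":
        return json.dumps(row)
    if spec["format"] == "csv":
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(row))
        writer.writeheader()
        writer.writerow(row)
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {spec['format']}")


order = {"order_id": "Q-1001", "postcode": "AB1 2CD", "parcel_count": 2}
print(build_manifest("carrier_a", order))
print(build_manifest("carrier_b", order))
```

Under this design, supporting a new delivery company means adding one specification entry rather than developing a new workflow; an LLM could plausibly draft such an entry from a carrier's documentation, subject to human review.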

4.2.3. Analysis and Interpretation

4.3. Financial Feasibility Analysis

- Data Preparation and Annotation: This is a major cost driver. The expenses are influenced by factors such as data acquisition, the volume of data needed for effective training, manual annotation processes, licensing fees for third-party datasets, and ensuring data privacy compliance. Strategies to optimise these costs, such as using smaller high-quality datasets with augmentation techniques, employing semi-automated annotation tools, and negotiating bulk licensing, are essential. The significant financial investment in data preparation demonstrates the need for a strategic approach to data management in LLM-based SCM solutions.

- Model Training and Fine-Tuning: Training and fine-tuning LLMs can be computationally intensive and time-consuming. The high computational costs involved in training LLMs demand a scalable infrastructure.

- Infrastructure Costs: Running and maintaining LLMs requires robust infrastructure, including servers with high computational power and storage capabilities. The cost will vary depending on factors such as usage volume, model size, and required response times. The study notes that hosting experiments on AWS and Azure showed that costs grew exponentially without robust management.

- Licensing Fees: Some LLMs are proprietary and require licensing fees for commercial use, which adds to the overall cost. The choice between a pay-by-token model and a hosting model needs to be considered carefully. Under the pay-by-token approach, companies pay according to the amount of data processed by the model service, whereas hosting requires upfront infrastructure investment. The best option depends on the specific needs of the user; for the purposes of this research, a pay-by-token model was chosen, owing to the nature of the nodeStream© and buttress products.
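The trade-off between pay-by-token and hosting can be framed as a break-even calculation: hosting carries a fixed monthly cost, while pay-by-token cost grows with usage. The sketch below is a simplified model; the linear cost functions and all prices are assumptions for illustration, not vendor pricing or figures from this study.

```python
from typing import Optional


def pay_by_token_cost(tokens: float, price_per_1k: float) -> float:
    """Monthly cost when paying per 1,000 tokens processed."""
    return tokens / 1000 * price_per_1k


def hosting_cost(tokens: float, fixed_monthly: float, marginal_per_1k: float = 0.0) -> float:
    """Monthly cost of self-hosting: fixed infrastructure plus optional usage cost."""
    return fixed_monthly + tokens / 1000 * marginal_per_1k


def break_even_tokens(
    fixed_monthly: float, price_per_1k: float, marginal_per_1k: float = 0.0
) -> Optional[float]:
    """Monthly token volume above which hosting becomes cheaper, or None if never."""
    saving_per_1k = price_per_1k - marginal_per_1k
    if saving_per_1k <= 0:
        return None
    return fixed_monthly / saving_per_1k * 1000


# Placeholder prices for illustration only.
threshold = break_even_tokens(fixed_monthly=3000.0, price_per_1k=0.03)
print(f"Hosting pays off above roughly {threshold:,.0f} tokens per month")
```

Below the threshold, pay-by-token is cheaper under these assumptions, which is consistent with choosing it for exploratory or low-volume use.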

4.3.1. Sales of the Product

4.3.2. Maintain the Project’s Financial Viability and Attractiveness

- Monitoring and review for adaptive financial management: The research underscores the necessity of continuous financial monitoring to ensure project viability. Regularly generating financial reports (monthly, quarterly, and yearly) is crucial for tracking project performance. However, simply generating reports is insufficient; these must be actively used to compare actual financial performance against predicted performance to identify deviations and their underlying causes. This analytical approach facilitates data-driven decision-making, allowing agile adjustments to financial strategy in response to evolving project needs. For example, if the cost of training the LLM exceeds the initial budget, this approach would allow the team to identify and address this overspending by either finding a more cost-effective solution or by adjusting the project’s budget. This proactive financial management stance moves beyond a descriptive approach.

- User feedback integration for enhanced value: The study highlights the critical role of user feedback in maintaining the project’s appeal and usefulness. Actively incorporating user input into the project’s development ensures alignment with user needs and preferences. This feedback is not just about identifying bugs but about understanding how users interact with the system and what features add the most value. For example, the “Q” system, as described in the case study, highlights the complexities users face with manual processes in delivery manifest workflows, which can directly inform what aspects of the LLM-based system need to be prioritised. This user-centric approach drives continuous improvement and ensures the LLM-based solution remains relevant and effective. By understanding pain points related to the LLM’s responsiveness for specific supply chain management tasks, the development team can prioritise refining the model in that area, ultimately enhancing user satisfaction.

- Iterative improvement as a financial strategy: The research emphasises that continuous improvement is not merely about product development, but a financial necessity. Regularly gathering and analysing customer feedback directly feeds into product enhancements, addressing shortcomings promptly and improving the overall range. A commitment to quality and user responsiveness is not merely a customer service tactic; it also promotes loyalty and can act as a key differentiator in a competitive market. Furthermore, monitoring and reviewing the available models helps in selecting the most cost-effective and efficient ones. For instance, GPT-4 may be more expensive to use than GPT-3.5, which should be weighed when assessing the cost-effectiveness of a specific part of the project. This iterative approach allows the business to grow by ensuring that the product delivers maximum value for money for its users.

- User satisfaction and engagement for long-term viability: The research emphasises the importance of user engagement, suggesting that fostering a sense of ownership and investment in the project’s user base is crucial to long-term financial health. When users are involved in the feedback process and feel their suggestions are considered, they develop a sense of ownership and loyalty, leading to higher engagement and satisfaction. For example, involving DPC employees, who use the “Q” system, in the development and feedback process for the LLM-based workflows can increase their satisfaction and adoption of the new system. This loyalty translates into sustained usage and a more reliable revenue stream. Active participation of users also helps in understanding the market and identifying areas for enhancement. Encouraging this sense of ownership involves actively listening to users’ input and integrating their suggestions into our project where possible. This promotes the belief that our company values and listens to its users, leading to increased satisfaction and loyalty.

- Building a reputation for future growth: By actively incorporating feedback and demonstrating a commitment to continuous improvement, the project can cultivate a reputation for responsiveness and customer-centricity. This reputation is crucial to attracting new users and can have a positive impact on the project’s financial viability. A user-friendly and adaptable system attracts users, which drives up adoption rates and improves the revenue potential of the system.
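The monitoring practice described in this list, comparing actual against predicted financial performance to spot deviations, can be sketched as a simple variance report. The 10% tolerance, the budget categories, and all amounts below are illustrative assumptions rather than figures from the project.

```python
def variance_report(planned: dict, actual: dict, tolerance: float = 0.10) -> list[dict]:
    """Compare actual spend with budget and flag overspend beyond the tolerance."""
    rows = []
    for item, budget in planned.items():
        if budget <= 0:
            raise ValueError(f"budget for {item} must be positive")
        spent = actual.get(item, 0.0)
        variance = spent - budget
        rows.append(
            {
                "item": item,
                "budget": budget,
                "actual": spent,
                "variance": variance,
                "variance_pct": variance / budget,
                "overspent": variance / budget > tolerance,
            }
        )
    return rows


# Placeholder monthly figures for illustration only.
planned = {"llm_training": 20_000.0, "hosting": 5_000.0}
actual = {"llm_training": 26_000.0, "hosting": 4_800.0}

for row in variance_report(planned, actual):
    status = "REVIEW" if row["overspent"] else "ok"
    print(f"{row['item']:<14}{row['variance_pct']:+.0%}  {status}")
```

A flagged line, such as LLM training exceeding its budget, would then prompt either a search for a more cost-effective option or a budget adjustment.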

4.3.3. Managing Financial Risks—Identification and Mitigation Strategies

5. Discussion

5.1. Enhanced Capabilities of LLMs in SCM

5.2. Implications of LLM Integration

5.2.1. Theoretical Implications

5.2.2. Framework for Addressing SCM-Related Challenges Using LLMs

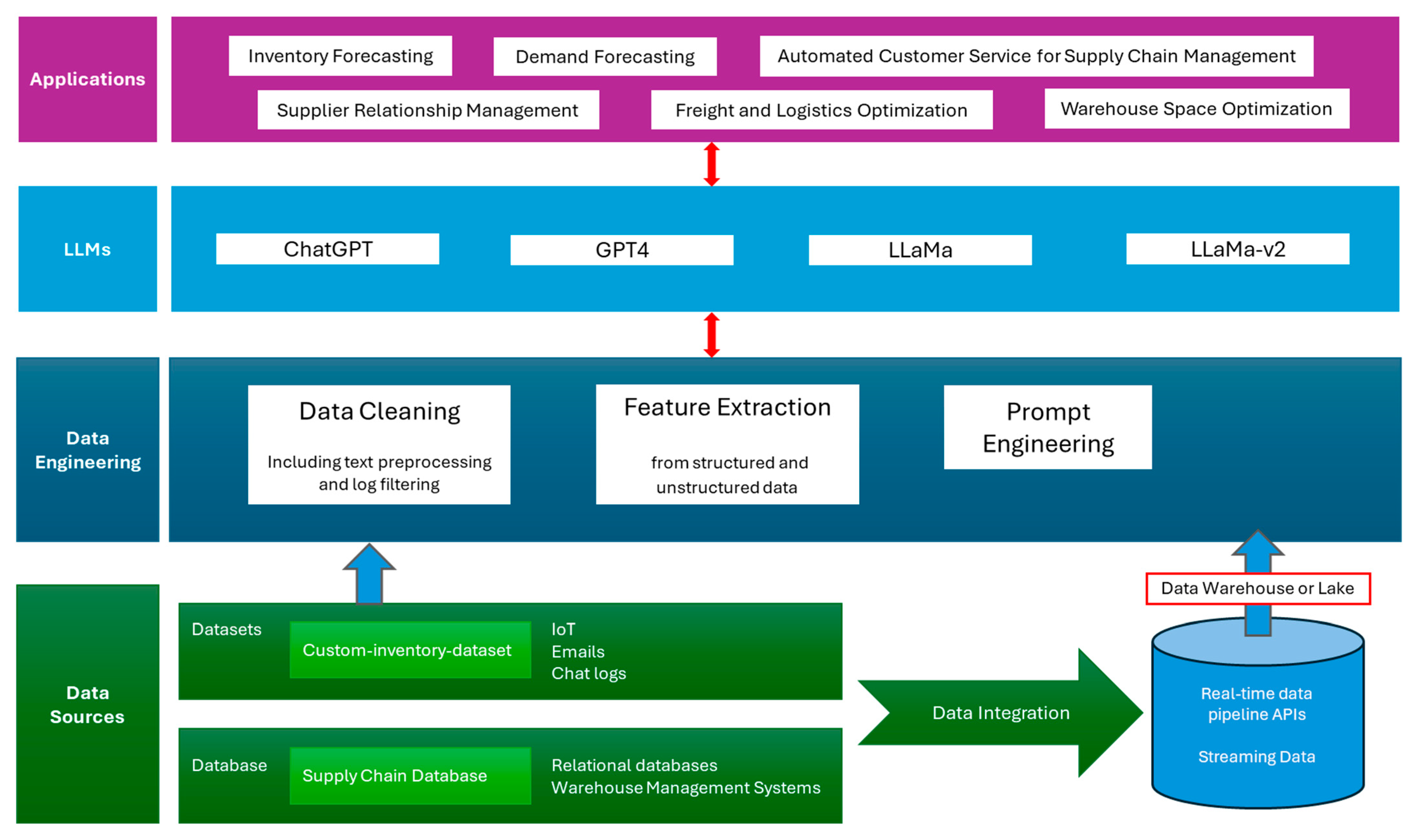

- Data Source Layer: Aggregates diverse sources like relational databases, warehouse management systems, IoT streams, and supplier communication platforms (e.g., emails and chat logs), ensuring real-time and comprehensive data access.

- Data Engineering Layer: Processes both structured and unstructured data through advanced cleaning, log parsing, and NLP techniques. Unstructured SCM data, such as IoT device logs and supplier emails, is pre-processed and transformed using appropriate pipelines. Feature extraction accommodates heterogeneous data formats, enabling refined insights for downstream tasks.

- LLM Layer: Fine-tunes LLMs for adaptability, ensuring accurate and relevant outputs aligned with evolving supply chain dynamics and contexts. The models are continuously improved using feedback loops, performance evaluation metrics (e.g., accuracy, response relevance, and latency), and retraining strategies to support ongoing learning and alignment with current supply chain data.

- Application Layer: Demonstrates practical applications of LLMs, including inventory forecasting, demand sensing, and supply chain risk management. Figure 11 shows how LLMs revolutionise various facets of supply chain management.
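The four layers of the framework can be read as stages of a pipeline in which each stage consumes the output of the previous one. The following sketch wires them together in miniature. Every function body is a deliberately simplified placeholder, the sample data are invented, and `stub_model` stands in for a fine-tuned LLM; it is meant only to show the direction of data flow, not the framework's implementation.

```python
from typing import Callable


def data_source_layer() -> dict:
    """Hypothetical sample of structured and unstructured inputs."""
    return {
        "inventory": [
            {"sku": "SKU-1", "on_hand": 4, "reorder_level": 10},
            {"sku": "SKU-2", "on_hand": 50, "reorder_level": 20},
        ],
        "supplier_emails": ["  Shipment for SKU-1   delayed by 5 days.  "],
    }


def data_engineering_layer(raw: dict) -> dict:
    """Clean text and extract simple features for downstream use."""
    low_stock = [r["sku"] for r in raw["inventory"] if r["on_hand"] < r["reorder_level"]]
    notes = [" ".join(text.split()) for text in raw["supplier_emails"]]  # collapse whitespace
    return {"low_stock": low_stock, "notes": notes}


def llm_layer(features: dict, model: Callable[[str], str]) -> str:
    """Build a prompt from the features and pass it to the model."""
    prompt = (
        f"Low-stock SKUs: {features['low_stock']}\n"
        f"Supplier notes: {features['notes']}\n"
        "Suggest replenishment actions."
    )
    return model(prompt)


def application_layer(features: dict, advice: str) -> dict:
    """Combine rule-based alerts with the model's advice for the end user."""
    return {"alerts": features["low_stock"], "advice": advice}


def stub_model(prompt: str) -> str:
    """Stand-in for a fine-tuned LLM; returns a fixed string."""
    return "Expedite replenishment for the listed SKUs."


features = data_engineering_layer(data_source_layer())
result = application_layer(features, llm_layer(features, stub_model))
print(result)
```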

5.2.3. Practical and Managerial Implications

5.3. Financial Feasibility

- Utilising semi-automated annotation tools, data augmentation techniques, and crowdsourcing can significantly reduce annotation expenses while maintaining data quality.

- Choosing between pay-per-token models and hosting proprietary models depends on projected usage patterns. For enterprises with high transaction volumes, investing in proprietary hosting may offer better long-term financial feasibility.

- A horizontal scaling architecture, combined with robust resource management practices, was identified as essential for containing infrastructure costs.

- Strategies to maintain the project’s financial attractiveness include regular financial performance reviews, customer feedback incorporation, and user-focused design principles. These align financial management with user satisfaction and product quality.

5.3.1. Pay Model

5.3.2. Risk Mitigation and Revenue Generation

- Flexible payment plans, including subscription and pay-per-use models, cater to diverse user needs and budgets.

- Trial periods and introductory discounts encourage initial adoption and enable users to experience the benefits of the solution before full commitment.

- Transparent pricing and billing build trust by clearly communicating cost structures and avoiding hidden charges.

- Autopay and convenient billing features streamline transactions for users, promoting recurring payments.

- Reward systems, such as loyalty points, incentivise continued engagement.

- Social proof and testimonials build credibility by showcasing positive feedback from existing users.

5.4. Socio-Technical Aspects of Using LLMs for Inventory Management and Automated Workflow

5.4.1. Social Aspects

- Organisational Structure: The adoption of LLMs for workflow automation necessitates a re-evaluation of organisational structures to accommodate new roles and responsibilities. In the context of our project, integrating LLM-based automation into Q and nodeStream© requires a shift towards more agile and flexible organisational structures. This might involve flattening traditional hierarchies within DPC to promote cross-functional collaboration among teams working on supply chain optimisation. Additionally, decision-making processes may become more decentralised, allowing frontline employees to leverage LLM capabilities effectively in their day-to-day operations.

- Stakeholder Engagement: Effective stakeholder engagement is crucial for the successful implementation of LLM-based workflow automation within DPC’s ecosystem. In our project, engaging stakeholders from various departments within DPC, as well as external partners such as retailers, suppliers, and delivery companies, ensures buy-in and fosters a culture of collaboration and innovation. Clear communication channels established through Q and nodeStream© facilitate feedback and input, enabling stakeholders to actively participate in the optimisation of supply chain workflows. By involving stakeholders in the design and implementation process, we can tailor LLM-based solutions to meet their specific needs and address any concerns proactively.

- Change Management: The introduction of LLM-based workflow automation represents a significant change for employees accustomed to traditional manual processes within DPC. To manage this transition effectively, change management strategies are essential. In our project, implementing training programmes and workshops on how to use LLMs within Q and nodeStream© equips employees with the necessary skills and knowledge to adapt to the new workflow automation tools. Ongoing support and guidance provided by the project team help address any resistance to change and promote acceptance of LLM-based solutions. Emphasising the benefits of LLMs, such as time savings, error reduction, and enhanced decision-making capabilities, encourages employees to embrace the new technology and leverage its potential to optimise supply chain operations.

5.4.2. Technical Aspects

- Integrating LLMs within existing systems such as Q and nodeStream© requires careful planning and coordination to ensure compatibility and seamless interoperability. APIs and data exchange protocols play a crucial role in facilitating communication between different components of the system, enabling data sharing and workflow execution.

- As LLMs handle sensitive data related to supply chain operations, ensuring data privacy and security is paramount. Robust encryption mechanisms, access controls, and authentication protocols must be implemented to safeguard against unauthorised access, data breaches, and cyber threats. Compliance with regulatory requirements such as GDPR and CCPA is essential to maintain trust and credibility with stakeholders [40].

- The algorithms powering LLMs must be transparent and accountable to mitigate the risk of bias, discrimination, and unintended consequences. Transparency measures such as model documentation, explainability techniques, and audit trails enable stakeholders to understand how decisions are made and detect potential biases or errors. Accountability frameworks, including mechanisms for oversight, recourse, and redress, hold stakeholders accountable for the outcomes of LLM-based workflows [41].

5.4.3. Integration of LLM-Based Workflow Automation in the “Q” and nodeStream©

- Flattened Hierarchies: LLM-based workflow automation promotes a more decentralised approach to decision-making, enabling employees at all levels to contribute to the design and execution of workflows [42]. Flattened hierarchies empower frontline workers to innovate and adapt workflows to meet specific operational needs, fostering a culture of continuous improvement and agility.

- Cross-Functional Collaboration: LLM-based workflow automation breaks down silos between departments and functions, facilitating cross-functional collaboration and knowledge sharing. By providing a common platform for workflow design and execution, nodeStream© fosters collaboration among stakeholders across the supply chain and enables real-time data exchange.

- Agile Work Practices: The agility afforded by LLM-based workflow automation enables organisations to respond rapidly to changing market conditions, customer demands, and supply chain disruptions. Agile work practices, such as iterative development, rapid prototyping, and continuous improvement, allow organisations to experiment with new workflows, iterate based on feedback, and adapt to evolving business needs [43].

- Scalability and Performance: The scalability and performance of the system are critical factors to consider when integrating LLM-based workflow automation. As the volume of data and the complexity of workflows increase, the system must be able to scale horizontally to handle additional load and maintain responsiveness. Performance optimisation techniques, such as caching, parallel processing, and distributed computing, can help ensure that the system meets the demands of a growing user base and workload.

- Data Integration and Interoperability: Effective data integration and interoperability are essential for seamless communication and collaboration between different components of the system. APIs, data standards, and data exchange protocols play a crucial role in facilitating data sharing and interoperability between Q, nodeStream©, and other systems within the supply chain ecosystem. Ensuring compatibility and consistency of data formats and schemas is essential for avoiding data silos and enabling cross-system workflows.

- Model Training and Tuning: The performance and accuracy of LLMs depend on the quality of training data and the tuning of model parameters. Organisations must invest resources in curating high-quality training datasets that accurately reflect the domain-specific nuances of supply chain operations. Additionally, ongoing monitoring and tuning of LLMs are necessary to adapt to changing business requirements, evolving user needs, and shifts in market dynamics.

- Algorithmic Fairness and Bias Mitigation: Addressing algorithmic fairness and bias mitigation is crucial to ensure equitable outcomes and avoid unintended consequences. Organisations must implement measures to detect and mitigate bias in LLM-based decision-making processes, such as bias audits, fairness-aware training, and algorithmic transparency. Ethical considerations, including privacy preservation and consent management, are also paramount to uphold user trust and integrity.

- Human–Machine Interaction: The interaction between humans and machines in LLM-based workflow automation systems is characterised by collaboration, coordination, and communication. Human operators rely on LLMs to assist them in decision-making, automate repetitive tasks, and augment their cognitive abilities. Effective human–machine interaction requires intuitive user interfaces, natural language processing capabilities, and feedback mechanisms to support seamless communication and collaboration [46].

- Organisational Learning and Adaptation: The adoption of LLM-based workflow automation fosters organisational learning and adaptation by enabling rapid experimentation, iterative improvement, and knowledge sharing. Organisations can leverage insights from LLM-generated workflows to identify patterns, optimise processes, and drive innovation. Learning organisations embrace a culture of continuous improvement, where employees are empowered to experiment with new ideas, learn from failures, and iterate based on feedback.

- Socio-Cultural Impact: The socio-cultural impact of LLM-based workflow automation extends to organisational culture, employee behaviour, and societal norms. Organisations must consider the socio-cultural context in which LLMs are deployed, recognising the potential implications for job roles, career paths, and work–life balance. Embracing diversity, equity, and inclusion principles is essential for fostering a supportive and inclusive workplace culture that values the contributions of all employees.

- Ethical and Legal Considerations: Ethical and legal considerations are paramount in the design, development, and deployment of LLM-based workflow automation systems [47]. Organisations must adhere to ethical principles, such as transparency, accountability, and fairness, to ensure responsible AI use. Legal compliance with regulations, such as data protection laws, intellectual property rights, and anti-discrimination statutes, is essential for mitigating legal risks and safeguarding against potential liabilities.
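Of the performance optimisation techniques mentioned under Scalability and Performance, caching is the simplest to illustrate. The sketch below caches answers to repeated prompts so that the model is called only once per distinct normalised question. The `expensive_model_call` function is a stub standing in for a real LLM request, and the normalisation rule (lower-casing and collapsing whitespace) is an assumption for this example.

```python
from functools import lru_cache

call_count = 0  # counts how often the (stubbed) model is actually invoked


def expensive_model_call(prompt: str) -> str:
    """Stub standing in for a slow, billable LLM request."""
    global call_count
    call_count += 1
    return f"answer for: {prompt}"


@lru_cache(maxsize=1024)
def cached_answer(normalised_prompt: str) -> str:
    return expensive_model_call(normalised_prompt)


def answer(prompt: str) -> str:
    # Normalise so that trivially different phrasings share one cache entry.
    return cached_answer(" ".join(prompt.lower().split()))


first = answer("What is the lead time for SKU-1?")
second = answer("  what is the LEAD time for sku-1? ")
print(first == second, call_count, cached_answer.cache_info())
```

Caching of this kind suits questions whose answers change slowly; queries over live stock levels or order statuses would need a short expiry or no caching at all.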

5.4.4. Actionable Workforce Training and Ethical Governance Strategies Informed by DPC Experience

- Workforce Training and Upskilling Strategies: The transition to LLM-assisted workflows at DPC revealed several skill and adoption gaps, particularly in prompt design, interpretation of model outputs, and integration into legacy operations. Based on these insights, we propose the following phased training approach:

  - Foundational AI Literacy (All Staff): Short, domain-relevant sessions introducing core LLM concepts, risks (e.g., hallucination), and appropriate use cases. These were essential to demystify the technology and promote user acceptance.

  - Role-Specific Prompting Workshops (Operations/Tech Leads): Practical training in designing effective prompts, evaluating model outputs, and using Q/nodeStream© with LLMs. DPC staff benefited from hands-on experimentation during early prototypes.

  - Cross-Functional Collaboration Labs (Managers, Logistics, IT): Facilitated sessions where different departments co-designed automated workflows, discussed constraints, and identified edge cases. These labs helped translate tacit domain knowledge into usable prompt frameworks.

- Ethical AI Governance Measures: While DPC’s deployment remained exploratory, governance gaps quickly emerged around data sensitivity, output accountability, and system auditing. To address these, we propose the following initial governance scaffolding:

- ○

- AI Use Policy and Access Controls: Defining appropriate use cases for LLMs, who can run workflows, and how sensitive data (e.g., customer info, pricing) is handled. DPC implemented role-based access in nodeStream© to restrict certain operations.

- ○

- Human-in-the-Loop Verification Points: Embedding human checkpoints before critical actions, e.g., validating auto-generated delivery manifests before submission. This strategy balanced efficiency with control and auditability.

- ○

- Ethical Review Board or Steering Group: As workflows scale, DPC would benefit from a small multi-role advisory group to periodically review LLM outputs, assess drift or bias, and recommend updates to training data or prompting strategies.
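
The access-control and human-in-the-loop measures above can be sketched as a simple gate placed in front of a critical action. The following is a minimal illustration only, not the mechanism used in nodeStream©: the role names, the `ROLE_PERMISSIONS` mapping, the `Manifest` class, and the `submit_manifest` function are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical role-to-action mapping (assumption, not taken from the DPC deployment).
ROLE_PERMISSIONS = {
    "operations_lead": {"generate_manifest", "submit_manifest"},
    "analyst": {"generate_manifest"},
}


@dataclass
class Manifest:
    manifest_id: str
    content: str                       # e.g., LLM-generated manifest text
    approved_by: Optional[str] = None  # filled in once a human has signed off


def can_run(role: str, action: str) -> bool:
    """Role-based access check: is this role allowed to perform the action?"""
    return action in ROLE_PERMISSIONS.get(role, set())


def submit_manifest(
    manifest: Manifest,
    role: str,
    reviewer: str,
    review: Callable[[Manifest], bool],
) -> str:
    """Submit a manifest only if the role is permitted and a human approves it."""
    if not can_run(role, "submit_manifest"):
        return "denied"      # access control blocks the operation
    if not review(manifest):
        return "rejected"    # human checkpoint blocks the submission
    manifest.approved_by = reviewer  # record who approved, for auditability
    return "submitted"


draft = Manifest("M-001", "auto-generated delivery manifest")
print(submit_manifest(draft, "analyst", "alice", lambda m: True))
print(submit_manifest(draft, "operations_lead", "alice", lambda m: True))
```

In this sketch the `review` callable stands in for whatever approval step an organisation uses (a UI prompt, a ticket, a sign-off form); recording `approved_by` is one simple way to keep an audit trail.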

5.5. Strategic Market Landscape and Positioning

- Understanding Market Dynamics: Through industry reports and expert insights, we examine market size, growth trajectories, and major players in the SCM industry.

- Identifying Emerging Trends: The analysis highlights trends such as increased AI adoption, sustainability practices, and demand for tailored solutions, guiding our research objectives.

- Assessing Market Demand: Surveys and interviews with industry stakeholders inform our understanding of the demand for AI-enabled workflows and of pain points in SCM.

- Analysing Competitive Landscape: We study competitors’ strategies, product offerings, and customer feedback to identify strengths and weaknesses relative to our solution.

- Evaluating Market Readiness: Factors like technological infrastructure and regulatory landscape are evaluated to assess the market’s readiness for AI-driven solutions.

- Forecasting Future Trends: Insights from the analysis help forecast future market shifts and technological advancements, guiding strategic research positioning.

- Manufacturing Powerhouses: Large-scale manufacturers with intricate supply chains.

- Logistics and Transportation Enterprises: Companies engaged in transportation, warehousing, and distribution.

- E-commerce Enclaves: E-commerce platforms with complex supply chain needs.

- Retail Prowess: Extensive retail chains managing diverse product portfolios.

- Pharmaceutical Guardians: Industries governed by stringent regulatory frameworks.

- Tech and Electronics Manufacturers: Companies with rapid product cycles and global supply chains.

- Food and Beverage Stewards: Companies dealing with perishable goods.

- Automotive Visionaries: The automotive industry with complex assembly processes.

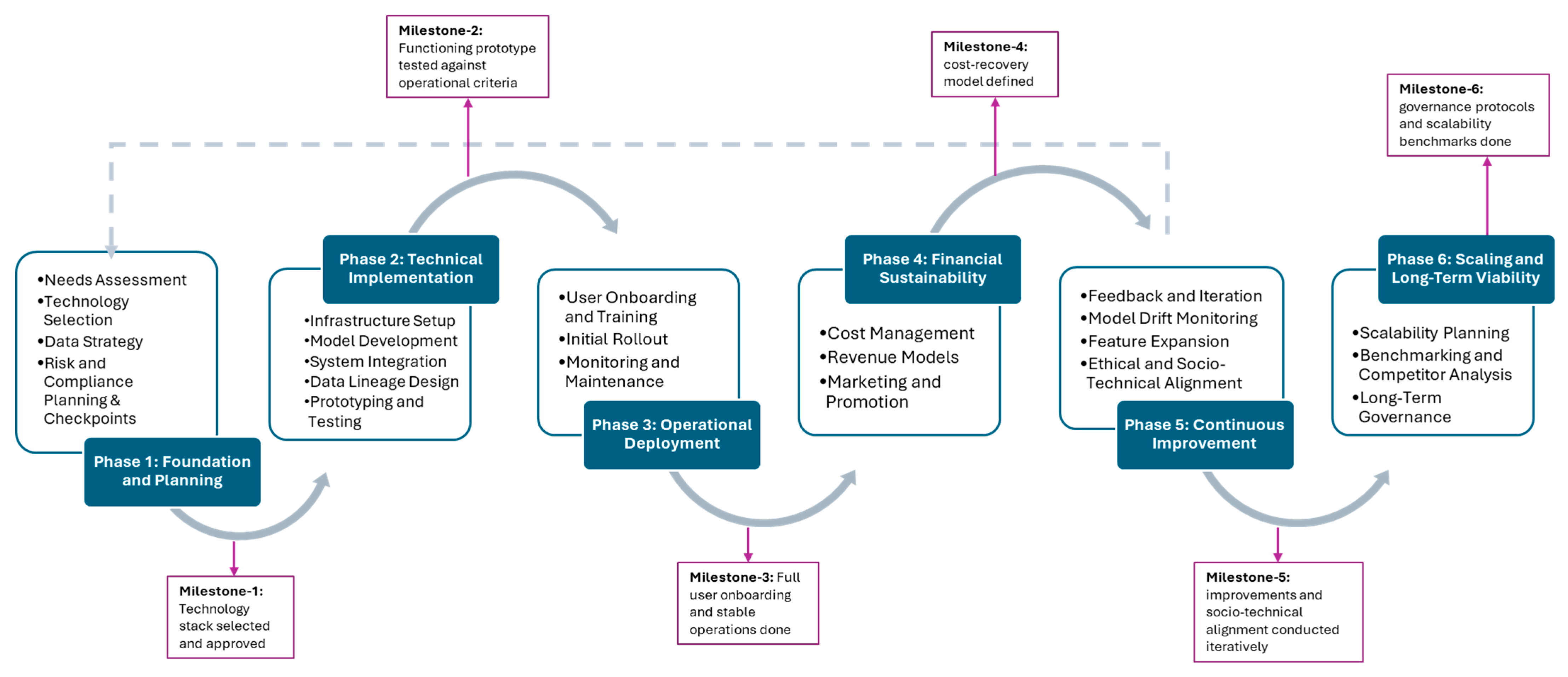

5.6. Roadmap for Implementing an LLM-Powered Workflow Editor

6. Conclusions

6.1. Research Contributions

- Prototype Development: The research presents the development of innovative prototypes, such as the Q inventory management system and the nodeStream© workflow editor, which leverage LLM technology to automate and optimise supply chain workflows. These prototypes not only demonstrate the feasibility of using LLMs to streamline complex processes but also set a precedent for how AI can reshape operational efficiencies and enhance organisational competitiveness.

- Integration of Cutting-Edge Technologies: By integrating cutting-edge technologies, including chatbots and LLMs, this research offers a multifaceted solution to address various aspects of SCM. The seamless integration of these technologies fosters enhanced communication, collaboration, and decision-making capabilities within supply chain workflows, providing a robust framework for organisations to navigate the complexities of modern SCM.

- Financial Feasibility Analysis: The research assesses the financial feasibility of implementing LLM-based SCM solutions. It covers data-related cost factors, per-token pricing across LLM providers, and an estimated operating cost of roughly $70–$100 per month for a manifest-automation deployment.

- Socio-Technical Implications: The paper explores the socio-technical implications of integrating LLM-based workflow automation within supply chain operations. By considering both technical and social factors, the analysis highlights the organisational impacts, human–machine interactions, and ethical considerations associated with deploying LLMs in real-world environments.

- Development of a Roadmap for Implementation: As a pivotal output of this research, a detailed roadmap has been developed to guide organisations through the implementation of an LLM-powered workflow editor. This roadmap offers a structured and actionable framework for organisations, addressing technical, financial, operational, and socio-technical challenges across distinct phases of implementation. It bridges the gap between theoretical feasibility and practical execution, ensuring that organisations can navigate the complexities of AI integration with confidence and clarity.

6.2. Recommendations for Further Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Mäenpää, M. Using artificial intelligence for data-driven supply chain management: A global ICT company case study. LaturiOuluFi 2024. Available online: https://oulurepo.oulu.fi/handle/10024/48231 (accessed on 5 July 2025).

- Yandrapalli, V. Revolutionizing Supply Chains Using Power of Generative AI. Int. J. Res. Publ. Rev. 2023, 4, 1556–1562.

- Zhu, B.; Vuppalapati, C. Enhancing Supply Chain Efficiency Through Retrieve-Augmented Generation Approach in Large Language Models. In Proceedings of the 2024 IEEE 10th International Conference on Big Data Computing Service and Applications (BigDataService), Shanghai, China, 15–18 July 2024; pp. 117–121.

- Fosso Wamba, S.; Guthrie, C.; Queiroz, M.M.; Minner, S. ChatGPT and generative artificial intelligence: An exploratory study of key benefits and challenges in operations and supply chain management. Int. J. Prod. Res. 2024, 62, 5676–5696.

- Schneider, J.; Abraham, R.; Meske, C.; Vom Brocke, J. Artificial Intelligence Governance For Businesses. Inf. Syst. Manag. 2023, 40, 229–249.

- Li, B.; Mellou, K.; Zhang, B.; Pathuri, J.; Menache, I. Large Language Models for Supply Chain Optimization. arXiv 2023, arXiv:2307.03875.

- Dubey, R.; Gunasekaran, A.; Papadopoulos, T. Benchmarking operations and supply chain management practices using Generative AI: Towards a theoretical framework. Transp. Res. Part E Logist. Transp. Rev. 2024, 189, 103689.

- Shekhar, A.; Prabhat, P.; Umar, D.; Abdul, F.; Dibaba Wakjira, W. Generative AI in Supply Chain Management. Int. J. Recent Innov. Trends Comput. Commun. 2023, 11, 4179–4185.

- Croxton, K.L.; García-Dastugue, S.J.; Lambert, D.M.; Rogers, D.S. The Supply Chain Management Processes. Int. J. Logist. Manag. 2001, 12, 13–36.

- Hammer, M.; Champy, J. Reengineering the Corporation: A Manifesto for Business Revolution; Harper Business: New York, NY, USA, 1993.

- Hugos, M.H. Essentials of Supply Chain Management; John Wiley & Sons: Hoboken, NJ, USA, 2018.

- Papadopoulos, T.; Gunasekaran, A.; Dubey, R.; Fosso, W.S. Big data and analytics in operations and supply chain management: Managerial aspects and practical challenges. Prod. Plan. Control. 2017, 28, 873–876.

- York, N.; Chopra, S. Supply Chain Management: Strategy, Planning, and Operation. 2019. Available online: https://search.catalog.loc.gov/instances/6d7c68c4-ea9c-5199-8653-3a64c10fe746?option=lccn&query=2017035661 (accessed on 13 August 2025).

- Ageron, B.; Bentahar, O.; Gunasekaran, A. Digital supply chain: Challenges and future directions. Supply Chain. Forum Int. J. 2020, 21, 133–138.

- Wu, H.; Li, G.; Zheng, H. How Does Digital Intelligence Technology Enhance Supply Chain Resilience? Sustainable Framework and Agenda. Ann. Oper. Res. 2024, 1–23.

- Tiwari, S.; Wee, H.M.; Daryanto, Y. Big data analytics in supply chain management between 2010 and 2016: Insights to industries. Comput. Ind. Eng. 2018, 115, 319–330.

- Papert, M.; Rimpler, P.; Pflaum, A. Enhancing supply chain visibility in a pharmaceutical supply chain: Solutions based on automatic identification technology. Int. J. Phys. Distrib. Logist. Manag. 2016, 46, 859–884.

- Prajogo, D.; Olhager, J. Supply chain integration and performance: The effects of long-term relationships, information technology and sharing, and logistics integration. Int. J. Prod. Econ. 2012, 135, 514–522.

- Soosay, C.A.; Hyland, P.W.; Ferrer, M. Supply chain collaboration: Capabilities for continuous innovation. Supply Chain. Manag. 2008, 13, 160–169.

- Zhu, J.; Ding, X.; Ge, Y.; Ge, Y.; Zhao, S.; Zhao, H.; Wang, X.; Shan, Y. VL-GPT: A Generative Pre-trained Transformer for Vision and Language Understanding and Generation. arXiv 2023, arXiv:2312.09251.

- Richey, R.G.; Chowdhury, S.; Davis-Sramek, B.; Giannakis, M.; Dwivedi, Y.K. Artificial intelligence in logistics and supply chain management: A primer and roadmap for research. J. Bus. Logist. 2023, 44, 532–549.

- Wamba, F.S.; Queiroz, M.M.; Jabbour, C.J.; Shi, C.V. Are both generative AI and ChatGPT game changers for 21st-Century operations and supply chain excellence? Int. J. Prod. Econ. 2023, 265, 109015.

- Lee, K.; Cooper, A.F.; Grimmelmann, J.; Balkin, M.; Black, A.; Brundage, M. Talkin’ ’Bout AI Generation: Copyright and the Generative-AI Supply Chain. arXiv 2023, arXiv:2309.08133.

- Wang, S.; Zhao, Y.; Hou, X.; Wang, H. Large Language Model Supply Chain: A Research Agenda. ACM Trans. Softw. Eng. Methodol. 2025, 34, 1–46.

- Wamba, F.S.; Akter, S.; Edwards, A.; Chopin, G.; Gnanzou, D. How ‘big data’ can make big impact: Findings from a systematic review and a longitudinal case study. Int. J. Prod. Econ. 2015, 165, 234–246.

- Bleady, A.; Ali, A.; Ibrahim, S. Dynamic Capabilities Theory: Pinning Down a Shifting Concept. Acad. Account. Financ. Stud. J. 2018, 22, 1–6.

- Beske, P. Dynamic capabilities and sustainable supply chain management. Int. J. Phys. Distrib. Logist. Manag. 2012, 42, 372–387.

- Barney, J.; Wright, M.; Ketchen, D.J. The resource-based view of the firm: Ten years after 1991. J. Manag. 2001, 27, 625–641.

- Dubey, R.; Bryde, D.J.; Blome, C.; Roubaud, D.; Giannakis, M. Facilitating artificial intelligence powered supply chain analytics through alliance management during the pandemic crises in the B2B context. Ind. Mark. Manag. 2021, 96, 135.

- Jedbäck, W.; Rodrigues, V.; Jönsson, A. How a Socio-technical Systems Theory Perspective can help Conceptualize Value Co-Creation in Service Design: A case study on interdisciplinary methods for interpreting value in service systems. 2022. Available online: https://liu.diva-portal.org/smash/record.jsf?pid=diva2%3A1672571&dswid=4957 (accessed on 24 July 2025).

- Sony, M.; Naik, S. Industry 4.0 integration with socio-technical systems theory: A systematic review and proposed theoretical model. Technol. Soc. 2020, 61, 101248.

- Soares, A.L.; Gomes, J.; Zimmermann, R.; Rhodes, D.; Dorner, V. Integrating AI in Supply Chain Management: Using a Socio-Technical Chart to Navigate Unknown Transformations; Springer: Cham, Switzerland, 2024; pp. 22–35.

- Minshull, L.K.; Dehe, B.; Kotcharin, S. Exploring the impact of a sequential lean implementation within a micro-firm—A socio-technical perspective. J. Bus. Res. 2022, 151, 156–169.

- Sai, B.; Thanigaivelu, S.; Shivaani, N.; Shymala Babu, C.S.; Ramaa, A. Integration of Chatbots in the Procurement Stage of a Supply Chain. In Proceedings of the 2022 6th IEEE International Conference on Computational System and Information Technology for Sustainable Solutions (CSITSS), Bangalore, India, 21–23 December 2022.

- Wang, Y.; Ma, S.; Wang, J.; Wang, H.; Pu, W. Machine manufacturing process improvement and optimisation. Highlights Sci. Eng. Technol. 2023, 62.

- Han, Y.; Ding, Z.; Liu, Y.; He, B.; Tresp, V. Critical Path Identification in Supply Chain Knowledge Graphs with Large Language Models. Int. J. Prod. Econ. 2024, 265, 109015.

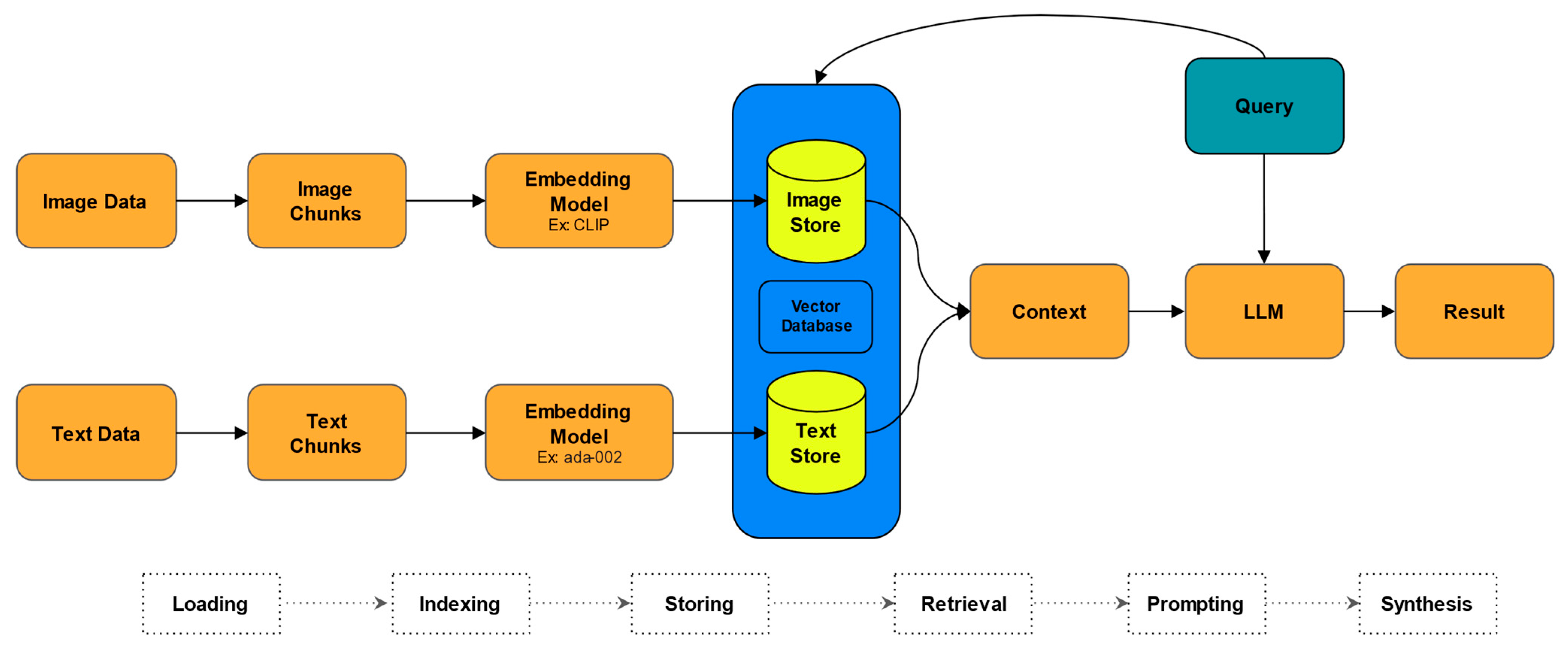

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. 2023. Available online: https://simg.baai.ac.cn/paperfile/25a43194-c74c-4cd3-b60f-0a1f27f8b8af.pdf (accessed on 31 June 2025).

- Databricks. What is Retrieval Augmented Generation (RAG)? 2024. Available online: https://www.databricks.com/glossary/retrieval-augmented-generation-rag (accessed on 25 March 2024).

- Storey, J.; Emberson, C.; Godsell, J.; Harrison, A. Supply chain management: Theory, practice and future challenges. Int. J. Oper. Prod. Manag. 2006, 26, 754–774.

- Ponde, S.; Kulkarni, A.; Agarwal, R. AI/ML Based Sensitive Data Discovery and Classification of Unstructured Data Sources. In Proceedings of the International Conference on Intelligent Systems and Machine Learning, Hyderabad, India, 16–17 December 2022; pp. 367–377.

- Cheong, I.; Caliskan, A.; Kohno, T. Envisioning Legal Mitigations for Intentional and Unintentional Harms Associated with Large Language Models (Extended Abstract). 2023. Available online: https://blog.genlaw.org/CameraReady/32.pdf (accessed on 31 January 2025).

- Zhang, B.; Mao, H.; Ruan, J.; Wen, Y.; Li, Y.; Zhang, S.; Xu, Z.; Li, D.; Li, Z.; Zhao, R.; et al. Controlling Large Language Model-based Agents for Large-Scale Decision-Making: An Actor-Critic Approach. arXiv 2023, arXiv:2311.13884.

- Durach, C.F.; Gutierrez, L. “Hello, this is your AI co-pilot”—Operational implications of artificial intelligence chatbots. Int. J. Phys. Distrib. Logist. Manag. 2024, 54, 229–246.

- Liao, Q.V.; Xiao, Z. Rethinking Model Evaluation as Narrowing the Socio-Technical Gap. arXiv 2023, arXiv:2306.03100.

- Srivastava, A. A Rapid Scoping Review and Conceptual Analysis of the Educational Metaverse in the Global South: Socio-Technical Perspectives. arXiv 2023, arXiv:2401.00338.

- Sharma, A.; Rao, S.; Brockett, C.; Malhotra, A.; Jojic, N.; Dolan, B. Investigating Agency of LLMs in Human-AI Collaboration Tasks. Assoc. Comput. Linguist. 2024, 1, 1968–1987.

- Liyanage, U.P.; Ranaweera, N.D. Ethical Considerations and Potential Risks in the Deployment of Large Language Models in Diverse Societal Contexts. J. Comput. Soc. Dyn. 2023, 8, 15–25.

- Grand View Research. Supply Chain Management Market Size & Share Report 2030. 2023. Available online: https://www.grandviewresearch.com/industry-analysis/supply-chain-management-market-report (accessed on 30 January 2025).

| Research Question (RQ) | Objective(s) Addressed | Method(s)/Data Used | Section(s) |
|---|---|---|---|
| RQ1: Is it feasible to employ LLMs in generating SCM-related processes, dynamically adapting to the ever-changing demands of the supply chain landscape? | 1, 2, 5 | | |
| RQ2: Is the integration financially feasible, and what are the technical, operational and socio-technical implications for the overall supply chain operations to be considered? | 3, 4, 5 | | |

| Challenge | Description | Key Solutions |
|---|---|---|
| Integration of Technologies | Integrating evolving technologies like IoT, AI, blockchain, and data analytics into existing workflows while ensuring compatibility, secure information sharing, and coordination of physical logistics processes. | Harmonise technology with logistics operations, ensure data standardisation, address skill gaps, and implement robust infrastructure. |
| Data Management and Analysis | Managing and analysing diverse, high-volume data from multiple sources to generate meaningful insights, ensure data quality, and align skill sets with analytical needs. | Use sophisticated tools for handling diverse data, improving data lifecycle quality, and integrating disparate datasets for unified analysis and decision-making. |
| Agile and Responsive Operations | Adapting to volatile market conditions and disruptions while maintaining operational efficiency. | Establish agile workflows with real-time data analysis, allowing rapid adjustments and informed decision-making to meet dynamic demands effectively. |
| Supply Chain Visibility | Achieving real-time, end-to-end visibility across the supply chain despite data silos and fragmented systems, which impede decision-making and forecasting accuracy. | Integrate disparate systems, break down silos, use advanced analytics for real-time insights, and optimise workflows to enhance transparency and responsiveness. |
| Collaboration and Partnerships | Ensuring transparent communication and coordination among diverse global stakeholders while building trust and accountability. | Standardise processes, integrate systems, advance transparency, and establish robust communication channels to nurture stronger relationships and collaboration. |

| Research Focus | Key Insights | Limitations/Gaps | References |
|---|---|---|---|
| Conceptual Frameworks | Development of theoretical models, such as the inputs-process-outputs framework and theoretical toolboxes for managers. | Mostly theoretical; limited empirical validation of these models in real-world supply chains. | [7,8] |
| Optimisation and Decision-Making | AI enhances decision-making by generating insights and improving forecasting accuracy. | Studies often focus on isolated applications rather than an integrated system-wide approach. | [1,7] |
| Predictive Analytics | AI can improve demand forecasting, reduce overstocking, and optimise inventory management. | Requires high-quality data; model robustness in dynamic environments is a concern. | [1] |
| Automation | GenAI can automate repetitive operational and managerial tasks. | Potential scalability issues and lack of empirical validation in complex supply chains. | [4] |
| Risk Management | AI can pre-empt disruptions by analysing real-time and historical data. | Studies lack comprehensive guidelines on operationalising these insights. | [4] |
| Sustainability and Procurement | AI helps streamline logistics, analyse supply chain patterns, and optimise supplier portfolios. | Ethical concerns and biases in AI-driven decision-making remain underexplored. | [2,25] |
| Legal and Ethical Considerations | AI development and deployment introduce new legal risks, including intellectual property concerns. | Practical compliance strategies are underdeveloped. | [23] |
| LLM Applications | LLMs facilitate human-AI interaction, automate documentation, and enhance reasoning in supply chain decisions. | Technical challenges in large-scale implementation, including hallucinations and a lack of source attribution. | [3,6] |
| Retrieval-Augmented Generation (RAG) | RAG enhances LLM performance by integrating external knowledge to improve accuracy. | Requires balancing computational costs, security, and data privacy. | [3] |
| Challenges and Future Directions | Data integration, security, scalability, and AI model reliability remain critical issues. | Need for interdisciplinary research integrating technical, ethical, and managerial perspectives. | [1,4,7] |

| Criteria | Requirement |
|---|---|
| Source Type | Journal articles, conference papers, and technical reports |
| Publication Date | No older than 2015 |
| Subject Areas | Computer science, information technology, smart city topics |
| Language | English |
| Accessibility | Papers accessible via institutional contracts |

| Specifications | Information |
|---|---|
| Input | |
| Process | |
| Output | |
| Functionality | |

| Cost Factor | Description | Optimisation Strategy |
|---|---|---|
| Data Volume | The quantity of data needed for training and validation | Use smaller, high-quality datasets with augmentation |
| Data Annotation | Cost of manual annotation processes | Employ semi-automated tools or crowdsourcing methods |
| Data Licensing Fees | Licensing third-party datasets for commercial use | Negotiate bulk licensing or partnerships |
| Data Privacy Compliance | Costs related to anonymisation and regulatory adherence | Adopt robust anonymisation tools and practices |

| Provider | LLM | Context Length | Input/1K Tokens | Output/1K Tokens | Cost per Call/Request | Total Price (USD) |
|---|---|---|---|---|---|---|
| Chat Models | | | | | | |
| OpenAI | GPT-3.5 Turbo | 4K | $0.0015 | $0.002 | $0.0350 | $3.50 |
| OpenAI | GPT-3.5 Turbo | 16K | $0.003 | $0.004 | $0.0700 | $7.00 |
| OpenAI | GPT-4 | 8K | $0.03 | $0.06 | $0.9000 | $90.00 |
| OpenAI | GPT-4 | 32K | $0.06 | $0.12 | $1.8000 | $180.00 |
| Anthropic | Claude Instant | 100K | $0.00163 | $0.00551 | $0.0714 | $7.14 |
| Anthropic | Claude 2 | 100K | $0.01102 | $0.03268 | $0.4370 | $43.70 |
| Meta | Llama 2 70b | 4K | $0.001 | $0.001 | $0.0200 | $2.00 |
| Google | PaLM 2 | 8K | $0.002 | $0.002 | $0.0400 | $4.00 |
| Cohere | Command | 4K | $0.015 | $0.015 | $0.3000 | $30.00 |
| Fine-Tuning Models | | | | | | |
| OpenAI | GPT-3.5 Turbo | 4K | $0.012 | $0.016 | $0.2800 | $28.00 |
| Google | PaLM 2 | 8K | $0.002 | $0.002 | $0.0400 | $28.00 |
| Embedding Models | | | | | | |
| OpenAI | Ada v2 | — | $0.0001 | — | $0.0010 | $0.10 |
| Google | PaLM 2 Embed | — | $0.0004 | — | $0.0040 | $0.40 |
| Cohere | Embed | — | $0.0004 | — | $0.0040 | $0.40 |
| Audio Models | | | | | | |
| OpenAI | Whisper | — | — | — | $0.006/min | — |
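
The per-call and total columns for the chat models in the pricing table appear consistent with a fixed workload of 10,000 input tokens and 10,000 output tokens per call, priced over 100 calls. That workload is inferred from the figures rather than stated in the source, so the sketch below treats it as an assumption; the function names are hypothetical.

```python
# Assumed workload (inferred from the table, not stated in the source).
TOKENS_IN_PER_CALL = 10_000
TOKENS_OUT_PER_CALL = 10_000
CALLS = 100


def per_call_cost(input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost in USD of one call under the assumed token workload."""
    return (
        input_price_per_1k * TOKENS_IN_PER_CALL
        + output_price_per_1k * TOKENS_OUT_PER_CALL
    ) / 1000


def total_cost(
    input_price_per_1k: float, output_price_per_1k: float, calls: int = CALLS
) -> float:
    """Cost in USD of `calls` identical calls."""
    return per_call_cost(input_price_per_1k, output_price_per_1k) * calls


# Prices per 1K tokens copied from two chat-model rows of the table.
rows = {
    "GPT-3.5 Turbo (4K)": (0.0015, 0.002),
    "Claude 2 (100K)": (0.01102, 0.03268),
}

for name, (price_in, price_out) in rows.items():
    print(
        f"{name}: per call ${per_call_cost(price_in, price_out):.4f}, "
        f"{CALLS} calls ${total_cost(price_in, price_out):.2f}"
    )
```

Under these assumptions the two rows reproduce the tabulated values ($0.0350 and $3.50; $0.4370 and $43.70). Changing the token counts or the number of calls shows how sensitive the comparison is to the workload.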

| Deployment Component | LLM Type | Usage Volume | Token Estimate | Monthly Token Total | Estimated Monthly Cost (USD) |
|---|---|---|---|---|---|
| Manifest generation and validation | Chat (GPT-4) | 50 manifests/day | 2000 input + 1500 output = 3500 | 3500 × 30 = 105,000 tokens/day; × 30 days = 3.15 M | ~$63–95 (GPT-3.5 to GPT-4) |
| Partner-specific format transformation | Chat (GPT-4) | Integrated with above | Reuses prompt context | Included above | Included above |
| Constraint validation and reasoning | Chat (GPT-4) | Integrated with above | Reuses prompt context | Included above | Included above |
| Embedding of historical manifests (initial) | Embedding (OpenAI) | 5000 manifests (one-off) | 500 tokens × 5000 = 2.5 M tokens | One-time cost | ~$5 (OpenAI embedding) |
| Daily manifest embedding (ongoing) | Embedding (OpenAI) | 50 manifests/day | 500 tokens × 50 = 25,000 tokens | 750,000 tokens/month | ~$1.50 |
| Semantic search (e.g., delivery constraint matching) | Embedding search | Optional, ad hoc | Negligible (cached vector queries) | <100,000 tokens/month | <$1 |
| Total deployment estimate | — | Operational scale (real-world) | | ~3.9–4 M tokens/month | ~$70–$100/month |
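
The monthly token total in the deployment table can be reproduced from its recurring rows. The sketch below is illustrative only: it takes the daily token figures exactly as stated in the table, assumes a 30-day month as the table does, and leaves out the one-off historical embedding; the constant and function names are hypothetical.

```python
DAYS_PER_MONTH = 30  # the table multiplies daily figures by 30

# Daily and monthly token figures as stated in the table.
CHAT_TOKENS_PER_DAY = 105_000           # manifest generation and validation
EMBED_TOKENS_PER_DAY = 25_000           # 500 tokens x 50 manifests
SEARCH_TOKENS_PER_MONTH_MAX = 100_000   # upper bound for ad hoc semantic search


def monthly_token_range() -> tuple:
    """Return (low, high) recurring tokens per month, excluding one-off costs."""
    chat = CHAT_TOKENS_PER_DAY * DAYS_PER_MONTH    # 3,150,000
    embed = EMBED_TOKENS_PER_DAY * DAYS_PER_MONTH  # 750,000
    low = chat + embed                             # no semantic search used
    high = low + SEARCH_TOKENS_PER_MONTH_MAX       # search at its stated bound
    return low, high


low, high = monthly_token_range()
print(f"Recurring tokens per month: {low:,} to {high:,}")
```

The result, 3,900,000 to 4,000,000 tokens, matches the "~3.9–4 M tokens/month" total row.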

| Challenge | LLM Solution |
|---|---|
| Supply Chain Connection | Assist in developing a secure data-sharing mechanism for retailers, suppliers, and delivery firms. |
| Delivery Manifest Customisation | Analyse commonalities across custom workflows and suggest a unified or modular approach. |
| Manual Work in Container Shipment | Contribute to automating manual work related to container shipment and status updates. |
| Generalised Workflow Framework | Aid in developing Data-Driven Workflows to automate and adapt workflows based on identified needs. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Javidroozi, V.; Tawil, A.-R.; Azad, R.M.A.; Bishop, B.; Elmitwally, N.S. AI-Enabled Customised Workflows for Smarter Supply Chain Optimisation: A Feasibility Study. Appl. Sci. 2025, 15, 9402. https://doi.org/10.3390/app15179402
