1. Introduction

With the advancement of information technology and the transformation of software engineering practices, software development models have evolved from early customization toward standardization, microservice architectures, and low-code/no-code platforms. This evolution has improved the speed and efficiency of software development and given rise to the open-source software movement and a collaborative development culture. With its powerful resource-sharing and innovation capabilities, the open-source model has become the dominant model for software development worldwide [1]. According to statistics from the 2023 China Open-source Blue Book [2], the number of Chinese developers on GitHub has grown by 48%, ranking second in number. The paradigm of collective open-source creation, deeply integrated with many enterprise-level software production technologies, has unleashed substantial productivity and given birth to many open-source development ecosystems [3]. With the widespread adoption of collaborative development paradigms and the increasing complexity of software systems, ensuring software quality has become an increasingly critical challenge. Modern software projects are often characterized by large codebases, high module coupling, and rapid version evolution, all of which increase the likelihood of various types of defects. Modular development, while improving scalability, may also introduce issues related to code consistency and version control, further exacerbating concerns about code quality and security. According to a 2022 report by Qi An Xin, a security assessment of 2098 open-source software projects comprising 170 million lines of code revealed approximately 3 million security-related defects, including 220,000 high-risk vulnerabilities, indicating a generally high density of both total and severe defects [4].

In contemporary software development, from large-scale enterprise-level closed-source systems to small agile teams, rapid iteration practices such as Continuous Integration (CI), Agile Development, and DevOps have become mainstream [5,6]. These practices require developers to cope with frequent code commits, multi-person collaboration, and heterogeneous language environments, which makes it easy for defects to be inadvertently introduced and accumulated, ultimately resulting in costly fixes. Furthermore, in fast-paced delivery settings, manual code review faces challenges such as high labor costs and limited coverage [6,7]. As a result, automated defect detection has become a crucial means of ensuring code quality and development efficiency. It not only helps identify potential issues early but also provides foundational support for downstream tasks such as code review, testing, and automated repair.

Code defect detection techniques are based on source code characterization, which captures the structural and semantic properties of the code by transforming the source code into an Abstract Syntax Tree (AST) or focusing on the fixed vocabulary units of the code [

8]. In recent years, many researchers have focused on code defect detection in open-source software, developing security coding guidelines, improving software testing, and refining methods for predicting code defects, all with the aim of enhancing software quality and security [

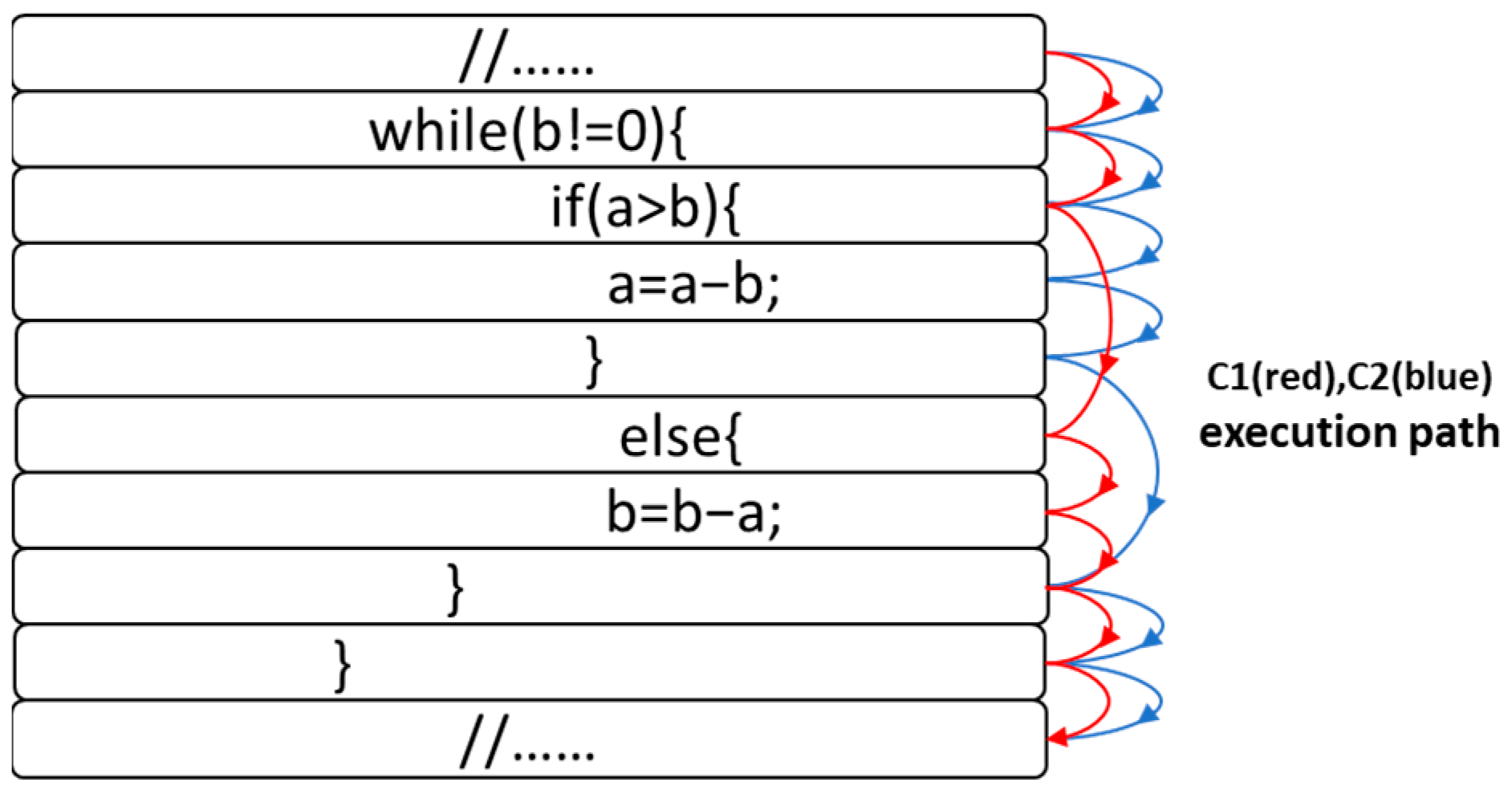

9]. Due to the openness of the development environment, software code presents features such as complex and diverse structure and high-dimensional and rich semantics, which are difficult to adapt to by traditional defect detection methods. When extracting semantic features of the source code, such as the code example in

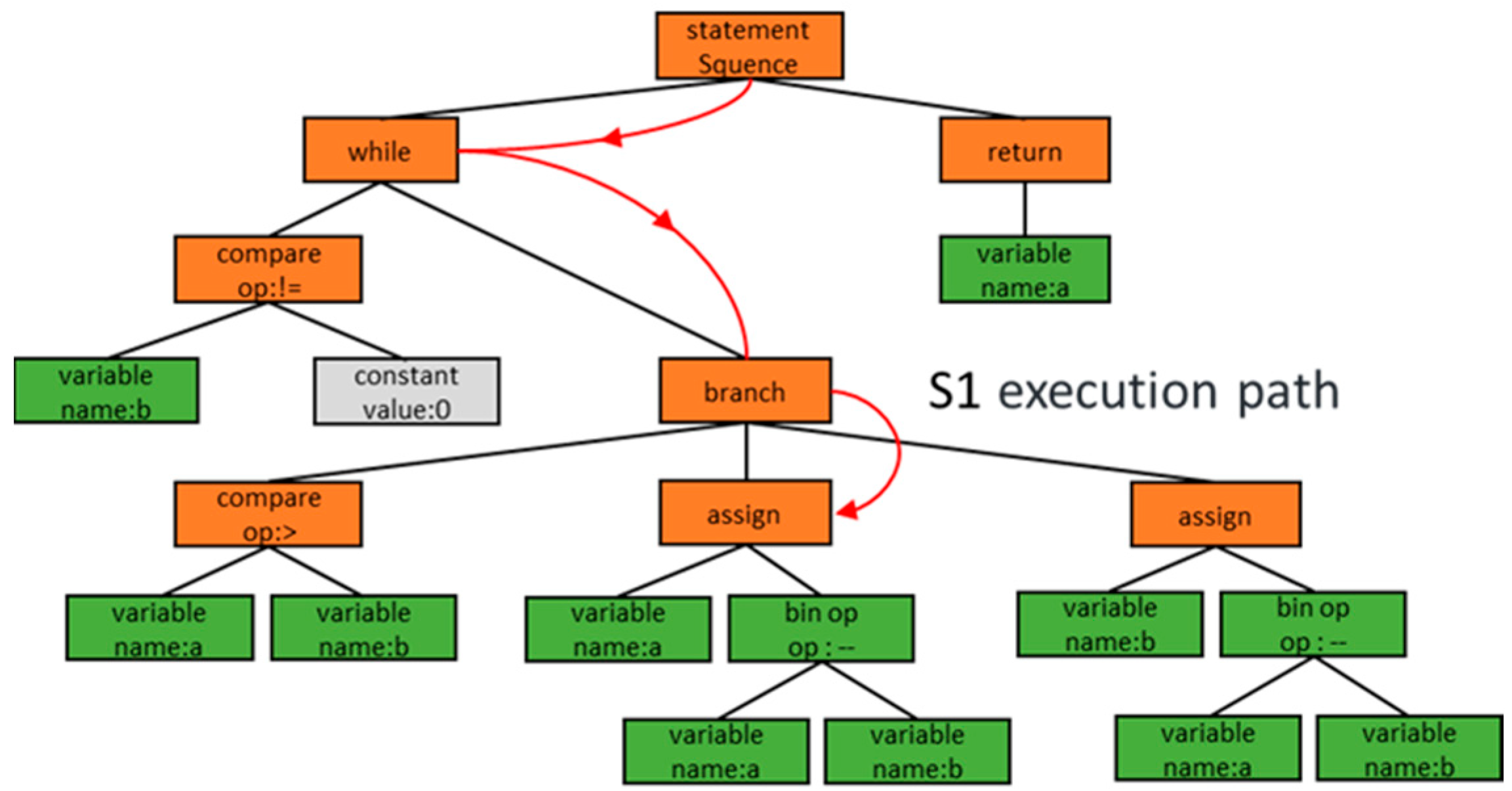

Figure 1, it is often impossible to fully capture the structural features formed by multiple execution paths, which can easily lead to information loss. When extracting structural features of the source code, as shown in

Figure 2, the AST nodes usually contain only limited information, which is not sufficient to fully reflect the contextual semantics of the code, such as keywords and identifiers, resulting in a lack of complete semantic information in the representation vectors. It is thus clear that the traditional approach of using semantic or structural representations alone for code defect detection has limitations. Therefore, in-depth exploration of the internal mechanism of open-source software to reveal the correlation between source code semantics, structure, and defects has become the key to improving the reliability and reducing the maintenance cost of open-source software.

Currently, multi-feature fusion methods are widely employed to enhance the representation capacity of source code [10]. Common approaches include weighting [11] or concatenating [12,13,14] structural and semantic feature vectors. However, such linear fusion strategies struggle to align deep semantic relationships between high-dimensional, non-linear features, often resulting in incomplete integration and mismatched information. To overcome these limitations, recent studies have introduced attention mechanisms [12,13] to improve code representation learning. Although attention has achieved remarkable success in computer vision and natural language processing, its core design targets sequential data and assumes uniform relationships among features, which makes it difficult to adapt to the complex structural characteristics of code. On the one hand, general attention fails to capture the hierarchical associations present in ASTs; on the other hand, it is insufficient for modeling the dynamic correspondence between semantic units (e.g., variable names) and structural nodes (e.g., loop statements), as illustrated in Figure 2. To address this key challenge, this paper proposes a novel Structure-Aware Attention mechanism that explicitly maps syntax tree nodes to semantic units, enabling deep integration of structural and semantic information and offering a new perspective for code representation research.

Building on this mechanism, this paper proposes a defect detection approach that fuses multimodal code representations via structure-aware attention. By constructing a deep fusion framework for semantic and structural features, the method aims to improve the accuracy and generalization ability of defect detection. This provides reliable support for automated defect identification and intelligent quality assurance in practical software development, ultimately helping to reduce maintenance costs, enhance development efficiency, and improve system robustness.

The main contributions of this work are as follows:

Dual-path code representation optimization: We design a two-branch encoding strategy that leverages a multi-head attention-based CodeBERT [14] model to extract fine-grained, character-level semantic representations that capture rich contextual semantics. In parallel, a CBOW-based Word2Vec model [15] generates structural feature vectors from AST node sequences, effectively capturing syntactic patterns and hierarchical information.

Structure-aware multimodal feature fusion mechanism: A novel attention mechanism is developed to integrate semantic and structural representations. By modeling the associations between semantic units and syntax tree nodes through a Query–Key–Value attention framework, the proposed method dynamically adjusts feature weights during detection, addressing the semantic-structural mismatch problem inherent in conventional linear fusion strategies and significantly enhancing fusion quality.

Structure-guided defect detection model: A defect detection model tailored for open-source code is constructed, which incorporates the proposed structure-aware attention to fuse semantic and structural information. The fused representation is then processed through a BiLSTM-DNN architecture to capture temporal dependencies and non-linear interactions. Compared to existing methods, our approach emphasizes multi-dimensional, hierarchical representation fusion, leading to superior accuracy and generalization on diverse codebases.

To validate the effectiveness of our method, we conduct extensive experiments on the SARD dataset and benchmark our results against state-of-the-art baselines.

2. Related Work

2.1. Research on Code Defect Detection

Software code defects represent an unavoidable challenge in software development. These defects are errors or flaws in program code that may cause compilation failures, runtime anomalies, or behavioral deviations from specifications. Contemporary research classifies code defects into three primary categories based on their causes: syntactic defects, logical defects, and security defects [16]. Syntactic defects stem from violations of programming language syntax rules; their explicit error patterns enable efficient detection through static rule checking. Logical defects (e.g., loop boundary errors) require integrated control-flow and data-flow analysis for identification. Security defects, including SQL injection, buffer overflows, and privilege escalation vulnerabilities, demand deep comprehension of semantic-structural contexts, data-flow semantics modeling, and external interface interactions. Characterized by high concealment and context dependency, they constitute the most challenging detection category [17]. Shen's [18] empirical analysis indicates that security defects account for more than 60% of real-world defects yet exhibit disproportionately low detection rates.

Detection challenges for security defects further diverge by subtype: input validation defects require inter-procedural taint propagation analysis, where existing tools frequently fail due to broken call chains [19]. Memory operation defects depend on pointer lifecycle modeling, with static methods encountering path explosion problems [20]. Concurrency safety defects involve thread timing dependencies that traditional models struggle to capture due to non-deterministic behaviors [19].

While traditional rule-based systems and test generation techniques [20] prove effective for basic defects, they inadequately address complex vulnerabilities [21,22]. Empirical evidence from OpenStack and Qt [23] demonstrates that purely automated tools exhibit insufficient coverage for sophisticated vulnerabilities, necessitating manual review and remediation. Although deep learning has enhanced detection capabilities for certain security defects, significant limitations persist: the representation mismatch between semantic and structural features causes incomplete capture of code information [19], and inadequate modeling of long-range cross-module dependencies leads to critical context loss, resulting in high false-positive rates [24] and weak generalizability [25].

In summary, most syntactic and logical defects can be effectively detected through semantic or control-flow pattern recognition, whereas security defects demand more advanced structure-aware and context-sensitive mechanisms.

2.2. Research on Code Representation Methods

In recent years, code defect detection has achieved significant results, with research primarily focusing on two aspects: representation methods based on code semantics and representation methods based on code structure.

Code semantics-based representation methods primarily tag key information in the source code, such as identifiers, keywords, function names, and operators, convert it into a sequence of tokens, and then map these tokens into a vector space to form character vectors. Li et al. [26] used heuristics to extract code fragments that represent program semantics; Zou et al. [27] matched statement attributes and code text against vulnerability grammar rules; Xu et al. [28] performed code slicing by analyzing and normalizing program calls. Yamaguchi and Russell [29,30] parsed the source code into individual functions and then mapped them into a vector space. Pradel et al. [31] embedded identifier names as vectors with Word2Vec, giving the model access to semantic information. Li et al. [32] used a Bidirectional Gated Recurrent Unit (Bi-GRU) to extract vulnerability-related syntactic and semantic features from source code. Russell [30] extracted localized features using multilayer convolutional or recurrent networks to capture the dependencies between preceding and following code for defect detection.

Code structure-based representation usually involves parsing the source code into an intermediate representation [33], such as an Abstract Syntax Tree (AST), to accurately capture its syntactic structure. Researchers have enhanced AST representations through feature encoding and selective node processing [34]. Li [35] emphasized the importance of selecting representative AST nodes, while Anbiya [36] obtained representations of important nodes and subtrees through breadth-first traversal combined with pruning and filtering techniques. Huang [37] distinguished between heavy and light child nodes of ASTs, giving preference to the heavy child nodes. Yamaguchi [38] used a parser based on the principle of island grammars to extract ASTs for all functions in a codebase and determine their structural patterns. Lin [39] obtained ASTs of the source code with the ANTLR parsing tool and used depth-first traversal to generate serialized representations of the ASTs. In addition, Mou [40] designed convolutional kernels over the AST of program code and introduced continuous binary trees and dynamic pooling to obtain a unified representation for trees of different sizes and shapes. Li [41] combined program dependency graphs and data-flow information with the abstract syntax tree to strengthen the global context representation of function code and reduce false positives.

In summary, code semantic-based representation methods utilize natural language processing techniques to emphasize the semantic information of the code, which has high interpretability and reduces the rate of false positives, but neglects the structural properties of the code. In contrast, code structure-based representation methods emphasize the structural features of the code, but the semantic information of the code is often lost in the transformation process, which limits the richness of the representation. Therefore, an ideal defect detection model should combine semantic and structural features to achieve a more comprehensive code representation.

2.3. Research on Feature Fusion Methods

Feature fusion originated in the military field in the 1970s, where it was initially used for target identification and evaluation, aiming to combine more comprehensive information from different sources or from features at different levels. With the rise of open-source big data and artificial intelligence, information fusion has gradually taken on complex characteristics such as nonlinearity, multimodality, deep coupling, and high dimensionality, which have driven the further development of feature fusion methods.

Among deep learning feature fusion methods, feature concatenation (splicing) and feature-weighted fusion are common approaches based on combining features. For example, Wang [42] used weighted fusion to design a selective additive learning mechanism that improves the generalization and prediction accuracy of multimodal sentiment analysis. Nojavanasghari [43] weighted features from three modalities (visual, audio, and text) and improved persuasiveness prediction by exploiting complementary information between modalities. Anastasopoulos [44] concatenated speech and word features to construct a multimodal language model with improved predictive ability. Liang [11] constructed a multilayer tandem fusion network based on feature weighting and feature concatenation to improve the quality of medical image representations. Dong [45] weighted and fused the two types of features extracted by a convolutional neural network and a graph attention network, achieving excellent results in hyperspectral image classification.
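The two linear strategies above can be contrasted in a few lines of Python. This is a minimal sketch: the function names and toy vectors are hypothetical, and the point is only that both operations combine features with weights fixed in advance, independent of the content of the inputs.

```python
from typing import List

Vector = List[float]


def concat_fusion(semantic: Vector, structural: Vector) -> Vector:
    """Feature splicing: place the two vectors end to end."""
    return semantic + structural


def weighted_fusion(semantic: Vector, structural: Vector, alpha: float = 0.5) -> Vector:
    """Feature weighting: a fixed convex combination of two equally sized vectors."""
    if len(semantic) != len(structural):
        raise ValueError("weighted fusion needs vectors of equal length")
    return [alpha * s + (1.0 - alpha) * t for s, t in zip(semantic, structural)]


sem = [0.2, 0.8, -0.4]     # hypothetical semantic feature vector
struct = [1.0, 0.0, 0.6]   # hypothetical structural feature vector
print(concat_fusion(sem, struct))
print(weighted_fusion(sem, struct, alpha=0.75))
```

Because `alpha` is a single constant, every dimension of every sample is mixed in the same proportion, which is the limitation that attention-based fusion is meant to relax.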

After the attention mechanism was proposed and demonstrated an excellent ability to learn feature weights, many scholars began to explore its application to feature fusion. Hyeonseob et al. [15] proposed Dual Attention Networks (DANs) to fuse visual and textual features, demonstrating the effectiveness of attention networks for feature fusion. Jiasen et al. [46] constructed a co-attention model for visual question answering to improve answer prediction. Wang et al. [47] designed an attention-based adaptive feature fusion network to synthesize decoupled common structural features and modality-specific features in the reconstruction phase. Du [48] proposed Dual Attention Networks (DANs) that jointly learn visual and textual attention models to explore fine-grained interactions between vision and language. Zhou [49] used an attention-based feature fusion mechanism to fuse global and local context features, achieving a significant performance improvement in blind image quality assessment.

Nowadays, open-source code is becoming increasingly complex. Researchers have found that a single code representation approach is no longer sufficient to express the latent features of code, and multi-feature fusion of code representations has received growing attention. For example, Tao et al. [50] fused AST and code-change token sequence information to enhance the model's ability to represent semantic information; Zhou et al. [51] used a convolutional neural network to extract and fuse code semantic and structural information, demonstrating the advantages of multimodal code representation; and Liu et al. [52] fused features from code comments and commit information to further strengthen code semantic representation. Chen [53] fused expert metric vectors from code with semantic metric vectors from code commit messages to enhance multi-objective just-in-time software defect detection.

In summary, deep learning-based multimodal feature fusion techniques have been widely used in speech, video, and text. Although feature splicing and weighted fusion methods enhance the characterization capability, they have limitations as linear methods in dealing with the large amount of non-sequential data in open-source projects. To overcome this limitation, this paper proposes a fusion method based on the attention mechanism. The method computes a set of weights through fine-grained analysis of features from different modalities, which are non-linearly combined to dynamically capture the intrinsic correlations between them.

3. Method

In the open-source ecosystem, code development is highly unconstrained. The logical structure of code syntax in open-source software is very complex, and the code carries rich implicit semantics. Because of this rich contextual information and these high-dimensional semantic features, methods such as simple keyword extraction or rule-based analysis cannot grasp the deeper meaning of the code, making it difficult to accurately match its semantic and structural representations. CodeBERT, by contrast, is a Transformer-based model that automatically learns deep semantic information through large-scale pretraining and fine-tuning. It has strong capabilities for understanding the context and semantics of code and is well suited to the complex linguistic characteristics of open-source code. Meanwhile, feature fusion methods based on traditional attention mechanisms rely mainly on word vectors, pay little attention to code-specific structural information (such as function calls and control flow), and adapt poorly to the abstraction of code. By introducing a structural attention mechanism, the model can automatically focus on key code structures and accurately match code semantics with structural features. However, code is not only static text; it also has an execution order, particularly in its logical structure, and sequential execution and state changes may lead to code defects. A Bidirectional Long Short-Term Memory network (BiLSTM) processes the code sequence in both directions and can therefore capture long-term contextual dependencies, avoiding the contextual misjudgments caused by relying only on forward (or backward) information and thereby improving the recognition of cross-function and cross-module dependencies.

In summary, this paper starts from the perspective of precise integration of code semantics and structure, fully considers the interaction between the structure and semantics of open-source code, and designs an optimization method for code defect detection based on a structural attention mechanism. The method calculates attention scores between semantic representation vectors and structural representation vectors, determining the matching relationship between semantic units and structural nodes, to compensate for the inability of simple fusion methods to adapt to open-source code prediction.

3.1. Task Definition

Software defect detection can be defined as a binary classification problem. In this paper, we design a code defect detection model that combines semantic and structural representations. To train the prediction model, we require training data consisting of a semantic character dataset $X = \{x_1, x_2, \dots, x_N\}$, an Abstract Syntax Tree (AST) node dataset $T = \{t_1, t_2, \dots, t_N\}$, and data labels $Y = \{y_1, y_2, \dots, y_N\}$, where $N$ is the total number of samples. In the defect detection problem, $y_i \in \{0, 1\}$, where $y_i = 1$ indicates that the module contains one or more defects and $y_i = 0$ indicates that the module has no tendency towards defects.

Here, $x_i = \{x_{i1}, x_{i2}, \dots\}$ is the $i$-th datum in the semantic character dataset, and $x_{ij}$ is the $j$-th string in that datum; likewise, $t_i = \{t_{i1}, t_{i2}, \dots\}$ is the $i$-th datum in the AST node dataset, and $t_{ij}$ is the $j$-th node in that datum. The code defect detection task can therefore be defined as training a model $M$ on the datasets $X$ and $T$ to obtain the loss $L$, as shown in Equation (1):

$$L = \mathcal{L}\big(M(X, T),\, Y\big) \tag{1}$$

where $\mathcal{L}$ denotes the classification loss. That is, based on the datasets $X$ and $T$ and the data labels $Y$, we optimize the model $M$ to find an approximately optimal (minimal) solution for $L$.
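Equation (1) does not fix the form of the per-sample loss at this point in the text. Purely as an assumption (binary cross-entropy is a common choice for binary classifiers, but the paper does not state it here), the loss over $N$ labelled samples could be computed as below, using the label convention $y = 1$ for defective and $y = 0$ for clean modules. The function name and the probabilities in the usage line are hypothetical.

```python
import math
from typing import Sequence


def binary_cross_entropy(probs: Sequence[float], labels: Sequence[int], eps: float = 1e-12) -> float:
    """Mean binary cross-entropy over N samples (assumed loss, not confirmed by the paper).

    probs[i] is the model's predicted probability that sample i is defective;
    labels[i] is 1 for a defective module and 0 for a clean one.
    """
    if len(probs) != len(labels):
        raise ValueError("probs and labels must have the same length")
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


# Hypothetical model outputs for three methods and their ground-truth labels.
print(binary_cross_entropy([0.9, 0.2, 0.6], [1, 0, 1]))
```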

3.2. Model Architecture Design

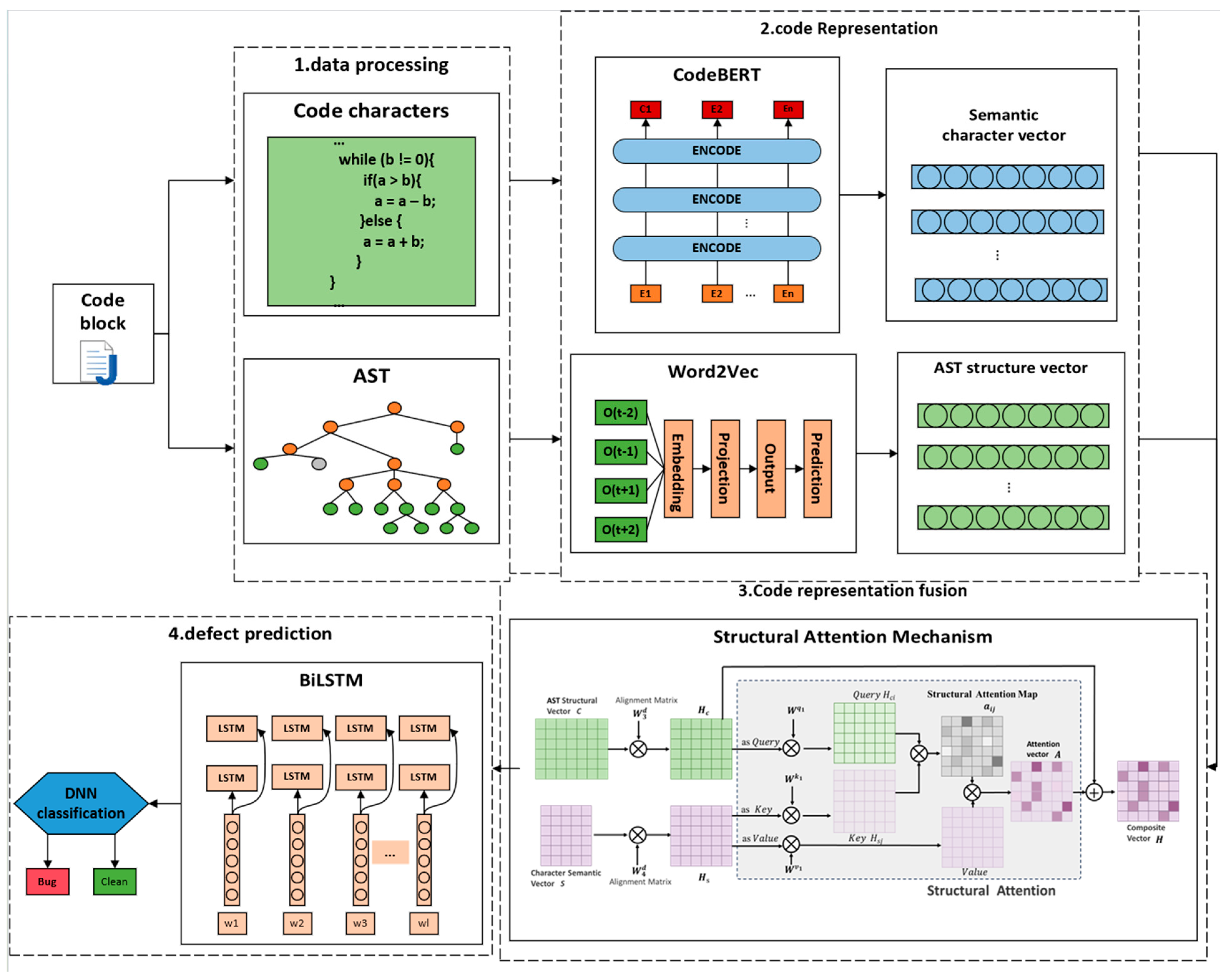

Based on the above definition, this paper designs a representation fusion framework based on a structural attention mechanism for the open-source development ecosystem, as shown in Figure 3.

The model is divided into four parts, as detailed below:

- (1) Data Processing. First, the given source code $P$ is divided into functions $F$, i.e., $P = \{F_1, F_2, \dots, F_N\}$. For each function or module $F_i$, all comment lines and blank lines are removed to obtain the preprocessed code $F_i'$. Next, character-level segmentation is performed on $F_i'$ to obtain the character sequence $x_i$, yielding the set of character-level semantic code $X = \{x_1, \dots, x_N\}$, which contains the character-level data of the source code. For each preprocessed function $F_i'$, an abstract syntax tree construction method converts the code into its AST node sequence $t_i$, yielding the set of structural code data $T = \{t_1, \dots, t_N\}$, which contains the AST node information of the source code.

- (2) Code Representation. First, for the character-level semantic code set $X$, a CodeBERT model based on a multi-head attention mechanism converts each character sequence $x_i$ into its corresponding semantic representation vector $C$. Next, for the structural code dataset $T$, a Word2Vec-based CBOW model is constructed that maps the AST node sequences to a vocabulary $V$. Then, for each function $F_i$, the probability distribution of its AST nodes over the vocabulary is calculated to construct the structural representation vector $S$.

- (3) Representation Fusion. First, the semantic representation vector $C$ and the structural representation vector $S$ are aligned to obtain $C'$ and $S'$, respectively. Then, a structural attention mechanism fuses the aligned semantic representation vector $C'$ with the aligned structural representation vector $S'$, generating the comprehensive representation vector $H$.

- (4) Defect Detection. Considering the complexity of code structure in open-source software, we employ a Bidirectional Long Short-Term Memory network (BiLSTM) as the classification model, because its bidirectional information flow helps capture contextual semantic information in the code. The comprehensive representation vector $H$ is fed into the model for training, and the code is ultimately classified as defective or non-defective.
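Step (4) relies on reading the fused sequence in both directions. The following pure-Python sketch shows only that bidirectional recurrence: a minimal LSTM cell with random, untrained weights is run forward and backward over a toy sequence of vectors standing in for $H$, and the two hidden states at each position are concatenated. The class and function names, dimensions, and initialisation are assumptions for illustration; training and the DNN classification head of the paper's BiLSTM-DNN are not reproduced.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
GATES = ("i", "f", "o", "g")  # input, forget and output gates, candidate cell


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


class LSTMCell:
    """Minimal LSTM cell with random, untrained weights (illustration only)."""

    def __init__(self, input_dim: int, hidden_dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden_dim = hidden_dim
        # One weight matrix per gate, applied to the concatenation [x_t; h_{t-1}].
        self.weights = {
            gate: [[rng.uniform(-0.5, 0.5) for _ in range(input_dim + hidden_dim)]
                   for _ in range(hidden_dim)]
            for gate in GATES
        }
        self.bias = {gate: [0.0] * hidden_dim for gate in GATES}

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        z = x + h  # list concatenation [x_t; h_{t-1}]
        pre = {gate: [a + b for a, b in zip(matvec(self.weights[gate], z), self.bias[gate])]
               for gate in GATES}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        o = [sigmoid(v) for v in pre["o"]]
        g = [math.tanh(v) for v in pre["g"]]
        c_new = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_new = [ov * math.tanh(cv) for ov, cv in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs: List[Vector]) -> List[Vector]:
        """Return the hidden state after each time step."""
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        states = []
        for x in xs:
            h, c = self.step(x, h, c)
            states.append(h)
        return states


def bilstm(xs: List[Vector], fwd: LSTMCell, bwd: LSTMCell) -> List[Vector]:
    """Concatenate forward states with backward states re-aligned to time order."""
    forward = fwd.run(xs)
    backward = list(reversed(bwd.run(list(reversed(xs)))))
    return [hf + hb for hf, hb in zip(forward, backward)]


# Toy stand-in for the fused sequence H: 4 positions, 3 features each.
fused = [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.4, 0.4, 0.0], [-0.3, 0.2, 0.1]]
states = bilstm(fused, LSTMCell(3, 2, seed=1), LSTMCell(3, 2, seed=2))
print(len(states), len(states[0]))  # 4 positions, 2 * hidden_dim = 4 features each
```

Using separate cells for the two directions mirrors the usual BiLSTM design: each output position carries a summary of everything before it and everything after it.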

3.3. Data Processing

Code Semantic Character Preprocessing. This study takes Java methods as its data objects. In the data preprocessing stage, we process the data at the file level. First, each Java file is read in turn, and the Java methods in the file are segmented using regular expressions. To eliminate the influence of method names on the results, we uniformly renamed all segmented methods to `fun` and then further processed the data, including removing unnecessary blank characters, punctuation marks, and special symbols from each method. This preprocessing helps to ensure the consistency and reliability of the data and facilitates subsequent unified representation and processing.
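The text does not give the regular expressions used for segmentation, so the following Python sketch uses a hypothetical method-header pattern combined with brace matching. It removes comments, extracts each method, renames it to `fun`, and collapses whitespace (which also drops blank lines). It is deliberately naive: braces or `//` inside string literals, annotations with arguments on parameters, lambdas, and anonymous classes would need extra handling, and the removal of punctuation and special symbols is omitted because the paper does not specify which symbols are dropped.

```python
import re
from typing import List

# Hypothetical method-header pattern: optional modifiers, a return type, the method
# name (group 1), a parameter list, an optional throws clause, then the opening brace.
METHOD_HEADER = re.compile(
    r"(?:(?:public|protected|private|static|final|synchronized|abstract)\s+)*"
    r"[\w<>\[\],\s]+?\s+(\w+)\s*\([^)]*\)\s*(?:throws\s+[\w.,\s]+)?\{"
)


def strip_comments(source: str) -> str:
    """Remove block/Javadoc comments and line comments (naive: ignores string literals)."""
    source = re.sub(r"/\*.*?\*/", "", source, flags=re.DOTALL)
    return re.sub(r"//[^\n]*", "", source)


def extract_methods(source: str) -> List[str]:
    """Split a Java file into methods, rename each to `fun`, and collapse whitespace."""
    code = strip_comments(source)
    methods: List[str] = []
    pos = 0
    while (m := METHOD_HEADER.search(code, pos)) is not None:
        depth, i = 0, m.end() - 1  # i starts at the method's opening "{"
        while i < len(code):
            if code[i] == "{":
                depth += 1
            elif code[i] == "}":
                depth -= 1
                if depth == 0:
                    break
            i += 1
        method = code[m.start():i + 1]
        start, end = m.start(1) - m.start(), m.end(1) - m.start()
        method = method[:start] + "fun" + method[end:]  # hide the original method name
        methods.append(" ".join(method.split()))  # drop blank lines and extra spaces
        pos = i + 1  # continue searching after this method's closing brace
    return methods


SAMPLE = """
public class Demo {
    /** Adds two numbers. */
    public int add(int a, int b) {
        // trivial body
        return a + b;
    }

    private static void log(String msg) throws IOException {
        if (msg != null) { System.out.println(msg); }
    }
}
"""
for method in extract_methods(SAMPLE):
    print(method)
```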

Code Abstract Syntax Tree Structure Preprocessing. First, all Java methods in the dataset are obtained through code semantic character preprocessing. These methods are then converted into abstract syntax trees according to their hierarchical structure, using the javalang package in Python 3.12.0; methods that could not be converted successfully were excluded. Because this study focuses on the code structure captured by the abstract syntax tree, we serialized each tree into a sequence of node types using depth-first traversal. This step preserves the structural information of the code and provides a convenient format for subsequent processing and analysis.
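The depth-first serialization can be sketched without external dependencies. With javalang, a parsed tree can, to our understanding, be iterated as `(path, node)` pairs and `type(node).__name__` gives each node's type; to keep the sketch self-contained, a hypothetical `Node` class and a hand-built tree with javalang-style type names stand in for the parser output. The traversal is pre-order: each node is recorded before its children, left to right.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical minimal AST node: a type label plus ordered children."""
    kind: str
    children: List["Node"] = field(default_factory=list)


def dfs_node_types(root: Node) -> List[str]:
    """Pre-order depth-first traversal returning the sequence of node types."""
    sequence: List[str] = []
    stack = [root]
    while stack:
        node = stack.pop()
        sequence.append(node.kind)
        # Push children in reverse so the leftmost child is visited first.
        stack.extend(reversed(node.children))
    return sequence


# Hand-built tree for: int fun(int a, int b) { return a + b; }
tree = Node("MethodDeclaration", [
    Node("FormalParameter", [Node("BasicType")]),
    Node("FormalParameter", [Node("BasicType")]),
    Node("ReturnStatement", [
        Node("BinaryOperation", [Node("MemberReference"), Node("MemberReference")]),
    ]),
])
print(dfs_node_types(tree))
```

An explicit stack avoids Python's recursion limit on deeply nested methods while producing the same order as a recursive pre-order walk.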

This study models Java methods as the fundamental data units, leveraging the clear function structure and language characteristics of the SARD dataset. By focusing on method-level defect detection, our approach allows the model to concentrate on capturing semantic and structural features within individual methods, which improves both task specificity and trainability. For semantic representation, we adopt CodeBERT, a multilingual model pretrained on large-scale corpora covering six programming languages (Python, Java, JavaScript, PHP, Ruby, and Go). This gives the method a degree of language-agnostic capability. Moreover, the modeling process for abstract syntax structures is transferable and can be applied to other languages with similar syntactic properties. As a result, the proposed approach shows potential for cross-language generalization at the method level.

3.4. Code Semantic Feature Extraction

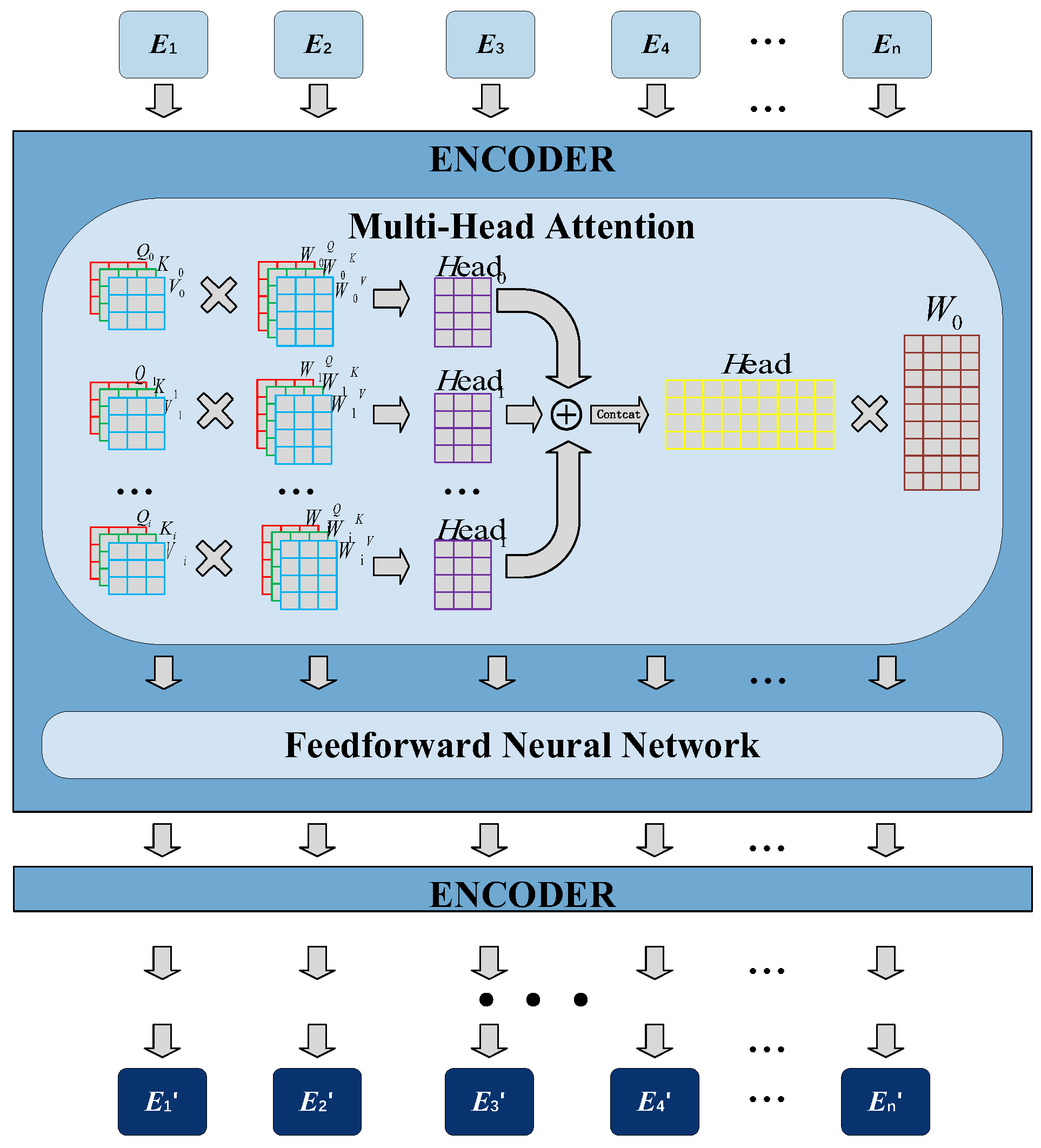

The transparency, modifiability, and multi-participant nature of open-source projects lead to complex logical structures in which the definition and implementation of a function often span multiple lines of code. Multi-line code is therefore very common in open-source projects, and it may include multiple branches, nested conditions, loops, and other structures that increase the risk of logical errors. Understanding and capturing these cross-line dependencies is thus crucial for identifying potential defects. To address this issue, this paper draws on the global attention mechanism from natural language processing and introduces the CodeBERT model, which is based on multi-head self-attention, to construct semantic unit representation vectors for code. This approach transforms each character in the code into a feature vector with global attention scores, which contains richer semantic information than the feature vectors produced by the Word2Vec [15] model. CodeBERT provides purely semantic vectors, free from structural bias, that complement the structural features extracted from ASTs without overlapping with them. This design enables the structural attention mechanism to explicitly model cross-modal relationships, thereby avoiding the performance saturation issues inherent in end-to-end pretrained models. The conversion of semantic representation vectors is shown in Figure 4.

The conversion process is as follows:

- (1) Semantic encoding. Using the CodeBERT tokenizer, the code characters are first mapped to a token (word) embedding $E_{token}$, a position encoding embedding $E_{pos}$, and a segment (inter-sentence separation) embedding $E_{seg}$. The three vectors are then added to obtain the comprehensive input vector $E$:

$$E = E_{token} + E_{pos} + E_{seg} \tag{2}$$

- (2) Multi-head self-attention encoding. $E$ is used as the input to the Transformer encoder, which is mainly composed of a Multi-head Self-attention mechanism (MHSA) and a Feedforward Neural Network (FNN). Multi-head self-attention enables the model to learn the relationship between any two positions in the sequence from multiple perspectives: each head learns relationship information from a different perspective and assigns corresponding weights, and the outputs of all heads are finally concatenated to obtain the encoded representation. The computation is shown in Equations (3)–(5):

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{3}$$

$$\mathrm{head}_i = \mathrm{Attention}\big(QW_i^{Q},\, KW_i^{K},\, VW_i^{V}\big) \tag{4}$$

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\,W^{O} \tag{5}$$

where $Q$ is the query matrix, $K$ is the key matrix, $V$ is the value matrix, and $d_k$ is the dimension of the key vectors; $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are the learnable weight matrices of the $i$-th head, $h$ is the number of heads, and $W^{O}$ is the output linear transformation matrix.

- (3) Semantic vector representation. The output of the last CodeBERT layer is taken as the final semantic representation $C$, in which each position of the input sequence corresponds to a 768-dimensional hidden vector. The final-layer encoding is shown in Equation (6):

$$C = \mathrm{CodeBERT}(E) = [c_1, c_2, \dots, c_n] \in \mathbb{R}^{n \times 768} \tag{6}$$

where $n$ is the length of the input sequence.
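Steps (1) and (2) can be illustrated with a dependency-free sketch: it sums three embedding matrices as in step (1) and then applies one multi-head self-attention layer following Equations (3)–(5), with $Q = K = V$ taken from the same input. All dimensions are toy values and all weights are random. The real CodeBERT encoder (12 pretrained layers with 768-dimensional hidden states and 12 heads, plus feed-forward sublayers, residual connections, and layer normalisation) is not reproduced; in practice its weights would be loaded from a released checkpoint such as `microsoft/codebert-base`. All function names are hypothetical.

```python
import math
import random
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        top = max(row)  # subtract the row maximum for numerical stability
        exps = [math.exp(v - top) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Equation (3): softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    return matmul(softmax_rows(scores), v)


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x: Matrix, h: int, seed: int = 0) -> Matrix:
    """Equations (4)-(5) with random, untrained projections (toy, not CodeBERT)."""
    rng = random.Random(seed)
    d_model = len(x[0])
    d_k = d_model // h
    heads = []
    for _ in range(h):
        w_q, w_k, w_v = (random_matrix(d_model, d_k, rng) for _ in range(3))
        heads.append(attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)))
    concat = [sum((head[t] for head in heads), []) for t in range(len(x))]  # Concat(head_1..h)
    w_o = random_matrix(h * d_k, d_model, rng)
    return matmul(concat, w_o)


# Step (1): sum hypothetical token, position and segment embeddings (4 tokens, d = 8).
rng = random.Random(42)
e_tok, e_pos, e_seg = (random_matrix(4, 8, rng) for _ in range(3))
e = [[a + b + c for a, b, c in zip(r1, r2, r3)] for r1, r2, r3 in zip(e_tok, e_pos, e_seg)]
out = multi_head_self_attention(e, h=2)
print(len(out), len(out[0]))  # 4 positions, d_model = 8
```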

3.5. AST-Based Structural Feature Extraction

As open-source projects continue to iterate, the structural features of the code change, making it extremely easy to introduce new defects. Therefore, constructing models that can adapt to complex codes and effectively capture the structural features of codes is the key to improving the defect detection capability of open-source software. Although the traditional feature extraction method based on an abstract syntax tree is effective in representing the structural information of simple code, it is not adapted to the continuous updating of code. To address this problem, this paper draws on the design ideas of Xing et al. [

54] to construct a Word2Vec-based CBOW (Continuous Bag-of-Words) model for code structure feature extraction. First, the words in the code are transformed into vector representations, and the corresponding word vector representations are generated by the CBOW model. Subsequently, these word vectors are combined into a final sequence of node vectors for feature extraction by the subsequent encoder, as shown in

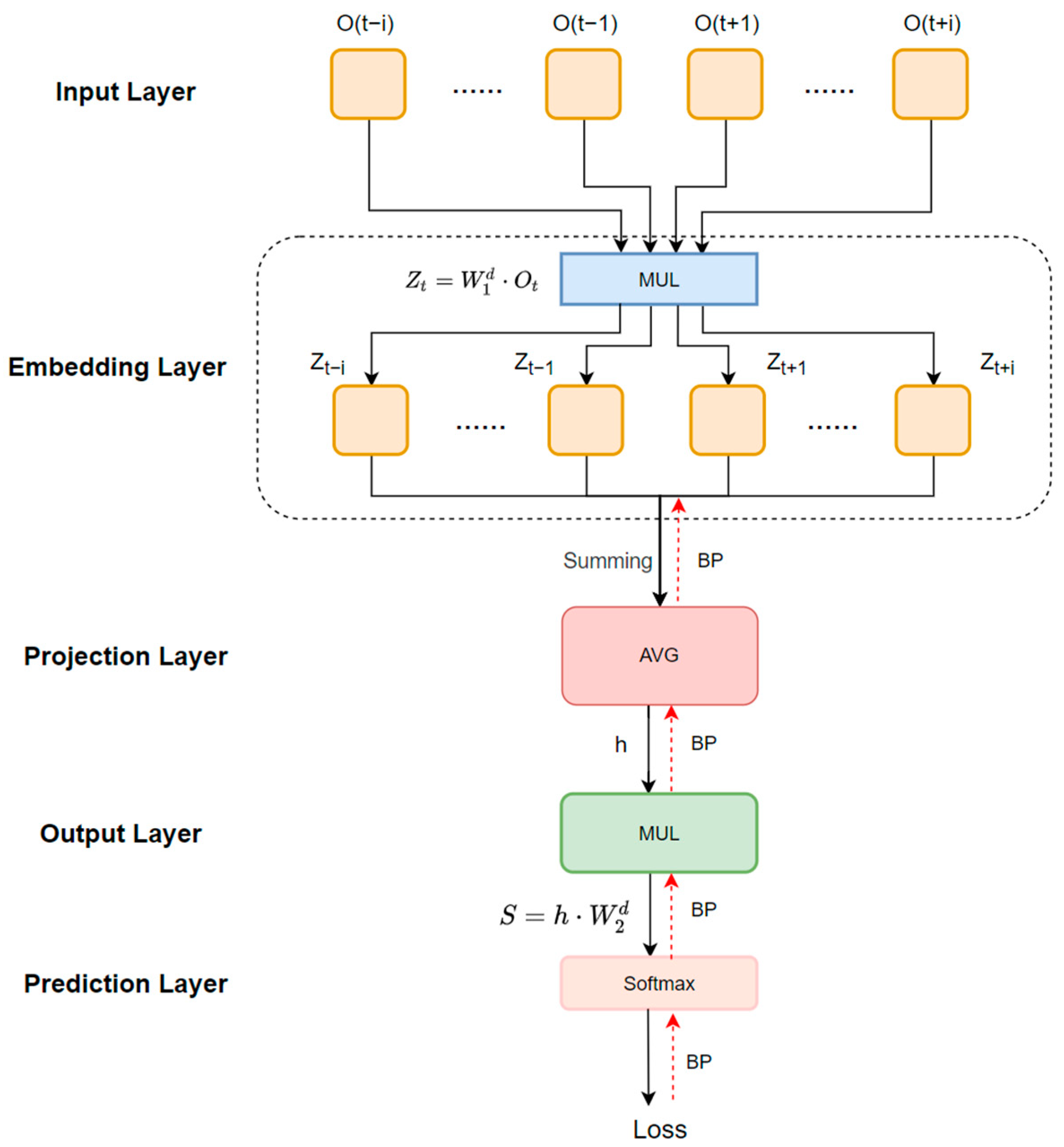

Figure 5:

Specifically, to extract structural features from program source code, we first perform a depth-first traversal (DFS) of the abstract syntax tree (AST) to obtain a sequence of nodes. This DFS sequence is used as the input to a continuous bag-of-words (CBOW) Word2Vec model, which encodes the nodes as vectors. The CBOW model predicts a given node from the nodes surrounding it, learns node representations from this contextual information, and thus represents the vocabulary as dense continuous vectors. The main steps are as follows:

- (1)

Input layer: consider a central node $w_t$ of the abstract syntax tree and its context nodes $w_{t+i}$, where $-m \le i \le m$, $i \neq 0$, and m is the size of the context window on each side of $w_t$. Each node is one-hot encoded as a vector whose length equals the size of the dictionary, as shown in Equation (7):

$$O = [\,o_{t-m}, \dots, o_{t-1}, o_{t+1}, \dots, o_{t+m}\,], \qquad o_t \in \{0, 1\}^{n} \tag{7}$$

where O is the input matrix, $o_t$ is the one-hot encoding vector of the t-th node, and n is the vector length (the dictionary size).

- (2)

Word embedding layer: each node's one-hot vector is multiplied by the embedding weight matrix to obtain its real-valued vector representation, as shown in Equation (8):

$$v_t = W o_t, \qquad W \in \mathbb{R}^{d \times n}, \; v_t \in \mathbb{R}^{d} \tag{8}$$

where d is the dimension of the word vector and W is the corresponding weight matrix.

- (3)

Projection layer: the vectors of all context nodes are summed and divided by the number of context nodes to obtain the output vector h of the projection layer, as shown in Equation (9):

$$h = \frac{1}{2m} \sum_{\substack{i=-m \\ i \neq 0}}^{m} v_{t+i} \tag{9}$$

where $v_{t+i}$ is the real-valued vector of the (t+i)-th node obtained from the word embedding layer, m is the context window size, and i is the index within the window.

- (4)

Output layer: the projection-layer output h is multiplied by the output weight matrix to obtain the output vector S, i.e., the structural representation vector. S is later converted into a probability distribution over target nodes. The computation is shown in Equation (10):

$$S = W' h, \qquad W' \in \mathbb{R}^{n \times d} \tag{10}$$

- (5)

Prediction layer: the output vector S is normalized with softmax to obtain the predicted distribution $\hat{y}$. This distribution is compared with the one-hot code y of the target node to compute the loss L, and the weight matrices of the CBOW model are updated iteratively by backpropagation, as shown in Equation (11):

$$\hat{y} = \mathrm{softmax}(S), \qquad L = -\frac{1}{B} \sum_{b=1}^{B} \sum_{k=1}^{n} y_k^{(b)} \log \hat{y}_k^{(b)} \tag{11}$$

where B is the batch size.
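Steps (1) to (5) can be made concrete with a small, dependency-free sketch of CBOW training on node-id sequences. It is illustrative only:

- The function names, the toy sequence, the dimensions, and the learning rate are assumptions.
- The embedding matrix is stored one row per node (the transpose of W in Equation (8)), so multiplying by a one-hot vector becomes a row lookup.
- Near the ends of a sequence, the average is taken over the context nodes that actually exist rather than a fixed 2m.

In practice, a library implementation such as gensim's `Word2Vec` with `sg=0` (CBOW mode) would normally be used instead.

```python
import math
import random


def context_windows(seq, m):
    """Yield (context_ids, center_id) pairs using up to m nodes on each side."""
    for t, center in enumerate(seq):
        ctx = [seq[t + i] for i in range(-m, m + 1)
               if i != 0 and 0 <= t + i < len(seq)]
        if ctx:
            yield ctx, center


def forward(ctx, W, W_out):
    """Eqs. (8)-(10) followed by softmax.

    W is n x d (row j is the embedding W o_j); W_out is n x d, so S has n scores.
    """
    d = len(W[0])
    # Projection layer: average of the context node embeddings (Eq. 9).
    h = [sum(W[j][k] for j in ctx) / len(ctx) for k in range(d)]
    # Output layer: one score per vocabulary entry (Eq. 10).
    S = [sum(row[k] * h[k] for k in range(d)) for row in W_out]
    top = max(S)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in S]
    z = sum(exps)
    return h, [e / z for e in exps]


def batch_loss(pairs, W, W_out):
    """Mean cross-entropy against the one-hot target node (Eq. 11)."""
    total = 0.0
    for ctx, center in pairs:
        _, p = forward(ctx, W, W_out)
        total -= math.log(p[center])
    return total / len(pairs)


def sgd_step(pairs, W, W_out, lr=0.1):
    """One pass of plain per-pair SGD; updates W and W_out in place."""
    d = len(W[0])
    for ctx, center in pairs:
        h, p = forward(ctx, W, W_out)
        # Gradient w.r.t. S is p - y, with y the one-hot vector of the center node.
        g = [p[r] - (1.0 if r == center else 0.0) for r in range(len(p))]
        # Gradient w.r.t. h, computed before W_out is modified.
        dh = [sum(g[r] * W_out[r][k] for r in range(len(g))) for k in range(d)]
        for r in range(len(g)):
            for k in range(d):
                W_out[r][k] -= lr * g[r] * h[k]
        for j in ctx:  # each context occurrence contributed 1/len(ctx) of h
            for k in range(d):
                W[j][k] -= lr * dh[k] / len(ctx)


rng = random.Random(0)
seq = [0, 1, 2, 3, 4, 2, 3]  # hypothetical DFS node-id sequence
n, d = 5, 8
W = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(n)]
W_out = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(n)]
pairs = list(context_windows(seq, m=2))
before = batch_loss(pairs, W, W_out)
for _ in range(100):
    sgd_step(pairs, W, W_out)
after = batch_loss(pairs, W, W_out)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```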

3.6. Multimodal Feature Fusion via Structural Attention Mechanism

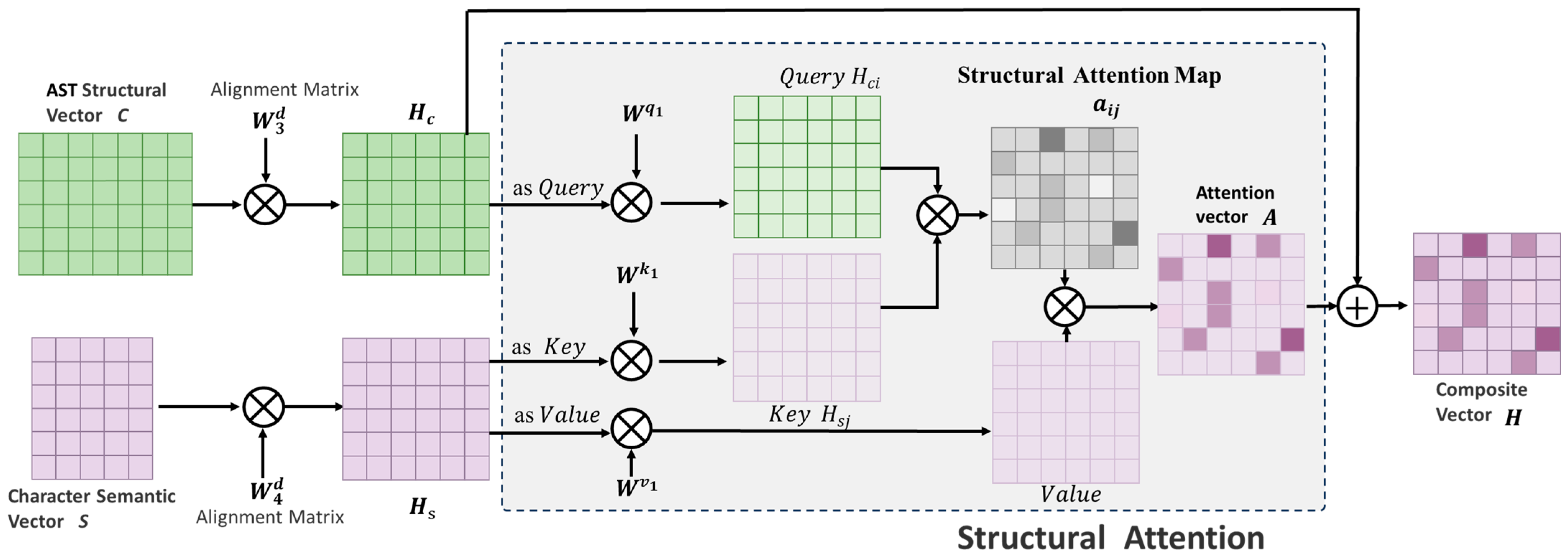

The preceding analysis shows that the semantics of code depend not only on lexical representations but also strongly on structural features. Abstract Syntax Tree (AST) nodes store only basic syntactic information and fail to capture contextual features such as keywords and identifiers. This causes irreversible information loss during AST conversion. As a result, unimodal representations built from either semantics or structure alone are impoverished, and their limited representational capacity is inadequate for detecting complex and diverse code defects. Moreover, traditional feature fusion methods cannot solve the fundamental problem of aligning semantic units with structural nodes. To address these limitations, this work designs a structural attention mechanism that matches semantic units with structural nodes, making code representations richer and more precise. Because CodeBERT pretraining already incorporates the contextual semantics of lexical symbols (including brackets), the structural attention mechanism guides the model to focus on semantic-structural relationships while respecting structural constraints. This preserves the hierarchical advantages of ASTs while compensating for the grammatical details they lose.

3.6.1. Multimodal Feature Fusion

Building upon this foundation, we propose a multi-feature fusion methodology based on structural attention; the detailed implementation process is illustrated in Figure 6.

- 1.

Vector Adaptive Alignment: the semantic representation vector C and the structural representation vector S obtained above have different dimensions, so both are first passed through learnable alignment matrices. Because the weights of these matrices are learned from the training data, the semantic and structural representations adapt their dimensions to the task. This is more flexible than fixed (hard-coded) alignment. The alignment is computed by Equations (12) and (13):

$$\tilde{C} = C\,W_c \tag{12}$$

$$\tilde{S} = S\,W_s \tag{13}$$

where $W_c$ and $W_s$ are the alignment matrices, $\tilde{C}$ is the dimension-aligned semantic representation vector, and $\tilde{S}$ is the dimension-aligned structural representation vector.

- 2.

Vector Fusion: after obtaining the aligned semantic and structural representation vectors $\tilde{C}$ and $\tilde{S}$, we design a structural attention mechanism to fuse them. Given the rigid syntactic constraints of source code, the usefulness of a semantic unit depends heavily on its structural context. In defect detection, attention mechanisms without structural constraints are prone to semantic noise, which can lead to inaccurate representations [55].

To address this, we designate $\tilde{C}$ as the query, so that each semantic unit is guided by syntax. $\tilde{S}$ serves as the key-value pair, so that semantic consistency is assessed within the structural framework. The structural attention mechanism is therefore essentially cross-attention applied to the interaction between code semantics and structure: the semantic vector is the Query and the structural vector is the Key-Value pair. By attending to structural information, the mechanism enhances the semantic representation and fuses structural and semantic code information.

The attention score $\alpha_{ij}$ reflects the interdependency between semantic and structural information, that is, how important a structural node is to a semantic unit for the task. The model adaptively selects the structural features that contribute most to the final prediction and captures the complex relationships between semantics and structure. The calculation formula is as follows:

$$e_{ij} = \tilde{c}_i^{\top} \tilde{s}_j, \qquad \alpha_{ij} = \frac{\exp(e_{ij})}{\sum_{k} \exp(e_{ik})}$$

where $e_{ij}$ is the dot-product similarity between the query vector $\tilde{c}_i$ (the i-th row of $\tilde{C}$) and the key vector $\tilde{s}_j$ (the j-th row of $\tilde{S}$). The attention score $\alpha_{ij}$ indicates how much attention is paid to the j-th structural element when predicting the i-th code element. The weight distribution is visualized in Section 3.6.2.

- 3.

Vector Weighting: to let the model weight different parts of the structural information according to the current context, and thus obtain a more precise representation, we use the attention scores $\alpha_{ij}$ to compute a weighted sum of the structural feature vectors $\tilde{s}_j$. This gives the attention vector $a_i = \sum_{j} \alpha_{ij} \tilde{s}_j$, which represents the weighted influence of the structural features during fusion.

- 4.

Final Fusion: the attention vector $a_i$ and the token (semantic) feature vector $\tilde{c}_i$ are summed to obtain the final feature representation $H = [\tilde{c}_1 + a_1, \dots, \tilde{c}_n + a_n]$. In this way, the model integrates structural information while preserving semantic details, so the final representation contains both the nuances of the semantics and the contextual relationships of the structure. This compensates for the information loss inherent in converting source code to ASTs and improves the accuracy of the final code representation.
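The four fusion steps can be traced with a short pure-Python sketch. Beyond what the text specifies, it assumes:

- keys and values are both the aligned structural rows, with no separate projections;
- the dot product is unscaled (Transformer-style attention would divide it by the square root of d_a);
- there is a single attention head.

The function `structural_attention_fusion` and the toy matrices are hypothetical.

```python
import math


def matmul(A, B):
    """Multiply an r x k matrix by a k x c matrix (lists of lists)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def softmax(xs):
    top = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def structural_attention_fusion(C, S, W_c, W_s):
    """Cross-attention with semantic rows as queries and structural rows as keys/values.

    C: n_tok x d_c semantic matrix; S: n_node x d_s structural matrix;
    W_c (d_c x d_a) and W_s (d_s x d_a) project both to a shared width d_a.
    Returns the fused rows H and the attention matrix alpha (n_tok x n_node).
    """
    C_al = matmul(C, W_c)  # Eq. (12): aligned semantic rows
    S_al = matmul(S, W_s)  # Eq. (13): aligned structural rows
    H, alpha = [], []
    for c in C_al:  # each semantic unit acts as a query
        # Dot-product similarity e_ij with every structural node.
        e = [sum(ci * si for ci, si in zip(c, s)) for s in S_al]
        a_row = softmax(e)
        # Attention vector: weighted sum of the structural rows (the values).
        a = [sum(w * s[k] for w, s in zip(a_row, S_al)) for k in range(len(c))]
        # Final fusion: add the attention vector to the aligned semantic row.
        H.append([ck + ak for ck, ak in zip(c, a)])
        alpha.append(a_row)
    return H, alpha


identity = [[1.0, 0.0], [0.0, 1.0]]
C = [[1.0, 0.0], [0.0, 1.0]]              # two toy semantic units
S = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy structural nodes
H, alpha = structural_attention_fusion(C, S, identity, identity)
for row in alpha:
    print([round(w, 3) for w in row])
```

Each semantic row gets its own softmax over the structural nodes, so the structural weighting changes from one query to the next.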

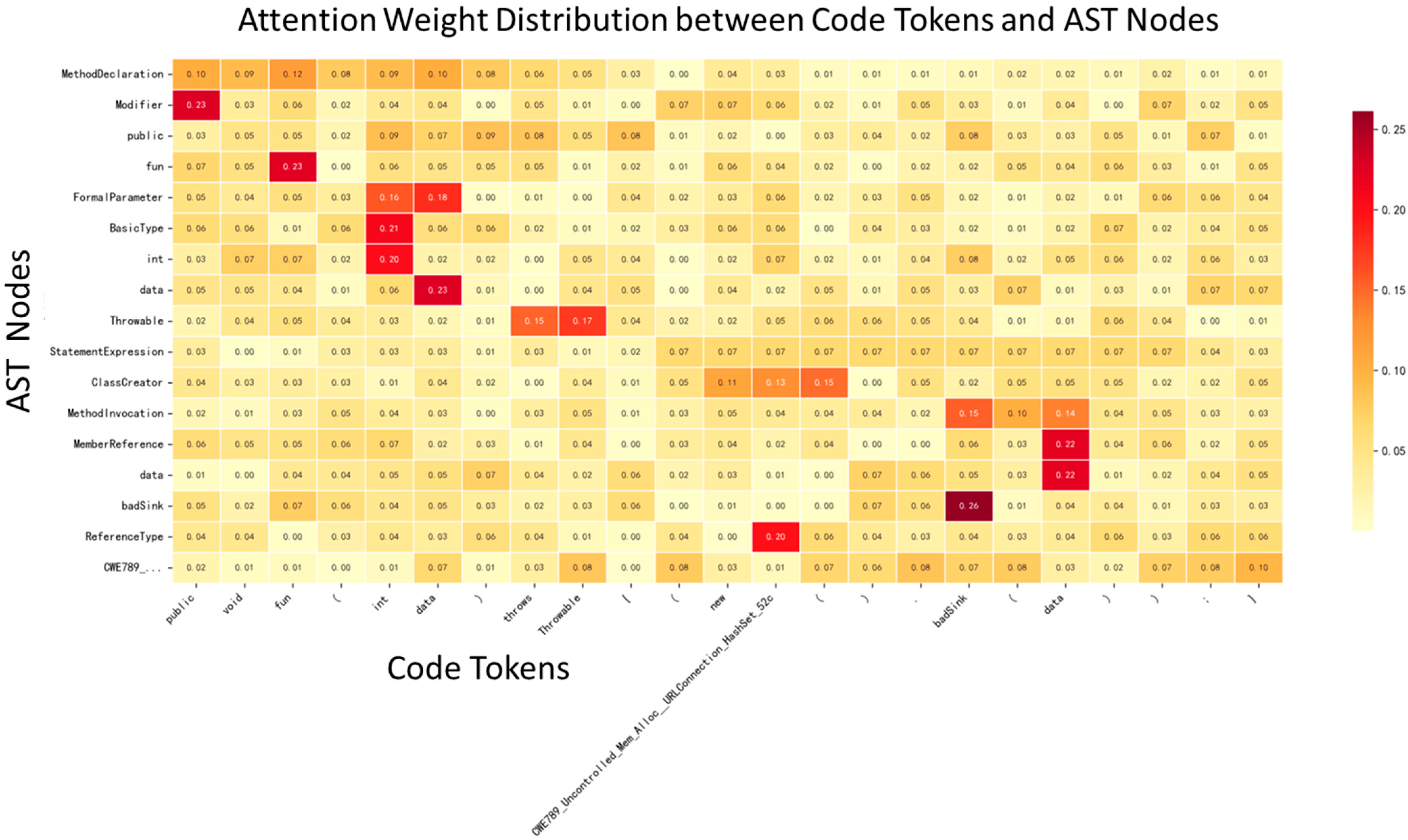

3.6.2. Attention Weights Visualization

To examine in more depth how the semantic and structural vectors interact during fusion, we visualized the attention weights between code tokens and AST nodes. We take the following example code and its corresponding Abstract Syntax Tree (AST) as a case study:

- (1)

Example code:

```java
public void fun(int data) throws Throwable {
    (new CWE789_Uncontrolled_Mem_Alloc__URLConnection_HashSet_52c()).badSink(data);
}
```

- (2)

Sequence of AST nodes:

['MethodDeclaration', 'Modifier', 'public', 'fun', 'FormalParameter', 'BasicType', 'int', 'data', 'Throwable', 'StatementExpression', 'ClassCreator', 'MethodInvocation', 'MemberReference', 'data', 'badSink', 'ReferenceType', 'CWE789_Uncontrolled_Mem_Alloc__URLConnection_HashSet_52c']

In the model, the semantic vector serves as the query (Query) and the structural vector serves as the key-value pair (Key/Value). Computing the dot-product similarity gives the attention distribution of each code token over the AST nodes, represented by the attention map in Figure 6. Visualizing this attention matrix as a heatmap shows the attention intensity between code positions and structural nodes at a glance, as illustrated in Figure 7.
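Reading off key focus patterns amounts to ranking each token's row of the attention matrix. The sketch below does that and prints a coarse text heatmap. The helpers `top_nodes` and `text_heatmap` are hypothetical. The weights are made up for illustration and are not taken from Figure 7 or Table 1.

```python
def top_nodes(alpha_row, node_labels, k=2):
    """Return the k (label, weight) pairs with the largest attention weights, highest first."""
    ranked = sorted(zip(node_labels, alpha_row), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


def text_heatmap(alpha, token_labels, shades=" .:*#"):
    """One line per token; each cell's character darkens as its weight (in [0, 1]) grows."""
    rows = []
    for token, row in zip(token_labels, alpha):
        cells = "".join(shades[min(int(w * len(shades)), len(shades) - 1)] for w in row)
        rows.append(f"{token:>8} |{cells}|")
    return "\n".join(rows)


# Made-up weights for illustration only; they are not results from this paper.
nodes = ["MethodInvocation", "MemberReference", "data", "badSink"]
tokens = ["badSink", "data"]
alpha = [[0.10, 0.05, 0.05, 0.80],
         [0.05, 0.40, 0.50, 0.05]]
for tok, row in zip(tokens, alpha):
    print(tok, top_nodes(row, nodes))
print(text_heatmap(alpha, tokens))
```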

Key focus patterns are shown in Table 1.

3.7. Defect Detection Using BiLSTM Model

The fused representation H needs further enhancement of its contextual semantic features, and its sequence length exceeds 400. In traditional Recurrent Neural Network (RNN) models [56], gradients may gradually vanish during backpropagation as the sequence grows longer. Although RNNs are powerful, they are therefore difficult to train on long sequences because of the vanishing or exploding gradient problem [57]. Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) are two improved RNN variants that mitigate this problem. This paper therefore selects the Bidirectional Long Short-Term Memory (BiLSTM) [58] model. BiLSTM alleviates vanishing gradients over long-term dependencies and lets the hidden state at each time step capture context both before and after it.

Each LSTM unit extends an RNN neuron with a cell state $c_t$ and gating mechanisms (forget gate $f_t$, input gate $i_t$, output gate $o_t$) that maintain long-term memory:

- The forget gate $f_t$ decides which information from previous time steps to discard or retain.
- The input gate $i_t$ controls how much the current input contributes to updating the cell state.
- The output gate $o_t$ determines how much of $c_t$ is passed on, which sets the value of the next hidden state.

The gates are parameterized by weight matrices $W_f$, $W_i$, $W_o$, $W_c$ and bias vectors $b_f$, $b_i$, $b_o$, $b_c$. The state updates at each time step t are:

$$
\begin{aligned}
f_t &= \sigma(W_f [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i [h_{t-1}, x_t] + b_i) \\
o_t &= \sigma(W_o [h_{t-1}, x_t] + b_o) \\
\hat{c}_t &= \tanh(W_c [h_{t-1}, x_t] + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \hat{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

where $x_t$ is the t-th element of H, $\sigma$ is the sigmoid function, $\odot$ denotes element-wise multiplication, and $[\cdot, \cdot]$ denotes concatenation. The BiLSTM runs one LSTM forward and one backward over the sequence and concatenates their hidden states: $h_t = [\overrightarrow{h}_t; \overleftarrow{h}_t]$.
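The LSTM state updates can be checked with a dependency-free sketch of one LSTM step and a bidirectional pass. It is a minimal sketch, and several details are assumptions: the parameter layout (a dict of gate matrices acting on the concatenation of h_{t-1} and x_t), the zero initial states, and the toy sizes. The paper's implementation uses PyTorch. There, `torch.nn.LSTM(..., bidirectional=True)` provides this computation, with separate input and hidden weight matrices.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(W, b, v):
    """Return W v + b for a list-of-lists matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bi for row, bi in zip(W, b)]


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step; p holds W_f, W_i, W_o, W_c (d_h x (d_h + d_x)) and b_f..b_c."""
    z = h_prev + x  # list concatenation: [h_{t-1}, x_t]
    f = [sigmoid(v) for v in affine(p["W_f"], p["b_f"], z)]        # forget gate
    i = [sigmoid(v) for v in affine(p["W_i"], p["b_i"], z)]        # input gate
    o = [sigmoid(v) for v in affine(p["W_o"], p["b_o"], z)]        # output gate
    c_hat = [math.tanh(v) for v in affine(p["W_c"], p["b_c"], z)]  # candidate cell state
    c = [ft * cp + it * ch for ft, cp, it, ch in zip(f, c_prev, i, c_hat)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def run_lstm(xs, p, d_h):
    h, c = [0.0] * d_h, [0.0] * d_h
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        outputs.append(h)
    return outputs


def bilstm(xs, p_fwd, p_bwd, d_h):
    """Concatenate forward and backward hidden states at every time step."""
    fwd = run_lstm(xs, p_fwd, d_h)
    bwd = run_lstm(xs[::-1], p_bwd, d_h)[::-1]  # re-align backward outputs with time
    return [hf + hb for hf, hb in zip(fwd, bwd)]


def init_params(d_x, d_h, rng):
    """Small random weights and zero biases for the four gate transforms."""
    p = {}
    for g in "fioc":
        p["W_" + g] = [[rng.uniform(-0.1, 0.1) for _ in range(d_h + d_x)] for _ in range(d_h)]
        p["b_" + g] = [0.0] * d_h
    return p


rng = random.Random(0)
d_x, d_h = 4, 3
xs = [[rng.uniform(-1, 1) for _ in range(d_x)] for _ in range(5)]  # 5 toy rows of H
out = bilstm(xs, init_params(d_x, d_h, rng), init_params(d_x, d_h, rng), d_h)
print(len(out), len(out[0]))  # 5 time steps, 2 * d_h features each
```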

- 2.

Model Training.

To train the model, the output $h_T$ of the last BiLSTM unit is linearly transformed and fed into a softmax layer for classification, $\hat{y} = \mathrm{softmax}(W_y h_T + b_y)$. In the defect detection problem of this work, $\hat{y}$ is the predicted distribution and $y$ is the true distribution. Label 1 indicates that the module contains one or more defects, and label 0 indicates that the module is defect-free.

During training, the model is optimized by backpropagation to minimize the cross-entropy error of defect classification:

$$\mathcal{L} = -\sum_{x \in D} \sum_{k \in \{\text{bug},\, \text{clean}\}} y_k(x) \log \hat{y}_k(x) + \lambda \lVert \theta \rVert_2^2$$

where D denotes all training instances, k is the outcome category (bug or clean), $\hat{y}$ is the predicted distribution, y is the true distribution, and $\lambda \lVert \theta \rVert_2^2$ denotes the $L_2$ regularization term.
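The classification head and training objective can be sketched the same way. The sketch assumes the class order 0 = clean and 1 = bug, regularizes only the classifier weights, and uses hypothetical toy states. `classify` and `training_loss` are illustrative names, not the paper's code.

```python
import math


def classify(h_last, W, b):
    """Linear layer plus softmax over two classes; index 0 = clean, 1 = bug (assumed order)."""
    logits = [sum(w * x for w, x in zip(row, h_last)) + bi for row, bi in zip(W, b)]
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    z = sum(exps)
    return [e / z for e in exps]


def training_loss(batch, W, b, lam):
    """Summed cross-entropy over (h_last, label) pairs plus lam * ||W||_2^2.

    Only the classifier weights W are regularized here; a full model would
    usually include every trainable parameter in the L2 term.
    """
    ce = -sum(math.log(classify(h, W, b)[y]) for h, y in batch)
    l2 = lam * sum(w * w for row in W for w in row)
    return ce + l2


W = [[0.5, -0.5], [-0.5, 0.5]]  # toy 2 x 2 classifier weights
b = [0.0, 0.0]
batch = [([1.0, 0.0], 0), ([0.0, 1.0], 1)]  # hypothetical final BiLSTM states and labels
print(classify([1.0, 0.0], W, b))
print(training_loss(batch, W, b, lam=0.01))
```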

4. Experiment Design

4.1. Experimental Data

The experimental data come from the Software Assurance Reference Dataset (SARD) [59], created and maintained by the National Institute of Standards and Technology (NIST). The dataset contains software programs intentionally seeded with vulnerabilities that reproduce security challenges the open-source community may face in practice. It covers many test cases, each corresponding to a specific vulnerability or vulnerability type, such as buffer overflows, integer overflows, and format string vulnerabilities, which are common problems in open-source projects. The dataset is therefore highly applicable and relevant to software security research and development in open-source projects. To comprehensively evaluate the performance and effectiveness of our model in practical open-source settings, we chose the NIST Juliet Java test suite as the experimental platform. The suite contains examples of known Java source code vulnerabilities and is organized in detail by Common Weakness Enumeration (CWE) category.

After analyzing the dataset, we found that most vulnerability categories contain only a few samples, which poses a major challenge to the generalization ability of the model. To ensure reliable training and validation, this paper applies three filtering rules, retaining the defect types with more than 1000, more than 100, and more than 10 samples, respectively. The resulting datasets are named data-1000, data-100, and data-10. They are used to train and validate the model and to assess its performance at different data volumes. The defect types and sample counts of each dataset are shown in Table 2.
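The three filtering rules reduce to counting samples per defect type and keeping the types above a threshold. In this sketch, the `(cwe_id, code)` pair layout, the helper name, and the per-type counts are hypothetical. Only the thresholds come from the text, and the rule is read as a strict "more than" comparison.

```python
from collections import Counter


def filter_by_frequency(samples, threshold):
    """Keep samples whose defect type occurs more than `threshold` times.

    `samples` is a list of (cwe_id, code) pairs; this layout is hypothetical.
    Returns the kept samples and the sorted list of kept defect types.
    """
    counts = Counter(cwe for cwe, _ in samples)
    kept_types = {cwe for cwe, n in counts.items() if n > threshold}
    return [s for s in samples if s[0] in kept_types], sorted(kept_types)


# Toy corpus with made-up per-type counts.
corpus = ([("CWE-190", i) for i in range(1200)]
          + [("CWE-789", i) for i in range(150)]
          + [("CWE-134", i) for i in range(20)])
results = {name: filter_by_frequency(corpus, t)
           for name, t in [("data-1000", 1000), ("data-100", 100), ("data-10", 10)]}
for name, (kept, types) in results.items():
    print(name, types, len(kept))
```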

4.2. Experimental Setup

The following experimental setup was conducted to answer the research questions of this study:

- (1)

Experimental Environment. The experimental environment was configured as follows: the operating system was Ubuntu 18.04, the GPU was NVIDIA GeForce RTX 3060Ti, and experiments were implemented using the PyTorch 2.3.0 framework. The experimental code runs on a single GPU with a system memory size of 8 GB.

- (2)

Experiment Flow. The experiment proceeds as follows. The code is first preprocessed and tokenized into token sequences and structural feature vectors, and the dataset is divided into a training set, a validation set, and a test set. The model is trained on the training set, and the validation set is used for hyperparameter tuning to select the best model. Finally, the test set is used to evaluate the performance and effectiveness of the model and to compare it with other models. In addition, ablation experiments replace or remove individual components of the model. Together, these experiments answer RQ1, RQ2, and RQ3.
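The text does not state the split ratios, so the following sketch of the data split rests entirely on assumptions. It uses an 8:1:1 train/validation/test split, stratified by the binary defect label so that each split keeps the class balance, with a fixed seed for reproducibility. `stratified_split` is a hypothetical helper.

```python
import random
from collections import defaultdict


def stratified_split(samples, ratios=(0.8, 0.1, 0.1), seed=42):
    """Split (label, item) pairs into train/val/test, preserving label proportions.

    The 8:1:1 ratio and the seed are illustrative assumptions.
    """
    by_label = defaultdict(list)
    for s in samples:
        by_label[s[0]].append(s)
    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_train = int(len(group) * ratios[0])
        n_val = int(len(group) * ratios[1])
        train += group[:n_train]
        val += group[n_train:n_train + n_val]
        test += group[n_train + n_val:]
    return train, val, test


samples = [(1, i) for i in range(50)] + [(0, i) for i in range(150)]  # 1 = defective, 0 = clean
train, val, test = stratified_split(samples)
print(len(train), len(val), len(test))  # 160 20 20
```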

4.3. Evaluation Metrics

This paper uses the following evaluation metrics to assess the defect detection performance of the proposed model on open-source software.

F1 is the harmonic mean of precision and recall. It balances the two and remains informative when the classes are imbalanced. The F1-measure is calculated as:

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Precision is the proportion of samples predicted as positive that are truly positive:

$$\text{Precision} = \frac{TP}{TP + FP}$$

where TP denotes true positives (predicted positive and actually positive) and FP denotes false positives (predicted positive but actually negative).

Recall is the proportion of truly positive samples that are correctly predicted as positive:

$$\text{Recall} = \frac{TP}{TP + FN}$$

where FN denotes false negatives (predicted negative but actually positive).

Accuracy is the ratio of correctly predicted samples to all samples:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TN denotes true negatives (predicted negative and actually negative).

Together, these metrics evaluate the classification performance of the model. With imbalanced data, however, accuracy alone can hide the model's true performance, so we focus mainly on F1.
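The four metrics follow directly from confusion-matrix counts. The sketch below uses a hypothetical helper name and toy counts. It also shows why F1 is emphasized: on an imbalanced set, a detector that never flags a defect still reaches high accuracy.

```python
def classification_metrics(tp, fp, fn, tn):
    """Precision, recall, F1 and accuracy from confusion-matrix counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Imbalanced toy case: 10 defective and 90 clean modules.
print(classification_metrics(tp=8, fp=2, fn=2, tn=88))
# A detector that flags nothing still scores 0.9 accuracy but 0.0 F1.
print(classification_metrics(tp=0, fp=0, fn=10, tn=90))
```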

4.4. Baseline Methods

To evaluate the fusion method proposed in this paper, we selected methods based on code semantic representation and abstract syntax tree (AST) structural representation, as well as methods based on the BERT model for our evaluation experiments. Below is an introduction to the methods and experimental parameters for both our approach and the comparison methods.

CodeBERT-AST-Structural Attention-BiLSTM (Our-model): This method combines the code semantic features extracted by CodeBERT and the structural features extracted by Word2Vec, using Structural Attention for feature fusion, and finally performs classification detection through the BiLSTM model. The design of this method integrates semantic information, structural information, and attention mechanisms, enabling the capture of dependencies between semantics and structure, thereby improving the model’s performance in defect detection tasks.

Code-Word2Vec-BiLSTM (VulDeepecker) [26]: This model uses only code token features extracted by Word2Vec and performs defect detection with a BiLSTM. As a baseline, VulDeepecker mainly verifies the contribution of Word2Vec features to defect detection.

AST-Word2Vec-BiLSTM (ASTWB) [39]: This model uses structural features extracted from the AST together with semantic features from Word2Vec and performs defect detection with a BiLSTM. It is used to verify the independent roles of structural and semantic information in defect detection. However, it lacks the structural attention mechanism and therefore cannot effectively model the dependencies between semantics and structure.

BERT-BiLSTM (LineVul) [60]: This defect detection method uses the pre-trained BERT model to extract code semantic information. In our experiments, we adapted it to function-level classification for a fair comparison with our model.

The experimental parameters are shown in Table 3.

4.5. Research Questions

To verify the effectiveness of the method proposed in this paper for the problem of code defect detection, the following three research questions (RQs) were designed:

RQ1: Does the defect detection method designed in this paper perform better than existing methods?

RQ2: Does the fusion method based on the structural attention mechanism designed in this paper have superior representation capabilities compared to conventional fusion methods?

RQ3: Does this model have stable performance in the face of data imbalance and diverse types of code defects in the open-source ecosystem?

5. Results Analysis

We conducted a series of experiments for each research question. This section analyzes the results for each question in detail.

5.1. RQ1: Does the Defect Detection Method Designed in This Paper Perform Better than Existing Methods?

To address this question, we selected three representative baseline models for comparison:

- (1)

VulDeepecker—Utilizes only the semantic information of the code, such as high-level semantic features including function calls and variable names, which are important for understanding the functionality and behavior of code;

- (2)

ASTWB—Relies solely on the abstract syntax tree (AST) structure of the program, focusing on capturing syntactic and structural features, and emphasizing the organization and control flow of the code;

- (3)

LineVul—A model that combines both semantic and structural features, with strong capabilities in line-level code processing.

By comparing with these three baseline models that represent different feature utilization strategies, we can more comprehensively analyze the performance differences of our method across scenarios involving purely semantic representation, purely structural representation, and combined semantic-structural representation.

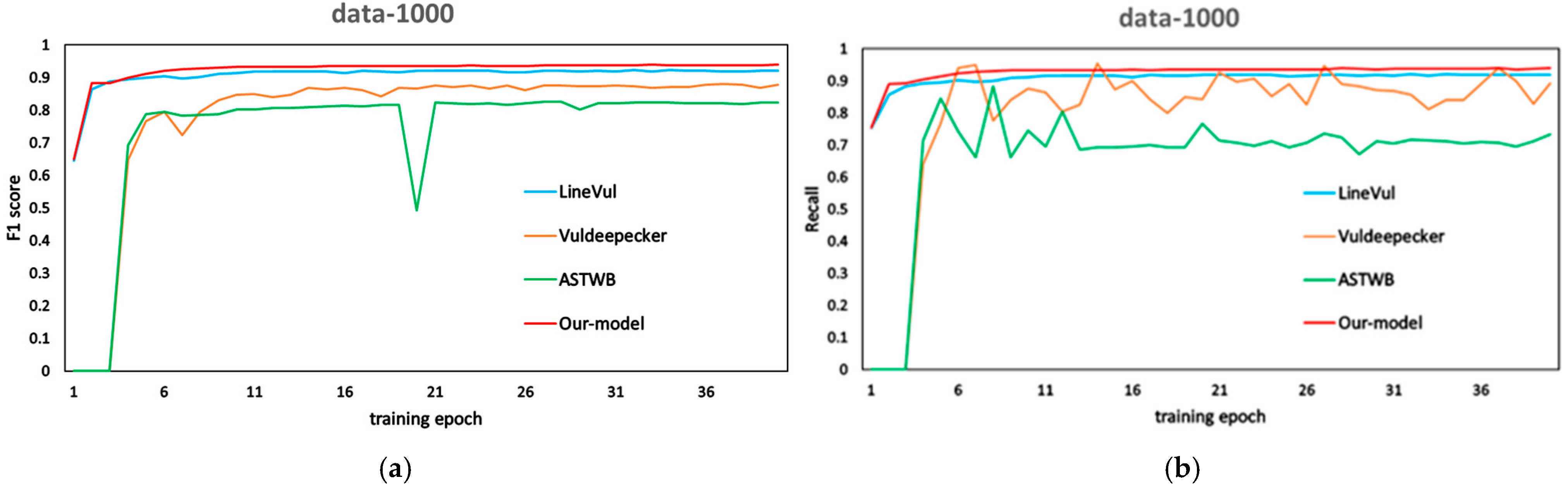

The experimental results are shown in Table 4.

In response to Problem 1, we investigated the performance differences between the proposed method and traditional single-semantic representation approaches (VulDeepecker, ASTWB), as well as the combined semantic and structural representation model (LineVul). The results indicate that the proposed method significantly outperforms the three comparison methods in terms of F1 score. By comparing the best experimental results of the VulDeepecker and ASTWB models, as shown in the experimental results in

Table 4, the F1 score improved from 0.822 to 0.877 to 0.920, and the Recall value increased from 0.734 to 0.892 to 0.918. As shown in

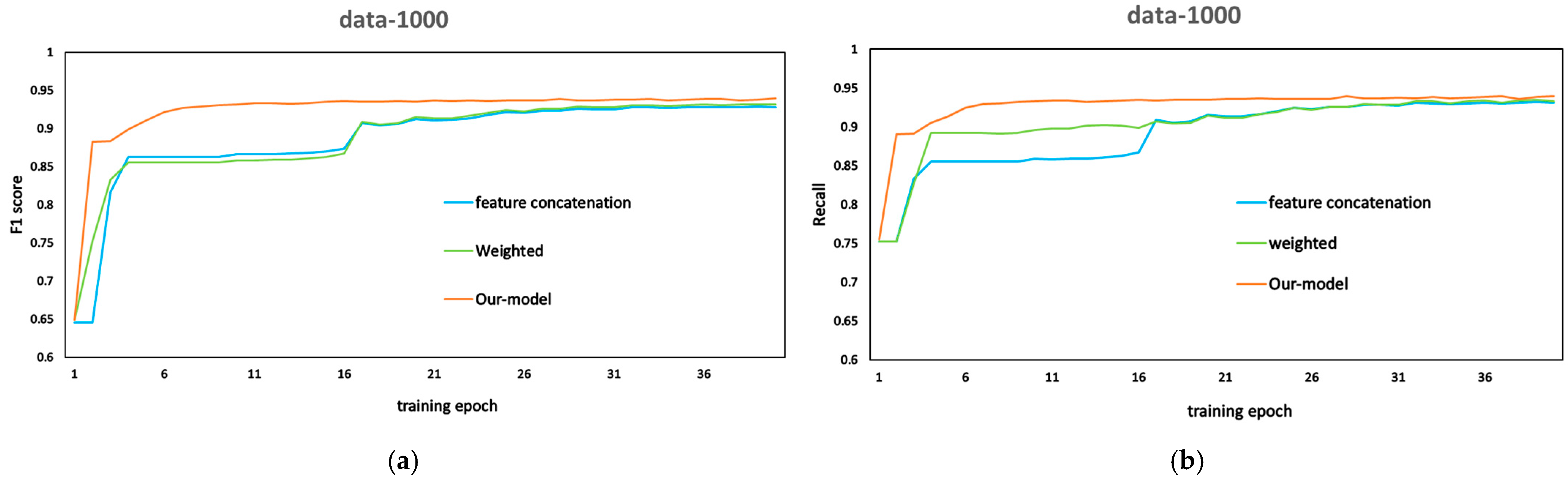

Figure 8, the proposed method shows a significant improvement in F1 performance compared to VulDeepecker, ASTWB, and LineVul. Within 1 to 6 training epochs, our model consistently outperforms the aforementioned single-representation models. This indicates that, during training, the proposed method is more efficient and converges faster than the single-representation models. Additionally, it is worth noting that the Recall value of the proposed method steadily increases, whereas the values of VulDeepecker and ASTWB fluctuate significantly as the number of training epochs increases, demonstrating that our method offers better stability.

In conclusion, compared with these models, the proposed method captures the semantic information of source code more fully and supports a deeper understanding of the code, which improves defect detection. These results indicate that the proposed method outperforms the existing methods evaluated here (RQ1).

5.2. RQ2: Does the Fusion Method Based on the Structural Attention Mechanism Designed in This Paper Have Superior Representation Capabilities Compared to Conventional Fusion Methods?

To answer this question, we compare the structural attention fusion against two conventional fusion methods: weighted fusion and feature concatenation.

- Weighted fusion combines information by assigning fixed or learned weights to different features. It ignores interactions and dependencies between features, which makes it overly simplistic.
- Feature concatenation directly joins the structural and semantic features of the code and preserves the original feature information. It does not model relationships between features and cannot adaptively adjust their contributions.
- Structural attention fusion adaptively assigns weights to different features. It captures the relationships between code structure and semantic features and amplifies important information, which gives it stronger expressive power.

The F1 and Recall results are shown in Table 5.
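The two conventional fusion methods can each be written in a few lines. Unlike structural attention, whose weights differ for every pair of semantic unit and structural node, both apply the same rule everywhere. These are minimal sketches under assumptions the text leaves open: weighted fusion applies a single scalar weight to two aligned vectors of equal length, and concatenation simply joins them. The helper names are hypothetical.

```python
def weighted_fusion(c, s, w=0.5):
    """Element-wise w * c + (1 - w) * s; one scalar weight, fixed or learned, for all positions."""
    return [w * ci + (1 - w) * si for ci, si in zip(c, s)]


def concat_fusion(c, s):
    """Keep both feature vectors intact by concatenating them."""
    return c + s


c = [1.0, 2.0]  # toy aligned semantic vector
s = [3.0, 4.0]  # toy aligned structural vector
print(weighted_fusion(c, s, w=0.25))  # [2.5, 3.5]
print(concat_fusion(c, s))            # [1.0, 2.0, 3.0, 4.0]
```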

According to Table 5 and the comparison in Figure 9, the F1 and Recall curves follow essentially the same trend with weighted fusion (Weight) and feature concatenation (Piecing). This indicates that the two simple fusion methods have similar effects. Even so, both improve somewhat on ASTWB and LineVul from RQ1, which further supports fusing semantic and structural information. Compared with both, our structural attention fusion leads in F1 and Recall throughout training. It essentially converges after about 10 epochs, whereas the other two converge only gradually, after about 26 epochs.

These results show that the proposed fusion approach has stronger representational ability. Fusing code structure and semantic features in this way captures the structural and semantic information of source code more fully, which improves model performance. By combining token-level and structure-level information through the structural attention mechanism, the model gains a fuller understanding of the code's internal features. The experimental results show the promise of this fusion strategy for code defect prediction. They also confirm that the structural attention fusion designed in this paper fuses features more effectively than the conventional alternatives (RQ2).

5.3. RQ3: Does This Model Maintain Stable Performance When Facing Both Class Imbalance and Diverse Code Defect Types?

To systematically evaluate the stability of the model in scenarios with highly imbalanced defect categories and diverse defect types, this study constructs a three-tier progressive experimental setup based on real-world vulnerability distribution characteristics (NIST statistics show that the top 5% of defect types account for over 65% of the samples).

First, the data-1000 dataset (25 high-frequency defect types, each with more than 1000 samples) is used to validate the model's baseline detection capability for critical security vulnerabilities. This scenario retains some imbalance. The main question is whether mainstream vulnerabilities dominate the model, causing it to neglect fine-grained features.

Next, the data-100 dataset (50 defect types, each with more than 100 samples) extends the evaluation to mid- and low-frequency defect types. The key focus is how much the model's generalization degrades as the number of defect categories grows, simulating the challenge of detecting long-tail vulnerabilities in real-world development.

Finally, the data-10 dataset (98 defect types, each with more than 10 samples) creates an extreme imbalance scenario. This step stress-tests the model's ability to detect rare vulnerabilities and reveals its robustness limits when samples are highly scarce and the distribution is severely skewed.

The imbalance ratios of the datasets are given in Table 2, and the results are shown in Table 6.

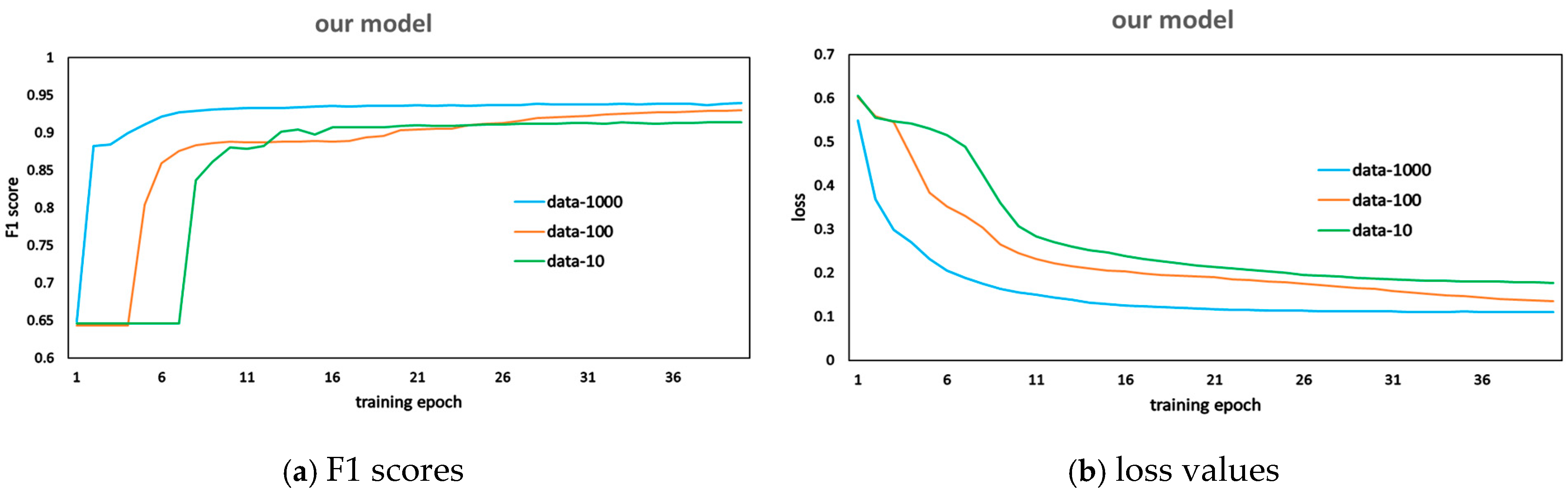

According to Table 6, the model's F1 score and Recall decrease slightly as data imbalance and the number of code defect types grow from data-1000 to data-10. They fall from 0.9396 and 0.9394 to 0.9181 and 0.9140, respectively, but remain above 0.9. The F1 and loss curves in Figure 10 show that the three F1 curves follow broadly the same trend: F1 rises rapidly early in training, then slows, and finally stabilizes with minor fluctuations. The three loss curves decline steadily during training, and the final loss is slightly higher when there are more defect types.

In summary, F1 and Recall decrease only slightly as the code defect types in open-source projects diversify, and both stay above 0.9, indicating good generalization. The consistent trends of the F1 and loss curves across the three datasets indicate that the model is robust. This supports the conclusion that the model performs stably and suits the current open-source development ecosystem, with its many code defects of complex types (RQ3).

6. Conclusions and Future Work

The proliferation of collaborative development paradigms and the continuous increase in software complexity have posed significant challenges for software defect prediction and quality assurance. Existing defect prediction methods suffer from insufficient code feature representation due to their failure to consider the interrelationship between code semantics and structure. To address this, we propose a code defect prediction approach based on a structural attention mechanism for fusing multimodal features. By designing this mechanism to compute attention scores between semantic and structural representation vectors, we establish matching relationships between semantic units and structural nodes. This enables the model to fully incorporate code organizational structure when processing semantic features, thereby achieving more accurate defect prediction.

Aligned with real-world vulnerability distribution patterns, we constructed a three-tiered progressive dataset (data-1000/data-100/data-10) based on the SARD benchmark. Experimental results demonstrate that our method outperforms traditional fusion strategies in both code representation capability and prediction performance.

However, this study has two main limitations. (1) Without cross-project validation on large-scale open-source projects, it remains unclear how well precise defect localization transfers across the open-source ecosystem. (2) Static analysis capabilities remain constrained by manually defined rules, which hinders efficient extension to multiple languages. Future work will establish cross-project benchmarks and use generative large models to automate rule construction, advancing toward a highly adaptable, broad-coverage intelligent defect prediction framework.