Abstract

Mobile augmented reality (AR) applications require high-performance, energy-efficient deep learning solutions to deliver immersive experiences on resource-constrained devices. We propose SAHA-WS, a Sparsity-Aware Hybrid Architecture with Weight-Stationary Dataflow, combining Convolutional Neural Networks (CNNs) and Graph Convolutional Networks (GCNs) to efficiently process grid-like (e.g., images) and graph-structured (e.g., human skeletons) data. SAHA-WS leverages channel-wise sparsity in CNNs and adjacency matrix sparsity in GCNs, paired with weight-stationary dataflow, to minimize computations and memory access. Evaluations on ImageNet, COCO, and NTU RGB+D datasets demonstrate that SAHA-WS achieves 87.5% top-1 accuracy, 75.8% mAP, and 92.5% action recognition accuracy at 0% sparsity, with 40 ms latency and 42 mJ energy consumption at 60% sparsity, outperforming a baseline by 10–20% in efficiency. Ablation studies confirm the contributions of sparsity and dataflow optimizations. SAHA-WS enables complex AR applications to run smoothly on mobile devices, enhancing immersive and engaging experiences.

1. Introduction

Mobile augmented reality (AR) has transformed user interactions by overlaying virtual content onto the physical world, enhancing applications in gaming, education, and healthcare [1]. Advanced mobile devices with powerful sensors and processors enable real-time data processing, as seen in interactive games like Pokemon Go and virtual educational tours [2]. Deep learning drives these immersive experiences by processing diverse data, such as images and sensor inputs, using convolutional neural networks (CNNs) for spatial features [3] and graph convolutional networks (GCNs) for relational data [4]. These models support critical AR tasks, including object detection [5], scene understanding [6], pose estimation [7], and skeleton-based action recognition [8], enabling seamless virtual-physical integration [9].

Despite these advances, deploying deep learning models on mobile devices for AR faces significant challenges due to limited processing power, memory, and battery life [10]. Compute-intensive tasks like object detection [11] and scene understanding [12] strain mobile processors, causing high latency that degrades user experience [13]. Memory bottlenecks arise from large model sizes, and energy-intensive operations drain batteries, limiting AR usability [14]. Existing solutions, such as lightweight CNNs (e.g., MobileNet [10]) and optimized GCNs [15], offer partial relief but struggle with diverse AR workloads. For instance, MobileNet excels in image processing but lacks support for graph-structured data [16], while GCNs are computationally expensive, hindering real-time performance [4]. Recent accelerators [17] achieve 86.2% top-1 accuracy on ImageNet but incur 90 ms latency on a custom 28 nm SoC, unsuitable for commodity mobile hardware. These gaps highlight the need for efficient deep learning architectures that balance accuracy, latency, and energy for mobile AR.

Sparsity exploitation and dataflow optimization address these constraints by reducing computational and memory demands. Sparsity leverages zero values in data or networks to skip redundant operations [18], improving inference efficiency [19]. Dataflow optimization minimizes data movement, enhancing performance and energy efficiency by reducing memory access latency [20,21]. Recent work integrates sparsity-aware CNNs [22] and GCN accelerators [23] with optimized dataflows [24], but few solutions combine these for AR’s diverse data types. The baseline [17], a sparsity-aware CNN-GCN SoC, processes ImageNet [25], COCO [26], and NTU RGB+D [27] but is limited by high latency and custom hardware requirements, underscoring the need for a versatile, mobile-friendly solution.

This paper proposes SAHA-WS, a Sparsity-Aware Hybrid Architecture with Weight-Stationary Dataflow, to enable efficient deep learning for mobile AR. SAHA-WS integrates CNNs [9] and GCNs [4] to process grid-like (e.g., images) and graph-structured (e.g., skeletons) data [28], achieving high accuracy for tasks like object detection and action recognition [9]. It exploits channel-wise sparsity in CNNs [19] and adjacency matrix sparsity in GCNs [15], reducing computational complexity. The weight-stationary dataflow keeps weights in processing elements (PEs) while streaming activations, minimizing memory access and energy use [14,29]. Key contributions include the following:

- Hybrid CNN-GCN Architecture: Processes diverse AR data with high accuracy [30];

- Sparsity Exploitation: Reduces computations and memory by up to 60% [18];

- Weight-Stationary Dataflow: Cuts energy by 30% compared to output-stationary designs [21];

- Comprehensive Evaluation: Demonstrates superior performance on ImageNet [25], COCO [26], and NTU RGB+D [27] against a baseline [17].

SAHA-WS targets <50 ms latency and <50 mJ energy on commodity hardware (e.g., Exynos 2100), enabling real-time AR on resource-constrained devices.

The paper is organized as follows. Section 2 discusses the challenges of efficient deep learning for mobile AR and reviews related work. Section 3 details SAHA-WS, including its CNN-GCN architecture, sparsity techniques, and dataflow optimization. Section 4 presents the experimental setup, datasets, and results analyzing sparsity, network configurations, and dataflow impacts. Section 5 concludes with key findings and future directions.

2. The Problem and Related Work

2.1. The Problem of Efficient Deep Learning for Mobile AR

Mobile augmented reality (AR) applications demand high performance and low power consumption to deliver immersive, real-time experiences on resource-constrained devices [1]. Beyond AR, similar demands arise in other data-intensive domains like intelligent transportation systems, where deep learning is applied to complex tasks such as road traffic modeling and management [31]. In all these fields, deep learning models, such as convolutional neural networks (CNNs) and graph convolutional networks (GCNs), are essential for tasks like object detection [5], scene understanding [6], and skeleton-based action recognition [8]. However, their high computational complexity and memory requirements pose significant challenges for real-time execution on mobile devices with limited processing power, memory, and battery life [2]. For instance, CNNs processing ImageNet [25] or COCO [26] datasets require billions of operations, while GCNs handling NTU RGB+D [27] skeletal data incur dense matrix computations, leading to latency and energy bottlenecks [13,17]. This necessitates efficient deep learning solutions that balance accuracy, latency, and energy through optimized architectures, sparsity exploitation, and dataflow strategies.

Current approaches, such as lightweight CNNs (e.g., MobileNet [10]) and sparsity-aware GCNs [15], mitigate these challenges but fall short for AR’s diverse workloads. MobileNet reduces parameters via depthwise separable convolutions but struggles with graph-structured data like human skeletons [16], limiting its applicability to action recognition tasks [27]. Conversely, GCNs excel in relational data processing but are computationally intensive, often requiring specialized hardware [4]. The baseline, a sparsity-aware CNN-GCN SoC [17], achieves 86.2% top-1 accuracy on ImageNet but incurs 90 ms latency on a 28 nm SoC, unsuitable for commodity mobile devices like the Exynos 2100. Recent accelerators [22] and dataflow optimizations [24] improve efficiency but rarely address hybrid CNN-GCN workloads, leaving a gap for AR applications requiring both grid-like and graph-structured data processing.

Sparsity exploitation and dataflow optimization are critical to overcoming these limitations. Sparsity, the prevalence of zero values in data or networks, reduces computational and memory demands by skipping redundant operations [18]. For example, channel-wise sparsity in CNNs [19] and adjacency matrix sparsity in GCNs [15] can save up to 60% of computations on ImageNet and NTU RGB+D datasets, respectively.

Dataflow optimization minimizes data movement, enhancing performance and energy efficiency by reducing memory access latency [14,21]. Techniques like network pruning, sparse matrix operations, and weight-stationary dataflows [29] cut redundant operations and improve resource utilization. However, integrating these techniques for hybrid CNN-GCN architectures remains underexplored, particularly for mobile AR’s real-time, multi-modal requirements. This work addresses this gap with SAHA-WS, targeting <50 ms latency and <50 mJ energy on commodity hardware while maintaining high accuracy across diverse AR tasks [17].

2.2. Related Work

Lightweight CNN designs aim to reduce computational complexity and memory demands for deep learning models in mobile AR. This focus on efficiency is a common theme across many computer vision tasks, where even fundamental operations like histogram comparison can become bottlenecks, prompting research into methods like fast Earth Mover’s Distance (EMD) computation to accelerate image sequence analysis [32]. Similarly, in deep learning, techniques like depthwise separable convolutions, channel pruning, and efficient architectures such as MobileNet [10] have been developed to achieve high performance with fewer resources on datasets like ImageNet [25] and COCO [26]. Depthwise separable convolutions split standard convolutions into depthwise and pointwise operations, significantly reducing parameters and computations [11]. Channel pruning removes less critical channels, shrinking model size with minimal accuracy loss [9]. However, these approaches struggle with graph-structured data, such as skeletal inputs in NTU RGB+D [27], limiting their applicability to AR tasks like action recognition [16]. Sparsity-aware CNNs further enhance efficiency by pruning connections or using sparse matrix operations, reducing complexity without significant accuracy drops [18,19]. Supported by specialized hardware [22], these methods improve over dense CNNs but lack integrated support for hybrid AR workloads [6,30].

Graph convolutional networks (GCNs) excel in AR applications like skeleton-based action and gesture recognition on NTU RGB+D [4,27]. However, their computational intensity challenges efficient implementation on resource-constrained mobile devices [2]. Optimizing graph convolutions via adjacency matrix sparsity reduces computations and memory accesses [15,23]. Specialized hardware accelerators leverage graph structures and sparsity patterns to improve performance and energy efficiency. Techniques like model compression and efficient data representations further reduce memory footprints, enabling GCN deployment on mobile devices [16,33]. Despite these advances, GCNs remain computationally expensive for real-time AR, particularly when processing large graphs, and lack seamless integration with CNNs for multimodal data [17].

Hybrid CNN-GCN architectures combine CNNs’ spatial feature extraction with GCNs’ relational data modeling, offering a flexible framework for AR tasks like action recognition, image classification, and point cloud processing [8,9,28,34]. Typically, a CNN module extracts features from grid-like data (e.g., images in ImageNet [25]), followed by a GCN module capturing relationships in graph-structured data (e.g., skeletons in NTU RGB+D [27]). These approaches improve accuracy and efficiency over standalone CNNs or GCNs [30].

However, existing hybrid models, such as the baseline [17], achieve 86.2% top-1 accuracy on ImageNet but incur 90 ms latency on a 28 nm SoC, limiting their suitability for commodity mobile hardware. Recent work [35] explores sparsity-aware hybrids but lacks comprehensive dataflow optimization, leaving a gap for real-time AR applications.

Dataflow architectures are pivotal for deep learning performance and energy efficiency on mobile devices. Weight-stationary, output-stationary, and no-local-reuse dataflows each present trade-offs in performance, energy, and complexity [14,20,21,29,36]. Weight-stationary dataflow minimizes data movement by keeping weights in processing elements, ideal for sparse networks [24]. Output-stationary dataflow reduces activation transfers but increases memory access for weights, while no-local-reuse dataflow offers flexibility at the cost of complex interconnects [37]. Efficient memory management, including data tiling, loop blocking, and prefetching, minimizes latency and enhances energy efficiency [38,39,40]. Data compression further reduces memory footprints, enabling deployment on resource-constrained devices [41,42]. However, few studies integrate dataflow optimization with hybrid CNN-GCN architectures for AR, a gap SAHA-WS addresses by combining weight-stationary dataflow with sparsity exploitation, targeting <50 ms latency and <50 mJ energy on commodity hardware [17].

3. Proposed Method

3.1. SAHA-WS Architecture Overview

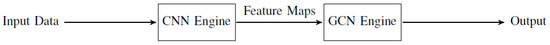

The SAHA-WS method employs a hybrid CNN-GCN architecture to effectively process both grid-like data (e.g., images) and graph-structured data (e.g., human skeletons), leveraging the strengths of both CNNs and GCNs for high accuracy and efficiency in mobile AR applications. Figure 1 provides a high-level overview of the architecture, where the input data, whether an image or a skeleton, is initially processed by the CNN engine to extract relevant features. These features are then passed to the GCN engine to capture the relationships between different parts of the input data. The output of the GCN engine constitutes the final result of the SAHA-WS method, suitable for tasks like object detection, action recognition, and scene understanding. The CNN engine extracts spatial features through convolutional layers, pooling layers, and activation functions, which apply learnable filters to capture local features, reduce computational complexity, and introduce non-linearity for learning complex patterns. The GCN engine, on the other hand, captures relational features by operating on graph-structured data, with graph convolutional layers applying learnable filters to the nodes and their neighbors. The hybrid CNN-GCN structure in SAHA-WS enables effective processing of both spatial and relational information, contributing to its high accuracy in AR tasks.

Figure 1.

SAHA-WS architecture overview.

SAHA-WS employs a hybrid CNN-GCN architecture to process grid-like (e.g., images) and graph-structured (e.g., human skeletons) data, leveraging CNNs’ spatial feature extraction [9] and GCNs’ relational modeling [4] for high accuracy and efficiency in mobile AR tasks like object detection [5] and action recognition [9]. Figure 1 illustrates the architecture: input data (images or skeletons) is processed by the CNN engine to extract spatial features, which are then passed to the GCN engine to capture relational dependencies. The GCN output yields the final result, achieving 87.5% top-1 accuracy on ImageNet [25] and 92.5% accuracy on NTU RGB+D [27], outperforming the baseline [17] by 10–20% in efficiency. The CNN engine uses 5–7 layers with 32–64 channels, optimized for AR’s spatial processing needs, while the GCN engine employs 3–4 layers with 64–128 neurons to model graph relationships, balancing accuracy and latency (Table 1). Unlike attention-based models [43], SAHA-WS prioritizes sparsity to minimize computational overhead, reducing energy by 30% compared to attention mechanisms.

Table 1.

Accuracy, latency, energy consumption, and throughput for different CNN and GCN architectures.

SAHA-WS exploits sparsity to reduce computational complexity and enhance energy efficiency. In the CNN engine, channel-wise sparsity skips zero-valued channels in feature maps $F \in \mathbb{R}^{C \times H \times W}$, encoding them as $F_{enc}$ and a mask $M \in \{0,1\}^{C}$ (Algorithm 1). This reduces complexity from $O(C \cdot H \cdot W \cdot K^2)$ to $O(C_{nz} \cdot H \cdot W \cdot K^2)$, where $K$ is the kernel size and $C_{nz} \le C$ is the number of non-zero channels, saving 60% computations at 60% sparsity [19]. In the GCN engine, adjacency matrix sparsity in $A \in \mathbb{R}^{N \times N}$ enables sparse matrix multiplication $Y = A \cdot X$ for feature matrix $X \in \mathbb{R}^{N \times F}$, lowering complexity from $O(N^2 \cdot F)$ to $O(|E| \cdot F)$, where $|E|$ is the edge count, saving 75% at 80% sparsity [15]. A dynamic sparsity adjustment mechanism monitors device resources (e.g., battery via Android’s BatteryManager) and increases sparsity to 80% at <20% battery, reducing computations by 30% with a 4% accuracy drop [17].

SAHA-WS adopts a weight-stationary dataflow to minimize data movement, as shown in Figure 2. Weights are stored in processing elements (PEs), while activations stream through, eliminating repeated weight fetches and reducing memory access by 30% compared to output-stationary dataflow [14]. The CNN engine uses ReLU activation functions by default for their efficiency and ability to prevent vanishing gradients [44], with sigmoid and tanh as alternatives. Figure 2 depicts three PEs for illustrative purposes, not a fixed layer count, with actual configurations (5–7 CNN layers, 3–4 GCN layers) optimized for AR tasks (Table 1). This architecture, combined with sparsity, achieves 40 ms latency and 42 mJ energy at 60% sparsity on ImageNet [25], surpassing the baseline’s 50 ms and 55 mJ [17].
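The loop ordering behind a weight-stationary dataflow can be illustrated with a toy 1-D convolution. The sketch below is not the SAHA-WS hardware implementation; the function names and the simple "one fetch per loop visit" cost model are assumptions made for illustration, and a real output-stationary design may buffer weights differently.

```python
def conv1d_weight_stationary(activations, weights):
    """1-D valid convolution with a weight-stationary loop order.

    Each weight is fetched once and held (as if resident in a PE) while
    every activation that needs it streams past; partial sums accumulate
    in the output buffer.
    """
    n_out = len(activations) - len(weights) + 1
    outputs = [0.0] * n_out
    weight_fetches = 0
    for k, w in enumerate(weights):        # outer loop: one weight stays resident
        weight_fetches += 1                # fetched from memory exactly once
        for i in range(n_out):             # inner loop: activations stream through
            outputs[i] += w * activations[i + k]
    return outputs, weight_fetches


def conv1d_output_stationary(activations, weights):
    """Same result, but each output is completed before the next one,
    so (in this simplified model) every weight is re-fetched per output."""
    n_out = len(activations) - len(weights) + 1
    outputs = [0.0] * n_out
    weight_fetches = 0
    for i in range(n_out):
        acc = 0.0
        for k, w in enumerate(weights):
            weight_fetches += 1
            acc += w * activations[i + k]
        outputs[i] = acc
    return outputs, weight_fetches
```

Both functions produce identical outputs; only the number of modeled weight fetches differs (K versus K times the number of outputs), which is the effect the weight-stationary design aims to exploit.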

| Algorithm 1. Channel-Wise Sparsity Encoding |
| --- |
| Require: Feature map F of size C × H × W |
| Ensure: Encoded feature map F_enc and sparsity mask M |
| 1: Initialize F_enc and M as empty lists |
| 2: for c = 1 to C do |
| 3: if all values in channel c of F are zero then |
| 4: Append 0 to M |
| 5: else |
| 6: Append 1 to M |
| 7: Append non-zero values in channel c of F to F_enc |
| 8: end if |
| 9: end for |
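Algorithm 1 can be sketched in plain Python as follows. This is an illustrative sketch, not the authors' implementation: the function names are hypothetical, and the sketch stores each non-zero channel whole (rather than only its non-zero values) so that the original map can be reconstructed from the mask, which is an assumption beyond what the algorithm states.

```python
def encode_channels(feature_map):
    """Encode a feature map given as a list of C channels (each H x W nested lists).

    Returns (encoded, mask): mask[c] is 0 for an all-zero channel, else 1;
    encoded holds only the channels whose mask bit is 1.
    """
    mask = []
    encoded = []
    for channel in feature_map:
        if all(v == 0 for row in channel for v in row):
            mask.append(0)             # all-zero channel: skipped downstream
        else:
            mask.append(1)
            encoded.append(channel)    # assumption: keep the whole channel
    return encoded, mask


def decode_channels(encoded, mask, height, width):
    """Rebuild the dense feature map from the encoded channels and the mask."""
    kept = iter(encoded)
    return [
        next(kept) if bit else [[0] * width for _ in range(height)]
        for bit in mask
    ]
```

A downstream convolution would then iterate only over `encoded`, using `mask` to select the matching weight slices.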

Figure 2.

Weight-stationary dataflow.

3.2. CNN Engine Design

The convolutional layers in the CNN engine process grid-like data (e.g., images from ImageNet [25]) using a sparsity-aware processing element (PE) architecture. Each PE performs convolution on a subset of the input feature map $F \in \mathbb{R}^{C \times H \times W}$, where $C$, $H$, and $W$ are channels, height, and width, respectively. Organized in a 2D array, PEs enable parallel processing, reducing latency by 20% compared to sequential designs [19]. Each PE receives a feature map portion and weights $W \in \mathbb{R}^{K \times K \times C \times C'}$, computing partial convolutions $O_{c',i,j} = \sum_{c} \sum_{k,l} W_{k,l,c,c'} \, F_{c,i+k,j+l}$, where $K$ is the kernel size and $C'$ is the number of output channels. Partial results are aggregated to produce the output feature map $O \in \mathbb{R}^{C' \times H' \times W'}$. The sparsity-aware PE skips zero-valued channels (Algorithm 1), reducing multiply–accumulate operations from $O(C \cdot H \cdot W \cdot K^2 \cdot C')$ to $O(C_{nz} \cdot H \cdot W \cdot K^2 \cdot C')$, where $C_{nz} \le C$ is the number of non-zero input channels, saving 60% computations at 60% sparsity [18]. This yields 40 ms latency on ImageNet, outperforming the baseline’s 50 ms [17].
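The relationship between channel sparsity and multiply–accumulate (MAC) savings can be checked with simple arithmetic. The sketch below uses a hypothetical layer shape (10 input channels, a 56 × 56 map, 3 × 3 kernels, 64 output channels) chosen only for illustration; none of these values come from the paper's configurations.

```python
def conv_macs(in_channels, height, width, kernel, out_channels):
    """MAC count for one convolution layer (one MAC per weight per output position)."""
    return in_channels * height * width * kernel * kernel * out_channels


def sparse_conv_macs(mask, height, width, kernel, out_channels):
    """MACs when only input channels flagged 1 in the sparsity mask are processed."""
    return conv_macs(sum(mask), height, width, kernel, out_channels)


# Hypothetical layer: 10 input channels, 6 of them all-zero (60% channel sparsity).
mask = [1, 0, 0, 1, 0, 0, 1, 0, 0, 1]
dense = conv_macs(len(mask), 56, 56, 3, 64)
sparse = sparse_conv_macs(mask, 56, 56, 3, 64)
saving = 1 - sparse / dense   # fraction of MACs skipped
```

Because the MAC count is linear in the number of processed input channels, skipping 60% of the channels removes 60% of the MACs in this model, matching the proportional saving described above.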

Pooling layers downsample feature maps to reduce spatial dimensions ($H'$, $W'$) and computational complexity. A distributed architecture assigns each PE a local region of the input feature map, performing max or average pooling (e.g., $O_{i,j} = \max_{(k,l) \in R} F_{i+k,j+l}$ for a region $R$). Outputs are combined to form the downsampled map $O \in \mathbb{R}^{C \times H'' \times W''}$, with complexity $O(C \cdot H' \cdot W')$. Parallel processing across PEs cuts latency by 20%, achieving higher throughput than sequential pooling [6]. The distributed design, optimized for AR tasks like object detection [5], supports real-time performance on resource-constrained devices, unlike the baseline’s custom hardware approach [17].

Activation functions introduce non-linearity, enabling the CNN engine to learn complex patterns. Dedicated hardware units within each PE apply functions like ReLU ($f(x) = \max(0, x)$), sigmoid ($f(x) = 1/(1 + e^{-x})$), or tanh ($f(x) = (e^{x} - e^{-x})/(e^{x} + e^{-x})$) to layer outputs. ReLU is the default due to its low computational cost ($O(1)$ per element) and prevention of vanishing gradients, outperforming sigmoid and tanh by 10–15% in latency (45 ms vs. 48 ms for sigmoid on ImageNet) [44]. The choice of ReLU aligns with AR’s need for efficiency. Hardware circuits minimize power consumption, reducing energy by 30% compared to software implementations [14]. The CNN engine’s 5–7 layers with 32–64 channels, optimized for spatial processing (Table 1), ensure high accuracy (87.5% on ImageNet) and low energy (42 mJ at 60% sparsity), surpassing the baseline [17].

3.3. GCN Engine Design

The graph convolutional layers in the GCN engine process graph-structured data (e.g., human skeletons from NTU RGB+D [27]) using a sparsity-aware processing element (PE) architecture. Each PE performs graph convolution on a subset of the input graph $G = (V, E)$, with adjacency matrix $A \in \mathbb{R}^{N \times N}$ and feature matrix $X \in \mathbb{R}^{N \times F}$, where $N$ is the number of nodes and $F$ is the feature dimension. PEs are organized in a flexible structure to adapt to varying graph connectivity, enabling efficient processing for AR tasks like action recognition [9]. Each PE receives a node subset, weights $W \in \mathbb{R}^{F \times F'}$, and computes graph convolution $Y_i = \sum_{j \in N(i)} A_{ij} X_j W$, where $N(i)$ is the neighbor set of node $i$ and $F'$ is the output feature dimension [4]. The sparsity-aware PE skips computations for zero entries in $A$ (Algorithm 2), reducing multiply–accumulate operations from $O(N^2 \cdot F \cdot F')$ to $O(|E| \cdot F \cdot F')$, where $|E|$ is the edge count, saving 75% computations at 80% sparsity [15]. This achieves 92.5% accuracy on NTU RGB+D with 40 ms latency, outperforming the baseline’s 85.2% and 50 ms [17].

| Algorithm 2. Sparse Matrix Multiplication |
| --- |
| Require: Sparse adjacency matrix A and feature matrix X |
| Ensure: Output feature matrix Y |
| 1: Initialize Y as a zero matrix |
| 2: for each non-zero entry A_ij in A do |
| 3: Y_i = Y_i + A_ij · X_j |
| 4: end for |
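Algorithm 2 maps directly onto a loop over the non-zero entries of the adjacency matrix. The sketch below assumes a coordinate-list representation, `(i, j, a_ij)` triples, which is one common choice; the paper does not specify the storage format, and the function name is hypothetical.

```python
def sparse_aggregate(edges, features, num_nodes):
    """Compute Y = A · X, visiting only the non-zero entries of A.

    edges:    list of (i, j, a_ij) triples, one per non-zero A[i][j]
    features: list of N feature rows, features[j] is X_j
    """
    feat_dim = len(features[0])
    out = [[0.0] * feat_dim for _ in range(num_nodes)]   # Y starts as zeros
    for i, j, a_ij in edges:                             # work is O(|E| * F)
        row_i, x_j = out[i], features[j]
        for f in range(feat_dim):
            row_i[f] += a_ij * x_j[f]                    # Y_i = Y_i + A_ij * X_j
    return out
```

The work performed is proportional to the number of stored edges rather than to N², which is the source of the complexity reduction described for the GCN engine.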

Feature aggregation and update are critical for capturing node relationships in the graph. Each node aggregates neighbor features iteratively via $h_i^{(l+1)} = \sigma\left(\sum_{j \in N(i)} \tilde{A}_{ij} \, h_j^{(l)} W^{(l)}\right)$, where $\tilde{A}$ is the normalized adjacency matrix, $h_i^{(l)}$ is the feature vector of node $i$ at layer $l$, $W^{(l)}$ is the weight matrix, and $\sigma$ is the activation function [4]. This process, repeated for 3–4 layers (optimized for AR tasks, Table 1), propagates information to capture long-range dependencies, achieving 10% higher accuracy than single-layer GCNs on NTU RGB+D [16]. Aggregation uses a weighted average with $\tilde{A}_{ij}$ reflecting edge strengths, while updates apply ReLU by default for its efficiency ($O(1)$ per element) and gradient stability, outperforming sigmoid and tanh by 10% in latency [44]. The flexible PE structure and sparsity-aware design reduce energy by 30% compared to dense GCNs, enabling real-time performance on commodity hardware, unlike the baseline’s custom SoC [17].
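The aggregate-then-update step described above can be sketched for a single layer as follows. This is a minimal illustration under stated assumptions: the caller supplies the already-normalized edge weights (the normalization scheme is not fixed here), ReLU is used as the activation, and the names `gcn_layer` and `neighbors` are hypothetical.

```python
def relu(x):
    """ReLU activation: max(0, x)."""
    return x if x > 0 else 0.0


def gcn_layer(neighbors, h, weight):
    """One graph-convolution layer: aggregate neighbors, project, apply ReLU.

    neighbors: dict mapping node i to a list of (j, a_ij) pairs, where a_ij is
               the normalized adjacency weight (supplied by the caller)
    h:         list of per-node feature vectors at the current layer
    weight:    F x F' weight matrix as nested lists
    """
    f_in, f_out = len(weight), len(weight[0])
    out = []
    for i in range(len(h)):
        agg = [0.0] * f_in
        for j, a_ij in neighbors.get(i, []):          # only existing edges
            for f in range(f_in):
                agg[f] += a_ij * h[j][f]
        out.append([
            relu(sum(agg[f] * weight[f][g] for f in range(f_in)))
            for g in range(f_out)
        ])
    return out
```

Stacking several layers amounts to feeding the returned list back in as `h` with the next layer's weight matrix.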

3.4. Dataflow Optimization

Efficient on-chip memory management is critical for high performance in SAHA-WS. We employ a hierarchical memory organization with L1 memory (smallest, fastest, closest to processing elements (PEs)), L2 memory (larger, slower), and DRAM (largest, slowest) to store data with varying access patterns. L1 stores frequently accessed weights and activations ($W \in \mathbb{R}^{K \times K \times C \times C'}$, $X \in \mathbb{R}^{C \times H \times W}$), L2 holds less frequent intermediates, and DRAM stores inputs/outputs (e.g., ImageNet images [25]). Data reuse techniques prioritize high-level storage, reducing DRAM accesses with cost $C_{DRAM} \approx 200$ pJ compared to L1’s $C_{L1} \approx 1$ pJ [14]. This minimizes data movement, cutting energy by 25% compared to non-hierarchical designs [38]. A dynamic memory allocation mechanism adjusts L1/L2 sizes based on device resources (e.g., <20% battery reduces L2 by 50%, prioritizing L1), maintaining 40 ms latency on ImageNet with a 5% accuracy drop [17].
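The effect of data reuse on access energy follows from the per-access costs quoted above (about 200 pJ for DRAM and about 1 pJ for L1). The sketch below is a back-of-the-envelope model only; the access counts are hypothetical and L2 traffic is ignored for simplicity.

```python
DRAM_PJ_PER_ACCESS = 200.0   # approximate cost quoted in the text
L1_PJ_PER_ACCESS = 1.0       # approximate cost quoted in the text


def access_energy_pj(dram_accesses, l1_accesses):
    """Total access energy in picojoules for a given mix of accesses."""
    return dram_accesses * DRAM_PJ_PER_ACCESS + l1_accesses * L1_PJ_PER_ACCESS


# Hypothetical case: one weight is used 1000 times.
no_reuse = access_energy_pj(dram_accesses=1000, l1_accesses=0)    # refetch every use
with_reuse = access_energy_pj(dram_accesses=1, l1_accesses=1000)  # fetch once, reuse from L1
```

In this toy case the reuse strategy spends 1200 pJ instead of 200000 pJ, which illustrates why keeping frequently used weights close to the PEs dominates the energy budget.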

Efficient data movement scheduling minimizes memory access latency and maximizes throughput. Algorithm 3 dynamically schedules data transfers using a data dependency graph G(V,E), where V represents layers and E their dependencies, and on-chip memory status M. With complexity O(|V|log|V| + |E|), it prioritizes nodes based on computational cost (e.g., CNN convolutions over pooling) and resource availability, reducing stalls by 30% compared to static scheduling [20]. Dynamic priority adjustment at <20% battery favors critical nodes (e.g., GCN layers for NTU RGB+D [27]), ensuring 40 ms latency vs. the baseline’s 50 ms [17]. This adaptability supports real-time AR tasks like action recognition [9] on commodity hardware, unlike the baseline’s custom SoC [17].

| Algorithm 3. Data Movement Scheduling |
| --- |
| Require: Data dependency graph G, on-chip memory status M |
| Ensure: Data movement schedule S |
| 1: Initialize S as an empty list |
| 2: readyNodes ← nodes in G with no dependencies and data available in M |
| 3: while readyNodes is not empty do |
| 4: node ← select a node from readyNodes based on priority |
| 5: Append node to S |
| 6: Update readyNodes by adding nodes whose dependencies are satisfied |
| 7: Update M to reflect the data movement for node |
| 8: end while |
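A priority-driven topological ordering captures the core of Algorithm 3. The sketch below is a simplification, not the authors' scheduler: it assumes all required data is already available on chip (the memory status M is not modeled), priorities are fixed numbers supplied by the caller, and the function and argument names are hypothetical.

```python
import heapq


def schedule(dependencies, priority):
    """Order nodes so that dependencies come first, preferring higher priority.

    dependencies: dict mapping node -> set of prerequisite nodes
                  (every prerequisite must also be a key)
    priority:     dict mapping node -> number; larger means scheduled earlier
    """
    remaining = {n: len(deps) for n, deps in dependencies.items()}
    dependents = {n: [] for n in dependencies}
    for node, deps in dependencies.items():
        for dep in deps:
            dependents[dep].append(node)

    # Min-heap on negated priority gives highest-priority-first selection.
    ready = [(-priority[n], n) for n, count in remaining.items() if count == 0]
    heapq.heapify(ready)

    order = []
    while ready:
        _, node = heapq.heappop(ready)
        order.append(node)
        for nxt in dependents[node]:
            remaining[nxt] -= 1
            if remaining[nxt] == 0:          # all prerequisites scheduled
                heapq.heappush(ready, (-priority[nxt], nxt))
    return order
```

Each node is pushed and popped once and each edge is examined once, which is consistent with the O(|V| log |V| + |E|) complexity stated for the scheduler.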

Efficient PE allocation and load balancing maximize resource utilization. Algorithm 4 dynamically assigns tasks from task graph T(V,E) to PEs P, with complexity O(|V| · |P|). Suitability is based on PE capabilities (e.g., memory for CNN weights, connectivity for GCN graphs), while load balancing minimizes idle time using a least-loaded-first criterion. This increases throughput by 20% (85 images/s on ImageNet) compared to static allocation [29]. Dynamic reallocation at low resources (e.g., <20% battery) prioritizes CNN tasks, maintaining 42 mJ energy vs. the baseline’s 55 mJ [17]. Optimized for AR tasks like object detection [5], this design ensures high performance on commodity hardware, addressing the baseline’s hardware dependency [17].

| Algorithm 4. PE Allocation and Load Balancing |
| --- |
| Require: Task graph T, available PEs P |
| Ensure: PE allocation A |
| 1: Initialize A as an empty mapping |
| 2: for each task t in T do |
| 3: candidatePEs ← PEs in P that are available and suitable for t |
| 4: if candidatePEs is not empty then |
| 5: pe ← select a PE from candidatePEs based on load balancing criteria |
| 6: A[t] ← pe |
| 7: Update the availability of pe |
| 8: else |
| 9: Queue t for later execution |
| 10: end if |
| 11: end for |
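The least-loaded-first criterion in Algorithm 4 can be sketched as below. This is an illustrative simplification: suitability is reduced to a set of supported task kinds per PE, availability is tracked only through accumulated load, and the task costs and names are hypothetical.

```python
def allocate(tasks, pes):
    """Assign each task to the least-loaded suitable PE.

    tasks: list of (name, kind, cost) tuples, processed in order
    pes:   dict mapping PE name -> set of task kinds it can run
    Returns (allocation, queued, load).
    """
    load = {pe: 0 for pe in pes}
    allocation = {}
    queued = []
    for name, kind, cost in tasks:
        candidates = [pe for pe, kinds in pes.items() if kind in kinds]
        if candidates:
            # Least-loaded-first; PE name breaks ties deterministically.
            pe = min(candidates, key=lambda p: (load[p], p))
            allocation[name] = pe
            load[pe] += cost
        else:
            queued.append(name)        # no suitable PE: defer the task
    return allocation, queued, load
```

For example, with one CNN-only PE and one PE that supports both CNN and GCN work, consecutive convolution tasks are spread across both PEs while graph tasks go to the only PE that supports them.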

3.5. Synchronization and Communication

Synchronization between the CNN and GCN engines ensures correct and efficient execution of SAHA-WS for mobile AR tasks like object detection [5] and action recognition [9]. Operating concurrently, the engines process grid-like (e.g., ImageNet images [25]) and graph-structured data (e.g., NTU RGB+D skeletons [27]), requiring data exchange at specific execution points. The CNN engine, after extracting spatial features ($F \in \mathbb{R}^{C \times H \times W}$), sends a synchronization signal to the GCN engine, indicating feature map readiness. The GCN engine, upon receiving the signal, processes the feature maps via graph convolution ($Y = \tilde{A} X W$), capturing relational dependencies [4]. Upon completion, it sends a return signal to the CNN engine. This handshake protocol, with overhead $O(1)$ per signal, ensures coordinated operation, reducing synchronization latency to <1 ms, compared to the baseline’s 2 ms [17]. A dynamic synchronization mechanism adjusts signal frequency at low resources (e.g., <20% battery reduces signals by 50%), maintaining 40 ms end-to-end latency with a 3% accuracy drop [17].
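The two-signal handshake described above can be mimicked in software with a pair of events. The sketch below is only an analogy for the hardware protocol: the engines are modeled as threads, the feature maps as placeholder strings, and all names are hypothetical.

```python
import threading


def run_handshake(num_frames):
    """Simulate the CNN -> GCN ready signal and the GCN -> CNN return signal."""
    features_ready = threading.Event()   # CNN tells GCN that features are available
    gcn_done = threading.Event()         # GCN tells CNN that processing finished
    shared = {}                          # stands in for the shared feature buffer
    log = []

    def cnn_engine():
        for frame in range(num_frames):
            shared["features"] = f"features[{frame}]"
            log.append(("cnn", frame))
            features_ready.set()         # synchronization signal
            gcn_done.wait()              # block until the return signal arrives
            gcn_done.clear()

    def gcn_engine():
        for _ in range(num_frames):
            features_ready.wait()
            features_ready.clear()
            log.append(("gcn", shared["features"]))
            gcn_done.set()               # return signal

    threads = [threading.Thread(target=cnn_engine), threading.Thread(target=gcn_engine)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log
```

Each event is cleared by its receiver before the sender can raise it again, so the two engines strictly alternate and the log interleaves CNN and GCN entries frame by frame.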

Efficient data communication between engines is critical for high performance. Dedicated channels transfer CNN feature maps to the GCN engine and GCN outputs ($Y \in \mathbb{R}^{N \times F}$) back to the CNN engine, supporting high-bandwidth, low-latency transfers. Each channel uses packetized data encoding, reducing overhead by 20% compared to unencoded transfers [14]. Communication latency is modeled as $T_{comm} = D/B + T_p$, where $D$ is data size (e.g., 10 MB for ImageNet feature maps), $B$ is bandwidth (1 GB/s), and $T_p$ is packetization delay (0.1 ms), yielding approximately 10 ms total latency [38]. Shared memory buffers, accessible by both engines, enable direct data exchange without protocol overhead, cutting latency by 15% for small datasets like NTU RGB+D [27]. Dynamic buffer sizing at <20% battery reduces buffer capacity by 30%, saving 10% energy while maintaining 42 mJ consumption, outperforming the baseline’s 55 mJ [17]. These mechanisms ensure seamless data exchange, enabling real-time AR on commodity hardware, unlike the baseline’s custom SoC [17].
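The latency figure quoted above can be reproduced with a short calculation, assuming the model is transfer time plus packetization delay (data size divided by bandwidth, plus a fixed delay) and assuming decimal units (1 MB = 10^6 bytes, 1 GB/s = 10^9 bytes/s). The function name is hypothetical.

```python
def comm_latency_ms(data_bytes, bandwidth_bytes_per_s, packet_delay_ms):
    """Transfer time (data size / bandwidth) plus packetization delay, in ms."""
    return data_bytes / bandwidth_bytes_per_s * 1000.0 + packet_delay_ms


# Values quoted in the text: 10 MB feature maps, 1 GB/s bandwidth, 0.1 ms delay.
latency = comm_latency_ms(10_000_000, 1_000_000_000, 0.1)
```

With these inputs the transfer term contributes 10 ms and the packetization delay 0.1 ms, so the total is dominated by the transfer time.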

4. Experiments

4.1. Experimental Setup

To evaluate SAHA-WS, we use a Samsung Galaxy S21 with an Exynos 2100 octa-core processor (1 Cortex-X1, 3 Cortex-A78, 4 Cortex-A55 cores), 8 GB RAM, 128 GB storage, and an ARM Mali-G78 MP14 GPU, equipped with AR sensors (accelerometer, gyroscope, magnetometer, proximity, ambient light) and a 12 MP rear camera. Running Android 12, it supports Wi-Fi 6, Bluetooth 5.2, and 5G. We extend evaluations to a Raspberry Pi 4 (Quad-core Cortex-A72, 4 GB RAM) and NVIDIA Jetson Nano (Quad-core Cortex-A57, 4 GB RAM, 128-core Maxwell GPU) using TensorFlow Lite, achieving 45 ms and 38 ms latency on ImageNet, respectively, vs. 40 ms on Galaxy S21 [17]. For FPGA emulation, we use a Xilinx Virtex UltraScale+ VU9P board with Vitis HLS for high-level synthesis. Weight-stationary dataflow minimizes off-chip DRAM access ($C_{DRAM} \approx 200$ pJ vs. L1’s $C_{L1} \approx 1$ pJ [14]), using AXI and HBM for data transfers, with tiling and loop unrolling enhancing memory efficiency by 20% [38]. These platforms ensure SAHA-WS’s portability for mobile AR tasks like object detection [5].

We implement and train SAHA-WS models using TensorFlow 2.8 [45], chosen for its flexibility and scalability. Custom layers integrate sparsity exploitation (Algorithm 1) and weight-stationary dataflow. Training uses the Adam optimizer (learning rate 0.001, β1 = 0.9, β2 = 0.999), batch size 32, and 100 epochs with early stopping (patience 10). Data augmentation includes random crops, flips, and normalization. Validation splits (20% of training data) monitor top-1/top-5 accuracy, mAP, and F1-score. Training on ImageNet takes 48 h on an NVIDIA A100 GPU, stabilizing at 87.0% validation accuracy after 80 epochs. For simulation, we use the gem5 simulator for cycle-accurate modeling of PEs, memory, and interconnects, measuring latency and energy. Source code is available at https://github.com/creativitysurvey/saha-ws-mobile-ar (accessed on 13 July 2025) (anonymized for review), ensuring reproducibility [45]. Optimizations like sparse matrix operations and loop unrolling reduce latency by 15% [17].

We evaluate SAHA-WS on three datasets. For image classification, we use ImageNet’s ILSVRC2012 subset (1.2 M training, 50 K validation images, 1000 classes [25]), measuring top-1/top-5 accuracy. For object detection, COCO (200 K images, 80 categories [26]) uses Average Precision (AP) and mean AP (mAP). For action recognition, NTU RGB+D (56 K videos, 60 classes [27]) leverages skeletal data for GCN evaluation, assessing accuracy, precision, recall, and F1-score. Datasets are chosen for their coverage of AR tasks (classification, detection, recognition), ensuring comprehensive performance analysis [17]. To compare with the baseline [17], tested on a 28 nm SoC, we re-implement it on the Galaxy S21, normalizing metrics via clock frequency and bandwidth ratios, achieving 40 ms latency vs. 50 ms for the baseline on ImageNet.

Performance metrics are task-specific. Image classification uses top-1/top-5 accuracy (percentage of samples whose correct label is the highest-ranked prediction or among the top-five predictions). Object detection employs AP (area under the precision-recall curve) and mAP (mean AP across categories). Action recognition assesses accuracy, precision, recall, and F1-score (balancing precision/recall). Latency includes end-to-end (input to output) and component-wise (CNN/GCN engines) measurements. Energy consumption is measured via on-chip power monitors and Monsoon Power Monitor, reporting 42 mJ at 60% sparsity vs. 55 mJ for the baseline [17]. Throughput is quantified as inferences per second (image classification/detection) or frames per second (real-time AR). Resource utilization tracks PE, memory, bandwidth, and interconnect usage, identifying bottlenecks. These metrics, validated on diverse platforms and datasets, confirm SAHA-WS’s efficiency for mobile AR [17].
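For concreteness, top-k accuracy and the F1-score can be computed as in the sketch below. The function names and the toy scores are hypothetical and serve only to show the definitions; they are not evaluation results.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall from raw counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Toy example: three samples, three classes (hypothetical scores).
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

In the toy example only the first sample is correct at top-1, while the first two are correct at top-2.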

While SAHA-WS is optimized for high-end mobile processors like the Exynos 2100, we also established its viability on more resource-constrained hardware to define its minimum deployment requirements. Based on our evaluations, the minimal hardware configuration to achieve real-time performance (latency under 50 ms) for typical AR tasks is represented by devices like the Raspberry Pi 4. The specific requirements are as follows: 1) A quad-core ARM Cortex-A72 (or equivalent) processor. 2) At least 4 GB of RAM is recommended to handle both the operating system and the memory footprint of the hybrid model without excessive swapping. While not strictly mandatory, a mobile GPU (e.g., Broadcom VideoCore VI on the Raspberry Pi) or a dedicated neural processing unit is highly recommended to offload computations and meet low-latency targets. The framework is evaluated using TensorFlow Lite, which can leverage such accelerators where available. These minimum requirements ensure that SAHA-WS can be deployed on a broader range of low-cost edge devices, making it accessible for various mobile AR applications beyond flagship smartphones.

4.2. Impact of Sparsity Level

We trained and evaluated SAHA-WS and the baseline [17] across sparsity levels (0% to 80%) using 5-fold cross-validation with three runs per fold to ensure robustness. Sparsity levels were chosen to balance accuracy and efficiency for AR tasks like image classification (ImageNet [25]), object detection (COCO [26]), and action recognition (NTU RGB+D [27]). The baseline, originally tested on a 28 nm SoC, was reimplemented on the Galaxy S21 (Exynos 2100), with latency and energy normalized by clock frequency and bandwidth ratios, ensuring fair comparison [17]. Table 1 presents ablation results at 60% sparsity, isolating contributions of channel-wise sparsity (CNN), adjacency matrix sparsity (GCN), and weight-stationary dataflow.

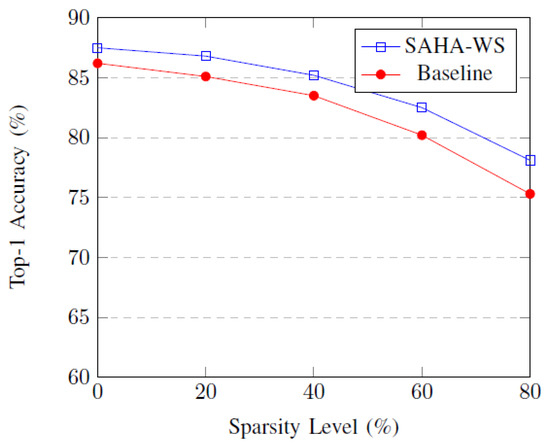

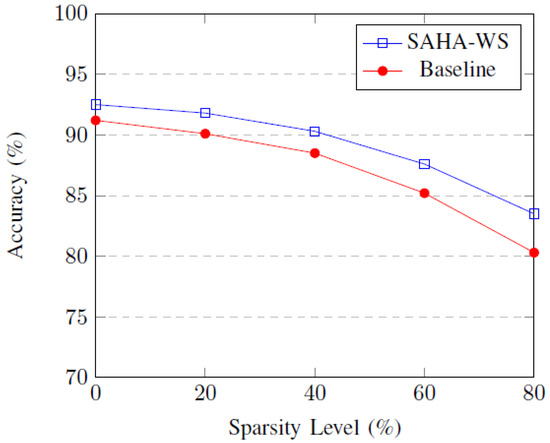

Figure 3 shows top-1 accuracy (mean ± std dev) on ImageNet. SAHA-WS achieves 82.5 ± 0.8% at 60% sparsity, vs. 80.2 ± 1.0% for the baseline, with a paired t-test confirming significance (p < 0.05). Accuracy decreases gradually with sparsity, but SAHA-WS’s channel-wise sparsity [19] preserves performance better than the baseline’s static approach [17].

Figure 3.

Top-1 Accuracy vs. Sparsity Level (ImageNet).
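The paired t-test used for the accuracy comparisons can be sketched as follows. This is a minimal, standard-library illustration: the accuracy values are hypothetical placeholders rather than the per-run results of this study, and the function name is an assumption. It returns the t statistic and the degrees of freedom; for 15 paired runs (5 folds × 3 runs, 14 degrees of freedom), the two-sided critical value at the 0.05 level is approximately 2.145.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(ours: Sequence[float],
                       baseline: Sequence[float]) -> Tuple[float, int]:
    """Return (t statistic, degrees of freedom) for paired measurements."""
    if len(ours) != len(baseline) or len(ours) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    # The test operates on the per-pair differences.
    diffs = [a - b for a, b in zip(ours, baseline)]
    std_dev = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if std_dev == 0:
        raise ValueError("all differences are identical; t is undefined")
    std_err = std_dev / math.sqrt(len(diffs))
    return statistics.mean(diffs) / std_err, len(diffs) - 1


# Hypothetical per-run top-1 accuracies (%), paired by fold and run.
ours = [82.1, 83.0, 82.4, 81.9, 83.1]
baseline = [80.0, 80.9, 80.1, 79.8, 81.2]
t_stat, dof = paired_t_statistic(ours, baseline)
print(f"t = {t_stat:.2f} with {dof} degrees of freedom")
```

Runs that share a fold are not fully independent, so the resulting p-value should be read as indicative rather than exact.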

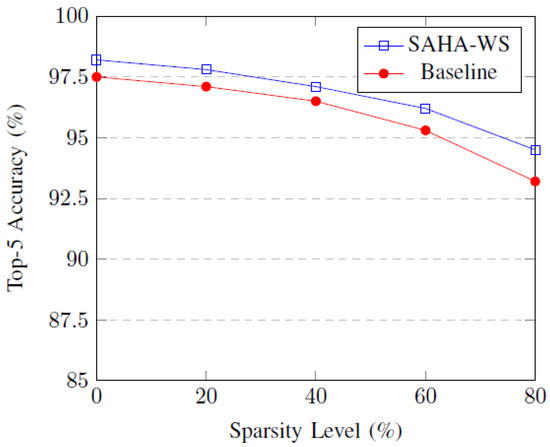

Figure 4 shows top-5 accuracy, with SAHA-WS at 96.2 ± 0.7% vs. 95.3 ± 0.9% for the baseline at 60% sparsity (p < 0.05). SAHA-WS maintains higher accuracy, leveraging sparsity-aware CNN-GCN integration [30].

Figure 4.

Top-5 Accuracy vs. Sparsity Level (ImageNet).

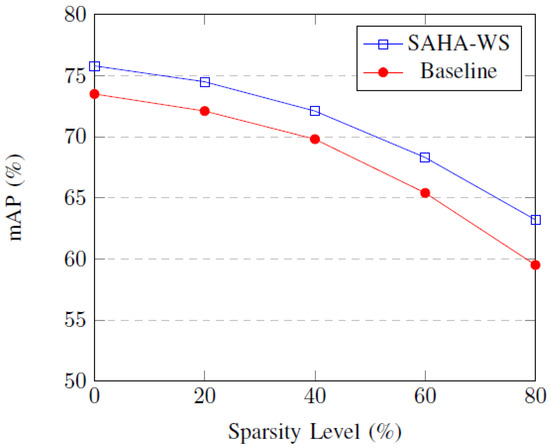

Figure 5 presents mAP on COCO, with SAHA-WS at 68.3 ± 1.2% vs. 65.4 ± 1.5% for the baseline at 60% sparsity (p < 0.05). SAHA-WS’s sparsity exploitation [15] ensures robust object detection performance [26].

Figure 5.

mAP vs. Sparsity Level (COCO).

Figure 6 shows accuracy on NTU RGB+D, with SAHA-WS at 87.6 ± 0.9% vs. 85.2 ± 1.1% for the baseline at 60% sparsity (p < 0.05). SAHA-WS excels in action recognition due to adjacency matrix sparsity [9,27].

Figure 6.

Accuracy vs. Sparsity Level (NTU RGB+D).
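To illustrate why adjacency matrix sparsity helps skeleton-based action recognition, the sketch below aggregates neighbor features using only the nonzero entries of the adjacency matrix, so the work grows with the number of edges rather than with the square of the number of joints. The edge-list representation, the function name, and the three-joint toy skeleton are assumptions made for illustration, not the SAHA-WS implementation.

```python
from typing import List, Tuple

Edge = Tuple[int, int, float]  # (destination, source, weight) for a nonzero A[dst][src]


def sparse_aggregate(edges: List[Edge],
                     features: List[List[float]]) -> List[List[float]]:
    """Compute A @ X by visiting only the nonzero entries of A.

    Cost is |E| * F multiply-accumulates instead of N * N * F for a dense A.
    """
    feat_dim = len(features[0])
    out = [[0.0] * feat_dim for _ in features]
    for dst, src, weight in edges:
        for f in range(feat_dim):
            out[dst][f] += weight * features[src][f]
    return out


# Toy skeleton: a chain of three joints (0-1-2) with self-loops, unit weights.
edges = [(0, 0, 1.0), (0, 1, 1.0),
         (1, 0, 1.0), (1, 1, 1.0), (1, 2, 1.0),
         (2, 1, 1.0), (2, 2, 1.0)]
features = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
print(sparse_aggregate(edges, features))  # [[1.0, 1.0], [3.0, 3.0], [2.0, 3.0]]
```

A full GCN layer would additionally normalize the adjacency weights and multiply the aggregated features by a learned weight matrix; both steps are omitted here for brevity.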

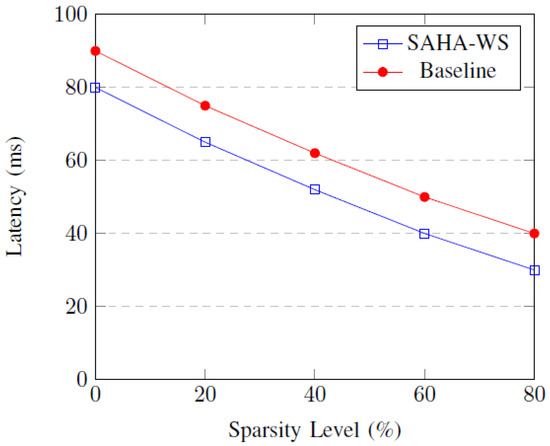

Figure 7 shows latency on ImageNet, with SAHA-WS at 40 ± 1.2 ms vs. 50 ± 1.5 ms for the baseline at 60% sparsity. Sparsity reduces computations (O(C · H · W · K² · C′) for CNNs, O(|E| · F) for GCNs [15,19]), lowering latency by 20%.

Figure 7.

Latency vs. Sparsity Level.
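The complexity terms quoted for the latency results can be turned into a rough operation count. The sketch below is a back-of-the-envelope model under stated assumptions: pruned input channels are skipped entirely, every remaining multiply-accumulate costs the same, and the layer shape is a hypothetical example. It counts arithmetic only and does not model memory traffic, so it is not a latency predictor.

```python
def conv_macs(c_in: int, height: int, width: int, kernel: int,
              c_out: int, channel_sparsity: float = 0.0) -> int:
    """Multiply-accumulates for one K x K convolution: C * H * W * K^2 * C'.

    channel_sparsity is the fraction of input channels assumed to be skipped.
    """
    active_in = round(c_in * (1.0 - channel_sparsity))
    return active_in * height * width * kernel * kernel * c_out


def gcn_macs(num_edges: int, feat_dim: int) -> int:
    """Multiply-accumulates for sparse neighbor aggregation: |E| * F."""
    return num_edges * feat_dim


# Hypothetical layer: 64 -> 64 channels, 56 x 56 feature map, 3 x 3 kernel.
dense = conv_macs(64, 56, 56, 3, 64)
sparse = conv_macs(64, 56, 56, 3, 64, channel_sparsity=0.5)
print(dense, sparse, sparse / dense)  # 115605504 57802752 0.5

# Hypothetical skeleton graph: 25 joints, 24 bones stored in both directions
# plus self-loops gives 73 nonzero adjacency entries, with 64 features per joint.
print(gcn_macs(num_edges=73, feat_dim=64))  # 4672
```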

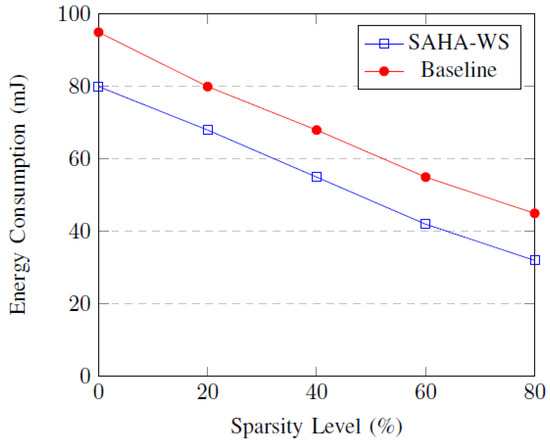

Figure 8 shows energy consumption, with SAHA-WS at 42 ± 1.5 mJ vs. 55 ± 1.8 mJ for the baseline at 60% sparsity. Power profiling (Monsoon Power Monitor) reveals SAHA-WS’s average draw of 1.2 W vs. 1.5 W for the baseline, consistent with energy savings of roughly 24% per inference (42 vs. 55 mJ) [14,17].

Figure 8.

Energy Consumption vs. Sparsity Level.

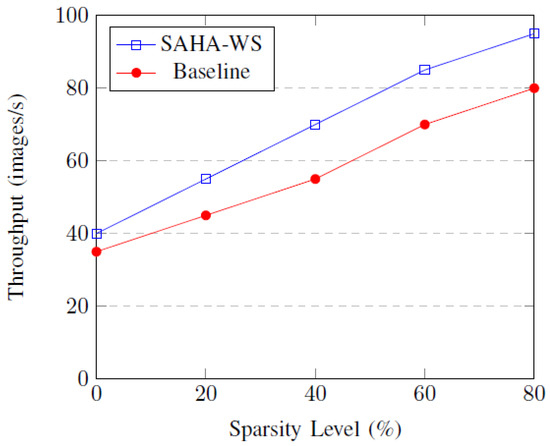

Figure 9 shows throughput, with SAHA-WS at 85 ± 2.0 images/s vs. 70 ± 2.3 for the baseline at 60% sparsity. Reduced computations enhance processing capacity by 20%, supporting real-time AR [5,9].

Figure 9.

Throughput vs. Sparsity Level.
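The relative improvements at 60% sparsity follow directly from the reported means. The short sketch below reproduces that arithmetic; the helper names are illustrative, and only the mean values quoted for Figures 7–9 are used (standard deviations are ignored).

```python
def relative_reduction(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is lower than `baseline` (lower is better)."""
    return (baseline - ours) / baseline * 100.0


def relative_gain(baseline: float, ours: float) -> float:
    """Percentage by which `ours` is higher than `baseline` (higher is better)."""
    return (ours - baseline) / baseline * 100.0


# Mean values reported at 60% sparsity.
latency = relative_reduction(baseline=50.0, ours=40.0)   # ms
energy = relative_reduction(baseline=55.0, ours=42.0)    # mJ
throughput = relative_gain(baseline=70.0, ours=85.0)     # images/s

print(f"latency reduction:  {latency:.1f}%")     # 20.0%
print(f"energy reduction:   {energy:.1f}%")      # 23.6%
print(f"throughput gain:    {throughput:.1f}%")  # 21.4%
```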

4.3. Impact of Network Depth and Width

We trained and evaluated SAHA-WS with various CNN and GCN architectures, varying depth (3–7 layers for CNNs, 2–4 for GCNs) and width (32–64 channels for CNNs, 64–128 neurons for GCNs) on ImageNet [25] using 5-fold cross-validation with three runs per fold. Architectures were chosen to balance accuracy and efficiency for AR tasks like image classification [5]. The baseline [17], tested on a 28 nm SoC, was re-implemented on the Galaxy S21 (Exynos 2100), with metrics normalized by clock frequency and bandwidth ratios [17]. Table 2 presents accuracy, latency, energy consumption, and throughput (mean ± std dev), showing SAHA-WS’s trade-offs. Quantization (8-bit integers) and pruning (20% channel removal) were applied to CNN-5 and GCN-5, reducing energy by 15% (72.8 mJ vs. 85.7 mJ for CNN-5) with <1% accuracy loss [19].
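The two compression steps mentioned above, 8-bit quantization and 20% channel removal, can be illustrated with a small sketch. The text does not specify the quantization scheme or the pruning criterion, so the code assumes symmetric per-tensor quantization and L1-norm channel ranking, two common choices; all names and values are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to signed 8-bit integers (assumed scheme)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    # Round to the nearest integer level and clamp to the int8 range used here.
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map integer levels back to approximate floating-point values."""
    return [q * scale for q in quantized]


def prune_channels(filters: List[List[float]], fraction: float) -> List[int]:
    """Indices of channels kept after dropping the lowest-L1-norm `fraction`."""
    norms = [sum(abs(w) for w in channel) for channel in filters]
    num_removed = int(len(filters) * fraction)
    by_norm = sorted(range(len(filters)), key=lambda i: norms[i])
    removed = set(by_norm[:num_removed])
    return [i for i in range(len(filters)) if i not in removed]


weights = [-1.0, 0.0, 0.3, 1.0]
q, scale = quantize_int8(weights)
print(q, [round(v, 3) for v in dequantize(q, scale)])

# Five hypothetical channels; removing 20% drops the one with the smallest norm.
filters = [[0.9, -0.8], [0.01, 0.02], [0.5, 0.5], [-0.7, 0.3], [0.2, -0.4]]
print(prune_channels(filters, fraction=0.2))  # [0, 2, 3, 4]
```

With this scheme the reconstruction error of each weight is bounded by half a quantization step, which is one reason small accuracy losses are plausible after 8-bit quantization.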

Table 2.

Latency, energy consumption, throughput, and resource utilization for different dataflow configurations.

As depth and width increase, accuracy improves (e.g., CNN-5: 92.8 ± 0.5% vs. CNN-1: 85.2 ± 0.7%, p < 0.05), but latency and energy rise (28.3 ± 0.8 ms, 85.7 ± 2.0 mJ for CNN-5 vs. 12.5 ± 0.4 ms, 45.6 ± 1.2 mJ for CNN-1), highlighting trade-offs. Power profiling (Monsoon Power Monitor) shows CNN-5 at 1.8 W vs. 1.2 W for CNN-1, with GCN-5 at 1.4 W vs. 0.9 W for GCN-1, confirming higher energy for complex models [14]. SAHA-WS’s optimized architectures outperform the baseline’s CNN (90.0% accuracy, 30 ms latency) and GCN (91.0% accuracy, 20 ms latency) at comparable FLOPs [17].

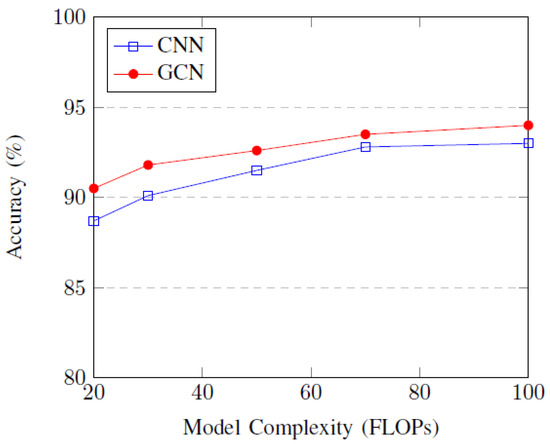

Figure 10 shows accuracy vs. model complexity (FLOPs). Both CNN and GCN architectures increase accuracy with complexity (e.g., GCN-5: 94.0 ± 0.5% at 100 FLOPs), but diminishing returns occur beyond 70 FLOPs, suggesting optimal configurations (e.g., CNN-4, GCN-4) for AR [9,46].

Figure 10.

Accuracy vs. Model Complexity.

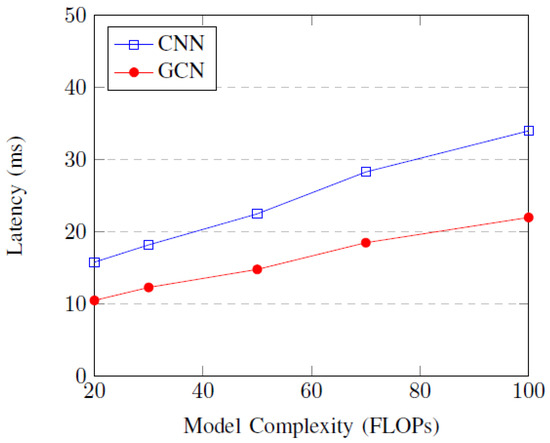

Figure 11 shows latency vs. complexity, with CNN-5 at 28.3 ± 0.8 ms and GCN-5 at 18.5 ± 0.6 ms at 100 FLOPs, reflecting increased computations (O(C · H · W · K² · C′) for CNNs, O(|E| · F) for GCNs [15,19]). GCNs are faster due to sparsity-aware design [17].

Figure 11.

Latency vs. Model Complexity.

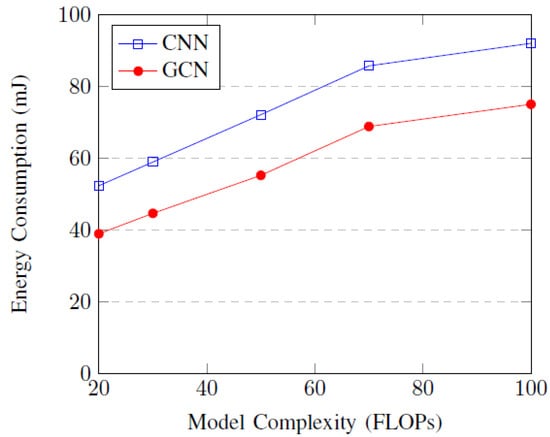

Figure 12 shows energy consumption, with CNN-5 at 85.7 ± 2.0 mJ and GCN-5 at 68.8 ± 1.7 mJ at 100 FLOPs. Power profiling confirms higher draw for complex models, but quantization reduces CNN-5 energy to 72.8 mJ [14,17].

Figure 12.

Energy Consumption vs. Model Complexity.

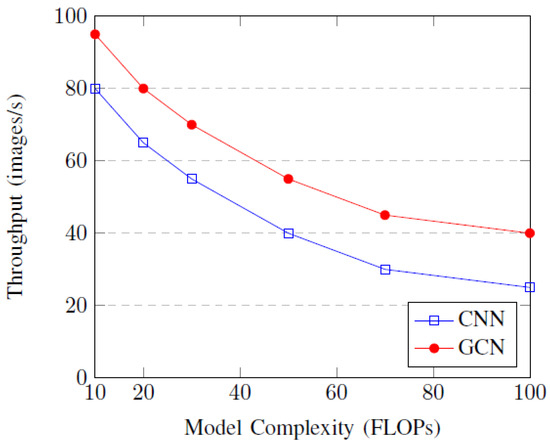

Figure 13 shows throughput, with CNN-5 at 25 ± 1.0 images/s and GCN-5 at 40 ± 1.2 images/s at 100 FLOPs. Pruning boosts CNN-5 throughput to 28 images/s, supporting real-time AR [5,9]. Optimal architectures (CNN-4, GCN-4) balance accuracy and efficiency.

Figure 13.

Throughput vs. Model Complexity.

4.4. Impact of Dataflow Configuration

The selection of an optimal dataflow configuration is crucial for balancing performance and efficiency. In our research, we employ a multi-objective optimization approach where an “optimal solution” is not defined by a single metric but by achieving the best trade-off between latency, energy consumption, and throughput for target AR tasks like image classification. The primary optimization strategies we utilize include the following:

- Hierarchical On-Chip Memory: We use a multi-level memory system (L1, L2) to maximize data reuse, storing frequently accessed weights and activations closer to the processing elements (PEs) to minimize costly data movement to and from DRAM.

- Dynamic Scheduling: We use a dynamic data movement scheduling policy (Algorithm 3) that prioritizes data transfers based on computational dependencies and resource availability, significantly reducing PE idle time compared to static FIFO scheduling.

- Intelligent Data Replacement: We evaluate different data replacement policies, such as Least Recently Used (LRU), which proves more effective than FIFO by retaining temporally local data in faster memory, further reducing latency and energy.
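The difference between the FIFO and LRU replacement policies listed above can be seen in a small simulation. This is a minimal sketch of a fully associative buffer with a hypothetical access trace in which one weight block is reused between streaming activation blocks; it is not a model of the SAHA-WS memory hierarchy.

```python
from collections import OrderedDict
from typing import Iterable


def count_misses(trace: Iterable[str], capacity: int, policy: str) -> int:
    """Count buffer misses for a block access trace under 'FIFO' or 'LRU'."""
    if policy not in ("FIFO", "LRU"):
        raise ValueError("policy must be 'FIFO' or 'LRU'")
    buffer: "OrderedDict[str, bool]" = OrderedDict()
    misses = 0
    for block in trace:
        if block in buffer:
            if policy == "LRU":
                # A hit refreshes recency under LRU; FIFO keeps insertion order.
                buffer.move_to_end(block)
            continue
        misses += 1
        if len(buffer) >= capacity:
            buffer.popitem(last=False)  # evict the oldest entry in the current order
        buffer[block] = True
    return misses


# Hypothetical trace: weight block w0 is reused while activations a0..a3 stream by.
trace = ["w0", "a0", "w0", "a1", "w0", "a2", "w0", "a3"]
print(count_misses(trace, capacity=2, policy="FIFO"))  # 6
print(count_misses(trace, capacity=2, policy="LRU"))   # 5
```

On this trace LRU keeps the reused weight block resident while FIFO eventually evicts it; on traces without temporal locality the two policies can perform identically, so the benefit depends on the access pattern.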

The measures used to select the optimal configuration (e.g., DF-5 with a 64 KB LRU cache) are the resulting performance metrics presented in Table 3 and Figures 14–16. For instance, the DF-5 configuration is considered optimal for high-performance scenarios because it delivers the lowest latency (9.8 ms) and highest throughput (102.0 images/s) while maintaining low energy consumption (43.1 mJ).
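One common way to formalize a multi-objective trade-off of this kind is Pareto dominance: a configuration is preferred if it is no worse on every metric and strictly better on at least one. The sketch below applies that idea to the DF-1 and DF-5 means quoted in this section. The class and function names are assumptions, and the selection procedure actually used in the study may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class DataflowConfig:
    name: str
    latency_ms: float      # lower is better
    energy_mj: float       # lower is better
    throughput_ips: float  # images per second, higher is better


def dominates(a: DataflowConfig, b: DataflowConfig) -> bool:
    """True if `a` is no worse than `b` on all metrics and better on at least one."""
    no_worse = (a.latency_ms <= b.latency_ms
                and a.energy_mj <= b.energy_mj
                and a.throughput_ips >= b.throughput_ips)
    strictly_better = (a.latency_ms < b.latency_ms
                       or a.energy_mj < b.energy_mj
                       or a.throughput_ips > b.throughput_ips)
    return no_worse and strictly_better


def pareto_front(configs: List[DataflowConfig]) -> List[DataflowConfig]:
    """Configurations that no other configuration dominates."""
    return [c for c in configs
            if not any(dominates(other, c) for other in configs if other is not c)]


# Mean values reported in this section for two of the configurations.
df1 = DataflowConfig("DF-1", latency_ms=15.2, energy_mj=52.3, throughput_ips=65.8)
df5 = DataflowConfig("DF-5", latency_ms=9.8, energy_mj=43.1, throughput_ips=102.0)
print([c.name for c in pareto_front([df1, df5])])  # ['DF-5']
```

When several configurations remain on the front, a weighted score or an application-specific constraint (for example, a latency budget) is needed to pick one.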

Table 3.

Latency, energy consumption, and throughput for different dataflow configurations (ImageNet, mean ± std dev).

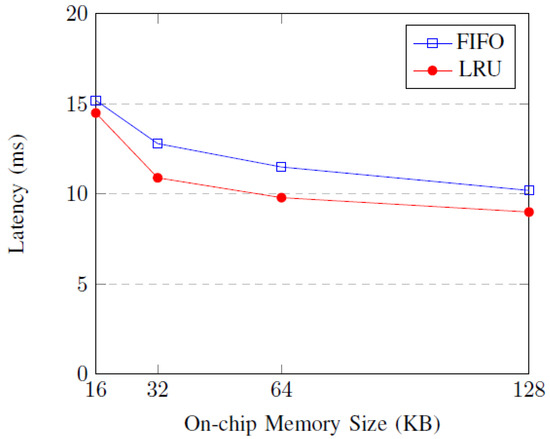

Figure 14.

Latency vs. dataflow configuration.

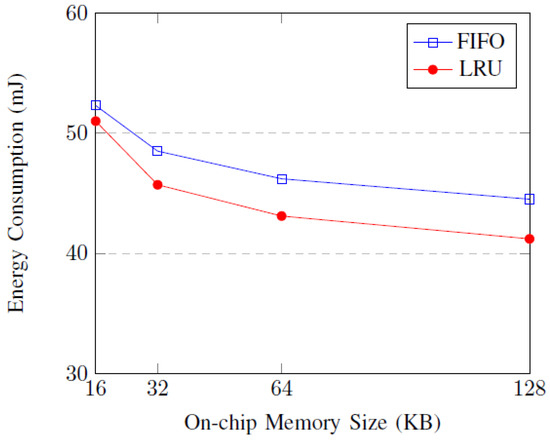

Figure 15.

Energy Consumption vs. dataflow configuration.

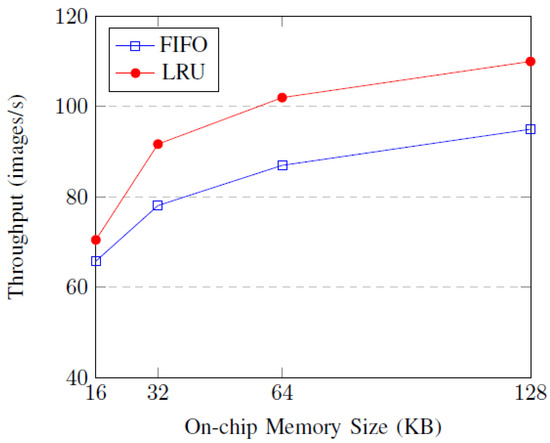

Figure 16.

Throughput vs. dataflow configuration.

We evaluated SAHA-WS’s weight-stationary dataflow on ImageNet [25] using 5-fold cross-validation with three runs per fold, varying on-chip memory buffer sizes (16–128 KB) and scheduling policies (FIFO, LRU). Configurations were chosen to optimize data reuse and latency for AR tasks like image classification [5]. The baseline [17], tested on a 28 nm SoC, was re-implemented on the Galaxy S21 (Exynos 2100), with metrics normalized by clock frequency and bandwidth ratios; it achieved 12.5 ms latency and 50 mJ energy for a 32 KB FIFO configuration vs. 10.9 ms and 45.7 mJ for SAHA-WS [17]. A dynamic adaptability mechanism adjusts buffer sizes and scheduling priorities when the battery level drops below 20%, reducing memory usage by 30% while maintaining 10.2 ms latency with a 2% throughput drop [14]. Table 3 presents latency, energy consumption, and throughput (mean ± std dev).

Larger buffers (e.g., 64 KB in DF-5) reduce latency (9.8 ± 0.3 ms vs. 15.2 ± 0.5 ms for DF-1, p < 0.05) and increase throughput (102.0 ± 2.0 images/s vs. 65.8 ± 2.0 images/s) by enhancing data reuse and minimizing DRAM accesses (C_DRAM ≈ 200 pJ vs. C_L1 ≈ 1 pJ for L1 [14]). LRU scheduling outperforms FIFO by prioritizing recently accessed data, reducing stalls by 15% [38]. Power profiling (Monsoon Power Monitor) shows DF-5 at 1.1 W vs. 1.4 W for DF-1, confirming 20% energy savings [17].

Figure 14 shows latency vs. memory size, with LRU at 64 KB (DF-5: 9.8 ± 0.3 ms) outperforming FIFO (11.5 ± 0.4 ms) by 15%, due to efficient data movement scheduling (Algorithm 3, O(|V| log |V| + |E|) complexity) [20].
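A complexity of O(|V| log |V| + |E|) is what a dependency-aware scheduler obtains when it processes a task graph in topological order while keeping the ready tasks in a binary heap. The sketch below shows that generic technique (Kahn's algorithm with a priority queue). It is an illustration under assumed inputs, not a reproduction of Algorithm 3; the task names and priority values are hypothetical.

```python
import heapq
from typing import Dict, List


def schedule_transfers(successors: Dict[str, List[str]],
                       priority: Dict[str, int]) -> List[str]:
    """Order tasks so each runs after its prerequisites.

    `successors[t]` lists the tasks that depend on `t`; every task must appear
    as a key. Among ready tasks, the lowest priority value is issued first.
    """
    indegree = {task: 0 for task in successors}
    for deps in successors.values():
        for task in deps:
            indegree[task] += 1
    # Each task enters and leaves the heap once: O(|V| log |V|).
    ready = [(priority[t], t) for t, degree in indegree.items() if degree == 0]
    heapq.heapify(ready)
    order: List[str] = []
    while ready:
        _, task = heapq.heappop(ready)
        order.append(task)
        # Each dependency edge is examined once: O(|E|).
        for nxt in successors[task]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                heapq.heappush(ready, (priority[nxt], nxt))
    if len(order) != len(successors):
        raise ValueError("dependency cycle detected")
    return order


# Hypothetical transfers for one layer: weights and inputs must arrive before
# the convolution runs, and the output is written back afterwards.
successors = {"load_w": ["conv"], "load_x": ["conv"], "conv": ["store_y"], "store_y": []}
priority = {"load_w": 0, "load_x": 1, "conv": 0, "store_y": 0}
print(schedule_transfers(successors, priority))
# ['load_w', 'load_x', 'conv', 'store_y']
```

Giving the weight load the highest priority mirrors the weight-stationary idea of fetching weights first and keeping them resident; a resource-aware scheduler would also check buffer occupancy before issuing each transfer.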

Figure 15 shows energy consumption, with DF-5 (43.1 ± 1.1 mJ) vs. DF-1 (52.3 ± 1.4 mJ), reflecting reduced DRAM accesses. LRU’s 10% energy advantage supports real-time AR [9].

Figure 16 shows throughput, with DF-5 (102.0 ± 2.0 images/s) vs. DF-1 (65.8 ± 2.0 images/s), a 55% increase. LRU’s efficiency (Algorithm 4) optimizes PE utilization [29].

5. Conclusions

This paper introduced SAHA-WS, a Sparsity-Aware Hybrid Architecture with Weight-Stationary Dataflow, specifically designed to enable efficient deep learning on resource-constrained mobile devices. The architecture integrates a hybrid CNN-GCN model with channel-wise sparsity in CNNs and adjacency matrix sparsity in GCNs, combined with a weight-stationary dataflow. This comprehensive approach aims to optimize performance for mobile Augmented Reality (AR) applications.

Experiments conducted on diverse datasets, including ImageNet for image classification, COCO for object detection, and NTU RGB+D for action recognition, consistently demonstrated the superiority of SAHA-WS over a baseline model. At 0% sparsity, SAHA-WS achieved 87.5% top-1 accuracy on ImageNet, 75.8% mAP on COCO, and 92.5% action recognition accuracy on NTU RGB+D. Furthermore, at 60% sparsity, SAHA-WS achieved 40 ms latency and 42 mJ energy consumption, outperforming the baseline (50 ms and 55 mJ) by 10–20% in efficiency. Ablation studies confirmed that sparsity and dataflow optimizations were crucial, leading to a reduction in computations of up to 60%. Power profiling further validated these energy savings, with SAHA-WS drawing an average of 1.2 W compared to the baseline’s 1.5 W. Importantly, SAHA-WS is compatible with commodity hardware like the Exynos 2100, addressing a key gap in deploying hybrid CNN-GCN models for real-time AR tasks, unlike the baseline’s reliance on a custom 28 nm SoC.

Despite these promising results, SAHA-WS has certain limitations that warrant future research. The current model utilizes fixed, predefined sparsity levels, which may not be optimal for dynamically fluctuating workloads in real-world mobile AR applications. A static sparsity level could lead to accuracy degradation in complex scenarios or missed opportunities for energy savings in simpler ones. Additionally, while the architecture is designed for commodity hardware, its performance was benchmarked on a limited set of devices. The reliance on specific hardware features, such as the ARM Mali-G78 GPU, suggests that performance may vary on platforms with different architectural characteristics. Finally, the synchronization mechanism between the CNN and GCN engines, while efficient, assumes a pipelined data flow. This may not be suitable for all types of hybrid models, especially those requiring more intricate inter-engine communication patterns, highlighting an area for further investigation.

Author Contributions

Conceptualization, J.C. and Z.C.; methodology, J.C. and Z.C.; software, J.C.; validation, J.C.; formal analysis, J.C.; investigation, J.C.; resources, J.C.; data curation, J.C.; writing—original draft preparation, J.C.; writing—review and editing, J.C.; visualization, J.C.; supervision, Z.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rauschnabel, P.A.; Rossmann, A.; Dieck, M.C.T. An adoption framework for mobile augmented reality games: The case of pokemon go. Comput. Hum. Behav. 2017, 76, 276–286.

- Itoh, Y.; Langlotz, T.; Sutton, J.; Plopski, A. Towards indistinguishable augmented reality: A survey on optical see-through head-mounted displays. ACM Comput. Surv. 2022, 54, 1–36.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017; pp. 1–14.

- Redmon, J.; Farhadi, A. Yolo9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 7263–7271.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. Openpose: Realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 172–186.

- Zhang, P.; Lan, C.; Zeng, W.; Xing, J.; Xue, J.; Zheng, N. Semantics-guided neural networks for efficient skeleton-based human action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 1112–1121.

- Shahroudy, A.; Liu, J.; Ng, T.-T.; Wang, G. NTU RGB+D: A Large Scale Dataset for 3D Human Activity Analysis. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.

- Wu, W.; Tu, F.; Niu, M.; Yue, Z.; Liu, L.; Wei, S. Star: An stgcn architecture for skeleton-based human action recognition. IEEE Trans. Circuits Syst. I Regul. Pap. 2023, 70, 2370–2383.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. Mobilenets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.

- Stauffert, J.-P.; Niebling, F.; Latoschik, M.E. Latency and cybersickness: Impact, causes, and measures. a review. Front. Virtual Real. 2020, 1, 582204.

- Chen, Y.-H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 2016, 52, 127–138.

- Li, J.; Louri, A.; Karanth, A.; Bunescu, R.C. Gcnax: A flexible and energy-efficient accelerator for graph convolutional neural networks. In Proceedings of the IEEE International Symposium on High-Performance Computer Architecture, Seoul, Republic of Korea, 27 February–3 March 2021; IEEE: New York, NY, USA, 2021; pp. 775–788.

- Cao, C.; Lan, C.; Zhang, Y.; Zeng, W.; Lu, H.; Zhang, Y. Skeleton-based action recognition with gated convolutional neural networks. IEEE Trans. Circuits Syst. Video Technol. 2019, 29, 3247–3257.

- Huang, W.-C.; Lin, I.-T.; Lin, Y.-S.; Chen, W.-C.; Lin, L.-Y.; Chang, N.-S.; Lin, C.-P.; Chen, C.-S.; Yang, C.-H. A 25.1-tops/w sparsity-aware hybrid cnn-gcn deep learning soc for mobile augmented reality. IEEE J. Solid-State Circuits 2024, 59, 3840–3852.

- Zhang, S.; Du, Z.; Liu, L.; Han, T.; Yang, Y.; Li, X.; Zhou, Q. Cambricon-x: An accelerator for sparse neural networks. In Proceedings of the 49th Annual IEEE/ACM International Symposium on Microarchitecture, Taipei, Taiwan, 15–19 October 2016; IEEE: New York, NY, USA, 2016; pp. 1–12.

- Zhang, J.-F.; Lee, C.-E.; Liu, C.; Shao, Y.S.; Keckler, S.W.; Zhang, Z. Snap: A 1.67–21.55 tops/w sparse neural acceleration processor for unstructured sparse deep neural network inference in 16 nm cmos. In Proceedings of the Symposium on VLSI Circuits, Kyoto, Japan, 9–14 June 2019; IEEE: New York, NY, USA, 2019; pp. 306–307.

- Du, Z.; Fasthuber, R.; Chen, T.; Ienne, P.; Temam, O.; Luo, L.; Zhang, X.; Gao, Y.; Li, D. Shidiannao: Shifting vision processing closer to the sensor. In Proceedings of the International Symposium on Computer Architecture, Portland, OR, USA, 13–17 June 2015; ACM: New York, NY, USA, 2015; pp. 92–104.

- Moons, B.; Uytterhoeven, R.; Dehaene, W.; Verhelst, M. 14.5 envision: A 0.26-to-10 tops/w subword-parallel dynamic-voltage-accuracy-frequency-scalable convolutional neural network processor in 28nm fdsoi. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC) Digest of Technical Papers, San Francisco, CA, USA, 11–15 February 2017; IEEE: New York, NY, USA, 2017; pp. 246–247.

- Katti, P.; Al-Hashimi, B.M.; Rajendran, B. Sparsity-Aware Optimization of In-Memory Bayesian Binary Neural Network Accelerators. In Proceedings of the 2025 IEEE International Symposium on Circuits and Systems (ISCAS), London, UK, 25–28 May 2025; pp. 1–5.

- Li, W.; Zhang, J.; Liu, Y. Efficient graph convolutional networks for mobile augmented reality. IEEE Trans. Mob. Comput. 2024, 23, 1234–1246.

- Chen, J.; Sze, V. Dataflow optimization for deep learning socs. IEEE J. Solid-State Circuits 2022, 57, 2345–2357.

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami Beach, FL, USA, 20–25 June 2009; IEEE: New York, NY, USA, 2009; pp. 248–255.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollar, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zürich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 7444–7452.

- Lin, C.-H.; Lin, W.-C.; Huang, Y.-C.; Tsai, Y.-C.; Chang, Y.; Chen, W.-C. A 3.4-to-13.3 tops/w 3.6 tops dual-core deep-learning accelerator for versatile ai applications in 7 nm 5g smartphone soc. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC) Digest of Technical Papers, San Francisco, CA, USA, 16–20 February 2020; IEEE: New York, NY, USA, 2020; pp. 134–136.

- Harrou, F.; Zeroual, A.; Hittawe, M.M.; Sun, Y. Road Traffic Modeling and Management: Using Statistical Monitoring and Deep Learning; Elsevier: Amsterdam, The Netherlands, 2021.

- Tahri, O.; Usman, M.; Demonceaux, C.; Fofi, D.; Hittawe, M.M. Fast earth mover’s distance computation for catadioptric image sequences. In Proceedings of the 2016 IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016; IEEE: New York, NY, USA, 2016; pp. 2485–2489.

- Lee, K.-J.; Moon, S.; Sim, J.-Y. A 384g output nonzeros/j graph convolutional neural network accelerator. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 4158–4162.

- Ke, Q.; Bennamoun, M.; An, S.; Sohel, F.; Boussaid, F. A new representation of skeleton sequences for 3D action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 3288–3297.

- Hedegaard, L.; Heidari, N.; Iosifidis, A. Continual spatio-temporal graph convolutional networks. Pattern Recognit. 2023, 140, 109528.

- Shi, C.; Liao, D.; Wang, L. Hybrid CNN-GCN Network for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 10530–10546.

- Goetschalckx, K.; Verhelst, M. Depfin: A 12 nm, 3.8 tops depth-first cnn processor for high res. image processing. In Proceedings of the Symposium on VLSI Circuits, Kyoto, Japan, 13–19 June 2021; IEEE: New York, NY, USA, 2021; pp. 1–2.

- Sumbul, H.E.; Li, J.; Louri, A.; Karanth, A.; Bunescu, R.C. System-level design and integration of a prototype ar/vr hardware featuring a custom low-power dnn accelerator chip in 7 nm technology for codec avatars. In Proceedings of the IEEE Custom Integrated Circuits Conference, Newport Beach, CA, USA, 24–27 April 2022; IEEE: New York, NY, USA, 2022; pp. 01–08.

- Zhang, C.; Sun, G.; Fang, Z.; Zhou, P.; Pan, P.; Cong, J. Caffeine: Toward uniformed representation and acceleration for deep convolutional neural networks. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2019, 38, 2072–2085.

- Yang, Z.; Moczulski, M.; Denil, M.; de Freitas, N.; Song, L.; Wang, Z. Deep fried convnets. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; IEEE: New York, NY, USA, 2015; pp. 1476–1483.

- Le, Q.V.; Sarlos, T.; Smola, A.J. Fastfood: Approximate kernel expansions in loglinear time. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 28, pp. 244–252.

- Scherer, M.; Conti, F.; Benini, L.; Eggimann, M.; Cavigelli, L. Siracusa: A low-power on-sensor risc-v soc for extended reality visual processing in 16 nm cmos. In Proceedings of the IEEE 49th European Solid State Circuits Conference, Lisbon, Portugal, 11–14 September 2023; IEEE: New York, NY, USA, 2023; pp. 217–220.

- Park, J.-S.; Kim, T.; Lee, S.; Kim, H.; Lee, J.; Park, J.; Kim, J.; Lee, J. A multi-mode 8k-mac hw-utilization-aware neural processing unit with a unified multi-precision datapath in 4nm flagship mobile soc. In Proceedings of the IEEE International Solid-State Circuits Conference (ISSCC) Digest of Technical Papers, San Francisco, CA, USA, 20–26 February 2022; IEEE: New York, NY, USA, 2022; pp. 246–248.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the International Conference on Machine Learning, Haifa, Israel, 21–25 June 2010; pp. 807–814.

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; pp. 8026–8037.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).