Models for Classifying Cognitive Load Using Physiological Data in Healthcare Context: A Scoping Review

Abstract

1. Introduction

2. Method

2.1. Search Strategy

2.2. Inclusion and Exclusion Criteria

2.3. Data Extraction

2.4. Assessment of Study Quality

3. Results

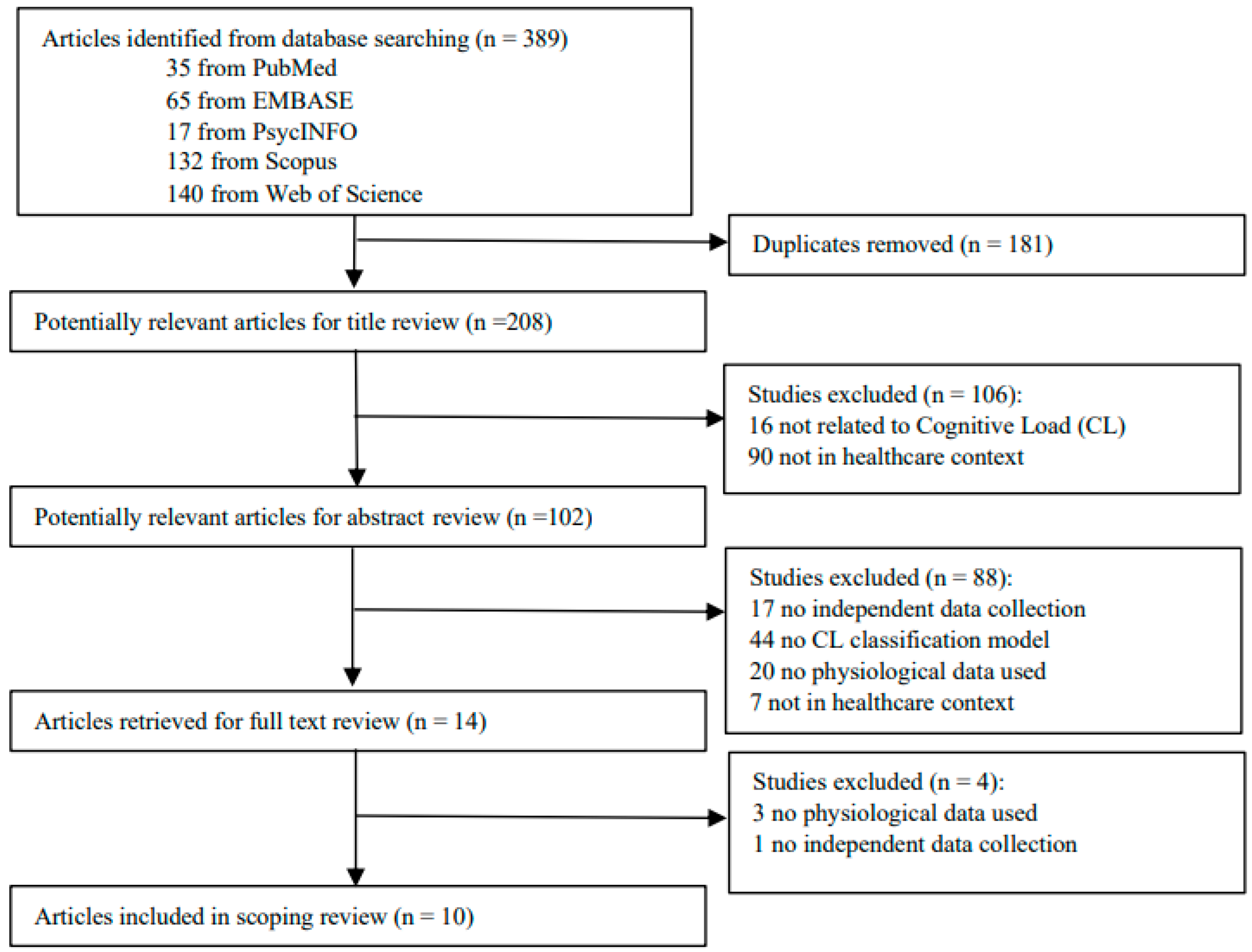

3.1. Trial Flow and Features of Reviewed Studies

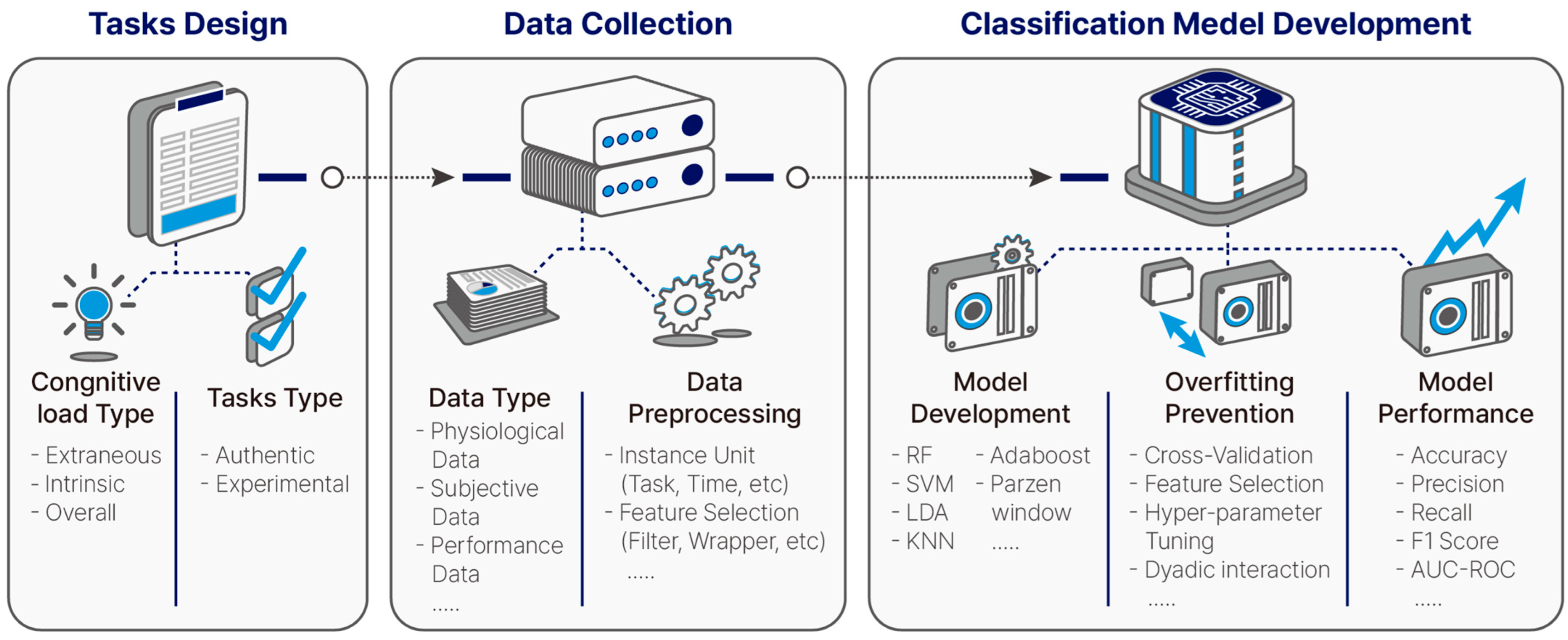

3.2. How Has the Task Design Been Conducted to Develop Cognitive Load Classification Models in Healthcare Contexts? (RQ1)

- (1)

- Cognitive load of interest and task design

- (2)

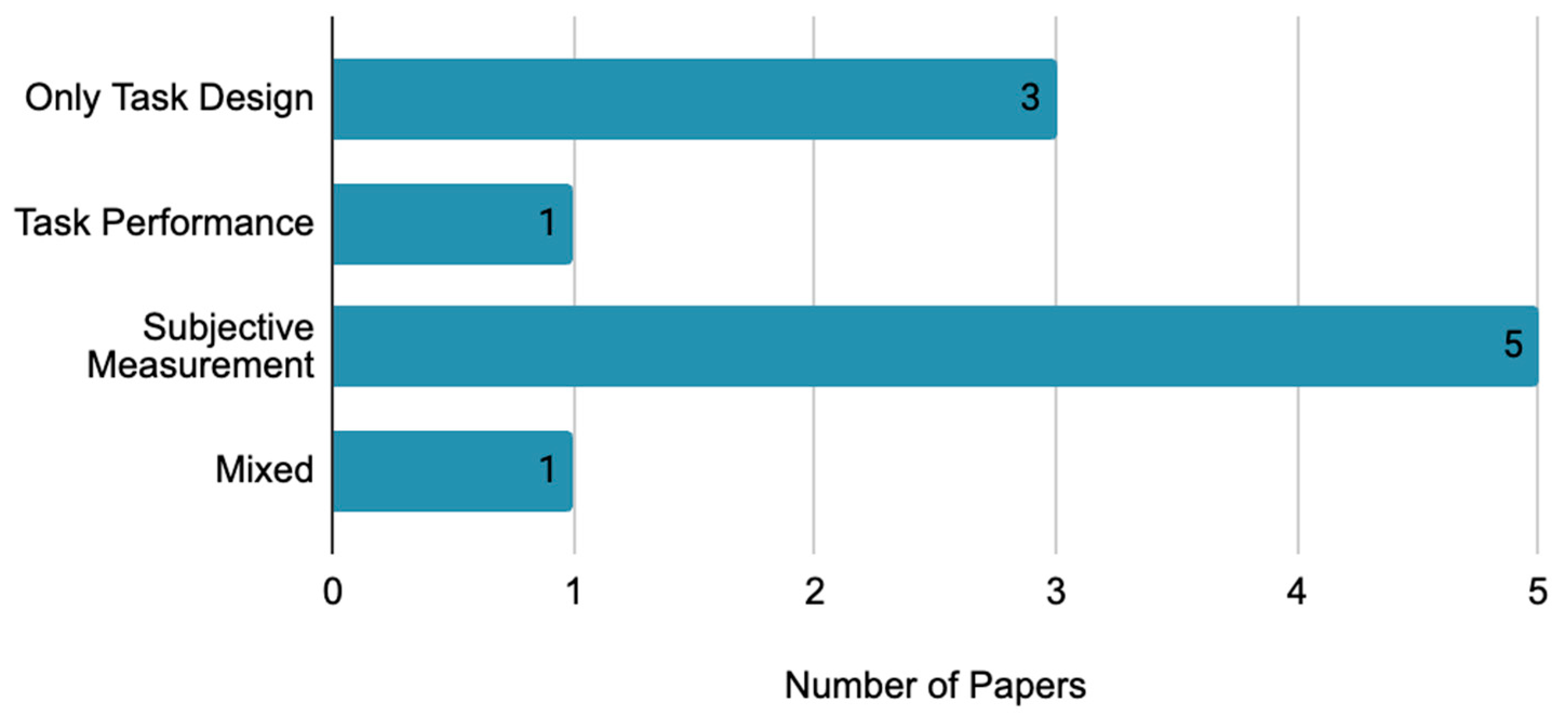

- Validation of cognitive load

- (3)

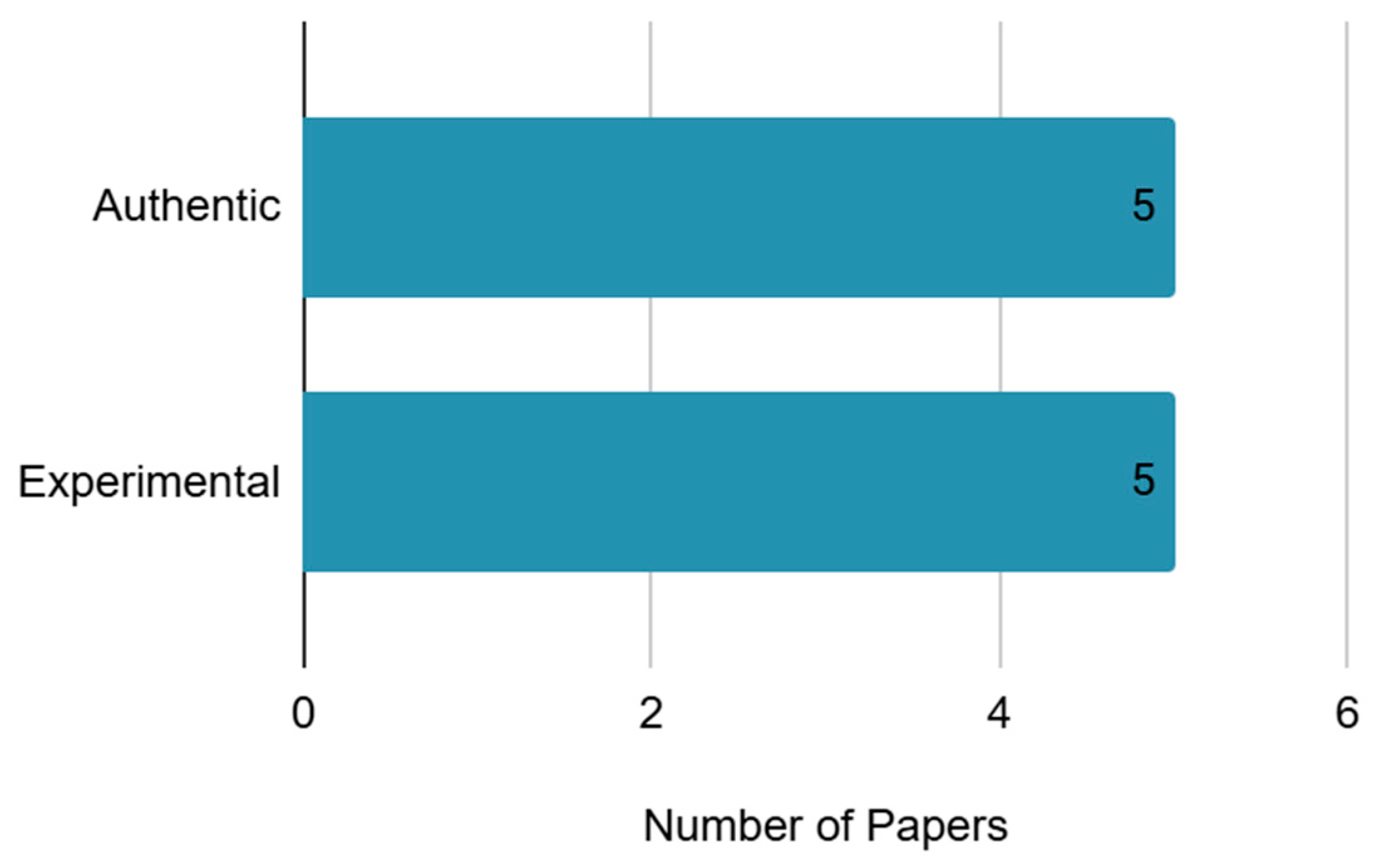

- Task type

3.3. What Types of Data Have Been Collected to Develop Cognitive Load Classification Models in Healthcare Contexts? (RQ2)

- (1) Participant

- (2) Data collection

3.4. How Has the Development of Cognitive Load Classification Models Been Carried out in Healthcare Contexts? (RQ3)

- (1) Data preprocessing and feature selection

- (2) Classification model

- (3) Overfitting prevention method

- (4) Model performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AdaBoost | adaptive boosting |
| AUC-ROC | area under the curve-receiver operating characteristic |
| BL | blink frequency |
| CL | cognitive load |
| ECG | electrocardiogram |
| EDA | electrodermal activity |
| EEG | electroencephalogram |
| EM | eye movement |
| FFS | forward feature selection |
| fMRI | functional magnetic resonance imaging |
| fNIRS | functional near-infrared spectroscopy |
| GSR | galvanic skin response |
| HRV | heart rate variability |
| ICA | independent component analysis |
| KNN | k-nearest neighbors |
| LDA | linear discriminant analysis |
| MOT | multiple object tracking |
| NASA-TLX | NASA Task Load Index |
| OSPAN | Operation Span Task |
| PCA | principal component analysis |
| PPG | photoplethysmogram |
| PRISMA | Preferred Reporting Items for Systematic Reviews and Meta-Analyses |
| PU | pupil dilation |
| RFE | recursive feature elimination |
| RSP | respiration rate |
| ST | skin temperature |
| SVM | support vector machine |

Appendix A. Details of Reviewed Studies

Column groups: Q1. Cognitive Load Task Design (Cognitive Load: CL Type, CL Validation; Tasks: Task Manipulation to Bloom Taxonomy); Q2. Data Collection (Participants: Participant Type to % of Usable; Data: Data Type, Data Sub-type); Q3. Model Development (Data Preprocessing: Instance Unit to Feature Selection; Classification Model: Specific Model to Overfitting Prevention Methods; Performance of Model: Performance Metrics Reported to Performance Compared to Baseline).

| Study | CL Type | CL Validation | Task Manipulation | Task Type | Task Name | Bloom Taxonomy | Participant Type | Participants Total | Participants Usable | % of Usable | Data Type | Data Sub-type | Instance Unit | Instance Num | Feature Selection | Specific Model | Feature Fusion Techniques | Overfitting Prevention Methods | Performance Metrics Reported | Accuracy | Performance Compared to Baseline |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Dasgupta et al. (2018) [2] | Extraneous | N | Using Secondary Tasks | Authentic | Walking + Elementary Arithmetic | Applying | Patient | 10 | 10 | 100 | Physiological Data | Body (Leg) | Task | 16,291 | Filter (Correlation), Wrapper (Recursive Feature Elimination (RFE)) | RF, SVM | Feature-Level | Cross-Validation, Feature Selection | Reporting Another Index | Range of 0.93–1 | N |
| Dorum et al. (2020) [13] | Intrinsic | N | Controlling Difficulty | Experimental | Multiple Object Tracking (MOT) Task | Remembering | Patient | 154 | 144 | 93.5 | Physiological Data | Brain (fMRI) | Task | 432 | Unused | LDA | Feature-Level | Cross-Validation | Reporting Only Accuracy | 95.8 | 187.8 |
| Kohout et al. (2019) [4] | Extraneous | Task Performance | Using Secondary Tasks | Authentic | Pill Sorting + OSPAN | Applying | Healthcare Professional | 8 | 8 | 100 | Physiological Data | Body (Hand, Hip) | Task | 16 | Filter (Mann–Whitney-U Test), Wrapper (Forward Feature Selection (FFS)) | SVM | Feature-Level | Cross-Validation, Feature Selection | Reporting Another Index | 90.0 | 80 |
| Keles et al. (2021) [6] | Overall | Subjective Measurement (NASA-TLX) | N | Authentic | Laparoscopic Surgery tasks | Applying | Healthcare Professional | 33 | 28 | 84.9 | Physiological, Subjective Data | Brain (fNIRS) | Time | Unreported | Filter (Pearson Correlation) | SVM | Feature-Level | Cross-Validation, Feature Selection | Reporting Only Accuracy | 90.0 | 80 |
| Zhang et al. (2017) [29] | Intrinsic | Subjective Measurement (Perceived Task Difficulty), Task Performance | Controlling Difficulty | Authentic | Driving | Applying | Patient | 20 | 20 | 100 | Physiological, Subjective, Performance Data | Brain (EEG), Eye (BL, EC, EM, PU), Heart (ECG, RSP, PPG), Skin (GSR, ST), Body (Muscle) | Task | 286 | Filter (Principal Component Analysis (PCA)) | KNN | Feature-Level | Cross-Validation, Feature Selection | Reporting Only Accuracy | 84.4 | 68.9 |
| Zhou et al. (2020) [27] | Intrinsic | Subjective Measurement (NASA-TLX) | Controlling Difficulty | Authentic | Surgery | Applying | Healthcare Professional | 12 | 12 | 100 | Physiological, Subjective Data | Brain (EEG), Heart (HRV), Body (Hand, Muscle), Skin (GSR) | Task | 119 | Filter (Independent Component Analysis (ICA)) | SVM | Feature-Level | Cross-Validation, Feature Selection | Reporting Another Index | 83.2 | 66.4 |
| Beiramvand et al. (2023) [8] | Intrinsic | N | Controlling Difficulty | Experimental | N-Back Task | Remembering | General Public | 15 | 15 | Unreported | Physiological Data | Brain (EEG) | Time | Unreported | Unused | AdaBoost | Feature-Level | Cross-Validation, Data Augmentation | Reporting Another Index | 80.9 | 61.7 |
| Chen & Epps (2019) [35] | Intrinsic | Subjective Measurement (Unspecified) | Controlling Difficulty | Experimental | Elementary Arithmetic | Applying | General Public | 24 | 24 | 100 | Physiological Data | Body (Head) | Task | 336 | Filter | Parzen Window | Feature-Level | Cross-Validation, Feature Selection | Reporting Only Accuracy | 69.8 | 39.6 |
| Gogna et al. (2024) [31] | Intrinsic | Subjective Measurement (NASA-TLX) | Controlling Difficulty | Experimental | Game (spotting the differences in similar-looking pictures) | Analyzing | General Public | 15 | 15 | 100 | Physiological Data | Brain (EEG) | Task | 45 | Wrapper (Recursive Feature Elimination, RFE) | SVM | Feature-Level | Cross-Validation, Feature Selection, Hyperparameter Tuning | Reporting Another Index | 91.2 | 173.9 |
| Yu et al. (2024) [32] | Intrinsic | Subjective Measurement (NASA-TLX) | Controlling Difficulty | Experimental | Elementary Arithmetic | Applying | General Public | 20 | 20 | 100 | Physiological, Subjective Data | Brain (fMRI), Eye (BL, EM, PU) | Task | 300 | Filter | RF | Feature-Level | Cross-Validation, Feature Selection | Reporting Only Accuracy | 87.8 | 251.2 |
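
The table does not define how "Performance Compared to Baseline" is computed. Most of the listed values appear roughly consistent with the relative gain of accuracy over chance level, where chance is taken as 100/k percent for k balanced classes. The sketch below illustrates that reading only; the formula, the function name and the class counts are assumptions, not statements from the reviewed studies.

```python
def improvement_over_chance(accuracy_pct: float, n_classes: int) -> float:
    """Relative gain (%) of accuracy over chance, assuming balanced classes.

    This formula is an assumed reading of the table column, not a documented one.
    """
    chance_pct = 100.0 / n_classes
    return (accuracy_pct - chance_pct) / chance_pct * 100.0


# Class counts below are assumptions chosen for illustration.
binary_gain = round(improvement_over_chance(90.0, 2), 1)      # two-class case
four_class_gain = round(improvement_over_chance(87.8, 4), 1)  # four-class case
print(binary_gain, four_class_gain)
```

Under this reading, a classifier at chance scores 0, so models evaluated on different numbers of classes can be compared on one scale.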

Appendix B. Distribution of Quality Assessment Scores (QualSyst) Across Included Studies

| Element | Chen & Epps (2019) [35] | Dasgupta et al. (2018) [2] | Dorum et al. (2020) [13] | Keles et al. (2021) [6] | Kohout et al. (2019) [4] | Zhang et al. (2017) [29] | Zhou et al. (2020) [27] | Beiramvand et al. (2023) [8] | Gogna et al. (2024) [31] | Yu et al. (2024) [32] |
|---|---|---|---|---|---|---|---|---|---|---|
| 1. Objective | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 2. Study design | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 3. Group selection | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 4. Subject characteristics | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 5. Random allocation | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| 6. Investigator blinding | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| 7. Subject blinding | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A | N/A |
| 8. Outcome/exposure measures | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 9. Sample size | N/A | N/A | N/A | 1 | N/A | N/A | N/A | N/A | N/A | N/A |
| 10. Analytical methods | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 11. Variance estimates | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 12. Confounding control | 1 | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | 1 |
| 13. Results detail | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| 14. Conclusions | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 | 2 |
| Total | 19 | 19 | 19 | 20 | 19 | 20 | 19 | 19 | 19 | 19 |
| Average | 0.95 | 0.95 | 0.95 | 0.91 | 0.95 | 1.00 | 0.95 | 0.95 | 0.95 | 0.95 |
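
The Average row is consistent with the QualSyst summary score described by Kmet et al.: the total is divided by the maximum attainable score, which is 2 points for each applicable item. A minimal sketch, assuming that items without a numeric score are not applicable; the function name is illustrative.

```python
from typing import Optional, Sequence


def qualsyst_score(items: Sequence[Optional[int]]) -> float:
    """Summary score = sum of item scores / (2 * number of applicable items)."""
    applicable = [score for score in items if score is not None]
    return sum(applicable) / (2 * len(applicable))


# Item scores 1-14 copied from two columns of the table; None marks a not-applicable item.
keles = [2, 2, 2, 2, None, None, None, 2, 1, 2, 2, 1, 2, 2]
chen_epps = [2, 2, 2, 2, None, None, None, 2, None, 2, 2, 1, 2, 2]

print(round(qualsyst_score(keles), 2), round(qualsyst_score(chen_epps), 2))
# prints: 0.91 0.95
```

This also explains why Keles et al. has a higher total (20) but a lower average than most other studies: the sample size item is scored rather than excluded, so the maximum attainable score rises from 20 to 22.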

References

1. Brüggemann, T.; Ludewig, U.; Lorenz, R.; McElvany, N. Effects of mode and medium in reading comprehension tests on cognitive load. Comput. Educ. 2023, 192, 104649.
2. Dasgupta, P.; VanSwearingen, J.; Sejdic, E. “You can tell by the way I use my walk.” Predicting the presence of cognitive load with gait measurements. Biomed. Eng. Online 2018, 17, 122.
3. Sweller, J. Cognitive load theory. In International Encyclopedia of Education, 4th ed.; Elsevier: Amsterdam, The Netherlands, 2023; pp. 127–134.
4. Kohout, L.; Butz, M.; Stork, W. Using Acceleration Data for Detecting Temporary Cognitive Overload in Health Care Exemplified Shown in a Pill Sorting Task. In Proceedings of the 32nd IEEE International Symposium on Computer-Based Medical Systems (IEEE CBMS), Cordoba, Spain, 5–7 June 2019; pp. 20–25.
5. Skulmowski, A.; Xu, K.M. Understanding Cognitive Load in Digital and Online Learning: A New Perspective on Extraneous Cognitive Load. Educ. Psychol. Rev. 2021, 34, 171–196.
6. Keles, H.O.; Cengiz, C.; Demiral, I.; Ozmen, M.M.; Omurtag, A.; Sakakibara, M. High density optical neuroimaging predicts surgeons’s subjective experience and skill levels. PLoS ONE 2021, 16, e0247117.
7. Kucirkova, N.; Gerard, L.; Linn, M.C. Designing personalised instruction: A research and design framework. Br. J. Educ. Technol. 2021, 52, 1839–1861.
8. Beiramvand, M.; Lipping, T.; Karttunen, N.; Koivula, R. Mental Workload Assessment Using Low-Channel Pre-Frontal EEG Signals. In Proceedings of the 2023 IEEE International Symposium on Medical Measurements and Applications, Jeju, Republic of Korea, 14–16 June 2023; pp. 1–5.
9. Anderson, L.W.; Krathwohl, D.R. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Complete edition; Addison Wesley Longman: New York, NY, USA, 2021.
10. Reddy, L.V. Personalized recommendation framework in technology enhanced learning. J. Emerg. Technol. Innov. Res. 2018, 5, 746–750.
11. Mu, S.; Cui, M.; Huang, X. Multimodal Data Fusion in Learning Analytics: A Systematic Review. Sensors 2020, 20, 6856.
12. Wu, C.; Liu, Y.; Guo, X.; Zhu, T.; Bao, Z. Enhancing the feasibility of cognitive load recognition in remote learning using physiological measures and an adaptive feature recalibration convolutional neural network. Med. Biol. Eng. Comput. 2022, 60, 3447–3460.
13. Dørum, E.S.; Kaufmann, T.; Alnæs, D.; Richard, G.; Kolskår, K.K.; Engvig, A.; Sanders, A.-M.; Ulrichsen, K.; Ihle-Hansen, H.; Nordvik, J.E.; et al. Functional brain network modeling in sub-acute stroke patients and healthy controls during rest and continuous attentive tracking. Heliyon 2020, 6, 11.
14. Page, M.J.; McKenzie, J.; Bossuyt, P.; Boutron, I.; Hoffmann, T.; Mulrow, C.; Moher, D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. arXiv 2020.
15. Dong, H.; Lio, J.; Sherer, R.; Jiang, I. Some Learning Theories for Medical Educators. Med. Sci. Educ. 2021, 31, 1157–1172.
16. Kmet, L.M.; Lee, R.C.; Cook, L.S. Standard Quality Assessment Criteria for Evaluating Primary Research Papers From a Variety of Fields. (HTA Initiative #13); Alberta Heritage Foundation for Medical Research: Edmonton, AB, Canada, 2004.
17. Sweller, J. Element interactivity and intrinsic, extraneous, and germane cognitive load. Educ. Psychol. Rev. 2010, 22, 123–138.
18. Sarkhani, N.; Beykmirza, R. Patient Education Room: A New Perspective to Promote Effective Education. Asia Pac. J. Public. Heal. 2022, 34, 881–882.
19. Lee, L.; Packer, T.L.; Tang, S.H.; Girdler, S. Self-management education programs for age-related macular degeneration: A systematic review. Australas. J. Ageing 2008, 27, 170–176.
20. Sweller, J.; Ayres, P.; Kalyuga, S. Cognitive Load Theory; Springer: Berlin/Heidelberg, Germany, 2011.
21. de Jong, T. Cognitive load theory, educational research, and instructional design: Some food for thought. Instr. Sci. 2010, 38, 105–134.
22. Sweller, J.; Ayres, P.; Kalyuga, S. The Expertise Reversal Effect. In Cognitive Load Theory, Explorations in the Learning Sciences; Instructional Systems and Performance Technologies; Sweller, J., Ayres, P., Kalyuga, S., Eds.; Springer: New York, NY, USA, 2011; pp. 155–170.
23. Zu, T.; Hutson, J.; Loschky, L.C.; Rebello, N.S. Using eye movements to measure intrinsic, extraneous, and germane load in a multimedia learning environment. J. Educ. Psychol. 2020, 112, 1338.
24. Paas, F.; Renkl, A.; Sweller, J. Cognitive Load Theory: Instructional Implications of the Interaction between Information Structures and Cognitive Architecture. Instr. Sci. 2004, 32, 1–8.
25. Haapalainen, E.; Kim, S.; Forlizzi, J.F.; Dey, A.K. Psycho-Physiological Measures for Assessing Cognitive Load. In Proceedings of the 12th ACM international conference on Ubiquitous computing, Copenhagen, Denmark, 26–29 September 2010; pp. 301–310.
26. Guo, J.; Dai, Y.; Wang, C.; Wu, H.; Xu, T.; Lin, K. A physiological data-driven model for learners’ cognitive load detection using HRV-PRV feature fusion and optimized XGBoost classification. Softw. Pr. Exp. 2020, 50, 2046–2064.
27. Zhou, T.; Cha, J.S.; Gonzalez, G.; Wachs, J.P.; Sundaram, C.P.; Yu, D. Multimodal Physiological Signals for Workload Prediction in Robot-assisted Surgery. ACM Trans. Hum. Robot. Interact. 2020, 9, 12.
28. Kumar, V.; Minz, S. Feature selection. SmartCR 2014, 4, 211–229.
29. Zhang, L.; Wade, J.; Bian, D.; Fan, J.; Swanson, A.; Weitlauf, A.; Warren, Z.; Sarkar, N. Cognitive Load Measurement in a Virtual Reality-Based Driving System for Autism Intervention. IEEE Trans. Affect. Comput. 2017, 8, 176–189.
30. Atrey, P.K.; Hossain, M.A.; El Saddik, A.; Kankanhalli, M.S. Multimodal fusion for multimedia analysis: A survey. Multimedia Syst. 2010, 16, 345–379.
31. Gogna, Y.; Tiwari, S.; Singla, R. Evaluating the performance of the cognitive workload model with subjective endorsement in addition to EEG. Med. Biol. Eng. Comput. 2024, 62, 2019–2036.
32. Yu, R.; Chan, A. Effects of player–video game interaction on the mental effort of older adults with the use of electroencephalography and NASA-TLX. Arch. Gerontol. Geriatr. 2024, 124, 105442.
33. Kalyuga, S. Cognitive Load in Adaptive Multimedia Learning. In New Perspectives on Affect and Learning Technologies; Springer: New York, NY, USA, 2011; pp. 203–215.
34. Mihalca, L.; Salden, R.J.; Corbalan, G.; Paas, F.; Miclea, M. Effectiveness of cognitive-load based adaptive instruction in genetics education. Comput. Hum. Behav. 2011, 27, 82–88.
35. Chen, S.; Epps, J. Atomic Head Movement Analysis for Wearable Four-Dimensional Task Load Recognition. IEEE J. Biomed. Health Inform. 2019, 23, 2464–2474.

| Database | Query |

|---|---|

| Scopus | TITLE-ABS-KEY ((“cognitive load”) AND (physiolog* OR psychophysiolog* OR bio*) AND (data) AND (classif*)) |

| Web of Science | ALL=((“cognitive load”) AND (physiolog* OR psychophysiolog* OR bio*) AND (data) AND (classif*)) |

| PubMed | (“cognitive load”) AND (physiolog* OR psychophysiolog* OR bio*) AND (data) AND (classif*) |

| EMBASE | ((“cognitive load”) AND (physiolog* OR psychophysiolog* OR bio*) AND (data) AND (classification))/br |

| PsycINFO | (“cognitive load”) AND (physiolog* OR psychophysiolog* OR bio*) AND (data) AND (classification) |

| Criteria | Inclusion | Exclusion |

|---|---|---|

| Time period | Published from May 2014 to 2024 | |

| Language | English | Not English |

| Type of article | Peer-reviewed journal publications | Non-peer-reviewed publications |

| Type of study | Full-text research papers | Commentaries, editorials, book chapters, letters |

| Context | Healthcare context | Not related to healthcare context (e.g., data analysis, machine learning, studying, manufacturing) |

| Study focus | Studies using physiological data collected from experiments to develop classification models of cognitive load | Not collecting data; not using physiological data |

| Research Question | Component | Category | Subcategory |
|---|---|---|---|
| 1. Cognitive Load Tasks Design | Cognitive load | Type | Extraneous, Intrinsic, Overall |
| | | Validation | Task Performance, Subjective Measurement |
| | Tasks | Manipulation | Using Secondary Tasks, Controlling Difficulty |
| | | Type | Authentic, Experimental |
| | | Bloom Taxonomy | Remembering, Understanding, Applying, Analyzing, Evaluating, Creating |
| 2. Data Collection | Participants | Type | Patient, Healthcare Professional, General Public |
| | Data | Type | Physiological Data, Subjective Data, Performance Data |
| | Data Preprocessing | Instance Unit | Task, Time |
| | | Feature Selection | Filter, Wrapper |
| 3. Classification Models Development | Classification Model | Specific Model | RF, SVM, LDA, KNN, AdaBoost, Parzen Window |
| | | Overfitting Prevention Methods | Cross-validation, Feature Selection, Hyperparameter Tuning, Dyadic Interaction |
| | Performance of Model | Performance Metrics Reported | Reporting Another Index, Reporting Only Accuracy |

| Type of CL | Task Manipulation | Number of Studies |

|---|---|---|

| Intrinsic Load | Controlling task difficulty | 7 |

| Extraneous Load | Using secondary task | 2 |

| Overall Load | Not used | 1 |

| CL Validation | | Number of Studies |
|---|---|---|
| Only Task Design | | 3 |
| Task Performance | | 1 |
| Subjective Measurement | NASA-TLX | 4 |
| | Unspecified | 1 |
| Mixed | Perceived Task Difficulty and Task Performance | 1 |

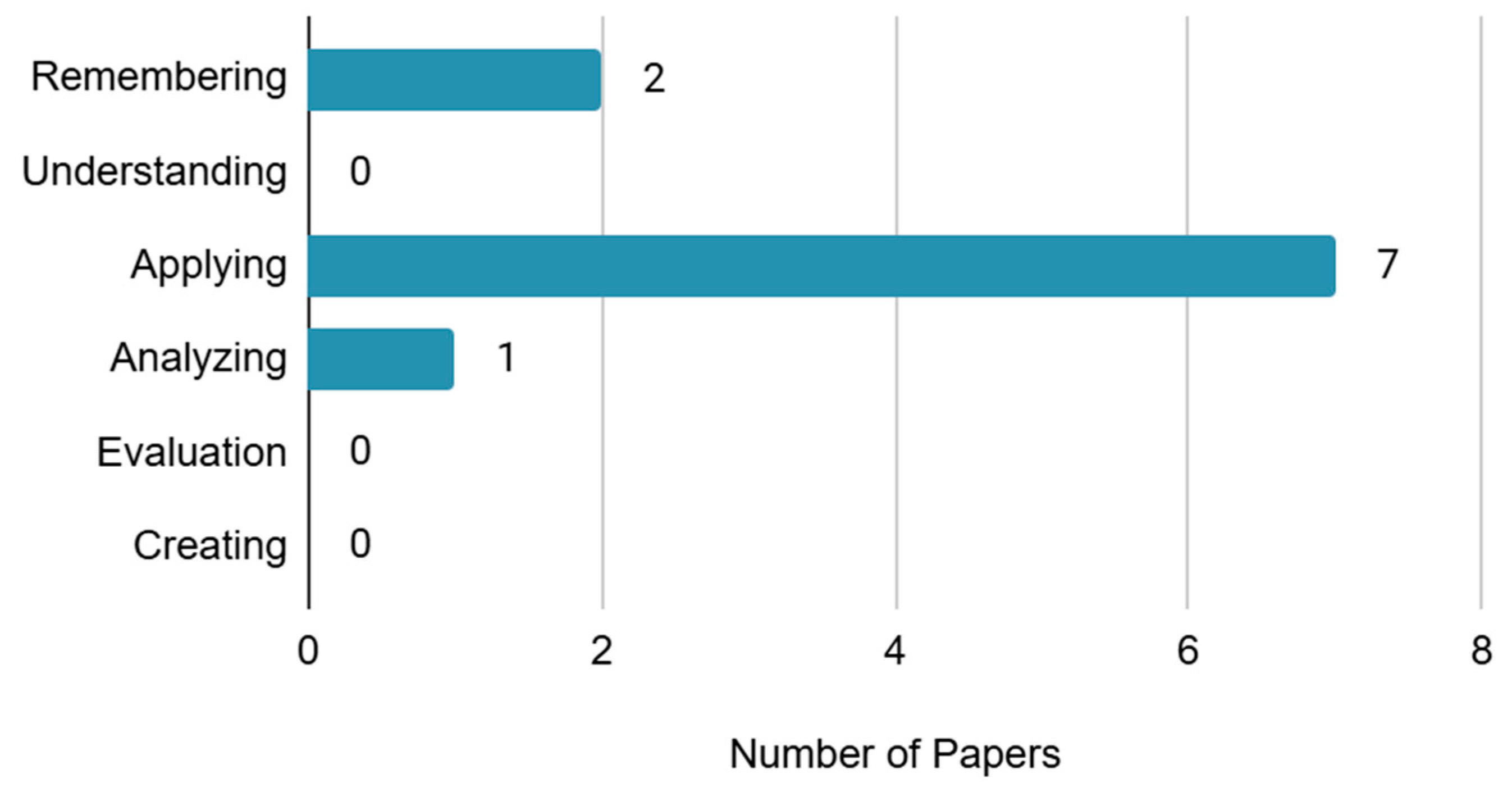

| Category | Subcategory | Number of Studies |
|---|---|---|
| Task Type | Authentic | 5 |
| | Experimental | 5 |
| Task Type according to Bloom Taxonomy | Remembering | 2 |
| | Understanding | 0 |
| | Applying | 7 |
| | Analyzing | 1 |
| | Evaluating | 0 |
| | Creating | 0 |

| Data Category | Data Sub-Category | | Number of Studies |
|---|---|---|---|
| Physiological Data | Brain | EEG, fMRI, fNIRS | 7 |
| | Body | Hand, Hip, Head, Leg, Muscle | 5 |
| | Eye | BL, EM, PU | 2 |
| | Heart | ECG, HRV, RSP, PPG | 2 |
| | Skin | GSR (EDA), ST | 2 |
| Subjective Data | NASA-TLX | | 4 |
| | Perceived task difficulty | | 1 |
| Performance Data | Process | Driving | 1 |
| | Result | Driving | 1 |

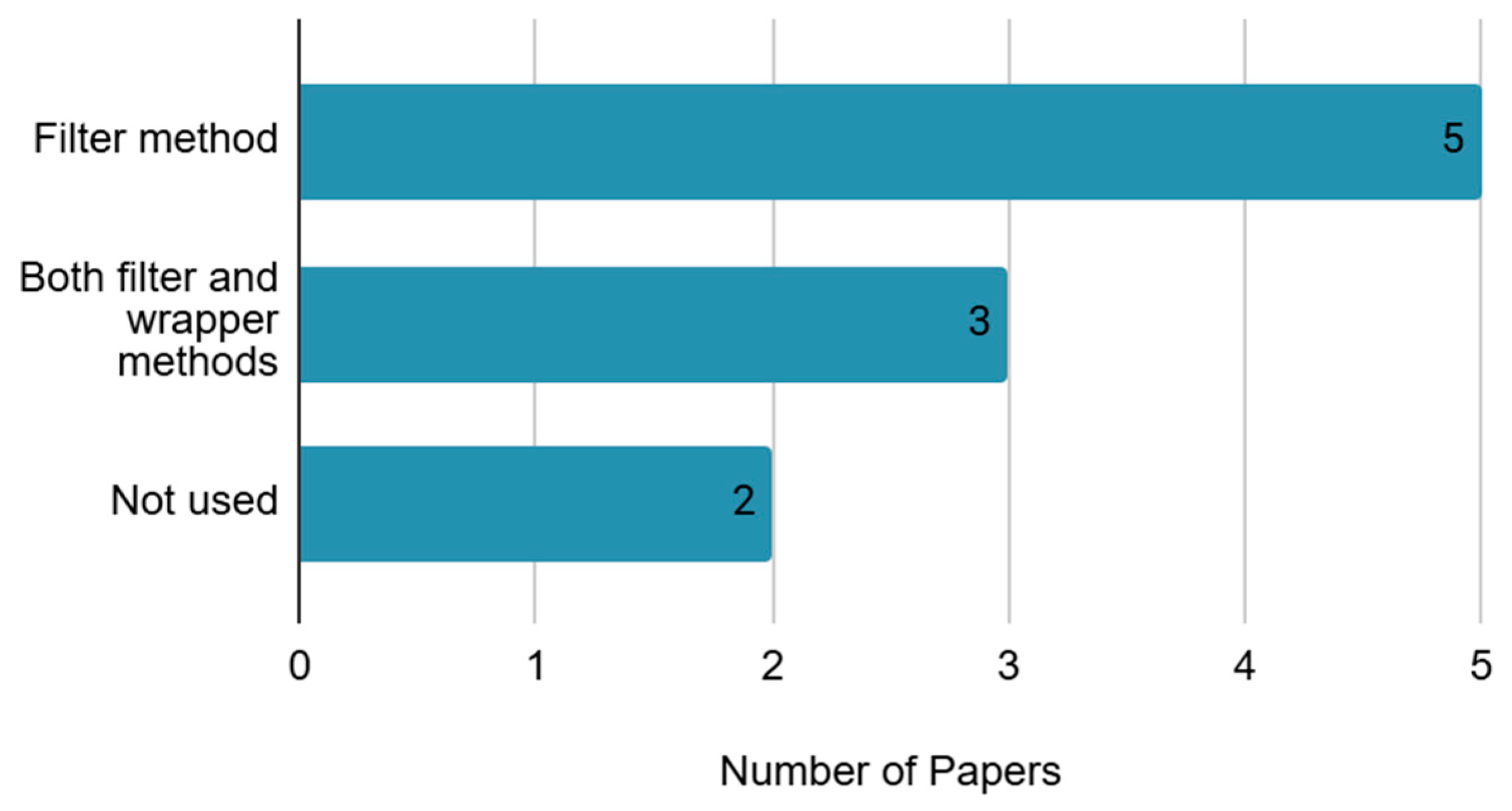

| Category | Sub-Category | Number of Studies |
|---|---|---|
| Instance Type | Task level | 8 |
| | Time level | 2 |
| Feature Selection Method | Filter method | 5 |
| | Both filter and wrapper methods | 3 |
| | Not used | 2 |
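
A filter method scores each feature independently of the classifier and keeps the best-scoring ones. The sketch below illustrates the idea with Pearson correlation, which Appendix A lists as one of the filters used. The data are invented toy values and the function names are hypothetical; none of it reproduces a reviewed study.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y) if sd_x and sd_y else 0.0


def filter_select(features: List[List[float]], labels: List[int], k: int) -> List[int]:
    """Return the indices of the k columns with the largest |r| against the labels."""
    scores = []
    for j in range(len(features[0])):
        column = [row[j] for row in features]
        scores.append((abs(pearson(column, labels)), j))
    top = sorted(scores, reverse=True)[:k]
    return sorted(j for _, j in top)


# Toy data: rows are instances, columns are three hypothetical physiological features.
X = [[0.1, 5.0, 1.0],
     [0.2, 4.0, 1.1],
     [0.8, 5.5, 0.9],
     [0.9, 4.5, 1.0]]
y = [0, 0, 1, 1]  # 0 = low load, 1 = high load

selected = filter_select(X, y, k=1)
print(selected)
```

A wrapper method such as RFE or FFS differs in that it repeatedly trains the classifier on candidate feature subsets, which costs more computation but accounts for interactions between features.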

| Category | Number of Studies |

|---|---|

| Support Vector Machine (SVM) | 4 |

| Support Vector Machine (SVM), Random Forest (RF) | 1 |

| Random Forest (RF) | 1 |

| K-Nearest Neighbors (KNN) | 1 |

| Adaptive Boosting (AdaBoost) | 1 |

| Linear Discriminant Analysis (LDA) | 1 |

| Distribution-Based Classifier (Parzen Window) | 1 |

| Category | Number of Studies |

|---|---|

| Cross-Validation, Feature Selection | 7 |

| Cross-Validation, Feature Selection, Hyperparameter Tuning | 1 |

| Cross-Validation, Data Augmentation | 1 |

| Cross-Validation | 1 |
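
Every study in the table relied on cross-validation. As a rough illustration of the procedure, the sketch below runs k-fold cross-validation around a small k-nearest-neighbors classifier using only the standard library. The data are synthetic, and the fold count, neighbor count and function names are assumptions made for the example.

```python
import random
from collections import Counter
from typing import List, Sequence


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Shuffle the indices 0..n-1 and deal them into k disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def knn_predict(train_x: Sequence[Sequence[float]], train_y: Sequence[int],
                x: Sequence[float], k: int = 3) -> int:
    """Majority vote among the k training points closest to x (squared Euclidean)."""
    order = sorted(range(len(train_x)),
                   key=lambda i: sum((a - b) ** 2 for a, b in zip(train_x[i], x)))
    return Counter(train_y[i] for i in order[:k]).most_common(1)[0][0]


def cross_val_accuracy(X: List[List[float]], y: List[int], folds: int = 5) -> float:
    """Average accuracy when each fold is held out once for testing."""
    correct = 0
    for test_idx in k_fold_indices(len(X), folds):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        train_x = [X[i] for i in train_idx]
        train_y = [y[i] for i in train_idx]
        correct += sum(knn_predict(train_x, train_y, X[i]) == y[i] for i in test_idx)
    return correct / len(X)


# Synthetic, well-separated two-class data standing in for extracted features.
rng = random.Random(42)
X = ([[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(30)]
     + [[rng.gauss(5, 1), rng.gauss(5, 1)] for _ in range(30)])
y = [0] * 30 + [1] * 30

accuracy = cross_val_accuracy(X, y)
print(accuracy)
```

When feature selection or hyperparameter tuning is combined with cross-validation, those steps are generally fitted on the training folds only; fitting them on the full data set leaks information from the held-out fold and inflates the estimate.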

| Category | Number of Studies |

|---|---|

| Reporting Only Accuracy | 5 |

| Reporting Accuracy with Another Index | 5 |
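
Accuracy alone can hide poor detection of the minority class, which is one reason an additional index is informative. The sketch below computes accuracy, precision, recall and F1 for a binary case. The labels are invented, and treating class 1 as "high load" is an assumption of the example.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1, treating label 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs),
            "precision": precision, "recall": recall, "f1": f1}


# Imbalanced toy labels: nine low-load instances and one high-load instance.
y_true = [0] * 9 + [1]
always_low = [0] * 10  # a classifier that never predicts high load

metrics = binary_metrics(y_true, always_low)
print(metrics)
```

Here the classifier reaches 0.9 accuracy while its recall and F1 for the high-load class are both 0, so the accuracy figure says little about whether overload would be detected.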

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, H.; Kim, M.; Han, Y. Models for Classifying Cognitive Load Using Physiological Data in Healthcare Context: A Scoping Review. Appl. Sci. 2025, 15, 9155. https://doi.org/10.3390/app15169155
