1. Introduction

In recent years, with the rapid development of the emerging low-altitude economy, the angular position information of aerial vehicles such as unmanned aerial vehicles (UAVs) has drawn attention in various systems and services. Because active radars adapt poorly to complex urban electromagnetic environments and ordinary UAVs have a small radar cross-section that is difficult to capture, passive localization technology has gradually become the preferred solution for UAV localization [1]. Among the passive schemes, angle estimation based on the sound source is regarded as a key method. Sound source localization using microphone array technology [2] has become an important way to obtain critical information in complex environments [3]: it supports real-time localization by collecting signals through an array of multiple sensors and then calculating the incidence angle of the sound source [4].

Traditional direction of arrival (DOA) estimation techniques can be categorized into four types: beamforming methods [5,6], subspace decomposition methods, sparse reconstruction-based methods, and minimum variance-based methods.

Beamforming methods are fundamentally constrained by the Rayleigh resolution limit, which restricts their ability to resolve closely spaced sources [7]. To overcome this limitation and enhance the estimation accuracy, a class of algorithms based on eigenvalue decomposition (EVD) [8] has been developed, namely subspace decomposition methods, including the well-known multiple signal classification (MUSIC) and the estimation of signal parameters via rotational invariance techniques (ESPRIT) [9,10]. The MUSIC algorithm conducts EVD [11] on the covariance matrix of the received signals to separate the signal subspace and the noise subspace, which are mutually orthogonal [12]. By exploiting this orthogonality, MUSIC constructs a spatial spectrum in which the peaks correspond to the directions of arrival. The spectrum is searched to identify these peaks, thereby yielding high-resolution DOA estimates. In contrast, the ESPRIT algorithm [13] leverages a specially designed array geometry composed of two identical subarrays with a known and constant displacement between corresponding elements. This configuration ensures that the signal subspaces of the two subarrays differ only by a rotation, that is, a per-source phase shift determined by the DOA of the incoming plane wave. By solving the resulting generalized eigenvalue problem, the DOAs of the incident signals can be accurately estimated without the need for a spectral search [14].

Beyond subspace-based techniques such as MUSIC and ESPRIT, various other classes of algorithms [15] have been proposed to further improve DOA estimation performance under challenging conditions, such as a low signal-to-noise ratio (SNR), limited snapshots, or coherent sources.

Sparse reconstruction-based methods, inspired by compressive sensing theory, exploit the inherent sparsity of signal sources in the angular domain [16]. By discretizing the angle space into a fine grid, DOA estimation is formulated as a sparse signal recovery problem [17]. Techniques such as L1-norm minimization and orthogonal matching pursuit have been widely adopted in this context. These methods can provide super-resolution DOA estimates even with a limited number of array elements or snapshots. However, their performance depends heavily on the grid resolution, and they may suffer from basis mismatch errors when the true DOA does not align with the predefined grid.

Minimum variance-based methods [18], such as the minimum variance distortionless response (MVDR) beamformer, aim to suppress interference and noise while maintaining unity gain in the desired signal direction [19]. MVDR constructs an adaptive spatial filter that minimizes the output power subject to a distortionless constraint in the steering direction. While MVDR offers improved resolution over conventional beamforming, its performance degrades in the presence of array calibration errors or coherent sources.

With high computational capacity and advanced modeling capabilities, deep learning technology has shown significant research potential in the field of sound source localization [20]. Its advantage is that it can automatically extract effective information from a large amount of raw data without relying on hand-crafted features. Meanwhile, under strong noise and reverberation conditions, deep learning models can achieve a robust, data-driven estimation of the sound source location, which is superior to that of traditional methods [21]. The main approaches include classification models, Transformer and hybrid architectures, and end-to-end raw signal modeling.

Currently, most deep learning-based DOA estimation methods use classification models for angle prediction [22]. For example, some studies have effectively predicted the true angle of arrival using deep neural networks (DNNs) trained with the lower triangular elements of the covariance matrix as the input feature [23]. With the widespread application of convolutional neural networks (CNNs), researchers can further learn spectral features through a CNN to improve model performance and training efficiency [24]. Subsequently, various deep CNN-based frameworks have been proposed, such as DeepMUSIC [25], which divides the DOA space into multiple sub-regions and estimates them separately. In parallel, regression-based networks have gained traction for their continuous angle estimation, which eliminates the quantization errors inherent in classification approaches. Techniques such as multi-layer perceptron (MLP) regression heads and end-to-end convolutional regression architectures demonstrate sub-degree precision in 2D azimuth-elevation estimation [26], proving especially valuable for full-spatial field analysis. To improve the estimation accuracy, some researchers propose extracting the real part, imaginary part, and phase information of the covariance matrix of multi-channel signals and splicing them together as inputs to enhance the DOA estimation ability under low-SNR conditions [27].

With the widespread adoption of Transformer networks, researchers have also attempted to apply them to the DOA field [28], giving rise to Transformer and hybrid architectures, in which self-attention mechanisms capture long-range dependencies across sensor arrays and enable joint spatial–spectral feature learning. This has contributed to the achievement of super-resolution DOA estimation [29], with recent variants such as cross-attention Transformers achieving real-time performance while maintaining millimeter-level accuracy in near-field scenarios [30].

However, the above methods inevitably rely on the traditional DOA processing flow in the data preprocessing stage, which not only increases the computational overhead but may also lead to the loss of some feature information, thus affecting model performance. In this regard, some studies have proposed feeding the original in-phase (I) and quadrature (Q) signals directly into the neural network, namely end-to-end raw signal modeling, which reduces the preprocessing cost, improves the estimation accuracy, and has achieved good results [31]. Notably, these end-to-end approaches have catalyzed advancements in lightweight 2D DOA systems for UAVs. Mature frameworks now integrate time-domain convolutional networks (TCNs) with spatial covariance features, enabling real-time azimuth and elevation tracking on embedded platforms [32]. Such systems compensate for UAV ego-noise through adversarial training and leverage multi-array spatial diversity to resolve front-back ambiguities, which is critical for navigation in complex urban environments [33].

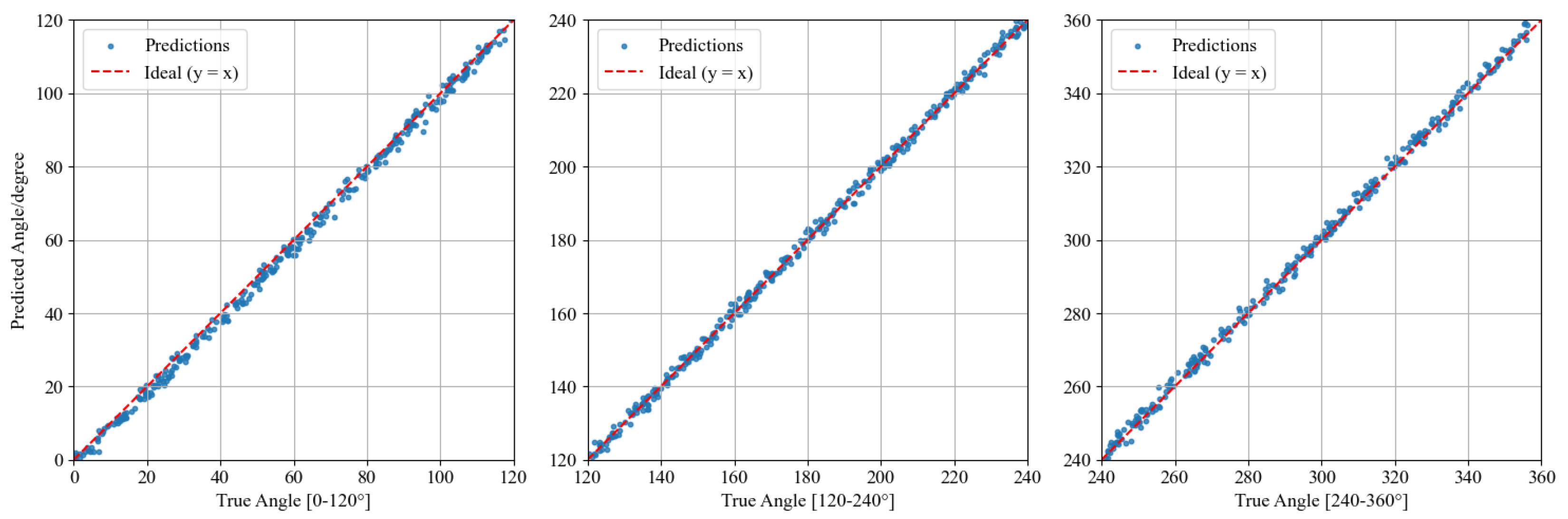

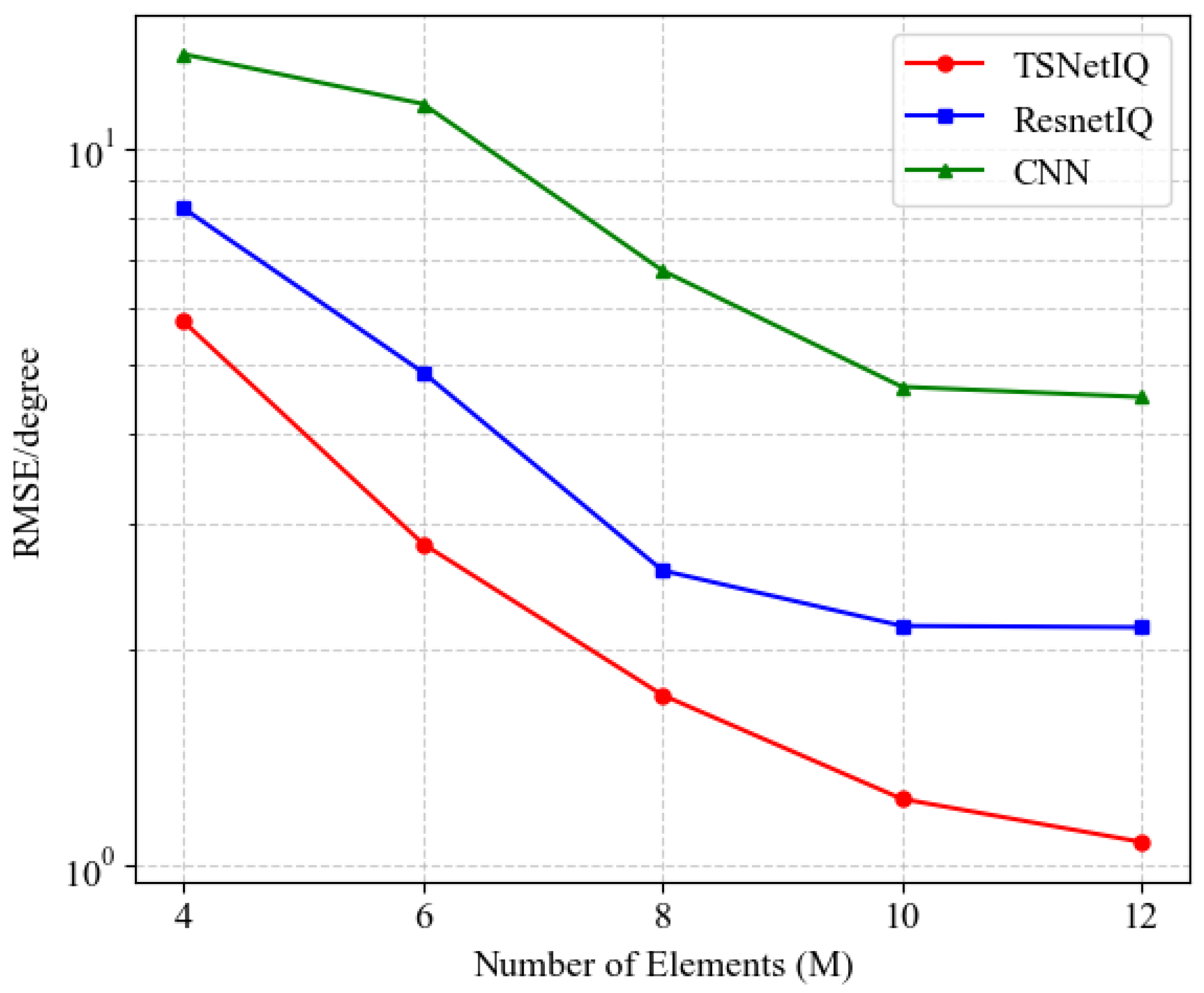

Based on end-to-end raw signal modeling, this paper proposes a new neural network model named TSNetIQ. This study takes the original audio signals as input and models the DOA estimation task as a regression problem, so that the model outputs continuous angle values between 0 and 360°, avoiding the errors caused by angle discretization in traditional classification methods. In addition, the regression model is lighter and has a higher angular resolution than the classification model. In terms of network structure design, the advantages of the Transformer and the CNN are combined, and a squeeze-and-excitation (SE) module is introduced to enhance the modeling of inter-channel information [34], thereby further improving the accuracy of DOA estimation. The Pyroomacoustics package (Python 3.12.9) [35] is used to construct a realistic acoustic simulation environment for synthesizing training datasets, thereby enhancing the credibility of the experimental results. Meanwhile, this article systematically analyzes the performance of the proposed network under different SNRs, sampling frequencies, and numbers of array elements, and the results show that the proposed method has a stronger generalization ability and a smaller estimation error than a traditional CNN and ResNet trained on the same input mode.

The main contributions of this study are as follows:

- A novel DOA estimation neural network architecture based on the original signal input, named TSNetIQ, is proposed. The architecture combines the structural advantages of the Transformer and the CNN and adds an SE module to enhance the feature extraction ability, which can significantly improve the accuracy of DOA estimation.

- By reformulating the DOA problem as a regression task, the proposed algorithm directly predicts continuous angle values between 0 and 360°, avoiding boundary classification errors. This strategy can offer higher resolution and a smaller model size.

- A dynamic simulation environment is constructed by rotating a microphone array in an anechoic chamber to simulate sound source reception at various angles. This procedure generates diverse datasets with random angles, enhancing the validity and interpretability of the training process.

3. Proposed DOA Estimation Method

3.1. Preprocessing and IQ Extraction

Unlike traditional DOA estimation methods based on covariance matrices, this paper directly uses the IQ components of the received signals as the input. For the original signal $x(n)$, which is the discrete signal received by each array element, its Q signal $\hat{x}(n)$ can be obtained using the discrete Hilbert transform. Furthermore, the analytic signal $z(n)$ can be derived as follows:

$$z(n) = x(n) + j\,\hat{x}(n),$$

where $x(n)$ represents the I signal, which is numerically equal to the original signal. After that, the I and Q components are obtained by

$$I(n) = \operatorname{Re}\{z(n)\}, \qquad Q(n) = \operatorname{Im}\{z(n)\}.$$

Then, the IQ components of all array elements are sequentially concatenated to form the input matrix. This matrix has two rows (I and Q) per array element and one column per sample. Using this approach, directional information is directly extracted from the raw signals.
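The IQ extraction described above can be sketched in a few lines. This is a minimal, dependency-free illustration rather than the paper's code: it assumes the common frequency-domain form of the discrete Hilbert transform (the same approach `scipy.signal.hilbert` takes), an interleaved row order (I, Q, I, Q, ...), and a hypothetical four-microphone test tone. The helper names `dft`, `analytic_signal`, and `iq_matrix` are illustrative.

```python
import cmath
import math


def dft(values, inverse=False):
    """Naive O(N^2) discrete Fourier transform (adequate for short demo signals)."""
    n = len(values)
    sign = 1.0 if inverse else -1.0
    out = []
    for k in range(n):
        acc = 0j
        for t, v in enumerate(values):
            acc += v * cmath.exp(sign * 2j * math.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def analytic_signal(x):
    """Return z[n] = x[n] + j * H{x}[n] via the frequency-domain Hilbert method."""
    n = len(x)
    spectrum = dft(x)
    # Keep DC (and Nyquist for even n), double positive bins, zero negative bins.
    weights = [0.0] * n
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
    for k in range(1, (n + 1) // 2):
        weights[k] = 2.0
    return dft([s * w for s, w in zip(spectrum, weights)], inverse=True)


def iq_matrix(channels):
    """Stack one I row and one Q row per array element (assumed row order)."""
    rows = []
    for x in channels:
        z = analytic_signal(x)
        rows.append([v.real for v in z])  # I: numerically equal to the input
        rows.append([v.imag for v in z])  # Q: Hilbert transform of the input
    return rows


# Hypothetical test data: 4 microphones, 64 samples, 4 tone cycles per frame,
# each microphone seeing the tone with a different phase.
n_samples, cycles = 64, 4
mics = [
    [math.cos(2 * math.pi * cycles * t / n_samples + phase) for t in range(n_samples)]
    for phase in (0.0, 0.3, 0.6, 0.9)
]
features = iq_matrix(mics)
print(len(features), len(features[0]))  # 8 rows (2 per microphone), 64 columns
```

For a pure tone, the Q row is simply the I row shifted by a quarter period, so the inter-microphone phase differences that encode the direction are preserved in both rows.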

Following the extraction of the IQ components, a dedicated preprocessing pipeline is applied to prepare the data for neural network input. First, a fourth-order Butterworth bandpass filter is applied to preserve frequency components near 2000 Hz while removing out-of-band frequencies; because the acoustic source emits a pure 2000 Hz sinusoidal tone, this eliminates external noise and interference. Subsequently, the denoised signal is transformed into its analytic representation using the Hilbert transform to extract the IQ components, and the real and imaginary parts are concatenated to form a matrix. Prior to network input, all angle data are normalized to a range from 0 to 1. This normalization uniformly scales the input features, mitigating the influence of potential variations in signal amplitude across different recordings and ensuring stable gradient behavior during neural network optimization. Finally, the IQ components and the normalized angles are concatenated to form an input feature matrix for the model.

3.2. DOA Estimation Using TSNetIQ

A CNN is a deep learning model that is specifically designed to process data with grid-like structures. It is widely applied to feature extraction from data such as images and audio. A CNN extracts local features through convolutional layers and uses parameter sharing and local connection mechanisms to significantly reduce the number of model parameters, thereby improving computational efficiency and generalization ability.

The neural network in this study uses a CNN as its main body and forms an angle regression model, named TSNetIQ, which integrates the CNN and Transformer mechanisms. The goal is to extract spatiotemporal features from multi-channel signals and achieve high-precision direction estimation. The overall architecture consists of five 2D convolutional layers, an SE attention module, positional encoding, a Transformer encoder, and a fully connected output layer. Unlike traditional CNN architectures, the model avoids pooling layers, except for the pooling layer in the SE module and the average pooling layer at the tail of the model, because additional pooling layers would degrade the performance of the model. A three-layer 2D convolutional structure was also tested, but it exhibited an insufficient feature extraction capability, leading to inferior performance compared to the five-layer 2D convolutional structure. Taking the case of four array elements as an example, the specific structure of the TSNetIQ network is as follows.

As shown in Figure 2, the front end of the network is composed of five 2D convolutional layers, which extract the local spatial features of the signal layer by layer. The first layer uses a convolution kernel with a size of (8,7) to enhance joint time–frequency perception, while the remaining four convolutional layers adopt progressively smaller kernel sizes ((1,5), (1,3), (1,3), (1,1)), combined with a 1 × 2 stride to control the down-sampling rate. Each convolutional layer is followed by an activation layer and a normalization layer, where the normalization layer uses the BatchNorm2d function and the activation layer uses the ReLU function to accelerate convergence and improve nonlinear expression capability.
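To make the effect of the kernel sizes and the 1 × 2 stride concrete, the feature-map size after each convolution can be traced with the standard output-size formula. The sketch below rests on assumptions not stated in the text: no padding, the 1 × 2 stride applied to all five layers, and a hypothetical input of 8 rows (I and Q for four array elements) by 1024 samples.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output length along one axis of a convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1


# Kernel sizes as listed in the text; stride (1, 2) assumed for every layer.
KERNELS = [(8, 7), (1, 5), (1, 3), (1, 3), (1, 1)]
STRIDE = (1, 2)


def trace_shapes(height, width):
    """Return the (height, width) of the feature map before and after each layer."""
    shapes = [(height, width)]
    for k_h, k_w in KERNELS:
        height = conv_out(height, k_h, STRIDE[0])
        width = conv_out(width, k_w, STRIDE[1])
        shapes.append((height, width))
    return shapes


# Hypothetical input: 8 rows x 1024 samples.
shapes = trace_shapes(8, 1024)
for layer, shape in enumerate(shapes):
    print(layer, shape)
# 0 (8, 1024)
# 1 (1, 509)
# 2 (1, 253)
# 3 (1, 126)
# 4 (1, 62)
# 5 (1, 31)
```

Under these assumptions, the (8,7) kernel collapses all eight IQ rows into a single row in the first layer, and the later (1,k) kernels only shorten the time axis, roughly halving it at each layer.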

After the fifth convolutional layer, an SE attention module is introduced to enhance the sensitivity of the TSNetIQ model to channel features. This module generates attention weights for each channel through global average pooling and a two-layer fully connected network, achieving the adaptive recalibration of features, reinforcing effective features, and suppressing redundant information.
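The squeeze, excitation, and scaling steps can be sketched with plain lists. This is an illustration of the generic SE pattern, not the paper's implementation: the ReLU between the two fully connected layers and the sigmoid gate follow the usual SE design and are assumptions here, and all weights and feature values are hypothetical.

```python
import math


def se_recalibrate(feature_maps, w1, b1, w2, b2):
    """Apply squeeze-and-excitation to C channels, each a flat list of activations."""
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(channel) / len(channel) for channel in feature_maps]
    # Excitation, layer 1: fully connected + ReLU (bottleneck).
    hidden = [
        max(0.0, sum(w * s for w, s in zip(row, squeezed)) + b)
        for row, b in zip(w1, b1)
    ]
    # Excitation, layer 2: fully connected + sigmoid gives a gate in (0, 1) per channel.
    gates = [
        1.0 / (1.0 + math.exp(-(sum(w * h for w, h in zip(row, hidden)) + b)))
        for row, b in zip(w2, b2)
    ]
    # Scale: multiply every activation of a channel by that channel's gate.
    scaled = [[g * v for v in channel] for g, channel in zip(gates, feature_maps)]
    return gates, scaled


# Hypothetical example: 4 channels with 2 activations each, bottleneck of 2 units.
feature_maps = [[1.0, 3.0], [0.0, 0.0], [2.0, 2.0], [-1.0, 1.0]]
w1 = [[0.5, 0.0, 0.5, 0.0], [0.0, 1.0, 0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, 0.0]]
b2 = [0.0, 0.0, 0.0, 0.0]

gates, scaled = se_recalibrate(feature_maps, w1, b1, w2, b2)
print([round(g, 3) for g in gates])  # [0.881, 0.5, 0.119, 0.5]
```

Channels with a gate near 1 pass almost unchanged, while channels with a gate near 0 are suppressed, which is the adaptive recalibration described above.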

In the context of deep learning for DOA, modeling the relationships between channels is considered crucial. To capture sequence dependencies in the high-level features extracted by the CNN, a Transformer structure is introduced after the convolutional layers, which consists of a positional encoding layer and two Transformer encoder layers. First, the output of the convolutional layers is reshaped, and learnable positional encoding vectors are added to explicitly incorporate the relative ordering of temporal features and facilitate the modeling of sequential dependencies across time steps. The feature sequence is then fed into the two Transformer encoder layers, which use 8 attention heads, an embedding dimension of 256, and a hidden layer size of 512 to capture long-range dependencies, integrate global contextual features across the entire sequence, and learn continuous feature representations for precise value estimation.
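The core operation inside each encoder layer, scaled dot-product self-attention applied after adding positional vectors, can be illustrated on a toy sequence. This is a deliberately simplified sketch: it uses a single head with identity query, key, and value projections, and the token and position values are hypothetical. In the actual network, these projections and the positional vectors would be learned.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens, positions):
    """Add positional vectors, then apply single-head scaled dot-product attention.

    Query, key, and value projections are taken as identity (a simplification).
    """
    seq = [[t + p for t, p in zip(tok, pos)] for tok, pos in zip(tokens, positions)]
    dim = len(seq[0])
    outputs, weights = [], []
    for query in seq:
        # Similarity of this time step to every time step, scaled by sqrt(dim).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in seq]
        row = softmax(scores)
        weights.append(row)
        # Output is the attention-weighted average of all time steps.
        outputs.append([sum(w * value[j] for w, value in zip(row, seq)) for j in range(dim)])
    return outputs, weights


# Hypothetical toy sequence: 3 time steps with 2 features each.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
positions = [[0.1, 0.0], [0.0, 0.1], [0.05, 0.05]]
outputs, weights = self_attention(tokens, positions)

# With the stated configuration, each of the 8 heads spans 256 / 8 = 32 dimensions.
head_dim = 256 // 8
print(head_dim)  # 32
```

Because every output row mixes information from all time steps in one operation, distant samples can influence each other directly, which is what allows the encoder to capture long-range dependencies.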

The output of the Transformer encoder, which captures global temporal dependencies across the input sequence, is aggregated through a mean pooling operation to obtain a fixed-dimensional representation. This pooled feature is then passed through a linear layer, followed by a Sigmoid function defined as

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

to produce the final output. The inclusion of the Sigmoid function serves a critical role by constraining the model’s prediction between 0 and 1, which corresponds to the normalized angular range from 0 to 360°. This bounded output ensures consistency with the preprocessed training targets and prevents the model from generating physically invalid angle predictions (e.g., negative degrees or values exceeding 360°). Furthermore, by maintaining the output within a normalized and differentiable range, the Sigmoid function facilitates stable gradient propagation during backpropagation, thereby improving the convergence and generalization performance of the regression model.

This method not only normalizes the output range but also enhances the stability of model training and promotes better convergence.
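The output stage described above (mean pooling, a linear layer, a Sigmoid, and rescaling to degrees) can be sketched as follows. The encoder output, weights, and bias are hypothetical values chosen for illustration, and the function name `predict_angle` is not from the paper.

```python
import math


def sigmoid(x):
    """Logistic function, written to avoid overflow for large negative inputs."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def predict_angle(encoder_out, weights, bias):
    """Mean-pool over time, apply a linear layer and a sigmoid, map [0, 1] to degrees."""
    dim = len(encoder_out[0])
    pooled = [sum(step[j] for step in encoder_out) / len(encoder_out) for j in range(dim)]
    logit = sum(w * p for w, p in zip(weights, pooled)) + bias
    normalized = sigmoid(logit)  # bounded in (0, 1), matching the normalized targets
    return 360.0 * normalized


# Hypothetical encoder output: 3 time steps with 2 features each.
encoder_out = [[0.2, -0.4], [0.6, 0.0], [0.1, 0.4]]

mid = predict_angle(encoder_out, weights=[0.0, 0.0], bias=0.0)
low = predict_angle(encoder_out, weights=[1.0, 1.0], bias=-5.0)
high = predict_angle(encoder_out, weights=[1.0, 1.0], bias=5.0)
print(mid)  # 180.0, since sigmoid(0) = 0.5
```

Whatever the linear layer produces, the prediction stays inside the 0 to 360° range, so no clipping step is needed after the network.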

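In the regression model, the Huber loss function is used as the loss function of the model, with the following expression:

$$L_{\delta}(\Delta\theta) = \begin{cases} \dfrac{1}{2}\,\Delta\theta^{2}, & |\Delta\theta| \le \delta, \\[4pt] \delta\left(|\Delta\theta| - \dfrac{1}{2}\,\delta\right), & |\Delta\theta| > \delta, \end{cases}$$

where $\Delta\theta$ is the circular angle difference between the true angle and the estimated angle of each signal in each sample, and $\delta$ denotes a positive factor.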

The Huber loss function combines the advantages of the mean squared error (MSE) and the mean absolute error (MAE). For small errors, the Huber loss behaves like the MSE, while for large errors, such as those caused by outliers, it behaves like the MAE. This dynamic adjustment provides an optimization objective that balances accuracy and robustness in DOA regression tasks, making it particularly suitable for scenarios with noise or outlier angles. More detailed network code and simulation code can be accessed via the link provided in the Supplementary Materials at the end of the paper.
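A Huber loss evaluated on a circular angle difference can be sketched as below. The wrap-around form `min(d, period - d)`, the use of degrees, and the threshold `delta = 1.0` are assumptions made for illustration. The same functions work on normalized angles by passing `period=1.0`.

```python
def circular_diff(theta_true, theta_pred, period=360.0):
    """Smallest absolute difference between two angles on a circle."""
    d = abs(theta_true - theta_pred) % period
    return min(d, period - d)


def huber(error, delta=1.0):
    """Quadratic for |error| <= delta (MSE-like), linear beyond it (MAE-like)."""
    a = abs(error)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)


def circular_huber_loss(true_angles, pred_angles, delta=1.0, period=360.0):
    """Mean Huber loss over a batch, computed on circular angle differences."""
    losses = [
        huber(circular_diff(t, p, period), delta)
        for t, p in zip(true_angles, pred_angles)
    ]
    return sum(losses) / len(losses)


# 359 deg vs 1 deg is a 2 deg error on the circle, not 358 deg.
print(circular_diff(359.0, 1.0))                            # 2.0
print(huber(0.5))                                           # 0.125 (quadratic branch)
print(huber(3.0))                                           # 2.5   (linear branch)
print(circular_huber_loss([359.0, 10.0], [1.0, 10.5]))      # 0.8125
```

Without the wrap-around step, a prediction of 1° for a true angle of 359° would be penalized as a 358° error, which would push the model away from a nearly correct estimate.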

6. Future Work

Although the proposed TSNetIQ model demonstrates superior performance in single-source DOA estimation under anechoic conditions, several important aspects remain to be addressed in future research. First, real-world acoustic environments often involve multiple simultaneous sound sources, leading to signal superposition and mutual interference. Therefore, extending the current framework to support multi-source localization will be a valuable and necessary enhancement. To achieve this, the model could be adapted to output multiple angle predictions, potentially through sequence modeling or attention-based multi-output regression mechanisms. Second, while our experiments are conducted in idealized anechoic chambers, actual environments frequently contain reverberation caused by reflections from surrounding surfaces. This reverberation can significantly distort the spatial features embedded in the raw signals. Future work will incorporate reverberant room impulse response (RIR) modeling into the simulation process using tools such as the image-source method in the pyroomacoustics Python package, thereby enhancing the realism and robustness of the training data.

The current results were achieved under relatively large-scale datasets. However, in practical applications, data efficiency is often a critical constraint. It is of interest to explore how the estimation accuracy and angular resolution of the model can be further improved without expanding the scale of the dataset. This could be approached by incorporating self-supervised learning, data augmentation, or knowledge distillation techniques, aiming to maximize information extraction from limited training data.

Increasing the angular resolution beyond the current 0.1° while maintaining computational feasibility remains an open challenge. Future efforts may consider multi-resolution regression strategies or hierarchical DOA prediction frameworks to achieve finer-grained direction estimation under the same data constraints. In addition, future work will consider the deployment of the proposed model on edge devices. In real-world applications, inference latency and power consumption are critical factors affecting feasibility. Therefore, we plan to adopt techniques such as model pruning, quantization, and a lightweight architecture design to reduce model complexity and improve runtime efficiency, aiming to enable real-time DOA estimation on embedded systems or mobile platforms.

The current study focuses solely on azimuthal DOA estimation within a single plane. To enhance the system’s spatial awareness, future efforts will extend the TSNetIQ framework to support 2D DOA estimation, i.e., simultaneously predicting both the azimuth and elevation angles of the sound source. This extension is particularly valuable for three-dimensional sound source localization in scenarios such as UAV tracking and aerial situational awareness.