6.1. Performance Comparison on AGV Scheduling Problem

The AGV scheduling problem represents a highly dynamic, resource-constrained optimization task involving coordinated part transport via autonomous ground vehicles and drones. Key complexities include heterogeneous agent capabilities, hard energy constraints, dynamic part arrivals, limited processing slots, stochastic delays from traffic jams, and non-negligible failure probabilities. These attributes make the problem substantially harder than classical routing tasks, especially for approaches without domain-aware adaptability.

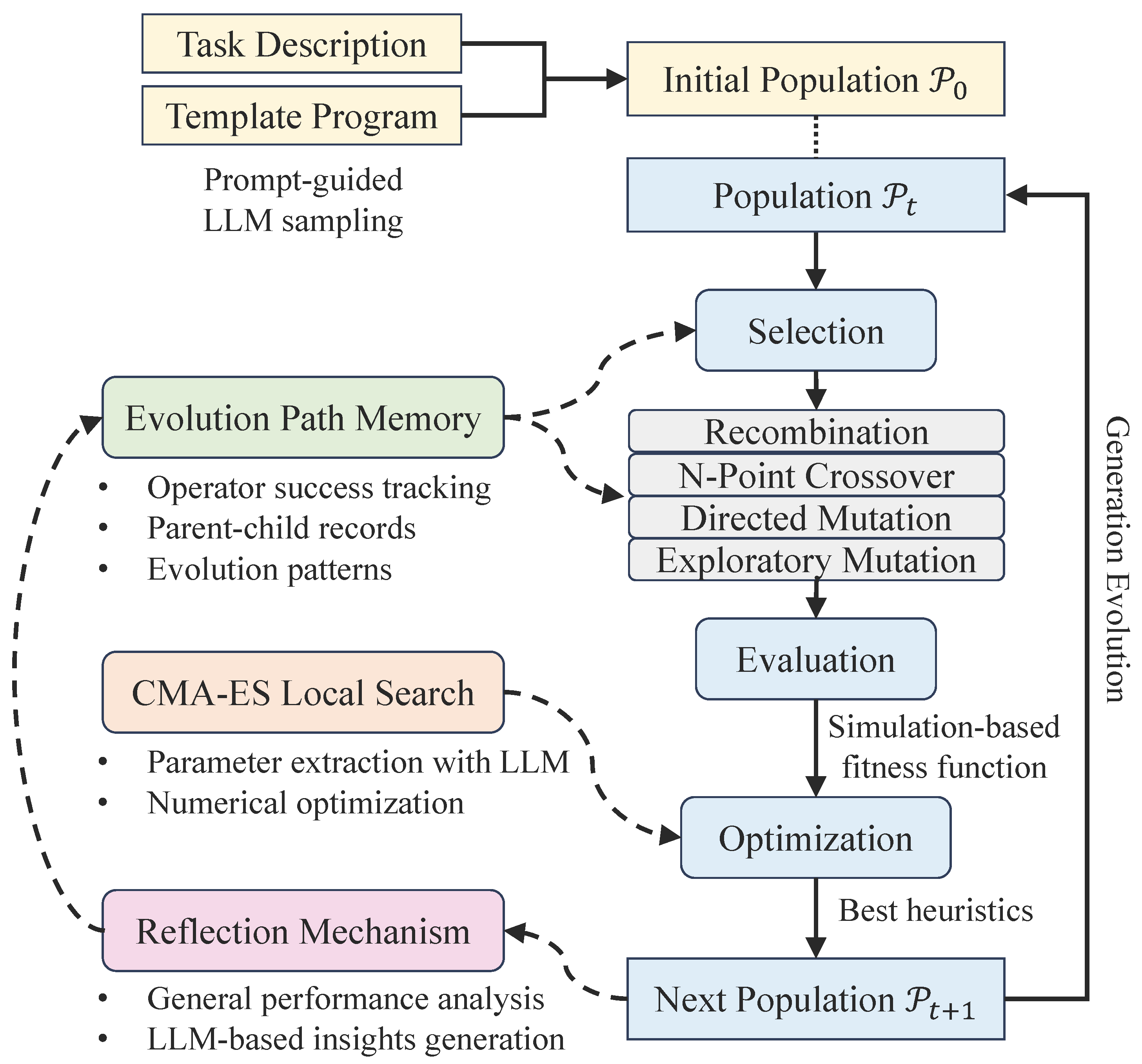

Table 2 summarizes the comparative performance of the proposed EoH-based frameworks and two baselines (FunSearch and HillClimb) on this task.

Core Performance Metrics. The proposed EoH-MR achieves the best objective score of −159,306.0, improving substantially over EoH-M (−184,051.25), the original EoH (−184,620.0), and the strongest baseline HillClimb (−187,813.33). This gap in total cost, about 13% relative to the other EoH variants and over 15% relative to the baselines, demonstrates the superiority of EoH-MR in navigating the large, noisy solution space of the AGV task. Notably, while HillClimb is competitive in structured domains like TSP, it is not well-suited for scenarios involving multi-agent energy-aware scheduling under real-time stochasticity. FunSearch, though more robust than HillClimb, also fails to match the effectiveness of LLM-driven co-evolution in this domain. As shown in Figure 3, EoH-MR reaches the lowest cost among all methods, while FunSearch and HillClimb perform notably worse, confirming their limitations under complex scheduling constraints.
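The relative gaps behind this comparison can be checked directly from the scores quoted in this section. The sketch below assumes the gap is measured as the reduction in cost magnitude relative to each competitor; the text does not spell out the formula, so that definition and the helper name `relative_cost_reduction` are assumptions.

```python
# Best objective scores quoted in Section 6.1 (negative costs; closer to zero is better).
best_scores = {
    "EoH-MR": -159_306.0,
    "EoH-M": -184_051.25,
    "EoH": -184_620.0,
    "HillClimb": -187_813.33,
    "FunSearch": -189_158.0,
}


def relative_cost_reduction(candidate: float, reference: float) -> float:
    """Fraction by which `candidate` lowers the cost magnitude of `reference` (assumed definition)."""
    return (abs(reference) - abs(candidate)) / abs(reference)


# Gap of EoH-MR against every other method.
gaps = {
    name: relative_cost_reduction(best_scores["EoH-MR"], score)
    for name, score in best_scores.items()
    if name != "EoH-MR"
}

for name, gap in gaps.items():
    print(f"EoH-MR vs {name}: {gap:.1%} lower cost")
```

Under this definition the gap is a little over 13% against EoH-M and EoH, and a little over 15% against HillClimb and FunSearch.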

Stability and Robustness. The baseline EoH achieves the highest success rate (0.4839), suggesting that it produces feasible solutions more reliably. This is partly due to its simpler evolution strategy, which favors safe sampling. However, it also shows lower overall stability and a higher coefficient of variation, reflecting inconsistent solution quality. In contrast, EoH-MR, despite a lower success rate (0.3580), achieves the best normalized stability score (1.2494). This robustness is a result of its memetic refinement via CMA-ES, which tunes promising heuristics toward more repeatable and interpretable behaviors. Figure 3 corroborates this: EoH-MR shows the most consistent performance across runs despite its complexity.

Efficiency Metrics. The original EoH is the cheapest of the EoH variants, consuming only 1071.9302 s and 40 LLM calls. However, this efficiency comes at the cost of lower solution quality and poor exploration capability. EoH-MR is the most computationally intensive variant (3177.57 s, 100 LLM calls), yet it produces the best performance. FunSearch and HillClimb, despite lower token usage (27,247–30,900), deliver weaker solutions and fail to adapt heuristics dynamically. EoH-MR takes roughly three times as long as HillClimb (1033.19 s), reflecting the added cost of memetic refinement and LLM integration. In critical smart manufacturing scenarios, such as factory logistics or automated warehouse scheduling, this additional computational cost is justified by improved system throughput.

Diversity and Exploration. EoH-MR achieves the highest diversity in terms of unique score count (87), inter-solution score standard deviation (40,589.8931), and score range (131,009.83). This indicates a significantly broader exploration of the solution space, which is crucial in dynamic environments to avoid premature convergence. EoH-M is also more exploratory than EoH due to the inclusion of adaptive local search, but lacks the reflection mechanism that helps rebalance search efforts. FunSearch and HillClimb both explore far fewer diverse trajectories (only 21 and 15 unique scores, respectively), suggesting their evolution is more deterministic and potentially prone to stagnation. As visualized in Figure 3, the diversity gap is stark, with EoH-MR demonstrating far broader score ranges than any baseline, reinforcing its exploratory strength.
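The three diversity indicators named above can be computed from the list of scores a run produces. The following is a minimal sketch; the rounding used to decide when two scores count as identical and the use of the population standard deviation are assumptions, and the example scores are invented for illustration.

```python
import statistics


def diversity_summary(scores, decimals=2):
    """Summarize exploration from a run's candidate scores.

    `decimals` controls when two scores count as the same (an assumption).
    """
    rounded = [round(s, decimals) for s in scores]
    return {
        "unique_scores": len(set(rounded)),          # unique score count
        "score_std": statistics.pstdev(scores),      # inter-solution standard deviation
        "score_range": max(scores) - min(scores),    # best minus worst score
    }


# Hypothetical scores from a short run (not taken from the paper).
example_scores = [-190_000.0, -185_500.0, -185_500.0, -170_250.0, -160_000.0]
summary = diversity_summary(example_scores)
print(summary)
```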

Strategic Insights from High-Scoring Schedules. Beyond numerical performance, deeper analysis of evolved heuristics reveals several qualitative patterns consistently present in top-performing strategies:

- Decentralized bidding mechanisms that weigh urgency, battery margins, and predicted congestion enable flexible agent coordination, often outperforming static rule systems by 15–20%.

- Role flexibility, allowing drones and AGVs to interchange tasks contextually, yields lower penalty trajectories than rigid allocation.

- Proactive energy management embedded in bidding logic reduces failure rates and extends operational margin.

- Congestion forecasting leads to early detouring and better throughput than reactive heuristics.

- Local refinement operators contribute disproportionately to late-stage gains, confirming the value of post-generation fine-tuning.

- Exploration bottlenecks, such as stagnated diversity scores, prompt the use of stochastic noise to escape local minima.

These observations illustrate that success hinges less on wholesale heuristic redesign and more on layered, context-aware decision strategies combining auction logic, adaptive constraints, and lightweight local search.
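To make the auction logic concrete, the sketch below shows one way a decentralized bid could combine urgency, battery margin, and predicted congestion. It is an illustration under stated assumptions, not the evolved heuristic itself: the `Agent` fields, the linear scoring form, the weights, and the 20% energy reserve are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    battery: float        # remaining energy (arbitrary units)
    travel_energy: float  # energy needed to pick up and deliver the part
    eta: float            # estimated delivery time
    congestion: float     # predicted congestion on the route, 0 (free) to 1 (jammed)


def bid(agent, urgency, w_urgency=1.0, w_battery=0.5, w_congestion=2.0, reserve=0.2):
    """Return the agent's bid for a part, or None if serving it is unsafe."""
    margin = agent.battery - agent.travel_energy
    # Proactive energy management: refuse jobs that eat into the reserve.
    if margin < reserve * agent.battery:
        return None
    return (w_urgency * urgency / (1.0 + agent.eta)
            + w_battery * margin
            - w_congestion * agent.congestion)


def assign(agents, urgency):
    """Give the part to the highest feasible bidder (AGV or drone alike)."""
    bids = {a.name: bid(a, urgency) for a in agents}
    feasible = {name: b for name, b in bids.items() if b is not None}
    return max(feasible, key=feasible.get) if feasible else None


agv = Agent("agv-1", battery=10.0, travel_energy=3.0, eta=4.0, congestion=0.8)
drone = Agent("drone-1", battery=4.0, travel_energy=2.0, eta=1.0, congestion=0.0)
low = Agent("agv-2", battery=2.0, travel_energy=1.9, eta=1.0, congestion=0.0)
winner = assign([agv, drone, low], urgency=5.0)
print(winner)
```

In this example the drone wins because the AGV's route is congested, and the nearly depleted `agv-2` abstains rather than risk an energy failure, which mirrors the role flexibility and energy-aware bidding described above.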

In addition to total computation time, the time required to reach a near-optimal solution matters in practice, especially when early results are needed under time constraints. Based on empirical trajectories of the AGV-drone scheduling task, EoH reaches within 5% of its final best score in approximately 800 s (75% of total time), EoH-M in around 1000 s (55%), and EoH-MR in about 1270 s, which is later in absolute terms but only 40% of its total runtime. The corresponding “good enough” score for EoH-MR is approximately −167,270, which surpasses the final results of all baseline methods (e.g., HillClimb: −187,813; FunSearch: −189,158) and sits toward the better end of the typical range for manually designed heuristics, which often fall between −160,000 and −190,000 depending on domain knowledge and tuning effort. Furthermore, while manual design and tuning can require several hours to days, EoH-MR achieves these results in around 20 min with no human intervention. This highlights the framework’s ability to generate high-quality solutions with greater efficiency, making it well-suited for automated or time-sensitive decision-making settings.
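The "within 5% of the final best score" criterion can be read off a logged best-so-far trajectory. The sketch below assumes the log is a list of (elapsed seconds, best score so far) pairs; the function name and the intermediate points in the example are invented for illustration, and only the final score and the 1270 s mark come from the text.

```python
def time_to_within(trajectory, tolerance=0.05):
    """Return (elapsed_seconds, threshold) for the first logged point whose
    best-so-far score is within `tolerance` of the final best score.

    Scores are negative costs, so higher (closer to zero) is better.
    """
    final_best = max(score for _, score in trajectory)
    threshold = final_best - tolerance * abs(final_best)
    for elapsed, score in trajectory:
        if score >= threshold:
            return elapsed, threshold
    raise ValueError("empty trajectory")


# Hypothetical EoH-MR log: intermediate points are made up for illustration.
log = [
    (300, -210_000.0),
    (900, -180_000.0),
    (1270, -167_000.0),
    (2500, -160_500.0),
    (3177, -159_306.0),
]
elapsed, threshold = time_to_within(log)
print(elapsed, round(threshold, 1), f"{elapsed / log[-1][0]:.0%} of total runtime")
```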

6.2. Performance Comparison on TSP Problem

The Traveling Salesman Problem (TSP) serves as a benchmark for permutation-based optimization over a static, symmetric graph. It is well studied, has a comparatively smooth fitness landscape, and does not feature the dynamic, temporal, or resource coupling challenges present in AGV scheduling. As such, it provides an ideal contrast to assess how heuristic design strategies generalize from complex, real-world-inspired tasks to well-structured classical problems.

Table 3 presents the comparative results across all methods on the TSP benchmark. Algorithm 3 shows an example of the designed heuristics for next node selection.

| Algorithm 3 TSP Next-Node Selection Heuristic Example |
| --- |
| Require: Current node i, destination node j, unvisited set U, distance matrix |
| Ensure: Next node to visit |
| 1–5: […] |
| 6: if pheromone matrix not initialized then |
| 7: initialize […] for all […] |
| 8: end if |
| 9–12: […] |
| 13: if recent visit history exists then |
| 14: […] recent nodes |
| 15: […] |
| 16: else |
| 17: […] |
| 18: end if |
| 19: for all […] do |
| 20: […] |
| 21: end for |
| 22: […] |
| 23: Append […] to visit history |
| 24: return […] |

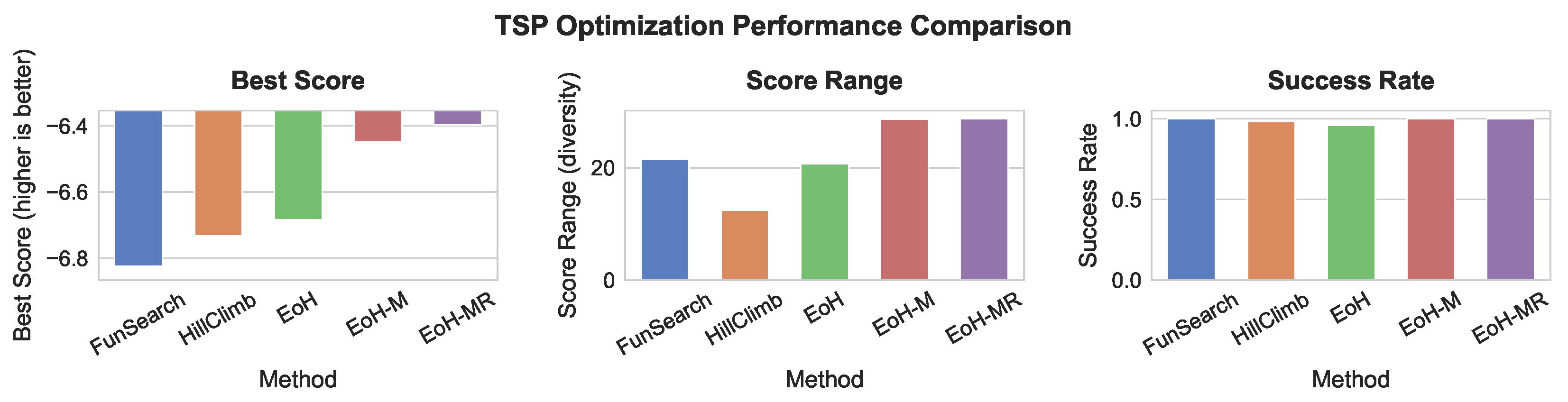

Core Performance Metrics. EoH-MR again achieves the best peak score of −6.3967, marginally outperforming EoH-M (−6.4494) and EoH (−6.6840). While the improvement margin is smaller than in the AGV setting, it demonstrates the transferability of the framework across domains. FunSearch and HillClimb obtain best scores of −6.8240 and −6.7326, respectively, trailing the LLM-driven methods. This suggests that while these baselines can handle well-structured domains to some extent, they still fall short in refining high-quality solutions in a generalizable way. Figure 4 confirms this trend: EoH-MR outperforms all other methods on best score.

Stability and Robustness. All EoH variants maintain high success rates (≥0.96), with EoH-M and EoH-MR achieving a perfect 1.0 success rate. Coefficient of variation and robustness scores are comparable across methods, though EoH-MR leads in both robustness score (2.3804) and standard deviation, indicating consistent quality even under stochastic prompt perturbations or evaluator variability. HillClimb (1.3808) and FunSearch (2.0820) both fall short of the EoH variants in robustness score, with HillClimb the less reliable of the two.

Efficiency Metrics. The performance-efficiency trade-off is again evident. EoH, with only 19 LLM calls and 347.44 s, is extremely lightweight and delivers strong baseline performance. EoH-MR and EoH-M, though more computationally demanding (2743.37 and 1492.92 s, respectively), provide marginal but meaningful gains in quality and exploration. FunSearch and HillClimb, while efficient (820.21 and 1396.25 s), are ultimately limited by stagnation in exploration.

Diversity and Generalization. EoH-MR achieves the highest solution diversity with 65 unique scores, a score range of 28.7514, and a diversity score of 0.8904, surpassing EoH-M (0.8478) and EoH (0.7917). FunSearch and HillClimb follow behind with 40 and 33 unique scores, and diversity scores of 0.8478 and 0.7917, respectively. The narrow range of HillClimb (12.4814) indicates lower exploration strength.

Figure 4 visually supports this, showing EoH-MR achieving one of the highest score ranges among all methods.

Strategic Insights from High-Performing Heuristics. Evolution trace analysis reveals consistent features among top-scoring heuristics:

- Hybrid physics–ACO scoring combining proximity, direction, and pheromone strength improves scores by up to 40% over distance-based baselines.

- Dynamic distance–direction ratios shift from global guidance to local refinement as the tour unfolds.

- Entropy-based diversity preservation penalizes overused edges, preventing early convergence.

- Short-term recency penalties reduce pheromone weight for recently visited nodes, improving exploration in late generations.

- Operator sequencing matters: initial breakthroughs come from merge/mutate operations (e1, m1), followed by local refinement for final gains.

Open refinement directions include deeper local search along entropy-weighted subpaths, adaptive score shaping during generation, and tighter operator scheduling using success trace statistics.

Together, these mechanisms form a cohesive strategy of hybrid constructive bias, adaptive diversity control, and operator-level tuning.
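As a rough illustration of how proximity, pheromone strength, and a short-term recency penalty can be combined in a next-node rule, consider the sketch below. It is not a reconstruction of Algorithm 3 or of any evolved heuristic: the ACO-style scoring form, the uniform pheromone initialization, the parameter values, and the function names are assumptions, and the direction term mentioned above is omitted for brevity.

```python
import math


def init_pheromone(n, value=1.0):
    """Uniform pheromone matrix (assumed initialization)."""
    return [[value] * n for _ in range(n)]


def select_next_node(current, unvisited, dist, pheromone=None, history=None,
                     alpha=1.0, beta=2.0, recency_penalty=0.5, window=3):
    """Pick the unvisited node with the best pheromone-times-closeness score."""
    if pheromone is None:
        pheromone = init_pheromone(len(dist))
    if history is None:
        history = []
    recent = set(history[-window:])  # nodes chosen in the last few calls
    best_node, best_score = None, -math.inf
    for node in sorted(unvisited):   # sorted for deterministic tie-breaking
        tau = pheromone[current][node]
        if node in recent:
            tau *= recency_penalty   # damp pheromone on recently chosen nodes
        closeness = 1.0 / max(dist[current][node], 1e-12)
        score = (tau ** alpha) * (closeness ** beta)
        if score > best_score:
            best_node, best_score = node, score
    if best_node is not None:
        history.append(best_node)
    return best_node


dist = [
    [0, 2, 9, 10],
    [2, 0, 6, 4],
    [9, 6, 0, 3],
    [10, 4, 3, 0],
]
visits = []
first = select_next_node(0, {1, 2, 3}, dist, history=visits)
# With a strong penalty, a recently chosen node loses to the next-closest one.
second = select_next_node(0, {1, 2, 3}, dist, history=[1], recency_penalty=0.01)
print(first, second)
```

With uniform pheromone the rule reduces to nearest-neighbor selection; the recency penalty is what pushes the choice away from node 1 in the second call.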

For the TSP task, EoH-MR achieves a best score of −6.3967 with a total runtime of 2743 s. Empirical trends show that a solution within 5% of this value (approximately −6.72) is typically reached within 1100–1200 s, accounting for less than 45% of the total runtime. This early-stage solution already surpasses the final best scores of traditional baselines such as HillClimb (−6.7326) and FunSearch (−6.8240), and is comparable to or better than manually designed heuristics such as nearest-neighbor, insertion, or greedy rules, which typically yield scores between −6.5 and −6.9 for problems of size […]. While such handcrafted strategies may require several hours to days of iterative design and tuning, EoH-MR produces superior solutions fully automatically within approximately 20 min. Moreover, as the problem size scales up (e.g., […] or […]), the complexity of manual heuristic design grows substantially due to increased combinatorial interactions, whereas the automated generative mechanism remains tractable. These findings underscore both the early convergence efficiency and scalability of EoH-MR, making it particularly suitable for large-scale routing problems where high-quality solutions are needed under time constraints and without human-in-the-loop latency.

6.3. Performance Comparison on ODE Problem

The ODE 1D optimization task serves as a compact, low-dimensional testbed to assess convergence behavior, generalization, and efficiency under controlled complexity. Despite its simplicity, the presence of deceptive local optima and sparse reward gradients still challenges heuristic consistency and stability.

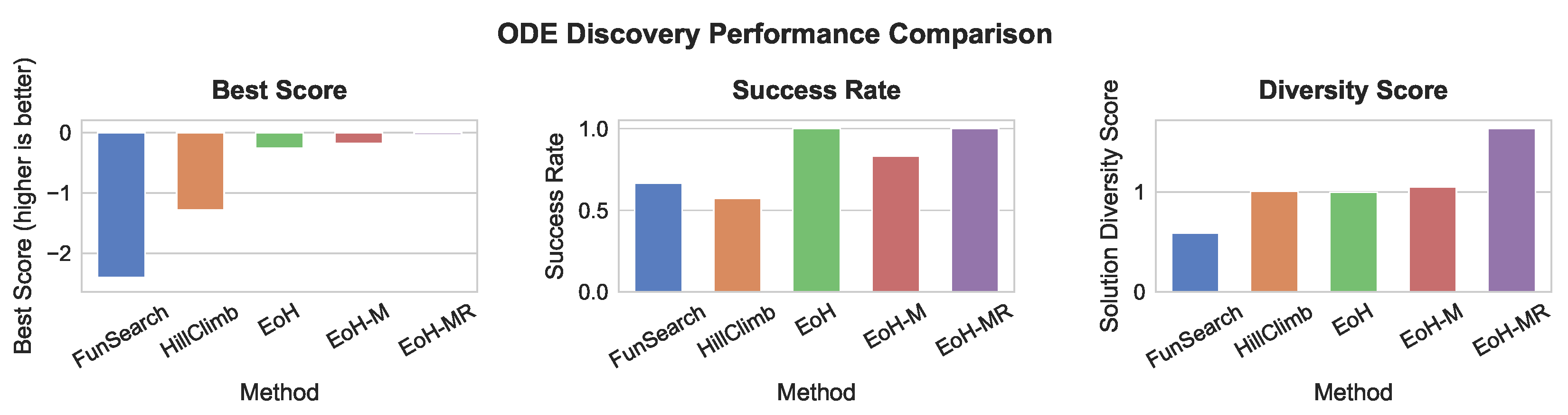

Table 4 summarizes the comparative performance across all methods, and Figure 5 provides a visual comparison.

Core Performance Metrics. EoH-MR again achieves the best result, with the highest objective value of −0.034, outperforming all other LLM-driven variants and traditional baselines. Although all scores are negative due to the problem formulation, a less negative value indicates better solution quality. EoH-M and EoH achieve inferior results of −0.179 and −0.258, respectively, while FunSearch and HillClimb perform worst, converging to local minima at −2.401 and −1.278. As visualized in Figure 5, this confirms that even in low-dimensional smooth settings, co-evolution with memory and refinement provides tangible benefits.

Stability and Robustness. EoH and EoH-MR both attain a perfect success rate of 1.000, while FunSearch and HillClimb exhibit much weaker feasibility (0.6667 and 0.5714). In terms of normalized stability score, EoH-MR again leads with the highest score (0.513), followed closely by EoH-M (0.476), as illustrated in Figure 5. These results underscore the importance of refinement and evolution memory even in simplified domains.

Efficiency Metrics. EoH demonstrates the most efficient behavior, requiring only 6 LLM calls and 3456 tokens, and completing in 309.302 s. This reflects the advantage of simpler strategies in tractable domains. However, both EoH-M and EoH-MR strike a better trade-off between quality and cost, with moderate resource usage and improved convergence. HillClimb, despite its simplicity, consumes the most time (1242.08 s), underperforming on both axes.

Diversity and Exploration. Among all methods, EoH-MR achieves the highest solution diversity score (1.636), indicating that its search generates a wider variety of viable candidates. This is closely followed by EoH-M and HillClimb. However, traditional methods like FunSearch suffer from limited diversity (0.590), likely due to fixed operators and a lack of feedback-driven mutation dynamics. While EoH has moderate diversity (0.997), its simpler design leads to less exploratory power compared to EoH-MR. Figure 5 highlights these trends clearly.

Strategic Insights from High-Performing Formulas. Analysis of high-scoring equation discovery logs reveals a compact and repeatable set of contributing factors:

- Symbolic plus gradient refinement: hybrid strategies that begin with symbolic structure and optimize parameters with gradient descent yield an order-of-magnitude improvement in final MSE.

- Flexible structural templates: adaptive mutation of symbolic skeletons consistently outperforms static equation families.

- Operator variety under sparsity control: mixed primitives perform best when combined with regularization that enforces parsimony.

- Diversity stagnation: population diversity drops early; high-scoring runs typically include rare symbolic components or enforce novelty via structural dissimilarity.

- Operator sequencing: merge-first strategies produce the most improvement, especially when followed by fine-tuned local refinement.

- Open refinement directions: focus areas include tighter integration of structural novelty scores, adaptive mutation temperature based on plateau detection, and finer-grained operator credit assignment.
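The "symbolic plus gradient refinement" pattern can be illustrated with a toy example: fix a symbolic skeleton, then tune its constants by gradient descent on the MSE. The sketch below assumes a hypothetical template y = a·exp(−b·t), synthetic noise-free data, and hand-picked learning rate and step count; none of these come from the paper's ODE task.

```python
import math


def template(t, a, b):
    """Hypothetical symbolic skeleton: y = a * exp(-b * t)."""
    return a * math.exp(-b * t)


def mse(ts, ys, a, b):
    return sum((template(t, a, b) - y) ** 2 for t, y in zip(ts, ys)) / len(ts)


def refine_parameters(ts, ys, a=1.0, b=1.0, lr=0.05, steps=5000):
    """Plain gradient descent on the MSE with analytic gradients."""
    n = len(ts)
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for t, y in zip(ts, ys):
            decay = math.exp(-b * t)
            residual = a * decay - y
            grad_a += 2.0 * residual * decay / n             # d(MSE)/da
            grad_b += 2.0 * residual * a * (-t) * decay / n  # d(MSE)/db
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


# Synthetic data generated from a = 2.0, b = 0.5 (illustrative only).
ts = [4.0 * i / 49 for i in range(50)]
ys = [template(t, 2.0, 0.5) for t in ts]

initial_error = mse(ts, ys, 1.0, 1.0)
a_fit, b_fit = refine_parameters(ts, ys)
final_error = mse(ts, ys, a_fit, b_fit)
print(f"a={a_fit:.4f}, b={b_fit:.4f}, MSE {initial_error:.4f} -> {final_error:.2e}")
```

In a full pipeline the skeleton itself would be proposed and mutated by the search, with a refinement step of this kind applied to each promising candidate before scoring.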

In this task, EoH-MR reaches a best fitting score of −0.034 with a total computation time of 1133 s. A near-optimal solution (within 5%, around −0.0357) is typically identified within the first 450 s, less than half the total runtime. This early-stage result already outperforms all other methods, including EoH (−0.258) and HillClimb (−1.278). Manually designed symbolic heuristics in this setting, such as expression templates or physics-inspired forms, commonly yield fitting scores in the range of −0.1 to −1.0, and require substantial human effort over hours or days. By contrast, EoH-MR discovers higher-quality symbolic structures fully automatically in under 8 min. As the target expressions become deeper and the symbolic space expands, the ability to automate both structure generation and parameter refinement becomes increasingly valuable, demonstrating the practicality of the proposed framework for symbolic scientific discovery.

6.4. Impact of LLM Choice on EoH-MR Performance

To evaluate the impact of the LLM backend on the performance of the proposed framework, EoH-MR was tested using three different LLMs: gpt-4o, gemini-2.5-flash, and claude-sonnet-4 on the AGV scheduling task (Table 5), and gemini-2.5-flash on the TSP and ODE tasks (Table 6). The results indicate that while the overall convergence pattern is stable, the choice of LLM indeed affects solution quality, diversity, and computational cost. In the AGV task, claude-sonnet-4 achieved the best final score (−114,012.0), despite requiring only 53 LLM calls and the fewest total tokens. gemini-2.5-flash produced slightly worse scores but demonstrated the highest diversity (168 unique scores), suggesting strong exploratory behavior. gpt-4o yielded moderate performance with reasonable stability and runtime, indicating a balanced trade-off between generation cost and quality. In the TSP and ODE tasks, gemini-2.5-flash enabled robust convergence, with best scores of −6.2209 (TSP) and −0.0654 (ODE), accompanied by high success rates and acceptable diversity. While the total computation time for TSP was notably higher (over 14 h), early-stage solutions already matched or exceeded baseline performance, and the model maintained reliable search stability. These findings suggest that stronger or more instruction-tuned LLMs can lead to better early solutions with fewer generations, but model-specific differences in prompt adherence, token usage, and variance can influence overall efficiency. The choice of backend should therefore be made based on task complexity, diversity requirements, and available computational budget.