An Interface Design Method Based on Situation Awareness and Immersive Analytics for Augmented and Mixed Reality Decision Support Systems in Construction

Abstract

1. Introduction

2. Theoretical Background

2.1. Situation Awareness (SA)

- (1) SA requirements analysis. This component determines the information required for decision-making, i.e., the SA requirements. It uses the goal-directed task analysis (GDTA) method, based on interviews, to elicit the decision-maker’s goals for successful job performance, the decisions needed to achieve those goals, and the information required for those decisions. The SA requirements are structured in three levels: level 1 (perception of the elements in the environment), level 2 (comprehension of the current situation), and level 3 (projection of the future status). This structure represents how the decision-maker mentally processes information to reach a decision, and it is the basis of SA-oriented design [26].

- (2) SA-oriented design principles. This component outlines principles for designing interfaces that increase SA. The principles aim to present information in line with how users process it for decision-making and goal attainment, which supports efficient information management and reduces cognitive load. They also help designers leverage technological features, such as display and audio, to improve perception and promote a deeper understanding of the situation, thereby supporting and enhancing SA.

- (3) SA measurement. This component presents methods for evaluating the design’s capacity to support SA and for identifying design aspects that need improvement. SA can be measured indirectly, by inferring it from observable processes, behaviors, or performance outcomes, or directly from the user while they interact with the system. Indirect methods include verbal protocol analysis, psychophysiological assessment, and performance assessment, among others. Direct methods can be subjective or objective. Subjective methods encompass the situational awareness rating technique (SART), the SA-subjective workload dominance technique (SA-SWORD), self-ratings, and observer ratings. Objective methods include online probes, post-test questionnaires, and the situation awareness global assessment technique (SAGAT). These methods can be used individually or combined, depending on the purposes, resources, and circumstances of the study.
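The goal, decision, and SA requirement hierarchy that GDTA produces can be pictured as a small nested data structure. The Python sketch below is illustrative only: the class names (`SALevel`, `SARequirement`, `Decision`, `Goal`) are assumptions made for this example, and the decision and requirement wording is hypothetical rather than elicited in the study. Only the goal identifier and name come from the practical application.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class SALevel(IntEnum):
    """The three SA levels used to structure SA requirements."""
    PERCEPTION = 1
    COMPREHENSION = 2
    PROJECTION = 3


@dataclass
class SARequirement:
    text: str
    level: SALevel


@dataclass
class Decision:
    question: str
    requirements: list[SARequirement] = field(default_factory=list)


@dataclass
class Goal:
    identifier: str
    name: str
    decisions: list[Decision] = field(default_factory=list)

    def requirements_by_level(self) -> dict[SALevel, list[str]]:
        """Group the requirement texts of all decisions by SA level."""
        grouped: dict[SALevel, list[str]] = {level: [] for level in SALevel}
        for decision in self.decisions:
            for req in decision.requirements:
                grouped[req.level].append(req.text)
        return grouped


# Hypothetical content: the decision and requirement wording is invented here.
goal = Goal(
    identifier="1.1.1",
    name="Verify constraint removal on the weekly work plan",
    decisions=[
        Decision(
            question="Can each planned task start as scheduled?",
            requirements=[
                SARequirement("Planned tasks", SALevel.PERCEPTION),
                SARequirement("Constraints of each task", SALevel.PERCEPTION),
                SARequirement("Status of task constraints", SALevel.COMPREHENSION),
                SARequirement("Impact of open constraints on the schedule", SALevel.PROJECTION),
            ],
        )
    ],
)

grouped = goal.requirements_by_level()
for level, texts in grouped.items():
    print(level.name, texts)
```

Grouping by level mirrors how the SA-oriented design principles are later applied, since several of them address one SA level specifically.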

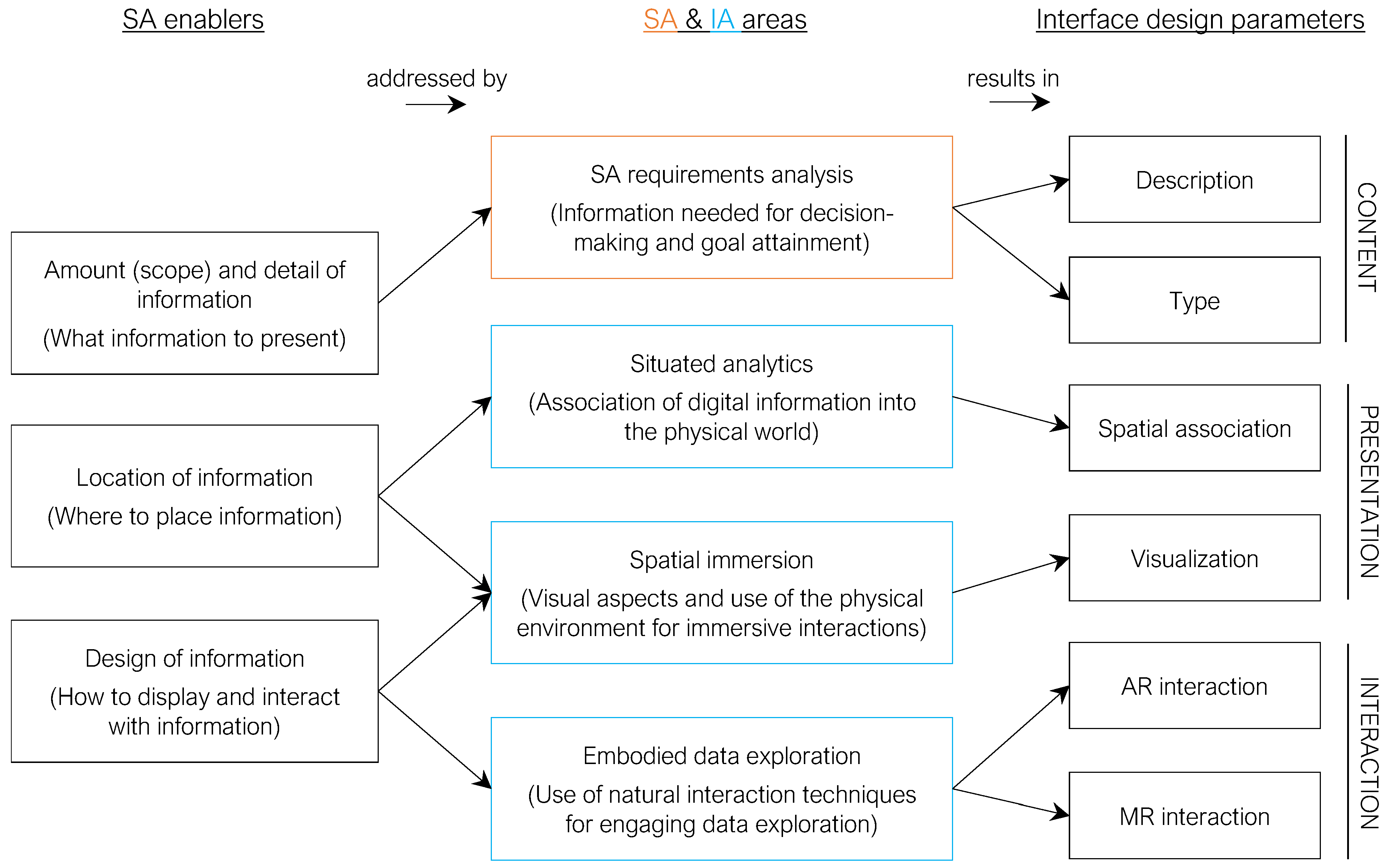

2.2. Immersive Analytics (IA)

- (1) Situated analytics. Relates to the use of data representations associated or organized in relation to objects, persons, or locations in the physical world to support in situ sense-making and decision-making.

- (2) Embodied data exploration. Addresses the use of natural interaction techniques (e.g., touch, gesture, voice, gaze, and tangible interaction) in the design of user interfaces that allow more intuitive and engaging data exploration in immersive environments.

- (3) Collaboration. Addresses how immersive technologies and visual analytics techniques can support shared interaction to enable different types of collaboration (i.e., co-located or remote, synchronous or asynchronous).

- (4) Spatial immersion. Addresses the visual aspects of 2D and 3D data representations within immersive environments, as well as the use of the real-world environment as a workspace for immersive visualizations and interactions.

- (5) Multi-sensory presentation. Relates to the use of other sensory channels (i.e., audio, haptic, smell, and taste) to present data as complements to vision for supporting technological immersion or as substitutes when vision is unavailable or not applicable.

- (6) Engagement (of stakeholders). Refers to the use of immersive technologies to generate immersive interactive narrative visualizations that deeply involve the users to support data understanding and more informed decision-making.

3. Research Process and Methods

4. SA Enablers and Interface Design Parameters

- (1) Amount (scope) and detail of information. This refers to what information to present to support SA.

- (2) Location of information. This refers to where to place the information to increase SA.

- (3) Design of information. This concerns how to display and interact with information in AR/MR.

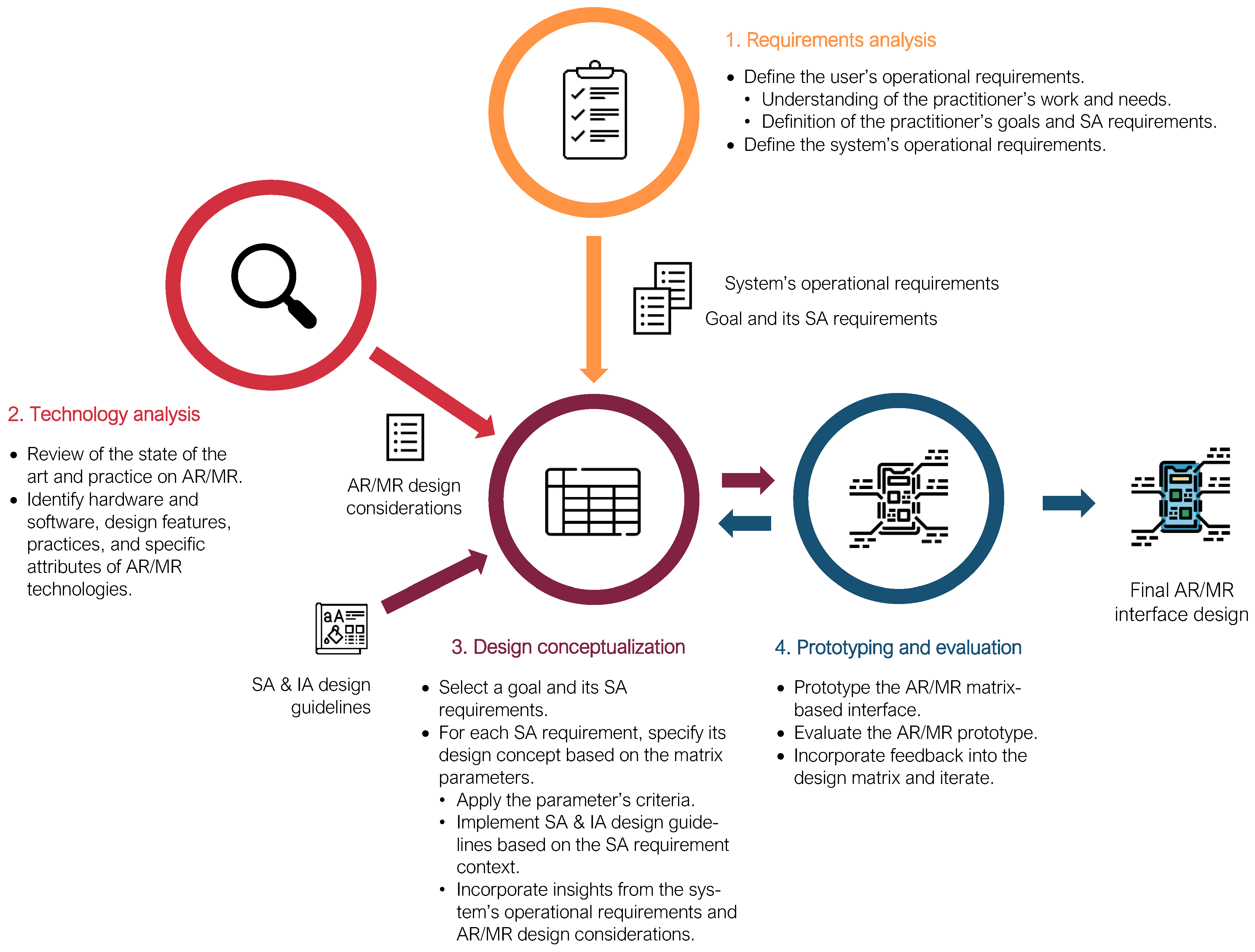

5. Proposed Interface Design Method

5.1. Requirements Analysis

5.2. Technology Analysis

5.3. Design Conceptualization

- (1) Description. The GDTA method facilitates the description of the SA requirement by providing context or insights into the practitioners’ work and information needs. It also identifies the SA level of the information requirement, which is crucial for effectively applying the SA and IA design guidelines.

- (2) Type. As indicated in Table 1, SA requirements can be explicit or implicit. For explicit SA requirements (e.g., text, numeric, date, and files such as attachments or multimedia), the type can be identified from the information source. Implicit SA requirements are mental constructs (e.g., SA level 2 comprehension or level 3 projection, or the practitioner’s experience), and this should be stated in the design concept.

- (3) Spatial association. The association of an SA requirement with specific elements within the immersive environment depends on its nature or context. For instance, a “practitioner’s experience” is implicit information that cannot be directly linked to an element. In contrast, a “planned task” is explicit information that can be associated with all the building elements involved in that task. In both cases, the design concept should state the association, or its absence, explicitly. Additionally, the IA design guidelines from the situated analytics area provide insights for addressing the spatial association parameter.

- (4) Visualization. This specifies the position, visibility scope, arrangement, notifications, and other display features for the addressed SA requirement. The SA and IA design guidelines steer this specification. The designer should filter and apply the appropriate guidelines based on the SA level, type, and context of the SA requirement. A practical example is provided in Section 6.3 to assist the designer in this process.

- (5) AR and MR interaction. These two parameters specify, for AR and MR respectively, the devices (e.g., handset, headset), interaction modes (e.g., tactile, eye/head gaze, and hand gesture), interaction tasks (e.g., selecting, tracking, and navigating), and the overall interaction flow for all SA requirements addressed. AR/MR design considerations from the technology analysis phase assist in this specification. In addition, the SA and IA design guidelines, particularly those from the embodied data exploration area, contribute to conceptualizing the interaction flow.
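The parameters above can be collected into one record per SA requirement, which makes it easy to check that a design concept leaves nothing unstated. The following Python sketch is an assumption-laden illustration, not part of the proposed method: the class names (`InteractionSpec`, `DesignConcept`), the field names, and the `problems` check are hypothetical. The example values paraphrase the practical application in Section 6.3.

```python
from dataclasses import dataclass


@dataclass
class InteractionSpec:
    """Devices, modes, tasks, and flow for one environment (AR or MR)."""
    devices: list[str]
    modes: list[str]
    tasks: list[str]
    flow: str


@dataclass
class DesignConcept:
    # Content
    description: str
    sa_level: int  # 1 = perception, 2 = comprehension, 3 = projection
    info_type: str  # "explicit" or "implicit"
    # Presentation
    spatial_association: str  # stated even when no direct link exists
    visualization: str
    # Interaction
    ar_interaction: InteractionSpec
    mr_interaction: InteractionSpec

    def problems(self) -> list[str]:
        """Return human-readable gaps; an empty list means nothing is missing."""
        found = []
        if self.sa_level not in (1, 2, 3):
            found.append("sa_level must be 1, 2, or 3")
        if self.info_type not in ("explicit", "implicit"):
            found.append("info_type must be 'explicit' or 'implicit'")
        for name in ("description", "spatial_association", "visualization"):
            if not getattr(self, name).strip():
                found.append(f"{name} must not be empty")
        for label, spec in (("AR", self.ar_interaction), ("MR", self.mr_interaction)):
            if not (spec.devices and spec.modes and spec.tasks and spec.flow.strip()):
                found.append(f"{label} interaction is incomplete")
        return found


concept = DesignConcept(
    description="Comprehension of the constraint situation for planned tasks",
    sa_level=2,
    info_type="implicit",
    spatial_association="No direct link; formed from task and constraint "
                        "information attached to virtual building elements",
    visualization="Color elements of tasks with constraints not removed, "
                  "hide the rest, notify when none remain",
    ar_interaction=InteractionSpec(
        devices=["smartphone"],
        modes=["tactile"],
        tasks=["tap to select", "physical movement to navigate"],
        flow="Select goal 1.1.1, then inspect colored elements or read the notification",
    ),
    mr_interaction=InteractionSpec(
        devices=["Trimble XR10 headset"],
        modes=["eye/head gaze", "hand gesture"],
        tasks=["gaze to track", "air-tap to select", "physical movement to navigate"],
        flow="Same flow as AR, using the MR interaction modes",
    ),
)

print(concept.problems())
```

Keeping `spatial_association` as required text, rather than an optional field, reflects the requirement that the concept state the association explicitly even for implicit information.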

5.4. Prototyping and Evaluation

6. Practical Application and Results

6.1. Requirements Analysis

6.2. Technology Analysis

6.3. Design Conceptualization

- (1)

- Description. This is a level 2 SA requirement, as it involves comprehending the current situation of constraints for planned tasks based on perceived information. This cognitive outcome represents the field manager’s mental picture of whether planned tasks have their constraints removed or not, being most important in the latter as they need to be addressed.

- (2)

- Type. This is a mental construct about the constraints’ situation.

- (3)

- Spatial association. This mental construct cannot be directly associated with elements in the environment. However, it can be formed by associating information about tasks and their constraints with related virtual elements. For this, the digital building model should be overlaid onto its physical twin version to present and access information on demand.

- (4)

- Visualization. Following the guidelines in Table 4, the interface should “present level 2 information directly” (guideline 2) to support the comprehension of the task constraints situation. This involves visualizing if tasks have their constraints removed or not within the immersive environment. For this, the interface should use a visual encoding that informs without hindering the user’s actions (guideline 29). One approach is to use colors on virtual building elements associated with tasks with constraints not removed (guidelines 39 and 40). For tasks with constraints removed, their associated elements should be hidden to focus the field manager’s attention on the constraints not removed (guidelines 15 and 26). If all tasks have their constraints removed, i.e., no colored building elements are visible, the interface should display a notification to inform the field manager. This design concept highlights the critical cues that activate the mental schema of the situation (guideline 5) and minimizes task complexity (guideline 17), enabling the field manager to recognize, at a glance, planned tasks with constraints to be addressed.

- (5)

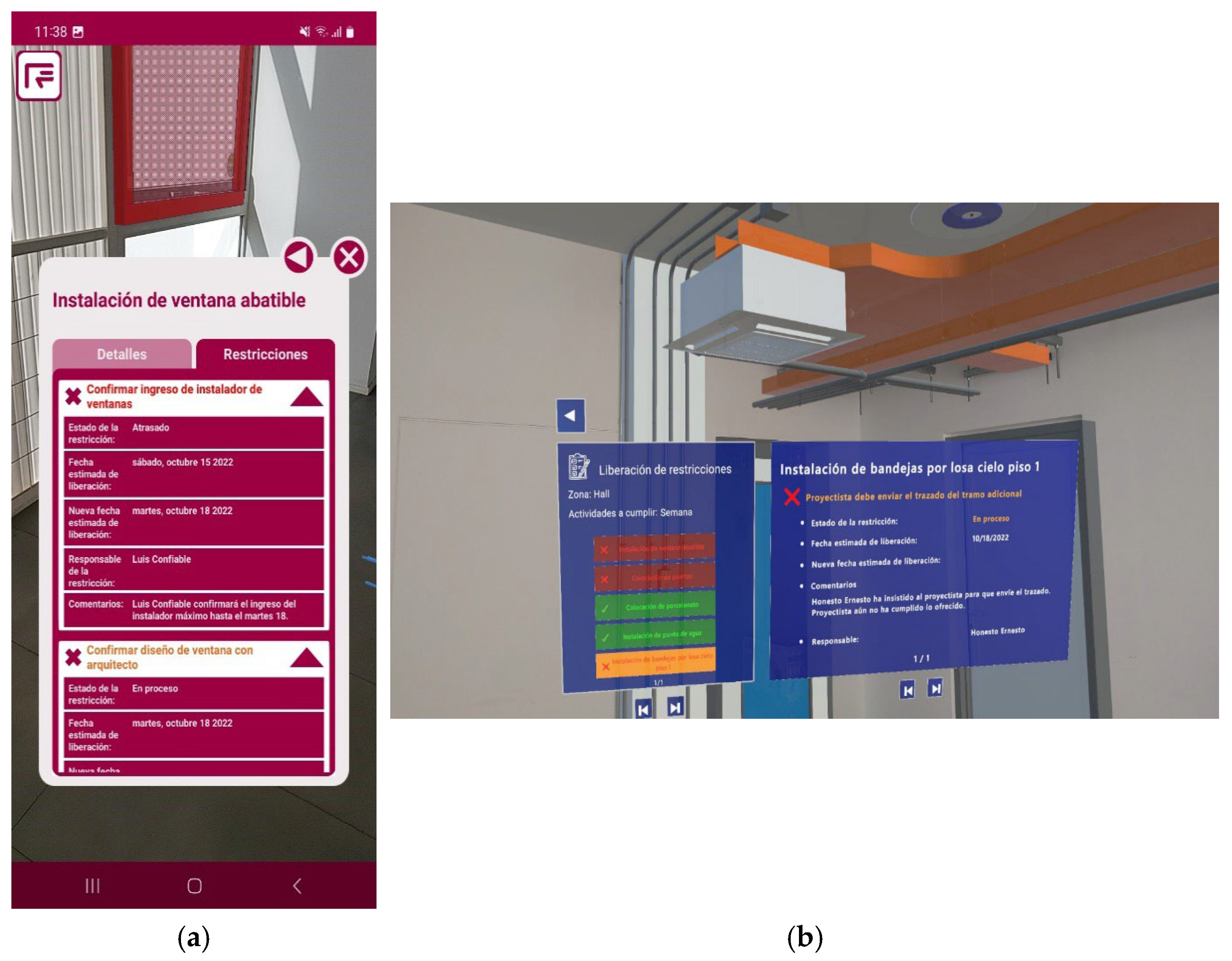

- AR interaction. Handsets, specifically smartphones, were employed for AR interaction, using tactile gestures as the interaction mode (Table 4, guideline 37). Proposed interaction tasks included tap for selecting and body-based physical movement for exploring/navigating within the immersive environment. The AR interaction flow begins with selecting the option/button for goal 1.1.1. Once selected, if all constraints were removed, a colored message appears in the center of the screen to notify the user (guidelines 31 and 36). If not, the interface displays only the colored virtual building elements with tasks with constraints not removed (guideline 31). In this state, the field manager can navigate the immersive environment by physically moving while holding and gazing at the handset to inspect the work area (guidelines 32 and 38).

- (6)

- MR interaction. The Trimble XR10 headset was employed for MR interaction, using multimodal interaction such as eye/head gaze and hand gesture as the interaction modes (Table 4, guideline 37). The interaction tasks included head gaze and eye gaze for tracking; air-tap, point and commit with hands, and eye gaze and pinch for selecting; and body-based physical movement for exploring/navigating. The MR interaction flow is similar to AR, differentiating in the application of interaction modes that MR provides.
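The visualization rule in item (4) amounts to a small decision procedure: color the elements of tasks with constraints not removed, hide the elements of tasks with constraints removed, and notify the user when nothing is left to highlight. The Python sketch below shows one way to express that logic. It is not the prototype’s code: the `PlannedTask` type, the `build_view_state` function, the element identifiers, the highlight color, and the notification text are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlannedTask:
    name: str
    element_ids: tuple[str, ...]  # virtual building elements tied to the task
    open_constraints: int  # number of constraints not yet removed


HIGHLIGHT_COLOR = "#E53935"  # assumed color; the source does not specify one


def build_view_state(tasks):
    """Decide which elements to color, which to hide, and whether to notify."""
    highlighted = {}
    hidden = set()
    for task in tasks:
        if task.open_constraints > 0:
            for element_id in task.element_ids:
                highlighted[element_id] = HIGHLIGHT_COLOR
        else:
            hidden.update(task.element_ids)
    # An element shared with a constrained task must stay visible.
    hidden.difference_update(highlighted)
    notification = None if highlighted else "All constraints removed"
    return {
        "highlighted": highlighted,
        "hidden": sorted(hidden),
        "notification": notification,
    }


tasks = [
    PlannedTask("Install drywall", ("wall-01", "wall-02"), open_constraints=2),
    PlannedTask("Paint ceiling", ("ceil-01",), open_constraints=0),
]
print(build_view_state(tasks))
```

The same view state could drive both the AR and MR front ends, since the two flows differ only in how the user selects and navigates.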

6.4. Prototyping and Evaluation

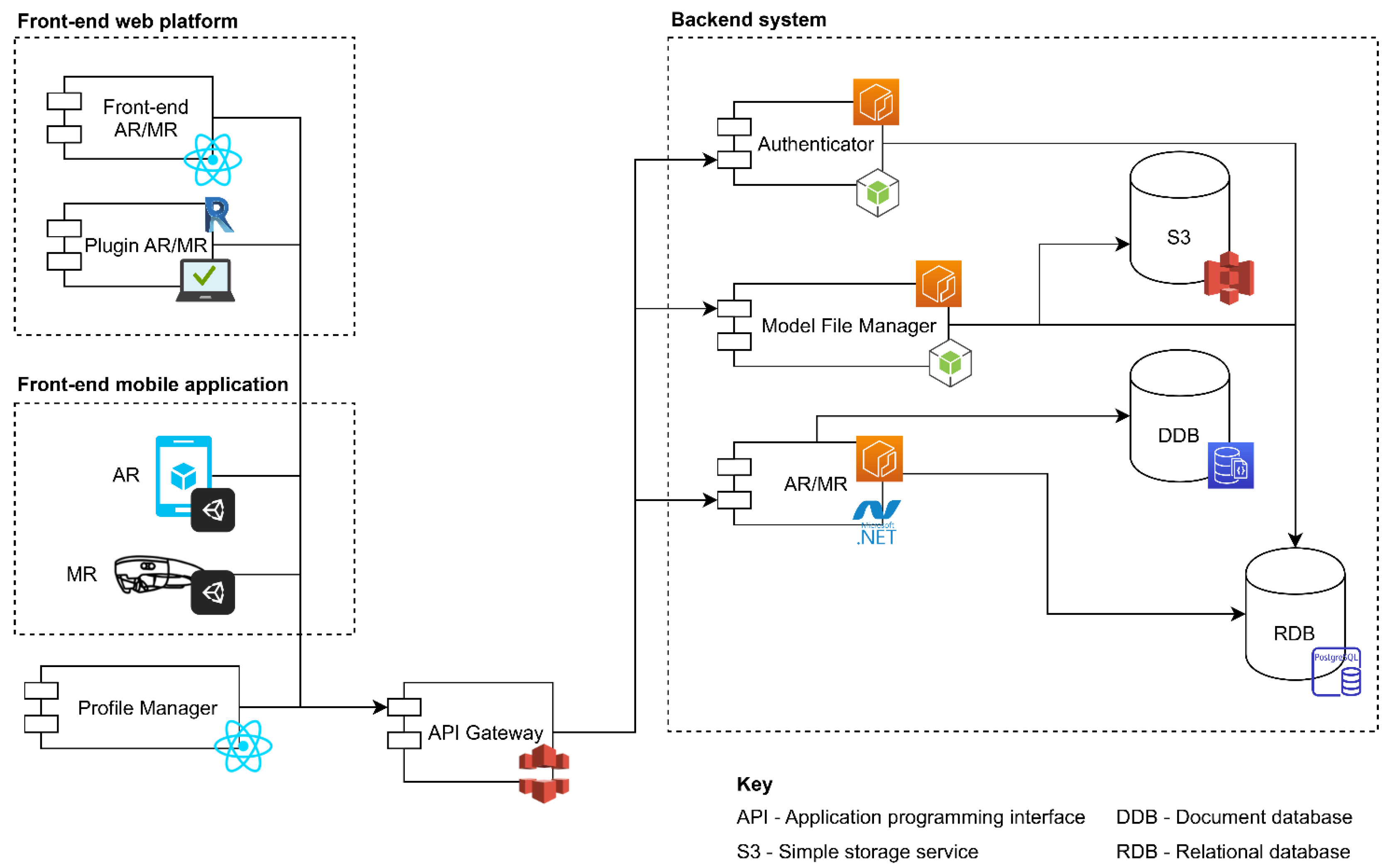

6.4.1. Rapid Prototyping

- (1) Front-end web platform. This is where users upload the information sources that provide data for the AR/MR solution. A plugin for Autodesk Revit was developed to facilitate the upload of BIM model metadata. In addition, a spreadsheet was created for manually assigning the SA information requirements to building elements of the BIM model. The spreadsheet was also uploaded to the web platform.

- (2) Back-end system. This organizes, stores, and processes data received from the front-end web platform. It also retrieves data from the storage services, including a simple storage service (S3), document database (DDB), and relational database (RDB), and formats it for consumption by the front-end mobile application. An application-programming interface (API) handles communication between the back-end and front-end and manages data traffic.

- (3) Front-end mobile application. This is the user interface of the AR and MR prototype solutions, implemented for Android smartphones and Trimble XR10/Microsoft HoloLens headsets, respectively. Visual representations of these interfaces are provided in Figure 6 and Figure S1 of the Supplementary Material. Note that the prototypes only display information and do not include user input capabilities.
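As a rough illustration of the back-end’s formatting step, the Python sketch below joins a spreadsheet that assigns SA information to building elements with BIM metadata and emits a JSON payload for the mobile application. Everything concrete here is an assumption: the column names, the element identifiers, the metadata fields, the payload shape, and the `build_payload` function are hypothetical, and the sketch assumes one task per element for simplicity. The actual prototype’s schema and API are not described in the text.

```python
import csv
import io
import json

# Hypothetical spreadsheet export: one row per (element, constraint) pair.
SPREADSHEET_CSV = """element_id,task,constraint,removed
wall-01,Install drywall,Material delivery,no
wall-01,Install drywall,Inspection approval,yes
ceil-01,Paint ceiling,Scaffold availability,yes
"""

# Hypothetical BIM metadata, as a Revit plugin might upload it.
BIM_METADATA = {
    "wall-01": {"category": "Walls", "level": "Level 2"},
    "ceil-01": {"category": "Ceilings", "level": "Level 2"},
}


def build_payload(csv_text, bim_metadata):
    """Merge spreadsheet rows with BIM metadata into one record per element."""
    elements = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        element_id = row["element_id"]
        entry = elements.setdefault(
            element_id,
            {
                "element_id": element_id,
                "metadata": bim_metadata.get(element_id, {}),
                "task": row["task"],  # assumes one task per element
                "constraints": [],
            },
        )
        entry["constraints"].append(
            {
                "name": row["constraint"],
                "removed": row["removed"].strip().lower() == "yes",
            }
        )
    for entry in elements.values():
        entry["open_constraints"] = sum(
            not c["removed"] for c in entry["constraints"]
        )
    return {"goal": "1.1.1", "elements": list(elements.values())}


payload = build_payload(SPREADSHEET_CSV, BIM_METADATA)
print(json.dumps(payload, indent=2))
```

Precomputing `open_constraints` on the server keeps the mobile client display-only, which matches the prototypes’ lack of user input capabilities.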

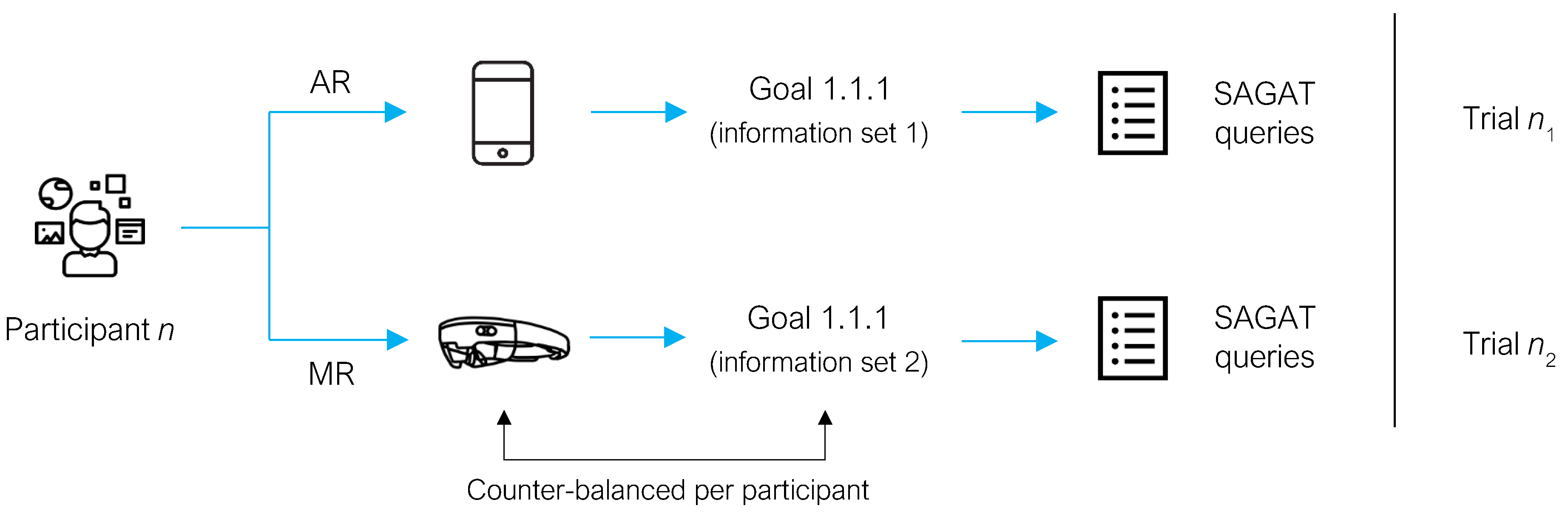

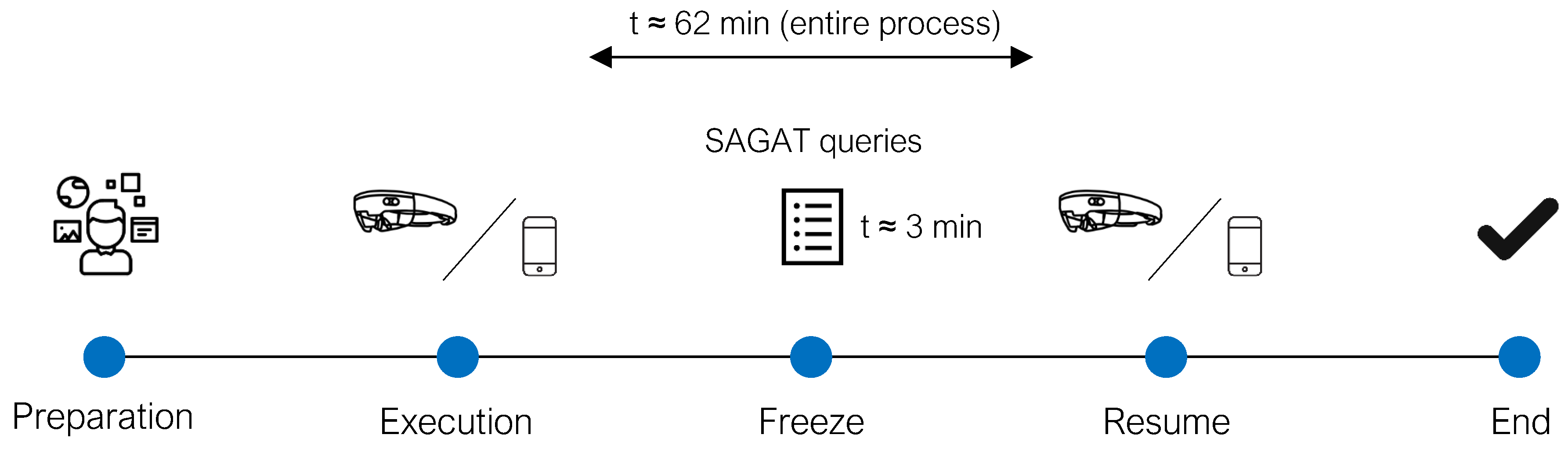

6.4.2. Simulation Testing

- (1) Preparation. Participants were briefed on the research and the purpose of the experiment. They then received detailed instructions on how to perform the trial and answer the queries. To familiarize themselves with the task and devices, participants conducted training trials using demo information with both AR and MR prototypes. Practice continued until they felt ready to proceed to the actual trial.

- (2) Execution. Participants were asked to perform a task as a field manager would normally do. The aim of the task was to verify the constraint removal on the weekly work plan (goal 1.1.1). For this, each participant was provided with five scheduled activities, some with constraints not removed. The AR/MR prototype showed which activities had constraints not removed and assisted the participant in deciding whether constraints could be removed on time or whether rescheduling was necessary.

- (3) Freeze. During the task, a pause was introduced at a random time (between 3 and 5 min after starting), at which point participants were required to leave the prototype and answer the SAGAT queries. Because the freeze was unexpected, participants could not prepare their answers in advance. The purpose of the pause was to capture a snapshot of the information the participant had gathered through the prototype, i.e., their perception of the situation at that moment. Later, this perception could be compared to the actual status of each variable (i.e., the correct answer to each query) to measure the participant’s SA objectively.

- (4) Resume. After the participant answered the queries, the trial resumed, and the participant continued reviewing the information to decide about the activities with constraints not removed.

- (5) End. The experiment concluded once the participant completed the assigned task.
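The freeze and scoring steps can be sketched in a few lines of Python. This is a hedged illustration of SAGAT-style scoring, in which each answer is compared with the actual status and marked correct or incorrect. The function names (`pick_freeze_time`, `score_sagat`), the query identifiers, and the example answers are hypothetical, and the per-level proportion shown here is one common way to summarize results, not necessarily the analysis used in the study.

```python
import random


def pick_freeze_time(rng, low_s=180.0, high_s=300.0):
    """Draw a freeze time in seconds, between 3 and 5 min after the start."""
    return rng.uniform(low_s, high_s)


def score_sagat(answers, ground_truth, levels):
    """Return the proportion of correct answers per SA level.

    answers:      query id -> participant's answer (missing means unanswered)
    ground_truth: query id -> actual status at the freeze
    levels:       query id -> SA level (1, 2, or 3) the query probes
    """
    counts = {}
    for query_id, truth in ground_truth.items():
        level = levels[query_id]
        correct, total = counts.get(level, (0, 0))
        is_correct = answers.get(query_id) == truth
        counts[level] = (correct + int(is_correct), total + 1)
    return {level: correct / total for level, (correct, total) in counts.items()}


# Hypothetical queries and answers, for illustration only.
levels = {"q1": 1, "q2": 1, "q3": 2, "q4": 3}
ground_truth = {"q1": "activity 3", "q2": "2", "q3": "not removed", "q4": "reschedule"}
answers = {"q1": "activity 3", "q2": "1", "q3": "not removed"}  # q4 unanswered

rng = random.Random(0)  # seeded so the draw is reproducible
print(round(pick_freeze_time(rng)), "s")
print(score_sagat(answers, ground_truth, levels))
```

Unanswered queries count as incorrect in this sketch; a study could equally treat them as missing data, which would change the denominators.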

7. Discussion

8. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AR | augmented reality |
| MR | mixed reality |
| SA | situation awareness |
| IA | immersive analytics |
| GDTA | goal-directed task analysis |

References

- Adekunle, P.; Aigbavboa, C.; Akinradewo, O.; Oke, A.; Aghimien, D. Construction Information Management: Benefits to the Construction Industry. Sustainability 2022, 14, 11366.

- Marriott, K.; Schreiber, F.; Dwyer, T.; Klein, K.; Henry, N.; Itoh, T.; Stuerzlinger, W.; Thomas, B. (Eds.) Immersive Analytics; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11190, ISBN 978-3-030-01387-5.

- Brigham, T.J. Reality Check: Basics of Augmented, Virtual, and Mixed Reality. Med. Ref. Serv. Q. 2017, 36, 171–178.

- Speicher, M.; Hall, B.D.; Nebeling, M. What Is Mixed Reality? In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Scotland, UK, 4–9 May 2019; pp. 1–15.

- Flavián, C.; Ibáñez-Sánchez, S.; Orús, C. The Impact of Virtual, Augmented and Mixed Reality Technologies on the Customer Experience. J. Bus. Res. 2019, 100, 547–560.

- Al-Sabbag, Z.A.; Yeum, C.M.; Narasimhan, S. Enabling Human–Machine Collaboration in Infrastructure Inspections through Mixed Reality. Adv. Eng. Inform. 2022, 53, 101709.

- Kazemzadeh, D.; Nazari, A.; Rokooei, S. Application of Augmented Reality in the Life Cycle of Construction Projects. In Industry 4.0 Applications for Full Lifecycle Integration of Buildings; Southfield Rd: Middelsbrough, UK, 2021; p. 248.

- Chen, K.; Xue, F. The Renaissance of Augmented Reality in Construction: History, Present Status and Future Directions. Smart Sustain. Built Environ. 2022, 11, 575–592.

- Nguyen, D.-C.; Nguyen, T.-Q.; Jin, R.; Jeon, C.-H.; Shim, C.-S. BIM-Based Mixed-Reality Application for Bridge Inspection and Maintenance. Constr. Innov. 2022, 22, 487–503.

- Katika, T.; Konstantinidis, F.K.; Papaioannou, T.; Dadoukis, A.; Bolierakis, S.N.; Tsimiklis, G.; Amditis, A. Exploiting Mixed Reality in a Next-Generation IoT Ecosystem of a Construction Site. In Proceedings of the 2022 IEEE International Conference on Imaging Systems and Techniques (IST), Kaohsiung, Taiwan, 21–23 June 2022; pp. 1–6.

- Ratajczak, J.; Schweigkofler, A.; Riedl, M.; Matt, D. Augmented Reality Combined with Location-Based Management System to Improve the Construction Process, Quality Control and Information Flow. In Advances in Informatics and Computing in Civil and Construction Engineering; Mutis, I., Hartmann, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 289–296. ISBN 978-3-030-00219-0.

- Kunic, A.; Naboni, R. Collaborative Design and Construction of Reconfigurable Wood Structures in a Mixed Reality Environment. In Proceedings of the Blucher Design Proceedings, São Paulo, Brazil, 16–20 October 2023; pp. 651–662.

- Woodward, J.; Ruiz, J. Analytic Review of Using Augmented Reality for Situational Awareness. IEEE Trans. Vis. Comput. Graph. 2022, 29, 2166–2183.

- Zhang, X.; Bai, X.; Zhang, S.; He, W.; Wang, S.; Yan, Y.; Wang, P.; Liu, L. A Novel Mixed Reality Remote Collaboration System with Adaptive Generation of Instructions. Comput. Ind. Eng. 2024, 194, 110353.

- Endsley, M.R.; Bolté, B.; Jones, D.G. Designing for Situation Awareness: An Approach to User-Centered Design, 1st ed.; CRC Press: Boca Raton, FL, USA, 2003; ISBN 9780429146732.

- Gheisari, M.; Irizarry, J. Investigating Facility Manager’s Decision Making Process through a Situation Awareness Approach. Int. J. Facil. Manag. 2011, 2, 1–11.

- Woodward, J.; Smith, J.; Wang, I.; Cuenca, S.; Ruiz, J. Examining the Presentation of Information in Augmented Reality Headsets for Situational Awareness. In Proceedings of the International Conference on Advanced Visual Interfaces, Salerno, Italy, 28 September–2 October 2020; pp. 28–32.

- Chandler, T.; Cordeil, M.; Czauderna, T.; Dwyer, T.; Glowacki, J.; Goncu, C.; Klapperstueck, M.; Klein, K.; Marriott, K.; Schreiber, F.; et al. Immersive Analytics. In Proceedings of the 2015 Big Data Visual Analytics (BDVA), Hobart, Australia, 22–25 September 2015; Volume 39, pp. 1–8.

- Cid Martins, N.; Marques, B.; Dias, P.; Sousa Santos, B. Extending the Egocentric Viewpoint in Situated Visualization Using Augmented Reality. In Proceedings of the 2023 27th International Conference Information Visualisation (IV), Tampere, Finland, 15–28 July 2023; pp. 83–89.

- Schwajda, D.; Friedl, J.; Pointecker, F.; Jetter, H.-C.; Anthes, C. Transforming Graph Data Visualisations from 2D Displays into Augmented Reality 3D Space: A Quantitative Study. Front. Virtual Real. 2023, 4, 1155628.

- Martins, N.C.; Marques, B.; Alves, J.; Araújo, T.; Dias, P.; Santos, B.S. Augmented Reality Situated Visualization in Decision-Making. Multimed. Tools Appl. 2022, 81, 14749–14772.

- Wang, X.; Kim, M.J.; Love, P.E.D.; Kang, S.-C. Augmented Reality in Built Environment: Classification and Implications for Future Research. Autom. Constr. 2013, 32, 1–13.

- Mondragon Solis, F.A.; O’Brien, W. Cognitive Analysis of Field Managers. In Construction Research Congress 2012: Construction Challenges in a Flat World; American Society of Civil Engineers: Reston, VA, USA, 2012; pp. 643–649.

- Pillajo, E.; Mourgues, C.; González, V.A. Formalizing the Information Requirements for Decision-Making of Field Managers during Indoor Construction Activities. Eng. Constr. Archit. Manag. 2024, 31, 4125–4145.

- Endsley, M.R. Design and Evaluation for Situation Awareness Enhancement. Proc. Hum. Factors Soc. Annu. Meet. 1988, 32, 97–101.

- Endsley, M.R.; Bolstad, C.A.; Jones, D.G.; Riley, J.M. Situation Awareness Oriented Design: From User’s Cognitive Requirements to Creating Effective Supporting Technologies. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2003, 47, 268–272.

- Kaber, D.B.; Segall, N.; Green, R.S.; Entzian, K.; Junginger, S. Using Multiple Cognitive Task Analysis Methods for Supervisory Control Interface Design in High-Throughput Biological Screening Processes. Cogn. Technol. Work 2006, 8, 237–252.

- Hong, T.C.; Andrew, H.S.Y.; Kenny, C.W.L. Assessing the Situation Awareness of Operators Using Maritime Augmented Reality System (MARS). Proc. Hum. Factors Ergon. Soc. 2015, 2015, 1722–1726.

- Czauderna, T.; Haga, J.; Kim, J.; Klapperstück, M.; Klein, K.; Kuhlen, T.; Oeltze-Jafra, S.; Sommer, B.; Schreiber, F. Immersive Analytics Applications in Life and Health Sciences. In Immersive Analytics; Marriott, K., Schreiber, F., Dwyer, T., Klein, K., Riche, N.H., Itoh, T., Stuerzlinger, W., Thomas, B.H., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 289–330. ISBN 978-3-030-01388-2.

- Sermarini, J.; Michlowitz, R.A.; LaViola, J.J.; Walters, L.C.; Azevedo, R.; Kider, J.T., Jr. BIM Driven Retrofitting Design Evaluation of Building Facades. In Proceedings of the 2022 ACM Symposium on Spatial User Interaction, Online, 1–2 December 2022; pp. 1–10.

- Sangiorgio, V.; Martiradonna, S.; Fatiguso, F.; Lombillo, I. Augmented Reality Based—Decision Making (AR-DM) to Support Multi-Criteria Analysis in Constructions. Autom. Constr. 2021, 124, 103567.

- Irizarry, J.; Gheisari, M. Situation Awareness (SA), a Qualitative User-Centered Information Needs Assessment Approach. Int. J. Constr. Manag. 2013, 13, 35–53.

- Dennis, A.; Wixom, B.; Roth, R.M. Systems Analysis and Design, 5th ed.; Wiley Publishing: Hoboken, NJ, USA, 2012; ISBN 1118057627.

- Thomas, B.H.; Welch, G.F.; Dragicevic, P.; Elmqvist, N.; Irani, P.; Jansen, Y.; Schmalstieg, D.; Tabard, A.; ElSayed, N.A.M.; Smith, R.T.; et al. Situated Analytics. In Immersive Analytics; Marriott, K., Schreiber, F., Dwyer, T., Klein, K., Riche, N.H., Itoh, T., Stuerzlinger, W., Thomas, B.H., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 185–220. ISBN 978-3-030-01388-2.

- Büschel, W.; Chen, J.; Dachselt, R.; Drucker, S.; Dwyer, T.; Görg, C.; Isenberg, T.; Kerren, A.; North, C.; Stuerzlinger, W. Interaction for Immersive Analytics. In Immersive Analytics; Marriott, K., Schreiber, F., Dwyer, T., Klein, K., Riche, N.H., Itoh, T., Stuerzlinger, W., Thomas, B.H., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 95–138. ISBN 978-3-030-01388-2.

- Elmqvist, N.; Moere, A.V.; Jetter, H.C.; Cernea, D.; Reiterer, H.; Jankun-Kelly, T.J. Fluid Interaction for Information Visualization. Inf. Vis. 2011, 10, 327–340.

- Marriott, K.; Chen, J.; Hlawatsch, M.; Itoh, T.; Nacenta, M.A.; Reina, G.; Stuerzlinger, W. Immersive Analytics: Time to Reconsider the Value of 3D for Information Visualisation. In Immersive Analytics; Marriott, K., Schreiber, F., Dwyer, T., Klein, K., Riche, N.H., Itoh, T., Stuerzlinger, W., Thomas, B.H., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 25–55. ISBN 978-3-030-01388-2.

- Ries, E. The Lean Startup: How Constant Innovation Creates Radically Successful Businesses, 14th ed.; Crown Publishing Group: New York, NY, USA, 2011; ISBN 978-0-307-88791-7.

- Núñez, D.; Ferrada, X.; Neyem, A.; Serpell, A.; Sepúlveda, M. A User-Centered Mobile Cloud Computing Platform for Improving Knowledge Management in Small-to-Medium Enterprises in the Chilean Construction Industry. Appl. Sci. 2018, 8, 516.

- Inzunza, O.; Neyem, A.; Sanz, M.E.; Valdivia, I.; Villarroel, M.; Farfán, E.; Matte, A.; López-Juri, P. Anatomicis Network: Una Plataforma de Software Educativa Basada En La Nube Para Mejorar La Enseñanza de La Anatomía En La Educación Médica. Int. J. Morphol. 2017, 35, 1168–1177.

- Endsley, M.R. Direct Measurement of Situation Awareness: Validity and Use of SAGAT. In Situation Awareness Analysis and Measurement; Endsley, M.R., Garland, D.J., Eds.; CRC Press: New York, NY, USA, 2000; pp. 147–174. ISBN 9781351548564.

- Hagl, M.; Friedrich, M.; Papenfuss, A.; Scherer-Negenborn, N.; Jakobi, J.; Rambau, T.; Schmidt, M. Augmented Reality in a Remote Tower Environment Based on VS/IR Fusion and Optical Tracking. In Engineering Psychology and Cognitive Ergonomics; Harris, D., Ed.; Springer International Publishing: Cham, Switzerland, 2018; pp. 558–571. ISBN 978-3-319-91122-9.

- Hasanzadeh, S.; Esmaeili, B.; Dodd, M.D. Examining the Relationship between Construction Workers’ Visual Attention and Situation Awareness under Fall and Tripping Hazard Conditions: Using Mobile Eye Tracking. J. Constr. Eng. Manag. 2018, 144, 04018060.

- Endsley, M.R. Measurement of Situation Awareness in Dynamic Systems. Hum. Factors 1995, 37, 65–84.

- Heer, J.; Shneiderman, B. Interactive Dynamics for Visual Analysis. Commun. ACM 2012, 55, 45–54.

- Apple Developer Augmented Reality Design. Available online: https://developer.apple.com/design/human-interface-guidelines/technologies/augmented-reality/ (accessed on 24 August 2022).

- Microsoft Learn Mixed Reality—Design. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/design/app-patterns-landingpage (accessed on 8 September 2022).

- Soegaard, M. The Basics of User Experience Design; Interaction Design Foundation: Aarhus, Denmark, 2018.

- Lovreglio, R.; Gonzalez, V.; Feng, Z.; Amor, R.; Spearpoint, M.; Thomas, J.; Trotter, M.; Sacks, R. Prototyping Virtual Reality Serious Games for Building Earthquake Preparedness: The Auckland City Hospital Case Study. Adv. Eng. Inform. 2018, 38, 670–682.

- Newman, S. Building Microservices, 1st ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2015; ISBN 1491950358.

- Endsley, M.R.; Mogford, R.; Allendoerfer, K.; Snyder, M.; Stein, E. Effect of Free Flight Conditions on Controller Performance, Workload, and Situation Awareness; FAA William J. Hughes Technical Center: Atlantic City, NJ, USA, 1997.

- Hogan, M.P.; Pace, D.E.; Hapgood, J.; Boone, D.C. Use of Human Patient Simulation and the Situation Awareness Global Assessment Technique in Practical Trauma Skills Assessment. J. Trauma Inj. Infect. Crit. Care 2006, 61, 1047–1052.

- Lee Chang, A.; Dym, A.A.; Venegas-Borsellino, C.; Bangar, M.; Kazzi, M.; Lisenenkov, D.; Qadir, N.; Keene, A.; Eisen, L.A. Comparison between Simulation-Based Training and Lecture-Based Education in Teaching Situation Awareness. A Randomized Controlled Study. Ann. Am. Thorac. Soc. 2017, 14, 529–535.

- Vasileiou, K.; Barnett, J.; Thorpe, S.; Young, T. Characterising and Justifying Sample Size Sufficiency in Interview-Based Studies: Systematic Analysis of Qualitative Health Research over a 15-Year Period. BMC Med. Res. Methodol. 2018, 18, 148.

- Dokter, G. Exploratory Research and Its Impact on Problem Identification. J. Res. Dev. 2023, 11, 219.

- Kuder, G.F.; Richardson, M.W. The Theory of the Estimation of Test Reliability. Psychometrika 1937, 2, 151–160.

- Liu, C.; González, V.A.; Lee, G.; Cabrera-Guerrero, G.; Zou, Y.; Davies, R. Integrating the Last Planner System and Immersive Virtual Reality: Exploring the Social Mechanisms Produced by Using LPS in Projects. J. Constr. Eng. Manag. 2024, 150, 1–18.

- Brade, J.; Lorenz, M.; Busch, M.; Hammer, N.; Tscheligi, M.; Klimant, P. Being There Again—Presence in Real and Virtual Environments and Its Relation to Usability and User Experience Using a Mobile Navigation Task. Int. J. Hum. Comput. Stud. 2017, 101, 76–87.

- Kim, H.-Y. Statistical Notes for Clinical Researchers: Sample Size Calculation 2. Comparison of Two Independent Proportions. Restor. Dent. Endod. 2016, 41, 154.

- Nasser-Dine, A. A Systematic Method to Perform Goal Directed Task Analysis with Application to Enterprise Architecture. Ph.D. Thesis, Université du Québec, Québec, QC, Canada, 2021.

- Wang, J.; Yang, J.; Fu, Q.; Zhang, J.; Zhang, J. A New Dynamic Spatial Information Design Framework for AR-HUD to Evoke Drivers’ Instinctive Responses and Improve Accident Prevention. Int. J. Hum. Comput. Stud. 2024, 183, 103194.

- Lee, B.; Sedlmair, M.; Schmalstieg, D. Design Patterns for Situated Visualization in Augmented Reality. IEEE Trans. Vis. Comput. Graph. 2023, 30, 1324–1335.

- Kotian, A.L.; Nandipi, R.; Ushag, M.; Varshauk; Veena, G.T. A Systematic Review on Human and Computer Interaction. In Proceedings of the 2024 2nd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Bengaluru, India, 4–6 January 2024; pp. 1214–1218.

| Category | Parameter | Detail |
|---|---|---|
| Content | Description | This explains the information requirement to be presented in the AR/MR interface, providing designers/developers with a clear idea of it. Additionally, it specifies the SA level (1-perception, 2-comprehension, or 3-projection) of the information requirement. |
| Content | Type | This denotes the nature of the information requirement according to the data and/or the context in which it is addressed. The information requirement may be explicit (e.g., text, numeric, date, or file, such as attachments or multimedia) or implicit (e.g., mental constructs). |
| Presentation | Spatial association | This specifies which element(s) in the immersive environment should be associated/linked with the information requirement. |
| Presentation | Visualization | This specifies how to display the information according to its level of SA, type, and context in terms of colors, visibility scope, and position, among other features. |
| Interaction | AR interaction | This specifies the interaction: devices, modes, tasks, and flow for interacting with the information requirements within the AR environment. |
| Interaction | MR interaction | This specifies the interaction: devices, modes, tasks, and flow for interacting with the information requirements within the MR environment. |

| Goal | 1.1.1 Verify Constraint Removal on the Weekly/Immediate Work Plan |
|---|---|
| Decisions | |
| SA requirements | |

| Type of Requirement | Operational Requirements |
|---|---|
| Cognitive requirements | |
| Physical environment | |
| Technical environment | |
| System integration | |
| Portability | |
| Maintainability | |

| Theoretical Framework | Area | No. | Design Guidelines |
|---|---|---|---|
| Situation awareness (SA) [15] | Core principles | 1 | Organize information around goals |
| | | 2 | Present level 2 information directly (support comprehension) |
| | | 3 | Provide assistance for level 3 SA projections |
| | | 4 | Support trade-offs between goal-driven and data-driven processing: top-down and bottom-up processing |
| | | 5 | Make critical cues for schema activation salient |
| | | 6 | Use information filtering carefully |
| | To address uncertainty | 7 | Explicitly identify missing information |
| | | 8 | Support sensor reliability assessment |
| | To tame complexity | 9 | Just say no to feature creep: buck the trend |
| | | 10 | Manage rampant featurism through prioritization and flexibility |
| | | 11 | Ensure logical consistency across modes and features |
| | | 12 | Minimize logic branches |
| | | 13 | Map system functions to the goals and mental models of users |
| | | 14 | Group information based on level 2/3 SA requirements and goals |
| | | 15 | Reduce display density, but do not sacrifice coherence |
| | | 16 | Provide consistency and standardization on controls across different displays and systems |
| | | 17 | Minimize task complexity |
| | To design automated systems | 18 | Automate only if necessary |
| | | 19 | Use automation for assistance in carrying out routine actions rather than higher-level cognitive tasks |
| | | 20 | Provide SA support rather than decisions |
| | | 21 | Keep the operator in control and in the loop |
| | | 22 | Make modes and system states salient |
| | | 23 | Use methods of decision support that create human/system symbiosis |
| Immersive analytics (IA) [2] | Situated analytics [34] | 24 | Consider the size and visibility of the referents and environments with which the system will be used |
| | | 25 | Consider how easy it is to distinguish, track, and connect physical referents with related data |
| | | 26 | Ensure that visual augmentations do not distract the user’s attention away from the environment |
| | | 27 | Ensure the alignment between virtual presentations and physical referents |
| | | 28 | Emphasize the use of real-time data |
| | | 29 | Choose visual encodings that ensure the system informs but does not hinder the user’s actions |
| | Embodied data exploration [35,36] | 30 | Use smooth animated transitions between states |
| | | 31 | Provide immediate visual feedback during interaction |
| | | 32 | Minimize indirection in the interface (use direct manipulation) |
| | | 33 | Integrate user interface (UI) components into the visual representation (integrate 2D UIs in 3D environments) |
| | | 34 | Reward interaction |
| | | 35 | Ensure that interaction never “ends” |
| | | 36 | Reinforce a clear conceptual model (keep a clear idea of the system’s state) |
| | | 37 | Use interaction modes that minimize strain or fatigue on the user |
| | | 38 | Harness physical navigation for precise inspection or general overview within the immersive environment |
| | Spatial immersion [37] | 39 | Choose between 2D and/or 3D representations according to the task to address |
| | | 40 | Prefer 3D representations for depth-related tasks (including spatial understanding and spatial manipulation) |
| | | 41 | Prefer 2D representations for precise manipulation or accurate data value measurement or comparison |
| | | 42 | Prefer 2D billboard-style text display instead of 3D text attached directly on spatial objects |
| | | 43 | Harness the unlimited 3D display space for arranging multiple views |

| SA Information Requirement | Content: Description | Content: Type | Presentation: Spatial Association | Presentation: Visualization | Interaction: AR | Interaction: MR |
|---|---|---|---|---|---|---|
| Current status of the constraints of the weekly/immediate work plan | Level 2 SA. It represents the field manager’s mental picture of whether the tasks to perform have all their constraints removed. Constraints not removed matter most because they need to be addressed. | Mental construct | • The digital building model should be overlaid on the real-world environment to present and access the information. • Planned tasks and their constraints can be associated/linked with virtual 3D building elements in the immersive environment. | • Only show the virtual 3D building elements that have tasks with constraints not removed. • Color these elements according to the status of the constraint not removed (e.g., red if the constraint removal is “delayed” or orange if it is “in progress”). • If all the constraints were removed, present a colored message (e.g., in green) in the middle of the field of view to report it. | Interaction device: handset (smartphone). Interaction mode: tactile gesture. Interaction tasks (action-purpose): • Tap for selecting. • Body-based physical movement for navigating within the immersive environment. Interaction flow: • Once the button to address the current goal is selected, two scenarios can occur: (1) If all the planned task constraints were removed, a colored message appears in the center of the screen to inform the field manager. (2) If there are tasks with constraints not removed, the immersive environment presents only the colored 3D building elements that have associated tasks with constraints not removed. • The field manager can move the handset or walk, holding and gazing at the handset, to inspect and recognize the elements and tasks with constraints not removed that need to be addressed. | Interaction device: headset (Trimble XR10). Interaction modes: multimodal interaction (i.e., eye/head gaze and hand gesture). Interaction tasks (action-purpose): • Head gaze and eye gaze for tracking. • Air-tap, point and commit with hands, eye gaze and pinch for selecting. • Body-based physical movement for navigating within the immersive environment. Interaction flow: • Once the button to address the current goal is selected, two scenarios can occur: (1) If all the planned task constraints were removed, a colored message appears in front of the field manager within the immersive environment to inform them. (2) If there are tasks with constraints not removed, the immersive environment presents only the colored 3D building elements that have associated tasks with constraints not removed. • The field manager can move the head or walk within the environment to inspect and recognize the elements and tasks with constraints not removed that need to be addressed. |
| Planned tasks | Level 1 SA. Planned tasks are activities that labor has to execute during an established time. Each task has information that varies according to the goal addressed. For goal 1.1.1, that information concerns constraints. | Text | • Tasks can be associated with the virtual elements they address (e.g., the task “installation of luminaires in sector A” can be associated with the virtual lamps in the immersive environment). | • Tasks should denote the status of their constraints within the immersive environment, especially if they have constraints not removed. Use colors for this purpose. • When the task has more than one type of constraint not removed (e.g., “in progress” and “delayed”), apply the most critical case (i.e., “delayed”). | | |
| Constraints removed | Level 1 SA. Constraints are the requisites for performing a task (e.g., labor, material, equipment, authorizations, information). Constraints removed are the requisites that are ready for task execution. | Text | • Constraints can be associated with the virtual elements within the work area through their corresponding task. | • If a task has its constraints removed, the associated 3D virtual elements will not appear within the immersive environment. • If all the tasks have all their constraints removed, a colored message should appear in front of the field manager to report it. | | |
| Constraints not removed | Level 1 SA. Constraints are the requisites for performing a task (e.g., labor, material, equipment, authorizations, information). Constraints not removed are the requisites that are not yet available for task execution. | Text | • Constraints can be associated with the virtual elements within the work area through their corresponding task. | • Constraints not removed can have two statuses: “in progress” and “delayed”, the latter being more critical. • Use different colors to differentiate them (e.g., red for “delayed” and orange for “in progress”). | | |
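
The visualization rules in this table (show only elements whose tasks have constraints not removed, color by the most critical status, and show a message when everything is cleared) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the prototype's code: the `Task` class, the function names, the status strings, and the message text are hypothetical, and the colors are the examples given in the table.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

# Higher number = more critical. "removed" means the constraint is cleared.
SEVERITY = {"removed": 0, "in progress": 1, "delayed": 2}
# Example colors from the table (orange for "in progress", red for "delayed").
STATUS_COLOR = {"in progress": "orange", "delayed": "red"}


@dataclass
class Task:
    name: str
    element_ids: List[str]          # virtual 3D building elements linked to the task
    constraint_statuses: List[str]  # one status per constraint of the task


def task_status(task: Task) -> str:
    """Most critical constraint status of a task ("removed" if it has no constraints)."""
    return max(task.constraint_statuses, key=SEVERITY.__getitem__, default="removed")


def elements_to_show(tasks: List[Task]) -> Dict[str, str]:
    """Map each element that must be displayed to its color; cleared elements are omitted."""
    worst: Dict[str, str] = {}
    for task in tasks:
        status = task_status(task)
        if status == "removed":
            continue  # tasks with all constraints removed are not displayed
        for element_id in task.element_ids:
            current = worst.get(element_id, "removed")
            if SEVERITY[status] > SEVERITY[current]:
                worst[element_id] = status
    return {element_id: STATUS_COLOR[s] for element_id, s in worst.items()}


def render_plan(tasks: List[Task]) -> Tuple[Dict[str, str], Optional[str]]:
    """Return the colored elements and, if nothing is pending, a message to display."""
    colored = elements_to_show(tasks)
    message = None if colored else "All constraints removed"  # shown, e.g., in green
    return colored, message


tasks = [
    Task("Installation of luminaires in sector A", ["lamp-1", "lamp-2"],
         ["in progress", "delayed"]),
    Task("Wall painting", ["wall-1"], ["removed", "removed"]),
]
colored, message = render_plan(tasks)
# colored == {"lamp-1": "red", "lamp-2": "red"}, message is None
```

Ranking statuses numerically keeps the "apply the most critical case" rule in one place, so adding a further status would only require a new entry in the two dictionaries.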

| Category | Attribute | Participants, n (%) (total n = 14) |
|---|---|---|
| Occupation | Undergraduate civil engineering student | 4 (28.6%) |
| | Construction manager | 1 (7.1%) |
| | Field manager | 1 (7.1%) |
| | Field inspector | 1 (7.1%) |
| | BIM manager | 1 (7.1%) |
| | General manager | 1 (7.1%) |
| | Real estate manager | 1 (7.1%) |
| | Project engineer | 1 (7.1%) |
| | Project manager | 2 (14.3%) |
| | R&D coordinator | 1 (7.1%) |
| Work experience | No experience | 4 (28.6%) |
| | 5–15 years | 7 (50.0%) |
| | Over 16 years | 3 (21.4%) |

| Query | AR p-Value | MR p-Value |
|---|---|---|
| 1. Constraint’s current status | 1.000 | 0.714 |
| 2. Name of the planned task on review | 1.000 | 1.000 |
| 3. Number of constraints removed | 0.670 | 0.689 |
| 4. Number of constraints not removed | 0.689 | 0.210 |
| 5. Name of the constraint not removed | 0.546 | 0.280 |
| 6. Estimated removal date | 0.406 | 0.720 |
| 7. Type/nature of the constraint | 0.689 | 0.670 |
| 8. Comments on the constraint removal | 0.689 | 0.594 |
| 9. Name of the individual responsible | 0.720 | 0.406 |
| 10. Reliability of the person responsible for the constraint | 0.495 | 0.330 |
| 11. Probability of removing constraint in time | 0.689 | 0.506 |

| Query | AR: Student | AR: Practitioner | MR: Student | MR: Practitioner |
|---|---|---|---|---|
| 1. Constraint’s current status | 100% | 100% | 100% | 90% |
| 2. Name of the planned task on review | 100% | 100% | 100% | 100% |
| 3. Number of constraints removed | 75% | 80% | 75% | 70% |
| 4. Number of constraints not removed | 75% | 70% | 100% | 60% |
| 5. Name of the constraint not removed | 75% | 60% | 75% | 40% |
| 6. Estimated removal date | 75% | 50% | 50% | 50% |
| 7. Type/nature of the constraint | 75% | 70% | 75% | 80% |
| 8. Comments on the constraint removal | 75% | 70% | 50% | 40% |
| 9. Name of the individual responsible | 50% | 50% | 75% | 50% |
| 10. Reliability of the person responsible for the constraint | 100% | 80% | 100% | 70% |
| 11. Probability of removing constraint in time | 75% | 70% | 75% | 90% |
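
Student and practitioner percentages such as these can be compared with an exact test on the 2×2 table of group by correct answer. The following is a minimal sketch assuming a one-sided Fisher's exact test taken in the direction of the observed difference. The choice of test is an assumption (this section does not name it), as are the function name `fisher_one_sided` and the group sizes of 4 students and 10 practitioners, which are read from the participant table. Under these assumptions, the sketch reproduces the student-versus-practitioner p-values tabulated per environment for the queries shown.

```python
from math import comb


def fisher_one_sided(a_correct: int, a_total: int, b_correct: int, b_total: int) -> float:
    """One-sided Fisher exact p-value in the direction of the observed difference.

    Group A has a_correct correct answers out of a_total; group B likewise.
    """
    n = a_total + b_total        # all participants
    k = a_correct + b_correct    # all correct answers
    denom = comb(n, a_total)

    def pmf(x: int) -> float:
        # Hypergeometric probability that group A holds x of the k correct answers.
        return comb(k, x) * comb(n - k, a_total - x) / denom

    lo = max(0, a_total - (n - k))   # smallest feasible count for group A
    hi = min(a_total, k)             # largest feasible count for group A
    lower_tail = sum(pmf(x) for x in range(lo, a_correct + 1))
    upper_tail = sum(pmf(x) for x in range(a_correct, hi + 1))
    return min(lower_tail, upper_tail)


# Percentages converted to counts with 4 students and 10 practitioners (assumed sizes).
p_q3_ar = fisher_one_sided(3, 4, 8, 10)   # query 3, AR: 75% vs. 80%
p_q1_mr = fisher_one_sided(4, 4, 9, 10)   # query 1, MR: 100% vs. 90%
p_q4_mr = fisher_one_sided(4, 4, 6, 10)   # query 4, MR: 100% vs. 60%
print(round(p_q3_ar, 3), round(p_q1_mr, 3), round(p_q4_mr, 3))  # 0.67 0.714 0.21
```

With only four students, a single answer shifts a percentage by 25 points, which is why even a 100% versus 60% split (query 4, MR) stays far from conventional significance thresholds.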

| Query | p-Value |
|---|---|
| 1. Constraint’s current status | 0.500 |
| 2. Name of the planned task on review | 1.000 |
| 3. Number of constraints removed | 0.500 |
| 4. Number of constraints not removed | 0.661 |
| 5. Name of the constraint not removed | 0.352 |
| 6. Estimated removal date | 0.500 |
| 7. Type/nature of the constraint | 0.500 |
| 8. Comments on the constraint removal | 0.126 |
| 9. Name of the individual responsible | 0.500 |
| 10. Reliability of the person responsible for the constraint | 0.500 |
| 11. Probability of removing constraint in time | 0.324 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pillajo, E.; Mourgues, C.; Neyem, A.; González, V.A. An Interface Design Method Based on Situation Awareness and Immersive Analytics for Augmented and Mixed Reality Decision Support Systems in Construction. Appl. Sci. 2025, 15, 7820. https://doi.org/10.3390/app15147820
