1. Introduction

With the rapid development of smart cities, the demand for video surveillance systems has increased significantly. Visual object tracking, a fundamental task in computer vision, is commonly divided into single-object tracking (SOT) and multi-object tracking (MOT). MOT, which aims to locate multiple specific objects and maintain their unique identities across sequential frames, has received increasing attention in recent years owing to its role in advancing video understanding, intelligent surveillance, human–computer interaction, and medical diagnostics. The tracking-by-detection (TBD) framework has recently become the dominant paradigm in MOT [1,2,3]. It transforms the MOT task into a frame-by-frame data association problem, typically implemented in a centralized system.

Extensive research has addressed complex environmental conditions and target occlusion with the aim of mitigating association failures and target loss. However, pedestrian trajectories, the primary focus of MOT, often deviate from purely deterministic models because their spatiotemporal evolution is jointly governed by objective physical constraints and subjective cognitive decision-making. To characterize pedestrian locomotion patterns, we propose a dual-phase paradigm comprising a decision phase and an action phase. The decision phase encompasses non-observable processes, including destination planning, obstacle avoidance, and speed adjustment, whereas the action phase contains only the observable process of physical movement. This dual-phase structure challenges traditional tracking methods, because variable cognitive intentions and external environmental perturbations introduce significant uncertainty.

The Hidden Markov Model (HMM), a type of probabilistic graphical model, provides a robust framework for modeling sequential probabilistic problems and supports efficient inference. Within this framework, each latent state representing a pedestrian's intention depends only on the preceding state. This makes the HMM particularly well suited to pedestrian tracking, because it represents a doubly stochastic process: an unobservable decision phase governed by internal cognitive states and an observable action phase manifested as measurements [4].
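To make the doubly stochastic structure concrete, the following minimal sketch runs the normalized forward algorithm on a toy two-state HMM: hidden "intentions" evolve as a Markov chain, and each one emits an observable motion cue. The state names (`keep_walking`, `stop`), the observation symbols, and every probability are hypothetical values chosen for illustration; they are not parameters from this work.

```python
STATES = ("keep_walking", "stop")

# Hypothetical parameters, for illustration only.
INIT = {"keep_walking": 0.8, "stop": 0.2}
# TRANS[i][j] = P(z_t = j | z_{t-1} = i): the next intention depends only on the previous one.
TRANS = {
    "keep_walking": {"keep_walking": 0.9, "stop": 0.1},
    "stop": {"keep_walking": 0.3, "stop": 0.7},
}
# EMIT[i][o] = P(y_t = o | z_t = i): the observable action phase.
EMIT = {
    "keep_walking": {"moving": 0.85, "still": 0.15},
    "stop": {"moving": 0.1, "still": 0.9},
}


def forward_filter(observations):
    """Return the filtered posteriors P(z_t | y_1..y_t) for every t (normalized forward algorithm)."""
    posteriors = []
    belief = None
    for obs in observations:
        if belief is None:
            prior = dict(INIT)
        else:
            # Predict: push the previous belief through the transition model.
            prior = {j: sum(belief[i] * TRANS[i][j] for i in STATES) for j in STATES}
        # Update: weight by the observation likelihood, then renormalize.
        unnormalized = {s: prior[s] * EMIT[s][obs] for s in STATES}
        evidence = sum(unnormalized.values())
        belief = {s: v / evidence for s, v in unnormalized.items()}
        posteriors.append(belief)
    return posteriors
```

For the observation sequence `["moving", "moving", "still", "still"]`, the filtered belief stays on `keep_walking` at first and shifts toward `stop` once the still observations accumulate, showing how an unobserved intention is inferred from observed motion.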

Furthermore, target features are themselves highly uncertain, as they are affected by changes in orientation, pose, and appearance. This variability aligns with the entropy framework, which quantifies information disorder. Leveraging this insight, we employ weighted entropy [5] to characterize feature uncertainty dynamically, enabling adaptive fusion strategies that remain robust to noisy detections.

However, introducing these two mechanisms imposes an unavoidable computational load on traditional track management, which also suffers from weak algorithmic adaptability and limited system scalability. We therefore introduce a multi-agent mechanism to manage object tracking. Under the hypothesis that pedestrians do not exhibit abrupt large displacements between consecutive frames, we formulate each object as an agent that maintains a separate HMM-based Kalman filter and independently decides on the creation, association, prediction, and termination of its track, as well as on the feature weights; this decision process is formulated as a Markov decision process. Each agent is trained on rewards obtained from the environment. Detections are associated based on each filter's prior prediction of the tracked object's position, and the feature weights are determined from the agents' entropy evaluations.

Therefore, the main contributions of this paper are as follows:

Decomposing pedestrian locomotion into a decision phase and an action phase according to human cognitive patterns: This shifts the focus to analyzing dynamic movement patterns rather than objective conditions alone, and the dual-phase structure naturally accommodates probabilistic modeling frameworks.

Reconstructing Kalman filters within a unified HMM framework: This architecture explicitly models state transitions and observation processes corresponding to the dual-phase model, enabling probabilistic inference and better prediction of pedestrian trajectories.

The proposal of a weighted entropy mechanism for adaptive multi-cue fusion, dynamically balancing appearance and motion cues based on real-time uncertainty quantification: By leveraging entropy as a measure of feature reliability, our mechanism adjusts fusion weights to prioritize stable features during tracking, enhancing robustness to occlusions and appearance changes.

The introduction of a multi-agent mechanism to manage tracks separately: By assigning each track to an independent agent, the HMM-based Kalman filter and the weighted-entropy multi-cue fusion can be executed efficiently and updated according to the obtained rewards.

2. Related Work

In this section, we review recent developments in tracking-by-detection (TBD) frameworks and applications of probabilistic graphical models (PGMs) in multi-object tracking (MOT).

2.1. Tracking-by-Detection

In the TBD paradigm, a class of widely adopted MOT frameworks [1,2,3] employs a two-stage pipeline: objects of interest are first detected, and cross-frame associations are then established using complementary cues such as appearance and motion features. SORT [2] exemplifies this paradigm by using the Kalman filter for motion state prediction and the Hungarian algorithm for association. To address occlusion, DeepSORT [3] enhances the association strategy by incorporating appearance features extracted by deep convolutional neural networks into the cost matrix. Building on this foundation, Du et al. [1] introduced the StrongSORT framework, which refines the detection, embedding, and association modules through lightweight, plug-and-play components. Wang et al. [6] proposed a General Recurrent Tracking Unit that captures long-term temporal dependencies to score track proposals, mitigating detection errors. Zhang et al. [7] advanced monocular tracking via a detection multiplexing strategy, introducing a multiplex labeling graph model with independent nodes.

Despite these advances, most of these methods rely predominantly on bipartite graph matching for data association, which often models intricate situations inadequately.

2.2. Multi-Agent Mechanism in MOT

Shen et al. [8] propose a distributed MOT algorithm based on multi-agent reinforcement learning, which formulates MOT as a Markov decision process to improve the adaptability of the algorithm. Focusing on efficient online approaches that can run in real time, Rosello and Kochenderfer [9] present a novel multi-agent reinforcement learning formulation of MOT that treats creating, propagating, and terminating object tracks as actions in a sequential decision-making problem.

2.3. Probabilistic Models in MOT

Segal and Reid [10] introduce a latent data association framework that models state nodes across consecutive time slices as interconnected through probabilistic dependencies, reframing data association and model selection as a unified inference problem. To reduce computational complexity, Chen [11] employs Markov random fields with loopy belief propagation (LBP), replacing cluster graphs with a more tractable model under the assumption that the target count, detection probability, and clutter density are known a priori. Wang, de La Gorce, and Paragios [12] tackle image noise, cluttered backgrounds, and camera motion by integrating a pairwise Markov random field into a joint 2.5D layered model, which enables simultaneous image segmentation and tracking.

However, these methods often overlook the distinct stochastic motion patterns of pedestrians and the inherent instability of tracking cues, treating pedestrians the same as other dynamic objects in the scene. To address this, we emphasize the dual-phase pattern of targets by formulating tracking within an HMM framework. We further introduce a weighted entropy mechanism that quantifies the uncertainty of each feature's characterization capacity during multi-cue fusion, enabling adaptive recalibration of the fusion weights to mitigate bias in noisy or cluttered conditions.

3. Multi-Agent-Supported Tracking Based on Hidden Markov Model with Weighted Entropy

3.1. Overview

Unlike traditional TBD methods that perform global optimization and track updates centrally, we address the MOT problem with a multi-agent mechanism, assigning an independent agent to each track when a new target enters the scene. Each agent manages a separate Kalman filter and takes actions to modify the track under its responsibility. As shown in Figure 1, in each frame the agent obtains detections from the detector and makes its decision according to the Kalman filter and the weighted entropy mechanism. The framework can run either in a decentralized manner or as multiple threads. The agents compete for detections under the communication protocol proposed by Noukhovitch et al. [13] and adjust their strategies through trust-region policy optimization (TRPO), based on rewards determined by the joint tracking performance relative to the ground-truth object tracks. The reward combines the motion similarity between a track and the selected detection with their appearance similarity, balanced by a weight parameter.
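The exact form of the reward is not recoverable from the text, so the sketch below assumes, purely for illustration, a convex combination of the two similarities with a weight in [0, 1], and uses cosine similarity between embedding vectors as a stand-in for appearance similarity. The function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two embedding vectors (assumed appearance measure); 0 for zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a > 0 and norm_b > 0 else 0.0


def association_reward(motion_sim, appearance_sim, weight):
    """Hypothetical reward: weight * motion similarity + (1 - weight) * appearance similarity."""
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return weight * motion_sim + (1.0 - weight) * appearance_sim
```

For example, `association_reward(0.8, cosine_similarity([1.0, 0.0], [1.0, 0.0]), 0.5)` returns approximately 0.9. A convex combination keeps the reward on the same scale as the similarities, which makes the weight easy to interpret.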

3.2. Details of the Tracking Process

Following the principle of StrongSORT [1], tracks are initialized with detections and their extracted features and assigned to an agent, as shown in Figure 1. In the t-th frame of the video sequence, the object detector first generates detections $D_t = \{d_t^1, \dots, d_t^N\}$, where N is the total number of detections. Each detection $d_t^j$ contains a location, a width, and a height. These detections are distributed to the agents as candidates, and each agent may either accept detections or wait until the next frame. An agent that chooses to wait receives a reward of 1 if the corresponding ground-truth track also waits, and −1 otherwise. When agents accept detections, a single detection may be claimed by more than one agent. For instance, if four agents managing four tracks attempt to claim the same detection simultaneously, that detection obviously cannot be assigned to all of them. To resolve this, one claim is designated as the valid assignment, and the other three agents are temporarily allocated to a collision set. Once all detections have been processed, the agents in the collision set locally compute and share the rewards of each candidate, and the total reward is maximized to produce the final assignment of detections.
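The waiting reward below follows the text directly. The collision step is a hedged sketch: it assumes each agent in the collision set has already computed a reward for each of its candidate detections, and that the final assignment maximizes the summed reward with every detection used at most once. The agent and detection identifiers, the zero reward for an agent left unassigned, and the exhaustive search are illustrative assumptions; the text does not specify the optimizer.

```python
def wait_reward(ground_truth_waits):
    """Reward for an agent that chooses to wait: +1 if its ground-truth track also waits, else -1."""
    return 1 if ground_truth_waits else -1


def resolve_collisions(rewards):
    """Assign at most one detection per agent, each detection at most once, maximizing total reward.

    rewards: dict mapping agent id -> dict mapping detection id -> reward.
    Unassigned agents contribute 0 (hypothetical choice). The search is exhaustive and
    exponential, so it only suits the small collision sets of a single frame.
    """
    agents = sorted(rewards)
    best = {"total": float("-inf"), "assignment": {}}

    def search(idx, used, total, assignment):
        if idx == len(agents):
            if total > best["total"]:
                best["total"] = total
                best["assignment"] = dict(assignment)
            return
        agent = agents[idx]
        # Option 1: the agent receives no detection in this frame.
        assignment[agent] = None
        search(idx + 1, used, total, assignment)
        # Option 2: the agent takes one of its candidate detections not yet taken.
        for det, reward in rewards[agent].items():
            if det not in used:
                assignment[agent] = det
                search(idx + 1, used | {det}, total + reward, assignment)
        del assignment[agent]

    search(0, frozenset(), 0.0, {})
    return best["assignment"], best["total"]
```

With `rewards = {"a1": {"d1": 0.9, "d2": 0.4}, "a2": {"d1": 0.8, "d2": 0.7}, "a3": {"d1": 0.3}}`, agents `a1` and `a2` keep `d1` and `d2` for a total of about 1.6, and `a3` waits for the next frame. For larger sets, an optimal assignment solver (for example, the Hungarian algorithm on negated rewards) would replace the exhaustive search.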

Each agent then associates the detections with its managed track according to a cost matrix constructed by multi-cue fusion with weighted entropy. The cost matrix represents the similarity between existing tracks and new detections and defines a bipartite graph for the matching problem. Before fusion, the agent normalizes the appearance and motion features. The entropy of the fused feature is then computed to quantify its uncertainty, and the optimal fusion coefficient is derived by entropy minimization to balance the feature weights dynamically. Details of the formulation are provided in Section 3.4.

After association matching, the agent uses the matched detections as measurements for the Kalman filter update, which computes the Kalman gain, updates the state estimate, and refines the error covariance. Then, under the hypothesis that pedestrians do not exhibit abrupt large displacements between consecutive frames, the agent uses the updated HMM-based Kalman filter (detailed in Section 3.3) to predict object locations in the next frame, thus providing priors for the next round of association matching. For tracks and detections left unmatched by the initial association, the agent applies IoU matching to generate additional valid track-detection pairs. Remaining unassociated detections are initialized as new tracks, while unmatched tracks are marked for deletion unless the corresponding targets reappear in subsequent frames.
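The secondary IoU stage can be sketched as follows. This is a minimal illustration, not the authors' implementation: boxes are assumed to be `(x, y, w, h)` with `(x, y)` the top-left corner, the 0.3 threshold is a hypothetical value, and a greedy matcher is used only to keep the sketch dependency-free (an optimal solver such as the Hungarian algorithm is a common alternative).

```python
def iou(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes with (x, y) the top-left corner."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def greedy_iou_match(tracks, detections, threshold=0.3):
    """Greedily pair leftover tracks and detections by descending IoU (threshold is hypothetical)."""
    candidates = sorted(
        ((iou(t, d), ti, di) for ti, t in enumerate(tracks) for di, d in enumerate(detections)),
        reverse=True,
    )
    matched_tracks, matched_dets, pairs = set(), set(), []
    for score, ti, di in candidates:
        if score < threshold:
            break  # all remaining candidates overlap even less
        if ti in matched_tracks or di in matched_dets:
            continue
        pairs.append((ti, di))
        matched_tracks.add(ti)
        matched_dets.add(di)
    unmatched_tracks = [i for i in range(len(tracks)) if i not in matched_tracks]
    unmatched_dets = [j for j in range(len(detections)) if j not in matched_dets]
    return pairs, unmatched_tracks, unmatched_dets
```

In the pipeline described above, the returned unmatched detections would become new tracks, and the unmatched tracks would be marked for deletion pending reappearance.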

3.3. Kalman Filter Reconstruction Through HMM

Given the detections produced by the object detector, establishing continuous trajectories by matching new detections with existing tracks is a fundamental challenge. A critical issue is that the trajectories generated at time t−1 may not accurately represent the exact positions of moving objects at time t, and the complexity of pedestrian motion further exacerbates this difficulty. To address this, the Kalman filter is employed for prediction. Introduced as a new approach to linear filtering and prediction problems [14], the Kalman filter estimates the future state of a system from its previous states.

In this section, we reformulate the Kalman filter within the HMM framework to better model the dual-phase movement patterns of pedestrians discussed above. Because the Kalman filter is rooted in principled probabilistic theory, it can be systematically described within the HMM paradigm [15]. This integration aims to enhance predictive capability in scenarios with abrupt changes in pedestrian dynamics, thereby improving the robustness of trajectory association in complex environments.

The state-space model is described as follows:

The sequence of object states is denoted as $\{x_t\}$; these states are non-observable and depend on past states only through the most recent state. Mathematically, $\{x_t\}$ is a Markov process with $p(x_t \mid x_{1:t-1}) = p(x_t \mid x_{t-1})$;

For each state, there exists a corresponding observable variable $y_t$, which is a manifestation of the underlying latent state $x_t$.

To ensure computational tractability of the proposed framework, several constraints are imposed.

The observation space and the state space are linearly related (a property shared by the Kalman filter and HMMs):

$$y_t = H x_t + v_t, \qquad v_t \sim \mathcal{N}(0, R),$$

where $v_t$ represents the zero-mean Gaussian measurement noise and $H$ denotes the observation matrix that projects the state space onto the measurement space.

The states at times t−1 and t are linearly related (a property shared by the Kalman filter and HMMs):

$$x_t = F x_{t-1} + B u_t + w_t, \qquad w_t \sim \mathcal{N}(0, Q),$$

where $w_t$ represents the zero-mean Gaussian process noise and $F$ is the state transition matrix. The term $B u_t$ accounts for additional control inputs.

The initial state and noise vectors at each step are all mutually independent (this is shared by both the Kalman filter and HMMs).

The state variable follows a multivariate normal distribution, which is specific to the Kalman filter; in classical HMMs, by contrast, the state variable is assumed to follow a multinomial distribution.

Under these constraints, the original state-space model specializes to a more restricted formulation, the Linear-Gaussian State-Space Model (LG-SSM).

Leveraging Equation (8), the unconditional mean of $x_t$ is derived recursively as follows:

$$\mu_t = \mathbb{E}[x_t] = F\mu_{t-1} + B u_t.$$

The unconditional covariance is computed as follows:

$$\Sigma_t = \mathbb{E}\big[(x_t - \mu_t)(x_t - \mu_t)^\top\big].$$

With the independence constraints, the exclusion of control inputs, and Equation (8), we have

$$\Sigma_t = F\Sigma_{t-1}F^\top + Q.$$

This equation governs the unconditional covariance and is known as the (discrete-time) Lyapunov equation.
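As a quick numerical check of this recursion, consider the scalar case with a state-transition coefficient f and a process-noise variance q (both hypothetical values chosen for illustration). The recursion becomes sigma_t = f² sigma_{t−1} + q, and for |f| < 1 it converges to the fixed point q / (1 − f²), the stationary solution of the Lyapunov equation.

```python
def covariance_recursion(f, q, sigma0, steps):
    """Iterate the scalar unconditional-covariance recursion sigma_t = f * sigma_{t-1} * f + q."""
    sigma = sigma0
    history = [sigma]
    for _ in range(steps):
        sigma = f * sigma * f + q
        history.append(sigma)
    return history


def lyapunov_steady_state(f, q):
    """Stationary solution of sigma = f^2 * sigma + q, which exists only for |f| < 1."""
    if abs(f) >= 1.0:
        raise ValueError("a stationary covariance exists only for |f| < 1")
    return q / (1.0 - f * f)
```

With `f = 0.9` and `q = 1.0`, iterating from `sigma0 = 0.0` approaches `1 / 0.19 ≈ 5.26`; the error shrinks by a factor of f² = 0.81 per step. When |f| ≥ 1, the covariance grows without bound, which is why no stationary solution is returned.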

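The next task is to estimate the state $x_t$ from a subset $y_{1:s}$ of the output sequence. In standard Kalman filter notation, the quantities governing this estimation process are defined as follows:

$$\hat{x}_{t|s} = \mathbb{E}[x_t \mid y_{1:s}], \qquad P_{t|s} = \mathbb{E}\big[(x_t - \hat{x}_{t|s})(x_t - \hat{x}_{t|s})^\top \mid y_{1:s}\big].$$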

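The time propagation is divided into two sequential stages that preserve the classical formulation of the Kalman filter: a prediction stage, which propagates $p(x_{t-1} \mid y_{1:t-1})$ to $p(x_t \mid y_{1:t-1})$, and an update stage, which incorporates $y_t$ to obtain $p(x_t \mid y_{1:t})$.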

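Conditioned on the output sequence $y_{1:t-1}$, the variables $x_t$ and $y_t$ follow a joint Gaussian distribution whose mean and covariance matrix are, respectively,

$$\begin{bmatrix} \hat{x}_{t|t-1} \\ H\hat{x}_{t|t-1} \end{bmatrix}, \qquad \begin{bmatrix} P_{t|t-1} & P_{t|t-1}H^\top \\ H P_{t|t-1} & H P_{t|t-1} H^\top + R \end{bmatrix}.$$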

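Further, with the Kalman gain $K_t = P_{t|t-1}H^\top\big(H P_{t|t-1} H^\top + R\big)^{-1}$, the Kalman filter can be formally represented through the following recursive equations:

$$\hat{x}_{t|t-1} = F\hat{x}_{t-1|t-1}, \qquad P_{t|t-1} = F P_{t-1|t-1} F^\top + Q,$$

$$\hat{x}_{t|t} = \hat{x}_{t|t-1} + K_t\big(y_t - H\hat{x}_{t|t-1}\big), \qquad P_{t|t} = (I - K_t H) P_{t|t-1}.$$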

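To infer the final posterior distribution $p(x_t \mid y_{1:t})$, the following formulation is employed:

$$p(x_t \mid y_{1:t}) = \frac{p(y_t \mid x_t)\, p(x_t \mid y_{1:t-1})}{p(y_t \mid y_{1:t-1})} = \mathcal{N}\big(x_t;\, \hat{x}_{t|t},\, P_{t|t}\big).$$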

Thus, we formalize the Kalman filter as a special case of the HMM framework.
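The prediction and update recursion can be illustrated in the scalar case without control input. This is a minimal sketch, not the HMM-based filter used in this work (which operates on multidimensional box states with CMA-ES-tuned parameters): the coefficients `f`, `h`, `q`, and `r` stand for F, H, Q, and R and take hypothetical values in the usage below.

```python
def kalman_step(x_est, p_est, y, f, h, q, r):
    """One predict-update cycle of a scalar Kalman filter.

    x_est, p_est: posterior mean and variance at t-1; y: measurement at t.
    Returns the posterior (x_new, p_new) and the prior (x_pred, p_pred) at t.
    """
    # Prediction: p(x_t | y_{1:t-1})
    x_pred = f * x_est
    p_pred = f * p_est * f + q
    # Update: condition on y_t
    s = h * p_pred * h + r           # innovation variance
    k = p_pred * h / s               # Kalman gain
    x_new = x_pred + k * (y - h * x_pred)
    p_new = (1.0 - k * h) * p_pred
    return x_new, p_new, x_pred, p_pred
```

Usage, for a nearly static target observed with unit measurement noise:

```python
x, p = 0.0, 10.0  # vague initial belief
for y in [1.0, 1.2, 0.9, 1.1]:
    x, p, _, _ = kalman_step(x, p, y, f=1.0, h=1.0, q=0.01, r=1.0)
# x settles near 1.0 while the posterior variance p shrinks well below its initial value.
```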

3.4. Multi-Cue Fusion by Weighted Entropy

The utilization of multiple features enables the acquisition of complementary information for object representation. While various cues may be employed, appearance and motion information remain the most intuitive and critical for cross-frame data association. However, these features are highly susceptible to changes in target characteristics, which diminish their discriminative capacity. To address this limitation, we employ these two widely used features governed by weighted entropy, a metric designed to quantify the uncertainty in their characterization capabilities, to construct a balanced cost matrix for association matching. This framework evaluates the similarity between existing tracks and new detections by integrating feature-specific uncertainties, thereby enhancing the robustness of the data association process against target variations.

We begin by formulating the likelihood of the object state conditioned on the observation given by the detections in the t-th frame. This likelihood is expressed in terms of a normalization constant c, the projection matrix U of the PCA subspace [16], the object feature, and the empirical mean of the historical object features over previous frames. The combined assessment of the i-th object in the t-th frame is then defined by fusing two normalized evaluations of the state, one of which is scaled by the fusion coefficient.

To reduce perturbations in the discriminative capability of the features, we introduce weighted entropy [5] as a metric to quantify feature uncertainty. A smaller weighted entropy indicates a greater discriminative capacity of the state evaluation metric. Consequently, we formulate the optimization of the fusion coefficient as a weighted entropy minimization problem, and the optimized coefficient enables effective multi-cue fusion and the subsequent construction of the assignment cost matrix.

4. Experiment

4.1. Setting

4.1.1. Datasets

Experimental evaluations were conducted on the MOT17 [17] and MOT20 [18] benchmark datasets under the “private detection” protocol. MOT17, a widely used and well-established MOT benchmark, comprises 7 training sequences with 5316 frames and 7 testing sequences with 5919 frames. MOT20, designed specifically for densely crowded environments, includes 4 training sequences with 8931 frames and 4 testing sequences with 4479 frames, posing a rigorous challenge for tracking in highly occluded and cluttered scenes.

4.1.2. Metrics

Performance was evaluated using established metrics, including MOTA, IDs, IDF1, HOTA, and FPS [19,20,21]. MOTA primarily reflects detection performance by combining FP, FN, and IDs into a holistic measure of tracking reliability. IDF1 measures how consistently identities are preserved across entire trajectories. HOTA combines DetA and AssA into a single metric and, unlike the other metrics, evaluates performance over a range of localization similarity thresholds rather than a single fixed criterion; this design inherently incorporates localization precision [1]. Direct FPS comparisons of computational efficiency are often problematic because hardware configurations and software optimizations vary across experimental setups. In addition, for trackers following the TBD paradigm, the computational cost of object detection is typically excluded.
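The standard definitions of the three accuracy metrics can be written as small functions. MOTA follows the CLEAR MOT definition [19], IDF1 the identity-based definition [21], and HOTA is the average, over localization thresholds (conventionally 0.05 to 0.95 in steps of 0.05), of the geometric mean of DetA and AssA at each threshold [20]. The counts in the usage below are made up for illustration; in practice these quantities come from an evaluation toolkit.

```python
import math


def mota(fn, fp, idsw, num_gt):
    """CLEAR MOT accuracy: 1 - (FN + FP + IDSW) / GT, with GT the total number of ground-truth boxes."""
    return 1.0 - (fn + fp + idsw) / num_gt


def idf1(idtp, idfp, idfn):
    """Identity F1: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    return 2 * idtp / (2 * idtp + idfp + idfn)


def hota(det_a_by_alpha, ass_a_by_alpha):
    """HOTA: mean over localization thresholds of sqrt(DetA_alpha * AssA_alpha)."""
    scores = [math.sqrt(d * a) for d, a in zip(det_a_by_alpha, ass_a_by_alpha)]
    return sum(scores) / len(scores)
```

For example, `mota(fn=100, fp=50, idsw=10, num_gt=1000)` gives 0.84 and `idf1(idtp=800, idfp=100, idfn=100)` gives 8/9. Because identity switches usually number far fewer than FP and FN, MOTA is dominated by detection quality, which is why association-side changes barely move it.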

4.1.3. Implementation Details

All experiments were conducted on a server with a single NVIDIA V100 GPU, and the results were validated through five independent trials. For object detection, we adopted YOLOX-X [22], initialized with COCO-pretrained weights [23], for its balance between detection speed and accuracy. The training schedule was similar to that of ByteTrack [24], with 80 epochs. A detection confidence threshold of 0.6 was used to filter out low-confidence detections while preserving recall. The parameters of the HMM-based Kalman filter, optimized with CMA-ES (covariance matrix adaptation evolution strategy, an evolutionary optimization method that can handle non-convex problems), are listed below:

4.2. Ablation Study

We conducted an ablation study experiment on the MOT17 training dataset, as most previous works do, using the StrongSORT framework [

1] as the baseline. First, the original Kalman filter was replaced with our HMM-based Kalman filter (denoted as

). As reported in

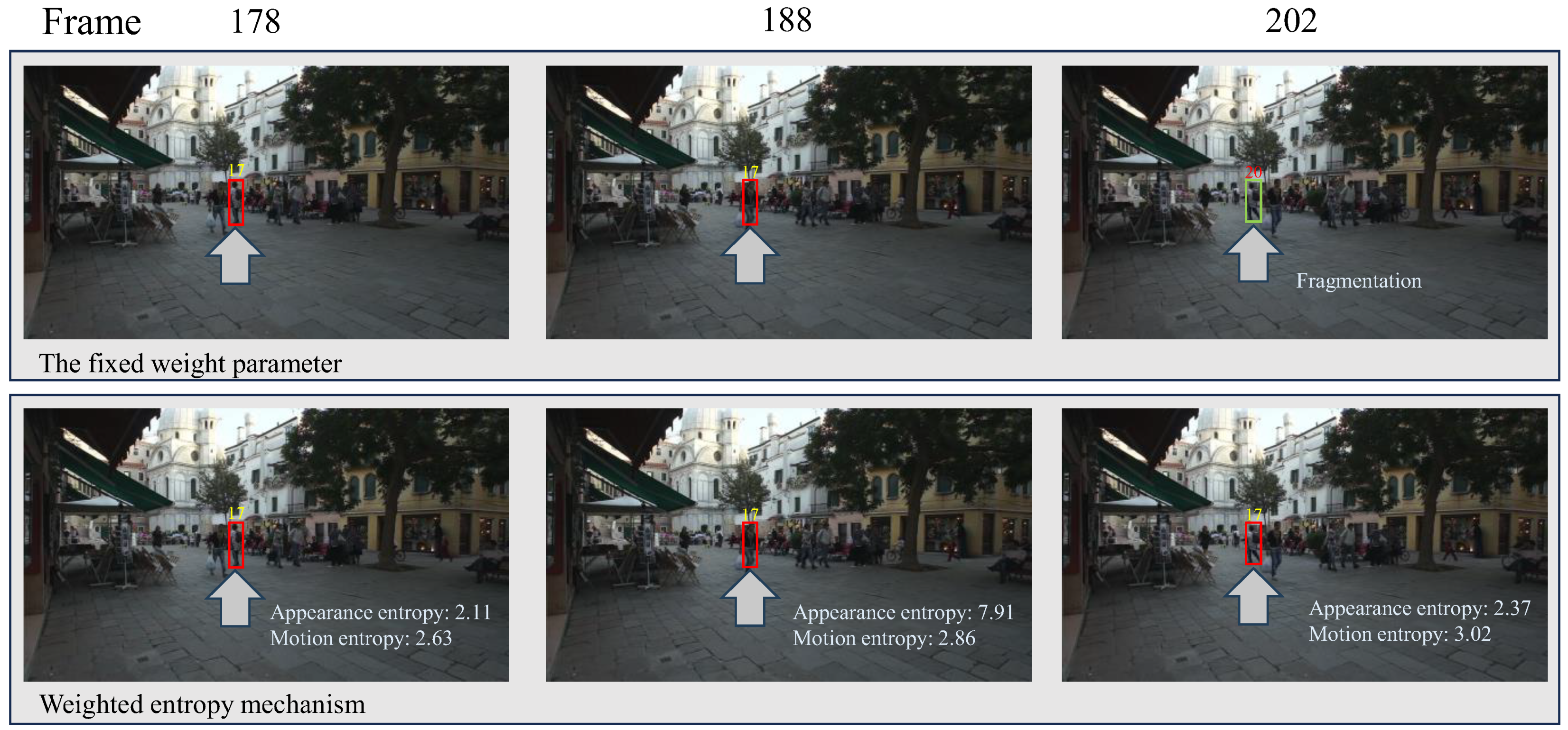

Table 1, this modification reduced the average ID count by 30, demonstrating that the HMM effectively enhances target location estimation and mitigates target missing errors. At the same time, the IDF1 and HOTA metrics exhibited significant improvements due to more reliable association. Subsequently, the default weight parameter (0.98) for multi-cue fusion was replaced with a weighted entropy mechanism (denoted as

), which adaptively balances appearance and motion feature contributions, as shown in

Figure 2. The results show that

achieved an average IDF1 increase of 1.1 over

, accompanied by a further reduction in IDs. This improvement is attributed to the weighted entropy’s capability to dynamically calibrate the trade-off between appearance and motion features, enhancing HOTA at the same time. Notably, since both HMM and the weighted entropy mechanism were not applied in the detecting stage, the proposed method had a negligible impact on MOTA as expected. Further, we replaced the original framework of track management with the multi-agent approach (denoted by

), which assigns each track to an agent for management. The significant increase in FPS demonstrates that the proposed approach can offset the additional computation loads caused by the application of the HMM-based Kalman filter and weighted entropy mechanism effectively.

4.3. Comparison with State-of-the-Art Methods

We compared the performance of our method with that of state-of-the-art methods on the test sets of MOT17 and MOT20. The results are shown in Table 2 and Table 3, respectively.

MOT17: As presented in Table 2, the proposed method achieves state-of-the-art performance on the MOT17 benchmark, ranking first in IDF1, MOTA, and HOTA with scores of 82.1, 81.5, and 65.9, respectively. It ranks fourth in IDs, with 105 more identity switches than the top-ranked method. Our method outperforms the second-best method by a clear margin (+1.9 in IDF1, +0.8 in HOTA), indicating more precise cross-frame matching. We attribute this gain to the adaptive weighting scheme that balances appearance and motion features when constructing the cost matrix, which yields more accurate association.

MOT20: As previously noted, MOT20 serves as a benchmark for highly crowded scenarios, where severe occlusion inherently increases the risk of detection failures and association errors. Unlike many state-of-the-art trackers that require network retraining or fine-tuning on MOT20 or auxiliary datasets to address these challenges, the proposed method achieves superior performance without such adaptation. As shown in Table 3, our method ranks first on MOT20 across key metrics: IDF1 (81.2), MOTA (78.4), HOTA (65.7), and IDs (608). This performance underscores the robustness of the design, particularly in dense occlusion scenarios. We attribute the method's strength in crowded environments to its HMM-based Kalman filter, which models the two-phase motion pattern of pedestrians to mitigate the impact of occlusion, as illustrated in Figure 3. In particular, the method reduces IDs by 469 compared with the second-best method while also achieving a substantial advantage in IDF1.

5. Discussion

Through experimental validation, our method has demonstrated promising results. The reduction in IDs achieved by replacing the conventional Kalman filter with the HMM-based variant underscores the importance of motion context. Traditional models often fail to capture the irregular movements inherent in pedestrian trajectories, particularly during occlusions or abrupt direction changes. By modeling the two-phase motion pattern, the HMM-based Kalman filter provides a probabilistic framework that better accommodates such situations, which contributes to the improved HOTA scores (65.9 on MOT17 and 65.7 on MOT20) and reflects better trajectory continuity. The weighted entropy mechanism addresses a fundamental limitation of fixed weighting schemes in multi-cue tracking frameworks: while appearance features dominate in sparse scenes, motion cues become more reliable during occlusions or rapid movements. By balancing feature contributions adaptively, the proposed dynamic calibration strategy achieves a 1.1 improvement in IDF1 and a further reduction in IDs in the ablation study. Finally, the multi-agent mechanism provides efficient computation as the underlying support, yielding satisfactory performance on the FPS metric.

However, our work has limitations. The two-phase motion pattern and its accompanying model are specialized for pedestrians and therefore do not transfer directly to vehicles or other objects, which limits the datasets suitable for our method. Furthermore, pedestrians from different regions may have very different behavioral habits, which poses a considerable challenge to the study of pedestrian movement patterns in this work. Finally, the real-time computation of entropy imposes a heavy load on devices and reduces FPS accordingly.

In addition, we compare the main contributions of previous works with ours in Table 4.

6. Conclusions

In this work, we tackle the uncertainty introduced by the two-phase motion pattern when tracking pedestrians. We propose a novel multi-agent framework for MOT that leverages multi-agent decision-making to improve the efficiency and scalability of track management in complex scenes. The framework further integrates two key methodological innovations: an HMM-based reconstruction of the Kalman filter and a weighted entropy mechanism for the adaptive fusion of appearance and motion features.

Quantitative evaluations on the MOT17 benchmark demonstrate significant advancements over prior methods: our method achieves 82.1 IDF1 (+1.9 vs. second-best), 81.5 MOTA, and 65.9 HOTA (+0.8 vs. second-best), establishing new state-of-the-art results in all three primary metrics. On the challenging MOT20 benchmark with extreme crowd densities, our method achieves 81.2 IDF1, 78.4 MOTA, 65.7 HOTA, and 608 IDs, all ranking first without dataset-specific fine-tuning. In addition, the improvement in FPS demonstrates that the multi-agent approach enhances the efficiency of computation and track management.

Future work could further explore the decentralization of MOT. On the one hand, centralized computing architectures face inherent security and robustness limitations, primarily due to their susceptibility to single-point failures, a critical vulnerability that renders them unsuitable for deployment in industrial environments. On the other hand, centralization exposes data owners and administrators to unavoidable risks of data leakage. Meanwhile, blockchain technology has emerged as a promising paradigm for establishing trustworthy collaborative frameworks, owing to its salient attributes of decentralization, transparency, traceability, and immutability. A blockchain-based MOT framework could consist of edge devices, compute units, and blockchain networks. Edge devices comprise the surveillance cameras and implement the communication protocol, while compute units include object detectors, ReID modules, and trackers. This architecture facilitates the seamless integration of multi-agent coordination mechanisms.

Author Contributions

Resources, H.S.; Writing—original draft, H.G.; Writing—review & editing, S.W., D.Y. (Da Yang), D.Y. (Dazhi Yang) and G.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by the National Key R&D Program of China (No. 2024YFB4506000); the National Natural Science Foundation of China (No. 62394332, 62372023); the Open Fund of the State Key Laboratory of Software Development Environment (No. SKLSDE-2023ZX-11); the Research Start-up Funds of Hangzhou International Innovation Institute of Beihang University (Grant No. 2024KQ012, 2024KQ087); the Zhejiang Provincial Natural Science Foundation of China under Grant No. LQN25F020028; the Open Funding of the State Key Laboratory of Intelligent Coal Mining and Strata Control (Grant No. SKLIS202405) and the Haiyou Plan Fund.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy concerns.

Acknowledgments

This study was supported by the High-Performance Computing Center of Hangzhou International Innovation Institute, Beihang University. We also thank the HAWKEYE Group for its support.

Conflicts of Interest

Guanqun Su was employed by the company Shandong Qingniao HoT Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| MOT | Multi-object tracking |

| HMM | Hidden Markov Model |

| SOT | Single-object tracking |

| TBD | Tracking-by-detection |

| TRPO | Trust-region policy optimization |

| LG-SSM | Linear-Gaussian State-Space Model |

| PCA | Principal component analysis |

| MOTA | Multiple object tracking accuracy |

| IDs | Identity switches |

| IDF1 | Identity F1 score |

| HOTA | Higher-order tracking accuracy |

| FPS | Frames per second |

| CMA-ES | Covariance matrix adaptation evolution strategy |

References

- Du, Y.; Zhao, Z.; Song, Y.; Zhao, Y.; Su, F.; Gong, T.; Meng, H. Strongsort: Make deepsort great again. IEEE Trans. Multimed. 2023, 25, 8725–8737. [Google Scholar] [CrossRef]

- Bewley, A.; Ge, Z.; Ott, L.; Ramos, F.; Upcroft, B. Simple online and realtime tracking. In Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 25–28 September 2016; pp. 3464–3468. [Google Scholar]

- Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649. [Google Scholar]

- Chen, F.S.; Fu, C.M.; Huang, C.L. Hand gesture recognition using a real-time tracking method and hidden Markov models. Image Vis. Comput. 2003, 21, 745–758. [Google Scholar] [CrossRef]

- Guiaşu, S. Weighted entropy. Rep. Math. Phys. 1971, 2, 165–179. [Google Scholar] [CrossRef]

- Wang, S.; Sheng, H.; Yang, D.; Zhang, Y.; Wu, Y.; Wang, S. Extendable multiple nodes recurrent tracking framework with RTU++. IEEE Trans. Image Process. 2022, 31, 5257–5271. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Sheng, H.; Wu, Y.; Wang, S.; Ke, W.; Xiong, Z. Multiplex labeling graph for near-online tracking in crowded scenes. IEEE Internet Things J. 2020, 7, 7892–7902. [Google Scholar] [CrossRef]

- Shen, J.; Sheng, H.; Wang, S.; Cong, R.; Yang, D.; Zhang, Y. Blockchain-based distributed multiagent reinforcement learning for collaborative multiobject tracking framework. IEEE Trans. Comput. 2023, 73, 778–788. [Google Scholar] [CrossRef]

- Rosello, P.; Kochenderfer, M.J. Multi-agent reinforcement learning for multi-object tracking. In Proceedings of the 17th International Conference on Autonomous Agents and MultiAgent Systems, Stockholm, Sweden, 10–15 July 2018; pp. 1397–1404. [Google Scholar]

- Segal, A.V.; Reid, I. Latent data association: Bayesian model selection for multi-target tracking. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013; pp. 2904–2911.

- Chen, Z.Z.M. Efficient Multi-Target Tracking Using Graphical Models. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2008.

- Wang, C.; de La Gorce, M.; Paragios, N. Segmentation, ordering and multi-object tracking using graphical models. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 747–754.

- Noukhovitch, M.; LaCroix, T.; Lazaridou, A.; Courville, A. Emergent communication under competition. arXiv 2021, arXiv:2101.10276.

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems; Wiley-IEEE Press: Hoboken, NJ, USA, 1960.

- Louw, E.J. A Probabilistic Graphical Model Approach to Multiple Object Tracking. Ph.D. Thesis, Stellenbosch University, Stellenbosch, South Africa, 2018.

- Ding, Q.; Kolaczyk, E.D. A compressed PCA subspace method for anomaly detection in high-dimensional data. IEEE Trans. Inf. Theory 2013, 59, 7419–7433.

- Milan, A. MOT16: A benchmark for multi-object tracking. arXiv 2016, arXiv:1603.00831.

- Dendorfer, P. MOT20: A benchmark for multi object tracking in crowded scenes. arXiv 2020, arXiv:2003.09003.

- Bernardin, K.; Stiefelhagen, R. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 2008, 1–10.

- Luiten, J.; Osep, A.; Dendorfer, P.; Torr, P.; Geiger, A.; Leal-Taixé, L.; Leibe, B. HOTA: A higher order metric for evaluating multi-object tracking. Int. J. Comput. Vis. 2021, 129, 548–578.

- Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 17–35.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. YOLOX: Exceeding YOLO series in 2021. arXiv 2021, arXiv:2107.08430.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-object tracking by associating every detection box. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–21.

- Hu, M.; Zhu, X.; Wang, H.; Cao, S.; Liu, C.; Song, Q. STDFormer: Spatial-temporal motion transformer for multiple object tracking. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 6571–6594.

- Yu, E.; Li, Z.; Han, S.; Wang, H. RelationTrack: Relation-aware multiple object tracking with decoupled representation. IEEE Trans. Multimed. 2022, 25, 2686–2697.

- Zhang, Y.; Wang, T.; Zhang, X. MOTRv2: Bootstrapping end-to-end multi-object tracking by pretrained object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22056–22065.

- Chu, P.; Wang, J.; You, Q.; Ling, H.; Liu, Z. TransMOT: Spatial-temporal graph transformer for multiple object tracking. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 4870–4880.

- Zhao, Z.; Wu, Z.; Zhuang, Y.; Li, B.; Jia, J. Tracking objects as pixel-wise distributions. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 76–94.

- Gao, Y.; Xu, H.; Li, J.; Gao, X. BPMTrack: Multi-object tracking with detection box application pattern mining. IEEE Trans. Image Process. 2024, 33, 1508–1521.

- Yang, M.; Han, G.; Yan, B.; Zhang, W.; Qi, J.; Lu, H.; Wang, D. Hybrid-SORT: Weak cues matter for online multi-object tracking. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6504–6512.

- Yi, K.; Luo, K.; Luo, X.; Huang, J.; Wu, H.; Hu, R.; Hao, W. UCMCTrack: Multi-object tracking with uniform camera motion compensation. Proc. AAAI Conf. Artif. Intell. 2024, 38, 6702–6710.

- Aharon, N.; Orfaig, R.; Bobrovsky, B.Z. BoT-SORT: Robust associations multi-pedestrian tracking. arXiv 2022, arXiv:2206.14651.

- Yang, F.; Odashima, S.; Masui, S.; Jiang, S. Hard to track objects with irregular motions and similar appearances? Make it easier by buffering the matching space. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 4799–4808.

- Qin, Z.; Zhou, S.; Wang, L.; Duan, J.; Hua, G.; Tang, W. MotionTrack: Learning robust short-term and long-term motions for multi-object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 17939–17948.

- Liu, Z.; Wang, X.; Wang, C.; Liu, W.; Bai, X. SparseTrack: Multi-object tracking by performing scene decomposition based on pseudo-depth. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 4870–4882.

- Zeng, F.; Dong, B.; Zhang, Y.; Wang, T.; Zhang, X.; Wei, Y. MOTR: End-to-end multiple-object tracking with transformer. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 659–675.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).