1. Introduction

Cotton is one of the most significant crops in the agricultural sector, with annual production of around 25.5 million metric tons [1]. It is grown in many countries across Asia and America. China, India and the U.S. are among the largest producers, with India producing about 6.4 million metric tons and the U.S. nearly 4 million metric tons each year [2]. Beyond its importance to the textile industry, cotton supports millions of livelihoods in farming, manufacturing and trade. Globally, cotton farming supports the employment of over 250 million people, making a significant contribution to economic stability in many developing nations [3].

Cotton production, however, is challenging because cotton plants are highly susceptible to diseases. These diseases attack specific parts of the plant, impairing growth and reducing the quality of the cotton. It is therefore critical to develop an effective approach for detecting cotton plant diseases at an early stage in order to protect the crop [4].

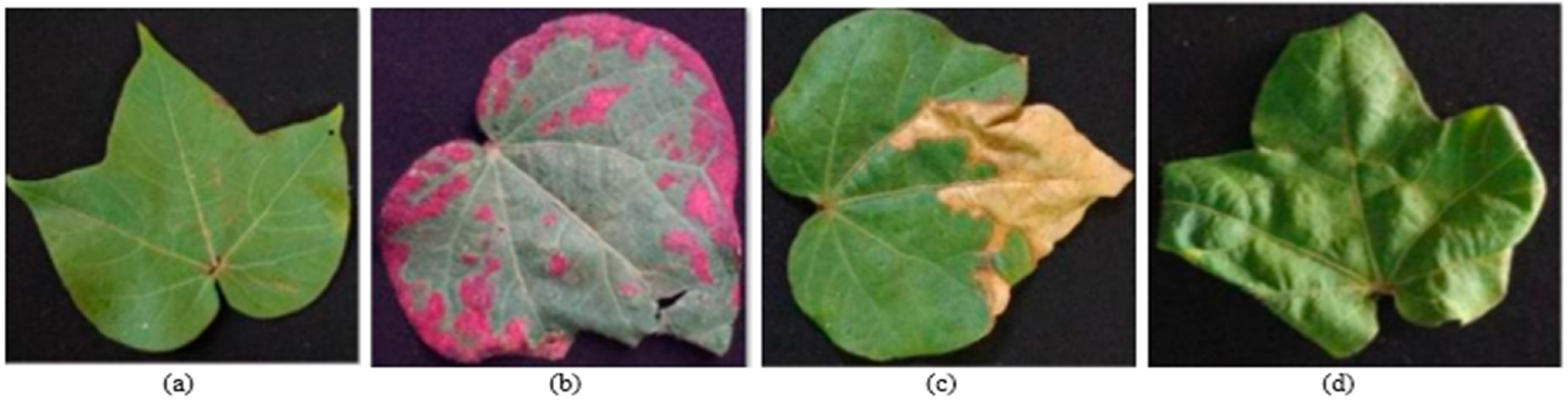

One of the main challenges in cotton farming is identifying leaf diseases accurately and in a timely manner [5]. Diseases such as White Spot Disease (Pandhari Mashi), Crumple Leaf Disease (Kokada) and Red Spot Disease (Lalya) are often found on cotton plants, and they can reduce the quality and quantity of the cotton produced if they are not detected early. The traditional method of detection is visual inspection by agronomists or experienced farmers; however, it is laborious, time-consuming and prone to error. Without identification, diseases can spread and become costly for farmers whose livelihoods depend on cotton production. To detect these diseases successfully, farmers must be experienced enough to recognize symptoms such as discoloration, leaf deformation and spots, which can also vary with environmental conditions and disease progression.

In recent years, image-based recognition models have been adopted to analyze images of cotton leaves and identify disease patterns. While some improvements have been made, existing techniques remain largely vendor- and experience-dependent. Islam et al. [6], for example, developed an Xception model with transfer learning that achieved high accuracy on an open-source cotton dataset, but their model may not generalize well to other plant species or environmental conditions, and it requires significant computational resources. Similarly, Sivakumar et al. [7] compared several CNN architectures, such as VGG19 and ResNet50, but did not specify the dataset size, making it difficult to compare the results accurately. Other studies, such as Memon's [8] work with a custom CNN and meta-deep learning, show promising results on specific datasets but are limited in generalizability because of relatively small dataset sizes. In most of the reviewed studies, dataset details are either missing or not diverse enough, raising concerns about overfitting and reduced real-world applicability. Additionally, while deep learning models have demonstrated high accuracy, many rely heavily on computational power and large datasets, which makes them difficult to deploy in practical, resource-constrained environments and hampers their scalability and reliability [9, 10]. Image-based recognition techniques also have inherent problems, such as limited accuracy under variations in lighting, background and disease manifestation. Furthermore, current models are reactive and typically do not discover cotton diseases before considerable damage has been caused. These limitations motivated the development of a new approach to cotton disease detection.

The application of Neural Networks (NNs), especially Convolutional Neural Networks (CNNs), has rapidly advanced the capabilities of automation, precision monitoring, and intelligent decision-making across both agricultural and industrial sectors.

In agriculture, CNNs are extensively used for image-based tasks, such as:

Crop disease identification using leaf images

Weed detection for precision spraying

Fruit ripeness estimation using color and texture features

Soil pattern analysis and satellite-based crop mapping

These networks excel due to their ability to automatically extract and learn hierarchical spatial features without manual pre-processing. CNNs outperform traditional machine learning methods in visual pattern recognition, which is especially valuable for noisy, real-world agricultural environments.

In industrial settings, CNNs and deep NNs are deployed in:

Defect detection in manufacturing via surface image inspection

Predictive maintenance using time-series sensor data

Automated visual inspection systems for quality assurance

Object tracking and robotic control in assembly lines

Industries benefit from CNNs’ robustness to distortion and their scalability to large datasets with high variability in lighting, orientation, or noise. When combined with reinforcement learning or optimization techniques (e.g., PSO, GA), they enable adaptive process control and real-time decision support.

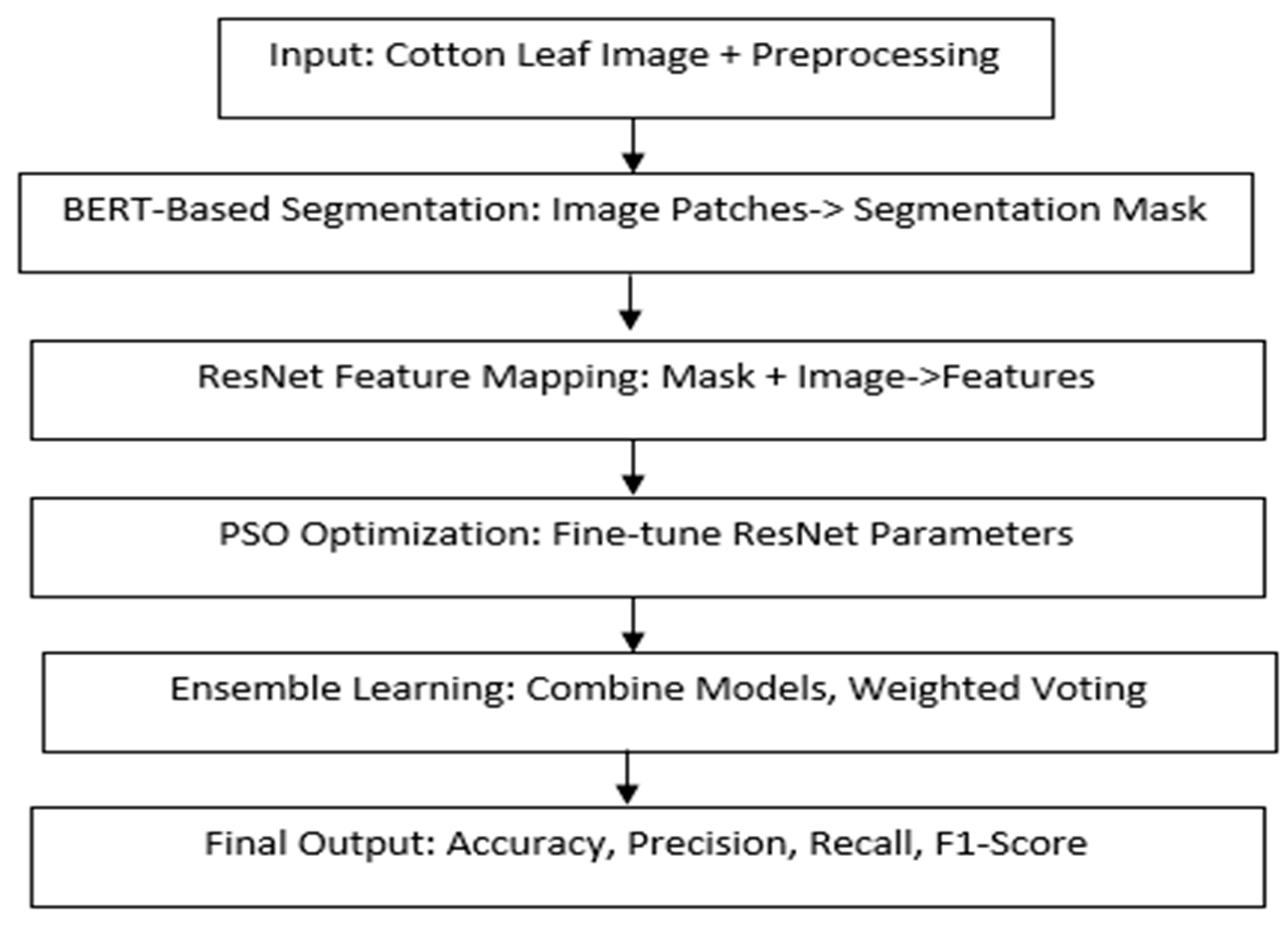

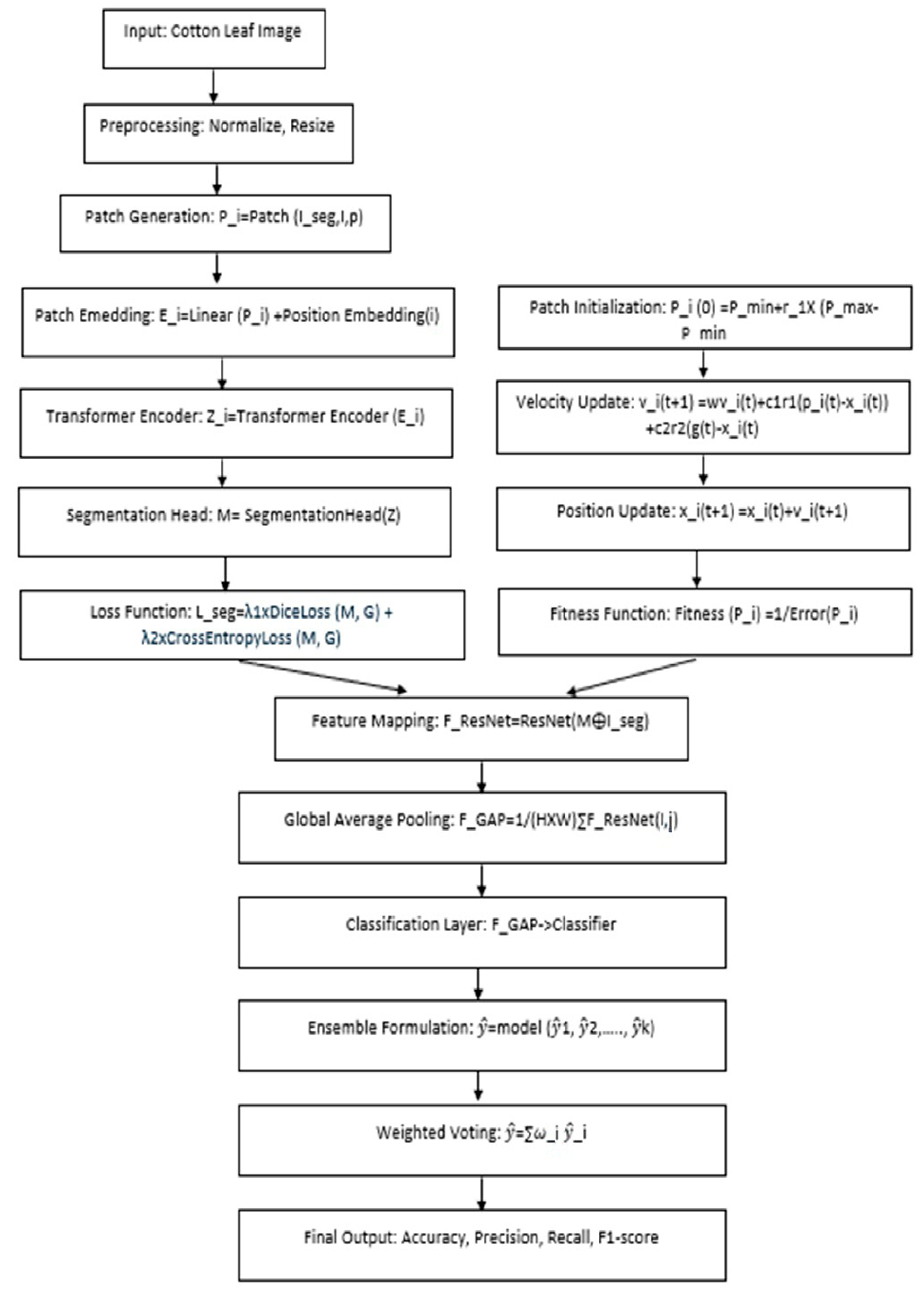

This paper presents a novel deep-learning approach based on a hybrid BERT-ResNet-PSO model for the detection and classification of cotton leaf diseases. The proposed model aims to make disease detection robust and efficient by combining Bidirectional Encoder Representations from Transformers (BERT) for image segmentation, ResNet for feature extraction, and Particle Swarm Optimization (PSO) for parameter tuning.

The remainder of this paper is organized as follows. Section 2 provides a detailed review of the related work on cotton leaf disease detection. Section 3 presents the proposed hybrid deep learning approach for plant disease detection. Section 4 discusses the experimental setup and the evaluation metrics, together with the utilized datasets, implementation details and a comparison with the existing models. Section 5 provides the conclusion of the study.

2. Related Work

Several studies have developed deep-learning approaches to improve cotton leaf disease detection. Islam et al. [11], for example, developed a deep learning approach for predicting cotton leaf diseases using fine-tuning and transfer learning. The study built a smart web application on the Xception model, which yielded 98.70% accuracy. With transfer learning, the approach adapts pre-trained models to the disease detection task without requiring extensive labeled datasets, and it lowers model training time. The application is intended to assess disease on real-life plants, minimizing cotton yield losses and reducing workload. However, the approach relies heavily on pre-trained models, which may not fully capture the unique features of different environmental conditions and disease manifestations, and its accuracy may drop when it is applied to datasets that differ from the original training data.

Islam et al. [11] proposed a deep learning-based approach for detecting cotton leaf diseases by fine-tuning the layers and variables of several available pre-trained models. The study examines the effectiveness of fine-tuned transfer-learning models, such as VGG-19, VGG-16, Xception and Inception-V3, for predicting cotton diseases on a publicly available cotton database. The approach involves data collection, pre-processing, model development, training, evaluation, and application development. The images are pre-processed for use with VGG-19, InceptionV3, Xception, and VGG-16. A selection of 20% of the images is utilized for training the models, and performance metrics are employed to evaluate cotton disease prediction. The most effective model is then deployed within a web application. The Xception model, with 98.70% accuracy, was chosen for a web-based smart application that helps agricultural practitioners predict cotton diseases in real time, thereby enhancing production capacity. The model accurately identifies cotton leaf diseases and offers an approach that could be extended to the automated diagnosis of more plant species. Its limitations include class imbalance, skewed predictions, limited adaptability to novel disease cases, and an inability to deliver essential features, which calls for dedicated models for feature extraction and selection.

Chopkar et al. [12] suggest Convolutional Neural Networks (CNNs) for disease detection, enabling farmers to take timely action and reduce crop losses. CNNs recognize disease-specific patterns in plant leaves. The study employed two distinct methodologies for detecting and categorizing leaf diseases: deep learning and traditional machine learning. The approach involves several steps, including data selection, pre-processing, and data transformation. The dataset is divided into four categories: Alternaria leaf, grey mildew, leaf reddening, and healthy leaf. The data is pre-processed and transformed into NumPy arrays to standardize the RGB values, and a data augmentation strategy is used to address irregularities. The study further applies batch normalization and dropout layers to the CNN model, and performance metrics guide the selection of the optimal model. The study suggests that machine learning can automate disease identification in agriculture, increasing crop yields and efficiency, and that using CNNs to detect cotton leaf diseases is a significant step for precision agriculture. However, traditional models are labor-intensive and time-consuming, which makes crop assessment challenging and limits access to professionals in certain regions.

Kshirsagar et al. [13] explore a diverse range of image processing concepts, such as image acquisition, image pre-processing, feature extraction, database formation, and artificial neural network classification. The paper examines research on a variety of methodologies for detecting plant leaf diseases using neural networks, and it introduces an approach to detecting diseases in plant leaves by employing a deep Convolutional Neural Network (CNN). The investigation identifies diseases in cotton leaves by examining the consistency and efficacy of various plant classification strategies. The model employs ANN, SVM, and NB classifiers to identify and classify leaf diseases, and it reports the percentage of the leaf area that is affected. The model is beneficial for agricultural purposes, including the detection of diseases in plant components, particularly leaves. Improved crop yields and early detection can enhance the Indian GDP. The limitations noted in the study include crop health, seasonal changes, environmental anomalies such as disease, water shortages and insects, population growth, and climatic conditions. Minimizing pesticide usage is crucial for cost reduction and environmental protection.

Thivya Lakshmi et al. [14] developed a novel approach known as CoDet, which employs sophisticated mathematical techniques to precisely identify cotton plants. CoDet's design focuses on comprehending the growing patterns of cotton plants. The layers of the CNN evaluate input images and produce feature maps and outputs that help classify different regions associated with cotton plants. The approach demonstrates quick and reliable identification of cotton plants. The CoDet architecture categorizes images using learnable convolutional filters. It consists of a Reshape layer, three convolutional layers, a ReLU activation function, and a batch normalization layer. The model identifies patterns in input photos for cotton recognition while reducing the spatial dimensions of the feature maps and removing units to avoid overfitting. The architecture is compact and computation-optimized, making it well suited to IoT and mobile-based applications, and it can be integrated into diverse software and hardware systems. The study notes that existing research does not consider the specific attributes that distinguish cotton growing and its range, which leads to poor identification models.

Patil and Patil [15] present a deep-learning model for identifying diseased cotton plants using leaf images and an IoT-based platform for climate change detection. The study utilized a deep CNN model trained on both infected and healthy leaf images. The system involves collecting a dataset, pre-processing it, training the CNN, and validating the model. The dataset is collected from daily surveys using sensors on crop fields and an IoT-based system camera. Image augmentation techniques are used to reduce overfitting. The CNN is trained using various deep-learning frameworks, including Theano 1.0.5 for Python, Torch7, and Caffe. The model is evaluated with the F1-Score, where 1 corresponds to a perfect score and 0 to the worst score. The model is trained in GPU mode on an NVIDIA Graphics Processing Unit and reaches an accuracy of approximately 98.0% after a total of 10 iterations. The authors note that, in the future, considerable time may need to be invested in predicting the outcomes of diseases affecting the cotton plant to ensure optimal performance.

Pandey et al. [16] seek to develop a system for identifying diseases in cotton leaves through the analysis of digitized color images. The system uses SVM, CNN, and a hybrid approach to predict diseases specific to cotton plants, such as Fusarium wilt and Leaf Curl Disease. The process involves collecting a cotton dataset, applying the different classifiers, calculating performance metrics, and comparing the results. The dataset consists of four classes: Leaf Curl Disease, Bacterial Blight, Healthy, and Fusarium wilt. The efficacy of the classification model is assessed using a total of 1661 images. The proposed technique can detect cotton leaf diseases early, reducing environmental and human health risks, and it can distinguish disorders with minimal computational effort, which supports pest management. The model has a 98.9% accuracy rate in identifying conditions in cotton leaves, making it useful to farmers for early disease identification. The study notes that environmental variables, including diseases induced by bacteria, fungi, and other pathogens, can profoundly affect productivity.

Gülmez [17] developed a new deep-learning model for disease identification in cotton, in which the Grey Wolf Optimization (GWO) algorithm refines the deep network. According to the study, this is the first time GWO has been introduced for this purpose. The model, a deep CNN, uses a leaf image to determine whether the cotton is diseased or healthy, and it is designed specifically for the problem at hand. GWO is used to search for the most effective model design. The study conducted a comparative analysis between the proposed model and the commonly referenced VGG19, ResNet50, and InceptionV3 models. The reported accuracy of the proposed model is 1.0, while the accuracy scores for the other models were 0.726, 0.934 and 0.943, respectively. The study also illustrates how hard real-world optimization problems are, and how much better solutions are needed, because of nonlinear features, large numbers of decision factors, and the complicated constraints that come with traditional models.

Kumar et al. [18] propose a machine learning-based system for classifying diseases in cotton plants using leaf images, which involves data acquisition, pre-processing, model training, ensemble model development, model evaluation, and result analysis. The study investigated the predictive capabilities of three distinct models: Support Vector Machine (SVM), MultiClass SVM, and an Ensemble Model that combines Random Forest and Decision Tree approaches. The specific task was binary classification, in which inputs are categorized as either "Diseased" or "Healthy". The study evaluated these models not only for their class predictions but also for their associated class probability estimates, which provide insight into the models' confidence in their predictions. All three models classified the input as "Diseased". The SVM and MultiClass SVM models showed moderate confidence in this prediction, with a probability estimate of 76.77%. The key difference between the models therefore lies in the confidence levels conveyed through their class probability estimates, which are crucial for understanding their reliability.

Nazeer et al. [19] examine the forms of cotton leaf diseases and their prevalence, as well as the relationship between environmental factors and these diseases, and suggest an automated detection approach for Cotton Leaf Curl Disease (CLCuD) using cotton leaf images. The research establishes a self-compiled dataset for the classification of CLCuD, based on visual symptoms derived from various images. Pre-processing steps are applied to extract features, and a refined DL model is presented for predicting susceptibility levels. The research uses Convolutional Neural Networks (CNNs) and assesses the models on two separate databases: one sourced from the publicly accessible Kaggle database and another derived from a proprietary source. Agricultural specialists annotated the dataset, drawing upon their knowledge of unusual growth forms and features. Data augmentation significantly improves model accuracy, and deep features are used in both training and testing. The CNN model outperforms the other models, achieving an accuracy of 99% on the proprietary database. The study stresses that Cotton Leaf Curl Virus (CLCuV) presents substantial risks to cotton production and requires prompt and precise disease identification.

Caldeira et al. [20] used deep learning (DL) to classify lesions in cotton leaves, showing its potential for diagnosing agricultural pests and diseases. The study used a processing pipeline for analyzing images taken in natural field conditions, including acquisition, pre-processing, and attribute extraction. Four machine-learning algorithms were tested, with deep-learning models replacing steps III and IV. Two deep convolutional network models, ResNet50 and GoogleNet, attained precisions of 89.2% and 86.6%, respectively. Compared with more traditional image processing approaches, including k-nearest neighbors (KNN), support vector machines (SVM), artificial neural networks (ANN), and neuro-fuzzy classifiers (NFC), convolutional neural networks (CNNs) improved precision by as much as 25%. This indicates that adopting this approach may enhance the efficiency and reliability of plant inspection in agricultural settings. The study's limitations include the considerable influence of typical image processing choices, such as image type, quality, descriptors, and resolution, on the outcomes.

Latif et al. [21] propose a novel approach that employs a DL architecture with serially fused features and optimal feature selection. The proposed design includes constructing a self-collected dataset focused on cotton diseases, augmenting this dataset with other data, extracting features from the 3rd and 4th layers of a pre-trained ResNet101 model, and fusing them into a single matrix. The optimal features are subsequently selected with a genetic algorithm, which supports effective training and recognition. A Cubic SVM model was employed for final recognition and validated on a curated dataset. The newly constructed dataset attained a maximum accuracy of 98.8% with Cubic SVM, demonstrating the efficacy of the suggested framework.

Table 1 provides a summary of the existing approaches for plant disease detection based on cotton leaf disease datasets. Current models often struggle with accuracy, scalability, and practical application in real-world settings, and these limitations have led to the development of a new approach for cotton leaf disease detection. Existing techniques, which rely primarily on deep learning models such as CNNs and on transfer learning, demonstrate high accuracy in controlled environments but face challenges in diverse field conditions, where variations in lighting, background, and disease manifestation can affect performance. Many of these models are also computationally intensive, making them impractical for deployment in resource-constrained environments such as small farms. Additionally, traditional practice relies heavily on manual inspection, which is laborious, error-prone, and requires extensive experience to identify disease symptoms accurately, potentially leading to delayed intervention and crop losses. Our proposed hybrid approach, which leverages BERT for image segmentation, ResNet for feature extraction, and Particle Swarm Optimization (PSO) for parameter tuning, aims to create a robust, efficient, and scalable solution. This model integrates the strengths of deep learning architectures with optimization techniques to improve detection accuracy, adaptability to varied environmental conditions, and applicability in real-time scenarios, ultimately supporting cotton farmers in preserving yield and quality.
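To make the role of PSO in parameter tuning more concrete, the following is a minimal sketch of a basic global-best PSO loop. It is an illustration under stated assumptions and not the configuration used in this paper: the function `pso_minimize`, the inertia and acceleration coefficients, and the two-parameter `toy_validation_loss` (a stand-in for a real validation loss over a log learning rate and a dropout rate) are all hypothetical.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` inside a box with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the bounds, zero initial velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    best_idx = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[best_idx][:], pbest_val[best_idx]

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull to personal best + pull to global best.
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle and clamp it to the search box.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def toy_validation_loss(params):
    """Hypothetical stand-in for a validation loss; minimum at (-3.0, 0.3)."""
    log_lr, dropout = params
    return (log_lr + 3.0) ** 2 + (dropout - 0.3) ** 2


best_params, best_loss = pso_minimize(
    toy_validation_loss, bounds=[(-5.0, -1.0), (0.0, 0.9)])
print(best_params, best_loss)
```

In a real tuning run, the objective would train or evaluate the network for each candidate parameter vector, which is far more expensive than this toy function; the swarm size and iteration count would be chosen with that cost in mind.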

4. Results and Analysis

The PlantVillage Extension Cotton Leaf Disease dataset is used in this study to demonstrate the effectiveness of the proposed hybrid deep learning approach. The dataset comprises more than 4000 images covering several disease classes, including bacterial blight, leaf curl virus, fusarium wilt and alternaria leaf spot, as well as healthy leaves. The images are stored in formats such as JPEG and PNG and are accompanied by a CSV file that contains the disease category of each image. During the initial processing steps, the images are parsed and arranged according to their annotations, forming a labeled dataset for the supervised learning pipeline. Every image is then normalized so that all images in the dataset share the same size and format. Rotation, flipping and contrast changes are applied to increase the variety of the data and improve model generalization during training.
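The augmentation steps named above (rotation, flipping, contrast change) can be sketched on a small grayscale image stored as nested lists. This is only an illustration: the helper names, the 90-degree rotation angle, the contrast factor of 1.5, and the scaling of pixel values to [0, 1] are assumptions, and resizing to a common size is omitted.

```python
def normalize(image, max_value=255.0):
    """Scale raw pixel intensities to the range [0, 1]."""
    return [[px / max_value for px in row] for row in image]


def flip_horizontal(image):
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate the image by 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def adjust_contrast(image, factor):
    """Stretch (factor > 1) or compress (factor < 1) values around the mean."""
    n_pixels = sum(len(row) for row in image)
    mean = sum(sum(row) for row in image) / n_pixels
    return [[min(1.0, max(0.0, mean + factor * (px - mean))) for px in row]
            for row in image]


def augment(image):
    """Return the normalized image plus three augmented variants."""
    base = normalize(image)
    return [base,
            flip_horizontal(base),
            rotate_90_clockwise(base),
            adjust_contrast(base, 1.5)]


variants = augment([[0, 64], [128, 255]])
print(len(variants))
```

In practice these operations are usually applied randomly at training time, so that each epoch sees slightly different versions of the same leaf image.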

For segmentation, only the leaf regions of interest are selected, and these are split into patches of a size suited to the Vision Transformer model, which is built on the BERT language model. The patches are passed through a linear embedding, and a positional embedding is added to capture spatial information. The embedded patches are then passed through the transformer encoder layers to derive feature maps that capture rich spatial and contextual patterns. These features feed the segmentation head, which produces a pixel-wise mask separating the diseased from the healthy parts of the cotton leaf. For classification, the processed feature maps are passed to the Residual Network (ResNet) architecture, which maps them into representations suitable for disease classification. Feature extraction is further tuned with PSO so that the model operates with well-performing parameters. The optimized features are then passed to a module of classifiers, whose outputs are combined into an integrated decision. Together, these steps form a complete pipeline from raw data preparation to segmentation, feature extraction and classification for a reliable diagnosis of cotton leaf diseases, evaluated with benchmarks such as accuracy, precision, recall, and F1-Score.
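The patch-splitting and embedding step can be illustrated with a small, dependency-free sketch. The patch size, embedding dimension, and randomly initialized weights below are assumptions chosen for readability; in a trained model the projection and positional embeddings are learned, and the image would have several channels.

```python
import random


def split_into_patches(image, patch):
    """Split an H x W image into flattened, non-overlapping patch vectors."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches


def embed_patches(patches, embed_dim, seed=0):
    """Linearly project each patch and add a positional embedding."""
    rng = random.Random(seed)
    in_dim = len(patches[0])
    # Randomly initialized here; these would be learned during training.
    weight = [[rng.gauss(0.0, 0.02) for _ in range(embed_dim)]
              for _ in range(in_dim)]
    positional = [[rng.gauss(0.0, 0.02) for _ in range(embed_dim)]
                  for _ in range(len(patches))]
    tokens = []
    for idx, patch_vec in enumerate(patches):
        projected = [sum(patch_vec[k] * weight[k][j] for k in range(in_dim))
                     for j in range(embed_dim)]
        tokens.append([projected[j] + positional[idx][j]
                       for j in range(embed_dim)])
    return tokens


# A 4 x 4 toy image with pixel values 0..15, split into 2 x 2 patches.
image = [[4 * r + c for c in range(4)] for r in range(4)]
patches = split_into_patches(image, patch=2)
tokens = embed_patches(patches, embed_dim=8)
print(len(tokens), len(tokens[0]))
```

The resulting token sequence is what a transformer encoder would consume; the positional term is what lets the encoder distinguish a patch in the top-left corner from an identical patch elsewhere on the leaf.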

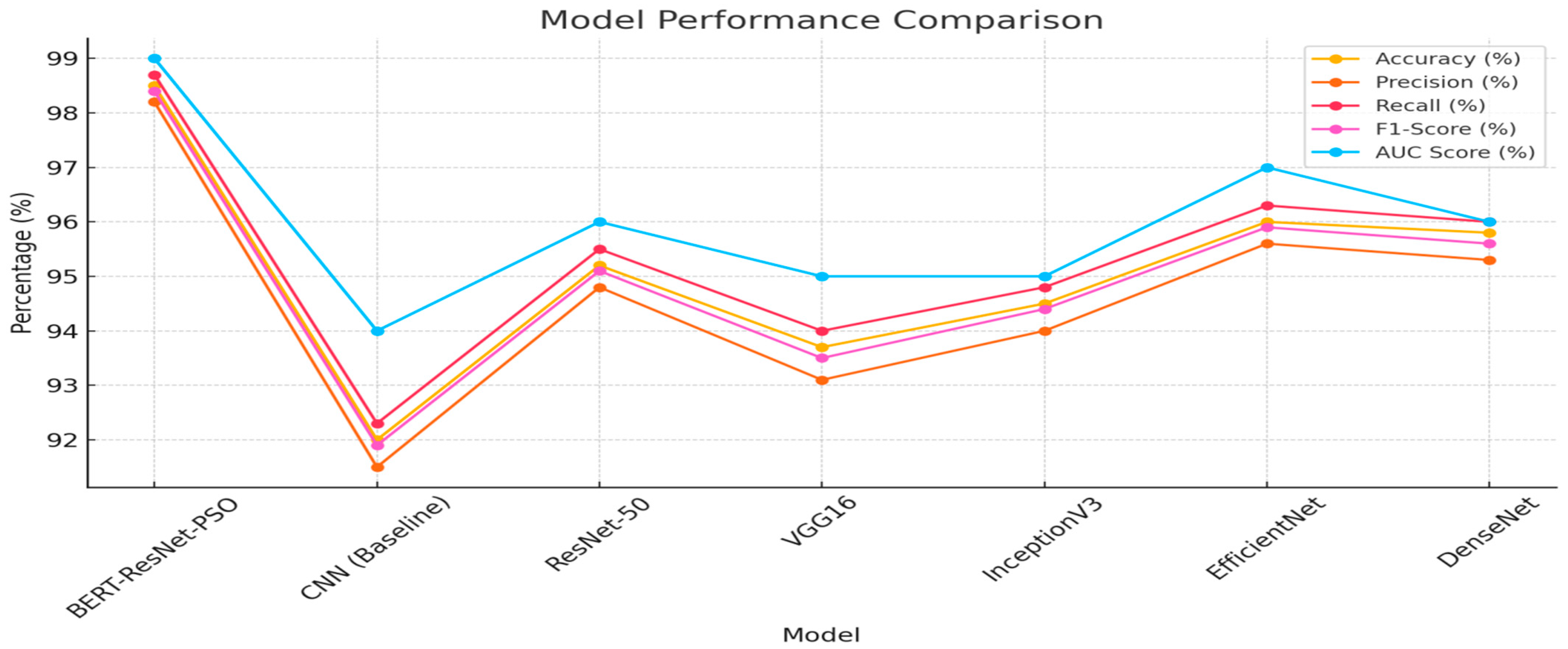

Table 2 compares the models in terms of accuracy, precision, recall, F1-Score, and AUC. The BERT-ResNet-PSO model performs best among the evaluated models, with an accuracy of 98.5%. It is followed by EfficientNet, DenseNet, and ResNet-50, with 96.0%, 95.8%, and 95.2% accuracy, respectively. The baseline CNN model reaches 92.0% accuracy, which is still higher than the 90.4% shown for an earlier model. In terms of precision, the BERT-ResNet-PSO model is again the highest at 98.2%, followed by EfficientNet at 95.6% and DenseNet at 95.3%. The precision values of the ResNet-50, InceptionV3 and VGG16 models are similar, at 94.8%, 94.0% and 94.0%, respectively, and the precision of the baseline CNN model is 91.5%. The BERT-ResNet-PSO model also has the highest recall, 98.7%, meaning that it identifies the largest share of positive instances. The next best recall values are obtained by EfficientNet (96.3%), DenseNet (96.0%), and ResNet-50 (95.5%). InceptionV3 and VGG16 reach recall of 94.8% and 94.0%, and the baseline CNN model obtains 92.3%. The F1-Score, which is the harmonic mean of precision and recall, shows a similar trend: the BERT-ResNet-PSO model is the highest with an F1 of 98.4%, followed by EfficientNet (95.9%), DenseNet (95.6%) and ResNet-50 (95.1%). The F1-Scores of InceptionV3 and VGG16 are 94.4% and 93.5%, respectively, and the F1-Score of the baseline CNN model is 91.9%. Overall, the results show that the proposed model outperforms the baseline and previous models on all evaluated metrics.
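Because the F1-Score is the harmonic mean of precision and recall, the reported F1 values can be recomputed from the precision and recall figures quoted above. The short sketch below does this for five of the models; the helper name `f1_score` and the dictionary layout are illustrative choices.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision %, recall %) pairs as quoted in the text for Table 2.
reported = {
    "BERT-ResNet-PSO": (98.2, 98.7),
    "EfficientNet": (95.6, 96.3),
    "DenseNet": (95.3, 96.0),
    "ResNet-50": (94.8, 95.5),
    "CNN (baseline)": (91.5, 92.3),
}

f1_values = {name: round(f1_score(p, r), 1) for name, (p, r) in reported.items()}
for name, value in f1_values.items():
    print(f"{name}: F1 = {value}%")
```

For these five models the recomputed values agree with the F1-Scores stated in the text to one decimal place.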

Finally, BERT-ResNet-PSO also achieves the highest AUC score, 0.99; the AUC summarizes the quality of a model across all classification thresholds. Next is EfficientNet with a score of 0.97, while ResNet-50 and DenseNet both score 0.96. InceptionV3 and VGG16 score 0.95, and the CNN baseline has the lowest value in the table, 0.94.
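One way to see why the AUC is threshold-independent is its rank interpretation: for a binary problem, it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it that way for a toy example. The labels and scores are invented for illustration, and how the multi-class AUC in Table 2 was aggregated (for example one-vs-rest averaging) is not specified here, so the binary case is an assumption.

```python
def roc_auc(labels, scores):
    """Binary ROC AUC as the fraction of correctly ordered (pos, neg) pairs."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ordering
    return wins / (len(positives) * len(negatives))


# Toy data: 1 = diseased, 0 = healthy; scores are predicted probabilities.
labels = [1, 1, 0, 0, 1, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 3))
```

An AUC of 1.0 would mean every diseased sample is ranked above every healthy one, while 0.5 corresponds to random ordering.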

Table 2 shows that the BERT-ResNet-PSO model exhibited better performance than the other models for all the metrics.

Figure 4 compares various deep learning models, including the proposed BERT-ResNet-PSO model, on the PlantVillage dataset. The BERT-ResNet-PSO model outperformed the other models in terms of precision, accuracy, recall, F1-Score, and AUC. It was followed, in order, by EfficientNet, DenseNet, ResNet-50, InceptionV3, VGG16, and the CNN baseline.

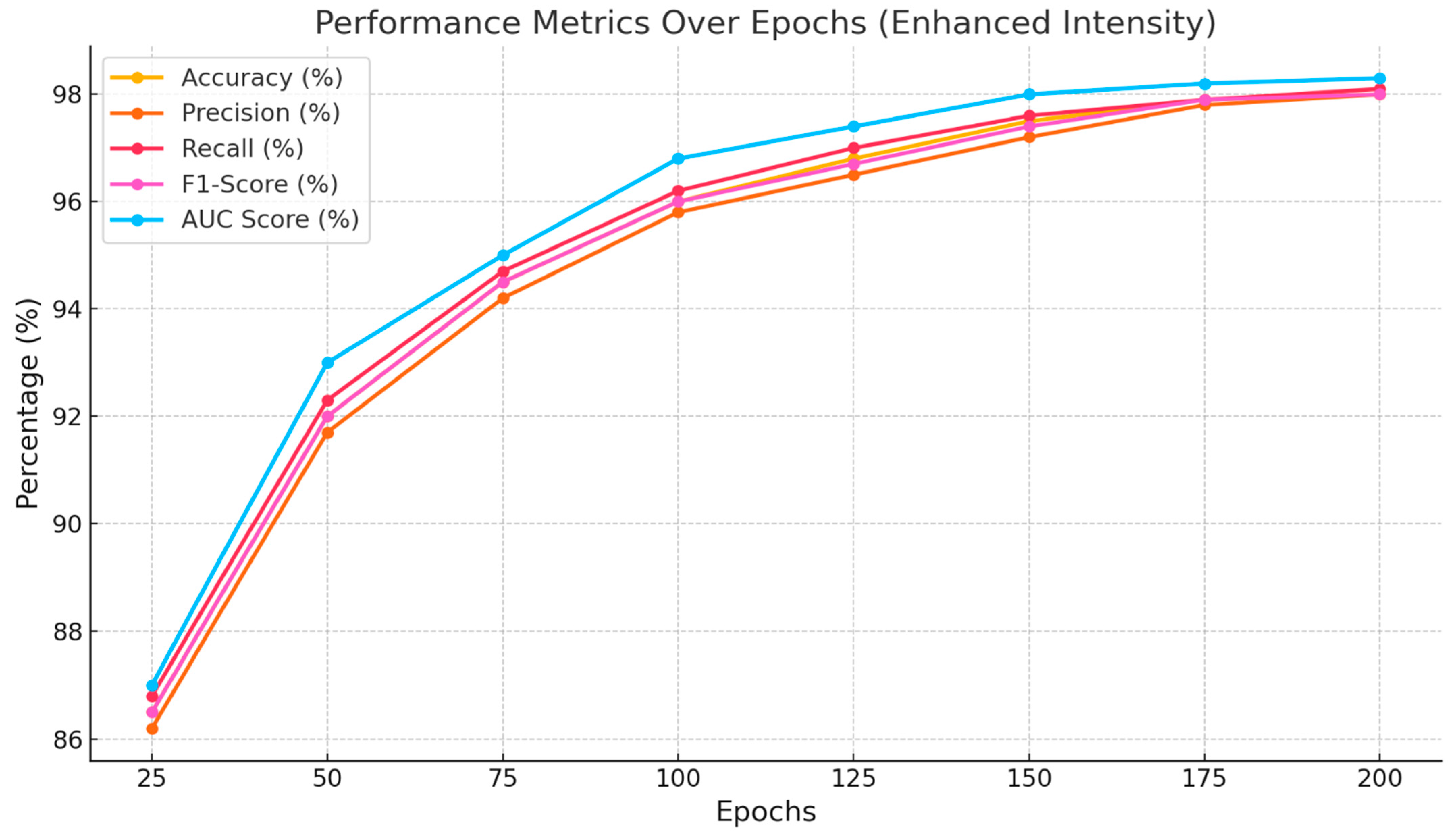

Table 3 shows the performance of the proposed BERT-ResNet-PSO approach across training epochs. At the 200th epoch, recall is the highest metric at 98.7%, followed by an accuracy of 98.5%, an F1-Score of 98.4%, and a precision of 98.2%, with an AUC of 0.99. At the 150th epoch, the model achieves a recall of 98.3%, an accuracy and F1-Score of 98.1%, a precision of 97.9%, and an AUC of 0.99. At the 100th epoch, it achieves a recall of 97.2%, an accuracy of 97%, an F1-Score of 96.9%, a precision of 96.7%, and an AUC of 0.98. At the 75th, 50th, 30th, and 20th epochs, recall is likewise the highest of the metrics, followed by accuracy, F1-Score, and precision. Finally, the 10th epoch shows the lowest performance.

Figure 5 shows the performance of the proposed BERT-ResNet-PSO approach over different epochs. Accuracy, precision, recall, F1-Score, and AUC increase steadily from the 10th epoch to the 200th epoch. The best metric values are obtained at the 200th epoch and the lowest at the 10th epoch.

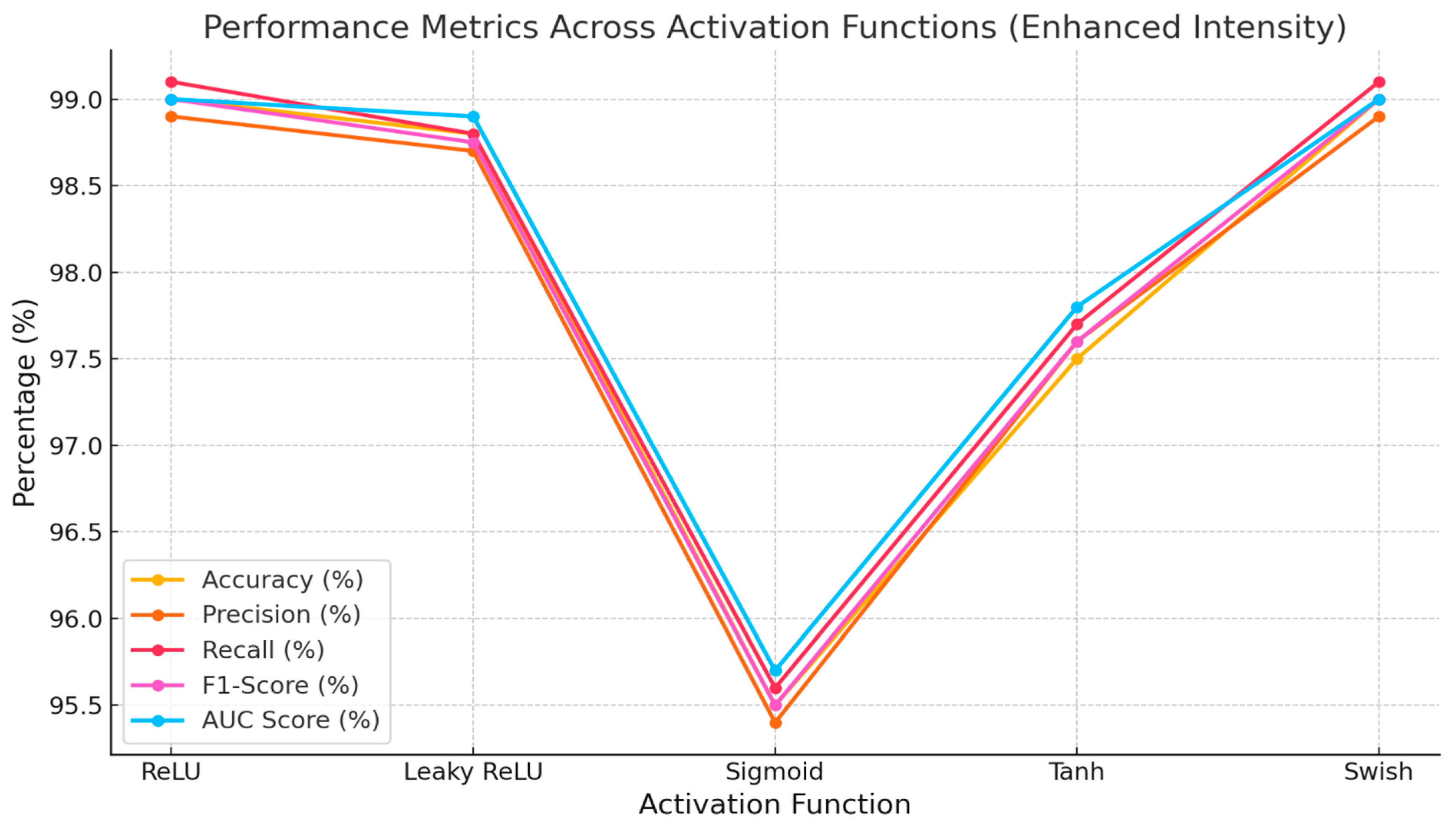

Table 4 shows the performance of the proposed BERT-ResNet-PSO approach with various activation functions. The Swish activation function achieved the best performance, with a recall of 99%, an accuracy of 98.9%, an F1-Score of 98.8%, a precision of 98.6%, and an AUC of 0.99. Leaky ReLU ranked second, with a recall of 98.9%, accuracy and F1-Score of 98.7%, precision of 98.5%, and an AUC of 0.99. ReLU ranked third, with a recall of 98.7%, an accuracy of 98.5%, an F1-Score of 98.4%, a precision of 98.2%, and an AUC of 0.99. The Tanh function achieved a recall of 97%, an accuracy of 96.8%, an F1-Score of 96.7%, a precision of 96.5%, and an AUC of 0.97. Sigmoid had the lowest performance of all the activation functions.
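For reference, the five activation functions compared in Table 4 can be written in a few lines using their standard definitions. The Leaky ReLU slope of 0.01 and the Swish form x * sigmoid(x) (that is, with the scaling parameter fixed to 1) are common defaults and are assumptions here, since the exact settings used in the experiments are not stated.

```python
import math


def sigmoid(x):
    """Logistic function, output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Hyperbolic tangent, output in (-1, 1)."""
    return math.tanh(x)


def relu(x):
    """Rectified linear unit: zero for negative inputs."""
    return max(0.0, x)


def leaky_relu(x, slope=0.01):
    """ReLU variant that keeps a small gradient for negative inputs."""
    return x if x > 0 else slope * x


def swish(x):
    """Swish with beta = 1: smooth and non-monotonic near zero."""
    return x * sigmoid(x)


for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu),
                 ("leaky_relu", leaky_relu), ("swish", swish)]:
    print(name, [round(fn(x), 4) for x in (-2.0, 0.0, 2.0)])
```

Unlike Sigmoid and Tanh, the ReLU family and Swish do not saturate for large positive inputs, which is one commonly cited reason they tend to train deep networks more effectively.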

Figure 6 shows the performance metrics of the various activation functions. Swish emerged as the most effective, surpassing Leaky ReLU, ReLU, Tanh, and Sigmoid in terms of precision, recall, accuracy, F1-Score, and AUC.

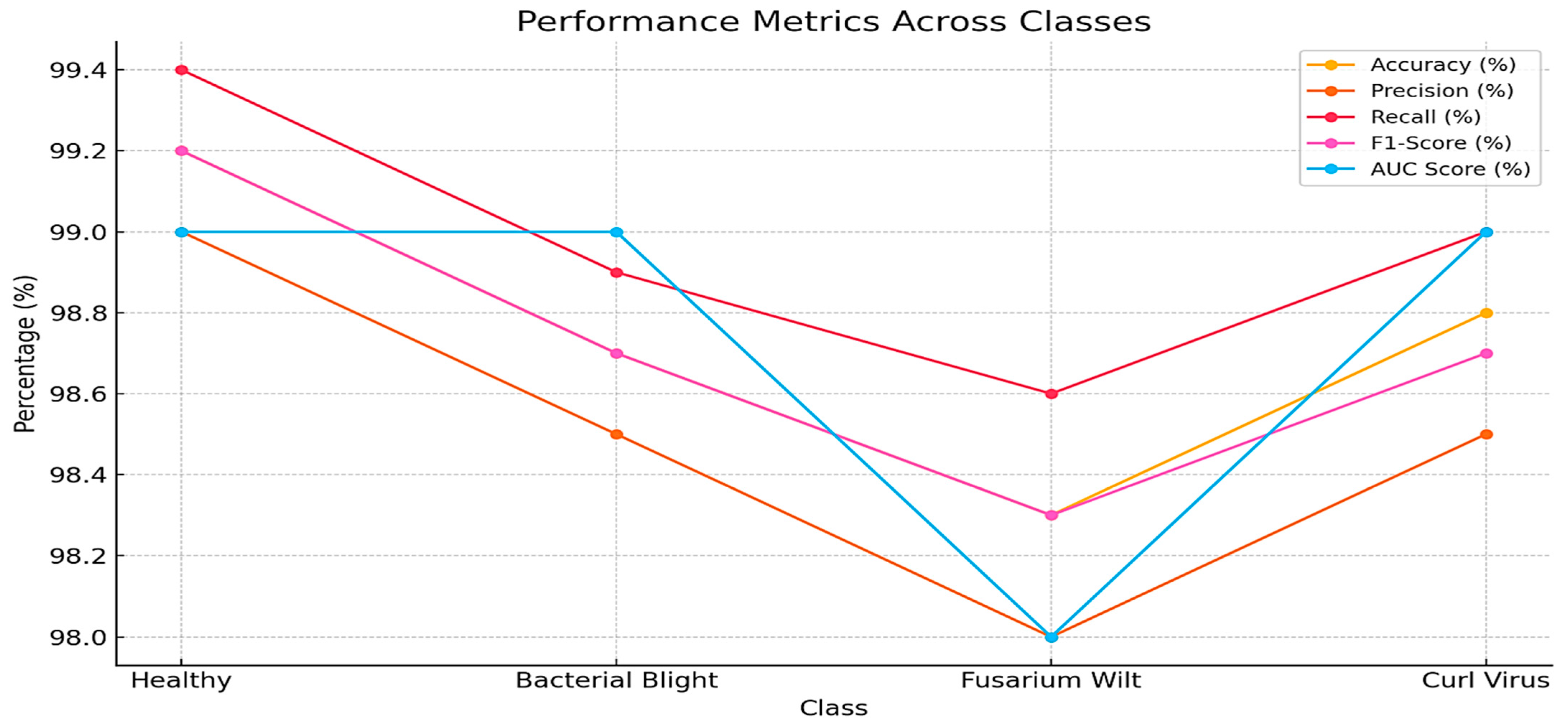

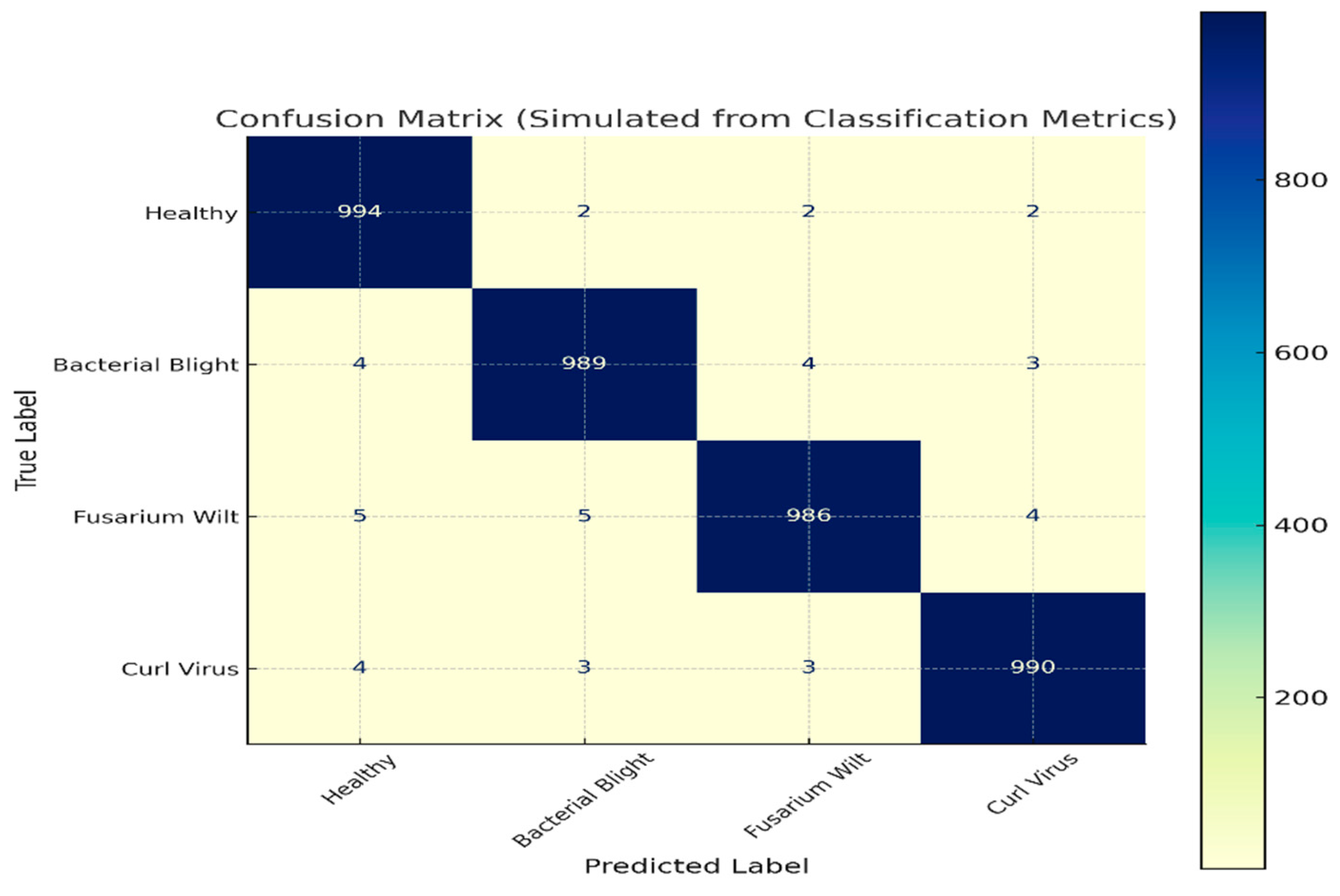

Table 5 compares the performance of the proposed BERT-ResNet-PSO approach across the classes of the PlantVillage dataset. The healthy class achieved the best performance, with a recall of 99.4%, accuracy of 99.2%, F1-Score of 99.2%, precision of 99%, and an AUC of 0.99. The second class, Curl Virus, achieved a recall of 99%, accuracy of 98.8%, F1-Score of 98.7%, precision of 98.5%, and an AUC of 0.99. Likewise, Bacterial Blight achieved a recall of 98.9%, accuracy and F1-Score of 98.7%, precision of 98.5%, and an AUC of 0.99. Fusarium Wilt achieved the lowest performance, with a recall of 99.4%, an accuracy and F1-Score of 99.2%, a precision of 99%, and an AUC of 0.98.

Figure 7 compares the performance of the proposed BERT-ResNet-PSO technique on the different classes of the PlantVillage dataset. Accuracy, precision, recall, and F1-Score are highest for the healthy class, followed by curl virus, bacterial blight, and Fusarium Wilt. The healthy, curl virus and bacterial blight classes share the maximum AUC, whereas Fusarium Wilt has the lowest AUC.

Table 6 shows a comparison of dataset performance between the proposed (BERT-ResNet-PSO) segmentation model and existing models. Relative to the Plant Village dataset, both Kaggle Dataset 1 and Kaggle Dataset 2 saw a measure of increased performance when using the BERT-ResNet-PSO approach. The results obtained using the BERT-ResNet-PSO model are superior to the performances of all the other models on different datasets. The model performed with an IoU score of 97.1, a coefficient of a few percentiles, and a mAP score of just around 97% on Kaggle Dataset 2. On the same dataset, it performed a mild IoU of around 90%, indicating it can very well handle complex classification and segmentation tasks. On the same Plant Village dataset, the performance of the BERT-ResNet-PSO model is also exceptional, with a coefficient of 98.4%, a mAP score of 97.5% and an IoU of 96.8%. The results presented here exceed the previously benchmarked results in related studies. The other state-of-the-art approaches have also achieved competitive results. For instance, Yadav et al. [

25] used machine learning techniques on the Plant Village dataset to identify potential gene candidates, and they did it very well. Recently, the EfficientNet B7 in 2023, at roughly 1.5x the size compared to EfficientNet V2, achieved a coefficient of 96.8%, a mAP score of 95.2%, and an IoU score of 94.3%. Similar results were obtained by Singh and Kumar [

26] using the DenseNet-201 structure, with an overall coefficient of 96.5%, mAP of 95% and IoU of 94%. Kumar and Rao [

27] applied a ResNet-50 + SVM model to Kaggle Dataset 1, achieving a coefficient of 96%, a mAP of 94.5%, and an IoU of 93.9%. Similarly, Gupta et al. [

28] applied the Vision Transformer (ViT) model to the PlantVillage dataset and obtained a coefficient of 96.2%, a mAP of 94.3%, and an IoU of 93.5%. Li et al. [

29] proposed improvements to ResNet-50 for segmentation; their model reached a coefficient of 95.6%, a mAP of 94%, and an IoU of 94.2%. However, Inception V3 and the hybrid CNN-RNN architecture delivered only average performance, and MobileNetV2 and VGG19 performed worse than most contemporary models. Overall, these results position the BERT-ResNet-PSO model as a new benchmark for classification and segmentation tasks. The performance matrix can be seen in

Figure 8.

Figure 9 compares the segmentation performance of BERT-ResNet-PSO and other existing models on different datasets. The Dice coefficient is consistently the highest of the three metrics, followed by mAP and IoU. Using the identical methodology, the BERT-ResNet-PSO model achieved its best results on Kaggle Dataset 1, followed by Kaggle Dataset 2 and the PlantVillage dataset. The subsequent rankings are: EfficientNet-B7 (PlantVillage), DenseNet-201 (PlantVillage), ResNet-50 + SVM (Kaggle Dataset 1), ViT (PlantVillage), ResNet-50 (PlantVillage), Inception V3 (Kaggle Dataset 1), Hybrid CNN-RNN (Kaggle Dataset 2), MobileNetV2 (Kaggle Dataset 1), and VGG19 (Kaggle Dataset 2). Inception V3 and the hybrid CNN-RNN demonstrated average performance, while MobileNetV2 (Kaggle Dataset 1) and VGG19 (Kaggle Dataset 2) performed poorly relative to the other models.
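The ordering of the Dice coefficient above IoU follows from how the two overlap measures are defined. The sketch below computes both for a pair of binary masks; it is a minimal illustration, with the function name `iou_and_dice` and the flat 0/1 mask representation chosen here as assumptions rather than taken from the study.

```python
def iou_and_dice(pred_mask, true_mask):
    """Compute (IoU, Dice) for two equal-length flat sequences of 0/1 pixels."""
    if len(pred_mask) != len(true_mask):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    pred_area = sum(1 for p in pred_mask if p)
    true_area = sum(1 for t in true_mask if t)
    union = pred_area + true_area - intersection
    if union == 0:
        # Both masks are empty: treated here as perfect agreement (a convention).
        return 1.0, 1.0
    iou = intersection / union
    dice = 2 * intersection / (pred_area + true_area)
    return iou, dice


if __name__ == "__main__":
    predicted = [1, 1, 1, 0, 0, 0]   # hypothetical 6-pixel masks
    reference = [0, 1, 1, 1, 0, 0]
    iou, dice = iou_and_dice(predicted, reference)
    print(f"IoU={iou:.3f} Dice={dice:.3f}")
```

For a single mask pair the two quantities are linked by Dice = 2·IoU / (1 + IoU), so Dice is never smaller than IoU. The identity need not hold exactly for values averaged over a dataset, which is how the tabulated figures are presumably obtained.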

A comparison of classification performance between the BERT-ResNet-PSO model and other existing approaches is shown in

Table 7. The proposed method outperformed all other models. On Kaggle Dataset 1 and Kaggle Dataset 2, the BERT-ResNet-PSO technique performed slightly better than on the PlantVillage dataset. On Kaggle Dataset 1, it achieved a recall of 98.9%, an accuracy of 98.7%, an F1-Score of 98.6%, a precision of 98.4%, and an AUC of 0.99. On Kaggle Dataset 2, it achieved a recall of 98.8%, an accuracy of 98.6%, an F1-Score of 98.5%, a precision of 98.3%, and an AUC of 0.99.

On the PlantVillage dataset, the model obtained a recall of 98.7%, an accuracy of 98.5%, an F1-Score of 98.4%, a precision of 98.2%, and an AUC of 0.99 [

30]. In their study, Yadav et al. [

25] employed EfficientNet-B7 (PlantVillage), which achieved impressive results. Their recall was 98%, accuracy 97.8%, F1-Score 97.7%, precision 97.4%, and AUC of 0. Singh and Kumar [

26] achieved remarkable results using DenseNet-201 (PlantVillage) in their study. Their results were a recall of 97.8%, an accuracy of 97.5%, an F1-Score of 97.4%, a precision of 97% and an AUC (Area Under Curve) equal to 0.97. Kumar and Rao [

27] used the ResNet-50 + SVM model on Kaggle Dataset 1, achieving a recall of 97.2%, an accuracy of 97%, an F1-Score of 96.8%, a precision of 96.6%, and an AUC of 0.97. Sharma and Gupta [

31] reported that the InceptionV4 model, evaluated on Kaggle Dataset 1, achieved a recall of 97.1%, an accuracy of 96.9%, an F1-Score of 96.8%, a precision of 96.5%, and an AUC of 0.96. Gupta et al. [

28] applied the ViT model to the PlantVillage dataset and obtained strong performance (

Table 2). Their recall was 97%, accuracy 96.8%, F1-Score 96.7%, precision 96.5%, and AUC 0.98. Li et al. [

29] achieved good results on the PlantVillage dataset with the ResNet-50 model: a recall of 96.7%, an accuracy of 96.5%, an F1-Score of 96.4%, a precision of 96.1%, and an AUC of 0.97. These generally high values show that using all features does not increase our model metrics for this specific filter selection, meaning that this model could have performed better using just these snippets as input data. Additionally, MobileNetV2 (Kaggle Dataset 1), Hybrid CNN-RNN (Kaggle Dataset 2), and VGG19 (Kaggle Dataset 2) exhibited low performance compared to the other models.

Table 8 presents the results of 5-fold cross-validation conducted on the proposed BERT-ResNet-PSO model across three datasets: PlantVillage, Kaggle Dataset 1, and Kaggle Dataset 2. The model is consistent and stable, with a very narrow variance between folds, which indicates strong generalization capability. On the PlantVillage dataset, the model achieves a mean accuracy of 98.5%, with individual fold accuracies ranging from 98.4% to 98.6%. Similar consistency is seen on Kaggle Dataset 1 and Kaggle Dataset 2, with mean accuracies of 98.3% and 98.0%, respectively. This robustness across folds suggests that the model is not overfitting to specific data splits and maintains reliable performance across different subsets of the data. The cross-validation results further support the model's reliability and adaptability, making it well suited for deployment in real-world agricultural disease classification tasks where generalization is critical.
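The 5-fold protocol can be sketched as follows. This is a generic illustration of k-fold splitting and of how a mean and fold-to-fold spread are summarized, not the authors' pipeline; the helper names `k_fold_indices` and `summarize_folds`, the shuffling seed, and the absence of class stratification are all assumptions made here.

```python
import random
from statistics import mean, pstdev


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds.

    Indices are shuffled once, then dealt round-robin into k disjoint folds,
    so every sample is used for validation exactly once.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        validation = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, validation


def summarize_folds(fold_scores):
    """Return (mean, population standard deviation, max - min) of per-fold scores."""
    return mean(fold_scores), pstdev(fold_scores), max(fold_scores) - min(fold_scores)


if __name__ == "__main__":
    for fold, (train, validation) in enumerate(k_fold_indices(20, k=5), start=1):
        print(f"fold {fold}: {len(train)} training, {len(validation)} validation samples")
```

In practice each fold would train the model on `train` and score it on `validation`; passing the five resulting accuracies to `summarize_folds` gives the mean and spread of the kind reported for each dataset.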

A comparison of the classification performance of the proposed BERT-ResNet-PSO method and other existing models on various datasets is presented in

Figure 10. In the graph, recall is consistently the highest metric, followed by accuracy, F1-Score, and precision. All models yield a favorable AUC value. The proposed BERT-ResNet-PSO technique demonstrated superior performance compared to the other existing models across the datasets. It outperformed EfficientNet-B7 (PlantVillage), DenseNet-201 (PlantVillage), ResNet-50 + SVM (Kaggle Dataset 1), InceptionV4 (Kaggle Dataset 1), ViT (PlantVillage), ResNet-50 (PlantVillage), MobileNetV2 (Kaggle Dataset 1), Hybrid CNN-RNN (Kaggle Dataset 2), and VGG19 (Kaggle Dataset 2).
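Since AUC is reported alongside the threshold-based metrics, a short sketch of what it measures may be useful. The function below computes the binary ROC AUC as the probability that a randomly chosen positive sample is scored above a randomly chosen negative one, with ties counting as one half. The name `roc_auc` is hypothetical, and for the multi-class setting considered here a one-vs-rest treatment per disease class is assumed rather than confirmed by the source.

```python
def roc_auc(scores, labels):
    """Binary ROC AUC via pairwise comparison of positive and negative scores.

    scores: predicted scores for the positive class.
    labels: 1 for positive samples, 0 for negative samples.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # tie counts as half
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    # Hypothetical scores: three diseased (1) and three healthy (0) leaves.
    print(roc_auc([0.9, 0.8, 0.4, 0.7, 0.3, 0.2], [1, 1, 1, 0, 0, 0]))
```

The pairwise loop is quadratic in the number of samples and is meant only to expose the definition; a sorted, rank-based computation gives the same value more efficiently.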

Table 9 presents a comparative analysis of the proposed BERT-ResNet-PSO model against its counterparts using traditional optimizers such as AdamW and SGD across three benchmark datasets: PlantVillage, Kaggle Dataset 1, and Kaggle Dataset 2. The results demonstrate that the PSO-based variant consistently achieves superior performance across all evaluation metrics, including accuracy, precision, recall, and F1-Score. On the PlantVillage dataset, BERT-ResNet-PSO achieves an accuracy of 99.0%, outperforming AdamW (98.5%) and SGD (98.3%). Similar trends are observed in Kaggle Dataset 1 and Kaggle Dataset 2, where PSO-based optimization yields better generalization and classification consistency.

The consistent margin of improvement, between 0.3% and 0.7% in F1-Score, highlights the effectiveness of Particle Swarm Optimization (PSO) in fine-tuning model parameters beyond what gradient-based methods achieve alone. These gains matter in plant disease classification, where even minor improvements in accuracy can reduce misdiagnosis in practical applications. Thus, PSO improves both model robustness and precision, which supports its integration into the BERT-ResNet framework.
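For readers unfamiliar with PSO, the following minimal sketch shows the standard global-best update rule on a toy objective. It is not the configuration used in this study: the swarm size, inertia weight, acceleration coefficients `c1` and `c2`, the function name `pso_minimize`, and the stand-in `toy_validation_loss` (a quadratic over two hypothetical hyperparameters) are all assumptions chosen for illustration.

```python
import random


def pso_minimize(objective, bounds, n_particles=20, n_iters=100,
                 inertia=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize objective(position) inside box bounds with global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]               # each particle's personal best
    best_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=best_val.__getitem__)
    g_pos, g_val = best_pos[g][:], best_val[g]   # swarm-wide best so far

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity = inertia + pull to personal best + pull to global best.
                vel[i][d] = (inertia * vel[i][d]
                             + c1 * r1 * (best_pos[i][d] - pos[i][d])
                             + c2 * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clamp to bounds
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val


def toy_validation_loss(params):
    """Hypothetical stand-in for a validation loss; minimum at (-3.0, 0.3)."""
    log10_lr, dropout = params
    return (log10_lr + 3.0) ** 2 + (dropout - 0.3) ** 2


if __name__ == "__main__":
    best, loss = pso_minimize(toy_validation_loss, bounds=[(-6.0, -1.0), (0.0, 0.9)])
    print(best, loss)
```

Unlike AdamW or SGD, this search uses only objective values and no gradients, which is why it can be applied to quantities such as hyperparameters that the loss is not differentiable with respect to. In a real setting each objective evaluation would involve training or validating the network, so the swarm size and iteration count would be far more constrained than in this toy example.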