Mixed Reality-Based Robotics Education—Supervisor Perspective on Thesis Works

Abstract

1. Introduction

1. Introduction of seven thesis works showcasing MR developments in the educational field of robotics, all supervised by the main author.
2. Comparative analysis of the presented works, highlighting the different approaches, insights, and contributions of each work.
3. Identification of key indicators for a comparative study of AR learning experiences in robotics.
4. Summary of advantages and obstacles during the development and use of MR applications from the supervisor’s point of view.

2. Materials and Methods

1. A basis for comparison is established, with the research questions of each thesis taken as a common starting point.
2. For a methodological comparison, we analyze whether the theses use experimental, simulation-based, or theoretical approaches and which tools and technologies were used. We use classification models based on the survey paper [30]. Additionally, we consider how data are gathered (e.g., user studies, performance metrics) and analyzed (statistical methods, qualitative analysis).
3. In the next step, each work is clustered and organized using the same schematic:
   - (a) Methodology
     - i. Approach
     - ii. Tools and Technologies
     - iii. Data Collection and Analysis
   - (b) Contributions
   - (c) Main Findings
4. Following up on the results, the interpretations of each author’s findings are analyzed and compared, with a focus on assessing how each thesis contributes to the broader field of AR in robotics.
5. Finally, the contribution of this work is the supervisor’s view on the results and decision steps for the evolution of MR-based applications for robotics education, as each work presented demonstrates different approaches and outcomes. To conclude, the evolution process is summarized as a set of guidelines for future MR-based robotics learning applications.
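The schematic from step 3 maps naturally onto a small record type. The following is a minimal sketch in Python, not the authors' tooling: the names `Approach`, `Methodology`, `ThesisRecord`, and `tools_by_year` are hypothetical, and fields that this text does not state (approach, data collection, contributions, findings) are left empty rather than guessed. Only the years, research questions, and tool names are taken from the thesis overview in Section 3.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Approach(Enum):
    """Approach categories named in step 2 of the method."""
    EXPERIMENTAL = "experimental"
    SIMULATION_BASED = "simulation-based"
    THEORETICAL = "theoretical"


@dataclass
class Methodology:
    approach: Optional[Approach] = None  # (a) i., None if not stated
    tools_and_technologies: list[str] = field(default_factory=list)  # (a) ii.
    data_collection_and_analysis: str = ""  # (a) iii.


@dataclass
class ThesisRecord:
    author: str
    year: int
    research_question: str
    methodology: Methodology = field(default_factory=Methodology)
    contributions: list[str] = field(default_factory=list)  # (b)
    main_findings: list[str] = field(default_factory=list)  # (c)


def tools_by_year(records):
    """Map each tool to the sorted years of the theses that list it."""
    usage = {}
    for record in records:
        for tool in record.methodology.tools_and_technologies:
            usage.setdefault(tool, []).append(record.year)
    return {tool: sorted(years) for tool, years in usage.items()}


# Three of the seven theses, filled in only as far as the overview states.
records = [
    ThesisRecord(
        "Student C", 2019,
        "How can AR improve the accessibility and understanding of "
        "robotics concepts for students?",
        Methodology(tools_and_technologies=["Unity", "Blender"]),
    ),
    ThesisRecord(
        "Student D", 2020,
        "How can AR-based platforms enhance the learning experience and "
        "interaction in robotics education?",
        Methodology(tools_and_technologies=["Vuforia Studio"]),
    ),
    ThesisRecord(
        "Student G", 2022,
        "How can an interactive AR application be designed to improve "
        "learning outcomes in mechatronics and robotics?",
        Methodology(tools_and_technologies=["Vuforia Studio"]),
    ),
]

print(tools_by_year(records))
```

Keeping every thesis in the same record shape makes cross-thesis questions, such as which tools recur across years, a simple query instead of a manual reading exercise.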

3. Results

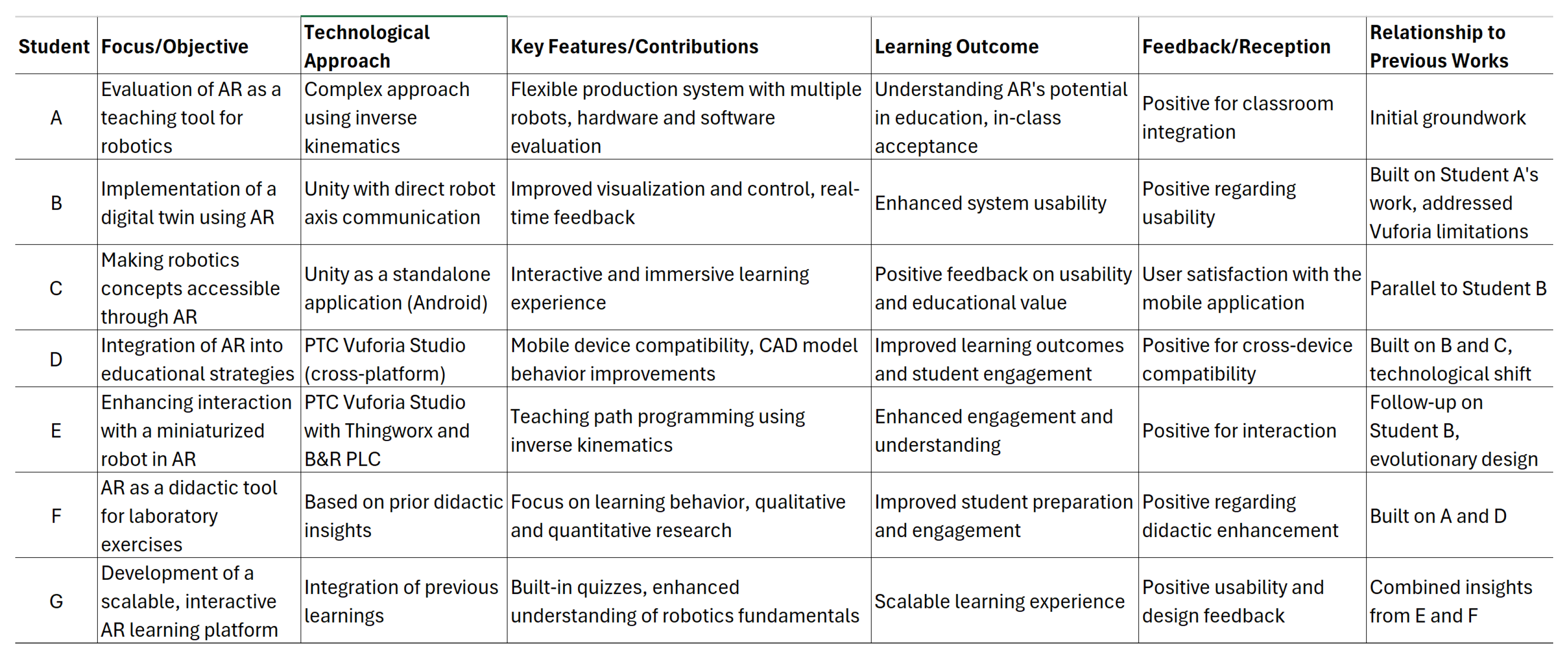

- Student A. 2018. Augmented Reality in a Digital Factory
  - RQ: How can an automated creation of AR simulations be achieved to support the understanding of robot-based production systems?
  - Supervisor view: Identification of hardware and software limits during the AR development process.

- Student B. 2019. Realizing a Digital Twin for a 6-Axis Robot Using Augmented Reality
  - RQ: How can a digital twin be effectively created and utilized using AR for a 6-axis robot?
  - Supervisor view: Feasibility estimation of digital twin-based concept for AR robotics education.

- Student C. 2019. Development of an Augmented Reality-Based Application for Robotics Education
  - RQ: How can AR improve the accessibility and understanding of robotics concepts for students?
  - Supervisor view: Feasibility estimation of Unity and Blender for AR development.

- Student D. 2020. Augmented Reality-Based Robotics Education
  - RQ: How can AR-based platforms enhance the learning experience and interaction in robotics education?
  - Supervisor view: Feasibility estimation of PTC Vuforia Studio for AR development in combination with ABB CAD Models.

- Student E. 2021. Desktop Robotics Combined with Augmented Reality
  - RQ: How can AR be integrated with desktop robotics to enhance interaction and understanding?
  - Supervisor view: Usability design on Vuforia Studio and digital twins in desktop robotics.

- Student F. 2021. Use of Augmented Reality as a Didactic Learning Medium in Mechatronics/Robotics
  - RQ: How can AR be utilized as a didactic tool to improve preparation for laboratory exercises in mechatronics/robotics?
  - Supervisor view: Usability design on Vuforia Studio in combination with ABB CAD Models.

- Student G. 2022. Development of an Interactive Augmented Reality Learning Application
  - RQ: How can an interactive AR application be designed to improve learning outcomes in mechatronics and robotics?
  - Supervisor view: Learning progress assessment built in AR application using full CAD Model of self-developed robot and Vuforia Studio.

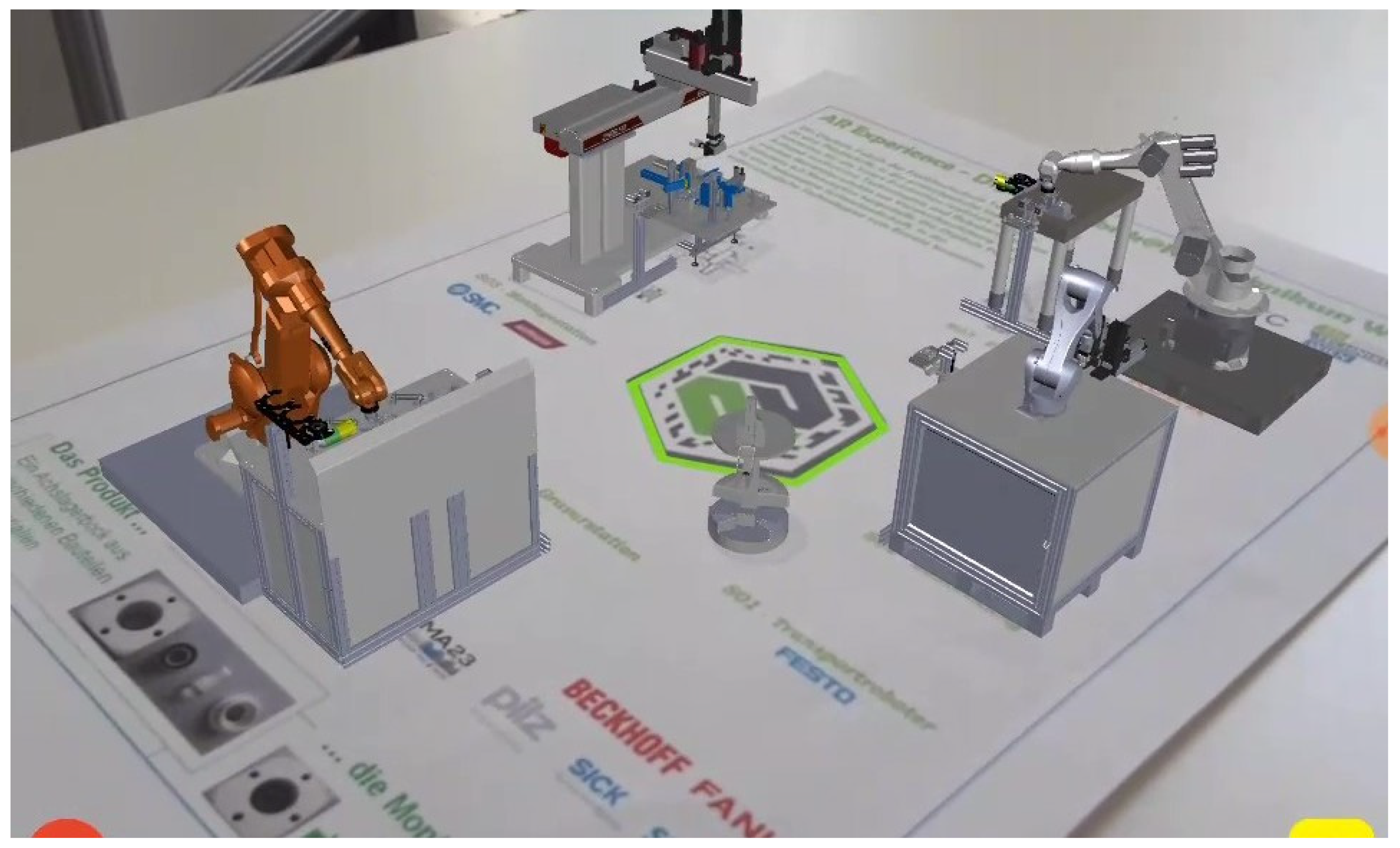

3.1. Student A—Augmented Reality in a Digital Factory

3.1.1. Methodology

3.1.2. Contributions

3.1.3. Main Findings

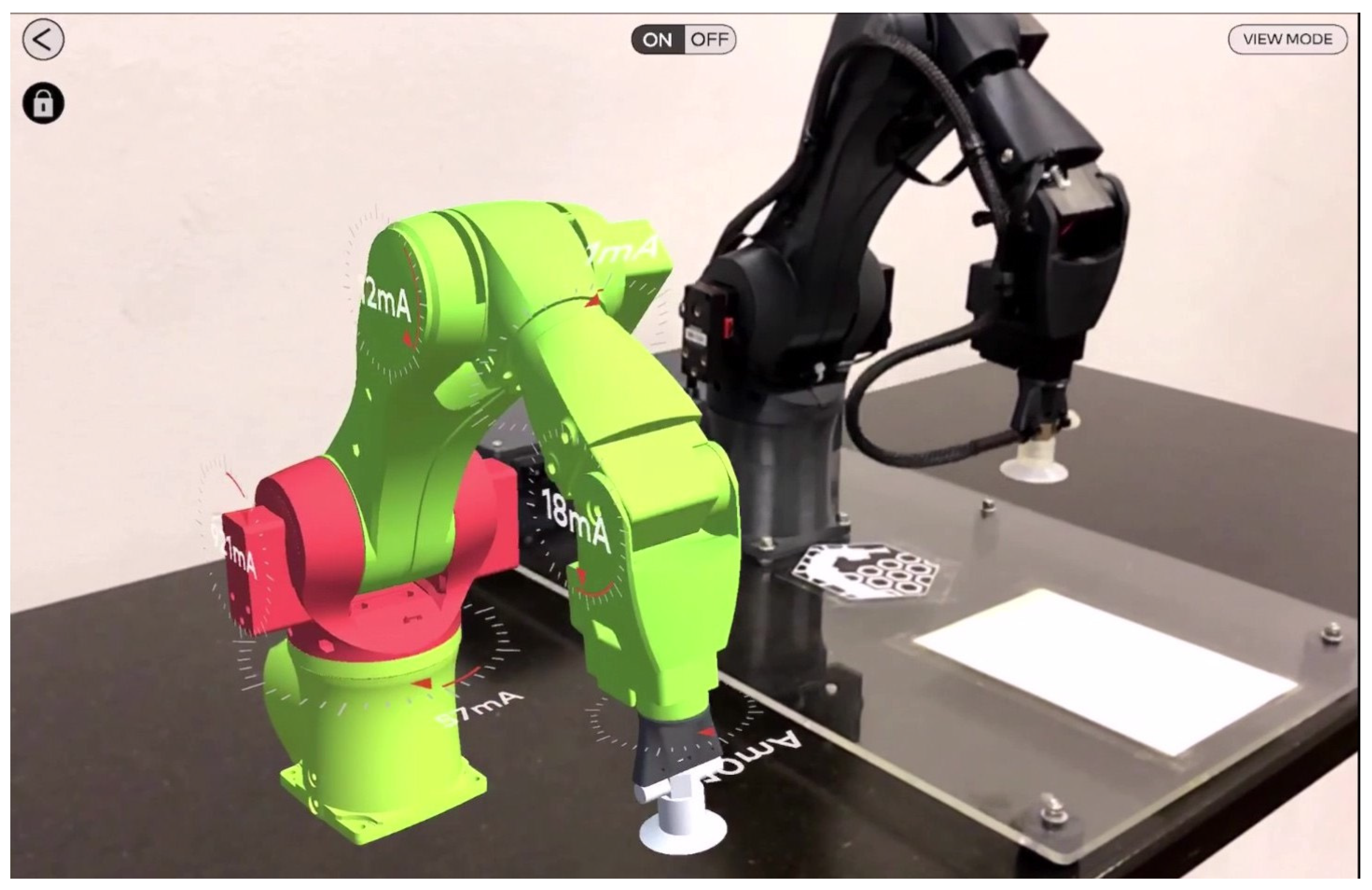

3.2. Student B—Realizing a Digital Twin for a Six-Axis Robot Using Augmented Reality

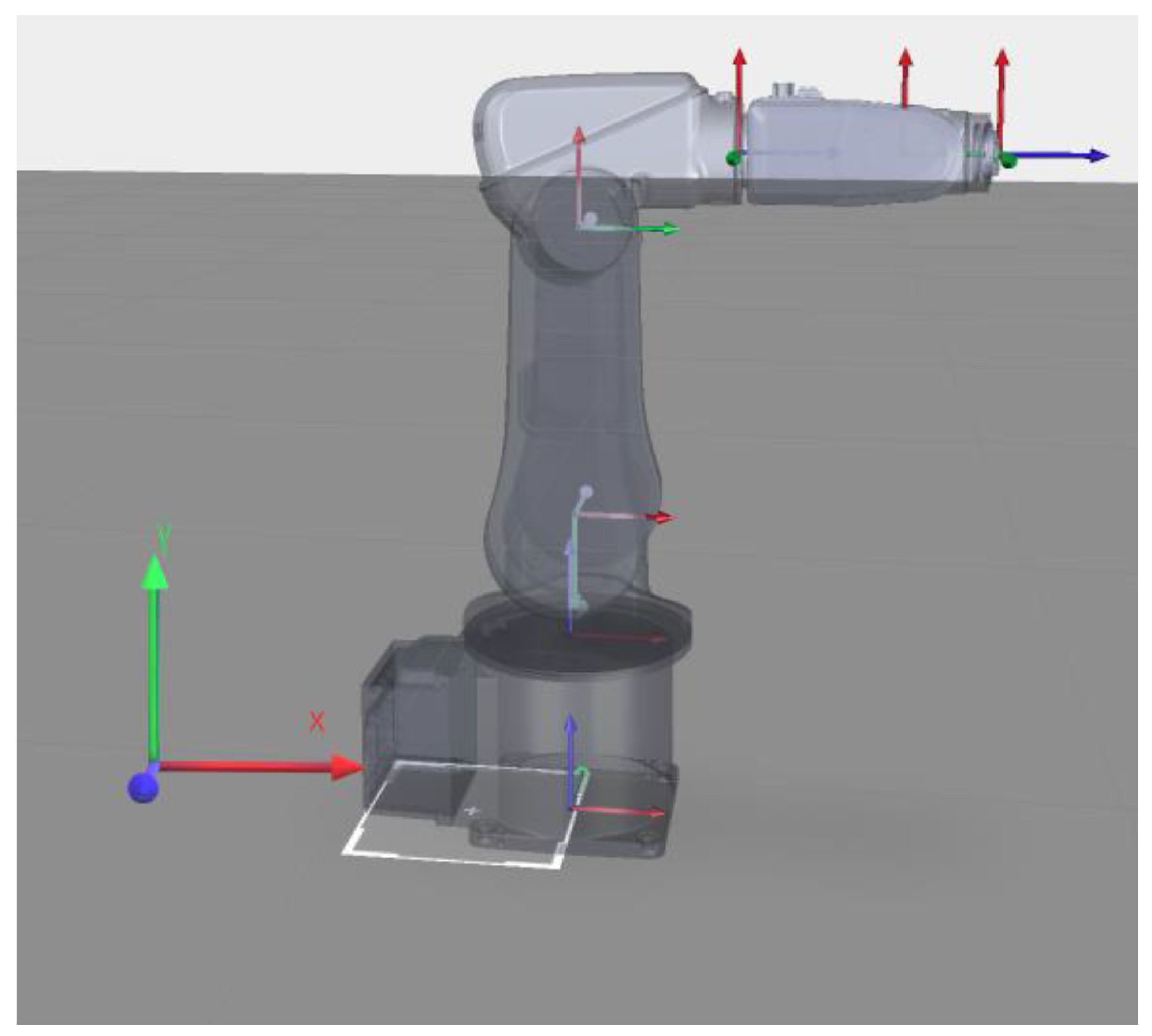

3.2.1. Methodology

3.2.2. Contributions

3.2.3. Main Findings

3.3. Student C—Development of an Augmented Reality-Based Application for Robotics Education

3.3.1. Methodology

3.3.2. Contributions

3.3.3. Main Findings

3.4. Student D—Augmented Reality-Based Robotics Education

3.4.1. Methodology

3.4.2. Contributions

3.4.3. Main Findings

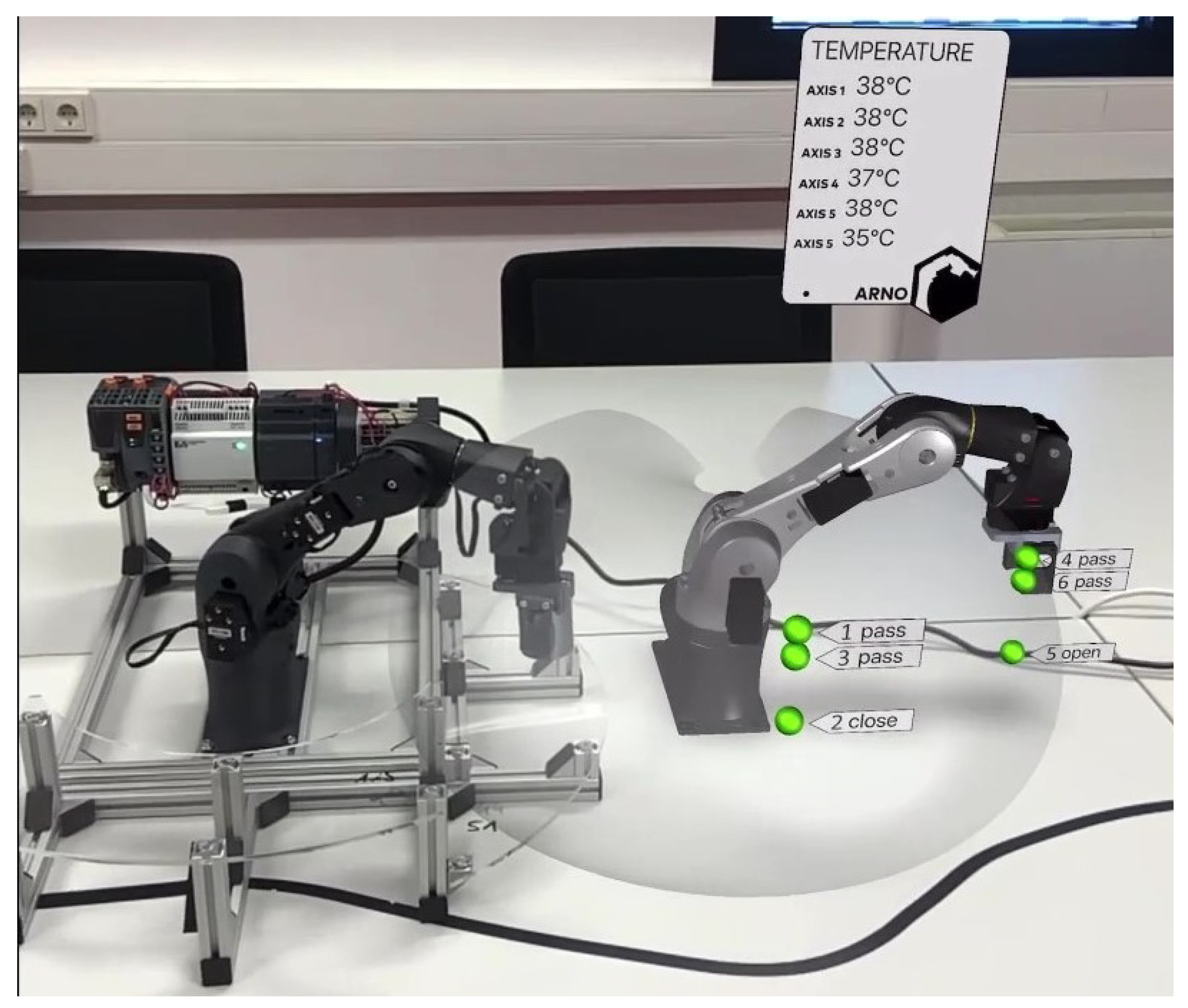

3.5. Student E—Desktop Robotics Combined with Augmented Reality

3.5.1. Methodology

3.5.2. Contributions

3.5.3. Main Findings

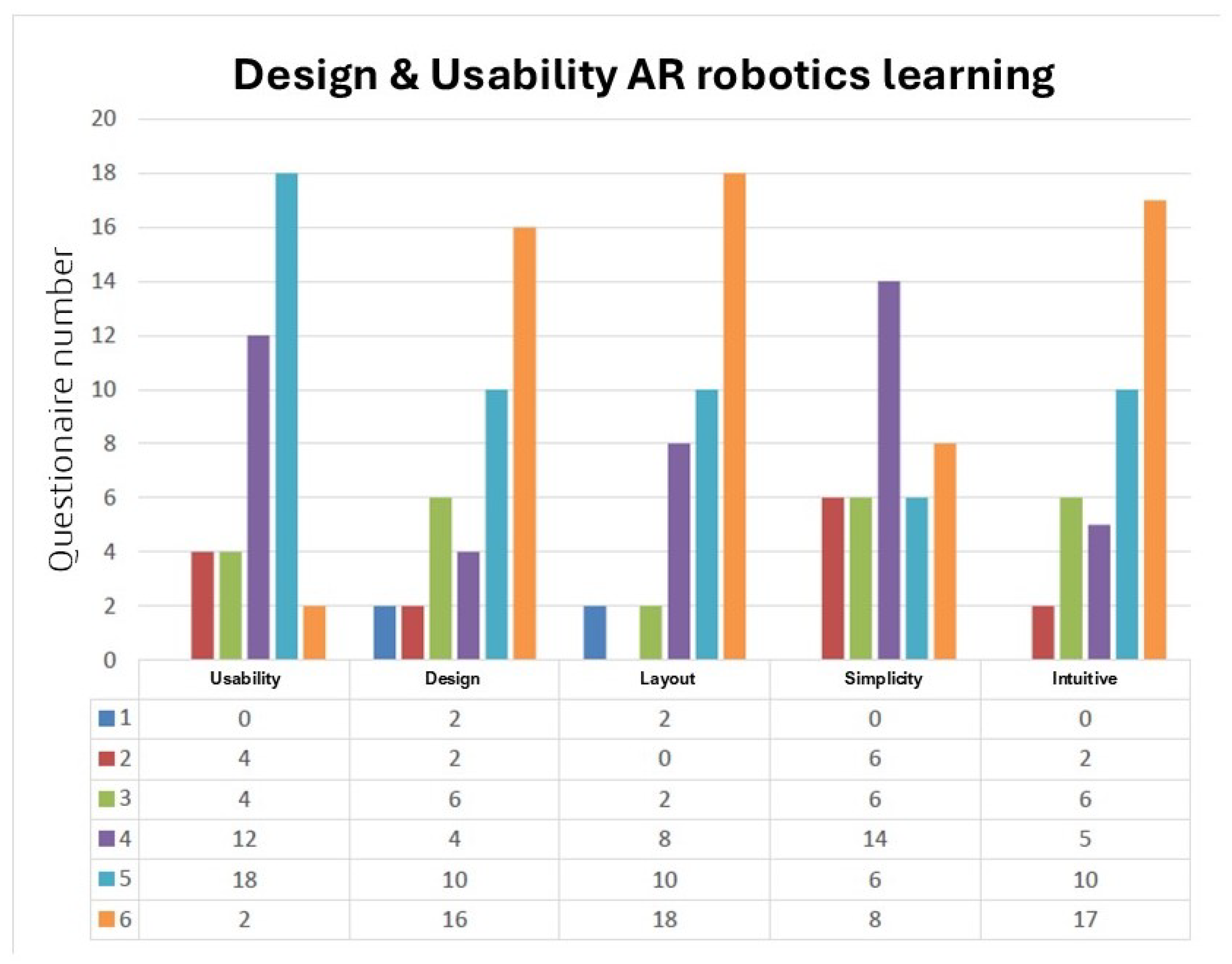

3.6. Student F—Use of Augmented Reality as a Didactic Learning Medium in Mechatronics/Robotics

3.6.1. Methodology

3.6.2. Contributions

3.6.3. Main Findings

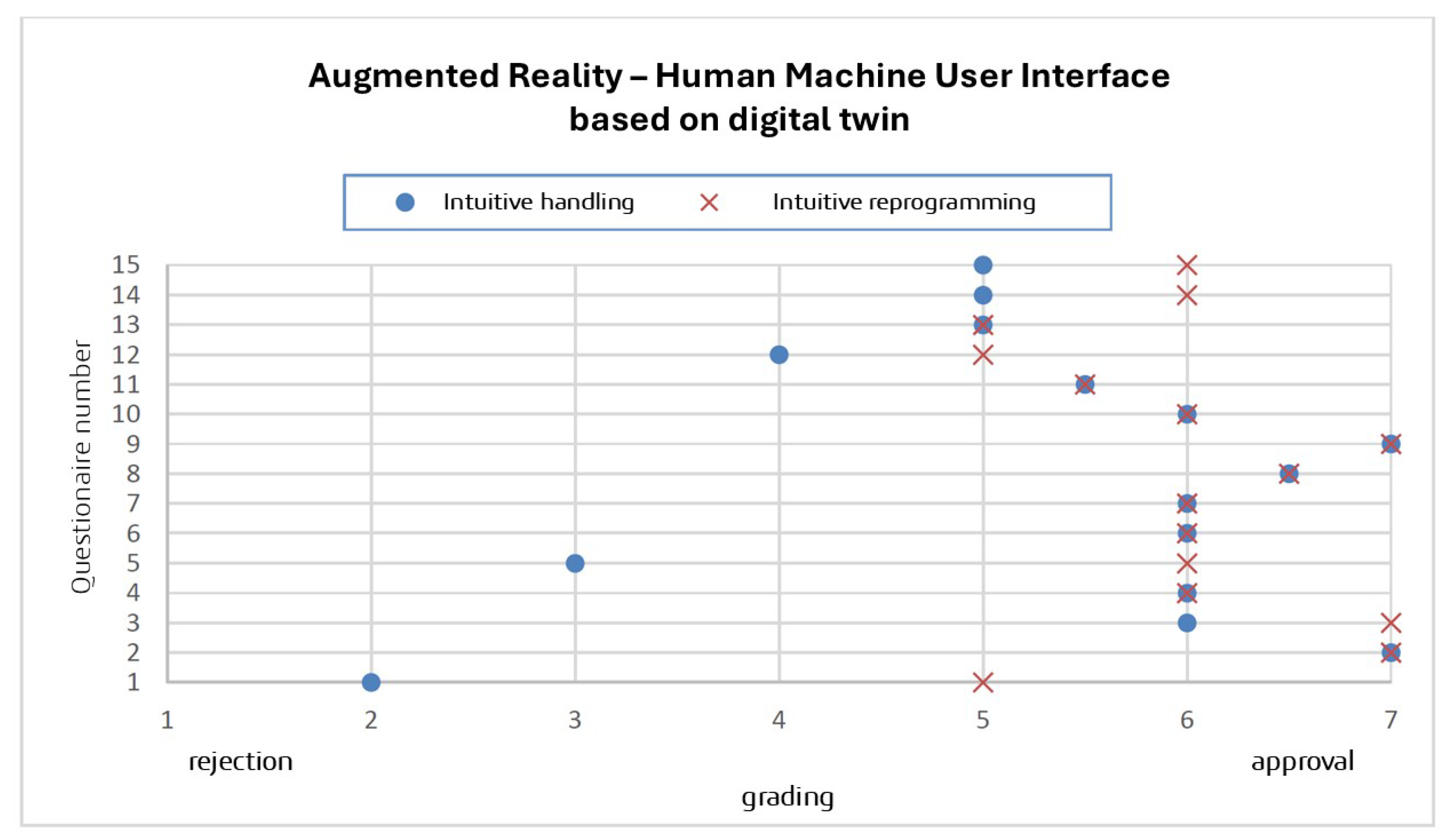

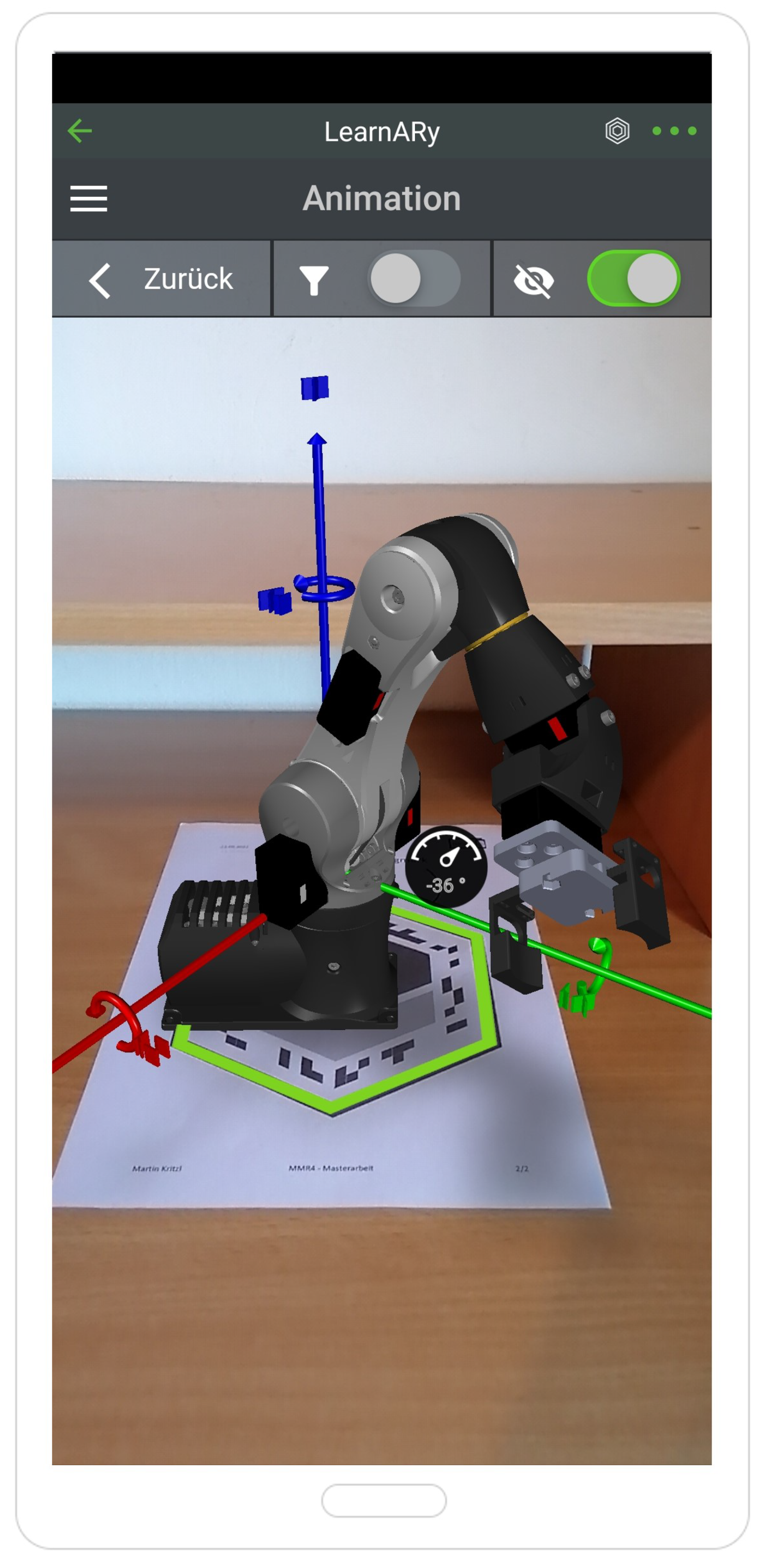

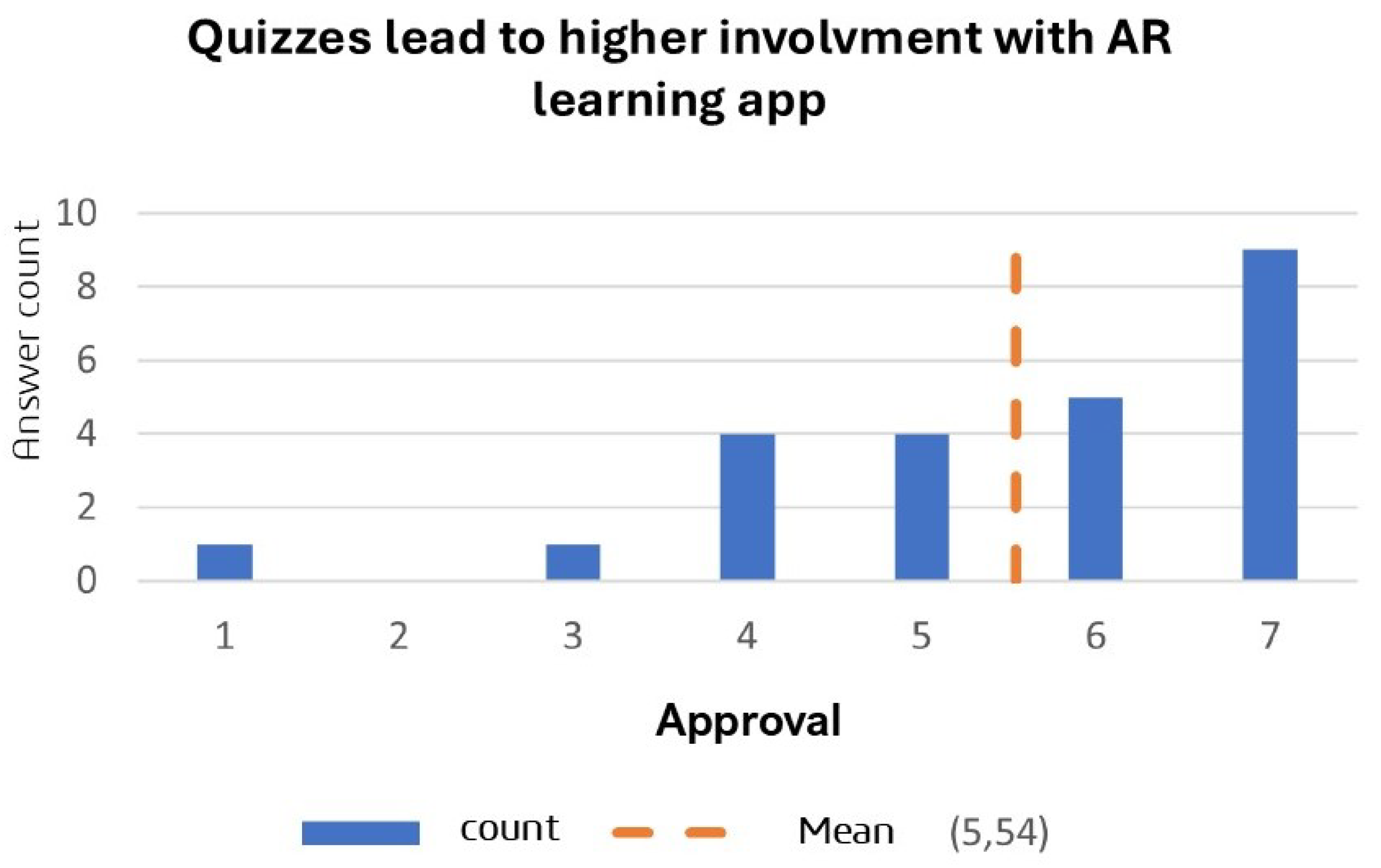

3.7. Student G—Development of an Interactive Augmented Reality Learning Application

3.7.1. Methodology

3.7.2. Contributions

3.7.3. Main Findings

4. Discussion

5. Conclusions

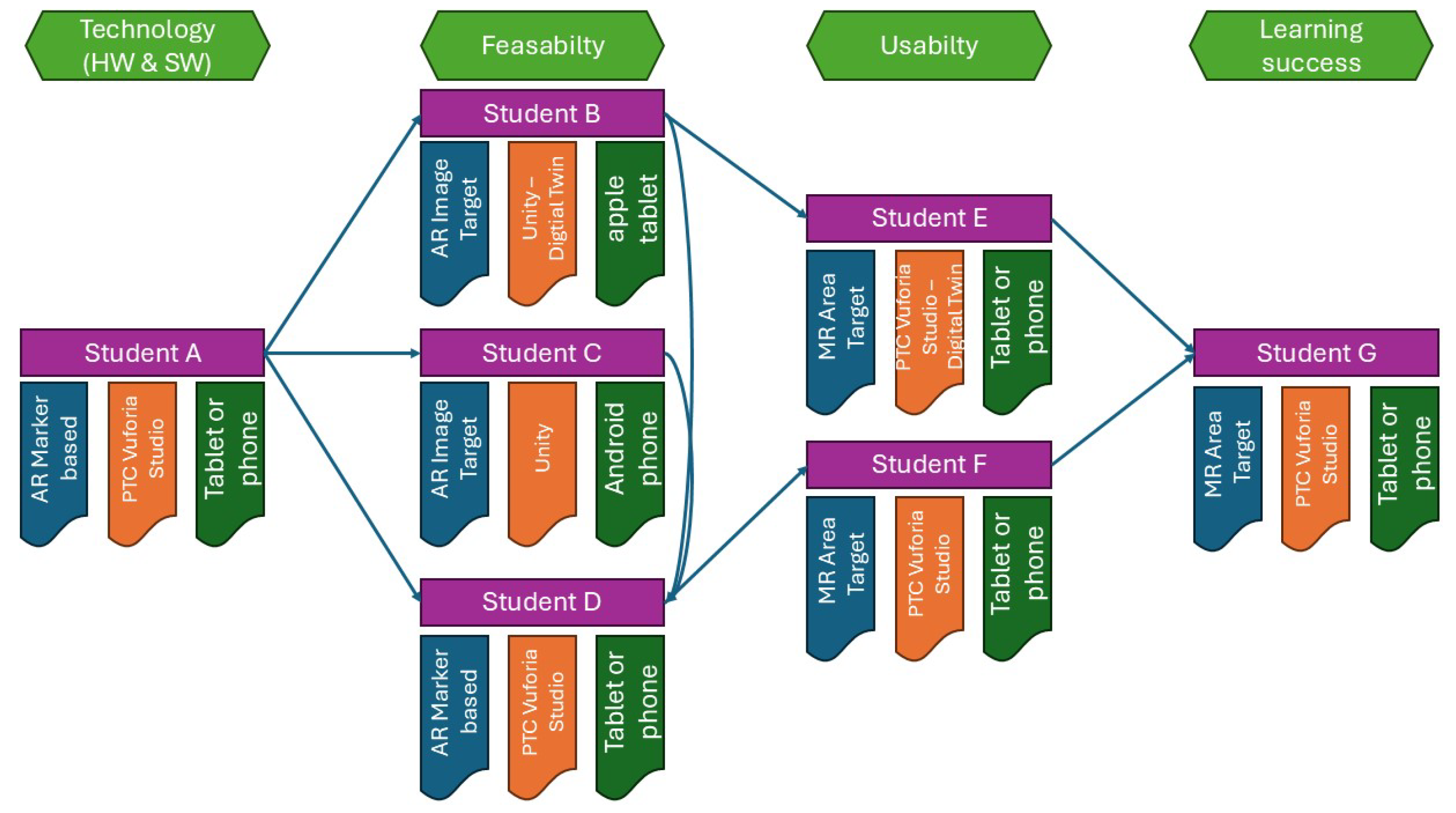

- Technology: decide in advance on the type of device, the operating system, and the software used to develop the app.

- Feasibility: estimate the development effort for the selected technology.

- Usability: define learning goals and aim for a simple, intuitive design of the learning app, using early feedback during the design stage.

- Learning success: incorporate learning success measurements, such as micro learning or quizzes, into the learning experience, both to motivate the learner and to measure the efficiency of the app directly.
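The learning-success guideline can be made concrete with a small scoring helper. This is a sketch under stated assumptions: `QuizResult` and `mean_score` are hypothetical names, the sample data are invented purely for illustration, and the fraction of correct answers is only one possible measure of learning success.

```python
from dataclasses import dataclass


@dataclass
class QuizResult:
    """One learner's result on an in-app quiz (hypothetical structure)."""
    learner_id: str
    correct: int
    total: int

    @property
    def score(self) -> float:
        """Fraction of correctly answered questions, between 0 and 1."""
        if self.total <= 0:
            raise ValueError("total must be positive")
        return self.correct / self.total


def mean_score(results):
    """Average score over all learners, a rough indicator for the app."""
    if not results:
        raise ValueError("at least one quiz result is required")
    return sum(r.score for r in results) / len(results)


# Invented sample data, not results from any of the theses.
sample_results = [
    QuizResult("learner-1", correct=3, total=4),
    QuizResult("learner-2", correct=1, total=4),
]

print(mean_score(sample_results))
```

Logging such results per learning unit would let the same quiz serve both purposes named in the guideline: feedback for the learner and a direct measurement for the developer.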

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Müller, C. World Robotics 2025—Industrial Robots; IFR Statistical Department, VDMA Services GmbH: Frankfurt am Main, Germany, 2025.
2. Wiedmeyer, W.; Mende, M.; Hartmann, D.; Bischoff, R.; Ledermann, C.; Kroger, T. Robotics Education and Research at Scale: A Remotely Accessible Robotics Development Platform. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3679–3685.
3. Sergeyev, A.; Alaraje, N.; Parmar, S.; Kuhl, S.; Druschke, V.; Hooker, J. Promoting Industrial Robotics Education by Curriculum, Robotic Simulation Software, and Advanced Robotic Workcell Development and Implementation. In Proceedings of the 2017 Annual IEEE International Systems Conference (SysCon), Montreal, QC, Canada, 24–27 April 2017; pp. 1–8.
4. De Raffaele, C.; Smith, S.; Gemikonakli, O. Enabling the Effective Teaching and Learning of Advanced Robotics in Higher Education Using an Active TUI Framework. In Proceedings of the 3rd Africa and Middle East Conference on Software Engineering, AMECSE ’17, Cairo, Egypt, 12–13 December 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 7–12.
5. Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.
6. Orsolits, H.; Rauh, S.F.; Garcia Estrada, J. Using mixed reality based digital twins for robotics education. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Singapore, 17–21 October 2022; pp. 56–59.
7. Lohfink, M.A.; Miznazi, D.; Stroth, F.; Müller, C. Learn Spatial! Introducing the MARBLE-App—A Mixed Reality Approach to Enhance Archaeological Higher Education. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Singapore, 17–21 October 2022; pp. 435–440.
8. Darwish, M.; Kamel, S.; Assem, A. Extended reality for enhancing spatial ability in architecture design education. Ain Shams Eng. J. 2023, 14, 102104.
9. Billinghurst, M.; Duenser, A. Augmented Reality in the Classroom. Computer 2012, 45, 56–63.
10. Álvarez Marín, A.; Velázquez-Iturbide, J.A. Augmented Reality and Engineering Education: A Systematic Review. IEEE Trans. Learn. Technol. 2021, 14, 817–831.
11. Takrouri, K.; Causton, E.; Simpson, B. AR Technologies in Engineering Education: Applications, Potential, and Limitations. Digital 2022, 2, 171–190.
12. Ibáñez, M.B.; Delgado-Kloos, C. Augmented Reality for STEM Learning: A Systematic Review. Comput. Educ. 2018, 123, 109–123.
13. Ajit, G.; Lucas, T.; Kanyan, L. A Systematic Review of Augmented Reality in STEM Education. Stud. Appl. Econ. 2021, 39, 1–22.
14. da Silva, M.M.O.; Teixeira, J.M.X.N.; Cavalcante, P.S.; Teichrieb, V. Perspectives on How to Evaluate Augmented Reality Technology Tools for Education: A Systematic Review. J. Braz. Comput. Soc. 2019, 25, 3.
15. Crogman, H.; Cano, V.; Pacheco, E.; Sonawane, R.; Boroon, R. Virtual Reality, Augmented Reality, and Mixed Reality in Experiential Learning: Transforming Educational Paradigms. Educ. Sci. 2025, 15, 303.
16. Verner, I.; Cuperman, D.; Perez-Villalobos, H.; Polishuk, A.; Gamer, S. Augmented and Virtual Reality Experiences for Learning Robotics and Training Integrative Thinking Skills. Robotics 2022, 11, 90.
17. Fu, J.; Rota, A.; Li, S.; Zhao, J.; Liu, Q.; Iovene, E.; Ferrigno, G.; De Momi, E. Recent Advancements in Augmented Reality for Robotic Applications: A Survey. Actuators 2023, 12, 323.
18. Zhang, F.; Lai, C.Y.; Simic, M.; Ding, S. Augmented reality in robot programming. Procedia Comput. Sci. 2020, 176, 1221–1230.
19. Hörbst, J.; Orsolits, H. Mixed Reality HMI for Collaborative Robots. In Proceedings of the Computer Aided Systems Theory—EUROCAST 2022, Las Palmas de Gran Canaria, Spain, 20–25 February 2022; Moreno-Díaz, R., Pichler, F., Quesada-Arencibia, A., Eds.; Springer: Cham, Switzerland, 2022; pp. 539–546.
20. Delmerico, J.; Poranne, R.; Bogo, F.; Oleynikova, H.; Vollenweider, E.; Coros, S.; Nieto, J.; Pollefeys, M. Spatial Computing and Intuitive Interaction: Bringing Mixed Reality and Robotics Together. IEEE Robot. Autom. Mag. 2022, 29, 45–57.
21. Makhataeva, Z.; Varol, H.A. Augmented Reality for Robotics: A Review. Robotics 2020, 9, 21.
22. Mystakidis, S.; Lympouridis, V. Immersive Learning. Encyclopedia 2023, 3, 396–405.
23. Chang, H.Y.; Binali, T.; Liang, J.C.; Chiou, G.L.; Cheng, K.H.; Lee, S.W.Y.; Tsai, C.C. Ten years of augmented reality in education: A meta-analysis of (quasi-) experimental studies to investigate the impact. Comput. Educ. 2022, 191, 104641.
24. Gad, D. Robot Plan Visualization Using Hololens2. Master’s Thesis, Chalmers University of Technology Gothenburg, Göteborg, Sweden, 2024.
25. Fang, C.M.; Zieliński, K.; Maes, P.; Paradiso, J.; Blumberg, B.; Kjærgaard, M.B. Enabling Waypoint Generation for Collaborative Robots using LLMs and Mixed Reality. arXiv 2024, arXiv:2403.09308.
26. Xu, S.; Wei, Y.; Zheng, P.; Zhang, J.; Yu, C. LLM enabled generative collaborative design in a mixed reality environment. J. Manuf. Syst. 2024, 74, 703–715.
27. Kopácsi, L.; Karagiannis, P.; Makris, S.; Kildal, J.; Rivera-Pinto, A.; Ruiz de Munain, J.; Rosel, J.; Madarieta, M.; Tseregkounis, N.; Salagianni, K.; et al. The MASTER XR Platform for Robotics Training in Manufacturing. In Proceedings of the 30th ACM Symposium on Virtual Reality Software and Technology, Trier, Germany, 9–11 October 2024.
28. Frank, J.A.; Kapila, V. Towards teleoperation-based interactive learning of robot kinematics using a mobile augmented reality interface on a tablet. In Proceedings of the 2016 Indian Control Conference (ICC), Hyderabad, India, 4–6 January 2016; pp. 385–392.
29. Ashtari, N.; Bunt, A.; McGrenere, J.; Nebeling, M.; Chilana, P.K. Creating Augmented and Virtual Reality Applications: Current Practices, Challenges, and Opportunities. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, CHI ’20, Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–13.
30. Kuhail, M.A.; ElSayary, A.; Farooq, S.; Alghamdi, A. Exploring Immersive Learning Experiences: A Survey. Informatics 2022, 9, 75.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Orsolits, H.; Valente, A.; Lackner, M. Mixed Reality-Based Robotics Education—Supervisor Perspective on Thesis Works. Appl. Sci. 2025, 15, 6134. https://doi.org/10.3390/app15116134
