Abstract

It is commonly accepted that ‘the brain computes’ and that it serves as a model for establishing principles of technical (first of all, electronic) computing. Even today, some biological implementation details inspire more performant electronic implementations. However, grasping details without their context often decreases operating efficiency. In these two major implementations, the notion of ‘computing’ has entirely different meanings. We introduce a notion of generalized computing from which we derive technical and biological computing, and by showing how the functionalities are implemented, we also highlight which performance losses the solutions lead to. Both implementations have been developed using a success–failure method, keeping the successful part-solutions (and building on top of them) and replacing less successful ones with others. Both developments proceed from one local minimum of their goal functions to another, but they apply fundamentally different principles. Moreover, the part-solutions must cooperate with each other, so grasping a biological solution without understanding its context and implementing it in a technical solution usually leads to a loss of efficiency. Today, the absolute performance of technical systems seems to be saturated, while their computing and energetic inefficiencies are growing.

1. Introduction

Concerning computing performance, efficiency, and power, we have a high standard, a desired goal, and an existing prototype: “The human brain is …the most powerful and energy-efficient computer known to humankind” [1]. However, when attempting to imitate its operation, the fundamental difficulty is that “engineers want to design systems; neuroscientists want to analyze an existing one they little understand” [2]. Despite the impressive results of grandiose projects [1,3], “we so far lack principles to understand rigorously how computation is done in living, or active, matter” [4]. Even within biology, overly close parallels with electric operation are questioned [5], even for models called “realistic”: “Is realistic neuronal modeling realistic?” Engineers have grasped a few aspects of the operation of active matter and implemented them, without their context, within the frames of their possibilities. Implementing these aspects in the different context of technical computing, while lacking the principles of how computation is done in living matter, is dangerous: it leads to solutions that are neither biological nor technical.

Spiking is a typical example of misunderstanding the role of the most evident phenomenon in neural systems. Many publications and technical solutions use the idea of “spiking neural networks”. As we discuss in Section 4, spiking is just a technical tool that nature uses to transmit information. Although it is well known from communication theory [6] that a single spike cannot deliver information, so-called neural information theory extensively uses the fallacy [7,8,9] that firing rate delivers information. The fundamental question of how information is represented in neurons, in their networks, and in the transmission signal called a spike has been abandoned. Although the relevance of the “coding metaphor” is questionable for biology [10], different coding systems have been created based on hundreds of communication models [7,11], which are not related to Shannon’s model but refer to its theory. “What makes the brain a remarkable information processing organ is not the complexity of its neurons but the fact that it has many elements interconnected in a variety of complex ways” [12], p. 37.

Similarly, definitions such as “Multiple neuromorphic systems use Spiking Neural Network (SNN) to perform computation in a way that is inspired by concepts learned about the human brain” [13] and “SNNs are artificial networks made up of neurons that fire a pulse, or spike, once the accumulated value of the inputs to the neuron exceeds a threshold” [13] are misleading. That review “looks at how each of these systems solved the challenges of forming packets with spiking information and how these packets are routed within the system”. In other words, the technically needed “servo” mechanism, a well-observable symptom of neural operation, inspired the “idea of spiking networks”. The idea lacks knowledge of how neurons handle information: it replaces the information content distributed in space and time with well-located timestamps in packets, continuous analog signal transfer with discrete packet delivery, the dedicated wiring of neural systems with bus-centered transfer using arbitration and packet routing, and continuous biological operating time with a mixture of digital quanta of computer processing plus transfer time. Even the order of the timestamps conveyed in spikes (not to mention the information they deliver) does not resemble biology; furthermore, due to the vast need for communication, the overwhelming majority of the generated events must be killed [14] without being delivered and processed, to provide a reasonable operating time and to avoid the collapse [15] of the communication system. In all other aspects, the system may provide an excellent imitation of the brain.

We review how computing demands drive the computing industry toward higher computing capacity, using different theoretical principles and technological solutions for different computing goals, while cost functions such as energy consumption, computing speed, fabrication cost, scalability, and reliability vary greatly. We summarize the generalized principles of computing in Section 2 and derive how those principles can be implemented in technical and biological computing. We also show that any computing process inherently includes inefficiency (although it manifests differently in different implementations, making them hard to compare) and derive terms for describing it quantitatively. For technical computing, we only mention how those generic events are implemented and how the features of biological computing should be interpreted in terms of technical computing. For biological computing, in Section 4, we derive a conceptual model in technical terms (without mentioning the biological details), emphasizing the consequences of how those events are generated. We emphasize the vital subject of data transmission (communication) between elementary computing units in chained operations. In light of the fundamental discrepancies, we discuss why the popular notions of learning and intelligence in the two fields share only their names. Furthermore, we demonstrate how a wrong understanding of biological principles degrades the computing efficiency of technical systems.

2. Notions of Computation

“Devising a machine that can process information faster than humans has been a driving force in computing for decades” [11], since “computation is a useful concept far beyond the disciplinary boundaries of computer science” [4]. We aim to broaden this concept by applying it to technical and biological systems. Furthermore, our abstract treatment enables the construction of various hybrid computing devices, provided that proper interfaces can be built that handle signals and arguments in both specific implementations. The interface between the two principles must provide the appropriate conversion between their data-controlled, time-coded, analog, non-mathematical (biological) and instruction-controlled, amplitude-coded, digital, mathematical (technical) elementary operations.

“Research shows that generating new knowledge is accomplished via natural human means …this knowledge is acquired via scientific research” [16], including various forms of cooperation between those two kinds of computing. Attempts have been made to map their notions onto each other. Human-constructed computing introduced notions such as the bit (including its information content), structural and functional units for computing, elementary computing units implementing mathematical functions, and storing and transmitting information in special devices; these terms were unknown in nature-constructed biological computing [17]. It is questionable whether nature must accommodate its computing-related notions to those of human-made computing.

2.1. The Fundamental Task

To perform a computation, one needs to assign operations to the input data [18] in various forms, from verbal instructions to wiring different electronic computing units with cables to setting a program pointer to the address of the first instruction of a stored program. Usually, the operations must be chained: practical computations comprise many elementary operations that supply input data for each other. Their signal representation and path, sequencing, and timing must be provided to chain them appropriately. Data transfer and processing must not be separated; they must be discussed together. For geared wheels and for biological, neuromorphic, quantum, digital, analog, neuronal, and accelerated processing alike, an operation can only begin after its required input operands have been transferred to the place of operation, and the output operand can only be delivered once the computing process terminates. Our paper applies this concept to technical and biological systems, highlighting its universal significance. The model of computing has not changed for centuries:

- input operand(s) need to be delivered to the processing element

- processing must be wholly performed

- output operand(s) must be delivered to their destination

Correspondingly, the effective computing time is the sum of the transfer time(s) and the processing time (in chained operations, the time of delivering one unit’s output operands to the next processing unit is the same as the next unit’s operand delivery time), even if, in a specific case, one of them can be neglected compared with the other (interestingly, biology uses point-like neurons and neglects processing time compared with axonal delivery time, while technical computing neglects transfer time compared with processing time, despite von Neumann’s warning). The phases of computing logically depend on each other and must be appropriately aligned; their implementations need proper synchronization. In this way, the data transfer and processing phases block each other. That is, any technological implementation converts the logical dependence of its operations into temporally adjacent phases (which used to be called von Neumann-style computing [19]): to perform a chained operation, the output of one operation must reach the input of the other. This definition allows multiple elementary operations to be performed in the second step, provided that each of them individually fulfills the other two requirements. This aspect deserves special attention when designing and evaluating the correct operation of computing accelerators, feedback, and recurrent circuits, as well as when designing and parallelizing algorithms: the computation must respect the logical dependences of its operations through their timing relations [20].
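The three requirements above can be illustrated with a minimal Python sketch, assuming that each step’s transfer and processing times are known constants in arbitrary but consistent units and that a step’s transfer cannot overlap its processing. The names `Step`, `chain_time`, and `processing_share` are hypothetical; the sketch only shows that the effective time of a chain is the sum of both contributions, so neglecting either one misestimates it.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One elementary operation in a chain (times in arbitrary, consistent units)."""
    transfer: float    # time to deliver the input operands to this unit
    processing: float  # time to compute once all operands are present


def chain_time(steps: list[Step]) -> float:
    """Total time of a strictly sequential chain: each step first waits for
    its operands to arrive, then for its own processing to finish."""
    return sum(s.transfer + s.processing for s in steps)


def processing_share(steps: list[Step]) -> float:
    """Fraction of the total time spent on processing (the 'net' part)."""
    total = chain_time(steps)
    return sum(s.processing for s in steps) / total if total else 0.0


chain = [Step(transfer=1.0, processing=4.0), Step(transfer=3.0, processing=2.0)]
print(chain_time(chain))        # 10.0
print(processing_share(chain))  # 0.6
```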

The principle speaks only about sequentially chained operations. However, it allows multiple operations to be performed simultaneously, provided that the synchronization points between the steps are kept (meaning that the next operation can only start when the slowest operation of the previous step has finished). Looping elementary operations is only allowed when some independent method guarantees that the result of an operation performed later is replaced by an appropriately good value for the current operation. This latter need may remain hidden in cyclic operations, but it can come to light by causing drastic effects on the computation (e.g., making otherwise correct initial or previously calculated values wrong), for example, when a computation begins or when one operation type changes to another.
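A sketch of the synchronization-point rule, assuming that the operations of a step start together and that the next step starts only after the slowest one has finished; `step_duration`, `schedule`, and `idle_time` are illustrative names, not an established API.

```python
def step_duration(parallel_times: list[float]) -> float:
    """A step of simultaneous operations ends at its synchronization point,
    i.e., when the slowest operation has finished."""
    return max(parallel_times)


def schedule(steps: list[list[float]]) -> list[tuple[float, float]]:
    """Return the (start, end) window of each step in a chain of parallel steps."""
    t = 0.0
    windows = []
    for ops in steps:
        end = t + step_duration(ops)
        windows.append((t, end))
        t = end  # the next step cannot start before the slowest one finishes
    return windows


def idle_time(steps: list[list[float]]) -> float:
    """Total time the faster operations spend waiting at synchronization points."""
    return sum(step_duration(ops) * len(ops) - sum(ops) for ops in steps)


steps = [[2.0, 5.0, 3.0], [1.0, 1.0]]
print(schedule(steps))   # [(0.0, 5.0), (5.0, 6.0)]
print(idle_time(steps))  # 5.0
```

Here, the 5.0 time units of idle time belong to the two faster operations of the first step, which wait for the slowest one.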

2.2. Modeling Computation

To perform a computation, one needs to assign operations to the input data, whether the computation is manual or electronic [21]. Practical computations comprise many elementary operations that supply input and output data for each other: the operations must be chained. Either the instruction looks up its associated operand (instruction-driven control), or the data look for their associated instruction (data-driven control). Data transfer and processing must not be separated; they must be discussed together. Their method of correspondence, sequencing, signal representation, path, and timing must be provided to chain the operations appropriately. Events must signal the milestones of the computation (such as its beginning and the availability of its result). Von Neumann’s famous omission of the transfer time compared with the processing time in chained computing operations was valid only for [the timing relations of] vacuum tubes. He made clear that using “too fast” vacuum tubes (or other elementary processing switches) vitiates his simplified paradigm, which forms the basis of today’s computing science.

The first unit of the chain receives the input data that drive the computing chain, and the last unit provides the result. For more sophisticated and lengthy computations, either a large number of elementary computing objects must be provided, or some units can be reused under an appropriate organization. The first option wastes computing components and enables considerable parallelization for short instruction sequences, but its operation needs almost no organization. The second option requires a higher-level organizer unit, enables only minimal parallelization (because it presumes that the instructions use some computing units exclusively), and allows for unlimited operating time and number of computing operations. Either operating mode, however, needs timing alignment for correct operation.

2.3. Time Windows

The times of the starting and terminating events mark a time window in which the data processing happens; the window width is the time needed to perform the computing operation. Biology and technology mark the time windows differently. The position and width of the time window are key to the operation of the computations. In technical computations, the clock signal defines the position, while in biological computations, the arrival time of the first input defines it.

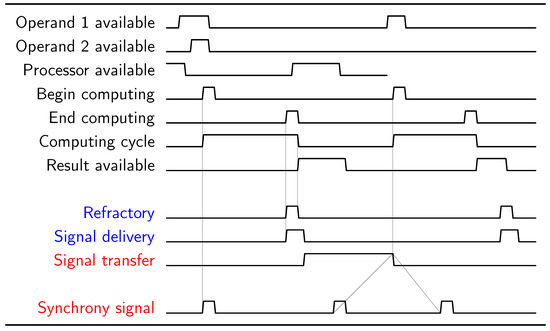

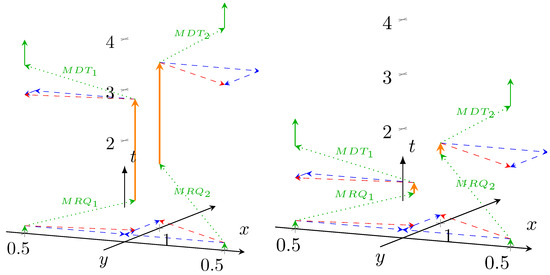

Technology commonly imitates those events and periods by using a fixed-time central synchrony signal. One edge of the signal defines the beginning, and another defines the termination of the operation; see Figure 1 (the figure shows a symbolic notation for aligning events instead of clock edges). At the beginning, all input data must already be available in the input section of the unit, and the result must appear at its output before the time window terminates [22]. Because both the ‘Begin Computing’ signal and the clock signal have finite propagation speeds, the designer is responsible for delaying the ‘Begin Computing’ signal at the start of the computation to align it with the clock signal, which leads to performance loss. In other words, all timings and lengths of operations must be known at design time, and they cannot be fine-tuned at run time. All operations have the same temporal length (allowing for pipelining). If an operation completes before the time window terminates, the remaining time is lost, leading to performance loss. If the operation is incomplete, the chained unit may work with garbage. The elementary operations implement mathematical functions, and the elementary computing units are self-contained. We can define the total length of the operation as the sum of the lengths of the computing and transfer windows.

Figure 1.

Timing relations of von Neumann’s model for chained computation. The operands must be available when the operation begins; furthermore, signal transfer can only begin when the computation has finished. Using a central synchrony signal may prolong the operating time if the bus transfer suffers delays.
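The fixed-window behavior described above (lost time when an operation finishes early, garbage when it has not finished) can be sketched as follows. The window width `CLOCK_PERIOD` and the function `clocked_outcome` are hypothetical, and the sketch ignores the propagation delay of the clock signal itself.

```python
CLOCK_PERIOD = 10.0  # hypothetical fixed window width, arbitrary time units


def clocked_outcome(processing: float, period: float = CLOCK_PERIOD) -> tuple[str, float]:
    """Classify one operation executed in a fixed clock window.

    Returns (status, lost_time). If the operation finishes early, the rest of
    the window is lost; if it has not finished when the window closes, the
    chained unit would read an incomplete result ('garbage').
    """
    if processing <= period:
        return "ok", period - processing
    return "garbage", 0.0


print(clocked_outcome(7.0))   # ('ok', 3.0)
print(clocked_outcome(12.0))  # ('garbage', 0.0)

# Time lost in three consecutive windows whose operations all finish early.
print(sum(clocked_outcome(p)[1] for p in [7.0, 9.5, 4.0]))  # 9.5
```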

The transfer time window starts when a unit produces its result and terminates when the transferred data arrive at the next computing element. The clock signal, rather than the completion of the previous calculation, starts the next unit, leading to further performance loss. Technology has various implementations, from direct galvanic connection through arbitrated sequential transfer over a bus to special network transfer devices. Time windows of very different widths belong to those modes (ranging from picoseconds to milliseconds). No separate transfer time window is provided, so the transferred signal must adapt to the central clock signal. As predicted by von Neumann, under the varying timing relations of modern technology, theory cannot describe the computing operations precisely. The computing model itself, however, is correct, and the general computing paradigm [21] is appropriate for describing biological and technical computing, provided that finite interaction speed is used instead of the classical instant interaction.

These time windows must not overlap: overlap would lead to causality issues. Data arriving from a greater distance or needing extended processing cause delays, block computing operations, and decrease computing efficiency. In the case of simple chained operations, this condition can be controlled, but ad hoc (mainly multistep) parallelizations and loops (feedback, back-propagation, accelerators) need great care, as they can lead to errors [21]. In those latter cases, the starting and terminating data processing times are unknown at design time; however, the system’s components must operate under central synchronization (i.e., an external constraint) and must use fixed processing times.
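A minimal check of the non-overlap condition, assuming that each operation’s start and end times and the transfer time of each of its inputs are known; the data layout and the name `check_causality` are assumptions made for illustration. An operation is flagged when it starts before an operand it depends on can have arrived.

```python
def check_causality(ops: dict[str, dict]) -> list[str]:
    """Return the names of operations that start before all their inputs arrive.

    Each entry: {"start": t0, "end": t1, "inputs": [(producer, transfer_time), ...]}.
    An input arrives at the producer's end time plus its transfer time.
    """
    violations = []
    for name, op in ops.items():
        for producer, transfer in op["inputs"]:
            arrival = ops[producer]["end"] + transfer
            if op["start"] < arrival:  # the time windows would overlap
                violations.append(name)
                break
    return violations


timeline = {
    "a": {"start": 0.0, "end": 4.0, "inputs": []},
    "b": {"start": 6.0, "end": 9.0, "inputs": [("a", 1.0)]},   # input arrives at 5.0
    "c": {"start": 9.0, "end": 12.0, "inputs": [("b", 2.0)]},  # input arrives at 11.0
}
print(check_causality(timeline))  # ['c']

# Closing a loop: 'a' now also needs the result of 'c', which is produced later.
timeline["a"]["inputs"].append(("c", 1.0))
print(check_causality(timeline))  # ['a', 'c']
```

In the loop case, the first operation necessarily depends on a later one, so it can only run correctly if an independent mechanism supplies an appropriate value in advance.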

Figure 1 shows the timing relations of the complete (time-aware) model (it is a logical summary rather than a precise timing diagram). Notice that in chained computing operations using central synchrony signals, it is the total computing times, rather than the processing times, that must be as uniform as possible. If the operations’ processing times (complexity) or transfer times (connection technology, including different packet handling, the signal’s physical propagation speed, or the distance the signal has to travel) differ, the total computing time changes. The different timing contributions sensitively affect the system’s resulting performance.
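To illustrate why the total times, not only the processing times, must be uniform, the following sketch assumes that one central clock period must accommodate the longest processing-plus-transfer total of all paths, and it defines payload efficiency as processing time divided by clocked time. `clock_period` and `payload_efficiency` are hypothetical helper names, and the numbers are arbitrary.

```python
def clock_period(paths: list[tuple[float, float]]) -> float:
    """A central clock must fit the longest total (processing + transfer) time."""
    return max(proc + transfer for proc, transfer in paths)


def payload_efficiency(paths: list[tuple[float, float]]) -> float:
    """Share of the clocked time of all paths that is spent on processing."""
    period = clock_period(paths)
    return sum(proc for proc, _ in paths) / (period * len(paths))


uniform = [(8.0, 2.0), (8.0, 2.0), (8.0, 2.0)]  # (processing, transfer)
mixed = [(8.0, 2.0), (8.0, 2.0), (8.0, 7.0)]    # same processing, one slow transfer
print(clock_period(uniform), payload_efficiency(uniform))        # 10.0 0.8
print(clock_period(mixed), round(payload_efficiency(mixed), 3))  # 15.0 0.533
```

With identical processing times, a single longer transfer stretches the common period from 10 to 15 units and lowers the payload efficiency of every path.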

Using time windows seems to be a general biological mechanism. The window’s width depends on the task’s complexity: more involved neuron levels (whether natural or artificial) need more time to deliver the result. The individual neurons transfer data in a massively parallel way, but each level must wait until the result of the previous level arrives. Individual cells operate in the millisecond range; they cooperate within several milliseconds [23,24], implement Hebbian learning in tens of milliseconds [25], perform cognitive functions in several hundred milliseconds [26], and students learn in time windows of dozens of seconds [27].

2.4. Three-Stage Computing

An often forgotten requirement is that, theoretically, a three-state system is needed to define the direction of time [28]. In electronic computing, we can introduce this as an “up” edge and “down” edge, with a “hold” stage between. A charge-up process must always happen before discharging. As discussed below, biological computing has a computing stage, followed by “up” and “down” edges; always in this order. This requirement is a fundamental issue for quantum-based computing, where the processes are reversible: we must define the direction of time; otherwise, only random numbers can be generated.
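The role of the third state can be illustrated with a small sketch, under the simplifying assumption that a process cycles through its states in a fixed order. With only two states, a forward step and a backward step look identical, so the direction of a recorded trajectory cannot be inferred; with three states, it can. `inferred_direction` and the state names are illustrative only.

```python
def inferred_direction(sequence: list[str], cycle: list[str]) -> str:
    """Infer the direction of a trajectory over states visited in a fixed cyclic order.

    Returns "forward", "backward", or "undetermined".
    """
    n = len(cycle)
    index = {state: i for i, state in enumerate(cycle)}
    forward = backward = True
    for a, b in zip(sequence, sequence[1:]):
        step = (index[b] - index[a]) % n
        forward = forward and step == 1        # consistent with the cycle order
        backward = backward and step == n - 1  # consistent with the reversed order
    if forward and not backward:
        return "forward"
    if backward and not forward:
        return "backward"
    return "undetermined"


two_state = ["low", "high"]
three_state = ["up", "hold", "down"]
print(inferred_direction(["low", "high", "low"], two_state))           # undetermined
print(inferred_direction(["up", "hold", "down", "up"], three_state))  # forward
print(inferred_direction(["up", "down", "hold", "up"], three_state))  # backward
```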

2.5. Issues in Formulating Efficiency

False parallels with electric circuits (i.e., neglecting the fundamental differences between the digital and neurological operating modes) and the preconception that nature-made biological computing must follow the notions and conceptions of manufactured computing systems (see, for example, the electrotonic models [29], which attempt to describe the properties of neurons by assuming that they are electronically manufactured components) hinder the understanding of genuine biological computing. Despite these difficulties, bold numerical comparisons exist, even with so-called “Organoid Intelligence” [30].

The BRAIN Initiative, after ten years, had to admit that “yet, for the most part, we still do not understand the brain’s underlying computational logic” [1]. The Human Brain Project believes that it is sufficient to build a computing system whose resources [31] suffice for simulating 1 billion neurons, and it does not want to admit that the system can simulate only orders of magnitude fewer, below 100 thousand [14]. The presently available serial systems do not enable this performance to be reached [32]. The project was declared successful even though the targeted “studies on processes like plasticity, learning, and development exhibited over hours and days of biological time are outside our reach” [14]. Despite the lack of knowledge, the half-understood principles of neuromorphic computing [33] are extensively used [33,34], although it is recognized that “brain-inspired computing needs a master plan” [35]. The hype, however, is enormous [33].

It is not easy to compare technical and biological computing systems, either in computing ability or in energetic efficiency. In the infancy of computing, researchers saw many similarities between “the computer and the brain” [36]. We experience that “the brain computes!” [37], and the idea of their similarity has been periodically revived and revisited [38,39]. Some resemblance is also periodically claimed between natural and artificial intelligence. When one attempts to compare computing by the brain with computing by computers, the first principal problem is assessing the brain’s computing power. One can “define an elementary operation of the brain as a single synaptic event” [40], and its operands and result are analog timing values. In contrast, “in a digital computer, an elementary operation constitutes …a few instructions such as load, add, and multiply” [40] using digital signals. Comparing the elementary operations by computing power or speed is problematic at best. Some characteristics of the major computing implementations are summarized in Table 1.

Table 1.

Summary of some characteristics of the major computing implementations.

The second problem is how to map these operations to each other, given that thousands of machine instructions are needed to emulate an analog integration (diffusion) and thousands of neurons are needed to perform a simple digital addition, not to mention the thousands of machine instructions required to connect to the external world [45,46,47]; this question remains open. In reality, the biological data transmission time is up to two orders of magnitude longer than the computing time. In technical computing, transmission through a bus may result in similar ratios. Furthermore, the operations can be at least partly parallelized. “The naïve conversion between Operations Per Second (OPS) and Synaptic Operations Per Second (SOPS) as follows: 1 SOPS ≈ 2 OPS” [48] has limited validity: it can differ from the real value by two to four orders of magnitude in either direction, depending on the context, not to mention cross-comparisons between biological and technical implementations. Any such conversion reflects the misunderstanding that the total operating time comprises only the computing time and that the data transmission time can be neglected.
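The effect of neglecting transmission time can be shown with simple arithmetic. The sketch below assumes that the transfer and processing of chained operations do not overlap and uses, for illustration only, a transfer time 100 times the computing time (the upper end of the ratio mentioned above); it is not the conversion method of [48]. `effective_rate` and `slowdown` are hypothetical names.

```python
def effective_rate(processing_time: float, transfer_time: float) -> float:
    """Chained operations per unit time when every operation must also wait for
    its operands to be transmitted (no overlap between the two phases)."""
    return 1.0 / (processing_time + transfer_time)


def slowdown(processing_time: float, transfer_time: float) -> float:
    """How much a rate estimate that ignores transfer time overstates the real rate."""
    return (processing_time + transfer_time) / processing_time


print(effective_rate(1.0, 0.0))  # 1.0   (transfer time neglected)
print(slowdown(1.0, 100.0))      # 101.0 (transfer 100x the computing time)
```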

The third problem we face is the “non-deterministic”, imperfect, analog, asynchronous operation of the brain versus the deterministic, perfect, digital, synchronous operation of the computer. Furthermore, the input arguments are almost entirely determined by the arrival times of the received postsynaptic spikes [42]. We also know that the brain has no central clock, instruction pointer, or memory unit; furthermore, it uses mixed data-flow and event control, and it learns excellently.

The fourth problem is that a neuron is “a multiaccess, partially degraded broadcast channel that performs computations on data received at thousands of input terminals and transmits information to thousands of output terminals by means of a time-continuous version of pulse position” [49] and works with information distributed in space and time. In contrast, technical communication applies Shannon’s point-to-point communication model [6] and works with discrete digital signals.

Fifth, the brain works with slow and heavy ions; furthermore, it is governed by ‘non-ordinary’ laws [50] describing mixed slow thermodynamical and fast electrical interactions [51]. In contrast, technical computing is governed by the well-known laws of electricity, and non-sequential technical signal processing times contribute to its ‘theoretical’ processing times.

As [35] classified, “the term neuromorphic encompasses at least three broad communities of researchers, distinguished by whether their aim is to emulate neural function (reverse-engineer the brain), simulate neural networks (develop new computational approaches), or engineer new classes of electronic device”. However, all these communities inherited the misconceptions listed above.

Their operating principles, logical structure, architecture, signal representation and propagation speed, synchronicity, communication methods, and many other aspects of biological and technical computing are fundamentally different. We have classical digital computers with comparable capacity (of limited validity in the above sense) and the brain itself (often used as a benchmark for comparisons), plus an uncountable variety of so-called “neuromorphic” computing devices (including partly biological ones) that provide a smooth transition toward fully biological “organoid” systems (such as [30,52]). We attempt to compare their elementary operations (which serve as the basis of their highest level of operation, “intelligence”) in a strictly technical sense, without touching philosophical, mathematical, or ethical questions.

As we discuss, those implementations rest on inherent, deeply rooted technological solutions. Technological enhancements are, of course, needed and valuable, but without an understanding of computing theory they only improve inappropriate technological solutions. Moreover, computing lacks an appropriate theory: what is commonly considered its theoretical base was validated by von Neumann [53] for the technical conditions of vacuum tubes. As he predicted, technical development has invalidated the omissions he introduced to simplify the initial designs.

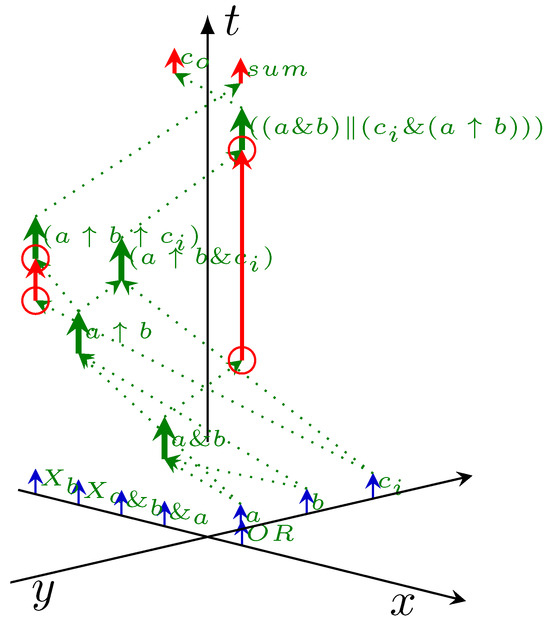

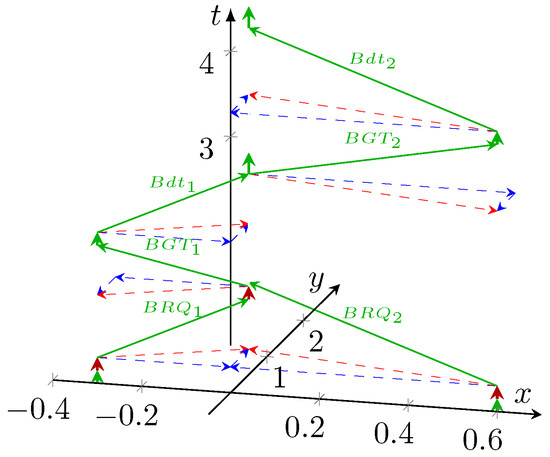

Figure 2 shows how omitting the transfer time (and, consequently, omitting the need for agreement between the temporal lengths of different logical paths) leads to hidden performance loss. The gates provide outputs for each other, so to start an activity, they must wait until all needed signals, including the results produced by their fellow gates, reach their input sections. In the figure, the vertical axis shows the times when the needed logical activities happen at the gates. The locations of input signals and operations are shown on different axes for visibility. The red vectors show where the adder circuit is waiting (a non-payload activity).

Figure 2.

Time–space diagram of a simple 1-bit adder, demonstrating how the payload times and operating times depend on the positioning of the gates. The times of the signals of the partial results and the arrival times of the operands (the ‘input sections’) at the places of the actual logical operations are shown on axes X and Y, respectively. The red arrows between circles indicate non-payload times.
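The waiting shown in Figure 2 can be reproduced qualitatively with a timing-only sketch of a 1-bit full adder built from two XOR gates, two AND gates, and one OR gate; this gate structure is an assumption, and the figure’s exact layout is not reproduced. The gate delay and the wire delays in `NETLIST` are invented values standing in for gate positions, and logic values are not computed, only when each gate can fire. A gate starts when its last input arrives, and the difference from its first input’s arrival is counted as non-payload waiting.

```python
GATE_DELAY = 1.0  # hypothetical processing time of every gate

# gate -> [(source, wire_delay), ...]; sources are primary inputs or earlier gates.
# Wire delays are invented stand-ins for the distances between gate positions.
NETLIST = {
    "x1": [("A", 0.5), ("B", 2.0)],     # x1 = A XOR B
    "a1": [("A", 0.5), ("B", 0.5)],     # a1 = A AND B
    "x2": [("x1", 0.5), ("Cin", 3.0)],  # Sum = x1 XOR Cin
    "a2": [("x1", 1.0), ("Cin", 0.5)],  # a2 = x1 AND Cin
    "o1": [("a1", 2.5), ("a2", 0.5)],   # Cout = a1 OR a2
}


def timing(netlist: dict, gate_delay: float = GATE_DELAY) -> tuple[dict, dict]:
    """Return (ready, waits): when each signal is available, and how long each
    gate's earliest input sits waiting for its latest one (non-payload time)."""
    ready = {"A": 0.0, "B": 0.0, "Cin": 0.0}  # primary inputs available at t = 0
    waits = {}
    for gate, inputs in netlist.items():  # NETLIST is listed in topological order
        arrivals = [ready[src] + wire for src, wire in inputs]
        start = max(arrivals)  # a gate can only operate once all inputs are present
        waits[gate] = start - min(arrivals)
        ready[gate] = start + gate_delay
    return ready, waits


ready, waits = timing(NETLIST)
print(ready["x2"], ready["o1"])  # 4.5 6.5  (Sum and Cout available)
print(sum(waits.values()))       # 7.0      (total non-payload waiting)
```

With these hypothetical delays, the gates spend 7.0 time units waiting in total, compared with 5.0 units of payload gate operation.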

One cannot successfully grasp and implement an idea from biology without understanding its context, underlying solutions, and requirements. Without understanding the inspiring object’s operation, implementing it with already developed, available materials, technology, components, and principles can show initial successes (mainly in “toy” systems). However, it enables neither the development of even tiny functionally equivalent parts of the brain nor that of biomorphic general-purpose computers, not to mention their computational efficiency. This is why the judges of the Gordon Bell Prize noticed that “surprisingly, [among the winners of the supercomputer competition] there have been no brain-inspired massively parallel specialized computers” [54]. Computing principles (including their fundamental theory) need rebooting [55,56] (a leap-like development) instead of incremental enhancement of some of their components and manufacturing technology.

We can only admire von Neumann’s ingenious prediction, “the language of the brain, not the language of mathematics” [36], given that most of the cited experimental evidence from neuroscience was unavailable in his time. We also agree with von Neumann and Sejnowski that “whatever the system [of the brain] is, it cannot fail to differ from what we consciously and explicitly consider mathematics” [40]. However, the appropriate mathematical methods may not yet have been invented.

2.6. Payload vs. Theoretical Efficiency

We define computing efficiency, based on the execution times, as the ratio of the ‘net computing’ time to the ‘total operating’ time from utilizing the computing machinery. The ‘net’ computing time is the period our ‘black box’ elementary computing unit needs after we make its needed arguments available through its input unit and generate the event ‘Begin computing’ until it produces its result in its output section by generating the event ‘End computing’. Since the data must be transferred from one unit’s output section to another’s input section, even if physically the same computing unit is used, chained computing inherently loses efficiency, given that transferring data has a finite (although large) speed. An operating time shorter than the clock period, a transfer time longer than the clock period, a delay in clock edges, or any other timing issue decreases payload efficiency. In contrast, the theoretical (theoretically possible) efficiency and raw computing power remain the same. When the operations follow each other without a break (i.e., the data transmission time can be omitted aside from the computing time, and all computing units receive in time the arguments they need to compute with), we have a 100% (or theoretical) efficiency. The payload efficiency depends on the system’s operating mode, construction, and workload [57].
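The definition above can be written compactly as follows, in a notation introduced here for illustration only: for a chain of n operations, T_net^(i) is the net computing time of the i-th operation, T_transfer^(i) is the time needed to deliver its operands, and T_wait^(i) is any remaining non-payload time (for example, the unused part of a clock period).

```latex
T_{\text{total}} = \sum_{i=1}^{n} \left( T^{(i)}_{\text{transfer}} + T^{(i)}_{\text{net}} + T^{(i)}_{\text{wait}} \right),
\qquad
\eta_{\text{payload}} = \frac{\sum_{i=1}^{n} T^{(i)}_{\text{net}}}{T_{\text{total}}} \le 1
```

The theoretical (100%) efficiency corresponds to vanishing transfer and waiting terms.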

Another way of defining efficiency is to determine the percentage of the available computing facilities used in the computation in the long run. Yet another way is to determine the percentage of power used directly for computation versus that used for indirect computing (organizing the work or performing non-payload computation), the total power, or the power needed for communication. Using a precise definition is important. When multiple-issue computing units are present but only some of them are used (say, by out-of-order execution), or their results become obsolete (say, by branch prediction), computing throughput (and power consumption) increases, but utilization of the available hardware decreases. Similarly, as we discuss in connection with deep learning (and other nonsensical feedback), performing obsolete and nonsensical operations reduces computing efficiency.

For the commonly used instruction-driven sequential systems, the computing time equals the total time only if the transfer time can be omitted and all computing units can be fully utilized. That was the case for the vacuum tube implementation with a single computing unit: the transfer time was in the μs region, while the operation time and memory access time were in the ms region [53] (note that when discussing the so-called ‘von Neumann bottleneck’ of the stored-program concept, one must contrast the time needed to wire the computing units manually with the time needed to load the program in advance and access memory for each instruction).
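As a worked example with illustrative orders of magnitude (about 1 ms of operation time and 1 µs of transfer time per operation, rather than measured values), the efficiency η, taken as processing time over processing-plus-transfer time, was close to its theoretical maximum:

```latex
\eta = \frac{T_{\text{proc}}}{T_{\text{proc}} + T_{\text{transfer}}}
     \approx \frac{1\,\text{ms}}{1\,\text{ms} + 1\,\mu\text{s}}
     = \frac{1000}{1001} \approx 0.999
```

whereas a transfer time comparable to the processing time (T_transfer ≈ T_proc) would give η ≈ 0.5 with the same formula.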

2.7. Instruction- and Data-Driven Modes

In a computation, the data, the instructions, and the method of matching them must be provided. Either the instruction finds its associated operand (instruction-driven control, usually implemented by fetching stored instructions by their addresses), or the data find their associated instruction (data-driven control, usually implemented by wiring). In data-driven operation, all data have their own, exclusively used, pre-wired data path, which allows a high degree of parallelism. The price paid, however, is that each computing unit is used only once. A unit always performs the same operation on the data it receives, i.e., it cannot be used for a different goal. The brain needs a hundred billion neurons (essentially instructions) because practically every elementary instruction needs another neuron. More precisely, it needs a combination of neurons, even if a higher-level organizational unit and its cyclic operation provide some possibility for reuse. This is why we use only a tiny fraction of our neurons [24] at any moment. The brain’s utilization (its payload computing efficiency) is very low. However, its energetic efficiency is high: when not computing, neurons consume minimal energy for maintenance [41]. In contrast, the power consumption of technical computing is enormous.
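The sketch below makes the data-driven mode concrete. The function `run_dataflow` and its dictionary-based graph format are hypothetical, introduced for illustration only. Each node stands for a dedicated, pre-wired unit that fires exactly once, when its last operand arrives, and no instruction pointer is involved.

```python
import operator
from collections import deque

def run_dataflow(graph, sources):
    """Fire each node once, as soon as all of its operands have arrived.

    graph: node -> (function, [input node names]); sources: node -> initial value.
    """
    values = dict(sources)
    consumers = {}   # producer -> nodes wired to its output
    pending = {}     # node -> number of operands still missing
    for node, (fn, ins) in graph.items():
        pending[node] = len(ins)
        for src in ins:
            consumers.setdefault(src, []).append(node)
    ready = deque(sources)
    fired = []
    while ready:
        produced = ready.popleft()
        for node in consumers.get(produced, []):
            pending[node] -= 1
            if pending[node] == 0:          # data find their 'instruction'
                fn, ins = graph[node]
                values[node] = fn(*(values[i] for i in ins))
                fired.append(node)
                ready.append(node)
    return values, fired

# (a + b) * (a - b): three dedicated units, each used exactly once
graph = {
    "sum": (operator.add, ["a", "b"]),
    "diff": (operator.sub, ["a", "b"]),
    "prod": (operator.mul, ["sum", "diff"]),
}
values, fired = run_dataflow(graph, {"a": 5, "b": 3})
print(values["prod"], fired)  # 16 ['sum', 'diff', 'prod']
```

In this sketch, "sum" and "diff" become ready at the same moment and could run in parallel. The cost is that computing a different expression requires a differently wired graph.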

Modern processors (mainly the many-core ones) comprise gates comparable in number to the neurons in the brain. Even so, the overwhelming majority of those gates only increase the computing speed of a single computing thread, and cyclic-sequential operation does not need much wiring. We could construct a data-controlled processor from the same number of gates, but it would be a purpose-built system: it would process all data in the same way and would need orders of magnitude more wiring. Technology cannot imitate the biological mechanism required for the brain’s dynamic operation. The main reasons are the amount of wiring involved and the lack of materials and constructions that take signal propagation time into account. We need to trade off the number of components and the wiring on the one side against the architecture’s complexity and the processor’s operating speed on the other. Because of this, there are significant differences in the higher-level behavior of technical and biological processors.

Technical computing is instruction-flow and clock-controlled. A complex cyclic-sequential processor with a fixed external and variable internal data path is used, which interprets the data arriving at its input section as an instruction. After decoding, it sets up its internal state and data paths. After this, the data control the operation of the processor: until the operation terminates, the processor works in a data-driven mode, and the data flow through the previously set data path.

A sequential processor executes one instruction at a time, which considerably limits parallelism (although actual implementations enable some ad hoc parallelization). The result goes into the output section, and the processor continues in an instruction-controlled regime. In the data-controlled mode, the data can modify the instruction pointer (for example, depending on the sign of the result), so the technical processor uses a combination of the two operating modes. In addition, interrupts implement a kind of event-driven control that enables multitasking. The temporary instruction-driven mode is needed to make the processor programmable, which is the idea behind the “stored program” concept. We pay a huge price for this universality: even relatively simple processors work inefficiently [58].
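The mixed operating mode described above can be sketched with a toy interpreter. The sketch assumes a made-up, purely hypothetical instruction set with four opcodes (`set`, `add`, `jpos`, `halt`), and interrupts are not modeled. Each step first fetches and decodes an instruction (the instruction-driven phase). The operands then flow through the selected operation (the data-driven phase). The `jpos` instruction shows how a data value can modify the instruction pointer.

```python
def run(program, regs=None, max_steps=10_000):
    """Toy fetch-decode-execute loop; returns final registers and step count."""
    regs = dict(regs or {})
    pc = 0                              # instruction pointer
    steps = 0
    while steps < max_steps:
        op, *args = program[pc]         # fetch and decode (instruction-driven)
        pc += 1
        steps += 1
        if op == "set":                 # execute (data flow through selected path)
            regs[args[0]] = args[1]
        elif op == "add":
            dst, a, b = args
            regs[dst] = regs[a] + regs[b]
        elif op == "jpos":              # the data modify the instruction pointer
            reg, target = args
            if regs[reg] > 0:
                pc = target
        elif op == "halt":
            return regs, steps
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit exceeded")

# acc = n + (n - 1) + ... + 1, reusing the same circuitry on every iteration
program = [
    ("set", "acc", 0),
    ("set", "minus1", -1),
    ("add", "acc", "acc", "n"),
    ("add", "n", "n", "minus1"),
    ("jpos", "n", 2),
    ("halt",),
]
regs, steps = run(program, {"n": 4})
print(regs["acc"], steps)  # 10 15
```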

The decoded instruction must refer to the data to be processed. Making the required data directly accessible to instructions (by wiring) would need unachievably dense wiring. Furthermore, it would not allow using different data (for example, different vector elements) during processing. For this reason, an instruction references its data through their address in a storage unit, which can be accessed through a particular wiring system (the bus); see Figure 3. However, the bus can be used by only one computing component at a time, which further limits parallel operation significantly. Using a bus also makes the arrival time of the data delivered over it unknown at design time. Reconfigurable systems are effective because they use a data path set in advance (instead of setting it after decoding) and use their data paths in parallel. They slow down when they need to access external data. However, it would be possible to use an intermediate (configware) layer [59,60] between the hardware and software layers.
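The following minimal sketch shows why bus-delivered data have arrival times that are unknown at design time. It assumes a single bus with first-come-first-served arbitration and a fixed per-transfer duration; both are simplifying assumptions, and `bus_arrivals` is a hypothetical name. Under these assumptions, the same unit's data arrive at different times depending on how many other units compete for the bus.

```python
def bus_arrivals(requests, t_bus):
    """Serialize transfers over one shared bus (first come, first served).

    requests: list of (unit_name, request_time); t_bus: duration of one transfer.
    Returns unit_name -> time at which its data arrive.
    """
    free_at = 0.0
    arrivals = {}
    # sorted() is stable, so simultaneous requests keep their list order
    for unit, t_req in sorted(requests, key=lambda r: r[1]):
        start = max(t_req, free_at)     # wait until the bus is released
        free_at = start + t_bus
        arrivals[unit] = free_at
    return arrivals

print(bus_arrivals([("C", 0.0)], 1.0))                          # C alone: 1.0
print(bus_arrivals([("A", 0.0), ("B", 0.0), ("C", 0.0)], 1.0))  # C waits: 3.0
```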

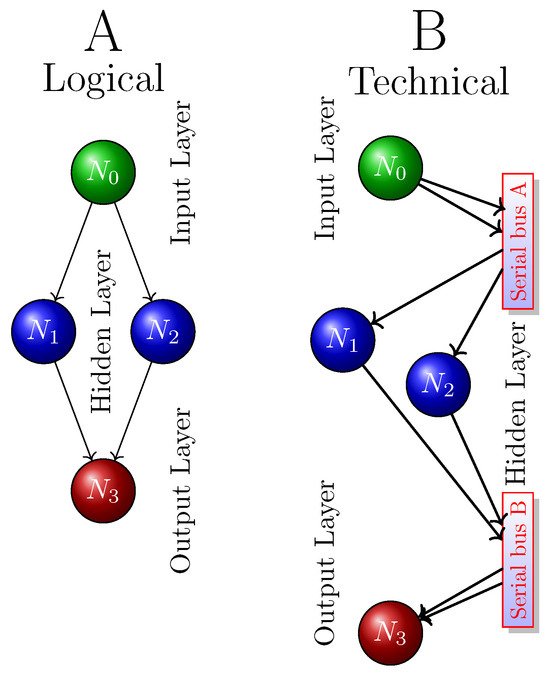

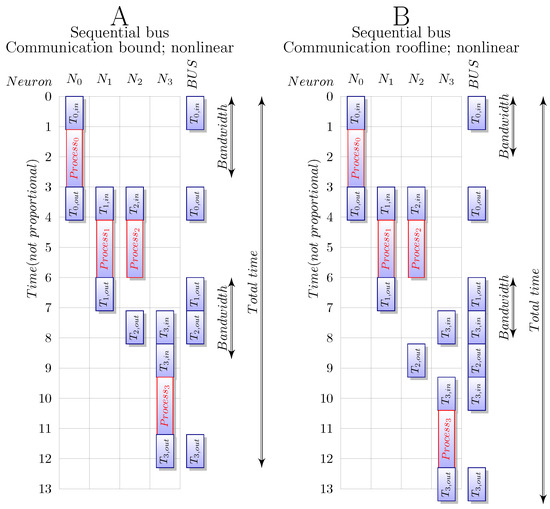

Figure 3.

The neuronal information transfer data paths. (A) Data path of the logical and biological transfer. (B) Data path of the technical transfer. In the technical implementation, data transfer is accomplished through a serial bus that drastically changes the timing relations and decreases computing efficiency.

2.8. Connecting Elemental Units

Real computations can only be implemented as chains of more elementary operations, independently of whether we use instruction-driven or data-driven operating modes. We must therefore connect the chained units and deliver signals from one unit’s output section to another unit’s input section. This holds even when the same physical unit is used for consecutive operations (after the corresponding organization and control, of course). Transferring signals requires time (the signal speed cannot be exceeded), and the information transfer capacity of the connections has theoretical limits [6,7]. One of the fundamental issues when considering the performance of computing systems is that chaining remains out of sight of theorists and technologists. Von Neumann’s model correctly included both time contributions. His goal was to describe the operation of their vacuum-tube construction mathematically, so he made the simplification that, in their case, the data transfer time can be neglected compared to the computing time. He warned that using “too fast processing units” (such as the ones technology produces today) vitiates his famous paradigm. He also warned that it was “unsound” to use his simplified paradigm for the timing relations of biological computing. Mainly due to miniaturization and increased wiring, the timing relations in modern technological designs are rapidly approaching those that von Neumann qualified as “unsound” conditions for applying (mathematical) computing theory.
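A short worked estimate makes the effect of this omission explicit. The notation is introduced here only for illustration: a chain of n operations, each with processing time t_p, separated by transfers of duration t_t, with η denoting the payload efficiency.

```latex
T_{\mathrm{total}} = n\,t_p + (n-1)\,t_t, \qquad
\eta = \frac{n\,t_p}{n\,t_p + (n-1)\,t_t}
\;\xrightarrow{\;n \to \infty\;}\; \frac{1}{1 + t_t/t_p}

\frac{t_t}{t_p} = 10^{-3} \;\Rightarrow\; \eta \approx 0.999, \qquad
\frac{t_t}{t_p} = 1 \;\Rightarrow\; \eta \approx 0.5
```

When transfer is three orders of magnitude faster than processing, neglecting it costs about 0.1%. When the two are comparable, half of the total time goes to transfer, and the simplification is no longer justified.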

2.9. Proper Sequencing

One of the fundamental requirements mentioned by von Neumann is that instructions must be executed with “proper sequencing”. This broadly interpreted notion enables the use of an instruction pointer, indirect addressing, subroutine calls, callbacks, and interrupts (including operating systems and time-sharing modes). Notice that the signals “Begin Computing” and “End Computing” also define the direction of time.

Von Neumann was aware that the temporal length of a computing operation matters compared to the transfer time between processing units, which is set by physics; a shorter processing time vitiates his omission. His discussion did not consider the quantum logic already known at that time [61], mainly because of its probabilistic outcomes. Quantum processors can solve only particular problems rather than enable the building of general-purpose computers. They run “toy algorithms” with low accuracy (50–90%). “The main challenge in quantum computing remains to find a technology that will scale up to thousands of qubits” [62]. Quantum computing has made essential advances in the past few years, but the number of qubits needed to build a practically useful computer can be estimated at 100,000. The technical difficulties make assembling a sufficiently large number of qubits challenging. Using the quantum Fourier transform, one can also imitate classical computing circuits [63]. However, another principal problem with using quantum computing to build general-purpose processors is synchronization: the ‘Begin computing’, ‘End computing’, and ‘Operand available’ signals, as well as the definition of the direction of time. These issues remain outside the scope of this review.

As discussed in [28,64], one needs three-state systems to describe computing adequately. In two-state digital electronic logic systems, rising and falling clock edges and the ‘hold’ time define the direction of time. However, it is questionable whether such a third state is available among quantum states. “Building such machines is decades away” [62]. This is not only because of technical issues but also because such machines do not fulfill the “proper sequencing” requirement.

2.10. Looping Circuits

Computing theory assumes simple linear circuits. After appropriate organization, one can use some computing units repeatedly, provided that the timing relations are not violated. Simple single-thread processors reuse the same computing circuitry for every instruction. The price paid is that a stored instruction must be fetched and the internal multiplexed machinery reorganized for each instruction. Given that the execution time of every elementary instruction must be shorter than a clock period, this does not create additional problems. Notice that such a solution only makes sense in the instruction-controlled mode: the processor uses an internally changed data path plus instruction-selected external data paths.

3. Technical Computing

In his classic paradigm, von Neumann assumed that the computer would initially be implemented using (the timing relations of) vacuum tubes. He also assumed that the computer would perform a large number of computing operations on a small amount of data and provide limited results. The development of electronic technology quickly made the assumed timing relations obsolete, and the way computers are used today is far outside their original range of applicability. By their inventor’s own criteria, today’s processors are implemented with a different (differently timed) technology that makes using the classic paradigm unsound. Inexpensive computers are used for goals other than computing, and the functions those goals need (like synchronization and loop organization, among others) are emulated through computing. This usage wastes resources. A processor must connect to the surrounding world through I/O instructions, and it must imitate multi-threading (using memory operations) on a single-thread processor. Moreover, it has an inflexible architecture and runs sequential processes. A processor can hardly keep track of and take control of processes occurring in real time.

He made clear that using “too fast” vacuum tubes (or other elementary processing switches) vitiates his simplified paradigm, which forms the basis of today’s computing science. His famous omission of the transfer time relative to the processing time in chained computing operations was valid only for [the timing relations of] vacuum tubes. In this sense, computing science is a firm theoretical basis for an abstract processor but cannot describe a technically (or biologically) implemented processor. In the late 1970s, R. P. Feynman called attention [65] to the idea that computing belongs to the engineering field. He also pointed out that the behavior of a technical processor tends to deviate strongly from the behavior of the abstract processor that computing science can describe.

Von Neumann suggested that his simplified computing paradigm should be revisited when the implementation technology changes, but this has never been done. The relevance and plausibility of neglecting the transfer time have never been questioned. This is so even though, in the brain operation that inspired von Neumann, the non-negligible conduction (transfer) time was evident from the beginning. However, mathematics, as well as electronic circuit design and, consequently, technological computing, assume instant interaction. Brain simulation, by contrast, describes the “spatiotemporal” behavior using separate spatial and temporal variables.

We revisited and generalized [21] von Neumann’s paradigm, making it technology-independent. The proposed approach interprets the idea of computing in von Neumann’s spirit (keeping his correct model). Consequently, it considers that any implementation converts logical dependence into temporal dependence [19]. Formally, our handling only extends the concept of logical functions (the factual basis of computer science) so that they also depend on where and when they are evaluated. In this way, computer science and the underlying technology are brought into agreement with the modern state of science and technology.
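The following minimal sketch shows logical dependence converted into temporal dependence. It rests on three assumptions. Each signal carries its value, the time it became valid, and the position of the unit that produced it. Signals travel at a constant speed v, assumed here to be 2e8 m/s. A unit begins computing when its last operand arrives. The `Signal` type and the `evaluate` function are hypothetical names introduced only for this illustration.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class Signal:
    value: bool
    time: float                      # when the value became valid at its origin (s)
    position: tuple[float, float]    # location of the producing unit (m)

def evaluate(fn, inputs, position, t_proc, v=2e8):
    """Evaluate a logic function where and when its operands actually arrive."""
    # Transfer time = distance / assumed signal speed v (m/s)
    arrivals = [s.time + math.dist(s.position, position) / v for s in inputs]
    begin = max(arrivals)            # 'Begin computing': the last operand has arrived
    end = begin + t_proc             # 'End computing'
    return Signal(fn(*(s.value for s in inputs)), end, position)

a = Signal(True, 0.0, (0.0, 0.0))    # produced locally
b = Signal(True, 0.0, (2.0, 0.0))    # produced 2 m away
out = evaluate(lambda x, y: x and y, [a, b], (0.0, 0.0), t_proc=1e-9)
print(out.value, out.time)           # True, about 1.1e-8 s
```

The logical result is the same as that of an instantaneous AND. However, the time at which it exists depends on where the operands came from, and here the 10 ns transfer of the remote operand dominates the 1 ns processing.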

Introducing time awareness into (electronic and neurobiological) computing is as revolutionary as introducing it into classical science was more than a century ago, when a series of new scientific disciplines were born. In parallel with science, introducing time into computing science leads to the birth of “modern computing”. Modern computing can explain the issues that “classic computing” cannot, from the increased dissipation of processors through the inefficiency of large-scale computing systems to the nonlinear behavior of algorithms running on them. This is in complete analogy with the theories of relativistic and quantum physics proposed several decades ago. Under the current circumstances, the temporal behavior of all components (all actors on the computing scene) must be considered, whether in geographically large systems, inside miniaturized processor chips, or within biological and artificial neurons.

Computing technology is based on elements that have changed drastically during technical development. The precise principles in von Neumann’s fundamental work [53], derived from the timing relations of vacuum tubes, are no longer valid for today’s technology and materials. However, those out-of-date omissions still underlie technical designs.

3.1. Cost Function

Initially, manufacturing transistors was expensive, so designers minimized the number of transistors in their designs. Around 2006, the primary cost function changed [66]: “Old [conventional wisdom]: Power is free, but transistors are expensive. New [conventional wisdom] is [that] power is expensive, but transistors are free”. Today, one can produce a transistor “for the price of sand”, and the components’ features (memory size, memory cell word length, processor word length, processor data path length, etc.) have been enormously enhanced. The need for enhancing design was recognized, but there was no investment in redesigning the fundamental circuits. “As we enter the dark silicon era, the benefits from transistor scaling are diminishing, and the current paradigm of processor design significantly falls short of the traditional cadence of performance improvements due to power limitations. These shortcomings can drastically curtail the industry’s ability to continuously deliver new capabilities” [67].

Technology and circuit design were developed in isolation: new designs introduced new Intellectual Property (IP) blocks while keeping the already tested and validated IPs unchanged. Circuits such as adders were designed to contain a minimum number of gates instead of paths of identical temporal length for producing the result and the carry. As we discussed in [21], the increased word length increased the number of unwanted flops, i.e., the non-payload power consumption of processors. Investment in the processors themselves is a minor portion of the budget. The central part goes to providing power (for supercomputers, dedicated power stations) and cooling (river water), so it is time to change the cost function of building computing systems, including processors.

3.1.1. Thermal Limit

Only a fraction of the cores (or, equivalently, all cores at a fraction of the nominal clock frequency) could be used, and the working cores were operating at their thermal limit. As a result, some cores were used only to “pad” the working cores [68,69]. Recently, at high processor densities (such as in supercomputer nodes), the processors’ working clock frequency cannot be set to its allowed maximum value. Intense heat production requires intense cooling, which requires space between processors, increasing the data path length and decreasing efficiency and performance. The issues of the thermal limit cannot be separated from the issues of increasing processor word length, using a central clock, the efficiency of power consumption, and the physical limitations [70].

3.1.2. Word Length

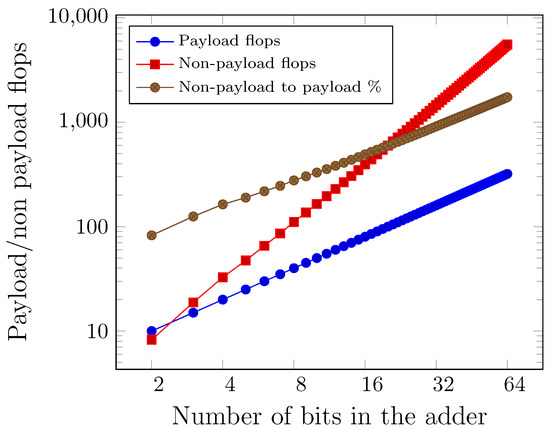

As the size of computing tasks grew, so did the address space and the size of the elementary operand. The increased operand size, combined with the failure to redesign the fundamental circuits, led to a drastic increase in power consumption. Figure 4 shows the consequence of the wrong cost function, which minimizes the number of transistors in the circuit instead of the difference between the signals’ arrival times. Non-payload flops are present, and their number and ratio increase exponentially with the number of bits in the argument.

Figure 4.

Comparing the numbers of payload and non-payload flops as a function of the number of bits in the argument. Given that the cost function minimizes the number of gates instead of the difference in signal arrival times, the proportion of non-payload flops increases disproportionately. Because of that, the longer the word length of the operands, the more power is wasted on heating instead of computing.
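The mechanism behind non-payload flops can be illustrated with a deliberately simplified model. The model is an assumption and not the one used to produce Figure 4. It describes a ripple-carry adder in which each carry stage has a unit delay, while sum bits respond immediately to their current carry. Because the carry paths have unequal lengths, sum bits toggle on intermediate values before settling. All function names are hypothetical.

```python
def majority(x, y, z):
    return (x & y) | (x & z) | (y & z)

def bits(value, n):
    return [(value >> i) & 1 for i in range(n)]

def settled_carries(a, b, n):
    c = [0] * (n + 1)
    for i in range(n):
        c[i + 1] = majority(a[i], b[i], c[i])
    return c

def sum_bits(a, b, c, n):
    return [a[i] ^ b[i] ^ c[i] for i in range(n)]

def count_flops(old, new, n):
    """Return (payload, non_payload) sum-bit transitions when operands change old -> new."""
    a0, b0 = bits(old[0], n), bits(old[1], n)
    a1, b1 = bits(new[0], n), bits(new[1], n)
    carries = settled_carries(a0, b0, n)       # circuit starts settled on old operands
    start = sums = sum_bits(a0, b0, carries, n)
    total = 0
    while True:
        new_sums = sum_bits(a1, b1, carries, n)
        total += sum(x != y for x, y in zip(sums, new_sums))
        sums = new_sums
        # each carry stage reacts one time unit later (unequal path lengths)
        next_c = [0] + [majority(a1[i], b1[i], carries[i]) for i in range(n)]
        if next_c == carries:
            break
        carries = next_c
    payload = sum(x != y for x, y in zip(start, sums))  # bits that really changed
    return payload, total - payload

for n in (4, 8, 16):
    print(n, count_flops((0, 0), (2**n - 1, 1), n))
# 4 (0, 6)
# 8 (0, 14)
# 16 (0, 30)
```

For this particular input pair, the old and new n-bit results are both zero, so all 2(n - 1) transitions are non-payload, and their count grows with the word length in this toy model.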

3.1.3. Wrong Execution Time

Von Neumann made clear that using “too fast” vacuum tubes (or other elementary processing switches) vitiates his simplified paradigm, which forms the basis of today’s computing science. His famous omission of the transfer time relative to the processing time in chained computing operations was valid only for [the timing relations of] vacuum tubes. The growing complexity of processor chips requires denser wiring, which lengthens the internal data delivery and transfer paths.

From the beginning of computing, the computing paradigm itself, “the implicit hardware/software contract [66]”, has defined how mathematics-based theory and its science-based implementation must cooperate. Mathematics, however, considers only logical dependencies between its operands; it assumes that its operands are instantly available. Even publications in the most prestigious journals [34,71,72] overlook the need to introduce temporal behavior into computing. The “contract” forces us to develop engineering solutions that imitate non-temporal behavior. Although experience shows the opposite, processors are built on the assumption that signal transfer time is negligible compared to processing time. To a good approximation, that assumption is valid only for (the timing relations of) vacuum tubes. Clock domains and clock distribution trees have been introduced to mask this fact. Given that the total execution time comprises data transfer and processing times, it is not reasonable to fabricate smaller components without proportionally decreasing their processing time.

3.1.4. Central Clock Signal

Von Neumann suggested using a central clock signal only for the initial designs and only for the timing relations of vacuum tubes. His suggestion is valid for the well-defined dispersionless synaptic delay he assumed, where the transfer time can be neglected compared to the processing time and a central synchronization clock is used. It is not at all valid for today’s processors.

Because von Neumann considered the intended vacuum tube implementation, he proposed neglecting the transfer time relative to the processing time to simplify the model. Consequently, and only for the vacuum tube implementation (slow processors), he proposed using a central clock signal. The activity of considering asynchronous operating modes [11,34,48,73] is motivated by the admission that the present synchronized operating mode is disadvantageous in a non-dispersionless world. For today’s technology (the case of “too fast” processors, as von Neumann put it), using a central clock signal cannot be justified.

3.1.5. Dispersion

Synchronization inherently introduces performance loss: the processing elements remain idle until the next clock pulse arrives. It was correctly noticed [74] that “in the current architecture where data moves between the physically separated processor and memory, latency is unavoidable”. In single-processor systems, latency is the primary reason for low efficiency. The effect grows as the system’s physical size grows or as the processing time decreases relative to the transfer time.
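Below is a minimal sketch of the idle time introduced by synchronization. It assumes that every operation may start only on a clock edge, so an operation of duration d occupies ceil(d / T) clock periods of length T. Transfer time is ignored here to isolate the clocking effect, and `clocked_efficiency` is a hypothetical name.

```python
import math

def clocked_efficiency(durations, period):
    """Fraction of clocked time in which units actually compute."""
    busy = sum(durations)
    # each operation holds its unit until the next clock edge after it finishes
    occupied = sum(math.ceil(d / period) * period for d in durations)
    return busy / occupied

print(clocked_efficiency([1.0, 1.0], 1.0))       # 1.0: durations match the clock
print(clocked_efficiency([0.6, 1.0, 1.2], 1.0))  # about 0.7: idle until next edge
```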

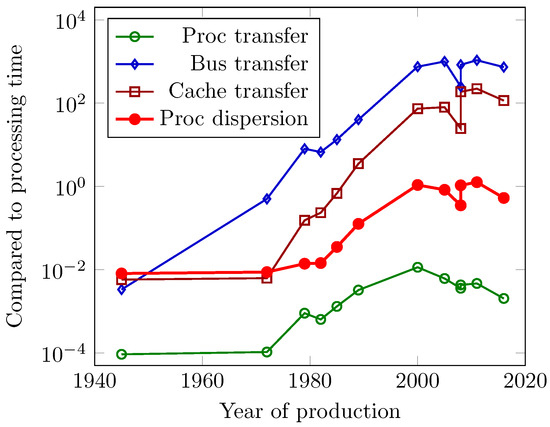

This difference in arrival times is why von Neumann emphasized: “The emphasis is on the exclusion of a dispersion” [53]. Von Neumann’s statement is valid for the well-defined dispersionless synaptic delay he assumed, but not at all for today’s processors, and even less for physically larger computing systems. Consider a 300 m² computer room and the estimated 3000 vacuum tubes [75]. Under these conditions, von Neumann considered a distance between vacuum tubes of about 30 cm (1 ns) as a critical value. The best case is transferring a signal between neighboring vacuum tubes; the worst case is transferring a signal to the other end of the computer room. At this distance, the transfer time is about three orders of magnitude lower than the processing time (between the “steps” he mentioned). With our definition, the dispersion of EDVAC is (at or below) 1%. For modern processors, we estimate the distance between the processing elements in two ways. First, we calculate the square root of the processor area divided by the number of transistors. This gives the transistors a kind of “average distance”, which we consider the minimum distance signals must travel between neighboring transistors. (Notice that this transfer time also increases drastically with the number of transistors but alone does not vitiate the classic paradigm.) This value is depicted as “Proc transfer” in Figure 5. Second, we take the maximum distance between the two farthest processing elements on the chip to be the square root of the processor area. Introducing clock domains and multi-core processors clearly blurs the picture; however, we cannot estimate more accurately without proprietary technological data.

Figure 5.

The history of dispersion of processors as a function of their year of production. Omitting the transfer time from computing theory led to vastly increased dispersion as the technology developed. The dispersion was at the level von Neumann considered as tolerable in the early 1980s.

As the “Proc dispersion” diagram line shows, dispersion is near unity in today’s technology, so we can no longer apply the “dispersionless” classic paradigm. Reaching the plateau of the diagram lines coincides with the introduction of the “explicitly parallel instruction set computer” [76], the maximum that the classic paradigm enabled. On the one hand, this means that today electric power is almost entirely wasted because of the wrong theoretical basis. On the other hand, it means that the operating regime of today’s processors is closer to that of the brain than to the operating principle abstracted in the classic paradigm. In the brain, explicit “spatiotemporal” behavior is considered, and it is “unsound” to use the classic paradigm to describe it.

Von Neumann used the word “dispersion” only in a broad (and purely mathematical) sense. We therefore quantitatively define a figure of merit for dispersion (the idea can be inferred from his famous fundamental report), using technical data from the given implementation. We provide a “best-case” and a “worst-case” estimate of the transfer time. The dispersion is then defined as the geometric mean of the minimum and maximum “Proc transfer” times divided by the processing time. This rough idea should be refined, and the dispersion should be derived from proprietary technological and topological data.
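The dispersion estimate described above can be written as a short function. The formula follows the text: it takes the geometric mean of the minimum and maximum transfer times, with distances sqrt(A/N) and sqrt(A), and divides it by the processing time. The default signal speed of 2e8 m/s and the example chip parameters are assumptions chosen only for illustration. They are not the data behind Figure 5, and `dispersion` is a hypothetical name.

```python
import math

def dispersion(area_m2, n_elements, t_proc_s, v_signal=2e8):
    """Dispersion merit: geometric mean of best/worst transfer times over processing time.

    area_m2: chip area; n_elements: number of transistors (processing elements);
    t_proc_s: processing time; v_signal: assumed signal propagation speed (m/s).
    """
    d_min = math.sqrt(area_m2 / n_elements)   # 'average distance' between neighbors
    d_max = math.sqrt(area_m2)                # distance across the whole chip
    t_min = d_min / v_signal                  # best-case transfer time
    t_max = d_max / v_signal                  # worst-case transfer time
    return math.sqrt(t_min * t_max) / t_proc_s

# Hypothetical chip: 1 cm^2, 1e8 transistors, 1 ps processing time
print(dispersion(1e-4, 1e8, 1e-12))  # about 0.5
```

For this hypothetical chip, the transfer time is comparable to the processing time. By the criterion above, such a chip is far from the dispersionless regime.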

Processing elements and storage elements are usually fabricated as separate technological blocks and are connected by wires (aka the bus). (This technical solution is misinterpreted as “the von Neumann architecture”, confusing structure with architecture.) For this reason, we also estimated a “bus transfer” time. Memory access is thus extended by the bus transfer time, a situation that motivates the efforts toward “in-memory computing”. Because of this effect, we assumed that the cache memory is positioned at a distance of half the processor size; this time is shown as the “Cache transfer” time. Cache memories appeared at the end of the 1980s, when it became evident that the bus transfer disproportionately increases the memory transfer time. (Cache transfer times can, however, also be calculated for processors without cache memory.)

Given that EDVAC and the Intel 8008 have about the same number of processing elements, their relative processor and cache transfer times are of the same order of magnitude. However, the importance of bus transfer time grew, and it started to dominate single-processor performance in personal computers. A decade later, the physical size of the bus necessitated the introduction of cache memories. The physical size led to saturation in all relative transfer times. The real cause of the “end of the Moore age” is that Moore’s observation does not hold for the bus’s physical size. The slight decrease in dispersion in recent years can probably be attributed to the sensitivity of our calculation method to the spread of multicores, which suggests repeating our analysis with proprietary technological data.

3.1.6. Generating Square Waves

Digital technology needs square waves. The sooner a signal reaches (90% of) its amplitude, the better; however, the faster the rise, the more energy it needs. Action potentials resemble a damped oscillation with . The damping means not only speed but also energy consumption. Being digital also means losing the option of having analog memory due to damping.

3.1.7. Resource Utilization

The internal circuits for performing elementary operations can be subdivided into sections (performing “more elementary” operations) that provide inputs and outputs for each other. In this way, they can be organized to work simultaneously on executing different instructions. Pipelining needs interfaces between those sub-operations, which considerably increases the need for moving and temporarily storing data and requires extra organization. Furthermore, pipelines were designed for sequential operation, and non-sequential operations (such as interrupts, multithreading, and conditional jumps) kill the pipeline. The idea dates back to the times when “transistors were expensive”.
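The cost of killing the pipeline can be sketched with the usual idealized cycle count for a k-stage pipeline. The count assumes that one instruction enters per cycle when nothing goes wrong. It further assumes that each flush (an interrupt, a thread switch, or a mispredicted jump) discards the k - 1 instructions in flight and costs k - 1 refill cycles. This is a textbook simplification, and `pipeline_cycles` is a hypothetical name.

```python
def pipeline_cycles(stages, instructions, flushes=0):
    """Cycles to complete `instructions` on an idealized `stages`-deep pipeline."""
    if instructions == 0:
        return 0
    fill = stages - 1                   # cycles before the first result emerges
    return fill + instructions + flushes * (stages - 1)

for flushes in (0, 10):
    cycles = pipeline_cycles(5, 100, flushes)
    print(flushes, cycles, 100 / cycles)  # flushes, cycles, instructions per cycle
```

With five stages and 100 instructions, the count is 104 cycles without interruption. Ten flushes raise it to 144 cycles, so the throughput drops by roughly a quarter without any change in the hardware.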

To increase single-processor performance, an apparently good idea is to use multiple-issue computing sub-units. Designs such as Graphics Processing Units (GPUs), multiple arithmetic units, conditional jumps, and out-of-order execution fall into this class. However, they cannot be utilized at their full power. Benchmarks show genuine results only when all issue slots have operands to work with. It is the end user’s responsibility to organize the workload to maximize the available computing power, if possible.
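The following minimal sketch shows why multiple-issue units rarely reach their rated power. It assumes a W-wide unit that can issue at most W operations per cycle, and only as many as have their operands ready. The per-cycle ready counts below are invented for illustration, and `issue_utilization` is a hypothetical name.

```python
def issue_utilization(ready_per_cycle, width):
    """Fraction of issue slots filled, given how many operations are ready each cycle."""
    issued = sum(min(ready, width) for ready in ready_per_cycle)
    return issued / (width * len(ready_per_cycle))

print(issue_utilization([4, 4, 4, 4], 4))  # 1.0: every slot has operands (benchmark case)
print(issue_utilization([1, 2, 0, 3], 4))  # 0.375: operands are scarce
```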

3.2. Hardware/Software Cooperation

Computing was, for decades, a sound success story with exponential growth. For a long time, the provided computing performance was aligned with the computing demand. About two decades ago, however, the Computer Science and Telecommunications Board (CSTB) of the US National Academy of Sciences reported that “Growth in single-processor performance has stalled—or at best is being increased only marginally over time” [77]. Experience showed that wiring (and its related transfer time) tended to carry increasing weight [70] in the timing budget, even within the core. When the technology reached the limit of around 200 nm, wiring started to dominate (compare this date to the year when the dispersion diagram line reached its saturation level in Figure 5). Further miniaturization can enhance computing performance only marginally, and it increases the number of issues as the limiting interaction speed is approached. Since the computing industry heavily influences virtually all industrial segments, the stalling upset long-term predictions in industry, the military, research, the economy, etc. The shortage of integrated circuits first became visible in the early 2020s. Recently, the proliferation of Artificial Intelligence (AI) systems has caused a shortage of computing power itself. The demand for computing keeps growing and shows an unsustainable increase; see Figure 1 in [35]. The increase in computing power is far from linear and divides into “ages”, each marked by the beginning of the use of some technology. Until 2012, computing power demand doubled every 24 months; recently, this period has shortened to approximately two months. Mainly due to the wide proliferation of AI, this trend is continuing.
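The two doubling periods quoted above translate into annual growth factors via 2^(12/T) for a doubling time of T months. The short sketch below, with the hypothetical helper `annual_growth_factor`, does only this arithmetic.

```python
def annual_growth_factor(doubling_months):
    """Growth per year implied by a doubling time given in months."""
    return 2 ** (12 / doubling_months)

print(annual_growth_factor(24))  # about 1.41x per year (doubling every 24 months)
print(annual_growth_factor(2))   # 64.0x per year (doubling every two months)
```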

Computing, commonly called the “computing stack”, is implemented in many layers with many interfaces between them. Executing software instructions is just another way of using electronic gates. We also introduce different layers within the software layer, such as the application, the Operating System (OS), networking, and buffers, each with its separate handling. Using interfaces between them simplifies thinking and makes development less expensive, but it reduces efficiency, mainly due to the need for moving data.

3.2.1. Single-Thread View

In the original idea, safety and comfort were not present, so it was left to the software layer to provide them, although the hardware created some tools. The operating system attempts to map the abstract mathematical operation to the hardware. On the one hand, it provides the hardware with the illusion that only one process runs on it, and the software with the illusion that it has exclusive access to the hardware. However, at critical points, the hardware disappears for all software processes, and ‘foreign’ instructions are executed by the OS. It was noticed early [78] that “operating systems are not getting as fast as hardware”. Too much functionality remains for the software (SW); even typical hardware (HW) tasks such as priority handling [79,80] remain for the SW.

The elementary size of executable instructions is too small. When several different tasks (and threads) share the processor, switching between them and accessing the processor’s critical resources need a forceful intervention (context switching). Context switching is very expensive in terms of executed instructions. About 20,000 machine instructions must be executed [45,46] to use the functionality of the OS, execute an Input/Output (I/O) instruction, or share resources (mutex).
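The sketch below shows what a cost of about 20,000 instructions per context switch means in practice. It computes the fraction of executed instructions that are payload, assuming a fixed number of payload instructions between consecutive switches. The workload numbers are hypothetical, and `useful_fraction` is an illustrative name.

```python
SWITCH_COST = 20_000  # approximate instructions per context switch, as quoted in the text

def useful_fraction(payload_per_switch, switch_cost=SWITCH_COST):
    """Share of executed instructions that do payload work."""
    return payload_per_switch / (payload_per_switch + switch_cost)

for payload in (1_000, 20_000, 100_000):
    print(payload, round(useful_fraction(payload), 3))
```

A task that runs fewer than 20,000 payload instructions between OS calls, I/O operations, or lock acquisitions spends more than half of its instructions on switching.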

In 1968, G. M. Amdahl questioned [81] the “validity of the single processor approach to achieving large scale computing capabilities”. Even today, however, processors are optimized (and benchmarked) for single-thread processing, although they are used under OSs and in many-thread environments. When switching threads (and even when calling subroutines), a new processor is logically needed. The OS or the application must therefore save and restore (at least part of) the processor’s many registers using time-expensive memory operations. Instruction sets with ‘registers only’ instructions attempt to reduce unneeded memory access. An alternative mode of using many-core processors could provide significant help [59,60]. It would build on the ‘processors are free’ idea and a technical solution similar to the “big.LITTLE technology” [82]. As was demonstrated [83], cooperating processors significantly increase the efficiency of heavily communicating systems.

Unfortunately, the original paradigm did not address I/O operations, and over time those operations were made accessible through the OS. In the age of ‘Big Data’, the ‘Internet of Things’, and graphical OSs, I/O operations can represent a significant share of the executed instructions, given that their number must be weighted with the needed context changes.

3.2.2. Communication

Attempting to construct an artificial system of neurons whose features even approximately match those that nature constructed is hopeless. “In the terminology of communication theory and information theory, [a neuron] is a multiaccess, partially degraded broadcast channel that performs computations on data received at thousands of input terminals and transmits information to thousands of output terminals employing a time-continuous version of pulse position. Moreover, [a neuron] engages in an extreme form of network coding; it does not store or forward the information it receives but rather fastidiously computes a certain functional of the union of all its input spike trains, which it then conveys to a multiplicity of select recipients” [49]. “A single neuron may receive inputs from up to 15,000–20,000 neurons and may transmit a signal to 40,000–60,000 other neurons” [84].

3.2.3. Wiring

Computing science has established itself on von Neumann’s simplified paradigm. Instead of working out a mathematical formalism for modern (and future) technological implementations, it quietly introduced an “empirical efficiency” for different computing systems. It did not recognize theoretically that efficiency is inherent in computing, nor did it explain many shocking experiences. Among these are that the absolute performance decreases when the number of processors in parallel processing systems increases [85,86], that the efficiency of a computing system depends on its workload [57], that the number of artificial neurons in an Artificial Neural Network (ANN) that contribute to the resulting performance is limited [44,87], that a vast system needing heavy communication can collapse [15], and that the need for communication restricts the usable HW to 1% of the available HW [14].
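
A toy model shows how the first of these experiences can arise. This model is assumed here for illustration only; it is not the formalism the text calls for. The runtime on n processors is taken to be a serial part, a perfectly divisible parallel part, and a communication cost that grows linearly with n. The parameter values are hypothetical.

```python
# Toy model (an assumption for illustration): runtime on n processors is a serial
# part, a perfectly divisible parallel part, and a communication/organization cost
# that grows linearly with the number of processors.
def runtime(n: int, serial: float, parallel: float, comm: float) -> float:
    return serial + parallel / n + comm * (n - 1)


def speedup(n: int, serial: float, parallel: float, comm: float) -> float:
    return runtime(1, serial, parallel, comm) / runtime(n, serial, parallel, comm)


serial, parallel, comm = 1.0, 99.0, 0.01  # hypothetical parameters (arbitrary time units)
best_n = max(range(1, 1001), key=lambda n: speedup(n, serial, parallel, comm))
print("best processor count:", best_n, "speedup:", round(speedup(best_n, serial, parallel, comm), 1))
print("speedup with 1000 processors:", round(speedup(1000, serial, parallel, comm), 1))
```

Without the communication term (`comm = 0`), the speedup only saturates, as in Amdahl’s law. With the term, adding processors beyond roughly sqrt(parallel / comm) makes the absolute performance decrease.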

Unexpectedly, this omission has far-reaching consequences and affects the thinking of HW designers. Claims can be found such as that “supercomputers, which, unlike the brain, physically separate core memory and processing units. This slows them down and substantially increases their energy consumption”. Furthermore, “The brain as a physical substrate of computing is also fundamentally different from general-purpose computers based on digital circuits. It is based on biological entities such as synapses and neurons instead of memory blocks and transistors” [34]. Neither sentence mentions the role of the connections needed between those units. However, in biology, the data transmission time is about two orders of magnitude longer than the processing time. In technical systems, the ratio is approaching a similar value as the system size grows. The slowdown is not the consequence of separating the memory and the processor; it is the direct consequence of omitting the data transmission time. The observed nonlinearity also originates from this omission. The total execution time is the sum of the operating time and the transfer time, and the proportion of the latter (omitted) time increases as technology advances.
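
The growing weight of the transfer time can be illustrated numerically. This is a minimal sketch. It assumes, purely for illustration, that processing time halves with every technology generation while the transfer time stays constant; both starting values are hypothetical.

```python
def transfer_share(processing_time: float, transfer_time: float) -> float:
    """Fraction of the total execution time spent on data transfer."""
    return transfer_time / (processing_time + transfer_time)


PROCESSING_0 = 100.0  # hypothetical processing time in generation 0 (arbitrary units)
TRANSFER = 1.0        # hypothetical transfer time, assumed not to improve with technology

shares = []
for generation in range(11):
    processing = PROCESSING_0 / 2**generation  # assumed: processing time halves per generation
    total = processing + TRANSFER
    share = transfer_share(processing, TRANSFER)
    shares.append(share)
    print(f"gen {generation:2d}: total time {total:7.3f}, transfer share {share:.3f}")
```

The total time does not halve with each generation: it approaches the transfer time. For a transfer time about 100 times the processing time, as stated above for biology, `transfer_share(1.0, 100.0)` is about 0.99.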

The chain’s first operating unit receives the input data in both modes, and the last unit provides the result. More sophisticated and lengthy computations require many elementary computing objects. Alternatively, with an appropriate organization, some units can be re-used. The first control option wastes computing components: it enables considerable parallelization for short instruction sequences, and its operation needs almost no organization. The second option requires an organizer unit. It enables only minimal parallelization (it presumes that the instructions use some computing units exclusively), but it allows unlimited operating time and many computing operations. Both control modes require timing alignment for correct operation.

The data-driven operating mode allows for a high degree of parallelism, given that all data have their own exclusively used data path. The price paid is that the individual computing units are used only once, and a unit always performs the same operation on the data it receives. The brain needs a hundred billion neurons (essentially instructions) because practically every elementary operation needs another neuron (even if a higher-level organizational unit provides some possibility of re-use). As a consequence, we use only a tiny fraction of our neurons at any moment [24].
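
The trade-off between the two control modes can be made concrete with a sum of n inputs, for instance synaptic inputs. This is a minimal sketch. It assumes that every addition takes one time step and that the data-driven mode arranges its single-use adders as a balanced binary tree. The function names are illustrative only.

```python
def dedicated_mode(n_inputs: int) -> tuple[int, int]:
    """Data-driven mode: each addition has its own unit, used exactly once,
    arranged as a balanced binary tree. Returns (units, time_steps)."""
    units = n_inputs - 1
    depth = (n_inputs - 1).bit_length()  # equals ceil(log2(n_inputs)) for n_inputs >= 1
    return units, depth


def reused_mode(n_inputs: int) -> tuple[int, int]:
    """Organized mode: a single unit is re-used for every addition, one per step.
    Returns (units, time_steps)."""
    return 1, n_inputs - 1


for n in (16, 1_024, 20_000):
    print(n, "dedicated:", dedicated_mode(n), "re-used:", reused_mode(n))
```

Consider 20,000 inputs, the upper fan-in quoted earlier for a single neuron. The data-driven mode needs 19,999 single-use adders but only 15 steps. The re-used unit needs 19,999 steps.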

Although the number of neurons in the brain is comparable to the number of gates in modern processors (mainly the many-core ones), the overwhelming majority of those gates only increase the computing speed of a single computing thread, and cyclic–sequential operation does not require much wiring. We could construct a data-controlled processor from the same number of gates, but it would be a purpose-built system. It would process all data similarly and require orders of magnitude more wiring (see, in some sense, attempts to build AI systems with over a hundred billion [88]). The needed amount of wiring exceeds technological possibilities. Given that the paradigm does not consider the signal propagation time, technology cannot imitate the biological mechanism required for the brain’s dynamic operation. We must trade off the wiring and the number of components, on the one side, against the processor’s operating speed and the architecture’s complexity, on the other. Because of this, there are significant differences between the elementary operations and the higher-level behavior of technical and biological processors.

Technical computing is fundamentally instruction-flow and clock-controlled. It uses a complex cyclic–sequential processor with a fixed external data path, which interprets the data arriving at its input section as an instruction. It sets up its internal state and data paths after decoding. Following that, the data control the processor’s operation. Until the operation terminates, the processor works in a data-driven mode, and the data flow through the previously set data path.

Although actual implementations enable some ad hoc parallelization, a sequential processor executes one instruction at a time in the data-controlled mode, which considerably limits parallelism. The result goes into the output section, and the processor continues in the instruction-controlled regime. In the data-controlled mode, the data (for example, when the result is negative) can modify the instruction pointer: the technical processor uses a combination of the two operating modes. Interrupts implement a kind of event-driven control, which enables multi-tasking. The idea behind the “stored program” concept is that the temporary instruction-driven mode is needed to make the processor programmable. This mode enables single-thread, sequential (von Neumann-style [19]) programming, but the software implementing the functionality missing from the paradigm cannot keep pace with the hardware processing speed [78]. The huge price paid for this universality is that even relatively simple processors work inefficiently [58].
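
The mixed operating mode described above can be illustrated with a toy stored-program interpreter. The three opcodes (`ADD`, `JNEG`, `HALT`) and the accumulator design are hypothetical. The point is that fetching is instruction-driven, while `JNEG` lets the data (a negative accumulator) modify the instruction pointer.

```python
def run(program, acc=0, max_steps=10_000):
    """Execute a list of (opcode, operand) pairs; return (accumulator, instructions executed)."""
    ip = 0        # instruction pointer
    executed = 0
    while 0 <= ip < len(program) and executed < max_steps:
        opcode, operand = program[ip]  # fetch: the word at ip is interpreted as an instruction
        ip += 1                        # default: continue sequentially
        executed += 1
        if opcode == "ADD":            # execute: data flow through the selected data path
            acc += operand
        elif opcode == "JNEG":         # the data (sign of the result) modify the instruction pointer
            if acc < 0:
                ip = operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
    return acc, executed


PROGRAM = [("ADD", 2), ("JNEG", 0), ("HALT", None)]  # add 2 until the result is non-negative
print(run(PROGRAM, acc=-5))
print(run(PROGRAM, acc=3))
```

The same three stored instructions execute a different number of times depending on the data. This is the data-dependent control flow that a sequential, instruction-driven processor provides.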

The decoded instruction must find the data it processes by referring to them. To make their required data directly accessible (by wiring), the instructions would need unachievably dense wiring. Furthermore, such wiring would not allow using different data (for example, different vector elements) during processing. For this reason, the data are accessed through a particular wiring system (the bus), and the instructions reference the data through their address in a storage unit. However, only one computing component can use the bus at a time, which further limits parallel operation significantly. The software threads must compete (through the operating system) for access to their instructions, and the instructions must compete for the data they need. What is worse, when data are delivered over a bus, their arrival time is unknown at design time. Reconfigurable systems appear effective because they use a data path set up in advance (instead of setting it up after decoding) and can use data paths in parallel. However, it would also be possible to use an intermediate (configware) layer [59,60] between the hardware and software layers, with cooperating processors and a hierarchically organized distributed bus system.
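
Why the arrival time over a bus is unknown at design time can be shown with a small simulation. This is a minimal sketch that assumes a single bus, one transfer at a time, first-come-first-served arbitration, and a fixed transfer time. Real bus arbitration schemes differ, and the request times here are random, hypothetical values.

```python
import random


def bus_completion_times(request_times: list[float], transfer_time: float = 1.0) -> list[float]:
    """One shared bus, one transfer at a time, requests served in arrival order (FIFO).
    Returns the completion time of each request, in the order given."""
    order = sorted(range(len(request_times)), key=lambda i: request_times[i])
    done = [0.0] * len(request_times)
    bus_free_at = 0.0
    for i in order:
        start = max(request_times[i], bus_free_at)  # wait while another unit holds the bus
        bus_free_at = start + transfer_time
        done[i] = bus_free_at
    return done


random.seed(0)
latencies = []
for _ in range(1_000):
    # Unit 0 always requests at t = 5; nine other units request at random times.
    others = [random.uniform(0.0, 10.0) for _ in range(9)]
    done = bus_completion_times([5.0] + others)
    latencies.append(done[0] - 5.0)
print(f"unit 0 latency: min {min(latencies):.2f}, max {max(latencies):.2f}")
```

Unit 0 always issues the same request at the same moment. Its latency nevertheless depends on what the other units happen to do, so it can only be bounded, not known, when the system is designed.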

3.3. Structure vs. Architecture

In his fundamental work, von Neumann made clear that the document was about logical organization. Technology then formed technology blocks and introduced (based on the idea that the transfer time was negligible) a universal wiring system that connected the blocks. It was correctly noticed [74] that “in the current architecture where data moves between the physically separated processor and memory, latency is unavoidable”. We add that moving complex processors into distributed memories, or globally accessible memory into processors, is also impossible; this is the price paid for using the instruction-driven mode.

3.3.1. Single-Processor Performance

A decade ago, the limits of computing enabled by the laws of nature [70] had already been reached, as quantified by dispersion; see Figure 5. Processors are optimized for single-thread performance [76]. One of the limitations (which indeed caused single-thread performance to saturate around 2005) was that simply no more reasonable functionality could be added to recent processors: “The exponential growth of sequential processors has come to an end” [89]. This is precisely what was contended half a century ago: “the organization of a single computer has reached its limits” [81]. Another of the many reasons is that Moore’s observation is valid only for the density of components inside the processor. The rest of the computing system, including the buses connecting its components, does not shrink at a similar rate. This circumstance should result in an S-curve [90] rather than an exponential increase, both in human-sized computing systems and inside processors. Consequently, the relative weight of data transfer, as well as of other non-payload activities, increases with time. Rather than rethinking computing, preparations for the post-Moore era [91] were initiated.
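
The difference between an exponential trend and an S-curve can be sketched with a logistic function that starts from the same value and has the same initial growth rate. The growth rate `r` and the saturation level `k` are arbitrary illustrative parameters, not fitted to any data.

```python
import math


def exponential(t: float, a0: float = 1.0, r: float = 0.35) -> float:
    """Unlimited exponential growth from a0 with rate r."""
    return a0 * math.exp(r * t)


def logistic(t: float, k: float = 1000.0, a0: float = 1.0, r: float = 0.35) -> float:
    """S-curve with the same start value and initial rate, saturating at k."""
    return k / (1 + (k / a0 - 1) * math.exp(-r * t))


for t in (0, 5, 10, 20, 40, 60):
    print(f"t={t:2d}  exponential={exponential(t):14.1f}  logistic={logistic(t):7.1f}")
```

For small t, the two curves are nearly indistinguishable (within about 0.5% at t = 5), so early data alone cannot tell them apart. Later, the logistic curve levels off at `k` while the exponential keeps growing.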

The expectations placed on computing are demanding and excessive. Billions of processors are present in edge devices. Millions of processors are crammed into single large-scale computing systems. Computing targets extreme and demanding goals, such as global weather simulation, Earth simulation, or brain simulation. These tendencies are a real challenge. They are accompanied by the growing amount of “Big Data”, “real-time connected everything”, a virtually infinite need for detail in simulations, and the irrationally enormous AI-purpose systems [88,92]. In several fields, it has already been admitted that the limits of computing are near or have already been reached. Today, a growing number of demonstrative failures (both hardware and software) show severe deficiencies in the fundamental understanding of computing. “Rebooting computing” [55,56] and “reinventing electronics” [93] are a real need, but no valuable ideas, in particular no appropriate computing paradigm, have been published.

3.3.2. Multi- and Many-Core Processors

After single-processor performance stalled, multi- and many-core processors provided the needed higher performance for a short period without a dramatic performance loss, at the price of higher power consumption and lower efficiency. GPUs were well suited to their original goal. However, as general-purpose [94] accelerators, their computing efficiency was nearly two orders of magnitude worse and their power efficiency about an order of magnitude worse. The case is similar for other Single Instruction Multiple Data (SIMD) processors: they provide significant enhancement when used in the scenario they were designed for, but significantly less in general use cases [20].

Until GPUs appeared, the need for computing power and its supply were moderate, and both roughly followed Moore’s observation. With the appearance of GPUs, the need for computing power increased suddenly (in a leap-like way). With the appearance of AI (actually, mainly Machine Learning (ML)), the increase became steeper. The same happened (not yet shown in the figure) with the appearance of generative AI systems based on Large Language Models (LLMs). The over-hyped popularity of such chat programs made it evident that the development of computing has run into a dead end [95]. The primary cause is an inappropriate computing theory, which leads to sustainability issues through power consumption, carbon emissions, and environmental pollution. It is time to revisit computing, i.e., to make drastic changes, starting from the basic principles and scrutinizing theoretical assumptions and omissions, operating principles, etc.

3.3.3. Memory

In technical computing, registers are essentially another memory with short addresses and short access times; simulating data stored directly at synapses would require many registers. Using conventional “far” memory instead of the directly accessible register memory degrades performance by two to three orders of magnitude. In machine learning, summing synaptic inputs means scanning all registers, which requires several thousand machine instructions and degrades performance by another three to four orders of magnitude. In addition, the correction term calculated from the gradient is distributed among all upstream neurons, regardless of whether they affected the result. This costs another two to three orders of magnitude.
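
The three penalties listed above can be combined with simple bookkeeping. The combined range is not stated in the text. It follows only under the assumption that the penalties are independent and compound multiplicatively, so that their orders of magnitude add.

```python
# Performance penalties quoted in the paragraph, as (low, high) exponents of ten.
penalties = {
    "far memory instead of register memory": (2, 3),
    "scanning registers to sum synaptic inputs": (3, 4),
    "distributing the correction to all upstream neurons": (2, 3),
}

# Assumption: the penalties are independent, so the factors multiply and the exponents add.
low = sum(lo for lo, _ in penalties.values())
high = sum(hi for _, hi in penalties.values())
print(f"combined slowdown: 10^{low} to 10^{high}")
```

Under that assumption, the combined slowdown is seven to ten orders of magnitude. If some penalties overlap in practice, the actual figure would be smaller.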

The idea of “in-memory computing” has been proposed. Combined with analog vector–matrix operations [96], it can be effective, although it introduces a loss of precision. What is worse, the delivered results depend on the processors’ temperature (and through that, on their architecture and cooling efficiency). Furthermore, it is impossible to move a complex processor into a memory block, and the idea may lead to data accessibility and coherence problems.

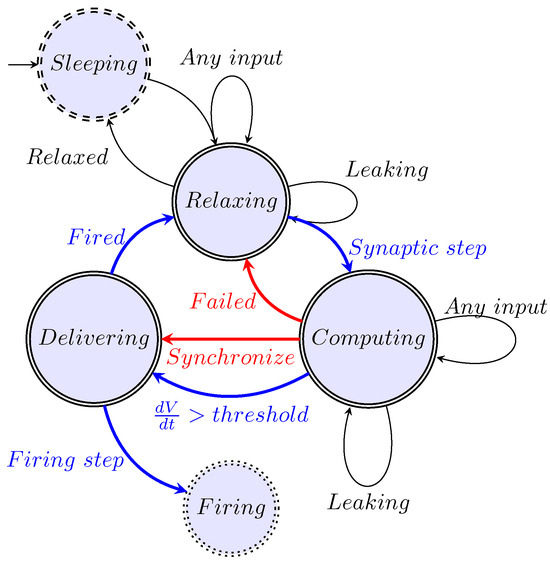

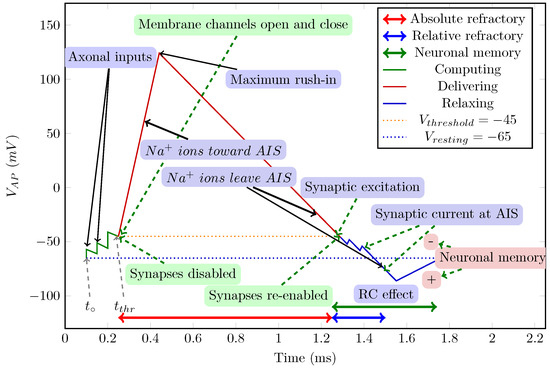

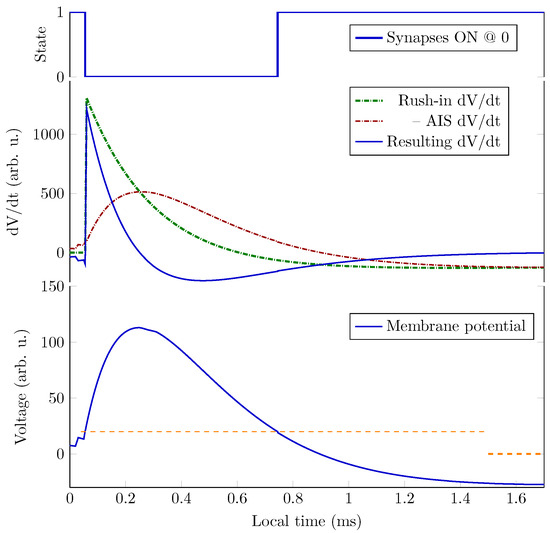

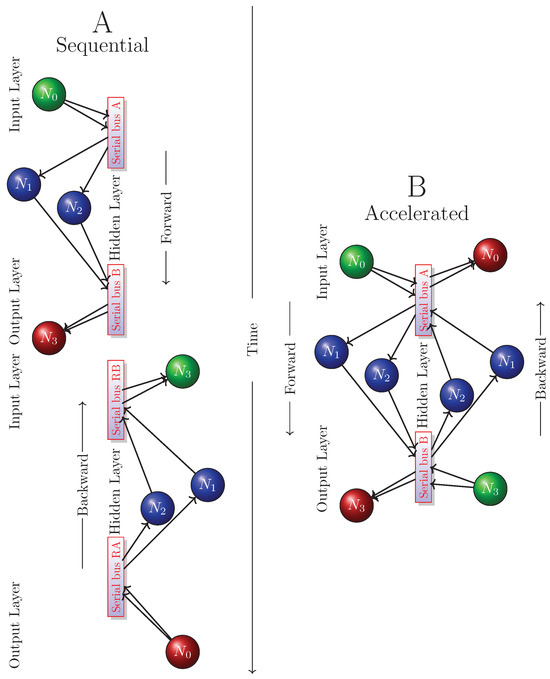

3.3.4. Bus