Abstract

Human–Machine Interfaces (HMIs) in traditional automobiles are essential in connecting drivers, passengers, and vehicle systems. In automated vehicles, the HMI has become a critical component. A well-designed HMI facilitates effective human oversight, enhances situational awareness, and mitigates risks associated with system failures or unexpected scenarios. Simultaneously, it serves as a crucial safeguard against cyber threats, preventing unauthorized access and ensuring the integrity of vehicular operations in increasingly connected environments. This narrative review delves into the evolving landscape of automotive HMI design, emphasizing its role in enhancing user experience (UX) and safety. By exploring usability challenges, technological advancements, and the integration of rapidly evolving technologies such as AI (Artificial Intelligence), AR (Augmented Reality), and gesture-based controls, this study highlights how effective HMIs minimize cognitive load while maintaining functionality. Significant attention is given to the new challenges that arise from technological advancements in terms of security and safety.

1. Introduction

Automotive HMIs are the primary touchpoints that enable interaction between users and vehicle systems [1]. As vehicles become increasingly advanced, the role of HMI design has expanded beyond functionality to encompass safety, usability, and emotional connection. A 2022 bibliometric analysis of the literature by X. Zhang et al. [2] found that research focuses primarily on users, interfaces, the external environment, and technology implementation. The advent of automated vehicles (AVs) [3], electric vehicles (EVs) [4], and advanced driver-assistance systems (ADASs) [5] has further underscored the importance of user-centric HMI solutions. An intuitive, well-designed HMI can transform the driving experience by enhancing user engagement, reducing cognitive overload, and supporting a safer driving environment. However, the growing complexity of in-vehicle systems [6] presents challenges such as information overload, interface usability, and accessibility for diverse user groups [7]. Moreover, with the integration of advanced technologies like artificial intelligence (AI) [8], augmented reality (AR) [9], and touchless interaction methods [10], there is a pressing need to ensure that these systems are seamlessly integrated and aligned with user expectations. Furthermore, as ADASs and AVs introduce fundamentally new interaction paradigms, UX design must not only align systems with users’ current expectations but also help users adapt their mental models to these innovations. The hardware embedded in today’s cars increasingly resembles an advanced technology hub, including, for example, fingerprint sensors [11], wheel speed sensors [12], and inertial sensors [13]. Anomalies in sensor data can also compromise road safety [14].
Furthermore, AVs [15], with their advanced onboard systems and new operational paradigms, resemble commercial airplanes in terms of safety and security [16,17], requiring rigorous fail-safe mechanisms, real-time monitoring, and robust cybersecurity measures to ensure reliable and secure operation.

This narrative review provides a comprehensive overview of user experience (UX) considerations in automotive HMI design. It addresses key aspects such as the principles of effective UX, the incorporation of emerging technologies, and the importance of global standards, such as ISO guidelines [18], in shaping safer, more accessible interfaces. In addition, it examines the safety and security challenges posed by rapid technological advancements and varying user needs, and proposes strategies to navigate these complexities. By synthesizing insights from recent developments and research, the review aims to inform the design and development of future automotive HMIs that prioritize user experience and safety in equal measure.

1.1. Contributions

The main contributions of this review can be summarized as an exploration of fundamental UX principles in automotive HMI design, emphasizing safety, usability, and user satisfaction while addressing the integration of emerging technologies such as AI, AR, and touchless interaction methods. It highlights the critical role of international standards, including ISO 15007 [18] and ISO 9241-110 [19], in ensuring that interfaces meet global safety and usability benchmarks. Furthermore, the study examines key challenges in the development of HMIs, such as information overload and accessibility for various user groups, proposing actionable strategies to mitigate these problems. By synthesizing insights from current research and trends, the review provides a forward-looking perspective on the design of next-generation HMIs that seamlessly integrate advanced technologies while prioritizing user needs, ultimately fostering safer, more intuitive, and engaging driving experiences.

1.2. Research Methodology

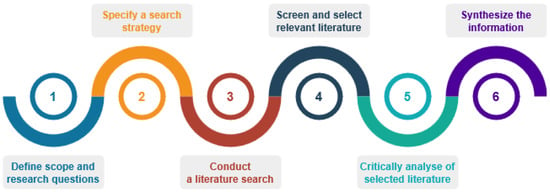

The aim of this review is to provide insight into recent trends and developments in automotive Human–Machine Interface design, with a particular focus on enhancing user experience, safety, and security. We selected representative, up-to-date sources that address these issues. A narrative review methodology was adopted to summarize primary studies from which general conclusions can be drawn from a holistic point of view. The research flow is schematically illustrated in Figure 1.

Figure 1.

Flow of the research.

Specifically, our broad narrative approach helps to explore the combination and mutual impact of user experience, safety, and security in the modern automotive domain, and to examine how existing technologies could be adapted to better assist the driver. The research methodology is described below:

- Research questions (RQs):
  - RQ1: How do recent technological innovations modify the evolution of automotive HMIs?
  - RQ2: What are the new risks that these evolutions could generate in terms of safety?
  - RQ3: What are the new risks that these evolutions could generate in terms of security?
  - RQ4: What are the current challenges in terms of multimodality?
  - RQ5: What are the current challenges in terms of enhancing emotional connection?
  - RQ6: How should we manage the trade-offs between safety, security, and UX?

- Databases consulted: IEEE Xplore, MDPI, ScienceDirect (Elsevier), SpringerLink, Google Scholar.

- Time frame: Publications between January 2018 and April 2025 were considered to ensure coverage of the most recent advancements.

- Main keywords used: “Automotive HMI”, “User Experience in Vehicles”, “Autonomous Vehicle Interfaces”, “Safety in Automotive HMI”, “Augmented Reality Vehicles”, “Multimodal Interaction Vehicles”, “Security in Automotive HMI”.

- Inclusion criteria: Peer-reviewed journal articles, conference papers, and technical reports directly addressing automotive HMI and user experience in AVs.

- Exclusion criteria: Works focusing solely on mechanical or non-user-related aspects of vehicle systems were excluded.

- Selection process: An initial screening based on titles and abstracts was conducted, followed by full-text analysis for relevance. Preference was given to works offering empirical results, novel methodologies, or comprehensive reviews.

- Industry developments: To capture emerging trends, selected industrial white papers and official reports (e.g., from Mercedes-Benz, Volvo, Polestar) were included, but were clearly distinguished from academic sources in the analysis.

1.3. Structure of the Review

The review is structured as follows. Section 2 provides a short overview of current automotive HMIs. Section 3 outlines the UX design principles that matter in automotive HMI. Section 4 discusses technological innovations. Section 5 and Section 6 present the challenges related to safety and security and propose a plan for a global safety framework. Section 7 focuses on new challenges pertaining to multimodal interaction, an emerging concept in AVs that enhances user experience but also raises safety and security concerns. Section 8 addresses emotional connection. Section 9 identifies future technological challenges in automotive HMI design and concludes the article.

2. Brief Overview of Automotive HMI

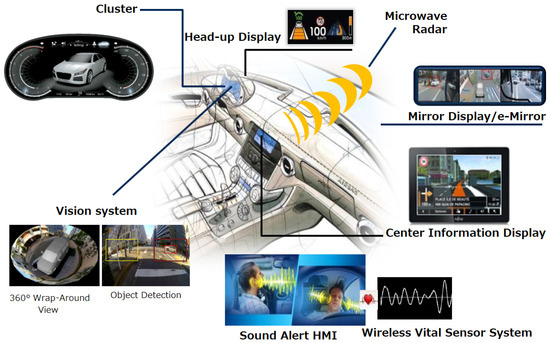

Automotive HMI refers to the systems and interfaces through which a driver or passenger interacts with a vehicle’s technology [20]. The key components include the display system (such as screens or head-up displays), input devices (like touchscreens, buttons, knobs, and voice recognition systems), and the communication channels (e.g., visual, auditory, or haptic feedback) that allow users to control and monitor various vehicle functions (a diagram illustrating a highly advanced automotive HMI architecture is shown in Figure 2). Over the years, automotive HMIs have evolved from basic mechanical dials and switches to complex digital interfaces integrated with advanced infotainment, navigation, and driver assistance systems (an example of a modern automotive HMI is shown in Figure 3). The transition to digital displays began with the advent of in-car entertainment systems [21] and eventually incorporated features such as touchscreens, voice commands, and gesture recognition, making interaction more intuitive. Modern vehicles now support a variety of HMIs, including touchscreens, steering wheel controls, voice assistants such as Alexa or Google Assistant, and gesture controls that allow drivers to perform functions without physically touching any interface [20]. These systems are designed to improve usability, safety, and comfort.

Figure 2.

Diagram of a highly advanced automotive HMI architecture, illustrating user inputs, processing, and output systems (Socionext Group, Yokohama, Japan, available online: https://socionextus.com (accessed on 20 January 2025)).

Figure 3.

An example of a modern automotive HMI (Mercedes Benz, available online: https://www.mercedes-benz.pl (accessed on 26 January 2025)).

Another major trend in automotive HMI is the growing need for personalization. Modern vehicles increasingly offer adaptive interfaces that adjust settings such as displays, control layouts, and interaction modes based on individual user profiles and preferences. Personalization not only enhances user comfort, satisfaction, and loyalty but also contributes to safer driving by minimizing cognitive load through familiar, customized interactions. This trend reflects a broader shift toward user-centric design philosophies in the automotive industry. Current trends in automotive HMI are also heavily influenced by advances in artificial intelligence, machine learning, and AR. AI-driven voice recognition systems enable hands-free control of navigation, media, and vehicle settings, while AR technologies are beginning to be integrated into head-up displays, sometimes combined with appearance-based gaze tracking [22], to overlay crucial information directly onto the windshield. In addition, touchless gestures are being integrated to minimize distraction, while increasingly sophisticated touchscreens offer haptic feedback for a more immersive and interactive experience [10]. These innovations aim to improve the overall driving experience, enhance safety by reducing distraction, and anticipate the needs of the driver and passengers in real time.
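As a rough illustration of profile-based personalization, the sketch below merges a stored driver profile over default HMI settings while keeping safety-critical settings locked and clamping values to a readable range. The `HmiSettings` fields, the `SAFETY_LOCKED` set, and the brightness bounds are hypothetical assumptions, not taken from any manufacturer’s system.

```python
from dataclasses import dataclass, fields, replace
from typing import Tuple


@dataclass(frozen=True)
class HmiSettings:
    """Hypothetical HMI settings; field names are illustrative only."""
    display_brightness: float = 0.7  # fraction of maximum, 0.0 to 1.0
    home_layout: Tuple[str, ...] = ("navigation", "media", "phone")
    voice_feedback: bool = True
    collision_warning_enabled: bool = True  # treated as safety-critical


# Settings a personal profile is not allowed to override (assumption).
SAFETY_LOCKED = {"collision_warning_enabled"}
KNOWN_FIELDS = {f.name for f in fields(HmiSettings)}


def apply_profile(defaults: HmiSettings, profile: dict) -> HmiSettings:
    """Apply profile preferences, silently skipping locked or unknown keys."""
    allowed = {
        key: value
        for key, value in profile.items()
        if key in KNOWN_FIELDS and key not in SAFETY_LOCKED
    }
    settings = replace(defaults, **allowed)
    # Clamp brightness so a stored profile cannot make the display unreadable.
    brightness = min(1.0, max(0.2, settings.display_brightness))
    return replace(settings, display_brightness=brightness)
```

Locking a small set of safety-relevant fields is one way to reconcile personalization with consistent safety behavior across drivers; which settings deserve that treatment is a design decision the sketch does not settle.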

3. Principles of Automotive HMI Design

3.1. Effective Human–Machine Interfaces

Effective HMIs focus on reducing driver distraction by presenting information in a prioritized, context-sensitive manner [23]. This includes adaptive displays that show only essential details during critical driving moments. Driver distraction is a significant contributor to road accidents, and poorly designed in-vehicle interfaces are a common source of it.

Driver distraction is commonly defined as a shift of attention away from tasks essential to safe driving [25]. To address this issue, the National Highway Traffic Safety Administration (NHTSA) issued the Visual–Manual NHTSA Driver Distraction Guidelines for In-Vehicle Electronic Devices (DOT HS 811 141) in 2013 [24]. These guidelines recommend limiting visual–manual interactions to few, short glances away from the road, ideally no longer than two seconds per glance, requiring minimal manual input (one hand only), and prohibiting high-risk tasks such as manual text entry while driving. They also promote hands-free and voice-operated controls and discourage non-driving-related activities, such as web browsing or video watching, while the vehicle is in motion. By adhering to these guidelines, designers can develop interfaces that reduce accident risk while maintaining functionality.
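To make the glance criteria concrete, the following sketch checks recorded off-road glance durations for one task. It assumes the thresholds commonly cited from the NHTSA guidelines (a mean off-road glance of at most 2.0 s, no more than 15% of glances longer than 2.0 s, and at most 12.0 s of total eyes-off-road time per task, each met by at least 21 of 24 test drivers). These values should be verified against the official text [24]; the function names are hypothetical, and the test protocol (for example, rounding rules) is greatly simplified.

```python
from statistics import mean
from typing import Dict, List

# Thresholds assumed from the NHTSA visual-manual guidelines; verify before use.
GLANCE_LIMIT_S = 2.0           # limit for the mean glance and for "long" glances
MAX_LONG_GLANCE_SHARE = 0.15   # share of glances allowed above GLANCE_LIMIT_S
MAX_TOTAL_OFF_ROAD_S = 12.0    # total eyes-off-road time per task
MIN_PASSING_DRIVERS = 21 / 24  # fraction of test drivers that must meet each criterion

CRITERIA = ("mean_glance", "long_glances", "total_off_road")


def driver_criteria(glances_s: List[float]) -> Dict[str, bool]:
    """Evaluate one driver's off-road glance durations (seconds) for a single task."""
    if not glances_s:
        return {name: True for name in CRITERIA}
    long_share = sum(g > GLANCE_LIMIT_S for g in glances_s) / len(glances_s)
    return {
        "mean_glance": mean(glances_s) <= GLANCE_LIMIT_S,
        "long_glances": long_share <= MAX_LONG_GLANCE_SHARE,
        "total_off_road": sum(glances_s) <= MAX_TOTAL_OFF_ROAD_S,
    }


def task_acceptable(glances_per_driver: List[List[float]]) -> bool:
    """A task passes if enough drivers meet each criterion (checked separately)."""
    if not glances_per_driver:
        raise ValueError("need glance data from at least one driver")
    results = [driver_criteria(g) for g in glances_per_driver]
    for criterion in CRITERIA:
        passing = sum(r[criterion] for r in results) / len(results)
        if passing < MIN_PASSING_DRIVERS:
            return False
    return True
```

Evaluating each criterion across drivers separately, rather than requiring each driver to meet all three, mirrors how the guidelines are usually summarized, but this too is an assumption of the sketch.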

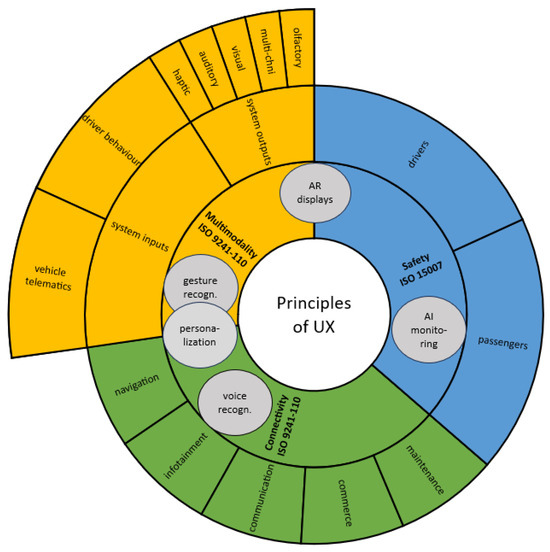

Achieving seamless interaction requires intuitive designs that allow drivers to operate controls without extensive learning. Key principles include consistent interaction patterns [26], intuitive layouts, and accessibility for diverse demographics (Figure 4 illustrates these principles in a multi-layered pie chart). Interfaces that incorporate human factors into their design, such as ergonomics and cognitive psychology principles, have been shown to significantly enhance usability [27]. The following sections explore these principles in greater detail.

Figure 4.

Principles of UX design in automotive HMI.

3.2. ISO Standards

The International Organization for Standardization (ISO) provides key guidelines to ensure that automotive Human–Machine Interfaces prioritize usability, safety, and user satisfaction. Two significant standards, ISO 15007 and ISO 9241-110, play a crucial role in shaping the design and evaluation of HMIs in the automotive industry [18].

ISO 15007 addresses the measurement of driver visual behavior with respect to in-vehicle information systems. It provides a framework for quantifying and describing driver visual behavior, including parameters such as glance duration and frequency. By defining these measures, ISO 15007 helps evaluate whether displayed information, such as speed, navigation prompts, or warning signals, can be understood without imposing excessive visual demands on the driver. The standard is particularly useful for evaluating Transport Information and Control Systems (TICSs) and for assessing whether they are compatible with the driver’s natural visual behavior.
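The glance parameters named above can be derived from eye-tracking data. The sketch below assumes gaze samples that have already been labeled with an area of interest (for example, `"road"` or `"display"`) at a fixed sampling rate, and treats each contiguous run of samples on the target area as one glance. This is a simplification: ISO 15007 defines glance duration more precisely (it includes the transition of gaze toward the target), so the function and label names here are illustrative assumptions rather than the standard’s procedure.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GlanceMetrics:
    glance_count: int
    mean_glance_duration_s: float
    total_glance_time_s: float


def glance_metrics(aoi_labels: List[str], target: str, sample_rate_hz: float) -> GlanceMetrics:
    """Summarize glances toward `target` from per-sample area-of-interest labels.

    Simplification: a glance is a contiguous run of samples labeled `target`;
    the transition time toward the target is not modeled separately.
    """
    durations: List[float] = []
    run_length = 0
    for label in aoi_labels:
        if label == target:
            run_length += 1
        elif run_length:
            durations.append(run_length / sample_rate_hz)
            run_length = 0
    if run_length:  # close a glance that lasts until the end of the recording
        durations.append(run_length / sample_rate_hz)
    total = sum(durations)
    mean_duration = total / len(durations) if durations else 0.0
    return GlanceMetrics(len(durations), mean_duration, total)
```

For example, at 10 Hz, a sequence of 4 road samples, 3 display samples, 2 road samples, and 5 display samples yields two display glances of 0.3 s and 0.5 s.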

ISO 9241-110 sets out ergonomic principles for human–system interaction. It provides guidelines on creating user-friendly interfaces by ensuring attributes like consistency, task compatibility, and user control. The standard emphasizes that interfaces should conform to user expectations and avoid unnecessary complexity, so that drivers can operate vehicle systems without distraction. These principles are vital for the ergonomic placement of controls and displays, ensuring that critical information remains within the driver’s line of sight. Eye-tracking studies of automotive HMIs underline the importance of adhering to such standards to reduce driver workload and improve situational awareness [19,28]. Usability-focused guidelines, such as those in ISO 9241, can lead to more effective in-vehicle navigation system designs. These insights reinforce the relevance of ISO standards in shaping HMIs that are both safe and efficient.

By adhering to these standards, manufacturers can ensure uniformity and safety across the industry. This not only facilitates better usability for drivers but also ensures that automotive systems meet global benchmarks for ergonomic and visual interaction. These guidelines help reduce driver workload by enabling intuitive control mechanisms and clear information presentation, enhancing situational awareness and decision-making [29]. While these principles are well established, the rapid evolution of automotive technologies introduces new challenges for their effective application, particularly in the context of AVs and connected ecosystems.

In general, ISO standards act as a foundational framework, enabling manufacturers to develop HMIs that prioritize user experience while adhering to critical safety and usability metrics.

4. Technological Innovations

Modern HMIs are incorporating technologies such as touchscreens, gesture recognition, voice recognition, AR displays, and AI to enhance usability and safety, transforming the driving experience. Capacitive touch technology, which is prevalent in most modern vehicles, has laid the foundation for these developments.

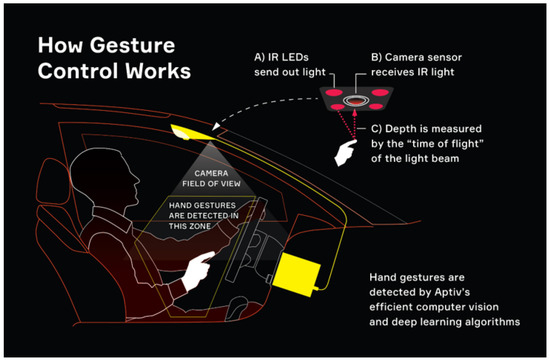

4.1. Gesture Recognition

Gesture recognition, such as BMW’s gesture control [30], allows drivers to interact with their vehicle using simple hand movements, making tasks such as adjusting the volume or answering a call more intuitive and safer. Air gestures supported by auditory feedback allow drivers to spend more time looking at the road rather than at screens [31]. By reducing reliance on traditional physical controls, this technology improves ease of use and also addresses hygiene concerns, particularly in contexts where minimizing physical contact is crucial [32,33]. For example, the gesture control system first proposed by Aptiv and originally launched on the BMW 7 Series in 2015 is now being deployed across a wide range of models. A diagram of the gesture-based control system is shown in Figure 5.
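Once a gesture has been recognized, the HMI still has to decide whether and how to act on it. The sketch below shows one simple way to do this: a lookup keyed on both the gesture and the current context, with a confidence threshold so that uncertain detections are ignored. The gesture names, contexts, command table, and the 0.85 threshold are hypothetical, loosely inspired by the volume and call examples above; they are not BMW’s or Aptiv’s actual gesture set.

```python
from typing import Dict, Optional, Tuple

# Hypothetical (gesture, context) -> command table; real systems define their own.
GESTURE_COMMANDS: Dict[Tuple[str, str], str] = {
    ("rotate_clockwise", "media"): "volume_up",
    ("rotate_counterclockwise", "media"): "volume_down",
    ("swipe_right", "incoming_call"): "accept_call",
    ("swipe_left", "incoming_call"): "reject_call",
}


def dispatch_gesture(gesture: str, confidence: float, context: str,
                     min_confidence: float = 0.85) -> Optional[str]:
    """Map a recognized gesture to a command, or return None to ignore it."""
    if confidence < min_confidence:
        return None  # ignore uncertain detections rather than risk a wrong action
    # Gestures only have meaning in the contexts listed in the table.
    return GESTURE_COMMANDS.get((gesture, context))
```

Making the mapping context-dependent keeps the gesture vocabulary small, which limits both accidental activations and what drivers need to remember.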

Figure 5.

Diagram showing gesture-based control system minimizing physical interaction with interface (Aptiv company, available online: https://www.aptiv.com (accessed on 26 January 2025)).

As illustrated in Figure 4, gesture recognition technologies directly contribute to improving multimodal interaction by enabling intuitive non-verbal controls. In addition, by minimizing physical contact and visual distraction, they also enhance driving safety.

4.2. Voice Recognition

Voice recognition has also become a cornerstone of hands-free interaction. It was introduced in 2005 in Acura and Honda Odyssey models, whose navigation systems allowed drivers to select street names using voice commands [34]. Current systems, such as Amazon Alexa Auto [35] and Google Assistant [36], leverage advanced natural language processing to interpret commands phrased in conversational language, giving drivers a safer means of controlling navigation, climate, and infotainment systems. Tesla’s voice control system [37] allows drivers to configure specific vehicle settings using spoken commands, thus minimizing the need for manual input. Studies suggest that voice systems not only enhance safety but also improve overall user satisfaction, particularly among demographics less comfortable with touch interfaces. Voice recognition systems enable a more intuitive and natural interaction with the vehicle, which is crucial for enhancing driver comfort and safety [38,39].

Voice-based HMIs, shown in Figure 4, are a key enabler for multimodal interaction and also strengthen connectivity when integrated with cloud-based services such as Amazon Alexa. They significantly support safety goals by allowing hands-free operations.

4.3. Augmented Reality

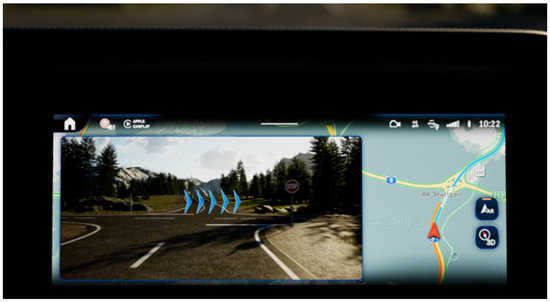

Augmented reality displays are significantly enhancing situational awareness in vehicles [40]. AR-based head-up displays (HUDs) project critical information, such as navigation and hazard alerts, directly onto the windshield, allowing drivers to keep their eyes on the road [41]. Notably, HUDs with different field-of-view sizes have been found to evoke similar driving performance, cognitive load, and user experience [42], suggesting that field-of-view size has no significant effect on the driver’s situational awareness. Originally developed for military aircraft, HUDs were introduced into the automotive domain by General Motors in the late 1980s and became more widely available from the 2000s onward (BMW 2003, Audi 2010, Mercedes 2014). An illustration of Mercedes-Benz’s augmented reality-based HUD is shown in Figure 6.

Figure 6.

Augmented reality-based HUD projecting critical driving information, such as navigation directions and speed, directly onto the windshield (Mercedes Benz, available online: https://www.mercedes-benz-mena.com (accessed on 26 January 2025)).

Mercedes-Benz’s latest infotainment system, MBUX (Mercedes-Benz User Experience), overlays AR graphics onto real-world imagery to guide drivers through complex intersections [43]. It is a prime example of how AR can improve navigation. A picture of the MBUX system, which leverages AR for enhanced navigation, is shown in Figure 7. AR displays reduce cognitive load by allowing drivers to access key information without diverting their attention from the road, thus improving overall driving safety [9].

Figure 7.

Mercedes-Benz’s MBUX system (Mercedes Benz, available online: https://www.mercedes-benz-mena.com (accessed on 26 January 2025)).

AR displays enhance both multimodality, by merging real-world and digital content, and safety, by reducing the need for drivers to shift attention away from the road, as indicated in Figure 4.

4.4. Artificial Intelligence

Artificial intelligence is transforming the automotive industry [44]. AI systems can learn driver behaviors and dynamically adjust vehicle settings based on individual preferences, such as optimal cabin temperature or preferred driving routes. More advanced AI applications, such as Volvo’s Driver Understanding System, monitor driver fatigue or distraction and provide real-time interventions to ensure safety [45]. Technically, two camera-based sensors monitor the driver’s gaze, and a capacitive steering wheel checks whether the driver’s hands are on the wheel. If impairment is detected, the driver is alerted to take action; if they fail to do so, the car is automatically brought to a stop and the hazard lights are activated. The Volvo EX90 Driver Understanding System was recognized by TIME magazine as one of the best inventions of 2024. Driver monitoring systems mainly address safety concerns (Figure 4) by detecting fatigue, distraction, or emergencies in real time and triggering appropriate interventions.
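The alert-then-stop behavior described above can be modeled as a small escalation state machine. The sketch below is a minimal illustration under stated assumptions: the driver counts as attentive only when both the gaze check and the hands-on-wheel check pass, and the 3 s and 10 s thresholds are hypothetical values, not Volvo’s parameters.

```python
from enum import Enum, auto


class Action(Enum):
    NONE = auto()
    ALERT = auto()
    STOP_WITH_HAZARDS = auto()


class EscalationMonitor:
    """Hypothetical two-stage escalation: alert first, then a controlled stop."""

    def __init__(self, alert_after_s: float = 3.0, stop_after_s: float = 10.0) -> None:
        # Thresholds are illustrative assumptions, not a manufacturer's values.
        self.alert_after_s = alert_after_s
        self.stop_after_s = stop_after_s
        self.inattentive_s = 0.0
        self.stopping = False

    def update(self, dt_s: float, eyes_on_road: bool, hands_on_wheel: bool) -> Action:
        """Advance the monitor by dt_s seconds and return the action to take."""
        if self.stopping:
            return Action.STOP_WITH_HAZARDS  # once triggered, the stop is not cancelled here
        if eyes_on_road and hands_on_wheel:
            self.inattentive_s = 0.0  # an attentive driver resets the timer
            return Action.NONE
        self.inattentive_s += dt_s
        if self.inattentive_s >= self.stop_after_s:
            self.stopping = True
            return Action.STOP_WITH_HAZARDS
        if self.inattentive_s >= self.alert_after_s:
            return Action.ALERT
        return Action.NONE
```

Latching the stop state reflects the idea that an unresponsive driver should not be able to cancel a safety stop by a single brief glance; a production system would need a deliberate, verified handback procedure instead.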

In conclusion, the integration of innovative technologies, such as touchscreens, gesture recognition, voice recognition, AR displays, and AI, has reshaped the way drivers interact with their vehicles, making it more intuitive, personalized, and safe. These developments not only improve usability, but also foster safer driving environments by reducing distractions and enabling hands-free interactions [46]. AI-driven systems provide personalized experiences while also contributing to safety by detecting signs of driver fatigue and alerting drivers when necessary.

5. New Challenge: Safety

Human–Machine Interfaces that prioritize safety are engineered to support drivers by reducing cognitive load, enhancing situational awareness, and intervening when necessary to prevent accidents. These systems leverage real-time monitoring, adaptive displays, and data-driven algorithms, including selected applications of AI, to create a safer driving environment. While some safety features rely on conventional programmed logic, more advanced systems incorporate machine learning models capable of analyzing driver behavior and environmental conditions to enhance situational awareness and decision-making.

The first subsection addresses how HMIs can improve safety, and the second addresses the robustness of the HMI to failures. With the growing emphasis on AVs comes greater responsibility on both fronts.

5.1. Additional Safety Provided by HMIs of AVs

Modern HMIs represent a synergy of thoughtful design and state-of-the-art technology, working collaboratively with drivers to create safer roads. Through adaptive interfaces, intuitive design, and proactive AI-driven interventions, these systems not only reduce the likelihood of accidents but also instill confidence in drivers, promoting a more secure and efficient driving experience.

One key aspect of safety-focused design is adaptive information delivery, as in systems like Nissan/Infiniti ProPILOT [47]. These interfaces assess real-time factors such as traffic density, vehicle speed, and road conditions to dynamically adjust the information displayed to the driver. For example, in high-speed or congested traffic scenarios, the system can limit the display to critical alerts, such as collision warnings or lane guidance, ensuring drivers are not overwhelmed with extraneous data during moments when rapid decision-making is essential. This context-aware approach minimizes distractions, sharpens focus, and enhances reaction times.
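A minimal sketch of such context-aware filtering is shown below: each message carries a priority, and the driving context sets the lowest priority that is still displayed. The priority levels, the normalized `traffic_density` input, and the speed and density thresholds are all hypothetical assumptions for illustration; they do not describe how ProPILOT or any other production system works.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List


class Priority(IntEnum):
    INFO = 0
    ADVISORY = 1
    CRITICAL = 2


@dataclass(frozen=True)
class Message:
    text: str
    priority: Priority


def minimum_priority(speed_kmh: float, traffic_density: float) -> Priority:
    """Return the lowest priority still shown; thresholds are illustrative assumptions.

    traffic_density is a hypothetical normalized estimate between 0.0 and 1.0.
    """
    if speed_kmh >= 100 or traffic_density >= 0.7:
        return Priority.CRITICAL
    if speed_kmh >= 50 or traffic_density >= 0.4:
        return Priority.ADVISORY
    return Priority.INFO


def visible_messages(messages: Iterable[Message], speed_kmh: float,
                     traffic_density: float) -> List[Message]:
    """Keep only messages at or above the context threshold, most urgent first."""
    threshold = minimum_priority(speed_kmh, traffic_density)
    shown = [m for m in messages if m.priority >= threshold]
    return sorted(shown, key=lambda m: m.priority, reverse=True)
```

In this sketch, at 120 km/h only a collision warning would survive filtering, while at low speed in light traffic an informational message such as a track change would also be shown.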

One of the most critical risks for AVs is that they may encounter scenarios not represented in their training data. Despite extensive real-world testing and simulation, the open-world nature of public roads makes it virtually impossible to anticipate and model every possible situation or anomaly. When AVs face novel events, such as unexpected pedestrian behavior, erratic vehicle maneuvers, or rare environmental conditions, their decision-making algorithms may fail to generalize, potentially leading to unsafe behavior. This problem is widely recognized as a major challenge in the development and validation of safe automated driving systems. To address it, Mobileye (an Intel company, Jerusalem, Israel) mines its 200 petabytes of driving data, delivering thousands of results in seconds, in order to find and tackle extremely rare conditions and scenarios.

Simplifying the presentation of information is another cornerstone of safe HMI design. Hierarchical displays and adaptive content filtering prioritize the most relevant data while organizing additional options in logical layers [48]. Systems such as Toyota’s Entune 3.0 exemplify this by dynamically reshaping menus to make frequently used functions easily accessible. This not only reduces the time drivers spend interacting with the interface but also reduces mental strain, allowing them to concentrate on driving. Visual enhancements, including high-contrast colors, legible fonts, and universally recognizable icons, further contribute to clarity and ease of use, enabling quick interpretation of information even in low-visibility or high-stress conditions.
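The idea of reshaping menus around frequently used functions can be sketched as a usage-ranked, two-layer menu. In the example below, the function names, the `top_k` parameter, and the tie-breaking rule (falling back to the original menu order) are hypothetical assumptions; the sketch does not describe how Entune 3.0 actually orders its menus.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def build_menu(functions: Sequence[str], usage_log: Sequence[str],
               top_k: int = 3) -> Tuple[List[str], List[str]]:
    """Split functions into a first layer (most used) and a second layer (the rest).

    Ties are broken by the original menu order so the layout stays predictable.
    """
    counts = Counter(f for f in usage_log if f in functions)
    original_order = {f: i for i, f in enumerate(functions)}
    ranked = sorted(functions, key=lambda f: (-counts[f], original_order[f]))
    return ranked[:top_k], ranked[top_k:]
```

For instance, with the menu `["navigation", "media", "phone", "climate", "settings"]` and a usage log dominated by climate and phone interactions, climate and phone move to the first layer. One design caveat: reordering too often can undermine the consistent interaction patterns discussed earlier, so a practical system might update the order only occasionally or keep safety-relevant items in fixed positions.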

The incorporation of real-time driver monitoring through sensors and AI has become a defining feature of advanced HMIs. Technologies such as Subaru’s EyeSight [49] use cameras and other sensors to monitor driver behavior, detecting signs of fatigue, distraction, or inattention. Alerts and warnings are issued in response to these cues, providing drivers with timely reminders to refocus or take a break. Beyond simple monitoring, such systems can assess facial expressions, head posture, and even biometric data, offering a comprehensive understanding of the driver’s state. For situations in which drivers are unable to respond effectively, proactive safety interventions become critical. Hyundai Highway Driving Assist exemplifies this approach by integrating automated systems capable of taking over partial control of the vehicle during emergencies. Features like automatic braking, lane-centering, and adaptive cruise control work together to stabilize the vehicle and prevent collisions. In extreme cases, such as when the driver is unresponsive due to a medical event, these systems can maintain vehicle safety until external assistance is available.
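Camera-based fatigue detection is often built on PERCLOS, the proportion of time over a window during which the eyelids are closed (commonly taken as at least 80% closure). Whether the systems named above use this particular metric is not stated here, so the sketch below is only a generic illustration. It assumes a per-frame eyelid-closure estimate between 0.0 (open) and 1.0 (closed) from some upstream eye tracker; the window length and the 0.15 alert level are hypothetical.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS: share of frames in which the eyes are mostly closed."""

    def __init__(self, window_frames: int, closed_threshold: float = 0.8,
                 alert_level: float = 0.15) -> None:
        # closed_threshold follows the common "80% closure" convention;
        # alert_level is an illustrative assumption, not a validated cut-off.
        self.frames = deque(maxlen=window_frames)
        self.closed_threshold = closed_threshold
        self.alert_level = alert_level

    @property
    def perclos(self) -> float:
        """Fraction of frames in the current window counted as eyes closed."""
        return sum(self.frames) / len(self.frames) if self.frames else 0.0

    def update(self, eyelid_closure: float) -> bool:
        """Add one frame (0.0 = fully open, 1.0 = fully closed); return True to alert."""
        self.frames.append(eyelid_closure >= self.closed_threshold)
        if len(self.frames) < self.frames.maxlen:
            return False  # wait until the window is full before judging fatigue
        return self.perclos > self.alert_level
```

At 30 frames per second, for example, a one-minute window would correspond to `window_frames=1800`; a longer window smooths out blinks at the cost of slower detection.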

Furthermore, advances in predictive analytics and machine learning enable HMIs to anticipate potential hazards before they escalate into imminent threats. By analyzing patterns in driving behavior and environmental conditions, these systems can provide preemptive warnings or automatically prepare to intervene when necessary. For example, upcoming intersections, sudden braking patterns from other vehicles, or changing weather conditions can trigger specific adjustments in display information or initiate autonomous responses, further enhancing safety.
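A common building block for such preemptive warnings is time-to-collision (TTC): the current gap to a vehicle ahead divided by the closing speed, TTC = gap / (v_ego − v_lead), which is only finite when the ego vehicle is closing in. The sketch below computes TTC and maps it to a warning level; the 4 s and 2 s thresholds are hypothetical assumptions, not values from any particular system or standard.

```python
import math


def time_to_collision(gap_m: float, ego_speed_mps: float, lead_speed_mps: float) -> float:
    """Seconds until contact at constant speeds; infinite if the gap is not closing."""
    closing_speed = ego_speed_mps - lead_speed_mps
    if closing_speed <= 0:
        return math.inf
    return gap_m / closing_speed


def warning_level(ttc_s: float, warn_s: float = 4.0, critical_s: float = 2.0) -> str:
    """Map TTC to an HMI warning level (thresholds are illustrative assumptions)."""
    if ttc_s <= critical_s:
        return "critical"
    if ttc_s <= warn_s:
        return "warning"
    return "none"
```

For example, a 30 m gap with the ego vehicle at 25 m/s and the lead vehicle at 15 m/s gives a TTC of 3 s, which this sketch classifies as a warning. Real systems typically also account for acceleration, driver reaction time, and road conditions.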

5.2. Robustness of HMI to Failures

5.2.1. The New Paradigm of AV HMIs

The introduction of AVs represents a revolution from a regulatory safety perspective. Traditional vehicle safety regulations are built around human drivers, focusing on rules such as speed limits, signaling, and driver attentiveness. In traditional vehicles, the HMI has always played a critical role in ensuring safety and usability and in minimizing driver distraction. Extensive human factors and ergonomics research has historically focused on optimizing how information is presented to the driver and how controls are designed to reduce errors and cognitive load [50]. What is revolutionary about AVs is that responsibility for safety shifts from the human driver to the HMI and the AI. Unfortunately, potential users may still hold unrealistic representations of AVs, of the driver’s role under automation, or of the impact of full automation on the road transport system [51]. In this context, the term autonomy is somewhat ironic, as it refers not to separation but to a continuous connection and relationship of mutual influence [52].

Public perceptions play a crucial role in the wide adoption of AVs [53]. A major challenge for HMIs is therefore the considerable media impact of accidents involving AVs. For example, the tragic Uber AV accident in Tempe, Arizona, on 18 March 2018 was the first known pedestrian fatality involving a self-driving car. A Volvo XC90 (manufactured by Volvo Cars, Gothenburg, Sweden), operating in AV mode, struck and killed Elaine Herzberg, who was crossing the street outside a crosswalk at night while pushing a bicycle. Although the vehicle’s sensors detected Herzberg about six seconds before impact, the system misclassified her as an “unknown object” and did not initiate emergency braking. The vehicle, traveling at 39 mph, continued on its path and struck her. The human safety driver, who was distracted by a video on her phone, failed to intervene even though she had several seconds to act. An investigation by the National Transportation Safety Board (NTSB) revealed multiple issues [54]. The vehicle’s perception system, which relied on LIDAR, radar, and cameras, did not accurately classify Herzberg as a pedestrian. The decision-making software was tuned to avoid unnecessary braking for non-hazardous objects, and as a result it failed to respond to a real threat. Furthermore, the safety driver’s distraction led to a critical failure in human oversight. The accident highlighted major gaps in both AV technology and the monitoring of human operators, and it prompted calls for stricter regulatory frameworks and for improvements in both AI systems and AV safety protocols [55].

5.2.2. The Evolution of AV Safety Standards

The Vienna Convention on Road Traffic of 1968 [56] is an international treaty, signed by 101 countries as of March 2025, that was adopted to harmonize road traffic rules internationally and improve general road safety. Article 8 of this treaty originally required that “Every moving vehicle or combination of vehicles shall have a driver” and that “Every driver shall at all times be able to control his vehicle”. The treaty was amended in 2014 to allow “Systems influencing the way vehicles are driven”, thus facilitating the legal operation of AVs in signatory countries.

In Europe, safety rules for AVs are governed primarily by the European Union through a combination of regulations, directives, and international agreements. These rules are intended to ensure that AVs are safe for occupants, pedestrians, and other road users.

Regulation (EU) 2019/2144 [57], adopted on 27 November 2019 and applicable since 6 July 2022, is known as the General Safety Regulation for vehicles in the European Union. It mandates ADASs for all new vehicle types and sets the legal foundation for approving automated and driverless vehicles. It covers modern features such as Intelligent Speed Assistance (ISA), Autonomous Emergency Braking (AEB), Driver Drowsiness and Attention Warning (DDAW), and Emergency Lane-Keeping Systems (ELKSs). Further requirements are being phased in until 2029, including the protection of road users outside the vehicle.

Regulation (EU) 2022/1426 [58], effective from 5 August 2022, complements the previously mentioned General Safety Regulation by providing uniform procedures and technical specifications for the type-approval of Automated Driving Systems in fully automated vehicles (SAE Level 4, such as urban shuttles or robotaxis). It includes requirements for testing, cybersecurity, data recording, safety performance monitoring, and incident reporting, ensuring that fully driverless vehicles meet rigorous safety standards before market entry.

5.2.3. Proposal for Universal Safety Framework in Automotive HMIs

While various standards, guidelines, and best practices exist for designing automotive HMIs (e.g., ISO 15007, ISO 9241-110, and NHTSA guidelines), there is currently no unified, universally applied safety framework that harmonizes these requirements across all vehicle systems and manufacturers. Inconsistent application of safety principles can lead to confusion, inefficiencies, and even increased risk for drivers, as interfaces and interaction models differ between vehicles. It is therefore worth exploring the concept of a general and universally applicable HMI Safety Standard that could ensure consistency, reduce ambiguity, and enhance overall user trust and safety across the automotive industry.

Several leading European countries have also started to develop their own regulations. A common ADAS safety regulatory framework at the European or global level would help avoid unnecessary and partially overlapping requirements, while still taking into account the specific road conditions of each country. Such a document could follow the plan proposed below:

1. Aim: definition of the aims of the document:
   - (a) Provide a regulatory framework for the development of ADAS vehicles;
   - (b) Remove unnecessary barriers to the development of ADAS vehicles;
   - (c) Harmonize regulations between countries/member states.

2. Exclusions: concepts excluded from the scope of the document:
   - (a) Liability in case of accident;
   - (b) Cybersecurity.

3. Terms used: definitions of terms and concepts:
   - (a) ADAS/traditional vehicle;
   - (b) Driver/user, occupant, other vehicle, pedestrian, etc.;
   - (c) SAE levels of driving automation;
   - (d) Technical terms: HMI (Human–Machine Interface), ISA (Intelligent Speed Assistance), AEB (Autonomous Emergency Braking), ELKS (Emergency Lane Keeping System), DDAW (Driver Drowsiness and Attention Warning), etc.;
   - (e) Safety terms: ASIL (Automotive Safety Integrity Level), FMEA (Failure Modes and Effects Analysis), etc.

4. Failure types: types of failures or events considered within the safety framework:
   - (a) Hardware failures;
   - (b) Transmission errors.

5. Scale of failures: definition of the failure severity scale and the associated criticality (for example, Catastrophic for loss of life, Hazardous, Major, Minor), with the associated qualitative objective, if any.

6. Quantitative requirements: quantitative safety design requirements. As in aeronautics, a mean time between failures (MTBF) could be allocated to ensure a given system probability of catastrophic failure leading to loss of life; for example, a specific MTBF could be allocated to a critical function such as automatic braking.

7. Qualitative requirements: qualitative safety design requirements:
   - (a) Active redundancy for all critical functions (inspired by the fail-safe design concept in aeronautics);
   - (b) Diverse redundancy for critical software, which first requires identifying the critical functions and the critical software.

8. Design requirements: safety design requirements and means of demonstration.
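Items 5 to 7 can be made concrete with a small calculation. The sketch below borrows, purely as an illustrative assumption, the aeronautical per-hour probability objectives associated with each severity class (as in EASA AMC 25.1309); no such values currently exist for road vehicles. Assuming a constant failure rate, an MTBF translates into a per-hour failure probability of roughly 1/MTBF, and a function protected by active redundancy is lost only if every channel fails. The braking MTBF value and the function names are hypothetical.

```python
import math

# Illustrative per-hour objectives borrowed from aeronautics (AMC 25.1309);
# an assumption for this sketch, NOT an existing road-vehicle requirement.
OBJECTIVE_PER_HOUR = {
    "catastrophic": 1e-9,
    "hazardous": 1e-7,
    "major": 1e-5,
    "minor": 1e-3,
}


def failure_probability(mtbf_hours: float, exposure_hours: float = 1.0) -> float:
    """Probability of at least one failure during the exposure time,
    assuming a constant failure rate lambda = 1 / MTBF (exponential model)."""
    return 1.0 - math.exp(-exposure_hours / mtbf_hours)


def all_channels_fail(channel_probs: list[float]) -> float:
    """Active (parallel) redundancy: the function is lost only if every
    channel fails. Assumes independent failures (no common cause), which is
    the property diverse redundancy tries to approach."""
    return math.prod(channel_probs)


def meets_objective(prob_per_hour: float, severity: str) -> bool:
    """Compare a per-hour failure probability with the severity objective."""
    return prob_per_hour <= OBJECTIVE_PER_HOUR[severity]


# Hypothetical automatic-braking channel with an MTBF of 100,000 hours.
single = failure_probability(1e5)             # roughly 1e-5 per hour
dual = all_channels_fail([single, single])    # roughly 1e-10 per hour
print(f"single: {single:.1e}/h, catastrophic objective met: {meets_objective(single, 'catastrophic')}")
print(f"dual:   {dual:.1e}/h, catastrophic objective met: {meets_objective(dual, 'catastrophic')}")
```

The multiplication only holds if the channels cannot fail for the same reason; a shared power supply or an identical software bug breaks the independence assumption, which is why item 7(b) calls for diverse redundancy in critical software.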

Demonstration could be carried out in several ways. As in other domains, quantitative safety design requirements could be demonstrated through safety analysis techniques such as Petri nets, Markov graphs, Failure Modes and Effects Analysis (FMEA), and Fault Tree Analysis (FTA). They could also be demonstrated through simulation or experience (open-road testing), which could be of particular interest for transmission errors. Such demonstration is difficult to achieve in the aeronautics and space domains because of the relatively low number of operating hours and the difficulty and cost of implementing tests. In the AV domain, by contrast, millions of hours of experience can be accumulated rapidly. Furthermore, as illustrated in Figure 8 and Figure 9, AI can be trained on data covering extremely rare conditions and scenarios.
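To judge how much experience a demonstration by open-road testing actually requires, a standard reliability result can be used. If the failure rate is assumed constant and no failure is observed during T hours, the claim "rate below λ" holds at one-sided confidence C when T ≥ -ln(1 - C)/λ. The sketch below applies this zero-failure formula; the target rates and the 95% confidence level are illustrative assumptions.

```python
import math


def required_failure_free_hours(target_rate_per_hour: float,
                                confidence: float = 0.95) -> float:
    """Failure-free operating hours needed to claim, with one-sided
    confidence `confidence`, that the true failure rate is below the target.

    Assumes a constant failure rate (exponential model) and zero observed
    failures: we need exp(-target * T) <= 1 - confidence, which gives
    T >= -ln(1 - confidence) / target.
    """
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    if target_rate_per_hour <= 0.0:
        raise ValueError("target rate must be positive")
    return -math.log(1.0 - confidence) / target_rate_per_hour


for target in (1e-5, 1e-7, 1e-9):
    hours = required_failure_free_hours(target)
    print(f"target {target:.0e} per hour -> about {hours:.1e} failure-free hours")
```

Under these assumptions, about 300,000 failure-free hours support a claim of 10^-5 failures per hour, whereas a 10^-9 objective would need roughly 3 billion hours. Fleet experience is therefore most useful for moderate targets, while analytical methods and simulation remain necessary for the most critical functions.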

Figure 8.

Intel Mobileye data bank: “Streetlights and traffic lights around the world”. Available online: https://download.intel.com/newsroom/archive/2025/de-de-2022-01-05-mobileyes-selfdriving-secret-200pb-of-data.pdf (accessed on 23 March 2025).

Figure 9.

Intel Mobileye data bank: “Tractors around the world”. Available online: https://download.intel.com/newsroom/archive/2025/de-de-2022-01-05-mobileyes-selfdriving-secret-200pb-of-data.pdf (accessed on 23 March 2025).

In conclusion, AVs demand a regulatory overhaul that moves from human-centric rules to technology-centric safety assurance, blending engineering, ethics [59], and law [60] in unprecedented ways. This shift promises safer roads but, at first, requires a relatively open safety framework that allows the technology to develop.

6. New Challenge: Security

The concept of security encompasses many aspects, including the security of data and communication and resistance to external manipulation. Some security solutions are mandatory for the proper functioning of in-vehicle [61], vehicle-to-vehicle [62], and vehicle-to-everything [63] communication networks. Moreover, the privacy of vehicle telematics data is under threat [64], and the electric vehicle charging process is also exposed [65]. Privacy attacks primarily focus on driver fingerprinting, location inference, and driving-behavior analysis [66].

Cybersecurity risks for the HMIs of AVs in Europe stem from their reliance on complex software, artificial intelligence, and connectivity, which makes them vulnerable to threats such as hacking, data breaches, and malicious interference. These risks could compromise vehicle control, expose sensitive user data, or disrupt communication with infrastructure, potentially leading to severe consequences for both passengers and the broader transport ecosystem. Their consequences are therefore similar to those of safety failures.

To date, no widely documented real-world cyberattack specifically targeting a fully automated vehicle has been publicly confirmed. A notable demonstration of vulnerability occurred in 2015 and involved a Jeep Cherokee which, while not fully automated, had semi-automated features. Security researchers Charlie Miller and Chris Valasek [67] remotely hacked the vehicle via its connected Uconnect infotainment system (Figure 10) and gained control over critical functions, including the brakes, steering, and engine, while the car was being driven on a highway. For instance, they were able to disable the brakes and turn the steering wheel, forcing the vehicle into a ditch at low speed in a controlled experiment. They also showed that a scanning function could identify other vulnerable vehicles, which in principle would allow the attack to spread to and infect them.

Figure 10.

The Jeep Cherokee at the end of the experiment in which it was hacked remotely by security researchers Charlie Miller and Chris Valasek, highlighting the dangers of vulnerabilities in connected vehicles. Available online: https://x.com/WIRED/status/623458457870540800 (accessed on 23 March 2025).

This incident, though staged for research purposes, highlighted the potential risks of cyberattacks on vehicles with automated capabilities. Chrysler subsequently recalled 1.4 million vehicles to patch the vulnerability [68]. The example underscores how a cyberattack could disrupt an AV’s operation and endanger safety if exploited maliciously. Automotive OEMs (Original Equipment Manufacturers) continue to develop strategies against cyberattacks, since attackers will keep trying to gain access to secured systems [69]. New solutions draw on technologies such as artificial intelligence [70], machine learning [71], and blockchain [72].

An important step is to decouple these risks from traditional safety analyses and to define a dedicated framework for security. A further, implicit suggestion is that the risk of incorrect AI behavior should be considered within both the security and the safety frameworks: the consequences of incorrect AI behavior are similar to those of safety failures, but its causes are closer to those of security threats.

To address these challenges, the European Union has implemented robust regulations, notably UNECE Regulation No. 155 [73], which the EU has adopted and which mandates a Cybersecurity Management System (CSMS) for manufacturers. This regulation, which has applied to new vehicle types since July 2022 and to all new vehicles since July 2024, requires risk assessments, continuous monitoring, and incident response measures throughout a vehicle’s lifecycle. Additionally, the EU Cybersecurity Act and the guidelines of ENISA (European Union Agency for Cybersecurity) [74] support a security-by-design approach, ensuring that cybersecurity is embedded from the development phase through to deployment and safeguarding AVs against evolving digital threats.
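The paragraph above mentions risk assessments without detailing how risks are ranked. One common way to do this is a risk matrix combining the impact of a threat with the feasibility of the attack, loosely inspired by the threat analysis and risk assessment (TARA) approach of ISO/SAE 21434. The sketch below is hypothetical throughout: the scales, the additive scoring, the treatment threshold, and the example threats are illustrative assumptions, not values from any regulation or standard.

```python
from dataclasses import dataclass

# Hypothetical ordinal scales, loosely modelled on the impact and attack
# feasibility ratings used in TARA; the values are illustrative only.
IMPACT = {"negligible": 0, "moderate": 1, "major": 2, "severe": 3}
FEASIBILITY = {"very_low": 0, "low": 1, "medium": 2, "high": 3}


@dataclass
class Threat:
    name: str
    impact: str
    feasibility: str

    def risk(self) -> int:
        """Simple additive risk matrix: 1 (lowest) to 7 (highest)."""
        return 1 + IMPACT[self.impact] + FEASIBILITY[self.feasibility]


def prioritize(threats: list[Threat], treat_from: int = 5) -> list[tuple[str, int, bool]]:
    """Sort threats by decreasing risk and flag those needing treatment."""
    ranked = sorted(threats, key=lambda t: t.risk(), reverse=True)
    return [(t.name, t.risk(), t.risk() >= treat_from) for t in ranked]


threats = [
    Threat("Remote code execution via infotainment", "severe", "medium"),
    Threat("Spoofed V2X hazard warning", "major", "medium"),
    Threat("Leak of stored navigation history", "moderate", "high"),
    Threat("Tampering with seat-position profile", "negligible", "low"),
]
for name, score, treat in prioritize(threats):
    print(f"{score}  {'treat' if treat else 'accept'}  {name}")
```

In a lifecycle process such as the one required by the regulation, the same table would be re-scored whenever monitoring reveals a new vulnerability, so that the ranking stays current after the vehicle has left the factory.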

7. New Challenge: Multimodality

Another challenge for vehicle HMIs is multimodal interaction [75,76]. Originally, multimodal interaction denoted the use of multiple senses to explore the environment [77]. In the automotive domain, the term refers to the integration and coordination of various systems, such as satellites, vehicle-to-vehicle (V2V) communication, vehicle-to-infrastructure (V2I) systems, sensors, and human inputs, working together to enable safe and efficient navigation. While these interactions promise to enhance the capabilities of (self-driving) cars, they also introduce new safety challenges.

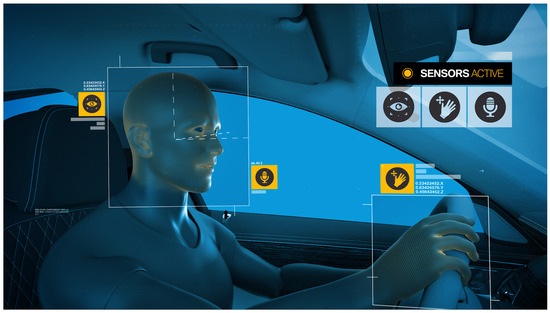

An example of a multimodal interaction system is BMW Natural Interaction, first introduced in 2019 and available from 2021 [78]. It combines voice commands (processed using Natural Language Understanding) with expanded gesture control (precise detection of hand and finger movements and directions) and gaze recognition (see Figure 11). An intelligent learning algorithm fuses and interprets this complex information, and the car responds accordingly.

Figure 11.

BMW Natural Interaction system (BMW, available online: https://www.press.bmwgroup.com (accessed on 23 March 2025)).
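BMW does not describe how its algorithm combines the modalities, so the following is only a hypothetical sketch of the general idea of late fusion: a voice command such as “open this” supplies the action, while gaze and pointing evidence jointly select the object it applies to. The candidate objects, scores, weights, and threshold are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Candidate:
    name: str              # hypothetical controllable object, e.g. "sunroof"
    gaze_score: float      # 0..1: how strongly eye tracking points at it
    gesture_score: float   # 0..1: how well the pointing direction matches it


# Hypothetical fusion weights and threshold; a real system would learn them.
W_GAZE, W_GESTURE = 0.4, 0.6
MIN_SCORE = 0.5


def fused_score(c: Candidate) -> float:
    """Weighted combination of gaze and gesture evidence for one object."""
    return W_GAZE * c.gaze_score + W_GESTURE * c.gesture_score


def resolve_command(intent: str, candidates: list[Candidate]) -> Optional[str]:
    """Late fusion: the voice channel supplies the action (intent), while
    gaze and gesture jointly select the object it applies to. Returns None
    when the evidence is too weak, so the HMI can ask instead of guessing."""
    if not candidates:
        return None
    best = max(candidates, key=fused_score)
    if fused_score(best) < MIN_SCORE:
        return None
    return f"{intent}:{best.name}"


candidates = [Candidate("sunroof", 0.9, 0.8), Candidate("left_window", 0.2, 0.3)]
print(resolve_command("open", candidates))  # open:sunroof
```

Returning no result when the combined evidence is weak reflects a safety-oriented design choice: asking the driver for confirmation is preferable to acting on the wrong object.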

Interaction between the user and the AV has advanced to a higher level [79]. When the driving task is performed entirely by the vehicle, interaction is limited to non-driving-related tasks, such as choosing the driving style or setting the navigation goal, while the AV continuously processes new data to plan comfortable motions [80]. This raises several technical issues that are crucial for safety.

7.1. Dependence on Satellite Positioning System

AVs often rely on Global Navigation Satellite Systems (GNSSs) like GPS for precise positioning [81]. However, this introduces several safety concerns:

- Signal Interference and Spoofing: GNSS signals can be disrupted by natural phenomena (e.g., solar flares) or “urban canyons” (tall buildings blocking signals). Worse, malicious actors can spoof signals [82], feeding false location data to a vehicle, potentially causing it to misjudge its position and crash.

- Latency: Even slight delays in satellite data transmission can be problematic for a vehicle moving at high speed, where split-second decisions are critical. Furthermore, satellite navigation systems are not always designed for safety-critical applications.

- Loss of Signal: In tunnels, dense forests, or remote areas, signal loss can leave a vehicle “blind”, forcing it to rely solely on onboard sensors.
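A simple defense against spoofing, glitches, and signal loss is to check each GNSS fix against the displacement predicted from on-board odometry (wheel speed and heading). The sketch below is a simplified two-dimensional version under stated assumptions: the tolerance values are illustrative, the function names are hypothetical, and a production system would typically use a full estimation filter rather than a fixed gate.

```python
import math
from dataclasses import dataclass


@dataclass
class GnssFix:
    x: float            # metres east in a local frame
    y: float            # metres north
    valid: bool = True  # False when the receiver has no solution (tunnel, canyon)


def dead_reckon(pos: tuple[float, float], speed_mps: float,
                heading_rad: float, dt: float) -> tuple[float, float]:
    """Propagate the last trusted position with odometry only."""
    return (pos[0] + speed_mps * dt * math.cos(heading_rad),
            pos[1] + speed_mps * dt * math.sin(heading_rad))


def check_fix(prev: tuple[float, float], fix: GnssFix, speed_mps: float,
              heading_rad: float, dt: float, base_tol_m: float = 5.0,
              drift_m_per_s: float = 0.5) -> tuple[str, tuple[float, float]]:
    """Return (status, position to use).

    'ok'      GNSS agrees with odometry and is used;
    'lost'    no GNSS solution, fall back to odometry;
    'suspect' GNSS jumped away from the odometry prediction (possible
              spoofing or multipath); odometry is used and the caller
              may raise an alert.
    """
    predicted = dead_reckon(prev, speed_mps, heading_rad, dt)
    if not fix.valid:
        return "lost", predicted
    tolerance = base_tol_m + drift_m_per_s * dt  # odometry drifts over time
    if math.dist(predicted, (fix.x, fix.y)) > tolerance:
        return "suspect", predicted
    return "ok", (fix.x, fix.y)


print(check_fix((0.0, 0.0), GnssFix(21.0, 1.0), 20.0, 0.0, 1.0))   # ('ok', (21.0, 1.0))
print(check_fix((0.0, 0.0), GnssFix(120.0, 0.0), 20.0, 0.0, 1.0))  # ('suspect', (20.0, 0.0))
```

Because odometry error grows with time, a vehicle that stays in the 'lost' or 'suspect' state for long cannot rely on dead reckoning indefinitely, which links this check to the fail-safe questions discussed below.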

7.2. V2V and V2I Communication

V2V and V2I systems use wireless communication (e.g., Dedicated Short-Range Communications or 5G) to share real-time data (speed, position, or road hazards) between vehicles [83] and infrastructure (e.g., traffic lights) [84]. Safety issues here include the following:

- Cybersecurity Risks: These communication channels are vulnerable to hacking. A compromised system could broadcast false information, such as a nonexistent obstacle, causing vehicles to brake suddenly or swerve dangerously.

- Data Overload: With potentially hundreds of vehicles and devices communicating simultaneously, an AV’s AI might struggle to filter relevant signals from noise, leading to delayed or incorrect responses.

- Interoperability: Different manufacturers may use incompatible protocols, reducing the effectiveness of V2V/V2I networks and creating blind spots in situational awareness.
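To illustrate how data overload and false broadcasts might be handled, the sketch below discards V2V/V2I hazard reports that are too distant or too old, and acts on a hazard only when several distinct senders report it or when the vehicle’s own sensors already see it. The message fields, thresholds, grid size, and corroboration rule are hypothetical; deployed systems rely on standardized message sets and security services (such as those defined in IEEE 1609.2).

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardMsg:
    sender_id: str
    x: float          # reported hazard position, metres in a local frame
    y: float
    timestamp: float  # seconds


def is_relevant(msg: HazardMsg, ego: tuple[float, float], now: float,
                max_range_m: float = 300.0, max_age_s: float = 2.0) -> bool:
    """Drop reports that are too far away or too old to matter (data overload)."""
    age = now - msg.timestamp
    return math.dist(ego, (msg.x, msg.y)) <= max_range_m and 0.0 <= age <= max_age_s


def confirmed_hazards(msgs: list[HazardMsg], ego: tuple[float, float], now: float,
                      seen_by_sensors: set[tuple[int, int]], cell_m: float = 10.0,
                      min_senders: int = 2) -> set[tuple[int, int]]:
    """Group relevant reports into grid cells and keep a cell only if at least
    `min_senders` distinct senders report it or on-board sensors already see
    it, so that a single false broadcast cannot trigger emergency braking."""
    senders: dict[tuple[int, int], set[str]] = {}
    for m in msgs:
        if is_relevant(m, ego, now):
            cell = (math.floor(m.x / cell_m), math.floor(m.y / cell_m))
            senders.setdefault(cell, set()).add(m.sender_id)
    return {c for c, s in senders.items()
            if len(s) >= min_senders or c in seen_by_sensors}
```

Counting distinct senders only helps if sender identities are authenticated; otherwise a single attacker can pose as several vehicles (a Sybil attack), which is why corroboration complements, rather than replaces, message security.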

7.3. Data Collection

Multimodal interaction often involves combining data from satellites, radar, LIDAR, cameras, and ultrasonic sensors. While this fusion aims to create a robust perception system, it is not foolproof. These issues are similar to those encountered in aircraft, which brings the two domains closer and suggests adapting the aeronautical safety approach to AVs [85]. The following aspects need to be taken into account:

- Conflicting Inputs: If satellite positioning places the vehicle in one location but LIDAR suggests another (e.g., due to a map error or sensor glitch), the system must resolve this discrepancy quickly. Failure to do so could lead to navigation errors.

- Environmental Limitations: Heavy rain, fog, or snow can degrade camera and LIDAR performance, forcing over-reliance on satellite or V2V data, which might also be impaired.

- Processing Demands: Real-time integration of multimodal data requires immense computational power. Any lag or failure in processing could delay critical actions like braking or steering.
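The “Conflicting Inputs” case can be made concrete with a standard consistency test: two position estimates with known uncertainties are fused by inverse-variance weighting only if their difference is plausible given those uncertainties. The one-dimensional sketch below assumes independent Gaussian errors; the 3-sigma gate and the example variances are illustrative choices.

```python
import math
from typing import Optional


def fuse_positions(x_gnss: float, var_gnss: float, x_lidar: float, var_lidar: float,
                   gate_sigma: float = 3.0) -> Optional[tuple[float, float]]:
    """Fuse two 1-D position estimates (metres, variances in m^2).

    Returns (estimate, variance) by inverse-variance weighting when the two
    inputs are statistically consistent, or None when they conflict, in
    which case averaging would hide the fault and a supervisory function
    must decide (e.g. distrust one source or degrade).
    """
    innovation = abs(x_gnss - x_lidar)
    if innovation > gate_sigma * math.sqrt(var_gnss + var_lidar):
        return None
    w_gnss, w_lidar = 1.0 / var_gnss, 1.0 / var_lidar
    estimate = (w_gnss * x_gnss + w_lidar * x_lidar) / (w_gnss + w_lidar)
    return estimate, 1.0 / (w_gnss + w_lidar)


print(fuse_positions(100.0, 4.0, 102.0, 1.0))  # (101.6, 0.8)
print(fuse_positions(100.0, 4.0, 130.0, 1.0))  # None: 30 m apart, gate is about 6.7 m
```

Returning no estimate on conflict, rather than silently averaging, keeps the discrepancy visible so that it can be resolved quickly, as the text requires.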

7.4. Interaction with Human-Driven Vehicles

In mixed traffic environments [86], AVs must predict the behavior of human drivers, who do not communicate via V2V. Multimodal systems might misinterpret human actions when satellite or sensor data do not match unpredictable human behavior, for example a sudden lane change that is not signaled to the infrastructure.

7.5. Fail-Safe Mechanisms

A system is said to be fail-safe if, in case of any fault, it can cease its functionality and transition to a well-defined safe state [87]. A key safety issue is therefore what happens when the nominal mode fails. For example:

- If satellite connectivity drops and V2V is unavailable (e.g., in a rural area), the vehicle must rely on onboard sensors alone, which might not detect distant hazards.

- Over-reliance on any single mode (e.g., satellite navigation) without robust backups increases the risk of catastrophic failure.
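The fallback cases above can be organized as a mode-selection function that always ends in a defined state. In the hypothetical sketch below, the vehicle stays in nominal mode only while localization has at least two independent sources, continues in a degraded mode (reduced speed, takeover request through the HMI) when one remains, and otherwise performs a minimal-risk maneuver, which plays the role of the safe state. The mode names and the two-source rule are assumptions.

```python
from enum import Enum


class Mode(Enum):
    NOMINAL = "nominal"
    DEGRADED = "degraded"          # reduced speed, takeover request via the HMI
    MINIMAL_RISK = "minimal_risk"  # pull over and stop: the well-defined safe state


def select_mode(gnss_ok: bool, v2x_ok: bool, odometry_ok: bool,
                perception_ok: bool) -> Mode:
    """Choose an operating mode from the health of each source.

    Hypothetical policy: on-board perception is mandatory; localization needs
    at least two independent sources to stay nominal, one source allows a
    degraded mode, and none forces the minimal-risk maneuver.
    """
    if not perception_ok:
        return Mode.MINIMAL_RISK
    localization_sources = sum([gnss_ok, v2x_ok, odometry_ok])
    if localization_sources >= 2:
        return Mode.NOMINAL
    if localization_sources == 1:
        return Mode.DEGRADED
    return Mode.MINIMAL_RISK


# Rural area: satellite fix lost and no V2V, only odometry/inertial left.
print(select_mode(gnss_ok=False, v2x_ok=False, odometry_ok=True, perception_ok=True))  # Mode.DEGRADED
```

Making every combination of failures map explicitly to a mode is what distinguishes a fail-safe design from one that merely tolerates the most common faults.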

Again, these kinds of issues are similar to those encountered in aircraft, suggesting that AVs are converging with aircraft from a safety point of view.

7.6. Mitigation Strategies

Several mitigation strategies are possible:

- Redundant Systems: Vehicles need backups, for example inertial navigation to complement GNSSs during signal loss.

- Advanced Encryption: Protecting V2V/V2I communication from cyberattacks is critical.

- AI Robustness: Machine learning models could be trained to handle conflicting multimodal inputs and prioritize safety-critical data.

- Standardization: Industry-wide protocols for multimodal interaction could reduce interoperability risks.

- Avoid, Limit, or Ban Multimodal Interaction: This radical solution could be considered if the drawbacks of multimodal interaction outweigh its benefits.

In short, multimodal interaction is a double-edged sword for AVs. It enhances their ability to perceive and react to the world, but it also introduces complex safety risks stemming from reliance on external systems, cybersecurity vulnerabilities, and the challenge of integrating diverse data streams in real time. As the technology evolves, balancing these factors will be key to making self-driving cars both capable and trustworthy.
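For safety warnings exchanged over V2V/V2I, what matters most is often that a receiver can reject forged or replayed messages (integrity and authenticity), which cryptographic authentication provides even without encryption. Deployed systems use certificate-based digital signatures (for example, IEEE 1609.2 in the US and the ETSI ITS security standards in Europe). The sketch below substitutes a shared-key HMAC from the Python standard library purely to show the verify-then-check-freshness pattern; the key, message format, and freshness window are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical shared key: a stand-in for the certificate-based signatures
# used in deployed V2X systems. Do not use a shared key like this in practice.
DEMO_KEY = b"demo-key-not-for-production"


def sign(payload: dict, key: bytes = DEMO_KEY) -> dict:
    """Serialize the payload canonically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify(msg: dict, now: float, key: bytes = DEMO_KEY,
           max_age_s: float = 1.0) -> Optional[dict]:
    """Return the payload only if the tag is valid and the message is fresh."""
    expected = hmac.new(key, msg["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["tag"]):
        return None  # forged or corrupted in transit
    payload = json.loads(msg["body"])
    if not 0.0 <= now - payload["timestamp"] <= max_age_s:
        return None  # stale, or replayed outside the freshness window
    return payload


t0 = 1000.0
msg = sign({"type": "obstacle", "x": 120.0, "y": 3.5, "timestamp": t0})
forged = {**msg, "body": msg["body"].replace("120.0", "12.0")}
print(verify(msg, t0 + 0.2) is not None)  # True: authentic and fresh
print(verify(forged, t0 + 0.2))           # None: tag no longer matches
print(verify(msg, t0 + 5.0))              # None: too old
```

A replay inside the freshness window would still be accepted here; real protocols add sequence numbers and per-sender certificates so that individual vehicles can be identified and revoked.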

8. New Challenge: Enhancing Emotional Connection

The design of an automotive HMI significantly impacts the overall user experience by enhancing usability, interaction efficiency, and safety. A well-designed HMI streamlines interactions, enabling drivers to access essential vehicle functions quickly and intuitively without diverting their attention from the road. By minimizing the number of steps needed to perform a task, such as adjusting the climate control or changing the radio station, the HMI improves efficiency and reduces cognitive load, allowing drivers to focus on the driving task itself [88].

Personalization and customization further enhance the user experience by tailoring the interface to individual preferences, such as adjusting settings for seat positions, favorite music, or preferred driving modes. This adaptability fosters a sense of comfort and control, making the driving experience more enjoyable and engaging. In terms of safety, reducing driver distraction is a critical goal, and effective HMI design can play a major role in this. Clear, well-organized interfaces, combined with voice command systems and intuitive touch gestures, allow drivers to perform tasks with minimal visual attention, ensuring they remain focused on the road.

Moreover, emotional design is an increasingly important aspect of automotive HMIs [89]. It aims to create a positive emotional connection between the user and the vehicle through aesthetically pleasing interfaces, seamless interaction flows, and responsive feedback that make using the vehicle feel enjoyable and rewarding, ultimately enhancing user satisfaction and loyalty. By integrating functionality, safety, and emotional appeal, HMI design can transform a car from a simple mode of transportation into a personalized, intuitive, and engaging environment. Designers may benefit from including emotional design features [90], as some emotional associations are similar across cultures (e.g., in Europe and in Asia). Emotions influence the way drivers react to various factors, so influencing driver emotions can contribute to safer and more attentive driving. For example, music can be used as a multimodal strategy to mitigate the effects of anger on driving performance [91]. Research is also under way on regulating situational driving anger through multimodal interaction-based approaches [92].

One of the most innovative and user-friendly interfaces is that of the Polestar Precept, which combines user comfort, safety, and security. Its advertising slogan sums this up aptly: “You don’t always need to know everything. At least, not while you’re driving.” Precept’s HMI only displays what is needed, when it is needed [93]. Here, the driver’s attention is considered the crucial element: the displays adapt to the driver’s movements using eye-tracking technology and proximity sensors, and the screen dims when the driver is focused on the road, to prevent information overload (see Figure 12).

Figure 12.

Polestar Precept HMI: Driver’s concentration on the road (left) and the screen (right) (Polestar, available online: https://www.polestar.com/global/precept/hmi/ (accessed on 23 March 2025)).
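Polestar does not publish how Precept implements this behavior, so the following is only a hypothetical sketch of gaze- and proximity-driven display control: the screen dims after the driver’s gaze has stayed on the road for a short dwell time and returns to full brightness as soon as a glance or an approaching hand suggests the driver wants to use it. The gaze labels, dwell time, and brightness levels are assumptions.

```python
from dataclasses import dataclass


@dataclass
class CenterDisplay:
    dim_after_s: float = 2.0   # hypothetical dwell time on the road before dimming
    full_level: float = 1.0
    dim_level: float = 0.2
    brightness: float = 1.0
    road_dwell_s: float = 0.0

    def update(self, dt: float, gaze: str, hand_near: bool) -> float:
        """Call once per sensor frame.

        gaze: 'road', 'screen' or 'other' (output of a hypothetical eye tracker);
        hand_near: proximity sensor reports a hand approaching the screen.
        Returns the brightness level to apply.
        """
        if hand_near or gaze == "screen":
            # The driver wants the information: show it immediately.
            self.road_dwell_s = 0.0
            self.brightness = self.full_level
        elif gaze == "road":
            self.road_dwell_s += dt
            if self.road_dwell_s >= self.dim_after_s:
                self.brightness = self.dim_level
        else:
            # Mirrors, passengers, etc.: keep the current level, restart the timer.
            self.road_dwell_s = 0.0
        return self.brightness


display = CenterDisplay()
for _ in range(30):                            # about 3 s of attention on the road
    level = display.update(0.1, "road", False)
print(level)                                   # 0.2 (dimmed)
print(display.update(0.1, "screen", False))    # 1.0 (glance at the screen)
```

The asymmetry is deliberate: dimming waits for sustained attention on the road, whereas brightening reacts at once, so the driver never has to wait for information they are actively looking for.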

Another example of emotional connection is a system that detects, through facial recognition or voice analysis, that the driver appears stressed or tired [94]. The vehicle could then automatically adjust the lighting, play calming music, or change the cabin temperature to create a soothing atmosphere. This indirectly improves the safety of the trip and fosters the driver’s trust in the AV.

A concrete example can be seen in Audi’s “AI:ME” concept car, which includes emotional AI that reacts to the passengers’ feelings (Figure 13). The vehicle can recognize when the driver is anxious and activate features such as ambient lighting, relaxing music, or calming visual displays on the dashboard. The AI might also offer gentle, reassuring messages such as “It looks like you’re having a busy day. Let me take care of the driving so you can relax.” As seen in Figure 4, personalization is directly related not only to multimodality but also to safety and connectivity. Multimodal alerts are considered prominent, useful, and effective [95].

Figure 13.

The dashboard of Audi’s empathetic AI:ME, taken from https://www.engadget.com/2020-01-06-audi-ai-me-smart-ev-concept-car.html (accessed on 23 March 2025).

9. Future Challenges and Conclusions

Designing automotive HMIs entails navigating a complex landscape of challenges to achieve a delicate balance between user experience and safety [2]. Developers are tasked with creating intuitive and engaging interfaces that convey critical information without distracting the driver, a requirement made increasingly difficult by the growing sophistication and integration of in-vehicle technologies. As vehicles evolve into connected ecosystems featuring infotainment systems, navigation tools, and ADASs, ensuring seamless technological cohesion becomes paramount. Poor integration can lead to functional conflicts, information overload, or an overwhelming user experience, which compromises both usability and safety [96,97]. Moreover, users often prioritize comfort and ease of use over security [98].

One of the greatest challenges in HMI design is striking the right balance between offering comprehensive features and maintaining an intuitive interface. Modern vehicles offer a vast array of functionalities, from voice assistants and gesture controls to customizable displays, that aim to enhance the general driving experience. However, too many features can overwhelm users, particularly those unfamiliar with advanced technology. Designers must prioritize essential features and organize them so that they can be accessed quickly and easily while minimizing cognitive load. In addition, it is essential that humans and vehicles accurately understand each other’s intentions and actions [99].

The global nature of the automotive market introduces the challenge of catering to a wide spectrum of users with varying levels of technological familiarity, physical abilities, and cultural expectations. For instance, older users may find touch-based interfaces less intuitive than younger, tech-savvy drivers, and they usually require more button presses to perform non-driving-related activities [100]. A useful interface should therefore take into account the age-related disabilities of older users [101]. Similarly, cultural differences can influence user preferences for color schemes, symbols, or the level of automation (e.g., UK versus Indian users [102]). Designers must create adaptable systems that accommodate diverse needs, ensuring accessibility and inclusivity across all demographics [103].

As vehicles become increasingly connected, concerns about data privacy and cybersecurity have grown. HMIs now manage a wealth of sensitive data, including location information, personal preferences, and biometric identifiers [104]. Ensuring robust data protection measures within the HMI is essential to safeguard user privacy and prevent unauthorized access. This challenge is compounded by the need to maintain a seamless user experience while implementing stringent security protocols, such as multi-factor authentication [105] or encrypted data transmission [106].

Driving requires constant attention, and HMIs must be designed to minimize distractions while providing necessary information. Developers face the challenge of presenting data in a way that supports situational awareness without overwhelming the driver. Features such as HUDs and auditory alerts are often employed to mitigate distraction, but these systems must be carefully tuned to avoid becoming intrusive or counterproductive [107]. Additionally, accounting for cognitive differences among users—such as varying levels of attentiveness and adaptability to new technologies—is critical for ensuring safety and usability.
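One way to keep alerts from becoming intrusive is to escalate them progressively with urgency instead of triggering every modality at once. The sketch below maps a time-to-collision (TTC) value to a set of alert modalities; the thresholds and modality names are illustrative assumptions, not values taken from a standard.

```python
from typing import Optional


def time_to_collision(gap_m: float, closing_speed_mps: float) -> Optional[float]:
    """TTC = gap / closing speed; None when the gap is not closing."""
    if closing_speed_mps <= 0.0:
        return None
    return gap_m / closing_speed_mps


def alert_modalities(ttc_s: Optional[float]) -> list[str]:
    """Escalate from a discreet visual cue to multimodal warnings.
    Thresholds (4.0, 2.5, 1.5 s) are hypothetical."""
    if ttc_s is None or ttc_s > 4.0:
        return []                                    # nothing to show
    if ttc_s > 2.5:
        return ["hud_icon"]                          # informative, low intrusion
    if ttc_s > 1.5:
        return ["hud_icon", "chime"]                 # cautionary
    return ["hud_flash", "alarm", "seat_vibration"]  # imminent: all channels


for gap in (50.0, 30.0, 20.0, 10.0):
    ttc = time_to_collision(gap, 10.0)
    print(gap, ttc, alert_modalities(ttc))
```

Reserving the most intrusive channels for imminent situations is intended to preserve their alerting value; if they fired frequently, drivers would learn to ignore them.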

The rapid pace of technological advancement presents another challenge in automotive HMI design. As new innovations such as AR, AI, and V2X communication emerge, designers must integrate these features into HMIs in a manner that feels seamless and intuitive [108]. This requires staying ahead of industry standards, anticipating user expectations, and ensuring compatibility with legacy systems. The iterative nature of software updates further complicates this process, as designers must ensure that updates do not disrupt the user experience or introduce new vulnerabilities.

Designing an effective automotive HMI demands rigorous usability testing under real-world conditions [109]. Simulated environments often fail to capture the full complexity of driving scenarios, making it essential to test interfaces in diverse contexts [110]. Factors such as lighting conditions, road types, and user stress levels can significantly impact HMI performance. Gathering user feedback and iteratively refining the design are crucial steps in creating interfaces that are both effective and resilient. Here, AR offers new possibilities for enhancing automotive usability testing [111]. Overcoming these challenges is vital for developing automotive HMIs that not only enhance the driving experience but also uphold the highest standards of safety, accessibility, and reliability. As the automotive industry continues to evolve, HMIs will become increasingly central to shaping the future of mobility, as the occupant’s role shifts from driver to passenger [112].

10. Conclusions

This narrative review underlines that technological evolution has made HMIs central to the design of modern automobiles. On the one hand (as seen in Section 2, Section 3 and Section 4), the evolution of HMIs represents a groundbreaking shift in automotive design, with intuitive interfaces, artificial intelligence, and augmented reality paving the way for a more immersive and efficient driving experience. These innovations enable vehicles to interact with drivers and passengers in new and intuitive ways, enhancing both convenience and user engagement. The UX can be significantly improved, and one of the most interesting challenges will be to make the HMI even more protective of and friendly to the driver (as discussed in Section 8). On the other hand, as these technologies evolve, they also introduce new challenges, especially in terms of security and safety, because they place significant responsibility on the HMI (as developed in Section 5, Section 6 and Section 7). Ensuring that these advanced interfaces are sufficiently robust against failures or unexpected events, do not distract drivers unnecessarily, and do not compromise security will be critical to realizing the full potential of autonomous vehicles.

Finally, a kind of “trilemma” can be seen emerging in the technological evolution of HMI design: optimizing one of the three aspects (UX, safety, and security) can come at the expense of the other two:

1. Improving UX can compromise security: To enhance the user experience, designers might create seamless, easy-to-use interfaces, but this could reduce security if these systems are not well protected. For instance, voice-activated features or mobile applications that control vehicle functions might be more convenient for users but could also open the door to unauthorized access or hacking.

2. Enhancing safety can reduce UX: Implementing systems that prioritize safety, such as automatic intervention mechanisms or redundant control systems, might make the vehicle safer but could interfere with a smooth and enjoyable user experience. For example, a vehicle may activate its braking system aggressively in an emergency, which passengers could perceive as jarring or unpleasant, thus affecting their overall experience.

3. Security measures can hinder UX: The more security measures are implemented, such as authentication processes or encryption layers, the more complex and time-consuming interactions may become for the user. For instance, requiring constant security checks or biometric identification for access to the vehicle might inconvenience users and make the experience feel less seamless.

Finding the right balance between UX, security, and safety in autonomous vehicles will therefore be one of the most significant challenges for manufacturers and designers in the near future.

Author Contributions

Conceptualization, I.G., M.H. and D.M.; investigation, I.G., M.H. and D.M.; writing—original draft preparation, I.G., M.H. and D.M.; writing—review and editing, I.G. and D.M.; supervision, I.G.; project administration, I.G.; funding acquisition, I.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Burnett, G. Designing and evaluating in-car user-interfaces. In Human Computer Interaction: Concepts, Methodologies, Tools, and Applications; IGI Global Scientific Publishing: Hershey, PA, USA, 2009; pp. 532–551. [Google Scholar]

- Zhang, X.; Liao, X.P.; Tu, J.C. A Study of Bibliometric Trends in Automotive Human–Machine Interfaces. Sustainability 2022, 14, 9262. [Google Scholar] [CrossRef]

- Floridi, L. Autonomous Vehicles: From Whether and When to Where and How. In Ethics, Governance, and Policies in Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2021; pp. 347–352. [Google Scholar]

- Patel, N.; Bhoi, A.K.; Padmanaban, S.; Holm-Nielsen, J.B. Electric Vehicles; Springer: Berlin/Heidelberg, Germany, 2021. [Google Scholar]

- Haas, R.E.; Bhattacharjee, S.; Möller, D.P. Advanced driver assistance systems. In Smart Technologies: Scope and Applications; Springer: Berlin/Heidelberg, Germany, 2019; pp. 345–371. [Google Scholar]

- Lee, S.C.; Ji, Y.G. Complexity of in-vehicle controllers and their effect on task performance. Int. J. Hum.-Comput. Interact. 2019, 35, 65–74. [Google Scholar] [CrossRef]

- Gong, Z. Challenges and Opportunities of Automotive HMI. In Proceedings of the International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2025; pp. 23–35. [Google Scholar]

- Ma, J.; Gong, Y.; Xu, W. Predicting User Preference for Innovative Features in Intelligent Connected Vehicles from a Cultural Perspective. World Electr. Veh. J. 2024, 15, 130. [Google Scholar] [CrossRef]

- Boboc, R.G.; Gîrbacia, F.; Butilă, E.V. The application of augmented reality in the automotive industry: A systematic literature review. Appl. Sci. 2020, 10, 4259. [Google Scholar] [CrossRef]

- Young, G.; Milne, H.; Griffiths, D.; Padfield, E.; Blenkinsopp, R.; Georgiou, O. Designing mid-air haptic gesture controlled user interfaces for cars. Proc. ACM Hum.-Comput. Interact. 2020, 4, 1–23. [Google Scholar] [CrossRef]

- Alsayaydeh, J.A.J.; Indra, W.A.; Khang, W.; Shkarupylo, V.; Jkatisan, D. Development of vehicle ignition using fingerprint. ARPN J. Eng. Appl. Sci. 2019, 14, 4045–4053.

- Gao, L.; Xiong, L.; Xia, X.; Lu, Y.; Yu, Z.; Khajepour, A. Improved Vehicle Localization Using On-Board Sensors and Vehicle Lateral Velocity. IEEE Sens. J. 2022, 22, 6818–6831.

- Bonfati, L.V.; Mendes Junior, J.J.A.; Siqueira, H.V.; Stevan, S.L. Correlation Analysis of In-Vehicle Sensors Data and Driver Signals in Identifying Driving and Driver Behaviors. Sensors 2023, 23, 263.

- Zhao, X.; Fang, Y.; Min, H.; Wu, X.; Wang, W.; Teixeira, R. Potential sources of sensor data anomalies for autonomous vehicles: An overview from road vehicle safety perspective. Expert Syst. Appl. 2024, 236, 121358.

- Parekh, D.; Poddar, N.; Rajpurkar, A.; Chahal, M.; Kumar, N.; Joshi, G.P.; Cho, W. A review on autonomous vehicles: Progress, methods and challenges. Electronics 2022, 11, 2162.

- Wang, J.; Zhang, L.; Huang, Y.; Zhao, J. Safety of autonomous vehicles. J. Adv. Transp. 2020, 2020, 8867757.

- Mariani, R. An overview of autonomous vehicles safety. In Proceedings of the 2018 IEEE International Reliability Physics Symposium (IRPS), Burlingame, CA, USA, 11–15 March 2018; p. 6A-1.

- ISO 15007; Road Vehicles—Measurement of Driver Visual Behaviour Using Eye Tracking. International Organization for Standardization (ISO): Geneva, Switzerland, 2020.

- ISO 9241-110; Ergonomics of Human-System Interaction—Part 110: Interaction Principles. International Organization for Standardization (ISO): Geneva, Switzerland, 2020. Available online: https://www.iso.org/obp/ui/#iso:std:iso:9241:-110:ed-2:v1:en (accessed on 7 May 2025).

- Mandujano-Granillo, J.A.; Candela-Leal, M.O.; Ortiz-Vazquez, J.J.; Ramirez-Moreno, M.A.; Tudon-Martinez, J.C.; Felix-Herran, L.C.; Galvan-Galvan, A.; Lozoya-Santos, J.d.J. Human-Machine Interfaces: A Review for Autonomous Electric Vehicles. IEEE Access 2024, 12, 121635–121658.

- Tester, J.; Fogg, B.; Maile, M. CommuterNews: A prototype of persuasive in-car entertainment. In Proceedings of the CHI’00 Extended Abstracts on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 24–25.

- LRD, M.; Kumar, G.; Madan, M.; Deshmukh, S.; Biswas, P. Efficient interaction with automotive heads-up displays using appearance-based gaze tracking. In Proceedings of the 14th International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI ’22), Seoul, Republic of Korea, 17–20 September 2022; pp. 99–102. Available online: https://dl.acm.org/doi/abs/10.1145/3544999.3554818 (accessed on 8 May 2025).

- Green, P. Driver Interface/HMI Standards to Minimize Driver Distraction/Overload; Technical Report; SAE Technical Paper: Warrendale, PA, USA, 2008.

- National Highway Traffic Safety Administration (NHTSA). Visual-Manual NHTSA Driver Distraction Guidelines for In-Vehicle Electronic Devices. Report No. DOT HS 811 141. 2013. Available online: https://www.nhtsa.gov/sites/nhtsa.gov/files/distraction_npfg-02162012.pdf (accessed on 7 May 2025).

- Regan, M.A.; Hallett, C.; Gordon, C.P. Driver distraction and driver inattention: Definition, relationship and taxonomy. Accid. Anal. Prev. 2011, 43, 1771–1781.

- Pettersson, I.; Ju, W. Design techniques for exploring automotive interaction in the drive towards automation. In Proceedings of the 2017 Conference on Designing Interactive Systems, Edinburgh, UK, 10–14 June 2017; pp. 147–160.

- Akamatsu, M.; Green, P.; Bengler, K. Automotive technology and human factors research: Past, present, and future. Int. J. Veh. Technol. 2013, 2013, 526180.

- Kindelsberger, J.; Fridman, L.; Glazer, M.; Reimer, B. Designing toward minimalism in vehicle HMI. arXiv 2018, arXiv:1805.02787.

- Liu, W.; Li, Q.; Wang, Z.; Wang, W.; Zeng, C.; Cheng, B. Research on the Effects of in-Vehicle Human-Machine Interface on Drivers’ Pre and Post Takeover Request Eye-tracking Characteristics. In Proceedings of the 2022 2nd International Conference on Intelligent Technologies (CONIT), Hubli, India, 24–26 June 2022; pp. 1–9.

- Althoff, F.; Lindl, R.; Walchshäusl, L. Robust multimodal hand- and head-gesture recognition for controlling automotive infotainment systems. In VDI-Tagung: Der Fahrer im 21. Jahrhundert; BMW Group Research and Technology: Munich, Germany, 2005.

- Sterkenburg, J.; Landry, S.; Jeon, M. Design and evaluation of auditory-supported air gesture controls in vehicles. J. Multimodal User Interfaces 2019, 13, 55–70.

- Gomaa, A. Adaptive user-centered multimodal interaction towards reliable and trusted automotive interfaces. In Proceedings of the 2022 International Conference on Multimodal Interaction, Bengaluru, India, 7–11 November 2022; pp. 690–695.

- Chang, X.; Chen, Z.; Dong, X.; Cai, Y.; Yan, T.; Cai, H.; Zhou, Z.; Zhou, G.; Gong, J. “It Must Be Gesturing Towards Me”: Gesture-Based Interaction between Autonomous Vehicles and Pedestrians. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–25.

- White, K.; Ruback, H.; Sedivy, J.; Kojima, K.; Kondo, K. Advanced Development of Speech Enabled Voice Recognition Enabled Embedded Navigation Systems; Technical Report; SAE Technical Paper: Warrendale, PA, USA, 2006.

- Graham, J. Amazon makes request: ‘Alexa, get in the car’. In USA Today; Gale: Farmington Hills, MI, USA, 2018; p. 03B.

- Ghosh, S.; Chowdhury, P. Google Controlled Car. In Trends in Wireless Communication and Information Security: Proceedings of EWCIS 2020; Springer: Berlin, Germany, 2021; pp. 311–317.

- Dolman, S.; Wynne, R.A. ‘Do Not Disturb While Driving’: Distractibility of Tesla In-Vehicle Infotainment System. In Contemporary Ergonomics and Human Factors; CIEHF: London, UK, 2024.

- Zengeler, N.; Kopinski, T.; Handmann, U. Hand gesture recognition in automotive human–machine interaction using depth cameras. Sensors 2019, 19, 59.

- Ma, J.; Ding, Y. The impact of in-vehicle voice interaction system on driving safety. J. Phys. Conf. Ser. 2021, 1802, 042083.

- Kettle, L.; Lee, Y.C. Augmented reality for vehicle-driver communication: A systematic review. Safety 2022, 8, 84.

- Fadden, S.; Ververs, P.M.; Wickens, C.D. Costs and benefits of head-up display use: A meta-analytic approach. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; Sage Publications: Los Angeles, CA, USA, 1998; Volume 42, pp. 16–20.

- Pečečnik, K.S.; Tomažič, S.; Sodnik, J. Design of head-up display interfaces for automated vehicles. Int. J. Hum.-Comput. Stud. 2023, 177, 103060.

- Tarnowski, T.; Haidenthaler, R.; Pohl, M.; Pross, A. Mercedes-Benz MBUX Hyperscreen Merges Technologies into Digital Dashboard Application. Inf. Disp. 2022, 38, 12–17.

- Gupta, S.; Amaba, B.; McMahon, M.; Gupta, K. The evolution of artificial intelligence in the automotive industry. In Proceedings of the 2021 Annual Reliability and Maintainability Symposium (RAMS), Orlando, FL, USA, 24–27 May 2021; pp. 1–7.

- Stokel-Walker, C. Making Safer Drivers: Volvo Cars Driver Understanding System. TIME, 30 October 2024. Available online: https://time.com/7094834/volvo-cars-driver-understanding-system/ (accessed on 14 March 2025).

- Huang, Z.; Huang, X. A study on the application of voice interaction in automotive human machine interface experience design. AIP Conf. Proc. 2018, 1955, 040074.

- Mueller, A.S.; Cicchino, J.B.; Calvanelli, J.V., Jr. Habits, attitudes, and expectations of regular users of partial driving automation systems. J. Saf. Res. 2024, 88, 125–134.

- Reagan, I.J.; Kidd, D.G. Using hierarchical task analysis to compare four vehicle manufacturers’ infotainment systems. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications: Los Angeles, CA, USA, 2013; Volume 57, pp. 1495–1499.

- Wakeman, K.; Moore, M.; Zuby, D.; Hellinga, L. Effect of Subaru EyeSight on pedestrian-related bodily injury liability claim frequencies. In Proceedings of the 26th Enhanced Safety of Vehicles International Conference, Eindhoven, The Netherlands, 10–13 June 2019.

- Wickens, C.D.; Helton, W.S.; Hollands, J.G.; Banbury, S. Engineering Psychology and Human Performance; Routledge: London, UK, 2021.

- Simões, A.; Cunha, L.; Ferreira, S.; Carvalhais, J.; Tavares, J.P.; Lobo, A.; Couto, A.; Silva, D. The user and the automated driving: A state-of-the-art. In Advances in Human Factors of Transportation: Proceedings of the AHFE 2019 International Conference on Human Factors in Transportation, Washington, DC, USA, 24–28 July 2019; Springer: Berlin/Heidelberg, Germany, 2020; pp. 190–201.

- Ganesh, M.I. The ironies of autonomy. Humanit. Soc. Sci. Commun. 2020, 7, 1–10.

- Penmetsa, P.; Sheinidashtegol, P.; Musaev, A.; Adanu, E.K.; Hudnall, M. Effects of the autonomous vehicle crashes on public perception of the technology. IATSS Res. 2021, 45, 485–492.

- Collision Between Vehicle Controlled by Developmental Automated Driving System and Pedestrian, Tempe, Arizona, 18 March 2018. Highway Accident Report NTSB/HAR-19/03, PB2019-101402; National Transportation Safety Board: Washington, DC, USA, 2019. Available online: https://www.ntsb.gov/investigations/accidentreports/reports/har1903.pdf (accessed on 27 March 2025).

- He, S. Who is liable for the UBER self-driving crash? Analysis of the liability allocation and the regulatory model for autonomous vehicles. In Autonomous Vehicles: Business, Technology and Law; Springer: Berlin/Heidelberg, Germany, 2021; pp. 93–111.

- Vienna Convention on Road Traffic, 8 November 1968; United Nations. Available online: https://treaties.un.org/pages/ParticipationStatus.aspx (accessed on 27 March 2025).

- Regulation (EU) 2019/2144 of the European Parliament and of the Council of 27 November 2019 on Type-Approval Requirements for Motor Vehicles and Their Trailers, and Systems, Components and Separate Technical Units Intended for Such Vehicles, as Regards Their General Safety and the Protection of Vehicle Occupants and Vulnerable Road Users, Amending Regulation (EU), European Parliament and Council of the European Union. 2019. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32019R2144 (accessed on 27 March 2025).

- Commission Implementing Regulation (EU) 2022/1426 of 5 August 2022 Laying Down Rules for the Application of Regulation (EU) 2019/2144 of the European Parliament and of the Council as Regards Uniform Procedures and Technical Specifications for the Type-Approval of the Automated Driving System (ADS) of Fully Automated Vehicles (Text with EEA Relevance), European Commission. 2022. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX%3A32022R1426 (accessed on 27 March 2025).

- Saber, E.M.; Kostidis, S.C.; Politis, I. Ethical Dilemmas in Autonomous Driving: Philosophical, Social, and Public Policy Implications. In Deception in Autonomous Transport Systems: Threats, Impacts and Mitigation Policies; Springer: Berlin/Heidelberg, Germany, 2024; pp. 7–20.

- Vellinga, N.E. Automated driving and the future of traffic law. In Regulating New Technologies in Uncertain Times; T.M.C. Asser Press: The Hague, The Netherlands, 2019; pp. 67–82.

- Martínez-Cruz, A.; Ramírez-Gutiérrez, K.A.; Feregrino-Uribe, C.; Morales-Reyes, A. Security on in-vehicle communication protocols: Issues, challenges, and future research directions. Comput. Commun. 2021, 180, 1–20.

- Muslam, M.M.A. Enhancing security in vehicle-to-vehicle communication: A comprehensive review of protocols and techniques. Vehicles 2024, 6, 450–467.

- Silva, L.; Magaia, N.; Sousa, B.; Kobusińska, A.; Casimiro, A.; Mavromoustakis, C.X.; Mastorakis, G.; De Albuquerque, V.H.C. Computing paradigms in emerging vehicular environments: A review. IEEE/CAA J. Autom. Sin. 2021, 8, 491–511.

- Truby, J.; Brown, R.D.; Antoine Ibrahim, I. Regulatory options for vehicle telematics devices: Balancing driver safety, data privacy and data security. Int. Rev. Law Comput. Technol. 2024, 38, 86–110.

- Unterweger, A.; Knirsch, F.; Engel, D.; Musikhina, D.; Alyousef, A.; de Meer, H. An analysis of privacy preservation in electric vehicle charging. Energy Inform. 2022, 5, 3.

- Pesé, M.D.; Shin, K.G. Survey of Automotive Privacy Regulations and Privacy-Related Attacks; SAE International: Warrendale, PA, USA, 2019.

- Miller, C.; Valasek, C. Remote Exploitation of an Unaltered Passenger Vehicle; Technical Report; IOActive: Seattle, WA, USA, 2015.

- Morris, D.; Madzudzo, G.; Garcia-Perez, A. Cybersecurity and the auto industry: The growing challenges presented by connected cars. Int. J. Automot. Technol. Manag. 2018, 18, 105–118.

- Okomanyi, A.O.; Sherwood, A.R.; Shittu, E. Exploring effective strategies against cyberattacks: The case of the automotive industry. Environ. Syst. Decis. 2024, 44, 779–809.

- Ebert, C.; Beck, M. Generative Artificial Intelligence for Automotive Cybersecurity. ATZ Electron. Worldw. 2024, 19, 50–54.

- Ahmad, J.; Zia, M.U.; Naqvi, I.H.; Chattha, J.N.; Butt, F.A.; Huang, T.; Xiang, W. Machine learning and blockchain technologies for cybersecurity in connected vehicles. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2024, 14, e1515.

- Yasin, G.; Tyagi, A.K.; Nguyen, T.A. Blockchain Technology in the Automotive Industry; CRC Press: Boca Raton, FL, USA, 2024.