Abstract

Extended reality (XR) technologies are gaining traction in technical education due to their potential for creating immersive and interactive training environments. This study presents the development and empirical evaluation of X-RAPT, a collaborative VR-based platform designed to train students in industrial robotics programming. The system enables multi-user interaction, cross-platform compatibility (VR and PC), and real-time data logging through a modular simulation framework. A pilot evaluation was conducted in a vocational training institute with 15 students performing progressively complex tasks in alternating roles using both VR and PC interfaces. Performance metrics were captured automatically from system logs, while post-task questionnaires assessed usability, comfort, and interaction quality. The findings indicate high user engagement and a distinct learning curve, evidenced by progressively shorter task completion times across levels of increasing complexity. Role-based differences were observed, with main users showing greater interaction frequency but both roles contributing meaningfully. Although hardware demands and institutional constraints limited the scale of the pilot, the findings support the platform’s potential for enhancing robotics education.

1. Introduction

The integration of robotics into industrial processes is accelerating rapidly, driven by the transition to Industry 4.0 and beyond, which has created an urgent demand for scalable and effective training solutions for the future workforce [1]. However, traditional robotic programming education often entails significant entry barriers, including safety concerns when working with physical equipment, limited access to expensive robotic platforms, and steep learning curves for novice users [2,3]. These challenges are particularly pronounced in educational environments where availability of equipment and instructional support is constrained. To overcome these limitations, extended reality (XR) technologies, especially virtual reality (VR), are increasingly employed as pedagogical alternatives, offering immersive, repeatable, and safe learning environments that can simulate real-world industrial workflows [4]. Empirical evidence confirms that VR training can not only reduce perceived risk and increase safety awareness, but also improve task accuracy and shorten learning durations compared to conventional hands-on approaches [5].

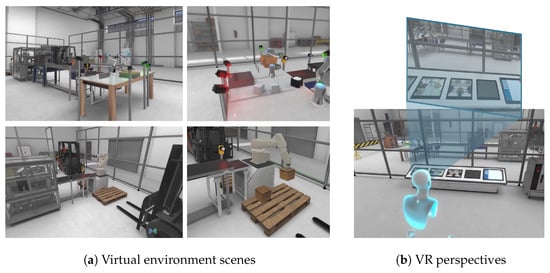

X-RAPT (eXtended Reality Adaptive Programming Training) is a simulation-based training framework designed to bridge the gap between theoretical knowledge and hands-on experience in industrial robotics. The platform enables students to perform realistic programming tasks using virtual replicas of common industrial robots (see Figure 1), such as the UR3 and ABB IRB120, within collaborative, role-based training scenarios. Through the use of a virtual programming tablet, students control robotic components in a simulated work cell, performing operations like assembly, labelling, and object handling.

Figure 1.

Illustrations of the X-RAPT training platform. (a) shows multiple scenes from the virtual industrial environment used in robotic programming tasks. (b) displays the immersive VR experience from both first-person and third-person viewpoints.

This study focuses on the pedagogical validation of the platform. Specifically, we present the results of a structured pilot study conducted with vocational students, aimed at assessing the system’s effectiveness in supporting skill acquisition, user engagement, and usability across different roles, devices, and task complexities.

This paper presents both quantitative and qualitative insights derived from log analysis and post-task questionnaires. Key metrics such as task completion time, error frequency, and action distribution are analysed alongside subjective user ratings related to comfort, ease of use, and perceived control. This evaluation provides the first empirical evidence of X-RAPT’s educational value and highlights areas for future improvement.

The rest of the paper is organised as follows. Section 2 reviews related work in XR-based technical education. Section 3 describes the architecture and design rationale of the X-RAPT platform. Section 4 presents the main findings of the pilot evaluation. Section 5 discusses the implications of the results and contextualises them within the broader educational landscape. Finally, Section 6 summarises the study’s contributions and outlines directions for future research.

2. Related Work

The integration of extended reality technologies, particularly VR, has become a transformative force in technical training, especially in fields requiring procedural mastery and spatial understanding. This section reviews prior literature in XR for robotics education, immersive simulation platforms, evaluation methodologies, and broader challenges associated with deploying XR in educational contexts.

2.1. XR in Robotics and Technical Education

Simulation frameworks have long supported robotics education by allowing learners to safely test programming logic and control schemes [6]. Early tools such as Gazebo laid the groundwork [7], while more recent systems integrate mixed reality and digital twin models [8,9]. For instance, ARNO, a desktop robot enhanced with AR, was developed to teach kinematics and safety protocols [8], while Nava-Téllez et al. [9] demonstrated how VR-based digital twins improve process understanding before real-world deployment.

ARNO and related AR/MR kits foreground concept acquisition (e.g., kinematics and safety) with desk-scale hardware [8], whereas our scenarios target full workcell coordination with multiple devices and roles. VR digital twins, as in [9], emphasise process understanding and risk-free rehearsal. X-RAPT builds on this by coupling rehearsal with role orchestration and integrated performance logging, enabling post hoc analytics not commonly reported in those systems.

Within industrial and manufacturing training, VR platforms have shown superior outcomes in task-based learning. Benotsmane et al. [10] employed immersive simulations for production line assembly, emphasising repeatability and workflow optimisation. Collaborative systems such as VR Co-Lab [1] allow for real-time multi-user training in tasks like human–robot disassembly, simulating realistic ergonomics and coordination. Wang et al. [11] further contributed with a mixed-reality system that integrates 3D CAD models for remote instruction, resulting in higher task accuracy compared to conventional setups. Taken together, these systems validate immersion and collaboration for industrial workflows. X-RAPT advances this line by combining multi-user training with explicit role differentiation (programming vs. supervision), device-agnostic access (VR and PC), and fine-grained action/error logging to support evidence-based feedback.

Beyond VR, mixed reality (MR) has been examined in robotics education from the supervisor’s perspective. Orsolits et al. [12] show that MR supports project-based thesis work by preserving spatial context with real equipment, enabling iterative prototyping in safe conditions, and improving alignment between supervisors and students on objectives and assessment. They also note practical hurdles, including device management, calibration effort, and the need for explicit evaluation rubrics. In light of these observations, X-RAPT prioritises low-friction supervision through a web-based scenario editor and persistent analytics, seeking to retain MR/VR benefits while reducing overheads highlighted by Orsolits et al. [12].

In line with the growing interest in XR-based training platforms, two notable projects have employed the VIROO platform for deployment and testing. Barz et al. [13] developed MASTER-XR, focused on human–robot collaboration and advanced interaction (e.g., eye tracking) for teaching robotics. Esen et al. [14] introduced XR4MCR for collaborative robot maintenance with no-code scenario creation and real-time synchronisation. While both showcase VIROO’s versatility, they do not report role-structured collaboration with dual device modalities and integrated, session-level analytics. X-RAPT complements these efforts by operationalising role allocation, cross-device participation (VR/PC), and automatic logging for post-session evaluation, which together enable scalable formative assessment.

Beyond role allocation, logging, and cross-device access, X-RAPT contributes a role-coupled task logic that enforces collaboration: several tablet commands in the main role remain disabled until the support role has spawned the correct items and scene sensors report valid states, and both users share synchronised camera feeds and a live event log to reach joint checkpoints. Instructors pre-author difficulty and initial activation of components via a web editor, binding these parameters to the role-gated logic at each level. A server-authoritative, centralised log generates automatic per-user, per-level summaries of actions and error classes for formative review. To our knowledge, prior VIROO-based systems such as MASTER-XR and XR4MCR report co-presence and scenario creation, but do not combine explicit role-gating with synchronised shared situational awareness and immediate, per-user analytics within mixed VR/PC cohorts in the same session.

2.2. Educational Use and Skill Development

Numerous implementations confirm VR’s effectiveness in fostering hands-on competence and immersive engagement. Andone and Frydenberg [15] reported success in digital literacy and international collaboration through VR use in higher education. Berki and Sudár [16] highlighted VR’s impact on practical learning in engineering, while Rajeswaran et al. [17] demonstrated accelerated medical skill acquisition in intubation training. VR technologies are also increasingly applied in healthcare education, where they support the development of clinical skills through safe, repeatable, and immersive training environments [18,19]. These examples reflect VR’s ability to create repeatable, risk-free, and domain-agnostic training environments.

Compared with these studies, X-RAPT emphasises collaborative competency in addition to individual skill gains: the main role develops programming fluency and temporal sequencing, while the support role cultivates monitoring, verification, and supply management. This division mirrors real industrial practices and aligns with findings that structured collaboration in XR strengthens procedural understanding and coordination beyond solitary training.

2.3. Evaluation Methodologies in XR Training

While adoption of XR in education has surged, its evaluation presents methodological gaps. Toni et al. [20] argue that most XR evaluations rely on the lower levels of Kirkpatrick’s model (user satisfaction and short-term learning) without sufficient focus on behavioural change or long-term retention. Stefan et al. [21] confirm this limitation in a review of VR safety training, showing that only 72% of studies assessed learning gains.

To overcome this, modern approaches employ mixed methods combining quantitative log data with subjective questionnaires. Objective metrics such as task duration, error rate, and interaction count are increasingly used [22,23]. In medical training, for example, VR-trained cohorts consistently perform faster and with greater precision than control groups [22]. Tools such as the NASA-TLX [24] and System Usability Scale (SUS) are widely used to quantify cognitive load and usability, respectively [25]. Pavlou et al. [26] applied presence questionnaires to evaluate immersion and task comprehension in XR-based instruction.

Qualitative insights, such as instructor observations or participant comments, are increasingly recognised as essential for identifying usability bottlenecks and for contextualising quantitative data through triangulation [24].

2.4. Systemic Challenges and Emerging Directions

Despite encouraging results, systemic issues persist. XR implementations face hardware limitations, high setup costs, and insufficient instructor training [27,28]. Ethical considerations in data usage [19] and technical barriers in multi-user synchronisation further complicate deployment [29]. Nevertheless, research continues to address these through cyber–physical systems, intelligent tutoring [30,31], and IoT-enhanced platforms [32].

Tisza and Ortega [33] proposed a digital twin laboratory using MQTT for remote training in green hydrogen production, exemplifying how these technologies generalise across domains. Still, robust longitudinal studies, especially in collaborative XR, remain scarce [31,34].

In light of the existing literature, the X-RAPT platform introduces a novel contribution: a multi-user, role-based VR training system with real-time logging, scenario authoring, and device-agnostic access. It bridges gaps in collaborative control, adaptive training flow, and integrated assessment, extending the state of the art in immersive robotics education.

3. Method

The X-RAPT platform has been engineered as a modular and extensible framework aimed at facilitating immersive, collaborative, and adaptive training for industrial robotics programming. Moving beyond the limitations of traditional, single-user simulators, X-RAPT supports multi-user interaction, cross-platform compatibility (VR and PC), dynamic scenario deployment, and comprehensive performance logging. This section outlines the design rationale and technical architecture, with particular emphasis on features that enhance scalability, pedagogical flexibility, and empirical assessment.

3.1. System Design and Multimodal Interaction

X-RAPT combines real-time simulation with a robust multi-user networking layer. Built in Unity 2022, the platform integrates with VIROO, an XR orchestration system used for spatial anchoring, session management, and real-time device synchronisation. Building on VIROO made it possible to leverage its extensive technical documentation and multiplayer testing environments, which accelerated implementation and reduced development overhead.

The interaction paradigm accommodates various input modalities, including VR controllers, optical hand-tracking, and traditional keyboard–mouse input. This multimodal architecture ensures inclusivity across hardware profiles and learning preferences. Central to the experience is a virtual tablet that functions as the main programming interface. Users deploy robotic sequences and manipulate scene elements using a visual scripting interface comprising drag-and-drop logic blocks and adjustable parameters.

User roles are defined explicitly within the system. Instructors design scenarios, configure difficulty, and assign roles via a web-based task editor that also encodes role-coupled preconditions. Because this editor is directly linked to the VR environment, deployment is streamlined and non-technical educators can create scenarios with explicit interdependence between roles. Trainees are designated as either main (typically using VR) or support (typically on PC), with interactions tailored to each role. Actions are role-gated: several tablet operations in the main role remain unavailable until the support role has spawned the required parts and scene states (e.g., object placement, part–lid availability, conveyor occupancy) satisfy the preconditions, so progress requires turn-taking and agreement on when to proceed. Both users see synchronised camera feeds and the live event log to support joint decision-making, and roles rotate across levels to balance participation. Core session state is server-authoritative, with centralised logging on the host.

The main user operates the virtual tablet, which serves as the core interface for programming and issuing commands to robotic components such as arms, conveyor belts, and sensors. The support user oversees the scene through cameras, verifies state (e.g., correct part–lid pairing and placement), and manages supply by triggering spawns and delivering items to the assembly area. Crucially, many tablet operations are blocked until the support user has satisfied the required preconditions (and vice versa, supply is paced by tablet execution), which forces coordination rather than parallel, independent work. In practice, this yields verbal requests and confirmations, joint checks of camera views and indicators, and temporal interleaving of actions across roles; tutors present in the sessions reported consistent back-and-forth communication as necessary to complete multi-step assemblies correctly. This role-coupled structure fosters purposeful collaboration, ensures complementary responsibilities, and enables fine-grained post-session analysis of how teams sequence and coordinate their actions.
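The role-gating rule described above can be illustrated with a minimal Python sketch. It is not X-RAPT’s implementation (the platform is built in Unity); the `SceneState` fields, the command names, and the `is_command_enabled` helper are hypothetical, chosen only to mirror the preconditions mentioned in the text (spawned parts, part–lid matching, conveyor occupancy).

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class SceneState:
    """Hypothetical snapshot of scene sensors; field names are illustrative."""
    part_spawned: bool
    lid_spawned: bool
    part_lid_match: bool      # e.g., reported by a vision sensor
    conveyor_occupied: bool


# Hypothetical mapping from main-role tablet commands to the preconditions
# that the support role (and the scene sensors) must satisfy first.
PRECONDITIONS: dict[str, Callable[[SceneState], bool]] = {
    "screw_part_and_lid": lambda s: s.part_spawned and s.lid_spawned and s.part_lid_match,
    "box_to_conveyor": lambda s: not s.conveyor_occupied,
}


def is_command_enabled(command: str, state: SceneState) -> bool:
    """Return True if the tablet command may run in the current scene state.

    Commands without a registered precondition are treated as ungated.
    """
    check = PRECONDITIONS.get(command)
    return True if check is None else check(state)
```

For example, with a part spawned but no lid, `is_command_enabled("screw_part_and_lid", state)` returns `False` until the support role spawns a matching lid. Keeping all preconditions in one lookup table is a design choice that would let a scenario editor enable or disable individual gates per level.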

X-RAPT follows contemporary XR education principles synthesised in recent reviews [35,36]. Concretely, (i) an experiential cycle integrates briefing, hands-on task execution, and log-based debrief; (ii) collaborative learning is enacted via explicit roles (main/support) and shared goals; and (iii) cognitive-load-aware task design is achieved through a stable tablet UI and levelled task complexity. Fine-grained logging and the instructor-facing editor support formative assessment and provide a foundation for future analytics-driven guidance [37].

3.2. Scenario Logic and Learning Structure

Training is organised around scenario-based simulations that reflect key industrial processes: assembly, packaging, and labelling. These are arranged in levels of increasing complexity and are designed to reinforce procedural thinking, robotic logic, and collaborative task execution. The task editor allows instructors to define objectives, place components, configure robot behaviours, and set success criteria without writing code. Scenarios are then synchronised to the VR/PC clients via VIROO’s deployment infrastructure. During execution, users interact with digital representations of industrial robots (UR3 and ABB IRB120), as well as with other components such as conveyor systems, Cognex camera sensors, and actuators. These components are illustrated in Figure 2.

Figure 2.

Main components of the X-RAPT simulation environment, including a virtual tablet for programming, a conveyor belt for replicating material flow, and two industrial robots (UR3 and ABB IRB120) configured for multi-step task execution within the simulated scene.

Each training session includes four stages: introduction, task briefing, task execution, and debriefing. The briefing includes scenario-specific instructions, while the execution phase offers contextual guidance using visual overlays and voice prompts. In the debriefing stage, instructors access system-generated analytics, while students submit structured feedback questionnaires.

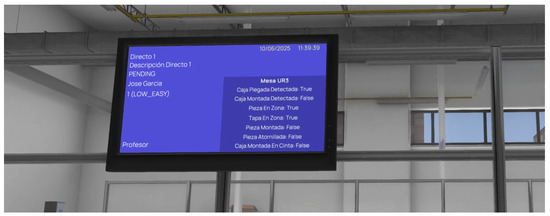

Two core modules support real-time decision-making: the Status Screen and the Control Zone. The Status Screen provides an overview of scenario parameters (task name, difficulty, user role) and real-time state information (e.g., object presence in zones, sensor states). Figure 3 illustrates this interface.

Figure 3.

Status screen showing real-time task and workspace parameters. The left column presents scenario metadata, while the right column reports on object presence, robot status, and sensor activation.

The Control Zone comprises four virtual monitors. Three display real-time feeds from scene cameras, while the fourth shows a live text log of user actions. This log is exported as a JSON file at session end and includes timestamps, user IDs, and action descriptions, enabling detailed post-session review (see Figure 4).

Figure 4.

Control Zone used for task supervision and session logging. The first three screens show camera feeds, while the textual log (right screen) captures user actions in real time and is later exported for analysis.
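The exported session log can be pictured as a list of event records. The sketch below assumes a flat JSON array with one object per event; the field names (`timestamp`, `user_id`, `role`, `action`), the use of seconds for timestamps, and the presence of a `role` field are assumptions for illustration, not X-RAPT’s documented schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class LogEvent:
    timestamp: float  # assumed unit: seconds since session start
    user_id: str
    role: str         # assumed field: "main" or "support"
    action: str       # human-readable action description


def export_log(events: list[LogEvent], path: str) -> None:
    """Write all session events to a JSON array (e.g., at session end)."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([asdict(e) for e in events], f, indent=2)


def load_log(path: str) -> list[LogEvent]:
    """Read an exported session log back into typed events."""
    with open(path, encoding="utf-8") as f:
        return [LogEvent(**record) for record in json.load(f)]
```

Round-tripping through plain JSON keeps the log readable by any downstream tool, which is what makes post-session review independent of the simulator itself.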

3.3. Performance Monitoring and Scalability

X-RAPT features a real-time logging and analytics engine developed in PHP, with event data stored in JSON format. This backend component tracks all interaction events and scenario updates. Logs are parsed to extract key metrics such as task completion time, error types, and object manipulation patterns. These data inform individual feedback and can support longitudinal studies of learning progression.
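One way to turn parsed log events into per-user, per-level summaries of actions and error classes is sketched below. The actual backend is written in PHP; this Python version only illustrates the aggregation logic, and the record keys `user_id`, `level`, `kind`, and `error_class` are hypothetical.

```python
from collections import Counter, defaultdict


def summarise(events: list[dict]) -> dict[tuple[str, int], dict]:
    """Count actions and errors (by class) for each (user, level) pair.

    Each event is assumed to be a dict with hypothetical keys "user_id",
    "level", "kind" ("action" or "error") and, for errors, "error_class".
    """
    summary = defaultdict(lambda: {"actions": 0, "errors": Counter()})
    for e in events:
        entry = summary[(e["user_id"], e["level"])]
        if e["kind"] == "action":
            entry["actions"] += 1
        elif e["kind"] == "error":
            entry["errors"][e["error_class"]] += 1
    return dict(summary)
```

Summing the error counters across users and levels would yield totals per error class, of the kind shown in the error distribution figure.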

The system is fully cross-platform. All scenarios can be accessed using a VR headset (for high-fidelity interaction) or a PC (for broader accessibility), with all devices synchronised through VIROO’s multiplayer networking. Technologies such as Unity Netcode and Vivox voice integration ensure synchronised, real-time interaction and communication.

Role-based UI adaptation is implemented to streamline user experience. Upon login, the interface dynamically adjusts based on whether the user is an instructor or trainee. Instructors see scenario management tools and analytics panels, while trainees see task-relevant views and control widgets.

4. Results

This section presents the empirical results gathered during the pilot study of the X-RAPT simulator. Both objective system logs and subjective user evaluations are analysed to assess performance, usability, and collaborative dynamics within the immersive training environment. Quantitative data include task completion times, interaction counts, and error types, while qualitative feedback is derived from post-session questionnaires. Together, these findings provide insight into the platform’s efficacy for teaching robotics programming through extended reality and inform areas for further refinement.

4.1. Implementation of the Pilot Study

The pilot study was conducted at the SAFA School as part of the final evaluation phase of the X-RAPT simulator. The initial design of the pilot included six sessions per student pair: one introductory session, one familiarisation session, and four evaluation levels (Levels 1, 3, 5, and 9), with planned rotations between devices (PC and VR) and roles (main and support). A total of 15 students participated, organised in pairs.

The pilot’s scope was adjusted from its original design due to several practical limitations encountered during implementation. Some equipment dependencies, such as the availability of high-performance computers and VR connectivity hardware, posed challenges, occasionally resulting in temporary instability during the early stages. Additionally, while training sessions and support materials were provided, instructors required more time to become familiar with the XR platform than initially expected. Consequently, the evaluation prioritised the quality of observation and user feedback over the number of sessions to ensure the collection of meaningful data from the pilot activities.

Despite these deviations, the sessions that were completed followed the intended structure, using both PC and VR, rotating roles, and collecting performance and post-task feedback. The data obtained, though limited in volume, proved sufficient to extract relevant technical insights and user experience metrics.

Each training session follows a structured workflow:

- Introduction: A brief system orientation and learning goal overview, typically delivered to the full group.

- Briefing: Each user receives role-specific objectives and instructions tailored to the scenario’s parameters.

- Task Execution: Users complete the scenario using either the PC or the immersive VR interface. Contextual prompts, audio feedback, and visual cues guide performance.

- Debriefing: Upon task completion, users complete a standardised self-assessment. The system generates analytics summarising completion time, number of errors, interaction density, and other key metrics.

4.2. Participant Profile and Pre-Pilot Baseline

The pre-pilot questionnaire, completed by 15 students, provided essential context for interpreting subsequent evaluation results. Participants had an average age of 22 years (SD = 5.28), ranging from 16 to 35, reflecting a moderately diverse age group. Two-thirds of students (66.7%) had previous exposure to VR technologies, while only one student (6.7%) reported prior use of robotics or industrial simulators.

Notably, familiarity with robot programming was low, with an average self-assessment score of 1.67 out of 5 (SD = 0.98). This confirms that the pilot group represented an appropriate target population for X-RAPT: novice users who could benefit from immersive, low-barrier-to-entry learning platforms.

4.3. Quantitative Task Performance

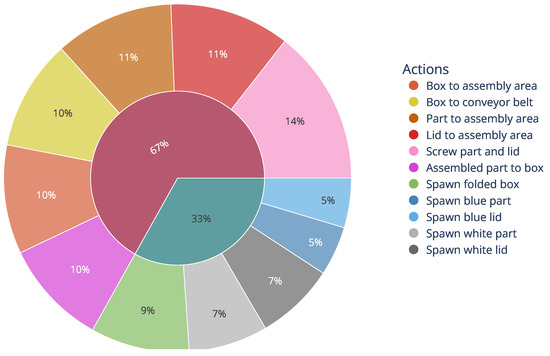

System logs from the pilot allowed detailed tracking of participant actions and errors during task execution. Across all sessions, a total of 662 user actions were recorded. The most frequent action was “Screw part and lid” (14.42%), followed by “Lid to assembly area” (11.20%) and “Part to assembly area” (11.04%). Box-handling actions, such as “Box to conveyor belt” and “Box to assembly area”, each accounted for approximately 10% of the activity, while object spawning accounted for 34.1%.

The distribution of actions by role followed the simulator’s design: 67% were performed by the main user operating the tablet and 33% by the support user managing part delivery and environmental supervision (Figure 5). This suggests that the simulation supports active collaboration even when role responsibilities differ, with both participants contributing to task completion through distinct but interdependent functions. Notably, active time decreased even as learners traversed an increasing number of rule-checked state transitions (e.g., correct part–lid pairing, sensor and conveyor states), which suggests that they satisfied the task’s content constraints more quickly, rather than merely operating the interface faster.

Figure 5.

Distribution of actions performed during the simulation. Actions executed by the user in the main role (67% of the total) are shown in warm tones, while actions performed by the support user (33%) are represented in cool tones.
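The role and action shares reported above are simple frequency percentages over logged events. A minimal sketch, assuming each event carries `role` and `action` fields (hypothetical names) and that the helper `share_by` is illustrative only:

```python
from collections import Counter


def share_by(events: list[dict], field: str) -> dict[str, float]:
    """Percentage of events per value of `field`, rounded to two decimals."""
    counts = Counter(e[field] for e in events)
    total = sum(counts.values())
    if total == 0:  # avoid division by zero on an empty log
        return {}
    return {value: round(100 * n / total, 2) for value, n in counts.items()}
```

Calling `share_by(events, "role")` yields a main/support split like the one in Figure 5, while `share_by(events, "action")` yields per-action shares such as those listed for “Screw part and lid”.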

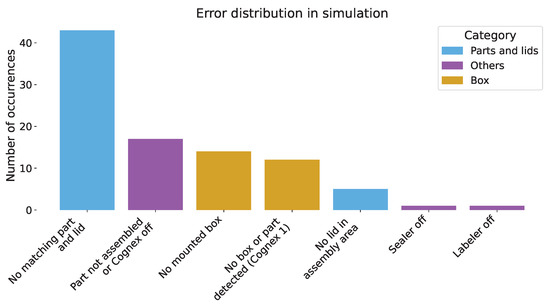

A total of 93 errors were logged. Of these, 48 were related to incorrect part or lid handling and sequencing, and 26 involved box-related operations (see Figure 6). The most common errors were “No matching part and lid” (43 instances) and “Part not assembled or Cognex off” (17 instances), suggesting that mastering multi-step assembly logic, precisely the educational focus of X-RAPT, remained cognitively challenging.

Figure 6.

Distribution of errors occurring during the simulations. Errors related to parts and lids are shown in blue, those associated with boxes are shown in orange, and other types of errors are represented in purple.

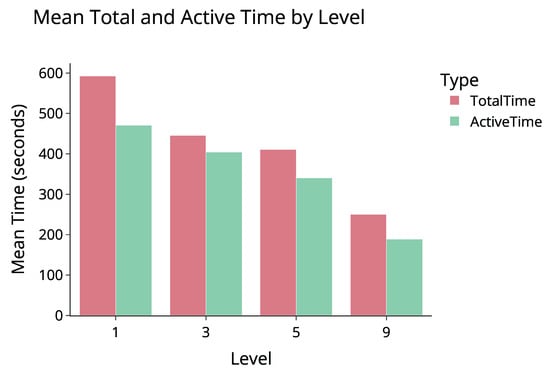

Time metrics are consistent with a learning curve. Despite increasing task complexity, average session duration decreased from 593 s (Level 1) to 250 s (Level 9). Active task time, defined as the interval between the first and last user action, dropped from 471 s to 189 s. Figure 7 shows the time distribution for each evaluated level. These reductions suggest improved familiarity with the system, more efficient task execution, and better role coordination as users progressed through the simulation.

Figure 7.

Distribution of the mean time required to complete each level. TotalTime refers to the overall time spent inside the simulation environment, including idle moments or pauses. ActiveTime represents the effective interaction time, measured from the first to the last recorded user action.
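The two time measures in Figure 7 differ only in their endpoints. The sketch below computes them for one level, assuming that session start/end times and action timestamps are available in seconds; the function names are hypothetical. It also computes the relative reduction between the reported Level 1 and Level 9 means.

```python
def level_times(session_start: float, session_end: float,
                action_timestamps: list[float]) -> tuple[float, float]:
    """Return (total_time, active_time) in seconds for one level.

    total_time: whole time inside the simulation, idle periods included.
    active_time: interval from the first to the last recorded user action.
    """
    total_time = session_end - session_start
    if not action_timestamps:  # no actions logged: no active interval
        return total_time, 0.0
    active_time = max(action_timestamps) - min(action_timestamps)
    return total_time, active_time


def relative_reduction(before: float, after: float) -> float:
    """Percentage decrease from `before` to `after`."""
    return 100.0 * (before - after) / before
```

Applied to the reported means, the reductions are roughly 57.8% for total time (593 s to 250 s) and 59.9% for active time (471 s to 189 s). These figures describe changes in group means across levels, not per-pair effects.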

4.4. Subjective Feedback and Usability Insights

Participants also completed post-task questionnaires after each session, evaluating their experiences on a Likert scale (1 = strongly disagree, 5 = strongly agree). The questions addressed physical comfort, latency perception, ease of interaction, control accuracy, and navigability. The following questions were presented to participants after each simulation session:

- Q1: Did you experience any discomfort (such as visual fatigue or dizziness)?

- Q2: Did you perceive any delay between your actions and the system response?

- Q3: How natural was the interaction with the environment?

- Q4: How easy was it to use the tablet to control the components? (If you used it)

- Q5: How precise did you feel the control over the environment was?

- Q6: Did you feel comfortable interacting with the simulation?

- Q7: Was it easy to move around the environment?

In addition to the numerical responses, students were invited to provide open-ended feedback at the end of each questionnaire. Several participants offered short qualitative insights that helped contextualise their experiences. One participant described the interaction as “very positive, very manageable and visual.” Another noted a technical issue: “At the end of the session, the system froze and I couldn’t interact with the support buttons.” Some also pointed out areas for improvement, such as one participant who commented, “Sometimes the tablet was hard to use with the controllers—it would be easier with hand tracking.” These reflections suggest that the simulator can deliver meaningful learning, while also identifying opportunities for refinement.

Although few in number, the free-text entries converged on three themes that triangulate with the questionnaire items: (i) interaction quality and visual clarity, consistent with higher ratings on naturalness, comfort, and navigability (Q3, Q6, Q7); (ii) input ergonomics, where a suggestion for hand tracking aligns with tablet usability (Q4); and (iii) stability at session end, where a reported UI freeze is relevant to perceived latency and comfort (Q2, Q1). Given the small corpus, we conducted a rapid, descriptive synthesis of the free-text remarks and interpreted them alongside the item scores (Q1–Q7). The intent is to contextualise questionnaire trends and derive immediate design implications, rather than to claim thematic saturation or broad generalisability.

The three themes translate into actionable adjustments: (i) an optional hand-tracking mode for tablet interaction to reduce controller friction (Q4); (ii) a session-health watchdog to recover input focus at end-of-session transitions and prevent UI freeze (Q1–Q2); and (iii) short, role-specific checklists to scaffold coordination and make expectations explicit. These changes are planned for the next iteration.

Device Comparison (VR vs. PC): Table 1 reports item-wise differences. For Q1 (discomfort), VR users reported higher values than PC users (2.48 vs. 1.00), consistent with the greater sensory load of head-mounted displays. Q2 (latency) remained low on both devices (VR 1.67, PC 1.35), indicating stable performance. Q3 (naturalness) was slightly higher in VR than on PC (3.52 vs. 3.35), suggesting benefits of embodied interaction. Q4 (tablet usability) favoured VR (4.18 vs. 3.54), likely due to direct manipulation and spatial alignment. Q5 (perceived precision) was similar across devices, with a slight advantage for VR (3.81 vs. 3.65). Q6 (overall comfort with the simulation) was high and nearly identical on both devices (VR 3.90, PC 3.82). Q7 (navigability) was high on both devices, again slightly higher in VR (4.10 vs. 3.94). Overall, VR yielded small gains in naturalness, precision, and navigation and a clearer advantage in tablet usability, at a moderate cost in physical comfort. Although discomfort (Q1) was higher in VR, the absolute values remained below the scale midpoint and were accompanied by higher naturalness, tablet usability, and navigability (Q3/Q4/Q7), suggesting that mild discomfort did not outweigh the usability benefits in this setting.

Table 1.

Mean and standard deviation of question scores by device.
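Per-group means and standard deviations, as reported by device, level, and role, can be computed by grouping questionnaire responses on the relevant field. The sketch below assumes one record per response with item keys such as `Q1` and a grouping field such as `device` (hypothetical names), skips unanswered items (Q4 applied only to tablet users), and uses the sample standard deviation; whether the study used the sample or population SD is not stated, so that choice is an assumption.

```python
from collections import defaultdict
from statistics import mean, stdev


def describe_by(responses: list[dict], group_field: str, item: str) -> dict:
    """Return {group: (mean, sample SD)} for one Likert item (1-5)."""
    groups: dict[str, list[int]] = defaultdict(list)
    for r in responses:
        if r.get(item) is not None:  # skip unanswered items, e.g. optional Q4
            groups[r[group_field]].append(r[item])
    return {
        # the sample SD is undefined for a single response, so None is returned
        g: (round(mean(v), 2), round(stdev(v), 2) if len(v) > 1 else None)
        for g, v in groups.items()
    }
```

Because Q1 (discomfort) and Q2 (latency) are worded so that lower scores are better, they are summarised item by item rather than averaged together with Q3 to Q7.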

Level-Based Trends: As difficulty increased, Q1 (discomfort) rose slightly from 1.60 to 2.12, a plausible effect of longer focus and denser interaction demands. Q2 (latency perception) increased from 1.10 to 1.88 but remained low, indicating acceptable responsiveness under higher task load. Q3 (naturalness) showed a U-shaped pattern: highest at Level 1 (4.00), dipping at Level 5 (2.70), and recovering by Level 9 (3.50), consistent with an adaptation trough as complexity rises. Q4 (tablet usability) started high (4.22), dropped at Level 3 (3.57), then stabilised (3.86), suggesting early familiarisation followed by consistent operation. Q5 (precision) decreased through the mid-levels (4.10 to 3.30) and recovered at Level 9 (3.88), consistent with added task constraints followed by adaptation. Q6 (comfort with the simulation) declined with difficulty (4.40 to 3.62), as would be expected with growing cognitive demands. Q7 (navigability) remained high overall, with an initial decline and subsequent recovery (4.40, 3.70, 4.12), suggesting spatial acclimation over time (see Table 2).

Table 2.

Mean and standard deviation of question scores by level.
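The qualitative labels used above (U-shaped, declining, rising) can be made explicit with a small classifier over per-level means. The sketch below is an illustration rather than the analysis used in the study; the tolerance `tol` and the label names are hypothetical choices, and the example values in the comments are the level means quoted in the paragraph.

```python
def classify_trajectory(values, tol=0.05):
    """Label a sequence of per-level item means.

    Returns 'flat', 'U-shaped' (an interior value clearly below both ends),
    'inverted-U' (an interior value clearly above both ends), or the net
    direction 'increasing' / 'decreasing' ('mixed' if the ends are equal).
    The tolerance is a hypothetical choice.
    """
    if len(values) < 2:
        raise ValueError("need means for at least two levels")
    first, last = values[0], values[-1]
    if max(values) - min(values) <= tol:
        return "flat"
    interior = values[1:-1]
    if interior and min(interior) < min(first, last) - tol:
        return "U-shaped"
    if interior and max(interior) > max(first, last) + tol:
        return "inverted-U"
    if last > first:
        return "increasing"
    if last < first:
        return "decreasing"
    return "mixed"


if __name__ == "__main__":
    print(classify_trajectory([4.00, 2.70, 3.50]))  # Q3 at Levels 1, 5, 9
    print(classify_trajectory([4.40, 3.62]))        # Q6, first and last level
```

With only two or three points per item, such labels are descriptive shorthand; they do not replace a per-level test with the full data.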

Role-Based Comparison (Main vs. Support): The main user, responsible for simulation control, reported slightly higher comfort (Q6) and precision (Q5) than the support user. As shown in Table 3, however, the support role received similarly high scores across all questions, suggesting that both roles offered valuable and engaging experiences. This balance is consistent with the simulator's collaborative design and supports the role-rotation strategy implemented during the pilot.

Table 3.

Mean and standard deviation of question scores by role.
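Tables 1–3 report means and standard deviations grouped by device, level, and role. A minimal sketch of that grouping is shown below. It assumes one record per participant and level, stored as a dictionary with hypothetical keys such as `role`, `device`, `level`, and `Q6`, and it assumes the sample (n − 1) standard deviation; the section does not state which variant was used.

```python
from collections import defaultdict
from statistics import mean, stdev


def summarise(responses, factor, item):
    """Mean and sample SD of one item, grouped by a factor such as 'device',
    'level' or 'role'. Groups with a single response get an SD of 0.0."""
    groups = defaultdict(list)
    for record in responses:
        groups[record[factor]].append(record[item])
    return {
        key: (round(mean(scores), 2),
              round(stdev(scores), 2) if len(scores) > 1 else 0.0)
        for key, scores in groups.items()
    }


# Hypothetical records: one per participant and level, Likert scores 1-5.
example = [
    {"role": "main", "device": "VR", "level": 1, "Q6": 4},
    {"role": "main", "device": "PC", "level": 1, "Q6": 5},
    {"role": "support", "device": "PC", "level": 1, "Q6": 3},
    {"role": "support", "device": "VR", "level": 1, "Q6": 5},
]

if __name__ == "__main__":
    print(summarise(example, "role", "Q6"))
```

The same function serves all three tables by changing `factor`, which keeps the grouping logic identical across the device, level, and role comparisons.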

5. Discussion

The evaluation results provide preliminary, feasibility-level indications of the educational potential of X-RAPT. Beyond shorter times per se, the progressive reduction in total and active task duration across levels is consistent with the consolidation of procedural schemas and more efficient perceptual–motor coordination in a complex spatial task setting, although interface familiarisation may also have contributed. This interpretation agrees with recent reports of improved task efficiency and conceptual understanding in immersive learning settings, where embodied interaction supports faster routine formation and transfer to applied tasks [38]. Additionally, the stable performance across PC and VR, with modest comfort costs in VR, aligns with findings that virtual laboratories can extend access while preserving usability for core engineering activities [39]. This pattern also fits our pedagogical framing (experiential cycles, purposeful collaboration, and cognitive-load-aware task design), as emphasised in recent XR education syntheses [35,36]. Given the small, single-institution sample and the absence of a control group or delayed retention measures, these patterns should be interpreted cautiously.
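The distinction between total and active task duration can be illustrated with logged event timestamps. The sketch below assumes that active time excludes any gap between consecutive events longer than a hypothetical idle threshold (30 s here); the exact definition used by X-RAPT's logging is not given in this section. A helper for the relative reduction between an early and a later level is included.

```python
def task_durations(timestamps, idle_threshold=30.0):
    """Return (total, active) duration in seconds from logged event times.

    Total time spans the first to the last event. Active time sums only the
    gaps between consecutive events that do not exceed idle_threshold
    (a hypothetical value); longer gaps are treated as idle and excluded.
    """
    ts = sorted(timestamps)
    if len(ts) < 2:
        return 0.0, 0.0
    total = ts[-1] - ts[0]
    active = sum(b - a for a, b in zip(ts, ts[1:]) if b - a <= idle_threshold)
    return float(total), float(active)


def relative_reduction(first_level, later_level):
    """Fractional reduction in duration from one level to a later one."""
    if first_level <= 0:
        raise ValueError("first-level duration must be positive")
    return (first_level - later_level) / first_level
```

Excluding idle gaps entirely, rather than capping them at the threshold, is one of several defensible conventions; whichever is chosen should be applied identically across levels so that per-level reductions remain comparable.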

The distribution of actions between main and support roles suggests that X-RAPT fosters team coordination and shared problem solving, not just individual skill acquisition. This echoes broader evidence that XR experiences can cultivate collaboration, communication, and critical thinking when roles are explicit and interdependent [36]. In our case, the tablet-centred main role and the monitoring- and supply-oriented support role appear to promote complementary forms of engagement, which may be particularly valuable in industrial training, where division of labour is the norm.
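The role-based distribution of actions can be summarised as each role's share of logged actions. The sketch below assumes a hypothetical log format in which each event is a dictionary with a `role` field and, optionally, a `kind` field used to restrict the count to particular action types.

```python
from collections import Counter


def action_shares(events, kinds=None):
    """Share of logged actions per role.

    Each event is a dict with a 'role' key; if kinds is given, only events
    whose 'kind' is in that set are counted (hypothetical log schema).
    """
    counts = Counter(
        e["role"] for e in events if kinds is None or e.get("kind") in kinds
    )
    total = sum(counts.values())
    return {role: n / total for role, n in counts.items()} if total else {}
```

Reporting shares alongside raw counts makes it easier to see whether a role that acts less often still contributes a meaningful fraction of, for example, supply or monitoring actions.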

Errors concentrated on multi-step assembly logic, especially part–lid pairing and sequencing. Because these dominant error categories are conceptual rather than interface-related, they are unlikely to improve purely through interface habituation. Rather than a weakness, this pinpoints where scaffolding should be targeted and where analytics can inform adaptive support. The platform’s fine-grained logging and instructor-facing scenario editor are aligned with calls to pair XR with learning analytics and AI-ready instrumentation to enable evidence-based feedback and personalised progression [37]. In the current prototype, guidance is not triggered automatically at runtime: adaptation is instructor-driven (e.g., edited constraints or hints), and feedback is given after the session. Adaptive support is therefore framed here as future functionality. Building on these error signatures, future iterations will incorporate context-triggered adaptive prompts and lightweight feedback messages driven by log-derived triggers (e.g., repeated error classes, idle-time thresholds), providing just-in-time guidance without interrupting collaboration and making the logging pipeline actionable for formative assessment.
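The log-derived triggers envisaged for future iterations can be sketched as simple rules over the event stream. The example below is hypothetical throughout: the `LogEvent` schema, the prompt identifiers, and the thresholds (three repeats of an error class, 60 s of inactivity) are assumptions chosen for illustration, not parameters of X-RAPT.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class LogEvent:
    t: float            # seconds since session start
    kind: str           # hypothetical schema: "error" or "action"
    category: str = ""  # error class, e.g. "part_lid_pairing"


def prompts_due(events, now, repeat_limit=3, idle_limit=60.0):
    """Return hypothetical prompt ids fired by repeated error classes or idling."""
    prompts = []
    errors = Counter(e.category for e in events if e.kind == "error")
    for category, count in errors.items():
        if count >= repeat_limit:
            prompts.append(f"hint:{category}")
    last_event = max((e.t for e in events), default=0.0)
    if now - last_event >= idle_limit:
        prompts.append("nudge:idle")
    return prompts
```

A deployed version would also need to debounce prompts, so that the same hint is not repeated on every evaluation, and to route each prompt to the role able to act on it; both are left out of this sketch.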

Subjective responses showed high perceived usability and natural interaction, particularly for tablet use in VR, which mirrors reports of increased presence and engagement in immersive conceptual design and engineering tasks [38]. Together with the device-agnostic deployment and supervisor-oriented tools, these results suggest that X-RAPT may serve as a pragmatic solution that balances hands-on fidelity with operational feasibility in institutional settings, a need also highlighted in the literature [39], pending confirmation in larger, controlled, multi-institution studies.

VR’s comfort cost warrants explicit consideration. Although discomfort ratings were higher in VR, they remained below the scale midpoint and coexisted with gains in naturalness, tablet usability, and navigability. Potential impacts of discomfort on time-on-task, sustained attention, and fine motor precision are more likely in longer or highly dynamic sessions; to mitigate them, we target high refresh rates (90 Hz) and low latency, adopt comfort locomotion (teleportation, snap turns), and keep a stable, world-anchored UI with readable text and moderate contrast. At the interaction and protocol level, we provide optional hand-tracking for tablet interaction, design levels with natural pause points, deliver a short pre-session orientation, run 10–15 min blocks with voluntary breaks, and rotate roles between VR and PC so that learning continuity is preserved across modalities.
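The protocol of short blocks with role rotation between VR and PC can be expressed as a per-pair schedule. The sketch below assumes, hypothetically, that each pair shares one VR and one PC station, swaps main and support roles every block, and swaps devices every two blocks, so that over four blocks each participant meets every role and device combination once. The 12-minute block length is an illustrative value within the 10–15 min range; voluntary breaks are not modelled.

```python
def rotation_schedule(a, b, n_blocks=4, block_minutes=12):
    """Per-pair block plan: roles swap every block, devices every two blocks.

    Assumes one VR and one PC station per pair (hypothetical). With four
    blocks, each participant meets every role-device combination once.
    """
    if not 10 <= block_minutes <= 15:
        raise ValueError("protocol uses 10-15 min blocks")
    plan = []
    for i in range(n_blocks):
        main, support = (a, b) if i % 2 == 0 else (b, a)
        vr_user = a if (i // 2) % 2 == 0 else b
        plan.append({
            "block": i + 1,
            "minutes": block_minutes,
            "main": main,
            "support": support,
            "vr": vr_user,
            "pc": b if vr_user == a else a,
        })
    return plan
```

Swapping roles and devices on different cycles is what yields full coverage in four blocks; swapping both together would pair each role permanently with one device.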

From a deployment standpoint, X-RAPT currently runs in VIROO-orchestrated sessions. The pilot established conservative requirements: for VR, a desktop or laptop with a discrete GPU, 16 GB RAM, and a tethered headset supported by VIROO; for PC-only use, a standard classroom computer with 8 GB RAM was sufficient at reduced visual fidelity. These requirements delimit affordability and infrastructure needs, but also outline a pragmatic adoption path: institutions without immediate VR capacity can begin on PC and phase in headsets as resources permit while preserving synchronous collaboration and assessment.
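The pilot's conservative requirements translate into a simple tiering rule that an institution could use when planning a PC-first rollout. The sketch is hypothetical: it treats 16 GB RAM, a discrete GPU, and a supported tethered headset as the VR threshold and 8 GB RAM as the PC-only threshold, and it does not query VIROO or any real hardware API.

```python
from dataclasses import dataclass


@dataclass
class Workstation:
    ram_gb: int
    discrete_gpu: bool
    tethered_headset: bool  # a headset supported by the session orchestrator


def deployment_mode(ws: Workstation) -> str:
    """Map a workstation to 'vr', 'pc' or 'unsupported' using the pilot's
    conservative requirements (thresholds treated here as hard minimums)."""
    if ws.tethered_headset and ws.discrete_gpu and ws.ram_gb >= 16:
        return "vr"
    if ws.ram_gb >= 8:
        return "pc"  # PC-only use at reduced visual fidelity
    return "unsupported"
```

Applied to an inventory of classroom machines, counting the returned tiers gives a quick estimate of how many synchronous VR and PC seats are available before any headsets are purchased.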

Outside the study protocol, the same cohort subsequently performed analogous procedures on physical equipment (UR3 and ABB IRB120) in the teaching lab, and instructors made anecdotal, non-instrumented observations. We treat these observations solely as contextual, not as empirical validation, and they were not included in our analyses. Instructors noted apparent carryover of procedural steps and scene logic from X-RAPT to the real cells, which motivates a planned controlled sim-to-real evaluation with matched tasks and standardised metrics.

Because the pilot did not include a control group, we refrain from causal claims about superiority over traditional instruction. Educational potential is interpreted as preliminary evidence from a feasibility study: a consistent within-subject learning pattern, role-structured collaboration reflected in action distributions, and convergent usability and engagement reports across devices. Long-term retention was not assessed in this pilot.

Without a control condition, the observed improvements (shorter total/active times and changes in error rates) cannot be causally attributed to X-RAPT; they may partly reflect repeated practice or interface familiarisation. This constitutes an internal validity threat. We attempted to limit this risk by (i) increasing task complexity across levels, (ii) rotating roles within pairs, and (iii) comparing performance across VR and PC to check for device-specific habituation. Nonetheless, residual confounds remain. A follow-up controlled (two-arm or crossover) study with randomisation, pre/post and delayed retention, and matched sim-to-real tasks is planned to establish comparative effectiveness.
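For the planned crossover study, randomisation could be implemented as a balanced allocation to the two treatment orders. The sketch below assumes two conditions labelled A (X-RAPT) and B (traditional instruction) and shuffles participants before splitting them into AB and BA sequences; with an odd sample, such as the 15 participants of the pilot, the BA sequence receives one extra participant. A fixed seed makes the allocation reproducible for auditing.

```python
import random


def assign_sequences(participants, seed=None):
    """Balanced random allocation to crossover sequences.

    'AB' = X-RAPT first, then traditional instruction; 'BA' = the reverse
    (labels hypothetical). With an odd count, 'BA' gets one extra person.
    """
    rng = random.Random(seed)
    order = list(participants)
    rng.shuffle(order)
    half = len(order) // 2
    allocation = {p: "AB" for p in order[:half]}
    allocation.update({p: "BA" for p in order[half:]})
    return allocation
```

Counterbalancing the order in this way lets period and carryover effects be estimated rather than confounded with the condition, which is the internal validity threat the paragraph identifies.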

The sample was restricted to 15 vocational students within the project’s validation phase, constraining generalisability. Future work will broaden participation across institutions and include controlled comparisons with traditional instruction and delayed post-tests to assess retention, addressing recurrent recommendations to strengthen study designs in XR education and to report outcomes beyond short-term performance [36,37]. To enrich the qualitative strand beyond brief free-text remarks, we will incorporate short semi-structured interviews (10–15 min) and in-scenario think-aloud sessions analysed by two coders, with inter-rater reliability reported, enabling stronger triangulation with log-derived and questionnaire data. In the present pilot, the qualitative strand should therefore be read as hypothesis-generating and design-informing; claims of thematic saturation or general qualitative generalisability are outside its scope.
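The section does not name the inter-rater reliability statistic; for two coders assigning one nominal code per segment, Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), is a common choice, where p_o is observed agreement and p_e is the agreement expected from each coder's category frequencies. The sketch below computes it from scratch, assuming equal-length code lists; the theme labels in the usage are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning one nominal code per segment."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty code lists")
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Chance agreement: product of each coder's marginal proportions per code.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1:
        return 1.0  # both coders used one identical category throughout
    return (observed - expected) / (1 - expected)
```

Returning 1.0 when both coders used a single identical category is a convention; some tools report kappa as undefined in that degenerate case.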

6. Conclusions

This paper presented the design, implementation, and empirical evaluation of X-RAPT, an immersive training platform for industrial robotics education based on extended reality technologies. The system was conceived to support collaborative, multi-user interaction across both VR and PC modalities, enabling flexible deployment and role-based learning experiences. Through the integration of a virtual programming tablet, realistic simulation of industrial workflows, and detailed logging mechanisms, X-RAPT offers a novel framework for skill acquisition in robotic assembly tasks. Importantly, collaboration in X-RAPT is structured by role-gated preconditions and shared situational awareness (cameras and live logs) that define joint checkpoints, rather than by mere co-presence.
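Role-gated preconditions can be modelled as joint checkpoints that open only when each role has completed the actions it owns. The sketch below is a hypothetical illustration of that idea, not X-RAPT's implementation; the checkpoint, role, and action names are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class JointCheckpoint:
    """Opens only when every role has completed the actions it owns."""
    name: str
    required: dict  # role -> set of action ids that role must complete
    done: dict = field(default_factory=dict)

    def record(self, role, action):
        """Log an action; actions outside the role's requirements are ignored."""
        if action in self.required.get(role, set()):
            self.done.setdefault(role, set()).add(action)

    def is_open(self):
        """True once each role's required actions are a subset of its done set."""
        return all(
            actions <= self.done.get(role, set())
            for role, actions in self.required.items()
        )
```

Because an action only counts when performed by the role that owns it, neither participant can advance the shared task alone, which is what distinguishes a joint checkpoint from simple co-presence.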

To assess the platform’s educational potential, a structured pilot study was conducted with vocational students, combining system logs and user questionnaires. Results indicate that participants exhibited a measurable learning curve across levels, with decreasing task completion times and increasing familiarity with the interface. Questionnaire responses revealed generally positive perceptions of usability, comfort, and interaction quality, with slight advantages for VR on most items other than physical comfort. Moreover, the role-based analysis reflected the collaborative nature of the experience, with both main and support users contributing actively to task execution.

Despite these promising findings, the study has several limitations. The system currently requires high-performance hardware for VR deployment, which may limit its adoption in under-resourced educational settings. Additionally, owing to the logistical demands of setup and data collection, the pilot was conducted within a single institution with a relatively small and homogeneous participant group.

Future work will address these constraints by optimising system performance for lower-end devices and by expanding the range of training scenarios to include more diverse industrial tasks and failure states. This should enhance the realism and instructional value of the simulations, better preparing learners for real-world problem-solving. Furthermore, broader validation studies will be pursued across different educational levels, including university settings, to evaluate generalisability and long-term learning outcomes. To establish comparative effectiveness, we will conduct a controlled two-arm or crossover study with pre/post testing and delayed retention measures.

In conclusion, X-RAPT provides preliminary evidence that XR-based, role-structured training can support procedural learning and collaborative practice in industrial robotics. Its modular and replicable architecture, which combines role-aware interfaces, a browser-based scenario editor, integrated session-level analytics, and device-agnostic deployment across VR and PC, is intended to make the platform transferable across institutions and appealing to both educators and developers. In practical terms, institutions can adopt a PC-first deployment and phase in headsets as resources permit while preserving synchronous collaboration and analytics.

Author Contributions

Conceptualisation, D.M.-P. and B.Z.-S.; methodology, D.M.-P.; software, B.Z.-S.; validation, D.M.-P., E.R.Z. and J.G.-R.; formal analysis, D.M.-P.; investigation, D.M.-P., M.F.-V. and E.R.Z.; resources, J.G.-R.; data curation, D.M.-P.; writing—original draft preparation, D.M.-P., M.F.-V. and B.Z.-S.; writing—review and editing, M.F.-V. and J.G.-R.; visualisation, D.M.-P.; supervision, J.G.-R.; project administration, J.G.-R. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been conducted as part of the X-RAPT (Immersive XR-Based Adaptive Training for Robotics Programming in Assembly and Packaging) project, funded by the European Union under the Horizon Europe programme [Project ID: 101093079]. This work has also been supported by the Valencian regional government CIAICO/2022/132 Consolidated group project AI4Health and by the International Center for Aging Research (ICAR)-funded project “IASISTEM”. It has also been funded by a regional grant for PhD studies from the Valencian government, CIACIF/2021/430.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

No new data were created or analysed in this study. The work focused on the design and evaluation of a simulation framework.

Acknowledgments

The authors would like to express their gratitude to the X-RAPT project, funded by the European Union under the Horizon Europe programme, for supporting the development and deployment of the training platform. We also thank the Sagrada Familia School (SAFA) in Valladolid for their collaboration and commitment during the pilot evaluation phase. During the preparation of this manuscript, the authors used large language models (OpenAI ChatGPT 4.5) for the purposes of text refinement and generation of illustrative figures. The authors have reviewed and edited all outputs and take full responsibility for the content of this publication.

Conflicts of Interest

Beatriz Zambrano-Serrano was employed by MetaMedicsVR. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| GPU | Graphics Processing Unit |
| PC | Personal Computer |
| XR | Extended Reality |
| VR | Virtual Reality |

References

- Maddipatla, Y.; Tian, S.; Liang, X.; Zheng, M.; Li, B. VR Co-Lab: A Virtual Reality Platform for Human–Robot Disassembly Training and Synthetic Data Generation. Machines 2025, 13, 239.

- De La Rosa Gutierrez, J.P.; Silva, T.R.; Dittrich, Y.; Sørensen, A.S. Design Goals for End-User Development of Robot-Assisted Physical Training Activities: A Participatory Design Study. In Proceedings of the ACM on Human-Computer Interaction; Association for Computing Machinery: New York, NY, USA, 2024; Volume 8.

- Dianatfar, M.; Latokartano, J.; Lanz, M. Concept for Virtual Safety Training System for Human-Robot Collaboration. Procedia Manuf. 2020, 51, 54–60.

- Dianatfar, M.; Pöysäri, S.; Latokartano, J.; Siltala, N.; Lanz, M. Virtual reality-based safety training in human-robot collaboration scenario: User experiences testing. In Proceedings of the Modern Materials and Manufacturing 2023, Tallinn, Estonia, 2–4 May 2023; AIP Conference Proceedings. AIP Publishing LLC: Melville, NY, USA, 2024; Volume 2989.

- Monetti, F.M.; de Giorgio, A.; Yu, H.; Maffei, A.; Romero, M. An experimental study of the impact of virtual reality training on manufacturing operators on industrial robotic tasks. Procedia CIRP 2022, 106, 33–38.

- Devic, A.; Vidakovic, J.; Zivkovic, N. Development of Standalone Extended-Reality-Supported Interactive Industrial Robot Programming System. Machines 2024, 12, 480.

- Chikurtev, D. Mobile robot simulation and navigation in ROS and Gazebo. In Proceedings of the 2020 International Conference Automatics and Informatics (ICAI), Varna, Bulgaria, 1–3 October 2020; pp. 1–6.

- Orsolits, H.; Rauh, S.F.; Estrada, J.G. Using mixed reality based digital twins for robotics education. In Proceedings of the 2022 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Singapore, 17–21 October 2022; pp. 56–59.

- Nava-Téllez, I.A.; Elias-Espinosa, M.C.; Escamilla, E.B.; Hernández Saavedra, A. Digital Twins and Virtual Reality as Means for Teaching Industrial Robotics: A Case Study. In Proceedings of the 2023 11th International Conference on Information and Education Technology (ICIET), Fujisawa, Japan, 18–20 March 2023; pp. 29–33.

- Benotsmane, R.; Trohak, A.; Bartók, R.; Mélypataki, G. Transformative Learning: Nurturing Novices to Experts through 3D Simulation and Virtual Reality in Education. In Proceedings of the 2024 25th International Carpathian Control Conference (ICCC), Krynica Zdrój, Poland, 22–24 May 2024; pp. 1–6.

- Wang, P.; Bai, X.; Billinghurst, M.; Zhang, S.; Han, D.; Lv, H. An MR Remote Collaborative Platform Based on 3D CAD Models for Training in Industry. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Beijing, China, 10–18 October 2019; pp. 91–92.

- Orsolits, H.; Valente, A.; Lackner, M. Mixed Reality-Based Robotics Education—Supervisor Perspective on Thesis Works. Appl. Sci. 2025, 15, 6134.

- Barz, M.; Karagiannis, P.; Kildal, J.; Pinto, A.R.; de Munain, J.R.; Rosel, J.; Madarieta, M.; Salagianni, K.; Aivaliotis, P.; Makris, S.; et al. MASTER-XR: Mixed Reality Ecosystem for Teaching Robotics in Manufacturing. In Integrated Systems: Data Driven Engineering; Springer: Cham, Switzerland, 2024; pp. 167–178.

- Esen, Y.E.; Çetincan, B.K.; Yayan, K.; Burnak, O.; Yayan, U. XR4MCR: Multiplayer Collaborative Robotics Maintenance Training Platform in Extended Reality. SSRN Preprint. 2025. Available online: https://ssrn.com/abstract=5334736 (accessed on 15 August 2025).

- Andone, D.; Frydenberg, M. Creating Virtual Reality in a Business and Technology Educational Context. In Augmented Reality and Virtual Reality; tom Dieck, M., Jung, T., Eds.; Progress in IS; Springer: Cham, Switzerland, 2019.

- Berki, B.; Sudár, A. Virtual and Augmented Reality in Education Based on CogInfoCom and cVR Conference Insights. In Proceedings of the 2024 IEEE 15th International Conference on Cognitive Infocommunications (CogInfoCom), Hachioji, Tokyo, Japan, 16–18 September 2024; pp. 115–120.

- Rajeswaran, P.; Varghese, J.; Kumar, P.; Vozenilek, J.; Kesavadas, T. AirwayVR: Virtual Reality Trainer for Endotracheal Intubation. In Proceedings of the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Osaka, Japan, 23–27 March 2019; pp. 1345–1346.

- Hein, J.; Grunder, J.; Calvet, L.; Giraud, F.; Cavalcanti, N.A.; Carrillo, F. Virtual Reality for Immersive Education in Orthopedic Surgery Digital Twins. In Proceedings of the 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bellevue, WA, USA, 21–25 October 2024; pp. 628–629.

- van der Meijden, O.A.; Schijven, M.P. The value of haptic feedback in conventional and robot-assisted minimal invasive surgery and virtual reality training: A current review. Surg. Endosc. 2009, 23, 1180–1190.

- Toni, E.; Toni, E.; Fereidooni, M.; Ayatollahi, H. Acceptance and Use of Extended Reality in Surgical Training: An Umbrella Review. Syst. Rev. 2024, 13, 299.

- Stefan, H.; Mortimer, M.; Horan, B. Evaluating the effectiveness of virtual reality for safety-relevant training: A systematic review. Virtual Real. 2023, 27, 2839–2869.

- Mao, R.Q.; Lan, L.; Kay, J.; Lohre, R.; Ayeni, O.R.; Goel, D.P.; de Sa, D. Immersive Virtual Reality for Surgical Training: A Systematic Review. J. Surg. Res. 2021, 268, 40–58.

- Chan, V.S.; Haron, H.N.; Isham, M.I.B.M.; Mohamed, F.B. VR and AR virtual welding for psychomotor skills: A systematic review. Multimed. Tools Appl. 2022, 81, 12459–12493.

- An, D.; Deng, H.; Shen, C.; Xu, Y.; Zhong, L.; Deng, Y. Evaluation of Virtual Reality Application in Construction Teaching: A Comparative Study of Undergraduates. Appl. Sci. 2023, 13, 6170.

- Bareisyte, L.; Slatman, S.; Austin, J.; Rosema, M.; van Sintemaartensdijk, I.; Watson, S.; Bode, C. Questionnaires for Evaluating Virtual Reality: A Systematic Scoping Review. Comput. Hum. Behav. Rep. 2024, 16, 100505.

- Pavlou, M.; Laskos, D.; Zacharaki, E.I.; Risvas, K.; Moustakas, K. XRSISE: An XR Training System for Interactive Simulation and Ergonomics Assessment. Front. Virtual Real. 2021, 2, 646415.

- Van Mechelen, M.; Smith, R.C.; Schaper, M.M.; Tamashiro, M.; Bilstrup, K.E.; Lunding, M.; Graves Petersen, M.; Sejer Iversen, O. Emerging Technologies in K–12 Education: A Future HCI Research Agenda. ACM Trans. Comput.-Hum. Interact. 2023, 30, 1–40.

- Bicalho, D.R.; Piedade, J.M.N.; de Lacerda Matos, J.F. The Use of Immersive Virtual Reality in Educational Practices in Higher Education: A Systematic Review. In Proceedings of the 2023 International Symposium on Computers in Education (SIIE), Setúbal, Portugal, 16–18 November 2023; pp. 1–5.

- Wegner, K.; Seele, S.; Buhler, H.; Misztal, S.; Herpers, R.; Schild, J. Comparison of Two Inventory Design Concepts in a Collaborative Virtual Reality Serious Game. In Proceedings of the CHI PLAY ’17 Extended Abstracts, New York, NY, USA, 15–18 October 2017; pp. 323–329.

- Adel, A. The Convergence of Intelligent Tutoring, Robotics, and IoT in Smart Education for the Transition from Industry 4.0 to 5.0. Smart Cities 2024, 7, 14.

- Flowers, B.A.; Rebensky, S. Are you Seeing what I’m Seeing?: Perceptual Issues with Digital Twins in Virtual Reality. In Proceedings of the 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Christchurch, New Zealand, 12–16 March 2022; pp. 126–130.

- Serebryakov, M.Y.; Moiseev, I.S. Current Trends in the Development of Cyber-physical Interfaces Linking Virtual Reality and Physical System. In Proceedings of the 2022 Conference of Russian Young Researchers in Electrical and Electronic Engineering (ElConRus), Saint Petersburg, Russia, 25–28 January 2022; pp. 419–424.

- Tisza, J.; Ortega, D. Methodological Proposal for the Use of Digital Twins in Remote Engineering Education. In Proceedings of the 2024 IEEE 4th International Conference on Advanced Learning Technologies on Education & Research (ICALTER), Tarma, Peru, 10–12 December 2024; pp. 1–4.

- Partarakis, N.; Zabulis, X.; Zourarakis, D.; Demeridou, I.; Moreno, I.; Dubois, A.; Nikolaou, N.; Fallahian, P.; Arnaud, D.; Crescenzo, N.; et al. Physics-Based Tool Usage Simulations in VR. Multimodal Technol. Interact. 2025, 9, 29.

- Huang, T.C.; Tseng, H.P. Extended Reality in Applied Sciences Education: A Systematic Review. Appl. Sci. 2025, 15, 4038.

- Crogman, H.T.; Cano, V.D.; Pacheco, E.; Sonawane, R.B.; Boroon, R. Virtual Reality, Augmented Reality, and Mixed Reality in Experiential Learning: Transforming Educational Paradigms. Educ. Sci. 2025, 15, 303.

- Lampropoulos, G. Intelligent Virtual Reality and Augmented Reality Technologies: An Overview. Future Internet 2025, 17, 58.

- Díaz González, E.M.; Belaroussi, R.; Soto-Martín, O.; Acosta, M.; Martín-Gutierrez, J. Effect of Interactive Virtual Reality on the Teaching of Conceptual Design in Engineering and Architecture Fields. Appl. Sci. 2025, 15, 4205.

- Strazzeri, I.; Notebaert, A.; Barros, C.; Quinten, J.; Demarbaix, A. Virtual Reality Integration for Enhanced Engineering Education and Experimentation: A Focus on Active Thermography. Computers 2024, 13, 199.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).