Deepwater 3D Measurements with a Novel Sensor System

Abstract

Featured Application


1. Introduction

2. Materials and Methods

2.1. Underwater 3D Sensor System

2.1.1. Sensor Hardware

- Two monochrome measurement cameras (type Baumer VLTX-28M.I with lenses SCHNEIDER KMP-IR CINEGON 12/1.4);

- A projection unit (in-house manufactured with lens SCHNEIDER STD XENON 17/0.95);

- A color camera (type Baumer VLTX-71M.I with lens SCHNEIDER KMP CINEGON 10/1.9);

- A Fiber Optic Gyro Inertial Measurement Unit (IMU, type KVH 1750 IMU);

- Two flashlights (using Cree CXB3590 LEDs);

- An electronic control box (in-house manufactured);

- Cylindrical underwater housing for individual components;

- Wiring for power supply and data transfer.

- Size (spatial dimensions): 1.25 m × 0.7 m × 0.5 m (0.9 m × 0.7 m × 0.5 m);

- Mass: 65 kg (approx.);

- Color camera frame rate: 25 Hz at 7 Mpix resolution;

- Measurement camera frame rate: up to 900 Hz at 1 Mpix resolution;

- Three-dimensional frame rate: up to 50 Hz;

- Measurement distance: 2.0 m ± 0.4 m (1.3 m ± 0.3 m);

- Field of view at a standard distance: 0.9 m × 0.8 m (0.7 m × 0.6 m);

- Maximum diving depth: 1000 m.
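The frame rates and the measurement distance listed above imply a simple timing budget. The following sketch only restates that arithmetic. The class and function names are illustrative, and reading the ratio of the two frame rates as an upper bound on camera frames per 3D frame is an assumption, since the list does not state how many images are combined into one 3D frame.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSpec:
    """Values copied from the specification list; the names are illustrative."""
    camera_rate_hz: float = 900.0   # measurement cameras, listed upper limit
    frame3d_rate_hz: float = 50.0   # 3D frame rate, listed upper limit
    distance_m: float = 2.0         # nominal measurement distance
    distance_tol_m: float = 0.4     # tolerance around the nominal distance


def timing_budget(spec: SensorSpec) -> dict:
    """Derive per-frame timing figures from the listed maximum rates."""
    return {
        "camera_period_ms": 1000.0 / spec.camera_rate_hz,
        "frame3d_period_ms": 1000.0 / spec.frame3d_rate_hz,
        # Assumption: this is only an upper bound. The real number of images
        # per 3D frame is not given in the specification list.
        "max_images_per_3d_frame": int(spec.camera_rate_hz // spec.frame3d_rate_hz),
        "working_range_m": (
            spec.distance_m - spec.distance_tol_m,
            spec.distance_m + spec.distance_tol_m,
        ),
    }


if __name__ == "__main__":
    for key, value in timing_budget(SensorSpec()).items():
        print(f"{key}: {value}")
```

At the listed maximum rates, one 3D frame period lasts 20 ms and leaves room for at most 18 camera frames.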

2.1.2. Software Architecture

2.1.3. Graphical User Interface

2.2. Data Recording

2.2.1. Generation of Measurement Data

2.2.2. Geometric Modeling, Calibration, and 3D Data Calculation

2.2.3. Motion Compensation

2.2.4. 3D Model Generation

3. Results

- Use case 1: The complete measurement of a “large” structure (approx. 5–10 m in diameter) on the ground with a mean scanner speed of approx. 0.5 m/s;

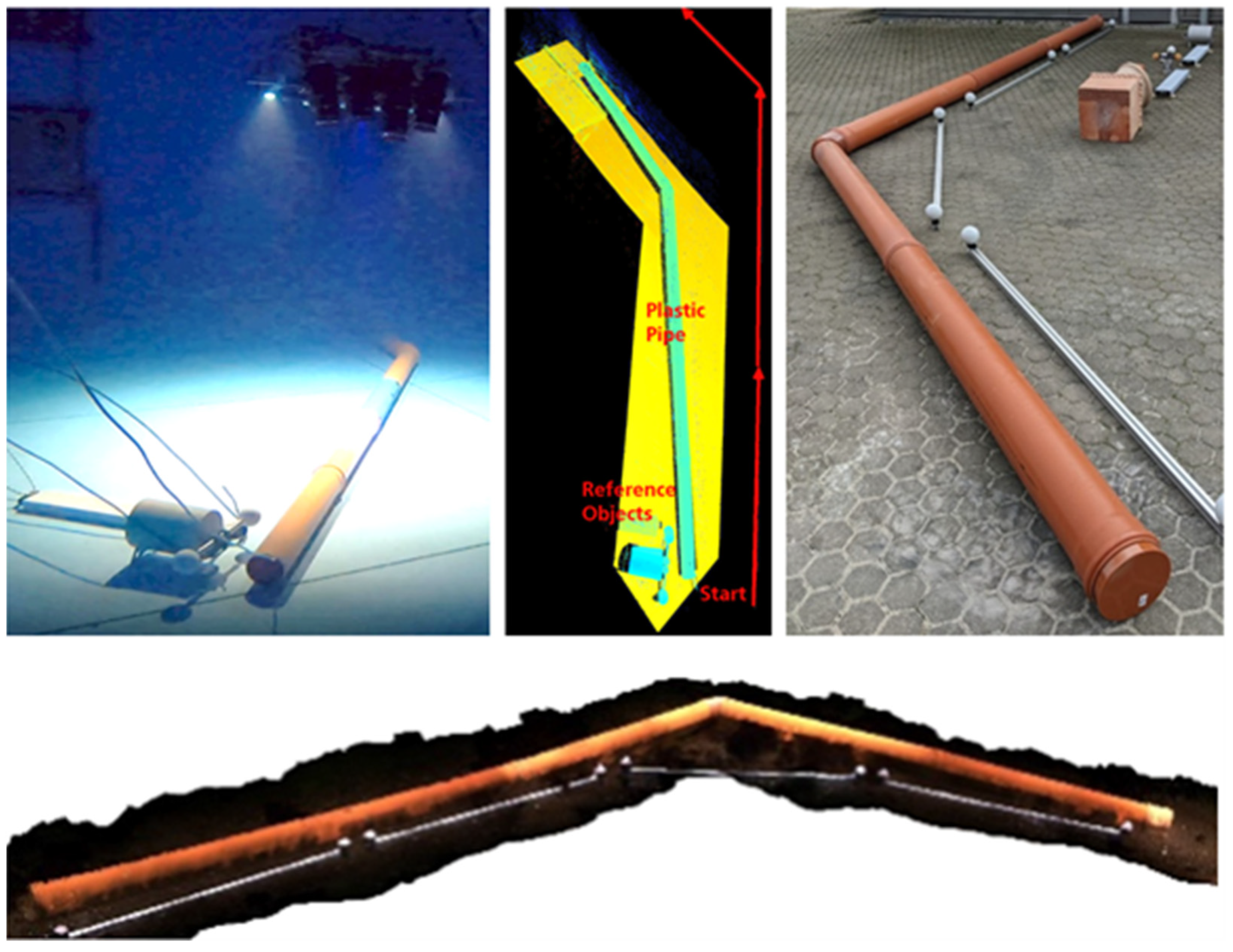

- Use case 2: The survey of a pipeline at a high, constant scanner speed of approx. 1 m/s with minimal changes in direction and an average measuring distance of 2 m;

- Use case 3: The inspection of an anchor chain or similar object at a low, constant scanner speed of approx. 0.2 m/s with minimal changes in direction.
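To relate the three scanner speeds to the sensor's frame rate, the sketch below estimates how far the scanner moves between two consecutive 3D frames and how much consecutive frames overlap. It is a rough calculation under stated assumptions: the 3D frame rate is taken at its listed maximum of 50 Hz, the 0.9 m side of the field of view is assumed to lie along the direction of travel, and the motion is assumed straight and constant. All names are illustrative.

```python
FRAME3D_RATE_HZ = 50.0   # listed maximum 3D frame rate (assumed to be reached)
FOV_ALONG_TRACK_M = 0.9  # assumption: the 0.9 m side of the field of view lies along the track

# Approximate scanner speeds taken from the three use cases (m/s).
USE_CASE_SPEEDS_M_S = {
    "use case 1 (large structure)": 0.5,
    "use case 2 (pipeline)": 1.0,
    "use case 3 (anchor chain)": 0.2,
}


def shift_per_3d_frame_mm(speed_m_s, rate_hz=FRAME3D_RATE_HZ):
    """Distance travelled between two consecutive 3D frames, in millimetres."""
    return 1000.0 * speed_m_s / rate_hz


def overlap_fraction(speed_m_s, rate_hz=FRAME3D_RATE_HZ, fov_m=FOV_ALONG_TRACK_M):
    """Fraction of the along-track field of view shared by consecutive 3D frames."""
    shift_m = speed_m_s / rate_hz
    return max(0.0, 1.0 - shift_m / fov_m)


if __name__ == "__main__":
    for name, speed in USE_CASE_SPEEDS_M_S.items():
        print(
            f"{name}: shift {shift_per_3d_frame_mm(speed):.1f} mm per 3D frame, "
            f"overlap {100.0 * overlap_fraction(speed):.1f} %"
        )
```

Under these assumptions the scanner moves between 4 mm and 20 mm per 3D frame, which is large compared with sub-millimetre measurement errors and indicates why motion has to be compensated within each frame.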

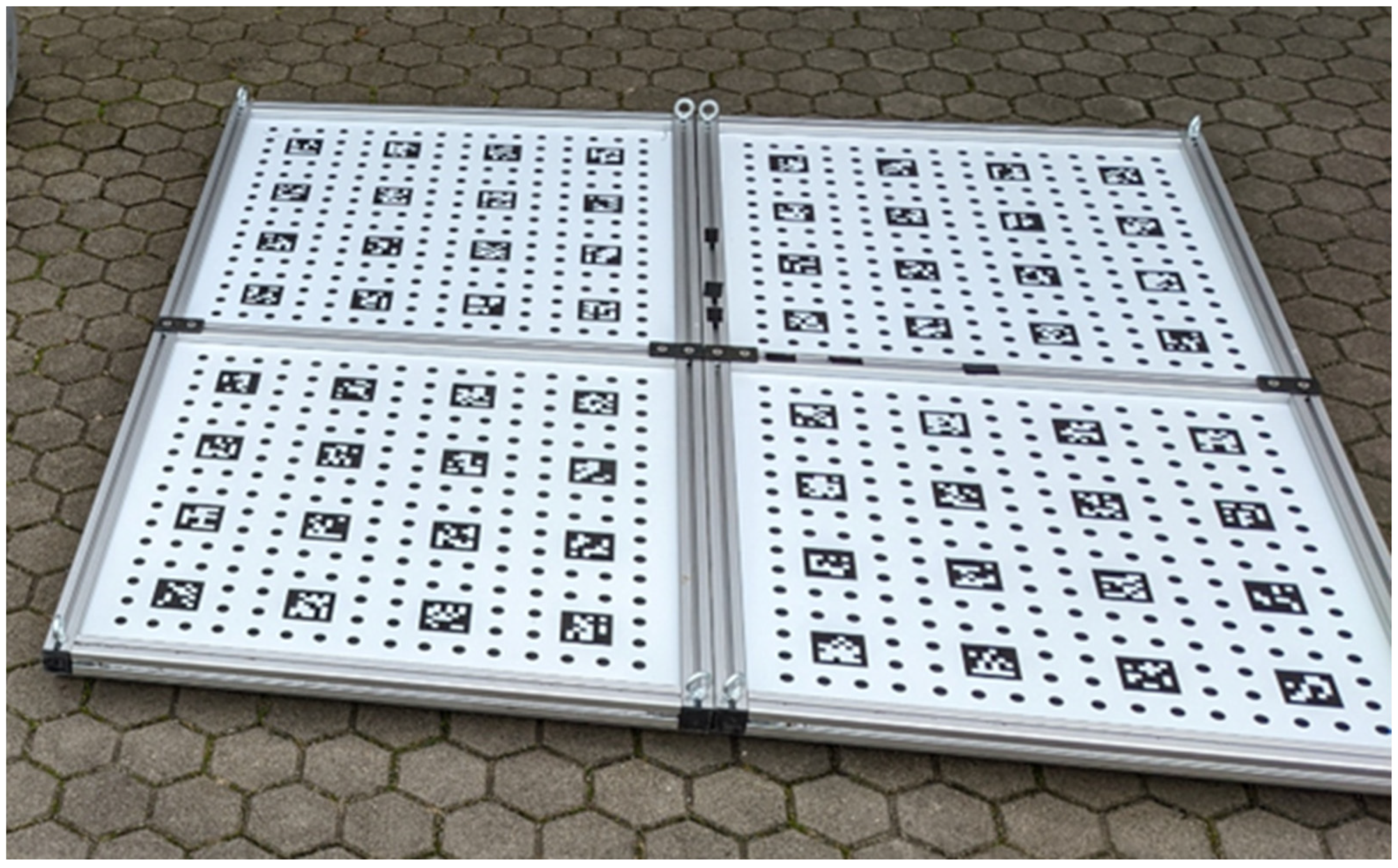

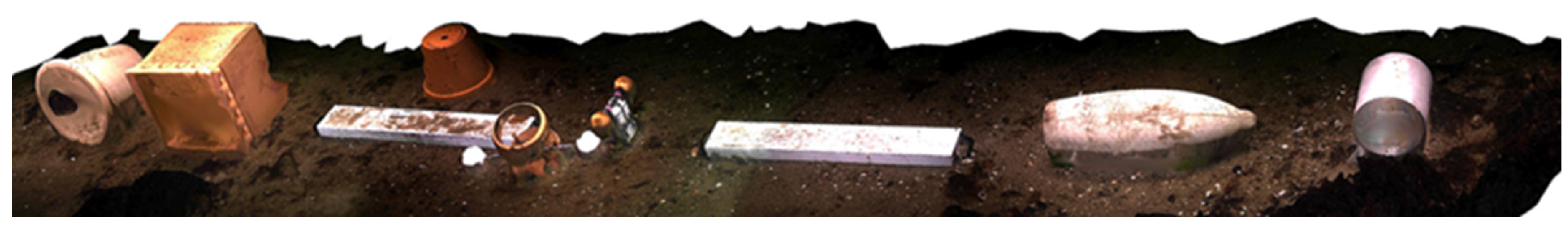

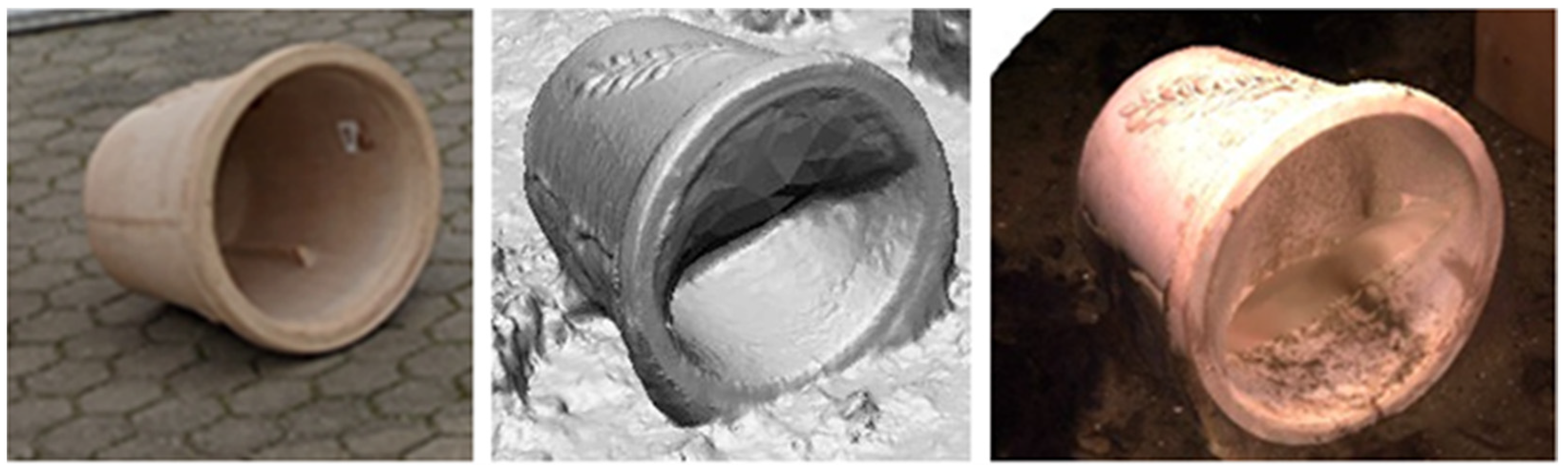

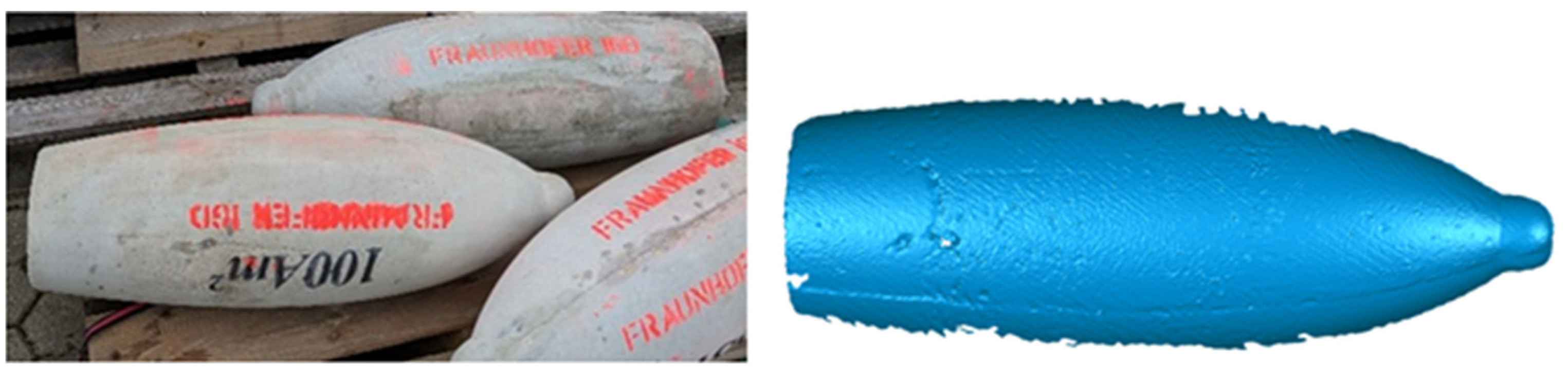

3.1. Evaluation Measurements

3.2. Offshore Measurement Examples

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Distance (m) | Error S1 (mm) | Noise S1 (mm) | Error S2 (mm) | Noise S2 (mm) |
|---|---|---|---|---|
| 1.5 ± 0.01 | 0.21 ± 0.07 | 0.07 ± 0.01 | 0.04 ± 0.01 | 0.07 ± 0.01 |
| 1.8 ± 0.01 | 0.18 ± 0.01 | 0.09 ± 0.01 | 0.02 ± 0.03 | 0.08 ± 0.01 |
| 2.1 ± 0.01 | 0.33 ± 0.01 | 0.09 ± 0.01 | 0.05 ± 0.06 | 0.09 ± 0.01 |
| 2.4 ± 0.01 | 0.37 ± 0.04 | 0.13 ± 0.01 | 0.12 ± 0.04 | 0.10 ± 0.01 |

| Distance (m) | Flatness Deviation (mm) | Noise (mm) | n |
|---|---|---|---|
| 1.7 | 0.33 ± 0.04 | 0.06 ± 0.01 | 4 |
| 2.0 | 0.37 ± 0.04 | 0.07 ± 0.01 | 4 |
| 2.4 | 0.73 ± 0.13 | 0.15 ± 0.02 | 4 |
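To compare the flatness results across distances, the mean values in the table above can be normalized by the measurement distance. The sketch below does this in parts per million. The normalization is a reader's convenience and not a figure of merit taken from the source, the uncertainties are ignored, and the function names are illustrative.

```python
# (distance in m, mean flatness deviation in mm, mean noise in mm),
# copied from the flatness table; the ± uncertainties are left out.
FLATNESS_ROWS = [
    (1.7, 0.33, 0.06),
    (2.0, 0.37, 0.07),
    (2.4, 0.73, 0.15),
]


def relative_ppm(value_mm, distance_m):
    """Express a deviation in mm as parts per million of the distance."""
    return 1e6 * value_mm / (distance_m * 1000.0)


def summarize(rows):
    """Return one dictionary per table row with distance-normalized values."""
    return [
        {
            "distance_m": distance_m,
            "flatness_ppm": relative_ppm(flatness_mm, distance_m),
            "noise_ppm": relative_ppm(noise_mm, distance_m),
        }
        for distance_m, flatness_mm, noise_mm in rows
    ]


if __name__ == "__main__":
    for row in summarize(FLATNESS_ROWS):
        print(
            f"{row['distance_m']:.1f} m: flatness {row['flatness_ppm']:.0f} ppm, "
            f"noise {row['noise_ppm']:.0f} ppm"
        )
```

Normalized this way, the flatness deviation stays below about 200 ppm of the distance at 1.7 m and 2.0 m and rises to roughly 300 ppm at 2.4 m, the far end of the stated measurement range.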


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bräuer-Burchardt, C.; Munkelt, C.; Bleier, M.; Baumann, A.; Heinze, M.; Gebhart, I.; Kühmstedt, P.; Notni, G. Deepwater 3D Measurements with a Novel Sensor System. Appl. Sci. 2024, 14, 557. https://doi.org/10.3390/app14020557
