1. Introduction

Cyber-attack techniques have advanced and diversified, creating a need for new techniques to detect attacks and threats and to predict attack patterns. Consequently, many studies have examined security technologies based on data analysis. To detect, analyze, predict, and counter security attacks and threats, researchers have studied techniques based on intelligent systems such as data mining, soft computing, artificial intelligence, and information fusion. These can be defined as security technologies based on intelligent security data analysis. Intelligent systems cover a wide range of research and technology areas, each of which has been studied for a long time, and research on security technologies based on data analysis has likewise taken various forms. This research has mainly focused on security alert correlation analysis [1], insider/outsider intrusion detection [2], malware detection, and fraud detection [3]. However, it has been difficult to apply these studies and technologies directly to security systems because of their long runtime and computational complexity.

Feature-selection methods for anomaly detection and data classification use detection precision, also known as classification precision [4]. This criterion performs well in general domains; however, in security anomaly detection, higher detection precision does not necessarily result in better performance. Characteristically, the anomaly-detection domain has a lot of normal data and little anomalous data; in other words, the data are skewed or highly imbalanced. Accordingly, the detection rate for anomalous data can be low. Alternatively, the rate at which normal data are erroneously detected as anomalous can be high despite a high overall detection rate, because it is difficult for the model to characterize anomalous data. If the rate of failing to detect anomalies or of erroneously detecting normal behavior as anomalous increases, the performance of the security system can be regarded as poor. That is, classification precision may seem to indicate high performance despite a high error rate. The acceptable detection and error rates differ depending on the environment and configuration, and proper performance should be maintained accordingly. Therefore, the most appropriate detection method, one that can increase the detection rate and reduce the error rate, should be applied in anomaly detection.
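The effect of class imbalance on overall precision can be illustrated with a small worked example. The sketch below uses hypothetical counts (990 normal and 10 anomalous records, not taken from the experiments) and a hypothetical helper named `imbalance_rates`; it only shows that a detector labeling every record as normal can still reach a high overall precision while detecting no anomalies.

```python
def imbalance_rates(n_normal, n_anomalous, detected_anomalies, false_alarms):
    """Return (overall precision, anomaly detection rate, false-alarm rate).

    All arguments are record counts; the names are illustrative assumptions.
    """
    total = n_normal + n_anomalous
    correct = (n_normal - false_alarms) + detected_anomalies
    overall = correct / total
    detection_rate = detected_anomalies / n_anomalous
    false_alarm_rate = false_alarms / n_normal
    return overall, detection_rate, false_alarm_rate


# Hypothetical skewed dataset: 990 normal records, 10 anomalous records.
# The detector labels everything "normal", so it raises no alarms at all.
overall, detection_rate, false_alarm_rate = imbalance_rates(
    n_normal=990, n_anomalous=10, detected_anomalies=0, false_alarms=0
)
print(overall, detection_rate, false_alarm_rate)  # 0.99 0.0 0.0
```

The overall figure of 0.99 hides the fact that every anomaly is missed, which is the situation the paragraph above describes.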

Principal component analysis (PCA) is a technique for reducing high-dimensional data to low-dimensional data. An orthogonal transformation converts samples in high-dimensional space that are likely to be correlated with each other into samples in low-dimensional space (principal components) that have no linear correlation. The data are linearly transformed into a new coordinate system so that, when the data are mapped onto each axis, the axis with the largest variance becomes the first principal component and the axis with the second largest variance becomes the second principal component [5]. PCA is closely related to factor analysis. Factor analysis usually involves domain-specific assumptions about the underlying structure and solves for the eigenvectors of a slightly different matrix. A dimensionality reduction method using PCA has therefore also been proposed for feature selection [6].

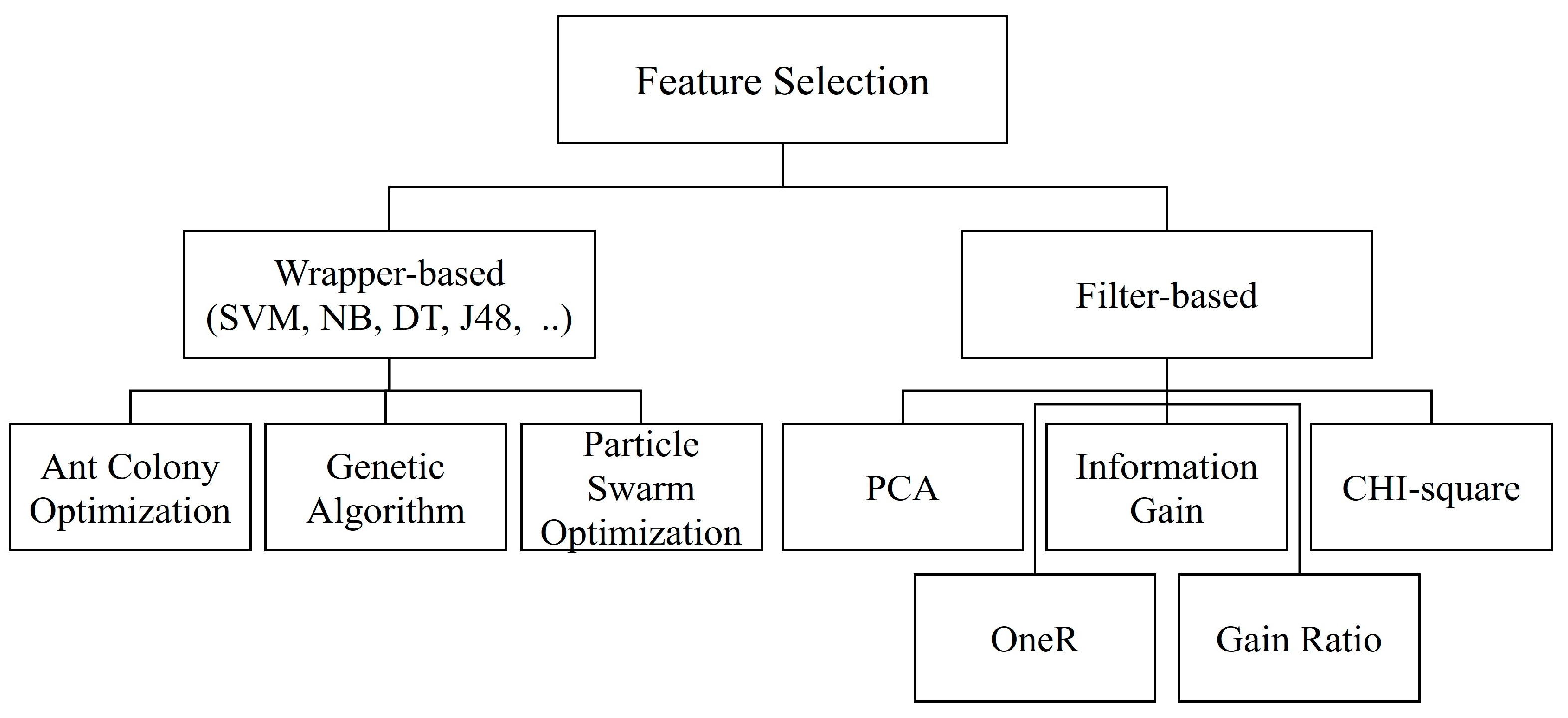

Wrapper-based feature selection [7] is a method of extracting the feature subset that performs best in terms of machine-learning prediction accuracy. In intrusion detection, the detection rate is used as the prediction accuracy. Because the best feature subset is searched for while the model is being trained, the time and cost are very high. However, it is a very desirable method for model performance because it ultimately finds the best feature subset. The parameters of the model and the algorithm must be carefully specified to find a proper best feature subset.

Optimization in the context of the feature-selection problem includes detection/analysis model optimization, security response assignment, etc. A detection or analysis model depends on the type of classifier, detection method, or dataset. It is important to find an optimized model that suits the domain and the analysis/detection methods. However, it is impossible to search exhaustively among all existing models because a multitude of models exist. An optimization algorithm can solve this problem by searching for an optimized model that suits the classifier/detector or dataset. Feature selection is the best solution for the model optimization problem [8] because it can search for both the optimized sub-feature set and the model. The model optimization problem is similar to finding an adaptive training model in learning algorithms [9].

We proposed an advanced fitness function for anomaly-detection performance. To evaluate the proposed method, we performed experiments using a genetic algorithm (GA) and machine-learning classifiers. Chromosomes were decoded according to the anomaly-detection domain to enhance the detection rate and reduce the error rate. Additionally, we proposed an improved fitness function that finds the most ideal feature set for feature selection, raising the detection rate, reducing the error rate, and enhancing detection speed using only a subset of features. This method is not dependent on a specific algorithm but can be adaptively applied to a variety of detection algorithms. It is possible to reduce overfitting with this method, as problems resulting from imbalanced and skewed data can be partially alleviated through feature selection. The proposed fitness function showed better detection performance compared to other PCA-based feature-selection methods, with only 21 features out of 41 features based on knowledge discovery and data mining (KDD) when it was GA-based. Even when only 10% to 20% of the features were used, the detection performance index showed an F1-score of approximately 0.98. The contributions of our study include the following:

Defining an advanced fitness function for an optimal intrusion-detection model using feature selection.

Technology to build an efficient detection model based on a method that can reduce the dimensions of a high-dimensional intrusion-detection dataset.

Proposal of a function method for intrusion detection usable in optimization algorithms and reinforcement learning.

Proposal of an improved method for dimensionality reduction.

This paper is organized as follows. In Section 2, several studies related to anomaly detection and proposed algorithms are introduced. In Section 3, we propose intelligent feature selection using GA for anomaly detection; Section 3 also covers the formulations and methods of the proposed algorithm. Section 4 discusses the results of the experiment performed using the proposed algorithm. Conclusions are drawn in Section 5.

3. Proposed Solution

3.1. Proposed Feature-Selection Method Based on Improved Fitness Function

In the proposed feature-selection algorithm based on an improved fitness function, an optimized partial feature set is selected to improve the anomaly-detection rate and reduce the error rate, thus improving detection performance and mitigating data imbalance. Feature selection is an optimization problem: selecting optimal partial feature sets from all feature sets, which is a nondeterministic polynomial (NP)-complete problem [35]. We used a GA as the optimization algorithm to verify the performance of the proposed fitness function. Therefore, the core elements of the proposed feature-selection algorithm are the chromosomes and the fitness function, which allow the GA to solve the given optimization problem and, in doing so, select the optimal feature set to improve anomaly-detection performance. The proposed fitness function can find the optimal solution, enabling detection performance improvement and a solution for data imbalance, which confirms that a feature subset improves anomaly-detection performance. Each process in the proposed algorithm is described below.

3.2. Chromosome Decoding

A chromosome expresses a candidate solution of a GA and is decoded according to domain-specific characteristics. To use a GA to improve anomaly detection, this study expresses each feature set as a permutation so that a partial feature set can be selected from the whole set (Figure 3). The proposed method uses a multi-chromosome representation and allows both permutation and binary decoding; hence, the user can choose the decoding that suits the purpose and the environment.

3.2.1. Permutation Decoding

In permutation decoding, each gene is a number indicating an index in a complete feature set, which is expressed by numbers along with expressions with permutations.

Each chromosome represents a selected feature subset.

The order of genes in a chromosome is not considered, and duplicate values are not permitted.

The length of the chromosome is set to the same length as that of the partial feature set.

Chromosomes are expressed as $c = (f_1, f_2, \ldots, f_m)$, where each gene $f_j$ holds the index $i$ of the $i$th feature, $i \in \{1, 2, 3, \ldots, n\}$, $n$ is equal to the number of total features, and $m \le n$ is the chromosome length. The value of each gene of a chromosome is chosen by an initialization method, which is random in the initial population step, and is located in the problem space. The length of the chromosome is the number of partial features to be selected. For example, the chromosome initialized as $c = (1, 6, 25, 3, 9)$ in Figure 3 indicates that five features (the 1st, 6th, 25th, 3rd, and 9th) are to be selected, and detection models will be generated by building training models with only these features.
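Permutation decoding can be sketched in a few lines. The helper names `init_permutation_chromosome` and `select_columns` and the single made-up record are assumptions for illustration only; the sketch draws distinct 1-based feature indices and keeps only the corresponding columns, as in the example chromosome above.

```python
import random


def init_permutation_chromosome(n_features, subset_size, rng):
    """Draw `subset_size` distinct 1-based feature indices from 1..n_features."""
    return rng.sample(range(1, n_features + 1), subset_size)


def select_columns(rows, chromosome):
    """Keep only the columns named by the chromosome (1-based indices)."""
    return [[row[i - 1] for i in chromosome] for row in rows]


# Random initialization for a 41-feature dataset and a subset of five features.
rng = random.Random(0)
random_chromosome = init_permutation_chromosome(41, 5, rng)

# The example chromosome from the text, applied to one hypothetical record
# whose 41 feature values are simply 100, 101, ..., 140.
example_chromosome = (1, 6, 25, 3, 9)
rows = [list(range(100, 141))]
reduced = select_columns(rows, example_chromosome)
print(reduced)  # [[100, 105, 124, 102, 108]]
```

Because `random.sample` draws without replacement, duplicate genes cannot occur at initialization; crossover and mutation operators would still need to preserve that property.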

The advantage of permutation decoding is that users can obtain as many feature sets as they want and operation time can be reduced, depending on the length of the chromosome, compared to binary decoding. The disadvantages are that experiments must be performed to determine the optimal size of the feature subset and that local optima can be encountered during crossover owing to the order-independent selection.

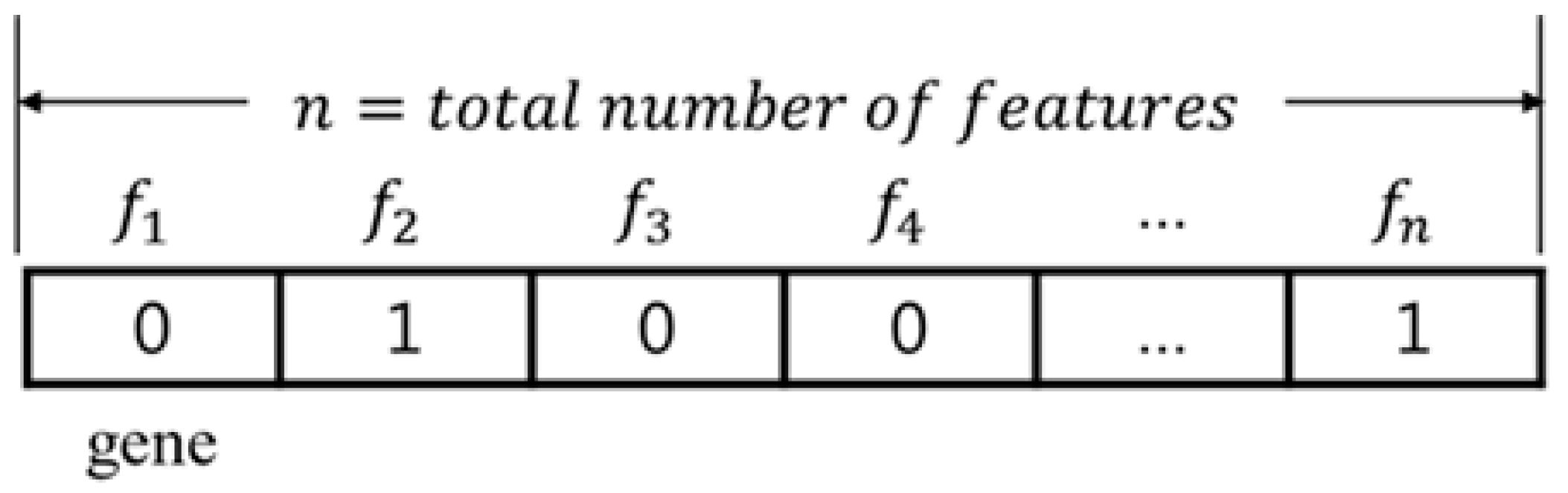

3.2.2. Binary Decoding

In binary decoding, each gene represents one feature: true indicates that the feature at that gene is selected and false marks it as unselected.

Each chromosome represents the selected feature subset.

The order of genes in a chromosome is set to the same order as the features in the dataset.

The length of the chromosome n is the same as the number of features.

A chromosome is expressed as $c = (b_1, b_2, \ldots, b_n)$, where $b_i$ expresses the $i$th feature, $i \in \{1, 2, 3, \ldots, n\}$, $n$ is equal to the number of total features, and $b_i \in \{0, 1\}$. The value of each gene is randomly chosen as true or false by the initialization method in the initial population step and is located in the problem space. The length of a chromosome is the same as the number of total features. As an example (Figure 4), the KDD Cup ’99 dataset [36] has 41 features, so if feature selection is performed for this dataset, the chromosome length becomes 41. Given a dataset having five features, $c = (1, 0, 1, 1, 0)$ indicates that the 1st, 3rd, and 4th features will be selected and detection models will be generated by building training models only with the selected features.
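Binary decoding can be sketched in the same way. The function names below are hypothetical; the sketch initializes a chromosome with one random true/false gene per feature and decodes the five-feature example from the text into the selected feature indices.

```python
import random


def init_binary_chromosome(n_features, rng):
    """One gene per feature; each gene is randomly 1 (selected) or 0 (not selected)."""
    return [rng.randint(0, 1) for _ in range(n_features)]


def decode_binary(chromosome):
    """Return the 1-based indices of the features whose gene is 1."""
    return [i for i, gene in enumerate(chromosome, start=1) if gene]


# A random chromosome for the 41 features of KDD Cup '99.
kdd_chromosome = init_binary_chromosome(41, random.Random(0))

# The five-feature example from the text.
selected = decode_binary((1, 0, 1, 1, 0))
print(selected)  # [1, 3, 4]
```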

The advantage of binary decoding is that both the optimal partial feature set and its length can be found despite the simple decoding design. Since a global optimal solution (partial feature set) can be obtained for the corresponding dataset, the feature set that shows the best performance, and its length, can be obtained. The disadvantage is that, when the number of original features in the dataset is large, the operation and search times for the optimal solution may increase abruptly since selections must be made from the full set of features.

3.3. Proposed Advanced Fitness Function for Feature Selection

In this study, an improved fitness function is proposed for the selection of optimized features; this function can address overfitting by solving the problem of anomaly-detection performance from imbalanced security datasets. In general cases, fitness is calculated using classification precision, which can improve simple detection rates but has difficulty in considering erroneous detection and incomplete detection, along with the possibility of overfitting. Lowering the erroneous detection probability is an important performance factor in anomaly detection as it is important in detecting attacks. In other words, it is necessary to reduce erroneous warnings that are issued by detecting a normal state as an anomalous state. In conclusion, it is necessary to develop a method to obtain optimal solutions that can simultaneously raise the detection rate and reduce the error rate. The fitness function proposed in this study solves the above problems. We introduce a new fitness function that uses detection probability, error detection probability, and the incomplete probability generated as a result of performing detection algorithms for the given data. The notations used are shown below.

NR = normal detection, AN = abnormal detection, FP = false positive, MD = miss detection

$c_i$ ≜ $i$th chromosome = selected sub-feature set

$N_k$ ≜ the number of $k$ data instances, $k \in \{NR, AN, FP, MD\}$

$\hat{N}_k(c_i)$ ≜ the number of predicted $k$ data instances using the selected feature set $c_i$, $k \in \{NR, AN, FP, MD\}$

$P_k$ ≜ probability of $k$, $k \in \{NR, AN, FP, MD\}$

$DR(c_i)$ ≜ detection rate using the selected feature set $c_i$

$ER(c_i)$ ≜ error rate using the selected feature set $c_i$

$\alpha$ ≜ balancing weight parameter of class imbalance

To obtain the detection rate, normal and anomaly-detection probabilities should be calculated using the formula below.

From Formulas (1a) and (1b), the detection rate can be obtained from the equation below.

Then the erroneous and incomplete detection probabilities should be obtained to calculate the error rate, which is expressed by the formulae below.

From Formulas (1d) and (1e), the error rate can be obtained from the equation below.

The balancing weight parameter corrects the value of each rate to address the class imbalance problem. It compensates for the fact that a measured class value is not evenly reflected in anomaly detection when a class is very small or very large.

The fitness function obtained from the above derived Formulas (1c) and (1f) can be expressed as follows.

Formula (1g) is expressed as an exponential function so that the fitness never takes the value 0, and it improves the handling of class imbalance by giving the error rate more weight: the total fitness decreases as the error rate increases. The two types of chromosomes are decoded as follows:

where n is the total number of features in the original set.

The fitness function in Formula (1g) has the property that the fitness value increases with the decrease in the error rate and with the increase in the detection rate. The fitness value is maximum when the error rate is 0. Therefore, it can be defined as an optimization problem whose solution has the maximum approximate value according to the fitness function.

The proposed fitness function is designed to converge to a fitness value that corresponds to high detection accuracy and low error, based on the output of the machine-learning model. High detection accuracy and low error rates can therefore be expected from the feature index set that maximizes the proposed fitness function.
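The stated properties of the fitness function can be illustrated with a stand-in. The sketch below does not reproduce Formula (1g): the weighted rates and the form `detection_rate * exp(-error_rate)` are assumptions chosen only because they share the properties described above (the value grows with the detection rate, shrinks as the error rate grows, peaks when the error rate is 0, and the exponential factor is never 0). The function names, the `weight` default of 0.5, and the counts are hypothetical.

```python
import math


def detection_and_error_rate(nr, an, fp, md, weight=0.5):
    """Weighted detection and error rates from detection counts (assumed form).

    nr: normal records detected as normal; an: anomalous records detected as anomalous;
    fp: normal records flagged as anomalous; md: anomalous records missed.
    `weight` is a hypothetical balancing weight for the anomalous class.
    """
    n_normal = nr + fp
    n_anomalous = an + md
    detection_rate = (1 - weight) * nr / n_normal + weight * an / n_anomalous
    error_rate = (1 - weight) * fp / n_normal + weight * md / n_anomalous
    return detection_rate, error_rate


def fitness(nr, an, fp, md, weight=0.5):
    """Stand-in fitness (NOT Formula (1g)): detection rate damped by exp(-error rate)."""
    detection_rate, error_rate = detection_and_error_rate(nr, an, fp, md, weight)
    return detection_rate * math.exp(-error_rate)


# Hypothetical results for three candidate feature subsets.
perfect = fitness(nr=900, an=100, fp=0, md=0)   # no errors at all
missed = fitness(nr=900, an=80, fp=0, md=20)    # some anomalies missed
noisy = fitness(nr=850, an=80, fp=50, md=20)    # missed anomalies plus false alarms
print(perfect, missed, noisy)
```

With these counts the error-free subset scores highest and each additional source of error lowers the score, which is the ordering a GA would exploit when ranking chromosomes.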

3.4. Feature-Selection Algorithm

Feature selection is performed to obtain the desired number of feature subsets through the algorithm (Algorithm 1). In addition, Algorithm 1 shows the procedure code of binary decoding feature selection. First, the population is initialized, and the fitness of each chromosome is calculated using Formula (1g). A training dataset is used in the calculation and the anomaly-detection training model is built by applying only the feature subset from the current chromosome in the detection method.

| Algorithm 1: Feature Selection Procedure (binary decoding) |

featureLength: the number of features in the dataset

finalFeatureSet: the final feature set

maxGeneration: maximum number of generations

popsize: population size

Crossover probability = 0.8

Mutation probability = 0.2

p <- initialPopulation(featureLength, popsize)

# value of each gene = 0 or 1

computeFitness(p)

# fitness function calculation using Formula (1g)

generation = 1

while (generation <= maxGeneration) {

newPop <- linearRankSelection(p)

pbxCrossover(newPop)

mutate(newPop)

p <- newPop

computeFitness(p)

generation = generation + 1

}

subFeature <- TopFeatureSet(p)

finalFeatureSet <- addFeature(subFeature)

return finalFeatureSet

Anomaly detection is then performed using only the feature subset from the current chromosome, and the fitness of the chromosome is calculated from the results. Because any anomaly-detection algorithm can be used in this step, the procedure can be applied adaptively to various anomaly-detection domains. When the fitness of all chromosomes in the population has been calculated, the next generation is produced by performing selection, crossover, and mutation according to the GA process. When the stop criterion is met, that is, when convergence is obtained or a predetermined maximum number of generations is reached, feature selection is performed using the chromosome with the current best fitness.
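The loop of Algorithm 1 can be sketched as a small, self-contained program. This is a simplified illustration rather than the authors' implementation: uniform crossover is swapped in for the position-based crossover named in Algorithm 1, mutation is assumed to flip one gene per chosen chromosome, the population size and generation count are reduced, and `toy_fitness` is a hypothetical stand-in for training a detector and scoring it with the fitness function.

```python
import random


def linear_rank_selection(population, scores, rng):
    """Resample the population; selection weight grows linearly with fitness rank."""
    order = sorted(range(len(population)), key=lambda i: scores[i])
    weights = [0] * len(population)
    for rank, idx in enumerate(order, start=1):  # worst chromosome gets rank 1
        weights[idx] = rank
    chosen = rng.choices(population, weights=weights, k=len(population))
    return [list(c) for c in chosen]  # copy so later in-place edits stay independent


def uniform_crossover(a, b, rng):
    """Swap each gene between two parents with probability 0.5 (in place)."""
    for i in range(len(a)):
        if rng.random() < 0.5:
            a[i], b[i] = b[i], a[i]


def select_features(fitness, n_features, pop_size=20, max_generation=30,
                    p_crossover=0.8, p_mutation=0.2, seed=0):
    """Return the 1-based indices of the best binary chromosome seen during the run."""
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)]
    best = list(max(pop, key=fitness))
    for _ in range(max_generation):
        scores = [fitness(c) for c in pop]
        pop = linear_rank_selection(pop, scores, rng)
        for a, b in zip(pop[0::2], pop[1::2]):
            if rng.random() < p_crossover:
                uniform_crossover(a, b, rng)
        for c in pop:
            if rng.random() < p_mutation:  # assumed: flip one random gene
                j = rng.randrange(n_features)
                c[j] = 1 - c[j]
        candidate = max(pop, key=fitness)
        if fitness(candidate) > fitness(best):
            best = list(candidate)
    return [i for i, gene in enumerate(best, start=1) if gene]


def toy_fitness(chromosome):
    """Hypothetical fitness: features 1, 3, and 4 help; every other feature costs 0.1."""
    informative = (0, 2, 3)
    hits = sum(chromosome[i] for i in informative)
    extras = sum(chromosome) - hits
    return hits - 0.1 * extras


selected = select_features(toy_fitness, n_features=8)
print(selected)
```

In a real experiment, the fitness callable would build a detection model on the training data restricted to the selected columns and return the fitness computed from its detection results.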

4. Evaluation Methodology

4.1. Environment

The hardware and operating system environment used for the experiment are described below.

The software used in the experiment was R, version 3.02 [37], and the GA package [38] was modified for the present research to execute a GA in R. Classification-based anomaly detection was performed in this experiment. The classifier used for data modeling and detection was a naive Bayes (NB) classifier [39], and the e1071 package [40] in R was used for simulation.

4.2. Dataset

The KDD Cup ’99 dataset [36], the most representative of the anomaly-detection datasets, was used for this experiment. The dataset consists of structured attack and normal data that MIT’s Lincoln Labs collected from a U.S. Air Force LAN for anomaly-detection experiments. It has been widely used in many IDS studies. It contains nearly 4.90 million records with 41 features, and experiments are generally performed using 10% of the dataset. The contents of the 41 features, which can be classified into 3 categories, are shown in [36].

In the experiments, the dataset was reconstructed to perform anomaly detection under general conditions. In the general case, normal data dominate and attack data are few, but because this dataset was made for attack classification, the number of attack records is larger than the number of normal records. Therefore, the experimental dataset was reconstructed from the 10% subset by combining its 97,278 normal records with 9918 attack records sampled at random, giving an attack ratio of approximately 10%. Although the original KDD Cup ’99 dataset classifies attacks into four groups (DoS, R2L, U2R, and probing), as shown in Table 1, the datasets for the experiment were sampled as normal data and attack data, with all attack types considered anomalous. All 41 features were used in the experiment.
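The reconstruction step can be sketched as follows. The function name `rebalance`, the label strings, and the placeholder records are assumptions for illustration; the sketch keeps every normal record and draws a random sample of attack records sized at roughly 10% of the normal records, with all attack types collapsed into one anomalous class.

```python
import random


def rebalance(normal, attacks, attack_ratio=0.10, seed=0):
    """Keep all normal records and a random attack sample of attack_ratio * len(normal)."""
    rng = random.Random(seed)
    n_attacks = min(len(attacks), round(attack_ratio * len(normal)))
    sampled = rng.sample(attacks, n_attacks)
    data = [(rec, "normal") for rec in normal]
    data += [(rec, "anomalous") for rec in sampled]  # all attack types become one class
    rng.shuffle(data)
    return data


# Placeholder records: integers stand in for 41-feature connection records.
normal_records = list(range(1000))
attack_records = list(range(5000))
dataset = rebalance(normal_records, attack_records)
n_anomalous = sum(1 for _, label in dataset if label == "anomalous")
print(len(dataset), n_anomalous)  # 1100 100
```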

4.3. Evaluation Method

4.3.1. Detection Rate

The detection rate, calculated by Formula (1), evaluates detection performance only. In general, for anomaly detection with a very large normal data class, this value cannot adequately measure performance under class imbalance.

4.3.2. F1-Measure

The F1-measure is an improved alternative to simple precision, or detection rate, for evaluating performance in tasks such as data classification, document classification, and classification-based detection. The true positive (TP), true negative (TN), false positive (FP), and false negative (FN) conditions used to obtain the precision and recall values differ conceptually from one another. When precision and recall values have been obtained, their harmonic mean is calculated with equal weight on each value [4]. If this value is high, the performance of the classifier is considered to be high. In this evaluation, the positive class is set to normal for performance evaluation.

P is precision and R is recall, which can be calculated using Formula (2a), with the F1-measure shown in Formula (2b).

TP: Normal data correctly classified as normal.

TN: Anomalous data correctly classified as anomalous.

FP: Normal data classified as anomalous.

FN: Anomalous data classified as normal.
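Assuming that Formulas (2a) and (2b) follow the standard definitions of precision, recall, and their harmonic mean, the calculation can be sketched as below. The function name and the counts are hypothetical.

```python
def precision_recall_f1(tp, fp, fn):
    """Standard precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean, equal weights
    return precision, recall, f1


# Hypothetical counts.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(p, r)  # 0.9 0.75
print(round(f1, 4))  # 0.8182
```

Because the F1-measure equals 2TP / (2TP + FP + FN), it gives the same value if the FP and FN counts are exchanged, although precision and recall individually do not.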

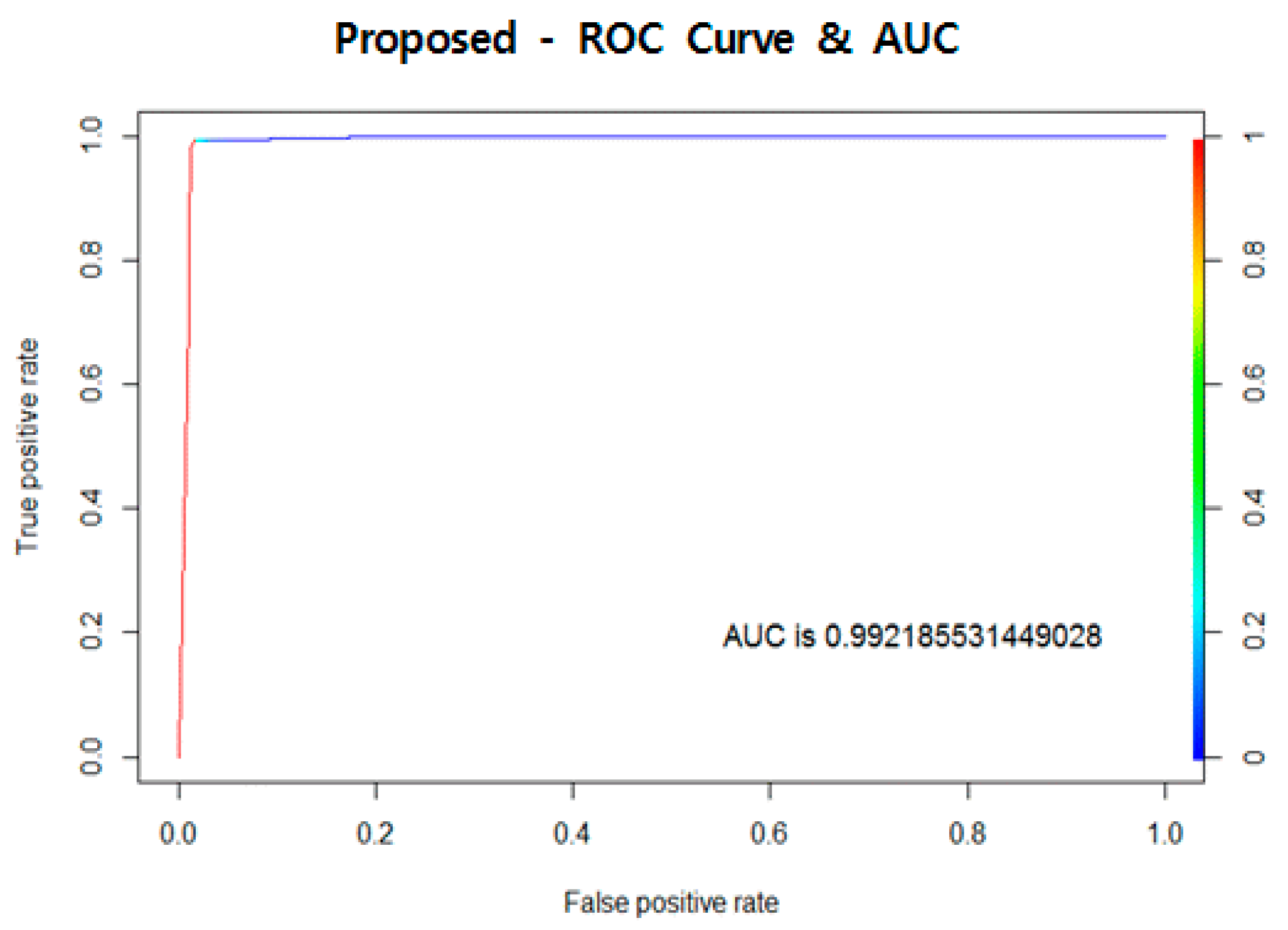

A receiver operating characteristic (ROC) curve is a measurement method used in signal processing and has been widely used as a performance evaluation method for binary classification problems, such as pattern recognition, data search, and classification [41]. The graph shown in Figure 5 can be obtained by plotting the true positive rate against the false positive rate. Performance can be regarded as high when the inflection point of the curve approaches 0 on the x-axis and 1 on the y-axis.

Therefore, in this example, C shows the best performance, and A shows the worst performance. To obtain numerical values for analysis rather than relying on visual analysis of the graph, the area under the ROC curve (AUC) is calculated. ROC calculations were performed and graphs were drawn using the ROCR package of R [42].
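How an ROC curve and its AUC are derived from classifier scores can be sketched without any plotting library. This is a plain-Python illustration, not the ROCR implementation used in the experiments; the function names, scores, and labels are made up. The threshold is swept from the highest score downward, and the area is accumulated with the trapezoidal rule.

```python
def roc_points(scores, labels):
    """ROC points (false positive rate, true positive rate) from a threshold sweep.

    labels: 1 = positive, 0 = negative; a higher score means "more likely positive".
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every record tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Hypothetical scores for three positive and three negative records.
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
points = roc_points(scores, labels)
area = auc(points)
print(round(area, 4))  # 0.8889
```

An AUC of 1 corresponds to a curve that passes through the point (0, 1), and an AUC of 0.5 corresponds to the diagonal y = x.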

4.3.3. ETS/CSI/PAG/ACC

For class imbalance problems, several measures of detection algorithm performance are available: equitable threat score (ETS), critical success index (CSI), post agreement (PAG), and accuracy (ACC) [43,44,45,46,47]. These are common methods for verifying the results of dichotomous (yes/no) forecasts in weather prediction, detection, etc. The indicators, based on the confusion matrix, are shown below (Table 2); they use the same TP, TN, FP, and FN values as the F1-measure.

The equations of methods are as follows:

where

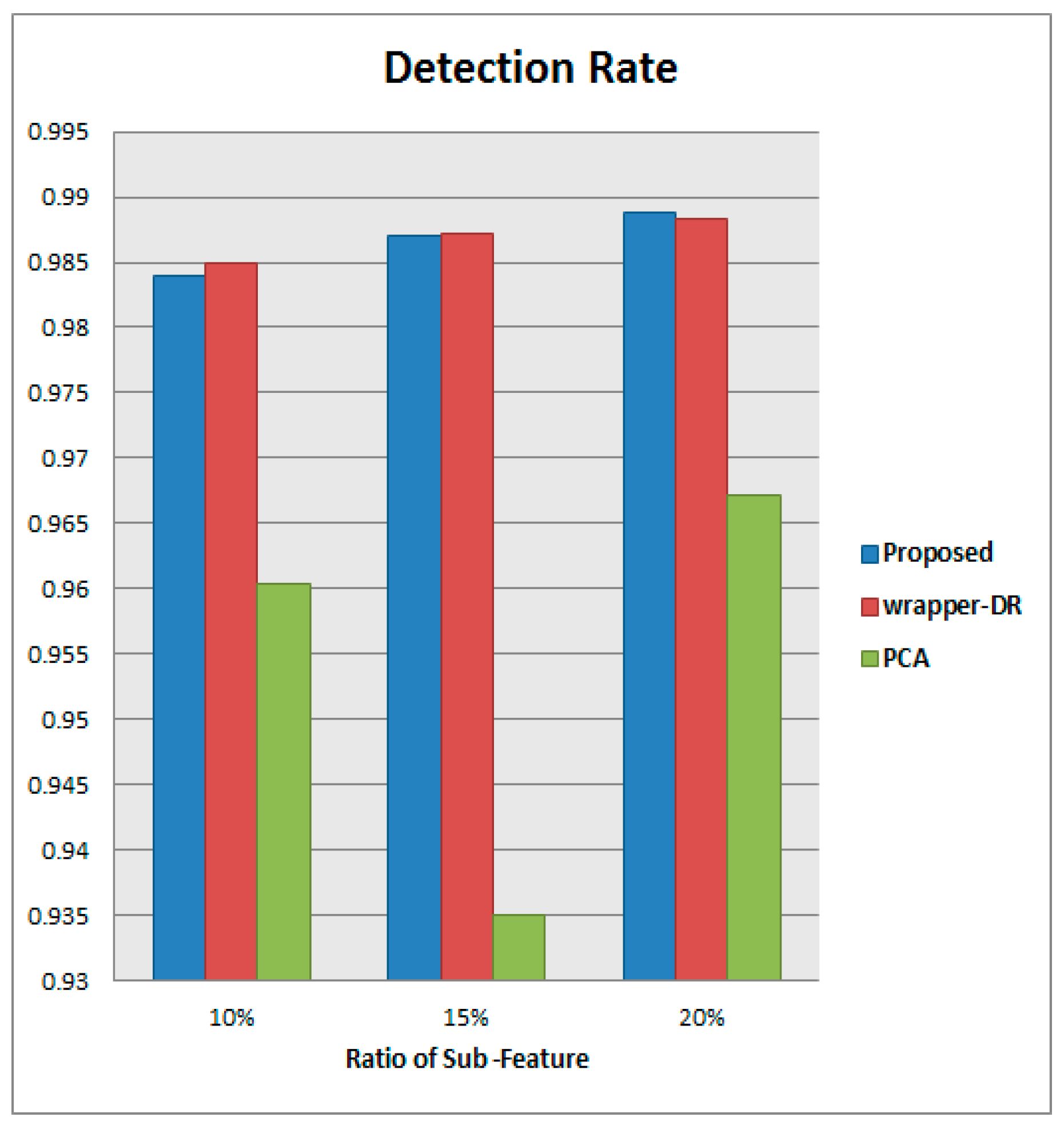
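The four scores named above have commonly used definitions in dichotomous forecast verification. The sketch below assumes those common definitions, which may differ in detail from the equations of this paper; the function name and the counts are hypothetical. ETS corrects CSI for the hits that would be expected by chance.

```python
def forecast_scores(tp, tn, fp, fn):
    """ETS, CSI, PAG, and ACC from confusion-matrix counts (commonly used definitions)."""
    total = tp + tn + fp + fn
    hits_random = (tp + fn) * (tp + fp) / total  # hits expected by chance
    return {
        "ETS": (tp - hits_random) / (tp + fp + fn - hits_random),
        "CSI": tp / (tp + fp + fn),
        "PAG": tp / (tp + fp),
        "ACC": (tp + tn) / total,
    }


# Hypothetical counts for 1000 records.
result = forecast_scores(tp=90, tn=880, fp=10, fn=20)
print({name: round(value, 4) for name, value in result.items()})
# {'ETS': 0.7248, 'CSI': 0.75, 'PAG': 0.9, 'ACC': 0.97}
```

CSI and ETS ignore the true negatives in their numerators, which is why they are less inflated than ACC when one class dominates.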

4.4. Experiment Result of Feature Selection with Permutation Decoding

In the experiment, performances were compared with detection models that use all 41 features from the KDD Cup ’99 dataset, and simple detection rate (detection precision)-based wrapper, PCA, and the proposed feature-selection methods were tested for feature selection performance comparison. For feature-selection methods, 4, 6, and 8 feature selections were performed by selecting 10%, 15%, and 20% of the total features, and they were applied to each of the 10 models for average performance comparison. An NB classifier-based detection model was used.

4.4.1. Result of Detection Rate

First, the detection rate performance was compared; the results of this comparison are shown in Table 3, and Figure 6 shows them in graphical form. The results show that the detection rates are good overall, since wrapper-DR uses the detection rate itself as the evaluation index for feature selection. When 20% of features are selected, the proposed algorithm and wrapper-DR showed very similar detection rates, 0.988781 and 0.98828, respectively. Since the proposed algorithm also converges toward solutions with a high simple detection rate as the error rate approaches 0, its results are similar to those of wrapper-DR. However, as mentioned above, the simple detection rate is not calibrated for class imbalance, so the proposed method is expected to show more objective and better results in overall anomaly-detection performance. A more precise comparison was performed using the F1-measure.

4.4.2. Result of F1-Measure

The F1-measures are shown in Table 4 and expressed graphically in Figure 7. The results show that the proposed algorithm performs better than the PCA and wrapper-DR methods in all cases, with 0.99564 at 10%, 0.996455 at 15%, and 0.996679 at 20%. Overall, its performance is higher than that of wrapper-DR by 0.95% and that of PCA by 3.76%, a larger difference than in the detection rates. Because the F1-measure is the harmonic mean of precision and recall, it reflects both values and is a more objective and comprehensive performance measure than the detection rate. The proposed algorithm therefore shows excellent detection performance as an anomaly-detection model, and this performance holds objectively in overall data analysis. The overall improvement in detection model performance is possible owing to the reduced error rate of the proposed algorithm, which guides the search toward the optimal solution and mitigates the class imbalance problem. The experiment also shows that PCA does not achieve optimal feature selection, although it is the fastest method because it selects features using the simple model of principal component analysis.

Finally, the average detection rate and F1-measure obtained with the 10–20% feature subsets selected by each algorithm were compared with those obtained using all 41 features. Across all of the feature-selection algorithms, performance improved greatly compared to using all features: by about 49.5%, on average, in detection rate and by about 15.3% in F1-measure. The performance evaluation therefore shows that feature selection mitigates the problem of imbalance in the detection model and improves the appropriateness of the model, and that our algorithm can enhance the performance of the detection model. The proposed algorithm improved the detection rate by 50.11% and the F1-measure by 16.43% compared to using the entire feature set, exceeding the other algorithms, and can therefore be considered well suited to anomaly-detection models.

Therefore, the feature-selection approach affects the time needed to investigate traffic behavior and improves the accuracy level. Machine learning-based anomaly-detection and intrusion-detection models play an important role in modern security systems, and many commercial solutions already apply them in major functions. A machine learning-based detection model must detect anomalies and attacks from large datasets, and modern detection systems must detect anomalies and attack behaviors from high-dimensional data that include various features collected from a wider range of traffic and various security sensors. All features carry some meaning, but the extent of their effect on performance differs depending on the characteristics of the detection model. Therefore, it is important to improve accuracy and speed through techniques such as dimensionality reduction and feature selection. Metaheuristic algorithms such as GA have long been used for such feature selection, and their performance has been demonstrated in several studies. Various studies have shown that feature-selection methods affect the time needed to investigate traffic behavior and improve the accuracy level [48].

Figure 8 shows the average experimental results for the 10–20% feature selections in terms of detection rate and F1-measure. In the experiment in this paper, using feature selection performed better than using all features (Figure 8), and the difference was significant.

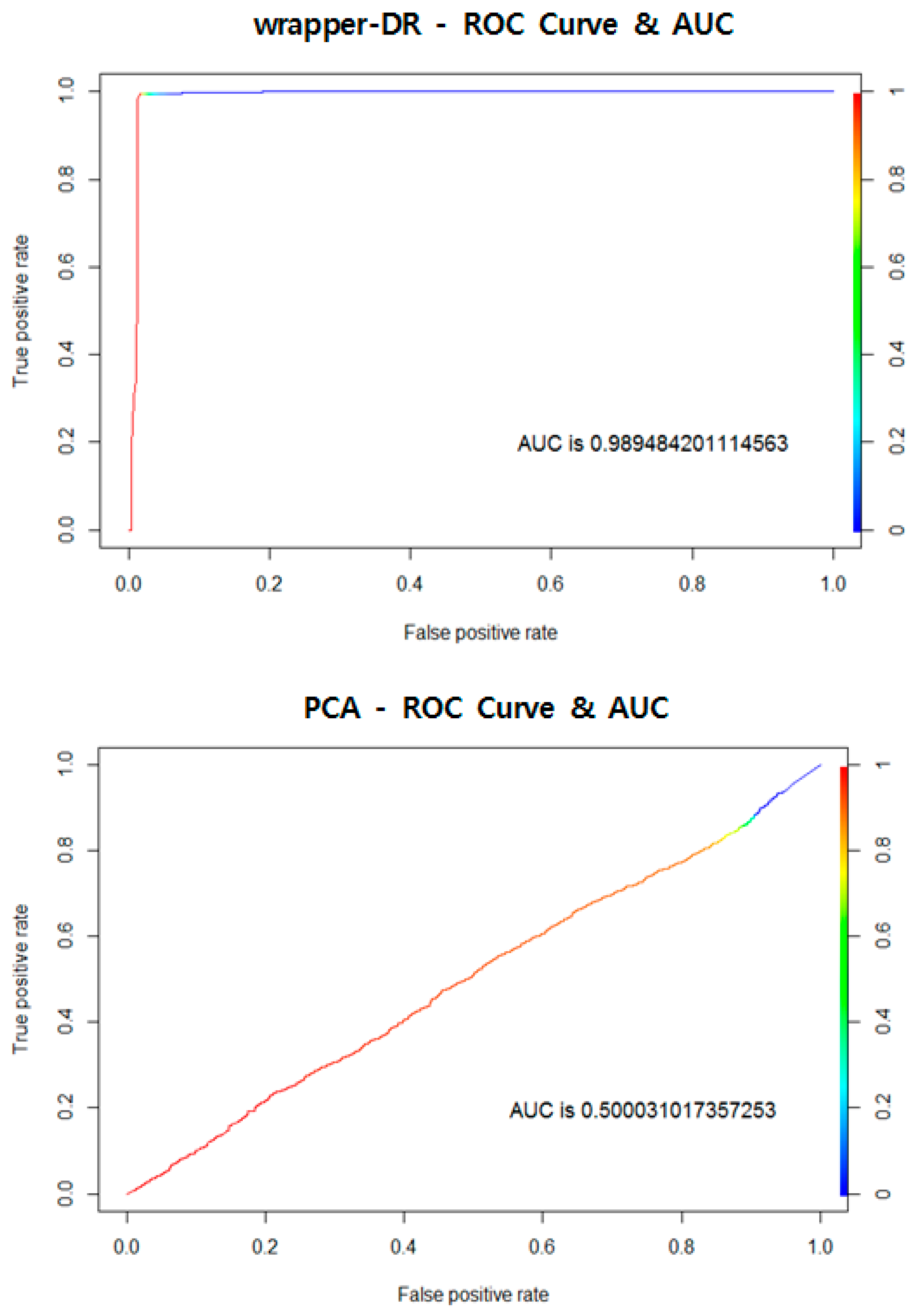

4.4.3. Result of ROC Curve

Performance evaluation using the ROC curve and AUC was performed as the next evaluation method. Figure 9 shows ROC curves for the proposed algorithm, wrapper-DR, and PCA, with the AUC results shown on the lower right. Visual analysis confirmed that the proposed method and wrapper-DR performed excellently, with curves approaching 0 on the x-axis and 1 on the y-axis. PCA, on the other hand, showed an ROC curve close to the line y = x, implying poor performance. The AUC results show that the proposed algorithm performed better by about 0.0005, with our algorithm at 0.9899 and wrapper-DR at 0.9894. PCA was excluded from this comparison since its AUC was poor, at close to 0.5. Although large differences were not seen in the ROC curves, the proposed method achieved the best numerical performance, supporting its effectiveness.

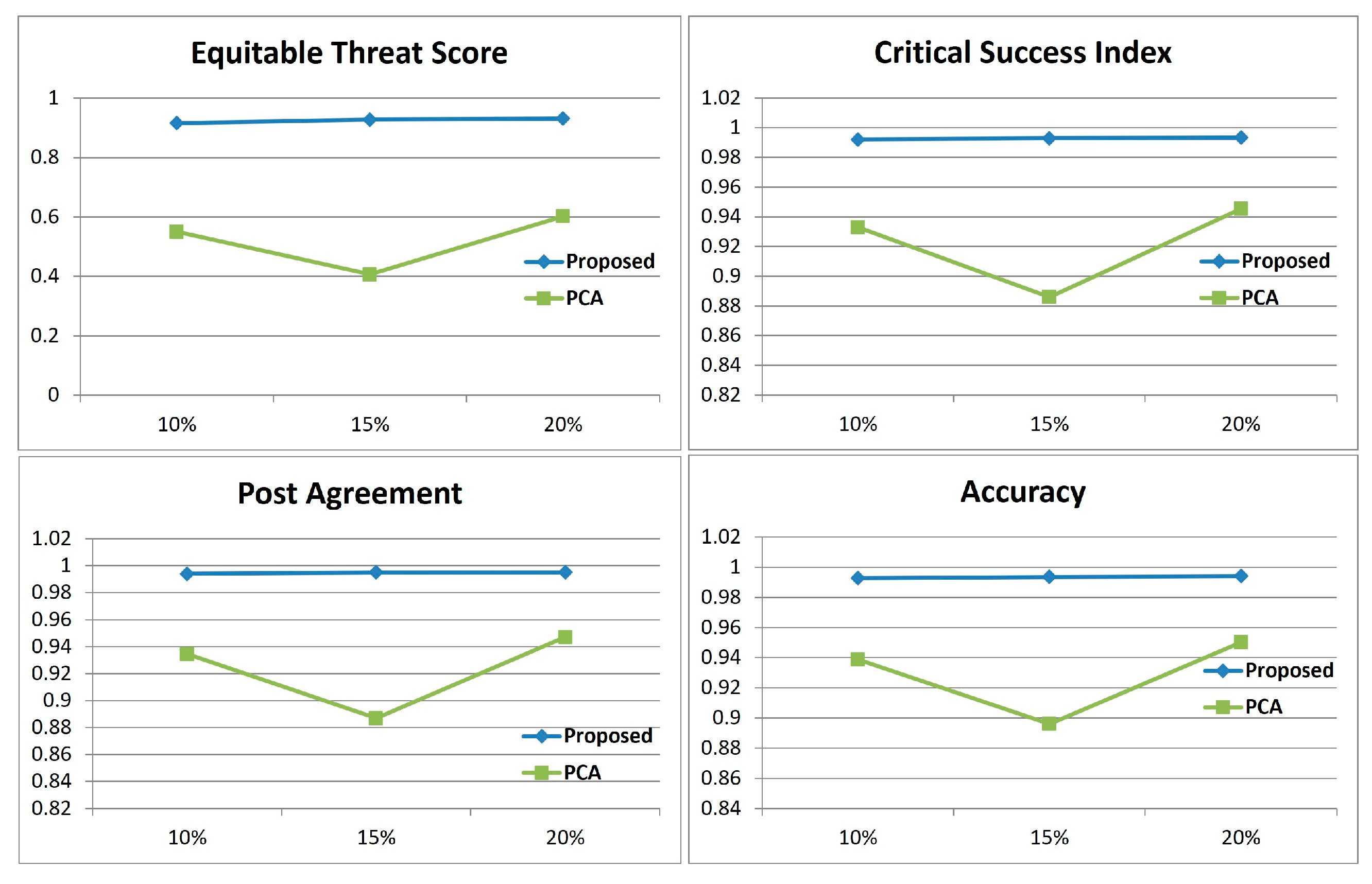

4.4.4. Result of ETS, CSI, PAG, ACC

The results of ETS/CSI/PAG/ACC are shown in Table 5 and expressed graphically in Figure 10 (Proposed vs. wrapper-DR) and Figure 11 (Proposed vs. PCA). The ETS, CSI, PAG, and ACC results show that the proposed algorithm performs better than the PCA and wrapper-DR methods in all cases, with 0.9160, 0.9920, 0.9940, and 0.9927 at 10%; 0.9279, 0.9929, 0.9950, and 0.9935 at 15%; and 0.9318, 0.9933, 0.9951, and 0.9942 at 20%. The ETS and CSI scores reflect that the algorithm addresses the class imbalance problem and searches for the optimal forecast model. These results indicate that the proposed algorithm performs better than the others in searching for optimal detection models. The boxplot analysis (Figure 10) shows that the proposed algorithm has less deviation between repeated experiments, that is, more consistent performance indicators than wrapper-DR.

4.5. Experiment Results of Feature Selection with Binary Decoding

In the binary decoding experiment, detection models using all 41 KDD Cup ’99 features were compared with the wrapper feature-selection method based on the simple detection rate (detection precision) and with the proposed feature-selection method. PCA was excluded from the experiment because, unlike in permutation decoding, the number of features to select cannot be assigned in advance. The algorithm was applied to each of the 10 models in the same way to compare average performances, and an NB classifier-based detection model was used.

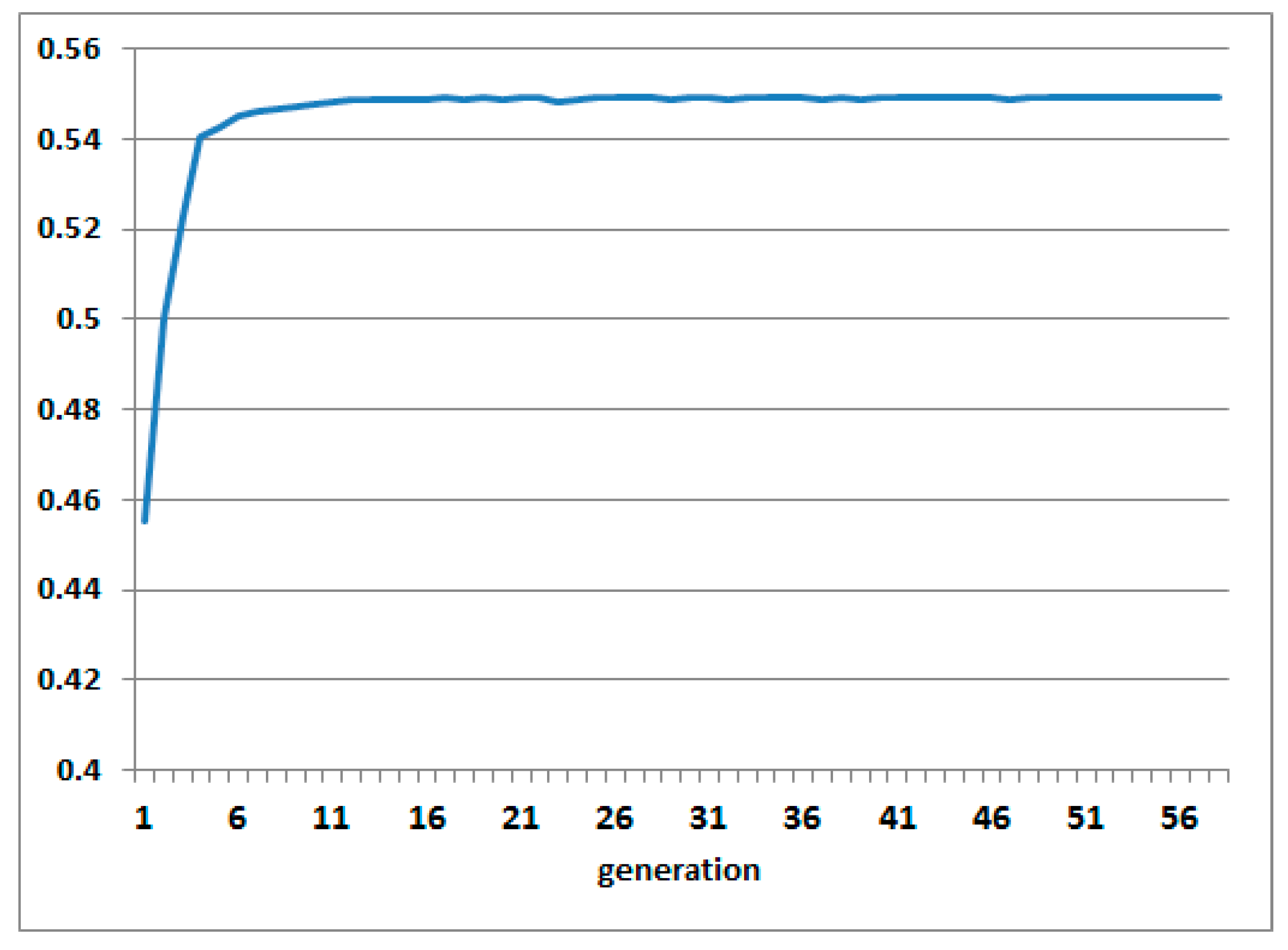

4.5.1. Analysis of Fitness Curve

Figure 12 shows the fitness curves of the proposed algorithm for binary decoding. In this experiment, the GA used a population size of 100. Our algorithm found the optimal model in approximately 15 generations, with the fitness value increasing from approximately 0.45 and converging at approximately 0.55. Therefore, our proposed algorithm solves the detection model optimization problem and achieves good results.

4.5.2. Result of Detection Rate and F1-Measure, ETS/CSI/PAG/ACC

The detection rate and F1-measure for this experiment are given in Table 6 and are expressed as a graph in Figure 13. As in the previous experiments, the proposed algorithm achieved a better detection rate and F1-measure than wrapper-DR. The performance difference in F1-measure was somewhat larger than that in the detection rate, with values of 0.000876 and 0.000402, respectively. The proposed algorithm showed better performance overall in binary decoding.

To objectively compare the model performance, published research datasets should be used. In the experimental dataset for research, there is a part where the data for each normal and attack class is biased; therefore, in general, the performance index of the measure of the classification model is already high. However, the proposed method attempted to obtain a more optimized feature set by reflecting the error rate, and the experimental results are considered appropriate for verification. Better results are expected for multi-object classification or less biased datasets.

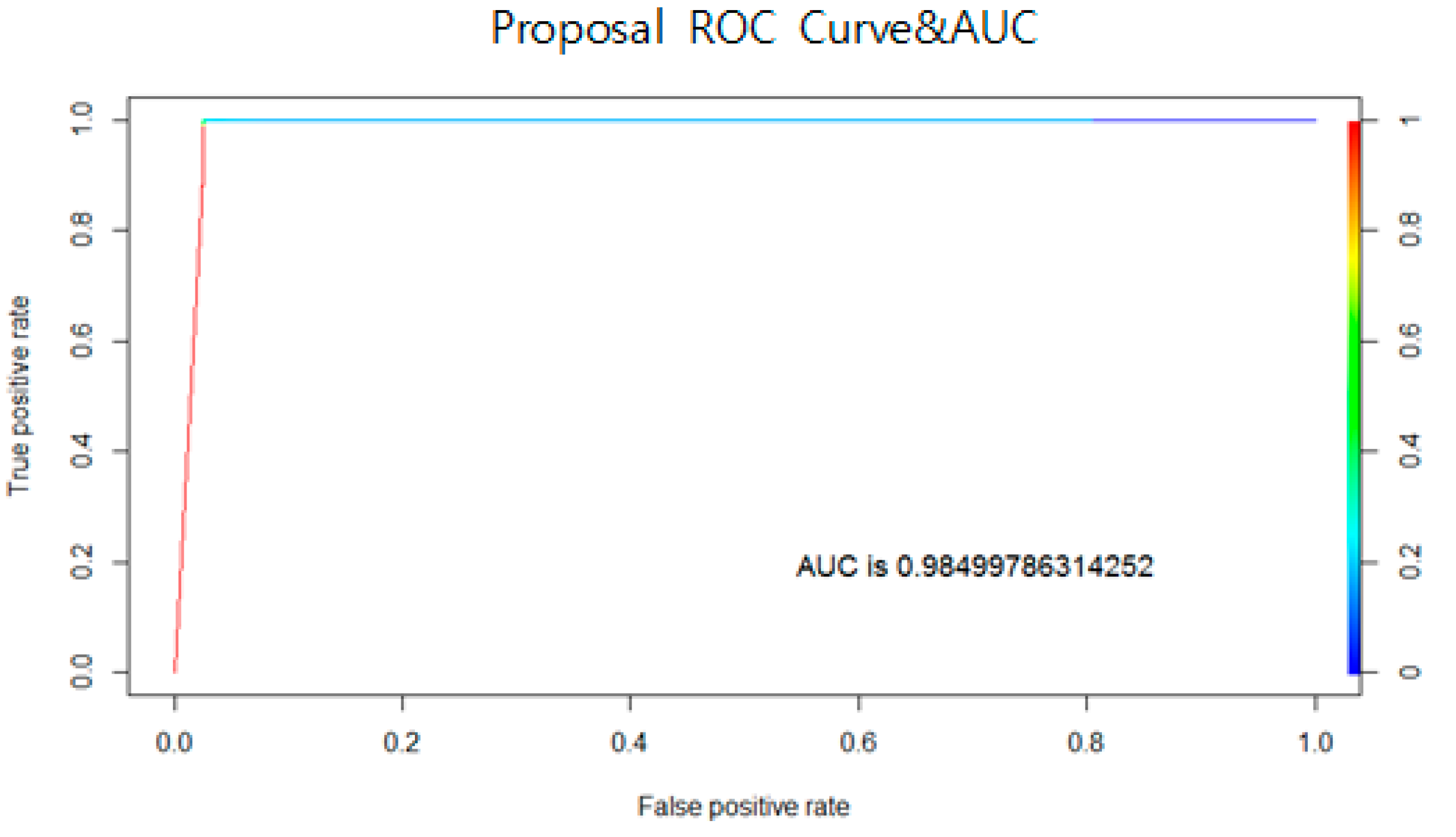

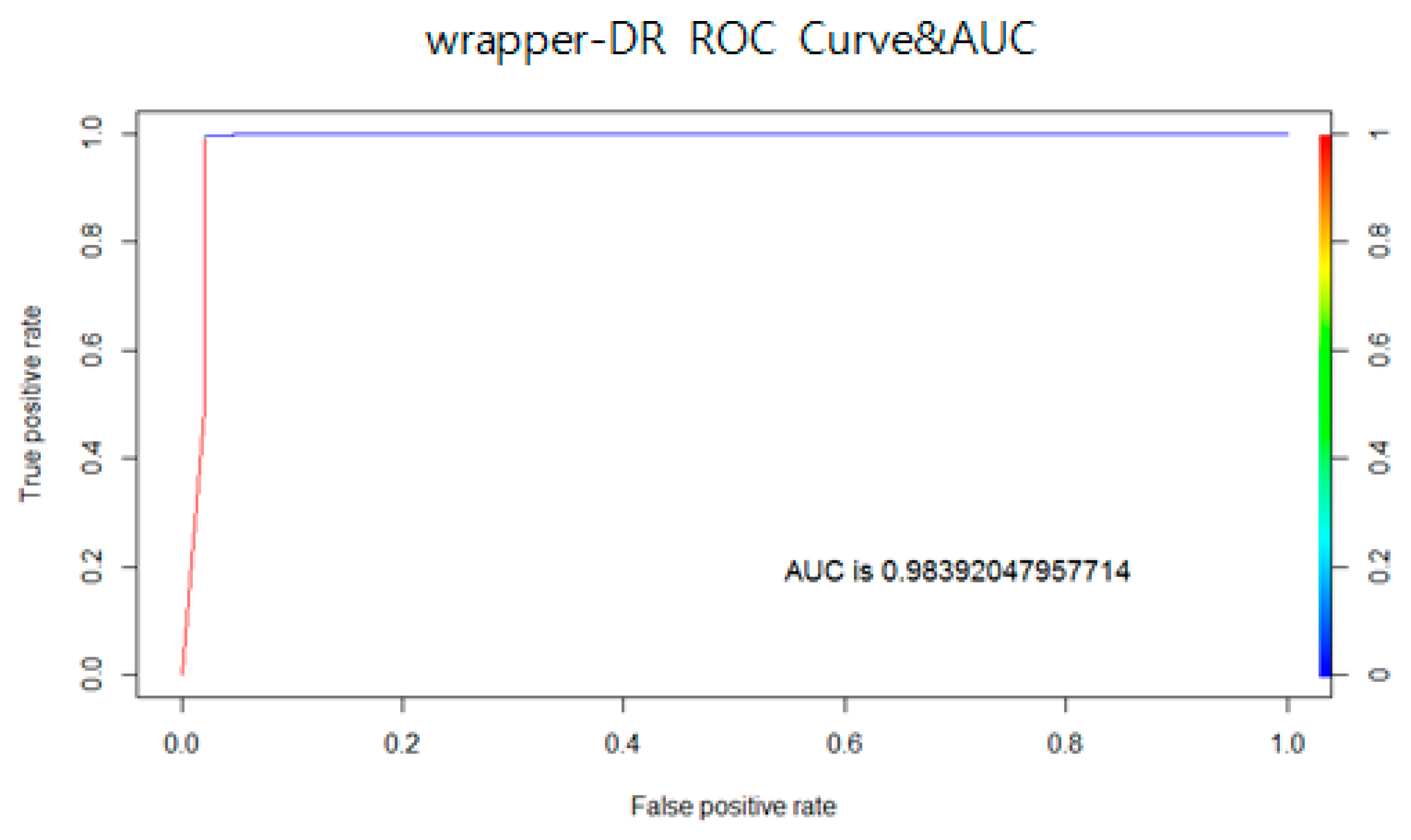

4.5.3. Result of ROC Curve

Figure 14 and Figure 15 show the ROC curves and AUC calculations for the proposed algorithm and wrapper-DR, respectively. As before, visual analysis shows curves indicating excellent performance for both methods, with inflection points close to 0 on the x-axis and 1 on the y-axis. According to the AUC results, the proposed algorithm performed better, with 0.984997 compared with 0.98392 for wrapper-DR, a difference of approximately 0.001. The experiment showed that the proposed algorithm performed better overall in binary decoding than in permutation decoding.
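The AUC values above summarize the area under each ROC curve. The following is a minimal sketch of how such a curve and its area can be obtained from detector scores, assuming a score-threshold sweep and the trapezoidal rule; the function names and the sample scores are illustrative and are not the experimental data.

```python
def roc_points(scores, labels):
    """Return (FPR, TPR) points obtained by lowering a threshold over the scores.

    labels use 1 for attack and 0 for normal; both classes must be present.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every record tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
labels = [1, 1, 0, 1, 0, 0]
print(auc(roc_points(scores, labels)))
```

A curve that reaches a true-positive rate of 1 while the false-positive rate is still near 0 encloses almost the whole unit square, which is why both methods report AUC values close to 1.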

4.6. Result of Selected Sub-Feature Set

The advantage of binary decoding is that the algorithm creates a feature set with the optimal number and combination of elements without the number of features having to be specified. Therefore, the user does not need to assign the most appropriate number of features. The feature sets selected by each algorithm that showed the best experimental performance are listed in Table 7. For the same number of generations, the best-performing feature set contained 18 features for the proposed method and 21 for wrapper-DR. This confirms that the proposed method can find an optimized subset of features with an efficient length and the best performance. Based on the results of the previous experiments and feature selections, the performance of the proposed algorithm is expected to exceed that of the other algorithms as the amount of data or the number of features increases. As datasets become larger, error rates and imbalances generally increase, and methods to improve these conditions are needed. Our proposed algorithm can improve detection-model performance because it achieves excellent overall detection and error rates compared with PCA and with methods that reflect only the detection rate.
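Binary decoding, as described above, treats each chromosome as a bit mask over the feature list, so the number of selected features follows from the mask itself. A minimal sketch, assuming one bit per feature in column order (the helper names are hypothetical, and the five feature names are a small illustrative subset of the KDD Cup ’99 columns):

```python
from typing import List, Sequence


def decode_binary(chromosome: Sequence[int],
                  feature_names: Sequence[str]) -> List[str]:
    """Map a 0/1 chromosome to the names of the selected features."""
    if len(chromosome) != len(feature_names):
        raise ValueError("chromosome length must equal the number of features")
    return [name for bit, name in zip(chromosome, feature_names) if bit == 1]


def project(rows: Sequence[Sequence[float]],
            chromosome: Sequence[int]) -> List[List[float]]:
    """Keep only the columns whose bit is set, ready for model training."""
    keep = [i for i, bit in enumerate(chromosome) if bit == 1]
    return [[row[i] for i in keep] for row in rows]


names = ["duration", "protocol_type", "service", "flag", "src_bytes"]
mask = [1, 0, 1, 0, 1]
print(decode_binary(mask, names))            # selected feature names
print(project([[0, 1, 2, 3, 4]], mask))      # reduced record
```

With this encoding, the GA never needs a target subset size: a mask with 18 ones simply yields an 18-feature model.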

In this study, we proposed a GA-based feature-selection algorithm for an improved anomaly-detection system. It is a wrapper-based feature-selection algorithm that improves the anomaly-detection rate and error rate and addresses data imbalance in structured data. A GA was applied to search for optimal solutions, and the optimization problems were formulated together with improved fitness functions for solving them. In addition, the GA structure was redesigned to fit each feature-selection method and detection purpose, and a feature-selection algorithm for selecting the final features was proposed.

The proposed algorithm was experimentally shown to provide excellent performance compared with algorithms using the entire feature set, and its performance improvement over conventional methods was confirmed. The results show that the proposed algorithm performs better than the PCA and wrapper-DR (which considers only detection rates) methods in all cases, with 0.99564 at 10%, 0.996455 at 15%, and 0.996679 at 20%. Overall, its performance is higher than that of wrapper-DR by 0.95% and that of PCA by 3.76%, differences that are larger than those in the detection rates. In binary decoding, the proposed fitness function, when used with the GA, showed better detection performance than other PCA-based feature-selection methods while using only 21 of the 41 KDD features. In addition, even when only 10% to 20% of the features were used, the F1-score was approximately 0.98. We proposed an optimization method for solving the problem of data imbalance in anomaly detection and verified that our algorithm performs well with fewer features than other algorithms.

4.7. Result of Binary Decoding with Other Intrusion Detection Dataset: NSL-KDD, CIC-IDS2017

The kddcup99 is a commonly used dataset for benchmarking IDS detection performance. Other datasets are the NSL-KDD and CIC-IDS 2017 datasets [

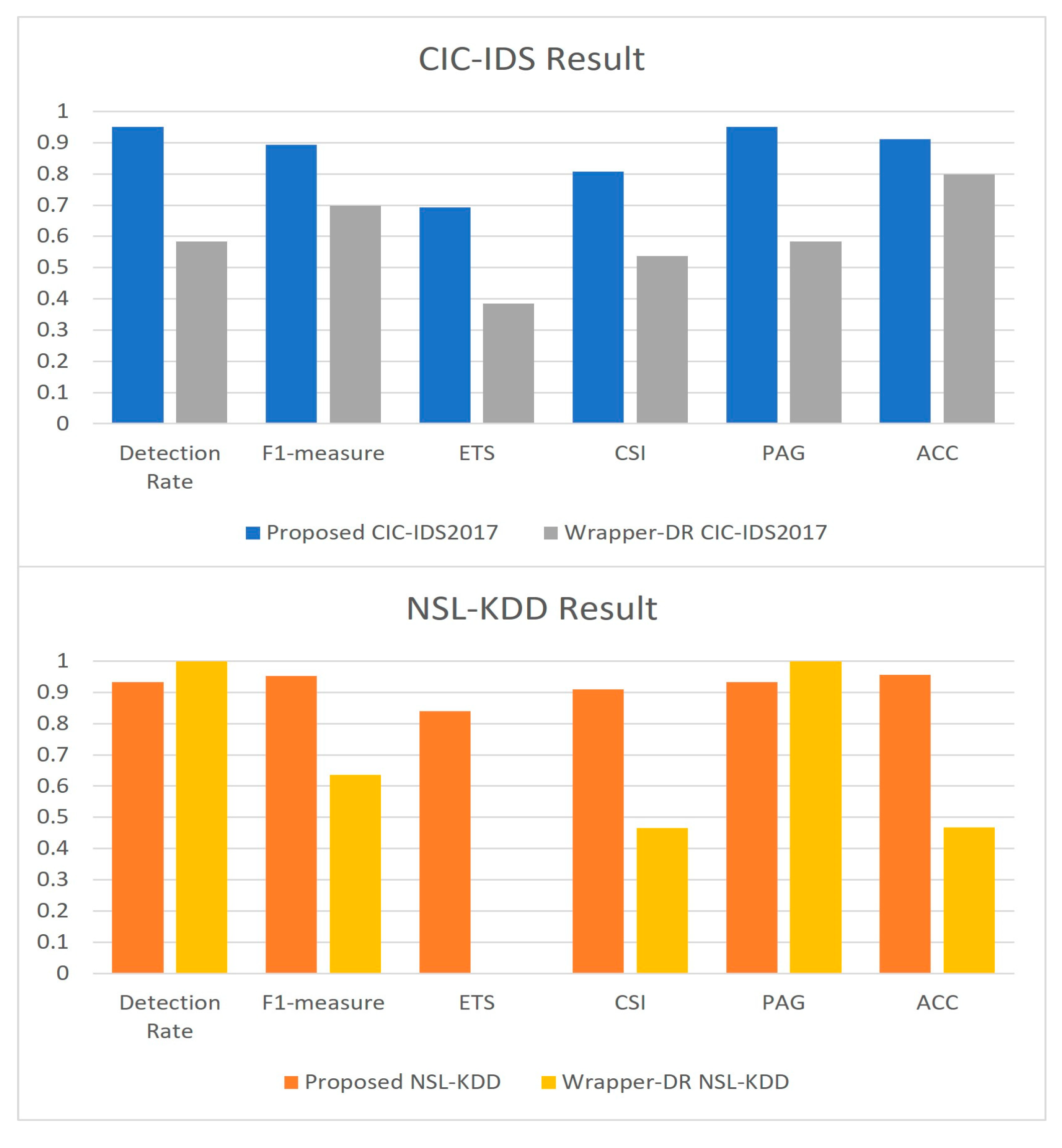

49]. The NSL-KDD is an updated and improved dataset of the existing KDD99 dataset. The basic structure is the same as KDD99. The CIC-IDS 2017 dataset contains normal and the most up-to-date common attacks, which resembles the true real-world data (PCAPs). It also includes labeled flows based on the time stamp, source and destination IPs, source and destination ports, protocols, and attack. For machine learning model benchmarking, MachineLearningCSV.zip is provided separately, and we conducted additional experiments with this dataset. MachineLearningCSV consists of 78 features excluding class labels. A dataset consisting of 104,255 records sampled from 10% of the entire dataset was used. The training set and the test set were split at a ratio of 9:1. To verify the feature selection performance from high-dimensional data, the proposed method based on binary decoding and the wrapper-DR method were compared.
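The data preparation described here (a 10% sample followed by a 9:1 train/test split) can be sketched as below. The text does not say whether sampling was stratified by class, so this sketch assumes simple random sampling; `sample_and_split` and its parameters are hypothetical names.

```python
import random


def sample_and_split(records, sample_frac=0.10, train_frac=0.9, seed=0):
    """Draw a random sample of the records, then split it into train and test.

    Assumption: plain random sampling without stratification by class label.
    """
    rng = random.Random(seed)
    sample_size = round(len(records) * sample_frac)
    sampled = rng.sample(records, sample_size)  # sampling without replacement
    cut = round(len(sampled) * train_frac)
    return sampled[:cut], sampled[cut:]


# Illustrative stand-in for the record list: 1,000 integer ids.
train, test = sample_and_split(list(range(1000)))
print(len(train), len(test))
```

For a heavily imbalanced dataset, a stratified variant that samples each class separately would keep rare attack classes represented in both splits.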

Table 8 shows the results of the experiments with the CIC-IDS 2017 and NSL-KDD datasets. The proposed method showed an F1-measure of 0.9511 (CIC-IDS 2017)/0.9523 (NSL-KDD), 0.1952/0.3165 points higher than wrapper-DR’s 0.5840, and it outperformed wrapper-DR in all performance metrics except detection rate.

Figure 16 shows the experimental results as a bar chart.

In the experiments on the CIC-IDS 2017 dataset, which has a higher dimension (more features) than the kddcup99 dataset, the proposed method showed a larger performance difference. This confirms that the proposed fitness function allows the optimization algorithm to search for the optimal feature set even in a high-dimensional dataset.

Table 9 shows the feature sets selected by the proposed method and by the wrapper-DR method on the CIC-IDS 2017 dataset.

In this paper, because the fitness function is central to the use of a GA, a fitness function better suited to detection systems was studied, proposed, and verified through various experiments and performance indicators. In the relatively high-dimensional CIC-IDS2017 dataset, the proposed algorithm showed a more significant performance improvement than in the low-dimensional data [11]. Modern security datasets are becoming increasingly high-dimensional, so analysis using all features is increasingly difficult. If a detection model is built with an optimized dataset composed of key features, both detection performance and real-time detection speed can be secured in operation. Although feature selection incurs overhead, the time it requires can be reduced by selecting the main features from a sampled dataset and by using techniques such as parallel processing.

4.8. Time Complexity of Proposed Algorithm

The proposed algorithm has a time complexity of O(time complexity of the optimization algorithm × time complexity of the detection model). In the experimental case, the time complexity is $O(n^{2} \cdot mF)$, because the GA has $O(n^{2})$ without the time complexity of the fitness function [50]. The fitness function of our algorithm differs in that it uses the detection model (classification based). The time complexity of the proposed algorithm is therefore $O(n^{2} \cdot mF)$, because the NB model is used in the fitness function and NB has $O(mF)$ [51], where $m$ is the number of instances and $F$ is the number of features. If the numbers of instances and features in the dataset increase, the computation time of our algorithm will increase dramatically. Therefore, the implementation of the algorithm requires batch, parallel, and distributed processing such as Hadoop and MapReduce [52,53]. However, the computation time can be reduced by using the sub-features selected by the feature-selection algorithm, because $F$ in $O(mF)$ depends on the number of features.
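As a rough worked illustration of this last point, assume the NB cost is exactly proportional to $mF$ with a hypothetical constant $c$ (the constant and the notation $T_{\mathrm{NB}}$ are introduced here only for the example). Reducing the feature count from $F = 41$ to the $F' = 21$ features mentioned for the KDD experiment then gives:

```latex
T_{\mathrm{NB}}(m, F) = c \cdot m \cdot F
\qquad\Longrightarrow\qquad
\frac{T_{\mathrm{NB}}(m, F')}{T_{\mathrm{NB}}(m, F)}
  = \frac{c \cdot m \cdot F'}{c \cdot m \cdot F}
  = \frac{F'}{F}
  = \frac{21}{41}
  \approx 0.51
```

Under this assumption, a detection model built on the selected features needs about half the computation per pass over the data, independent of the number of instances $m$.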