Progress of Machine Vision Technologies in Intelligent Dairy Farming

Abstract

Featured Application

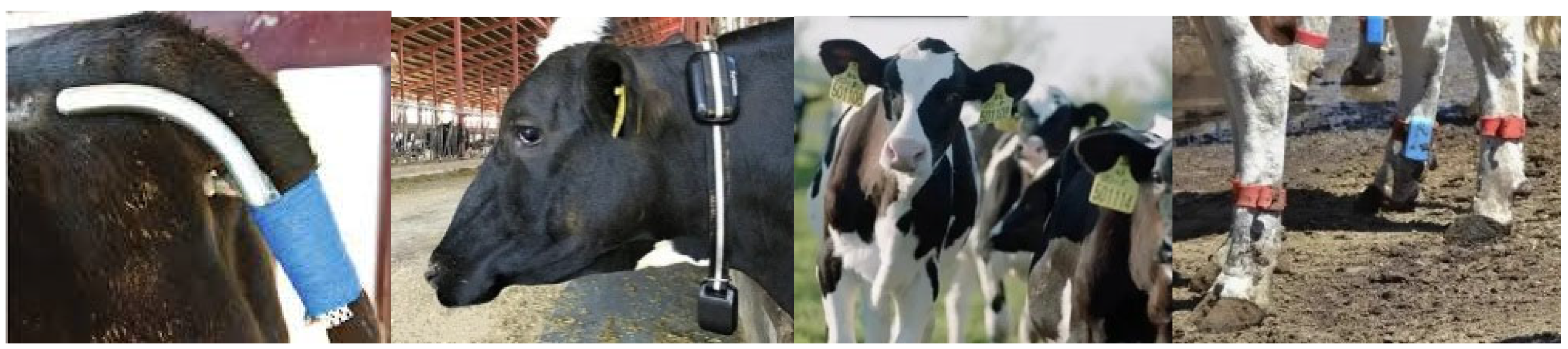

1. Introduction

- High cost

- Easily damaged

- Waste of human resources

- Single function

2. Application of Machine Vision in Dairy Cow Identification

| Reference | Identification Features | Recognition Methods | Accuracy |
|---|---|---|---|
| Xu et al. [22], 2022 | Cow facial features | Lightweight RetinaFace-MobileNet integrated with Additive Angular Margin Loss (ArcFace) | 91.3% |
| Weng et al. [23], 2022 | Cow facial features | Improved Convolutional Neural Network (ResNet) | 94.5% |
| Chen et al. [24], 2022 | Cow facial features | Unified global and part feature deep network (GPN) framework based on ResNet50 | 97.4% |
| Guo et al. [25], 2022 | Cow facial features | YOLO V3-Tiny deep learning algorithm | 90.0% |
| Awad et al. [26], 2013 | Cow muzzle print features | SIFT (Scale-Invariant Feature Transform), RANSAC (Random Sample Consensus) | 93.3% |
| Tharwat et al. [27], 2014 | Cow muzzle print features | LBP (Local Binary Pattern), Naive Bayes, SVM (Support Vector Machine), and KNN (K-Nearest Neighbors) | 99.5% |
| Kaur et al. [28], 2022 | Cow muzzle print features | SIFT (Scale-Invariant Feature Transform), SURF (Speeded-Up Robust Features), MLP (Multilayer Perceptron), RF (Random Forest), and DT (Decision Tree) | 83.35% |
| Sian et al. [29], 2020 | Cow muzzle print features | WLD (Weber Local Descriptor), SVM (Support Vector Machine) | 96.5% |
| Kumar et al. [30], 2018 | Cow muzzle print features | CNN (Convolutional Neural Network) and DBN (Deep Belief Network) | 98.99% |
| Kosana et al. [31], 2022 | Cow muzzle print features | InceptionResNetV2 + MLP (Multilayer Perceptron) | 98.21% |
| Zhao et al. [32], 2019 | Cow body features | FAST (Features from Accelerated Segment Test) + SIFT (Scale-Invariant Feature Transform) + FLANN (Fast Library for Approximate Nearest Neighbors) | 96.72% |
| Bhole et al. [33], 2022 | Cow body features | CORF (Combination of Receptive Fields) + ConvNet (Convolutional Neural Network) + linear SVM | 99.64% |
| Qiao et al. [34], 2019 | Cow body features | InceptionV3 + LSTM (Long Short-Term Memory) | 91% |
| Yukun et al. [35], 2019 | Cow body features | CNN (Convolutional Neural Network) + LRM (Linear Regression Model) | 93.7% |
| Hu et al. [36], 2020 | Cow body features | YOLO (You Only Look Once) + CNNs (Convolutional Neural Networks) + SVM (Support Vector Machine) | 98.36% |
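
Xu et al. [22] train their cattle-face network with the Additive Angular Margin (ArcFace) loss. As a minimal sketch of how that loss behaves, the pure-Python function below computes it for a single sample, assuming a backbone has already produced an embedding and that each enrolled cow has a learned class-weight vector. The function names and the defaults s = 64 and m = 0.5 (the values used in the original ArcFace face-recognition work) are illustrative assumptions, not settings taken from [22].

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length; ArcFace compares directions, not magnitudes."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def arcface_loss(embedding, class_weights, target, s=64.0, m=0.5):
    """Additive angular margin (ArcFace) loss for one sample.

    embedding     -- feature vector from a backbone network (hypothetical input)
    class_weights -- one weight vector per enrolled cow (hypothetical input)
    target        -- index of the cow's true identity
    s, m          -- feature scale and angular margin in radians (assumed defaults)
    """
    x = l2_normalize(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        # Cosine of the angle between the embedding and the class weight,
        # clamped to [-1, 1] to guard acos against rounding error.
        cos_theta = sum(a * b for a, b in zip(x, l2_normalize(w)))
        cos_theta = max(-1.0, min(1.0, cos_theta))
        if j == target:
            # Add the margin m to the true-class angle, making the task harder.
            # (Published implementations also handle theta + m > pi; omitted here.)
            logits.append(s * math.cos(math.acos(cos_theta) + m))
        else:
            logits.append(s * cos_theta)
    # Softmax cross-entropy, computed stably with the log-sum-exp trick.
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[target]
```

For example, with two hypothetical identities `[[1.0, 0.0], [0.0, 1.0]]` and an embedding `[0.9, 0.1]` of cow 0, the loss with `m=0.5` is larger than with `m=0.0`: the margin forces embeddings of the same cow to cluster more tightly than plain softmax would require.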

3. Application of Machine Vision in Main Behavior Recognition of Dairy Cows
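
Mao et al. [48] and Song et al. [49] detect the moving mouth region of ruminating cows with optical flow, the latter with the Horn–Schunck method. A minimal pure-Python sketch of the classic Horn–Schunck iteration follows, assuming small grayscale frames stored as nested lists of floats. The function name, the clamped-border finite differences, the 4-neighbour averaging, and the default `alpha` and `iterations` are simplifying assumptions, not the cited authors' implementation.

```python
def horn_schunck(frame1, frame2, alpha=1.0, iterations=100):
    """Estimate dense optical flow (u, v) between two same-sized grayscale frames."""
    h, w = len(frame1), len(frame1[0])

    def px(img, y, x):
        # Clamp coordinates so border pixels reuse their nearest neighbour.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    it = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Central-difference spatial gradients averaged over both frames,
            # plus the temporal difference between the frames.
            ix[y][x] = sum((px(f, y, x + 1) - px(f, y, x - 1)) / 2 for f in (frame1, frame2)) / 2
            iy[y][x] = sum((px(f, y + 1, x) - px(f, y - 1, x)) / 2 for f in (frame1, frame2)) / 2
            it[y][x] = frame2[y][x] - frame1[y][x]

    u = [[0.0] * w for _ in range(h)]
    v = [[0.0] * w for _ in range(h)]
    for _ in range(iterations):
        new_u = [[0.0] * w for _ in range(h)]
        new_v = [[0.0] * w for _ in range(h)]
        for y in range(h):
            for x in range(w):
                # Local flow averages implement the smoothness constraint.
                u_bar = (px(u, y - 1, x) + px(u, y + 1, x) + px(u, y, x - 1) + px(u, y, x + 1)) / 4
                v_bar = (px(v, y - 1, x) + px(v, y + 1, x) + px(v, y, x - 1) + px(v, y, x + 1)) / 4
                # Jacobi update from the brightness-constancy residual.
                residual = ix[y][x] * u_bar + iy[y][x] * v_bar + it[y][x]
                denom = alpha ** 2 + ix[y][x] ** 2 + iy[y][x] ** 2
                new_u[y][x] = u_bar - ix[y][x] * residual / denom
                new_v[y][x] = v_bar - iy[y][x] * residual / denom
        u, v = new_u, new_v
    return u, v
```

On a horizontal intensity ramp shifted one pixel to the right between frames, the estimated horizontal flow `u` is positive everywhere and the vertical flow `v` stays zero, matching the motion. In a rumination setting, the magnitude of `(u, v)` inside a detected mouth region would serve as the chewing signal.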

4. Application of Machine Vision in Disease Prevention and Treatment of Dairy Cows

5. Conclusions

5.1. The Advantages of Machine Vision in Precision and Large-Scale Breeding of Cows

- (1) Reduce farming costs

- (2) Improve breeding efficiency

- (3) Improve animal welfare

- (4) Provide data support for precise breeding of cows

5.2. Challenges Faced by Machine Vision Technologies in the Application of Intelligent Dairy Farming

- (1) Strengthen the transfer of research results into production applications

- (2) Algorithms

- (3) Research on the integrated application of multiple technologies

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- FAO. Dairy Market Review: Emerging Trends and Outlook 2022; FAO: Rome, Italy, 2022.

- Shahbandeh, M. Available online: https://www.statista.com/statistics/869885/global-number-milk-cows-by-country/ (accessed on 4 January 2023).

- Allden, W.G. The herbage intake of grazing sheep in relation to pasture availability. Proc. Aust. Soc. Anim. Prod. 1962, 4, 163–166.

- Stevenson, J.S. A review of estrous behavior and detection in dairy cows. BSAP Occas. Publ. 2001, 26, 43–62.

- Mottram, T. Automatic monitoring of the health and metabolic status of dairy cows. Livest. Prod. Sci. 1997, 48, 209–217.

- Maatje, K.; De Mol, R.M.; Rossing, W. Cow status monitoring (health and estrus) using detection sensors. Comput. Electron. Agric. 1997, 16, 245–254.

- Gardner, J.W.; Hines, E.L.; Molinier, F.; Bartlett, P.N.; Mottram, T.T. Prediction of health of dairy cattle from breath samples using neural network with parametric model of dynamic response of array of semiconducting gas sensors. IEE Proc.-Sci. Meas. Technol. 1999, 146, 102–108.

- Kuklane, K.; Gavhed, D.; Fredriksson, K. A field study in dairy farms: Thermal condition of feet. Int. J. Ind. Ergon. 2001, 27, 367–373.

- Cho, S.I.; Ryu, K.H.; An, K.J.; Kim, Y.Y.; You, G.Y. Development of an Electronic Identification Unit for Automatic Dairy Farm Management. J. Anim. Environ. Sci. 2002, 8, 63–72.

- Shen, W.; Cheng, F.; Zhang, Y.; Wei, X.; Fu, Q.; Zhang, Y. Automatic recognition of ingestive-related behaviors of dairy cows based on triaxial acceleration. Agric. Inf. Process. 2020, 7, 17.

- Haladjian, J.; Hodaie, Z.; Nüske, S.; Brügge, B. Gait anomaly detection in dairy cattle. In Proceedings of the Fourth International Conference on Animal-Computer Interaction, Milton Keynes, UK, 21 November 2017; pp. 1–8.

- Morais, R.; Valente, A.; Almeida, J.C.; Silva, A.M.; Soares, S.; Reis, M.J.; Valentim, R.; Azevedo, J. Concept study of an implantable microsystem for electrical resistance and temperature measurements in dairy cows, suitable for estrus detection. Sens. Actuators A Phys. 2006, 132, 354–361.

- Wikse, S.E.; Herd, D.B.; Field, R.W.; Holland, P.S.; McGrann, J.M.; Thompson, J.A. Food animal economics—Use of performance ratios to calculate the economic impact of thin cows in a beef cattle herd. J. Am. Vet. Med. Assoc. 1995, 10, 207.

- Chelotti, J.O.; Vanrell, S.R.; Rau, L.; Galli, J.R.; Planisich, A.M.; Utsumi, S.A.; Milone, D.H.; Giovanini, L.L.; Rufiner, H.L. An online method for estimating grazing and rumination bouts using acoustic signals in grazing cattle. Comput. Electron. Agric. 2020, 173, 105443.

- Bewley, J.; Boyce, R.; Hockin, J.; Munksgaard, L.; Eicher, S.; Einstein, M.; Schutz, M. Influence of milk yield, stage of lactation, and body condition on dairy cattle lying behaviour measured using an automated activity monitoring sensor. J. Dairy Res. 2010, 77, 1–6.

- Thomsen, P.T.; Houe, H. Cow mortality as an indicator of animal welfare in dairy herds. Res. Vet. Sci. 2018, 119, 239–243.

- Oltenacu, P.A.; Broom, D.M. The impact of genetic selection for increased milk yield on the welfare of dairy cows. Anim. Welf. 2010, 19, 39–49.

- Schulte, H.D.; Armbrecht, L.; Bürger, R.; Gauly, M.; Musshoff, O.; Hüttel, S. Let the cows graze: An empirical investigation on the trade-off between efficiency and farm animal welfare in milk production. Land Use Policy 2018, 79, 375–385.

- Singh, A.K.; Ghosh, S.; Roy, B.; Tiwari, D.K.; Baghel, R.P. Application of radio frequency identification (RFID) technology in dairy herd management. Int. J. Livest. Res. 2014, 4, 10–19.

- Hammer, N.; Pfeifer, M.; Staiger, M.; Adrion, F.; Gallmann, E.; Jungbluth, T. Cost-benefit analysis of an UHF-RFID system for animal identification, simultaneous detection and hotspot monitoring of fattening pigs and dairy cows. Landtechnik 2017, 72, 130–155.

- Kumar, S.; Tiwari, S.; Singh, S.K. Face recognition of cattle: Can it be done? Proc. Natl. Acad. Sci. India Sect. A Phys. Sci. 2016, 86, 137–148.

- Xu, B.; Wang, W.; Guo, L.; Chen, G.; Li, Y.; Cao, Z.; Wu, S. CattleFaceNet: A cattle face identification approach based on RetinaFace and ArcFace loss. Comput. Electron. Agric. 2022, 193, 106675.

- Weng, Z.; Fan, L.; Zhang, Y.; Zheng, Z.; Gong, C.; Wei, Z. Facial Recognition of Dairy Cattle Based on Improved Convolutional Neural Network. IEICE Trans. Inf. Syst. 2022, 105, 1234–1238.

- Chen, X.; Yang, T.; Mai, K.; Liu, C.; Xiong, J.; Kuang, Y.; Gao, Y. Holstein cattle face re-identification unifying global and part feature deep network with attention mechanism. Animals 2022, 12, 1047.

- Guo, S.S.; Lee, K.H.; Chang, L.; Tseng, C.D.; Sie, S.J.; Lin, G.Z.; Chen, J.Y.; Yeh, Y.H.; Huang, Y.J.; Lee, T.F. Development of an Automated Body Temperature Detection Platform for Face Recognition in Cattle with YOLO V3-Tiny Deep Learning and Infrared Thermal Imaging. Appl. Sci. 2022, 12, 4036.

- Awad, A.I.; Zawbaa, H.M.; Mahmoud, H.A.; Nabi, E.H.; Fayed, R.H.; Hassanien, A.E. A robust cattle identification scheme using muzzle print images. In Proceedings of the 2013 Federated Conference on Computer Science and Information Systems, Krakow, Poland, 8–11 September 2013; pp. 529–534.

- Tharwat, A.; Gaber, T.; Hassanien, A.E.; Hassanien, H.A.; Tolba, M.F. Cattle identification using muzzle print images based on texture features approach. In Proceedings of the Fifth International Conference on Innovations in Bio-Inspired Computing and Applications IBICA 2014, Ostrava, Czech Republic, 23–25 June 2014; pp. 217–227.

- Kaur, A.; Kumar, M.; Jindal, M.K. Shi-Tomasi corner detector for cattle identification from muzzle print image pattern. Ecol. Inform. 2022, 68, 101549.

- Sian, C.; Jiye, W.; Ru, Z.; Lizhi, Z. Cattle identification using muzzle print images based on feature fusion. In Proceedings of the 2020 6th International Conference on Electrical Engineering, Control and Robotics, Xiamen, China, 10–12 January 2020; IOP Publishing: Bristol, UK, 2020; p. 012051.

- Kumar, S.; Pandey, A.; Satwik, K.S.; Kumar, S.; Singh, S.K.; Singh, A.K.; Mohan, A. Deep learning framework for recognition of cattle using muzzle point image pattern. Measurement 2018, 116, 1–17.

- Kosana, V.; Gunda, V.S.; Kosana, V. ADEEC-Multistage Novel Framework for Cattle Identification using Muzzle Prints. In Proceedings of the 2022 Second International Conference on Computer Science, Engineering and Applications (ICCSEA), Gunupur, India, 8 September 2022; pp. 1–6.

- Zhao, K.; Jin, X.; Ji, J.; Wang, J.; Ma, H.; Zhu, X. Individual identification of Holstein dairy cows based on detecting and matching feature points in body images. Biosyst. Eng. 2019, 181, 128–139.

- Bhole, A.; Udmale, S.S.; Falzon, O.; Azzopardi, G. CORF3D contour maps with application to Holstein cattle recognition from RGB and thermal images. Expert Syst. Appl. 2022, 192, 116354.

- Qiao, Y.; Su, D.; Kong, H.; Sukkarieh, S.; Lomax, S.; Clark, C. Individual cattle identification using a deep learning based framework. IFAC-PapersOnLine 2019, 52, 318–323.

- Yukun, S.; Pengju, H.; Yujie, W.; Ziqi, C.; Yang, L.; Baisheng, D.; Runze, L.; Yonggen, Z. Automatic monitoring system for individual dairy cows based on a deep learning framework that provides identification via body parts and estimation of body condition score. J. Dairy Sci. 2019, 102, 10140–10151.

- Hu, H.; Dai, B.; Shen, W.; Wei, X.; Sun, J.; Li, R.; Zhang, Y. Cow identification based on fusion of deep parts features. Biosyst. Eng. 2020, 192, 245–256.

- Balasso, P.; Marchesini, G.; Ughelini, N.; Serva, L.; Andrighetto, I. Machine learning to detect posture and behavior in dairy cows: Information from an accelerometer on the animal’s left flank. Animals 2021, 11, 2972.

- Tian, F.; Wang, J.; Xiong, B.; Jiang, L.; Song, Z.; Li, F. Real-time behavioral recognition in dairy cows based on geomagnetism and acceleration information. IEEE Access 2021, 9, 109497–109509.

- Strutzke, S.; Fiske, D.; Hoffmann, G.; Ammon, C.; Heuwieser, W.; Amon, T. Development of a noninvasive respiration rate sensor for cattle. J. Dairy Sci. 2019, 102, 690–695.

- Ma, S.; Zhang, Q.; Li, T.; Song, H. Basic motion behavior recognition of single dairy cow based on improved Rexnet 3D network. Comput. Electron. Agric. 2022, 194, 106772.

- Guo, Y.; He, D.; Chai, L. A machine vision-based method for monitoring scene-interactive behaviors of dairy calf. Animals 2020, 10, 190.

- Wu, D.; Wang, Y.; Han, M.; Song, L.; Shang, Y.; Zhang, X.; Song, H. Using a CNN-LSTM for basic behaviors detection of a single dairy cow in a complex environment. Comput. Electron. Agric. 2021, 182, 106016.

- Yin, X.; Wu, D.; Shang, Y.; Jiang, B.; Song, H. Using an EfficientNet-LSTM for the recognition of single Cow’s motion behaviours in a complicated environment. Comput. Electron. Agric. 2020, 177, 105707.

- Nguyen, C.; Wang, D.; Von Richter, K.; Valencia, P.; Alvarenga, F.A.; Bishop–Hurley, G. Video-based cattle identification and action recognition. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 29 November 2021; pp. 1–5.

- Wang, Y.; Li, R.; Wang, Z.; Hua, Z.; Jiao, Y.; Duan, Y.; Song, H. E3D: An efficient 3D CNN for the recognition of dairy cow’s basic motion behavior. Comput. Electron. Agric. 2023, 205, 107607.

- Ayadi, S.; Ben Said, A.; Jabbar, R.; Aloulou, C.; Chabbouh, A.; Achballah, A.B. Dairy cow rumination detection: A deep learning approach. In Proceedings of the Distributed Computing for Emerging Smart Networks: Second International Workshop, DiCES-N 2020, Bizerte, Tunisia, 18 December 2020; Springer: Cham, Switzerland, 2020; pp. 123–139.

- Wang, Y.; Chen, T.; Li, B.; Li, Q. Automatic Identification and Analysis of Multi-Object Cattle Rumination Based on Computer Vision. J. Anim. Sci. Technol. 2023, 65, 519–534.

- Mao, Y.; He, D.; Song, H. Automatic detection of ruminant cows’ mouth area during rumination based on machine vision and video analysis technology. Int. J. Agric. Biol. Eng. 2019, 12, 186–191.

- Song, H.; Li, T.; Jiang, B.; Wu, Q.; He, D. Automatic detection of multi-target ruminate cow mouths based on Horn-Schunck optical flow algorithm. Trans. Chin. Soc. Agric. Eng. 2018, 34, 163–171.

- Arago, N.M.; Alvarez, C.I.; Mabale, A.G.; Legista, C.G.; Repiso, N.E.; Robles, R.R.; Amado, T.M.; Romeo, L.J., Jr.; Thio-ac, A.C.; Velasco, J.S.; et al. Automated estrus detection for dairy cattle through neural networks and bounding box corner analysis. Int. J. Adv. Comput. Sci. Appl. 2020, 11, 303–311.

- Pasupa, K.; Lodkaew, T. A new approach to automatic heat detection of cattle in video. In Proceedings of the Neural Information Processing: 26th International Conference, ICONIP 2019, Sydney, NSW, Australia, 12–15 December 2019; Springer: Cham, Switzerland, 2019; pp. 330–337.

- Chowdhury, S.; Verma, B.; Roberts, J.; Corbet, N.; Swain, D. Deep learning based computer vision technique for automatic heat detection in cows. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November 2016; pp. 1–6.

- Guo, Y.; Zhang, Z.; He, D.; Niu, J.; Tan, Y. Detection of cow mounting behavior using region geometry and optical flow characteristics. Comput. Electron. Agric. 2019, 163, 104828.

- Noe, S.M.; Zin, T.T.; Tin, P.; Kobayashi, I. Automatic detection and tracking of mounting behavior in cattle using a deep learning-based instance segmentation model. Int. J. Innov. Comput. Inf. Control 2022, 18, 211–220.

- Reith, S.; Hoy, S. Behavioral signs of estrus and the potential of fully automated systems for detection of estrus in dairy cattle. Animal 2018, 12, 398–407.

- Wu, D.; Wu, Q.; Yin, X.; Jiang, B.; Wang, H.; He, D.; Song, H. Lameness detection of dairy cows based on the YOLOv3 deep learning algorithm and a relative step size characteristic vector. Biosyst. Eng. 2020, 189, 150–163.

- Song, H.; Jiang, B.; Wu, Q.; Li, T.; He, D. Detection of dairy cow lameness based on fitting line slope feature of head and neck outline. Trans. Chin. Soc. Agric. Eng. 2018, 34, 190–199.

- Zheng, Z.; Zhang, X.; Qin, L.; Yue, S.; Zeng, P. Cows’ legs tracking and lameness detection in dairy cattle using video analysis and Siamese neural networks. Comput. Electron. Agric. 2023, 205, 107618.

- Li, Q.; Chu, M.; Kang, X.; Liu, G. Temporal aggregation network using micromotion features for early lameness recognition in dairy cows. Comput. Electron. Agric. 2023, 204, 107562.

- Wang, Y.; Kang, X.; He, Z.; Feng, Y.; Liu, G. Accurate detection of dairy cow mastitis with deep learning technology: A new and comprehensive detection method based on infrared thermal images. Animal 2022, 16, 100646.

- Cai, Y.; Ma, L.; Liu, G. Design and experiment of rapid detection system of cow subclinical mastitis based on portable computer vision technology. Trans. Chin. Soc. Agric. Eng. 2017, 33, 63–69.

- Golzarian, M.R.; Soltanali, H.; Doosti Irani, O.; Ebrahimi, S.H. Possibility of early detection of bovine mastitis in dairy cows using thermal images processing. Iran. J. Appl. Anim. Sci. 2017, 7, 549–557.

| Reference | Behavior | Recognition Methods | Results |
|---|---|---|---|
| Ma et al. [40], 2022 | Lying, standing, walking | Rank eXpansion Network 3D (Rexnet 3D), ResNet101, MobileNetV2, MobileNetV3, ShuffleNetV2, C3D, and S3D | Lying and walking: 97.5%; standing: 90% |
| Guo et al. [41], 2020 | Entering, leaving, turning, resting, feeding, and drinking of calves | Gaussian mixture model, ViBe, and a new integrated background model (combining background subtraction and inter-frame difference methods) | Entering: 94.38%; leaving: 92.86%; staying: 96.85%; turning: 93.51%; feeding: 79.69%; drinking: 81.73% |
| Wu et al. [42], 2021 | Drinking, ruminating, walking, standing, and lying | VGG16 feature extraction network, Bi-LSTM (bidirectional long short-term memory) classification model | Average precision, recall, specificity, and accuracy: 0.971, 0.965, 0.983, and 0.976, respectively |
| Yin et al. [43], 2020 | Lying, standing, walking, drinking, and feeding | EfficientNet for feature extraction, BiFPN (bidirectional feature pyramid network) for feature fusion; C3D, VGG16-LSTM, ResNet50-LSTM, and DenseNet169-LSTM for behavior recognition | 97.87% |
| Nguyen et al. [44], 2021 | Drinking, grazing | Cascade R-CNN, Temporal Segment Networks (TSNs) | Drinking: 84.4%; grazing: 94.4% |
| Wang et al. [45], 2023 | Lying, standing, walking, drinking, and feeding | ECA (Efficient Channel Attention) for channel information filtering, E3D (Efficient 3D CNN) for spatiotemporal processing of the video | Precision: 98.17%; recall: 97.08%; parameters: 2.35 M; FLOPs: 0.98 G |
| Ayadi et al. [46], 2020 | Rumination behavior of dairy cows | VGG16, VGG19, and ResNet152V | Average accuracy: 95%; recall: 98%; precision: 98% |
| Wang et al. [47], 2023 | Rumination behavior of dairy cows | YOLO algorithm combined with the Kernelized Correlation Filter (KCF) | 91.87% |
| Mao et al. [48], 2019 | Rumination behavior of dairy cows | Optical flow method | Highest accuracy: 87.80%; average accuracy: 76.46% |
| Song et al. [49], 2018 | Rumination behavior of dairy cows | Horn–Schunck optical flow method | Highest filling rate: 96.76%; lowest filling rate: 25.36% |
| Arago et al. [50], 2020 | Estrus behavior of dairy cows | SSD (Single Shot Detector) + Inception V2, Faster R-CNN | 90% |
| Pasupa et al. [51], 2019 | Estrus behavior of dairy cows | SVM (Support Vector Machine) | 90% |
| Dolecheck et al. [52], 2015 | Estrus behavior of dairy cows | Random Forest, Linear Discriminant Analysis, and Neural Network | 65.6% |
| Guo et al. [53], 2019 | Estrus behavior of dairy cows | BSCTF (Background Subtraction with Color and Texture Features), SVM | 98.3% |
| Noe et al. [54], 2022 | Estrus behavior of dairy cows | Mask R-CNN, Kalman filter, and Hungarian algorithm | 95.5% |
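
Guo et al. [41] combine background subtraction with inter-frame differencing in an integrated background model. The exact fusion rule is not given here, so the sketch below assumes a simple version: a running-average background, with a pixel flagged as foreground if it departs from either the background model or the previous frame. The function names, the OR rule, the update `rate`, and both thresholds are hypothetical choices for illustration.

```python
def update_background(background, frame, rate=0.05):
    """Running-average background model: B <- (1 - rate) * B + rate * I."""
    return [[(1 - rate) * b + rate * p for b, p in zip(brow, prow)]
            for brow, prow in zip(background, frame)]


def foreground_mask(background, previous, current, t_bg=25.0, t_diff=15.0):
    """Fuse background subtraction and inter-frame differencing (assumed OR rule).

    All images are equally sized lists of rows of grayscale values.
    Returns a boolean mask that is True where a moving or present animal is likely.
    """
    mask = []
    for brow, prow, crow in zip(background, previous, current):
        mask.append([
            # Background term: pixel differs from the learned empty scene.
            # Frame-difference term: pixel changed since the previous frame.
            abs(c - b) > t_bg or abs(c - p) > t_diff
            for b, p, c in zip(brow, prow, crow)
        ])
    return mask
```

The OR rule favours recall: a calf that stops moving is still caught by the background term, while the frame-difference term reacts immediately to motion even where a slowly updated background has started to absorb the animal.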

| Reference | Behavior/Disease | Feature Extraction and Classification Model | Result |
|---|---|---|---|
| Wu et al. [56], 2020 | Lameness behavior | YOLO V3, LSTM (Long Short-Term Memory) | 98.57% |
| Song et al. [57], 2018 | Lameness behavior | DSKNN (Distilling data of K-Nearest Neighbor) + LCCCT (Local Cyclic Center Compensation Tracking model); SVM, Naive Bayes, and KNN | SVM: 91.11%; Naive Bayes: 86.11%; KNN: 93.89% |
| Zheng et al. [58], 2023 | Leg tracking, lameness behavior | Siamese attention model (Siam-AM), SVM | 94.73% |
| Li et al. [59], 2023 | Lameness behavior | Temporal aggregation network using micromotion features | 98.89% |
| Wang et al. [60], 2022 | Cow mastitis | YOLO v5 | 85.71% |
| Cai et al. [61], 2017 | Cow mastitis | Linear, power, quadratic, and principal component regression | Average relative error: 3.67% |
| Golzarian et al. [62], 2017 | Cow mastitis | MATLAB and SPSS software | 57.3% |
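
The result columns in these tables use different metrics, so the percentages are not directly comparable across studies. For reference, the sketch below shows how the metrics reported by, for example, Wu et al. [42] (precision, recall, specificity, accuracy) and Cai et al. [61] (average relative error) are computed for a single binary decision such as lame vs. sound; for multi-class tasks, studies usually compute them per class and then average. The function names and example counts are hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Binary-classification metrics from confusion-matrix counts."""
    return {
        "precision": tp / (tp + fp),      # share of positive calls that are correct
        "recall": tp / (tp + fn),         # share of actual positives that are found
        "specificity": tn / (tn + fp),    # share of actual negatives correctly rejected
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


def mean_relative_error(predicted, measured):
    """Average of |predicted - measured| / |measured| over paired samples."""
    if len(predicted) != len(measured) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(p - m) / abs(m) for p, m in zip(predicted, measured)]
    return sum(errors) / len(errors)
```

For instance, 90 true positives, 10 false positives, 80 true negatives, and 20 false negatives give a precision of 0.9 and an accuracy of 0.85, while recall is only about 0.82, which is why a single accuracy figure can hide how many affected cows a detector misses.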

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Zhang, Q.; Zhang, L.; Li, J.; Li, M.; Liu, Y.; Shi, Y. Progress of Machine Vision Technologies in Intelligent Dairy Farming. Appl. Sci. 2023, 13, 7052. https://doi.org/10.3390/app13127052