Abstract

Personal assistants and social robotics have evolved significantly in recent years thanks to the development of artificial intelligence and affective computing. Today’s main challenge is achieving a more natural and human interaction with these systems. Integrating emotional models into social robotics is necessary to accomplish this goal. This paper presents an emotional model whose design has been supervised by psychologists, and its implementation on a social robot. Based on social psychology, this dimensional model has six dimensions with twelve emotions. Fuzzy logic has been selected for defining: (i) how the input stimuli affect the emotions and (ii) how the emotions affect the responses generated by the robot. The most significant contribution of this work is the proposed methodology, which allows engineers to easily adapt the robot personality designed by a team of psychologists. It also allows expert psychologists to define the rules that relate the inputs and outputs to the emotions, even without technical knowledge. This methodology has been developed and validated on a personal assistant robot. The robot receives three input stimuli: (i) the battery level, (ii) the brightness of the room, and (iii) the touch of caresses. In a simplified implementation of the general model, these inputs affect two emotions that generate an externalized emotional response through the robot’s heartbeat, facial expression, and tail movement. The three experiments performed verify the correct functioning of the emotional model developed, demonstrating that stimuli, independently or jointly, generate changes in emotions that, in turn, affect the robot’s responses.

1. Introduction

Rosalind Picard published Affective Computing in 1997 [1], aligned with Minsky’s thesis, who in the 1970s and 1980s had already discussed whether a machine could become intelligent without being emotional [2]. Researchers are still working with this approach, which is still innovative and determines many of the questions that computational models need for improving communication with machines. Some of these questions are connected to human emotion detection, intimacy, enhanced interaction, and private user data, among other terms.

Years later, in 2001, Picard herself defined emotional artificial intelligence (EAI) [3] as the set of artificial intelligence techniques that enable a machine to recognize, understand, and express emotional responses to certain stimuli. Moreover, she argued that emotion had an essential impact on cognitive processes, such as creativity, human intelligence, reasoning, and decision-making. One of her main conclusions concerns the Turing Test [4]: no machine could pass the test without having emotions; however, she asserted that emotions were implicit in the text used to perform the test. In this way, emotions enable a more natural and satisfactory human–robot interaction. As a result, more robots, co-bots, personal assistants, and chat-bots incorporate robotic emotional models [5,6,7,8].

From a psychological point of view, personality is considered to be closely linked to emotions and is, therefore, vital for an individual to interact well with the outside world. Personality is a psychological construct that refers to a person’s character in terms of some characteristics of their way of being and behaving. Therefore, this work considers including a robotic personality to improve the effectiveness of robotic emotional models in terms of interaction [9]. To implement this concept in the proposed robot, Bandura’s approach has been chosen, based on social interactions, specifically on the interaction between behavior, environment, and psychological processes [10]. In this sense, the robotic personality is instrumental in making the speaker and the context feel considered and important to the robot, determining its responses. For example, a robot with a calm and happy personality could be optimal for a hospital context, but maybe not for an academic environment, where a neutral robot (neither happy nor sad) could be more functional. The bidirectional relation between personality and the environment opens new research paths in robotics.

This paper presents an emotional model incorporating personality to enhance human–robot interaction by making it more coherent and natural. This model is dimensional to obtain a continuous magnitude from a negative to a positive intensity. It also proposes a series of relationships between the input stimuli, the sensors that quantify them, the implied emotions, the emotional response output of the robot, and the actuators in charge of transmitting these outputs to the user with whom the robot interacts. Each of these relationships and the set of emotions can be customized, depending on the hardware and purpose of the robot used. This customization makes it possible to model a specific personality for the robot, making it, for example, a calm and cheerful robot.

A review of the literature shows that there are many theories related to the emotions proposed by engineers for robotics and by psychologists for humans. However, it is difficult to find multidisciplinary teams that allow a good interpenetration between both fields. This is one of the main contributions of this work in comparison with those that can be seen in Section 2. Because of this multidisciplinarity, fuzzy logic has been chosen as it allows the definition of rules using natural language. This simplifies the task of the expert psychologist and its final implementation on the robot. Accordingly, rule tables are defined to model how stimuli affect emotions and how emotions develop responses. Since these rules can be expressed in natural language thanks to fuzzy logic, integrating the guidelines of the expert psychologist who has developed the model and the robotic personality is easily achievable. Thus, the modeling has been conducted through natural language and in an intuitive way, as seen throughout the paper. This also allows the verification and validation of the system to be supervised by both engineers and psychologists at the same time.

To develop an in-depth validation of the effect of the stimuli and the emotional responses, the implementation of the model is reduced to just two emotional dimensions (the continuum of happiness–sadness and the continuum of fear–calm). Section 7 will show how these two dimensions are sufficient to evaluate any stimulus–emotion–response process developed in this emotional model. Adding more emotions would only complicate the explanation without adding value. The sensors used to obtain the stimuli inputs are the crest feelers of the robot and the luminosity sensor. Qualitative inputs, such as the voice tone or the body language, have been avoided to prevent contaminating the tests with biases. The tests produced changes in the stimuli so that the robot’s emotional state could vary, following patterns similar to humans. The results achieved demonstrate the usefulness of the model and the rules proposed. In addition, the developed system serves as a test bench to analyze different robotic personalities and the use of other sensors.

The remainder of this paper is organized as follows: the first section analyzes the most used emotion theories in the literature, how stimuli affect the emotional state, and how these theories are modeled and implemented. The following section describes the robot where the proposed emotional model has been implemented. The emotional model proposed as a general scheme is then presented. Then, it is explained how the implementation of the model has been carried out based on fuzzy logic. Following this, the experimental results achieved are discussed. Finally, we present the general outcomes and describe further work.

2. Background Review

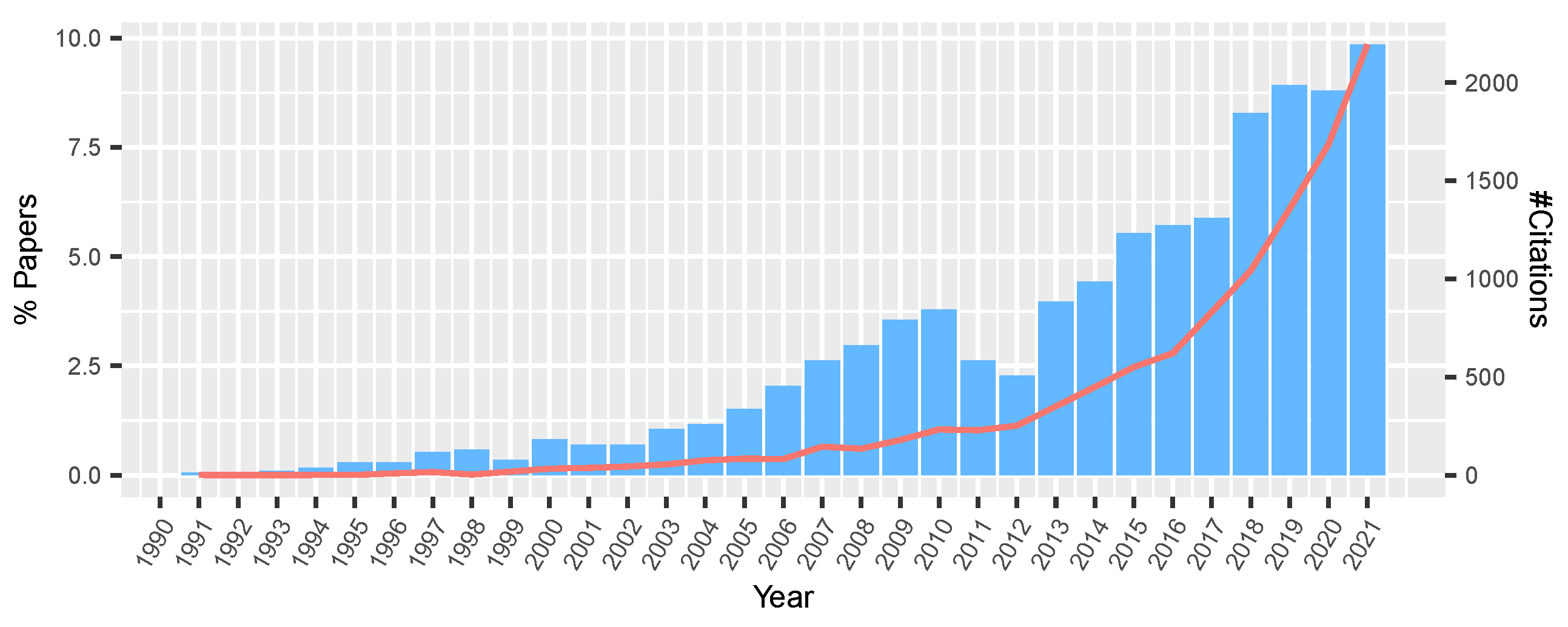

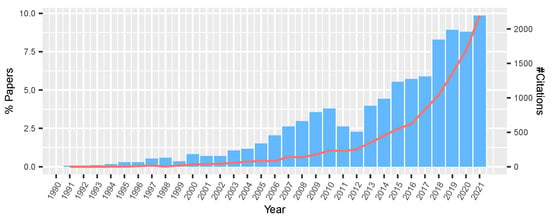

EAI is becoming increasingly interesting and fruitful for researchers. Proof of this is the growing number of publications and, therefore, citations of papers related to this area of research. Figure 1 shows this growing development from 1991 to 2021.

Figure 1.

Percentage of publications (left axis) and total number of citations (right axis) related to emotional artificial intelligence from 1983 to 2021 obtained from Web of Science.

Initially, the leading general theories related to emotions will be presented. Subsequently, the stimuli which affect most robotic emotional states will be introduced, and finally, how the proposed emotional models are implemented will be explained.

2.1. Emotion Theories

An emotion can be defined as a psycho-physiological change in response to certain stimuli. These changes are directly related to the modes of adaptation the individual receiving the emotion has acquired. Based on this, psychologists have defined several theories about how many basic emotions are perceived, how humans generate and perceive them, and what stimuli cause them [8]. Some authors highlight among these stimuli those related to communication: tone of voice, choice of words, and body expressions such as posture or facial expression [3,11].

Among the most accepted and famous classifications of emotions, it is worth mentioning the following:

- Basic discrete categorization: each emotion can be classified within a finite set of emotions. Ekman and Friesen’s classification is an example, where the basic emotions proposed are surprise, happiness, fear, sadness, disgust, and anger, together with a neutral state [12]. Starting from these initial six, the authors later added secondary categories encompassed within them. Each of these categories represents a different mental state and requires different expressions [13]. Other authors have defined a larger number of primary emotions, considering pain, aggression, or factors such as shyness and shame [14,15].

- Valence-intensity categorization: emotions could be classified according to a plane with the two coordinates: positive or negative valence and high or low intensity [16]. Within this plane, the number of emotions generated is unlimited, but traditionally they are usually grouped in those defined by Ekman and Friesen.

- Color categorization: this uses segments to represent emotions. Each segment is related to a different color. The intensity of emotion is defined according to whether it is closer or farther away from the central point. One side represents positive and the other negative intensity. The closer to the center, the darker the color and, therefore, the stronger the emotion. One of the most relevant theories is Plutchik’s classification, which proposes eight emotions: anticipation, joy, disgust, trust, anger, fear, surprise, and sadness [17]. Modern classifications and theories, in which the space of emotions gains in complexity, have been developed from this model [18,19].

Since Ekman and Friesen’s classification is the theory most accepted by psychologists nowadays and has several categories with a complexity that can be assumed for a robot, it is the one that has served to establish the basis of the emotional model proposed in this work. In this case, each emotion is quantified, so its value indicates intensity and valence.

Once the main classifications of emotions are described, it will be possible to examine the principal theories about the generation of emotions. It is possible to group them into three categories [20]:

- The neurological activity produces the emotion (e.g., Heilman theory [21] or Morris theory [22]).

- The physiological response causes the emotion (e.g., Damasio theory [23], James–Lange theory [24], or Cannon–Bard theory [25]).

- The thoughts generate emotions (e.g., Smith and Ellsworth theory [26]).

In the case of the proposed robot, this distinction is meaningless. External stimuli are inputs to the emotional system that, through fuzzy logic, using tables of states encoded in its memory according to a given personality, generate a change in its emotions. This process is detailed throughout the paper.

2.2. Stimuli Affecting Robotic Emotional States

There is no consensus on how many and which emotions should be defined in a robot, since it depends on the application for which the robot is conceived. In the literature, it is possible to find recent works showing demonstrators that implement more than twenty emotions based on modern and complex theories [27,28]. However, it is also possible to find demonstrators incorporating fewer emotions, such as those defined by Plutchik or Ekman and Friesen [29,30].

Despite this lack of consensus, the input stimuli that can affect emotions do seem to be very narrowly defined. For example, data related to the facial expressions of the interlocutor are among the most used to generate actions and emotions in robots [31]. Within computer vision, some works also take body expression and gestures as inputs [32]. The next component of emotional stimulation is related to natural language processing: the tone, cadence, and use of certain words are analyzed to determine inputs for the emotional model [33,34]. Finally, it is also worth remembering that some authors consider these data unreliable. For specific applications, they prefer more invasive methods that provide more consistent inputs (i.e., electroencephalogram, electrocardiogram, and electromyogram) [35].

2.3. Implementing Robotic Emotions

It is evident that robotic emotional intelligence has gone hand in hand with advances in artificial intelligence. The most widely used algorithms are neural networks [36], fuzzy logic [37], Markov models [38], probability tables [39], and reinforcement learning [40]. According to the application approach, it is possible to classify these algorithms into three categories [41]:

- Biological approaches: they implement systems that seek to mimic the human being [42].

- Simple computational models: where what is intended is an action–reaction type interaction, and these models simply map specific inputs to particular outputs [43].

- Complex computational models: where the implementation is highly customized depending on the application of the robot and the number, usually high, of inputs and emotions to be managed [44].

In this work, we propose the use of fuzzy logic, a field in which the authors have long experience, such as in fuzzy control [45], fuzzy systems stability [46,47], dynamic modeling of fuzzy systems [48], or fuzzy Kalman filters [49,50,51].

The number of research papers published about emotions in robotics has increased during the last decade [52]. A close review shows that the emphasis differs between fields of study: robotics research focuses more on the technical improvement of human–robot interaction (HRI), whereas HRI, psychology, and social robotics are more focused on human responses within the interaction with the robot [52]. The research team involved in this project tried to avoid that gap by mixing disciplines in the team itself, with people from robotic backgrounds and a psychologist. Having professionals from both backgrounds facilitates the process and relieves the pressure of trying to find the one valid theory of emotions. The same happens with the myth of replicating the brain. Most papers that analyze psychological theories applied to robotics are not written by psychologists or biologists, so the information tends towards reductionism, in which the proposed meaning is far from the original concept and theory. For example, some researchers compare the process of learning defined by Vygotsky with the machine learning process [52], overlooking the fact that Vygotsky studied humans and living organisms, not machines, so many of the variables that humans rely on are not used in machine learning. However, the authors understand the complexity of the material. The same paper reviews most emotional theories and presents Russell’s circumplex model, which, unlike most models (ours included), is not focused on basic emotions [52]. Some studies focus on compiling robots and their characteristics. According to this classification, the robot presented in this paper is something in between a toy and an animal [53]. A large number of models that also use fuzzy logic are focused on the detection and interpretation of the emotions of the person who is interacting with the robot [54,55,56].

The model is focused on the robotic personality, the interaction with the environment, and more tangible stimuli (for example, light) to avoid subjectivity in emotional detection. Therefore, in the dynamic system presented, the emotional detection of the user does not drive the model. Research shows that, because of the increase in measuring human emotions through technology for business purposes, results and models are more connected with the economic interests of companies and apps than with research itself [57]. Furthermore, Ekman pointed out the difficulty and complexity of measuring emotions, for example, “deception”, and how different people react differently [58], so applying a general model to individuals would be a reductionist simplification of humans and their emotions. Finally, a significant proportion of the models do not consider the context, as most research teams and companies do not include healthcare professionals in the design or development process of the models when developing health products. For example, psychological tests are tools created to help professionals better understand the users or patients, and not the only means to validate or understand someone’s emotions through an app for commercial purposes.

Some of the challenges this field presents are the failure to consider context information, the difficulty of an irregular ecosystem, and the limited data available to train emotion detectors [59]. The robotic personality has been designed with specific rules and within a context that matches that of the interactions; the varying stimuli constitute the irregular ecosystem, which was one of the challenges of this research. By focusing on the personality of the robot instead of the user’s, we try to avoid these mistakes.

Now that the state of the art has been reviewed, the following section explains in detail the robotic platform where the emotional model has been implemented.

3. Experimental Setup of the Adopted Robot Hardware and Software

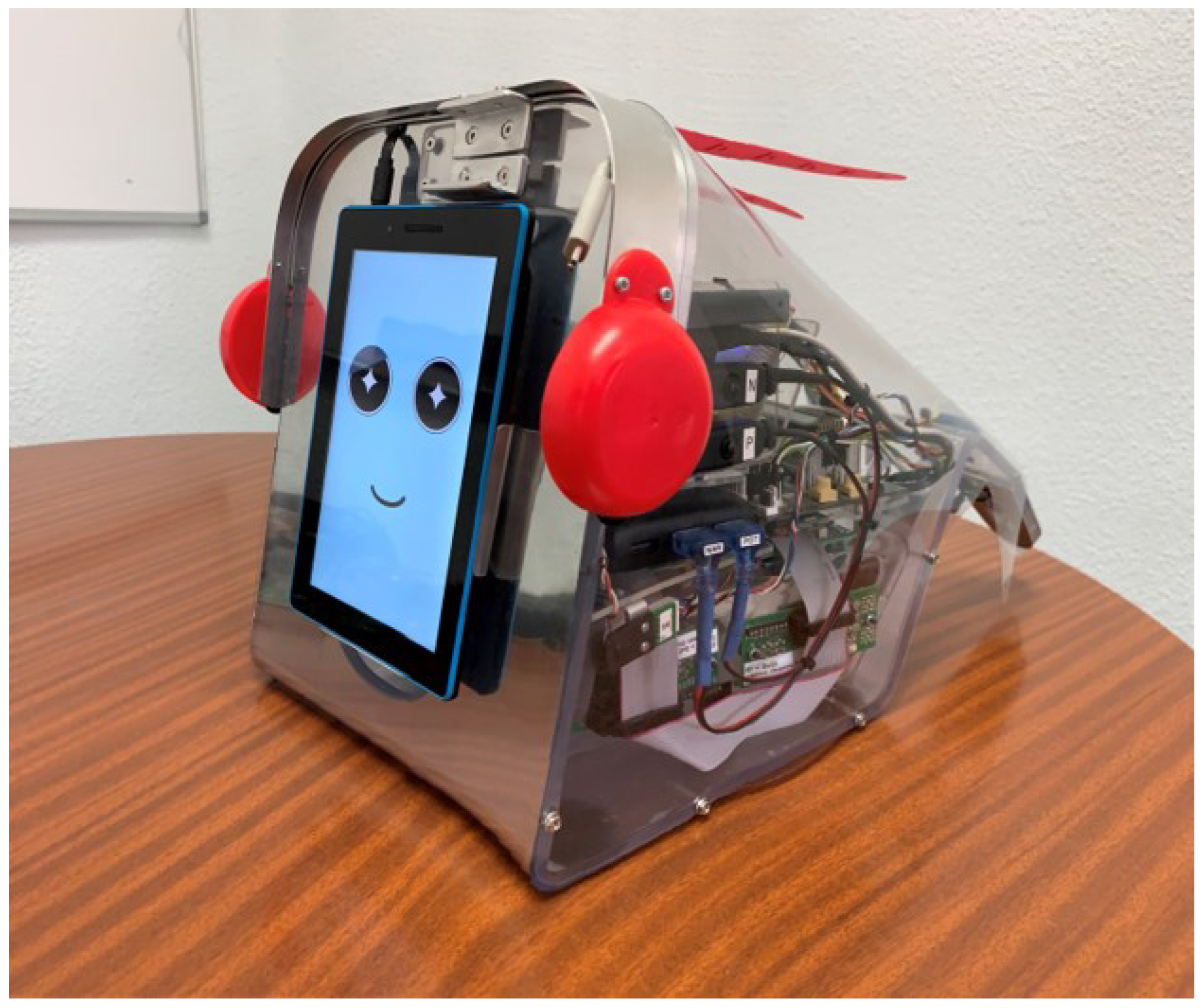

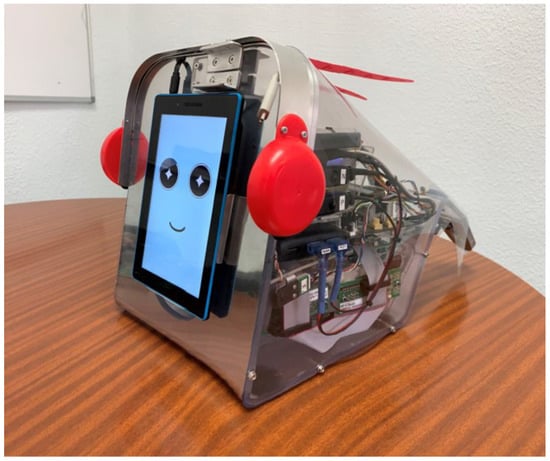

A social robot designed to assist and interact with people is presented. This robot is still under development. However, as shown in Figure 2, the first version is already finished. Although it is a general-purpose assistance robot, its current use is focused on the accompaniment of older adults in isolation and young adolescent diabetic patients. These two groups have been chosen because of their importance in human–machine interaction. In addition, achieving an emotional coupling is essential for gaining user engagement.

Figure 2.

UPM-CSIC CAR personal assistance robot.

3.1. Hardware Components and Architecture

The robot has many hardware components. However, for ease of reading, this paper focuses on explaining the elements related in some way to the emotional model.

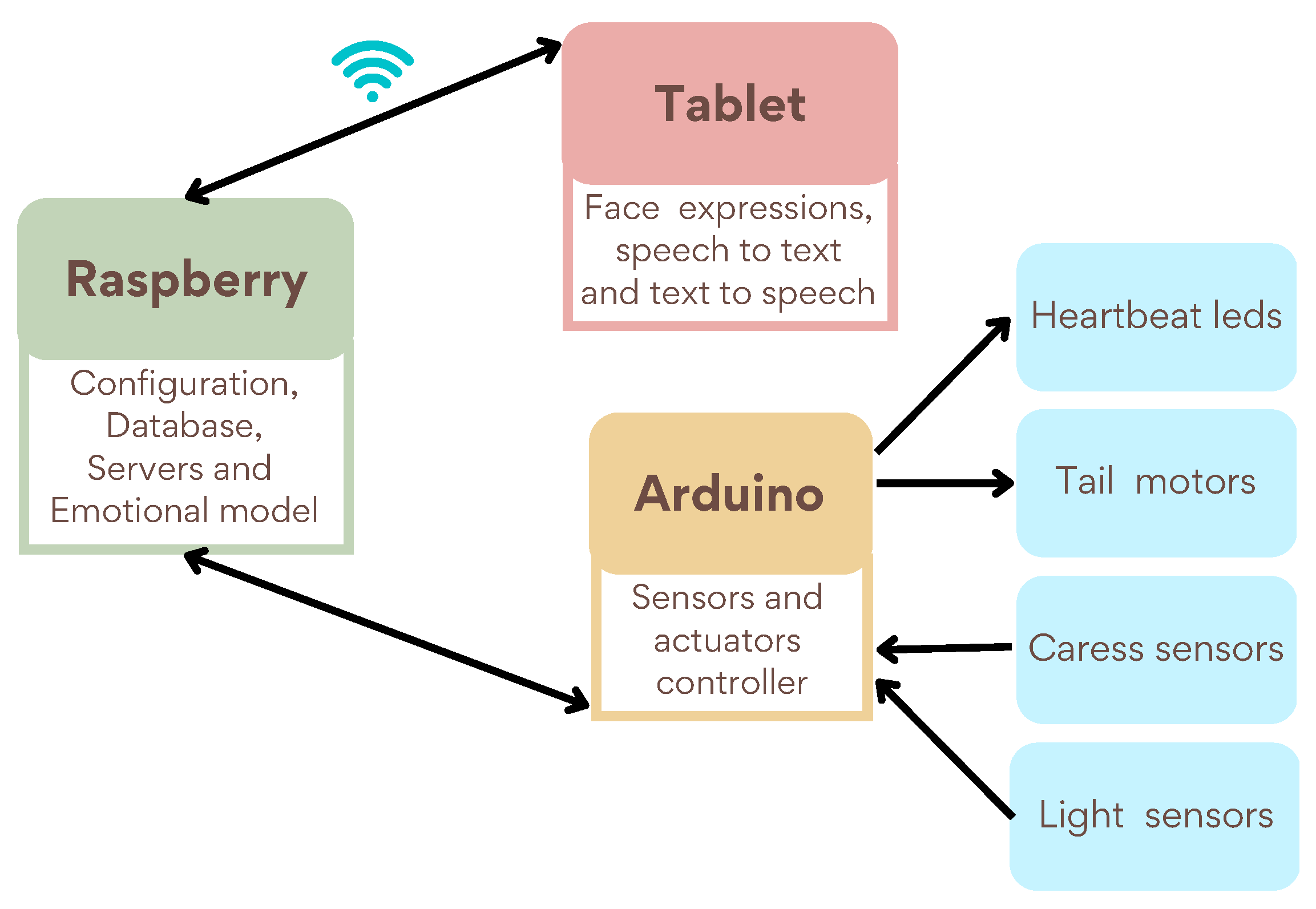

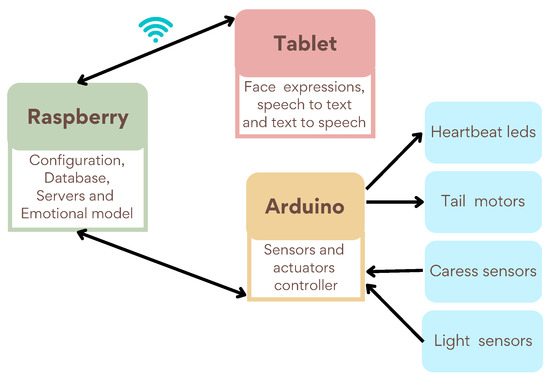

The hardware consists of three computers (a Lenovo TB3-710F Tablet with Android 5.0.1, a Raspberry Pi 3 model B, and an Arduino Micro), drivers to control the inputs and outputs, and actuators to generate the emotional responses. Figure 3 shows a general schematic of the main components of the robot and the connections between them. Specifically, the functions performed by each of them are as follows:

Figure 3.

Schematic diagram of the robot hardware involved in the emotional model.

- Raspberry (Raspberry Pi 3 model B): this runs both the emotional model and the conversational agent. It is constantly connected, via WiFi, with the tablet to transmit the text to be communicated by the robot, as well as the facial expressions to be shown depending on the robot’s mood. It is also responsible for analyzing and managing the texts obtained from the tablet’s voice recognition. It also has a wired connection with the Arduino to receive the measurements of the different sensors and send the outputs produced by the emotional model.

- Arduino (Arduino Micro): Arduino is an open-source development platform capable of communicating with sensors and actuators. It conducts a constant reading of the sensors. Then, when there is a variation in any measurement, it is sent to the Raspberry. In the same way, it receives the output of the emotional model that generates a particular outcome in the actuators.

- Tablet (Lenovo TB3-710F): the tablet runs Android 5.0.1 as it facilitates implementation, thanks to Google’s text-to-speech and speech-to-text features. As well as taking care of these functions, the developed application shows different facial expressions depending on the mood, which will be described in more detail at the end of Section 5.

In regards to the sensors and inputs used by the emotional model:

- Two light-dependent resistors of 5 mm: used to check the room’s luminosity where the robot is. The final value considered is the average of both sensors. The values obtained by these sensors range from 0 to 1000, where 0 is complete darkness and 1000 is the brightest value.

- Antennas or crests (red-colored parts on the top of the robot in Figure 2): used as touch sensors, which, when touched, close a self-developed electronic circuit. It is possible to differentiate whether the crest is touched a lot, a little, or not at all, so it is used to check if the user caresses the robot. The sensor values range from 0 (there is no caress) to 3 (the user is caressing the robot a lot).

- Battery level: the system uses the battery level as an input. The range of values is from 0 (no battery) to 100 (full battery). In the implemented version, this measure is not used, since the evolution of the battery is very slow, so it does not offer perceptible responses by users if they do not interact with the robot for long periods of time.

Each of these sensors is directly connected to the Arduino, which periodically reads them and sends them to the Raspberry, where the fuzzy logic module is located and which translates them into fuzzy labels (this process will be better explained in Section 5). These labels are then sent to the emotional model, also located on the Raspberry, to modify the emotional state of the robot. The serial connection between the Arduino and the Raspberry is through a USB cable.
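The input ranges listed above suggest a simple preprocessing step before the readings reach the fuzzy logic module. The following is a minimal sketch of such a step, not the code running on the robot: the names `SensorFrame`, `normalize`, and `changed` are hypothetical, and rescaling every input to [0, 1] is an assumption made only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Raw ranges taken from the text: LDR 0-1000, touch 0-3, battery 0-100.
LDR_MAX, TOUCH_MAX, BATTERY_MAX = 1000, 3, 100


@dataclass(frozen=True)
class SensorFrame:
    """One reading of the three inputs, rescaled to [0, 1] (an assumption)."""
    luminosity: float
    caress: float
    battery: float


def _clamp01(value: float) -> float:
    """Keep a value inside [0, 1] in case a sensor reports out of range."""
    return max(0.0, min(1.0, value))


def normalize(ldr_a: int, ldr_b: int, touch: int, battery: int) -> SensorFrame:
    """Average the two LDRs, then rescale every input to [0, 1]."""
    luminosity = (ldr_a + ldr_b) / 2 / LDR_MAX
    return SensorFrame(
        luminosity=_clamp01(luminosity),
        caress=_clamp01(touch / TOUCH_MAX),
        battery=_clamp01(battery / BATTERY_MAX),
    )


def changed(previous: Optional[SensorFrame], current: SensorFrame) -> bool:
    """Mimic the 'send only when a measurement varies' behavior of the Arduino."""
    return previous is None or previous != current
```

Averaging the two light sensors before scaling mirrors the description of the luminosity input; the change check is one possible way to avoid resending identical frames over the serial link.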

Changes in the emotional state of the robot produce output reactions. These are sent to the fuzzy logic translator that finally sends the numerical values to the actuators connected to the Arduino via USB or to the facial expression software of the tablet through a WiFi connection. The actuators and output of the robot are the following:

- A series of heart-shaped LEDs, simple red light-emitting diodes that blink at a specific frequency simulating the heartbeat. This frequency can be a value from 0 to 300 Hz.

- A moving tail driven by a small DC motor, whose rotational speed is varied according to the emotional state of the robot. The speed can vary from 0 to 120 RPM.

- The facial expression: software implemented on the tablet is in charge of receiving from the Raspberry the facial expression that the robot must show according to the emotional state. Each time the general emotional state changes, the Raspberry sends a message to the tablet with the new value. This process is explained in detail in Section 5.2.3.
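As an illustration of the last step of the output chain, the sketch below maps a normalized activation onto the actuator ranges stated above. It is only a sketch under stated assumptions: the function names are hypothetical, and the linear scaling from [0, 1] is an assumption rather than the mapping used on the robot.

```python
HEARTBEAT_MAX_HZ = 300.0  # upper bound of the LED blink frequency stated in the text
TAIL_MAX_RPM = 120.0      # upper bound of the tail motor speed stated in the text


def scale(activation: float, upper: float) -> float:
    """Linearly map an activation in [0, 1] onto [0, upper], clamping the input."""
    return max(0.0, min(1.0, activation)) * upper


def actuator_commands(heartbeat: float, tail: float) -> dict[str, float]:
    """Build the numerical commands that would be sent to the Arduino."""
    return {
        "heartbeat_hz": scale(heartbeat, HEARTBEAT_MAX_HZ),
        "tail_rpm": scale(tail, TAIL_MAX_RPM),
    }
```

Clamping before scaling keeps the commands inside the physical limits of the actuators even if the upstream module produces an out-of-range value.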

Both the Arduino and the tablet have active services to read the information coming from the Raspberry with a refresh period of 2.5 s. This period has been chosen empirically, allowing a relatively high refresh rate while leaving resources to the system to perform the rest of the hardware-related tasks.

3.2. Software Implementation

From the software point of view, two libraries have been used to develop the emotional model and the communication between components: FuzzyLite and LCM. The former allows rule-based systems to be programmed using fuzzy logic in C++ [60]. The emotional model is mainly based on the use of this library. LCM (Lightweight Communications and Marshalling) is a communication protocol implemented as a software library that provides mechanisms to publish and subscribe to channels [61]. Its primary purpose is to simplify message handling between processes, whether or not they are on the same CPU. Two communication channels have been created in the proposed robot: one between the Arduino and the Raspberry Pi that allows reading from the sensors and acting on the actuators, and another between the tablet and the speech manager.
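To make the channel-based communication pattern concrete, the following sketch shows a minimal in-process publish/subscribe bus. It is a stand-in written for illustration and does not use LCM itself; the class `ChannelBus` and the channel names `SENSORS` and `SPEECH` are hypothetical.

```python
from collections import defaultdict
from typing import Callable

Handler = Callable[[str, bytes], None]


class ChannelBus:
    """In-process stand-in for a publish/subscribe transport (not LCM itself)."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, channel: str, handler: Handler) -> None:
        """Register a callback that receives every payload sent on a channel."""
        self._handlers[channel].append(handler)

    def publish(self, channel: str, payload: bytes) -> int:
        """Deliver the payload to every subscriber; return how many were notified."""
        handlers = self._handlers.get(channel, [])
        for handler in handlers:
            handler(channel, payload)
        return len(handlers)


bus = ChannelBus()
received: list[tuple[str, bytes]] = []
bus.subscribe("SENSORS", lambda channel, data: received.append((channel, data)))
bus.publish("SENSORS", b"luminosity=420")
bus.publish("SPEECH", b"hello")  # no subscriber on this channel, nothing is delivered
```

The point of the pattern is that the publisher does not need to know which process consumes a message, which is what allows the sensor channel and the speech channel to evolve independently.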

To allow validation and replication of the emotional model, its software has been made public and is accessible from the following repository: https://github.com/Grupo-de-Control-Inteligente/potato-emocional, accessed on 3 March 2023.

The following section will define the emotional model the robot has integrated, its implementation, and its modeling using fuzzy logic.

4. Emotional Model

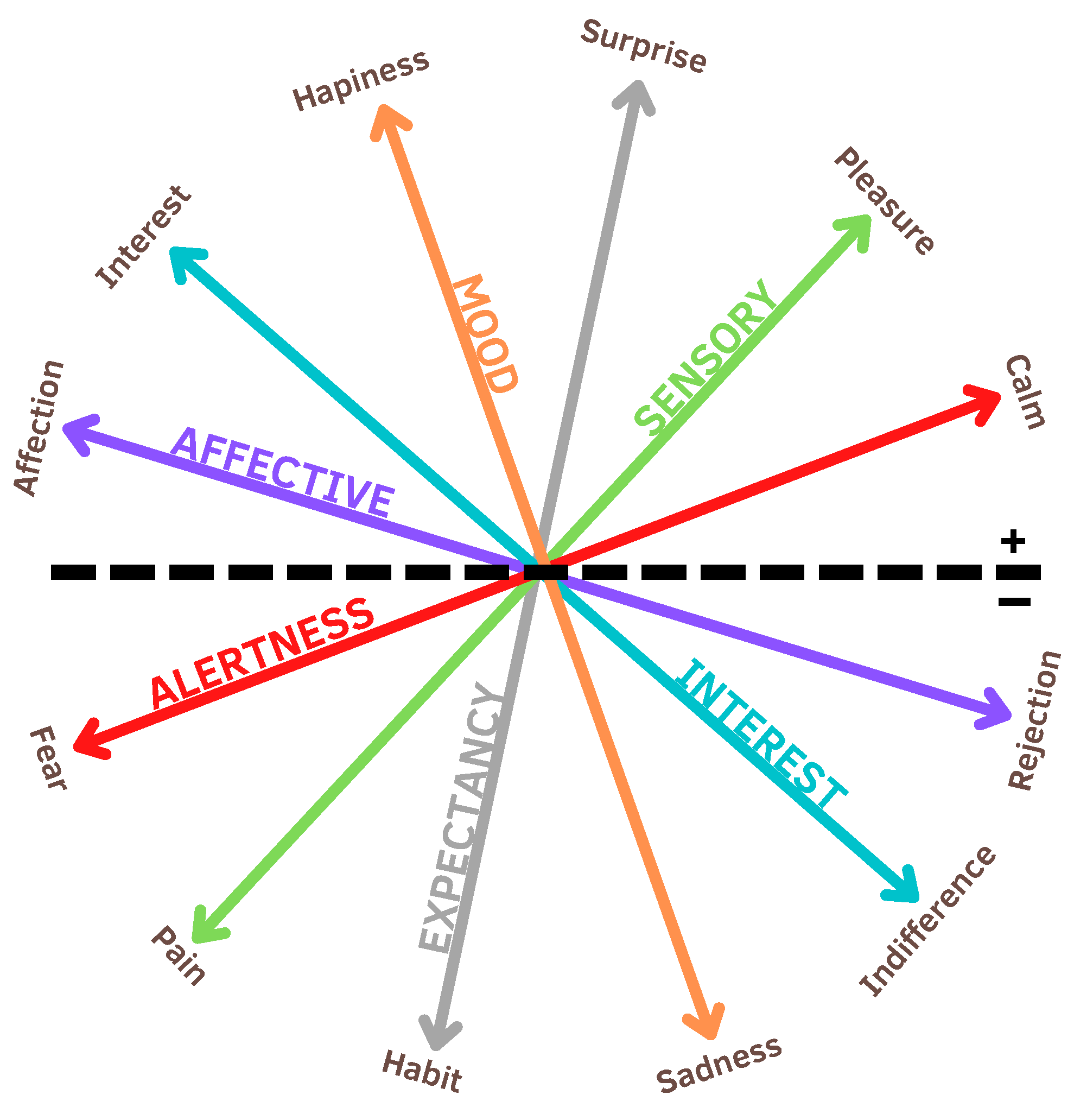

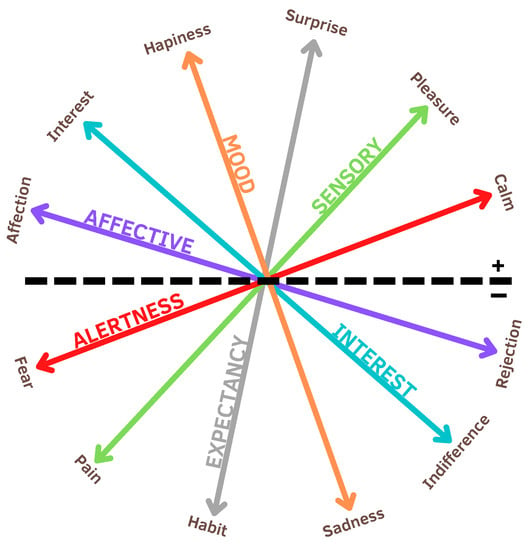

The emotional model proposed in this paper is dimensional, like some previous models [62]. It comprises six emotional dimensions (twelve emotions), as depicted in Figure 4. This number of dimensions has been chosen because their expressions in a robot can be easily distinguishable from each other by users and for alignment with most emotional models. A dimensional model implies that emotions are characterized by being continuous; each can be represented by an axis, where one end characterizes the emotion of a negative tone and the other a positive tone. Therefore, for instance, an emotion can be pleasant, unpleasant, or any intermediate value between these extremes. Each emotion, therefore, will have a variable associated with it that indicates the tone or intensity of that emotion at a given time. The emotional dimensions were defined originally in Spanish. Therefore, we include the original Spanish word in parentheses to understand the dimensions’ names better, keeping the meaning regarding language and culture. The emotional dimensions and, therefore, the emotions proposed are:

Figure 4.

Representation of the emotions for the dimensional emotional model, proposed based on Plutchik’s wheel of emotions [63].

- MOOD (ANÍMICA): continuum happiness–sadness

- SENSORY (SENSORIAL): pain–pleasure continuum

- AFFECTIVE (AFECTIVA): rejection–affection continuum

- ALERTNESS (ALERTA): fear–calm continuum

- INTEREST (INTERÉS): indifference–interest continuum

- EXPECTANCY (EXPECTATIVA): habit–surprise continuum

It is important to note that the emotional response and the dynamic behavior can be customized by the team, the developers, and the professionals needed regarding the context, the objectives of the robot, and its interaction. This will impact how the robot will interact with the users and vice versa. Therefore, the number and nature of emotions can be modified according to the needs of human–robot interaction.

The robot can modulate its emotions along these axes. In addition, each of these emotions can affect or be affected by others. For example, the happiness–sadness continuum (MOOD) and the pain–pleasure continuum (SENSORY) can be connected so that the more pleasure there is, the more happiness can be generated. How emotions are modulated and how the connections between different emotions are defined will generate different personalities, that is, different ways of understanding a stimulus and responding to it.

As mentioned in the introduction, the emotional model presented is based on Bandura’s idea of personality [10], so this model also characterizes how a stimulus affects a given emotion. The intensity of this relation depends on the established personality (general and long term) and the emotional state (specific and momentary). In this way, the established personality typically tends to a “general state” when no stimuli are present. Therefore, time is also another variable related to the stimuli and to the emotional model.
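The idea of a personality that drifts back to a “general state” in the absence of stimuli can be sketched as follows. This is an illustrative sketch, not the implemented model: representing each dimension as a value in [-1, 1] (negative pole to positive pole), the class `EmotionalState`, and the exponential relaxation with a `rate` parameter are all assumptions.

```python
from dataclasses import dataclass, field

DIMENSIONS = ("MOOD", "SENSORY", "AFFECTIVE", "ALERTNESS", "INTEREST", "EXPECTANCY")


@dataclass
class EmotionalState:
    # Resting point of each dimension; it encodes the long-term personality.
    general_state: dict[str, float]
    # Momentary value of each dimension; starts at the resting point.
    values: dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if not self.values:
            self.values = dict(self.general_state)

    def relax(self, rate: float = 0.1) -> None:
        """Move each dimension a fraction `rate` of the way back to its resting value."""
        for name in DIMENSIONS:
            rest = self.general_state[name]
            self.values[name] += rate * (rest - self.values[name])


# A hypothetical cheerful personality: MOOD rests on the happy side of its axis.
cheerful = EmotionalState(general_state={d: 0.0 for d in DIMENSIONS} | {"MOOD": 0.5})
cheerful.values["MOOD"] = -1.0  # a stimulus has made the robot very sad
cheerful.relax(rate=0.5)        # with no further stimuli, MOOD moves back toward 0.5
```

Changing only `general_state` yields a different personality with the same dynamics, which reflects the separation between long-term personality and momentary emotional state described above.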

The emotional model by itself does not define a set of inputs that define the stimuli; the robot’s hardware, where it is implemented, will determine which sensors to use. However, it establishes a set of categories that denote the complexity of processing the inputs produced by the stimuli. Table 1 shows the three categories defined in order of increasing complexity: (1) internal, (2) quantitative external, and (3) qualitative external. As seen from the table, the sensors of the used robot have been categorized. Nonetheless, other robots may have other sensors and be categorized differently. As can be seen, the first two categories refer to inputs that can be collected through a sensor that will generate one or more parametric values. However, the third category requires further processing that requires learning and interpretations. Similarly, it is also possible to distinguish whether an external agent produces the stimulus to the robot (categories two and three) or, on the contrary, is intrinsic to the robot’s hardware or software (category one).

Table 1.

Stimuli categorization table.

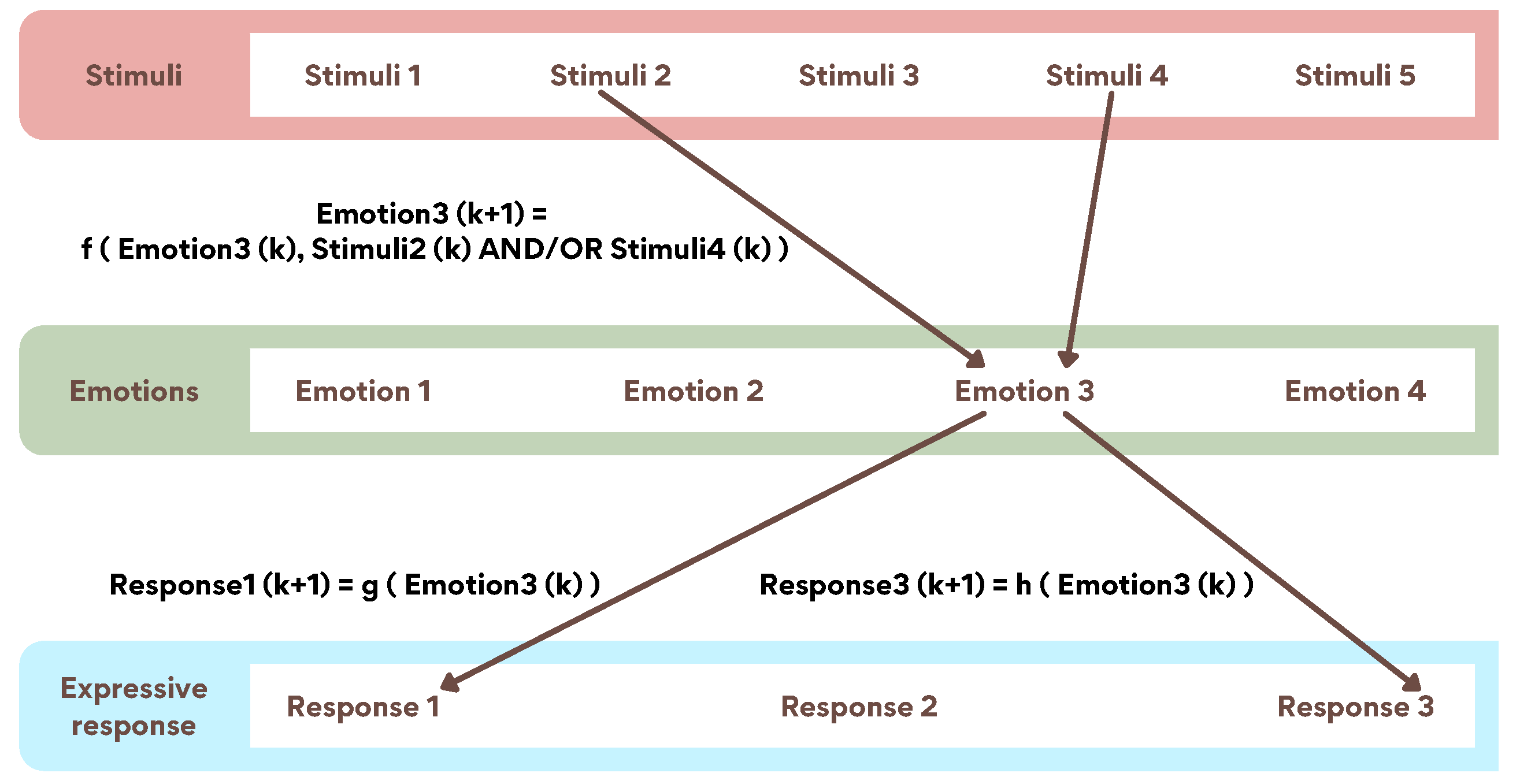

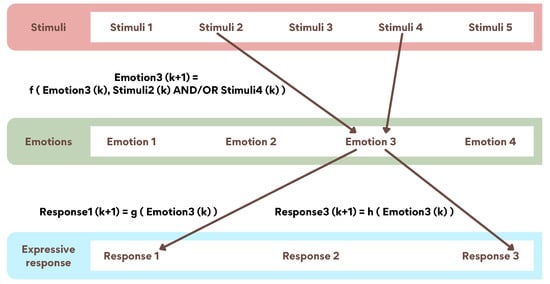

Once the emotions and stimuli have been characterized, their relationships and how the expressive responses can be generated are described. Figure 5 illustrates this. One or more stimuli may affect one or more emotions. The diagram shows how two stimuli can modify an emotion. This interaction can be of independence (OR), e.g., one or the other stimulus affecting the emotion, or they can be combined and affected simultaneously (AND). How a stimulus affects the emotional state will depend on the robot’s personality. For example, a calm robot will need lower luminosity values in the room to be scared. Likewise, if that situation is maintained over time, returning to a calm state may take less time. Similarly, one or several emotions can generate different expressive responses. For instance, in Figure 5, it is possible to see how Emotion 3 generates a change in Response 1 and in Response 3. Taking this as a practical example, if the robot is very happy, it may have a fast pulse, a swift tail movement, and a smiling facial expression. On the other hand, if it is calm, the pulse will be regular, the tail will not move, and the facial expression will be neutral. Section 5 explains how these relationships are modeled and how a specific robot’s personality is generated.
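The OR and AND combinations of stimuli can be illustrated with the minimum and maximum operators commonly used in fuzzy logic. The sketch below is an assumption about how such a combination could be computed, not a description of the operators configured on the robot; the degrees `dark` and `no_caress` are hypothetical values.

```python
def fuzzy_and(*degrees: float) -> float:
    """All stimuli must be present: the weakest one limits the effect (minimum)."""
    return min(degrees)


def fuzzy_or(*degrees: float) -> float:
    """Any stimulus suffices: the strongest one drives the effect (maximum)."""
    return max(degrees)


# Hypothetical degrees in [0, 1]: how dark the room is and how absent caresses are.
dark, no_caress = 0.8, 0.3

fear_if_either = fuzzy_or(dark, no_caress)  # 0.8
fear_if_both = fuzzy_and(dark, no_caress)   # 0.3
```

With OR, a dark room alone is enough to raise fear; with AND, fear stays low until both conditions hold, which is one way a calmer personality could be expressed.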

Figure 5.

Connection between stimuli, emotions, and responses.

5. Implementing the Emotional Model in the Personal Assistant Robot

This section describes how the general emotional model presented by the authors (1) has been implemented using fuzzy logic and (2) has had its fuzzy logic rules customized to give a concrete personality to the assistant robot described in Section 3.

5.1. Fuzzy Logic for Modeling Emotions

The technique for modeling emotions depends largely on the method of knowledge acquisition employed. In this case, the rules were obtained from the experience of a psychologist who provided the linguistic values for each emotion, as well as the inference rules to be implemented in the model. Clearly, fuzzy logic is the most appropriate technique to handle vague concepts and to perform the approximate reasoning required by the information obtained from the human expert [64,65].

Let $\mathbf{u}$ be the vector of stimuli, $\mathbf{x}$ the vector of emotions, and $\mathbf{y}$ the vector of robot output variables. It is proposed to use a state variable model to define the dynamic behavior of the emotional system, in which each emotional dimension $x_i$ depends on its previous state and the stimulus vector $\mathbf{u}$, according to Equation (1) [66].

$$x_i(k+1) = f_i\big(x_i(k), \mathbf{u}(k)\big) \tag{1}$$

where i corresponds to each of the emotional dimensions that integrate the vector of emotions.

Likewise, each output is a function of the emotional state, as shown in Equation (2) [66].

$$y_j(k) = g_j\big(\mathbf{x}(k)\big) \tag{2}$$

where j corresponds to each of the robot outputs that integrate the vector of the robot output variables.

In this way, the function f is therefore responsible for relating the input stimuli produced in the robot to its emotional state, while function g is in charge of relating the emotional state with the emotional responses of the robot, i.e., its outputs.
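The structure of Equations (1) and (2) can be sketched as a simple update loop. The following Python fragment is only an illustration under stated assumptions: it reads the model in discrete time, and the names `step`, `f_alertness`, and `g_heartbeat`, together with the numeric formulas inside them, are hypothetical placeholders rather than the functions used in the robot.

```python
from typing import Callable, Dict, Tuple

Vector = Dict[str, float]


def step(
    x: Vector,
    u: Vector,
    f: Dict[str, Callable[[float, Vector], float]],
    g: Dict[str, Callable[[Vector], float]],
) -> Tuple[Vector, Vector]:
    """Return (next emotional state, current outputs).

    Each emotion i is updated from its own previous value and the whole
    stimulus vector (Equation (1)); each output j is computed from the
    emotional state (Equation (2)).
    """
    y = {name: g_j(x) for name, g_j in g.items()}
    x_next = {name: f_i(x[name], u) for name, f_i in f.items()}
    return x_next, y


# Hypothetical placeholder functions; all quantities normalised to [0, 1].
def f_alertness(previous: float, u: Vector) -> float:
    # Move halfway towards the "darkness" level (1 - light).
    return previous + 0.5 * ((1.0 - u["light"]) - previous)


def g_heartbeat(x: Vector) -> float:
    # Arbitrary linear map: more alertness gives a faster heartbeat.
    return 60.0 + 60.0 * x["alertness"]


x = {"alertness": 0.0}
for _ in range(3):
    x, y = step(
        x,
        {"light": 0.0},
        {"alertness": f_alertness},
        {"heartbeat": g_heartbeat},
    )
```

In the model described here, the placeholder functions would be replaced by the fuzzy rule tables introduced next.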

In the absence of a precise mathematical model of the functions f and g, it is reasonable to obtain them with heuristic artificial intelligence techniques. Specifically, a rule-based system using fuzzy logic as the inference mechanism is proposed. In previous work, a dynamic state-space model had already been used to represent the emotional state of a robot [67]; however, that model (f and g) was linear, and fuzzy logic was used only to compute the coefficients in each iteration.

In contrast, this work proposes to use rules directly and to obtain them empirically through consultation with an expert in the field, specifically a psychologist. In this way, the functions f and g can be modeled using two tables of rules similar to Table 2 and Table 3.

Table 2.

Example of a state table for a component of the vector of emotions (function f). The gray cells represent the different inputs and states allowed by the system.

Table 3.

Example of a rules table relating a robot output to an emotion (function g). The gray cells represent the different states and responses allowed by the system.

The use of fuzzy logic for the inference mechanism on these rules is justified by the fact that the transitions from one state to another (low, normal, high) are not abrupt, as well as that both the data coming from the stimuli and the output take quantitative values [68,69,70].

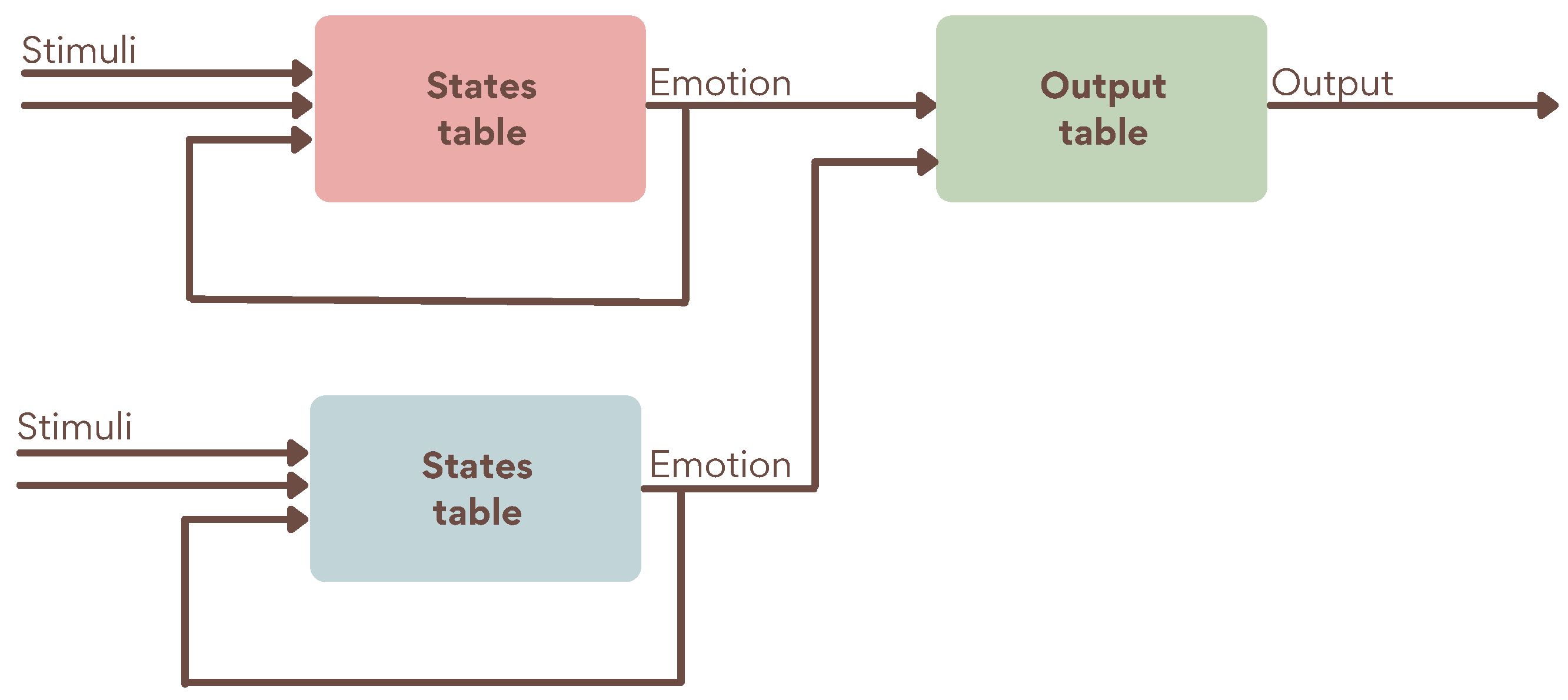

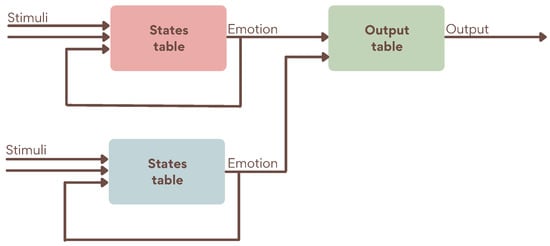

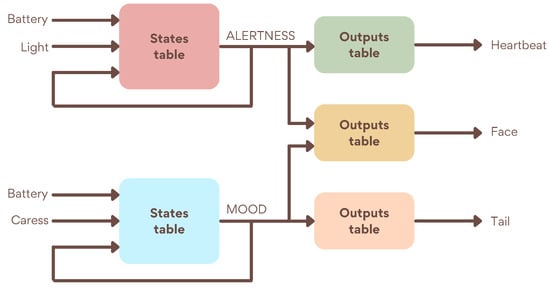

The model is dynamic because, in the table of rules corresponding to the function f, the state depends on its previous value. This reflects the temporal evolution of a being’s emotions, which do not normally change instantaneously. The stability of the presented dynamical model must therefore be addressed with some care. It is a first-order dynamical system: starting from a stable state (e.g., low and normal), if the input does not change, the state does not change either, whereas if the input changes (to, e.g., normal), the state must evolve until it stabilizes at a new value (e.g., low). In Table 4, these stable equilibrium positions have been marked in bold. A scheme of the model can be seen in Figure 6.

Table 4.

Example of stable transition for a component of the vector of emotions (function f). The gray cells represent the different inputs and states allowed by the system.

Figure 6.

Schematic representation of a simplified version of the emotional state model with two emotions.

In the table’s design, special attention should be paid to ensure that transitions from one steady state always lead to another steady state. For example, in the previous example, where the stimulus transitions from high to normal, the intermediate states marked in Table 4 would be traversed until the new final steady state is reached (following the direction of the arrow).
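The design requirement above can be checked mechanically. The sketch below is a hedged illustration, not the authors’ tooling: the table contents and the helper names `steady_states`, `settle`, and `always_settles` are hypothetical. It lists the steady states of a crisp transition table and verifies that, with the input held constant, every starting state eventually reaches one of them.

```python
from typing import Dict, Optional, Set, Tuple

# TABLE[input][previous_state] -> next_state (hypothetical contents).
Table = Dict[str, Dict[str, str]]

TABLE: Table = {
    "low": {"low": "normal", "normal": "high", "high": "high"},
    "normal": {"low": "normal", "normal": "normal", "high": "normal"},
    "high": {"low": "low", "normal": "low", "high": "normal"},
}


def steady_states(table: Table) -> Set[Tuple[str, str]]:
    """Pairs (input, state) whose rule maps the state onto itself."""
    return {
        (u, s)
        for u, row in table.items()
        for s, nxt in row.items()
        if nxt == s
    }


def settle(table: Table, u: str, state: str) -> Optional[str]:
    """Follow the transitions with the input held at u.

    Returns the steady state that is reached, or None if the states cycle
    without ever stabilising.
    """
    visited = set()
    while state not in visited:
        visited.add(state)
        nxt = table[u][state]
        if nxt == state:
            return state
        state = nxt
    return None


def always_settles(table: Table) -> bool:
    """True if every (input, state) pair leads to a steady state."""
    return all(
        settle(table, u, s) is not None
        for u, row in table.items()
        for s in row
    )
```

A table for which `always_settles` returns `False` contains an oscillation and would need to be revised before being used as a personality.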

Finally, to implement a more realistic evolution of emotions, this work relies on LeDoux’s research [71]. The author argued that, after passing through the sensory thalamus, a stimulus follows two pathways: a direct one to the amygdala, which produces a fast emotional reaction, and a second one through the sensory cortex, which builds a more conscious, contextual response that is necessarily slower. Since the robot is intended to be calm, each emotion has its own dynamics, implemented as a first-order system with a time constant parameter (τ) representing a delay. If this delay were not included, changes in emotions would be expressed as soon as a stimulus is received. Equation (3) shows the filter that is applied to avoid abrupt changes in an emotion, achieving a smoother evolution [72].

$$\text{smooth\_emotion}(s) = \frac{1}{1 + \tau s}\,\text{sharp\_emotion}(s) \qquad (3)$$

where smooth_emotion results from applying the delay filter, τ is the time constant, sharp_emotion is the unfiltered input, and s is the complex variable of the Laplace domain.

The delay constant depends on the emotion, and its variation depends on whether the model considers it instantaneous. For example, the emotion ALERTNESS, which expresses fear, has a much more immediate effect than MOOD, which influences the expression of happiness. These constants must be determined experimentally. For the system proposed in this paper, τ has a value of 10 for the emotional dimension of ALERTNESS and 20 for MOOD.
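To make the effect of the time constants concrete, the first-order filter can be approximated in discrete time. The following sketch is an assumption-laden illustration: it uses a backward-Euler discretisation, takes τ in the same (unspecified) time units as the sampling period `dt`, and the function name `first_order_filter` is hypothetical. Only the values τ = 10 and τ = 20 come from the text.

```python
from typing import Iterable, List


def first_order_filter(
    samples: Iterable[float],
    tau: float,
    dt: float = 1.0,
    initial: float = 0.0,
) -> List[float]:
    """Backward-Euler approximation of the transfer function 1 / (1 + tau*s)."""
    if tau < 0 or dt <= 0:
        raise ValueError("tau must be non-negative and dt positive")
    alpha = dt / (tau + dt)  # weight given to each new sample
    smooth = initial
    output = []
    for sharp in samples:
        smooth += alpha * (sharp - smooth)
        output.append(smooth)
    return output


# Time constants reported in the text; the time unit is assumed equal to dt.
TAU = {"ALERTNESS": 10.0, "MOOD": 20.0}

step_input = [1.0] * 30  # unfiltered emotion jumping from 0 to 1
alertness = first_order_filter(step_input, TAU["ALERTNESS"])
mood = first_order_filter(step_input, TAU["MOOD"])
```

With the same step input, the ALERTNESS trace rises faster than the MOOD trace at every sample, which matches the idea that fear is expressed more immediately than happiness.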

5.2. Giving Personality to the Robot

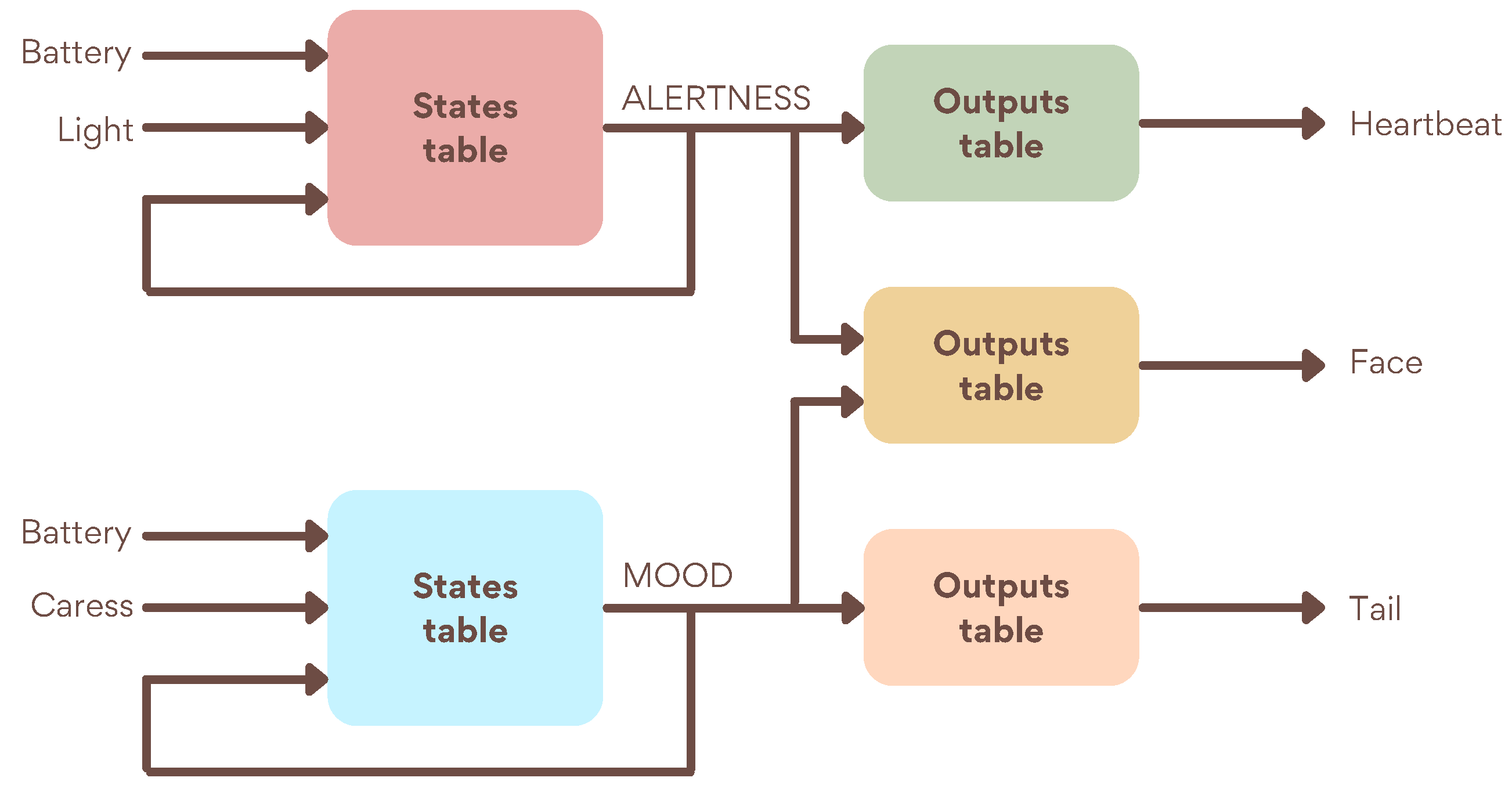

Figure 7 shows the general scheme of the state model that has been designed and programmed into the robot. It relates the input stimuli to the internal emotional state and its effects on the robot’s outputs.

Figure 7.

Model of the emotional state of the robot.

The implemented model includes only two of the six basic emotional dimensions: the happiness–sadness continuum (MOOD) and the fear–calm continuum (ALERTNESS). The fear–calm continuum is affected by two stimuli, battery charge level and ambient light level, while the happiness–sadness continuum is affected by battery charge level and caress intensity. Regarding the outputs, three have been used: heartbeat, tail movement, and facial expressions. The first is influenced by the fear–calm continuum, the second by the happiness–sadness continuum, and the third by both emotional dimensions.

A stimulus can affect more than one dimension, and each dimension can be affected by more than one stimulus at the same time. The same happens with expressions: they can depend on a single emotion or result from a mixture of several. In addition, each emotion is affected by its previous state and has its own dynamics, or speed of response.

As can be seen, the emotional model can become quite complex as the number of variables (inputs, states, and outputs) increases, which is why fuzzy logic was chosen to facilitate the modeling process. The rules are defined according to the personality given to the robot. In addition to being afraid of the dark and liking physical contact, it has other traits that will be included once it can handle more complex stimuli. In particular, its personality may be similar to that of a curious toddler. Its favorite word is “swarm” because it implies movement as a whole. It enjoys classical music, jazz, and reading about remote places and endangered species. It knows a lot about animals, space, construction materials, and interior design. It fears cockroaches and becomes nervous if asked too many questions in a row.

5.2.1. Modeling of Robot ALERTNESS

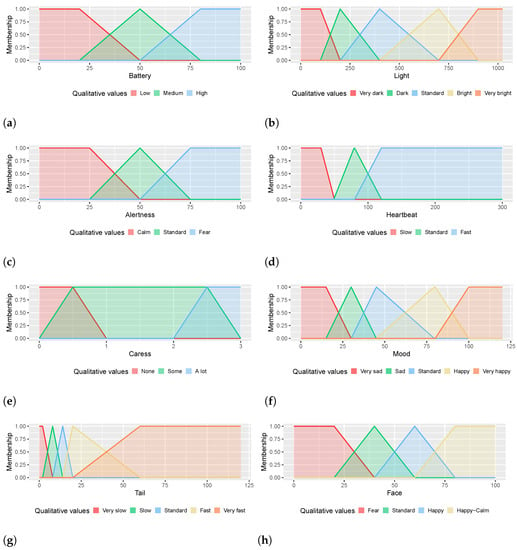

The first component of the robot’s emotional state revolves around the ALERTNESS dimension (battery/light–alert–heartbeat). Thus, the less light the system detects or the lower the charge, the more alert the robot will become, and this will be reflected, consequently, in the increase of the heartbeat rate. Therefore, the following possible qualitative values have been defined for each of the variables:

- ALERTNESS: fear, standard, and calm.

- Light: very dark, dark, normal, bright, and very bright.

- Battery: low, medium, and high.

- Heartbeat: slow, standard, and fast.

As can be seen, for the variable light, five qualitative values have been proposed to increase the sensitivity of the emotion to it. The rules are shown in the form of tables to facilitate their understanding. There will be two tables, from stimulus to emotion and emotion to expression. Table 5 represents the first case.

Table 5.

Table of Light/ALERTNESS rules for low, medium, and high battery. The gray cells represent the different ALERTNESS and Light labels defined. Bold represents steady states.

These tables should read as follows, for example:

IF (Battery is Low) AND (Light is Normal) AND (ALERTNESS was Calm)

THEN (ALERTNESS is Calm)

In this situation, as long as neither of the inputs (light or battery) changes, the alert state cannot change, i.e., it corresponds to a stable equilibrium state. It is also important to note that the table contains several possible stable states, highlighted in bold. Nevertheless, suppose the light starts to darken. In that case, the rule corresponding to low battery and dark light will bring the ALERTNESS state to standard, where it stabilizes:

IF (Battery is Low) AND (Light is Dark) AND (ALERTNESS was Calm)

THEN (ALERTNESS is Standard)

This will remain so until the light becomes very dark or returns to normal. Note, however, that in Table 5 for medium or high battery, it is impossible to reach the standard value for ALERTNESS in a stable way, as there is no stable point for this value. In the same way, when the robot has a low battery, it is only able to feel calm or fear. It is relevant to note that the absence of necessary stable states would cause a fluctuation in emotions, making the system unstable.
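As an illustration of how such IF–THEN rules can be evaluated on fuzzy inputs, the sketch below encodes only the rules quoted in this section (the third one is implied by the statement that standard is stable under low battery and dark light). It assumes min for AND and max for aggregating rules with the same conclusion, a common Mamdani-style choice that the text does not confirm; the function name `fire` and the input degrees are hypothetical.

```python
from typing import Dict, List, Tuple

Degrees = Dict[str, float]

# ((battery, light, previous ALERTNESS), new ALERTNESS)
RULES: List[Tuple[Tuple[str, str, str], str]] = [
    (("low", "normal", "calm"), "calm"),
    (("low", "dark", "calm"), "standard"),
    (("low", "dark", "standard"), "standard"),  # implied steady state
]


def fire(battery: Degrees, light: Degrees, previous: Degrees) -> Degrees:
    """Return the activation degree of each ALERTNESS label.

    AND is taken as the minimum of the antecedent degrees; rules sharing
    a conclusion are combined with the maximum (assumed operators).
    """
    activation: Degrees = {}
    for (b, l, p), conclusion in RULES:
        strength = min(
            battery.get(b, 0.0),
            light.get(l, 0.0),
            previous.get(p, 0.0),
        )
        activation[conclusion] = max(activation.get(conclusion, 0.0), strength)
    return activation


# Hypothetical situation: low battery, light between normal and dark,
# robot previously calm.
result = fire(
    battery={"low": 1.0},
    light={"normal": 0.3, "dark": 0.7},
    previous={"calm": 1.0},
)
```

Here the rule for dark light dominates, so the activation of standard (0.7) exceeds that of calm (0.3), reproducing the gradual drift from calm towards standard as the room darkens.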

Furthermore, since the proposed emotional model can be adapted to any personality through its rules, a specific personality has been implemented in this work: the proposed robot is cheerful and relatively calm. Table 5 reflects this calm personality. The configuration of its rules is governed by the robot’s fear of the dark. Nevertheless, as it is a relatively calm robot, a rather low level of light (very dark) is necessary to make it feel fear, and most of the robot’s states correspond to the calm state.

Secondly, the rules relating emotion to expression are shown in Table 6. As can be seen, the relationship is straightforward and consists of a single input, thus lacking dynamics. The behavior of the heartbeat with respect to ALERTNESS follows a simple biological and/or social pattern: as the value of ALERTNESS increases, the heartbeat speeds up. Thus, combining these rules with the previous ones, as the light decreases, the ALERTNESS value moves toward fear and the robot’s heartbeat accelerates.

Table 6.

Table of rules ALERTNESS/Heartbeat. The gray cells represent the different ALERTNESS labels and the Heartbeat response.
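The single-input mapping from ALERTNESS to heartbeat can be sketched as follows. The rule direction (calm to slow, standard to standard, fear to fast) follows the pattern described in the text; the beats-per-minute values and the weighted-average defuzzification are assumptions made for this example, and `heartbeat_bpm` is a hypothetical name.

```python
from typing import Dict

# Rule table: ALERTNESS label -> Heartbeat label.
HEARTBEAT_RULES = {"calm": "slow", "standard": "standard", "fear": "fast"}

# Hypothetical representative rates (beats per minute) for each label.
HEARTBEAT_BPM = {"slow": 50.0, "standard": 70.0, "fast": 110.0}


def heartbeat_bpm(alertness: Dict[str, float]) -> float:
    """Weighted average of the rates selected by the active rules.

    `alertness` maps each ALERTNESS label to its membership degree.
    """
    total = sum(alertness.values())
    if total == 0:
        raise ValueError("at least one ALERTNESS label must be active")
    weighted = sum(
        degree * HEARTBEAT_BPM[HEARTBEAT_RULES[label]]
        for label, degree in alertness.items()
    )
    return weighted / total


calm_rate = heartbeat_bpm({"calm": 1.0})
mixed_rate = heartbeat_bpm({"standard": 0.5, "fear": 0.5})
```

Because the output depends only on the current emotional state, this mapping has no dynamics of its own, in line with the description of Table 6.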

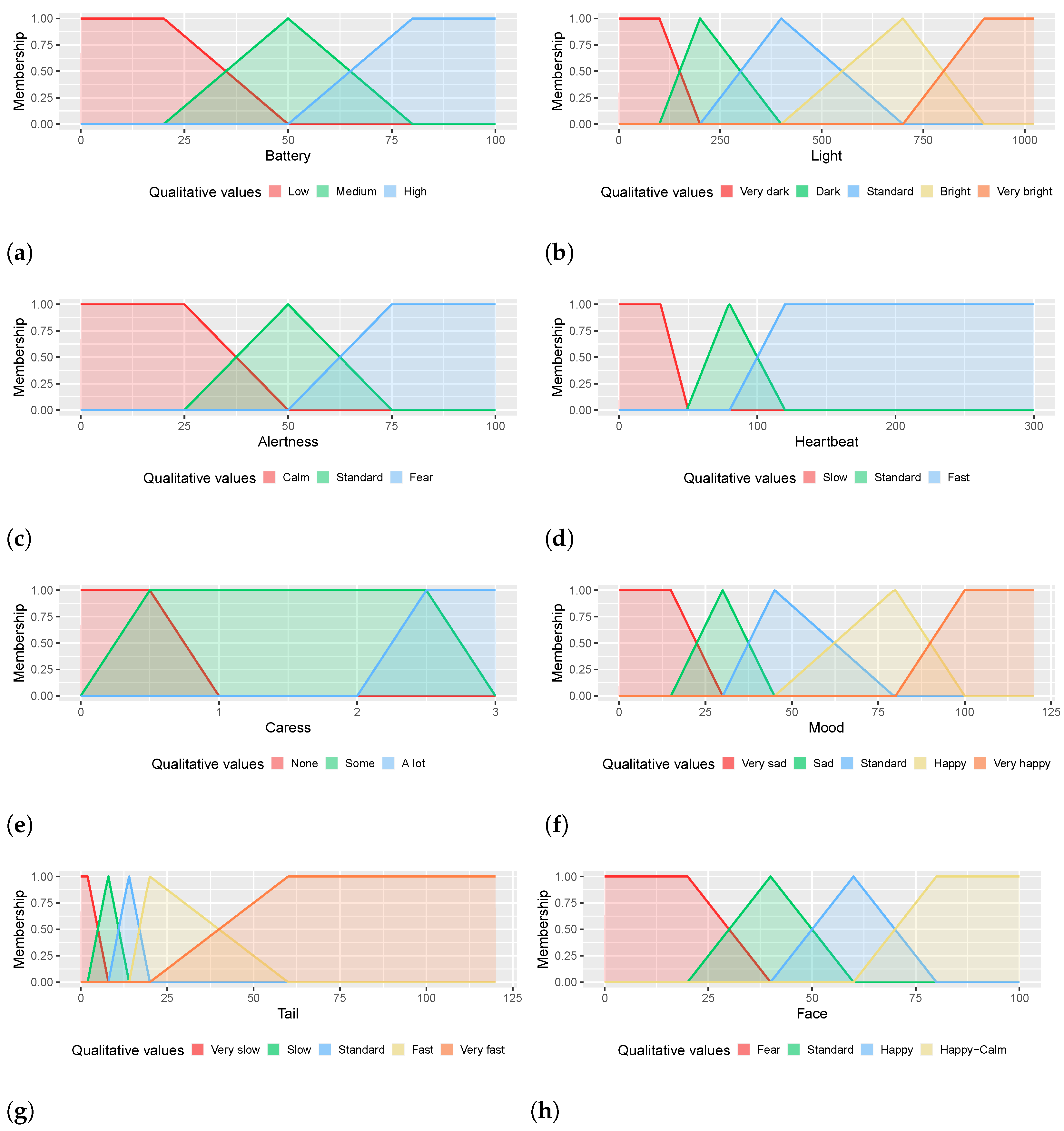

The last step in defining the model of the robot’s ALERTNESS state is to associate the above qualitative values with numerical values. This is formalized with the definition of its membership functions, which are depicted in Figure 8a–d.

Figure 8.

Membership functions. (a) battery charging, (b) light level, (c) ALERTNESS emotion, (d) heartbeat state, (e) caress level, (f) MOOD emotion, (g) tail movement, (h) facial expressions.
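A membership function of this kind can be sketched with trapezoids. The example below is illustrative only: the 0 to 100 light scale and all breakpoints are hypothetical and are not read from Figure 8; only the five Light labels come from the text.

```python
from typing import Dict, Tuple


def trapezoid(x: float, a: float, b: float, c: float, d: float) -> float:
    """Membership rising on [a, b], equal to 1 on [b, c], falling on [c, d]."""
    if x < a or x > d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


# Hypothetical breakpoints on a 0-100 light scale.
LIGHT_SETS: Dict[str, Tuple[float, float, float, float]] = {
    "very dark": (0.0, 0.0, 10.0, 25.0),
    "dark": (10.0, 25.0, 30.0, 45.0),
    "normal": (30.0, 45.0, 55.0, 70.0),
    "bright": (55.0, 70.0, 75.0, 90.0),
    "very bright": (75.0, 90.0, 100.0, 100.0),
}


def fuzzify_light(value: float) -> Dict[str, float]:
    """Degree to which a light reading belongs to each qualitative label."""
    return {
        label: trapezoid(value, *points)
        for label, points in LIGHT_SETS.items()
    }


degrees = fuzzify_light(20.0)
```

A reading of 20 on this assumed scale belongs partly to very dark and partly to dark, which is what lets the emotion change gradually rather than jump between labels.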

5.2.2. Modeling of the Robot’s State of Mind

The second component of the robot’s emotional state deals with MOOD (battery/caress–MOOD–tail), which reflects the robot’s state of happiness. Caressing the robot in a certain way (by means of two touch sensors) varies its state of happiness and, with it, the speed of its tail movement. The following possible qualitative values have been defined for each of the variables:

- MOOD: very sad, sad, standard, happy, and very happy.

- Caress: none, little, and very much.

- Battery: low, medium, and high.

- Tail: very slow, slow, normal, fast, and very fast.

For the variable MOOD, five qualitative values have been proposed to increase the sensitivity of this emotional dimension. The rules are shown in the following tables. Table 7 and Table 8 show how the stimuli are related to the emotion.

Table 7.

Low-battery caress/MOOD rule chart. The gray cells represent the different MOOD and Caress labels defined. Bold represents steady states.

Table 8.

Table of caress/MOOD rules for medium and high batteries. The gray cells represent the different MOOD and Caress labels defined. Bold represents steady states.

These tables may be slightly more complex than the previous ones because the emotional dimension has five instead of three possible values. However, their interpretation is the same, for example:

IF (Battery is Low) AND (Caress is Little) AND (MOOD was Happy)

THEN (MOOD is Happy)

The steady states are indicated in bold in the tables. It is impossible to reach a very happy steady state when the battery is low. A peculiar detail of these tables is that there is more than one steady state of MOOD for some values of caress. This has several interesting implications. First, by varying only the input caress, it is impossible to cycle through all the possible states of a single table as long as the value of the battery does not change. For example, if the robot starts with high battery (Table 8) and both antennae are stroked (i.e., the value of caress is very much), the steady state is happy. Starting from this state, if the user suddenly stops touching the two antennae, caress becomes none while the previous value of MOOD is happy, so the robot is, again, at a stable point. On the other hand, if only one of the two antennae is touched, a new stable state is reached at very happy. If the value of caress is varied further from here, it can be seen that the values standard and sad cannot be reached, or even passed through, unless the battery-charge value is also changed.

It should be added that the rules have been designed considering that the robot likes to be caressed, but not too much, which is why Table 8 shows that the very happy state is only reached when caress takes the value little. Again, this idea is determined by the personality given to the robot. Finally, it should be noted that in none of the previous tables (Table 7 and Table 8) can the value very sad be reached, no matter how much the values of caress and battery are modified. At first sight, this may seem like a design flaw, but in future, more complex implementations, this emotional dimension will be affected by other stimuli that make it possible to reach this state.

Secondly, the table that relates emotion to expression is shown (Table 9).

Table 9.

Table of MOOD/tail rules. The gray cells represent the different MOOD labels and the Tail response.

As with the previous dimension, these rules follow a biological pattern: the happier the robot is, the faster it will wag its tail as a sign of happiness. Summarizing the global behavior of this dimension, when the robot is stroked, its mood varies, preferring to be stroked only a little; the more comfortable it is and the more it likes the stroking, the faster it will move its tail. Figure 8e–g shows the membership functions of caress, MOOD, and tail, since those of battery are the same as those presented in the previous section.

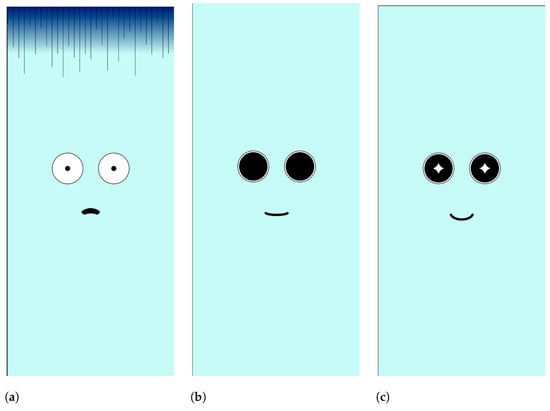

5.2.3. Modeling of the Robot’s Facial Expressions

This interaction relates the two emotional dimensions already discussed to the face (ALERTNESS/MOOD–facial expressions) and allows the display of gestures that reflect the general state of the robot. The facial expressions should encompass all the emotions that the robot may feel. The following possible qualitative values have been used for this purpose:

- MOOD: very sad, sad, standard, happy, and very happy.

- ALERTNESS: fear, standard, and calm.

- Facial expressions: standard, happy, happy–calm, and fear.

The rules are shown in Table 10.

Table 10.

Table of rules for MOOD/ALERTNESS facial expressions. The gray cells represent the different MOOD and ALERTNESS labels defined.

As can be seen, facial expressions are considered to be a combination of the MOOD and ALERTNESS dimensional emotions unless ALERTNESS means fear, in which case the dominant expression is fear, thus blocking the rest of the emotions. The membership functions can be seen in Figure 8h.

Again, the table must be understood as in the previous two cases, following the structure “IF input1 IS input_value1 AND input2 IS input_value2 THEN output IS output_value”. In the present case, input1 corresponds to ALERTNESS; therefore, input_value1 varies between calm, standard, and fear. On the other hand, input2 represents the value of MOOD, so input_value2 will be very happy, happy, standard, sad, or very sad. Finally, the output is equivalent to the general state, and therefore output_value can vary between happy–calm, happy, standard, and fear.

The basis of these rules is: “when the robot is afraid, all other feelings are blocked”. Thus, if and only if the emotional dimension ALERTNESS is fear, the robot will be scared. In all other cases, a distinction is made between standard, happy, and happy–calm. The standard state occurs when MOOD is sad or very sad, regardless of the value of ALERTNESS, or when both inputs are standard. On the other hand, Potato will feel happy–calm when ALERTNESS is calm and MOOD is happy or very happy. Finally, it will be happy when one of the two dimensions is standard and the other is happy, very happy, or calm.
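The rules described above can be written as a small decision function. This is a crisp sketch of the logic only, under one reading of the text: the last rule is interpreted as “one dimension is standard and the other is happy, very happy, or calm”, the labels follow the lists given for MOOD, ALERTNESS, and facial expressions, and the function name `facial_expression` is hypothetical.

```python
ALERTNESS_LABELS = ("fear", "standard", "calm")
MOOD_LABELS = ("very sad", "sad", "standard", "happy", "very happy")


def facial_expression(alertness: str, mood: str) -> str:
    """Return the facial-expression label for a pair of emotion labels."""
    if alertness not in ALERTNESS_LABELS:
        raise ValueError(f"unknown ALERTNESS label: {alertness!r}")
    if mood not in MOOD_LABELS:
        raise ValueError(f"unknown MOOD label: {mood!r}")

    # Fear blocks every other feeling.
    if alertness == "fear":
        return "fear"
    # Sadness, or a fully neutral state, is shown as the standard face.
    if mood in ("sad", "very sad"):
        return "standard"
    if alertness == "standard" and mood == "standard":
        return "standard"
    # Calm combined with happiness gives the happy-calm face.
    if alertness == "calm" and mood in ("happy", "very happy"):
        return "happy-calm"
    # Remaining cases: exactly one dimension is standard and the other is
    # happy, very happy, or calm.
    return "happy"


covered_and_caressed = facial_expression("fear", "very happy")
```

Evaluating the function for a frightened but caressed robot returns the fear label, which is the blocking behavior described above.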

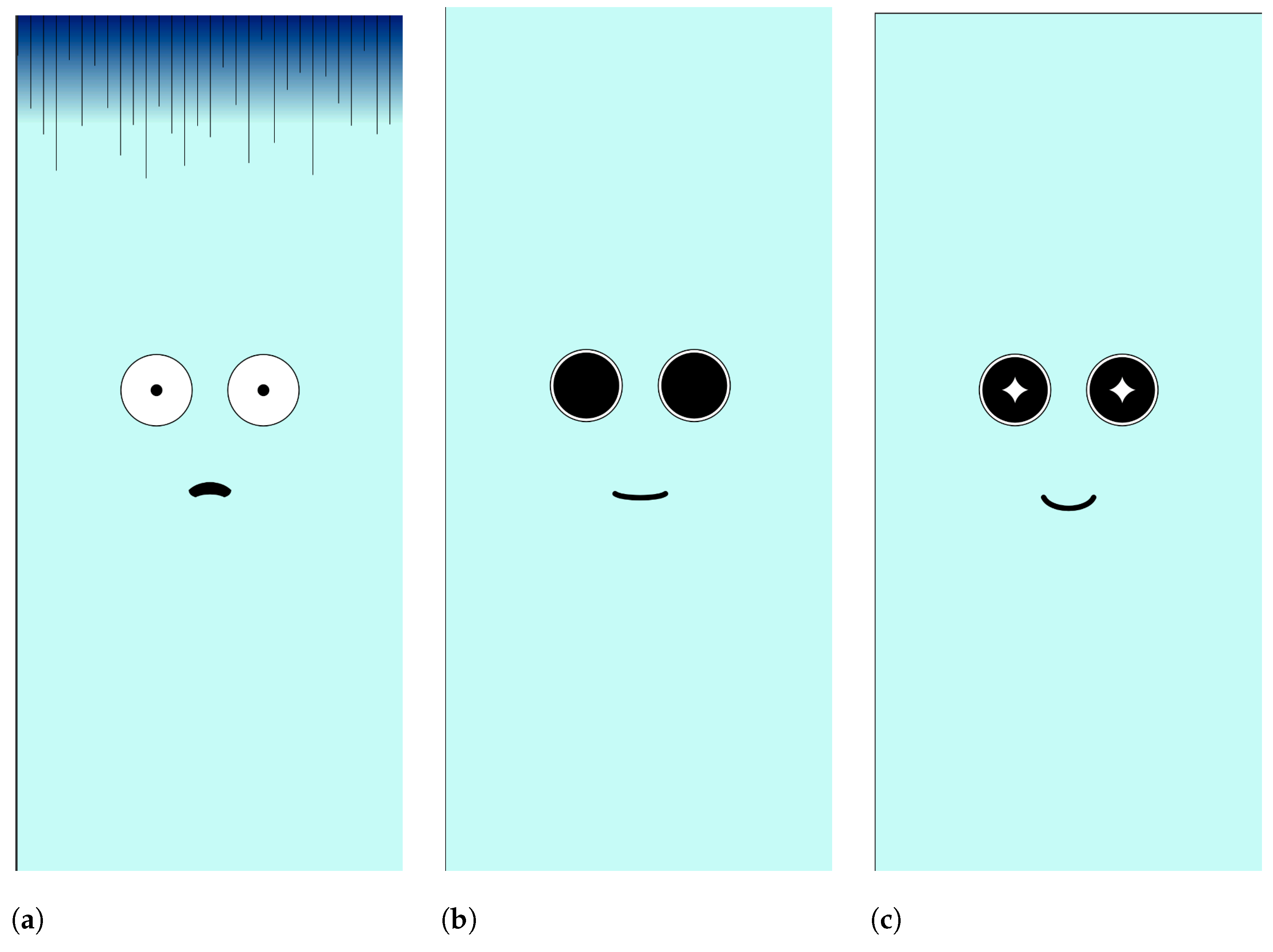

Figure 9 shows a snapshot of the different faces that the robot can express. The fuzzy labels that appear in Figure 8h are those related to facial expressions. Even though there are four labels, only three faces are represented. This is because the robot has a cheerful personality, so the face corresponding to the standard label is the same as the happy one. When the predominant emotion is fear, the robot shows the face illustrated in Figure 9a. If the most significant emotion is standard or happy, the face used is the one in Figure 9b. Finally, if the robot is happy and calm, the face that appears is the one in Figure 9c.

Figure 9.

Faces representing the different fuzzy values of the general state. (a) Face representing fear. (b) Face representing happy and standard. (c) Face representing happy and calm.

Once the implementation of the system has been studied, we proceed to explain the experiments performed and the results obtained.

6. Results

To validate the emotional model implemented, a set of experiments has been carried out to check whether the robot’s behavior is as expected. Since the battery level evolves slowly over time, the experiments have been performed with the battery at 100% to avoid measurement disturbances. Experiments with the two emotional dimensions are shown in the following paragraphs.

All results have been obtained experimentally: users interacted with the robot hardware by modifying the brightness or caressing it. The values of the emotional dimensions have been obtained from the output logs of the emotional model.

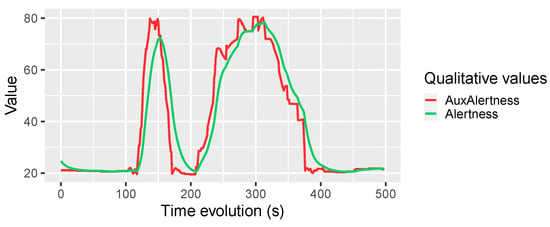

6.1. Tuning the Robotic Emotions

During the first experiments, it was found that the expression of the robot’s emotions was too abrupt when compared to a human being. Therefore, a first-order filter is applied to the output of the emotional dimensions ALERTNESS and MOOD to achieve a more realistic effect and, therefore, better user acceptance.

This filter slows down their dynamic evolution, which allows emotions not to vary immediately in the face of variations in the input stimuli. Moreover, each dimension has its own time constant since ALERTNESS, which expresses fear, has a much more immediate effect than MOOD, which influences happiness. These parameters have been experimentally adjusted to follow biological patterns as well. Therefore, these are the values that the hardware will use to express the emotional states from now on.

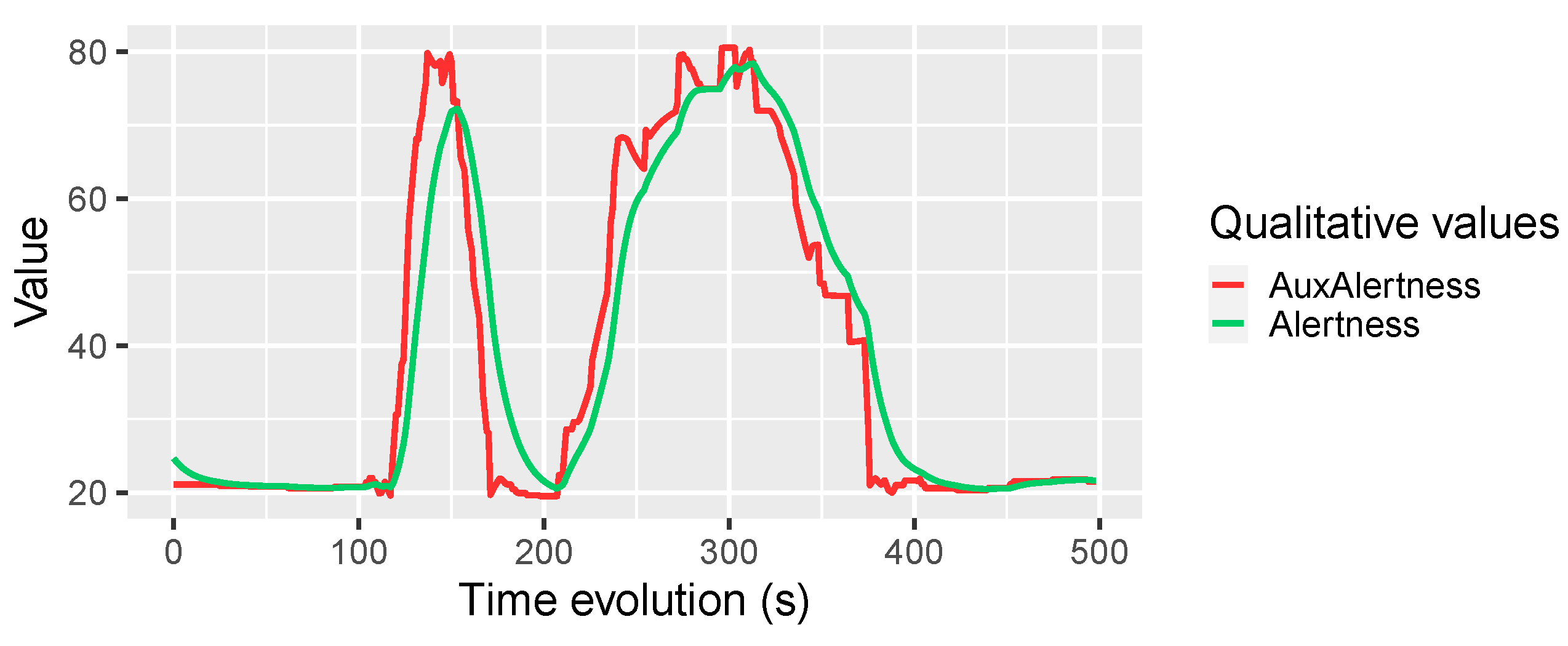

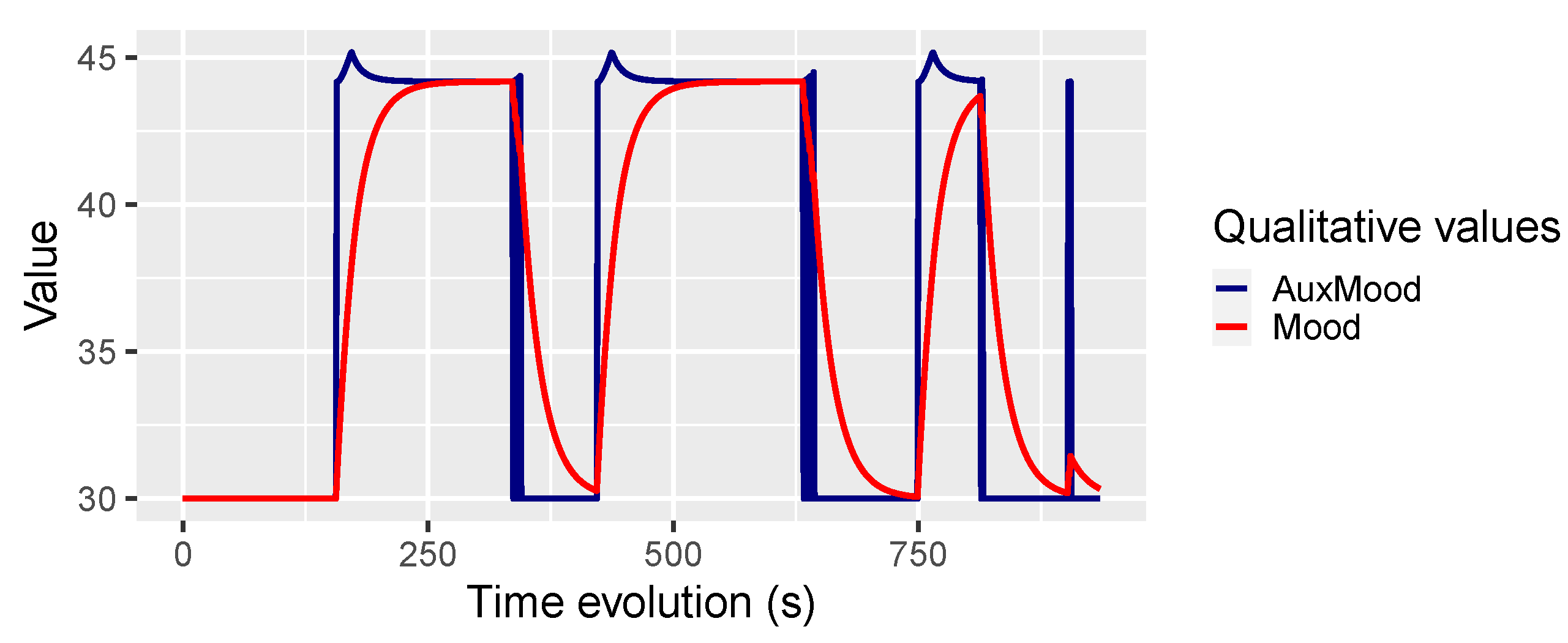

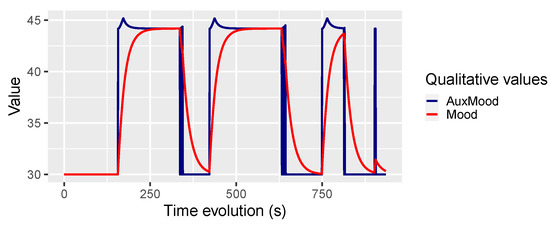

Figure 10 shows in red the unfiltered values obtained from the emotional model for the ALERTNESS dimension, named AuxAlertness. The filtered values are shown in green, with the name alertness. In the same way, Figure 11 shows the filtering of the values obtained for the emotional dimension MOOD, where it can be seen that the variations are less aggressive compared to the filtering of the emotional dimension ALERTNESS.

Figure 10.

Comparison between the unfiltered and the filtered ALERTNESS.

Figure 11.

Comparison between the unfiltered and the filtered MOOD.

6.2. Experiment 1: Light to Modify the ALERTNESS Dimension

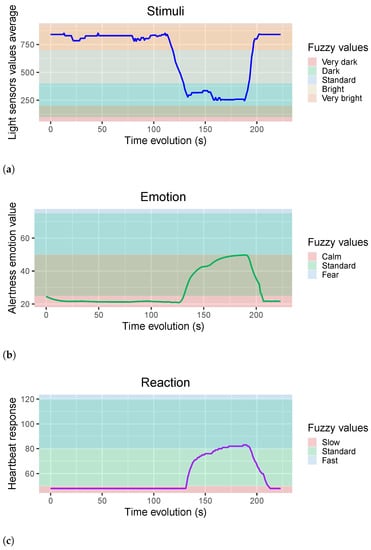

The first experiment studies how brightness affects the emotional dimension of ALERTNESS. The stimulus consisted of covering the robot’s body with a blanket so that the luminosity sensors detect a lower intensity. For this experiment, the emotional dimensions have been decoupled so that the dynamics of one emotional state do not affect another. The aim is to validate that the robot responses obtained from isolated changes in its emotional state are correct.

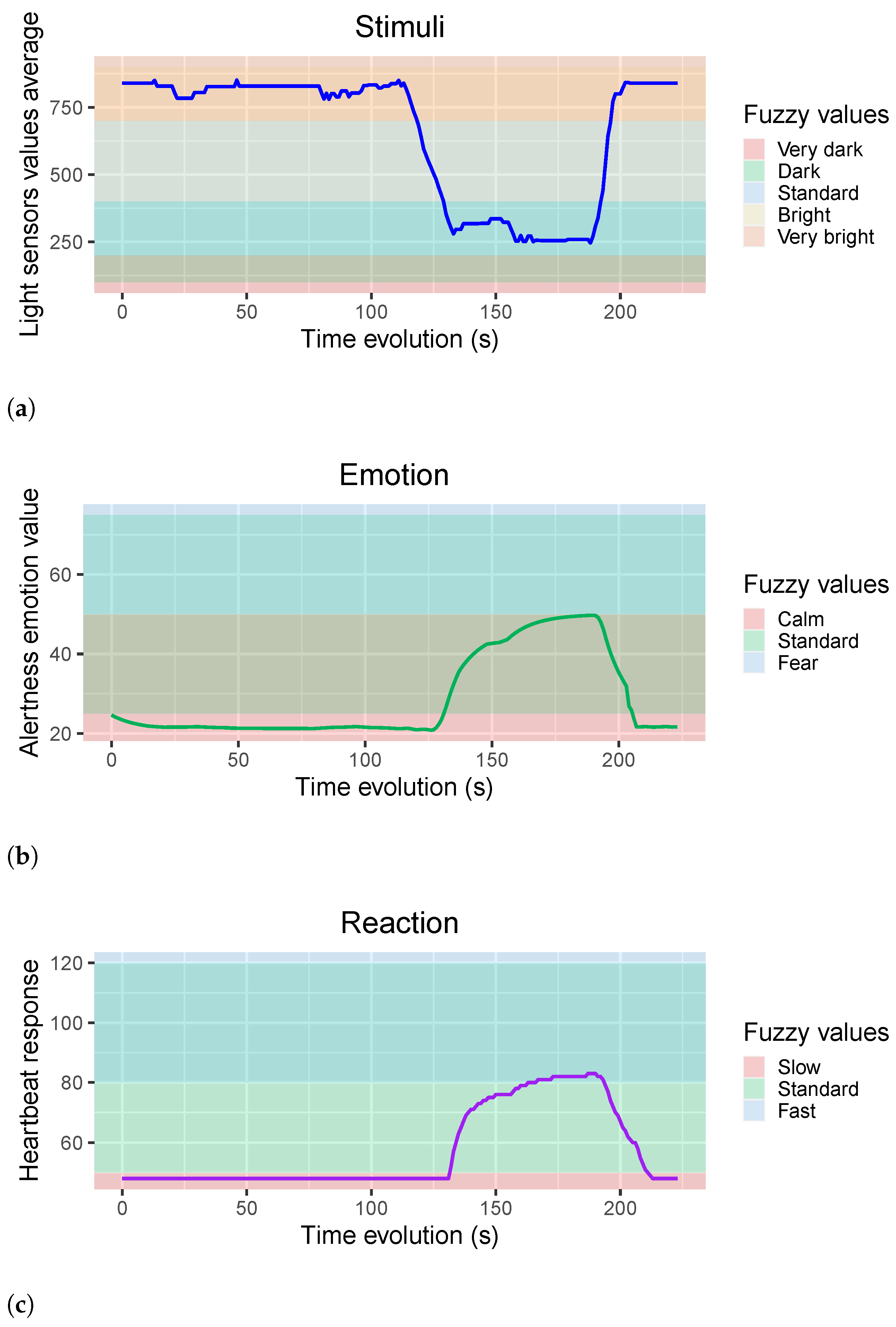

Figure 12 shows three different plots. The first one represents the average values of the two light sensors. It starts in a stable situation in a well-illuminated room. Next, the robot is covered with a blanket, and finally, it is uncovered. The second plot represents, in red, the already filtered values of the ALERTNESS dimension. When the brightness values captured by the sensors are drastically reduced, the ALERTNESS of the robot increases. The third plot shows how the heartbeat reacts to this change in the emotional state.

Figure 12.

Experiment one results. (a) Light sensors’ average values. (b) ALERTNESS emotion as a function of the light stimuli. (c) Heartbeat speed as a function of the emotional state.

In each of the graphs, it is also possible to see a relationship with the fuzzy values defined for using the emotional model. However, it is important to remember that the colors represented are an approximation since there are regions where different values can coexist simultaneously.

From the hardware point of view, the experiment is straightforward. The two brightness sensors send data to the Arduino. First, it obtains the average value of both sensors. Then, it sends it synchronously to the Raspberry along with the rest of the values obtained from the other sensors. The Raspberry hosts the emotional model and is responsible for sending back to the Arduino the new values for each of the outputs, in this case, the heartbeat frequency. The Arduino is then responsible for turning the heart LEDs on and off at the indicated frequency.
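The arithmetic involved in this data flow is simple and can be sketched as follows. The helper names are hypothetical, and the sketch assumes that the heartbeat frequency is expressed in beats per minute and that the LED is on for half of each beat; neither detail is stated in the text, and the actual firmware is not reproduced here.

```python
def average_light(left: float, right: float) -> float:
    """Average of the two brightness sensor readings."""
    return (left + right) / 2.0


def led_half_period_s(beats_per_minute: float) -> float:
    """Seconds the heart LEDs stay on (and then off) for one beat.

    Assumes a 50% duty cycle: one on/off cycle corresponds to one beat.
    """
    if beats_per_minute <= 0:
        raise ValueError("heartbeat frequency must be positive")
    beat_period_s = 60.0 / beats_per_minute
    return beat_period_s / 2.0


light = average_light(40.0, 60.0)
half_period = led_half_period_s(60.0)
```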

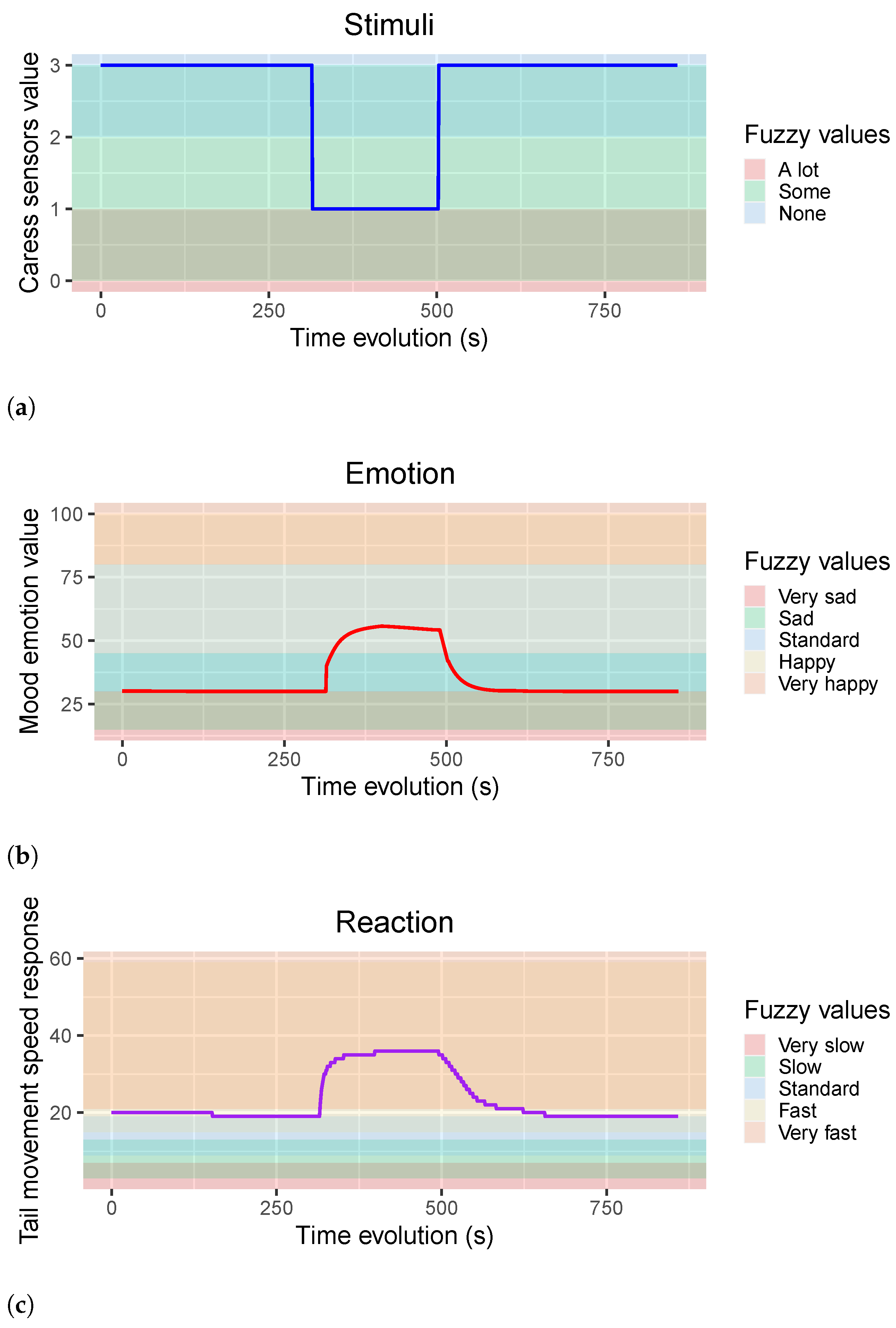

6.3. Experiment 2: Caresses to Modify the MOOD Dimension

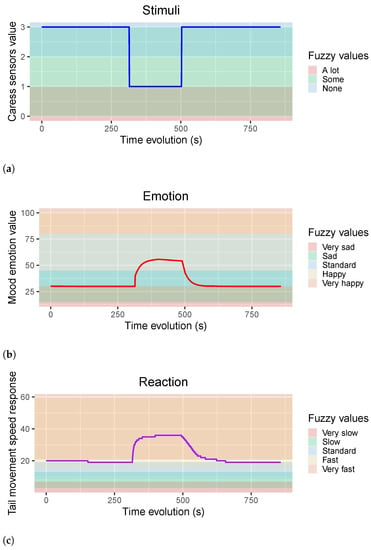

This experiment studies how caresses affect the emotional state, specifically the emotional dimension MOOD. For this purpose, the test starts from a stable condition, with no past interactions with the robot. Then, the user caresses the robot slightly for some seconds and then stops.

Figure 13 shows the same three graphs as in the previous experiment. The first one represents the stimulus of being caressed. It can be seen how it affects the dimensional emotion of MOOD. In the second plot, it can be seen how the robot goes from being in a neutral state to a happier one. This fact also causes an emotional response generated by the robot. In the last graph, it can be seen that the robot, while being caressed, is moving the tail faster.

Figure 13.

Experiment two results. (a) Caress sensors’ values. (b) MOOD emotion as a function of the caress stimuli. (c) Tail movement speed as a function of the emotional state.

The hardware behavior, in this case, is similar to the previous experiment.

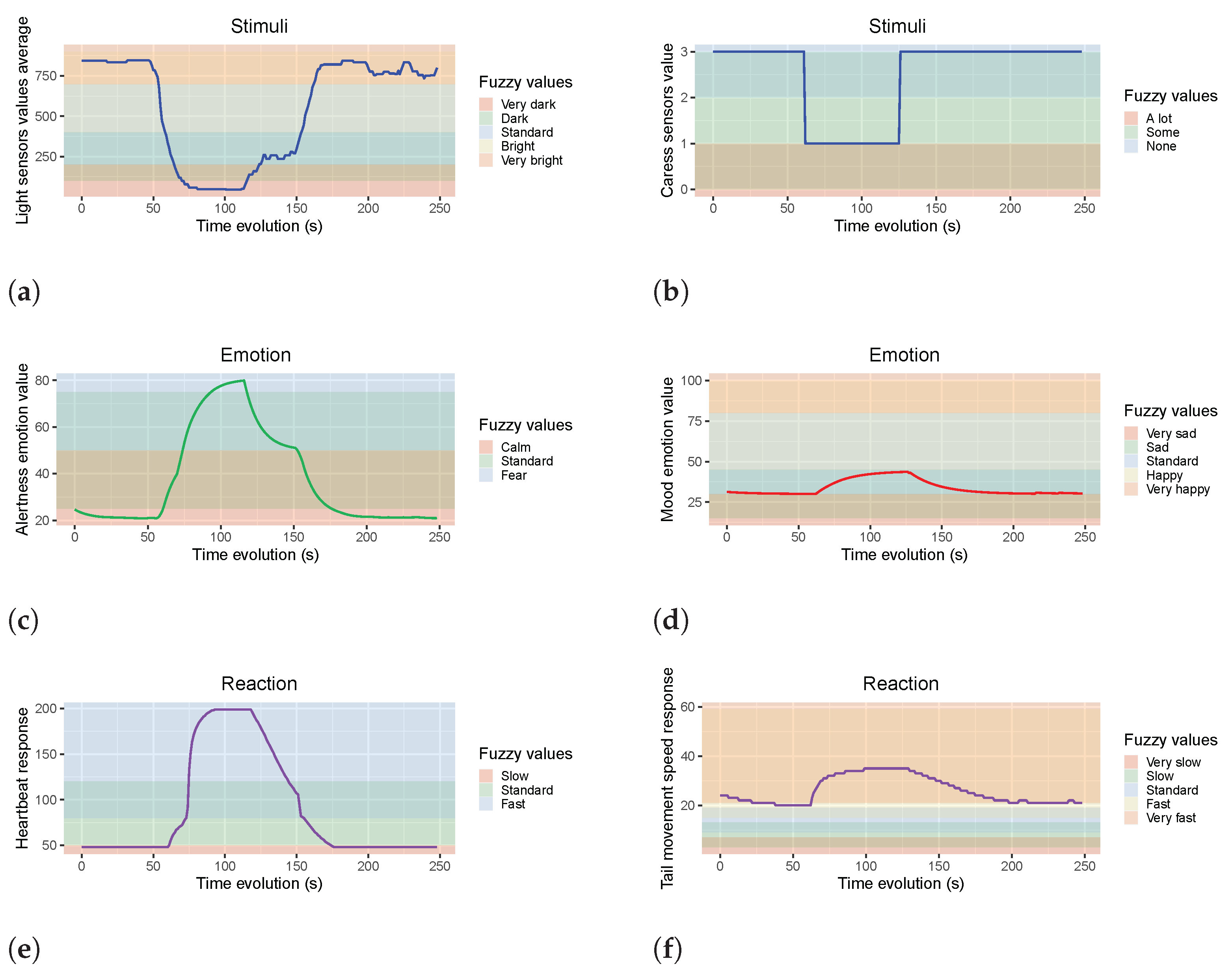

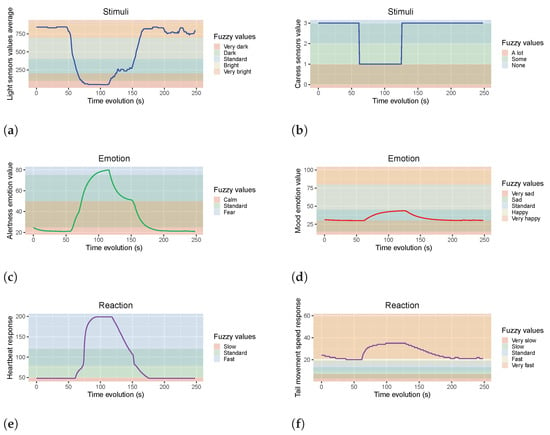

6.4. Experiment 3: Coupled Stimulus

In this third experiment, two stimuli are given at the same time. First, the user covers the robot with a blanket and caresses it simultaneously. As can be seen in the rules implemented for the emotional model of the robot, each stimulus affects only one emotional dimension. Thus, as in the first experiment, the ALERTNESS emotional dimension evolves to a more alert state when the robot is covered. As in experiment two, when the robot is caressed, its MOOD becomes happier.

Figure 14 has six plots in three rows. The first row depicts the stimuli, the second row the dimensional emotions, and the third row the responses.

Figure 14.

2 × 3 grid of images. (a) Brightness stimulus. (b) Caress stimulus. (c) ALERTNESS dimensional emotion. (d) MOOD dimensional emotion. (e) Heartbeat response. (f) Tail speed response.

Although the responses seen so far (tail speed and heartbeat frequency) are independent of each other, some elements are not. The face of the robot is one of them. The face can express fear, happiness, or happiness and calm together. If the implementation of the emotional model did not include any additional element, the reaction would be chaotic when low light and caresses occur simultaneously. The ALERTNESS dimension would be high, so fear would increase. At the same time, the MOOD dimension would be in a state of contentment owing to the caresses. As a result, the face would receive contradictory orders to activate the fear and joy faces. However, as seen in Section 5, facial expressions depend not on one emotional dimension but on both. Thus, if the robot is frightened because it is covered, it cannot express joy even if it is caressed.

The following section recapitulates the main conclusions of this work once it has been verified that the emotional model works correctly in the robot.

7. Conclusions

An emotional model has been designed for implementation in social robots. This model has been created by applying the fundamentals of social psychology to improve human–robot interaction. It presents six dimensional emotions: MOOD, SENSORY, AFFECTIVE, ALERTNESS, INTEREST, and EXPECTANCY. The operation of the model is based on the perception of stimuli, which generates changes in the emotional state of the robot. These changes produce, in turn, a visible reaction in the robot. These relationships between stimuli, emotions, and responses allow the definition of the robot’s personality.

This personality has been defined using fuzzy logic through tables of rules in an intuitive and relatively easy-to-understand way. Fuzzy logic allows a simple and customizable implementation. Moreover, it enables expert psychologists to participate in the design, using natural language to generate the rules that make up the robot’s personality. The lack of psychologists’ expertise in development teams, which usually consist only of engineers, is one of the main gaps observed in the existing literature on social robotics.

The model has been adjusted so that the evolution of its emotions matches that of humans. In addition, different experiments have been carried out to validate the model. For this purpose, the emotional model has been reduced to only two emotional dimensions. Reducing it to the minimum logical set has allowed a precise evaluation of how stimuli affect emotions, how these emotions evolve, and what emotional responses they generate. It has been verified that each of the stimuli in isolation causes changes in the related emotion and, therefore, a specific reaction from the robot. In the same way, it has been verified that the system behaves appropriately when faced with simultaneous stimuli that can generate antagonistic emotions and responses.

As for the lines of continuation of this work, the main ones are the following:

- Adding more stimuli and outputs to the robot.

- Adding more emotional dimensions and relationships to the already implemented ones and their responses.

- Implementing different personalities defined by/for specific situations.

- Conducting tests on the acceptance of the model by humans. Since this is a personal assistance robot, all its development is meaningless without demonstrating that, indeed, an emotion-driven robot is more compatible with the potential users, comparing the results with those of others lacking an emotional system controlling their behavior.

Author Contributions

Theory, G.F.-B.M.; conceptualization, G.F.-B.M. and F.M.; methodology, G.F.-B.M.; software, L.G.G.-E. and M.R.-C.; validation, M.G.S.-E., P.d.l.P. and F.M.; writing—original draft preparation, D.G.; writing—review and editing, D.G.; visualization, D.G.; supervision, F.M.; project administration, F.M.; funding acquisition, F.M. and D.G. All authors have read and agreed to the published version of the manuscript.

Funding

This publication is part of the R&D project “Cognitive Personal Assistance for Social Environments (ACOGES)”, reference PID2020-113096RB-I00, funded by MCIN/AEI/10.13039/501100011033 and by ESF Investing in your future.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Acknowledgments

This work is dedicated to Professor Ramón Galán, who conceived and designed the Potato robot with Gema Fernández-Blanco Martín and implemented the initial versions of the emotional model. Thank you, Ramón, for your incredible work in the robotic field, your passion, and your generosity. You were a pioneer in building spaces and social relations, opening new paths for people to work together between different disciplines.

Conflicts of Interest

The authors declare no conflict of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).