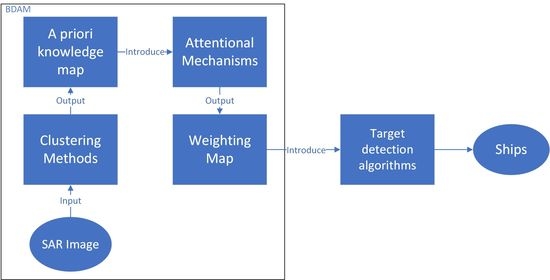

Integrating Prior Knowledge into Attention for Ship Detection in SAR Images

Abstract

1. Introduction

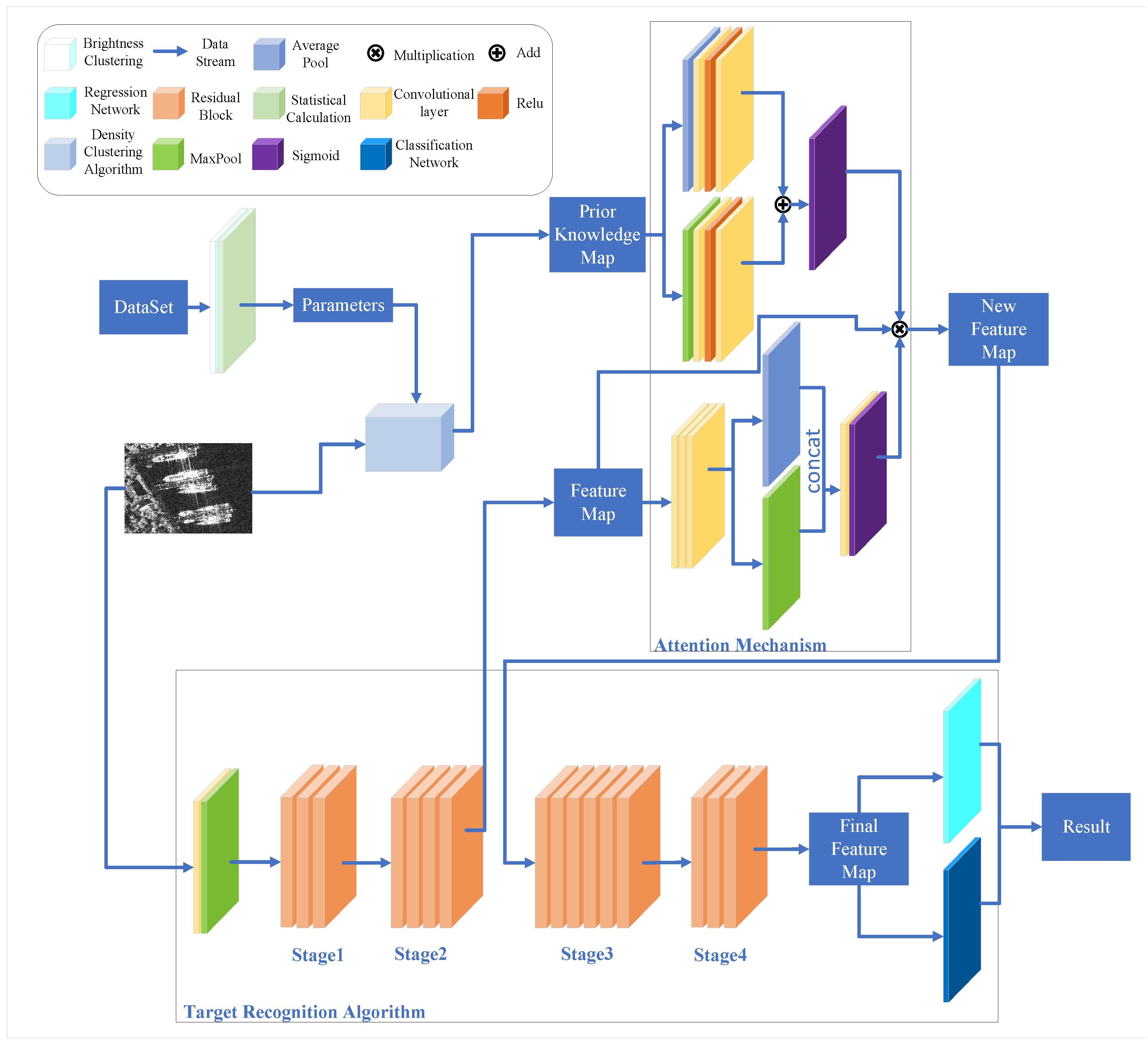

- To the best of our knowledge, our framework is the first to integrate prior knowledge into arbitrary CNN-based detectors for SAR images by means of an attention mechanism.

- Through the attention mechanism, deep learning models can learn from both the prior knowledge and the original (vanilla) images.

- Our experiments with four object detection algorithms (Faster R-CNN, RetinaNet, YOLOv4, and SSD) on three SAR image datasets (SSDD, LS-SSDD, and HRSID) demonstrate the superiority of our method.
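To make the idea in the contributions above concrete, the following is a minimal NumPy sketch of how a prior-knowledge map could reweight a CNN feature map. It is an illustration under stated assumptions, not the implementation used in this paper: the function names, the quantile threshold, the block-average resizing, and the residual weighting `features * (1 + prior)` are all hypothetical choices made only for this example.

```python
import numpy as np

def brightness_prior(image, quantile=0.95):
    """Hypothetical prior map: 1.0 where a pixel is at least as bright as
    the chosen quantile of the image, 0.0 elsewhere."""
    threshold = np.quantile(image, quantile)
    return (image >= threshold).astype(np.float32)

def resize_by_pooling(prior, out_h, out_w):
    """Downsample a 2-D map to (out_h, out_w) by block averaging.
    Assumes the input height and width are at least the output size;
    rows and columns that do not fill a whole block are cropped."""
    h, w = prior.shape
    fh, fw = h // out_h, w // out_w
    cropped = prior[:out_h * fh, :out_w * fw]
    return cropped.reshape(out_h, fh, out_w, fw).mean(axis=(1, 3))

def apply_prior_attention(features, prior):
    """Reweight a (C, H, W) feature map with an (H, W) prior map.
    The residual form keeps the original features where the prior is 0
    (an assumption of this sketch, not a detail taken from the paper)."""
    return features * (1.0 + prior[None, :, :])

# Toy usage: an 8x8 "SAR image" with one bright 2x2 target.
image = np.zeros((8, 8), dtype=np.float32)
image[2:4, 4:6] = 1.0
prior = resize_by_pooling(brightness_prior(image), 4, 4)
features = np.ones((3, 4, 4), dtype=np.float32)
weighted = apply_prior_attention(features, prior)
print(weighted.shape)
```

Because the prior map only rescales existing activations, a module of this kind can in principle be placed after any convolutional stage whose spatial size is known, which is what makes the approach detector-agnostic.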

2. Related Work

2.1. General CNN-Based Object Detection

2.2. Prior Knowledge for Detection in SAR Images

2.3. Attention Mechanisms

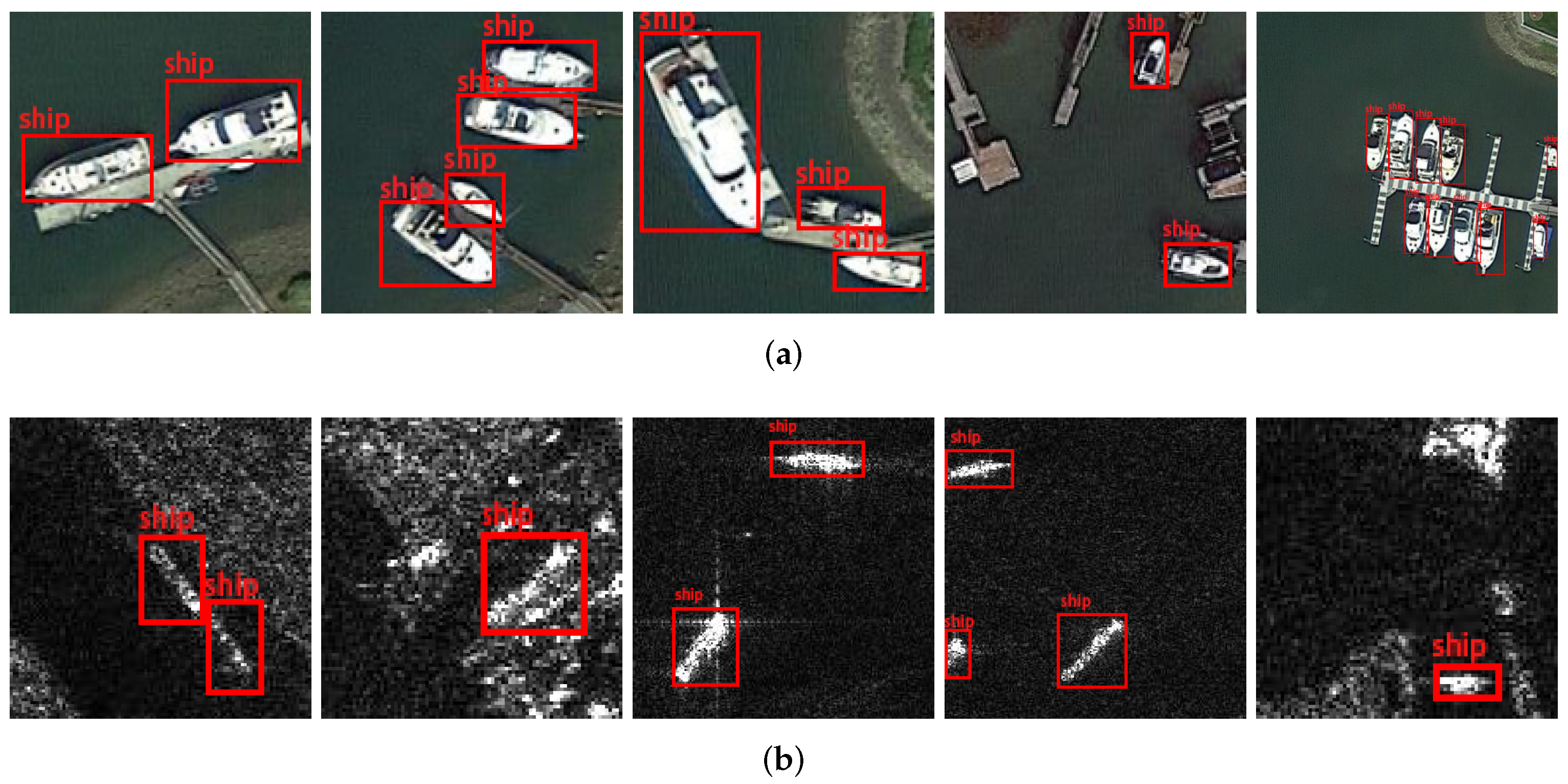

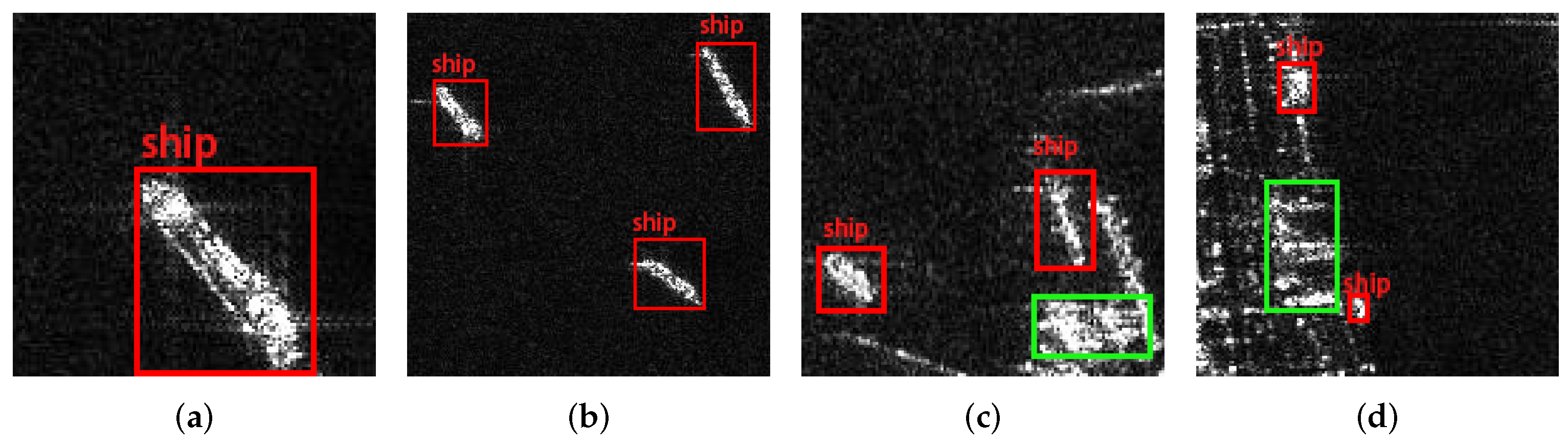

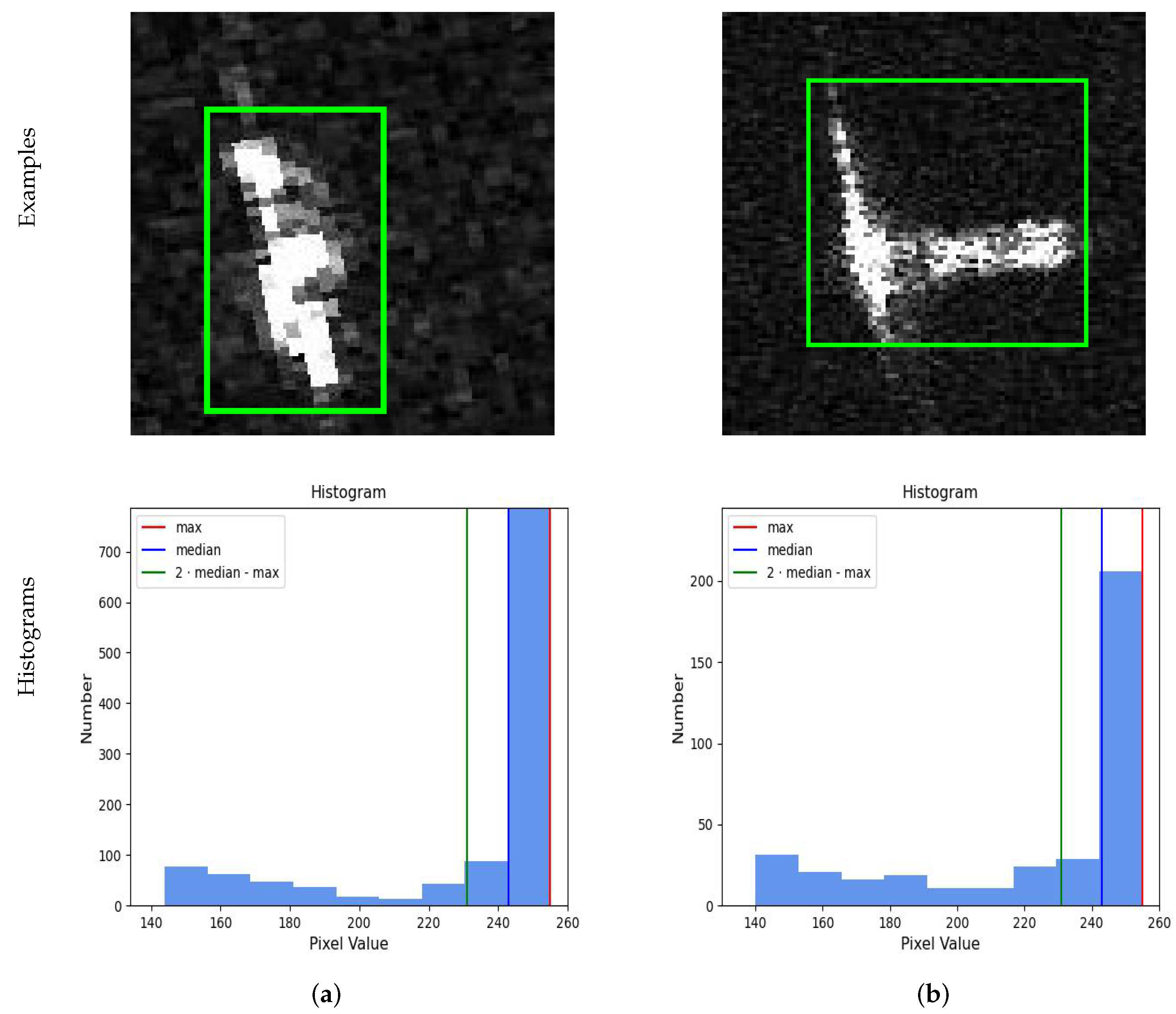

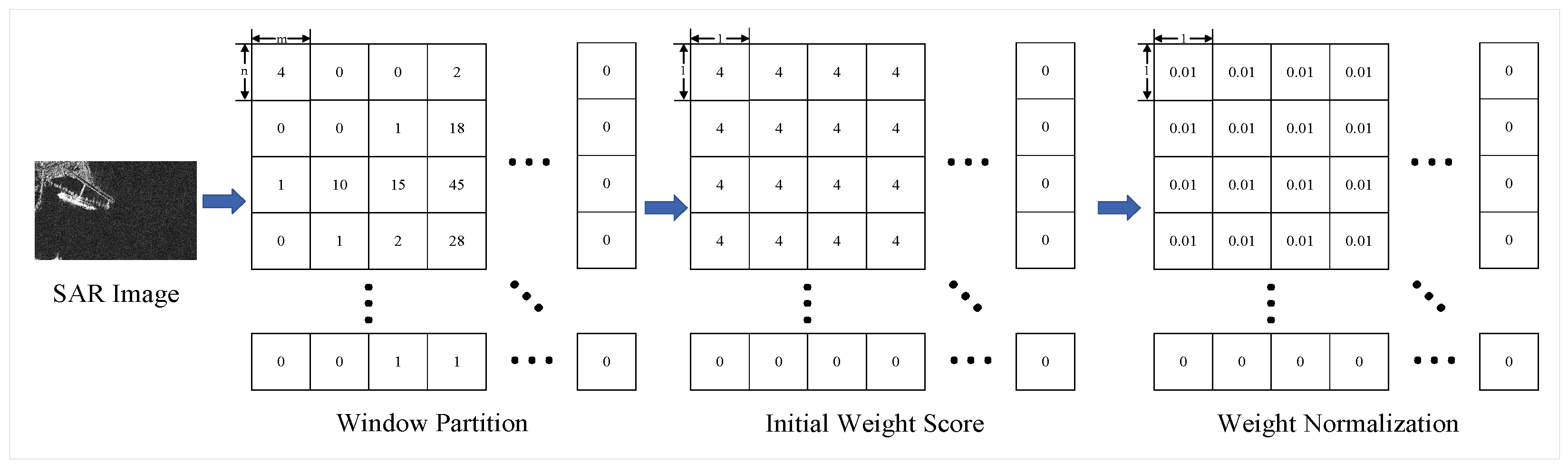

3. Representing Prior Knowledge

3.1. Analysis

3.2. Generating by Brightness

3.3. Generating by Density

4. Combining Prior Knowledge Map

4.1. Resizing Prior Knowledge Map

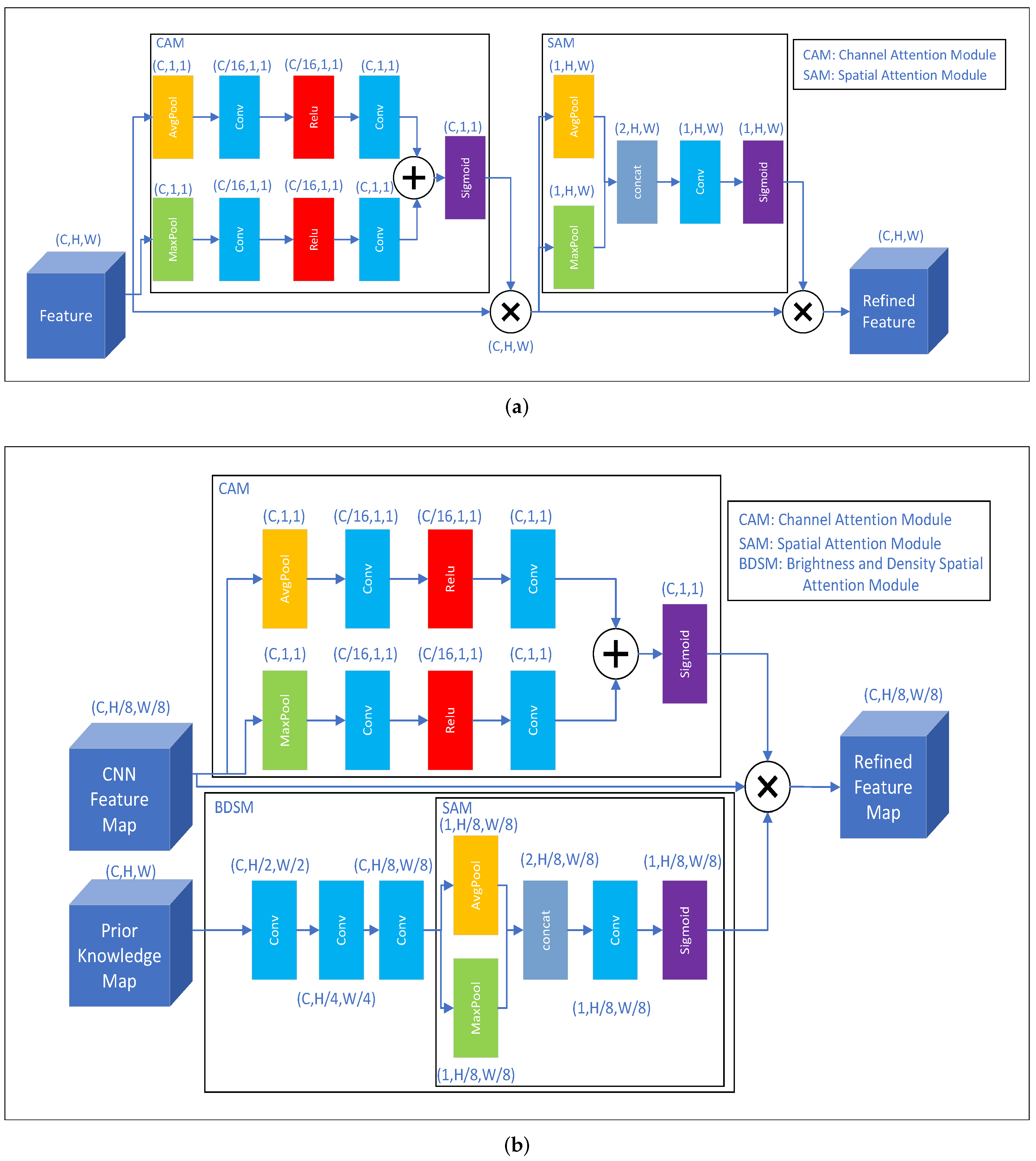

4.2. Integrating Prior Knowledge Map

5. Experiments and Results

5.1. Environment

5.2. Datasets

5.3. Implementation Details

5.4. Evaluation Criteria

5.5. Hyper-Parameter Analysis

5.6. Time Analysis

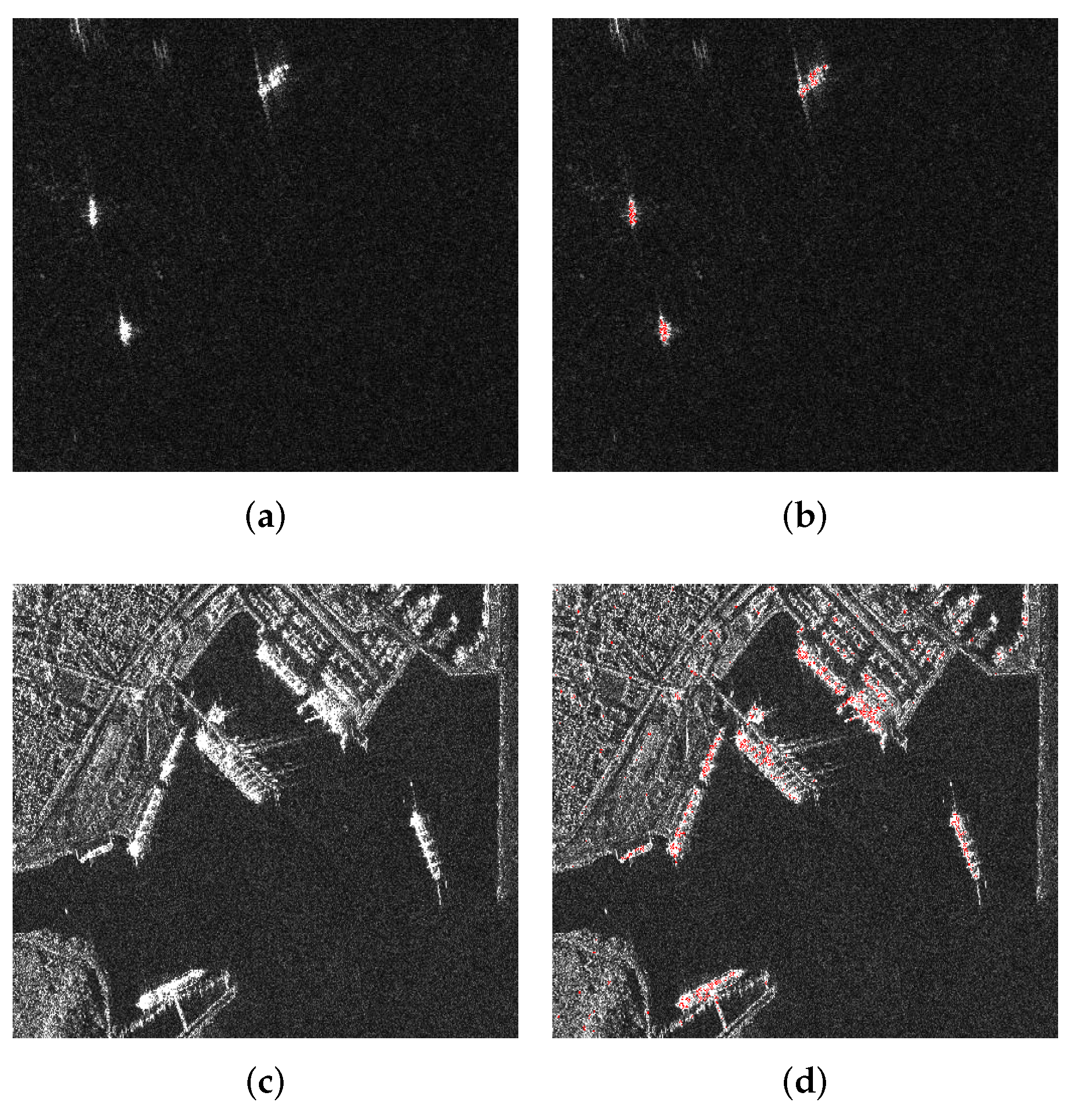

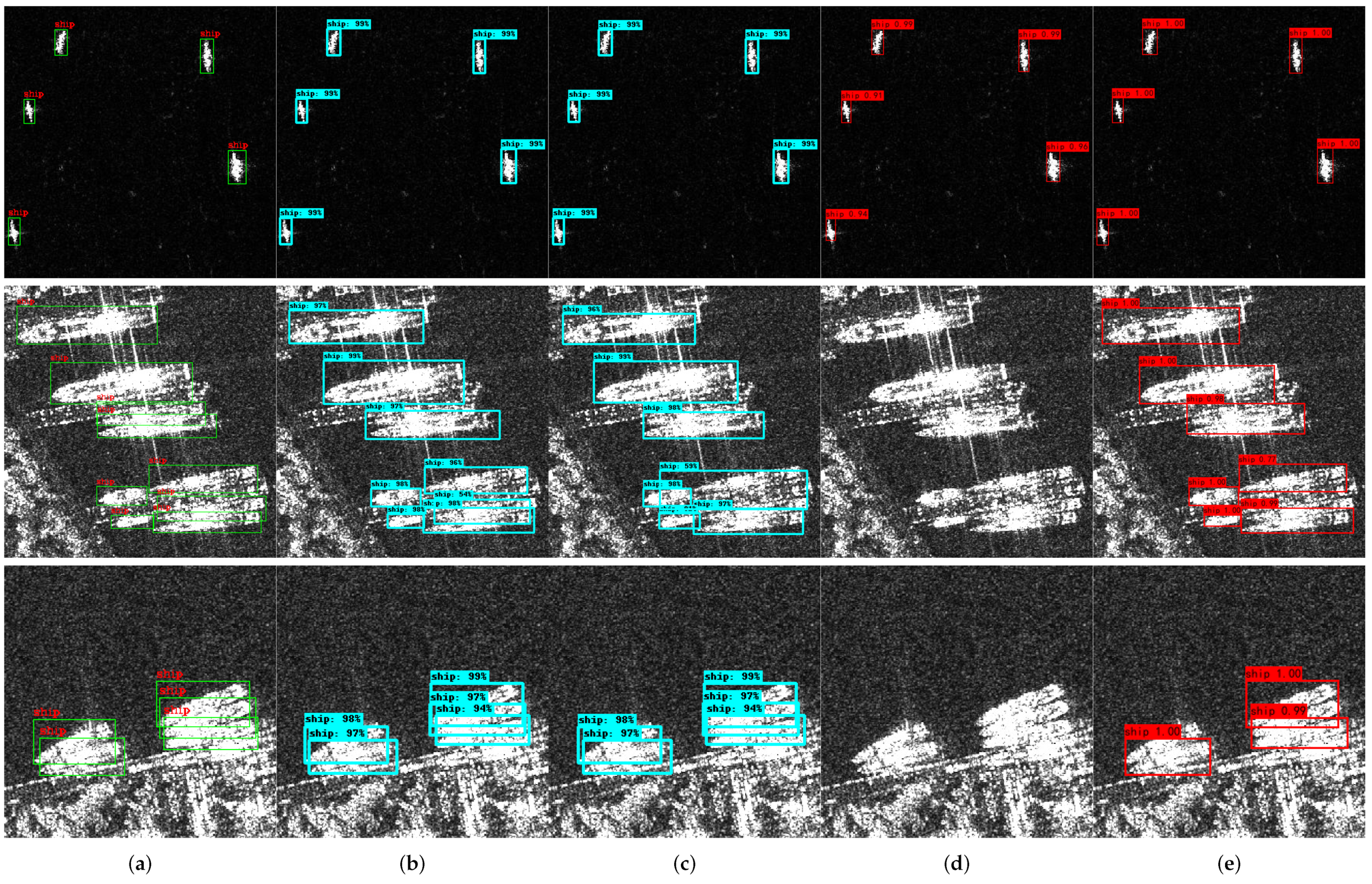

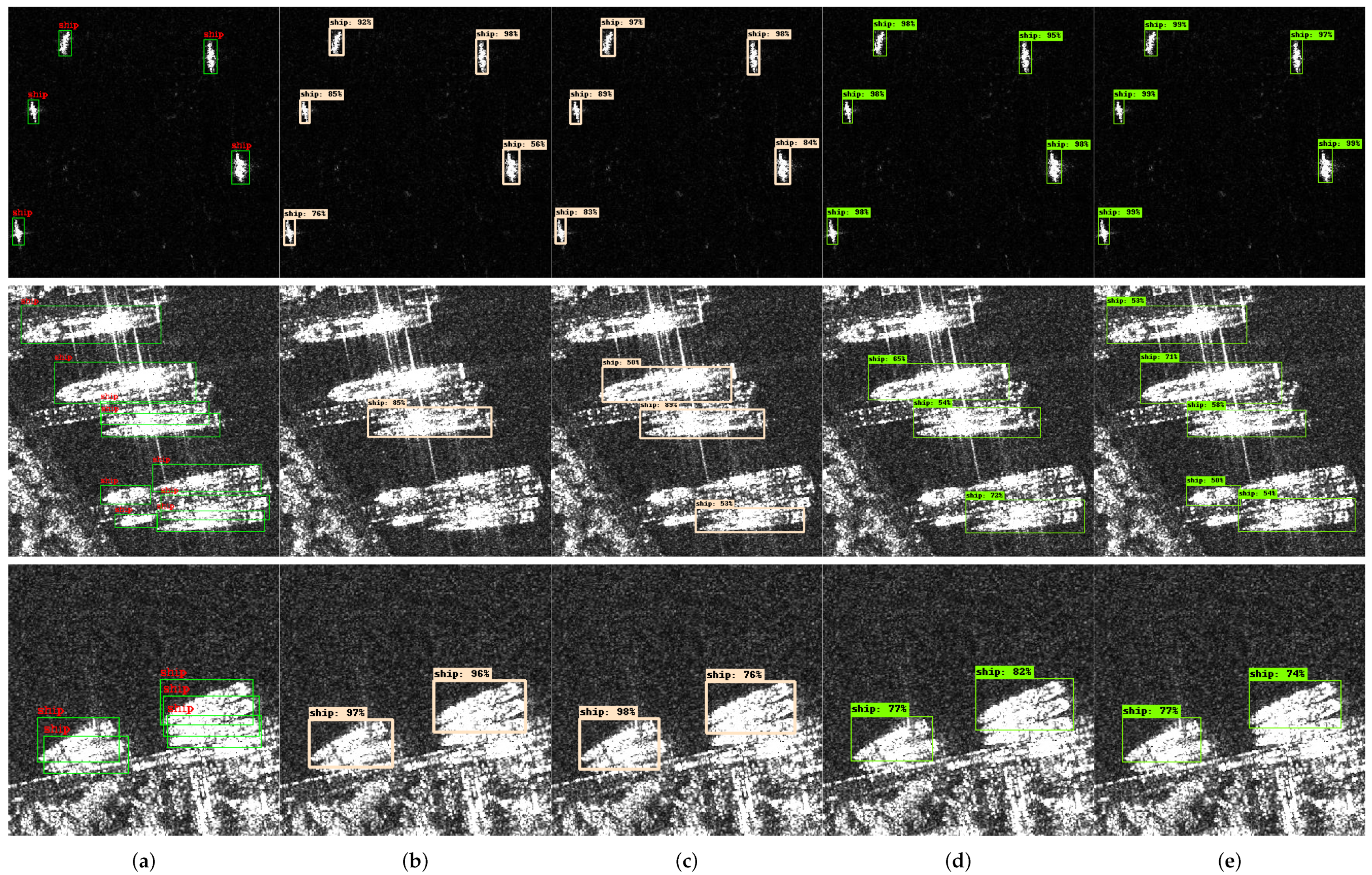

5.7. Qualitative Results

5.8. Ablation Study

5.8.1. Insertion Strategy

5.8.2. Attention Components

6. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Datasets | The Largest Ship (pixels) | The Smallest Ship (pixels) | Average (pixels) | Number of Pictures |
|---|---|---|---|---|
| HRSID | 522,400 | 3 | 1808 | 5604 |
| SSDD | 62,878 | 28 | 1882 | 1160 |
| LS-SSDD | 5822 | 6 | 381 | 9000 |

| Datasets | Training | Validation | Testing | Total |
|---|---|---|---|---|
| SSDD | 812 | 116 | 232 | 1160 |
| HRSID | 4538 | 505 | 561 | 5604 |
| LS-SSDD | 5000 | 1000 | 3000 | 9000 |

| Datasets | Median of the Interval | Interval Width |
|---|---|---|
| SSDD | 0.9531 | 0.0928 |
| HRSID | 0.8245 | 0.3443 |
| LS-SSDD | 0.8507 | 0.2346 |

| Operation | Total Time Spent | Average Time Spent (Per Image) | FPS |
|---|---|---|---|
| brightness | 71.3828 s | 0.0879 s | 11.375 |
| density | 217.8586 s | 0.2683 s | 3.727 |
| the complete process | 289.2406 s | 0.1781 s | 5.615 |

| Operation | Total Time Spent | Average Time Spent (Per Image) | FPS |
|---|---|---|---|
| brightness | 7.2804 s | 0.00897 s | 111.532 |
| density | 7.0043 s | 0.0086 s | 115.929 |
| the prior knowledge map | 2.1932 s | 0.0027 s | 370.233 |
| the complete process | 18.0784 s | 0.0223 s | 44.916 |
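The columns of the timing table directly above are linked by simple arithmetic: the average time is the total time divided by the number of images, and FPS is its reciprocal. The short sketch below recomputes both from the reported totals. The image count of 812 is not stated in the table; it is an assumption inferred from the ratio 7.2804 s / 0.00897 s, and the helper name `per_image_stats` is hypothetical.

```python
def per_image_stats(total_seconds, num_images):
    """Return (average seconds per image, frames per second)."""
    avg = total_seconds / num_images
    return avg, 1.0 / avg

# Assumption: 812 images, inferred from total time / average time.
NUM_IMAGES = 812

# Total times copied from the table directly above.
totals = {
    "brightness": 7.2804,
    "density": 7.0043,
    "the prior knowledge map": 2.1932,
    "the complete process": 18.0784,
}

for name, total in totals.items():
    avg, fps = per_image_stats(total, NUM_IMAGES)
    print(f"{name}: {avg:.5f} s per image, {fps:.3f} FPS")
```

Small differences in the last digit relative to the table are expected, since the reported totals are themselves rounded.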

| Algorithm | | | | | | |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.659 | 0.959 | 0.776 | 0.638 | 0.700 | 0.678 |
| + BDAM | 0.664 | 0.961 | 0.798 | 0.643 | 0.702 | 0.720 |
| + CBAM | 0.655 | 0.959 | 0.781 | 0.634 | 0.693 | 0.681 |
| + EAM | 0.657 | 0.961 | 0.776 | 0.635 | 0.692 | 0.678 |
| + BCA | 0.660 | 0.961 | 0.762 | 0.639 | 0.701 | 0.663 |
| RetinaNet | 0.639 | 0.921 | 0.750 | 0.616 | 0.687 | 0.601 |
| + BDAM | 0.641 | 0.942 | 0.762 | 0.621 | 0.681 | 0.678 |
| + CBAM | 0.637 | 0.925 | 0.764 | 0.612 | 0.689 | 0.620 |
| + EAM | 0.634 | 0.927 | 0.750 | 0.605 | 0.696 | 0.635 |
| + BCA | 0.641 | 0.931 | 0.758 | 0.612 | 0.702 | 0.656 |
| SSD | 0.548 | 0.885 | 0.643 | 0.515 | 0.610 | 0.596 |
| + BDAM | 0.555 | 0.891 | 0.651 | 0.520 | 0.626 | 0.598 |
| + CBAM | 0.544 | 0.885 | 0.645 | 0.511 | 0.623 | 0.558 |
| + EAM | 0.551 | 0.897 | 0.631 | 0.520 | 0.615 | 0.545 |
| + BCA | 0.499 | 0.834 | 0.558 | 0.450 | 0.585 | 0.548 |
| YOLOv4 | 0.499 | 0.937 | 0.472 | 0.453 | 0.633 | 0.637 |
| + BDAM | 0.508 | 0.942 | 0.464 | 0.452 | 0.647 | 0.729 |
| + CBAM | 0.507 | 0.958 | 0.464 | 0.457 | 0.639 | 0.657 |
| + EAM | 0.504 | 0.940 | 0.485 | 0.452 | 0.644 | 0.675 |
| + BCA | 0.505 | 0.952 | 0.452 | 0.454 | 0.632 | 0.713 |

| Algorithm | | | | | | |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.626 | 0.866 | 0.716 | 0.502 | 0.768 | 0.599 |
| + BDAM | 0.625 | 0.876 | 0.719 | 0.505 | 0.764 | 0.628 |
| + CBAM | 0.625 | 0.875 | 0.712 | 0.505 | 0.761 | 0.612 |
| + EAM | 0.621 | 0.866 | 0.710 | 0.500 | 0.763 | 0.615 |
| + BCA | 0.613 | 0.855 | 0.707 | 0.493 | 0.749 | 0.618 |
| RetinaNet | 0.561 | 0.787 | 0.627 | 0.380 | 0.765 | 0.507 |
| + BDAM | 0.567 | 0.796 | 0.633 | 0.387 | 0.764 | 0.566 |
| + CBAM | 0.561 | 0.785 | 0.627 | 0.380 | 0.765 | 0.494 |
| + EAM | 0.558 | 0.784 | 0.625 | 0.377 | 0.763 | 0.546 |
| + BCA | 0.554 | 0.779 | 0.615 | 0.382 | 0.753 | 0.475 |
| SSD | 0.449 | 0.686 | 0.510 | 0.255 | 0.674 | 0.537 |
| + BDAM | 0.457 | 0.694 | 0.518 | 0.257 | 0.684 | 0.539 |
| + CBAM | 0.454 | 0.690 | 0.513 | 0.259 | 0.683 | 0.528 |
| + EAM | 0.453 | 0.691 | 0.506 | 0.250 | 0.683 | 0.538 |
| + BCA | 0.437 | 0.666 | 0.498 | 0.246 | 0.662 | 0.421 |
| YOLOv4 | 0.550 | 0.914 | 0.603 | 0.395 | 0.686 | 0.607 |
| + BDAM | 0.552 | 0.920 | 0.618 | 0.389 | 0.702 | 0.650 |
| + CBAM | 0.550 | 0.912 | 0.610 | 0.377 | 0.690 | 0.621 |
| + EAM | 0.540 | 0.920 | 0.578 | 0.376 | 0.680 | 0.619 |
| + BCA | 0.545 | 0.912 | 0.600 | 0.382 | 0.690 | 0.616 |

| Algorithm | | | | | |
|---|---|---|---|---|---|
| Faster R-CNN | 0.258 | 0.722 | 0.073 | 0.255 | 0.339 |
| + BDAM | 0.262 | 0.741 | 0.093 | 0.258 | 0.352 |
| + CBAM | 0.258 | 0.722 | 0.087 | 0.255 | 0.342 |
| + EAM | 0.257 | 0.727 | 0.093 | 0.250 | 0.346 |
| + BCA | 0.235 | 0.675 | 0.070 | 0.230 | 0.352 |
| RetinaNet | 0.213 | 0.607 | 0.072 | 0.206 | 0.363 |
| + BDAM | 0.223 | 0.638 | 0.075 | 0.219 | 0.352 |
| + CBAM | 0.211 | 0.599 | 0.069 | 0.204 | 0.385 |
| + EAM | 0.218 | 0.632 | 0.063 | 0.214 | 0.333 |
| + BCA | 0.207 | 0.598 | 0.058 | 0.203 | 0.324 |
| SSD | 0.158 | 0.488 | 0.045 | 0.148 | 0.325 |
| + BDAM | 0.169 | 0.517 | 0.045 | 0.160 | 0.343 |
| + CBAM | 0.162 | 0.517 | 0.042 | 0.157 | 0.298 |
| + EAM | 0.139 | 0.476 | 0.030 | 0.133 | 0.301 |
| + BCA | 0.052 | 0.210 | 0.006 | 0.052 | 0.122 |
| YOLOv4 | 0.284 | 0.819 | 0.092 | 0.280 | 0.402 |
| + BDAM | 0.287 | 0.830 | 0.087 | 0.284 | 0.408 |
| + CBAM | 0.284 | 0.826 | 0.090 | 0.280 | 0.405 |
| + EAM | 0.273 | 0.809 | 0.088 | 0.270 | 0.408 |
| + BCA | 0.256 | 0.789 | 0.074 | 0.252 | 0.400 |

| Algorithm | | | | | | |
|---|---|---|---|---|---|---|
| + BDAM | 0.664 | 0.961 | 0.798 | 0.643 | 0.702 | 0.720 |
| + BDAM * | 0.427 | 0.727 | 0.453 | 0.516 | 0.280 | 0.166 |

| Algorithm | | | | | | |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.659 | 0.959 | 0.776 | 0.638 | 0.700 | 0.678 |
| Input Size/4 | 0.659 | 0.959 | 0.779 | 0.639 | 0.696 | 0.663 |
| Input Size/16 | 0.661 | 0.969 | 0.790 | 0.639 | 0.709 | 0.676 |
| Input Size/64 | 0.664 | 0.961 | 0.798 | 0.643 | 0.702 | 0.720 |
| Input Size/256 | 0.661 | 0.959 | 0.779 | 0.634 | 0.713 | 0.681 |
| Input Size/1024 | 0.659 | 0.960 | 0.774 | 0.638 | 0.705 | 0.664 |

| Algorithm | | | | | | |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.626 | 0.866 | 0.716 | 0.502 | 0.768 | 0.599 |
| + BDAM | 0.625 | 0.876 | 0.719 | 0.505 | 0.764 | 0.628 |
| + BDSM | 0.626 | 0.868 | 0.716 | 0.506 | 0.762 | 0.678 |
| + CAM | 0.622 | 0.856 | 0.713 | 0.500 | 0.763 | 0.622 |
| + CBAM | 0.625 | 0.875 | 0.712 | 0.505 | 0.761 | 0.612 |
| RetinaNet | 0.561 | 0.787 | 0.627 | 0.380 | 0.765 | 0.507 |
| + BDAM | 0.565 | 0.795 | 0.630 | 0.387 | 0.766 | 0.563 |
| + BDSM | 0.567 | 0.796 | 0.633 | 0.385 | 0.767 | 0.558 |
| + CAM | 0.551 | 0.777 | 0.624 | 0.370 | 0.752 | 0.525 |
| + CBAM | 0.561 | 0.785 | 0.627 | 0.380 | 0.765 | 0.494 |
| SSD | 0.449 | 0.686 | 0.510 | 0.255 | 0.674 | 0.537 |
| + BDAM | 0.457 | 0.694 | 0.518 | 0.257 | 0.684 | 0.539 |
| + BDSM | 0.457 | 0.693 | 0.518 | 0.261 | 0.682 | 0.536 |
| + CAM | 0.454 | 0.692 | 0.509 | 0.255 | 0.683 | 0.526 |
| + CBAM | 0.454 | 0.690 | 0.513 | 0.259 | 0.683 | 0.528 |
| YOLOv4 | 0.550 | 0.914 | 0.603 | 0.395 | 0.686 | 0.607 |
| + BDAM | 0.552 | 0.920 | 0.618 | 0.389 | 0.702 | 0.650 |
| + BDSM | 0.556 | 0.920 | 0.622 | 0.399 | 0.695 | 0.641 |
| + CAM | 0.549 | 0.908 | 0.599 | 0.389 | 0.687 | 0.565 |
| + CBAM | 0.550 | 0.912 | 0.610 | 0.377 | 0.690 | 0.621 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pan, Y.; Ye, L.; Xu, Y.; Liang, J. Integrating Prior Knowledge into Attention for Ship Detection in SAR Images. Appl. Sci. 2023, 13, 2941. https://doi.org/10.3390/app13052941
