A Survey: Network Feature Measurement Based on Machine Learning

Abstract

1. Introduction

- Network measurement must first guarantee real-time operation, because it has to process a large volume of complex, high-speed, and heterogeneous monitoring data gathered in particular applications. These data frequently arrive as fast data streams, so network measurement applications must be able to examine them quickly and continuously. A data analysis model that can continue to process significant amounts of data offline is preferred;

- In theory, choosing the best ML model for a given network measurement problem is a challenging task. Because a large amount of data corresponds to a large number of features, different ML approaches behave differently when they process distinct features. Researchers therefore need a broad enough knowledge base to apply a variety of ML techniques and find the best solution [16].
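The two requirements above (continuous processing of a data stream and choosing among candidate models) can be illustrated together with a test-then-train ("prequential") evaluation loop. The sketch below is only an illustration under stated assumptions: the three predictors (`LastValue`, `RunningMean`, `Ewma`), the function `prequential_mae`, and the sample values are hypothetical and are not taken from the surveyed works. Each model predicts the next sample before it is allowed to learn from it, so the running error reflects how the model would have performed online.

```python
class LastValue:
    """Predicts that the next sample equals the most recent one."""

    def __init__(self):
        self.last = None

    def predict(self):
        return self.last

    def update(self, x):
        self.last = x


class RunningMean:
    """Predicts the mean of all samples seen so far, using O(1) memory."""

    def __init__(self):
        self.n = 0
        self.mean = None

    def predict(self):
        return self.mean

    def update(self, x):
        self.n += 1
        self.mean = x if self.mean is None else self.mean + (x - self.mean) / self.n


class Ewma:
    """Exponentially weighted moving average; alpha is an illustrative choice."""

    def __init__(self, alpha=0.125):
        self.alpha = alpha
        self.value = None

    def predict(self):
        return self.value

    def update(self, x):
        if self.value is None:
            self.value = x
        else:
            self.value = (1 - self.alpha) * self.value + self.alpha * x


def prequential_mae(stream, models):
    """Test-then-train: every model predicts a sample before learning from it.

    Returns the mean absolute error per model name. The first sample is used
    only for warm-up because no model can predict before seeing any data.
    """
    abs_err = {name: 0.0 for name in models}
    scored = 0
    for x in stream:
        preds = {name: m.predict() for name, m in models.items()}
        if all(p is not None for p in preds.values()):
            scored += 1
            for name, p in preds.items():
                abs_err[name] += abs(x - p)
        for m in models.values():
            m.update(x)
    return {n: (e / scored if scored else float("nan")) for n, e in abs_err.items()}


if __name__ == "__main__":
    # Hypothetical delay samples in milliseconds.
    delays = [20.0, 21.5, 19.8, 35.0, 22.1, 20.9]
    candidates = {"last": LastValue(), "mean": RunningMean(), "ewma": Ewma(0.25)}
    print(prequential_mae(delays, candidates))
```

Because every model is updated one sample at a time, the loop never needs to store the stream, which is the property the first requirement asks for; the per-model error gives one simple criterion for the model choice discussed in the second.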

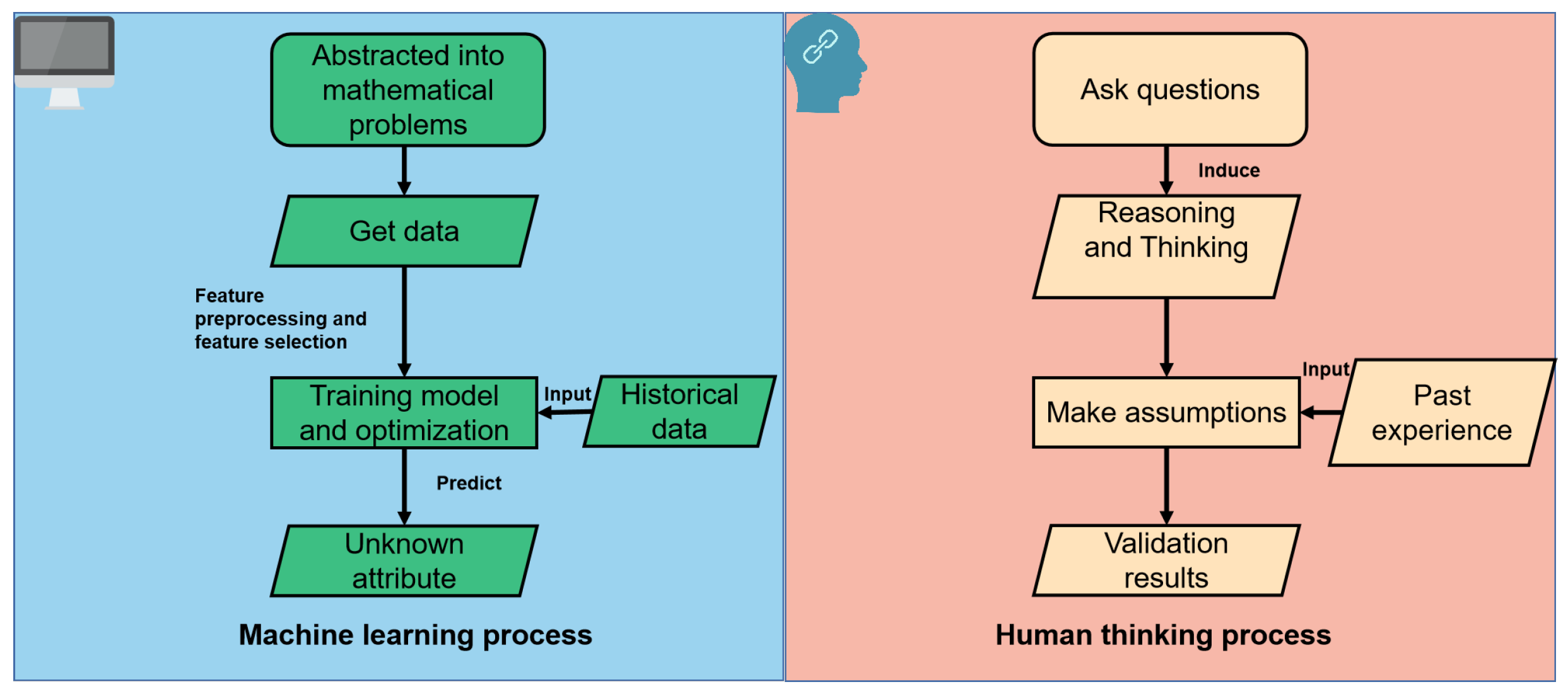

2. Machine Learning Method in Network Measurement

2.1. Supervised Learning in Network Measurement

2.1.1. Random Forest in Network Measurement

2.1.2. ANN in Network Measurement

2.1.3. SVM in Network Measurement

2.1.4. DNN in Network Measurement

2.1.5. CNN in Network Measurement

2.1.6. LSTM in Network Measurement

2.1.7. DT in Network Measurement

2.1.8. RBF Neural Network in Network Measurement

2.1.9. Discussion on Supervised Learning in Network Measurement

2.2. Unsupervised Learning in Network Measurement

- Unsupervised learning has no explicit training target;

- Unsupervised learning does not need labeled data;

- The effect of unsupervised learning is difficult to quantify.

- One of the most common tasks in unsupervised learning is clustering. Rather than defining groups before seeing the data, clustering identifies and evaluates naturally occurring groups, that is, groups determined by the data itself. K-Means, hierarchical clustering, and probabilistic clustering are popular methods;

- Too many features can waste an ML model’s storage space and processing time, a problem known as the curse of dimensionality. Researchers want to represent the data accurately in fewer dimensions without sacrificing too much useful information, which a dimension-reduction technique makes possible. PCA and SVD are the two basic methods.
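The dimension-reduction idea in the last bullet can be made concrete with a small PCA sketch. It is a minimal illustration, not a production implementation: it extracts only the first principal component using power iteration on the sample covariance matrix, and the function names `first_principal_component` and `project` as well as the sample rows are hypothetical. In practice a library routine based on SVD would normally be used instead.

```python
import math


def first_principal_component(rows, iterations=200):
    """Return (mean, direction) for the top principal component of `rows`.

    `rows` is a list of equal-length feature vectors with at least two rows.
    """
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Power iteration: repeated multiplication by the covariance matrix
    # converges to its dominant eigenvector, provided the start vector is
    # not orthogonal to it.
    v = [1.0 / math.sqrt(d)] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # no variance along the current vector; keep it as is
        v = [x / norm for x in w]
    return mean, v


def project(rows, mean, direction):
    """Reduce each row to one coordinate along `direction`."""
    return [
        sum((r[j] - mean[j]) * direction[j] for j in range(len(mean)))
        for r in rows
    ]


if __name__ == "__main__":
    # Hypothetical, strongly correlated two-feature measurements.
    samples = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
    mu, pc = first_principal_component(samples)
    print(pc, project(samples, mu, pc))
```

Here two correlated features collapse into a single coordinate with no loss of information, which is the best case; with real measurements some variance is discarded and the number of retained components becomes a tuning decision.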

2.2.1. K-Means in Network Measurement
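As a reminder of how K-Means forms groups from the data itself, the following is a minimal sketch of the standard Lloyd iteration (assign each point to its nearest centroid, then move each centroid to the mean of its members). The function names, the explicit `init_centroids` argument, and the two-feature samples (delay in milliseconds, loss in percent) are illustrative assumptions and do not come from any of the surveyed papers.

```python
def sq_dist(p, q):
    """Squared Euclidean distance between two equal-length points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, init_centroids, max_iter=100):
    """Lloyd's algorithm with caller-supplied initial centroids.

    Returns (centroids, labels). Passing the initial centroids explicitly
    keeps the sketch deterministic; real implementations usually pick them
    randomly or with a seeding scheme such as k-means++.
    """
    centroids = [tuple(c) for c in init_centroids]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centroid for every point.
        new_labels = [
            min(range(len(centroids)), key=lambda k: sq_dist(p, centroids[k]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments are stable, so the centroids are too
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # an empty cluster keeps its previous centroid
                centroids[k] = tuple(sum(col) / len(members) for col in zip(*members))
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical (delay_ms, loss_percent) samples from two path conditions.
    samples = [
        (10.0, 0.1), (11.0, 0.2), (12.0, 0.0),
        (100.0, 5.0), (102.0, 6.0), (104.0, 4.0),
    ]
    centers, assignment = kmeans(samples, [samples[0], samples[3]])
    print(centers, assignment)
```

Features with very different scales, as in this example, are usually normalized first, since the Euclidean distance is otherwise dominated by the larger-valued feature.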

2.3. Reinforcement Learning in Network Measurement

2.4. Transfer Learning in Network Measurement

2.5. Semi-Supervised Learning in Network Measurement

2.6. Discussion of Different ML Methods in Network Measurement

3. Network Measurement Based on ML Method

3.1. Network Delay and RTT

3.2. Packet Loss

3.3. Throughput

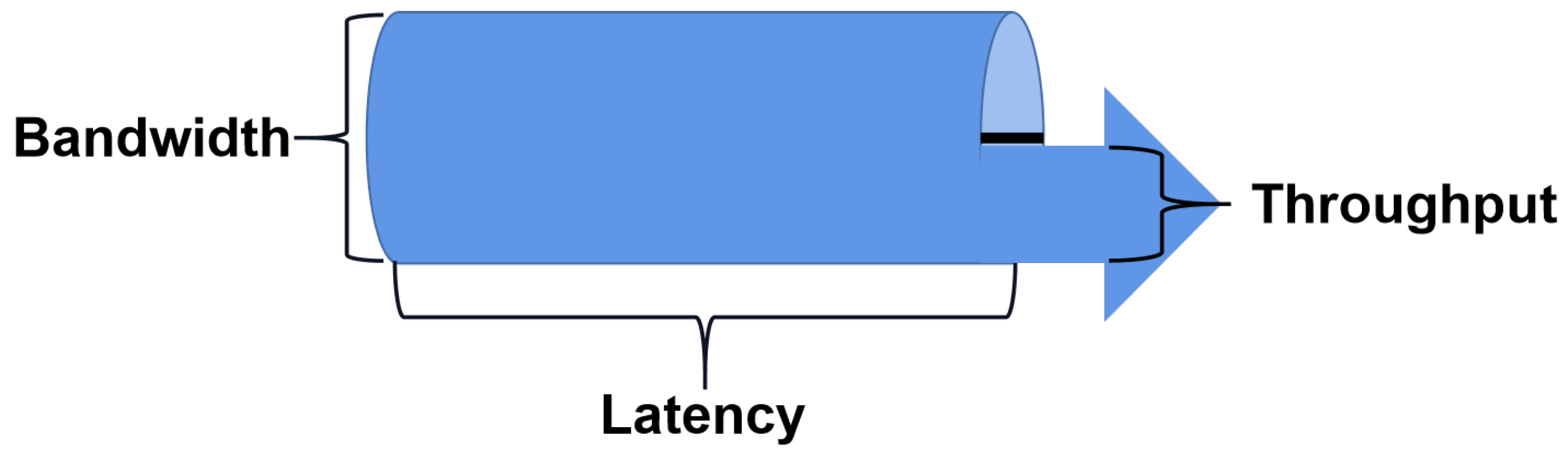

3.4. Bandwidth

3.5. Path Loss

3.6. Congestion Control

3.7. Discussion on Network Measurement Application with ML

| Scenarios | Applications | Ref | ML Algorithms | Performance Analysis |
|---|---|---|---|---|
| Path delay | Reduce data volume | [51] | RF, LR, SVM | Shows the importance of data pre-evaluation and feature selection, but the basic algorithms cannot provide high accuracy in this case. |
| Path delay | Reduce computing effort | [79] | RF, BP NN | Better predicts the change in path delay under curves that were not simulated when the training set was prepared. |
| Path delay | Provide accurate estimation of round-trip time in TCP transmission | [31,64] | Experts framework | The number of retransmitted packets decreases significantly, and the throughput increases. |
| Path delay | Predict delay in IoT models | [80] | RNN, ANN | Compared with similar models, the model using the Trainlm training algorithm has the best prediction accuracy, while the model using the Trainrp training algorithm has the lowest. |
| Packet loss | Faster computing with lower call latency | [65] | DT, LR | LR is used for predictive analysis and data description. By contrast, the DT model predicts better. |
| Packet loss | Combine the two networks for prediction | [42] | ANN, RNN | Improves the accuracy of Internet of Things traffic prediction. |
| Throughput | Use characteristics to generate throughput predictions | [24] | SVR | Different combinations of path attributes are used for training. Compared with the history-based predictor, the efficiency is improved by a factor of three. |
| Throughput | Three hyperparameters are optimized | [69] | DNN | The prediction achieved an average accuracy of 82% over a continuous time interval of one week. |
| Throughput | Forecast based on the cellular link throughput of each network slice | [23] | DT, ANN, SVR, MLP | A machine learning model that is not intrusive to the network predicts the available throughput in a non-standalone 5G network based on the network slice. |
| Throughput | Evaluate three variations of each model for four epoch durations | [74] | LSTM | In each case, the average error of the LSTM network is significantly lower than that of the ARIMA model. |
| Bandwidth | The available bandwidth is obtained through simulation | [81] | SVM | Combining the pathChirp-like model with SVM gives a more accurate estimate than the two widely used tools. |
| Bandwidth | Use the existing program information to estimate the available bandwidth | [71] | ANN | Two neural networks are trained to deal with incompletely filled input vectors and prediction of output bandwidth, respectively. |
| Bandwidth | Simplify the prediction of actual available bandwidth into classification | [47] | ANN, SVM, K-NN | Median filtering of the estimated values improves accuracy. |
| Bandwidth | Provide reliable physical and available bandwidth estimation for the simulated single-hop and multi-hop networks | [72] | ANN | The accuracy of bandwidth estimation is shown to be limited only by the input data. |
| Bandwidth | The available bandwidth estimation task is described as a classification problem | [26] | SVM, RF | Proved that neural networks can significantly improve available bandwidth estimation by reducing bias and variability. |
| Path loss | A faster training algorithm is added to the training process | [75] | ANN | The prediction accuracy and generalization characteristics are given while reducing the training time. |
| Path loss | Establish the optimization model of path loss | [76] | ANN, FNN | The ANN with the highest prediction accuracy is found by varying the number of hidden-layer neurons. |
| Path loss | Several hyperparameters of the RBF model are adjusted | [52] | SVM | Comparing SVR and RBF models with five empirical models shows that the machine learning models outperform the empirical ones. |
| Congestion control | Detect the congestion of the transport layer sink node | [82] | ANN | Accurately detects the degree of congestion in wireless sensor networks and better reflects the congestion state. |
| Congestion control | An effective method to determine the best parameters of classifier model selection | [53] | SVM | Accuracy is improved through optimal parameters. |
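One row above mentions median filtering of estimated values as a way to improve accuracy [47]. A generic sliding-window median filter can be sketched as follows; this is not claimed to be the filter used in [47], and the function name `median_filter`, the window size, and the sample estimates are hypothetical.

```python
from collections import deque
from statistics import median


def median_filter(estimates, window=5):
    """Causal sliding median over the current and previous estimates.

    Each output is the median of at most `window` most recent inputs, so a
    single outlier estimate cannot dominate the smoothed value.
    """
    recent = deque(maxlen=window)  # automatically drops the oldest value
    smoothed = []
    for value in estimates:
        recent.append(value)
        smoothed.append(median(recent))
    return smoothed


if __name__ == "__main__":
    # Hypothetical available-bandwidth estimates in Mbit/s with one outlier.
    raw = [10, 11, 90, 12, 10]
    print(median_filter(raw, window=3))
```

A larger window suppresses more outliers but reacts more slowly to genuine changes in available bandwidth, so the window size is a trade-off rather than a fixed constant.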

4. Future Work

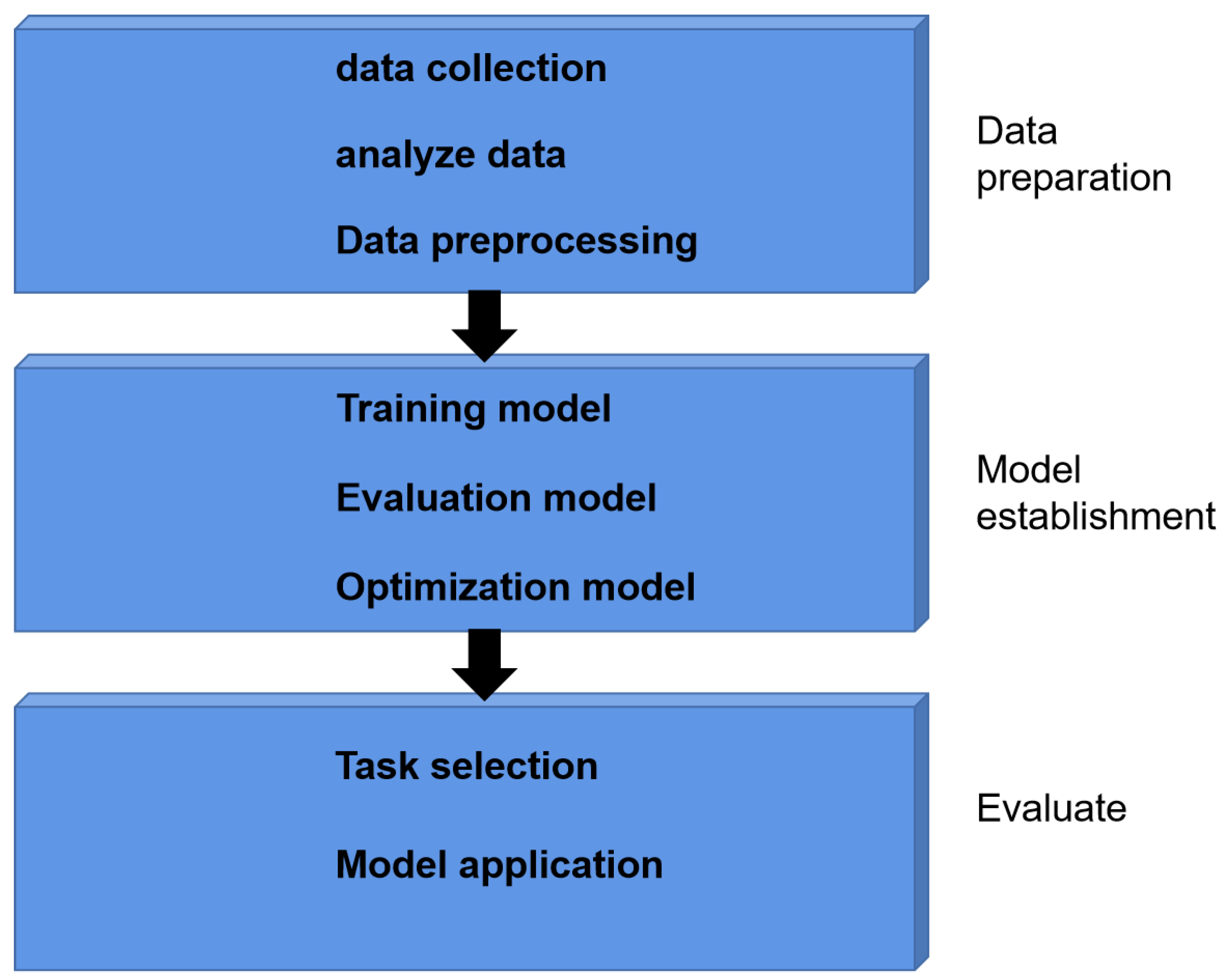

4.1. Future ML in Network Measurement

4.1.1. Dataset

4.1.2. Define and Express Problems

4.1.3. Model Establishment and Optimization

4.1.4. Algorithm Selection

4.1.5. Discussion on Future ML Methods

- Congestion control;

- Automatic extraction of the most important features;

- Network routing;

- Time-series analysis;

- Network layer analysis.

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ANN | Artificial Neural Network |
| SVM | Support Vector Machine |
| DNN | Deep Neural Network |
| DT | Decision Tree |
| FNN | Feedforward Neural Network |
| RNN | Recurrent Neural Network |
| LSTM | Long Short-Term Memory |
| CNN | Convolutional Neural Network |
| RBF | Radial Basis Function |
| XGBoost | eXtreme Gradient Boosting |
| LR | Logistic Regression |
| SVR | Support Vector Regression |
| RF | Random Forest |
| MLP NN | Multilayer Perceptron Neural Network |
| SSL | Semi-Supervised Learning |

References

- Gheisari, M.; Alzubi, J.; Zhang, X.; Kose, U.; Saucedo, J.A.M. A new algorithm for optimization of quality of service in peer to peer wireless mesh networks. Wirel. Netw. 2020, 26, 4965–4973.

- Qi, J.; Wu, F.; Li, L.; Shu, H. Artificial intelligence applications in the telecommunications industry. Expert Syst. 2007, 24, 271–291.

- Feldmann, A.; Gasser, O.; Lichtblau, F.; Pujol, E.; Poese, I.; Dietzel, C.; Wagner, D.; Wichtlhuber, M.; Tapiador, J.; Vallina-Rodriguez, N.; et al. The lockdown effect: Implications of the COVID-19 pandemic on internet traffic. In Proceedings of the ACM Internet Measurement Conference, Virtual Event, 27–29 October 2020; pp. 1–18.

- Sun, Y.; Peng, M.; Zhou, Y.; Huang, Y.; Mao, S. Application of machine learning in wireless networks: Key techniques and open issues. IEEE Commun. Surv. Tutor. 2019, 21, 3072–3108.

- Zhao, Y.; Li, Y.; Zhang, X.; Geng, G.; Zhang, W.; Sun, Y. A survey of networking applications applying the software defined networking concept based on machine learning. IEEE Access 2019, 7, 95397–95417.

- Zhang, X.; Wang, Y.; Yang, M.; Geng, G. Toward concurrent video multicast orchestration for caching-assisted mobile networks. IEEE Trans. Veh. Technol. 2021, 70, 13205–13220.

- Yao, S.; Wang, M.; Qu, Q.; Zhang, Z.; Zhang, Y.F.; Xu, K.; Xu, M. Blockchain-empowered collaborative task offloading for cloud-edge-device computing. IEEE J. Sel. Areas Commun. 2022, 40, 3485–3500.

- Ojo, S.; Akkaya, M.; Sopuru, J.C. An ensemble machine learning approach for enhanced path loss predictions for 4G LTE wireless networks. Int. J. Commun. Syst. 2022, 35, e5101.

- Ateeq, M.; Ishmanov, F.; Afzal, M.K.; Naeem, M. Predicting delay in IoT using deep learning: A multiparametric approach. IEEE Access 2019, 7, 62022–62031.

- Shafiq, M.; Tian, Z.; Bashir, A.K.; Du, X.; Guizani, M. CorrAUC: A malicious bot-IoT traffic detection method in IoT network using machine-learning techniques. IEEE Internet Things J. 2020, 8, 3242–3254.

- Park, J.; Samarakoon, S.; Bennis, M.; Debbah, M. Wireless network intelligence at the edge. Proc. IEEE 2019, 107, 2204–2239.

- Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (XAI): Toward medical XAI. IEEE Trans. Neural Networks Learn. Syst. 2020, 32, 4793–4813.

- Abdellah, A.R.; Mahmood, O.A.; Kirichek, R.; Paramonov, A. Machine learning algorithm for delay prediction in IoT and tactile internet. Future Internet 2021, 13, 304.

- Wang, C.X.; Di Renzo, M.; Stanczak, S.; Wang, S.; Larsson, E.G. Artificial intelligence enabled wireless networking for 5G and beyond: Recent advances and future challenges. IEEE Wirel. Commun. 2020, 27, 16–23.

- Wang, M.; Cui, Y.; Wang, X.; Xiao, S.; Jiang, J. Machine learning for networking: Workflow, advances and opportunities. IEEE Netw. 2017, 32, 92–99.

- Jiang, J.; Sekar, V.; Stoica, I.; Zhang, H. Unleashing the potential of data-driven networking. In Proceedings of the International Conference on Communication Systems and Networks, Bengaluru, India, 4–8 January 2017; pp. 110–126.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 685–695.

- Ray, S. A quick review of machine learning algorithms. In Proceedings of the 2019 International Conference on Machine Learning, Big Data, Cloud and Parallel Computing (COMITCon), Faridabad, India, 14–16 February 2019; pp. 35–39.

- Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

- Sarker, I.H.; Kayes, A.; Watters, P. Effectiveness analysis of machine learning classification models for predicting personalized context-aware smartphone usage. J. Big Data 2019, 6, 57.

- Ojo, S.; Imoize, A.; Alienyi, D. Radial basis function neural network path loss prediction model for LTE networks in multitransmitter signal propagation environments. Int. J. Commun. Syst. 2021, 34, e4680.

- Roy, K.S.; Ghosh, T. Study of packet loss prediction using machine learning. Int. J. Mob. Commun. Netw. 2020, 11, 1–11.

- Botta, A.; Mocerino, G.E.; Cilio, S.; Ventre, G. A machine learning approach for dynamic selection of available bandwidth measurement tools. In Proceedings of the International Conference on Communications, Montreal, QC, Canada, 14–23 June 2021; pp. 1–6.

- Minovski, D.; Ogren, N.; Ahlund, C.; Mitra, K. Throughput prediction using machine learning in LTE and 5G networks. IEEE Trans. Mob. Comput. 2021, 22, 1825–1840.

- Hu, W.; Wang, Z.; Sun, L. Guyot: A hybrid learning and model based RTT predictive approach. In Proceedings of the IEEE International Conference on Communications, London, UK, 8–12 June 2015; pp. 5884–5889.

- Labonne, M.; Lopez, J.; Poletti, C.; Munier, J.B. WIP: Short-term flow based bandwidth forecasting using machine learning. In Proceedings of the International Symposium on a World of Wireless, Mobile and Multimedia Networks (WoWMoM), Pisa, Italy, 7–11 June 2021; pp. 260–263.

- Abdellah, A.R.; Mahmood, O.A.; Koucheryavy, A. Delay prediction in IoT using machine learning approach. In Proceedings of the International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Brno, Czech Republic, 5–7 October 2020; pp. 275–279.

- Khangura, S.K.; Akın, S. Measurement based online available bandwidth estimation employing reinforcement learning. In Proceedings of the International Teletraffic Congress (ITC 31), Budapest, Hungary, 27–29 August 2019; pp. 95–103.

- Chen, L.; Chou, C.; Wang, B. A machine learning-based approach for estimating available bandwidth. In Proceedings of the IEEE Region 10 Conference, Taipei, Taiwan, 30 October–2 November 2007; pp. 1–4.

- Hága, P.; Laki, S.; Tóth, F.; Csabai, I.; Stéger, J.; Vattay, G. Neural network based available bandwidth estimation in the ETOMIC infrastructure. In Proceedings of the International Conference on Testbeds and Research Infrastructure for the Development of Networks and Communities, Lake Buena Vista, FL, USA, 21–23 May 2007; pp. 1–10.

- Nunes, B.A.A.; Veenstra, K.; Ballenthin, W.; Lukin, S.; Obraczka, K. A machine learning approach to end-to-end RTT estimation and its application to TCP. In Proceedings of the International Conference on Computer Communications and Networks (ICCCN), Lahaina, HI, USA, 31 July–4 August 2011; pp. 1–6.

- Jaiswal, A.; Babu, A.R.; Zadeh, M.Z.; Banerjee, D.; Makedon, F. A survey on contrastive self-supervised learning. Technologies 2020, 9, 2.

- Maulud, D.; Abdulazeez, A.M. A review on linear regression comprehensive in machine learning. J. Appl. Sci. Technol. Trends 2020, 1, 140–147.

- Jahromi, H.Z.; Hamed, Z.; Hines, A.; Delaney, D.T. Towards application-aware networking: ML-based end-to-end application KPI/QoE metrics characterization in SDN. In Proceedings of the Tenth International Conference on Ubiquitous and Future Networks (ICUFN), Prague, Czech Republic, 3–6 July 2018; pp. 126–131.

- Sheykhmousa, M.; Mahdianpari, M.; Ghanbari, H.; Mohammadimanesh, F.; Ghamisi, P.; Homayouni, S. Support vector machine versus random forest for remote sensing image classification: A meta-analysis and systematic review. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 6308–6325.

- Loh, W.Y. Classification and regression trees. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 14–23.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Giannakou, A.; Dwivedi, D.; Peisert, S. A machine learning approach for packet loss prediction in science flows. Future Gener. Comput. Syst. 2020, 102, 190–197.

- Xie, Y.; Oniga, S. A review of processing methods and classification algorithm for EEG signal. Carpathian J. Electron. Comput. Eng. 2020, 13, 23–29.

- Otchere, D.A.; Ganat, T.O.A.; Gholami, R.; Ridha, S. Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: Comparative analysis of ANN and SVM models. J. Pet. Sci. Eng. 2021, 200, 108182.

- Kurani, A.; Doshi, P.; Vakharia, A.; Shah, M. A comprehensive comparative study of artificial neural network (ANN) and support vector machines (SVM) on stock forecasting. Ann. Data Sci. 2023, 10, 183–208.

- Mirza, M.; Sommers, J.; Barford, P.; Zhu, X. A machine learning approach to TCP throughput prediction. IEEE/ACM Trans. Netw. 2010, 18, 97–108.

- Devan, P.; Khare, N. An efficient XGBoost–DNN-based classification model for network intrusion detection system. Neural Comput. Appl. 2020, 32, 12499–12514.

- Maier, C.; Dorfinger, P.; Du, J.L.; Gschweitl, S.; Lusak, J. Reducing consumed data volume in bandwidth measurements via a machine learning approach. In Proceedings of the Network Traffic Measurement and Analysis Conference (TMA), Paris, France, 19–21 June 2019; pp. 215–220.

- Bhatt, D.; Patel, C.; Talsania, H.; Patel, J.; Vaghela, R.; Pandya, S.; Modi, K.; Ghayvat, H. CNN variants for computer vision: History, architecture, application, challenges and future scope. Electronics 2021, 10, 2470.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Sato, N.; Oshiba, T.; Nogami, K.; Sawabe, A.; Satoda, K. Experimental comparison of machine learning based available bandwidth estimation methods over operational LTE networks. In Proceedings of the IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; pp. 339–346.

- Landi, F.; Baraldi, L.; Cornia, M.; Cucchiara, R. Working memory connections for LSTM. Neural Netw. 2021, 144, 334–341.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A review of recurrent neural networks: LSTM cells and network architectures. Neural Comput. 2019, 31, 1235–1270.

- Charbuty, B.; Abdulazeez, A. Classification based on decision tree algorithm for machine learning. J. Appl. Sci. Technol. Trends 2021, 2, 20–28.

- Khatouni, A.S.; Soro, F.; Giordano, D. A machine learning application for latency prediction in operational 4G networks. In Proceedings of the IFIP/IEEE Symposium on Integrated Network and Service Management (IM), Arlington, VA, USA, 8–12 April 2019; pp. 71–74.

- Zhang, Y.; Wen, J.; Yang, G.; He, Z.; Wang, J. Path loss prediction based on machine learning: Principle, method, and data expansion. Appl. Sci. 2019, 9, 1908.

- Lateef, I.; Akansu, A.N. Machine learning in eigensubspace for network path identification and flow forecast. IET Commun. 2021, 15, 1997–2006.

- Schmarje, L.; Santarossa, M.; Schröder, S.M.; Koch, R. A survey on semi-, self- and unsupervised learning for image classification. IEEE Access 2021, 9, 82146–82168.

- Dike, H.U.; Zhou, Y.; Deveerasetty, K.K.; Wu, Q. Unsupervised learning based on artificial neural network: A review. In Proceedings of the 2018 IEEE International Conference on Cyborg and Bionic Systems (CBS), Shenzhen, China, 25–27 October 2018; pp. 322–327.

- MacQueen, J. Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, Berkeley, CA, USA, 21 June–18 July 1965; p. 281.

- Luong, N.C.; Hoang, D.T.; Gong, S.; Niyato, D.; Wang, P.; Liang, Y.C.; Kim, D.I. Applications of deep reinforcement learning in communications and networking: A survey. IEEE Commun. Surv. Tutor. 2019, 21, 3133–3174.

- Zhuang, F.; Qi, Z.; Duan, K.; Xi, D.; Zhu, Y.; Zhu, H.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2020, 109, 43–76.

- Ouali, Y.; Hudelot, C.; Tami, M. An overview of deep semi-supervised learning. arXiv 2020, arXiv:2006.05278.

- Abdelkefi, A.; Jiang, Y. A structural analysis of network delay. In Proceedings of the Ninth Annual Communication Networks and Services Research Conference, Ottawa, ON, Canada, 2–5 May 2011; pp. 41–48.

- Zhang, X.; Wang, Y.; Geng, G.; Yu, J. Delay-optimized multicast tree packing in software-defined networks. IEEE Trans. Serv. Comput. 2021, 261–275.

- She, C.; Sun, C.; Gu, Z.; Li, Y.; Yang, C.; Poor, H.V.; Vucetic, B. A tutorial on ultrareliable and low-latency communications in 6G: Integrating domain knowledge into deep learning. Proc. IEEE 2021, 109, 204–246.

- Guo, J.; Cao, P.; Wu, J.; Xu, B.; Yang, J. Path delay variation prediction model with machine learning. In Proceedings of the IEEE International Conference on Solid-State and Integrated Circuit Technology (ICSICT), Qingdao, China, 31 October–3 November 2018; pp. 1–3.

- Nunes, B.A.A.; Veenstra, K.; Ballenthin, W.; Lukin, S.; Obraczka, K. A machine learning framework for TCP round-trip time estimation. EURASIP J. Wirel. Commun. Netw. 2014, 2014, 47.

- Abdellah, A.R.; Mahmood, O.A.K.; Paramonov, A.; Koucheryavy, A. IoT traffic prediction using multi-step ahead prediction with neural network. In Proceedings of the International Congress on Ultra Modern Telecommunications and Control Systems and Workshops (ICUMT), Dublin, Ireland, 28–30 October 2019; pp. 1–4.

- Yajnik, M.; Moon, S.; Kurose, J.; Towsley, D. Measurement and modelling of the temporal dependence in packet loss. In Proceedings of the IEEE INFOCOM’99. Conference on Computer Communications, Eighteenth Annual Joint Conference of the IEEE Computer and Communications Societies. The Future is Now (Cat. No. 99CH36320), New York, NY, USA, 21–25 March 1999; Volume 1, pp. 345–352.

- Zhang, X.; Wang, Y.; Zhang, J.; Wang, L.; Zhao, Y. RINGLM: A link-level packet loss monitoring solution for software-defined networks. IEEE J. Sel. Areas Commun. 2019, 37, 1703–1720.

- Lazaris, A.; Prasanna, V.K. Deep learning models for aggregated network traffic prediction. In Proceedings of the International Conference on Network and Service Management (CNSM), Halifax, NS, Canada, 21–25 October 2019; pp. 1–5.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 13–17.

- Zhang, X.; Wang, T. Elastic and reliable bandwidth reservation based on distributed traffic monitoring and control. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 4563–4580.

- Khangura, S.K.; Akın, S. Online available bandwidth estimation using multiclass supervised learning techniques. Comput. Commun. 2021, 170, 177–189.

- Khangura, S.K.; Fidler, M.; Rosenhahn, B. Neural networks for measurement based bandwidth estimation. In Proceedings of the IFIP Networking Conference (IFIP Networking) and Workshops, Zurich, Switzerland, 14–16 May 2018; pp. 1–9.

- Labonne, M.; Chatzinakis, C.; Olivereau, A. Predicting bandwidth utilization on network links using machine learning. In Proceedings of the European Conference on Networks and Communications (EuCNC), Dubrovnik, Croatia, 15–18 June 2020; pp. 242–247.

- Ostlin, E.; Zepernick, H.J.; Suzuki, H. Macrocell path-loss prediction using artificial neural networks. IEEE Trans. Veh. Technol. 2010, 59, 2735–2747.

- Popoola, S.I.; Adetiba, E.; Atayero, A.A.; Faruk, N.; Yuen, C.; Calafate, C.T. Optimal model for path loss predictions using feed-forward neural networks. Cogent Eng. 2018, 5, 1444345.

- Ojo, S.; Sari, A.; Ojo, T.P. Path loss modeling: A machine learning based approach using support vector regression and radial basis function models. Open J. Appl. Sci. 2022, 12, 990–1010.

- Singhal, P.; Yadav, A. Congestion detection in wireless sensor network using neural network. In Proceedings of the International Conference for Convergence for Technology-2014, Pune, India, 6–8 April 2014; pp. 1–4.

- Madalgi, J.B.; Kumar, S.A. Development of wireless sensor network congestion detection classifier using support vector machine. In Proceedings of the International Conference on Computational Systems and Information Technology for Sustainable Solutions (CSITSS), Bengaluru, India, 20–22 December 2018; pp. 187–192.

- Beverly, R.; Sollins, K.; Berger, A. SVM learning of IP address structure for latency prediction. In Proceedings of the SIGCOMM Workshop on Mining Network Data, Pisa, Italy, 15 September 2006; pp. 299–304.

- Mohammed, S.A.; Shirmohammadi, S.; Alchalabi, A.E. Network delay measurement with machine learning: From lab to real-world deployment. IEEE Instrum. Meas. Mag. 2022, 25, 25–30.

- Khangura, S.K.; Fidler, M.; Rosenhahn, B. Machine learning for measurement based bandwidth estimation. Comput. Commun. 2019, 144, 18–30.

- Madalgi, J.B.; Kumar, S.A. Congestion detection in wireless sensor networks using MLP and classification by regression. In Proceedings of the International Conference on Applied and Theoretical Computing and Communication Technology (iCATccT), Tumkur, India, 21–23 December 2017; pp. 226–231.

- Sarker, I.H.; Kayes, A.; Badsha, S.; Alqahtani, H.; Watters, P.; Ng, A. Cybersecurity data science: An overview from machine learning perspective. J. Big Data 2020, 7, 41.

| Algorithm Category | Algorithm | Application | Ref | Performance Analysis |
|---|---|---|---|---|
| Supervised | Random forest | Packet loss | [22] | The training period is brief and the estimation is accurate |
| Supervised | ANN | Throughput | [23] | High level of accuracy in forecasting end-user throughput in LTE and related 5G networks |
| Supervised | SVM | Throughput | [24] | When compared to historical methods, the accuracy rate is over three times higher |
| Supervised | DNN | Path delay | [9] | Several hyperparameters are fine-tuned to increase prediction accuracy |
| Supervised | DT | Path delay | [25] | A high prediction accuracy and the ability to be applied to large-scale systems |
| Supervised | FNN | Bandwidth | [26] | Substantial data savings |
| Supervised | RNN | Path delay | [27] | Find the algorithm with the most accurate prediction |
| Supervised | CNN | Path delay | [28] | This technique’s estimation accuracy clearly outperforms that of PathQuick3 |
| Supervised | RBF | Path loss | [21] | The error is relatively small, and the estimated path loss is close to the true value |
| Supervised | MLP NN | Path loss | [8] | The MLP neural network model combined with the RBF neural network is shown to have the best performance |
| Unsupervised | LSTM | Bandwidth | [29] | Find a more accurate prediction method in the two modes |
| Unsupervised | K-Means | Bandwidth | [29] | More storage space enhances the classification system |
| Reinforcement learning | Greedy algorithm | Bandwidth | [30] | The available bandwidth can be predicted accurately with less variability |
| Transfer learning | Experts framework | Path delay | [31] | The number of retransmitted packets decreases greatly while throughput increases |

| Ref | Application | Dataset | RF | ANN | SVM | FNN | Other |
|---|---|---|---|---|---|---|---|
| [22] | Predict packet loss | 10 DTN systems in NERSC | 97–99% | - | - | - | - |
| [23] | Predict throughput | Real dataset | 91% | 93% | 89% | - | XGBoost: 93% |
| [26] | Predict bandwidth | Manual dataset | - | - | - | 78% | - |
| [51] | Predict path delay | Commercial mobile operators | 73% | - | 66% | - | LR: 60% |
| [52] | Predict path loss | Real dataset | - | - | 85% | - | RBF: 89% |
| [53] | Congestion control | Manual dataset | - | - | 98% | - | - |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, M.; He, B.; Li, R.; Li, J.; Zhang, X. A Survey: Network Feature Measurement Based on Machine Learning. Appl. Sci. 2023, 13, 2551. https://doi.org/10.3390/app13042551
