Abstract

Rehabilitation is a vast field of research. Virtual and augmented reality are rapidly emerging technologies that can support physicians in several medical activities, e.g., diagnosis, surgical training, and rehabilitation, and can also help sports experts analyze athletes' movements and performance. In this study, we present the implementation of a hybrid system for the real-time visualization of 3D virtual models of bone segments and other anatomical components on a subject performing critical karate shots and stances. The system is composed of a low-cost markerless motion tracking device, the Microsoft Azure Kinect, which recognizes the subject's movements and the positions of the anatomical joints; an augmented reality headset, the Microsoft HoloLens 2, on which the user visualizes the 3D reconstruction of the bones and anatomical information; and a computer running an application developed with the Unity platform. The 3D reconstructed bones are overlaid on the athlete, who is tracked by the Kinect in real time, and correctly displayed on the headset. The findings suggest that this system could be a promising technology for monitoring martial arts athletes after injuries, supporting the restoration of their movements and positions so that they can return to official competitions.

1. Introduction

Innovative technologies contribute to the growth of the rehabilitation sector by providing effective and safe solutions [1,2,3,4]. The technologies involved in rehabilitation protocols are varied: examples include assistive devices and robotics [5], optoelectronic systems [6], inertial measurement units [7], and virtual and augmented reality environments [8,9,10,11]. Virtual reality (VR) and augmented reality (AR) have aroused particular interest for creating customized rehabilitation protocols and for studying body mechanics. Biomechanical outcomes, such as joint movement analysis, are useful not only for diagnosis but also for understanding the mechanism of symptom progression. Most clinical assessments of these conditions are based on an expert observing a given movement performed by the subject or manually measuring angles on clinical images [12]. Several studies have proposed innovative systems based on markerless motion tracking devices [13,14,15,16,17,18,19] and virtual or augmented reality systems [20,21] to support diagnosis and biomechanical measurements. Furthermore, a significant area of rehabilitation in which these cutting-edge technologies are applied is postural analysis, since posture is a fundamental factor in personal well-being. Various pathologies or bad habits can affect posture and lead to deformation of the bone structure, which in turn may cause malfunctioning of the musculoskeletal, respiratory, and nervous systems. The study of posture is based on measurements of anatomical angles and of the alignment of bones and joints [22,23]. Many studies recommend practicing sports, such as martial arts, to correct postural problems; in martial arts, posture and balance are crucial to performing exercises correctly [24,25].
For example, in karate, repeating simple positions, such as Juntzuki (lunge punch) or the Zenkutsu Dachi stance (forward leaning stance), is fundamental to learning the basics. Positions are also evaluated in competition, both in kata (i.e., a set of shots and stances combined to perform an imaginary fight) and in kumite (i.e., fights with other athletes) [26], as well as in the exams required to advance to the next belt level. Performing the positions correctly requires coordination and whole-body balance control, perception of the surrounding space, and knowledge of the anatomical angles to be achieved [22,25,26,27]. In these disciplines, experienced instructors guide their students on how to perform each movement and maintain the correct posture. Nevertheless, studies have highlighted the contribution that technological systems could make in supporting instructors and students who want to improve and correct their mistakes [28,29].

The present study proposes a system for the real-time visualization of the bones and other anatomical components of a subject performing critical karate shots and stances. The system comprises a low-cost motion tracking device that recognizes the subject's movements and the positions of the anatomical joints, and an AR headset through which physicians can observe bones and anatomical information faithfully overlaid on the subject in real time. By taking advantage of these technologies, this hybrid system could contribute to monitoring martial arts athletes after injuries, supporting the restoration of their movements and positions so that they can return to official competitions.

2. Related Works

In the medical field, VR and mixed reality (MR) are mainly applied in surgical planning and training. Several reviews [1,2,3,4] highlight current applications of VR and MR in surgical training for orthopedic procedures. According to these reviews, numerous randomized clinical trials (RCTs) demonstrate the effectiveness of virtual techniques in teaching orthopedic surgical skills. In this framework, pilot studies [11,30] and clinical trials [31] evaluate whether VR or MR improves learning effectiveness for orthopedic surgical trainees compared with traditional preparatory methods. Innovative surgical simulators are presented in [8,9,10,32], proposing new approaches to surgical navigation, training, and patient-specific modeling.

The suitability of the HoloLens for such applications is highlighted in the studies mentioned above [4,8,9,10,11,20,32]. For instance, ref. [33] specifically analyzes the use of the HoloLens 2 (HL2) in orthopedic surgery and compares it with the previous version, the HoloLens 1. Moreover, several studies [33,34] evaluate and quantify the errors made by the device in positioning the virtual object and overlapping it with its real counterpart. The results of [33] show that the newer model improved AR projection accuracy by almost 25 percent, while both HoloLens versions yielded a root mean square error (RMSE) below 3 mm. In addition, El-Hariri and colleagues [34] evaluate possible new orthopedic surgical guidelines.

Moreover, the authors of [6,20,21] propose applications of AR, VR, or MR to pose or posture evaluation and correction. In these studies, tracking algorithms and systems, such as OpenPose and Vuforia, are used to recognize the position of the subject and to identify the posture accordingly. The aim is to provide support in sports and physiotherapy and in the diagnosis of orthopedic diseases.

Hämäläinen [35] was the first to introduce martial arts into an AR game, in which the player has to fight virtual enemies. In [29], Wu et al. built an AR martial arts system using deep learning for real-time human pose forecasting. An external RGB camera was used to capture the motion of the trainer, while the student wore a VR head-mounted display (HMD) and could see the results directly on the screen. Moreover, Shen et al. [36] focused on the construction and visualization of a posture-based graph centered on the standard postures for launching and ending actions. They propose two numerical indices, the Connectivity Index and the Action Strategy Index, to measure the skill level and the strengths and weaknesses of boxers.

In a physiotherapeutic application, Debarba and co-workers [6] developed an AR tool that accurately overlaps anatomical structures on a moving subject on the HoloLens device, using the external VICON tracking system; theirs is the first real-time bone mapping system for a moving subject. VICON is the gold standard among tracking and gait analysis systems, but realistically it is not usable in everyday clinical practice. A markerless, dynamic system such as the Microsoft Kinect, although less accurate, can be considered a viable technology to introduce into clinics and hospitals [37,38,39,40,41], provided that certain precautions are taken, such as designing a suitable joint model to correct device errors.

The latest version of the Microsoft Kinect, the Azure Kinect, has been validated by several studies [42,43,44]. In [42], the Azure Kinect showed significantly higher accuracy in spatial gait parameters than the previous version. The results of [43] confirm the officially stated values for depth noise and distance error, i.e., a standard deviation ≤ 17 mm and a distance error < 11 mm + 0.1% of the distance without multi-path interference. However, this study recommends warming up the device for 40 min before acquisition to obtain stable results. In [44], Antico and colleagues calculated an RMSE of 0.47 between the markerless tracking system and VICON, averaged over all joints. In contrast, the angular mean absolute error ranges from 5 to 15 degrees for all the upper joints [44].
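To make the stated systematic-error bound of 11 mm + 0.1% of the distance concrete at the 1.5–2 m working range used later in this study, it can be evaluated directly. This minimal sketch only applies that formula; the function name is hypothetical, and it ignores multi-path interference and the separate ≤ 17 mm random noise term.

```python
def kinect_systematic_error_bound_mm(distance_mm: float) -> float:
    """Upper bound on systematic depth error: 11 mm + 0.1% of the distance."""
    return 11.0 + 0.001 * distance_mm


# Evaluate the bound at the two ends of the assumed working range.
for d in (1500.0, 2000.0):
    print(f"{d / 1000:.1f} m -> < {kinect_systematic_error_bound_mm(d):.1f} mm")
```

At 1.5 m and 2.0 m this prints bounds of 12.5 mm and 13.0 mm, so within this range the distance-dependent term adds only 1.5–2 mm to the fixed 11 mm.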

The Azure Kinect and its previous versions have been applied in various medical contexts. For instance, [15,16] applied the Kinect to the evaluation of patients with hip disorders, finding trunk and pelvis inclination angles similar to those obtained with the VICON system. Ref. [17] presents a tool for estimating forearm and wrist range of motion, whose results were obtained through a reliability test performed on a group of healthy subjects. In [18], the Global Gait Asymmetry (GGA) index after knee joint surgery is evaluated using a set of Kinects. In [19], the device was used to monitor dynamic knee valgus; compared with OptiTrack, the mean absolute difference was 1.3 ± 0.7 cm for the pelvis and 0.7 ± 0.3 cm for the knee in medial-lateral movement. Finally, the Azure Kinect has also been used in a telemedicine system to convey the knowledge and skills of doctors remotely [45].

Many studies evaluating AR-based applications highlight challenges that still need to be addressed. Ref. [46] summarizes and reviews the technical challenges of AR and MR: tracking, rendering, processing speed, and ergonomics. The new Microsoft MR headset still needs hardware improvements to overcome these issues and to allow the real-time use of MR in daily-life applications. Indeed, ref. [47] outlines the limitations of AR in sports and training. The most significant challenge is tracking accuracy, which depends on the speed of motion, distance, noise, and hardware performance, followed by the limited field of view of the headset. The Kinect can be considered the pioneer among markerless tracking systems [47]; however, its skeleton tracking and motion reconstruction still need to be monitored and filtered. Ref. [48] reports factors that can affect Kinect performance, such as the absence of visible changes in the silhouette and the unstable estimation of hand and foot joints. Newer Kinect versions have mitigated some of these issues; however, further improvements or algorithmic corrections may still be needed.
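A common way to filter noisy skeleton output is temporal smoothing of each joint trajectory. The sketch below uses a simple exponential moving average. It is a generic technique chosen for illustration, not the filter used in the cited studies or in this work, and the smoothing factor `alpha` is an assumed parameter.

```python
import numpy as np


def smooth_joint_track(positions: np.ndarray, alpha: float = 0.5) -> np.ndarray:
    """Exponential moving average of one joint trajectory.

    positions: array of shape (n_frames, 3) with x, y, z per frame.
    alpha: weight of the newest sample in (0, 1]; smaller values smooth more
           but also add lag behind the true movement.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = np.array(positions, dtype=float)
    # Each frame blends the new measurement with the previous smoothed value.
    for t in range(1, len(smoothed)):
        smoothed[t] = alpha * smoothed[t] + (1.0 - alpha) * smoothed[t - 1]
    return smoothed
```

The trade-off is relevant here: stronger smoothing reduces joint jitter but increases the delay between the athlete's movement and the displayed skeleton.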

3. Materials and Methods

3.1. Materials

The device selected for the MR application in this study is the HL2 HMD, the second generation of Microsoft's HoloLens.

This device is a stand-alone holographic computer with a pair of see-through lenses (also called waveguides), 2k 3:2 light engines, and a holographic density greater than 2.5k light points per radian. The waveguides are flat optical elements into which light is projected by a dedicated projector for each lens; the light bounces between the internal surfaces of the display and is directed to the user's pupils, so that the holograms appear directly in front of the user's eyes. The HL2 is also equipped with IR cameras for eye tracking, RGB cameras, a depth camera, and an IMU that includes an accelerometer, a gyroscope, and a magnetometer. The device has a resolution of 2048 × 1080 per eye and a field of view (FOV) of 52 degrees (information from the official Microsoft website).

A high-performance computer with technical specifications suitable for MR development was used to implement the application and to manage its deployment to the device.

The Azure Kinect is the latest version of the camera system developed by Microsoft. It includes an RGB (red, green, and blue) camera, a depth camera, IR emitters, and an LSM6DSMUS IMU (gyroscope and accelerometer) whose sensors are sampled simultaneously at 1.6 kHz, with samples reported to the host at 208 Hz. Using the depth data together with the Azure Kinect Body Tracking SDK, the device can track the entire body in real time and estimate the 3D coordinates of every major joint of the human body without requiring markers or other supplemental equipment (information available from the official website).

The 3D models of the anatomical districts were built from DICOM computed tomography (CT) scans obtained from several free, open-access databases, in particular:

- Tibia and Fibula scans are from a subject in the National Cancer Institute’s Clinical Proteomic Tumor Analysis Consortium Sarcomas (CPTAC-SAR) cohort;

- Femur scans are from The Cancer Imaging Archive (TCIA);

- Humerus scans are from the image datasets of the Laboratory of Human Anatomy and Embryology, University of Brussels (ULB), Belgium.

Humerus scans were acquired at 120 kVp with an exposure of 200 mAs and an X-ray tube current of 200 mA. Ulna and radius scans were acquired at 130 kVp with an exposure time of 1000 ms, an X-ray tube current of 70 mA, and a generator power of 10 kW. Femur scans were acquired at 80 kVp with an X-ray tube current of 20 mA and a generator power of 1.6 kW.
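A quick plausibility check on the generator powers is possible because the peak electrical power of an X-ray tube is approximately the product of tube voltage and tube current (kV × mA = W). This sketch assumes that simple relation and ignores duty cycle and generator efficiency.

```python
def tube_power_kw(kvp: float, ma: float) -> float:
    """Approximate peak tube power in kW: kV x mA gives W, so divide by 1000."""
    return kvp * ma / 1000.0


# Acquisition parameters (kVp, mA) taken from the text above.
scans = {"ulna/radius": (130.0, 70.0), "femur": (80.0, 20.0)}
for name, (kvp, ma) in scans.items():
    print(f"{name}: {tube_power_kw(kvp, ma):.1f} kW")
```

This gives about 9.1 kW for the ulna/radius protocol, consistent with a 10 kW generator rating, and about 1.6 kW for the femur protocol (80 kVp × 20 mA).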

Table 1 reports further key information about the CT scans, grouped by anatomical region.

Table 1.

Technical features of the CT scans of the segmented bones.

3D Slicer (version 4.11.20210226), a free, open-source software platform for clinical and biomedical research, was used to build the 3D models of the bones by segmenting the CT scans. The resulting 3D models were then used in the MR application.

Blender, free and open-source software for 3D modeling and manipulation, was used to convert the models into a format readable by Unity 3D.

Unity 3D (version 2020.3.30f1), a game engine, was combined with the Mixed Reality Toolkit package (MRTK, version 2.7.0) to develop the MR application. The MRTK provides a set of core components that, once added to a Unity project, implement MR behavior.

Visual Studio 2019, an integrated development environment (IDE) available in a free Community edition, was used to write the C# scripts.

3.2. Method

3.2.1. 3D Models Reconstruction

To build the 3D models of the bones, the corresponding CT scans were imported into 3D Slicer. Segmentation was performed manually using the Threshold and Smoothing effects of the Segment Editor. All segmented objects were exported as OBJ files, a 3D format that Unity can read.
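The thresholding step can be illustrated on a CT volume expressed in Hounsfield units (HU). This numpy sketch is not 3D Slicer's implementation: it keeps voxels above an assumed bone threshold and then applies a crude 3×3×3 majority vote as a stand-in for Slicer's Smoothing effect. The 300 HU threshold and the neighbourhood size are illustrative assumptions.

```python
import numpy as np


def threshold_bone(volume_hu: np.ndarray, low_hu: float = 300.0) -> np.ndarray:
    """Binary bone mask: True where the voxel intensity is at least low_hu."""
    return volume_hu >= low_hu


def majority_smooth(mask: np.ndarray) -> np.ndarray:
    """Keep a voxel only if most of its 3x3x3 neighbourhood (27 voxels) is set."""
    padded = np.pad(mask.astype(np.int32), 1)  # zero padding at the borders
    counts = np.zeros(mask.shape, dtype=np.int32)
    nz, ny, nx = mask.shape
    for dz in range(3):
        for dy in range(3):
            for dx in range(3):
                counts += padded[dz:dz + nz, dy:dy + ny, dx:dx + nx]
    return counts >= 14  # 14 of 27 voxels is a strict majority
```

Isolated bright voxels (noise) are removed by the majority vote, while the interior of a solid bone region is preserved; sharp corners are rounded off, which is the intended smoothing effect.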

After segmentation, Blender was used to modify the reference frame of each 3D model and to scale it. These steps are required to obtain models consistent with real anatomical dimensions and to allow correct manipulation of the objects in the Unity application.
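The reference-frame and scaling adjustments can be sketched numerically on a mesh's vertex array. In this hypothetical example, the model is translated so that its bounding-box centre lies at the origin and is converted from millimetres (typical of CT data) to metres (Unity's unit). Both the choice of origin and the unit factor are assumptions, not the authors' exact Blender settings.

```python
import numpy as np


def normalize_mesh(vertices_mm: np.ndarray, mm_to_m: float = 0.001) -> np.ndarray:
    """Center vertices on their bounding-box center and convert mm to m.

    vertices_mm: array of shape (n_vertices, 3) in millimetres.
    """
    lo = vertices_mm.min(axis=0)
    hi = vertices_mm.max(axis=0)
    center = (lo + hi) / 2.0
    # Translate first (new local origin), then change the unit of length.
    return (vertices_mm - center) * mm_to_m
```

Placing the origin at a well-defined point of each bone makes it easier to attach the model to a tracked joint later; a joint-centred origin (for example, at the proximal end) would be an equally valid choice.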

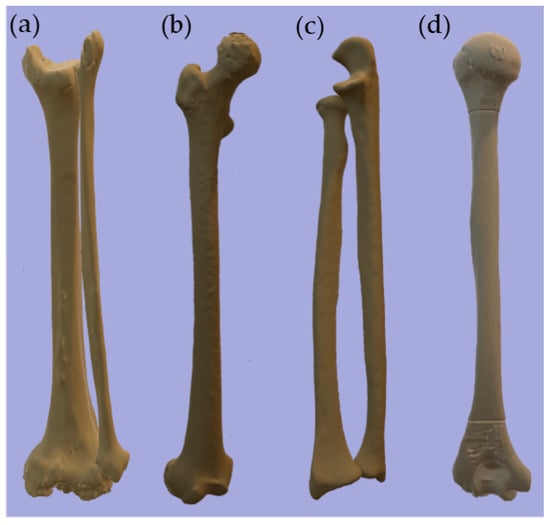

The final 3D models (Figure 1) were exported as OBJ files.

Figure 1.

3D models of bones. From the left: (a) Tibia and Fibula, (b) Femur, (c) Ulna and Radius, (d) Humerus.
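For reference, OBJ is a plain-text format: each `v` line lists a vertex and each `f` line lists a face by 1-based vertex indices. The minimal writer below is a hypothetical helper, not part of 3D Slicer or Blender; it only shows what an exported triangle mesh file contains.

```python
import numpy as np


def to_obj(vertices: np.ndarray, faces: np.ndarray) -> str:
    """Serialize a triangle mesh to Wavefront OBJ text.

    vertices: (n, 3) float array; faces: (m, 3) int array of 0-based indices.
    """
    lines = [f"v {x:.6f} {y:.6f} {z:.6f}" for x, y, z in vertices]
    # OBJ face indices are 1-based, so shift the 0-based numpy indices.
    lines += [f"f {a + 1} {b + 1} {c + 1}" for a, b, c in faces]
    return "\n".join(lines) + "\n"


print(to_obj(np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]),
             np.array([[0, 1, 2]])))
```

Real exports usually also contain normals (`vn`) and texture coordinates (`vt`), which this sketch omits.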

3.2.2. Mixed Reality Behaviour and Unity Editor Settings

When the 3D models were imported into Unity, the conversion of measurement units had to be disabled to preserve the correct real-world proportions of the 3D objects.

To implement skeleton tracking with the Azure Kinect and to run the application on the HL2, MR behavior had to be added to the project by importing the MRTK.

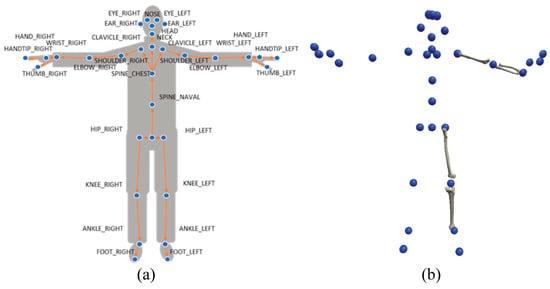

A skeleton was implemented to map all the bones and joints of the human body that the Azure Kinect can track (Figure 2a). In the first version, the bones were represented as red cylinders and the joints as grey spheres. Each cylinder corresponding to an acquired anatomical part was then replaced with the corresponding segmented 3D object.

Figure 2.

(a) Map of the joints tracked by the Azure Kinect; (b) Map of the 3D joint in Unity.
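The joint map in Figure 2a corresponds to the 32 joints exposed by the Azure Kinect Body Tracking SDK (`k4abt_joint_id_t`). The sketch below lists the limb joints relevant to the segmented bones, using the SDK's joint indices, and pairs each 3D bone model with the two joints that delimit it. The dictionary and its keys are hypothetical and only illustrate the kind of lookup a mapping script might perform; they are not the authors' C# code.

```python
from enum import IntEnum


class Joint(IntEnum):
    """Subset of Azure Kinect Body Tracking joint indices (k4abt_joint_id_t)."""
    PELVIS = 0
    SHOULDER_LEFT = 5
    ELBOW_LEFT = 6
    WRIST_LEFT = 7
    SHOULDER_RIGHT = 12
    ELBOW_RIGHT = 13
    WRIST_RIGHT = 14
    HIP_LEFT = 18
    KNEE_LEFT = 19
    ANKLE_LEFT = 20
    FOOT_LEFT = 21
    HIP_RIGHT = 22
    KNEE_RIGHT = 23
    ANKLE_RIGHT = 24
    FOOT_RIGHT = 25


NUM_KINECT_JOINTS = 32  # joints reported per tracked body by the SDK

# Hypothetical mapping: each segmented bone model spans a proximal and a distal joint.
BONE_SEGMENTS = {
    "humerus_left": (Joint.SHOULDER_LEFT, Joint.ELBOW_LEFT),
    "ulna_radius_left": (Joint.ELBOW_LEFT, Joint.WRIST_LEFT),
    "humerus_right": (Joint.SHOULDER_RIGHT, Joint.ELBOW_RIGHT),
    "ulna_radius_right": (Joint.ELBOW_RIGHT, Joint.WRIST_RIGHT),
    "femur_left": (Joint.HIP_LEFT, Joint.KNEE_LEFT),
    "tibia_fibula_left": (Joint.KNEE_LEFT, Joint.ANKLE_LEFT),
    "femur_right": (Joint.HIP_RIGHT, Joint.KNEE_RIGHT),
    "tibia_fibula_right": (Joint.KNEE_RIGHT, Joint.ANKLE_RIGHT),
}

for bone, (proximal, distal) in BONE_SEGMENTS.items():
    print(f"{bone}: {proximal.name} -> {distal.name}")
```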

MRTK provides the components needed to track both of the user's hands. In addition, a custom prefab (a reusable 3D object) was built to reproduce the movement of all body parts in real time, and a C# script assigns each 3D object to the corresponding joint mapped by the Kinect (Figure 2b).
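Placing a bone model between two tracked joints reduces to computing a position and an orientation. The sketch below uses the midpoint of the two joints as the position and builds the quaternion that rotates the model's long axis onto the joint-to-joint direction, similar in spirit to Unity's `Quaternion.FromToRotation`. Treating the local +Y axis as the bone's long axis, and the function names, are assumptions.

```python
import numpy as np


def bone_pose(p_proximal, p_distal, model_axis=(0.0, 1.0, 0.0)):
    """Return (midpoint, unit quaternion [w, x, y, z]) aligning model_axis with the bone."""
    p0 = np.asarray(p_proximal, dtype=float)
    p1 = np.asarray(p_distal, dtype=float)
    a = np.asarray(model_axis, dtype=float)
    a = a / np.linalg.norm(a)
    b = (p1 - p0) / np.linalg.norm(p1 - p0)
    midpoint = (p0 + p1) / 2.0
    dot = float(np.dot(a, b))
    if dot < -1.0 + 1e-9:
        # Opposite directions: rotate 180 degrees about any axis perpendicular to a.
        perp = np.cross(a, [1.0, 0.0, 0.0])
        if np.linalg.norm(perp) < 1e-9:
            perp = np.cross(a, [0.0, 1.0, 0.0])
        perp = perp / np.linalg.norm(perp)
        return midpoint, np.array([0.0, *perp])
    # Half-angle construction: q = [1 + a.b, a x b], then normalize.
    q = np.array([1.0 + dot, *np.cross(a, b)])
    return midpoint, q / np.linalg.norm(q)


def rotate(q, v):
    """Rotate vector v by unit quaternion q = [w, x, y, z]."""
    w, u = q[0], np.asarray(q[1:], dtype=float)
    v = np.asarray(v, dtype=float)
    return v + 2.0 * w * np.cross(u, v) + 2.0 * np.cross(u, np.cross(u, v))
```

For example, a forearm running along +X from an elbow at the origin yields a quaternion that maps the model's +Y axis onto +X; the rotation about the bone's own long axis is left unconstrained, which is one reason a full joint model is needed for anatomically correct twisting.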

The Unity script scales the 3D bones according to the distance between the centers of the joints mapped by the Kinect. For instance, the algorithm uses the distance between the shoulder and the elbow to scale the humerus appropriately and obtain an adequate holographic overlay on the subject. In addition, the proposed 3D bone models can be replaced with 3D reconstructions of the user's own anatomical segments from their DICOM images, in which case no scaling is necessary.
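The scaling rule described above amounts to dividing the measured joint-to-joint distance by the length of the bone model along its long axis. A minimal sketch, assuming the model length is measured between the landmarks that the Kinect joint centres approximate (in practice, joint centres and bone ends do not coincide exactly):

```python
import numpy as np


def bone_scale_factor(p_proximal, p_distal, model_length_m: float) -> float:
    """Uniform scale that stretches a bone model to the tracked joint distance."""
    if model_length_m <= 0:
        raise ValueError("model_length_m must be positive")
    tracked_length = float(np.linalg.norm(np.subtract(p_distal, p_proximal)))
    return tracked_length / model_length_m


# Hypothetical shoulder and elbow positions (m) and a 0.32 m humerus model.
print(bone_scale_factor([0.0, 1.4, 2.0], [0.0, 1.1, 2.0], 0.32))
```

A uniform factor keeps the bone's proportions; scaling only the long axis would match length more closely but distort the bone's width.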

3.2.3. Distribution of Application

The application was run on the HL2 through the Holographic Remoting Player. Holographic Remoting Player is a companion app, installed on the HL2, that displays content rendered and streamed by the computer, so the application can be viewed on the headset without being deployed to it. In this way, the application can be modified and tested in real time.

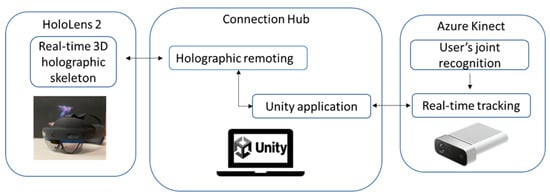

To link the HL2 and the Unity Editor, the computer and the HL2 were connected to the same network or through a USB-C cable. After pairing, the IP address of the HL2, shown on the home screen of the Holographic Remoting Player, was entered directly in the Unity Editor (Figure 3), and the user could then start the session.

Figure 3.

Block diagram of the system architecture and the functioning of each module.

Figure 3 shows the system architecture and the interactions among the hardware components.

3.2.4. Experimental Protocol

Before the application is launched, the subject must stand in front of the Azure Kinect within its operating range (1.5–2 m) so that the subject's joints can be mapped.

The user analyzing the joint movements must wear the HL2 and launch the Holographic Remoting Player to allow the application to run on the headset.

When the application is running, the Azure Kinect recognizes all body segments and the Unity application associates each anatomical part with its 3D object. The association between the bone and joint 3D objects is verified by checking that the virtual skeleton reproduces exactly the same movements as the tracked person. The real-time movement of the 3D skeleton is visualized directly on the HL2.

During the streaming session, the user can walk around the holograms to better analyze the anatomical movement.

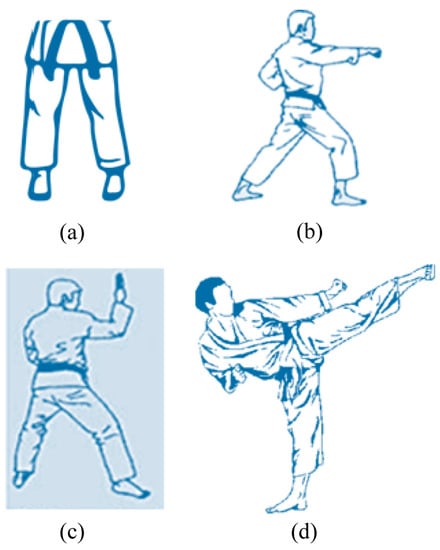

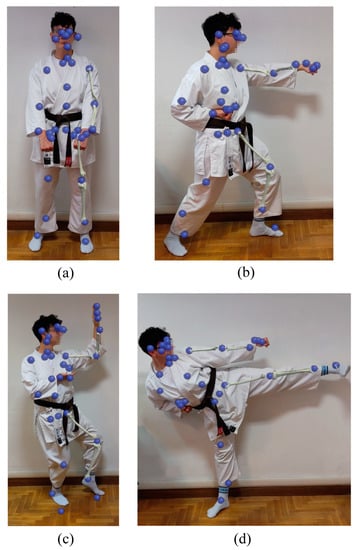

To evaluate how well the system tracks karate positions of the Wado Ryu traditional Japanese style, a 28-year-old brown-belt karateka performed a set of shots and stances used in training: Heiko Dachi (parallel stance) (Figure 4a); Juntzuki (lunge punch) on Zenkutsu Dachi (forward leaning stance) (Figure 4b); Shuto Uke (knife hand block) on Shomen Neko Aishi Dachi (front-facing cat leg stance) (Figure 4c); and Sokuto Geri (lateral kick) (Figure 4d).

Figure 4.

Examples of karate shots and stances evaluated in the study: (a) Heiko Dachi (parallel stance); (b) Juntzuki (lunge punch) on Zenkutsu Dachi (forward leaning stance); (c) Shuto Uke (knife hand block) on Shomen Neko Aishi Dachi (front-facing cat leg stance); (d) Sokuto Geri (lateral kick).

The application saved the 3D positions of the subject's joints during the movements. In this session, one crucial karate position was analyzed in detail: Shuto Uke on Shomen Neko Aishi Dachi, for which data from the left and right hips, left and right knees, left and right ankles, left and right feet, pelvis, and left elbow and wrist were recorded.

Post-processing of the data yielded the anatomical measurements needed to evaluate each position from the postural and competitive standpoints of the discipline.

4. Results

Figure 5 shows screenshots of the HL2 view of the karate stances acquired by the system.

Figure 5.

Karateka performing shots and stances, with the 3D holographic skeleton reproduced in the HL2 glasses to evaluate the system performance: (a) Heiko Dachi (parallel stance); (b) Juntzuki (lunge punch) on Zenkutsu Dachi (forward leaning stance); (c) Shuto Uke (knife hand block) on Shomen Neko Aishi Dachi (front-facing cat leg stance); (d) Sokuto Geri (lateral kick) chudan.

Table 2 reports the mean values of the three coordinates of the selected joints, recorded in real time during the Shuto Uke on Shomen Neko Aishi Dachi position.
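Per-joint means of this kind can be obtained by averaging the saved frames over time. The sketch assumes the recorded data are held as a (frames × joints × 3) array; the array layout and joint names are assumptions about the saved data, not its documented format.

```python
import numpy as np


def mean_joint_positions(frames: np.ndarray, joint_names: list[str]) -> dict[str, np.ndarray]:
    """Average x, y, z of each joint over all recorded frames.

    frames: array of shape (n_frames, n_joints, 3), joints ordered as joint_names.
    """
    if frames.ndim != 3 or frames.shape[1] != len(joint_names) or frames.shape[2] != 3:
        raise ValueError("frames must have shape (n_frames, len(joint_names), 3)")
    means = frames.mean(axis=0)  # average over the time axis
    return {name: means[i] for i, name in enumerate(joint_names)}
```

Reporting the per-joint standard deviation alongside the mean (`frames.std(axis=0)`) would also show how steadily the position was held.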

Table 2.

Mean coordinates of the joints selected to analyze the Shuto Uke on Shomen Neko Aishi Dachi position.

To evaluate the karateka's performance, the 3D joint positions were used to compute joint angles, which were then compared with the standards (Table 3). The angles evaluated for the Shuto Uke on Neko Aishi Dachi are the rotation angle between the right and left feet and the angle formed by the wrist, elbow, and shoulder.
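Both angles can be computed from joint coordinates with elementary vector geometry. In this sketch, the elbow angle is the angle at the elbow between the elbow-to-shoulder and elbow-to-wrist vectors, and the foot rotation is the angle between the two ankle-to-foot vectors projected onto the x-z plane. Treating y as the vertical axis (which holds only if the camera is level) and using ankle-to-foot as the foot direction are assumptions, since the exact definitions behind Table 3 are not given here.

```python
import numpy as np


def angle_deg(u, v) -> float:
    """Unsigned angle between two vectors, in degrees."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    # Clip guards against values slightly outside [-1, 1] from rounding.
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))))


def elbow_angle_deg(shoulder, elbow, wrist) -> float:
    """Angle at the elbow formed by the shoulder, elbow, and wrist joints."""
    return angle_deg(np.subtract(shoulder, elbow), np.subtract(wrist, elbow))


def feet_rotation_deg(ankle_l, foot_l, ankle_r, foot_r) -> float:
    """Angle between left and right foot directions projected on the x-z plane."""
    dl = np.subtract(foot_l, ankle_l)[[0, 2]]
    dr = np.subtract(foot_r, ankle_r)[[0, 2]]
    return angle_deg(dl, dr)
```

A fully extended arm gives an elbow angle close to 180 degrees, and feet pointing in perpendicular directions give a rotation of 90 degrees.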

Table 3.

Comparison of the angles computed from the system output with the karate standards for the Shuto Uke on Neko Aishi Dachi.

5. Discussion

The proposed system could support the assessment of posture after sports injuries, particularly in martial arts such as karate, where posture is fundamental to performing the sport correctly [24,25,36], and could help monitor martial arts athletes after injuries to support the restoration of their movements and positions. Superimposing 3D bone models reconstructed from medical images creates a more physiologically relevant environment, and a more meaningful and detailed visualization of body structures might help experts improve their assessments. Moreover, the 3D bone models overlaid on the subject allow observers to see how each bone segment is positioned during the athlete's performance without adding markers, which could be affected by soft tissue artifacts.

The Unity application is not yet complete, but it shows that it can be adapted to sports applications. The results indicate that the devices are an adequate starting point for this type of application: the HL2 and the Azure Kinect are valid, although less accurate, substitutes for the gold-standard systems in their categories.

The long-term purpose of this hybrid system composed of the HL2 and the Azure Kinect is to support athletes in restoring their abilities after an injury, but it still needs some improvements. To our knowledge, no MR-based systems that support athletes' recovery are currently available; the systems presented in the literature focus on improving athletes' performance. Table 4 compares the system illustrated in this paper with existing systems in the literature.

Table 4.

Comparison of the systems used for supporting martial arts athletes.

Studies proposing MR in orthopedics stop at the 3D reproduction of bones from DICOM images and at the possibility of interacting with them as inanimate objects to support experts in surgical planning [1,2,3,4]. Several studies recognize the contribution of such innovative technologies to reducing errors in surgery [8,9,10,11,30,31,32]. To our knowledge, an approach similar to the one presented in this work has not been proposed, except for [6]. It is worth noting that [6] used the Vicon system, which, although it provides the best possible accuracy in bone positioning and articulation, is not applicable in clinical practice. Conversely, this project provides a sufficiently accurate system that requires no specific expertise to set up, since no markers are needed [42,43,44]. Furthermore, the system could be considered low-cost if the HL2 were replaced by a cheaper VR headset, although AR or MR is more beneficial for this type of application because it overlays the skeleton on the subject and causes fewer side effects, such as motion sickness. The system could also be used in a remote setting where the trainer is not present. In this case, the bones cannot be overlaid on the subject, as they can during an in-person examination; instead, the video recorded by the HL2 camera could be shown to the trainer in real time together with the skeleton reconstruction.

Unfortunately, the use of remoting and real-time data saving introduces a delay between the movement of the subject and the movement reproduced by the skeleton. The movement captured by the Kinect is correct and can follow even fast movements, such as a kick or a punch, but it is reproduced in Unity with a delay of about half a second.
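One way such a delay could be quantified (not the procedure used in this study, which reports an approximate value) is to record the same joint coordinate from the Kinect stream and from the rendered skeleton and find the time shift that maximizes their cross-correlation. A minimal numpy sketch, assuming both signals have been resampled to a common, known frame rate `fps`:

```python
import numpy as np


def estimate_lag_seconds(reference: np.ndarray, delayed: np.ndarray, fps: float) -> float:
    """Lag (s) by which `delayed` trails `reference`, via full cross-correlation."""
    ref = reference - reference.mean()
    dly = delayed - delayed.mean()
    corr = np.correlate(dly, ref, mode="full")
    # In 'full' mode, output index len(ref) - 1 corresponds to zero shift.
    lag_frames = int(np.argmax(corr)) - (len(ref) - 1)
    return lag_frames / fps
```

With a 30 fps common rate, a skeleton trailing the Kinect by 15 frames would yield 0.5 s. Resampling matters because the Unity frame rate and the Kinect frame rate generally differ.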

Furthermore, the system would be most useful when the subject has a fracture of the spine or of a long bone. In these cases, the 3D reconstructions of the bones can be built directly from the subject's DICOM scans, and the behavior of the fracture can be studied during sports movements. The rate of fractures and dislocations among karatekas should not be underestimated [49,50]; in many cases, these injuries are due to incorrect execution of the basic positions and stances assumed during a kick or a punch [51]. Adequate rotation of the foot, knee, and hips during a kick is essential to give the blow more force and efficacy and to absorb the reaction force on impact correctly, without damaging joints and bones. Punches can also be affected by incorrect joint positions. To perform a punch well, the wrist should be straight and parallel to the floor, the fingers should be correctly closed, and the force of the punch is associated with the correct rotation of the hips during the stance. When performed correctly, the punch does not have a negative impact on the shoulder.

Anatomical fidelity is important for this type of application, so caution and improvements are required. As documented in other studies [42,43,44], Kinect-based systems can track joints poorly when a body part is not visible to the camera, during unusual poses, or during interactions with objects. It is worth pointing out that, in this work, the subject was positioned facing the Azure Kinect camera and did not interact with any object; complex poses are beyond the scope of this preliminary study. Future implementations could correct or minimize the 3D reconstruction errors of the devices.

First, to achieve an effective and immediate overlap of the 3D anatomical components on the patient through the HoloLens viewer, the Kinect must be positioned as close as possible to the HoloLens camera, so that the position detected by the Kinect can be used to place the 3D reconstruction correctly in MR. In addition, other application components can be implemented depending on the intended use, such as the viewing of medical images or remote sharing. Finally, the Kinect determines the positions of the body joints with a certain margin of error. If the subject is stationary with arms outstretched, the joints are correctly recognized and the skeletal overlap is coherent. When the subject bends the elbow, bringing the hand towards the shoulder, the joint remains in the correct position; however, when the algorithm connects the elbow joint with the hand joint, it positions the forearm bones in an anatomically incorrect way. This behavior is explained by the 3D prefab of the skeleton, in which the bones are separate from each other and managed independently by the tracking algorithm. Therefore, algorithms that simulate the behavior of joints and bones should be integrated to obtain a more anatomically correct rendering of the positions assumed by the skeleton in every situation [52]. In this context, the system could be improved by integrating artificial intelligence and deep learning algorithms that identify the athlete's position and correct the position detected by the Azure Kinect.
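Positioning the Kinect next to the HoloLens camera is a practical shortcut. A more general alternative, not implemented in this work, is to estimate the rigid transform between the Kinect and HoloLens coordinate frames from a few corresponding 3D points (for example, the same landmarks measured in both frames). The sketch below uses the Kabsch (SVD) method; how such correspondences would be collected in practice is left as an assumption.

```python
import numpy as np


def rigid_transform(src: np.ndarray, dst: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Least-squares R, t such that dst ~= src @ R.T + t (Kabsch algorithm).

    src, dst: (n, 3) arrays of corresponding points, n >= 3, not collinear.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    h = (src - src_c).T @ (dst - dst_c)  # 3x3 cross-covariance matrix
    u, _, vt = np.linalg.svd(h)
    # Correct a possible reflection so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(vt.T @ u.T))
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = dst_c - r @ src_c
    return r, t
```

Once `r` and `t` are known, every Kinect joint position `p` can be mapped into the headset frame as `r @ p + t`, which removes the need to co-locate the two devices physically.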

This integration is even more important for patients with bone diseases or implanted prostheses that affect bone movement and joint function [53,54], for whom patient-specific simulation studies are crucial. Thus, a user-friendly application that allows in-depth, real-time analysis of a pathological joint meets a clinical need to improve the accuracy of diagnosis and surgical planning. For example, for joint-related pathologies, experts are interested in the range of motion and how it changes over time. In patients who have undergone joint replacement surgery, the prosthesis may affect posture or gait, and its effect should be examined [55]. Eventually, with a properly trained and implemented artificial intelligence algorithm, the system might also help assess how an incorrect joint replacement would affect the patient's movement [56,57].
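Tracking range of motion over time reduces to computing, for each session, the spread between the largest and smallest joint angle observed. A minimal sketch, assuming per-frame joint angles in degrees have already been computed for each session; the session labels are hypothetical.

```python
import numpy as np


def range_of_motion(angles_deg) -> float:
    """Range of motion: difference between max and min angle in one session."""
    a = np.asarray(angles_deg, dtype=float)
    if a.size == 0:
        raise ValueError("no angle samples")
    return float(a.max() - a.min())


def rom_trend(sessions: dict[str, list[float]]) -> dict[str, float]:
    """ROM per session, keeping the insertion order of the session labels."""
    return {label: range_of_motion(angles) for label, angles in sessions.items()}


print(rom_trend({"week 1": [10.0, 45.0, 60.0], "week 4": [5.0, 70.0, 95.0]}))
```

Because raw extremes are sensitive to single noisy frames, a percentile-based range (for example, 5th to 95th) may be more robust with markerless tracking data.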

6. Conclusions

The combined system of the HL2 and the Azure Kinect demonstrates the possibility of monitoring movement under certain conditions for athletes practicing martial arts such as karate. Owing to its adaptability, this system could also be used to evaluate athletes after injuries and shows potential to support sports rehabilitation. However, the system needs to be tested with professional athletes recovering from injuries who need to restore their initial condition. In the future, the proposed system might also be used to train orthopedic clinicians: orthopedics students could interact with the virtual anatomical segments and observe how bones may be affected by the progression of a pathology, such as valgus legs, an implanted prosthesis, or sciatica. It could also be used in sports halls, where trainers could provide students with innovative technologies to objectively assess and correct their posture, or to support beginners' learning. The system could also be extended to boxing or other martial arts, such as kung fu or jujutsu.

Furthermore, in official competitions and grading exams, correct posture and execution of movements are the components evaluated [25,26,27]. Karatekas must repeat the positions many times in training to reach perfection, and, with the help of this technology, their performances can be recorded on video in conjunction with real-time observation.

Author Contributions

Conceptualization, F.B.; methodology, M.F. and S.P.; software, M.F. and S.P.; validation, M.F., S.P. and A.P.; formal analysis, M.F.; investigation, M.F.; resources, F.B. and F.M.; data curation, M.F. and S.P.; writing—original draft preparation, M.F. and S.P.; writing—review and editing, F.B. and A.P.; visualization, M.F., S.P. and A.P.; supervision, F.B. and F.M.; project administration, F.B.; funding acquisition, F.B. and F.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Berthold, D.P.; Muench, L.N.; Rupp, M.C.; Siebenlist, S.; Cote, M.P.; Mazzocca, A.D.; Quindlen, K. Head-Mounted Display Virtual Reality Is Effective in Orthopaedic Training: A Systematic Review. Arthrosc. Sport. Med. Rehabil. 2022, 4, e1843–e1849.

- Clarke, E. Virtual Reality Simulation—The Future of Orthopaedic Training? A Systematic Review and Narrative Analysis. Adv. Simul. 2021, 6, 2.

- Hasan, L.K.; Haratian, A.; Kim, M.; Bolia, I.K.; Weber, A.E.; Petrigliano, F.A. Virtual Reality in Orthopedic Surgery Training. Adv. Med. Educ. Pract. 2021, 12, 1295–1301.

- Barcali, E.; Iadanza, E.; Manetti, L.; Francia, P.; Nardi, C.; Bocchi, L. Augmented Reality in Surgery: A Scoping Review. Appl. Sci. 2022, 12, 6890.

- Son, S.; Lim, K.B.; Kim, J.; Lee, C.; Cho, S.I.I.; Yoo, J. Comparing the Effects of Exoskeletal-Type Robot-Assisted Gait Training on Patients with Ataxic or Hemiplegic Stroke. Brain Sci. 2022, 12, 1261.

- Debarba, H.G.; De Oliveira, M.E.; Ladermann, A.; Chague, S.; Charbonnier, C. Augmented Reality Visualization of Joint Movements for Rehabilitation and Sports Medicine. In Proceedings of the 2018 20th Symposium on Virtual and Augmented Reality (SVR), Foz do Iguacu, Brazil, 28–30 October 2018; pp. 114–121.

- Bertoli, M.; Cereatti, A.; Croce, U.D.; Pica, A.; Bini, F. Can MIMUs Positioned on the Ankles Provide a Reliable Detection and Characterization of U-Turns in Gait? In Proceedings of the 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2018; pp. 1–6.

- Condino, S.; Turini, G.; Parchi, P.D.; Viglialoro, R.M.; Piolanti, N.; Gesi, M.; Ferrari, M.; Ferrari, V. How to Build a Patient-Specific Hybrid Simulator for Orthopaedic Open Surgery: Benefits and Limits of Mixed-Reality Using the Microsoft Hololens. J. Healthc. Eng. 2018, 2018, 5435097.

- Turini, G.; Condino, S.; Parchi, P.D.; Viglialoro, R.M.; Piolanti, N.; Gesi, M.; Ferrari, M.; Ferrari, V. A Microsoft HoloLens Mixed Reality Surgical Simulator for Patient-Specific Hip Arthroplasty Training. In Augmented Reality, Virtual Reality, and Computer Graphics, Proceedings of the 5th International Conference, AVR 2018, Otranto, Italy, 24–27 June 2018; De Paolis, L., Bourdot, P., Eds.; Springer: Cham, Switzerland, 2018; pp. 201–210.

- Liebmann, F.; Roner, S.; von Atzigen, M.; Wanivenhaus, F.; Neuhaus, C.; Spirig, J.; Scaramuzza, D.; Sutter, R.; Snedeker, J.; Farshad, M.; et al. Registration Made Easy—Standalone Orthopedic Navigation with HoloLens. arXiv 2020, arXiv:2001.06209.

- Cevallos, N.; Zukotynski, B.; Greig, D.; Silva, M.; Thompson, R.M. The Utility of Virtual Reality in Orthopedic Surgical Training. J. Surg. Educ. 2022, 79, 1516–1525.

- Vanicek, N.; Strike, S.; McNaughton, L.; Polman, R. Gait Patterns in Transtibial Amputee Fallers vs. Non-Fallers: Biomechanical Differences during Level Walking. Gait Posture 2009, 29, 415–420.

- Lau, I.Y.S.; Chua, T.T.; Lee, W.X.P.; Wong, C.W.; Toh, T.H.; Ting, H.Y. Kinect-Based Knee Osteoarthritis Gait Analysis System. In Proceedings of the 2020 IEEE 2nd International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 26–27 September 2020; pp. 1–6.

- Yoshimoto, K.; Shinya, M. Use of the Azure Kinect to Measure Foot Clearance during Obstacle Crossing: A Validation Study. PLoS ONE 2022, 17, e0265215.

- Lahner, M.; Mußhoff, D.; Von Schulze Pellengahr, C.; Willburger, R.; Hagen, M.; Ficklscherer, A.; Von Engelhardt, L.V.; Ackermann, O.; Lahner, N.; Vetter, G. Is the Kinect System Suitable for Evaluation of the Hip Joint Range of Motion and as a Screening Tool for Femoroacetabular Impingement (FAI)? Technol. Heal. Care 2015, 23, 75–82.

- Asaeda, M.; Kuwahara, W.; Fujita, N.; Yamasaki, T.; Adachi, N. Validity of Motion Analysis Using the Kinect System to Evaluate Single Leg Stance in Patients with Hip Disorders. Gait Posture 2018, 62, 458–462.

- Aleksandra, K.; Maj, A.; Dejnek, M.; Prill, R.; Skotowska-Machaj, A.; Kołcz, A. Wrist Motion Assessment Using Microsoft Azure Kinect DK: A Reliability Study in Healthy Individuals. Adv. Clin. Exp. Med. 2022, 32.

- Cho, H.M.; Seon, J.; Park, J.Y.; Ahn, J.; Lee, Y. Usefulness of the Kinect-V2 System for Determining the Global Gait Index to Assess Functional Recovery after Total Knee Arthroplasty. Orthop. Surg. 2022, 14, 3216–3224.

- Uhlár, Á.; Ambrus, M.; Kékesi, M.; Fodor, E.; Grand, L.; Szathmáry, G.; Rácz, K.; Lacza, Z. Kinect Azure–Based Accurate Measurement of Dynamic Valgus Position of the Knee—A Corrigible Predisposing Factor of Osteoarthritis. Appl. Sci. 2021, 11, 5536.

- Johnson, P.B.; Jackson, A.; Saki, M.; Feldman, E.; Bradley, J. Patient Posture Correction and Alignment Using Mixed Reality Visualization and the HoloLens 2. Med. Phys. 2022, 49, 15–22.

- Jan, Y.F.; Tseng, K.W.; Kao, P.Y.; Hung, Y.P. Augmented Tai-Chi Chuan Practice Tool with Pose Evaluation. In Proceedings of the 2021 IEEE 4th International Conference on Multimedia Information Processing and Retrieval (MIPR), Tokyo, Japan, 8–10 September 2021; pp. 35–41.

- Singla, D.; Veqar, Z.; Hussain, M.E. Photogrammetric Assessment of Upper Body Posture Using Postural Angles: A Literature Review. J. Chiropr. Med. 2017, 16, 131–138.

- Do Rosário, J.L.P. Photographic Analysis of Human Posture: A Literature Review. J. Bodyw. Mov. Ther. 2014, 18, 56–61.

- Byun, S.; An, C.; Kim, M.; Han, D. The Effects of an Exercise Program Consisting of Taekwondo Basic Movements on Posture Correction. J. Phys. Ther. Sci. 2014, 26, 1585–1588.

- Cherepov, E.A.; Eganov, A.V.; Bakushin, A.A.; Platunova, N.Y.; Sevostyanov, D.Y. Maintaining Postural Balance in Martial Arts Athletes Depending on Coordination Abilities. J. Phys. Educ. Sport 2021, 21, 3427–3432.

- Gauchard, G.C.; Lion, A.; Bento, L.; Perrin, P.P.; Ceyte, H. Postural Control in High-Level Kata and Kumite Karatekas. Mov. Sport. Sci.-Sci. Mot. 2017, 100, 21–26.

- Güler, M.; Ramazanoglu, N. Evaluation of Physiological Performance Parameters of Elite Karate-Kumite Athletes by the Simulated Karate Performance Test. Univers. J. Educ. Res. 2018, 6, 2238–2243.

- Petri, K.; Emmermacher, P.; Danneberg, M.; Masik, S.; Eckardt, F.; Weichelt, S.; Bandow, N.; Witte, K. Training Using Virtual Reality Improves Response Behavior in Karate Kumite. Sport. Eng. 2019, 22, 2.

- Wu, E.; Koike, H. FuturePose—Mixed Reality Martial Arts Training Using Real-Time 3D Human Pose Forecasting with a RGB Camera. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019; pp. 1384–1392.

- Lu, L.; Wang, H.; Liu, P.; Liu, R.; Zhang, J.; Xie, Y.; Liu, S.; Huo, T.; Xie, M.; Wu, X.; et al. Applications of Mixed Reality Technology in Orthopedics Surgery: A Pilot Study. Front. Bioeng. Biotechnol. 2022, 10, 740507.

- Lohre, R.; Bois, A.J.; Pollock, J.W.; Lapner, P.; McIlquham, K.; Athwal, G.S.; Goel, D.P. Effectiveness of Immersive Virtual Reality on Orthopedic Surgical Skills and Knowledge Acquisition among Senior Surgical Residents: A Randomized Clinical Trial. JAMA Netw. Open 2020, 3, e2031217.

- Gregory, T.M.; Gregory, J.; Sledge, J.; Allard, R.; Mir, O. Surgery Guided by Mixed Reality: Presentation of a Proof of Concept. Acta Orthop. 2018, 89, 480–483.

- Pose-Díez-De-la-lastra, A.; Moreta-Martinez, R.; García-Sevilla, M.; García-Mato, D.; Calvo-Haro, J.A.; Mediavilla-Santos, L.; Pérez-Mañanes, R.; von Haxthausen, F.; Pascau, J. HoloLens 1 vs. HoloLens 2: Improvements in the New Model for Orthopedic Oncological Interventions. Sensors 2022, 22, 4915.

- El-Hariri, H.; Pandey, P.; Hodgson, A.J.; Garbi, R. Augmented Reality Visualisation for Orthopaedic Surgical Guidance with Pre- and Intra-Operative Multimodal Image Data Fusion. Healthc. Technol. Lett. 2018, 5, 189–193.

- Hämäläinen, P.; Ilmonen, T.; Höysniemi, J.; Lindholm, M.; Nykänen, A. Martial Arts in Artificial Reality. In Proceedings of the CHI05: CHI 2005 Conference on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; pp. 781–790.

- Shen, Y.; Wang, H.; Ho, E.S.L.; Yang, L.; Shum, H.P.H. Posture-Based and Action-Based Graphs for Boxing Skill Visualization. Comput. Graph. 2017, 69, 104–115.

- Franzo’, M.; Pascucci, S.; Serrao, M.; Marinozzi, F.; Bini, F. Kinect-Based Wearable Prototype System for Ataxic Patients Neurorehabilitation: Software Update for Exergaming and Rehabilitation. In Proceedings of the 2021 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Lausanne, Switzerland, 23–25 June 2021.

- Franzo’, M.; Pascucci, S.; Serrao, M.; Marinozzi, F.; Bini, F. Kinect-Based Wearable Prototype System for Ataxic Patients Neurorehabilitation: Control Group Preliminary Results. In Proceedings of the 2020 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Bari, Italy, 1–3 June 2020.

- Franzo’, M.; Pascucci, S.; Serrao, M.; Marinozzi, F.; Bini, F. Exergaming in Mixed Reality for the Rehabilitation of Ataxic Patients. In Proceedings of the 2022 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Messina, Italy, 22–24 June 2022.

- Yeung, L.F.; Cheng, K.C.; Fong, C.H.; Lee, W.C.C.; Tong, K.Y. Evaluation of the Microsoft Kinect as a Clinical Assessment Tool of Body Sway. Gait Posture 2014, 40, 532–538.

- Otte, K.; Kayser, B.; Mansow-Model, S.; Verrel, J.; Paul, F.; Brandt, A.U.; Schmitz-Hübsch, T. Accuracy and Reliability of the Kinect Version 2 for Clinical Measurement of Motor Function. PLoS ONE 2016, 11, e0166532.

- Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the Pose Tracking Performance of the Azure Kinect and Kinect v2 for Gait Analysis in Comparison with a Gold Standard: A Pilot Study. Sensors 2020, 20, 5104.

- Tölgyessy, M.; Dekan, M.; Chovanec, L. Skeleton Tracking Accuracy and Precision Evaluation of Kinect V1, Kinect V2, and the Azure Kinect. Appl. Sci. 2021, 11, 5756.

- Antico, M.; Balletti, N.; Laudato, G.; Lazich, A.; Notarantonio, M.; Oliveto, R.; Ricciardi, S.; Scalabrino, S.; Simeone, J. Postural Control Assessment via Microsoft Azure Kinect DK: An Evaluation Study. Comput. Methods Programs Biomed. 2021, 209, 106324.

- Bailey, J.L.; Jensen, B.K. Telementoring: Using the Kinect and Microsoft Azure to Save Lives. Int. J. Electron. Financ. 2013, 7, 33–47.

- Eswaran, M.; Raju Bahubalendruni, M.V.A. Challenges and opportunities on AR/VR technologies for manufacturing systems in the context of industry 4.0: A state of the art review. J. Manuf. Syst. 2022, 65, 260–278.

- Soltani, P.; Morice, A.H.P. Augmented reality tools for sports education and training. Comput. Educ. 2020, 155, 103923.

- Da Gama, A.E.F.; de Menezes Chaves, T.; Fallavollita, P.; Figueiredo, L.S.; Teichrieb, V. Rehabilitation motion recognition based on the international biomechanical standards. Expert Syst. Appl. 2019, 116, 396–409.

- McLatchie, G. Karate and Karate Injuries. Br. J. Sports Med. 1981, 15, 84–86.

- Critchley, G.R.; Mannion, S.; Meredith, C. Injury Rates in Shotokan Karate. Br. J. Sports Med. 1999, 33, 174–177.

- Ambroży, A.T.; Dariusz Mucha, A.; Czarnecki, W.; Ambroży, D.; Janusz, M.; Piwowarski, A.J.; Mucha, T. Most Common Injuries to Professional Contestant Karate. Secur. Dimens. Int. Natl. Stud. 2015, 2015, 142–164.

- Rinaldi, M.; Nasr, Y.; Atef, G.; Bini, F.; Varrecchia, T.; Conte, C.; Chini, G.; Ranavolo, A.; Draicchio, F.; Pierelli, F.; et al. Biomechanical characterization of the Junzuki karate punch: Indexes of performance. Eur. J. Sport Sci. 2018, 18, 796–805.

- Bini, F.; Pica, A.; Marinozzi, A.; Marinozzi, F. Prediction of Stress and Strain Patterns from Load Rearrangement in Human Osteoarthritic Femur Head: Finite Element Study with the Integration of Muscular Forces and Friction Contact. In New Developments on Computational Methods and Imaging in Biomechanics and Biomedical Engineering. Lecture Notes in Computational Vision and Biomechanics; Tavares, J., Fernandes, P., Eds.; Springer: Cham, Switzerland, 2019; Volume 33, pp. 49–64.

- Araneo, R.; Bini, F.; Rinaldi, A.; Notargiacomo, A.; Pea, M.; Celozzi, S. Thermal-Electric Model for Piezoelectric ZnO Nanowires. Nanotechnology 2015, 26, 265402.

- Lu, T.W.; Chang, C.F. Biomechanics of Human Movement and Its Clinical Applications. Kaohsiung J. Med. Sci. 2012, 28, S13–S25.

- Albuquerque, P.; Verlekar, T.T.; Correia, P.L.; Soares, L.D. A Spatiotemporal Deep Learning Approach for Automatic Pathological Gait Classification. Sensors 2021, 21, 6202.

- Halilaj, E.; Rajagopal, A.; Fiterau, M.; Hicks, J.L.; Hastie, T.J.; Delp, S.L. Machine Learning in Human Movement Biomechanics: Best Practices, Common Pitfalls, and New Opportunities. J. Biomech. 2018, 81, 1–11.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).