Efficient Reachable Workspace Division under Concurrent Task for Human-Robot Collaboration Systems

Abstract

1. Introduction

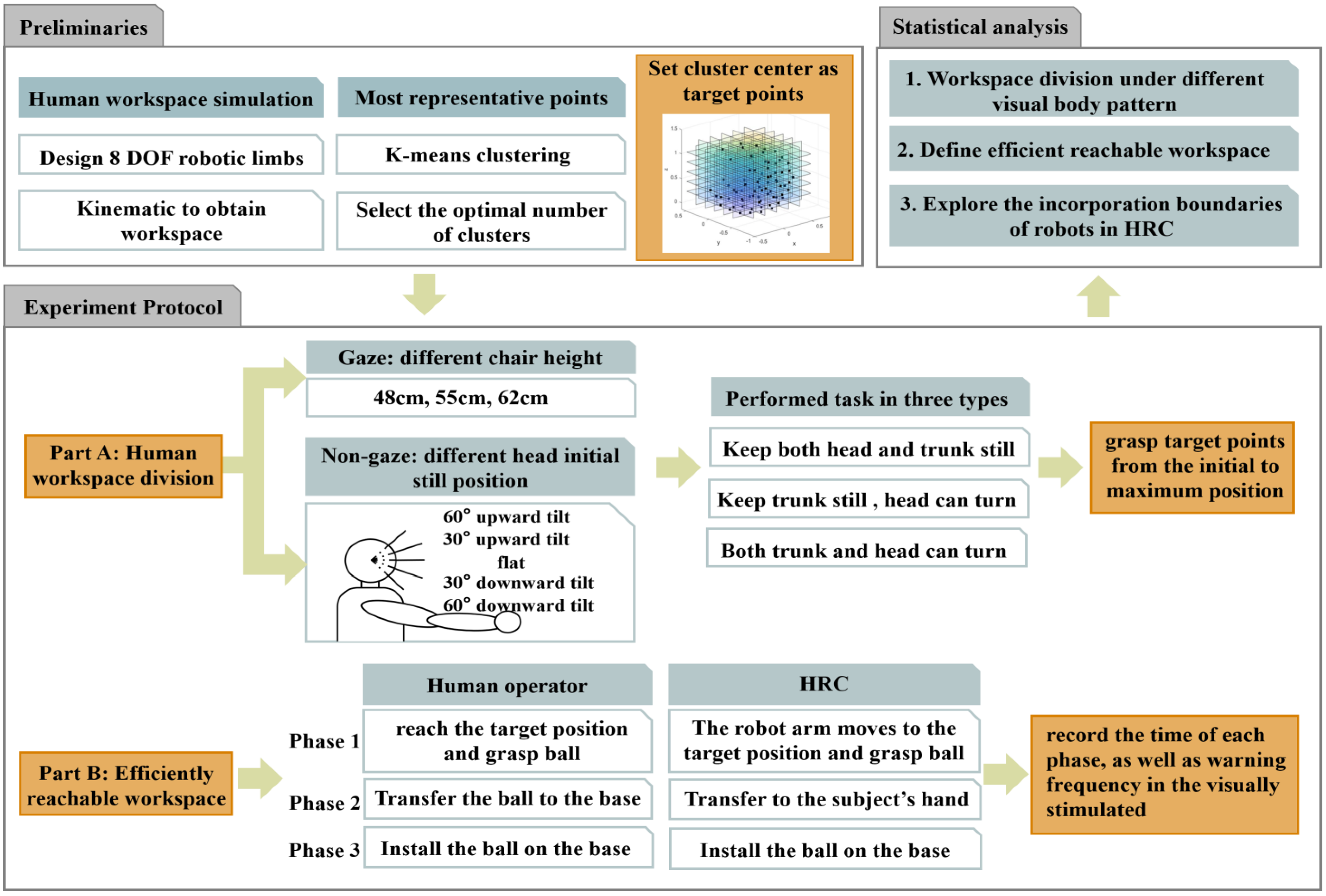

2. Method

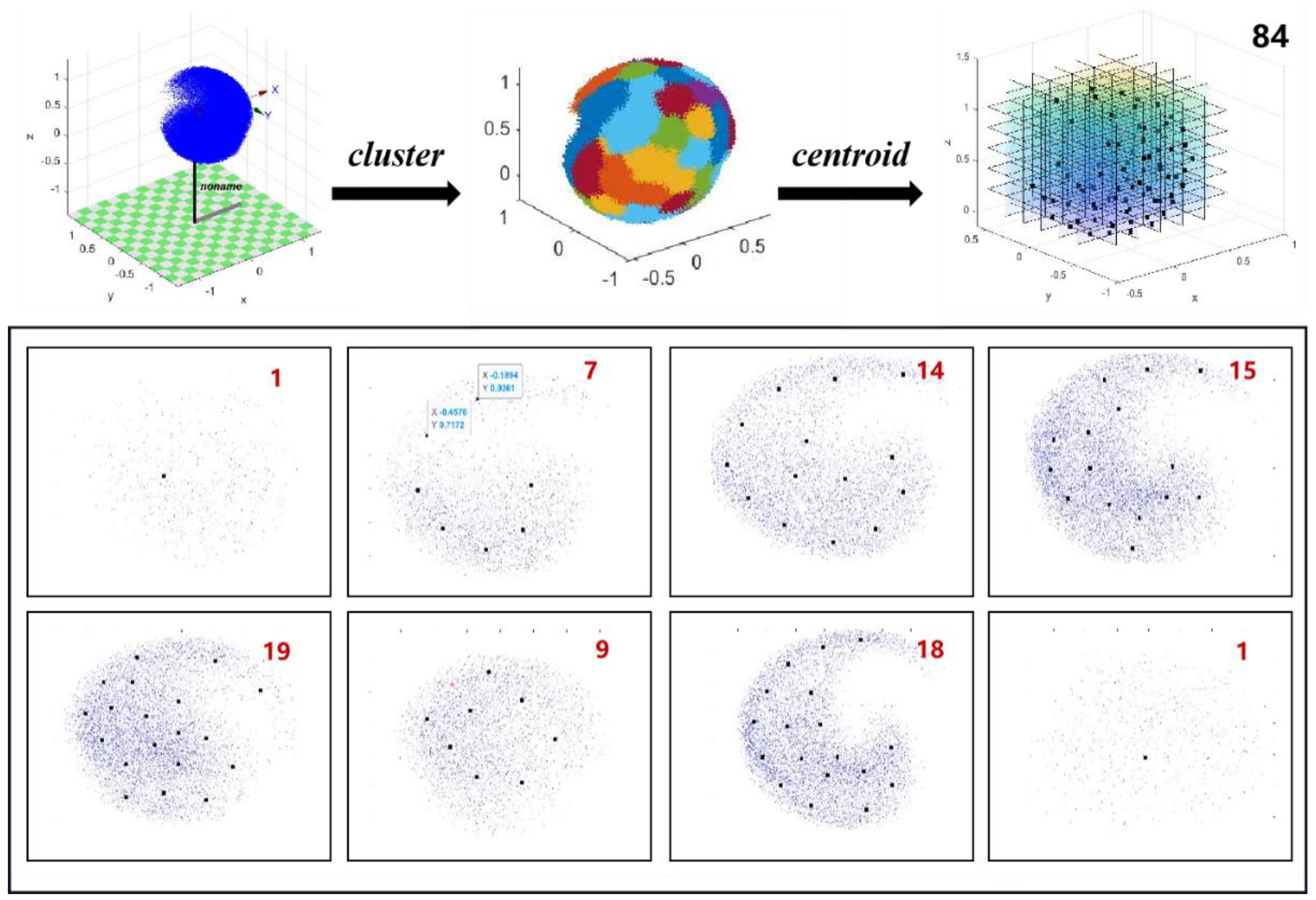

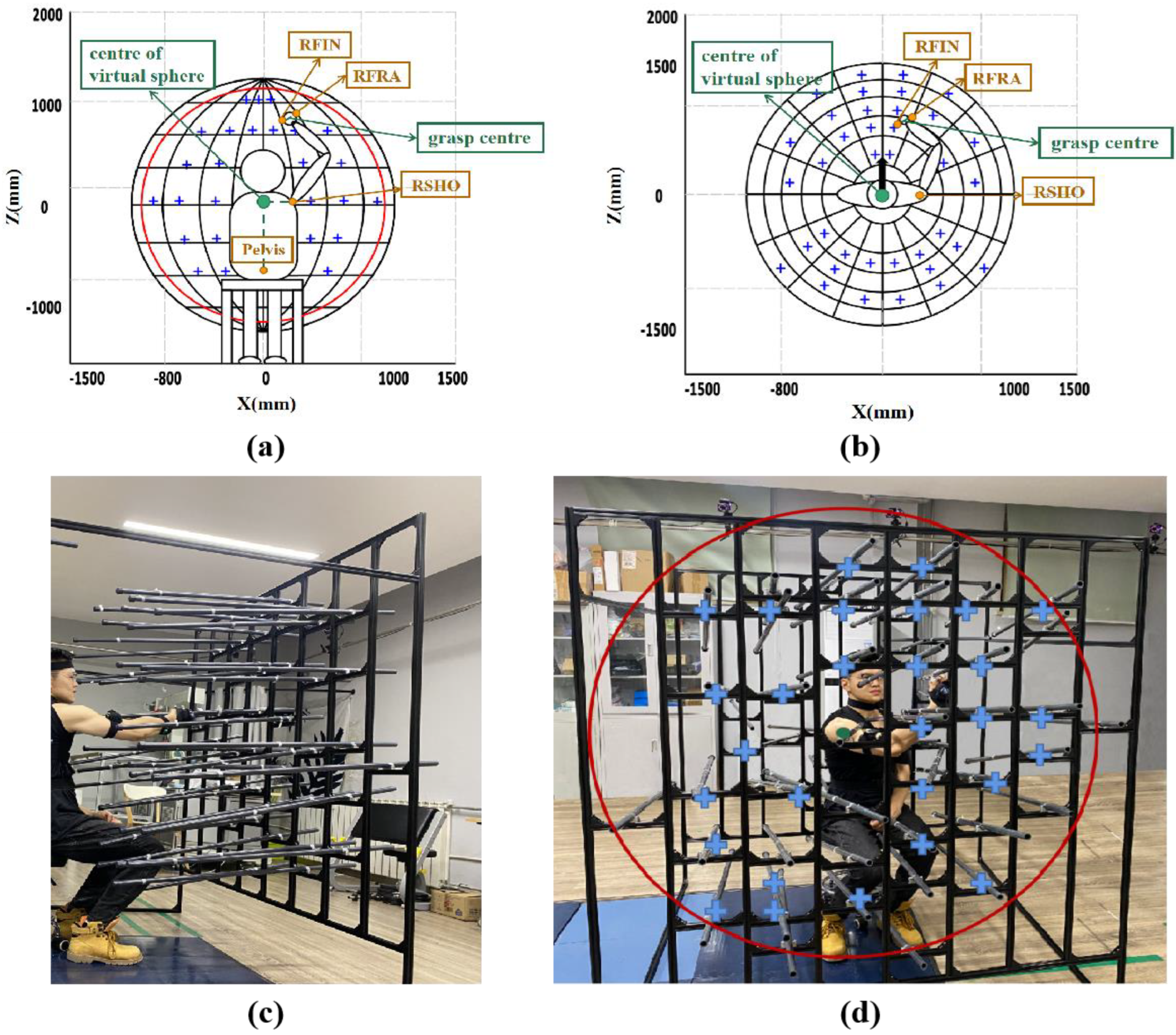

2.1. Reachable Workspace Division

2.1.1. Participants

2.1.2. Experiment Protocol

Part A Gaze State

Part B Non-Gaze State

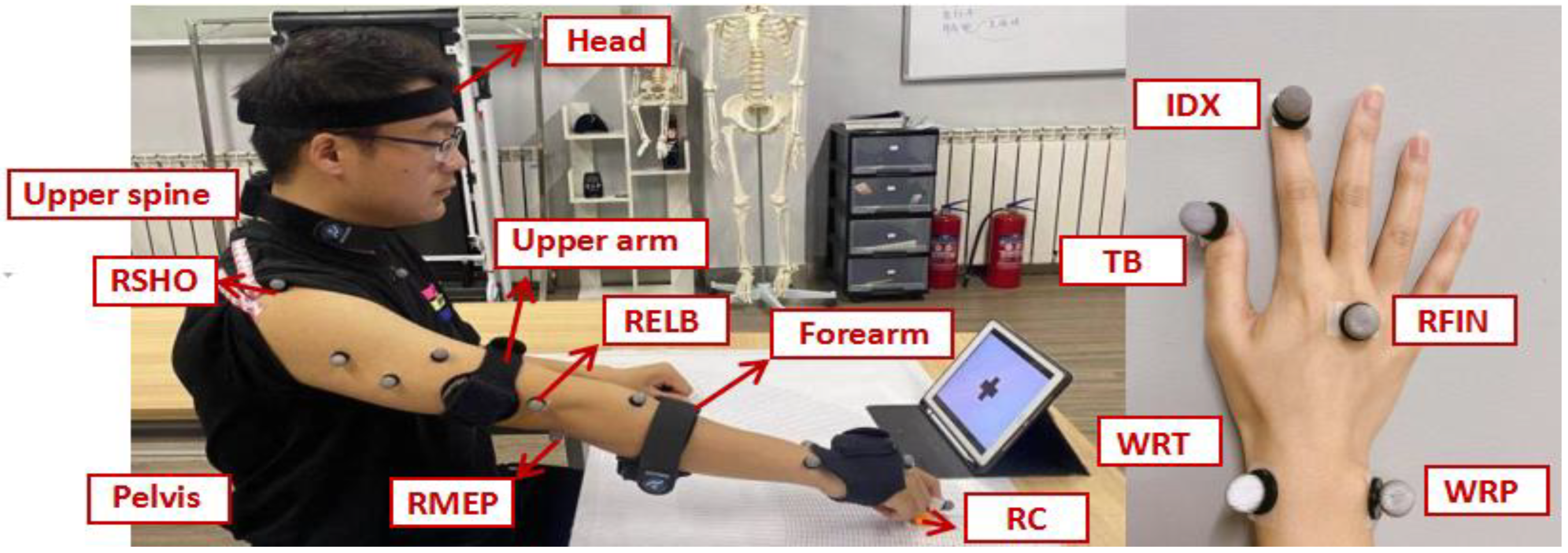

2.1.3. Data Acquisition

2.2. Efficiently Reachable Workspace and Robot Incorporation Boundary Exploration

2.2.1. Participants

2.2.2. Experiment Protocol

2.2.3. Data Acquisition

3. Results

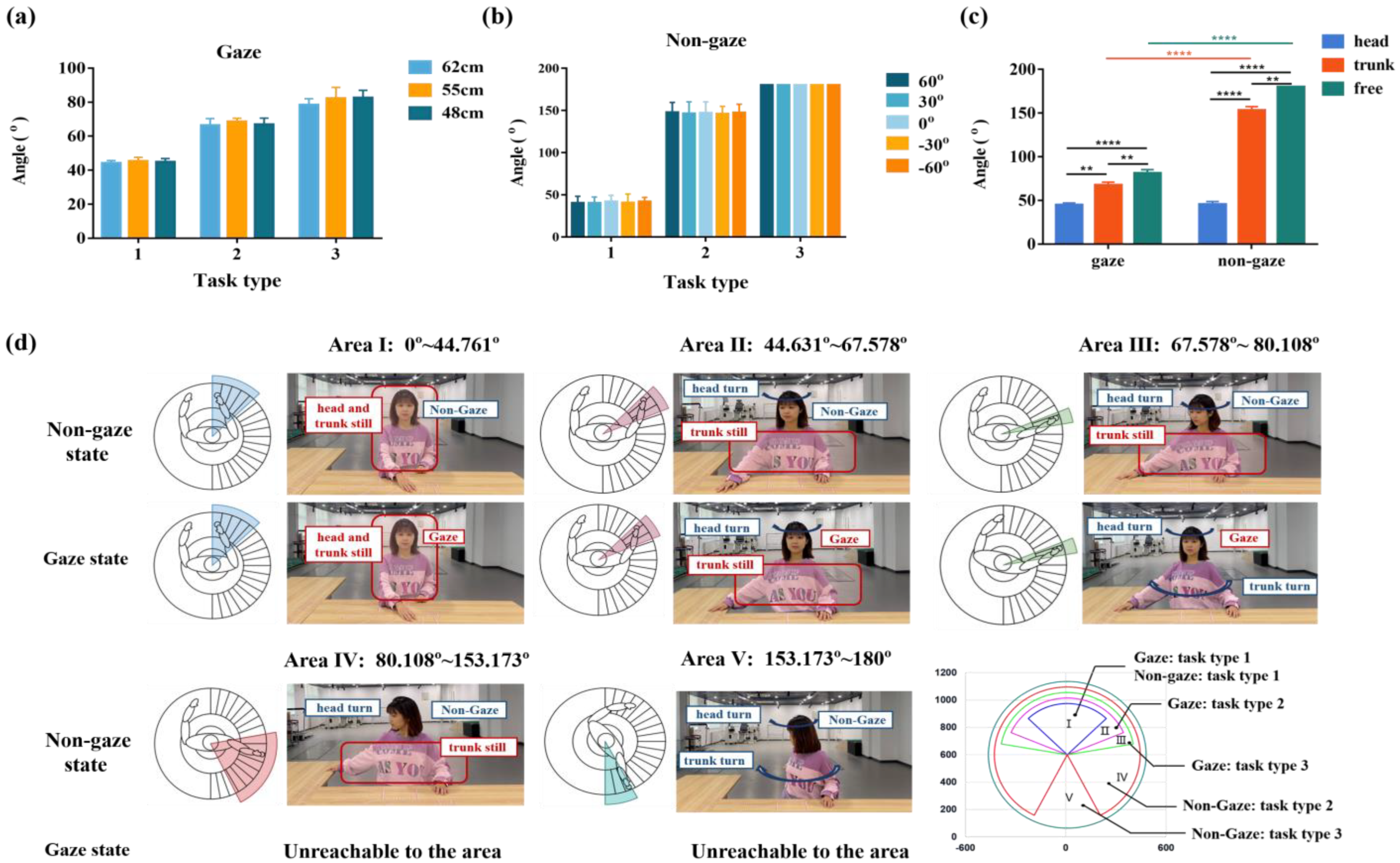

3.1. Workspace Division

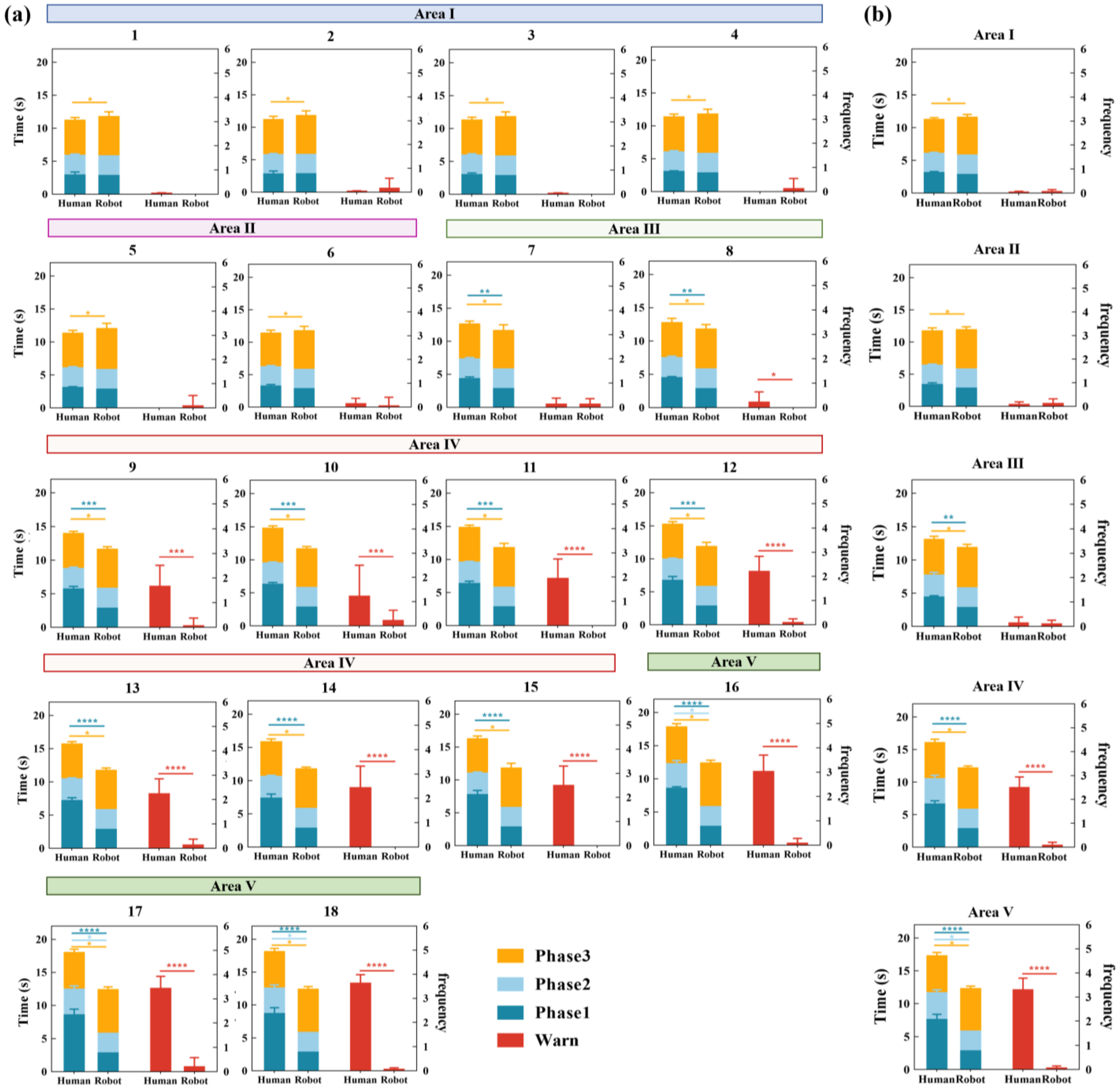

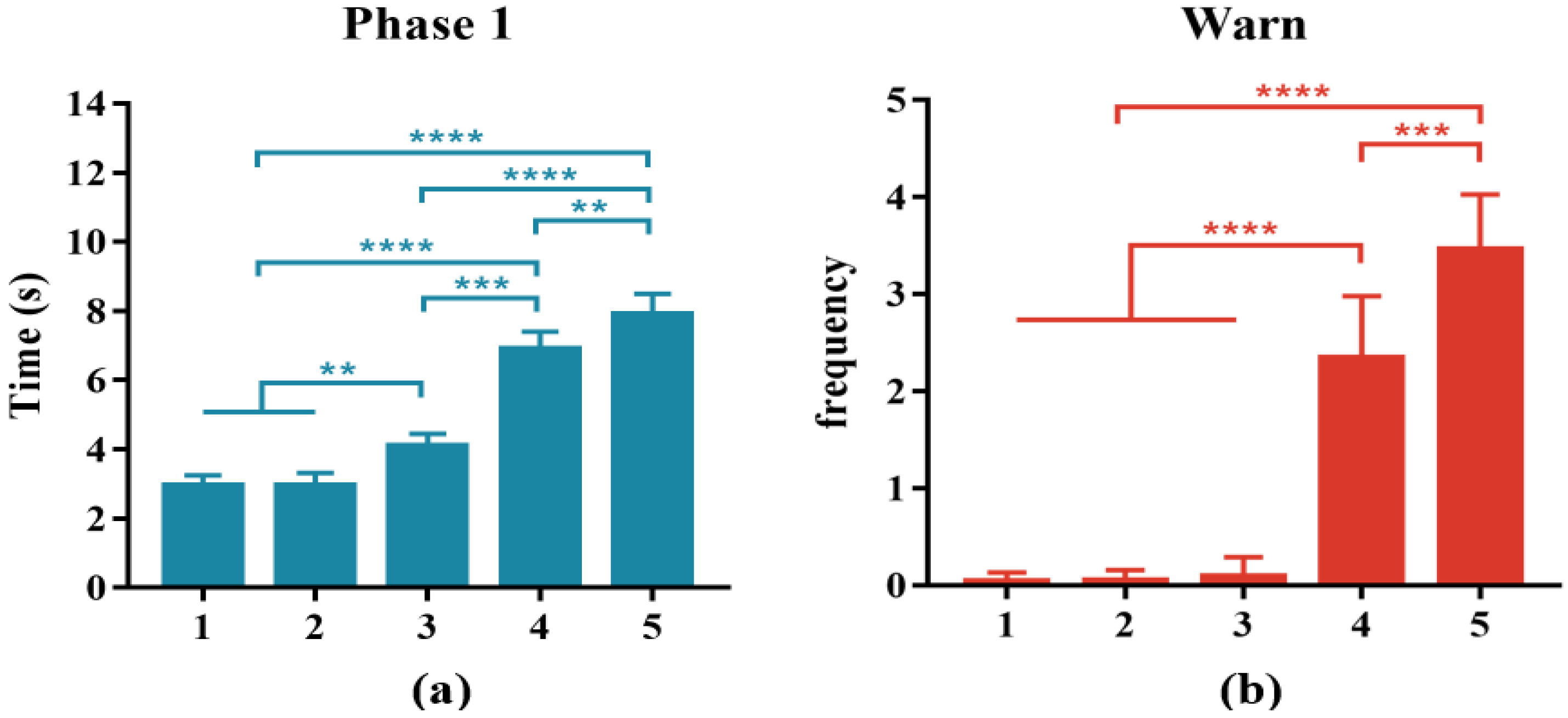

3.2. Completion Time of HRC Tasks

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| | | | | |
|---|---|---|---|---|
| 1 | 220 | −π/2 | 468 | |
| 2 | 0 | π/2 | 0 | |
| 3 | 0 | π/2 | 0 | |
| 4 | 0 | π/2 | 313 | |
| 5 | 0 | π/2 | 0 | |
| 6 | 0 | π/2 | 237 | |
| 7 | 0 | π/2 | 0 | |
| 8 | 148 | 0 | 0 | |
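The column headers of the table above are not preserved here, so the following is only a minimal sketch under stated assumptions: it reads the three numeric columns of each row as the classic Denavit-Hartenberg parameters a_i, α_i, d_i (lengths taken to be in millimetres), treats the empty last column as the joint variable θ_i, and composes the eight link transforms into one pose. The names `ROWS`, `dh_transform`, `forward_kinematics`, and `end_position` are hypothetical, and whether the source uses the classic or the modified convention is not stated.

```python
import math

# Rows copied from the Appendix A table as (length, angle, length).
# ASSUMPTION: the columns are classic Denavit-Hartenberg a_i, alpha_i, d_i
# and the lengths are millimetres; the table headers are not in the source.
ROWS = [
    (220.0, -math.pi / 2, 468.0),
    (0.0, math.pi / 2, 0.0),
    (0.0, math.pi / 2, 0.0),
    (0.0, math.pi / 2, 313.0),
    (0.0, math.pi / 2, 0.0),
    (0.0, math.pi / 2, 237.0),
    (0.0, math.pi / 2, 0.0),
    (148.0, 0.0, 0.0),
]


def dh_transform(a, alpha, d, theta):
    """Classic DH link transform: Rot_z(theta) Trans_z(d) Trans_x(a) Rot_x(alpha)."""
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def forward_kinematics(thetas, rows=ROWS):
    """Chain the link transforms in table order; thetas holds one angle per row."""
    if len(thetas) != len(rows):
        raise ValueError("expected one joint angle per table row")
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for (a, alpha, d), theta in zip(rows, thetas):
        T = matmul(T, dh_transform(a, alpha, d, theta))
    return T


def end_position(thetas, rows=ROWS):
    """Return the (x, y, z) translation of the last frame in the base frame."""
    T = forward_kinematics(thetas, rows)
    return (T[0][3], T[1][3], T[2][3])


if __name__ == "__main__":
    # Pose with every joint variable set to zero (an arbitrary test input).
    print(end_position([0.0] * len(ROWS)))
```

Under this reading, sampling `end_position` over a grid of joint angles would give a point cloud of reachable positions; any joint limits would have to come from the paper itself, since none are listed in the table.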

Appendix B



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Zhang, W.; Cheng, Q.; Ming, D. Efficient Reachable Workspace Division under Concurrent Task for Human-Robot Collaboration Systems. Appl. Sci. 2023, 13, 2547. https://doi.org/10.3390/app13042547
