A Modeling of Human Reliability Analysis on Dam Failure Caused by Extreme Weather

Abstract

Featured Application

1. Introduction

2. HRA Analysis Framework Construction

3. PSFs Analysis

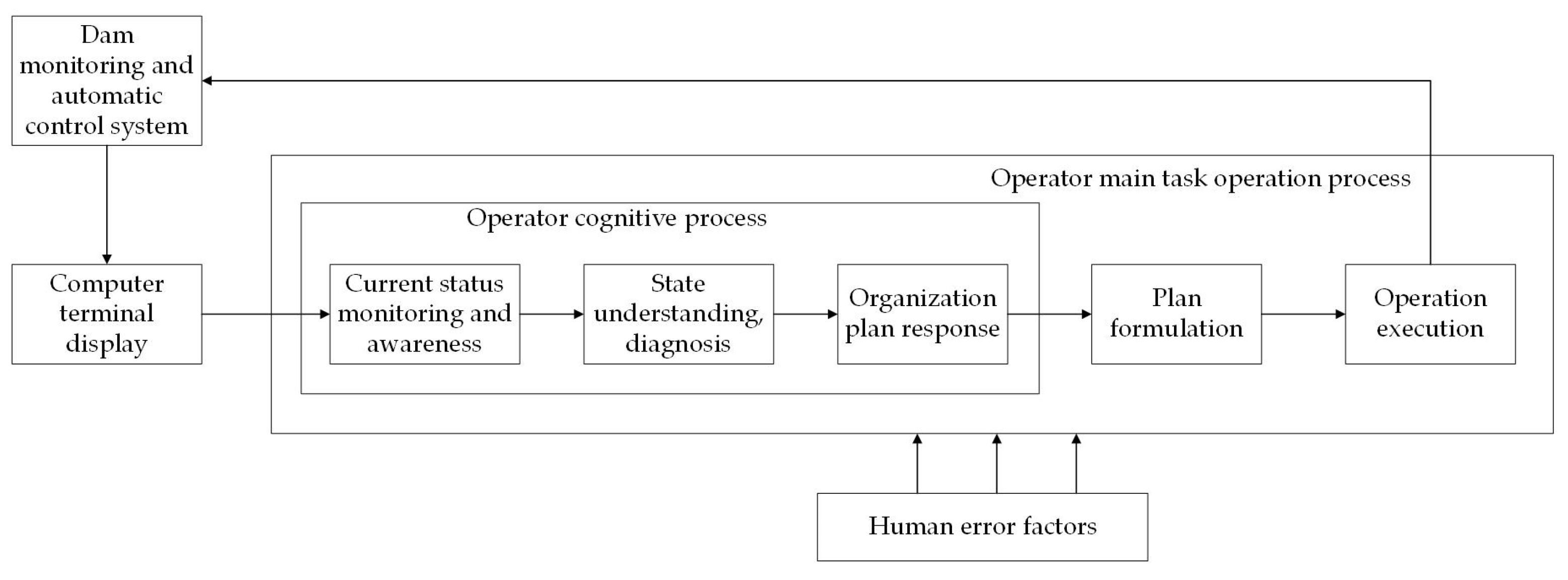

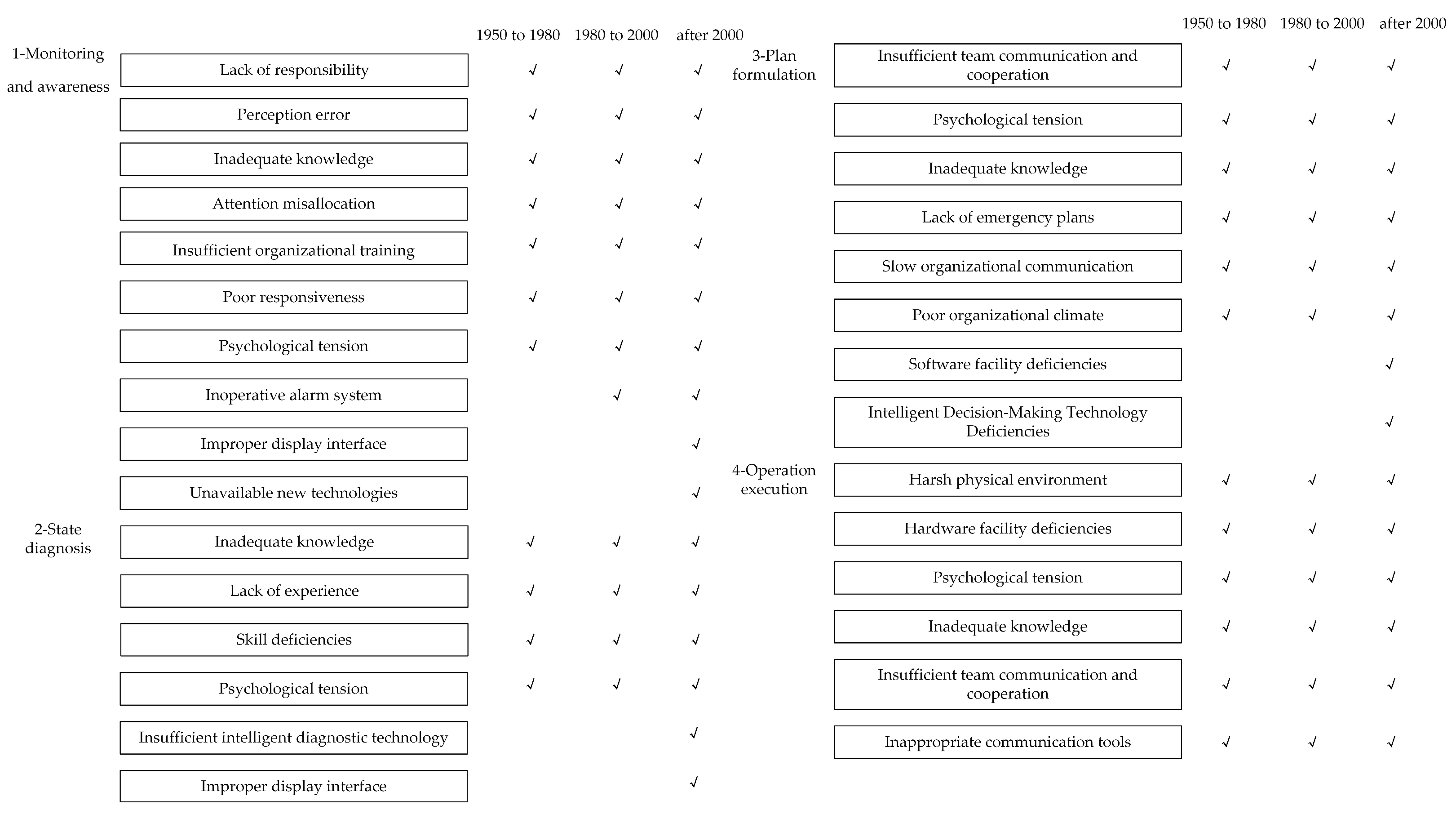

3.1. The Basic Path of Human Error in Dam Failure

- Monitoring and awareness;

- State diagnosis;

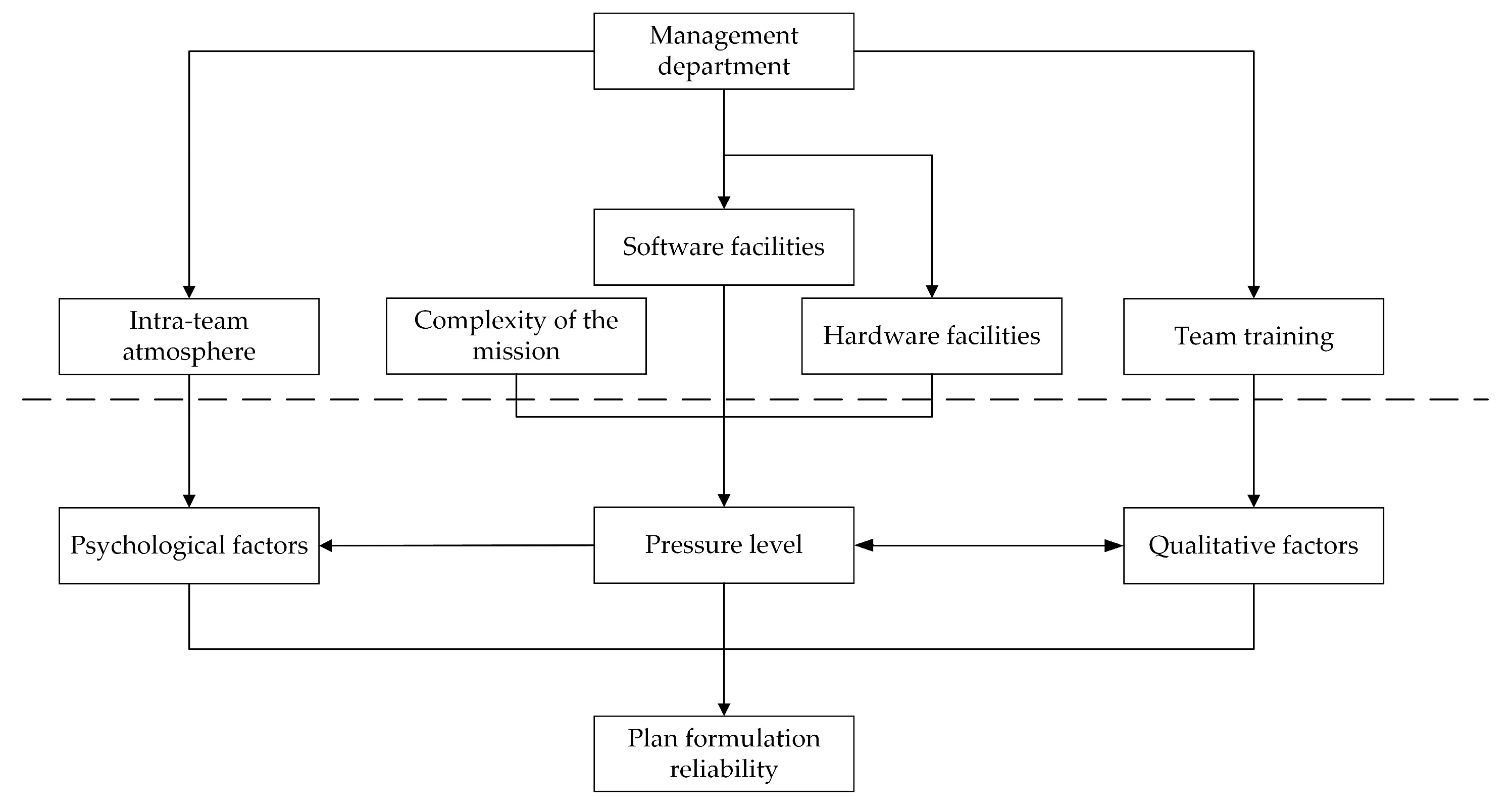

- Plan formulation:

  - Use one’s own state model to determine goals;

  - Select the appropriate protocol step;

  - Assess whether the behavior in the process will meet the objectives;

  - Adapt the protocols to the actual conditions.

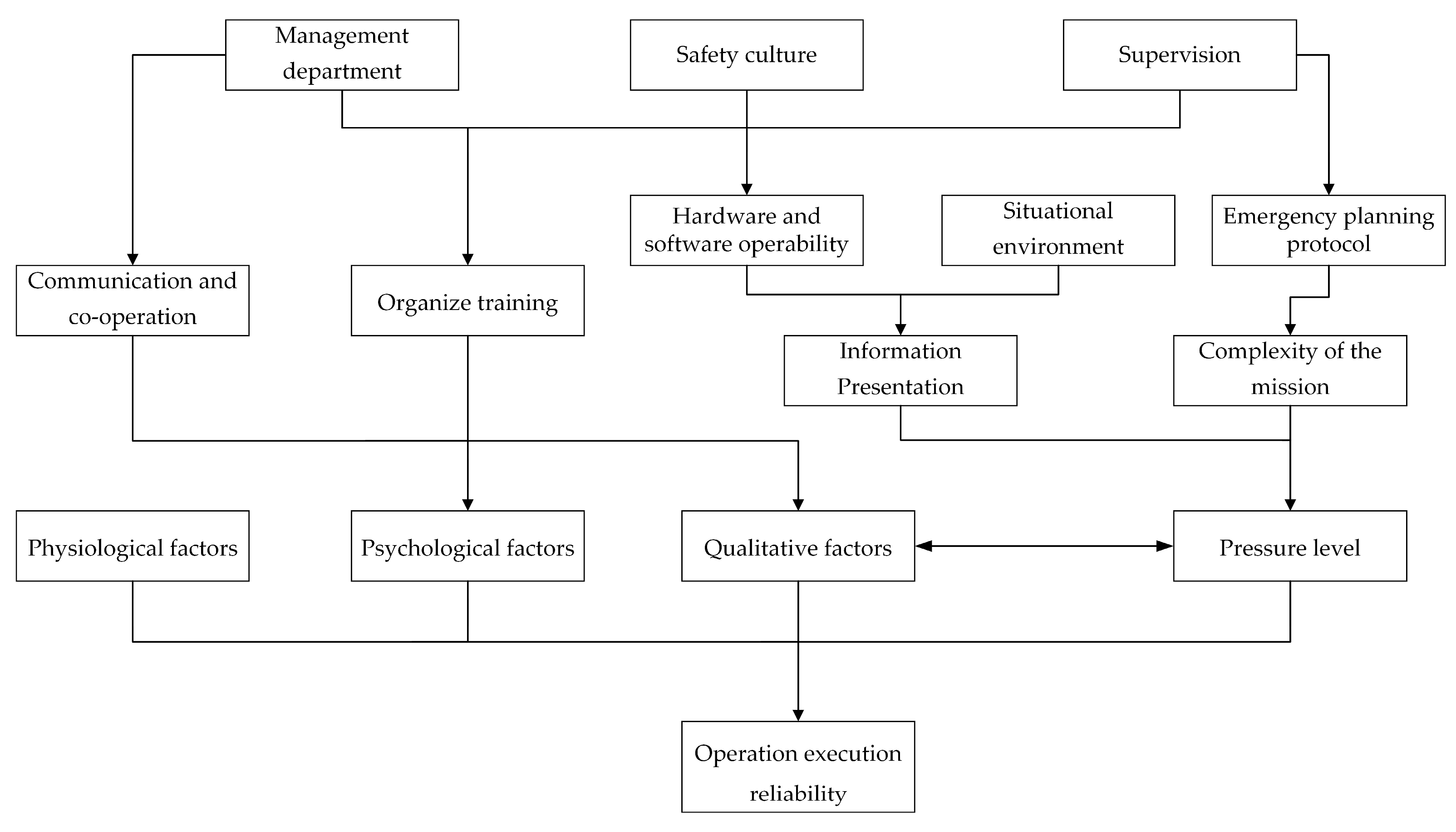

- Operation execution.

3.2. Failure Patterns of Nodes on the Basic Path

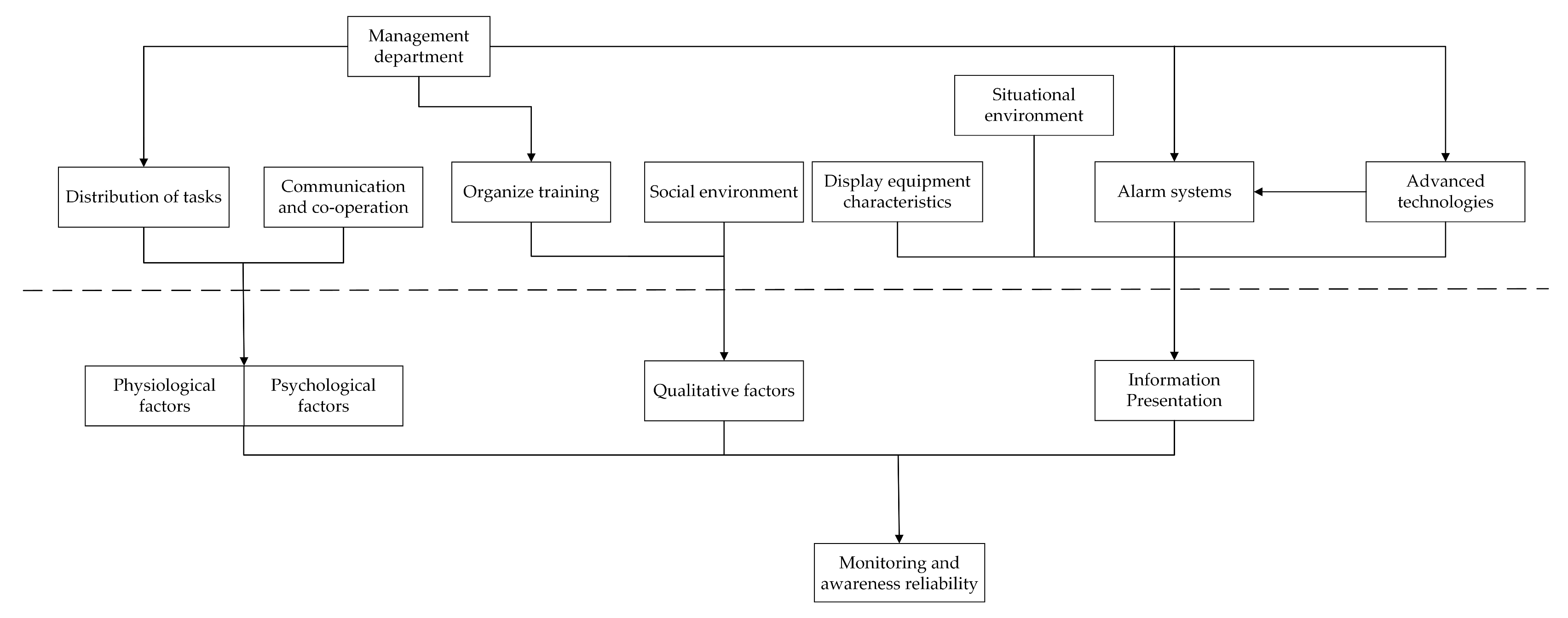

3.3. Performance Shaping Factors (PSFs) in Dam Failure Accidents

- Before the 21st century, plans were formulated slowly and with a high probability of human error, mainly because contingency plans were absent and awareness of safety management was poor. Since then, as emergency protocols and digital operating systems have become widespread, human reliability has also come to depend on how sensibly information is presented on the display interface, the reliability of software facilities, the completeness of emergency plans, and the adoption of new hydrologic technology;

- Overtopping mainly occurs during heavy rainfall, so the harsh physical environment can affect human operation. In contrast, most infiltration damage and engineering quality problems occur outside the flood season, when the physical environment has less impact on operators;

- In the case of excessive flooding caused by extreme weather, the monitoring and awareness phase relies more on operators' perceptual observations during short-term high water levels. At the technical level, the most important causes are failure to open gates and defects in engineering facilities that lead to landslides. Deficiencies in software, such as monitoring systems, can also delay awareness;

- With the development of science and technology, modern dam safety management technologies such as rainfall forecasting, perspective perception, smart diagnosis, intelligent decision-making, and potential danger detection are also in use, and their maturity and degree of informatization also affect operator reliability. In general, applying new technology increases human reliability and makes the sequence of diagnosis, decision-making, and operation rule-based. On the other hand, technological improvements may leave managers less knowledgeable about the system, which reduces human reliability.

4. Bayesian Network Construction

4.1. Introduction to Bayesian Networks

- Bayesian networks resemble the natural representation of knowledge structures in the human brain, so they represent and explain knowledge more reasonably and conveniently and are better suited to modeling human behavior;

- With a simple and clear representation, they save storage space, simplify knowledge acquisition and domain modeling, and reduce the complexity of inference and computation;

- Their structure supports both forward predictive inference and backward diagnostic inference, which matches the requirements of behavioral prediction and root cause analysis in human reliability analysis.
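The two inference directions in the last point can be illustrated with a minimal sketch. It assumes a hypothetical two-node network in which a PSF node (`training`) points to an `operator error` node; the node names and all probabilities are illustrative assumptions, not values from this study.

```python
# Hypothetical two-node Bayesian network: Training -> OperatorError.
# All numbers are illustrative assumptions, not values from the paper.

# Prior distribution of the parent node.
p_training = {"good": 0.8, "poor": 0.2}

# Conditional probability of an operator error given the training state.
p_error_given_training = {"good": 0.05, "poor": 0.30}


def forward_error_probability():
    """Forward (predictive) inference: marginal P(error)."""
    return sum(p_training[s] * p_error_given_training[s] for s in p_training)


def backward_training_posterior():
    """Backward (diagnostic) inference: P(training | error) via Bayes' rule."""
    p_error = forward_error_probability()
    return {
        s: p_training[s] * p_error_given_training[s] / p_error
        for s in p_training
    }


p_error = forward_error_probability()
posterior = backward_training_posterior()
print(f"P(error) = {p_error:.3f}")
print(f"P(training = poor | error) = {posterior['poor']:.3f}")
```

With these assumed numbers, observing an error shifts the belief in poor training from the prior 0.2 to a noticeably higher posterior, which is the kind of retrospection used for root cause analysis.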

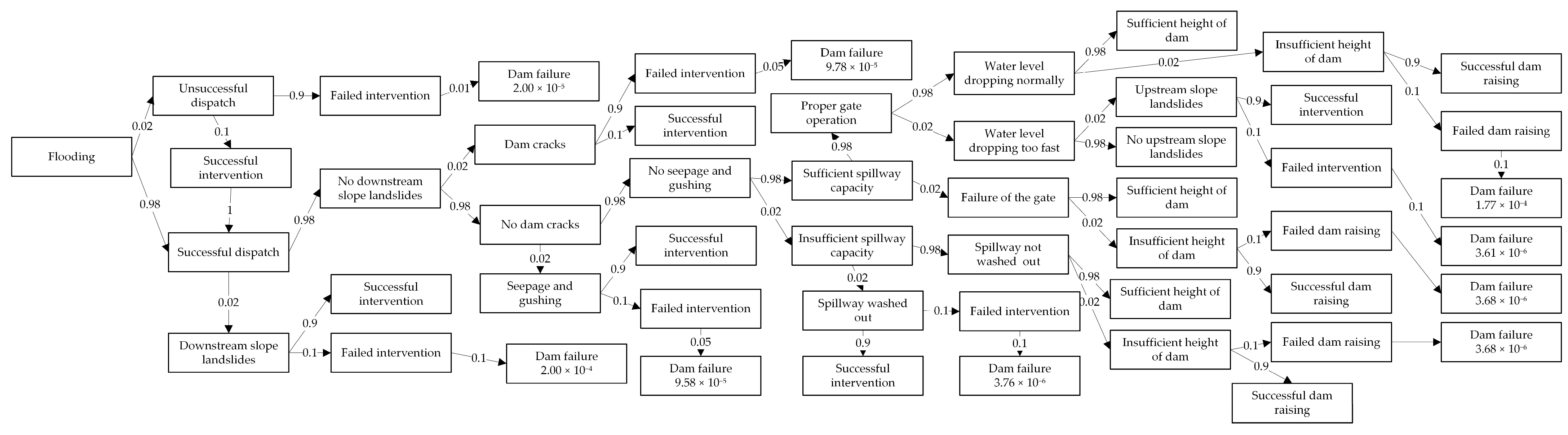

4.2. Accident Event Tree

- The possible routes to dam failure must be identified without missing the main ones, which often requires the help of experts who are familiar with the dam's condition;

- The possible loads and their frequencies must be determined, which often requires detailed information on reservoir utilization and water levels;

- The probability of occurrence must be determined for each stage of the failure development process; it is usually assigned by expert judgment and carries a high degree of uncertainty.
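The three requirements above can be made concrete with a short sketch of how an event tree is usually quantified: each failure path gets the load frequency multiplied by the conditional probabilities of its branches. The path names, branch labels, and every number below are hypothetical placeholders for expert-elicited values, and the listed paths are assumed to be mutually exclusive.

```python
from math import prod

# Assumed annual frequency of the flood load under consideration.
load_frequency = 0.01

# Each path lists the conditional probabilities of its successive branches.
# Values are illustrative assumptions, not data from the paper.
failure_paths = {
    # e.g. gate not opened -> water level exceeds crest -> breach develops
    "overtopping": [0.20, 0.50, 0.10],
    # e.g. seepage initiates -> not detected in time -> breach develops
    "seepage": [0.05, 0.30, 0.20],
}


def path_probability(frequency, branch_probs):
    """Annual probability of one path: load frequency times the branch product."""
    return frequency * prod(branch_probs)


path_probs = {
    name: path_probability(load_frequency, branches)
    for name, branches in failure_paths.items()
}

# Under the mutual-exclusivity assumption, path probabilities simply add.
total_failure_probability = sum(path_probs.values())

for name, p in path_probs.items():
    print(f"{name}: {p:.2e}")
print(f"total: {total_failure_probability:.2e}")
```

Because every branch value is an expert estimate, it is common to rerun such a calculation with lower and upper bounds for each branch to see how far the total moves.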

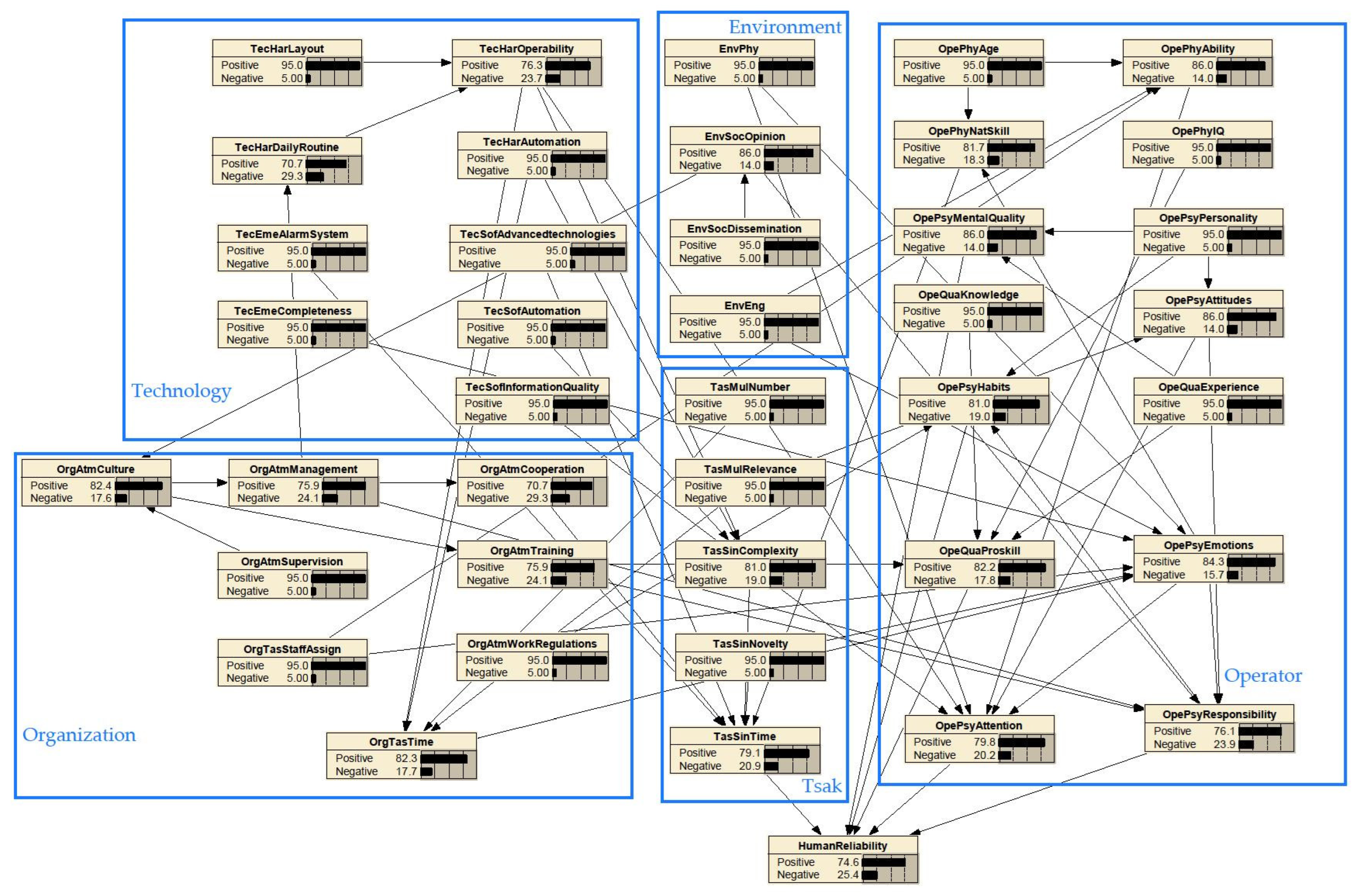

4.3. Bayesian Network Construction

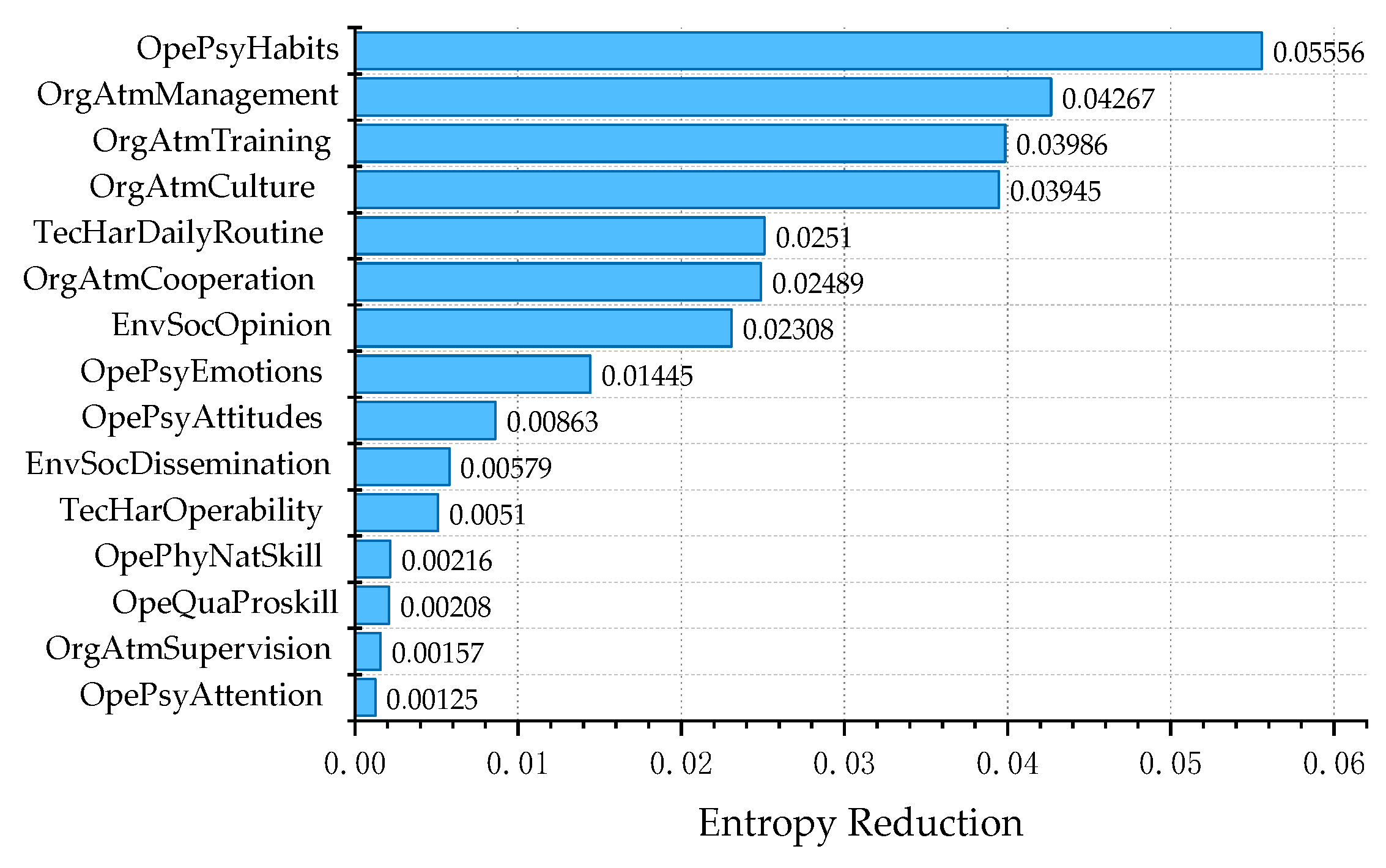

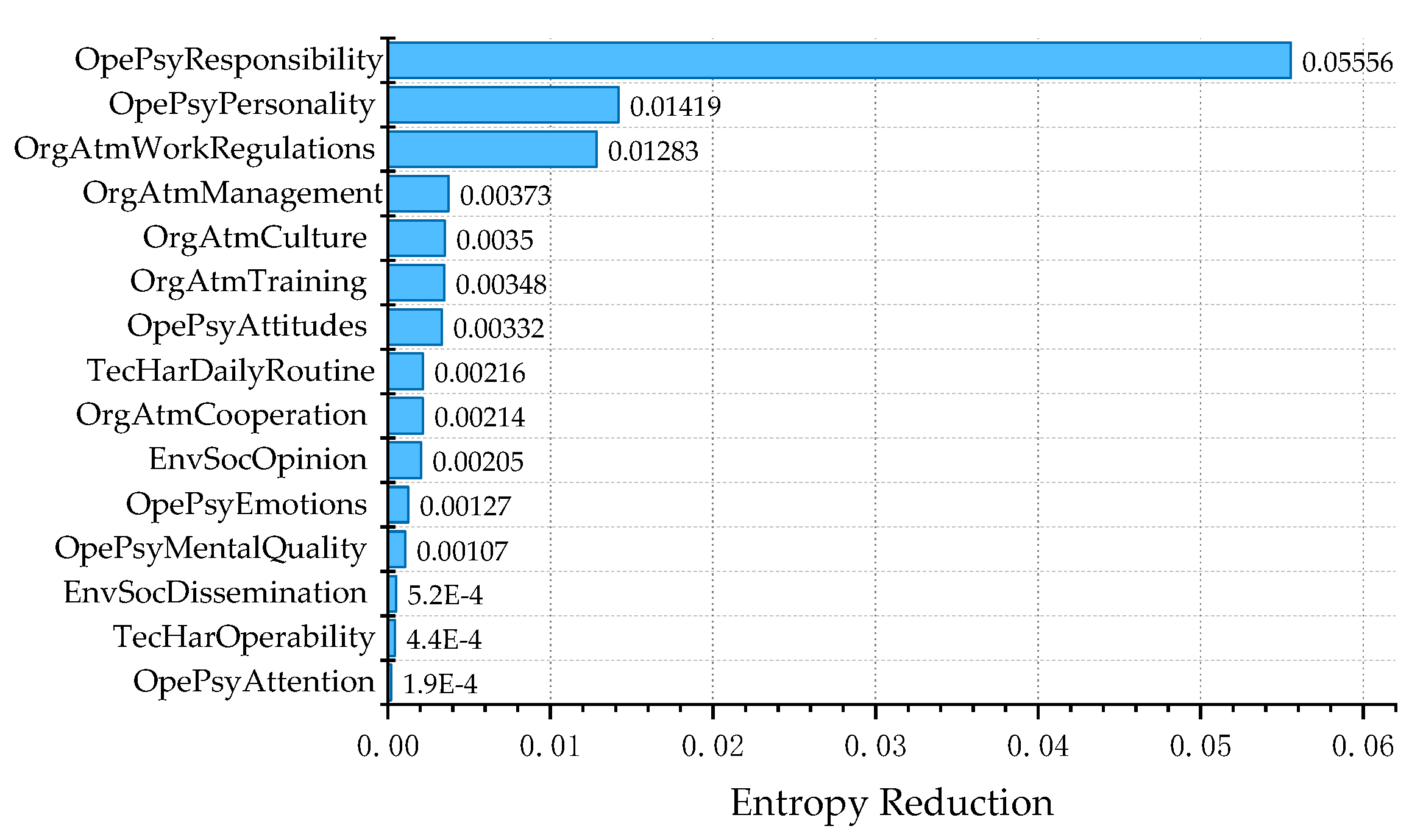

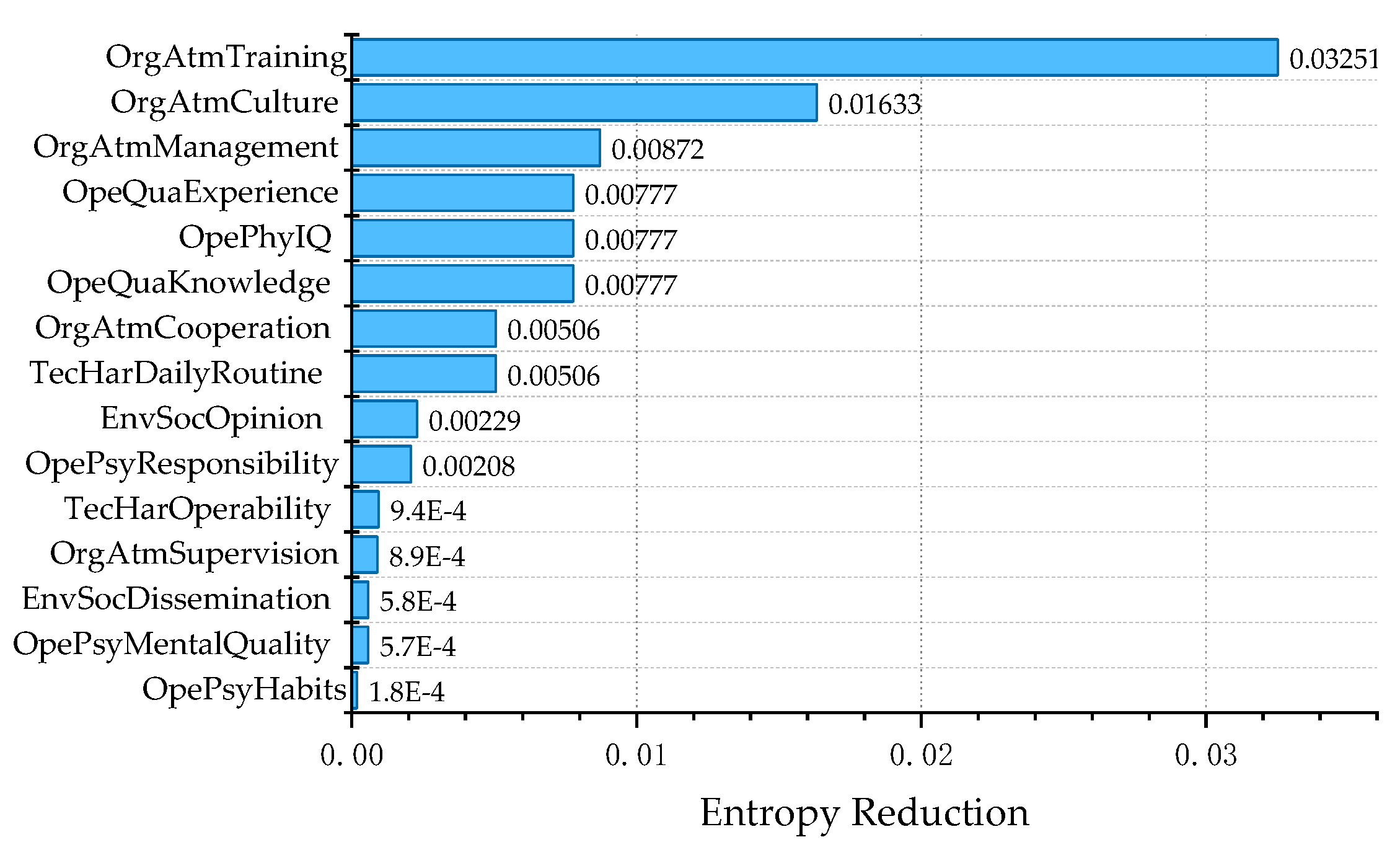

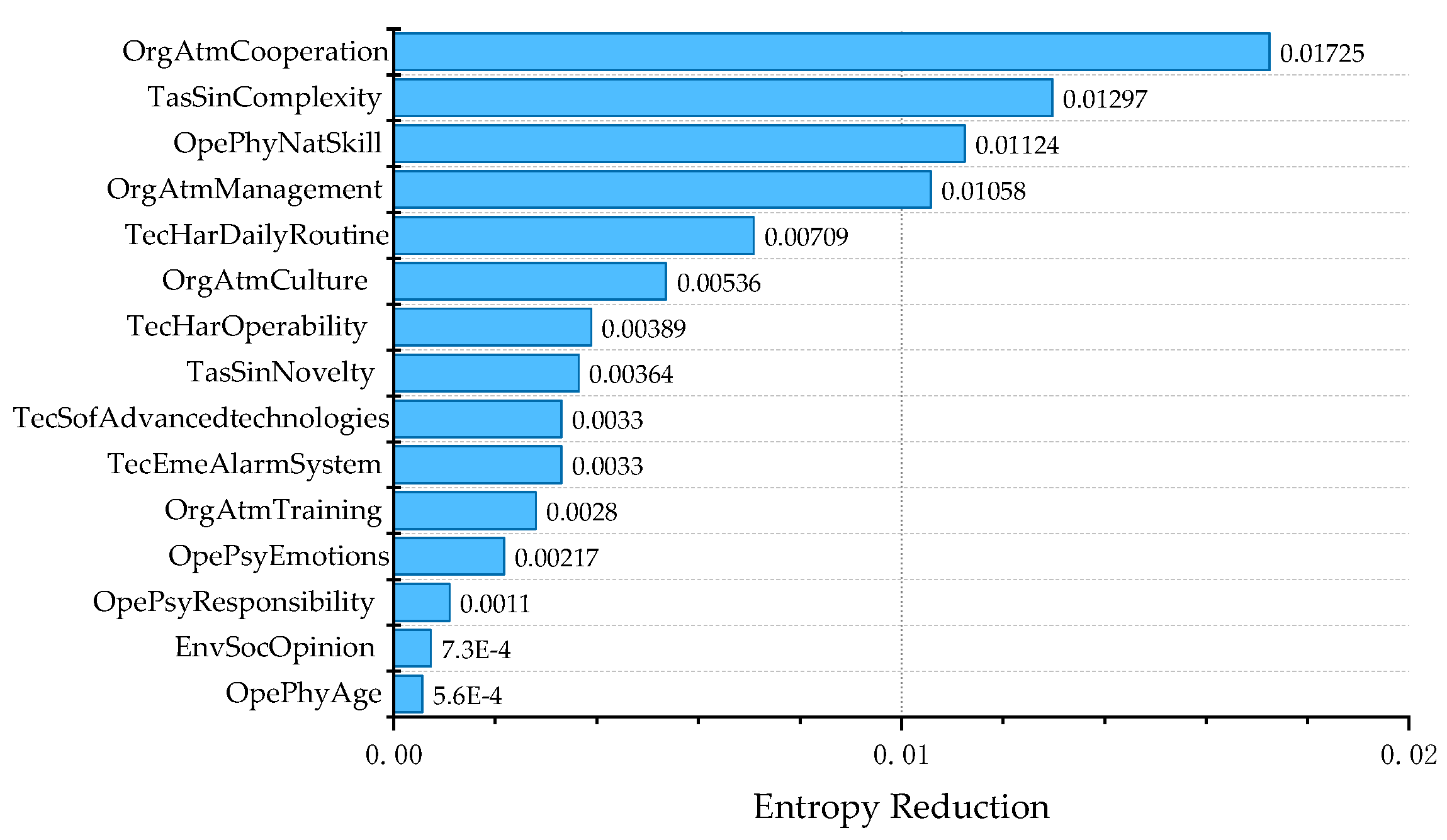

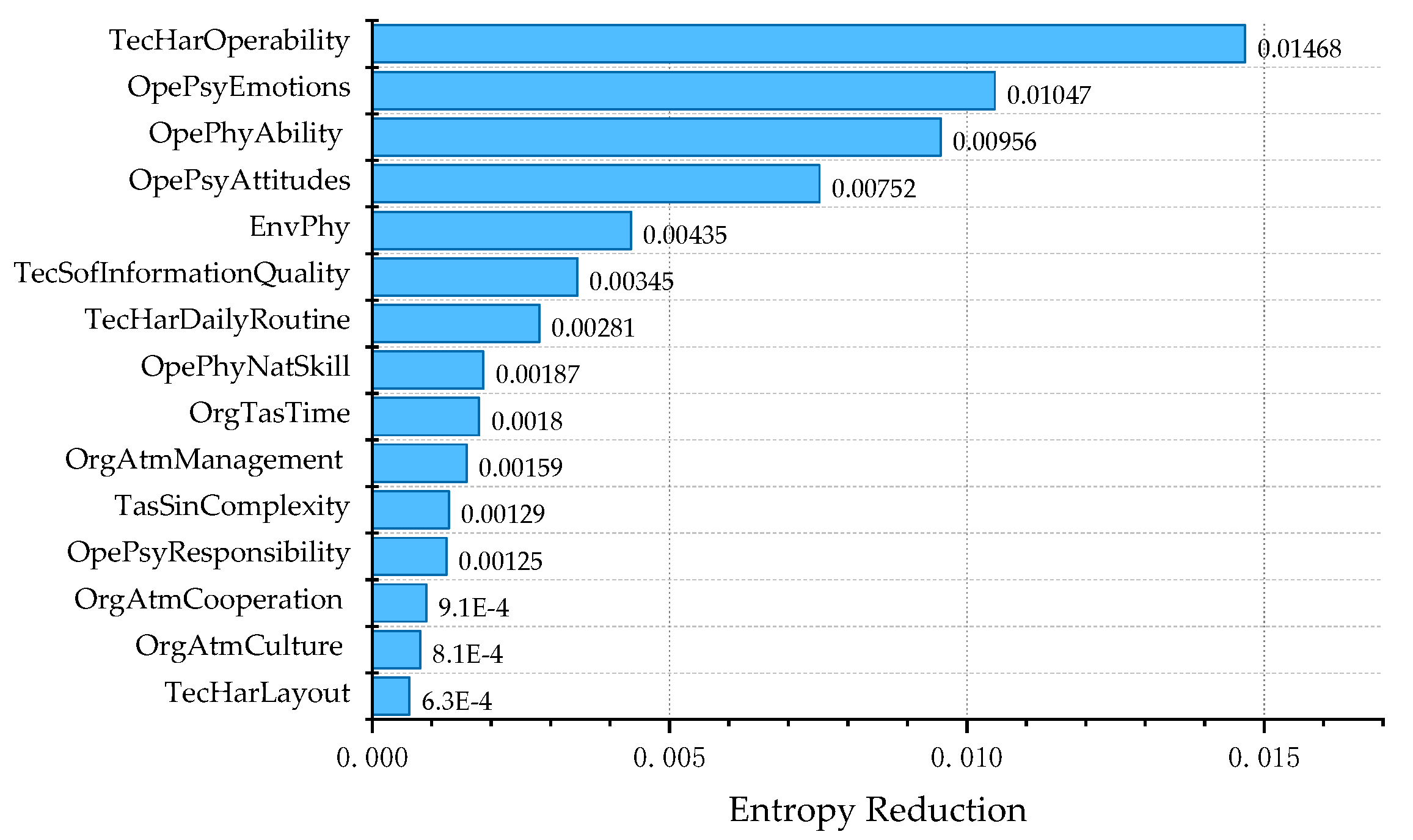

4.4. Sensitivity Analysis

- A small increase or decrease in the prior subjective probability of each parent node should produce a corresponding increase or decrease in the posterior probability of its child nodes;

- The influence of changes in each parent node's subjective probability on the child nodes should remain consistent;

- The total influence of probability changes in a set of x parent nodes should always be greater than that of any subset of x − y of those nodes (y ∈ x).
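A minimal sketch of how the first and third conditions could be checked numerically is given below. It assumes four independent binary parent nodes and a child node whose conditional probability follows the linear pattern visible in the conditional probability table listed later in this article (0.10 for no positive parents, rising by 0.20 per positive parent). The parent priors and the perturbation size are illustrative assumptions.

```python
from itertools import product


def p_child_positive(priors):
    """Marginal P(child = positive), enumerating all parent states.

    `priors` holds P(parent_i = positive); parents are assumed independent.
    """
    total = 0.0
    for states in product([True, False], repeat=len(priors)):
        weight = 1.0
        for positive, p in zip(states, priors):
            weight *= p if positive else 1.0 - p
        k = sum(states)  # number of parents in the positive state
        total += weight * (0.1 + 0.2 * k)
    return total


base_priors = [0.6, 0.6, 0.6, 0.6]  # illustrative assumption
delta = 0.05                        # small perturbation of a prior
base = p_child_positive(base_priors)


def influence(indices):
    """Change in P(child = positive) when the chosen parents' priors rise by delta."""
    bumped = [p + delta if i in indices else p for i, p in enumerate(base_priors)]
    return p_child_positive(bumped) - base


# Condition 1: raising any single parent prior raises the child probability.
axiom1 = all(influence({i}) > 0 for i in range(len(base_priors)))

# Condition 3: a set of parents has more influence than its proper subsets.
axiom3 = influence({0, 1, 2}) > influence({0, 1}) > influence({0})

print(f"baseline P(child = positive) = {base:.2f}")
print("condition 1 holds:", axiom1)
print("condition 3 holds:", axiom3)
```

The second condition concerns consistency of influence across repeated perturbations and is not tested here.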

5. Conclusions

- (1) This paper first proposes an HRA framework for dams that incorporates Bayesian networks and builds on existing methods from other domains. It comprises four parts: familiarization, qualitative analysis, quantitative analysis, and incorporation. The research methodology and process were systematically defined, and qualitative and quantitative analyses of human factors were conducted for dam failure accidents.

- (2) By studying the path of human error in operations during dam failure accidents, a cognitive model of the operator is proposed that divides human actions into four processes: monitoring and awareness, state diagnosis, plan formulation, and operation execution. The human behavior model at each stage was analyzed and combined with the node failure modes to obtain the performance shaping factors (PSFs) in dam failure incidents. PSFs are an important characterization of human influences, and combining them effectively contributes greatly to improving human reliability.

- (3) Bayesian networks were used to characterize the resulting PSFs and their interdependencies, and entropy reduction was used for sensitivity analysis. The results show that the operator directly affects human reliability and is the subject of the accident, whereas the deep root cause is the ineffectiveness of the management organization and the system.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Qualitative Assessment | Descriptions | Failure Probability |
|---|---|---|
| | The gate system is very reliable. | 0.000001~0.0001 (selected by experts on the basis of experience) |
| | The gate system is reliable. | 0.0001~0.01 |
| | The gate system is generally reliable. | 0.01~0.1 |
| | The gate system is unreliable. | 0.1~0.5 |
| | The gate system is very unreliable. | 0.5~0.99 |

| Qualitative Assessment | Descriptions | Failure Probability |
|---|---|---|
| | The operator is very responsible. | 0.000001~0.0001 (selected by experts on the basis of experience) |
| | The operator is responsible. | 0.0001~0.01 |
| | The operator is generally responsible. | 0.01~0.1 |
| | The operator is less responsible. | 0.1~0.5 |
| | The operator is poorly responsible. | 0.5~0.99 |


| Work Process | Reasons for Errors | Failure Mode |
|---|---|---|
| Monitoring and awareness | Observation of targeting error | Target error |
| Monitoring and awareness | Failure to detect in time | Time error |
| State diagnosis | Delayed diagnosis | Time error |
| State diagnosis | Wrong diagnosis | Target error |
| Plan formulation | Irrational distribution of tasks | Behavior error |
| Plan formulation | Unreasonable planning | Sequence/target error |
| Plan formulation | Excessive decision time | Time error |
| Operation execution | Failure of operation | Behavior/sequence/target error |
| Operation execution | Insufficient teamwork | Behavior error |

| Main Category | Subcategories | Specific Elements |
|---|---|---|
| Operator | Physiological factors | Age; Physical ability; Natural skills; Intelligence level |
| Operator | Psychological factors | Personality; Emotions; Attitudes; Mental qualities; Attention; Habits; Responsibility |
| Operator | Quality factors | Knowledge level; Experience; Professional skills |
| Technology | Hardware facilities | Hardware operability; Layout of control equipment; Automation level of control equipment; Equipment routine maintenance |
| Technology | Software facilities | Level of software automation; Advanced technologies; Layout of display equipment; Mode of displaying information; Shape and color of display instruments; Quality of information |
| Technology | Emergency plan | Completeness; Alarm system |
| Organization | Organizational atmosphere | Communication and cooperation; Training quality; Operating procedures; Management system; Safety culture; Level of supervision |
| Organization | Task allocation | Personnel assignments; Duration of tasks |
| Environment | Physical environment | Sound; Light; Temperature; Humidity; Vibration; Air quality |
| Environment | Social environment | Social opinion; Publicity |
| Environment | Engineering environment | Comfort; Safety |
| Task | Single task | Available time; Complexity; Novelty |
| Task | Multitask | Number of tasks; Relevance |

| Operator Physical IQ | Operator Quality Knowledge | Operator Quality Experience | Organization Atmosphere Training | k | Positive | Negative |
|---|---|---|---|---|---|---|
| Positive | Positive | Positive | Positive | 4 | 0.90 | 0.10 |
| Positive | Positive | Positive | Negative | 3 | 0.70 | 0.30 |
| Positive | Positive | Negative | Positive | 3 | 0.70 | 0.30 |
| Positive | Positive | Negative | Negative | 2 | 0.50 | 0.50 |
| Positive | Negative | Positive | Positive | 3 | 0.70 | 0.30 |
| Positive | Negative | Positive | Negative | 2 | 0.50 | 0.50 |
| Positive | Negative | Negative | Positive | 2 | 0.50 | 0.50 |
| Positive | Negative | Negative | Negative | 1 | 0.30 | 0.70 |
| Negative | Positive | Positive | Positive | 3 | 0.70 | 0.30 |
| Negative | Positive | Positive | Negative | 2 | 0.50 | 0.50 |
| Negative | Positive | Negative | Positive | 2 | 0.50 | 0.50 |
| Negative | Positive | Negative | Negative | 1 | 0.30 | 0.70 |
| Negative | Negative | Positive | Positive | 2 | 0.50 | 0.50 |
| Negative | Negative | Positive | Negative | 1 | 0.30 | 0.70 |
| Negative | Negative | Negative | Positive | 1 | 0.30 | 0.70 |
| Negative | Negative | Negative | Negative | 0 | 0.10 | 0.90 |
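The values in this table follow a simple pattern: the probability of the positive state is 0.10 when no parent is positive and rises by 0.20 for each positive parent (k). The sketch below regenerates the sixteen rows from that pattern. The linear rule and the `base` and `step` parameter names are inferred from the tabulated numbers as an assumption; the table itself does not state how the authors derived them.

```python
from itertools import product

# Parent node names as given in the table header.
PARENTS = [
    "Operator Physical IQ",
    "Operator Quality Knowledge",
    "Operator Quality Experience",
    "Organization Atmosphere Training",
]


def build_cpt(n_parents=len(PARENTS), base=0.10, step=0.20):
    """Return rows (parent_states, k, p_positive, p_negative) in table order.

    Assumed rule, inferred from the table: P(positive) = base + step * k,
    where k is the number of parents in the positive state.
    """
    rows = []
    for states in product(["Positive", "Negative"], repeat=n_parents):
        k = states.count("Positive")
        p_positive = round(base + step * k, 2)
        p_negative = round(1.0 - p_positive, 2)
        rows.append((states, k, p_positive, p_negative))
    return rows


cpt = build_cpt()
print(" | ".join(PARENTS + ["k", "Positive", "Negative"]))
for states, k, p_pos, p_neg in cpt:
    print(" | ".join(states), "|", k, "|", f"{p_pos:.2f}", "|", f"{p_neg:.2f}")
```

Generating the table from a counting rule keeps the number of elicited parameters at two instead of sixteen, at the cost of treating all four parents as equally influential.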

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, H.; Li, D.; Sheng, T.; Sheng, J.; Jing, P.; Zhang, D. A Modeling of Human Reliability Analysis on Dam Failure Caused by Extreme Weather. Appl. Sci. 2023, 13, 12968. https://doi.org/10.3390/app132312968
