Abstract

In recent years, social networks have developed rapidly and have become the main platform for the release and dissemination of fake news. Research on fake news detection has attracted extensive attention in the field of computer science. Fake news detection technology has made many breakthroughs recently, but many challenges remain. Although several review papers on fake news detection exist, this paper presents a more detailed and comprehensive review. The concepts related to fake news detection, including fundamental theories, feature types, detection techniques and detection approaches, are introduced. Specifically, based on an extensive survey and careful organization of the literature, a classification method for fake news detection is proposed. The datasets for fake news detection in different fields are also compared and analyzed. In addition, the tables and figures summarized here help researchers easily grasp the full picture of fake news detection.

1. Introduction

With the rapid development of network technology and the widespread application of social networks, platforms such as Facebook, Twitter, YouTube, Weibo and Zhihu (the last two are based in China) have become important sources and main venues for users to publish, obtain, and share information. However, they also provide a hotbed for the proliferation of fake news on the internet. Since the 2016 US presidential election, social networks have faced increasing pressure to crack down on fake news [1].

The term “fake news” has evolved over time, and there is still no exact definition of it. Fake news was initially defined as intentionally and verifiably false news that may mislead readers [2]. Later, other definitions were established, such as in the Collins English Dictionary, where the term was defined as false and often sensational information spread under the guise of news reports. All of these definitions agree that fake news is false. However, they do not agree on whether fake news includes satire, rumors, conspiracy theories, misinformation, and hoaxes [3,4,5]. Recently, some scholars reported that politicians label news that does not support them as fake news, so the term itself cannot express the authenticity of the information [6]. Some have therefore rejected the term “fake news” because it carries connotations of “unstable” and “absurd” [7].

Meanwhile, many terms and concepts related to fake news have been found in the literature. The elementary terms related to fake news generally include misinformation, disinformation and malinformation. There are also general terms, including false information, false news, and information disorder [3]. These terms all attempt to define false information in the fields of news and politics. In fact, such fluid terminology causes semantic confusion, making it difficult to study and detect fake news [8]. It may be more accurate to use more objective, comprehensive and verifiable general terms [8,9].

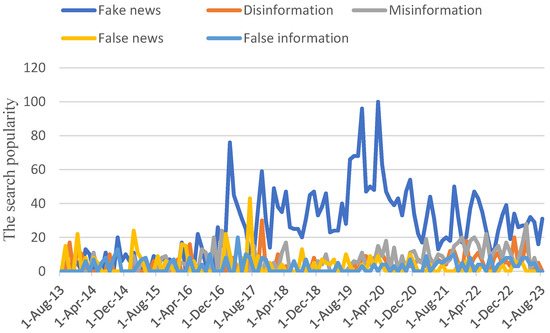

However, the term “fake news” has attracted considerable attention in recent years. It was named word of the year by the Macquarie Dictionary in 2016 and by the American Dialect Society and the Collins English Dictionary in 2017 [10]. The Google Trends service shows the search popularity of the common terms mentioned above over the past ten years, as shown in Figure 1. The search popularity of these common terms did not differ significantly before June 2016. However, starting from around the second half of 2016, the popularity of the term fake news exceeded that of the other terms. This confirms that the term fake news has been a topic of public debate since the 2016 US presidential election. With the spread of COVID-19 and the US presidential election in 2020, the search popularity of the term fake news reached its peak by March 2020. In the past three years, the search index for fake news has generally been higher than that of the other terms. Given its popularity, the term fake news, rather than the other general terms, is used for the analysis in this paper.

Figure 1.

The Google Trends analysis of common terms related to fake news in the past decade.

For financial and ideological purposes, a significant amount of fake news has been produced and distributed through social networks [11]. Zhang et al. [12,13] showed that fake news has three basic characteristics: volume, velocity, and veracity (3V). That is, fake news is massive, spreads in real time, and is uncertain in authenticity. Most people define fake news as “deliberate and verifiable false news” [14,15]. Authenticity and intention are two important characteristic dimensions of fake news [3,16]. Table 1 shows the similarities and differences of basic terms related to fake news based on these two characteristics [3,17].

Table 1.

Comparison of terms related to fake news.

To cope with the chaos of information dissemination in the new media era, some online fact-checking systems have emerged. Fact checking is the task of evaluating whether news content is true. It was initially developed in journalism and is usually carried out manually by specialized organizations, such as PolitiFact [18]. Although automatic fact-checking systems have been developed to assist human fact-checkers, they rely heavily on a knowledge base that requires regular updates [19]. Consequently, they struggle with emerging topics and knowledge, such as COVID-19 symptoms in the early stages of the pandemic. In addition, most online fact-checking systems focus mainly on verifying political news [12]. With the large amount of real-time information being created, commented on, and shared through social networks, automatic fake news detection technology is urgently needed [13,20,21,22].

This paper analyzes the relatively new and representative literature in the domain of fake news detection. In particular, we focused on how detection methods are derived from the characteristics of fake news. The references were obtained by searching Google Scholar for the keyword “fake news” or for terms that the authors declared equivalent to fake news, such as “false news”. These keywords were also combined with “detection”, “detection model”, “overview”, “survey”, “review”, “dataset”, etc., for search and selection. Subsequently, we studied the papers and systematically sorted the fake news detection models and datasets. Finally, considering factors such as citation frequency and publication time, the methods with a greater impact on existing technologies were listed.

The contributions of this article are as follows. Firstly, the characteristics of fake news and its related terms are analyzed. Secondly, the classifications of different fake news detection methods are compared from the perspective of characterization to detection. Thirdly, the existing approaches for detecting fake news are reviewed and divided into three categories: content-based, propagation-based and source-based methods. The advantages and disadvantages of the three detection methods are also given; moreover, the datasets for fake news detection in different fields are analyzed. Finally, some insights are offered to suggest directions for further research.

2. Development and Classification of Fake News Detection Technology

Many existing studies have adopted an intuitive method: that is, to detect fake news based on the news content. Capuano et al. [23] showed that the manual fact-checking method is insufficient when faced with a massive amount of fake news information. They reviewed content-based fake news detection methods adopting machine learning. In addition to content features, many other types of features can be added to the detection model. Shu et al. [14] pointed out that fake news is deliberately written to misguide readers, making it difficult to detect fake news based on its content. It is necessary to include other auxiliary information to assist in making decisions. Sahoo et al. [13] also indicated that detecting fake news based on shared content is difficult and requires the addition of new information related to the users’ profiles. Specifically, multiple features related to each user’s Facebook account and some news content features were combined. Sheikhi et al. [24] reported that fake news detection systems are generally divided into news content and social context methods based on their data sources. The social context method focuses on social features such as user interactions and participation based on the given news.

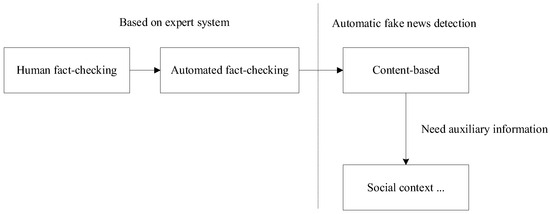

In summary, fake news detection technology has evolved from expert systems to automatic detection, as shown in Figure 2. For automatic detection, the above literature indicates that news content alone may not be enough and that additional auxiliary information, such as social context, is required.

Figure 2.

Fake news detection has evolved from expert systems to automatic detection.

There have been various classification methods for detecting fake news in recent years. Guo et al. [15] classified false information and other related terms based on the intention, dividing the existing false information detection methods into content-based, social context-based, feature fusion-based, and deep learning-based methods based on the type of features used. Most of the literature categorizes fake news detection into broader categories, including content-based and contextual aspects [3].

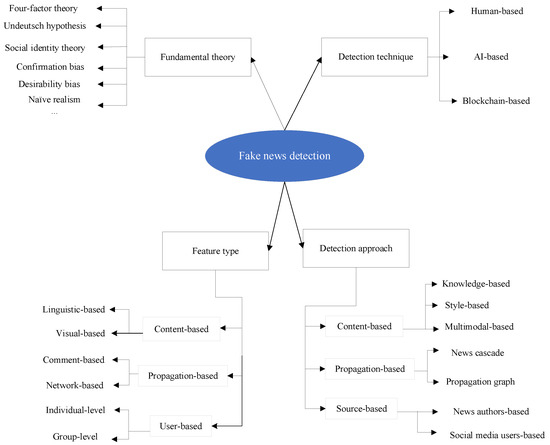

To facilitate the research of fake news detection on social networks, it is necessary to first study the characterizations of fake news and then propose the fake news detection methods. This paper sorts the classification of the detection methods from this perspective, as shown in Figure 3. Shu et al. [14] first used the theory of psychology and sociology to describe the background of fake news detection. Fake news can be categorized into traditional media fake news and social network fake news. The detection of fake news on traditional news media mainly depends on the content of the news, and social contextual information can serve as additional information for detecting fake news on social networks. Then, fake news detection methods can be classified into news content methods and social context methods. Furthermore, the news content model can be divided into knowledge-based and style-based methods. The social context model involves the social participation of relevant users and can be categorized into stance analysis and propagation analysis.

Figure 3.

Fake news from characterization to detection [12,14,17,25,26].

To better understand the fake news detection task, fake news can be investigated from both temporal and spatial dimensions. From the time angle, Zhou et al. [17] divided the life cycle of fake news into three stages: creation, publication, and propagation. According to the life cycle of fake news, they detailed the methods for detecting fake news from four aspects: knowledge, writing style, communication mode, and source. From the spatial dimension, Zhang et al. [12] divided fake news and its related content into four parts: creator, target victim, content, and social context. Therefore, fake news detection can be categorized into creator and user-based, content-based, and social context-based detection.

As the Undeutsch hypothesis implies, fake news may differ from real news in writing style. Therefore, Zhou et al. [25] studied the writing style of fake news based on vocabulary, syntax, semantics and discourse for early detection of fake news. Sharma et al. [26] studied different characteristics of fake news for detection, including the source, promoters, content, and responses on social networks. Consequently, they divided the fake news detection methods into three types, including content-based, feedback-based and intervention-based methods.

3. Fake News Detection

3.1. Problem Formulation

Let N = {n1, n2, …, nM} represent M news items. Each news item nj has at least one publisher P and is forwarded by at most K users U = {u1, u2, …, uK}. For the fake news detection task, the objective is to learn a function p(c | nj, N, P, U, θ) that detects fake news, where c represents the class label of the news and θ denotes the hyper-parameters. The learning function can be described as follows:
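As a minimal sketch consistent with the definitions above, and assuming binary labels (c = 1 for fake and c = 0 for true; this coding is an assumption, not something fixed by the formulation), the predicted label of news item n_j can be written as the most probable class under the learned function:

```latex
\hat{c}_j = \operatorname*{arg\,max}_{c \in \{0, 1\}} \; p\left(c \mid n_j, N, P, U, \theta\right)
```

Content-only approaches correspond to dropping P and U from the conditioning set, while propagation-based and source-based approaches make use of them.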

Some fake news detection approaches are only based on the news itself, while in other detection methods, the information of the news publishers or forwarders is also considered. The following section will describe the fake news detection methods in detail.

3.2. Fundamental Theories of Fake News Detection

The cognitive and behavioral theories in social sciences and economics, as the fundamental theories of fake news, can promote the establishment of reasonable and interpretable fake news detection models.

To illustrate the role of fundamental theories, such as cognitive and behavioral theories, in fake news detection, a comparison was made between fake news detection and traditional distributed denial of service (DDoS) attack detection, as shown in Figure 4.

Figure 4.

The role of the fundamental theories in fake news detection.

Why are these two detection models compared? Fake news and distributed denial of service attacks are both very important issues in the field of information security. The research on fake news detection and distributed denial of service attack detection models plays an important role in protecting the network ecological environment. Before detection, the unique features of fake news and distributed denial of service attacks should be identified first. Therefore, it is necessary to first study the definitions of fake news and DDoS and then further summarize their characteristics, namely the detection criterion, based on the definitions. For example, traffic anomalies and frequency anomalies can serve as the detection criteria for distributed denial of service attacks. Meanwhile, relevant theories have revealed that fake news and true news are different in terms of presentation form and propagation patterns. Cognitive and behavioral theories can serve as the criteria for detecting fake news. For example, the four-factor theory suggests that lies are expressed differently in terms of emotions, behavioral control, and other aspects [17]. The latest research also shows that fake news spreads faster and further than true news [12,13]. Thus, the content or propagation features can be extracted based on the above theories for fake news detection.

3.3. Fake News Detection Approaches

News involves multiple factors in the propagation process. Chi et al. [27] argued that most online data involve four aspects: people, relationship, content, and time. Ruchansky et al. [28] showed that fake news has the following three characteristics: the text of the article, the user response after receiving the text, and the user source promoting the article. To summarize and unify the relevant concepts, scholars have proposed the term “social context” to describe how news is disseminated online [12].

As the classification of fake news detection approaches into content-based and context-based is too broad, this article attempts to describe different fake news detection methods based on detection characteristics. Meel et al. [29] detected false information based on text/content, visual, user, message, propagation, temporal, structural, and linguistic features. It is worth noting that some of these features can be grouped together. For example, text/content, visual, message, and linguistic features all belong to content-based features. The propagation, temporal, and structural features can be referred to as propagation features. Moreover, source detection, which refers to identifying individuals or locations in the network where false information begins to spread, plays a crucial role in reducing misinformation [29].

Consequently, to highlight the characteristics of the detection method, this paper divides fake news detection methods into the following three types: content-based, propagation-based, and source-based methods. The pros and cons of the three detection approaches are shown in Table 2 [17,30]. Based on this viewpoint, the current fake news detection methods have been carefully organized, as shown in Table 3.

Table 2.

The pros and cons of fake news detection methodologies.

3.3.1. The Content-Based Methods

(1) Knowledge-based

For this method, a knowledge base or knowledge graph must first be established. Knowledge can be expressed in the form of (subject, predicate, object) triples [17]. Then, the knowledge extracted from a piece of news is compared with the facts in the knowledge base to check its authenticity. This is essentially the workflow of an automatic fact-checking system, which can be divided into two stages: fact extraction and fact checking.
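The fact-checking stage can be illustrated with a toy example. The sketch below assumes that fact extraction has already produced a (subject, predicate, object) triple, and it uses a small hand-written knowledge base; the names `KNOWLEDGE_BASE` and `check_triple` are hypothetical, and the contradiction rule (one object per subject and predicate) is a simplifying assumption rather than a method taken from the cited works.

```python
from typing import Optional, Set, Tuple

Triple = Tuple[str, str, str]

# Hypothetical toy knowledge base of (subject, predicate, object) triples.
KNOWLEDGE_BASE: Set[Triple] = {
    ("paris", "capital_of", "france"),
    ("berlin", "capital_of", "germany"),
}


def check_triple(triple: Triple, kb: Set[Triple] = KNOWLEDGE_BASE) -> Optional[bool]:
    """Return True if the triple is supported, False if contradicted, None if unknown."""
    subj, pred, obj = (part.lower() for part in triple)
    if (subj, pred, obj) in kb:
        return True
    # Simplifying assumption: each (subject, predicate) pair has a single object,
    # so a known pair with a different object counts as a contradiction.
    if any(s == subj and p == pred for s, p, _ in kb):
        return False
    # The knowledge base says nothing about this subject and predicate.
    return None


print(check_triple(("Paris", "capital_of", "France")))   # supported
print(check_triple(("Paris", "capital_of", "Germany")))  # contradicted
print(check_triple(("Rome", "capital_of", "Italy")))     # unknown
```

The third case shows the main limitation noted for knowledge-based methods: a claim about something absent from the knowledge base can be neither confirmed nor refuted.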

Some studies use knowledge-based methods to detect fake news. For example, researchers have explored how to automate fact-checking, using natural language processing (NLP) and machine learning techniques to automatically predict the authenticity of reports [31]. Mayank et al. [32] proposed a knowledge graph (KG)-based framework, which includes a news encoder, an entity encoder, and a final classification layer, to detect fake news articles. Firstly, NLP-based technology is used to encode the headline of the news. Then, named entity recognition (NER) is adopted to recognize and extract named entities from the text, and named entity disambiguation (NED) is adopted to map the entities to the KG. The ComplEx model is adopted to obtain the representation of the entities. Finally, the embeddings of the two parts are combined to detect fake news. Hu et al. [33] designed a new graph neural model, which compares the news with an external knowledge base. Firstly, a directed heterogeneous document graph containing topics and entities is constructed for each news item. Then, through a carefully designed entity comparison network, different entity representations are compared. Finally, the resulting comparison features are fed into the fake news classifier. Pan et al. [34] proposed a fake news detection method based on incomplete and imprecise knowledge graphs using the TransE and B-TransE methods. Firstly, three different knowledge graphs are created to generate background knowledge. Then, the B-TransE method is used to establish the entity and relation embeddings and check whether news articles are authentic. The results show that even an incomplete knowledge graph can be used for fake news detection.
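The idea behind translation-based embeddings such as TransE can be illustrated with a toy example. TransE represents a triple (h, r, t) as vectors and treats it as plausible when h + r lies close to t. The sketch below assumes hand-picked two-dimensional embeddings and an arbitrary threshold (both hypothetical, chosen only for illustration); it is not the B-TransE procedure of [34], which learns the embeddings from knowledge graphs.

```python
import math
from typing import Sequence


def transe_distance(head: Sequence[float], relation: Sequence[float],
                    tail: Sequence[float]) -> float:
    """Euclidean norm of (head + relation - tail); smaller means more plausible."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


def is_plausible(head: Sequence[float], relation: Sequence[float],
                 tail: Sequence[float], threshold: float = 0.5) -> bool:
    """Accept the triple when its distance is within a (hypothetical) threshold."""
    return transe_distance(head, relation, tail) <= threshold


# Toy embeddings, invented for illustration only.
paris = [1.0, 0.0]
capital_of = [0.0, 1.0]
france = [1.0, 1.0]
germany = [3.0, 1.0]

print(transe_distance(paris, capital_of, france))   # head + relation equals tail
print(transe_distance(paris, capital_of, germany))  # tail is far from head + relation
```

In practice the threshold would be tuned on held-out triples rather than fixed by hand.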

(2) Style-based

The style-based approach can evaluate news intent [17]. Pre-trained language models based on neural networks and deep learning have brought breakthroughs in natural language processing, and they offer new ideas for fake news detection.

Nasir et al. [35] proposed a combination of convolutional neural networks and recurrent neural networks for fake news classification. Zhou et al. [25] proposed a theory-driven fake news detection method. The news content is analyzed based on vocabulary, syntax, semantics, and discourse. Furthermore, the supervised machine learning method is used to detect fake news. Choudhary et al. [36] proposed a linguistic model to extract the syntactic, grammatical, sentimental, and readability features of specific news. Moreover, the sequential learning method based on a neural network is adopted to detect fake news. Alonso et al. [10] pointed out that the authors of fake news adopt various stylistic tricks, such as stimulating readers’ emotions, to promote their creative success. The role of emotional analysis in detecting fake news has been studied. Verma et al. [37] detected fake news based on linguistic features combined with the word-embedding technology. The extracted linguistic features include syntactic and semantic features. Umer et al. [38] designed a fake news stance detection framework based on titles and news text. Firstly, principal component analysis (PCA) and the Chi-square test are used to extract features, and then the extracted features are fed into the CNN-LSTM classifier.
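To make the notion of style features concrete, the sketch below computes a few simple lexical and readability-style statistics from raw text. The feature set and the function name `style_features` are assumptions chosen for illustration; they are far simpler than the vocabulary, syntax, semantics, and discourse features used in the works cited above, and the resulting dictionary would still need to be passed to a classifier.

```python
import re
from typing import Dict


def style_features(text: str) -> Dict[str, float]:
    """Compute a handful of illustrative writing-style statistics."""
    words = re.findall(r"[A-Za-z']+", text)
    # Split on runs of sentence-ending punctuation and drop empty fragments.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    n_words = len(words)
    # Count fully capitalized words of at least two letters (e.g. "SHOCKING").
    n_upper = sum(1 for w in words if len(w) > 1 and w.isupper())
    return {
        "num_words": n_words,
        "avg_word_length": sum(len(w) for w in words) / n_words if n_words else 0.0,
        "avg_sentence_length": n_words / len(sentences) if sentences else 0.0,
        "exclamation_count": text.count("!"),
        "uppercase_word_ratio": n_upper / n_words if n_words else 0.0,
    }


features = style_features("SHOCKING news! You will not believe it!")
for name, value in features.items():
    print(f"{name}: {value}")
```

Hand-crafted features of this kind are easy to interpret, which is one reason theory-driven approaches use them alongside learned representations.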

With the development of multimedia technology, fake news tries to attract and mislead consumers by using images or videos, making visual features an integral part of fake news. Cao et al. [39] showed that although visual content is very important, our understanding of the visual features in fake news detection is still limited. Qi et al. [40] designed a CNN-based neural network framework that fuses visual information from the frequency domain and the pixel domain to detect fake news. Uppada et al. [41] proposed the credibility neural network to evaluate the credibility of images on online social networks (OSNs). They applied the spatial characteristics of CNNs to find physical changes in images and analyze whether images reflect negative emotions.

In recent years, the issue of facial manipulation videos has received widespread attention, especially deepfake techniques that use deep learning tools to manipulate images and videos. A deepfake algorithm can use a generative model to replace the face in a target video [42]. As interest in deepfake technology grows, more and more deepfake detection techniques are being developed. Early attempts mainly focused on the feature inconsistencies caused by the facial synthesis process, while recently proposed methods center on basic features, where camera fingerprinting and biological signal-based schemes perform better [42].

(3) Multimodal-based

Currently, most fake news detection schemes focus on a single modality (such as text or visual features only). Due to the combination of multimodal information in social media in recent years, fake news detection based on multimodal information has gradually attracted extensive research. How to effectively fuse the data from different modalities is a challenge.

Song et al. [43] designed a multimodal fake news detection method that combines multimodal attention residuals and multi-channel convolutional neural networks (CNNs). Singh et al. [44] proposed a multimodal approach for detecting fake news using the text and visual features of news stories. Khattar et al. [45] proposed a fake news detection model consisting of a bimodal variational autoencoder and a binary classifier. The bimodal variational autoencoder is used for the multimodal representation, with the input being the text of the post and the accompanying images. Segura-Bedmar et al. [46] used unimodal and multimodal methods to classify fake news in a fine-grained way. Experiments showed that the multimodal CNN method that combines text and image data has the best performance. Wang et al. [47] extracted features based on text, images, and user attributes for Sina Weibo as well as image–text correlation features. Finally, a new deep neural network framework was established to detect fake news.

3.3.2. Propagation-Based Methods

Many studies have shown that fake news differs from true news in how it is disseminated [48], which makes propagation-based methods a promising direction for fake news detection. Information propagation on social networks has strong temporal characteristics: sudden updates, fast propagation speed, and rapid disappearance [29]. Based on the factors involved in news propagation, the propagation-based approaches can be categorized into (1) those that only consider the propagation pattern of the news itself, known as the news cascade, and (2) those that capture additional information, such as comments, during the news propagation process [17].

(1) News cascade

In recent years, the news cascade, a tree-like structure, has been used to describe news propagation. This structure only represents the dissemination mode of news and does not contain any additional information [17].
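A news cascade can be represented as a tree of (parent, child) forwarding edges, from which structural features are computed. The sketch below is a minimal illustration under that assumption; the edge list, the user identifiers, and the function name `cascade_metrics` are hypothetical, and the three features shown (size, depth, maximum breadth) are only examples of what a propagation-based detector might extract.

```python
from collections import defaultdict, deque
from typing import Dict, Hashable, Iterable, Tuple


def cascade_metrics(edges: Iterable[Tuple[Hashable, Hashable]],
                    root: Hashable) -> Dict[str, int]:
    """Compute size, depth, and maximum breadth of a cascade tree rooted at `root`.

    Assumes the edges form a tree (each node is forwarded from exactly one parent).
    """
    children = defaultdict(list)
    for parent, child in edges:
        children[parent].append(child)

    level_sizes = defaultdict(int)  # number of nodes at each depth
    queue = deque([(root, 0)])
    size = 0
    # Breadth-first traversal from the original post.
    while queue:
        node, depth = queue.popleft()
        size += 1
        level_sizes[depth] += 1
        for child in children.get(node, []):
            queue.append((child, depth + 1))

    return {
        "size": size,                              # total posts in the cascade
        "depth": max(level_sizes),                 # longest forwarding chain
        "max_breadth": max(level_sizes.values()),  # widest level of the tree
    }


# Hypothetical cascade: u0 posts, u1 and u2 forward it, u3 forwards u1, u4 forwards u3.
toy_edges = [("u0", "u1"), ("u0", "u2"), ("u1", "u3"), ("u3", "u4")]
print(cascade_metrics(toy_edges, "u0"))
```

Because the structure carries no text or user attributes, features of this kind depend only on how the news spread, which matches the definition of a news cascade given above.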

Graph neural networks (GNNs) can better simulate the news propagation on social networks, and thus, there are numerous fake news detection methods based on the GNNs. Monti et al. [48] applied the geometric deep learning model to describe the propagation mode of fake news. Four types of features, including user profile, user activity, news cascade, and content, are extracted to describe news, users, and their activity. Silva et al. [49] showed that the news propagation model, including the corresponding tweets and retweets, can be seen as a tree. The tree is composed of multiple cascades, each consisting of a series of tweets/retweets. They proposed a propagation network model called Propagation2Vec for the early detection of fake news. A hierarchical attention mechanism is adopted to encode the propagation network, which can assign corresponding weights to different cascades. At the same time, a technique for reconstructing a complete propagation network in the early stages has been proposed.

Barnabò et al. [50] used three graph neural network algorithms, including GraphSAGE, GAT and GCN, for fake news detection, with the URL diffusion cascade as the input. An active learning method has also been proposed for sample annotation. Due to the dynamic nature of information diffusion networks in the real world, Song et al. [51] designed a fake news detection model called the Dynamic Graph Neural Network (DGNF). Specifically, the discrete time dynamic graph (DTDG) is used to model news propagation networks. Jeong et al. [52] designed a hypergraph neural network to jointly model multiple propagation trees for fake news detection. Han et al. [53] used a GNN to model information propagation patterns. Continual learning techniques are used to gradually train GNNs so that they can achieve stable performance across different datasets. Wei et al. [54] proposed the concept of a propagation forest to cluster propagation trees at the semantic level. A new framework based on Unified Propagation Forest (UniPF) was proposed to fully explore the potential correlation between propagation trees and improve the performance of fake news detection. Murayama et al. [55] designed a point process model for fake news dissemination on Twitter. In the model, the dissemination of fake news consists of two parts as follows: the cascade of original news and the cascade of assertion of news falsehoods. The experiments indicated that the proposed method is helpful in detecting and mitigating fake news.

(2) Propagation graph

Sometimes, it is also necessary to consider other additional information, such as comments and user characteristics, in the dissemination of information. Various propagation graphs can be created to model the information dissemination process.

Zhou et al. [17] showed that the propagation-based fake news detection method can be classified into news cascades and self-defined graphs. For self-defined graphs, homogeneous, heterogeneous, or hierarchical networks can be constructed to describe the propagation pattern of fake news. Ni et al. [56] proposed a new neural network framework for detecting fake news based on source tweets and their propagation structures. The text semantic attention network and the propagation structure attention network are used to obtain the semantic features and propagation structure features of tweets, respectively. Shu et al. [57] designed a hierarchical propagation network for fake news detection. Firstly, a hierarchical propagation network is constructed, based on which the corresponding features are extracted from the structural, temporal, and linguistic perspectives. Finally, the effectiveness of these propagation network features in fake news detection is verified. Davoudi et al. [58] used both the propagation tree and the stance network for early fake news detection. A new method for constructing the stance network has been proposed, and various graph-based features are extracted for sentiment analysis. The node2vec graph embedding technique is used to obtain feature representations of the two networks mentioned above. Yang et al. [30] designed a model called PostCom2DR for rumor detection. Firstly, a response graph is created between posts and comments. Secondly, a two-layer GCN and a self-attention mechanism are used to obtain the features of comments. Finally, a post-comment co-attention mechanism is applied to fuse information. Shu et al. [59] described the relationships among publishers, news, and users, which may improve the detection of fake news. A tri-relationship-embedding framework, which models both publisher–news relationships and user–news interactions, is proposed for fake news detection. Nguyen et al. [60] proposed a novel graph representation called the Factual News Graph (FANG), which models all major social participants and their interactions to improve representation quality.

3.3.3. Source-Based Methods

The source-based methods evaluate the authenticity of news based on the credibility of the source. The source mainly includes the news authors and social media users. This method, an indirect way to detect fake news, may seem arbitrary but is very effective [17].

(1) News author-based

Many studies have shown that considering news authors can improve the performance of fake news detection. For example, Sitaula et al. [61] reported that adding features such as the number of authors and the historical connection between authors and fake news can improve fake news detection. They showed that fake news can be effectively detected by adopting a few features based on the source’s credibility. Yuan et al. [62] indicated that the content-based and propagation-based methods are not suitable for the early detection of fake news. They pointed out that the credibility of publishers and users can be adopted to quickly locate fake news in a massive amount of news. They proposed a multi-head attention network that considers publishing and forwarding relations to optimize fake news detection. Luvembe et al. [63] used the stacked BiGRU to extract dual emotional features derived from publisher emotions and social emotions. An adaptive genetic weight update-random forest (AGWu-RF) was proposed to improve the accuracy of fake news detection.
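The idea of using an author's or publisher's track record as a feature can be sketched as follows. The example assumes that each source has a history of past items already labeled true or fake, and it estimates credibility as a smoothed fraction of true items; the function name `source_credibility` and the add-one smoothing are illustrative assumptions, not the features or models used in [61,62,63].

```python
from typing import Sequence


def source_credibility(history: Sequence[bool],
                       prior_true: int = 1, prior_fake: int = 1) -> float:
    """Smoothed share of a source's past items that were verified as true.

    `history` holds one boolean per past item (True = verified true).
    The priors implement add-one smoothing so that a source with no
    history receives a neutral score of 0.5.
    """
    n_true = sum(1 for item in history if item)
    return (n_true + prior_true) / (len(history) + prior_true + prior_fake)


# Hypothetical publishing histories.
print(source_credibility([]))                         # unknown source
print(source_credibility([True, True, False, True]))  # mostly reliable source
print(source_credibility([False] * 8))                # repeatedly unreliable source
```

A score like this is available as soon as a news item is published, which is why source-based signals are attractive for early detection.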

(2) Social media user-based

Fake news can also be detected by detecting malicious social media users. Social media users are the communicators of news on social media, and malicious users are more likely to spread fake news. Social bots, Sybil accounts, fake profiles, and fake accounts are typical examples of malicious social media users [64]. Kudugunta et al. [65] proposed a deep neural network based on the long short-term memory (LSTM) architecture. This network uses content and metadata to detect bots at the tweet level. Rostami et al. [66] pointed out that feature selection is the main process of fake account detection based on machine learning. A multi-objective hybrid feature selection method is used for feature selection. Gao et al. [67] designed an end-to-end Sybil detection model based on the content. The self-normalizing CNN and bi-SN-LSTM are simultaneously adopted to improve the performance of fake news detection. Bazmi et al. [68] pointed out that, according to the fundamental theories in fake news detection, the credibility of authors and users varies across different themes. They proposed a network model named MVCAN, which jointly models the potential topic credibility of authors and users in fake news detection. Zhang et al. [69] extracted a set of explicit and implicit features from the text information, and a deep diffusion network model was proposed based on the connections among the news content, creators, and news subjects.

Table 3.

Classifications and comparisons of various fake news detection methods.

| Detection Approach | Subcategory | Main Models | Datasets | Characteristics |
|---|---|---|---|---|
| Content-based | Knowledge-based | biLSTM, ComplEx [32] | Kaggle, CoAID | The authenticity of the news is evaluated |
| | | GNN [33] | LUN, SLN | |
| | | TransE [34] | Kaggle + BBC news, etc. | |
| | Style-based | CNN, RNN [35] | FA-KES, ISOT | Evaluate news intention |
| | | BOW, SVM, LR, etc. [25] | PolitiFact, BuzzFeed | |
| | | Sequential neural network [36] | BuzzFeed, Random political | |
| | | WE, LFS, KNN, Ensemble [37] | WELFake | |
| | | PCA, CNN-LSTM [38] | FNC | |
| | | CNN-RNN [40] | Weibo dataset | |
| | | SENAD, CNN [41] | FakeNewsNet | |
| | Multimodal-based | Residual network, CNN [43] | Tweet, Weibo | Obtain better results than unimodal methods; there is significant room for improvement |
| | | LIWC, LDA, LR, KNN, etc. [44] | Kaggle | |
| | | VAE [45] | Twitter, Weibo | |
| | | CNN, BiLSTM, BERT [46] | Fakeddit | |
| | | ERNIE, CNN, FNN [47] | | |
| Propagation-based | News cascade | Geometric DL [48] | | Evaluate news intention; more robust; directly capture news propagation |
| | | Propagation2Vec [49] | PolitiFact, GossipCop | |
| | | GraphSAGE, GAT and GCN [50] | FbMultiLingMisinfo, PolitiFact | |
| | | DTDG, GNN [51] | Weibo, FakeNewsNet, Twitter | |
| | | Hypergraph NN [52] | FakeNewsNet | |
| | | GNN [53] | FakeNewsNet | |
| | | UniPF [54] | FakeNewsNet, Twitter | |
| | | Point process model [55] | | |
| | Propagation graph | Graph attention networks [56] | Twitter15, Twitter16 | Evaluate news intention; more robust; indirectly capture the propagation of the news |
| | | Hierarchical propagation network, GNB, DT [57] | FakeNewsNet | |
| | | Stance network, RNN [58] | FakeNewsNet | |
| | | Tri-relationship, TriFN [59] | FakeNewsNet | |
| | | FANG [60] | | |
| Source-based | News author-based | Seven classifiers [61] | BuzzFeed news, PolitiFact | Detect fake news by assessing the credibility of the authors or publishers |
| | | Multi-head attention network, CNN [62] | Twitter15, Twitter16, Weibo | |
| | | BiGRU, AGWu-RF [63] | RumorEval19, Pheme | |
| | Social media user-based | LSTM [65] | Presented by others | Detect fake news by detecting malicious social media users |
| | | mRMR, RF, NB, SVM [66] | | |
| | | CNN, bi-SN-LSTM [67] | MIB | |
| | | Multi-view co-attention network [68] | PolitiFact, GossipCop | |
| | | Diffusive network [69] | PolitiFact | |

Figure 5 introduces the concepts related to fake news detection, which cover the following four aspects: fundamental theory, feature type, detection technique, and detection approach. The fundamental theories include the four-factor theory, the Undeutsch hypothesis, social identity theory, confirmation bias, desirability bias, naïve realism, etc. [17]. The feature types include content-based features, which are either “linguistic-based” or “visual-based”. Propagation-based features can be comment-based or network-based. The comment-based method is a direct method for detecting fake news. In addition, different types of networks, such as stance networks and diffusion networks, can be constructed from the process of news dissemination. Existing network metrics (e.g., degree and clustering coefficient) can be used as network features, or embedding algorithms can be applied to extract network-embedding representations. User-based features can be categorized into individual-level and group-level features. The credibility of users can be estimated from individual-level features such as registration age and the number of followers/followees. Group-level features describe the characteristics of the different communities formed by the spreaders of fake news and true news [14]. Detection techniques include human-based, AI-based, and blockchain-based technologies [3]. Human-based technology relies on human knowledge, applied through crowdsourcing and fact-checking, to detect fake news. AI-based technology applies shallow or deep machine learning approaches to detect fake news. With blockchain-based technology, fake news can be detected by checking news sources and tracking news. Finally, the detection approaches comprise the “content-based”, “propagation-based”, and “source-based” methods described in Section 3.3.

Figure 5.

An overview of the concepts related to fake news detection.
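As an illustration of using existing network metrics as features, the following minimal sketch computes the node degree and the local clustering coefficient on a small propagation network. The edge list, the user identifiers, and the choice to treat the network as undirected are assumptions made for this example; real stance or diffusion networks are usually directed and far larger.

```python
from itertools import combinations


def build_adjacency(edges):
    """Build an undirected adjacency map (node -> set of neighbors), ignoring self-loops."""
    adjacency = {}
    for u, v in edges:
        if u == v:
            continue
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


def degree(adjacency, node):
    """Number of distinct neighbors of a node."""
    return len(adjacency[node])


def clustering_coefficient(adjacency, node):
    """Fraction of pairs of neighbors of `node` that are themselves connected."""
    neighbors = adjacency[node]
    k = len(neighbors)
    if k < 2:
        return 0.0  # by convention, nodes with fewer than two neighbors score 0
    links = sum(1 for a, b in combinations(neighbors, 2) if b in adjacency[a])
    return 2.0 * links / (k * (k - 1))


# Hypothetical forwarding relations among four users.
edges = [("u1", "u2"), ("u1", "u3"), ("u2", "u3"), ("u3", "u4")]
graph = build_adjacency(edges)
network_features = {
    node: (degree(graph, node), clustering_coefficient(graph, node))
    for node in graph
}
print(network_features)
```

Per-node values like these can be aggregated (for example, averaged over all spreaders of one news item) to obtain a fixed-length feature vector for that item.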

3.4. Discussion

Of the three fake news detection methods discussed in this paper, the content-based method is generally language-related. This means that a detection model applicable to political fake news may not be applicable to fake news in other fields. If additional information, such as comments generated during information dissemination, is not considered, the propagation-based method is language-independent. Therefore, this method can better resist adversarial attacks and is more robust. The source-based method is simple but effective. User characteristics are generally collected from the user’s homepage on social media, such as the personal description, gender, fan base, followers, place of residence, and hobbies. However, with growing awareness of privacy protection, many users are unwilling to disclose much of their information. Some source-based detection methods have therefore shifted to detecting malicious users through the text they publish, and so these methods are also language-related.

The content-based detection method and source-based method are suitable for the early detection of fake news, as fake news can be recognized before it spreads on social media. However, the propagation-based detection is inefficient for the early detection of fake news, as it is difficult to detect fake news before it spreads. It can be seen that the content-based detection method and the source-based detection method generally have higher detection efficiency than the propagation-based detection.

The above three fake news detection methods are not independent. It is possible to jointly predict fake news from multiple perspectives; therefore, the advantages of the different approaches can be combined.

4. Datasets

For fake news detection, useful features are extracted from social media datasets, and an effective detection model is established to detect fake news in the future. Supervised learning methods are widely used in the field of fake news detection. Although these methods have shown promising results, reliable annotated datasets are needed to train the detection model [70]. Therefore, establishing a large-scale dataset with multidimensional information is very important.

Vlachos and Riedel [71] were the first to publish a dataset in the field of fake news, but it contained only 221 statements. In recent years, several fake news datasets have been proposed, covering politics, security, health, and satire. The relatively new and representative datasets are classified by the data source of the news, as shown in Table 4. The CHECKED [72] and Weibo21 [73] datasets were collected from Weibo, COVID-19 [74] and FibVID [75] were collected from Twitter, and BuzzFeed [76] and Fakeddit [77] were collected from Facebook and Reddit, respectively. Datasets such as IFND [78], FakeNewsNet [79], FA-KES [80], ISOT [81], GermanFakeNC [82], BanFakeNews [83] and LIAR [84] are sourced from news websites.

Table 4.

The relatively new and representative datasets in fake news detection.

Table 4 shows the characteristics of the datasets, including the source, dataset name, feature types, modality, news domain, language, annotation methods for true news, annotation methods for fake news, and time. The main differences between these datasets lie in the data features they contain, the granularity of classification, and the language of the news. CHECKED and Weibo21 are Chinese datasets; IFND, GermanFakeNC, and BanFakeNews are Indian, German, and Bangladeshi datasets, respectively; and the rest are English datasets.

4.1. Annotation Methods: Manual to Automatic

The annotation of news, which is done either manually or automatically, is a major bottleneck in constructing datasets. Manual annotation is labor-intensive, as it requires a careful examination of the news content and other additional information. Crowdsourcing can be used to reduce the annotation burden on experts. Moreover, automatic annotation based on machine learning has also received attention. The annotation methods for news are also listed in Table 4. Most datasets are collated from fact-checking websites and labeled by human experts [80]. The fact-checking systems claim to operate on the principles of “independence”, “objectivity”, and “neutrality”. Because news content may be entirely or only partially true, most fact-checking websites describe the authenticity of news on a multi-level scale rather than with the two simple judgments “True” and “Fake”. For example, PolitiFact, a trusted fact-checking website in the United States, provides six rating levels through a scale called the Truth-O-Meter: “True”, “Mostly True”, “Half True”, “Mostly False”, “False”, and “Pants on Fire”. Figure 6 shows a Facebook post that is rated as “False” by the Truth-O-Meter. Table 5 lists the rating levels of common fact-checking websites, including PolitiFact [85], Snopes [86], TruthOrFiction [87], CheckYourFact [88], FactCheck [89], Gossip [90], and Ruijianshiyao [91].

Figure 6.

Illustrations of the fact-checking website PolitiFact.com.

Table 5.

The rating levels of common fact-checking websites.

Fake news detection is generally formulated as a binary classification problem, which means that news is classified in a coarse-grained manner. Thus, when fact-checking websites are used to label news, the multi-level ratings need to be merged into two categories, “True” and “Fake” [75,92]. For example, Khan et al. [92] used the LIAR dataset collated from PolitiFact.com to carry out a fake news detection experiment. Statements labeled as “Half True”, “Mostly True” and “True” were classified as “True”, while statements labeled as “Pants on Fire”, “False”, and “Mostly False” were classified as “Fake”. Nakamura et al. [77] introduced the Fakeddit dataset, in which samples have two-way, three-way, and six-way category labels. In addition to the “True” label, the six-way labels include the fake categories “Manipulated Content”, “False Connection”, “Satire/Parody”, “Misleading Content” and “Imposter Content”. The three-way labels indicate whether a sample is true, fake with true text, or fake with false text. The two-way labels include only fake and true. Segura-Bedmar et al. [46] performed fine-grained classification of fake news based on texts and images on the Fakeddit dataset. Their results showed that adding images increased the detection rate of some fake news categories and slightly improved that of the others.
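The coarse-grained relabeling described above for the six PolitiFact rating levels can be sketched as a simple lookup. The grouping follows the mapping reported for Khan et al. [92]; the label strings are the rating names used in the text and are an assumption, since the strings stored in a given dataset file may be encoded differently.

```python
# Rating names as written in the text; the on-disk encoding of a dataset may differ.
TRUE_RATINGS = {"true", "mostly true", "half true"}
FAKE_RATINGS = {"pants on fire", "false", "mostly false"}


def to_binary(rating):
    """Collapse a six-level rating into the coarse labels "True" or "Fake"."""
    key = rating.strip().lower()
    if key in TRUE_RATINGS:
        return "True"
    if key in FAKE_RATINGS:
        return "Fake"
    # Fail loudly instead of silently guessing a label for an unknown rating.
    raise ValueError(f"unknown rating: {rating!r}")


ratings = ["True", "Mostly True", "Half True", "Mostly False", "False", "Pants on Fire"]
binary_labels = [to_binary(r) for r in ratings]
print(binary_labels)
```

Raising an error on unknown ratings is a deliberate choice in this sketch: ratings from other fact-checking websites use different scales and should be mapped explicitly.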

The semi-supervised fact-checking approach and distant supervision methods are also used for automatic annotation. Salem et al. [80] used a semi-supervised fact-checking method to label news articles in a dataset. The crowdsourcing method is used to obtain information from news articles and then to check whether it matches the information in the VDC database, which represents the truth of the facts. Finally, unsupervised machine learning is used to cluster the articles into two sets based on the extracted information. Nakamura et al. [77] did not manually label each sample. Instead, distant supervision was used to generate the final tag.

4.2. Feature Types

The feature types include textual and visual features as well as social contextual features. Patwa et al. [74] published a manually annotated dataset containing 10,700 real and fake news articles about COVID-19. Only English text content was considered. TF-IDF was used for feature extraction, and SVM and other machine learning methods were used to detect fake news. Shu et al. [79] proposed the FakeNewsNet dataset, which contains two comprehensive datasets with different characteristics in terms of news content, social context, and spatio-temporal information. FakeNewsNet contains 23,921 texts, which were obtained from the fact-checking websites PolitiFact and GossipCop. The main feature of the dataset is that, in addition to the original news, it records which users have forwarded the news. Thus, the propagation pattern of the news can be represented as a network graph for further processing. Sharma et al. [78] proposed the Indian fake news dataset IFND, which consists of text and images. A multimodal method was also proposed, which considers both text and visual features for fake news detection. On the Fakeddit dataset, Nakamura et al. [77] showed that multimodal features perform best, followed by text-only and image-only features. Finally, the authors pointed out that some auxiliary information, such as the submission metadata, is useful for future research.
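To make the TF-IDF feature extraction step concrete, the following minimal sketch computes unsmoothed TF-IDF weights in plain Python. It is not the pipeline of Patwa et al. [74]: the whitespace tokenizer, the specific weighting formula (term frequency times log(N/df)), and the toy documents are assumptions, and library implementations typically add smoothing and normalization.

```python
import math
from collections import Counter


def tokenize(text):
    """Very simple tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


def tfidf(documents):
    """Return one {term: weight} dict per document using tf * log(N / df)."""
    tokenized = [tokenize(doc) for doc in documents]
    n_docs = len(tokenized)
    doc_freq = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))  # count each term once per document
    vectors = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        vectors.append({
            term: (count / total) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return vectors


# Hypothetical toy corpus of three short claims.
documents = [
    "vaccine causes magnetism",
    "vaccine trial results published",
    "trial results delayed",
]
tfidf_vectors = tfidf(documents)
print(tfidf_vectors[0])
```

In this toy corpus, a term that appears in only one document ("magnetism") receives a higher weight than a term shared by two documents ("vaccine"). The resulting vectors would then be passed to a classifier such as an SVM, which is not shown here.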

4.3. Discussion

The existing fake news datasets have significant differences in labeling categories, modalities, topic domains, and other aspects. Most datasets are related to politics and economics with limited coverage of topic areas. Additionally, some samples in some multi-category datasets were not annotated. Furthermore, most datasets only contain linguistic features. Few datasets contain both linguistic and social contextual features. From Table 3, it can be seen that most propagation-based detection methods use the FakeNewsNet dataset for experiments, and some source-based detection methods also use this dataset. The content-based detection method uses text-based or image-based datasets for experimentation. The dataset plays a very important role in training fake news detection models. As fake news takes on different forms, it is necessary to continuously iterate and update the datasets in terms of multimodality, dataset size, topic domains, and other aspects.

5. Conclusion

In the post-truth era, the public pays attention to the truth. Researchers have sought to improve performance in detecting fake news. About 40% of the research focuses on fake news detection by adopting machine learning [29]. Nevertheless, some key areas remain unresolved. The following highlights the current research gaps and future work directions.

(1) Fake news detection is an interdisciplinary study, involving graph mining, NLP, information retrieval (IR), and other fields [17]. We need to have a deeper understanding of what fake news is and what the nature of fake news is. More importantly, the cooperation between experts in different fields should be strengthened to study fake news.

(2) Due to the high cost of manual tagging, semi-supervised or unsupervised fake news detection methods should be studied. Automatic annotation methods can also be sought to reduce annotation costs.

(3) At present, most detection models roughly classify news into the following two categories: “true” and “fake”. Experiments have shown that applying multimodal information can improve detection performance, but there is room for improvement in terms of aligning and fusing information from different modalities.

(4) The current fake news detection models are usually limited to specific topics. A deeper understanding of fake news is needed to identify its unique invariant features. Cross-topic fake news detection models should be studied.

Author Contributions

Methodology, Y.S.; validation, Y.S., Q.L., N.G., J.Y. and Y.Y.; investigation, Y.S. and Q.L.; resources, Y.S. and N.G.; data curation, Y.S. and Y.Y.; writing—original draft preparation, Y.S.; writing—review and editing, Y.S.; visualization, Y.S. and J.Y.; supervision, Q.L.; project administration, Q.L.; funding acquisition, Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Fundamental Research Funds for the Central Universities, grant number ZY20215151; and the Natural Science Project of Xinjiang University Scientific Research Program, grant number XJEDU2021Y003.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Papanastasiou, Y. Fake News Propagation and Detection: A Sequential Model. Manag. Sci. 2020, 66, 1826–1846. [Google Scholar] [CrossRef]

- Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–236. [Google Scholar] [CrossRef]

- Aïmeur, E.; Amri, S.; Brassard, G. Fake news, disinformation and misinformation in social media: A review. Soc. Netw. Anal. Min. 2023, 13, 1–36. [Google Scholar] [CrossRef]

- Islam, M.R.; Liu, S.; Wang, X.; Xu, G. Deep learning for misinformation detection on online social networks: A survey and new perspectives. Soc. Netw. Anal. Min. 2020, 10, 1–20. [Google Scholar] [CrossRef]

- Alam, F.; Cresci, S.; Chakraborty, T.; Silvestri, F.; Dimitrov, D.; Martino, G.D.S.; Shaar, S.; Firooz, H.; Nakov, P. A survey on multimodal disinformation detection. arXiv 2021, arXiv:2103.12541. [Google Scholar]

- Vosoughi, S.; Roy, D.; Aral, S. The spread of true and false news online. Science 2018, 359, 1146–1151. [Google Scholar] [CrossRef]

- Habgood-Coote, J. Stop talking about fake news! Inquiry 2019, 62, 1033–1065. [Google Scholar] [CrossRef]

- Baptista, J.P.; Gradim, A. A Working Definition of Fake News. Encyclopedia 2022, 2, 632–645. [Google Scholar] [CrossRef]

- Pennycook, G.; Rand, D.G. The psychology of fake news. Trends Cogn. Sci. 2021, 25, 388–402. [Google Scholar] [CrossRef]

- Alonso, M.A.; Vilares, D.; Gómez-Rodríguez, C.; Vilares, J. Sentiment Analysis for Fake News Detection. Electronics 2021, 10, 1348. [Google Scholar] [CrossRef]

- Au, C.H.; Ho, K.K.W.; Chiu, D.K.W. The role of online misinformation and fake news in ideological polarization: Barriers, catalysts, and implications. Inf. Syst. Front. 2022, 1331–1354. [Google Scholar] [CrossRef]

- Zhang, X.; Ghorbani, A.A. An overview of online fake news: Characterization, detection, and discussion. Inf. Process. Manag. 2019, 57, 102025. [Google Scholar] [CrossRef]

- Sahoo, S.R.; Gupta, B. Multiple features based approach for automatic fake news detection on social networks using deep learning. Appl. Soft Comput. 2020, 100, 106983. [Google Scholar] [CrossRef]

- Shu, K.; Sliva, A.; Wang, S.; Tang, J.; Liu, H. Fake news detection on social media: A data mining perspective. ACM SIGKDD Explor. Newsl. 2017, 19, 22–36. [Google Scholar] [CrossRef]

- Guo, B.; Ding, Y.; Yao, L.; Liang, Y.; Yu, Z. The future of false information detection on social media: New perspectives and trends. ACM Comput. Surv. (CSUR) 2020, 53, 1–36. [Google Scholar] [CrossRef]

- Tandoc, E.C., Jr.; Lim, Z.W.; Ling, R. Defining “fake news” A typology of scholarly definitions. Digit. J. 2018, 6, 137–153. [Google Scholar]

- Zhou, X.; Zafarani, R. A survey of fake news: Fundamental theories, detection methods, and opportunities. ACM Comput. Surv. (CSUR) 2020, 53, 1–40. [Google Scholar] [CrossRef]

- Guo, Z.; Schlichtkrull, M.; Vlachos, A. A survey on automated fact-checking. Trans. Assoc. Comput. Linguist. 2022, 10, 178–206. [Google Scholar] [CrossRef]

- Nakov, P.; Corney, D.; Hasanain, M.; Alam, F.; Elsayed, T.; Barrón-Cedeño, A.; Papotti, P.; Shaar, S.; Martino, G.D.S. Automated Fact-Checking for Assisting Human Fact-Checkers. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 19–27 August 2021; pp. 4551–4558. [Google Scholar]

- Mridha, M.F.; Keya, A.J.; Hamid, A.; Monowar, M.M.; Rahman, S. A Comprehensive Review on Fake News Detection with Deep Learning. IEEE Access 2021, 9, 156151–156170. [Google Scholar] [CrossRef]

- Paka, W.S.; Bansal, R.; Kaushik, A.; Sengupta, S.; Chakraborty, T. Cross-SEAN: A cross-stitch semi-supervised neural attention model for COVID-19 fake news detection. Appl. Soft Comput. 2021, 107, 107393. [Google Scholar] [CrossRef]

- Garg, S.; Sharma, D.K. Linguistic features based framework for automatic fake news detection. Comput. Ind. Eng. 2022, 172, 108432. [Google Scholar] [CrossRef]

- Capuano, N.; Fenza, G.; Loia, V.; Nota, F.D. Content-Based Fake News Detection with Machine and Deep Learning: A Systematic Review. Neurocomputing 2023, 530, 91–103. [Google Scholar] [CrossRef]

- Sheikhi, S. An effective fake news detection method using WOA-xgbTree algorithm and content-based features. Appl. Soft Comput. 2021, 109, 107559. [Google Scholar] [CrossRef]

- Zhou, X.; Jain, A.; Phoha, V.V.; Zafarani, R. Fake news early detection: A theory-driven model. Digit. Threat. Res. Pract. 2020, 1, 1–25. [Google Scholar] [CrossRef]

- Sharma, K.; Qian, F.; Jiang, H.; Ruchansky, N.; Zhang, M.; Liu, Y. Combating fake news: A survey on identification and mitigation techniques. ACM Trans. Intell. Syst. Technol. (TIST) 2019, 10, 1–42. [Google Scholar] [CrossRef]

- Chi, Y.; Zhu, S.; Hino, K.; Gong, Y.; Zhang, Y. iOLAP: A framework for analyzing the internet, social networks, and other networked data. IEEE Trans. Multimed. 2009, 11, 372–382. [Google Scholar]

- Ruchansky, N.; Seo, S.; Liu, Y. CSI. In Proceedings of theCIKM’17: ACM Conference on Information and Knowledge Management, Singapore, 6–10 November 2017; pp. 797–806. [Google Scholar]

- Meel, P.; Vishwakarma, D.K. Fake news, rumor, information pollution in social media and web: A contemporary survey of state-of-the-arts, challenges and opportunities. Expert Syst. Appl. 2019, 153, 112986. [Google Scholar] [CrossRef]

- Yang, Y.; Wang, Y.; Wang, L.; Meng, J. PostCom2DR: Utilizing information from post and comments to detect rumors. Expert Syst. Appl. 2022, 189, 116071. [Google Scholar] [CrossRef]

- Zeng, X.; Abumansour, A.S.; Zubiaga, A. Automated fact-checking: A survey. Lang. Linguist. Compass 2021, 15, e12438. [Google Scholar] [CrossRef]

- Mayank, M.; Sharma, S.; Sharma, R. DEAP-FAKED: Knowledge graph based approach for fake news detection. In Proceedings of the 2022 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM), Istanbul, Turkey, 10–13 November 2022; pp. 47–51. [Google Scholar]

- Hu, L.; Yang, T.; Zhang, L.; Zhong, W.; Tang, D.; Shi, C.; Duan, N.; Zhou, M. Compare to the knowledge: Graph neural fake news detection with external knowledge. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 754–763. [Google Scholar]

- Pan, J.Z.; Pavlova, S.; Li, C.; Li, N.; Li, Y.; Liu, J. Content based fake news detection using knowledge graphs. In Proceedings of the International Semantic Web Conference, Monterey, CA, USA, 8–12 October 2018; pp. 669–683. [Google Scholar]

- Nasir, J.A.; Khan, O.S.; Varlamis, I. Fake news detection: A hybrid CNN-RNN based deep learning approach. Int. J. Inf. Manag. Data Insights 2021, 1, 100007. [Google Scholar] [CrossRef]

- Choudhary, A.; Arora, A. Linguistic feature based learning model for fake news detection and classification. Expert Syst. Appl. 2020, 169, 114171. [Google Scholar] [CrossRef]

- Verma, P.K.; Agrawal, P.; Amorim, I.; Prodan, R. WELFake: Word Embedding Over Linguistic Features for Fake News Detection. IEEE Trans. Comput. Soc. Syst. 2021, 8, 881–893. [Google Scholar] [CrossRef]

- Umer, M.; Imtiaz, Z.; Ullah, S.; Mehmood, A.; Choi, G.S.; On, B.-W. Fake News Stance Detection Using Deep Learning Architecture (CNN-LSTM). IEEE Access 2020, 8, 156695–156706. [Google Scholar] [CrossRef]

- Cao, J.; Qi, P.; Sheng, Q.; Yang, T.; Guo, J.; Li, J. Exploring the role of visual content in fake news detection. In Disinformation, Misinformation, and Fake News in Social Media: Emerging Research Challenges and Opportunities; Springer: Cham, Switzerland, 2020; pp. 141–161. [Google Scholar]

- Qi, P.; Cao, J.; Yang, T.; Guo, J.; Li, J. Exploiting multi-domain visual information for fake news detection. In Proceedings of the 2019 IEEE international conference on data mining (ICDM), Beijing, China, 8–11 November 2019; pp. 518–527. [Google Scholar]

- Uppada, S.K.; Manasa, K.; Vidhathri, B.; Harini, R.; Sivaselvan, B. Novel approaches to fake news and fake account detection in OSNs: User social engagement and visual content centric model. Soc. Netw. Anal. Min. 2022, 12, 1–19. [Google Scholar] [CrossRef] [PubMed]

- Yu, P.; Xia, Z.; Fei, J.; Lu, Y. A Survey on Deepfake Video Detection. IET Biom. 2021, 10, 607–624. [Google Scholar] [CrossRef]

- Song, C.; Ning, N.; Zhang, Y.; Wu, B. A multimodal fake news detection model based on crossmodal attention residual and multichannel convolutional neural networks. Inf. Process. Manag. 2020, 58, 102437. [Google Scholar] [CrossRef]

- Singh, V.K.; Ghosh, I.; Sonagara, D. Detecting fake news stories via multimodal analysis. J. Assoc. Inf. Sci. Technol. 2021, 72, 3–17. [Google Scholar] [CrossRef]

- Khattar, D.; Goud, J.S.; Gupta, M.; Varma, V. Mvae: Multimodal variational autoencoder for fake news detection. In The World Wide Web Conference; Association for Computing Machinery: New York, NY, USA, 2019; pp. 2915–2921. [Google Scholar]

- Segura-Bedmar, I.; Alonso-Bartolome, S. Multimodal Fake News Detection. Information 2022, 13, 284. [Google Scholar] [CrossRef]

- Wang, H.; Wang, S.; Han, Y. Detecting fake news on Chinese social media based on hybrid feature fusion method. Expert Syst. Appl. 2022, 208, 118111. [Google Scholar] [CrossRef]

- Monti, F.; Frasca, F.; Eynard, D.; Mannion, D.; Bronstein, M.M. Fake news detection on social media using geometric deep learning. arXiv 2019, arXiv:1902.06673, 2019. [Google Scholar]

- Silva, A.; Han, Y.; Luo, L.; Karunasekera, S.; Leckie, C. Propagation2Vec: Embedding partial propagation networks for explainable fake news early detection. Inf. Process. Manag. 2021, 58, 102618. [Google Scholar] [CrossRef]

- Barnabò, G.; Siciliano, F.; Castillo, C.; Leonardi, S.; Nakov, P.; Martino, G.D.S.; Silvestri, F. Deep active learning for misinformation detection using geometric deep learning. Online Soc. Netw. Media 2023, 33, 100244. [Google Scholar] [CrossRef]

- Song, C.; Teng, Y.; Zhu, Y.; Wei, S.; Wu, B. Dynamic graph neural network for fake news detection. Neurocomputing 2022, 505, 362–374. [Google Scholar] [CrossRef]

- Jeong, U.; Ding, K.; Cheng, L.; Guo, R.; Shu, K.; Liu, H. Nothing stands alone: Relational fake news detection with hypergraph neural networks. In Proceedings of the 2022 IEEE International Conference on Big Data (Big Data), Osaka, Japan, 17–20 December 2022; pp. 596–605. [Google Scholar]

- Han, Y.; Karunasekera, S.; Leckie, C. Graph neural networks with continual learning for fake news detection from social media. arXiv 2020, arXiv:2007.03316. [Google Scholar]

- Wei, L.; Hu, D.; Lai, Y.; Zhou, W.; Hu, S. A Unified Propagation Forest-based Framework for Fake News Detection. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, South Korea, 12–17 October 2022; pp. 2769–2779. [Google Scholar]

- Murayama, T.; Wakamiya, S.; Aramaki, E.; Kobayashi, R. Modeling the spread of fake news on Twitter. PLoS ONE 2021, 16, e0250419. [Google Scholar] [CrossRef]

- Ni, S.; Li, J.; Kao, H.-Y. MVAN: Multi-View Attention Networks for Fake News Detection on Social Media. IEEE Access 2021, 9, 106907–106917. [Google Scholar] [CrossRef]

- Shu, K.; Mahudeswaran, D.; Wang, S.; Liu, H. Hierarchical propagation networks for fake news detection: Investigation and exploitation. In Proceedings of the International AAAI Conference on Web and Social Media, Limassol, Cyprus, 5–8 June 2020; Volume 14, pp. 626–637. [Google Scholar]

- Davoudi, M.; Moosavi, M.R.; Sadreddini, M.H. DSS: A hybrid deep model for fake news detection using propagation tree and stance network. Expert Syst. Appl. 2022, 198, 116635. [Google Scholar] [CrossRef]

- Shu, K.; Wang, S.; Liu, H. Beyond news contents: The role of social context for fake news detection. In Proceedings of the twelfth ACM International Conference on Web Search and Data Mining, Melbourne, Australia, 11–15 February 2019; pp. 312–320. [Google Scholar]

- Nguyen, V.H.; Sugiyama, K.; Nakov, P.; Kan, M.-Y. Fang: Leveraging social context for fake news detection using graph representation. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, San Francisco, CA, USA, 27 October–1 November 2020; pp. 1165–1174. [Google Scholar]

- Sitaula, N.; Mohan, C.K.; Grygiel, J.; Zhou, X. Credibility-based fake news detection. In Disinformation, Misinformation, and Fake News in Social Media: Emerging Research Challenges and Opportunities; Springer: Cham, Switzerland, 2020; pp. 163–182. [Google Scholar]

- Yuan, C.; Ma, Q.; Zhou, W.; Han, J.; Hu, S. Early Detection of Fake News by Utilizing the Credibility of News, Publishers, and Users based on Weakly Supervised Learning. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 5444–5454. [Google Scholar]

- Luvembe, A.M.; Li, W.; Li, S.; Liu, F.; Xu, G. Dual emotion based fake news detection: A deep attention-weight update approach. Inf. Process. Manag. 2023, 60, 103354. [Google Scholar] [CrossRef]

- Ramalingam, D.; Chinnaiah, V. Fake profile detection techniques in large-scale online social networks: A comprehensive review. Comput. Electr. Eng. 2018, 65, 165–177. [Google Scholar] [CrossRef]

- Kudugunta, S.; Ferrara, E. Deep neural networks for bot detection. Inf. Sci. 2018, 467, 312–322. [Google Scholar] [CrossRef]

- Rostami, R.R.; Karbasi, S. Detecting Fake Accounts on Twitter Social Network Using Multi-Objective Hybrid Feature Selection Approach. Webology 2020, 17, 1–18. [Google Scholar] [CrossRef]

- Gao, T.; Yang, J.; Peng, W.; Jiang, L.; Sun, Y.; Li, F. A Content-Based Method for Sybil Detection in Online Social Networks via Deep Learning. IEEE Access 2020, 8, 38753–38766. [Google Scholar] [CrossRef]

- Bazmi, P.; Asadpour, M.; Shakery, A. Multi-view co-attention network for fake news detection by modeling topic-specific user and news source credibility. Inf. Process. Manag. 2023, 60, 103146. [Google Scholar] [CrossRef]

- Zhang, J.; Dong, B.; Yu, P.S. Fake News Detection with Deep Diffusive Network Model. arXiv 2018, arXiv:1805.08751. [Google Scholar]

- Yang, S.; Shu, K.; Wang, S.; Gu, R.; Wu, F.; Liu, H. Unsupervised fake news detection on social media: A generative approach. In Proceedings of the AAAI conference on artificial intelligence, Hilton, HI, USA, 27 January–1 February 2019; Volume 33, pp. 5644–5651. [Google Scholar]

- Vlachos, A.; Riedel, S. Fact checking: Task definition and dataset construction. In Proceedings of the ACL 2014 Workshop on Language Technology and Computational Social Science, Baltimore, MD, USA, 26 June 2014.

- Yang, C.; Zhou, X.; Zafarani, R. CHECKED: Chinese COVID-19 fake news dataset. Soc. Netw. Anal. Min. 2021, 11, 1–8.

- Nan, Q.; Cao, J.; Zhu, Y.; Wang, Y.; Li, J. MDFEND: Multi-domain fake news detection. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Queensland, Australia, 1–5 November 2021; pp. 3343–3347.

- Patwa, P.; Sharma, S.; Pykl, S.; Guptha, V.; Kumari, G.; Akhtar, M.S.; Ekbal, A.; Das, A. Fighting an infodemic: COVID-19 fake news dataset. In Proceedings of the Combating Online Hostile Posts in Regional Languages during Emergency Situation: First International Workshop, CONSTRAINT 2021, Collocated with AAAI 2021, Virtual Event, 8 February 2021; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 21–29.

- Kim, J.; Aum, J.; Lee, S.; Jang, Y.; Park, E.; Choi, D. FibVID: Comprehensive fake news diffusion dataset during the COVID-19 period. Telemat. Inform. 2021, 64, 101688.

- BuzzFeed. Available online: https://github.com/BuzzFeedNews/2016-10-facebook-fact-check/tree/master/data (accessed on 10 August 2023).

- Nakamura, K.; Levy, S.; Wang, W.Y. r/fakeddit: A new multimodal benchmark dataset for fine-grained fake news detection. arXiv 2019, arXiv:1911.03854.

- Sharma, D.K.; Garg, S. IFND: A benchmark dataset for fake news detection. Complex Intell. Syst. 2023, 2843–2863.

- Shu, K.; Mahudeswaran, D.; Wang, S.; Lee, D.; Liu, H. FakeNewsNet: A Data Repository with News Content, Social Context, and Spatiotemporal Information for Studying Fake News on Social Media. Big Data 2020, 8, 171–188.

- Salem, F.K.A.; Al Feel, R.; Elbassuoni, S.; Jaber, M.; Farah, M. FA-KES: A fake news dataset around the Syrian war. In Proceedings of the International AAAI Conference on Web and Social Media, Limassol, Cyprus, 5–8 June 2019; Volume 13, pp. 573–582.

- ISOT Fake News Dataset. Available online: https://www.uvic.ca/engineering/ece/isot/datasets/ (accessed on 22 August 2021).

- Vogel, I.; Jiang, P. Fake news detection with the new German dataset “GermanFakeNC”. In International Conference on Theory and Practice of Digital Libraries; Springer International Publishing: Cham, Switzerland, 2019; pp. 288–295.

- Hossain, M.Z.; Rahman, M.A.; Islam, M.S.; Kar, S. BanFakeNews: A dataset for detecting fake news in Bangla. arXiv 2020, arXiv:2004.08789.

- Wang, W.Y. “Liar, liar pants on fire”: A new benchmark dataset for fake news detection. arXiv 2017, arXiv:1705.00648.

- PolitiFact. Available online: https://www.politifact.com/ (accessed on 12 August 2023).

- Snopes. Available online: https://www.snopes.com/ (accessed on 12 August 2023).

- TruthOrFiction. Available online: https://www.truthorfiction.com/ (accessed on 12 August 2023).

- CheckYourFact. Available online: https://checkyourfact.com/ (accessed on 12 August 2023).

- FactCheck. Available online: https://www.factcheck.org/ (accessed on 12 August 2023).

- GossipCop. Available online: https://www.gossipcop.com (accessed on 12 August 2023).

- Ruijianshiyao. Available online: http://www.newsverify.com (accessed on 12 August 2023).

- Khan, J.Y.; Khondaker, M.; Islam, T.; Iqbal, A.; Afroz, S. A benchmark study on machine learning methods for fake news detection. arXiv 2019, arXiv:1905.04749.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).