Abstract

An Augmented Reality (AR) system is a technology that overlays digital information, such as images, sounds, or text, onto a user’s view of the real world, providing an enriched and interactive experience of the surrounding environment. It has evolved into a potent instrument for improving human perception and decision-making across various domains, including industrial, automotive, healthcare, and urban planning. This systematic literature review aims to offer a comprehensive understanding of AR technology, its limitations, and implementation challenges in the most significant areas of application in engineering and beyond. The review explores state-of-the-art AR techniques, their potential use cases, and the barriers to widespread adoption, while also identifying future research directions and opportunities for innovation in the rapidly evolving field of augmented reality. This study serves as a compilation of the existing technologies in the field. It is especially useful for beginners in AR and as a starting point for developers who seek to innovate or implement new technologies and need to know the limitations and current challenges that could arise.

1. Introduction

Augmented Reality (AR) is an innovative technology that has generated significant attention from researchers and professionals across a wide range of industries and domains. By seamlessly integrating digital information with the physical environment, AR offers enhanced experiences, contextual insights, and interactive capabilities to users. Various domains such as education, healthcare, manufacturing, retail, and urban planning have witnessed a growing interest in AR research and implementation.

Before describing the different types of augmented reality applications, it is important to understand the differences between extended reality, virtual reality, mixed reality, and augmented reality, and to know how to classify them.

- Extended Reality (XR) is a catch-all term for augmented reality, virtual reality, and mixed reality. The aim of these technologies is to integrate or replicate the real world with a corresponding “digital version”, enabling interaction between the two [1].

- Virtual Reality (VR) creates a three-dimensional digital space that allows individuals to engage with and navigate a simulated environment closely resembling the real world, as experienced via their sensory perception. The environment is created with computer hardware and software, although users might also need to wear devices such as helmets or goggles to interact with the environment [2].

- Augmented Reality amplifies the existing physical environment by overlaying it with digital visual components, auditory cues, or other sensory inputs, all made possible through technological means [3].

- Mixed Reality (MR), also sometimes called hybrid reality, is the combination of virtual reality and augmented reality. This combination allows the creation of new spaces in which both real and virtual objects and/or people interact [4].

The market trajectory for AR is optimistic. As the technology moves past its initial challenges towards a stage of consistent growth, it is earning wider recognition and integration into everyday life, and its established use cases support expectations of further market growth. Industry analysts predict a steep upward trajectory for the global AR market, mirroring the increasing interest and adoption in both the public and private sectors. According to the Grand View Research report in [5], the global AR market size is anticipated to reach USD 340.16 billion by 2028, growing at a Compound Annual Growth Rate (CAGR) of 43.8% from 2021 to 2028. This projected growth signifies the increasing integration and acceptance of AR technologies across diverse industries and sectors.

Regionally, North America holds the largest share of the AR market due to the strong presence of key industry players, technological advancements, and high acceptance rates of AR in sectors such as healthcare, education, retail, and manufacturing. The Asia Pacific region is also witnessing rapid growth in AR adoption, driven by the expansive consumer electronics market and increasing investment in AR technologies by countries such as China, Japan, and South Korea. The upward economic trend of AR also reflects the increasing investment in AR research and development, patent acquisitions, and strategic collaborations among tech giants, startups, and academic institutions. This, coupled with supportive government policies and initiatives aimed at promoting digital transformation, further contributes to the global AR market’s growth and economic impact.
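As a quick arithmetic check on the market forecast cited from [5], the compound growth relation can be written down directly. The sketch below assumes seven annual compounding periods between 2021 and 2028; the function names are illustrative, and the implied base-year value is a back-calculation from the two reported figures, not a number taken from the report.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `start_value` at rate `cagr` for `years` periods."""
    return start_value * (1.0 + cagr) ** years


def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert the compound growth relation to recover the starting value."""
    return end_value / (1.0 + cagr) ** years


END_VALUE_2028 = 340.16  # USD billion, as reported in [5]
CAGR = 0.438             # 43.8% per year, as reported in [5]
YEARS = 7                # assumption: 2021 -> 2028 counted as seven periods

# Back-calculated 2021 market size implied by the two reported figures.
base_2021 = implied_start_value(END_VALUE_2028, CAGR, YEARS)
print(f"Implied 2021 market size: USD {base_2021:.2f} billion")
```

If the report counts the forecast window differently (for example, eight periods), only `YEARS` needs to change.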

This work aims to provide a comprehensive understanding of AR technology by examining its applications, challenges, limitations, and trends across different domains. The focus will be on offering a holistic perspective of the technology as well as a domain-specific analysis. The study will also explore the technical aspects, benefits, future research directions, and practical considerations of AR technology.

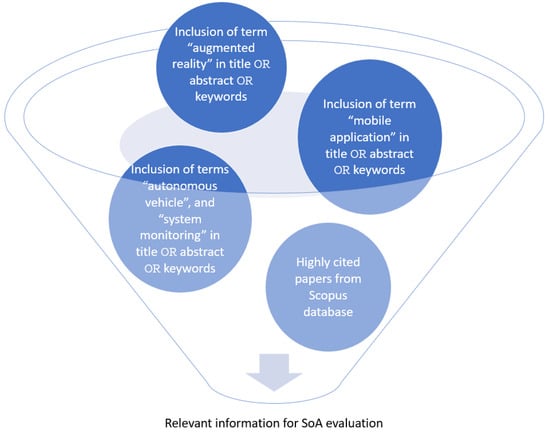

To achieve these objectives, a Systematic Literature Review (SLR) method was employed, as outlined in Figure 1, following established guidelines. A systematic review is a structured approach that aims to gather evidence fitting pre-specified eligibility criteria to answer a well-defined research question while minimizing bias through the use of explicit, systematic methods documented in a protocol. The SLR process includes the following stages: (1) formulating research questions, (2) developing a protocol, (3) conducting a systematic search of related literature, (4) selecting relevant studies, (5) performing a thorough revision, (6) extracting and synthesizing information, and (7) writing and publishing the review.

Figure 1.

Selection criteria process for publication comparative analysis.

The justification for this study arises from the current state of augmented reality technology and its rapidly expanding influence across numerous domains. Despite the increasing interest in AR, there is a perceived lack of comprehensive literature that holistically studies AR from various domain perspectives. Thus, there is an evident need for a detailed study that both offers a broad understanding of AR applications and deeply investigates the challenges and opportunities in the field. This paper serves to fill this gap by presenting a comprehensive overview of usage, innovation, and research in AR technologies. Its cross-domain analysis breaks from the norm of domain-specific studies, providing a more extensive and insightful understanding of AR technology. The work further explores the current technological advancements and forecasts future trends, helping to direct ongoing and future research in the field.

The main contributions that this work provides to the scientific community are as follows:

- A comprehensive understanding of AR applications and trends across various domains: By examining the relevant literature in Section 3, Section 4 and Section 5, this work aims to offer a clear view of the current state of AR applications, benefits, and challenges in different domains. In contrast to other works that focus on a specific field, this study presents a cross-domain analysis, providing a broader perspective on AR technology.

- Insight into the technological advancements and emerging trends in augmented reality: This paper investigates the latest developments, ongoing research, and future directions in AR technology, as discussed in Section 7.2. The analysis highlights the potential impact of these advancements on the development and improvement of AR applications and their implications for various domains.

- A structured evaluation of AR implementation challenges and limitations across different domains: By examining the challenges and limitations of AR applications in Section 7.1, this work presents a detailed analysis of the common obstacles and potential solutions for AR technology across various fields.

The organization of this work is outlined as follows. Section 2 presents the systematic literature review methodology. The AR definitions and concepts, and the classification of AR applications, technologies, and devices are discussed in Section 3 and Section 4 respectively. Section 5 describes the application of AR in various domains and their respective limitations and challenges. Section 6 presents the results and findings on the trends of AR technology, while Section 7 discusses the current application challenges, technological advancements, emerging trends, and the benefits and implications of AR technology. Finally, Section 8 concludes the paper. All abbreviations used throughout the paper are listed with their meanings in the Abbreviations section.

2. Methodology

The following subsections outline the procedure followed to carry out this SLR, in line with the methodical strategy for literature reviews in [6]. The collected studies were selected using specific search techniques and criteria and were examined to offer a state-of-the-art review of AR technologies, the overarching AR framework, the various AR categories, their degrees of integration, and a maturity assessment approach.

2.1. Stage 1: Research Questions

This initial stage is an imperative stepping stone towards comprehending the wider scope of AR, as it is used to guide the definition and outlining of the research inquiries. The aim here is not just to raise questions but to construct a clear and methodical road map to navigate the complex landscape of AR literature. Through this, it is possible to identify the key developments, applications, challenges, and trends that AR encompasses across various domains. Furthermore, an additional goal is to delve into the unique benefits and potential drawbacks that AR implementation offers in a variety of sectors such as education, healthcare, manufacturing, retail, and urban planning. Additionally, the Research Question (RQ) and Sub-Questions (SQ) must be formulated to comprehend the current technological advancements and emerging trends in AR, assessing how these developments shape the future of AR applications and their potential impact on the respective domains. This initial stage sets the foundation for this systematic investigation, fostering a comprehensive understanding of AR, its potential, and its limitations.

- RQ: What are the key developments, applications, challenges, and trends in augmented reality across various domains, and what are the implications of these findings for future research and practical implementation?

- SQ1: How has augmented reality been implemented in different domains, such as education, healthcare, manufacturing, retail, and urban planning, and what are the unique benefits and challenges in each domain?

- SQ2: What are the current technological advancements and emerging trends in augmented reality, and how do these developments shape the future of AR applications and their potential impact on various domains?

- SQ3: What are the primary challenges and limitations associated with the application of augmented reality across different fields, and how can these challenges be addressed to improve the effectiveness and user-friendliness of AR solutions?

To answer these questions, AR technology is addressed through three main sections: types of AR applications (by functionality), AR devices, and AR usage by domain. Together, these sections give a broad understanding of the recent landscape of this technology, its applications in various domains, and its limitations in each of its forms. Such limitations include those of devices (whether in hardware or software), those specific to sectors, and those related to the type of application and the way in which the AR technology operates and relates to users and the environment.

2.2. Stage 2: Protocol

An extensive search of Scopus for relevant publications was carried out. Various forms of literature (journal articles, white papers, reviews, and more) were initially gathered. To obtain an initial set of publications, the following conditions were established:

- Search parameters: publications containing the phrases “augmented reality”, “mobile application”, “autonomous vehicle”, and “system monitoring” in the keywords, title or abstract.

- Search results were ordered from most cited to least cited.

- Literature was chosen from diverse sectors, including education and training, healthcare and medical applications, manufacturing and industrial processes, retail and marketing, urban planning and smart cities, and others. The areas covered in this study have been chosen according to the selected articles, where the main criterion is the number of citations for each one with respect to its year of publication, i.e., the H-index. As a result, the areas most covered in the selected articles predominate in this study. Other sectors included in this study, although less predominant than the previous ones, are travel and tourism, entertainment and gaming, and the automotive industry.

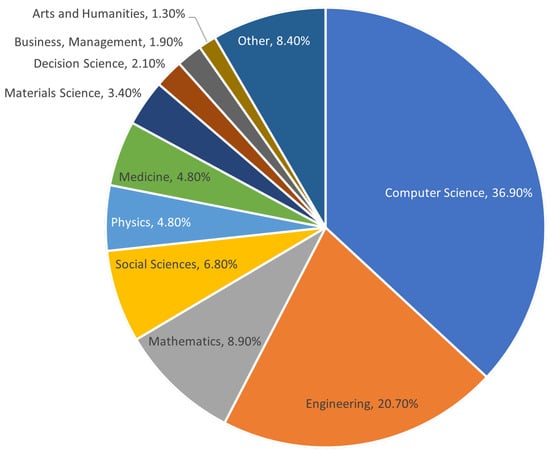

The organization of publications depends on their related application fields, following the third rule of the protocol. As shown in Figure 2, the most important application fields for research publications include computer science, engineering, medicine, and others. The main domain areas that have been identified with the most predominance are education and training, healthcare and medical uses, manufacturing and industrial processes, retail and marketing, and urban planning and smart cities (five areas in total). It is important to note that the application fields in the research publications from Figure 2 were divided into these previously mentioned domain areas which will be the ones discussed in later sections.

Figure 2.

Classification of research publications by application fields.

2.3. Stage 3: Systematic Search of Related Literature

After determining the search parameters and publication prerequisites, the next phase involved a thorough search of the relevant literature in the Scopus database. This review does not apply a publication-year filter, for two reasons: many sets of keywords return few articles, so a filter would discard valuable information, and it is important to include advances and terms from previous years in the subsequent sections of the review. Nevertheless, results were sorted from most to least cited in order to prioritize the most relevant information already available to the scientific community. The following sets of keywords were used:

- “Augmented reality” AND “mobile application” AND “applications” AND “systems”

- “AV” AND “Augmented reality”

- “System monitoring” AND “Augmented reality”
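The keyword sets above can be expressed as reproducible query strings. The sketch below is a minimal illustration that assumes the Scopus advanced-search field code `TITLE-ABS-KEY`, which restricts matching to title, abstract, and keywords as the protocol describes; the helper name `build_scopus_query` is hypothetical, and the exact strings entered by the authors are not given in the text. Sorting by citation count is applied to the result list and is not part of the query itself.

```python
# Keyword groups exactly as listed in Stage 3.
KEYWORD_GROUPS = [
    ["Augmented reality", "mobile application", "applications", "systems"],
    ["AV", "Augmented reality"],
    ["System monitoring", "Augmented reality"],
]


def build_scopus_query(terms: list[str]) -> str:
    """Join quoted phrases with AND inside a TITLE-ABS-KEY field restriction."""
    quoted = " AND ".join(f'"{term}"' for term in terms)
    return f"TITLE-ABS-KEY({quoted})"


queries = [build_scopus_query(group) for group in KEYWORD_GROUPS]
for query in queries:
    print(query)
```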

2.4. Stage 4: Selection of Relevant Studies

An initial search yielded a substantial collection of 200 publications, which were read, analyzed, and judged to contain relevant information. After sifting through them and identifying the most highly regarded studies, 96 references were selected for inclusion in this review. The entire selection procedure is illustrated in Figure 1.

To clarify the article selection procedure, Table 1 shows the steps for paper selection as well as general information about the citations of the selected papers. A different number of papers was collected from each group of search terms because the H-index varies for each group. For instance, group four contains newer AR technologies or recent advancements, so its H-index is much lower than that of the other groups, in which the H-index reaches up to 106. The H-index is thus the key parameter in this SLR methodology for determining the point at which the selection process stops collecting papers to review, which is why the number of useful papers differs between search terms.

Table 1.

Definition of the scientific papers selection criteria in this SLR.
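One plausible reading of the H-index stopping rule described in this stage is the standard definition: with papers ranked from most to least cited, h is the largest rank at which a paper still has at least as many citations as its rank. The sketch below illustrates that reading only. The function names and the sample citation counts are hypothetical, and the authors' exact procedure may differ.

```python
def h_index(citations: list[int]) -> int:
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break  # every later paper has even fewer citations
    return h


def select_until_h(papers: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Keep the most-cited papers of one search group, stopping at its h-index."""
    ranked = sorted(papers, key=lambda paper: paper[1], reverse=True)
    return ranked[: h_index([cites for _, cites in ranked])]


# Hypothetical search group: (title, citation count).
group = [("A", 50), ("B", 18), ("C", 7), ("D", 4), ("E", 3), ("F", 1)]
selected = select_until_h(group)
print([title for title, _ in selected])
```

Under this reading, a group of recent papers with few citations yields a small h and therefore fewer selected papers, which matches the behaviour the text reports for the newer search group.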

2.5. Stages 5 & 6: Revision, Extraction and Synthesis

Upon finalizing the selection of publications, the comparative Table 2 was constructed to examine various aspects from each publication, including: technology and its sector, Technology Readiness Level (TRL) [7], the application’s industry, used hardware and software, type of connectivity between devices or networks, and so forth. This table is described in Section 6.

AR-related terms are commonly confused: many people refer to all these technologies as virtual reality, as if they were a single set, when in fact the term AR encompasses only certain specific types of technology. This can be problematic when trying to classify and understand the different AR technologies that have been developed over the years. Section 3 discusses in more depth a classification of the main AR developments by type of application.

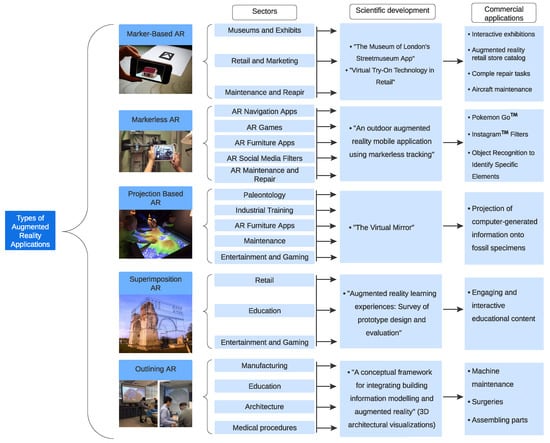

3. Types of Augmented Reality Applications

Figure 3 summarizes the types of augmented reality discussed in this section, including examples, demonstrative images, and some of the references used for each type. The figure is divided into five AR types, each with an illustrative image as a visual aid for telling the types apart, followed by the sectors in which each type is applied. Finally, the applications of each AR type are separated into commercial applications and scientific developments. Commercial applications are those available on the market in the mentioned sectors, while scientific developments are research projects on a developed or developing technology.

Figure 3.

Types of augmented reality applications and illustrative examples [8,9,10]. More details about some examples of scientific developments on Marker-Based AR are in [11,12], on Markerless AR in [13], on Projection Based AR in [14], on Superimposition AR in [15], and on Outlining AR in [16]; whereas interesting commercial applications can be reviewed for Marker-Based AR in [17,18,19,20,21], for Markerless AR in [22,23,24,25], for Projection Based AR in [26], for Superimposition AR in [27], and for Outlining AR in [28,29,30].

According to Figure 3, AR applications can primarily be classified based on their functions, and secondarily by the industries where they find application [31]. As far as functionality is concerned, each type of AR application operates according to specific characteristics. The functionality-based classification includes:

3.1. Marker-Based AR

Marker-based augmented reality applications use a specific visual trigger to overlay digital content onto the real world [32]. These markers can be anything from QR codes to specific images or symbols. When the AR device’s camera detects the marker, it generates a virtual object or scene that appears to exist in the real world [33].

While marker-based AR has many uses, it also has some limitations [34]. The AR device must be able to see the marker clearly, which means that lighting conditions, angle, and distance can affect the AR experience. Also, the application can only generate AR content when a marker is present, limiting its use in environments without prepared markers [33].

Marker-based AR systems generally have two main components: the marker and the AR application. The marker is a visual cue or pattern that is recognized by the AR system. It can be a QR code, 2D bar code, or a specific image. The marker must have high contrast and a unique design for easy identification. The application uses a camera (often a smartphone or tablet camera) to capture the marker. Then, it processes the image to identify the marker and calculates its position and orientation. Once the marker is identified, the application overlays digital content (for example, 3D models, animations, or videos) onto the marker in the real world, creating an augmented experience [35]. Marker-based AR applications have gained popularity in various industries and fields. Some common examples are:

- Museums and Exhibits: AR can enrich the visitor experience by providing additional information or interactive experiences. For example, an exhibit sign could include a marker that, when viewed through an AR app, shows a video or 3D model related to the exhibit [17]. The Museum of London’s Streetmuseum App is an excellent example of this type of technology, which overlays historical images on modern London streets. Users point their smartphone cameras at designated locations, and the app uses geolocation data as a marker to display historical photos of that location. This gives users a unique, immersive way to learn about the history of London [11]. The work “Interactive AR for Tangible Cultural Heritage” [18] describes the development of a marker-based AR system used to bring museum exhibits to life. The system uses custom markers placed near each exhibit, which visitors can scan using a smartphone app. When the app recognizes a marker, it overlays a 3D animation on the exhibit, providing additional context and information.

- Retail and Marketing: Businesses often use AR for marketing products. For example, a furniture catalog might include markers that, when scanned with a smartphone, show what the furniture would look like in the user’s own home [19]. The work “Virtual Try-On Technology in Retail” [12] discusses the development and implementation of a marker-based AR system for a virtual dressing room. Using markers placed on clothing items, customers can use a smartphone app to see how the items would look on them.

- Maintenance and Repair: AR can assist in complex repair tasks. For instance, an AR application could use markers on a machine part to overlay step-by-step repair instructions [20]. Many current technologies are capable of this. For example, an AR system can be used to aid aircraft maintenance technicians [21]. The system uses markers placed on various parts of an aircraft. When a technician points a tablet camera at a marker, the AR application overlays instructions or diagrams that guide the technician through the maintenance process.
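The marker-based pipeline described at the start of this subsection (identify the marker, calculate its position and orientation, then overlay content) can be illustrated for the planar case. The sketch below is a simplified example, not the method of any cited system: it assumes the four marker corners have already been detected in the camera image, estimates a planar homography with the direct linear transform, and uses it to place overlay points. The function names and pixel coordinates are hypothetical, and a full AR system would typically recover a 3D pose from calibrated camera parameters instead.

```python
import numpy as np


def estimate_homography(src, dst):
    """Direct linear transform: 3x3 homography mapping src points to dst points."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The homography is the null vector of the stacked constraint matrix.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def project(H, points):
    """Apply homography H to an array of 2D points."""
    pts = np.asarray(points, dtype=float)
    homogeneous = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homogeneous @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


# Marker corners in the marker's own coordinate frame (unit square).
marker_corners = [(0, 0), (1, 0), (1, 1), (0, 1)]
# Hypothetical detected corner positions in the camera image (pixels).
image_corners = [(320, 180), (480, 200), (470, 350), (310, 330)]

H = estimate_homography(marker_corners, image_corners)
# Anchor an overlay at the marker centre, expressed in marker coordinates.
overlay_anchor_px = project(H, [(0.5, 0.5)])[0]
print(overlay_anchor_px)
```

Because the overlay is defined in marker coordinates, it follows the marker as the detected corners move from frame to frame, which is also why poor lighting or steep viewing angles that degrade corner detection degrade the overlay.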

3.2. Markerless AR

Also known as location-based AR [22], markerless augmented reality is a type of AR that does not require a predefined marker to overlay digital content in the real world. Instead, these applications use features in the environment, such as surfaces, patterns, or objects, or leverage technologies like GPS, accelerometer data, or computer vision techniques to position the AR content [23]. The exact way this type of AR technology works can vary depending on the specific application [22], for example:

- AR Navigation Apps: AR navigation apps typically use a combination of GPS, accelerometer, gyroscope, and sometimes compass data from the user’s device to determine the location and orientation in the real world. This data is combined with computer vision techniques to recognize landmarks or features in the environment. For instance, Google’s Live View feature in Google Maps uses machine learning to recognize buildings and landmarks from its Street View data. This combination of data allows the application to accurately overlay directions in the user’s view of the real world [24].

- AR Games: AR games like Pokémon Go use the device’s GPS to determine the player’s location and then spawn digital creatures in the real world at those coordinates. The device’s camera captures the real world, and the game overlays the digital creatures onto this view. The game uses data from the device’s accelerometer and gyroscope to adjust the view and orientation of the digital creatures as the players move their device [22].

- AR Furniture Apps: These apps use computer vision techniques to identify and track flat surfaces in the user’s environment. The process typically involves feature detection and extraction, where key points in the image are identified. These features are then tracked across multiple frames to estimate the motion of the camera and create a 3D representation of the environment. Once a flat surface has been identified, the app can overlay a 3D model of a piece of furniture onto that surface [24].

- AR Social Media Filters: These applications use facial recognition and tracking to overlay digital filters onto users’ faces. The process typically involves first detecting the face in the image, often using machine learning models. Once the face is detected, key facial features such as the eyes, nose, and mouth are identified. The position of these features is then tracked in real-time as the user moves. The digital filter is adjusted based on these movements to ensure it aligns correctly with the user’s face [24].

- AR Maintenance and Repair: These applications can use object recognition to identify specific components in a machine or system. This often involves training a machine learning model on images of the components so it can recognize them in the real world. Once a component is recognized, the application can overlay digital instructions or diagrams onto the component to guide the user through the repair process [25].
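For the location-based cases in the list above (navigation apps and GPS-driven games), the core geometric step is deciding where a geo-referenced object should appear on screen given the device position and heading. The sketch below is a simplified illustration under stated assumptions: a spherical Earth, a heading supplied by the compass or gyroscope, and a linear mapping from angle to pixels. The function names, field of view, and coordinates are hypothetical and do not describe any specific application.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def distance_and_bearing(lat1, lon1, lat2, lon2):
    """Great-circle distance (m) and initial bearing (deg from north) between two points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
    y = math.sin(dlmb) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlmb)
    bearing = (math.degrees(math.atan2(y, x)) + 360.0) % 360.0
    return distance, bearing


def screen_x(bearing_deg, heading_deg, fov_deg, screen_width_px):
    """Horizontal pixel position of a target, or None if it is outside the camera view."""
    # Signed angle between the camera direction and the target, in (-180, 180].
    offset = (bearing_deg - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    # Linear angle-to-pixel mapping; a simplification of true perspective projection.
    return (offset / fov_deg + 0.5) * screen_width_px


# Hypothetical user position and point of interest one degree of longitude to the east.
dist_m, bearing = distance_and_bearing(0.0, 0.0, 0.0, 1.0)
print(dist_m, bearing, screen_x(bearing, heading_deg=90.0, fov_deg=60.0, screen_width_px=1080))
```

In practice GPS error of several metres and compass drift limit how precisely such overlays can be registered, which is one reason the applications above combine sensor data with computer vision.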

New sectors have also encouraged the use of markerless AR technologies. For example, the author of [13] presents an outdoor markerless AR mobile application for tourism. The application provides tourists with AR information overlays related to points of interest, such as historical sites or landmarks, using GPS and sensor data to position the AR content. It uses an algorithm based on Natural Feature Tracking (NFT) to create a more immersive and accurate AR experience. The application captures images of the environment using the device’s camera and then extracts feature points from the images. These feature points are matched with pre-stored points in a database, allowing the app to accurately position the AR content, enhancing the overall experience for tourists [13].
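The matching step in a natural-feature pipeline, where feature points from the camera image are compared against pre-stored points, can be sketched as a nearest-neighbour search over descriptor vectors. The example below is illustrative only: the descriptors are toy two-dimensional vectors, the function name is hypothetical, and the ratio test used to discard ambiguous matches is a common technique chosen here for illustration, not one the text attributes to [13].

```python
import numpy as np


def match_features(query_desc, db_desc, ratio=0.75):
    """Match each query descriptor to its nearest database descriptor.

    A match is kept only if the best distance is clearly smaller than the
    second-best one (ratio test). Requires at least two database descriptors.
    Returns a list of (query_index, database_index) pairs.
    """
    query = np.asarray(query_desc, dtype=float)
    db = np.asarray(db_desc, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_db).
    dists = np.linalg.norm(query[:, None, :] - db[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    matches = []
    for qi in range(len(query)):
        best, second = order[qi, 0], order[qi, 1]
        if dists[qi, best] < ratio * dists[qi, second]:
            matches.append((qi, int(best)))
    return matches


# Hypothetical pre-stored descriptors and descriptors extracted from a camera frame.
database = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]
frame = [[0.1, 0.0], [9.8, 0.1], [5.0, 5.0]]  # the last one is ambiguous
matches = match_features(frame, database)
print(matches)
```

The surviving correspondences are what a later pose-estimation step would use to position the AR content relative to the recognised scene.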

Markerless AR has many advantages over marker-based AR, including greater flexibility and a more immersive experience. However, it also poses significant technical challenges, such as the need for more computational power and more complex algorithms, and it might not be as accurate or reliable as marker-based AR in certain situations [22].

3.3. Projection-Based AR

Projection-based AR, also known as Spatial Augmented Reality (SAR), is a branch of AR that aims to embed virtual information in the physical world in a seamless and intuitive manner. Unlike other AR techniques that require users to look through a display screen (for example, a tablet, smartphone, or smart glasses), SAR uses digital projectors to overlay graphical information directly onto physical objects in the user’s environment [36]. This method allows for interactivity by letting users interact with the projected images using hand gestures or touch. For example, a system might use computer vision or other sensing technologies to detect when a user touches a projected button, allowing the user to interact with the AR content in a natural way [37]. The main objective of this approach is to make the interaction between humans and digital information more natural by aligning virtual objects with physical ones. This results in a more immersive and intuitive experience as users are not required to shift their focus between a screen and the environment [38].

Some current systems use this type of AR to project computer-generated information onto fossil specimens, allowing researchers to visualize and study the fossils in new ways. For example, they can project a reconstructed skin surface onto a fossil skull to study the relationship between the skull’s shape and the creature’s external appearance [26]. Another interesting development that makes use of this technology is “The Virtual Mirror” [14], a system that projects medical imaging data onto a patient’s body, providing a guide for surgeons during operations. The system also allows the surgeon to interact with the projected images using hand gestures, creating a more intuitive interface for viewing and manipulating the medical data. Other applications of projection-based AR include industrial training and maintenance, architecture and design, and entertainment and gaming [39].

Despite the technological advances in this type of AR, there are a few disadvantages and challenges that technologies of this type face [40]:

- Lighting Conditions: The visibility and quality of the projections can be significantly affected by the lighting conditions in the environment. Developing projection techniques that can adapt to varying lighting conditions is an active area of research.

- Surface Properties: The color, texture, and shape of the projection surfaces can affect the appearance of the projected images. Future work includes developing algorithms that can account for these surface properties to ensure accurate and consistent projections.

- Tracking and Calibration: Accurate tracking of the user’s viewpoint and the physical objects in the environment is essential for maintaining alignment between the virtual and physical objects. Achieving this in dynamic environments where objects may move is a challenging problem.
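The lighting and surface-property challenges listed above can be made concrete with a deliberately simplified per-pixel model, in which the observed intensity equals the surface reflectance times the projected intensity plus ambient light. The sketch below inverts that model to find what the projector should emit. The model, the function names, and the sample values are assumptions for illustration and are not taken from [40]; real systems also have to handle colour channels, projector response curves, and geometric calibration.

```python
import numpy as np


def observed(projected, albedo, ambient):
    """Assumed image formation model: reflectance * projector output + ambient light."""
    return np.asarray(albedo, dtype=float) * np.asarray(projected, dtype=float) + ambient


def compensate(target, albedo, ambient, eps=1e-3):
    """Projector output (0..1) needed so the surface appears as `target`."""
    target = np.asarray(target, dtype=float)
    albedo = np.asarray(albedo, dtype=float)
    needed = (target - ambient) / np.maximum(albedo, eps)
    # The projector cannot emit negative light or exceed full brightness.
    return np.clip(needed, 0.0, 1.0)


# Hypothetical 1x3 surface: white, grey, and dark patches under uniform ambient light.
target = np.array([[0.5, 0.5, 0.5]])
albedo = np.array([[1.0, 0.5, 0.2]])
ambient = 0.1

projector_out = compensate(target, albedo, ambient)
result = observed(projector_out, albedo, ambient)
print(projector_out, result)
```

The dark patch saturates the projector and still appears darker than intended, which illustrates why surface properties and ambient lighting bound what projection-based AR can display.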

3.4. Superimposition-Based AR

Superimposition-based augmented reality is a type of AR where the original view of an object in the real world is either partially or fully replaced by a newly augmented view of that same object. This replacement is typically driven by object recognition, so the system needs to understand what the object is in order to replace it with an augmented version [41]. For example, in a furniture shopping app, when the phone camera is pointed at an existing piece of furniture in a home, the app could recognize the object and replace it with a 3D model of a new piece of furniture that the user is considering buying. In this way, it is possible to see how that new piece would look in place of the old one [42]. Another example is in the medical field, where doctors could point a device at a patient and have the system recognize certain anatomical features. The system could then overlay a view of the patient’s internal organs or systems, allowing doctors to see inside the patient without invasive procedures [43]. There are two main types of superimposition-based AR:

- Partial Superimposition: Only parts of the original view are replaced by augmented content. For instance, in an AR application that helps users visualize how new furniture might look in their room, the original room view remains visible, but the furniture is replaced with new models [44].

- Full Superimposition: The entire original view is replaced by augmented content. A well-known example of this is the IKEA AR furniture catalog, where users can see how furniture pieces would look in their homes [44].
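The difference between partial and full superimposition can be expressed as a compositing mask: the recognised region is replaced by augmented pixels, and the mask covers either part of the frame or all of it. The sketch below assumes that object recognition has already produced the mask and that the augmented image is already rendered at frame resolution. The function name and the tiny example arrays are hypothetical.

```python
import numpy as np


def superimpose(frame, augmented, mask):
    """Replace masked pixels of `frame` with `augmented`.

    frame, augmented: H x W x 3 arrays; mask: H x W array with values in [0, 1],
    where 1 means fully replaced and 0 means the original view is kept.
    """
    frame = np.asarray(frame, dtype=float)
    augmented = np.asarray(augmented, dtype=float)
    alpha = np.asarray(mask, dtype=float)[..., None]  # broadcast over colour channels
    return alpha * augmented + (1.0 - alpha) * frame


# Hypothetical 2x2 camera frame (black) and rendered augmented content (white).
frame = np.zeros((2, 2, 3))
augmented = np.ones((2, 2, 3))

# Partial superimposition: only the recognised object's pixels are replaced.
partial = superimpose(frame, augmented, mask=[[1, 0], [0, 0]])
# Full superimposition: the entire view is replaced.
full = superimpose(frame, augmented, mask=np.ones((2, 2)))
print(partial[..., 0], full[..., 0])
```

Fractional mask values give soft edges around the replaced object, which helps the augmented content blend with the surrounding real-world view.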

As with other AR technologies, superimposition-based AR is also used in other fields such as retail, maintenance and repair, education, gaming, and entertainment [27]. For example, different AR learning experiences use superimposition AR to create more engaging and interactive educational content. In [15], the authors examine AR learning prototypes in various education fields, which include the use of superimposition-based AR. They delve into how these AR systems can provide immersive, interactive, and enlightening educational content that can significantly enhance traditional learning methods. Furthermore, the authors analyze the technical and pedagogical challenges faced in implementing AR in education. They discuss issues like the need for accurate tracking, the creation of meaningful and engaging content, and the cognitive load placed on the learner when interacting with AR [15].

As with all AR technology, superimposition-based AR faces challenges such as accurate object recognition and tracking, realistic rendering of augmented objects, and seamless integration of augmented content with the real-world environment [42].

3.5. Outlining AR

Outlining AR, also known as edge-based AR or contour-based AR, is a type of augmented reality where the system identifies and highlights the edges or contours of real-world objects. This is often used to bring attention to certain features or details of an object, which can be particularly useful in areas like maintenance, education, and medical procedures [28]. The basic operation of outlining AR involves object recognition and edge detection. The AR system must first recognize the object in the view of the camera, typically through techniques like machine learning or pattern recognition. Once the object is recognized, the system can then use edge detection algorithms to identify the contours of the object. These contours can then be highlighted or augmented in some way [29].
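The edge detection step described above can be illustrated with a minimal sketch. It assumes a Sobel gradient filter, which is only one of several possible edge detectors and is not named in the reviewed works; the function name `sobel_edges`, the grayscale list-of-lists image format, and the threshold value are illustrative assumptions. The object recognition step is omitted.

```python
from typing import List

Image = List[List[float]]  # assumed format: rows of grayscale intensities

# Sobel kernels for horizontal and vertical intensity gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(image: Image, threshold: float) -> List[List[bool]]:
    """Return a mask that is True where the gradient magnitude exceeds threshold.

    Border pixels stay False because the 3x3 kernel does not fit there.
    """
    height, width = len(image), len(image[0])
    mask = [[False] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            mask[y][x] = (gx * gx + gy * gy) ** 0.5 > threshold
    return mask


# Hypothetical 5x6 frame: dark left half, bright right half.
frame = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
outline = sobel_edges(frame, threshold=1.0)
```

In an outlining AR pipeline, the pixels marked True in `outline` would be the ones drawn in a highlight colour on top of the camera image.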

Outlining AR is used in the manufacturing sector to highlight the edges of components that need to be fitted together during assembly [30]. In addition, the use of AR in the field of construction is discussed in [16], outlining how AR can be used to highlight specific architectural features, providing real-time, 3D architectural visualizations that can help in understanding complex structures and designs.

It is important to mention that some types of applications can be implemented with more than one of the technologies mentioned above. For example, AR games can be developed with either marker-based or markerless AR, and different projects in retail and marketing do not necessarily use the same technology. Different examples were covered in order to gain a more comprehensive grasp of the range and implications of each category of AR application. The different types of AR applications were classified according to their functionality and their applied sector through real-life examples of technology implementations.

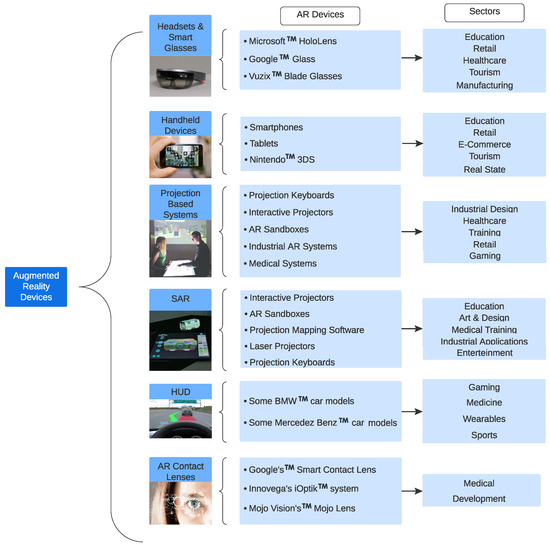

4. Augmented Reality Devices

Building on the comprehensive exploration of the diverse applications of AR in Section 3, the subsequent discussion in Section 4 transitions to focus on the underlying devices that facilitate these applications. The multifaceted nature of AR applications, as observed in the previous section, underscores the necessity for a variety of AR technologies. Whether marker-based or markerless, these technologies serve as the backbone of AR applications, enabling immersive experiences across multiple sectors.

This section unpacks the different devices that drive AR applications, their unique features, and their contribution to the overall AR experience. The discussion will expand upon the foundational knowledge of AR applications, enhancing the understanding of the intricate relationship between AR applications and the devices that make them possible. Figure 4 summarizes in detail the types of devices that are used in each type of AR technology that was discussed in Section 3, including examples, demonstrative images, and some references used to extract the information from each of the AR devices. Figure 4 is divided into six types of AR devices, with illustrative images to support the concept and characteristics of each device, followed by the sectors where these devices are used. Finally, some examples of each AR device type are listed.

Figure 4.

Types of augmented reality devices and illustrative examples [45,46,47,48,49,50]. More details about some examples of AR devices whose technology is based on headsets and smart glasses are in [51,52,53], on handheld devices in [54,55], on projection-based systems in [56], on SAR in [57], on HUD in [58], and on AR contact lenses in [59,60].

AR devices are hardware tools that overlay virtual information onto the real world, enhancing what we see, hear, and feel [61]. These devices can be categorized in several ways; the following classification separates current devices in a practical way [62]: (1) Headsets and Smart Glasses, (2) Handheld Devices, (3) Projection-based Systems, (4) Spatial Augmented Reality (SAR), (5) Head-Up Displays (HUD), and (6) AR Contact Lenses.

4.1. Headsets and Smart Glasses

AR headsets are often larger, more immersive devices that provide a wider Field of View (FoV) and more advanced features. They typically include built-in sensors, cameras, and often depth sensors to map the surrounding environment and overlay 3D digital content seamlessly onto the real world [63]. A well-known example of an AR headset is the Microsoft HoloLens [51]. HoloLens features high-definition holograms integrated with the real world that provide a new way to see the world. It features sensors, a high-resolution 3D optical head-mounted display, and spatial audio capabilities to facilitate augmented reality experiences. Users can engage with an intuitive interface through eye movements, vocal commands, and hand motions [51].

AR smart glasses are typically lighter, less immersive, and resemble a pair of standard eyeglasses. They often have a smaller FoV compared to AR headsets but are usually more comfortable to wear for extended periods. Smart glasses often provide simpler, heads-up display-style AR overlays, rather than the full 3D holograms seen in some AR headsets [52]. An example of AR smart glasses is Google Glass [53]. Google Glass presents data in a format similar to a smartphone but operates hands-free. Users interact with online content through voice commands in natural language. Another instance is the Vuzix Blade [53], offering a transparent display that enables the user to see both the physical environment and augmented reality elements at the same time. Other companies, such as Epson and Magic Leap, have also developed AR glasses and headsets, each with their unique features, strengths, and weaknesses. These devices allow for hands-free operation, which is particularly useful in fields like medicine, manufacturing, and maintenance where users need to access information while using their hands for other tasks [52]. Some of the sectors with implemented applications using this type of AR device include: education, healthcare, manufacturing, retail, tourism, and gaming [63].

4.2. Handheld Devices

Handheld devices, including smartphones and tablets, are the most common and accessible devices used for AR experiences. They utilize the device’s camera to capture real-world images and then overlay virtual information onto these images, which are displayed on the device’s screen [54]. This type of AR typically relies on two main technologies: marker-based AR, which uses image recognition to identify specific visual markers (like QR codes), and markerless AR, which uses GPS, digital compass, velocity meter, or accelerometer data to provide location or motion cues [64]. Notably, certain portable gaming systems, like the Nintendo 3DS, also include AR capabilities. The 3DS, for example, uses physical cards as markers to create AR experiences [55]. The main sectors with implemented applications using this type of AR device include education, retail, e-commerce, navigation, tourism, and real estate [54].
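As an illustration of how markerless AR on a handheld device might combine GPS and compass data, the following sketch computes the bearing from the user to a point of interest and decides where its label would appear on screen. The function names, the 60° horizontal field of view, the 1080-pixel screen width, and the linear angle-to-pixel mapping are all assumptions made for the example; a real system would also use the camera's projection model and sensor filtering.

```python
import math
from typing import Optional


def bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial great-circle bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = (math.cos(phi1) * math.sin(phi2)
         - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(y, x)) % 360.0


def label_screen_x(user_lat: float, user_lon: float, heading_deg: float,
                   poi_lat: float, poi_lon: float,
                   fov_deg: float = 60.0, screen_width: int = 1080) -> Optional[int]:
    """Horizontal pixel position for a POI label, or None if it is out of view.

    heading_deg is the compass heading of the camera (0 = north, 90 = east).
    The angle-to-pixel mapping is linear, which is a simplification.
    """
    target = bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle between camera direction and POI, wrapped to [-180, 180).
    offset = (target - heading_deg + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None
    return round((offset / fov_deg + 0.5) * screen_width)
```

With these assumptions, a point of interest straight ahead lands at the centre of the screen, and one more than 30° to either side is not drawn at all.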

4.3. Projection-Based Systems

Projection-based AR devices work by projecting artificial light onto real-world surfaces and then sensing the human interaction with that projected light. The projection can be done on any type of surface and can be interactive depending on the sophistication of the system. This type of AR technology does not require the user to wear or hold any equipment, which differentiates it from other AR methods [65]. Some main domains where projection-based AR devices are used are: industrial design and manufacturing, medical and healthcare, education and training, gaming and entertainment, and retail and advertising [65]. Projection-based AR can be found in a variety of devices, ranging from advanced setups used in industrial applications to smaller, consumer-focused products [56]:

- Projection Keyboards: Devices like the Celluon Epic project a virtual keyboard onto a flat surface, allowing users to type as they would on a physical keyboard. While not AR in the traditional sense, these devices use similar principles of light projection and interaction detection.

- Interactive Projectors: These are advanced systems that can project images onto any surface, turning them into an interactive display. Examples include the Sony Xperia Touch and the Lightform LF1, which can scan the environment to create a 3D map and project interactive AR content.

- AR Sandboxes: These are educational tools that project a topographic map onto a sandbox. As users move the sand around, changing the landscape, a sensor detects the changes and the projector adjusts the map in real-time. While these are often custom-built for educational environments, the principles could be applied in other AR projection systems.

- Industrial AR Systems: Companies like DAQRI and MetaVision have developed projection-based AR systems for industrial applications. These often involve projecting instructions or data onto work surfaces or components.

- Medical Systems: Certain advanced surgical systems use projection-based AR to provide visual guidance during procedures. For example, the VeinViewer system projects an image of a patient’s veins onto their skin, aiding in procedures like blood draws or IV placements.

4.4. Spatial Augmented Reality

Spatial Augmented Reality (SAR), also known as “room-scale AR” or “tabletop AR”, uses projectors to display graphical information on physical objects. A defining feature of SAR is that the display elements are not tied to the users, as is the case with handheld or head-mounted devices. SAR can provide a more natural and intuitive interface as it aligns with the physical world [57]. The main sectors with implemented applications using these AR devices are education, art and design, medical training, industrial applications (SAR can be used in the manufacturing industry to guide workers through assembly processes), museums and exhibits, and entertainment. One of the main advantages of SAR is that it allows multiple users to interact with the AR content simultaneously, without the need for any special wearables or devices [66]. Some examples of specific devices of SAR technology are [57]:

- Interactive Projectors: Projectors such as the Lightform LFC Kit and Sony’s Xperia Touch can be used to create SAR experiences. These projectors can scan the environment and allow users to “paint with light,” projecting AR content onto any surface.

- AR Sandboxes: An AR Sandbox is a combination of a physical sandbox, a 3D camera (like a Microsoft Kinect), and a projector. The 3D camera tracks the topography of the sand, and the projector overlays a topographic map and water simulation onto the sand. This is often used in educational contexts to teach geography and geology.

- Projection Mapping Software: Software solutions like TouchDesigner, MadMapper, or HeavyM can be used with standard projectors to create complex projection mappings onto 3D surfaces, which can be used for SAR applications.

- Laser Projectors: Devices like the Bosch GLM 50 C, which is technically a laser distance measurer with Bluetooth, can be used for SAR applications. Its ability to measure distances with a high degree of precision makes it suitable for applications that require precise overlays.

- Projection Keyboards: Devices like the Celluon Epic Projection Keyboard project a virtual keyboard onto a flat surface. Users can “type” on this virtual keyboard, and the device uses infrared sensors to detect the position of the fingers.
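The AR Sandbox described in the list above maps the sand height measured by the 3D camera to colours that the projector overlays on the sand. A minimal sketch of that mapping follows; the elevation bands, the colour values, and the function names are hypothetical, and the depth-camera capture and projector calibration steps are left out.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]

# Hypothetical elevation bands: (upper height limit in cm, RGB colour).
BANDS: List[Tuple[float, Color]] = [
    (5.0, (30, 90, 200)),    # low ground: water blue
    (10.0, (60, 170, 80)),   # middle ground: green
    (15.0, (160, 120, 60)),  # high ground: brown
]
PEAK: Color = (255, 255, 255)  # anything above the last band: white


def height_to_color(height_cm: float) -> Color:
    """Return the colour of the first band whose upper limit exceeds the height."""
    for upper, color in BANDS:
        if height_cm < upper:
            return color
    return PEAK


def render_frame(heights: List[List[float]]) -> List[List[Color]]:
    """Convert a grid of sand heights into the colour grid to be projected."""
    return [[height_to_color(h) for h in row] for row in heights]
```

Each time the sensor reports a new height grid, calling `render_frame` again yields the updated image, which is what lets the projection follow the sand as users reshape it.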

This type of AR device is often confused with projection-based AR devices, but there are differences between them. Both categories involve augmenting physical spaces with virtual information, but they do so in different ways and can serve different purposes: projection-based AR focuses on projecting light onto real-world surfaces, which may or may not allow for interaction, while spatial AR focuses on integrating virtual objects into the physical environment in a way that they appear to share the same 3D space, with a strong emphasis on multi-user interaction.

The fundamental principle of SAR is that it augments the physical environment by adding virtual objects that seem to co-exist within the same space as real objects. This generally requires that the system possess a deep understanding of the physical environment’s geometry and lighting conditions, which can be achieved using advanced depth sensors or computer vision techniques.

4.5. HUD

A Head-Up Display (HUD) is a type of AR display that projects images onto a transparent surface, usually a screen or visor, so that the viewer can maintain their focus on their physical environment while simultaneously seeing relevant data or images. The purpose of a HUD is to present data without requiring users to shift their attention from their typical viewpoints. The transparency of HUDs allows them to overlay information directly onto the user’s view of the real world. This information can be static (like a heads-up display in a video game) or it can change in response to the movement and interaction of the user with the physical world (like in AR applications) [58].

Many modern cars, such as certain models from BMW (e.g., the BMW i8 model manufactured in Leipzig, Germany) and Mercedes-Benz (e.g., the Mercedes-AMG GT model manufactured in Sindelfingen, Germany), come equipped with HUDs that can display a variety of information such as the car’s speed, the local speed limit, navigation instructions, and safety warnings directly onto the windshield. Also, commercial airliners and military aircraft use HUDs to provide pilots with crucial information such as altitude, airspeed, and navigation directions directly in their line of sight [67]. Other sectors in which these AR devices are used include gaming, medicine, wearables, and sports.

4.6. AR Contact Lenses

AR contact lenses are a vision for the future of wearable technology. They would function by superimposing digital images or information onto the wearer’s field of vision directly on the lens. This technology is still in its early stages of development and presents a number of technological and physiological challenges that need to be addressed before it becomes commercially viable [59]. Technically, AR contact lenses would need to have the ability to project digital images onto the retina, track eye movement, adjust optics for variations in distance, and potentially even monitor health indicators. They would need to be powered in a way that is safe and doesn’t interfere with vision, and the technology would need to be miniaturized enough to fit within a contact lens [60].

There have been several attempts to develop AR contact lenses [60]:

- Google’s Smart Contact Lens Project: In 2014, Google announced a project aimed at creating a contact lens with an integrated glucose sensor for people with diabetes. While not an AR lens, this project demonstrated the potential for integrating technology into a contact lens.

- Innovega’s iOptik system: This system uses contact lenses to create a much larger field of view for AR and VR applications than would be possible with glasses or headsets alone.

- Mojo Vision’s Mojo Lens: This company is developing a contact lens with an integrated display that can provide information like navigation instructions or health data.

It’s important to note that any AR contact lens will have to meet stringent safety and comfort standards before it can be brought to market. This technology is still in its early stages, but if these hurdles can be overcome, AR contact lenses could provide a seamless and highly integrated form of AR [59].

As with the types of AR, something similar occurs with the devices: each type of device can serve a variety of sectors, and applications from the same sector can be implemented with various types of AR devices. In this sense, the next section presents how AR technologies (algorithms and devices) have influenced the development of different domain areas in recent years.

5. Literature Review by Domain Area

Building upon the exploration of AR devices and their versatility across different sectors, this section delves into augmented reality by domain area. Just as the various types of AR devices offer flexibility in their applications, the domains in which augmented reality can be employed are equally diverse. By examining how AR impacts specific industries, fields, and areas of expertise, it is possible to uncover the transformative potential of this technology in enhancing and revolutionizing various sectors. From healthcare to education, entertainment to manufacturing, AR holds promise for delivering immersive experiences and practical solutions, transcending boundaries, and reshaping the way users interact with the world around them. AR now spans many domains; this section covers the most advanced and important ones.

5.1. Education and Training

AR has emerged as a powerful tool in education and training, revolutionizing traditional teaching methods and providing immersive learning experiences [68]. By seamlessly blending the real world with virtual elements, AR enhances the educational process, fostering engagement, interactivity, and knowledge retention. In the field of education, AR offers students a dynamic and interactive learning environment. Textbooks can be transformed into multimedia experiences, with 3D models, animations, and interactive elements overlaying printed content. Complex concepts, such as anatomical structures or historical landmarks, can be visualized in three dimensions, allowing students to explore and interact with virtual objects, bringing abstract ideas to life [69].

Furthermore, AR enables collaborative learning experiences, where students can interact with virtual content together, fostering teamwork, communication, and problem-solving skills. For instance, group projects can involve creating and manipulating virtual objects or solving puzzles in a shared AR space, promoting active learning and collaboration [69]. AR also has significant potential in training scenarios, particularly in industries that require hands-on experience and practical skills [70]. AR can simulate real-world situations, allowing trainees to practice and refine their abilities in a safe and controlled environment. For example, in medical training, AR can overlay virtual organs onto a mannequin, enabling aspiring surgeons to practice complex procedures with precision and accuracy [71]. Similarly, in industrial settings, AR can guide workers through complex tasks, providing real-time instructions and visual cues. By superimposing digital information onto the physical workspace, AR can assist technicians in equipment maintenance or assembly processes, reducing errors and improving efficiency [71]. These are some of the most recent developments in the “education and training” domain:

- ZooAR: The ZooAR project, developed by researchers at the University of Melbourne, Australia, leverages augmented reality to enhance zoology education. It allows students to interact with virtual 3D models of animals, providing a unique and immersive learning experience. Students can explore the anatomy, behavior, and habitats of various animals through their smartphones or tablets [72].

- ARChem: ARChem is an AR application developed by a team at the University of Bristol, UK. It focuses on teaching chemistry concepts through interactive AR experiences. Students can visualize and manipulate molecular structures in real-time, fostering a deeper understanding of chemical compounds and reactions [73].

- Google Expeditions: Google Expeditions is an AR application designed for educational purposes. It enables teachers to take students on virtual field trips to various locations around the world using AR technology. Students can explore historical sites, natural wonders, and cultural landmarks, enhancing their knowledge and global awareness [74].

5.2. Healthcare and Medical Applications

AR is proving to be an exciting development in the healthcare and medical sector, offering a multitude of applications that have the potential to transform medical processes and improve patient outcomes [75]. One area where AR is making a significant impact is surgery. Surgeons are now able to visualize the internal anatomy of a patient without the need for large incisions. This is achieved by superimposing a 3D model of a patient’s anatomy onto their body, effectively providing the surgeon with a kind of “X-ray vision”. This aids both in the planning of complex procedures and during the surgery itself, resulting in less invasive operations and quicker patient recovery times [76].

In the field of rehabilitation, AR is being used to make physical therapy more engaging and enjoyable for patients. AR games can be developed that transform exercises into fun activities, which can be particularly beneficial for children or those undergoing long-term rehabilitation [77]. Finally, AR is even influencing the world of prosthetics and orthotics. It can assist in the design and fitting of these devices, increasing comfort and usability for patients [75]. These are some of the most recent developments in the “healthcare and medical applications” domain:

- AccuVein: AccuVein is a handheld device that uses AR to visualize veins in real-time, making it easier for healthcare providers to insert needles for IV placement and blood draws. It illuminates the skin to show vein structures and improves the accuracy of vein puncture [78].

- ProjectDR: Developed by researchers at the University of Alberta, ProjectDR is an AR system that projects medical imaging data, like computed tomographies and magnetic resonance imaging datasets, onto a patient’s body in real-time. This technology could help surgeons see beneath a patient’s skin without the need for incisions [79].

- HoloAnatomy: Developed by Case Western Reserve University and Cleveland Clinic in collaboration with Microsoft, HoloAnatomy uses Microsoft’s HoloLens AR headset to teach medical students about human anatomy. It allows students to visualize and interact with life-sized 3D models of the human body [80].

5.3. Manufacturing and Industrial Processes

AR in the industrial and manufacturing sectors integrates virtual data into the physical world to create an enhanced version of reality. This technology overlays digital information, such as images, 3D models, and data, onto a real-world environment in real time [81]. It allows workers to learn new procedures in a risk-free environment before applying these skills in the actual workspace. This kind of immersive training helps improve workers’ understanding and retention of information. In addition, AR can provide technicians with real-time information and guidance during maintenance and repair tasks. By overlaying digital information onto the physical equipment, technicians can easily identify issues and understand the necessary steps to resolve them [82].

Designers and engineers can use AR to visualize and manipulate 3D models of products in the real world. This helps in better understanding the design, enabling modifications before actual production [83]. Quality control is another important use of AR in this sector: the manufactured product can be compared with the original design by overlaying the 3D model onto the product [84]. The following are some promising developments in this field:

- Tech Live Look: Porsche uses an AR application called “Tech Live Look” that connects dealership technicians with remote experts. These experts can look at a car through the technician’s AR glasses and provide real-time guidance and support, significantly reducing service resolution times [85].

- Vision Picking: DHL has implemented AR in their warehouses to assist workers with “vision picking”. Workers wear smart glasses that display where items are located, the quickest route to them, and where they need to be placed on the cart. This results in increased efficiency and reduced errors [86].

- Siemens: Siemens uses AR to train its workers on Simatic controller assembly. With AR, the company shows step-by-step instructions directly in the workers’ field of view, reducing errors and the time spent on assembling the controllers [87].

5.4. Urban Planning and Smart Cities

AR presents exciting possibilities for the fields of urban planning and smart cities. By overlaying digital information in the real world, AR allows for a seamless integration of digital intelligence and analytics within the physical environment. This can facilitate real-time decision-making and public engagement, streamline construction and maintenance processes, and enhance community well-being [88]. For urban planners, AR provides a dynamic tool for visualizing changes in landscapes, infrastructures, and traffic flow, making it easier to evaluate the impact of proposed developments. Meanwhile, smart city initiatives can benefit from AR applications to improve public transportation, emergency services, and citizen engagement [89]. Following are some developments of AR in this domain area:

- Virtual Zoning and Land Use Modeling: AR can make the process of zoning and land-use planning much more interactive and accessible. Using AR apps, planners and citizens alike can visualize different zoning scenarios on-site, thereby promoting more informed discussions and decisions. For instance, you could point your smartphone at an empty lot and see a digital overlay of a proposed building, complete with height, design, and how it interacts with its surroundings [90].

- Asset Management and Maintenance: Cities have numerous assets like streetlights, water pipes, and public benches that need regular maintenance. AR can help city workers identify and assess the condition of these assets in real time. Maintenance staff can point a device at an asset and receive immediate information on its maintenance history, required repairs, or even step-by-step repair instructions, thereby improving operational efficiency [91].

- Public Transport Navigation: One of the challenges in public transport is navigating complex stations or understanding when the next bus or train will arrive. Augmented reality can improve user experience by providing real-time information through AR glasses or smartphones. For instance, as you enter a subway station, AR could guide you to your platform or even your seat by projecting arrows onto your field of view, while also displaying real-time schedules [92].

5.5. Other Applications

There are more applications found in smaller sectors, or at least in sectors not as developed as those previously mentioned in this section. This does not mean that these applications are less developed than those found in more prolific sectors; the development of a sector is one thing, and the level of development of an application, which depends on the technology used, is another. A very clear example is Pokémon Go [22], whose popularity was due not only to the originality of the idea at that time but also to how well the technology was implemented to simulate the capture of Pokémon. The following are sectors/areas in development with important AR technologies:

- Video Games: Besides Pokémon Go [22], other games implement AR in their gameplay, such as “AR Quake”, which is the first game to implement AR [93]. AR games can also be educational. For instance, players can learn history by scanning a historical marker with their phone to see events unfold on screen [94].

- Retail and Marketing: Many retail stores now use AR technology not just to inform customers about products but also to enhance the customer experience. For example, customers can scan products with their smartphones to get instant access to reviews, tutorials, and other content. Stores also offer “virtual fitting rooms” where customers can see how clothes, glasses, or makeup would look on them without physically trying them on [12].

- Real Estate: Instead of physically visiting a property, you can take a virtual tour where AR overlays can provide information about the property, like dimensions, furniture arrangement, etc. It is also possible to see, via AR, how a property will look after specific changes, such as a different paint color or added furniture. Real estate developers can use AR to visualize how a construction project will look upon completion [13].

- Sports: Athletes can use AR to analyze and improve their performance by overlaying data like speed, trajectory, and form [95]. In stadiums or at home, fans can use AR through their phones or AR glasses to get real-time statistics, player profiles, and other interactive content. Also, sports brands can use AR to offer virtual try-ons of equipment or apparel [96].

6. Results and Findings

The Technology Readiness Level (TRL) is a metric, originally developed at NASA and later adopted by the European Commission, that employs a 1–9 scale to assess studies based on their level of technological preparedness, such as implementation and validation stages, as cited in [7]. The scale is broken down as follows: (1) observation of fundamental principles, (2) conceptualization of the technology, (3) empirical evidence of the concept, (4) lab-based validation of the technology, (5) validation in a pertinent setting (industry-relevant context for key technologies), (6) technology demonstration in a relevant setting (industry-relevant in the case of key technologies), (7) prototype system exhibited in a functional environment, (8) fully developed and certified system, (9) validated system in an operational context. In this paper, all reviewed studies have been analyzed by rigorously adhering to every guideline stated in the methodology, and these technologies have been scored according to the TRL index.
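For readers who want to apply the same scoring to their own survey data, the scale above can be encoded as a simple lookup table. This is only a sketch: the names `TRL_SCALE`, `describe_trl`, and `most_advanced`, as well as the cut-off of 7 used for "more advanced" technologies, are assumptions made for illustration and are not taken from the methodology of this review.

```python
from typing import Dict, List, Tuple

# The nine levels as worded in this section.
TRL_SCALE: Dict[int, str] = {
    1: "observation of fundamental principles",
    2: "conceptualization of the technology",
    3: "empirical evidence of the concept",
    4: "lab-based validation of the technology",
    5: "validation in a pertinent setting",
    6: "technology demonstration in a relevant setting",
    7: "prototype system exhibited in a functional environment",
    8: "fully developed and certified system",
    9: "validated system in an operational context",
}


def describe_trl(level: int) -> str:
    """Return a readable label for a TRL score, rejecting values outside 1 to 9."""
    if level not in TRL_SCALE:
        raise ValueError(f"TRL must be an integer from 1 to 9, got {level!r}")
    return f"TRL {level}: {TRL_SCALE[level]}"


def most_advanced(scored: List[Tuple[str, int]],
                  minimum: int = 7) -> List[Tuple[str, int]]:
    """Keep (name, trl) pairs at or above an assumed cut-off, highest TRL first."""
    kept = [(name, trl) for name, trl in scored if trl >= minimum]
    return sorted(kept, key=lambda item: item[1], reverse=True)
```

For example, `describe_trl(4)` returns the label for lab-based validation, and `most_advanced` can be used to shortlist the entries of a scored list of technologies.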

The exploration of AR across various domains, as detailed in the previous section, underscores the vast potential of AR technologies in a multitude of sectors. From education to healthcare, and through to manufacturing and industrial processes, AR is reshaping how people perceive and interact with the world, and in the process, transforming traditional workflows and practices. In the education and training sector, the inclusion of AR into learning environments has fundamentally enhanced the educational experience, making it more engaging and immersive. Whether by bringing textbook content to life, enabling collaborative learning experiences, or training aspiring professionals in high-stakes industries, AR is making learning more dynamic and interactive. Similarly, healthcare and medical applications of AR are revolutionizing patient care and medical procedures. By providing surgeons with “X-ray vision” and making physical therapy more enjoyable, AR is improving patient outcomes and transforming the medical landscape. In the industrial and manufacturing sectors, AR’s ability to overlay digital information onto the physical world is improving efficiency, accuracy, and safety. From enhancing worker training to guiding technicians during complex tasks and facilitating product design and quality control, AR is fast becoming an indispensable tool in these domains. These findings underscore that AR’s potential is immense and widespread across various sectors. Yet, it is essential to understand that the adoption and effective implementation of AR are contingent on overcoming certain challenges, including technological limitations, user acceptance, and ethical considerations. As future technology continues to navigate this relatively nascent terrain, ongoing research and development will be pivotal in realizing the full potential of AR in these and other domains.

Table 2 outlines the emerging technology trends in AR, summarizing the following elements: AR Technology, Paper Reference, Commercial Application, Sector, Publication Classification, Hardware, Software, Connectivity, Protocol, and TRL index. Table 2 shows the technologies that are most advanced in terms of TRL: although the reviewed studies present several technologies, only the more advanced ones are highlighted, in line with the purpose of the methodology. Note that the hardware in every application is always a set of devices that work together to implement the AR technology; the same holds for the software. The sectors most involved in AR trends are medical, urban planning, and entertainment. The publication classification of all the selected studies is “Computer Science”, owing to the nature of this technology (developing AR applications involves various software implementations). Because most of the selected AR studies have a high TRL, these technologies have already been proven in an operational environment; however, many challenges and limitations remain, especially in some non-conventional sectors of AR, e.g., the commercialization of AR contact lenses, whose development is still at an early stage.

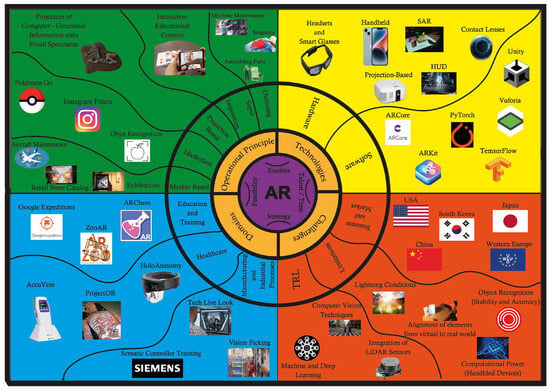

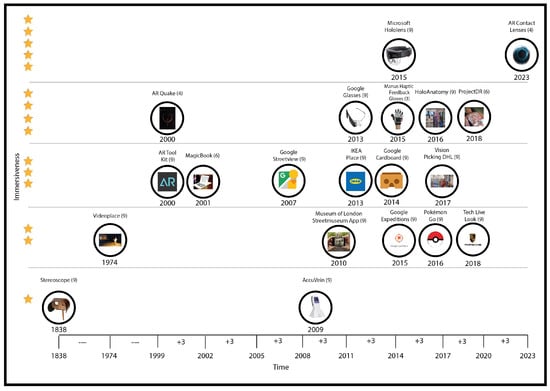

Figure 5 illustrates the principal elements collected throughout the presented SLR. The map is divided into four main sections: Operational Principle (the way the AR technology works), Technologies (divided into hardware and the main software commonly used for development), Domains, and Challenges (including the world markets that currently invest the most). In addition, Figure 6 shows the evolution of AR from the beginning of this technology (first documented developments) to 2023. The “x” axis represents time and the “y” axis represents the level of immersiveness, ranked from 1 to 5 stars, with 1 being the least immersive and 5 the most immersive (based on the type of technology and the way a user interacts with it). The name of each technology is shown with its TRL index in parentheses. Some of the AR technologies shown have since been discontinued; however, they reached a TRL of 9 because they were commercialized or opened to the public. Others, despite their high level of immersiveness, were never completed, which means a low TRL.

Figure 5.

The Map of Augmented Reality.

Figure 6.

Evolution of Augmented Reality. Stars indicate the immersiveness level from 1 to 5 (from low to high, respectively). The name and TRL of each technology are indicated above the corresponding circled image.

Table 2.

AR application technological comparison. The version and/or manufacturer of each commercial application is the one presented in the corresponding reference.

| AR Technology | Reference | Commercial Application | Sector | Publication Classification | Hardware | Software | Connectivity | Protocol | TRL |
|---|---|---|---|---|---|---|---|---|---|
| Marker-Based AR | [12] | Virtual Try-On Technology in Retail | Retail and Marketing | Computer Science | Smartphones/Tablets, Webcams, Depth Cameras, 3D Scanners, Smart Mirrors, Wearables, and AR Headsets | ARCore, ARKit, Vuforia, OpenCV, TensorFlow, PyTorch, Blender, Android Studio, Unity, Unreal Engine, and Google™ Cloud | Wi-Fi, Bluetooth, and Cloud Connectivity | TCP/IP | 8 |
| Marker-Based AR | [11] | The Museum of London’s Streetmuseum App | Museums and Exhibits | Computer Science | Smartphones/Tablets, Camera, Accelerometer, Gyroscope, and GPS Sensor | ARCore, ARKit, Xcode, Android Studio, Swift, Kotlin, Amazon™ Web Services, and MongoDB | Wi-Fi | HTTP/HTTPS | 9 |
| Markerless AR | [24] | Google’s™ Live View | AR Navigation | Computer Science | Smartphones/Tablets, Camera, Accelerometer, Gyroscope, and GPS Sensor | Google™ Maps, Android, iOS, Location APIs, Camera APIs, ARCore, and ARKit | Wi-Fi | HTTP/HTTPS and NMEA 0183 | 9 |
| Markerless AR | [22] | Pokémon™ Go | AR Games | Computer Science | Smartphones, Gyroscope, GPS Receiver, Camera, and Accelerometer | Niantic™ Real World Pokémon™, Kotlin, Swift, Objective-C, ARKit, ARCore, Adobe™ Photoshop, and Trello | Wi-Fi/Mobile Data | TCP/IP | 9 |
| Markerless AR | [23] | Instagram™ Filters | AR Social Media Filters | Computer Science | Smartphones and Cameras | Adobe™ Photoshop, GIMP, Autodesk™ Maya, Blender, Unity Shader Graph, Unreal Engine Material Editor, Visual Studio Code, PyCharm, OpenCV, TensorFlow, PyTorch, Git, and Mercurial | APIs, Server, and Cloud Connectivity | HTTP/HTTPS | 9 |
| Markerless AR | [13] | Outdoor Augmented Reality Mobile Application Using Markerless Tracking | Urban Planning | Computer Science | Smartphones/Tablets, IMU, Cameras, LiDAR, Depth Sensors, ToF Sensors, and Qualcomm™ Snapdragon | OpenCV, ARCore, ARKit, Unity 3D, Unreal Engine, TensorFlow, PyTorch, Caffe, Xcode, Java, and C++ | Mobile Data and Bluetooth | TCP, GSM, and GAP | 6 |
| Projection-Based AR | [14] | The Virtual Mirror | Healthcare and Medical | Computer Science | Display Device, Cameras, and ToF Sensors | ARCore, ARKit, APIs, Unity 3D, Vuforia, OpenCV, Apple’s™ SceneKit, SfM, SLAM, Backend, and Cloud Integration | HIPAA Compliance | DICOM and HL7 | 6 |
| Markerless AR | [72] | ZooAR | Education | Computer Science | Smartphones/Tablets, Camera, AR Glasses/Headsets, Accelerometer, Gyroscope, GPS, Proximity Sensor, and Ambient Light Sensor | Unity, ARCore, ARKit, Blender, and JetBrains | Wi-Fi/Mobile Data, GPS/Location Services, and NFC | UDP | 8 |
| Markerless AR | [72] | ARChem | Education | Computer Science | Cameras, Accelerometer, Gyroscope, Magnetometer, and Depth Sensor | ARCore, ARKit, Unity Engine, C#, AutoCAD, SolidWorks, Unity Physics, NVIDIA PhysX, Blender, and Maya | Wi-Fi, Cloud Service, and Real-Time Communication | HTTP/HTTPS, TCP/IP, and WebSockets | 6 |
| Markerless AR | [74] | Google™ Expeditions | Education | Computer Science | Smartphones/Tablets, Google’s™ Daydream View, and Google™ Cardboard (and other VR-compatible headsets) | Java, Daydream, Cardboard, ARCore, and Unity | Wi-Fi | HTTP/HTTPS, TCP/IP, and WebRTC | 9 |

7. Discussion

Having surveyed AR technology and its applications, this paper now examines its intricacies, its potential, and the hurdles that lie ahead. This section discusses the challenges and limitations associated with the application of AR by analyzing the technological advancements and trends transforming the AR landscape and by identifying the contributions, benefits, and implications AR carries across various sectors. The discussion is structured into five segments: Section 7.1 covers the application challenges and limitations; Section 7.2 covers the recent technological advancements and emerging trends in AR; Section 7.3 discusses the diverse contributions, benefits, and implications of AR across various domains; Section 7.4 addresses the ethical implications that must be considered when developing AR technology; and Section 7.5 answers, in more depth and detail, the research questions established at the beginning of this work. Each segment clarifies a distinct aspect of AR, contributing to a multifaceted perspective that captures both the current realities and the prospective developments of this technology.

7.1. Application Challenges and Limitations

This paper has examined various AR techniques, namely marker-based AR, markerless AR, projection-based AR, superimposition AR, and outlining AR. The assessment of AR’s use across numerous domains, including education and training, healthcare and medical applications, manufacturing and industrial processes, retail and marketing, and urban planning and smart cities, leads to the first discussion segment: application challenges and limitations.

AR, despite its significant advancements and widespread applicability, faces some critical challenges that limit its full exploitation. These hurdles vary depending on the type of AR technique in use and the domain of application. Marker-based and markerless AR systems, for instance, often encounter difficulties related to the stability and accuracy of object recognition and tracking. Lighting conditions, occlusions, and camera quality significantly affect the reliability of these systems, making them less feasible in environments where precision is paramount, such as healthcare and manufacturing. Projection-based and superimposition AR, on the other hand, face issues concerning the alignment of virtual and real-world elements. Misalignment may lead to an ineffective or distorted AR experience. Furthermore, these techniques need high computational power and advanced graphics capabilities, which limits their widespread adoption, especially on resource-constrained platforms such as handheld devices. One of AR’s main challenges lies in the accuracy of real-time depth sensing and perception. Errors or delays in rendering could lead to insufficient or incorrect information, adversely affecting the user experience and the applicability in fields such as education and training, where real-time interactivity is key.
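To give a sense of scale for the alignment problem, the following minimal sketch estimates how far a virtual overlay drifts on screen when the tracked camera pose is slightly wrong. It assumes an ideal pinhole camera and a point near the image center; the function names and the numeric values (a focal length of 1000 pixels, a 0.5° orientation error, a 1 cm position error) are illustrative assumptions, not measurements from the reviewed studies.

```python
import math


def pixel_offset_from_rotation(focal_px, angle_error_deg):
    """On-screen shift (pixels) of a point near the image center when the
    estimated camera orientation is off by angle_error_deg (pinhole model)."""
    return focal_px * math.tan(math.radians(angle_error_deg))


def pixel_offset_from_translation(focal_px, lateral_error_m, depth_m):
    """On-screen shift (pixels) when the estimated camera position is off
    sideways by lateral_error_m, for a point at distance depth_m."""
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    return focal_px * lateral_error_m / depth_m


if __name__ == "__main__":
    focal_px = 1000.0  # assumed focal length expressed in pixels
    print(pixel_offset_from_rotation(focal_px, 0.5))          # about 8.7 px
    print(pixel_offset_from_translation(focal_px, 0.01, 0.5)) # 20 px at 0.5 m
    print(pixel_offset_from_translation(focal_px, 0.01, 5.0)) # 2 px at 5 m
```

Under these assumptions, the same tracking error produces a much larger visible misalignment for nearby objects than for distant ones, which is one reason close-range, precision-critical settings are demanding.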

Considering AR devices, challenges also persist. For instance, headsets and smart glasses may offer immersive experiences but can be physically uncomfortable for prolonged use and pose social acceptability issues. Handheld devices suffer from limitations in display size and interaction modalities. Projection-based systems require controlled environmental conditions for optimal performance, limiting their usability in varying settings. AR contact lenses, while promising, are still in nascent stages, with challenges related to comfort, safety, and ethical considerations yet to be addressed comprehensively. Moreover, complexities related to user interface design, privacy concerns, and the cost of implementing AR solutions are pervasive challenges across all applications and technologies. They impose significant restrictions on AR’s broader adoption and usability in domains like retail and marketing or urban planning and smart cities. While AR holds immense potential in transforming numerous domains, these limitations necessitate concerted efforts in research and development to mitigate the identified challenges and unlock AR’s full potential. The subsequent sections will delve into the technological advancements and emerging trends that are set to shape the future of AR, followed by a discussion on the contributions, benefits, and implications of these developments.

7.2. Technological Advancements and Emerging Trends in Augmented Reality

This subsection discusses the technological advancements and emerging trends that are driving AR’s evolution and helping to mitigate the challenges discussed above.

In terms of AR techniques, significant strides have been made. Advanced algorithms and computer vision techniques have markedly improved the precision and reliability of marker-based and markerless AR. Sophisticated image processing, machine learning, and deep learning algorithms are enabling more robust object recognition and tracking, even under challenging conditions, significantly enhancing applications in domains like healthcare and manufacturing. In particular, deep learning techniques have started to change the field of AR by introducing higher levels of automation and efficiency in object recognition and scene understanding. Convolutional Neural Networks (CNNs) have proven particularly effective in image classification tasks, making it easier for AR systems to identify real-world objects and superimpose digital information over them with greater accuracy. Furthermore, Generative Adversarial Networks (GANs) are facilitating more realistic virtual object generation, contributing to a more immersive AR experience. Reinforcement learning has also been adapted to optimize tracking algorithms in AR, making them more resilient to environmental noise and variable lighting conditions. These deep learning models are often trained on large datasets and can be deployed on cloud infrastructures to support real-time performance, thus making scalable and robust AR applications more feasible. The maturation of these deep learning technologies extends AR’s capabilities and applications across various sectors, from gaming to remote surgery.

Projection-based and superimposition AR systems have seen substantial advancements in terms of graphics processing capabilities. The development of powerful GPUs and cloud-based rendering solutions has made it possible to handle complex computations and graphics-intensive tasks more efficiently, thereby easing their implementation on various devices.

Outlining AR is witnessing advancements in depth-sensing technologies. Integration of LiDAR (Light Detection and Ranging) sensors in handheld devices and headsets is improving real-time depth perception, thereby leading to a more accurate and interactive AR experience, particularly in education and training.
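As an illustration of why per-pixel depth matters for AR, the sketch below shows two simplified steps: converting the round-trip time of a light pulse into a distance, and using a depth map to decide where a virtual object should be hidden behind a real surface. It is a minimal example under stated assumptions, not the pipeline of any specific device: the function names are hypothetical, the depth maps are small hand-written grids, and real sensors add calibration, noise filtering, and upsampling that are omitted here.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s):
    """Distance to a surface from the round-trip time of a light pulse.
    The pulse travels to the surface and back, hence the division by two."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def occlusion_mask(real_depth, virtual_depth):
    """Per-pixel visibility of a virtual object.

    real_depth: 2D list of measured distances (metres) to real surfaces.
    virtual_depth: 2D list of the same shape with the distance to the virtual
        object, or None where the object does not cover the pixel.
    Returns a 2D list of booleans, True where the virtual pixel is drawn
    (that is, where the virtual object is closer than the real surface).
    """
    mask = []
    for real_row, virtual_row in zip(real_depth, virtual_depth):
        mask.append([
            v is not None and v < r
            for r, v in zip(real_row, virtual_row)
        ])
    return mask


if __name__ == "__main__":
    print(tof_distance_m(20e-9))  # a 20 ns round trip is roughly 3 m

    real = [[1.0, 2.0],
            [0.5, 3.0]]
    virtual = [[1.5, 1.5],
               [None, 1.0]]
    print(occlusion_mask(real, virtual))
```

In the small example, the virtual object is hidden in the top-left pixel because a real surface at 1.0 m lies in front of it, while it remains visible where the real scene is farther away.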