Mobile Customer Satisfaction Scoring Research Based on Quadratic Dimension Reduction and Machine Learning Integration

Abstract

1. Introduction

2. Literature Review

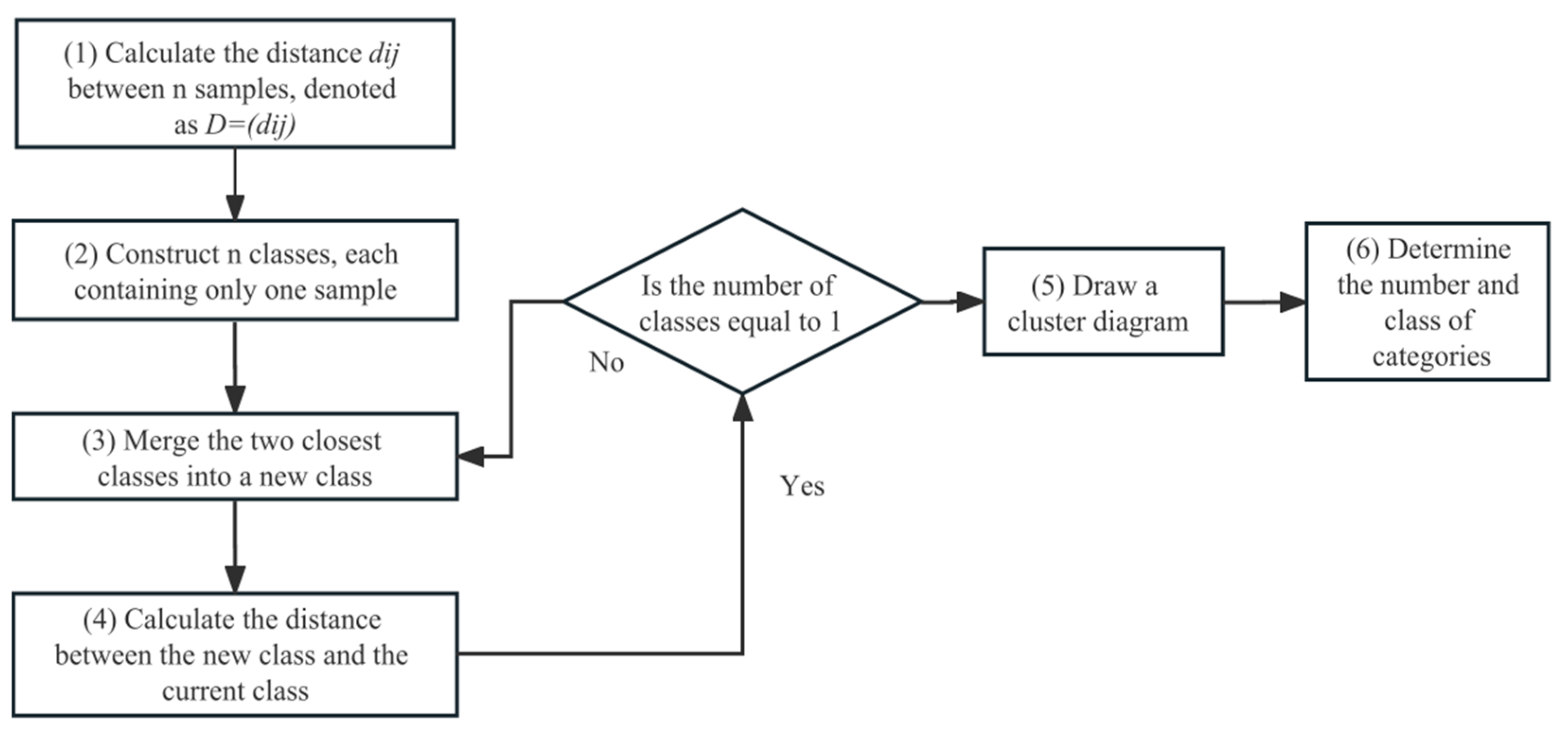

2.1. Systematic Clustering

2.2. Exploratory Factor Analysis (EFA)

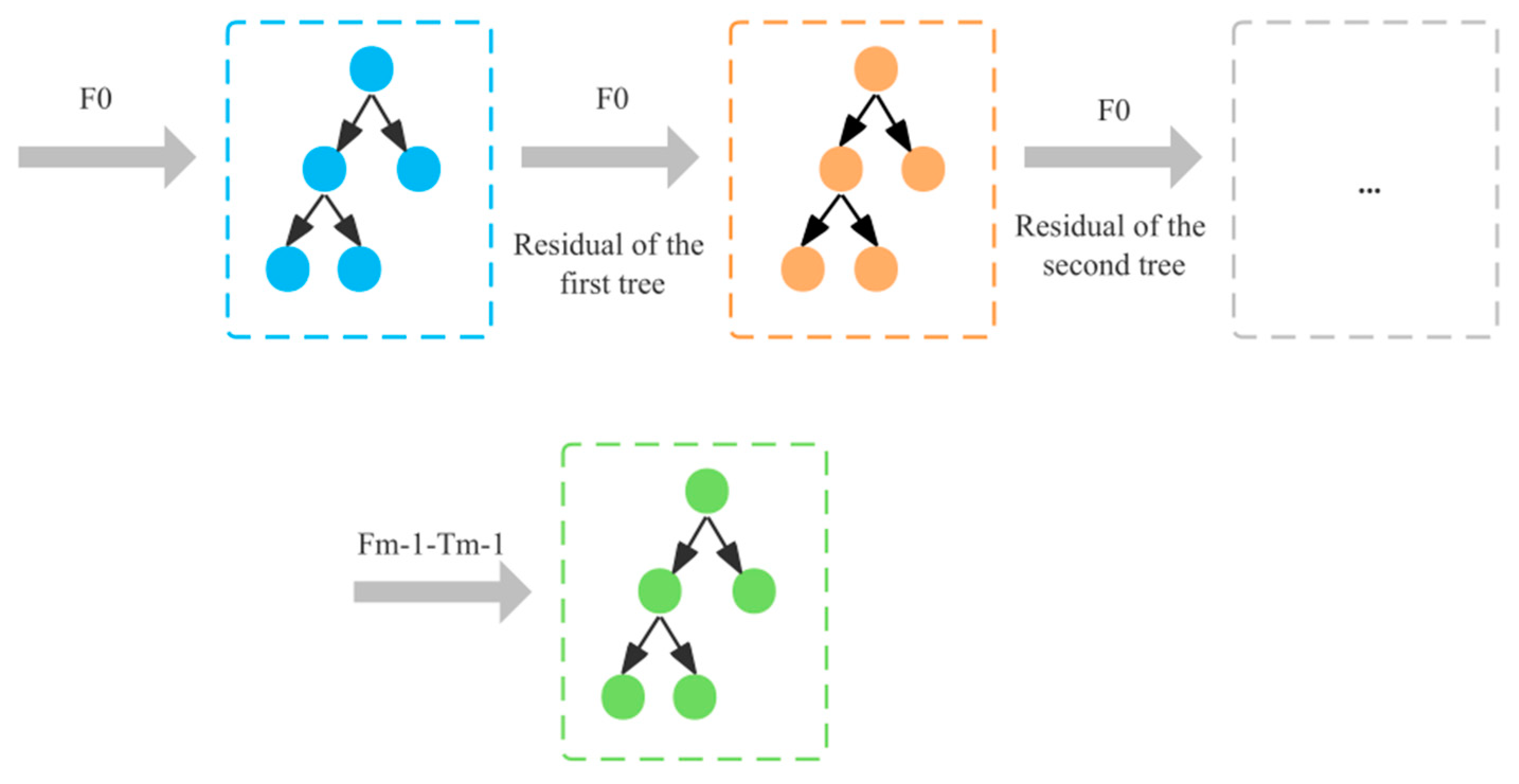

2.3. GBDT Algorithm

3. Establishment of Core Indicator System

3.1. Initial Indicator System

3.1.1. Data Cleaning

- 1. Quantitative processing of data

  - ① Whether encountered network problems (yes → 1, no → −1)

  - ② 4/5G user (2G → 2, 4G → 4, 5G → 5)

  - ③ Voice mode (VoNR → 6, EPSFB → 5 (5G))

  - ④ Caring for users or not (no → −1, yes → 1)

  - ⑤ Whether or not the user is a real-name registered user (no → −1, yes → 1)

  - ⑥ Client star rating label (unrated → −1, semi-starred → 0)

- 2. Empty value processing

- 3. Outlier handling
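The quantitative processing step can be sketched as a simple lookup from raw labels to numeric codes. This is only an illustration of the mappings listed above: the field names (`network_problems`, `user_generation`, and so on) and the exact label spellings are hypothetical, since the layout of the raw data is not given here.

```python
# Hypothetical field names and label spellings; the numeric codes follow the
# mappings listed in the data-cleaning step.
ENCODINGS = {
    "network_problems": {"yes": 1, "no": -1},
    "user_generation": {"2G": 2, "4G": 4, "5G": 5},
    "voice_mode": {"VoNR": 6, "EPSFB": 5},
    "care_user": {"yes": 1, "no": -1},
    "real_name_registered": {"yes": 1, "no": -1},
    "star_rating": {"unrated": -1, "semi-starred": 0},
}


def encode_record(record):
    """Return a copy of `record` with known categorical labels replaced by codes.

    Labels without a mapping are left untouched so that they can be dealt with
    in the later empty-value and outlier steps.
    """
    encoded = dict(record)
    for field, mapping in ENCODINGS.items():
        if field in encoded and encoded[field] in mapping:
            encoded[field] = mapping[encoded[field]]
    return encoded


sample = {
    "network_problems": "yes",
    "user_generation": "5G",
    "voice_mode": "VoNR",
    "care_user": "no",
    "real_name_registered": "yes",
    "star_rating": "unrated",
}
encoded_sample = encode_record(sample)
print(encoded_sample)
```

Keeping the mappings in one dictionary makes it easy to audit the codes against the list above and to extend them if further star-rating levels need to be encoded.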

3.1.2. Systematic Clustering

3.2. Core Indicator System

- 1.

- Standardizing the data. In this paper, the data are standardized using the Z-Score method, which uses the standard deviation as a ruler to measure the distance that a particular raw score deviates from the mean, which contains a few standard deviations and Z-Scores. Thus, the position of these data in the whole data is determined. Z is determined as

- 2.

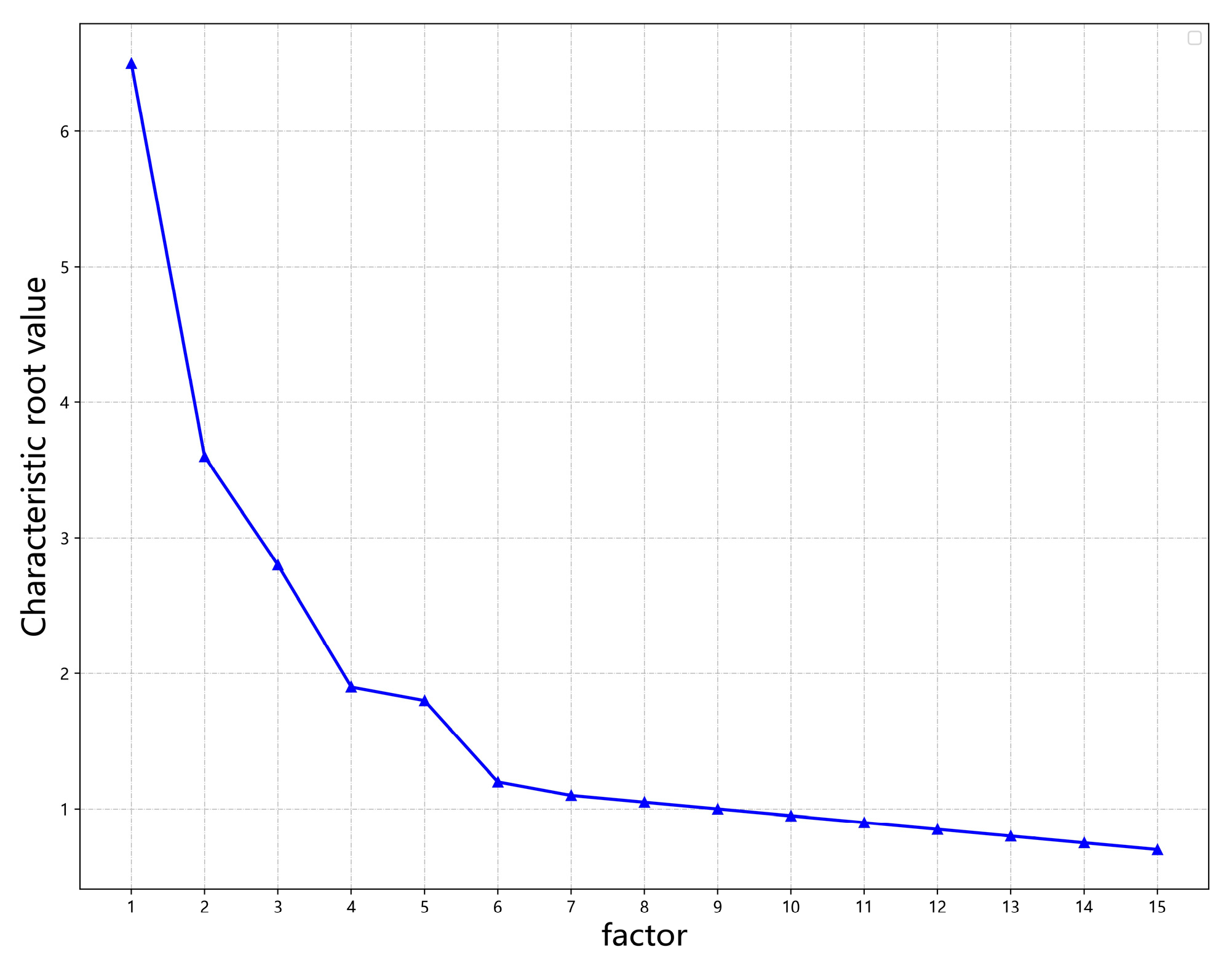

- The KMO test and Bartlett’s test are performed, and the standardized data are brought through the SPSSPRO platform to determine whether factor analysis could be performed. The results of the tests are represented in Table 4.

- 3.

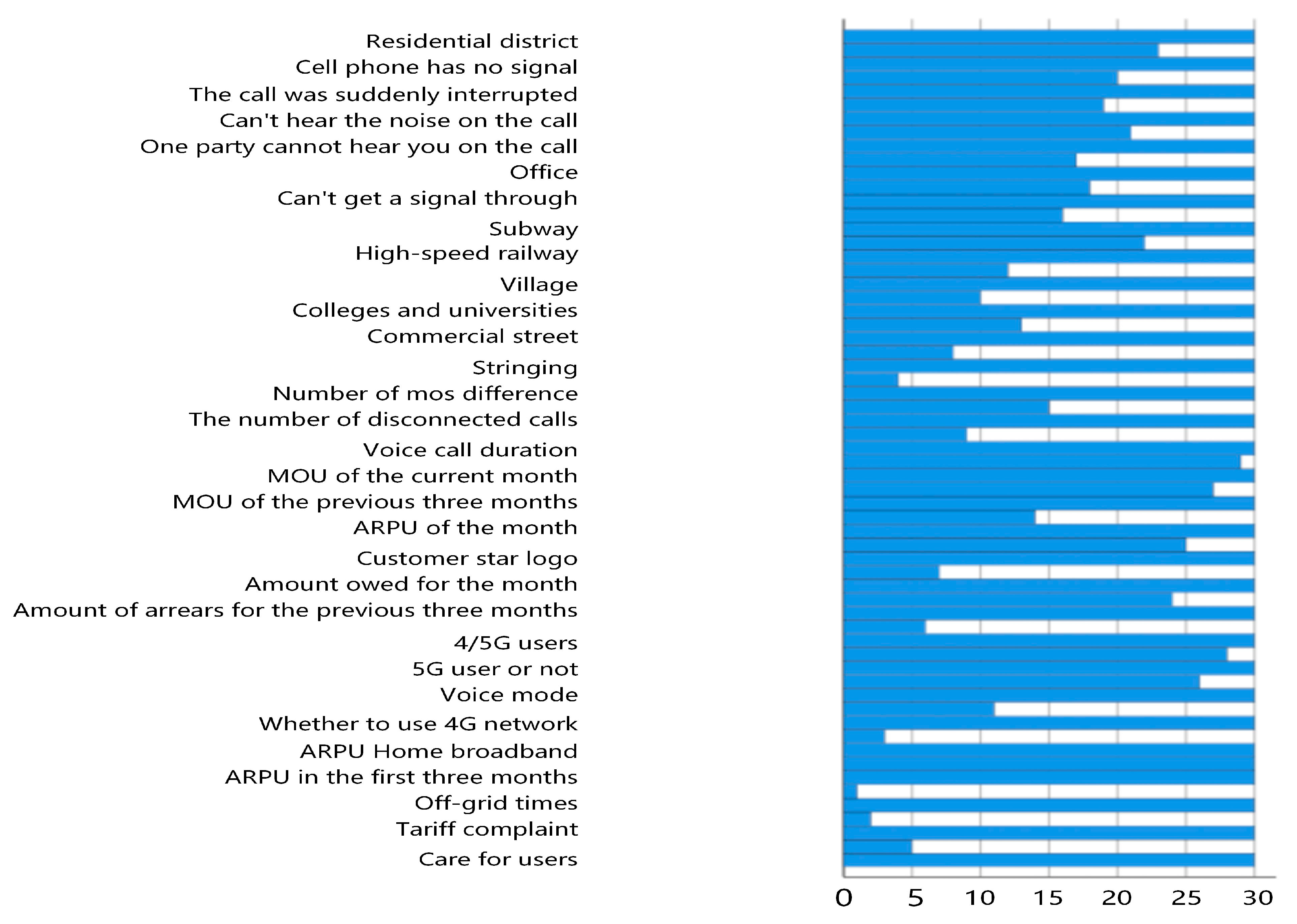

- Determining the number of principal factors.

- 4.

- The elbow rule corrects the model

- 5.

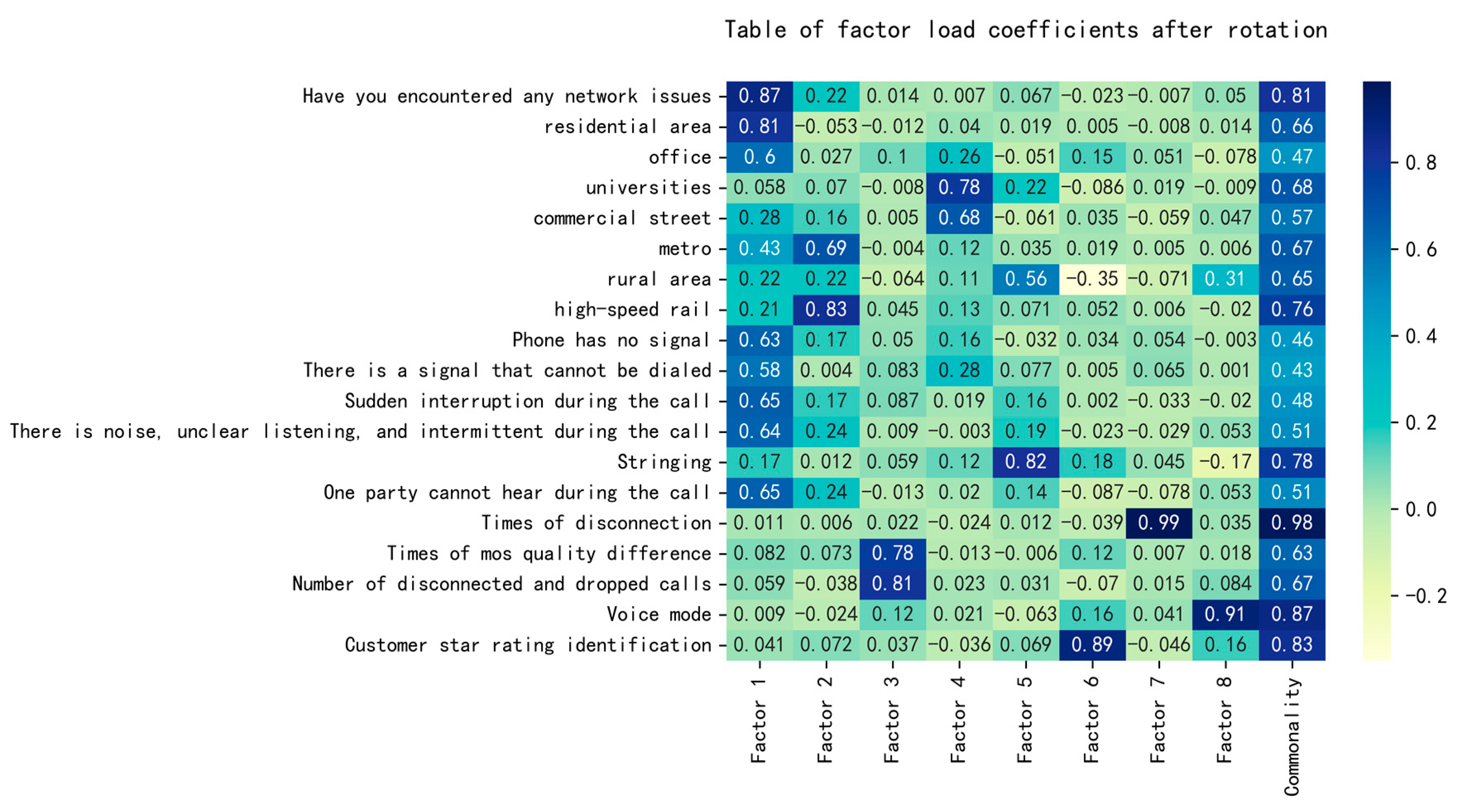

- Naming factor loading coefficients
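As a small illustration of the standardization in step 1, the sketch below applies the Z-score transform to a single column using only the Python standard library. The sample values are the overall satisfaction scores of the nine users shown in the sample data table. Using the population standard deviation is an assumption, since the text does not say which variant the SPSSPRO platform applies.

```python
from statistics import fmean, pstdev


def z_scores(values):
    """Standardize a column: subtract the mean, divide by the standard deviation."""
    mu = fmean(values)
    sigma = pstdev(values)  # population SD (assumption); sample SD is the other common choice
    if sigma == 0:
        raise ValueError("a constant column cannot be standardized")
    return [(x - mu) / sigma for x in values]


# Overall satisfaction scores of the nine sample users.
satisfaction = [10, 2, 10, 6, 5, 7, 8, 10, 10]
standardized = z_scores(satisfaction)
print([round(z, 3) for z in standardized])
```

After the transform the column has mean 0 and standard deviation 1, which puts indicators measured on different scales on a comparable footing before factor analysis.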

4. GBDT Algorithm Predicts User Satisfaction

4.1. Data Re-Cleaning

4.2. GBDT Algorithm Prediction

5. Conclusions and Future Work

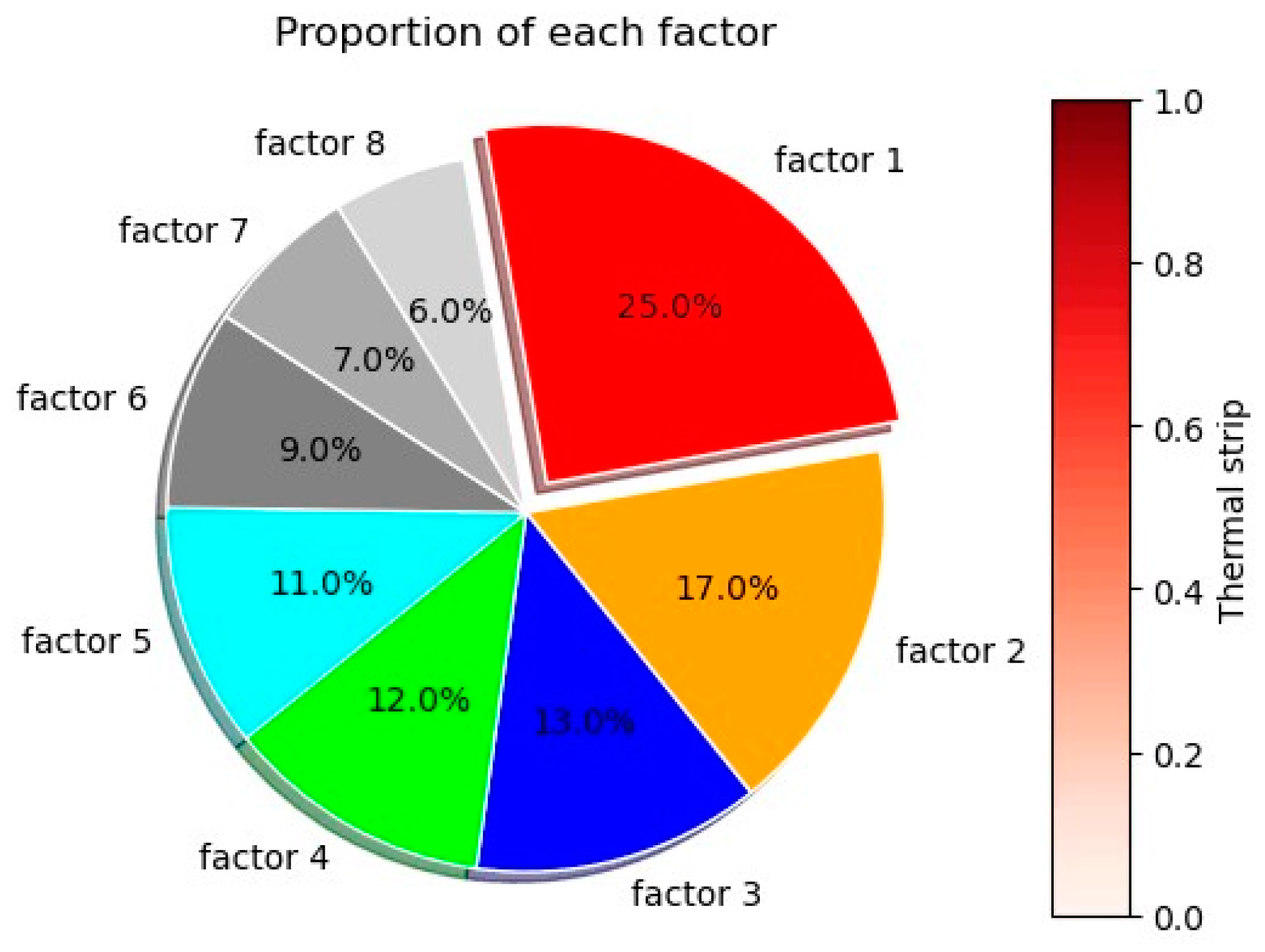

- (1) In this paper, we obtain eight core influence factors through double dimensionality reduction that combines systematic clustering with exploratory factor analysis. This is more reasonable, and better isolates the most important influence factors, than the approach of Ye [22], who selects the core influence factors of mobile users in Wuhan through correlation. Core factor 1 has the highest weight, 24.952%, i.e., the status quo of indoor voice problems has the greatest influence. Factor 1 comprises seven core influencing factors. Therefore, mobile operators that want to improve user satisfaction need to improve these seven factors: whether network problems were encountered, residential district, subway, no mobile phone signal, sudden interruption during the call, noisy, inaudible or intermittent calls, and one party not being heard during the call.

- (2) General machine learning prediction algorithms have an accuracy of about 70% [23,40,41], whereas the prediction accuracy of this study reaches 99.96%. Highly accurate satisfaction prediction can help operators adjust their operational strategies more precisely and thereby improve their market competitiveness. In addition, improved user satisfaction can help promote the development of the communication industry, national informatization and economic growth.

- (3) Although this study is based on data from Chinese operators, it can be generalized to a certain extent to foreign operators or to other related satisfaction rating studies. However, factors such as culture, social background, level of economic development, and laws and regulations differ between countries and regions and will affect the user satisfaction evaluation system. Corresponding adjustments are therefore necessary when applying the research results to other countries or regions. To apply them to Amazon’s mobile marketplace in the UK, for example, the following areas can be explored further:

  - ① Cultural factors are crucial to user satisfaction. The UK and China have different cultural backgrounds that influence values, socialization and communication habits. Amazon, as a multinational company, needs to adapt to the cultural expectations of UK users. UK users may value privacy more and have different attitudes toward data use and sharing. It is therefore important to understand the cultural characteristics of UK users so that the evaluation of user satisfaction accurately reflects their needs and expectations.

  - ② Economic factors are also important. The economic level, spending power and shopping habits in the UK differ from those in China, which affects user demand for mobile services and satisfaction levels. Amazon’s pricing strategy and package selection in the UK market must take into account the purchasing power and preferences of UK users. Economic factors therefore need to be analyzed thoroughly in the rating system so that it reflects user evaluation and satisfaction more accurately.

  - ③ Regulatory differences also need to be taken into account. The UK and China have different privacy and user rights regulations. Amazon’s mobile services in the UK market must comply with local laws on data processing and user privacy. This has implications for service design, data collection and the user interface.

  Considering cultural, economic and regulatory factors together will help to better understand the scope and limitations of the findings. Such in-depth research can provide guidance to multinational organizations seeking high user satisfaction with services and products in diverse environments.

- (4) At the same time, data collected in a real communication environment can cover hundreds of millions of users, and the quality-of-experience influencing factors and behavioral characteristics recorded for each user are ultra-high-dimensional. To cope with analyzing hundreds of millions of records, distributed and parallel processing algorithms, together with the corresponding software frameworks, can be adopted to reduce the running time of data mining algorithms and improve their efficiency.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hajar, M.A.; Alkahtani, A.A.; Ibrahim, D.N.; Al-Sharafi, M.A.; Alkawsi, G.; Iahad, N.A.; Darun, M.R.; Tiong, S.K. The effect of value innovation in the superior performance and sustainable growth of telecommunications sector: Mediation effect of customer satisfaction and loyalty. Sustainability 2022, 14, 6342.
2. Ramachandran, A.; Chidambaram, V. A review of customer satisfaction towards service quality of banking sector. Period. Polytech. Soc. Manag. Sci. 2012, 20, 71–79.
3. Devriendt, F.; Berrevoets, J.; Verbeke, W. Why you should stop predicting customer churn and start using uplift models. Inf. Sci. 2021, 548, 497–515.
4. England, R.; Owadally, I.; Wright, D. An Agent-Based Model of Motor Insurance Customer Behaviour in the UK with Word of Mouth. J. Artif. Soc. Soc. Simul. 2022, 25, 2.
5. Sweeney, J.; Payne, A.; Frow, P.; Liu, D. Customer advocacy: A distinctive form of word of mouth. J. Serv. Res. 2020, 23, 139–155.
6. Mukhsin, M.; Suryanto, T. The effect of sustainable supply chain management on company performance mediated by competitive advantage. Sustainability 2022, 14, 818.
7. Firestone, D.; Putnam, A.; Mundkur, S.; Chiou, D.; Dabagh, A.; Andrewartha, M.; Angepat, H.; Bhanu, V.; Caulfield, A.; Chung, E.; et al. Azure Accelerated Networking: SmartNICs in the Public Cloud. In Proceedings of the 15th USENIX Symposium on Networked Systems Design and Implementation, Renton, WA, USA, 9–11 April 2018; pp. 51–66.
8. Kothandaraman, P.; Wilson, D.T. The future of competition: Value-creating networks. Ind. Mark. Manag. 2001, 30, 379–389.
9. Sharma, G.; Lijuan, W. The effects of online service quality of e-commerce Websites on user satisfaction. Electron. Libr. 2015, 33, 468–485.
10. China Mobile Mobile Business Customers Decreased by 242,000 in April! A Number of Customers Reported that the Fee Was Deducted. Available online: https://baijiahao.baidu.com/s?id=1733339562685510245&wfr=spider&for=pc (accessed on 22 August 2023).
11. National Network Speed and Quality Report 2022. Available online: http://5g.speedtest.cn/article/51OpWlBZqWly4RL4AdJ6 (accessed on 22 August 2023).
12. Consumer Data Released by Mobile Analytics and Business Intelligence Firm GWS Shows that Amazon’s Market Position is under Threat. Available online: https://xueqiu.com/7949779229/255858474 (accessed on 22 August 2023).
13. Mobile Business Drags Japan’s Rakuten Deeper into the Loss Quagmire. 2023 Becomes the Key Year! Available online: https://baijiahao.baidu.com/s?id=1757887714493188586&wfr=spider&for=pc (accessed on 22 August 2023).
14. Asia Pacific to See Tenfold Rise in 5G Mobile Connections by 2030 as Digital Transformation Gathers Pace, GSMA Report Reveals. Available online: https://www.gsma.com/newsroom/press-release/the-mobile-economy-asia-pacific-report/ (accessed on 22 August 2023).
15. Mahmoud, M.A.; Hinson, R.E.; Anim, P.A. Service innovation and customer satisfaction: The role of customer value creation. Eur. J. Innov. Manag. 2018, 21, 402–422.
16. Wang, W.T.; Ou, W.M.; Chen, W.Y. The impact of inertia and user satisfaction on the continuance intentions to use mobile communication applications: A mobile service quality perspective. Int. J. Inf. Manag. 2019, 44, 178–193.
17. Hulland, J.; Baumgartner, H.; Smith, K.M. Marketing survey research best practices: Evidence and recommendations from a review of JAMS articles. J. Acad. Mark. Sci. 2018, 46, 92–108.
18. Chen, T.; Peng, L.; Yin, X.; Rong, J.; Yang, J.; Cong, G. Analysis of user satisfaction with online education platforms in China during the COVID-19 pandemic. Healthcare 2020, 8, 20.
19. Jiang, H.; Zhang, Y. An investigation of service quality, customer satisfaction and loyalty in China’s airline market. J. Air Transp. Manag. 2016, 57, 80–88.
20. Kurdi, B.; Alshurideh, M.; Alnaser, A. The impact of employee satisfaction on customer satisfaction: Theoretical and empirical underpinning. Manag. Sci. Lett. 2020, 10, 3561–3570.
21. Lin, H.; Zhang, M.; Gursoy, D. Impact of nonverbal customer-to-customer interactions on customer satisfaction and loyalty intentions. Int. J. Contemp. Hosp. Manag. 2020, 32, 1967–1985.
22. Ye, Z. Campus Mobile Communication Service Satisfaction Survey and Influencing Factors Analysis—Taking Seven Schools in Wuhan as an Example; Zhongnan University of Economics and Law: Wuhan, China, 2019.
23. Li, J. Research on Mobile Internet Satisfaction Evaluation Based on Decision Tree; Southwest Jiaotong University: Chengdu, China, 2018.
24. Ferreira, D.C.; Marques, R.C.; Nunes, A.M.; Figueira, J.R. Customers satisfaction in pediatric inpatient services: A multiple criteria satisfaction analysis. Socio-Econ. Plan. Sci. 2021, 78, 101036.
25. Lucini, F.R.; Tonetto, L.M.; Fogliatto, F.S.; Anzanello, M.J. Text mining approach to explore dimensions of airline customer satisfaction using online customer reviews. J. Air Transp. Manag. 2020, 83, 101760.
26. Khan, M.M.; Fasih, M. Impact of service quality on customer satisfaction and customer loyalty: Evidence from banking sector. Pak. J. Commer. Soc. Sci. (PJCSS) 2014, 8, 331–354.
27. Lee, M.; Cai, Y.M.; DeFranco, A.; Lee, J. Exploring influential factors affecting guest satisfaction: Big data and business analytics in consumer-generated reviews. J. Hosp. Tour. Technol. 2020, 11, 137–153.
28. Ezugwu, A.E.; Ikotun, A.M.; Oyelade, O.O.; Abualigah, L.; Agushaka, J.O.; Eke, C.I.; Akinyelu, A.A. A comprehensive survey of clustering algorithms: State-of-the-art machine learning applications, taxonomy, challenges, and future research prospects. Eng. Appl. Artif. Intell. 2022, 110, 104743.
29. Kabir, M.E.; Wang, H.; Bertino, E. Efficient systematic clustering method for k-anonymization. Acta Inf. 2011, 48, 51–66.
30. Schreiber, J.B. Issues and recommendations for exploratory factor analysis and principal component analysis. Res. Soc. Adm. Pharm. 2021, 17, 1004–1011.
31. Huang, D.; Jiang, F.; Li, K.; Tong, G.; Zhou, G. Scaled PCA: A new approach to dimension reduction. Manag. Sci. 2022, 68, 1678–1695.
32. Ma, L.; Xiao, H.; Tao, J.; Su, Z. Intelligent lithology classification method based on GBDT algorithm. Editor. Dep. Pet. Geol. Recovery Effic. 2022, 29, 21–29.
33. Xia, Y.; Zhao, J.; He, L.; Li, Y.; Niu, M. A novel tree-based dynamic heterogeneous ensemble method for credit scoring. Expert Syst. Appl. 2020, 159, 113615.
34. Guelman, L. Gradient boosting trees for auto insurance loss cost modeling and prediction. Expert Syst. Appl. 2012, 39, 3659–3667.
35. Wu, W.; Jiang, S.; Liu, R.; Jin, W.; Ma, C. Economic development, demographic characteristics, road network and traffic accidents in Zhongshan, China: Gradient boosting decision tree model. Transp. A Transp. Sci. 2020, 16, 359–387.
36. Xu, H. GBDT-LR: A Willingness Data Analysis and Prediction Model Based on Machine Learning. In Proceedings of the 2022 IEEE International Conference on Advances in Electrical Engineering and Computer Applications (AEECA), Dalian, China, 18–19 August 2023; pp. 396–401.
37. Wang, R.; Pei, X.; Zhu, J.; Zhang, Z.; Huang, X.; Zhai, J.; Zhang, F. Multivariable time series forecasting using model fusion. Inf. Sci. 2022, 585, 262–274.
38. Wu, M.L. Questionnaire Statistical Analysis Practice—SPSS Operation and Application; Chongqing University Press: Chongqing, China, 2010.
39. Cui, M. Introduction to the k-means clustering algorithm based on the elbow method. Account. Audit. Financ. 2020, 1, 5–8.
40. Yao, L.; Wang, Z.; Gu, H.; Zhao, X.; Chen, Y.; Liu, L. Prediction of Chinese clients’ satisfaction with psychotherapy by machine learning. Front. Psychiatry 2023, 14, 947081.
41. Wang, P.; Yang, H.; Hou, J.; Li, Q. A machine learning approach to primacy-peak-recency effect-based satisfaction prediction. Inf. Process. Manag. 2023, 60, 103196.

| User ID | Overall Satisfaction with Voice Calls | Whether Encountered Network Problems | Residential District | Offices | Colleges | Commercial Streets | Subways | Rural Areas | High-Speed Railways |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 10 | 1 | −1 | 2 | −1 | −1 | −1 | −1 | −1 |
| 2 | 2 | 1 | 1 | 2 | −1 | 4 | −1 | −1 | −1 |
| 3 | 10 | 1 | −1 | −1 | −1 | −1 | −1 | 6 | −1 |
| 4 | 6 | 1 | 1 | 2 | −1 | −1 | −1 | −1 | −1 |
| 5 | 5 | 1 | −1 | 2 | −1 | −1 | 5 | −1 | 7 |
| 6 | 7 | 1 | 1 | −1 | −1 | −1 | 5 | 6 | −1 |
| 7 | 8 | 1 | −1 | −1 | −1 | 4 | −1 | 6 | 7 |
| 8 | 10 | −1 | −1 | −1 | −1 | −1 | −1 | −1 | −1 |
| 9 | 10 | −1 | −1 | −1 | −1 | −1 | −1 | −1 | −1 |

| Category | Indicators |
|---|---|
| 1 | Residential district; offices; colleges and universities; commercial streets; subway; rural areas; high-speed rail; no mobile phone signal; can’t get through with signal; sudden interruption during the call; noise, inaudible, intermittent calls; crosstalk; one party cannot be heard during the call; number of times with poor MOS quality; number of failed connections and dropped calls; 4/5G users; voice mode; whether 4G network customer (local, excluding IoT); voice call duration (minutes); ARPU in the current month; MOU in the current month; MOU in the previous 3 months; whether 5G network customer; client star rating; amount in arrears in the current month; amount in arrears in the previous 3 months |
| 2 | Whether encountered network problems |
| 3 | Number of off-grid trips |
| 4 | Complaints about tariffs; caring for users or not; complaints about home broadband |
| 5 | ARPU in the first three months; ARPU (home broadband); whether or not the user has been to a business office; extra traffic (MB); extra traffic fee (yuan); percentage of voice from abroad; inter-provincial roaming duration (minutes); percentage of traffic from abroad; total GPRS traffic (KB); GPRS domestic roaming traffic (KB); whether or not the user is a real-name registered subscriber |

| Level 1 Indicators | Level 2 Indicators | Level 3 Indicators |
|---|---|---|
| User issues | User scenarios | Residential district |
| | | Office |
| | | Colleges and universities |
| | | Commercial street |
| | | Subway |
| | | Rural |
| | | High-speed rail |
| | Network problems during user calls | No mobile phone signal |
| | | Can’t get through with signal |
| | | Sudden interruption during the call |
| | | Noise, inaudible, intermittent calls |
| | | Crosstalk |
| | | One party cannot be heard during the call |
| Networks, costs and remaining issues | Number of problems | Whether encountered network problems |
| | | Off-network |
| | | Poor MOS quality |
| | | Number of missed calls |
| | Remaining issues | Voice mode |
| | | Client star rating |
| | | 4/5G users |
| | | Whether 4G network customer (local, excluding IoT) |
| | | Whether 5G network customer |
| | | Voice call duration (minutes) |
| | Cost issues | Amount in arrears for the current month |
| | | Amount in arrears for the previous 3 months |
| | | ARPU for the current month |
| | | MOU for the current month |
| | | MOU for the previous 3 months |

| Test | Statistic | Value |
|---|---|---|
| KMO test | KMO value | 0.807 |
| Bartlett sphericity test | Approximate chi-square | 285933.639 |
| | df | 465 |
| | p | 0.0002 |
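For orientation, the textbook form of Bartlett's test of sphericity is given below. It is not taken from the paper, and the symbols are introduced here for illustration: $n$ is the number of observations, $p$ the number of variables entering the factor analysis, and $R$ their correlation matrix.

```latex
\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln\lvert R\rvert ,
\qquad
df = \frac{p(p-1)}{2}
```

Under this standard formula, the reported $df = 465$ would correspond to $p = 31$ variables, since $31 \times 30 / 2 = 465$.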

| Factor | Characteristic Root (before rotation) | Variance Explained (%) (before rotation) | Cumulative Variance Explained (%) (before rotation) | Factor | Characteristic Root (after rotation) | Variance Explained (%) (after rotation) | Cumulative Variance Explained (%) (after rotation) |
|---|---|---|---|---|---|---|---|
| 1 | 6.457 | 20.828 | 30.828 | 1 | 485.446 | 15.66 | 25.66 |
| 2 | 3.586 | 11.567 | 42.395 | 2 | 293.767 | 9.476 | 35.136 |
| 3 | 2.604 | 8.4 | 50.796 | 3 | 286.541 | 9.243 | 44.379 |
| 4 | 1.74 | 5.613 | 56.409 | 4 | 264.02 | 8.517 | 52.896 |
| 5 | 1.691 | 5.454 | 61.863 | 5 | 168 | 5.419 | 58.315 |
| 6 | 1.185 | 3.823 | 65.686 | 6 | 162.372 | 5.238 | 63.553 |
| 7 | 1.113 | 3.591 | 69.277 | 7 | 157.675 | 5.086 | 68.639 |
| 8 | 1.08 | 3.484 | 75.761 | 8 | 127.704 | 4.119 | 74.759 |
| 9 | 0.976 | 3.147 | 78.908 | | | | |
| 10 | 0.959 | 3.094 | 80.002 | | | | |
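One plausible way to turn the post-rotation variance explained into factor weights is to normalize each factor's share by the total over the retained factors. The sketch below does this with the eight per-factor values from the table above. Whether the reported weights were computed exactly this way is an assumption, and `factor_weights` is a hypothetical helper name.

```python
# Post-rotation variance explained (%) for the eight retained factors,
# copied from the per-factor column of the table above.
rotated_variance = [15.66, 9.476, 9.243, 8.517, 5.419, 5.238, 5.086, 4.119]


def factor_weights(variance_explained):
    """Normalize per-factor variance explained so that the weights sum to 1."""
    total = sum(variance_explained)
    return [v / total for v in variance_explained]


weights = factor_weights(rotated_variance)
for index, weight in enumerate(weights, start=1):
    print(f"Factor {index}: {weight:.3%}")
```

Under this assumption Factor 1 receives a weight of roughly 24.95%, in line with the weight quoted for core factor 1 in the conclusions.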

| Factor | Nomenclature | Factor | Nomenclature |
|---|---|---|---|
| Factor 1 | Status of indoor voice problems | Factor 5 | Status of voice signal stability problems |
| Factor 2 | Status of voice problems in traffic | Factor 6 | Status of voice route independence issues |
| Factor 3 | Speech stability issues | Factor 7 | Status of voice service level issues |
| Factor 4 | Densely populated areas | Factor 8 | Status of voice mode issues |

| Core Impact Factor | Factor 1 Components (%) |
|---|---|
| Whether encountered network problems | −0.136 |
| Residential district | 0.111 |
| Subway | 0.102 |
| No mobile phone signal | 0.095 |
| Sudden interruption during the call | 0.101 |
| Noise, inaudible, intermittent calls | 0.105 |
| One party cannot be heard during the call | 0.106 |

| Parameter Name | Parameter Value |
|---|---|
| Data slicing | 1 |
| Data shuffling | No |
| Cross validation | No |
| Loss function | friedman_mse |
| Node splitting evaluation criterion | friedman_mse |
| Number of base learners | 600 |
| Learning rate | 0.3 |
| Proportion of sampling without replacement | 0.8 |
| Maximum proportion of features considered for splitting | None |
| Minimum number of samples for internal node splitting | 2 |
| Minimum number of samples in leaf nodes | 1 |
| Minimum weight of samples in leaf nodes | 0 |
| Maximum depth of the tree | 10 |
| Maximum number of leaf nodes | 50 |
| Threshold for impurity of node division | 0 |
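To make the roles of the learning rate and the number of base learners concrete, here is a deliberately minimal gradient boosting regressor written in plain Python. It is a teaching sketch, not the configuration in the table: it uses depth-1 trees (stumps) instead of trees of depth 10, a plain squared-error loss, no subsampling, and a tiny invented dataset. Only the learning rate of 0.3 is taken from the table; all function names are hypothetical.

```python
def fit_stump(xs, residuals):
    """Find the one-feature threshold split that minimizes squared error."""
    best = None
    for j in range(len(xs[0])):
        for threshold in sorted({row[j] for row in xs}):
            left = [r for row, r in zip(xs, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(xs, residuals) if row[j] > threshold]
            if not left or not right:
                continue  # a split must leave samples on both sides
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            sse = (sum((r - left_mean) ** 2 for r in left)
                   + sum((r - right_mean) ** 2 for r in right))
            if best is None or sse < best[0]:
                best = (sse, j, threshold, left_mean, right_mean)
    return best[1:] if best else None


def stump_predict(stump, row):
    j, threshold, left_mean, right_mean = stump
    return left_mean if row[j] <= threshold else right_mean


def fit_gbdt(xs, ys, n_estimators=50, learning_rate=0.3):
    """Boosting loop: each stump is fitted to the current residuals."""
    base = sum(ys) / len(ys)  # initial prediction: the mean target
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_estimators):
        # For squared-error loss the negative gradient is the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        if stump is None:
            break
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, row)
                 for p, row in zip(preds, xs)]
    return base, learning_rate, stumps


def predict_gbdt(model, row):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, row) for s in stumps)


# Invented toy data: two binary indicators coded as 1 / -1 and a score.
xs = [[1, -1], [1, 1], [-1, -1], [-1, 1]]
ys = [5, 3, 11, 9]
model = fit_gbdt(xs, ys)
train_mse = sum((predict_gbdt(model, row) - y) ** 2
                for row, y in zip(xs, ys)) / len(ys)
print(train_mse)
```

Each round shrinks the remaining residual by a factor governed by the learning rate, which is why a smaller rate generally needs more base learners. In practice a library implementation with deeper trees and subsampling would be used instead of this sketch.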

| | MSE | RMSE | MAE | MAPE | R² |
|---|---|---|---|---|---|
| reasonable dataset | 0.014 | 0.118 | 0.082 | 1.253 | 0.997 |
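The five evaluation metrics in the table follow standard definitions, sketched below in plain Python. The example values are invented, and reporting MAPE in percent is an assumption about the table's convention.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, RMSE, MAE, MAPE (in percent) and R² for paired values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    sse = sum(e * e for e in errors)
    mse = sse / n
    mae = sum(abs(e) for e in errors) / n
    # MAPE is undefined when a true value is 0; the toy data avoids that case.
    mape = 100 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1 - sse / ss_tot
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape, "R2": r2}


# Invented satisfaction scores and predictions, for illustration only.
metrics = regression_metrics([10, 2, 6, 8], [9.5, 2.5, 6.0, 8.5])
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Because RMSE is the square root of MSE, the two table entries can be cross-checked against each other: the square root of 0.014 is about 0.118, which matches the reported RMSE.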

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zeng, F.; He, Y.; Yang, C.; Hu, X.; Yuan, Y. Mobile Customer Satisfaction Scoring Research Based on Quadratic Dimension Reduction and Machine Learning Integration. Appl. Sci. 2023, 13, 9681. https://doi.org/10.3390/app13179681
