Abstract

Alzheimer’s disease (AD) is a progressive, irreversible neurodegenerative disorder for which early diagnosis is essential to timely treatment. Functional magnetic resonance imaging (fMRI) is a non-invasive neuroimaging technique for measuring brain activity. To improve the accuracy of AD diagnosis, we propose a new network architecture, the Dynamic Multi-Task Graph Isomorphism Network (DMT-GIN), which classifies AD from brain networks constructed from fMRI data. In DMT-GIN, we integrate an attention mechanism with the Graph Isomorphism Network (GIN) to capture both node features and topological structure. To further improve AD classification, we add gender classification and age prediction as auxiliary tasks alongside the primary AD classification task. The tasks share network parameters and are optimized jointly with adaptively adjusted weights: we adopt the GradNorm algorithm to dynamically balance gradient updates between tasks. On the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database, DMT-GIN outperforms existing baseline methods across multiple metrics, reaching a prediction accuracy of 90.44%. These results indicate that DMT-GIN effectively captures brain network features and can serve as a useful auxiliary tool for the early diagnosis of AD.

1. Introduction

Alzheimer’s disease (AD) is described as a fatal degenerative dementia [1]. It impairs patients’ memory, thinking, and communication abilities and accounts for 60% to 70% of progressive cognitive impairment cases in the elderly [2]. Approximately 50 million people worldwide currently live with AD and related dementias, and, owing to rising life expectancy, this number is expected to reach 139 million by 2050 [3,4], with significant consequences for society, for patients, and for their families. AD progresses slowly, and there is still no effective treatment to prevent, halt, or reverse it [5]. In this context, artificial intelligence is emerging as a promising tool, not only for improving the efficiency and effectiveness of healthcare services but also for providing clinicians with better decision strategies [6]. In early diagnosis specifically, fMRI and deep learning methods play a critical role [7]. Functional MRI is a neuroimaging technique that uses changes in cerebral blood flow to visualize brain activity [8]. It has been widely used for the automated diagnosis of brain disorders and provides a rich source of information about the brain’s functional connectivity. Recent studies suggest that functional connectivity changes extensively in AD, which offers important clues for understanding the disease mechanism and for searching for effective treatments. However, diagnostic accuracy remains a challenge, and non-invasive biomarkers are needed that can detect prodromal or early pathophysiological changes of AD. The blood oxygen level-dependent (BOLD) signal in fMRI, which reflects underlying vascular and respiratory factors in the brain, has recently been investigated as a potential AD biomarker.
The variability of the BOLD signal, especially at cardiorespiratory frequencies, has been found to increase in AD patients and may be associated with impairment of the glymphatic clearance system, which removes soluble proteins and metabolites from the central nervous system. Investigating BOLD signal variability could therefore provide important insights into the pathophysiological changes of AD and contribute to the development of non-invasive biomarkers for early diagnosis and effective treatment. Tuovinen et al. [9] provide evidence that BOLD signal variability measured with fMRI can serve as a non-invasive, highly sensitive biomarker for diagnosing incipient AD, which could support the development of effective treatments and early interventions.

The data generated by fMRI are typically images that represent the signal intensity of brain regions at successive time points. These images are commonly aligned to a standard brain-region template and converted into adjacency matrices, in which each value encodes the connection strength between the corresponding pair of brain regions. Deep learning methods such as CNNs have shown promising classification results on such matrices. However, the convolution and pooling operations of CNNs rely on the local similarity of Euclidean, grid-structured data, whereas brain networks represented by adjacency matrices are non-Euclidean because the underlying connectivity patterns are complex, irregular, and high-dimensional. As a result, CNNs cannot effectively capture all of their properties. Graph Neural Networks (GNNs), in contrast, are designed for such structures. A GNN operates directly on the graph and aggregates, or pools, information from each node’s neighbors (the nodes directly connected to it) to form a new representation of the node that encodes its local neighborhood. GNNs are therefore well suited to non-Euclidean data and have recently been widely used for brain network classification. However, many common GNNs have limited representational power and cannot distinguish certain simple graph structures [10], and they struggle to explain their classification results in a neuroscientifically interpretable manner [11]. The Graph Isomorphism Network (GIN), a GNN variant introduced by Xu et al. [10], addresses the first of these limitations and has demonstrated superior performance in graph representation learning.
GIN improves on traditional GNNs by better capturing the topological information in graph data. Its update rule sums the features of a node’s neighbors together with the node’s own features (scaled by a learnable factor) and passes the result through a multilayer perceptron, which allows the aggregation to be injective over neighborhoods. As a result, GIN can distinguish graph structures that many other GNNs cannot, which is crucial in tasks where the detailed structure of, and relationships between, nodes matter. More precisely, GIN is provably as expressive as the Weisfeiler–Lehman (WL) graph isomorphism test [10]: isomorphic graphs always receive the same representation, and any pair of non-isomorphic graphs that the WL test distinguishes receives different representations. This property underlies its success in chemical compound classification, social network analysis, and other applications where subtle variations in graph structure have significant effects. GIN can be further strengthened by combining it with multi-task learning (MTL) [12], a machine learning approach that improves performance by learning multiple related tasks simultaneously. Unlike learning each task independently, MTL lets a model share knowledge across tasks, which helps it learn general features and patterns and thereby improves generalization. In our setting, incorporating MTL into the GIN model lets it learn not only from the graph structure but also from the related tasks of age prediction and gender classification, which can give a more holistic view of AD and improve its classification and diagnosis. Neuroimaging research has shown that age and gender are related to the occurrence and progression of AD.
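The role of the aggregation function in GIN’s expressiveness can be seen on a toy example. The NumPy sketch below aggregates neighbor features through the adjacency matrix; the helper `aggregate` and the two toy star graphs are hypothetical and chosen only for illustration. With constant node features, mean aggregation assigns the centers of a two-leaf star and a one-leaf star the same value, whereas sum aggregation, as used in GIN, tells them apart.

```python
import numpy as np


def aggregate(adj: np.ndarray, h: np.ndarray, how: str = "sum") -> np.ndarray:
    """One round of neighbor aggregation; row v combines the features of v's neighbors."""
    summed = adj @ h  # row v = sum of the feature vectors of v's neighbors
    if how == "sum":
        return summed
    if how == "mean":
        deg = adj.sum(axis=1, keepdims=True)
        return summed / np.maximum(deg, 1)  # guard against isolated nodes
    raise ValueError(f"unknown aggregation: {how}")


# Star with two leaves vs. star with one leaf; node 0 is the center, all features equal 1.
star_two_leaves = np.array([[0, 1, 1], [1, 0, 0], [1, 0, 0]], dtype=float)
star_one_leaf = np.array([[0, 1], [1, 0]], dtype=float)
h_a, h_b = np.ones((3, 1)), np.ones((2, 1))

mean_a = aggregate(star_two_leaves, h_a, "mean")[0, 0]
mean_b = aggregate(star_one_leaf, h_b, "mean")[0, 0]
sum_a = aggregate(star_two_leaves, h_a, "sum")[0, 0]
sum_b = aggregate(star_one_leaf, h_b, "sum")[0, 0]
print(mean_a, mean_b)  # identical: mean aggregation cannot tell the neighborhoods apart
print(sum_a, sum_b)    # different: sum aggregation distinguishes them
```

The same argument extends to deeper layers: because the MLP in GIN is applied to a sum rather than a mean or maximum, neighborhoods that differ only in multiplicity remain distinguishable.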
Age is the greatest risk factor for AD, and incidence increases markedly with age [13]. Gender differences may also affect brain structure and function, thereby influencing the incidence and progression of AD [14]. We therefore incorporate age prediction and gender classification as auxiliary tasks, expecting them to improve AD classification and diagnosis. Although multi-task learning [12] has been successfully applied to AD classification, previous studies have not adequately addressed task conflicts during training, particularly dynamic weight balancing [15]. Different tasks may push the shared model parameters in different directions, which causes interference between tasks and degrades model performance.

The core motivation of this work is to develop an efficient AD classification method that can support early diagnosis. We identify limitations of traditional machine learning methods and graph neural networks in processing graph data, as well as the weight-imbalance problem that can arise when multi-task learning is used for AD classification. We also emphasize model interpretability, which is of paramount importance in neuroscience because it helps reveal the underlying mechanisms of the disease and provides clues for finding effective treatments.

We develop a new Dynamic Multi-Task Graph Isomorphism Network (DMT-GIN) for AD classification. Its main component is a Graph Isomorphism Network (GIN) whose primary task is to classify AD from fMRI data. By using an improved GIN to analyze the brain functional network and learn node features from adjacency matrices, we significantly enhance the network’s representational capability. Age prediction and gender classification are incorporated as auxiliary tasks to guide AD classification, and the GradNorm algorithm dynamically adjusts the task weights during training, which accelerates learning, reduces interference between tasks, and improves learning efficiency.

The remainder of this paper is organized as follows. Section 2 reviews related work. Section 3 introduces the proposed method, including the main model, the network architecture, and the integration of multi-task learning. Section 4 compares our model with other models and presents ablation experiments on the model itself. Section 5 offers a discussion and conclusion.

2. Related Work

This section reviews deep learning-based AD classification methods and applications of multi-task learning to AD classification.

2.1. Deep Learning-Based Alzheimer’s Disease Classification Methods

2.1.1. CNN-Based Methods

CNN-based methods for AD classification usually preprocess fMRI data and then, in each convolutional layer, multiply image patches element-wise by convolution kernels and sum the results to extract features and produce classification predictions. For example, Saman Sarraf et al. [7] used the LeNet-5 model to extract shift- and scale-invariant features before classifying AD, achieving an accuracy of 96.85% on their dataset. This method opened new avenues for medical image analysis, although it may not scale well to more complex problems. Yosra Kazemi et al. [16] used the AlexNet architecture to extract and learn features from low to high levels, improving AD classification performance; compared with previous studies, this method classified all stages of AD, reaching an accuracy of 94.97%. R.R. Janghel et al. [17] converted 3D images into scaled 2D images before feature extraction and used the VGGNet architecture to classify fMRI images; by preprocessing the image dataset before feeding it to the CNN, this study raised the average classification accuracy to 99.95%, higher than other CNN-based experiments. Sima Ghafoori et al. [18] proposed a three-dimensional CNN that combines rs-fMRI, clinical assessment results, and demographic information to predict conversion from MCI to AD within an average of 5 years; the method predicted MCI-to-AD prognosis with high accuracy, demonstrating the potential of CNNs for this problem. However, because adjacency matrices are non-Euclidean data, CNNs cannot handle them effectively or capture all of their properties.
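To make the convolution step concrete, and to illustrate one reason why grid convolutions fit adjacency matrices poorly, the NumPy sketch below computes a "valid" 2D convolution the way deep learning frameworks do (cross-correlation, without flipping the kernel). The function name `conv2d_valid` and the toy path graph are illustrative assumptions. Relabeling the nodes of the same graph permutes the rows and columns of its adjacency matrix and changes the feature map, even though the graph itself is unchanged.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2D cross-correlation: slide the kernel, multiply element-wise, and sum."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# Adjacency matrix of the path graph 0-1-2-3.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
# The same graph with its nodes listed in a different order (a relabeling).
perm = [0, 2, 1, 3]
adj_relabeled = adj[np.ix_(perm, perm)]

kernel = np.ones((2, 2))
fmap = conv2d_valid(adj, kernel)
fmap_relabeled = conv2d_valid(adj_relabeled, kernel)
print(fmap)
print(fmap_relabeled)  # a different feature map for the same graph
```

Both matrices describe a four-node path with three edges, yet the convolution responds differently because it treats neighboring rows and columns as spatially adjacent, which the arbitrary ordering of brain regions does not guarantee.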

2.1.2. GNN-Based Methods

GNN-based methods start from brain functional networks stored as binary matrices, treating each brain region as a node, and feed the resulting graphs into GNNs. For example, Xiaoxiao Li et al. [19] proposed an interpretable GNN framework with a new regularized pooling layer, which computes node pooling scores to identify the regions of interest (ROIs) that matter most for AD classification; a distinguishing feature of this method is that it encourages the selection of reasonable ROIs. Chunde Yang et al. [20] introduced a method called PSCR, which feeds transformed brain maps into a graph attention network for fMRI classification and achieves a maximum accuracy of 72.4%, a sensitivity of 71.15%, and a specificity of 75.00%. Yun Zhu et al. [21] proposed a structure- and feature-based Graph U-Net that integrates a GNN into the high-performance U-Net architecture and considers both node structure and node features in its pooling layer to improve AD classification accuracy. Compared with previous methods, this approach excels at extracting both node-level (low-level) and structural (high-level) information from graphs. The low-level information consists of the direct attributes of individual nodes and connections derived from fMRI, whereas the high-level information encapsulates the overall structure and connection patterns of the graph, which are captured by the adaptive pooling layer of the structure- and feature-based Graph U-Net. With these optimizations, the model reaches an average classification accuracy of 83.69%. Xuegang Song et al. [22] introduced a dual-modality fusion brain connectivity network that combines fMRI and diffusion tensor imaging, and proposed three mechanisms within the GCN to improve classifier performance.
However, these GNNs have limited representational power and cannot distinguish certain non-isomorphic graph structures, which leaves room for improvement.

2.1.3. Other Deep Learning Methods

Besides CNN- and GNN-based methods, other deep learning methods have also been applied to AD diagnosis; this section discusses some of them. Kai Lin et al. [23] proposed a learning framework based on recurrent neural networks (RNNs) that extracts sequential features from dynamic functional connectivity (FC) networks built from rs-fMRI data for brain disease classification. Unlike CNN approaches, this method models temporal dynamics across time steps and achieves an accuracy of 92.8% for AD classification. Abdulaziz Alorf et al. [24] used stacked sparse autoencoders to classify preprocessed rs-fMRI images and obtained an accuracy of 77.13% under k-fold cross-validation. This method analyzes brain regions through the learned network weights, but the feature extraction and dimensionality reduction performance of autoencoders may be limited by training data quality and model architecture. Zhengwang Xia et al. [25] proposed a framework based on Finer-DBN which, unlike traditional CNNs, judges whether a sample is healthy directly from the difference between reconstructed and original data, without testing multiple models, and achieves an overall accuracy of 78.36% and an F1 score of 76%. However, the deep belief network (DBN) may require large amounts of training data and computation and is sensitive to hyperparameter choices; furthermore, the Finer-DBN framework may not fully exploit multimodal data and spatial information.

2.2. Application of Multi-Task Learning in AD Classification

Multi-task learning is a machine learning paradigm that learns multiple related tasks simultaneously, improving each task’s performance by sharing information and latent knowledge. It has been widely applied in neuroimaging analysis. For example, Wei et al. [26] proposed a multimodal AD classification method that treats feature selection for each imaging modality as a separate task, allowing information to be shared across tasks; this method achieved an accuracy of 92.51% for AD classification. Nianyin et al. [27] introduced a multi-task learning algorithm based on a deep belief network and used multi-task feature selection to select a feature set relevant to all tasks. By sharing weights across the AD vs. HC, pMCI vs. HC, and AD vs. sMCI tasks, it achieved accuracies above 95% on all tasks. Xiaodan et al. [15] proposed a new GCN that uses demographic information as additional outputs: two structurally similar auxiliary networks with different parameters predict gender and age while sharing parameters with the main network. The resulting classifier reached an accuracy of 90.0%, a sensitivity of 91.7%, and a specificity of 88.6%. These studies show that multi-task learning can exploit latent correlated information in neuroimaging data to improve model performance. However, previous research has not adequately addressed how to balance task weights during training: if the weights are poorly allocated, some tasks may be over-optimized while others are degraded.

We propose a novel DMT-GIN model that captures local and global features of brain networks through graph-based layers and, in our experiments, outperforms CNN-based methods. Because it operates directly on graphs, this architecture avoids the limitations of CNNs on non-Euclidean data. Compared with other GNN-based methods, DMT-GIN builds on GIN, which has greater representational power and can distinguish non-isomorphic graph structures that weaker GNNs cannot. Compared with other deep learning methods such as RNNs and autoencoders, DMT-GIN efficiently extracts local and global features directly from graph data. For fMRI-based AD classification, our dynamic multi-task learning strategy mitigates task conflicts during training and balances the weights between tasks. This approach distinguishes DMT-GIN from traditional multi-task learning strategies and contributes to its performance in AD classification.

3. Methods

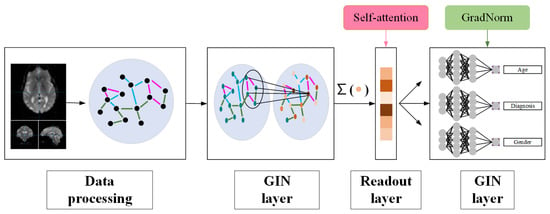

We propose a new DMT-GIN model for classifying fMRI data of AD patients, as depicted in Figure 1. The model’s input is brain network data derived from preprocessed fMRI. The primary task is AD classification, and the auxiliary tasks are age prediction and gender classification. The main and auxiliary tasks share network parameters so that the auxiliary tasks guide the main task, and the task weights are dynamically balanced with the GradNorm algorithm. This section details our classification method, covering data preprocessing, the graph isomorphism network architecture, and multi-task integration.

Figure 1.

Framework diagram of the DMT-GIN approach, including the data processing, GIN layer, and FC layer parts.

3.1. Data Preprocessing

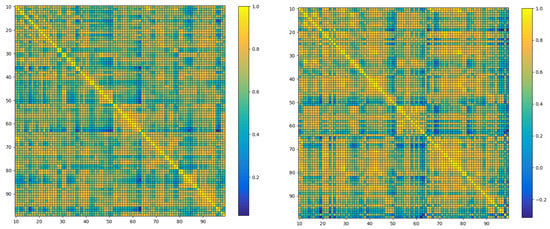

Our aim is to explore the relationship between the human brain functional network and AD using fMRI data. To this end, we applied a series of preprocessing steps to the raw data. First, slice-timing correction [28] was performed: the data were resampled so that the data acquired at different time points within each volume are aligned to a common time scale. Next, head motion correction was applied to remove the displacement and deformation caused by head movement [29], so that the resulting images more accurately reflect regional brain activity. The images were then spatially aligned to eliminate differences in spatial position across data sources. Finally, spatial smoothing (filtering) was applied to suppress noise and extreme values, making the data easier to analyze and interpret. Each preprocessed fMRI scan was then parcellated with the AAL standard template [30] into 116 brain regions, of which the 90 cerebral regions were selected for subsequent analysis. Pearson correlation coefficients were computed between these regions, yielding a 90 × 90 correlation matrix per scan, shown as heatmaps in Figure 2. To obtain the brain functional network, a soft thresholding method [31] was used to binarize each correlation matrix: for every region, the five most strongly correlated regions were kept as its edges. In total, 312 brain functional networks were obtained and stored as binary matrices. Six node features were then extracted from each network: degree centrality, betweenness centrality, clustering coefficient, eigenvector centrality, closeness centrality, and PageRank centrality.
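A minimal NumPy sketch of the correlation and top-5 binarization steps follows. It assumes that the regional BOLD time series are already available as a (time points × 116) array in AAL label order, whose first 90 labels are the cerebral regions; it ranks candidate neighbors by signed Pearson correlation and symmetrizes the result so that the graph is undirected. These choices, the synthetic input, and the helper name `build_brain_network` are illustrative assumptions rather than the authors’ code.

```python
import numpy as np


def build_brain_network(ts_aal116: np.ndarray, k: int = 5, n_cerebral: int = 90):
    """ts_aal116: (n_timepoints, 116) regional BOLD signals in AAL label order (assumed)."""
    ts = ts_aal116[:, :n_cerebral]                # keep the cerebral regions only
    corr = np.corrcoef(ts, rowvar=False)          # (90, 90) Pearson correlation matrix
    ranking = corr.copy()
    np.fill_diagonal(ranking, -np.inf)            # never select a region as its own neighbor
    top_k = np.argsort(ranking, axis=1)[:, -k:]   # k most strongly correlated regions per row
    n = corr.shape[0]
    adj = np.zeros((n, n), dtype=np.int8)
    adj[np.repeat(np.arange(n), k), top_k.ravel()] = 1
    adj = np.maximum(adj, adj.T)                  # symmetrize: keep an edge if either end chose it
    return corr, adj


rng = np.random.default_rng(0)
bold = rng.standard_normal((140, 116))            # synthetic stand-in: 140 volumes, 116 regions
corr, adj = build_brain_network(bold)
print(corr.shape, adj.shape, adj.sum(axis=1).min())  # every region keeps at least 5 edges
```

Because of the symmetrization, some regions end up with more than five edges; keeping only mutual top-5 pairs (`np.minimum` instead of `np.maximum`) would be an alternative design, again an assumption since the text does not specify it.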
The continuous features were discretized by equal-interval (equal-width) binning to improve the stability of the GIN model and reduce the risk of overfitting. The brain functional networks and their node features obtained in this preprocessing step were used in the subsequent experiments.
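The six node features and the equal-interval binning can be sketched with NetworkX and NumPy as below. The number of bins (10) is an assumption because the text does not state it, and binning is done per feature column over whatever array is passed in; whether binning was applied per graph or over the whole dataset is not specified. `node_features` and `equal_width_bins` are hypothetical helper names, and recent NetworkX versions need SciPy installed for `nx.pagerank`.

```python
import networkx as nx
import numpy as np


def node_features(adj: np.ndarray) -> np.ndarray:
    """Return an (n_nodes, 6) array of graph-theoretic node features."""
    g = nx.from_numpy_array(adj)
    measures = [
        nx.degree_centrality(g),
        nx.betweenness_centrality(g),
        nx.clustering(g),
        nx.eigenvector_centrality(g, max_iter=1000),
        nx.closeness_centrality(g),
        nx.pagerank(g),
    ]
    return np.array([[m[v] for m in measures] for v in g.nodes()])


def equal_width_bins(x: np.ndarray, n_bins: int = 10) -> np.ndarray:
    """Discretize each column into n_bins equal-width intervals, coded 0..n_bins-1."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    width = np.where(hi > lo, (hi - lo) / n_bins, 1.0)  # a constant column maps to a single bin
    codes = np.floor((x - lo) / width).astype(int)
    return np.clip(codes, 0, n_bins - 1)                # the column maximum falls in the last bin


# Toy graph: a 6-node ring with one chord between nodes 0 and 3.
ring = np.zeros((6, 6))
for i in range(6):
    ring[i, (i + 1) % 6] = ring[(i + 1) % 6, i] = 1
ring[0, 3] = ring[3, 0] = 1
features = node_features(ring)
binned = equal_width_bins(features)
print(features.shape, binned.min(), binned.max())
```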

Figure 2.

Heatmaps of the brain network represented as 90 × 90 correlation matrices, showing the functional connectivity strength between brain regions. Left: a cognitively normal subject; right: an AD patient. The differences between the two heatmaps highlight variations in connectivity strength between brain regions in AD.

Furthermore, to better explore the relationship between human cognition and the brain functional network, the AGE and PTGENDER fields of ADNI’s clinical information were used as age and gender labels [32]. Age labels were normalized to the [0, 1] range, and gender labels were binary-encoded, with 0 for male and 1 for female.

Finally, the preprocessed data were expanded fivefold by bootstrap resampling [33] to meet the data volume requirements of the subsequent experiments.
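The label encoding and the fivefold bootstrap expansion can be sketched as follows. Min-max scaling for age and the literal PTGENDER values "Male" and "Female" are assumptions (the text only says that ages were normalized to [0, 1]); the gender coding follows the 0 = male, 1 = female convention stated above. The bootstrap draws sample indices uniformly with replacement; the helper names are hypothetical.

```python
import numpy as np

GENDER_CODE = {"Male": 0, "Female": 1}  # assumed PTGENDER values; 0 = male, 1 = female


def encode_labels(ages, genders):
    """Min-max scale ages to [0, 1] and binary-encode gender strings."""
    ages = np.asarray(ages, dtype=float)
    age_scaled = (ages - ages.min()) / (ages.max() - ages.min())
    gender = np.array([GENDER_CODE[g] for g in genders], dtype=np.int64)
    return age_scaled, gender


def bootstrap_indices(n_samples: int, factor: int = 5, seed: int = 0) -> np.ndarray:
    """Draw factor * n_samples indices uniformly with replacement."""
    rng = np.random.default_rng(seed)
    return rng.integers(0, n_samples, size=factor * n_samples)


age_scaled, gender = encode_labels([62.0, 74.5, 91.0], ["Female", "Male", "Female"])
idx = bootstrap_indices(n_samples=3)
print(age_scaled, gender, idx.shape)
```

The resampled dataset is then obtained by indexing every per-subject array (networks, node features, labels) with the same `idx`, so that each drawn sample keeps its own labels.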

3.2. GIN Architecture

The GIN architecture consists of two GIN layers, two ReLU layers, two batch normalization layers, and a readout layer, as illustrated in Figure 3. Its input is the graph representation of the brain network obtained in the preprocessing step. The graph isomorphism layer is the key component of this architecture and captures both the topological structure and the node features of the brain network. In each GIN layer, the features of each node are aggregated with those of its neighbors to obtain a new node representation, which is then passed through a multilayer perceptron (MLP):

$$h_v^{(k)} = \mathrm{MLP}^{(k)}\left( \left(1 + \epsilon^{(k)}\right) h_v^{(k-1)} + \sum_{u \in \mathcal{N}(v)} h_u^{(k-1)} \right)$$

Figure 3.

GIN framework diagram.

In the equation, k denotes the k-th update, v the current node, u a neighboring node, $\mathcal{N}(v)$ the set of neighbors of v, $h_v^{(k)}$ the feature vector of node v after the k-th update, MLP the multilayer perceptron, and $\epsilon^{(k)}$ a learnable parameter. The updated node features are passed to the next GIN layer for further aggregation and updating. After obtaining the updated node features, we use an attention mechanism in the readout layer instead of ordinary global pooling (such as sum or max pooling) to better capture the real-world importance of each node [34]. The attention mechanism filters out unnecessary or less relevant information and focuses on the most significant nodes [35]. In the self-attention mechanism we adopt, every element of the input participates in the weight calculation [36], which captures correlations and dependencies between nodes more effectively. In our attention-based pooling operation, we first use a graph convolution to compute an attention score for each node:

$$z_i = \Theta_1 x_i + \Theta_2 \sum_{j \in \mathcal{N}(i)} e_{j,i}\, x_j$$

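In the equation, i denotes a node, $x_i$ its feature vector, $z_i$ its attention score, $\Theta_1$ and $\Theta_2$ learnable parameters, $\mathcal{N}(i)$ the set of nodes adjacent to node i, and $e_{j,i}$ the weight of the edge from source node j to target node i. Using the attention scores, we apply a top-k selection to decide which nodes are retained in the pooling result and which are discarded:

$$\mathrm{idx} = \mathrm{top}_k\left(z, \lceil \rho N \rceil\right), \qquad X' = X_{\mathrm{idx},:}, \qquad A' = A_{\mathrm{idx},\mathrm{idx}}$$

where z is the vector of attention scores, N the number of nodes, $\rho \in (0, 1]$ the pooling ratio, idx the indices of the retained nodes, and X and A the node feature matrix and the adjacency matrix.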

After obtaining the graph representation from the readout layer, we feed it into a fully connected layer followed by a ReLU activation function to increase nonlinearity [37]:

$$y = \mathrm{ReLU}(Wx + b)$$

In the equation, W is the weight matrix of the linear transformation, b the bias vector, x the input, and y the output. Finally, for the classification tasks, the output of the fully connected layer is passed through a sigmoid function that maps it to the range (0, 1); for the regression task, no sigmoid is applied and the prediction is output directly.
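For readers who want to trace the architecture in code, the sketch below implements the layers above in plain PyTorch on a single dense graph, without PyTorch Geometric. It is an illustration under stated assumptions, not the authors’ implementation: the hidden size of 64, the layer order (GIN, then batch normalization, then ReLU), the pooling ratio of 0.5, the mean readout over retained nodes, and the tanh gating of retained features (borrowed from SAGPool-style pooling so that the score parameters receive gradients) are all choices made here. The class names are hypothetical.

```python
import math

import torch
from torch import nn


class DenseGINLayer(nn.Module):
    """h_v' = MLP((1 + eps) * h_v + sum of neighbor features), on a dense adjacency matrix."""

    def __init__(self, in_dim: int, out_dim: int):
        super().__init__()
        self.eps = nn.Parameter(torch.zeros(1))  # learnable epsilon
        self.mlp = nn.Sequential(nn.Linear(in_dim, out_dim), nn.ReLU(), nn.Linear(out_dim, out_dim))

    def forward(self, x: torch.Tensor, adj: torch.Tensor) -> torch.Tensor:
        return self.mlp((1 + self.eps) * x + adj @ x)


class ScoreTopKPool(nn.Module):
    """Scores z_i = Theta_1 x_i + Theta_2 * sum_j e_ji x_j, then keep the top-k nodes."""

    def __init__(self, dim: int, ratio: float = 0.5):
        super().__init__()
        self.theta1 = nn.Linear(dim, 1)
        self.theta2 = nn.Linear(dim, 1, bias=False)
        self.ratio = ratio

    def forward(self, x: torch.Tensor, adj: torch.Tensor):
        # adj[j, i] is the weight of edge j -> i, so adj.T @ x sums incoming neighbor features.
        score = (self.theta1(x) + self.theta2(adj.T @ x)).squeeze(-1)
        k = max(1, math.ceil(self.ratio * x.size(0)))
        idx = torch.topk(score, k).indices
        x_kept = x[idx] * torch.tanh(score[idx]).unsqueeze(-1)  # gating lets scores receive gradients
        return x_kept, adj[idx][:, idx]


class GINBackbone(nn.Module):
    def __init__(self, in_dim: int, hidden: int = 64, ratio: float = 0.5):
        super().__init__()
        self.gin1, self.bn1 = DenseGINLayer(in_dim, hidden), nn.BatchNorm1d(hidden)
        self.gin2, self.bn2 = DenseGINLayer(hidden, hidden), nn.BatchNorm1d(hidden)
        self.pool = ScoreTopKPool(hidden, ratio)
        self.fc = nn.Linear(hidden, hidden)

    def forward(self, x: torch.Tensor, adj: torch.Tensor) -> torch.Tensor:
        x = torch.relu(self.bn1(self.gin1(x, adj)))
        x = torch.relu(self.bn2(self.gin2(x, adj)))
        x, _ = self.pool(x, adj)
        graph_repr = x.mean(dim=0)               # readout over the retained nodes
        return torch.relu(self.fc(graph_repr))   # y = ReLU(Wx + b)


torch.manual_seed(0)
n_regions = 90
adj = (torch.rand(n_regions, n_regions) < 0.06).float()
adj = torch.triu(adj, diagonal=1)
adj = adj + adj.T                                 # symmetric, no self-loops
x = torch.rand(n_regions, 6)                      # e.g. the six binned node features
model = GINBackbone(in_dim=6)
embedding = model(x, adj)
print(embedding.shape)
```

In practice PyTorch Geometric’s `GINConv` and `SAGPooling` layers provide batched, sparse versions of these operations; the dense form above is only meant to make the equations easy to follow.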

3.3. Multi-Task Integration

Multi-task learning (MTL) optimizes the objective functions of multiple related tasks simultaneously within a single model [38]. Compared with single-task learning, MTL can improve generalization and learning efficiency by exploiting the commonalities and differences between tasks, which reduces the risk of overfitting [39]. We use a multi-task auxiliary network with AD classification as the primary task and age and gender prediction as auxiliary tasks. Because the onset of AD is associated with age and gender [40], we expect the auxiliary tasks to help the primary task extract more effective features and tune the shared network parameters. Our model has three fully connected (FC) output layers, one per task. The first FC layer outputs the AD classification result, with binary labels: 0 for the cognitively normal (CN) group and 1 for the AD group; it thus separates the input data into two diagnostic categories. The second FC layer performs age regression and outputs a continuous value representing the individual’s predicted (normalized) age; this task is intended to capture how age-related changes relate to the disease. The third FC layer performs gender classification using the binary gender labels defined in Section 3.1; this task is intended to capture gender-based differences in the risk and progression of AD. Each task uses its own loss function. For AD classification and gender classification, we use the binary cross-entropy (CE) loss.
This loss is standard for binary classification because it measures how well a predicted probability between 0 and 1 matches the true label. For age regression, we use the mean squared error (MSE) loss, a common choice for regression that penalizes the squared difference between the actual and predicted ages. The three losses are combined by weighted summation, $L = \sum_{i} w_i L_i$, which balances each task’s contribution to the overall loss so that no single task overwhelms the others and skews learning. The model parameters are then updated by backpropagation on the combined loss, iteratively minimizing the total loss and thereby optimizing performance across all three tasks.
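The three task heads and their combined loss can be sketched in PyTorch as follows, assuming a batch of graph embeddings such as those produced by a GIN backbone. For numerical stability the sketch uses `binary_cross_entropy_with_logits`, which folds the sigmoid into the binary cross-entropy; this is mathematically equivalent to applying a sigmoid and then binary cross-entropy. The class name, embedding size, and random targets are illustrative assumptions, and the fixed weights in the usage example stand in for the GradNorm-adjusted weights.

```python
import torch
import torch.nn.functional as F
from torch import nn


class MultiTaskHeads(nn.Module):
    """Three FC heads on a shared graph embedding: AD logit, normalized age, gender logit."""

    def __init__(self, dim: int):
        super().__init__()
        self.ad = nn.Linear(dim, 1)
        self.age = nn.Linear(dim, 1)     # regression: no sigmoid
        self.gender = nn.Linear(dim, 1)

    def forward(self, g: torch.Tensor):
        return self.ad(g).squeeze(-1), self.age(g).squeeze(-1), self.gender(g).squeeze(-1)


def task_losses(outputs, targets) -> torch.Tensor:
    """Stack [BCE(AD), MSE(age), BCE(gender)] into a length-3 tensor."""
    ad_logit, age_pred, gender_logit = outputs
    ad_y, age_y, gender_y = targets
    return torch.stack([
        F.binary_cross_entropy_with_logits(ad_logit, ad_y),
        F.mse_loss(age_pred, age_y),
        F.binary_cross_entropy_with_logits(gender_logit, gender_y),
    ])


torch.manual_seed(0)
embeddings = torch.randn(8, 64, requires_grad=True)  # stand-in for a batch of 8 graph embeddings
heads = MultiTaskHeads(64)
targets = (torch.randint(0, 2, (8,)).float(), torch.rand(8), torch.randint(0, 2, (8,)).float())
losses = task_losses(heads(embeddings), targets)
weights = torch.ones(3)                                # w_i; GradNorm would adapt these
total = (weights * losses).sum()                       # L = sum_i w_i L_i
total.backward()                                       # gradients reach all heads and the embeddings
print(losses.detach(), total.item())
```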

To weight the task losses adaptively, we use the GradNorm algorithm, which dynamically adjusts each task’s weight by minimizing an auxiliary loss, the Grad Loss, that balances the gradient norms of the tasks. The core idea is to drive each task’s gradient norm toward a reference value so that no task dominates training. Specifically, given T tasks with loss functions $L_i(t)$, shared network parameters W, and task loss weights $w_i(t)$, we define the gradient norm of each task as:

$$G_W^{(i)}(t) = \left\lVert \nabla_W \, w_i(t) L_i(t) \right\rVert_2$$

A reference gradient norm is also defined as:

$$\bar{G}_W(t) \times \left[r_i(t)\right]^{\alpha}, \quad \bar{G}_W(t) = \frac{1}{T}\sum_{j=1}^{T} G_W^{(j)}(t), \quad \tilde{L}_i(t) = \frac{L_i(t)}{L_i(0)}, \quad r_i(t) = \frac{\tilde{L}_i(t)}{\frac{1}{T}\sum_{j=1}^{T} \tilde{L}_j(t)}$$

In the equation, $\bar{G}_W(t)$ is the average gradient norm over all tasks, $\tilde{L}_i(t)$ is the loss ratio of task i, $r_i(t)$ is the relative inverse training rate of task i at epoch t, and $\alpha$ is a hyperparameter; a larger $\alpha$ enforces stronger balancing of training rates. We then construct the Grad Loss [41], in which the reference term is treated as a constant:

$$L_{\mathrm{grad}}(t) = \sum_{i=1}^{T} \left| G_W^{(i)}(t) - \bar{G}_W(t) \times \left[r_i(t)\right]^{\alpha} \right|$$

The task loss weights are updated by gradient descent on the Grad Loss:

$$w_i(t+1) = w_i(t) - \lambda \frac{\partial L_{\mathrm{grad}}(t)}{\partial w_i(t)}$$

In the equation, $L_{\mathrm{grad}}$ is the Grad Loss and $\lambda$ is the learning rate. By minimizing the Grad Loss, GradNorm adaptively adjusts each task’s weight, preventing any single task from dominating training and improving multi-task learning performance.
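One GradNorm weight update, following the definitions of this section, can be sketched in PyTorch as follows. The function name, the defaults α = 1.5 and λ = 0.025, and the small floor applied before renormalization are illustrative assumptions. Renormalizing the weights so that they sum to T, and computing gradient norms on the last shared layer, follow the original GradNorm paper by Chen et al. (2018). The reference term is computed without gradient tracking so that it acts as a constant.

```python
import torch


def gradnorm_step(task_losses, initial_losses, weights, shared_param, alpha=1.5, lr=0.025):
    """Return updated task weights w_i(t+1) and the Grad Loss value for one step.

    task_losses: (T,) tensor still attached to the graph; initial_losses: (T,) values L_i(0);
    weights: (T,) leaf tensor with requires_grad=True; shared_param: last shared layer weight.
    """
    num_tasks = task_losses.numel()
    grad_norms = torch.stack([
        torch.autograd.grad(weights[i] * task_losses[i], shared_param,
                            retain_graph=True, create_graph=True)[0].norm(p=2)
        for i in range(num_tasks)
    ])                                                       # G_W^(i)(t)
    with torch.no_grad():                                   # reference term treated as a constant
        loss_ratio = task_losses / initial_losses           # L~_i(t)
        inverse_rate = loss_ratio / loss_ratio.mean()       # r_i(t)
        target = grad_norms.mean() * inverse_rate ** alpha  # G_bar_W(t) * r_i(t)^alpha
    grad_loss = (grad_norms - target).abs().sum()           # L_grad(t)
    weight_grad = torch.autograd.grad(grad_loss, weights, retain_graph=True)[0]
    with torch.no_grad():
        new_weights = (weights - lr * weight_grad).clamp_min(1e-6)
        new_weights = new_weights * num_tasks / new_weights.sum()  # keep sum_i w_i = T
    return new_weights.requires_grad_(True), grad_loss.detach()


# Usage on a toy shared layer with three regression heads.
torch.manual_seed(0)
shared = torch.nn.Linear(4, 8)
heads = [torch.nn.Linear(8, 1) for _ in range(3)]
x = torch.randn(16, 4)
ys = [torch.randn(16, 1) for _ in range(3)]
h = torch.relu(shared(x))
losses = torch.stack([torch.mean((head(h) - y) ** 2) for head, y in zip(heads, ys)])
weights = torch.ones(3, requires_grad=True)
new_weights, grad_loss = gradnorm_step(losses, losses.detach(), weights, shared.weight)
(new_weights.detach() * losses).sum().backward()  # the network itself is trained on the weighted sum
print(new_weights, grad_loss)
```

At the first step the loss ratios are all 1, so the target reduces to the mean gradient norm; in later steps, tasks whose losses decrease more slowly receive larger targets and therefore larger weights.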

4. Experiments

4.1. Benchmark Dataset

To conduct our experiments, a total of 303 fMRI scans from the publicly available Alzheimer’s Disease Neuroimaging Initiative (ADNI) were selected as the dataset. ADNI is a multi-center, longitudinal, observational study jointly funded by the National Institutes of Health, pharmaceutical companies, and non-profit organizations. It aims to discover, optimize, evaluate, and track neuroimaging, clinical, and psychological measures and biomarkers of AD and mild cognitive impairment (MCI) [32,42,43]. Table 1 summarizes the selected dataset, which consists of 118 patients with AD and 185 cognitively normal (CN) control participants. In the AD group, the mean age was 74.69 years with a standard deviation of 7.44 years; in the CN group, the mean age was 74.79 years with a standard deviation of 5.97 years. The two groups are therefore closely matched in age. In the AD group, 47.5% of the participants were female and 52.5% were male; in the CN group, 54.6% were female and 45.4% were male, a modest difference in gender distribution. All participants underwent resting-state fMRI scans, and all scan data were preprocessed to improve quality and analyzability. The preprocessing included noise removal, motion artifact correction, and spatial normalization, ensuring data consistency and comparability.
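The group comparison in Table 1 can be checked from the reported summary statistics alone. The standard-library sketch below computes a Welch two-sample t statistic for age, using a normal approximation to the p-value (reasonable here because the Welch degrees of freedom are about 210), and a Pearson chi-square test without continuity correction for gender. The gender counts (56 female and 62 male in AD, 101 female and 84 male in CN) are back-calculated by rounding the reported percentages, which is an assumption. With these inputs the sketch gives |t| ≈ 0.12 (p ≈ 0.90) for age and χ² ≈ 1.47 (p ≈ 0.23) for gender, so neither difference is statistically significant at the 0.05 level.

```python
import math


def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch t statistic and two-sided p-value (normal approximation for large df)."""
    se = math.sqrt(sd1 ** 2 / n1 + sd2 ** 2 / n2)
    t = (mean1 - mean2) / se
    p = math.erfc(abs(t) / math.sqrt(2))
    return t, p


def chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]], 1 dof."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p = math.erfc(math.sqrt(chi2 / 2))  # P(chi2_1 > x) = P(|Z| > sqrt(x))
    return chi2, p


t_age, p_age = welch_t(74.69, 7.44, 118, 74.79, 5.97, 185)
# Rows: AD, CN; columns: female, male (counts rounded from the reported percentages).
chi2_sex, p_sex = chi2_2x2(56, 62, 101, 84)
print(f"age: t = {t_age:.3f}, p = {p_age:.2f}")
print(f"gender: chi2 = {chi2_sex:.3f}, p = {p_sex:.2f}")
```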

Table 1.

Dataset introduction.

To improve the generalization and robustness of our model, we also used clinical information provided by ADNI, namely the participants’ age and gender. Age and gender are important factors influencing the onset of AD and can also affect the characteristics of fMRI signals [14,44]. By learning the AD classification task jointly with predicting the participants’ age and gender, DMT-GIN can therefore learn neuroimaging features associated with AD more comprehensively.

4.2. Experimental Settings

4.2.1. Baseline

In our experiments, the proposed DMT-GIN is compared with the following baseline models for Alzheimer’s disease fMRI classification: Support Vector Machine (SVM) [45], K-Nearest Neighbors (KNN) [46], Weighted K-Nearest Neighbors (WKNN) [47], Convolutional Neural Network (CNN) [48], Graph Convolutional Network (GCN) [49], Graph Attention Networks (GAT) [50], Graph Wavelet Neural Network (GWNN) [51], and Topology Adaptive Graph Convolutional Networks (TAGCN) [52]. SVM (with a radial basis function (RBF) kernel), KNN, and WKNN are classical machine learning methods. CNN is a deep learning model with strong representation learning capabilities, while GCN, GAT, GWNN, and TAGCN are graph neural network (GNN) methods.
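WKNN differs from plain KNN only in how the neighbours vote. A minimal NumPy sketch, assuming inverse-distance weights (one common choice; the weighting scheme used in [47] may differ) and hypothetical 2-D toy feature vectors:

```python
import numpy as np

def wknn_predict(x_train, y_train, x_query, k=3, eps=1e-12):
    """Distance-weighted k-NN: each of the k nearest neighbours votes with weight 1 / distance."""
    x_train, x_query = np.asarray(x_train, float), np.asarray(x_query, float)
    y_train = np.asarray(y_train)
    # Pairwise Euclidean distances, shape (n_query, n_train).
    dists = np.linalg.norm(x_query[:, None, :] - x_train[None, :, :], axis=-1)
    nearest = np.argsort(dists, axis=1)[:, :k]
    n_classes = int(y_train.max()) + 1
    preds = np.empty(len(x_query), dtype=int)
    for i, idx in enumerate(nearest):
        weights = 1.0 / (dists[i, idx] + eps)  # closer neighbours count more
        votes = np.bincount(y_train[idx], weights=weights, minlength=n_classes)
        preds[i] = int(np.argmax(votes))
    return preds

# Toy 2-D feature vectors (hypothetical): class 0 = CN, class 1 = AD.
x_train = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
y_train = [0, 0, 0, 1, 1, 1]
preds = wknn_predict(x_train, y_train, [[0.2, 0.2], [5.5, 5.2]], k=3)
```

Replacing `weights` with an array of ones recovers ordinary KNN, which is why KNN and WKNN appear as a natural pair among the baselines.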

4.2.2. Evaluation Metrics

To comprehensively evaluate classification performance, we use accuracy, recall (sensitivity), specificity, and F1 score, which are derived from the confusion matrix, together with the area under the receiver operating characteristic (ROC) curve (AUC) [53]. These metrics, widely used in recent fMRI classification studies, reflect the performance of the model from different perspectives. The confusion matrix summarizes the numbers of true positives, true negatives, false positives, and false negatives; for example, with AD as the positive class, recall is the fraction of AD patients correctly identified and specificity is the fraction of CN participants correctly identified. To ensure the reliability and objectivity of the experimental results, 5-fold cross-validation is used [54]: all participants are randomly divided into five groups, and in each run four groups form the training set and the remaining group forms the test set. This setup gives a more stable assessment of the model’s performance across different data splits than a single train/test split.
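The confusion-matrix metrics and the random 5-fold split described above can be sketched in a few lines of NumPy. This is an illustrative sketch, not the evaluation code used for the paper; it assumes AD is encoded as 1 (positive) and CN as 0, and it uses a plain random split as in the text (a stratified split would be an alternative).

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) with AD = 1 as the positive class."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return tp, tn, fp, fn

def classification_metrics(y_true, y_pred):
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    recall = tp / (tp + fn) if tp + fn else 0.0          # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "recall": recall, "specificity": specificity, "f1": f1}

def kfold_indices(n_samples, k=5, seed=0):
    """Randomly split sample indices into k disjoint folds; yield (train, test) index pairs."""
    perm = np.random.default_rng(seed).permutation(n_samples)
    folds = np.array_split(perm, k)
    for i in range(k):
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train, folds[i]

# Toy predictions: 3 TP, 3 TN, 1 FP, 1 FN.
metrics = classification_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1])
# 303 participants -> five test folds of 61, 61, 61, 60 and 60 participants.
folds = list(kfold_indices(303, k=5))
```

Metrics are computed per test fold and then averaged over the five folds; splitting by participant rather than by scan avoids leaking the same subject into both training and test sets.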

4.2.3. Implementation Details

The DMT-GIN was implemented using PyTorch 1.10.2 and PyTorch Geometric 2.0.3 on a single NVIDIA RTX 3090 GPU. The network was optimized with the adaptive moment estimation (Adam) optimizer [55], which combines momentum with RMSprop-style per-parameter adaptive learning rates and therefore tends to converge quickly. The initial learning rate was set to 0.001; a relatively large learning rate early in training speeds up convergence and helps the optimizer avoid settling into poor local minima. To converge stably and avoid oscillating around the optimum in the later stages of training, the maximum number of epochs was set to 300, and the learning rate was halved every 50 epochs after the first 100 epochs. The batch size and learning rate were selected by a grid search over predetermined candidate values, which also served as a parameter sensitivity test. The results of this test are shown in Table 2.

Table 2.

The performance of the model under different combinations of batch sizes and learning rates.
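The learning-rate schedule described in Section 4.2.3 (0.001, held constant for the first 100 epochs, then halved every 50 epochs up to epoch 300) can be written as a small function. This is a sketch under one assumption: the text does not say whether the first halving happens at epoch 100 or epoch 150, so epoch 100 (0-indexed) is assumed here.

```python
def learning_rate(epoch, base_lr=1e-3, hold_epochs=100, step=50, gamma=0.5):
    """Step schedule: constant for the first `hold_epochs` epochs, then multiplied
    by `gamma` every `step` epochs.

    Assumption: the first halving happens at epoch 100 (0-indexed epochs).
    """
    if epoch < hold_epochs:
        return base_lr
    n_decays = (epoch - hold_epochs) // step + 1
    return base_lr * gamma ** n_decays

# Learning rate for each of the 300 training epochs.
schedule = [learning_rate(e) for e in range(300)]
```

Under this assumption the rate takes five values (1e-3, 5e-4, 2.5e-4, 1.25e-4, 6.25e-5). In PyTorch the same schedule could be expressed with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[100, 150, 200, 250], gamma=0.5)`, calling `scheduler.step()` once per epoch.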

As Table 2 shows, the best performance was achieved with a batch size of 64 and a learning rate of 0.001. A batch size of 64 balances computational efficiency against gradient noise: smaller batches produce noisier gradient estimates, while larger batches yield fewer parameter updates per epoch on a training set of roughly 240 participants. For the loss function, we used the cross-entropy loss, which is standard for classification because it is stable to optimize and directly measures the discrepancy between the predicted class probabilities and the true labels. Minimizing the cross-entropy at each iteration drives the model to assign high probability to the correct class, thereby reducing prediction errors.
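For reference, the softmax cross-entropy loss mentioned above can be computed stably with the log-sum-exp trick. This NumPy sketch is illustrative only; in PyTorch, `torch.nn.functional.cross_entropy` performs the same computation on raw logits and integer class labels.

```python
import numpy as np

def cross_entropy(logits, labels):
    """Mean softmax cross-entropy, computed stably via log-sum-exp.

    logits: (batch, n_classes) raw scores; labels: (batch,) integer class indices.
    """
    logits = np.asarray(logits, dtype=float)
    labels = np.asarray(labels)
    shifted = logits - logits.max(axis=1, keepdims=True)  # avoid overflow in exp
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(len(labels)), labels].mean())

# Equal scores for two classes give a loss of ln 2; a confident correct prediction gives ~0.
loss_uniform = cross_entropy([[0.0, 0.0]], [0])
loss_confident = cross_entropy([[1000.0, 0.0]], [0])
```

Subtracting the row maximum leaves the softmax unchanged but keeps `np.exp` from overflowing, which is why very large logits such as 1000 are handled without producing `inf` or `nan`.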

4.3. Model Comparison

To validate the performance of the proposed DMT-GIN model in Alzheimer’s disease classification, we compare it with the baseline models described in Section 4.2.1; the results are shown in Table 3. These models were trained using the adjacency matrix and node features of the brain network. The proposed DMT-GIN achieves strong results on the four evaluation metrics in Table 3, with an accuracy of 90.44%, which is higher than that of the other machine learning and neural network methods.

Table 3.

Comparison with the baseline models.

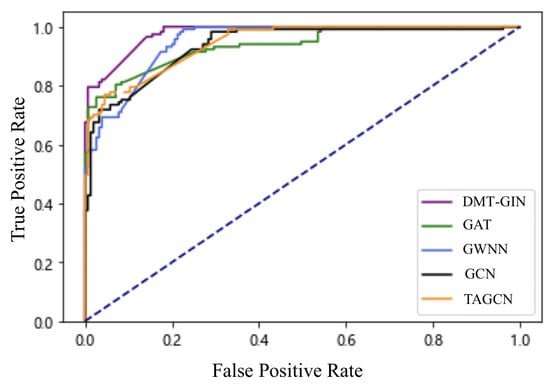

To further validate performance, we plot the ROC curves of the DMT-GIN model and the other graph-based neural network models in Figure 4. The AUC of the DMT-GIN model is clearly higher than those of the other GNN models, indicating that DMT-GIN achieves high specificity while retaining high recall across decision thresholds. It therefore discriminates better between patients and healthy individuals, which makes it more promising for practical auxiliary diagnosis.

Figure 4.

ROC curves of the different GNN models; the purple curve is DMT-GIN.
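The AUC values compared in Figure 4 have a simple probabilistic reading, discussed by Hanley and McNeil [53]: the AUC is the probability that a randomly chosen AD patient receives a higher score than a randomly chosen CN participant. A minimal NumPy sketch of this pairwise computation, assuming label 1 = AD and toy scores:

```python
import numpy as np

def roc_auc(y_true, scores):
    """AUC as the probability that a random positive scores higher than a random
    negative (ties count one half); equals the area under the empirical ROC curve."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    diff = pos[:, None] - neg[None, :]  # all positive/negative score pairs
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / (len(pos) * len(neg)))

# Toy example: 3 of the 4 positive/negative pairs are ranked correctly.
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

Because it compares all pairs, this form is quadratic in the number of samples; that is harmless for a test fold of about 60 participants, while rank-based implementations scale better for large datasets.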

4.4. Ablation Study

In this section, we validate the contribution of each key component of DMT-GIN by successively removing components from the model and observing the resulting change in performance. Specifically, we conducted ablation studies on the following three aspects.

4.4.1. Effectiveness of the Attention Mechanism

We replaced the attention pooling mechanism in the DMT-GIN model with a traditional global pooling method (e.g., max pooling), yielding No. 1 (backbone only) and No. 2 (Backbone + AT) in Table 4. The accuracy improvement from No. 1 to No. 2 indicates that the attention mechanism contributes substantially to performance.

Table 4.

Results of ablation studies.
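The attention-versus-max-pooling ablation can be made concrete with a small NumPy sketch of both readouts. The attention readout below is one common single-head formulation in the style of the structured self-attentive embedding of Lin et al. [36]; it is an assumption for illustration and may differ from the readout used in DMT-GIN (for example, in the number of attention heads). Sizes and weights are hypothetical.

```python
import numpy as np

def softmax(z):
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()

def attention_readout(h, w1, w2):
    """Single-head self-attentive pooling (Lin et al. style, assumed form):
    alpha = softmax(tanh(H W1) w2) over nodes, graph embedding = alpha^T H."""
    scores = np.tanh(h @ w1) @ w2  # one scalar score per node, shape (n_nodes,)
    alpha = softmax(scores)        # non-negative weights summing to 1 over nodes
    return alpha @ h, alpha

def max_readout(h):
    """Global max pooling: the non-attentive readout used in the ablation."""
    return h.max(axis=0)

rng = np.random.default_rng(0)
h = rng.normal(size=(90, 32))             # hypothetical: 90 nodes, 32-dim node embeddings
w1 = rng.normal(scale=0.1, size=(32, 16))
w2 = rng.normal(scale=0.1, size=16)

att_embedding, alpha = attention_readout(h, w1, w2)
max_embedding = max_readout(h)
# With w2 = 0 every node gets the same score, so attention reduces to mean pooling.
uniform_embedding, _ = attention_readout(h, w1, np.zeros(16))
```

Unlike max pooling, the attention weights `alpha` are learned and differentiable, so the model can emphasize the brain regions most relevant to the task; they also offer a per-node importance signal.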

4.4.2. Effectiveness of Multi-Task Learning

We simplified the DMT-GIN model from multi-task learning to single-task learning, i.e., performing only the Alzheimer’s disease classification task without the auxiliary age and gender prediction tasks. This comparison corresponds to No. 2 (Backbone + AT) and No. 3 (Backbone + AT + MT) in Table 4. No. 3 outperforms No. 2 on all evaluation metrics, indicating that the multi-task learning strategy improves Alzheimer’s disease classification performance.

4.4.3. Effectiveness of the GradNorm Algorithm

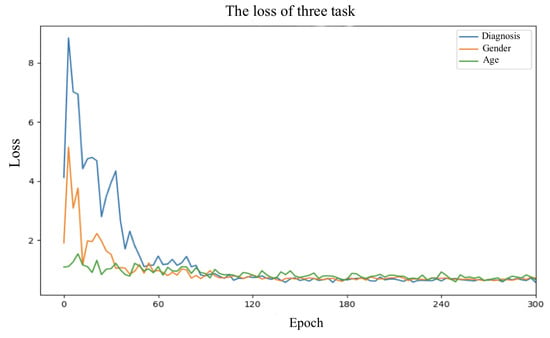

We also investigated the effectiveness of the GradNorm algorithm by removing it from the DMT-GIN model and instead balancing the task losses with fixed weights; the loss curves of the individual tasks during training are shown in Figure 5. This comparison corresponds to No. 3 (Backbone + AT + MT) and No. 5 (Backbone + AT + MT + GN) in Table 4. The results show that adjusting the task weights dynamically with GradNorm improves model performance compared with fixed weights.

Figure 5.

Loss value plot for different tasks during the training process.
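The core of GradNorm (Chen et al. [41]) is a small update of the task weights based on per-task gradient norms at the last shared layer. The NumPy sketch below shows one such update step. It is a simplified illustration: the per-task gradient norms are passed in directly (in practice they come from autograd), the weights are updated with plain gradient descent, and the values of `alpha`, `lr`, the task order (AD, gender, age), and the toy inputs are hypothetical.

```python
import numpy as np

def gradnorm_step(weights, grad_norms, losses, initial_losses, alpha=1.5, lr=0.025):
    """One GradNorm update of the task loss weights.

    weights:        current loss weights w_i (positive), one per task
    grad_norms:     ||grad_W L_i||, gradient norm of each *unweighted* task loss
                    w.r.t. the last shared layer W (computed by autograd in practice)
    losses:         current task losses L_i(t)
    initial_losses: task losses at the first training step L_i(0)
    """
    w = np.asarray(weights, dtype=float)
    g = np.asarray(grad_norms, dtype=float)
    n_tasks = len(w)

    G = w * g                                   # G_i = ||grad_W (w_i L_i)||
    G_bar = G.mean()
    loss_ratio = np.asarray(losses, dtype=float) / np.asarray(initial_losses, dtype=float)
    r = loss_ratio / loss_ratio.mean()          # relative inverse training rate r_i
    target = G_bar * r ** alpha                 # target norms, treated as constants

    # Gradient of L_grad = sum_i |G_i - target_i| w.r.t. w_i, with the targets held fixed.
    grad_w = np.sign(G - target) * g
    w = np.clip(w - lr * grad_w, 1e-6, None)
    return w * n_tasks / w.sum()                # renormalise so that sum_i w_i = T

# Hypothetical task order: AD, gender, age. The AD loss has dropped fastest.
new_weights = gradnorm_step(weights=[1.0, 1.0, 1.0],
                            grad_norms=[2.0, 1.0, 0.5],
                            losses=[0.5, 0.9, 1.0],
                            initial_losses=[1.0, 1.0, 1.0])
```

In this toy step the AD task, which trains fastest and has the largest gradient, receives a smaller weight, while the slower auxiliary tasks are up-weighted; with fixed weights no such rebalancing would occur.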

4.5. Correlation Analysis

To further investigate the relationships among the extracted features, a correlation analysis was performed. The correlation coefficients among the six features, namely Degree Centrality (D), Betweenness Centrality (B), Clustering Coefficient (C), Eigenvector Centrality (E), Closeness Centrality (Cl), and PageRank Centrality (P), are presented in Table 5.

Table 5.

Correlation analysis among the six features.

The correlation analysis reveals interesting patterns among the features. For instance, a strong positive correlation is observed between Degree Centrality (D) and Betweenness Centrality (B) with a coefficient of 0.75, indicating that nodes with high degree centrality also tend to have high betweenness centrality. Additionally, Degree Centrality (D) and Eigenvector Centrality (E) exhibit a positive correlation coefficient of 0.67, suggesting that nodes with high degree centrality are also likely to have high eigenvector centrality.

On the other hand, negative correlations are observed between Clustering Coefficient (C) and Degree Centrality (D) (−0.56), Clustering Coefficient (C) and Eigenvector Centrality (E) (−0.75), and Clustering Coefficient (C) and PageRank Centrality (P) (−0.59). These negative correlations imply that nodes with high clustering coefficients tend to have lower values of degree centrality, eigenvector centrality, and PageRank centrality.
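The correlation analysis above can be sketched by computing node-level graph measures and their Pearson correlation matrix. This NumPy sketch makes two assumptions: the correlations are taken across the nodes of a single (toy, randomly generated) network, since Table 5 does not state the unit of analysis, and only three of the six measures (D, C, E) are computed for brevity. Libraries such as NetworkX provide all six measures (e.g., `nx.betweenness_centrality`, `nx.closeness_centrality`, `nx.pagerank`).

```python
import numpy as np

def node_features(adj):
    """Degree centrality, clustering coefficient and eigenvector centrality for an
    undirected, unweighted graph given as a symmetric 0/1 adjacency matrix."""
    n = adj.shape[0]
    degree = adj.sum(axis=1)
    degree_centrality = degree / (n - 1)
    # Triangles through node v = (A^3)_vv / 2; possible neighbour pairs = k(k - 1) / 2.
    triangles = np.diag(adj @ adj @ adj) / 2.0
    possible = degree * (degree - 1) / 2.0
    clustering = np.divide(triangles, possible, out=np.zeros(n), where=possible > 0)
    # Leading eigenvector of the symmetric adjacency matrix (eigh sorts eigenvalues ascending).
    _, vecs = np.linalg.eigh(adj)
    eigenvector = np.abs(vecs[:, -1])
    eigenvector /= eigenvector.max()
    return np.column_stack([degree_centrality, clustering, eigenvector])

rng = np.random.default_rng(1)
upper = np.triu((rng.random((60, 60)) < 0.15).astype(float), k=1)
adj = upper + upper.T                       # hypothetical toy brain network, 60 nodes

features = node_features(adj)               # (n_nodes, 3): columns D, C, E
corr = np.corrcoef(features, rowvar=False)  # 3 x 3 Pearson correlation matrix
```

On real brain networks the signs of these correlations depend on the network construction (thresholding, weighting), so the toy output should not be compared directly with Table 5.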

4.6. Model Interpretability

In the proposed DMT-GIN, the SHapley Additive exPlanations (SHAP) method [56] is used to analyze the basis of model predictions. SHAP, grounded in Shapley values from cooperative game theory, assigns each feature an importance value that reflects its contribution to the prediction. The Shapley value of a feature is its marginal contribution to the prediction, averaged over all possible subsets (coalitions) of the other features; because exact enumeration grows exponentially with the number of features, SHAP explainers typically estimate this average. The method is considered fair because Shapley values are the unique attribution satisfying properties such as efficiency (the attributions sum to the difference between the prediction and the expected model output) and symmetry (features with identical contributions receive equal values). Moreover, SHAP values indicate not only the magnitude of a feature’s importance but also the direction of its effect on the prediction, giving a deeper understanding of the model’s predictive behavior.
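The averaging over feature subsets described above can be shown with an exact Shapley computation for a tiny model. This pure-Python sketch is illustrative only: "absent" features are replaced by a fixed baseline value, which is one simple convention (an assumption; SHAP explainers instead marginalize over background data), and the toy model `f` is hypothetical.

```python
from itertools import combinations
from math import factorial

def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x, where 'absent' features take their baseline value.

    Enumerates every subset of the other features, so it is only feasible for small n.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[j] if (j in subset or j == i) else baseline[j] for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                phi[i] += weight * (f(with_i) - f(without_i))
    return phi

# Hypothetical model: a main effect of feature 0 plus an interaction of features 1 and 2.
def f(z):
    return 2 * z[0] + 3 * z[1] * z[2]

phi = exact_shapley(f, x=[1, 1, 1], baseline=[0, 0, 0])
```

Feature 0 receives its full main effect (2), the interaction term is split equally between features 1 and 2 (1.5 each), and the values sum to f(x) minus f(baseline) = 5, illustrating the efficiency and symmetry properties.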

We applied this method not only to the multi-task graph isomorphism network, but also to other models such as GCN and GIN, and identified the top five brain regions that contributed most to the Alzheimer’s disease classification task (see Table 6). Interestingly, although the models differ, some brain regions consistently emerged among the top contributors; for instance, the Precentral gyrus and the Middle frontal gyrus were identified as important by all three models. This consistency across models suggests that these regions may play an important role in the pathogenesis of Alzheimer’s disease and are candidate biomarkers and focal points for future research.

Table 6.

Top 5 brain regions with the highest relevance to the Alzheimer’s disease classification task based on SHAP values for different models.

5. Discussion and Conclusions

We propose the DMT-GIN model for classifying Alzheimer’s disease from fMRI data. Its novelty lies in three aspects. First, node features of the brain network are learned by a GIN with a self-attention mechanism in the readout layer, which effectively captures spatial information and topological structure from the fMRI-derived brain networks. Second, the model adopts multi-task training, predicting age and gender as auxiliary tasks to guide the Alzheimer’s disease classification task. Third, the GradNorm algorithm dynamically allocates weights to the different tasks, adaptively balancing their training rates and thus improving overall performance. To enhance interpretability, the SHAP method is used to identify the brain regions that contribute most to the Alzheimer’s disease classification task.

The method was compared with the baseline models, and ablation experiments were conducted on the proposed framework for an extensive performance evaluation. The accuracy, recall, and AUC results show that DMT-GIN outperforms the other baseline models.

Our research has certain limitations. The first is the limited scale of the dataset: we used publicly available ADNI data, and the resulting dataset of 303 scans is relatively small. To fully validate the performance of the model, future research could use larger and more diverse datasets. Second, although we incorporated age and gender as auxiliary tasks, other auxiliary tasks with higher informational value may exist. These could include occupation, family environment, genetic information, lifestyle factors such as smoking status, and medical conditions such as respiratory problems, hypertension, and diabetes, which are recognized as key risk factors for many health conditions. Such variables have been employed in past studies, for example in work on detecting coronary artery disease from photoplethysmography signals [57]. Including a wider range of auxiliary tasks could both enrich our understanding of the subjects and improve the predictive capability of the model, so future research could explore additional informative auxiliary tasks, drawing inspiration from such methods. Finally, given the complexity and nonlinearity of the data structures involved, incorporating anomaly detection methods is a promising direction that could provide valuable insights and opportunities for performance optimization.

Author Contributions

Conceptualization, X.W.; Methodology, Z.W. and Z.L.; Validation, S.L.; Data curation, Y.W. and W.Z.; Supervision, J.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by National Natural Science Foundation of China (62072089); Fundamental Research Funds for the Central Universities of China (N2104001 and N2019007).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Smith, M.A. Alzheimer disease. Int. Rev. Neurobiol. 1998, 42, 1–54.

- Cummings, J.L.; Cole, G. Alzheimer disease. JAMA 2002, 287, 2335–2338.

- Nichols, E.; Szoeke, C.E.; Vollset, S.E.; Abbasi, N.; Abd-Allah, F.; Abdela, J.; Aichour, M.T.E.; Akinyemi, R.O.; Alahdab, F.; Asgedom, S.W.; et al. Global, regional, and national burden of Alzheimer’s disease and other dementias, 1990–2016: A systematic analysis for the Global Burden of Disease Study 2016. Lancet Neurol. 2019, 18, 88–106.

- World Health Organization. The World Health Report 2000. Available online: https://www.who.int/publications/i/item/924156198X (accessed on 20 June 2023).

- Huang, Y.; Mucke, L. Alzheimer mechanisms and therapeutic strategies. Cell 2012, 148, 1204–1222.

- Calisto, F.M.G.F.; de Matos Fernandes, J.G.; Morais, M.; Santiago, C.; Abrantes, J.M.V.; Nunes, N.J.; Nascimento, J.C. Assertiveness-based Agent Communication for a Personalized Medicine on Medical Imaging Diagnosis. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–20.

- Sarraf, S.; Tofighi, G. Deep learning-based pipeline to recognize Alzheimer’s disease using fMRI data. In Proceedings of the Future Technologies Conference, San Francisco, CA, USA, 6–7 December 2016; pp. 816–820.

- Matthews, P.M.; Jezzard, P. Functional magnetic resonance imaging. J. Neurol. Neurosurg. Psychiatry 2004, 75, 6–12.

- Tuovinen, T.; Kananen, J.; Rajna, Z.; Lieslehto, J.; Korhonen, V.; Rytty, R.; Mattila, H.; Huotari, N.; Raitamaa, L.; Helakari, H.; et al. The variability of functional MRI brain signal increases in Alzheimer’s disease at cardiorespiratory frequencies. Sci. Rep. 2020, 10, 21559.

- Xu, K.; Hu, W.; Leskovec, J.; Jegelka, S. How powerful are graph neural networks? arXiv 2018, arXiv:1810.00826.

- Kim, B.H.; Ye, J.C. Understanding graph isomorphism network for rs-fMRI functional connectivity analysis. Front. Neurosci. 2020, 14, 630.

- Caruana, R. Multitask Learning; Springer: Berlin/Heidelberg, Germany, 1998.

- Braak, H.; Braak, E. Frequency of stages of Alzheimer-related lesions in different age categories. Neurobiol. Aging 1997, 18, 351–357.

- Podcasy, J.L.; Epperson, C.N. Considering sex and gender in Alzheimer disease and other dementias. Dialogues Clin. Neurosci. 2016, 18, 437–446.

- Xing, X.; Li, Q.; Yuan, M.; Wei, H.; Xue, Z.; Wang, T.; Shi, F.; Shen, D. DS-GCNs: Connectome classification using dynamic spectral graph convolution networks with assistant task training. Cereb. Cortex 2021, 31, 1259–1269.

- Kazemi, Y.; Houghten, S.K. A deep learning pipeline to classify different stages of Alzheimer’s disease from fMRI data. In Proceedings of the IEEE Conference on Computational Intelligence in Bioinformatics and Computational Biology, Saint Louis, MO, USA, 30 May–2 June 2018; pp. 1–8.

- Janghel, R.; Rathore, Y. Deep convolution neural network based system for early diagnosis of Alzheimer’s disease. IRBM 2021, 42, 258–267.

- Ghafoori, S.; Shalbaf, A. Predicting conversion from MCI to AD by integration of rs-fMRI and clinical information using 3D-convolutional neural network. Int. J. Comput. Assist. Radiol. Surg. 2022, 17, 1245–1255.

- Li, X.; Zhou, Y.; Dvornek, N.C.; Zhang, M.; Zhuang, J.; Ventola, P.; Duncan, J.S. Pooling Regularized Graph Neural Network for fMRI Biomarker Analysis. Lecture Notes in Computer Science. In Proceedings of the Medical Image Computing and Computer Assisted Intervention, Lima, Peru, 4–8 October 2020; Volume 12267, pp. 625–635.

- Yang, C.; Wang, P.; Tan, J.; Liu, Q.; Li, X. Autism spectrum disorder diagnosis using graph attention network based on spatial-constrained sparse functional brain networks. Comput. Biol. Med. 2021, 139, 104963.

- Zhu, Y.; Song, X.; Qiu, Y.; Zhao, C.; Lei, B. Structure and Feature Based Graph U-Net for Early Alzheimer’s Disease Prediction. In Proceedings of the 11th International Workshop on Multimodal Learning for Clinical Decision Support, Strasbourg, France, 1 October 2021; Volume 13050, pp. 93–104.

- Song, X.; Zhou, F.; Frangi, A.F.; Cao, J.; Xiao, X.; Lei, Y.; Wang, T.; Lei, B. Multi-center and multi-channel pooling GCN for early AD diagnosis based on dual-modality fused brain network. IEEE Trans. Med. Imaging 2022, 42, 354–367.

- Lin, K.; Jie, B.; Dong, P.; Ding, X.; Bian, W.; Liu, M. Extracting Sequential Features from Dynamic Connectivity Network with rs-fMRI Data for AD Classification. In Proceedings of the Machine Learning in Medical Imaging: 12th International Workshop, Strasbourg, France, 27 September 2021; Volume 12966, pp. 664–673.

- Alorf, A.; Khan, M.U.G. Multi-label classification of Alzheimer’s disease stages from resting-state fMRI-based correlation connectivity data and deep learning. Comput. Biol. Med. 2022, 151, 106240.

- Xia, Z.; Zhou, T.; Mamoon, S.; Lu, J. Recognition of dementia biomarkers with deep finer-DBN. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1926–1935.

- Shao, W.; Peng, Y.; Zu, C.; Wang, M.; Zhang, D.; The Alzheimer’s Disease Neuroimaging Initiative. Hypergraph based multi-task feature selection for multimodal classification of Alzheimer’s disease. Comput. Med. Imaging Graph. 2020, 80, 101663.

- Zeng, N.; Li, H.; Peng, Y. A new deep belief network-based multi-task learning for diagnosis of Alzheimer’s disease. Neural Comput. Appl. 2021, 35, 11599–11610.

- Friston, K.J.; Williams, S.; Howard, R.; Frackowiak, R.S.; Turner, R. Movement-related effects in fMRI time-series. Magn. Reson. Med. 1996, 35, 346–355.

- Jenkinson, M.; Bannister, P.; Brady, M.; Smith, S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage 2002, 17, 825–841.

- Tzourio-Mazoyer, N.; Landeau, B.; Papathanassiou, D.; Crivello, F.; Etard, O.; Delcroix, N.; Mazoyer, B.; Joliot, M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage 2002, 15, 273–289.

- Rubinov, M.; Sporns, O. Complex network measures of brain connectivity: Uses and interpretations. Neuroimage 2010, 52, 1059–1069.

- Petersen, R.C.; Aisen, P.S.; Beckett, L.A.; Donohue, M.C.; Gamst, A.C.; Harvey, D.J.; Jack, C.R.; Jagust, W.J.; Shaw, L.M.; Toga, A.W.; et al. Alzheimer’s disease neuroimaging initiative (ADNI): Clinical characterization. Neurology 2010, 74, 201–209.

- Efron, B. Bootstrap Methods: Another Look at the Jackknife; Springer: Berlin/Heidelberg, Germany, 1992.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- He, K.; Gan, C.; Li, Z.; Rekik, I.; Yin, Z.; Ji, W.; Gao, Y.; Wang, Q.; Zhang, J.; Shen, D. Transformers in medical image analysis: A review. Intell. Med. 2023, 3, 59–78.

- Lin, Z.; Feng, M.; Santos, C.N.d.; Yu, M.; Xiang, B.; Zhou, B.; Bengio, Y. A structured self-attentive sentence embedding. arXiv 2017, arXiv:1703.03130.

- Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Zhang, Y.; Yang, Q. A survey on multi-task learning. IEEE Trans. Knowl. Data Eng. 2021, 34, 5586–5609.

- Ruder, S. An overview of multi-task learning in deep neural networks. arXiv 2017, arXiv:1706.05098.

- Nebel, R.A.; Aggarwal, N.T.; Barnes, L.L.; Gallagher, A.; Goldstein, J.M.; Kantarci, K.; Mallampalli, M.P.; Mormino, E.C.; Scott, L.; Yu, W.H.; et al. Understanding the impact of sex and gender in Alzheimer’s disease: A call to action. Alzheimer’s Dement. 2018, 14, 1171–1183.

- Chen, Z.; Badrinarayanan, V.; Lee, C.; Rabinovich, A. GradNorm: Gradient Normalization for Adaptive Loss Balancing in Deep Multitask Networks. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; Volume 80, pp. 793–802.

- Weiner, M.W.; Veitch, D.P.; Aisen, P.S.; Beckett, L.A.; Cairns, N.J.; Cedarbaum, J.; Donohue, M.C.; Green, R.C.; Harvey, D.; Jack, C.R., Jr.; et al. Impact of the Alzheimer’s disease neuroimaging initiative, 2004 to 2014. Alzheimer’s Dement. 2015, 11, 865–884.

- Jack, C.R., Jr.; Bernstein, M.A.; Fox, N.C.; Thompson, P.; Alexander, G.; Harvey, D.; Borowski, B.; Britson, P.J.; Whitwell, J.L.; Ward, C.; et al. The Alzheimer’s disease neuroimaging initiative (ADNI): MRI methods. J. Magn. Reson. Imaging Off. J. Int. Soc. Magn. Reson. Med. 2008, 27, 685–691.

- Kaiser, A.; Haller, S.; Schmitz, S.; Nitsch, C. On sex/gender related similarities and differences in fMRI language research. Brain Res. Rev. 2009, 61, 49–59.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.

- Al Fahoum, A.; Ghobon, T.A. Performance predictions of Sci-Fi films via machine learning. Appl. Sci. 2023, 13, 4312.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Velickovic, P.; Cucurull, G.; Casanova, A.; Romero, A.; Liò, P.; Bengio, Y. Graph Attention Networks. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018.

- Xu, B.; Shen, H.; Cao, Q.; Qiu, Y.; Cheng, X. Graph wavelet neural network. arXiv 2019, arXiv:1904.07785.

- Du, J.; Zhang, S.; Wu, G.; Moura, J.M.F.; Kar, S. Topology adaptive graph convolutional networks. arXiv 2017, arXiv:1710.10370.

- Hanley, J.A.; McNeil, B.J. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 1982, 143, 29–36.

- Kohavi, R. A Study of Cross-Validation and Bootstrap for Accuracy Estimation and Model Selection. In Proceedings of the 14th International Joint Conference on Artificial Intelligence, Montréal, QC, Canada, 20–25 August 1995; pp. 1137–1145.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. Adv. Neural Inf. Process. Syst. 2017, 30, 4768–4777.

- Al Fahoum, A.S.; Abu Al-Haija, A.O.; Alshraideh, H.A. Identification of coronary artery diseases using photoplethysmography signals and practical feature selection process. Bioengineering 2023, 10, 249.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).