Distributed GNE Seeking under Global-Decision and Partial-Decision Information over Douglas-Rachford Splitting Method

Abstract

1. Introduction

Algorithm 1: GNE seeking under partial-decision information

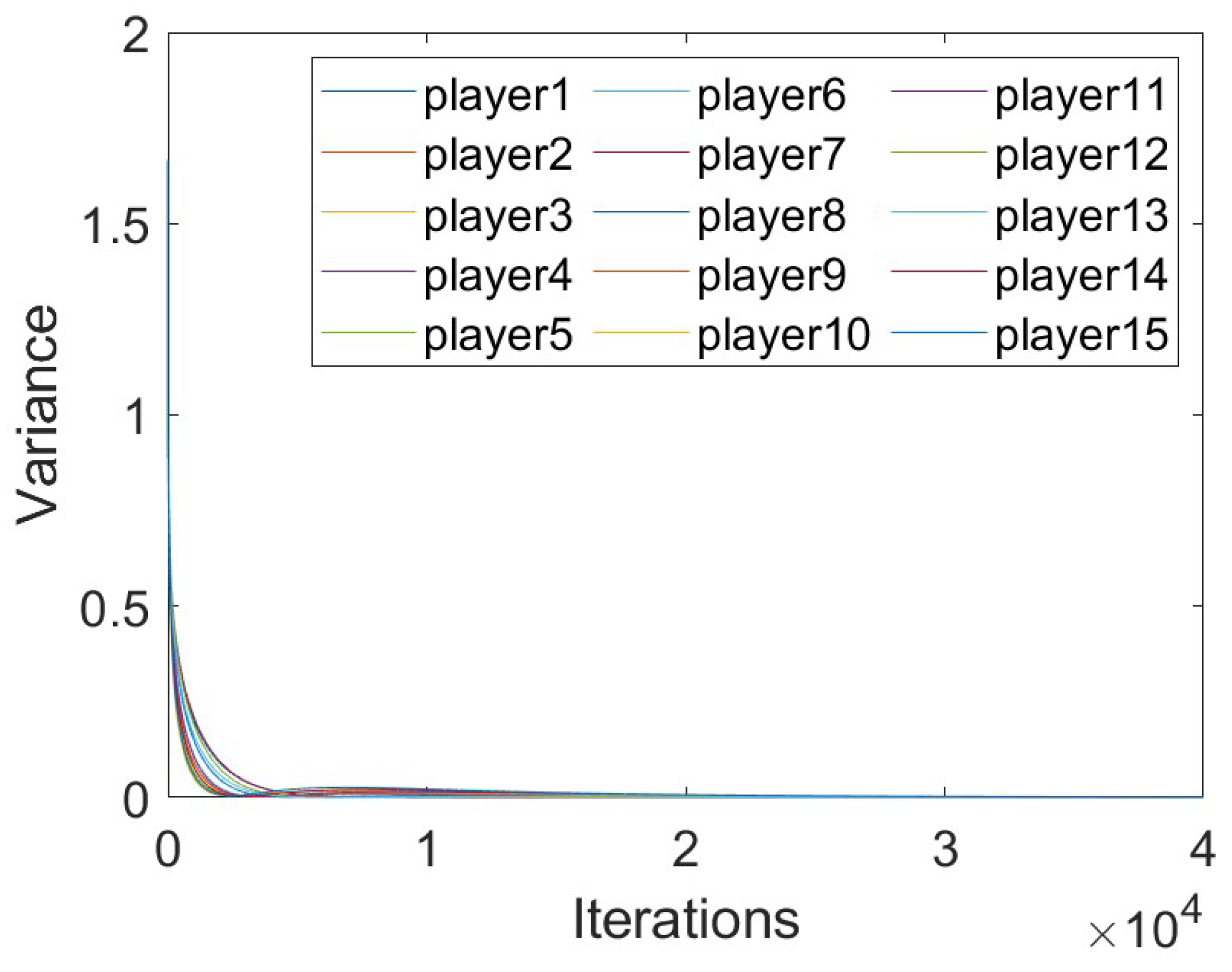

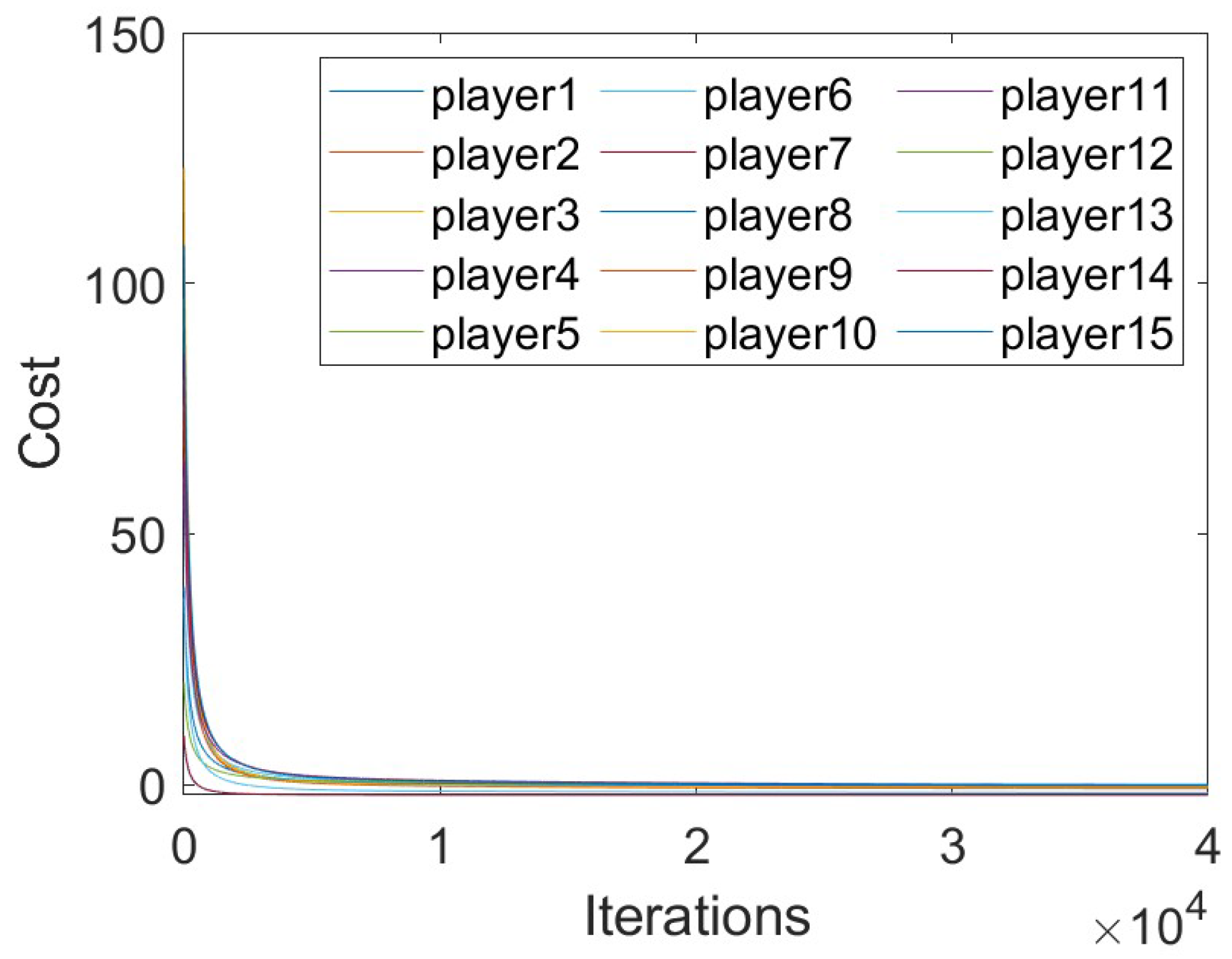

- This paper proposes two new variants of the Douglas-Rachford algorithm, denoted Algorithms 1 and 2, for solving generalized Nash equilibrium (GNE) problems.

- A new pair of splitting operators is introduced for each of Algorithms 1 and 2 to ensure the convergence and efficiency of the algorithms.

- Algorithm 2 is proved to converge at a linear rate under the global-decision information setting, while Algorithm 1 is shown to achieve faster convergence under the partial-decision (local) information setting.

- Numerical experiments verify the theoretical results, and a comparison with an existing method demonstrates the advantages of Algorithm 1.
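For readers less familiar with the base method, the classical Douglas-Rachford iteration for finding a zero of the sum of two maximally monotone operators A + B can be sketched as follows. This is the textbook (Lions-Mercier) scheme with relaxation, not the authors' Algorithm 1 or 2; the function names and the toy problem (projecting a point c onto the box [-1, 1]^3, so that A is the normal cone of the box and B(x) = x - c) are illustrative assumptions.

```python
from typing import Callable, List

Vector = List[float]


def douglas_rachford(resolvent_a: Callable[[Vector], Vector],
                     resolvent_b: Callable[[Vector], Vector],
                     z0: Vector, relax: float = 1.0,
                     max_iter: int = 1000, tol: float = 1e-10) -> Vector:
    """Classical Douglas-Rachford splitting for 0 in A(x) + B(x).

    resolvent_a and resolvent_b evaluate J_{gamma A} and J_{gamma B} for a
    fixed step gamma > 0; relax in (0, 2) is the relaxation parameter.
    """
    z = list(z0)
    for _ in range(max_iter):
        x = resolvent_b(z)                                       # x^k = J_{gamma B}(z^k)
        y = resolvent_a([2 * xi - zi for xi, zi in zip(x, z)])   # y^k = J_{gamma A}(2x^k - z^k)
        diff = [yi - xi for yi, xi in zip(y, x)]
        z = [zi + relax * di for zi, di in zip(z, diff)]         # z^{k+1} = z^k + relax (y^k - x^k)
        if max(abs(d) for d in diff) < tol:
            break
    # The "shadow" sequence J_{gamma B}(z^k) converges to a zero of A + B.
    return resolvent_b(z)


# Hypothetical toy problem: the point of the box [-1, 1]^3 closest to C.
GAMMA = 1.0
C = [2.0, -3.0, 0.5]


def proj_box(v: Vector) -> Vector:
    """J_{gamma A} when A is the normal cone of [-1, 1]^n: Euclidean projection."""
    return [min(max(vi, -1.0), 1.0) for vi in v]


def res_b(v: Vector) -> Vector:
    """J_{gamma B} for B(x) = x - C: solves x + gamma (x - C) = v."""
    return [(vi + GAMMA * ci) / (1.0 + GAMMA) for vi, ci in zip(v, C)]


x_star = douglas_rachford(proj_box, res_b, z0=[0.0, 0.0, 0.0])
print([round(v, 6) for v in x_star])  # [1.0, -1.0, 0.5]
```

Here B is strongly monotone, so the iterates converge geometrically; the returned point is the projection of C onto the box, as expected.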

Algorithm 2: GNE seeking under global-decision information

2. Preliminaries

2.1. Graph Theory

2.2. Game Model

1. The domain of the function is nonempty, compact, and convex.

2. The function is lower semicontinuous on its domain.

3. The function is convex on its domain, i.e., it satisfies the convexity inequality.
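The convexity condition in item 3 is the standard inequality f(θx + (1 - θ)y) ≤ θf(x) + (1 - θ)f(y) for all x, y in the domain and all θ in [0, 1]. A sampled check such as the sketch below can only refute convexity (by exhibiting a violating triple), never prove it; the helper name, the box domain, and the two test functions are hypothetical.

```python
import random
from typing import Callable, List, Optional, Tuple

Point = List[float]


def find_convexity_violation(f: Callable[[Point], float],
                             sample_point: Callable[[random.Random], Point],
                             trials: int = 10_000, tol: float = 1e-12,
                             seed: int = 0) -> Optional[Tuple[Point, Point, float]]:
    """Return (x, y, theta) violating the convexity inequality, or None if none was found."""
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = sample_point(rng), sample_point(rng)
        theta = rng.random()
        mid = [theta * xi + (1 - theta) * yi for xi, yi in zip(x, y)]
        # Convexity requires f(mid) <= theta f(x) + (1 - theta) f(y); tol absorbs rounding.
        if f(mid) > theta * f(x) + (1 - theta) * f(y) + tol:
            return x, y, theta
    return None


def box_sample(rng: random.Random) -> Point:
    """Uniform sample from the compact convex box [-1, 1]^2."""
    return [rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)]


def quadratic(v: Point) -> float:
    return v[0] ** 2 + 2 * v[1] ** 2   # convex


def saddle(v: Point) -> float:
    return v[0] ** 2 - v[1] ** 2       # not convex


print(find_convexity_violation(quadratic, box_sample))          # None
print(find_convexity_violation(saddle, box_sample) is not None)  # True
```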

1. Every local feasible set is nonempty, closed, and convex.

2. The globally shared feasible set is nonempty.

3. At every feasible point, there exists a direction whose inner product with the gradient of each active constraint function is negative, i.e., the Mangasarian-Fromovitz constraint qualification (MFCQ) holds.

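1. Given the decisions of the other players, the local feasible set is nonempty, compact, and convex, and the cost function is …

2. The function is continuously differentiable at the given point.

3. The constraint function is convex, and the feasible set is nonempty and satisfies Slater's constraint qualification.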

2.3. Variational Generalized Nash Equilibrium (V-GNE) and KKT Conditions
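A common textbook statement of the v-GNE and its KKT conditions is sketched below, under assumed notation that is not necessarily the paper's own: player costs J_i, local sets Ω_i, a shared convex constraint g(x) ≤ 0 defining the shared set 𝒳 = {x ∈ Ω_1 × … × Ω_N : g(x) ≤ 0}, and the pseudo-gradient F(x) = col(∇_{x_1}J_1(x), …, ∇_{x_N}J_N(x)). A v-GNE solves the variational inequality on the first line; under convexity, differentiability, and a constraint qualification such as Slater's condition, it is characterized by the KKT system in which all players share one multiplier λ*.

```latex
% Assumed notation: J_i, \Omega_i, g, \mathcal{X}, F as described in the lead-in.
\begin{aligned}
&\text{find } x^* \in \mathcal{X} \text{ such that } \langle F(x^*),\, x - x^* \rangle \ge 0 \quad \forall x \in \mathcal{X},\\
&0 \in \nabla_{x_i} J_i(x_i^*, x_{-i}^*) + \nabla_{x_i} g(x^*)^{\top} \lambda^* + N_{\Omega_i}(x_i^*), \qquad i = 1, \dots, N,\\
&0 \le \lambda^* \;\perp\; -g(x^*) \ge 0,
\end{aligned}
```

Here N_{Ω_i} is the normal cone of Ω_i and ∇_{x_i} g(x*) is the Jacobian of g with respect to x_i.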

3. Distributed Algorithm

3.1. Global Information Setting

3.2. Partial Information Setting

- The communication graph is undirected and connected, and it has no self-loops.
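The graph assumption can be checked directly from an adjacency matrix: symmetry (undirected), a zero diagonal (no self-loops), and connectivity via breadth-first search. For an undirected graph, connectivity is equivalent to the second-smallest Laplacian eigenvalue being positive; BFS avoids an eigenvalue computation. The helper and the two example graphs are hypothetical illustrations.

```python
from collections import deque
from typing import List


def satisfies_graph_assumption(adj: List[List[float]]) -> bool:
    """True if the weighted adjacency matrix describes an undirected,
    connected graph without self-loops."""
    n = len(adj)
    if n == 0:
        return False
    # Undirected: the adjacency matrix must be symmetric.
    if any(adj[i][j] != adj[j][i] for i in range(n) for j in range(n)):
        return False
    # No self-loops: the diagonal must be zero.
    if any(adj[i][i] != 0 for i in range(n)):
        return False
    # Connected: breadth-first search from node 0 must reach every node.
    seen = {0}
    queue = deque([0])
    while queue:
        i = queue.popleft()
        for j in range(n):
            if adj[i][j] != 0 and j not in seen:
                seen.add(j)
                queue.append(j)
    return len(seen) == n


ring = [[0, 1, 0, 1],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [1, 0, 1, 0]]
split = [[0, 1, 0, 0],
         [1, 0, 0, 0],
         [0, 0, 0, 1],
         [0, 0, 1, 0]]
print(satisfies_graph_assumption(ring), satisfies_graph_assumption(split))  # True False
```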

4. Convergence Analysis

1. The set of variational generalized Nash equilibria (V-GNEs) of the original problem is nonempty.

2. The splitting operators are maximally monotone.

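1. The pseudo-gradient operator $F$ is strongly monotone and Lipschitz continuous, i.e., there exist constants $\mu > 0$ and $\theta_1 > 0$ such that $\langle F(x) - F(y), x - y \rangle \ge \mu \|x - y\|^2$ and $\|F(x) - F(y)\| \le \theta_1 \|x - y\|$ for all $x, y$.

2. The operator is Lipschitz continuous, i.e., there exists a constant $\theta_2 > 0$ such that $\|T(x) - T(y)\| \le \theta_2 \|x - y\|$ for all $x, y$, where $T$ denotes this operator.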
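To illustrate the strong-monotonicity and Lipschitz conditions, consider a hypothetical two-player Nash-Cournot game with linear inverse demand p = a - b(x_1 + x_2) and linear production costs c_i x_i, so that J_i(x) = c_i x_i - x_i(a - b(x_1 + x_2)). Its pseudo-gradient is affine, F(x) = Mx + q with M = [[2b, b], [b, 2b]] and q = (c_1 - a, c_2 - a). For an affine map the tightest constants are the smallest eigenvalue of the symmetric part (M + Mᵀ)/2 (strong monotonicity) and the spectral norm ‖M‖₂ (Lipschitz). The sketch below computes both in closed form for 2×2 matrices; the game is an illustrative assumption, not the paper's simulation setup.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def sym_eigs_2x2(p: float, r: float, s: float) -> Tuple[float, float]:
    """Eigenvalues (min, max) of the symmetric matrix [[p, r], [r, s]]."""
    mean = (p + s) / 2
    rad = math.hypot((p - s) / 2, r)
    return mean - rad, mean + rad


def monotonicity_constants(m: Matrix) -> Tuple[float, float]:
    """Tightest strong-monotonicity and Lipschitz constants of x -> m x + q."""
    # Strong monotonicity: smallest eigenvalue of the symmetric part (m + m^T) / 2.
    off = (m[0][1] + m[1][0]) / 2
    mu, _ = sym_eigs_2x2(m[0][0], off, m[1][1])
    # Lipschitz constant: spectral norm, i.e. sqrt of the largest eigenvalue of m^T m.
    g00 = m[0][0] ** 2 + m[1][0] ** 2
    g01 = m[0][0] * m[0][1] + m[1][0] * m[1][1]
    g11 = m[0][1] ** 2 + m[1][1] ** 2
    _, lam_max = sym_eigs_2x2(g00, g01, g11)
    return mu, math.sqrt(lam_max)


b = 1.0  # hypothetical slope of the inverse demand
cournot_m = [[2 * b, b], [b, 2 * b]]
print(monotonicity_constants(cournot_m))  # (1.0, 3.0)
```

For this game the pseudo-gradient is b-strongly monotone and 3b-Lipschitz; a non-symmetric M would generally give a strong-monotonicity constant strictly smaller than its smallest singular value.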

5. Algorithm Simulation

6. Summary and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, J.; Chen, M.; Li, H.; Shi, Y.; Wang, Z.; Tang, J. Distributed GNE Seeking under Global-Decision and Partial-Decision Information over Douglas-Rachford Splitting Method. Appl. Sci. 2023, 13, 7058. https://doi.org/10.3390/app13127058
