An Ensemble-Based Machine Learning Approach for Cyber-Attacks Detection in Wireless Sensor Networks

Abstract

1. Introduction

2. Related Work

3. Methodology

3.1. Dataset Selection and Data Preprocessing

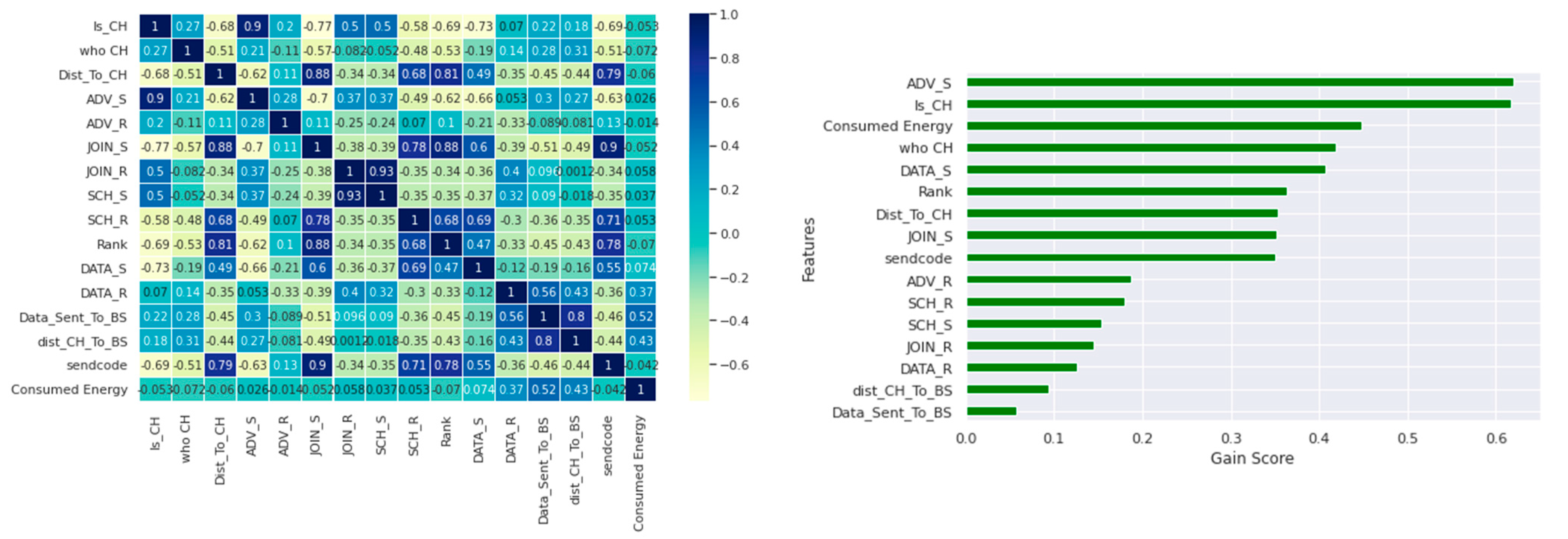

3.2. Feature Selection

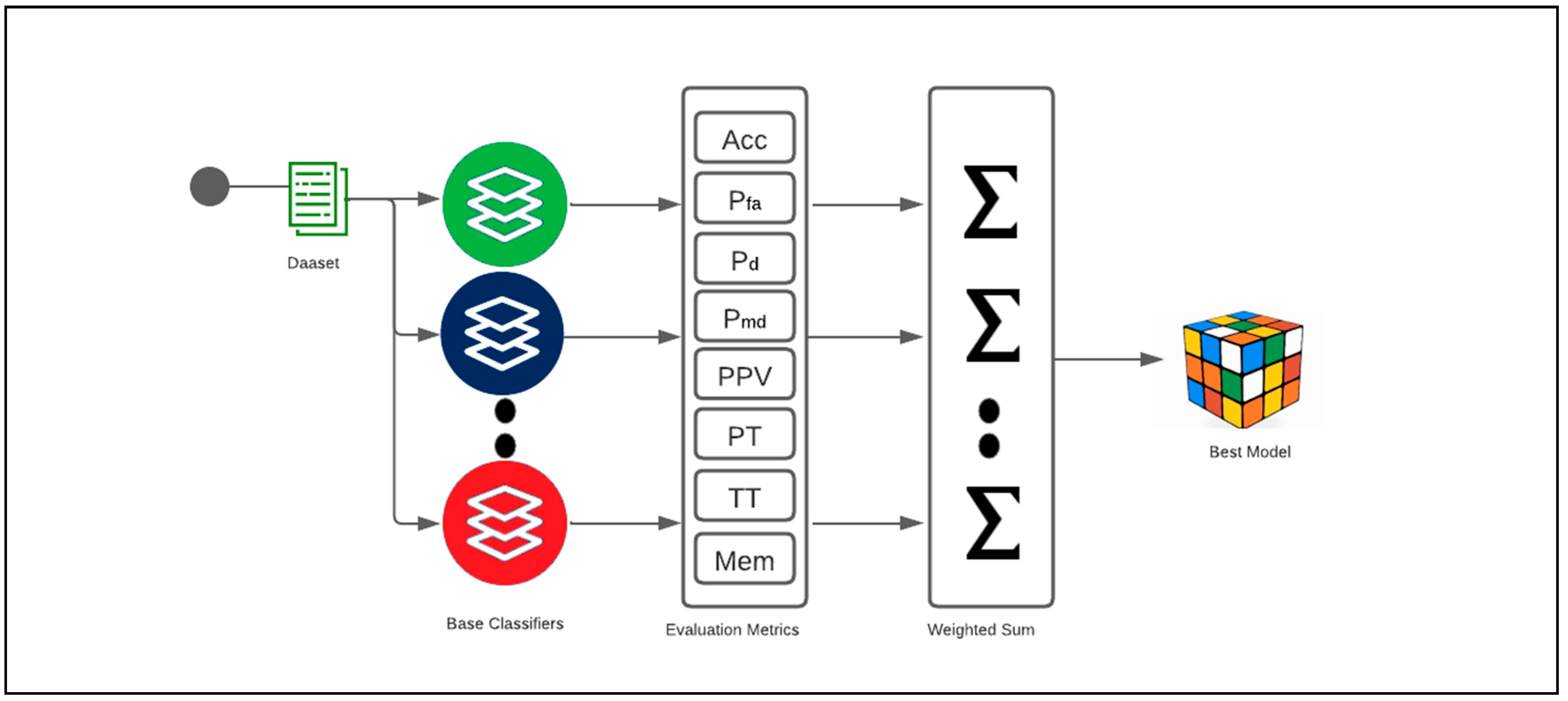

3.3. Proposed Ensemble Machine Learning Approaches

4. Simulation Results

- The original dataset was divided into multiple balanced binary datasets, one per attack type, before the data preprocessing phase. This avoids multi-class issues, simplifies classification, and reduces computation and memory costs.

- Two independent feature selection techniques, Kendall’s correlation coefficient and mutual information (MI), were used to produce feature-reduced datasets. Kendall’s correlation works best with ordinal variables, while MI measures the relevance of each individual feature to the class labels, so features with higher predictive ability have larger MI values. Reducing the number of features substantially shortens the model’s learning time and improves the classifier’s performance.

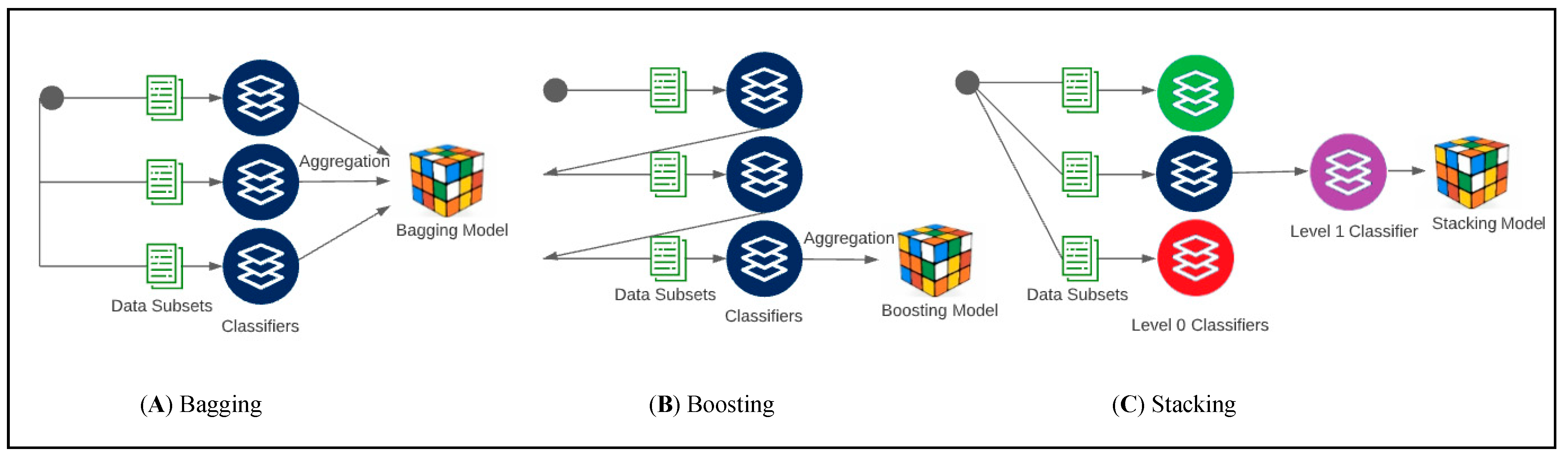

- We used three classical ensemble classifiers: Bagging, Boosting, and Stacking. The Bagging-based model was built on a decision tree algorithm, the Boosting-based model used the AdaBoost algorithm, and the Stacking-based model combined three level-0 classifiers (KNN, RF, and NB) with LR as the level-1 classifier.

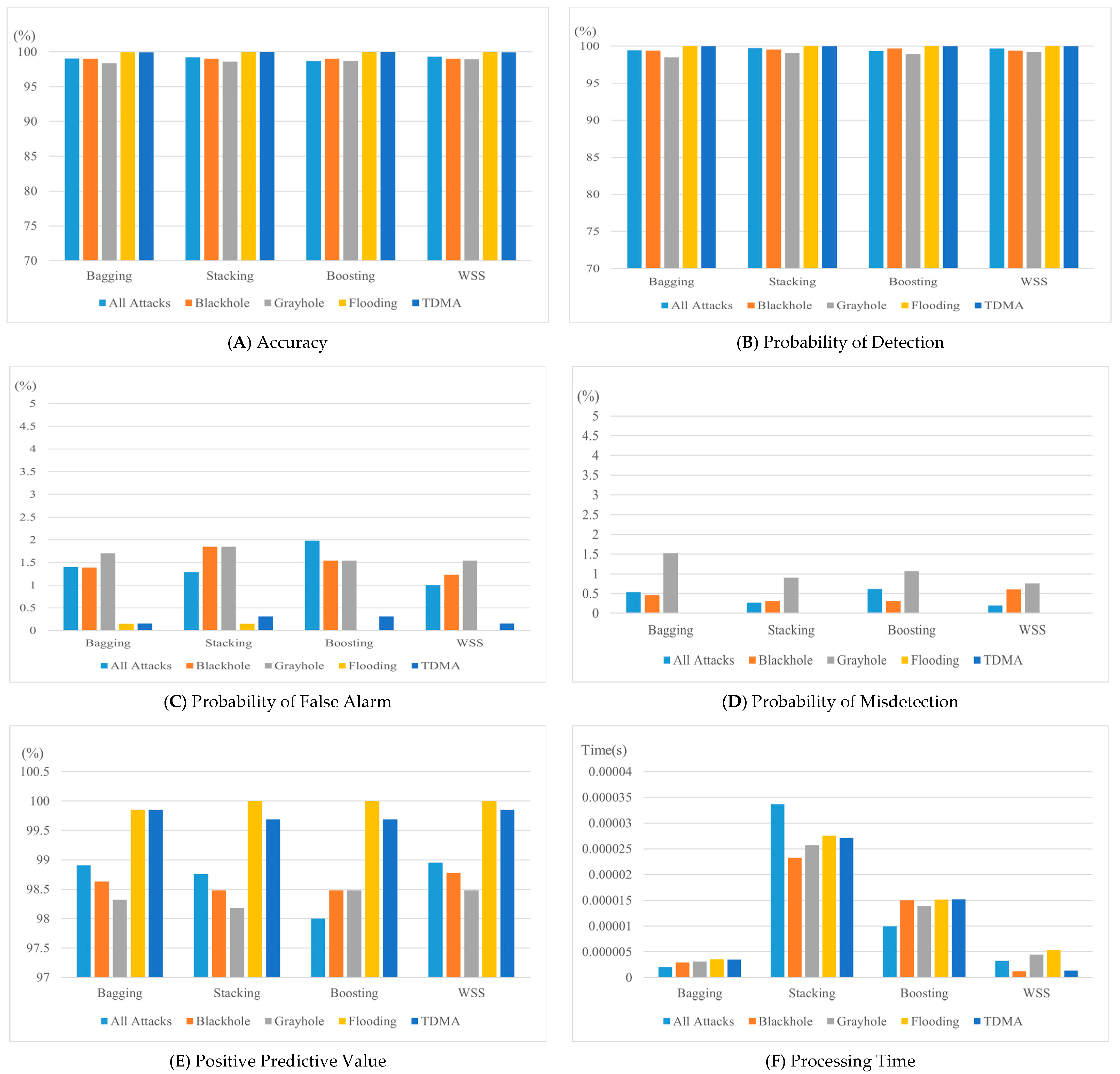

- The proposed model, WSS, achieved results comparable to those of the classical ensemble classifiers in terms of accuracy, probability of false alarm, probability of detection, probability of misdetection, positive predictive rate, processing time, and average prediction time per sample. Further analysis and testing are required to prove its effectiveness against other attack types in energy-constrained networks.

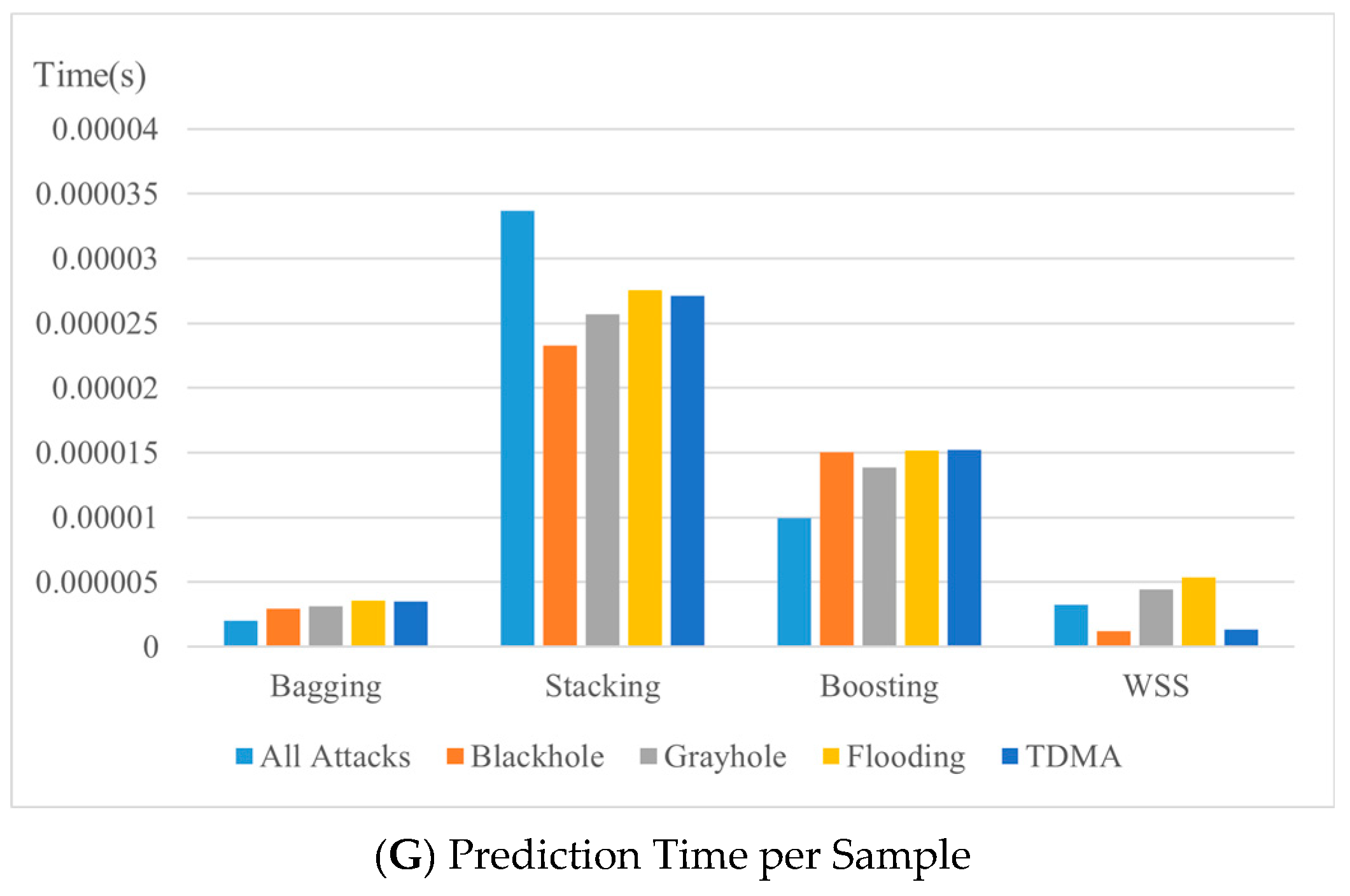

- The ensemble techniques performed well at detecting cyber-attacks targeting WSNs and achieved similar values on most metrics, except for the Stacking algorithm. Bagging had the best processing time and average prediction time per sample, while Stacking had the worst on both, making it a poor choice for detecting DoS attacks on a WSN.

- A comprehensive analysis of the results indicates that WSS, along with the other ensemble techniques, performs well at detecting most DoS attacks in terms of accuracy, probability of false alarm, probability of detection, and probability of misdetection with default hyperparameter settings. WSS and then Bagging are the best candidates for detecting WSN attacks using the labeled datasets extracted from WSN-DS, since both achieve reasonable results on the evaluation metrics used in this study. Further investigation using other datasets is required to cover other types of attacks targeting WSNs.

- WSNs have unique properties caused by constrained power, storage, and bandwidth. Under these constraints, the proposed approach, WSS, achieved promising results for identifying cyber-attacks that target WSNs. Compared to the Bagging, Boosting, and Stacking ensemble techniques, it is simpler, more diverse in its selection of base classifiers, and flexible enough to adopt any number of ML models. It also selects the appropriate ML model dynamically, which could significantly improve detection performance.
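
The evaluation metrics named in the results above can be computed from a binary confusion matrix. The following is a minimal sketch that assumes the conventional definitions (probability of detection as the true positive rate, probability of false alarm as the false positive rate, probability of misdetection as the false negative rate, and positive predictive rate as precision); the paper's exact formulas are not reproduced here. The function names, the threshold rule standing in for a trained classifier, and the sample values are all hypothetical.

```python
import time


def safe_div(num, den):
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def detection_metrics(y_true, y_pred, positive=1):
    """Compute detection metrics, treating `positive` as the attack class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    return {
        "accuracy": safe_div(tp + tn, len(pairs)),
        "p_detection": safe_div(tp, tp + fn),      # attacks correctly flagged
        "p_false_alarm": safe_div(fp, fp + tn),    # normal traffic flagged
        "p_misdetection": safe_div(fn, tp + fn),   # attacks missed
        "ppv": safe_div(tp, tp + fp),              # positive predictive rate
    }


def average_prediction_time(predict, samples):
    """Return the predictions and the mean wall-clock seconds per sample."""
    start = time.perf_counter()
    predictions = [predict(s) for s in samples]
    elapsed = time.perf_counter() - start
    return predictions, safe_div(elapsed, len(samples))


def threshold_rule(sample):
    """Hypothetical stand-in for a trained classifier."""
    return 1 if sample > 0.5 else 0


# Made-up scores and labels, used only to exercise the functions.
samples = [0.9, 0.8, 0.7, 0.2, 0.1, 0.3, 0.4, 0.0, 0.6, 0.2]
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

y_pred, avg_time = average_prediction_time(threshold_rule, samples)
metrics = detection_metrics(y_true, y_pred)
print(metrics)
print(f"average prediction time per sample: {avg_time:.2e} s")
```

Probability of detection and probability of misdetection sum to one under these definitions, so reporting both is a readability choice rather than extra information.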

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | Class | Size | Proportion (%) | Total |
|---|---|---|---|---|
| Original Imbalanced Dataset | Normal | 340,066 | 90.77 | 374,661 |
| | Grayhole | 14,596 | 3.90 | |
| | Blackhole | 10,049 | 2.68 | |
| | Flooding | 6638 | 1.77 | |
| | Scheduling | 3312 | 0.88 | |
| Extracted Balanced Dataset | Normal | 13,000 | 50 | 26,000 |
| | Grayhole | 3250 | 12.5 | |
| | Blackhole | 3250 | 12.5 | |
| | Flooding | 3250 | 12.5 | |
| | Scheduling | 3250 | 12.5 | |

| Dataset | Class Type | Size | Proportion (%) | Total Size |
|---|---|---|---|---|
| Grayhole Dataset | Normal | 3250 | 50 | 6500 |
| | Grayhole | 3250 | 50 | |
| Blackhole Dataset | Normal | 3250 | 50 | 6500 |
| | Blackhole | 3250 | 50 | |
| Flooding Dataset | Normal | 3250 | 50 | 6500 |
| | Flooding | 3250 | 50 | |
| TDMA Dataset | Normal | 3250 | 50 | 6500 |
| | TDMA | 3250 | 50 | |
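
The construction of one balanced binary dataset per attack type can be sketched as follows. This is an illustration only: it assumes random undersampling without replacement, which the text does not confirm as the sampling method, and the function name `make_balanced_binary`, the `label_key` parameter, and the toy records are hypothetical stand-ins for WSN-DS rows.

```python
import random
from collections import Counter


def make_balanced_binary(records, attack, per_class,
                         label_key="Attack type", seed=0):
    """Draw `per_class` Normal rows and `per_class` rows of one attack class."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    normal = [r for r in records if r[label_key] == "Normal"]
    attacks = [r for r in records if r[label_key] == attack]
    if len(normal) < per_class or len(attacks) < per_class:
        raise ValueError("not enough rows to draw a balanced sample")
    subset = rng.sample(normal, per_class) + rng.sample(attacks, per_class)
    rng.shuffle(subset)  # avoid all Normal rows preceding the attack rows
    return subset


# Toy imbalanced data; the class sizes here are made up for illustration.
toy = (
    [{"ID": i, "Attack type": "Normal"} for i in range(900)]
    + [{"ID": i, "Attack type": "Grayhole"} for i in range(900, 960)]
    + [{"ID": i, "Attack type": "Blackhole"} for i in range(960, 1000)]
)

grayhole_ds = make_balanced_binary(toy, "Grayhole", per_class=30)
counts = Counter(r["Attack type"] for r in grayhole_ds)
print(counts["Normal"], counts["Grayhole"])
```

With the real data, `per_class=3250` would reproduce the 6500-row totals listed in the table above.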

| Feature | Description |
|---|---|
| Time | Current simulation time of the sensor node |
| ID | Unique number to identify the node during the simulation period |
| Is_CH | Flag bit to determine whether the node is CH or normal |
| Who CH | Unique number to identify the CH of the current round |
| Dist_To_CH | Distance between the node and its CH in the current round |
| ADV_S | Number of advertised broadcast messages sent to the nodes by the CH |
| ADV_R | Number of advertised broadcast messages received from the CHs by nodes |
| JOIN_S | Number of join request messages sent by the nodes to the CH |
| JOIN_R | Number of join request messages received from the nodes by the CH |
| DATA_S | Number of data packets sent to the CH from the node |
| DATA_R | Number of data packets received from the CH |
| Data_Sent_To_BS | Number of data packets sent toward the BS |
| dist_CH_To_BS | Distance between the CH and the BS |
| SCH_S | Number of advertised TDMA schedule broadcast messages sent to the nodes |
| SCH_R | Number of advertised TDMA schedule messages received from the CHs |
| Rank | Order of the node in the TDMA schedule |
| send_code | A special code for data exchange |
| Consumed Energy | Estimated energy consumed during the previous round |
| Attack type | Grayhole, Blackhole, Flooding, Scheduling |

| Dataset | Removed Features |
|---|---|
| All_Attacks Dataset | Data_Sent_To_BS, dist_CH_To_BS |
| Grayhole Class Dataset | SCH_S, JOIN_R, DATA_R |
| Blackhole Class Dataset | Data_Sent_To_BS, dist_CH_To_BS, SCH_S, DATA_R, JOIN_R |
| Flooding Class Dataset | JOIN_R, SCH_S, DATA_R |
| Scheduling Class Dataset | dist_CH_To_BS, Data_Sent_To_BS, ADV_R, DATA_R |
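
The two feature selection measures behind removal lists like the one above can be sketched in plain Python. This is a hedged illustration, not the authors' pipeline: it assumes Kendall's tau-b is used to flag redundant feature pairs and that mutual information is computed on discrete feature values, and the 0.9 threshold, the feature names `feat_a`, `feat_b`, `feat_c`, and the toy values are all hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def kendall_tau_b(x, y):
    """Kendall's tau-b rank correlation (O(n^2) pairwise version)."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0:
            ties_x += 1
        if dy == 0:
            ties_y += 1
        if dx * dy > 0:
            concordant += 1
        elif dx * dy < 0:
            discordant += 1
    n_pairs = len(x) * (len(x) - 1) // 2
    denom = math.sqrt((n_pairs - ties_x) * (n_pairs - ties_y))
    return (concordant - discordant) / denom if denom else 0.0


def mutual_information(feature, labels):
    """Mutual information in bits between a discrete feature and the labels."""
    n = len(labels)
    joint = Counter(zip(feature, labels))
    p_feature = Counter(feature)
    p_label = Counter(labels)
    return sum(
        (c / n) * math.log2(c * n / (p_feature[a] * p_label[b]))
        for (a, b), c in joint.items()
    )


# Toy data: feat_b is a monotone copy of feat_a; feat_c ignores the label.
labels = [0, 0, 0, 0, 1, 1, 1, 1]
features = {
    "feat_a": [0, 0, 1, 0, 3, 4, 3, 5],
    "feat_b": [0, 0, 2, 0, 6, 8, 6, 10],
    "feat_c": [1, 2, 1, 2, 1, 2, 1, 2],
}

# Relevance of each feature to the class label.
mi_scores = {name: mutual_information(col, labels)
             for name, col in features.items()}

# Feature pairs that carry nearly the same ranking information.
redundant_pairs = [
    (a, b) for a, b in combinations(features, 2)
    if abs(kendall_tau_b(features[a], features[b])) >= 0.9
]

print(mi_scores)
print(redundant_pairs)
```

In this toy case `feat_c` has zero mutual information with the label and `feat_b` duplicates the ranking of `feat_a`, so either measure alone would nominate a different feature for removal, which is one reason to apply them independently.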


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ismail, S.; El Mrabet, Z.; Reza, H. An Ensemble-Based Machine Learning Approach for Cyber-Attacks Detection in Wireless Sensor Networks. Appl. Sci. 2023, 13, 30. https://doi.org/10.3390/app13010030
