Fine-Grained Sentiment-Controlled Text Generation Approach Based on Pre-Trained Language Model

Abstract

1. Introduction

- We propose a conditional generative model that extends a pre-trained, state-of-the-art Transformer-based generative model with a query-hint mechanism and a sentiment control loss function, both of which guide text generation at a finer-grained level.

- To better model a text-to-text schema, we introduce the aspect-opinion pair as the fine-grained sentiment unit that controls constrained text generation.

- By employing an auxiliary classifier, we leverage a large unannotated dataset to re-train and fine-tune an end-to-end conditional text generation model.

2. Related Work

2.1. Controlled Text Generation

2.2. Review Generation

2.3. Aspect-Level Sentiment Control

3. Method

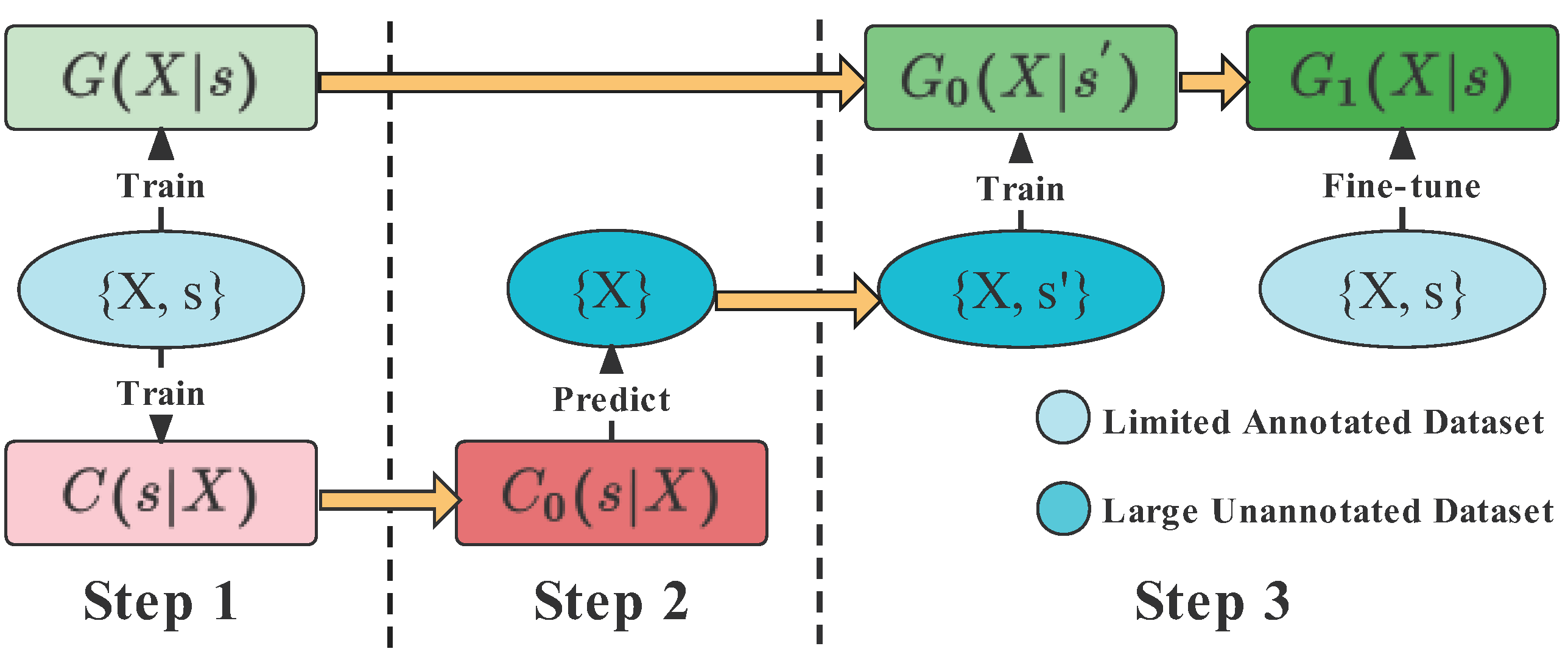

3.1. Main Framework

- Step 1: We train both the generator and the classifier on a limited labeled dataset to obtain G0 and C0, respectively.

- Step 2: C0 is then used to extract the fine-grained sentiments from the large unlabeled dataset, yielding pseudo labels for the next step's training.

- Step 3: The generator is trained again, this time on the unlabeled dataset with its pseudo labels attached. Finally, the generator is fine-tuned on the labeled dataset (used in Step 1) to obtain the final generator G1.
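
The three steps above can be sketched as a small self-training loop. This is only an illustration of the data flow, not the authors' implementation: `ToyGenerator`, `ToyClassifier`, `Example`, and `train_pipeline` are hypothetical stand-ins, the classifier simply memorises aspect-opinion pairs, and two choices are assumptions not stated in the text (Step 3 continues from G0, and unlabeled texts with no extracted pair are dropped).

```python
import re
from dataclasses import dataclass
from typing import List, Tuple

Pair = Tuple[str, str]  # (aspect, opinion)


@dataclass
class Example:
    text: str
    pairs: List[Pair]


class ToyClassifier:
    """Hypothetical stand-in for the classifier: memorises labeled pairs."""

    def __init__(self) -> None:
        self.known: set = set()

    def fit(self, data: List[Example]) -> "ToyClassifier":
        for ex in data:
            self.known.update(ex.pairs)
        return self

    def extract(self, text: str) -> List[Pair]:
        # A pair is "found" when both of its words occur in the text.
        tokens = set(re.findall(r"[a-z]+", text.lower()))
        return [(a, o) for (a, o) in sorted(self.known)
                if a in tokens and o in tokens]


class ToyGenerator:
    """Hypothetical stand-in for the generator: records its training stages."""

    def __init__(self) -> None:
        self.history: List[Tuple[str, int]] = []

    def fit(self, data: List[Example], stage: str) -> "ToyGenerator":
        self.history.append((stage, len(data)))
        return self


def train_pipeline(labeled: List[Example], unlabeled_texts: List[str]):
    # Step 1: train generator and classifier on the labeled data (G0, C0).
    generator = ToyGenerator().fit(labeled, "step1_labeled")
    c0 = ToyClassifier().fit(labeled)

    # Step 2: C0 produces pseudo labels for the unlabeled texts.
    pseudo = [Example(t, c0.extract(t)) for t in unlabeled_texts]
    pseudo = [ex for ex in pseudo if ex.pairs]  # assumption: skip empty labels

    # Step 3: train on pseudo-labeled data, then fine-tune on labeled data (G1).
    generator.fit(pseudo, "step3_pseudo")
    generator.fit(labeled, "step3_finetune")
    return generator, pseudo
```

In a real setup the two toy classes would be replaced by the actual generator and classifier; only the ordering of the stages is taken from the description above.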

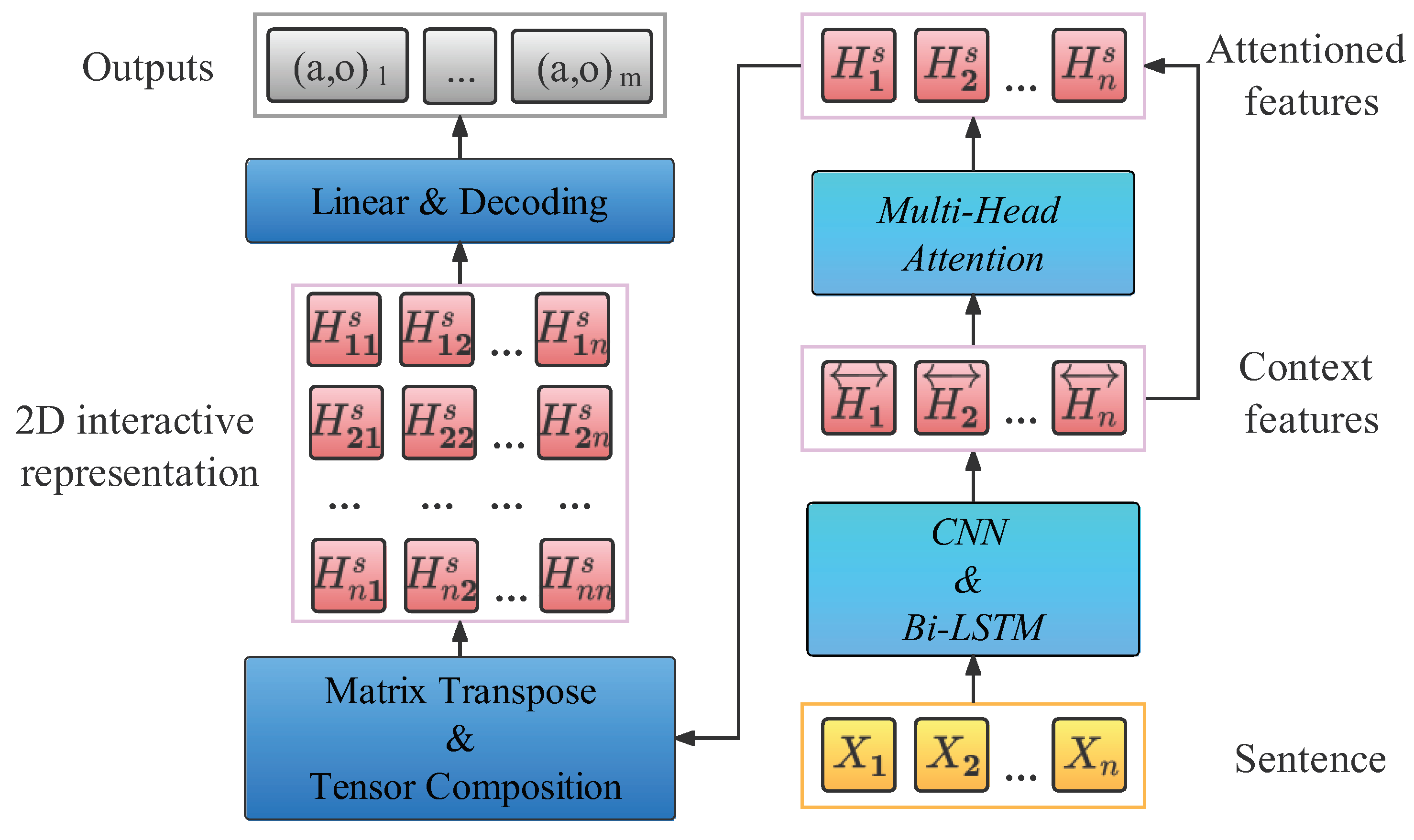

3.2. Generator

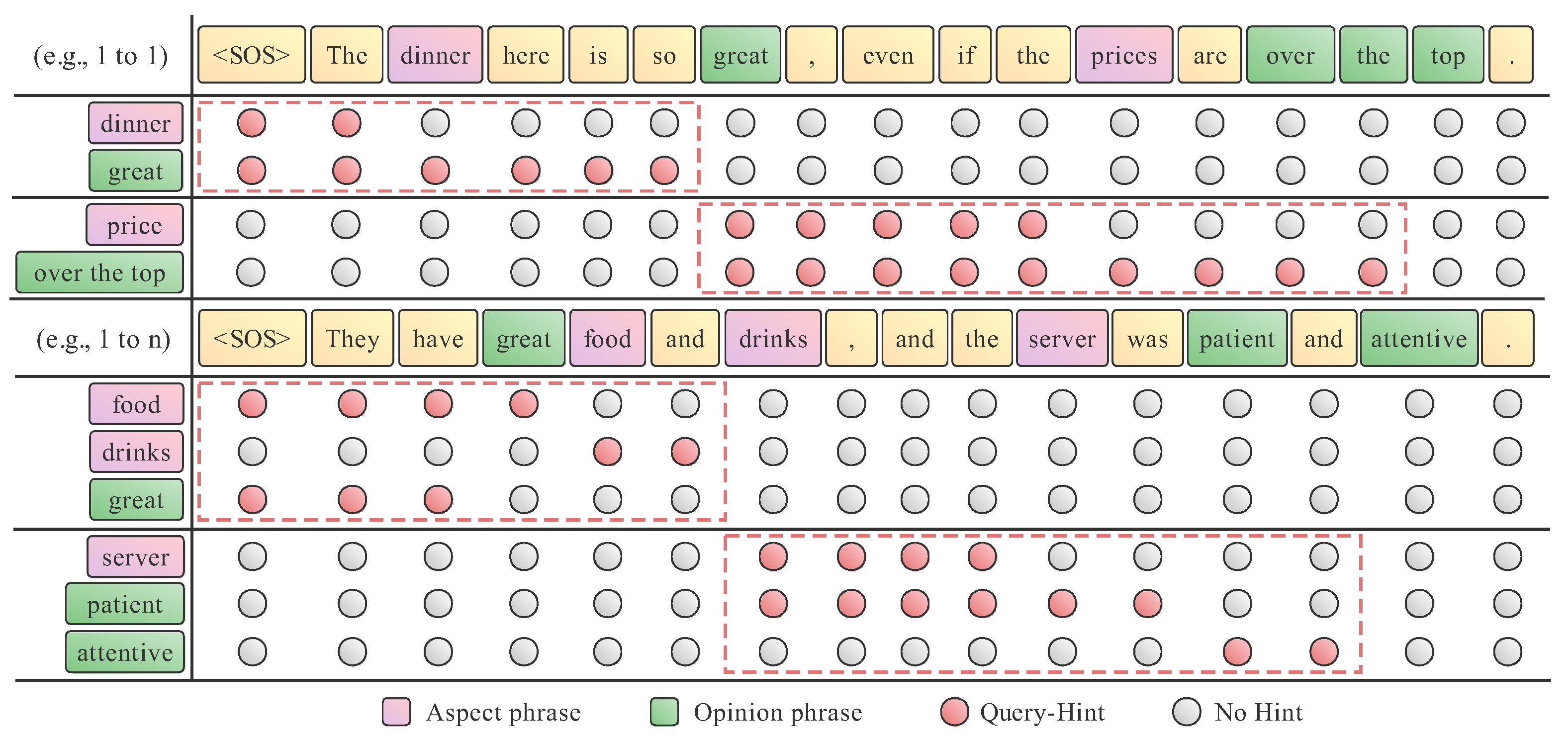

3.3. Query-Hint Mechanism

3.4. Loss Functions

3.5. Classifier

4. Experiments

4.1. Dataset and Settings

4.1.1. Labeled Dataset

4.1.2. Unlabeled Dataset

4.1.3. Experimental Settings

4.2. Baselines

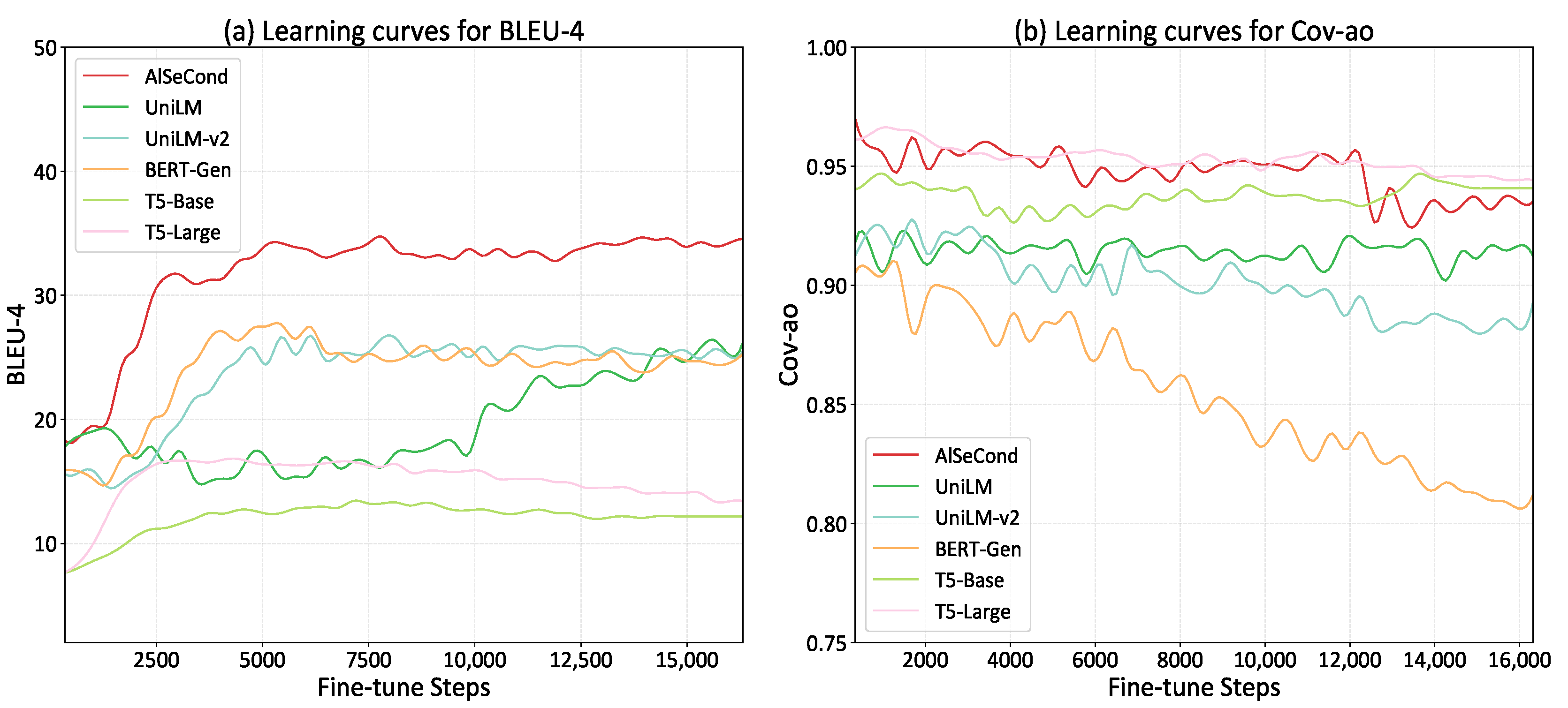

4.3. Generated Quality Evaluation

4.3.1. Fluency and Diversity Evaluation

4.3.2. Sentiment Evaluation

4.4. Case Study

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LMs | Language Models |
| NLG | Natural Language Generation |
| GPT | Generative Pre-Training |
| PPLM | Plug and Play Language Model |
| CTRL | Conditional-Transformer-Language |
| CoCon | Content-Conditioner |
| FSCTG | Fine-grained Sentiment-Controlled Text Generation |
| A2T | Attribute-to-Text |
| AT2T | Attribute-matched-Text-to-Text |
| HTT | Hierarchical Template-Transformer |
| AlSeCond | Aspect-level Sentiment Conditioner |
| AOPE | Aspect Opinion Pair Extraction |
| 2D-IMLF | Two-Dimensional Interaction-Based Multi-task Learning Framework |
| CNN | Convolutional Neural Networks |
| Bi-LSTM | Bidirectional Long Short-Term Memory |
| Val | Validation |
| AST | Aspect Sentiment Triplet |
| ASTE | Aspect Sentiment Triplet Extraction |
| MAMS-ASTA | Multi-Aspect Multi-Sentiment Aspect-Term Sentiment Analysis |
| BPE | Byte Pair Encoding |
| GTS | Grid Tagging Scheme |
| GloVe | Global Vectors |
| UniLM | Unified Language Model |
| BERT | Bidirectional Encoder Representations from Transformers |
| BLEU | Bilingual Evaluation Understudy |
| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |
| METEOR | Metric for Evaluation of Translation with Explicit Ordering |
| Dist | Distinct |
| Cov | Coverage |
| Acc. | Accuracy |

| Dataset | Split | #Instance | #Positive | #Neutral | #Negative | Sentiment Form |
|---|---|---|---|---|---|---|
| ASTE-Data-V2-Rest | Train | 2728 | 3490 | 241 | 1014 | Aspect-Opinion-Polarity |
| ASTE-Data-V2-Rest | Val | 668 | 841 | 76 | 248 | Aspect-Opinion-Polarity |
| ASTE-Data-V2-Rest | Test | 1140 | 1497 | 120 | 376 | Aspect-Opinion-Polarity |
| MAMS-ASTA | Train | 4297 | 3380 | 5042 | 2764 | Aspect-Polarity |
| MAMS-ASTA | Val | 500 | 403 | 604 | 325 | Aspect-Polarity |
| MAMS-ASTA | Test | 500 | 400 | 607 | 329 | Aspect-Polarity |
| Yelp | - | 1,160,546 | - | - | - | - |

| Dataset | Models | BLEU-3 (↑) | BLEU-4 (↑) | METEOR (↑) | ROUGE-L (↑) | Self-BLEU-4 (↓) | Dist-1 (↑) | Dist-2 (↑) | Dist-3 (↑) |
|---|---|---|---|---|---|---|---|---|---|
| ASTE-Data-V2 | PPLM | 0.196 | 0.032 | 14.078 | 13.827 | 7.939 | 0.0841 | 0.4102 | 0.7180 |
| ASTE-Data-V2 | HTT | 13.100 | 7.656 | 34.899 | 42.544 | 42.664 | 0.0525 | 0.2356 | 0.4113 |
| ASTE-Data-V2 | T5-base | 21.246 | 13.216 | 29.007 | 41.092 | 22.580 | 0.1621 | 0.4725 | 0.6101 |
| ASTE-Data-V2 | T5-large | 24.747 | 16.462 | 29.986 | 43.614 | 23.045 | 0.1721 | 0.4658 | 0.5934 |
| ASTE-Data-V2 | UniLM | 33.093 | 27.486 | 46.808 | 52.582 | 20.334 | 0.1489 | 0.4961 | 0.6663 |
| ASTE-Data-V2 | BERT-Gen | 32.693 | 28.050 | 45.223 | 45.162 | 24.149 | 0.1450 | 0.4957 | 0.6411 |
| ASTE-Data-V2 | UniLM-v2 | 32.159 | 27.525 | 45.107 | 44.514 | 22.830 | 0.1451 | 0.5060 | 0.6553 |
| ASTE-Data-V2 | AlSeCond | 40.453 | 34.611 | 55.127 | 63.720 | 15.972 | 0.1610 | 0.5439 | 0.7073 |
| ASTE-Data-V2 | ⌊ w/o sentiment loss | 37.961 | 32.190 | 55.699 | 62.911 | 16.195 | 0.1552 | 0.5301 | 0.7028 |
| ASTE-Data-V2 | ⌊ w/o query-hint | 34.305 | 29.080 | 55.391 | 61.237 | 14.442 | 0.1551 | 0.5431 | 0.7264 |
| ASTE-Data-V2 | ⌊ w/o unlabeled dataset | 29.085 | 26.387 | 42.601 | 48.213 | 21.727 | 0.1444 | 0.4942 | 0.6628 |
| MAMS-ASTA | HTT | 2.279 | 0.412 | 17.193 | 23.197 | 51.373 | 0.0602 | 0.2271 | 0.4003 |
| MAMS-ASTA | T5-base | 3.653 | 1.479 | 14.400 | 24.181 | 27.671 | 0.1299 | 0.3761 | 0.5541 |
| MAMS-ASTA | T5-large | 4.212 | 1.767 | 15.180 | 25.828 | 27.626 | 0.1418 | 0.3761 | 0.5591 |
| MAMS-ASTA | UniLM | 3.178 | 1.251 | 18.833 | 23.872 | 37.890 | 0.1032 | 0.3211 | 0.4878 |
| MAMS-ASTA | BERT-Gen | 4.003 | 1.605 | 17.751 | 24.162 | 28.284 | 0.1284 | 0.4024 | 0.5778 |
| MAMS-ASTA | UniLM-v2 | 3.898 | 1.559 | 17.757 | 23.999 | 27.858 | 0.1255 | 0.3989 | 0.5796 |
| MAMS-ASTA | AlSeCond | 5.159 | 2.113 | 19.736 | 31.738 | 13.714 | 0.1627 | 0.5085 | 0.6811 |
| MAMS-ASTA | ⌊ w/o sentiment loss | 4.944 | 1.999 | 23.734 | 31.302 | 14.112 | 0.1477 | 0.4978 | 0.7171 |
| MAMS-ASTA | ⌊ w/o query-hint | 4.208 | 1.635 | 23.661 | 29.497 | 10.835 | 0.1604 | 0.5538 | 0.7653 |
| MAMS-ASTA | ⌊ w/o unlabeled dataset | 3.458 | 1.026 | 20.761 | 28.924 | 15.787 | 0.1478 | 0.4728 | 0.6627 |
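
For readers unfamiliar with the Dist-1, Dist-2, and Dist-3 columns above, Distinct-n is commonly computed as the number of unique n-grams divided by the total number of n-grams in the generated texts. The sketch below shows that common definition; the function name `distinct_n`, the whitespace tokenisation, and the corpus-level pooling are assumptions and may differ from the exact setup used for the reported numbers.

```python
from typing import Iterable


def distinct_n(texts: Iterable[str], n: int) -> float:
    """Ratio of unique n-grams to total n-grams, pooled over all texts."""
    total = 0
    unique = set()
    for text in texts:
        tokens = text.lower().split()  # assumption: whitespace tokenisation
        ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(ngrams)
        unique.update(ngrams)
    # Guard against inputs too short to contain any n-gram.
    return len(unique) / total if total else 0.0


samples = ["the food was great", "the food was bad"]
print(distinct_n(samples, 1))  # 5 unique unigrams out of 8
print(distinct_n(samples, 2))  # 4 unique bigrams out of 6
```

Higher values indicate less repetition across the generated texts, which is why the columns are marked with an upward arrow.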

| Dataset | Models | Cov-a | Cov-o | Cov-ao | Acc. |
|---|---|---|---|---|---|
| ASTE-Data-V2 | PPLM | 0.3597 | 0.3642 | 0.1094 | 0.1761 |
| ASTE-Data-V2 | HTT | 0.7689 | 0.7773 | 0.6050 | 0.6328 |
| ASTE-Data-V2 | T5-base | 0.9563 | 0.9764 | 0.9403 | 0.7812 |
| ASTE-Data-V2 | T5-large | 0.9633 | 0.9839 | 0.9508 | 0.7948 |
| ASTE-Data-V2 | UniLM | 0.9513 | 0.9568 | 0.9182 | 0.7450 |
| ASTE-Data-V2 | BERT-Gen | 0.9352 | 0.9343 | 0.8886 | 0.7521 |
| ASTE-Data-V2 | UniLM-v2 | 0.9438 | 0.9488 | 0.9087 | 0.7475 |
| ASTE-Data-V2 | AlSeCond | 0.9824 | 0.9849 | 0.9734 | 0.7771 |
| ASTE-Data-V2 | ⌊ w/o sentiment loss | 0.9633 | 0.9649 | 0.9468 | 0.7683 |
| ASTE-Data-V2 | ⌊ w/o query-hint | 0.9412 | 0.9313 | 0.8966 | 0.7443 |
| ASTE-Data-V2 | ⌊ w/o unlabeled dataset | 0.8158 | 0.8841 | 0.7556 | 0.6306 |
| MAMS-ASTA | HTT | 0.7203 | 0.5123 | 0.3800 | 0.4532 |
| MAMS-ASTA | T5-base | 0.9610 | 0.9147 | 0.9042 | 0.5734 |
| MAMS-ASTA | T5-large | 0.9738 | 0.9453 | 0.9416 | 0.5698 |
| MAMS-ASTA | UniLM | 0.9251 | 0.7821 | 0.7590 | 0.5883 |
| MAMS-ASTA | BERT-Gen | 0.9438 | 0.8009 | 0.7807 | 0.6048 |
| MAMS-ASTA | UniLM-v2 | 0.9341 | 0.7515 | 0.7305 | 0.6310 |
| MAMS-ASTA | AlSeCond | 0.9798 | 0.9588 | 0.9558 | 0.6267 |
| MAMS-ASTA | ⌊ w/o sentiment loss | 0.9318 | 0.8952 | 0.8825 | 0.6050 |
| MAMS-ASTA | ⌊ w/o query-hint | 0.8338 | 0.6811 | 0.6257 | 0.5447 |
| MAMS-ASTA | ⌊ w/o unlabeled dataset | 0.7829 | 0.7095 | 0.6325 | 0.5157 |
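
The Cov-a, Cov-o, and Cov-ao columns above are not defined in this section. One plausible reading, offered here purely as an assumption, is the fraction of requested aspect terms, opinion terms, and complete aspect-opinion pairs that actually appear in the generated text. The sketch below implements that reading with simple case-insensitive substring matching; the function name `coverage` and the matching rule are hypothetical.

```python
from typing import Dict, List, Tuple

Pair = Tuple[str, str]  # (aspect, opinion)


def coverage(samples: List[Tuple[str, List[Pair]]]) -> Dict[str, float]:
    """Share of requested aspects, opinions, and full pairs found in the text.

    Each sample is (generated_text, requested_pairs). Matching is a plain
    lowercase substring test, which is an assumption of this sketch.
    """
    hits = {"cov_a": 0, "cov_o": 0, "cov_ao": 0}
    total = 0
    for text, pairs in samples:
        lowered = text.lower()
        for aspect, opinion in pairs:
            has_aspect = aspect.lower() in lowered
            has_opinion = opinion.lower() in lowered
            hits["cov_a"] += has_aspect
            hits["cov_o"] += has_opinion
            hits["cov_ao"] += has_aspect and has_opinion
            total += 1
    # With no requested pairs, report zero coverage rather than dividing by 0.
    return {name: (count / total if total else 0.0)
            for name, count in hits.items()}
```

Under this reading, Cov-ao can never exceed either Cov-a or Cov-o, which is consistent with every row of the table.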

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhu, L.; Xu, Y.; Zhu, Z.; Bao, Y.; Kong, X. Fine-Grained Sentiment-Controlled Text Generation Approach Based on Pre-Trained Language Model. Appl. Sci. 2023, 13, 264. https://doi.org/10.3390/app13010264
