Evaluation of Vision-Based Hand Tool Tracking Methods for Quality Assessment and Training in Human-Centered Industry 4.0

Abstract

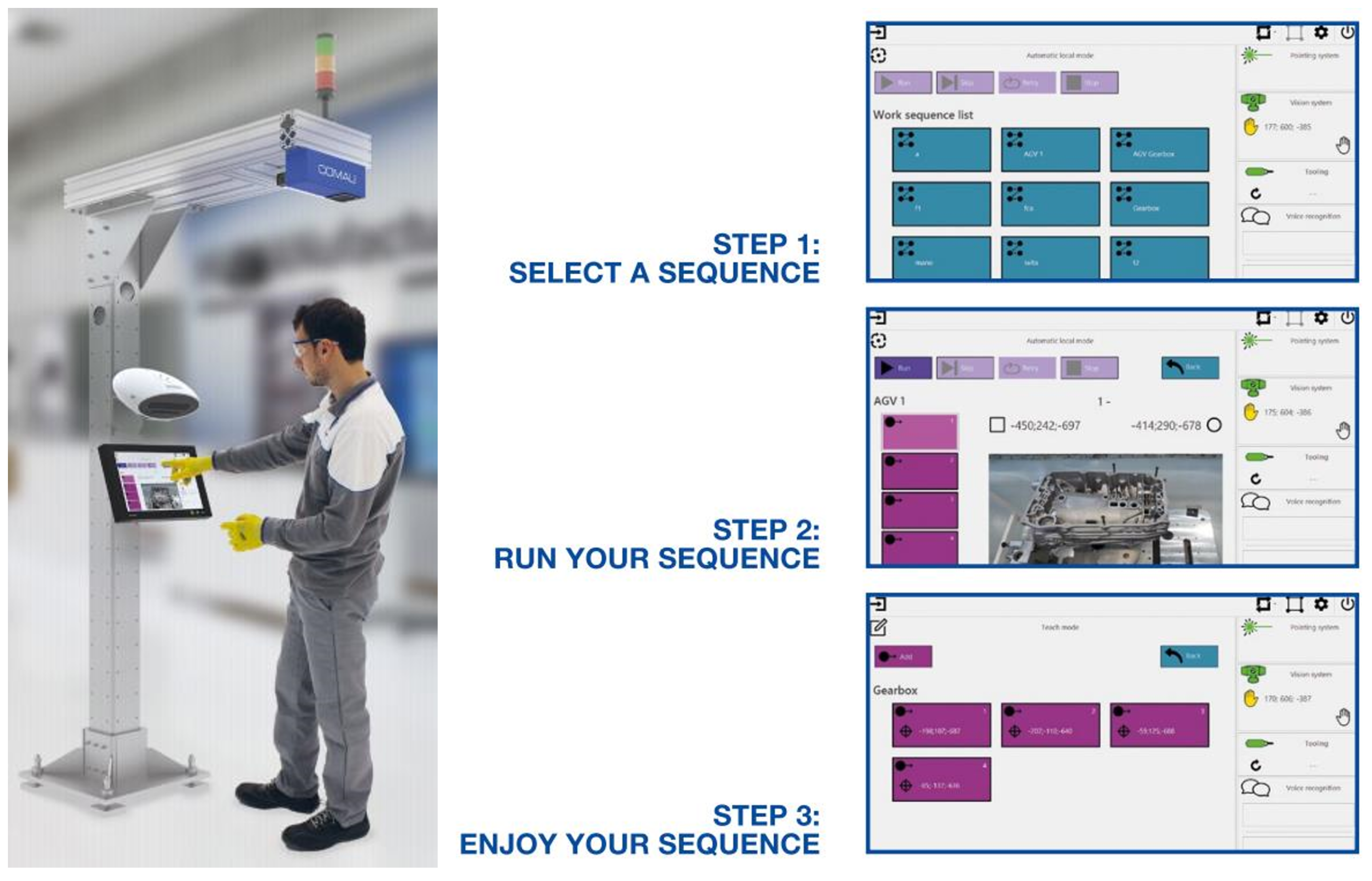

1. Introduction

- Training an operator on assembly and maintenance procedures with a recorded sequence of actions;

- Tracking an operator activity to validate each manual operation and certify the quality of the job;

- Detecting risky actions and behaviors in time and alerting the operator;

- Monitoring ergonomics during the procedure so that the operator is always offered the least stressful posture for performing the operations.

- Real-time execution;

- High accuracy.

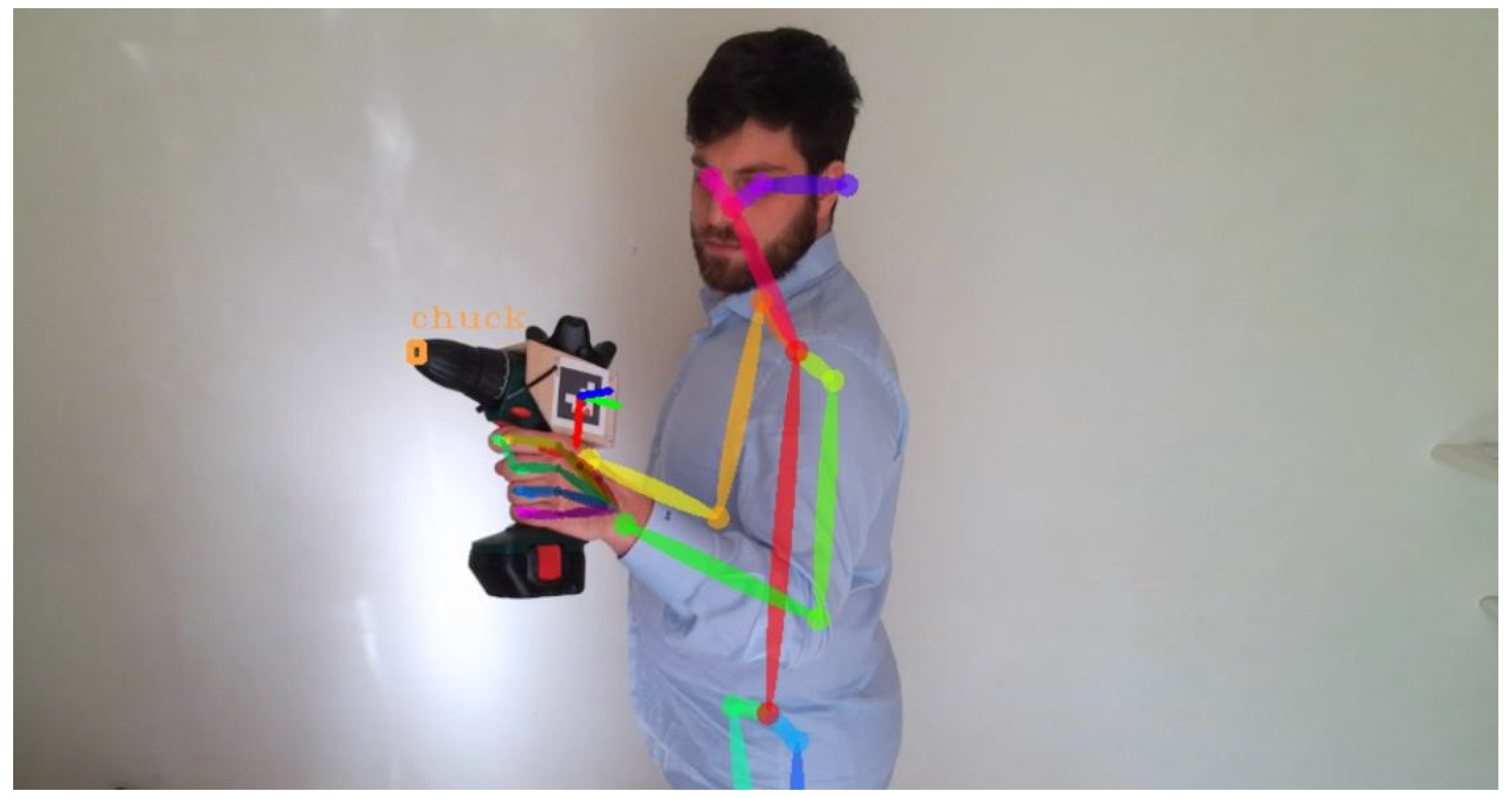

2. Materials and Methods

2.1. Systems for Hand Tool Tracking


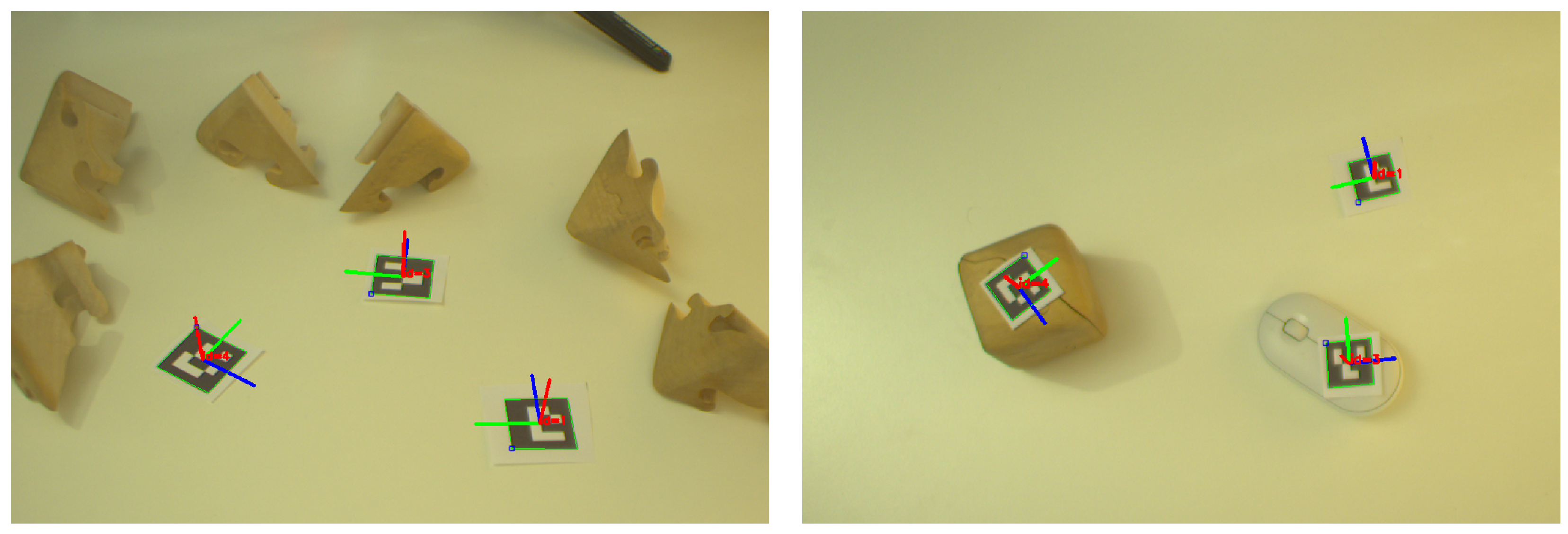

2.1.1. ArUco-Based System

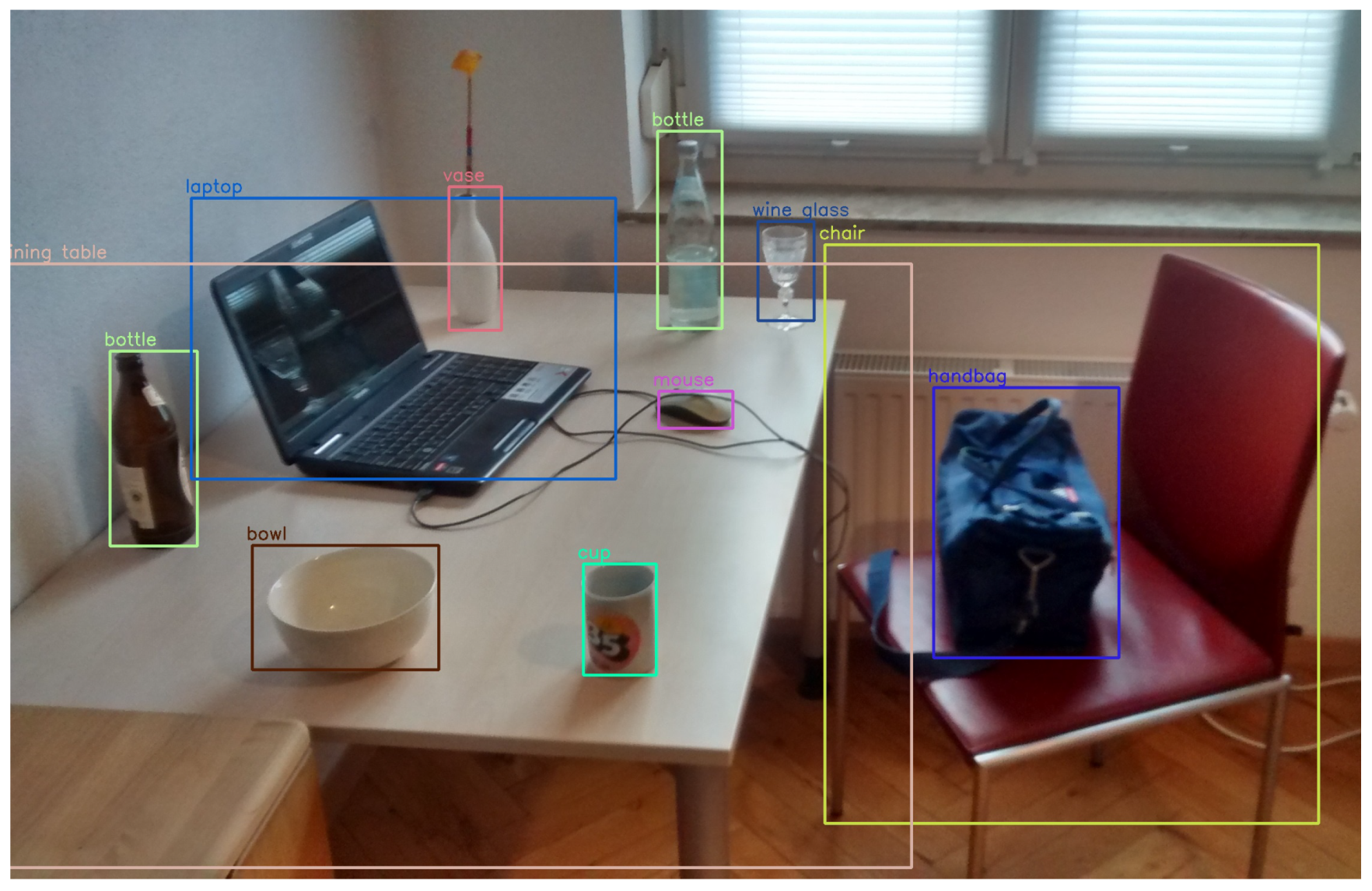

2.1.2. System Based on Deep Detection Model (YOLO)

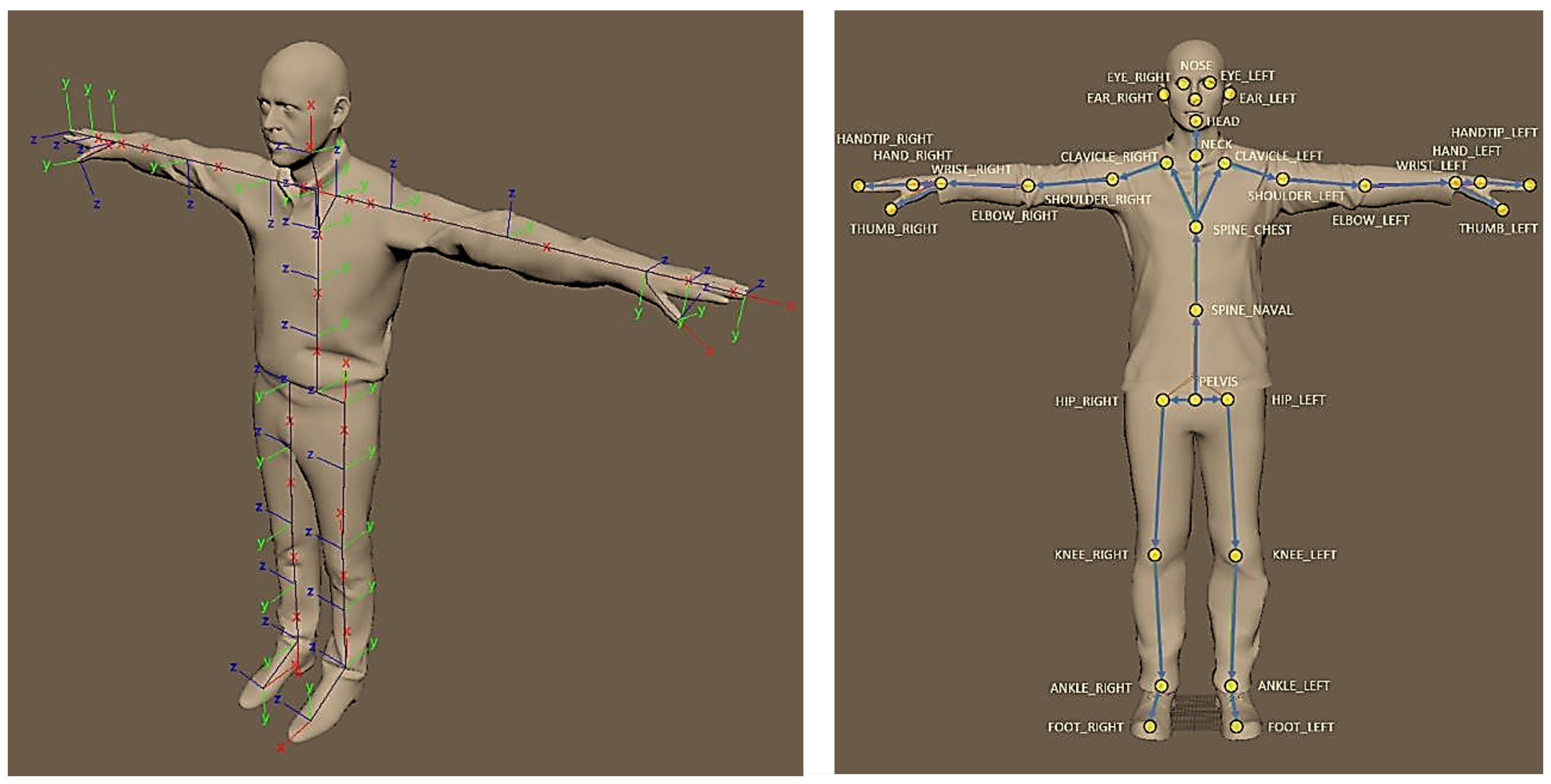

2.1.3. System Based on Azure Kinect Body Tracking

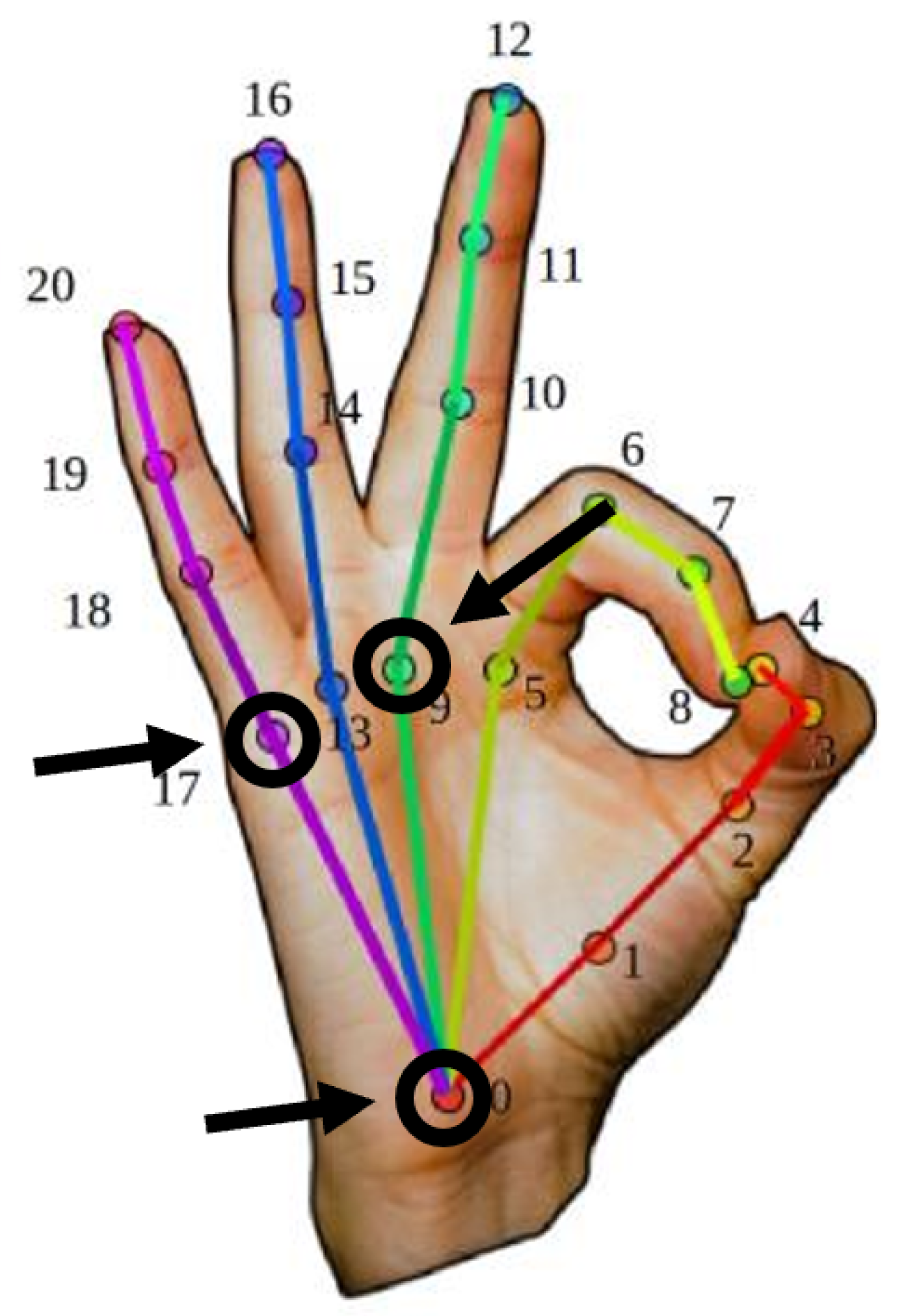

2.1.4. System Based on OpenPose

- Keypoint no. 0 (wrist);

- Keypoint no. 9 (middle finger knuckle);

- Keypoint no. 17 (little finger knuckle).
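
One way three such keypoints could be combined is to build a hand-attached reference frame from them. The sketch below is only an illustration under stated assumptions, not the procedure used in this work: it assumes the three keypoints are already available as 3D points in a common coordinate system, and the function name `hand_frame` and the axis convention are hypothetical.

```python
import math


def sub(a, b):
    """Component-wise difference a - b of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a vector to unit length; fail on a zero-length vector."""
    n = math.sqrt(sum(x * x for x in v))
    if n == 0.0:
        raise ValueError("degenerate keypoint configuration")
    return tuple(x / n for x in v)


def hand_frame(wrist, middle_knuckle, little_knuckle):
    """Return (origin, (x_axis, y_axis, z_axis)) for the hand.

    Assumed convention (hypothetical): the origin is the wrist, the x axis
    points from the wrist to the middle finger knuckle, the z axis is normal
    to the plane through the three keypoints, and y completes the
    right-handed frame.
    """
    x_axis = normalize(sub(middle_knuckle, wrist))
    z_axis = normalize(cross(x_axis, sub(little_knuckle, wrist)))
    y_axis = cross(z_axis, x_axis)
    return wrist, (x_axis, y_axis, z_axis)


# Example with made-up coordinates (keypoints 0, 9, and 17).
origin, axes = hand_frame((0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (1.0, 1.0, 0.0))
print(origin, axes)
```

Three non-collinear points are the minimum needed to fix both position and orientation, which is why a degenerate (collinear or coincident) configuration raises an error here instead of returning an arbitrary frame.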

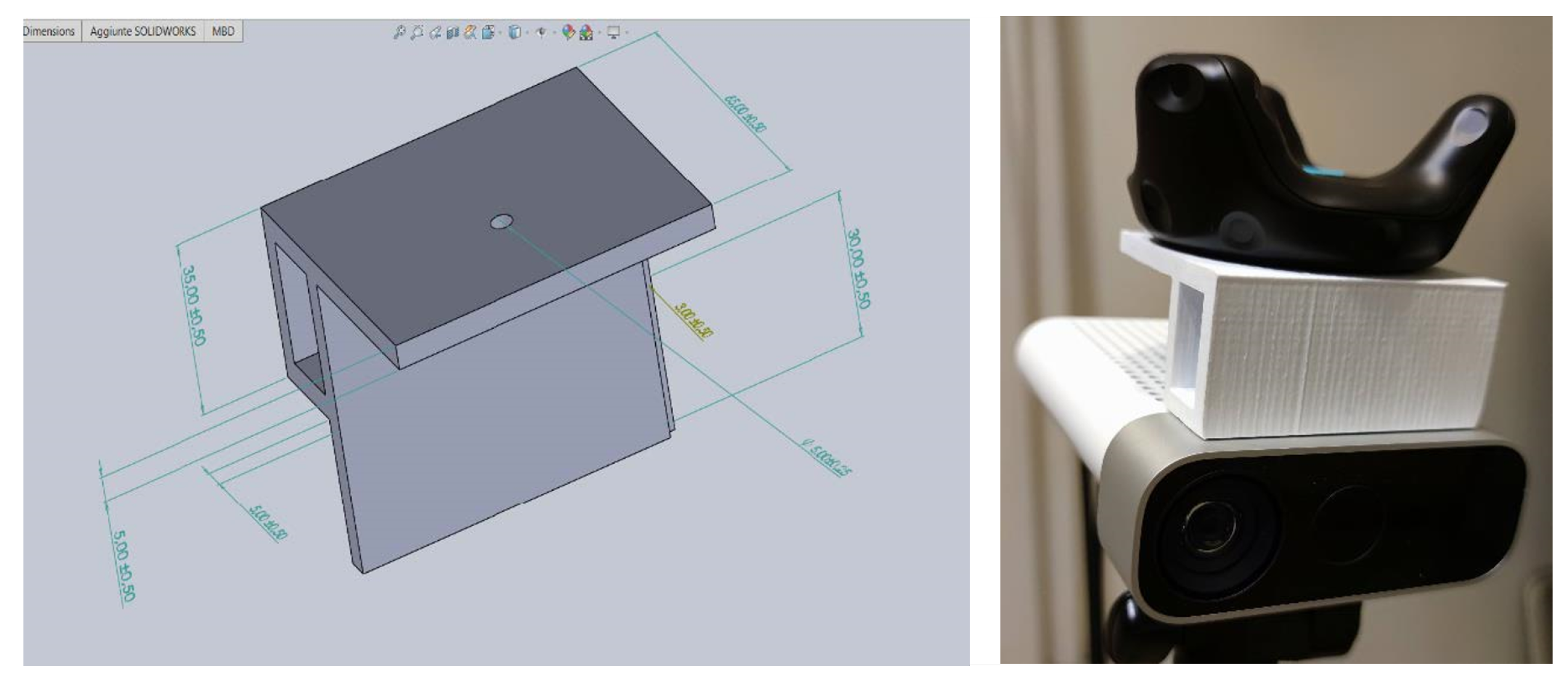

2.1.5. Calibration of the Proposed Systems

2.2. Experimental Validation

2.2.1. Evaluation System Setup

2.2.2. Experiment Description

- In the first scenario, the operator holds the power drill still on a workbench (stationary condition) (Figure 9, left);

- In the second scenario, the operator moves the power drill along a trajectory on the platform at low speed (slow-motion condition) (Figure 9, right);

- In the last scenario, the operator follows the same trajectory at a higher speed (fast-motion condition).

2.2.3. Evaluation Metrics

2.2.4. Statistics

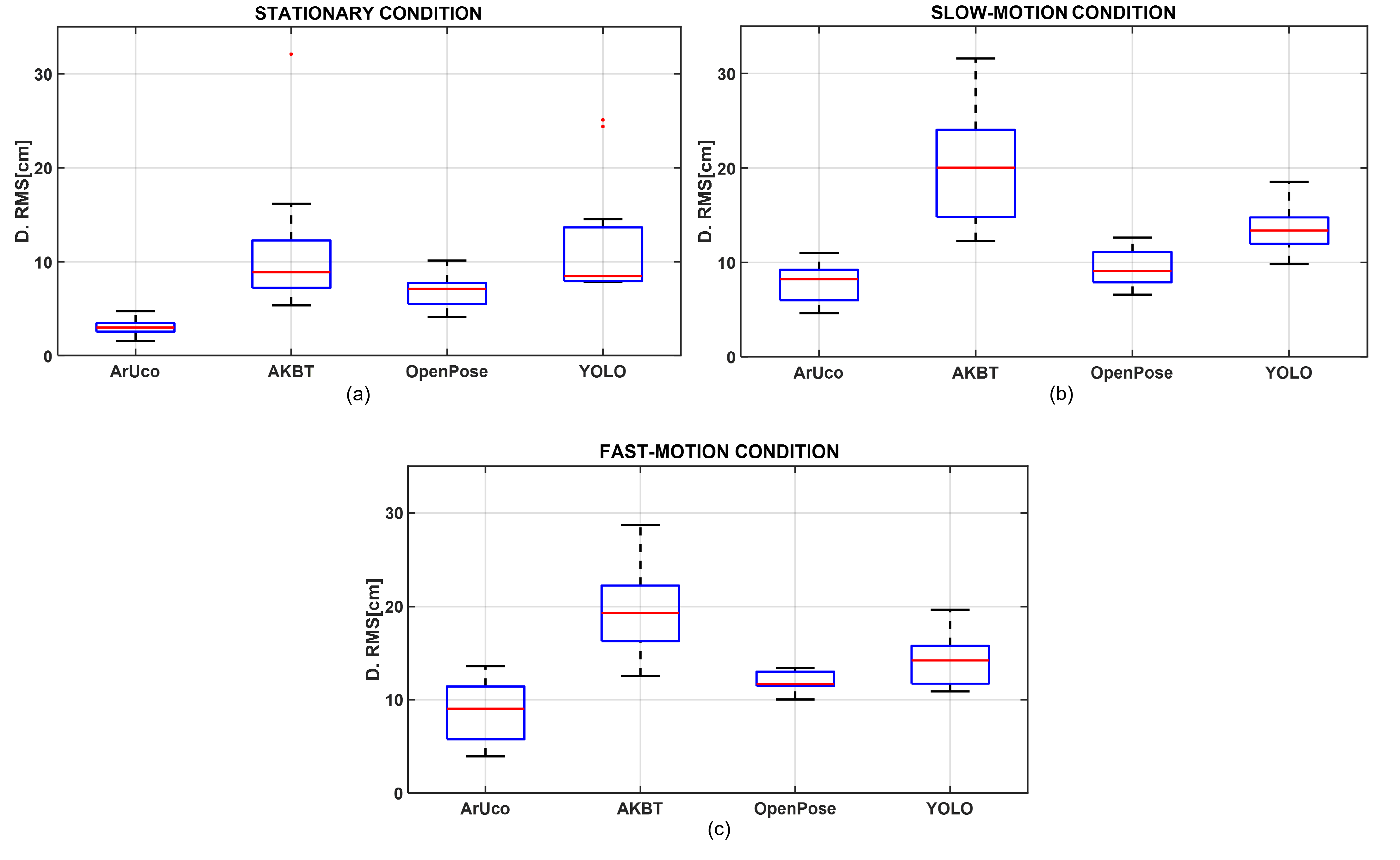

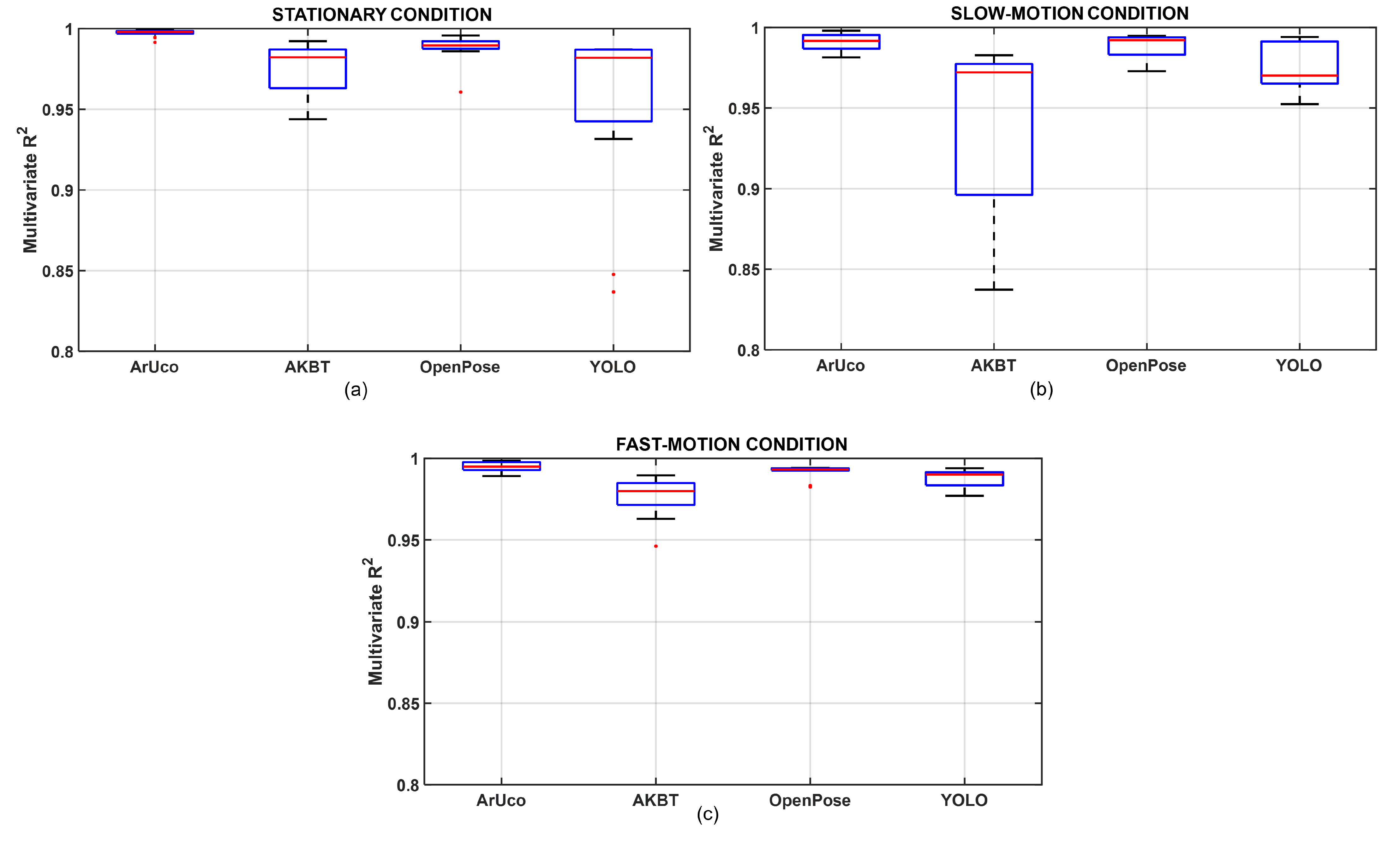

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Traj. # | ArUco | AKBT | OpenPose | YOLO |
|---|---|---|---|---|
| 1 | 2.8 (0.4) | 6.1 (1.0) | 6.1 (0.4) | 13.1 (0.5) |
| 2 | 2.7 (0.4) | 8.9 (0.9) | 5.3 (0.2) | 14.5 (0.4) |
| 3 | 3.0 (0.4) | 6.9 (2.3) | 5.6 (0.2) | 24.4 (0.5) |
| 4 | 2.5 (0.5) | 5.4 (1.2) | 5.9 (0.2) | 25.1 (0.4) |
| 5 | 4.7 (0.1) | 2.1 (1.5) | 10.1 (0.7) | 13.4 (0.1) |
| 6 | 4.6 (0.8) | 11.2 (1.6) | 5.1 (0.8) | 8.7 (0.4) |
| 7 | 4.6 (0.9) | 10.3 (1.0) | 4.1 (0.4) | 8.5 (0.3) |
| 8 | 3.1 (1.3) | 7.3 (0.4) | 7.6 (0.2) | 7.9 (0.1) |
| 9 | 3.0 (1.0) | 9.2 (0.7) | 7.4 (0.2) | 7.9 (0.1) |
| 10 | 2.6 (0.4) | 8.7 (0.2) | 7.2 (0.1) | 7.9 (0.1) |
| 11 | 3.1 (0.3) | 8.9 (0.3) | 7.1 (0.1) | 8.0 (0.1) |
| 12 | 1.5 (0.1) | 15.3 (0.4) | 8.1 (0.2) | 7.9 (0.1) |
| M (SD) | 3.1 (1.0) | 8.9 (3.8) | 6.7 (1.6) | 11.9 (6.1) |
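
The final row of the table above appears to summarize each column across the twelve trajectories. As a rough check, and assuming that row is the mean and sample standard deviation of the per-trajectory values (an assumption, since the table itself does not say so), the ArUco column can be recomputed as follows. The helper name `summarize` is hypothetical.

```python
from statistics import mean, stdev

# Per-trajectory ArUco values copied from the table above (trajectories 1-12).
aruco = [2.8, 2.7, 3.0, 2.5, 4.7, 4.6, 4.6, 3.1, 3.0, 2.6, 3.1, 1.5]


def summarize(values):
    """Return (mean, sample standard deviation), each rounded to one decimal."""
    return round(mean(values), 1), round(stdev(values), 1)


m, sd = summarize(aruco)
print(f"{m} ({sd})")  # prints "3.2 (1.0)"
```

The recomputed value differs from the tabulated 3.1 (1.0) by one unit in the last digit of the mean, which would be consistent with the summary having been computed from unrounded per-trajectory values.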

| Traj. # | ArUco | | AKBT | | OpenPose | | YOLO | |
|---|---|---|---|---|---|---|---|---|
| | D. RMS | | D. RMS | | D. RMS | | D. RMS | |
| 1 | 7.0 (1.1) | 0.99 | 20.6 (5.3) | 0.98 | 11.2 (1.3) | 0.99 | 14.7 (1.8) | 0.99 |
| 2 | 9.0 (3.3) | 0.99 | 23.1 (2.7) | 0.97 | 12.4 (1.3) | 0.99 | 11.6 (1.9) | 0.99 |
| 3 | 8.2 (3.5) | 0.99 | 20.0 (1.7) | 0.98 | 11.1 (0.7) | 0.99 | 12.1 (1.2) | 0.99 |
| 4 | 9.8 (4.6) | 0.99 | 22.4 (1.5) | 0.97 | 12.6 (0.9) | 0.99 | 13.4 (1.2) | 0.99 |
| 5 | 5.0 (2.7) | 0.99 | 18.3 (0.9) | 0.92 | 9.1 (1.9) | 0.98 | 13.4 (3.4) | 0.96 |
| 6 | 6.2 (1.9) | 0.99 | 27.5 (15.6) | 0.84 | 10.0 (2.2) | 0.98 | 15.7 (4.9) | 0.95 |
| 7 | 9.0 (2.3) | 0.98 | 13.1 (1.4) | 0.97 | 10.7 (1.3) | 0.98 | 12.5 (0.9) | 0.97 |
| 8 | 9.7 (4.5) | 0.98 | 15.0 (2.9) | 0.97 | 6.8 (1.5) | 0.99 | 14.9 (6.4) | 0.96 |
| 9 | 11.0 (4.7) | 0.98 | 14.1 (3.6) | 0.97 | 6.6 (1.1) | 0.99 | 18.5 (4.5) | 0.95 |
| 10 | 8.9 (3.8) | 0.98 | 12.3 (2.3) | 0.97 | 8.1 (1.5) | 0.98 | 13.4 (2.0) | 0.97 |
| 11 | 7.4 (2.6) | 0.99 | 31.6 (12.3) | 0.83 | 7.3 (1.8) | 0.99 | 13.0 (1.7) | 0.97 |
| 12 | 4.6 (1.9) | 0.99 | 26.9 (2.4) | 0.75 | 8.3 (1.0) | 0.97 | 9.8 (1.4) | 0.96 |
| M (SD) | 7.8 (2.0) | - | 20.0 (6.1) | - | 13.3 (2.4) | - | 13.3 (2.3) | - |

| Traj. # | ArUco | | AKBT | | OpenPose | | YOLO | |
|---|---|---|---|---|---|---|---|---|
| | D. RMS | | D. RMS | | D. RMS | | D. RMS | |
| 1 | 12.5 (6.3) | 0.99 | 25.2 (3.3) | 0.97 | 13.4 (1.8) | 0.99 | 16.6 (2.8) | 0.98 |
| 2 | 5.4 (1.7) | 0.99 | 28.7 (1.2) | 0.96 | 11.7 (0.9) | 0.99 | 11.9 (1.1) | 0.99 |
| 3 | 12.2 (4.7) | 0.99 | 21.3 (3.1) | 0.98 | 13.1 (2.0) | 0.99 | 14.5 (1.6) | 0.99 |
| 4 | 6.3 (1.9) | 0.99 | 21.8 (2.4) | 0.97 | 13.1 (1.8) | 0.99 | 13.5 (2.4) | 0.99 |
| 5 | 5.6 (1.0) | 0.99 | 22.6 (0.9) | 0.97 | 11.6 (0.5) | 0.99 | 11.6 (1.7) | 0.99 |
| 6 | 13.6 (8.5) | 0.98 | 20.5 (2.9) | 0.98 | 13.0 (1.7) | 0.99 | 15.3 (2.4) | 0.99 |
| 7 | 8.7 (6.1) | 0.99 | 18.1 (6.5) | 0.98 | 11.4 (1.6) | 0.99 | 16.3 (4.5) | 0.98 |
| 8 | 10.1 (4.0) | 0.99 | 15.5 (5.0) | 0.98 | 11.7 (1.8) | 0.99 | 19.6 (10.1) | 0.97 |
| 9 | 9.4 (4.2) | 0.99 | 17.1 (5.6) | 0.98 | 11.6 (1.3) | 0.99 | 14.6 (1.7) | 0.99 |
| 10 | 10.7 (6.5) | 0.99 | 15.0 (2.7) | 0.99 | 11.6 (1.4) | 0.99 | 13.9 (2.2) | 0.99 |
| 11 | 4.0 (0.6) | 0.99 | 17.4 (4.3) | 0.94 | 10.2 (0.6) | 0.98 | 11.6 (1.8) | 0.97 |
| 12 | 5.9 (2.8) | 0.99 | 12.5 (5.4) | 0.96 | 10.0 (0.7) | 0.98 | 10.9 (2.3) | 0.97 |
| M (SD) | 8.7 (3.2) | - | 19.6 (4.6) | - | 11.8 (1.1) | - | 14.2 (2.5) | - |

| Pairwise Comparison | Stationary | Slow | Fast |
|---|---|---|---|
| ArUco vs. YOLO | <0.001 | 0.003 | 0.002 |
| ArUco vs. AKBT | <0.001 | <0.001 | <0.001 |
| ArUco vs. OpenPose | 0.09 | 1.00 | 0.492 |
| YOLO vs. AKBT | 1.00 | 0.772 | 0.492 |
| YOLO vs. OpenPose | 0.41 | 0.05 | 0.347 |
| AKBT vs. OpenPose | 0.201 | <0.001 | 0.002 |

| Pairwise Comparison | Slow | Fast |
|---|---|---|
| ArUco vs. YOLO | 0.002 | 0.009 |
| ArUco vs. AKBT | <0.001 | <0.001 |
| ArUco vs. OpenPose | 1.00 | 1.00 |
| YOLO vs. AKBT | 1.00 | 0.492 |
| YOLO vs. OpenPose | 0.037 | 0.106 |
| AKBT vs. OpenPose | <0.001 | <0.001 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


De Feudis, I.; Buongiorno, D.; Grossi, S.; Losito, G.; Brunetti, A.; Longo, N.; Di Stefano, G.; Bevilacqua, V. Evaluation of Vision-Based Hand Tool Tracking Methods for Quality Assessment and Training in Human-Centered Industry 4.0. Appl. Sci. 2022, 12, 1796. https://doi.org/10.3390/app12041796
